International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Kuldeep Tripathi1, Dipti Ranjan Tiwari2

1Master of Technology, Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India 2Assistant Professor, Department of Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India ***

Abstract - This comprehensive review paper intricately delves intothe fascinatingworldofdecodingfacialexpressions for emotionrecognition byleveragingdeeplearningmodels.It meticulously navigates through the complexities of facial expressionanalysis, sheddinglightontheevolutionaryjourney of deep learning architectures specifically designed for emotion recognition tasks. The journey spans from the inception of convolutional neural networks (CNNs) to the advancements inrecurrent neuralnetworks (RNNs) andtheir innovative hybrids, encapsulating a wide spectrum of models meticulously crafted to capture the subtle nuances of human emotions. The review critically examines the challenges and limitations that researchersfaceinthecurrentmethodologies, including issues related to dataset biases and variations in emotional expression across different cultures. It also delves into the ethical implications and societal impacts associated with the deployment of emotion recognition systems, underscoringthe importance ofresponsibleAIdevelopmentin this domain. The review paper explores emerging trends such as multimodal emotion recognition and delves into the potential applications of emotion recognition technology in diverse fields such as healthcare, education, and humancomputer interaction. By providing valuable insights into future research directions, it offers a glimpse into the promising possibilities that lie ahead in this rapidly evolving field of study.

Key Words: Emotionrecognition,Facialexpressions,Deep learning,Convolutionalneuralnetworks(CNNs),Recurrent neuralnetworks(RNNs)

The roots of "Exploring Emotions of Deciphering Facial ExpressionsforEmotionRecognitionwithDeepLearning" can be traced back to the intersection of psychology, computerscience,andartificialintelligence.Overtheyears, emotionrecognitionhascapturedtheinterestofresearchers in these fields as they seek to unravel the intricacies of human expression and how machines can accurately interpret them. The advent of deep learning, especially convolutionalneuralnetworks(CNNs),hasbroughtabouta significant transformation in this area by empowering computers to analyze facial expressions in a manner that mimicshumanperception.Thisinnovativeapproachenables theextractionofdetailedfeaturesfromfacialimages,which

are then utilized to categorize emotions with impressive precision.Thejourneyofthisprojecthasbeencharacterized by milestones in data collection, facilitated by extensive facial expression databases, and advancements in algorithms, including the creation of complex neural network structures. With each step forward, researchers delvedeeperintothecomplexitiesofemotionalexpression, pushingtheboundariesofwhatcanbeachievedintherealm of artificial emotional intelligence. Through this historical evolution, "Exploring Emotions of Deciphering Facial ExpressionsforEmotionRecognitionwithDeepLearning" serves as a testament to the continuous quest to comprehend and replicate human emotional capabilities throughcomputationalmethods.

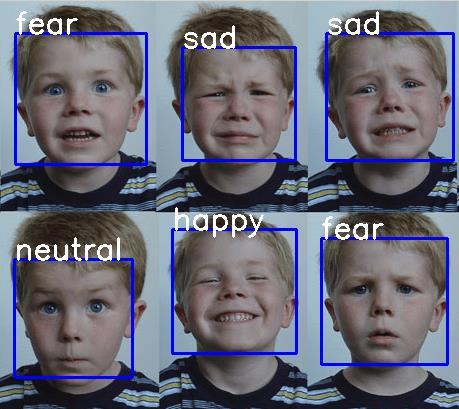

Facialexpressionrecognitionisanemergingfieldinartificial intelligence that focuses on teaching machines how to interpret and understand human emotions through facial movements. This area of research utilizes advanced deep learning techniques, specifically convolutional neural networks (CNNs), to analyze facial features and patterns. Researcherstrainthesemodelsonlargedatasetscontaining labeled facial images to help machines accurately classify emotionslikehappiness,sadness,anger,surprise,andmore. However, a significant challenge is capturing the subtle nuancesandcontext-dependentnatureofhumanemotions, as expressions can vary greatly based on cultural backgrounds and individual differences. Despite these complexities,facialexpressionrecognitionhasvastpotential in various applications, including human-computer interaction,healthcarediagnostics,andmarketingresearch. As technology advances, further progress in this field is expected to deepen our understanding of emotions and enhance the capabilities of AI systems in recognizing and respondingtohumancues.

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

2.1.Importance of Emotion Recognition in Various Fields

Emotion recognition is a critical component that has a profound impact on various industries, transforming the way we engage with technology, comprehend human actions,andenhanceourgeneralwelfare.Thistechnology has significantly advanced the healthcare sector by facilitatingpreciseidentificationandmanagementofmental healthconditionsthroughtheanalysisoffacialexpressions, vocal tones, and other physiological cues to evaluate the emotionalwell-beingofpatients.Additionally,intherealm of education, emotion recognition has revolutionized the learningprocessbyofferingtailoredfeedbackandadjusting educational materials according to students' levels of involvementandemotionalreactions,therebyimprovingthe overalleducationalexperience.

In the realm of customer service and marketing, emotion recognitionallowscompaniestoassessconsumersentiment, customizeproductsandservicestofulfillspecificemotional needs, and improve overall customer satisfaction. Within human-computerinteraction,emotionrecognitionenriches the user experience by empowering machines to perceive andreacttohumanemotions,facilitatingmoreorganicand instinctive interactions. Furthermore, in the realms of security and law enforcement, it assists in identifying deceptive behavior and recognizing potential threats through the analysis of facial expressions and other nonverbal cues. Emotion recognition holds significant importance across various fields, providing invaluable insightsintohumanemotionsandbehavior,andpropelling advancements in technology and human-centered applications.

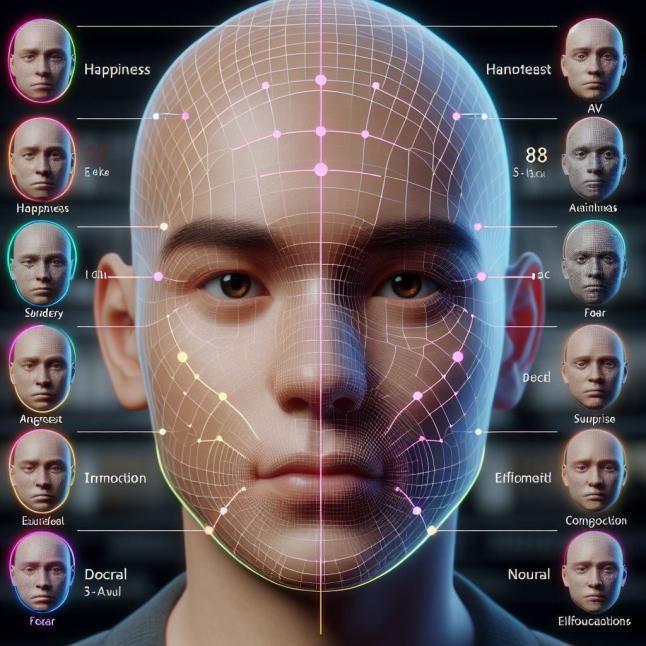

Facial affect recognition through the application of sophisticateddeeplearningmethodologieshasexperienced notable progress in recent years, empowering artificial intelligence systems to precisely perceive and decipher human emotional states based on facial cues. Various fundamentaldeeplearningframeworksandmethodologies havebeenutilizedinthisdomain.

3.1.Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) have become a popularchoiceforfacialemotionrecognitiontasksbecause oftheireffectivenessinextractingimportantfeaturesfrom image data. These networks are structured with layers of convolutionalandpoolingoperations,enablingthemtolearn hierarchical representations of facial expressions. By analyzing different levels of detail in an image, CNNs can accurately detect and classify emotions based on facial features. This capability makes them a valuable tool in variousapplicationssuchasemotionrecognitioninhumancomputerinteraction,surveillancesystems,andhealthcare technologies. Overall, CNNs have proven to be a powerful and versatile tool for analyzing facial emotions in a wide rangeofcontexts.

RecurrentNeuralNetworks(RNNs),withaspecialfocuson LongShort-TermMemory(LSTM)networks,playacrucial role in analyzing temporal relationships in facial emotion recognitiontasks.Theseadvancedneuralnetworkshavethe ability to effectively analyze sequences of facial images or videoframes,allowingthemtotrackandcomprehendthe evolutionofemotionsovertime.Byleveragingthepowerof RNNs, particularly LSTM networks, researchers and developerscanenhancetheaccuracyandefficiencyoffacial emotion recognition systems, ultimately leading to more International Research

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

robust and reliable results in various applications and industries.

3.3.Transfer Learning

Transferlearningisapowerfultechniqueinthefieldofdeep learningthatinvolvesutilizingpre-trainedmodelslikeVGG, ResNet, or MobileNet. These models have already been trained on extensive image datasetssuchasImageNet. By fine-tuning these pre-trained models on specific datasets related to facial emotions, researchers can significantly improve the performance of the models with minimal amountsoflabeleddata.Thisprocessallowsforfasterand moreefficienttrainingofdeeplearningmodelsfortaskslike facial emotion recognition. By leveraging the knowledge gained from the initial training on large-scale datasets, transfer learning enables researchers to achieve higher accuracyandbetterresultsinavarietyofapplications.

3.4.Attention Mechanisms

Attentionmechanismsareacriticalcomponentinmachine learningmodelsthatenablethemtoprioritizespecificparts oftheinputdata.Bydoingso,thesemechanismsallowthe model to focus on important facial features while disregardinglessrelevantones.Thisisespeciallybeneficial in the field of facial emotion recognition, where different regionsofthefacecancarryvaryingdegreesofemotional information.Byutilizingattentionmechanisms,modelscan betteridentifyandinterprettheemotionalcuesdisplayedon the face, leading to more accurate and reliable emotion recognitionresults.

3.5.Data

Data augmentation techniques play a crucial role in enhancing the diversity of training data for deep learning models,particularlyinthefieldoffacialemotionrecognition. By incorporating methods like rotation, scaling, and cropping, the dataset is artificially expanded to prevent overfittingandenhancethegeneralizationcapabilitiesofthe models.Thismeansthatthemodelsarebetterequippedto accurately recognize and interpret facial emotions, ultimatelyleadingtoimprovedperformanceandaccuracyin real-worldapplications.

3.6.Ensemble Learning

Ensemble learning is a powerful technique that involves combiningthepredictionsofmultipledeeplearningmodels to enhance overall performance. This approach entails training several models with varying architectures or initializationsandthenaggregatingtheiroutputstoachieve superior accuracy in tasks such as facial emotion recognition.Byleveragingthestrengthsofindividualmodels andmitigatingtheirweaknessesthroughensemblelearning, researchers can significantly boost the effectiveness and reliability of their predictive models. This collaborative

approachallowsforamorecomprehensiveanalysisofthe dataandenablesthecreationofamorerobustandaccurate systemforrecognizingfacialemotions.

3.7.Multi-modal Fusion

Integratinginformationfromvarioussourcessuchasfacial images, audio recordings, and text data can significantly enhance the effectiveness and accuracy of emotion recognition systems. By utilizing advanced deep learning techniques like multi-modal fusion networks, it becomes possible to combine and analyze data from different modalitiestoenhancetheoverallperformanceofthesystem. This integration allows for a more comprehensive understanding of emotions and leads to more robust and reliable recognition results. Ultimately, the use of multimodalfusionnetworksenablesemotionrecognitionsystems to achieve higher levels of accuracy and efficiency in analyzingandinterpretingcomplexemotionalcues.

Psychological theories regarding facial expressions and emotionsprovidevaluableinsightsintothewaysinwhich humans perceive, convey, and decipher emotions through facial signals. Numerous fundamental theories have been posited:

4.1.Facial Feedback Hypothesis

Thistheorypositsthatfacialexpressionsplayadualrolein ouremotionalexperiences.Itsuggeststhatnotonlydofacial expressions reflect our emotions, but they also have the powertoinfluenceouremotionalstate.Inotherwords,the waywemoveourfacialmusclescanactuallyimpacthowwe feel.Forinstance,ifweforceourselvestosmile,itcantrigger feelingsofhappinesswithinus.Thishypothesishighlights theintricaterelationshipbetweenourfacialexpressionsand our emotions, showing that they are interconnected in a profoundway.Theideathatchangingourfacialexpressions can lead to corresponding changes in our emotional experiences sheds light on the complex nature of human emotionsandthe ways inwhichouroutwardexpressions canshapeourinnerfeelings.

CharlesDarwin,arenownedscientist,putforththetheory thatfacialexpressionsplayacrucialroleintheprocessof evolution.Accordingtohisresearch,facialexpressionsare notjustrandommovementsbutratheradaptiveresponses to various environmental stimuli. Darwin believed that certainfacialexpressions,likethoseoffearoranger,have evolved over time as innate signals to effectively communicate emotional states and aid in the survival of species. This theory suggests that our facial expressions serveapurposebeyondjustsimplecommunication,butare

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

deeply rooted in our evolutionary history as a means of adaptationandsurvival.

AccordingtoEkman'stheory,therearesixbasicuniversal emotionsthatcanbeexpressedandunderstoodbypeople fromdifferentculturesallaroundtheworld.Theseemotions includehappiness,sadness,surprise,fear,anger,anddisgust. Toprovetheuniversalityoftheseemotions,Ekmancarried out thorough cross-cultural research. Additionally, he createdtheFacialActionCodingSystem(FACS),amethod usedtoaccuratelyandobjectivelyanalyzefacialexpressions. Thisgroundbreakingworkhashadasignificantimpacton the fields of psychology, sociology, and communication studies, providing valuable insights into human emotions andbehaviorsacrossdiversepopulations.

Thetheorypositsthatemotionsarearesultofphysiological arousal,meaningthatourbody'sresponsetostimulileadsto the experience of emotions through our interpretation of thesebodilychanges.Inessence,ourfeelingsofemotionare a direct result of the physical reactions that our body undergoes in reaction to external triggers. This theory suggeststhatouremotionsarecloselylinkedtoourbodily responses, and that our perception and understanding of these changes play a crucial role in how we experience different emotions in various situations. It highlights the intricate connection between our physical and emotional states,emphasizingtheimportanceofourinterpretationof bodilysensationsinshapingouremotionalexperiences.

4.5.Cognitive Appraisal Theory

Thistheorysuggeststhatemotionsarenotsimplyrandom reactions,butrathertheresultofourcognitiveevaluations orappraisalsofagivensituation.Emotionsareintricately tied to our interpretations of events, which can include considerationssuchashowrelevanttheeventsaretoour goals,expectations,andbeliefs.Itisthroughtheseappraisals that we experience a range of emotional responses, as different interpretations can lead to varying emotional reactions. In essence, our emotions are not just fleeting feelings, but are deeply connected to our thoughts and perceptionsoftheworldaroundus.

4.6.Social Constructivist

Thistheoryhighlightstheimportanceofsocialandcultural influences in shaping how individuals experience and express emotions. It posits that emotions are not solely individual experiences but are constructed through interactions with others within a specific cultural context. Cultural norms, values, and socialization processes play a significantroleininfluencinghowemotionsareperceived andexpressedbyindividuals.Byconsideringtheimpactof

social and cultural factors, this theory provides a more comprehensive understanding of the complexities of emotionalexperiences.

4.7.Component Process Model

According to this model, emotions are not just simple reactionsbutareactuallymadeupofvariouspartsthatwork togethertocreateouroverallemotionalexperience.These componentsincludehowwefeelsubjectively,thephysical changesinourbody,ourthoughtsaboutthesituation,how weexpressouremotions,andtheactionsweareinclinedto take. It is the interaction between these different componentsthatshapesthecomplexityofouremotionsand influenceshowweexperienceandexpressthem.

5.IMPORTANCE OF FACIAL EXPRESSIONS IN COMMUNICATIONANDSOCIALINTERACTION

Facialexpressionsplayacrucialroleincommunicationand socialinteraction,servingaspowerfulnonverbalcuesthat convey emotions, intentions, and interpersonal dynamics. Here are several reasons why facial expressions are importantinthesecontexts:

5.1.Emotion Communication

Facial expressions serve as a fundamental method of conveying emotions. They offer prompt and frequently unequivocal cues regarding an individual's emotional condition,enablingotherstocomprehendandreactsuitably to their sentiments. Whether it be a grin denoting joy, a creased brow indicating worry, or a scowl expressing sorrow,facialexpressionscommunicateintricateemotional details.

5.2.Social Connection

Facial expressions play a crucial role in enhancing social interactions by communicating empathy, comprehension, and unity. The act of mirroring or reflecting another individual's facial expressions promotes mutual understandingandcultivatesasharedemotionalconnection. Forinstance,reciprocatingasmileinresponsetosomeone else's smile can establish a harmonious cycle of positivity thatreinforcesinterpersonalrelationships.

5.3.Nonverbal Communication

Whileverbalcommunicationarticulatesexplicitinformation, facial expressions frequently communicate implicit or nuanced messages that enhance and enrich verbal communication.Theyhavetheabilitytoconveysubtletiesof tone, intention, and sincerity that words alone may not completely encapsulate, thereby augmenting the overall clarityanddepthofcommunication.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

5.4.Emotion Regulation

Facial expressions also serve a vital function in the regulation of our emotions. The process of conveying emotionsthroughfacialexpressionsaidsintheprocessing and regulation of our feelings, resulting in emotional catharsis and alleviation. Furthermore, purposefully managingourfacialexpressionscanimpactouremotional encounters and relationships with others, enabling us to projectachosenemotionalfacade.

5.5.Social Perception and Judgment

We frequently form swift assessments and evaluations of individualsbyobservingtheirfacialexpressions.Facialcues play a significant role in shaping our views on reliability, affability, proficiency, and various other social characteristics, ultimately impacting our attitudes and conducttowardsothers.Theseimmediatejudgmentshave thepotential tosteerthetrajectoryofsocial engagements andconnections.

5.6.Cultural and Contextual Significance

Facial expressions are shaped by cultural norms, social context, and individual idiosyncrasies. Proficiency in discerningandinterpretingfacialexpressionsnecessitatesa keenawarenessoftheseelements,asasingleexpressioncan conveydiverseconnotationsorunderstandingsdepending on the cultural or situational backdrop. Appreciation of culturalsubtletiesenrichesinterculturalcommunicationand fostersempathy.

6.HYBRIDARCHITECTURESCOMBININGCNNSAND

Hybrid architectures that integrate Convolutional Neural Networks(CNNs)andRecurrentNeural Networks(RNNs) haveemergedaspowerfulsolutionsfortasksrequiringthe integrationofspatialandtemporalinformation.Onenotable architecture, Convolutional Recurrent Neural Networks (CRNNs), combines the feature extraction capabilities of CNNswiththesequentialdatamodelingabilitiesofRNNs. Thisfusionisbeneficialinawiderangeofapplications,from generating descriptions for images to analyzing videos, where grasping both the content and context is essential. Furthermore,ConvolutionalLSTMs(ConvLSTMs)enhance theLSTMframeworkbyincorporatingconvolutionallayers, which are adept at processing spatial and temporal informationsimultaneously,therebyimprovingtaskssuchas video prediction and action recognition. Two-stream networks,whichutilizeparallelCNNandRNNstreams,excel incapturingbothappearanceandmotiondetails,whichare crucial for tasks like action recognition. By incorporating attention mechanisms, these architectures are further refined, allowing for selective focus on relevant spatial or temporal features. These hybrid approaches demonstrate thecollaborativepotential ofCNNsandRNNs,opening up

new opportunities in computer vision and sequence modelingbyharnessingtheircomplementarystrengths in processingspatialandtemporaldata.

Transferlearningandpretrainedmodelshavesignificantly propelled emotion recognition tasks by capitalizing on knowledge acquired from extensive datasets in related domains. By utilizing pretrained models, such as those trained on broad image recognition tasks like ImageNet, researchers can leverage the intricate hierarchical representationsofvisualfeaturesalreadyacquiredbythese models.Transferlearningentailsrefiningthesepretrained models on smaller, domain-specific datasets for emotion recognition tasks. This method enables efficient training with limited labeled data, addressing the issue of data scarcity frequently encountered in emotion recognition studies. Furthermore, pretrained models can capture universal features of facial expressions, including facial landmarks, texture patterns, and spatial relationships, crucial for precise emotion recognition. By integrating transfer learning and pretrained models, researchers can attain heightened accuracy, quicker convergence, and enhanced generalization in emotion recognition systems, therebypropellingadvancementsinhealthcare,education, human-computerinteraction,andotherfields.

In this section of the literature survey of the emotion of facialexpressionrecognition,wehavestudiedthe previous research paper based on it, and summary of previous researchworkisgivenbelow:

Amina. Theutilizationofdeeplearningtechniquesisbeing applied to infer affective-cognitive states in real-time. Specifically, facial expressions play a critical role in the recognition of emotions in individuals with autism. This articledelvesintotheprimaryobjectiveofsurveyingvarious studiesthatfocusontheemotionalstatesofindividualswith autism.Todeterminethemosteffectivesolution,athorough comparison of existing emotion-detecting systems is conductedwithinthepaper.Throughthisanalysis,theaimis toenhanceourunderstandingofhowtechnologycanaidin identifying and interpreting emotions in individuals with autism, ultimately contributing to improved support and careforthispopulation.

Senthil & Geetishree. This paper introduces a groundbreaking method for intelligent facial emotion recognitionbyextractingoptimalgeometricalfeaturesfrom facial landmarksthroughtheutilizationof VGG-19s(Fully Convolutional Neural Networks). The proposed approach incorporatesHaarcascadeforfacialdetection,enablingthe system to accurately determine distance and angle measurements. By combining geometrical features with

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

deeplearningtechniques,thesystemachievesaremarkable level of accuracy when it comes to facial emotion recognition. The experimental results demonstrate high accuracy rates on well-known datasets such as MUG and GEMEP,showcasingtheeffectivenessandreliabilityofthe proposedmethodology.

Chen. Intheresearchpaper,a model forrecognizinguser emotionswasintroducedwiththeaimofanalyzingemotions related to microblog public opinion events. The model focused on identifying three main emotional categories in text: "joy", "anger", and "sadness". These emotions were extracted through the collection and preprocessing of comments from microblog public opinion events. Furthermore, the motivational text was transformed into word vectors using Word2vec, a popular technique in naturallanguageprocessing.Itisimportanttonotethatthe emotion recognition in this model is solely based on text analysisanddoesnotrelyonfacialexpressions.Theresults of the study indicated that the deep learning model surpassedexistingmethodsinrecognizingemotionsinsocial mediaplatforms.

Qizheng. Thisarticleprovidesacomprehensiveoverviewof variousexpressiondatasetscommonlyusedinthefield.It thendelvesintotheclassificationofexpressionrecognition methodsthatarebasedondeeplearning,focusingonboth featureextractionandfeatureclassification.Additionally,the article analyzes several network enhancements in expression recognition that are based on Deep Neural Networks(DNNs),specificallylookingatimprovementsin thenetworkstructure.Deeplearningplaysacriticalrolein theaccuraterecognitionoffacialexpressionsinemotions. Furthermore,theutilizationofrichdatasetshasbeenshown to significantly enhance the generalization capabilities of facialexpressionrecognitionnetworks,ultimatelyleadingto morerobustandreliableresults.

Nizamuddin & Rajeev. This paper introduces a novel approachtofacialemotionrecognitionusingaconvolutional neural network-based spatial deep learning model. The modelwasspecificallydesignedtoextractthemostrelevant features from facial images while reducing computational complexity.Byfocusingonkeyfacialfeatures,theproposed model significantly improves accuracy on the FER-2013 dataset,whichisknownforitschallengessuchasocclusions, scale variations, and illumination changes. The enhanced model not only enhances accuracy but also addresses the limitationsoftraditionalmethodsbyprioritizingmajorfacial featuresintherecognitionprocess.Overall,thisinnovative approach offers a promising solution for improving facial emotionrecognitioninreal-worldapplications.

Nimra et al. This article explores the utilization of a CNN modelasafeatureextractorfordetectingemotionsthrough facial expressions.Thestudyleveragestwodatasets,FER2013andCohnExtended(CK+),totesttheaccuracyratesof various deep learning models. The experimental results

revealed that CNN, ResNet-50, VGG-16, and Inception-V3 achieved accuracy rates of 84.18%, 92.91%, 91.07%, and 73.16% respectively. These findings underscore the effectiveness of deep learning models in accurately recognizingemotions.Particularly,Inception-V3stoodout withanimpressiveaccuracyrateof97.93%ontheFER-2013 dataset, showcasing its superior performance in emotion detectiontasks.

Mubashir et al. In the research paper, the authors implementedatechniquecalledstackedsparseauto-encoder forfacialexpressionrecognition(SSAE-FER)toenhancethe accuracyofunsupervisedpre-trainingandsupervisedfinetuningprocesses.Thismethodaimstoimprovetheefficiency of facial expression recognition through the use of lightweightdeeplearningmodels.Theresultsofthestudy showedahighlevelofsuccess,withanimpressiveaccuracy rateof92.50%achievedontheJAFFEdatasetandaneven more remarkable accuracy rate of 99.30% on the CK+ dataset. These findings demonstrate the effectiveness and potential of the SSAE-FER approach in the field of facial expressionrecognition.

Kalyani et al. Thispaperintroducesasophisticatedmultimodal human emotion recognition web application that takesintoaccountvariousmodalitiessuchasspeech,text, and facial expressions to accurately extract and analyze emotions of individuals participating in interviews. The system utilizes deep learning techniques for facial expression analysis, allowing for a more thorough understandingoftheemotionsbeingconveyed.Inaddition tofacialexpressions,deeplearningisalsoappliedtospeech signalsandinputtexttofurtherenhancetheaccuracyand effectiveness of the emotion recognition process. This comprehensiveapproachensuresamorerobustandreliable emotionrecognitionsystemthatcaneffectivelycaptureand interpret the nuances of human emotions in various contexts.

Parismita et al.Thispaperdelvesintotheapplicationofa deep learning-based image processing technique for the identification of human emotions based on facial expressions,withaparticularfocusoninfantsagedbetween one to five years. The utilization of deep learning in the realmofhumanemotionrecognitionthroughfacialcuesis highlighted.Emotionsareclassifiedintodistinctcategories suchasAnger,Disgust,Fear,Happiness,Sadness,Surprise, andNeutrality.Thestudyaimstoexploretheintricaciesof emotion detection in young children through advanced technologicaltools,sheddinglightonthepotentialbenefits andchallengesassociatedwiththisinnovativeapproach.

Mayuri et al. Intheresearcharticle,theauthorsintroduced agroundbreakingapproachcalledRobustFacialExpression Recognition using an Evolutionary Algorithm with Deep Learning (RFER-EADL) model. This innovative model incorporates histogram equalization to standardize the intensityandcontrastlevelsofimagesdepictingthesame

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

individuals and facial expressions. By leveraging Deep Learning techniques, the RFER-EADL model is able to accuratelyrecognizeemotionsinfacialimages.Toenhance the performance of the model, the authors employed COA and TLBO algorithms to optimize DenseNet and LSTM models, further improving the overall accuracy and efficiencyofthefacialexpressionrecognitionsystem.

Sumon et al. In this research paper, the focus was on utilizingfivedifferentdeeplearningmodelsforthetaskof speech emotion recognition. The models included in the study were the Convolution Neural Network (CNN), Long Short Term Memory (LSTM), Artificial Neural Network (ANN),Multi-LayerPerceptron(MLP),andacombinationof CNNandLSTM.Itisimportanttonotethattheemphasiswas specifically on recognizing emotions from human speech, ratherthanfacialexpressions.Theresearchaimedtoexplore theeffectivenessofthesedeeplearningmodelsinaccurately detectingandclassifyingemotionsbasedonspeechpatterns. By focusing on speech rather than facial expressions, the study delved into the potential of these models in understandingandinterpretingthecomplexitiesofhuman emotionsconveyedthroughspokenwords.

Anwar et al. TheTLDFER-ADAStechnique,whichstandsfor TransferLearningforDriverAssistanceSystems,hasbeen developed to significantly improve image quality and accuracyinfacialemotionrecognitiontasks.Thisinnovative approach combines the power of Transfer Learning with advancedalgorithmstoenhancetheperformanceofdriver assistance systems. Moreover, the technique leverages Manta Ray Foraging Optimization, a cutting-edge optimization method, to further improve the accuracy of emotionclassificationinreal-timescenarios.Byintegrating thesestate-of-the-arttechnologies,TLDFER-ADASoffersa comprehensivesolutionforenhancingsafetyandefficiency in driver assistance systems through advanced facial emotionrecognitioncapabilities.

Tarun et al. This study focused on enhancing the convolutional neural network (CNN) technique for identifying 7 fundamental emotions through facial expressions. The researchers explored various preprocessingtechniquestounderstandtheirimpactonthe performanceoftheCNNmodel.Byleveragingdeeplearning algorithms, the study aimed to improve the accuracy of emotionalrecognitionbasedonfacialfeatures.Therefined CNNtechniqueshowcasedpromisingresultsinaccurately detectingemotionsfromfacialexpressions,highlightingthe potential for advancements in emotion recognition technology.

Bharti et al. Anovelhybridmodelthatcombinesmachine learninganddeeplearningtechniqueshasbeenintroduced foridentifyingemotionsintext.Inthismodel,Convolutional Neural Network (CNN) and Bidirectional Gated Recurrent Unit (Bi-GRU) are utilized as deep learning techniques to enhancetheaccuracyofemotionrecognitionintextualdata.

The study focuses on the application of deep learning methods for analyzing and understanding emotions conveyedthroughwrittentext.Itisimportanttonotethat facial expressions are not taken into consideration in this text-based emotion recognition process, highlighting the emphasisonthelinguisticaspectsofemotionalexpression.

Sergey et al. Inthisresearchpaper,theauthorsconducteda thorough examination of cortical activation patterns in individuals when processing emotional information. Specifically,thestudyhonedinonpatientswithdepression andtheiruniqueresponsetoemotionalstimuli.Thefindings revealed that individuals with depression exhibited increasedactivationinkeyregionsofthebrain,suchasthe middleandinferiorfrontalgyri,thefusiformgyrus,andthe occipital cortex, during the recognition of emotional information.Thissuggeststhatindividualswithdepression mayhavedistinctneuralpathwaysinvolvedinprocessing emotions, which could provide valuable insights into the underlying mechanisms of the disorder. By focusing on corticalactivationduringemotionalinformationrecognition, thestudyshedslightontheintricateworkingsofthebrainin individualswithdepression,offeringadeeperunderstanding of how emotions are processed and regulated in this population.

Suraya et al. Inthisinformativearticle,theauthorshaveput forthagroundbreakingapproachtodetectingemotionsin social media through linguistic analysis, utilizing metaheuristic deep learning architectures. However, it is worth noting that they have overlooked the potential benefitsofincorporatingnaturallanguageprocessing(NLP) and deep learning (DL) concepts in the content-based categorizationissue,whichformsthefoundationofemotion recognitionintextualdocuments.Thestudyfocusesonthe innovativemethodofemotiondetectionthroughlinguistic analysis with the aid of metaheuristic deep learning techniques. The authors have successfully processed realtimesocialmediadatatoclassifyemotions,showcasingthe potentialofthisapproachinenhancingemotiondetection methods. This research sheds light on the importance of exploring various technologies and methodologies to advancethefieldofemotionrecognitionintextanalysis.

In conclusion, this analysis has explored the captivating realm of decoding facial expressions for emotion identification using deep learning techniques. After a thoroughexaminationofdifferentapproaches,datasets,and evaluationcriteria,itisclearthatdeeplearningalgorithms havemaderemarkableprogressinpreciselydetectingand analyzinghumanemotionsfromfacialsignals.Nevertheless, challengeslikedatasetpartiality,cross-culturaldifferences, and the elucidation of model decisions persist as crucial areasforfutureexplorationandadvancement.Despitethese obstacles, the encouraging outcomes highlighted in this studyemphasizethecapacityofdeeplearningto enhance International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

our comprehension of human emotions and enable applications in domains such as healthcare, humancomputerinteraction,andsocialrobotics.Aswepersistin unraveling the intricacies of emotional expression identification, interdisciplinary cooperation and inventive strategies will play a vital role in realizing the complete potentialofthistransformativetechnology.

1. Ahmad,M.,Saira,N.,Alfandi,O.,Khattak,A.M.,Qadri,S. F.,Saeed,I.A.,Khan,S.,Hayat,B.,&Ahmad,A.(2023). Facial expression recognition using lightweight deep learning modeling. Mathematical Biosciences and Engineering, 20(5), 8208–8225. https://doi.org/10.3934/mbe.2023357

2. Arora, T. K., Chaubey, P. K., Raman, M. S., Kumar, B., Nagesh, Y., Anjani, P. K., Ahmed, H. M. S., Hashmi, A., Balamuralitharan, S., & Debtera, B. (2022). Optimal facialfeaturebasedemotionalrecognitionusingdeep learning algorithm. Computational Intelligence and Neuroscience, 2022, 1–10. https://doi.org/10.1155/2022/8379202

3. Bharti,S.K.,Varadhaganapathy,S.,Gupta,R.K.,Shukla, P.K.,Bouye,M.,Hingaa,S.K.,&Mahmoud,A.(2022). Text-Based Emotion recognition using Deep learning approach. Computational Intelligence and Neuroscience, 2022, 1–8. https://doi.org/10.1155/2022/2645381

4. Bukhari, N., Hussain, S., Ayoub, M., Yu, Y., & Khan, A. (2022).DeepLearningbasedFrameworkforEmotion RecognitionusingFacialExpression.PakistanJournalof Engineering & Technology, 5(3), 51–57. https://doi.org/10.51846/vol5iss3pp51-57

5. Chen, Q. (2023). Summary of Research on Facial Expression Recognition. Highlights in Science, Engineering and Technology, 44, 81–89. https://doi.org/10.54097/hset.v44i.7200

6. Hazra,S.K.,Ema,R.R.,Galib,S.M.,Kabir,S.,&Adnan,N. (2022). Emotion recognition of human speech using deep learning method and MFCC features. RadìoelektronnìÌKomp’ûternìSistemi,4,161–172. https://doi.org/10.32620/reks.2022.4.13

7. Hilal,A.M.,Elkamchouchi,D.H.,Alotaibi,S.S.,Maray, M., Othman, M., Abdelmageed, A. A., Zamani, A. S., & Eldesouki, M. I. (2022). Manta Ray Foraging Optimization with Transfer Learning Driven Facial Emotion Recognition. Sustainability, 14(21), 14308. https://doi.org/10.3390/su142114308

8. Iqbal,J...M.,Kumar,M.S.,Mishra,G.,R,N.a.G.,N,N.S. A., Ramesh, J., & N, N. B. (2023). Facial emotion

recognition using geometrical features based deep learning techniques. International Journal of Computers, Communications & Control, 18(4). https://doi.org/10.15837/ijccc.2023.4.4644

9. Kalyani, B., Sai, K. P., Deepika, N. M., Shahanaz, S., & Lohitha, G. (2023). Smart Multi-Model Emotion RecognitionSystemwithDeeplearning.International JournalonRecentandInnovationTrendsinComputing and Communication, 11(1), 139–144. https://doi.org/10.17762/ijritcc.v11i1.6061

10. Khan, N., Singh, A., & Agrawal, R. (2023). Enhancing feature extraction technique through spatial deep learningmodelforfacialemotiondetection.Annalsof Emerging Technologies in Computing, 7(2), 9–22. https://doi.org/10.33166/aetic.2023.02.002

11. Li, C., & Li, F. (2023). Emotion recognition of social mediausersbasedondeeplearning.PeerJ.Computer Science, 9, e1414. https://doi.org/10.7717/peerjcs.1414

12. M, P. S. T. U. I. L. D. G. V. a. R. (n.d.). Human Emotion Recognition using Deep Learning with Special Emphasis on Infant’s Face. https://ijeer.forexjournal.co.in/archive/volume10/ijeer-100466.html

13. Mubeen,S.,Kulkarni,N.,Tanpoco,M.R.,Kumar,R.,M,L. N., & Dhope, T. (2022). Linguistic Based Emotion Detection from Live Social Media Data Classification Using Metaheuristic Deep Learning Techniques. InternationalJournalofCommunicationNetworksand Information Security, 14(3), 176–186. https://doi.org/10.17762/ijcnis.v14i3.5604