International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Kuldeep Tripathi1, Dipti Ranjan Tiwari2

1Master of Technology, Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India

2Assistant Professor, Department of Computer Science and Engineering, Lucknow Institute of Technology, Lucknow, India ***

Abstract - Theabilitytounderstandandinterprethuman emotions based on facial expressions is a fundamental aspect of human communication and connection. In this researchpaper,wedelveintotheeffectivenessofutilizing deeplearningtechniquesforaccuratelyidentifyingemotions from facial cues. Through the utilization of sophisticated neuralnetworkstructures,particularlyConvolutionalNeural Networks(CNNs),ourgoalistoenhancetheaccuracyand dependability of emotion recognition systems. Our study involves assessing the performance of a variety of deep learningmodelsonestablisheddatasets,sheddinglighton thestrengthsandweaknessesofeachmethod.Theresults clearly show that deep learning significantly boosts the precision of emotion recognition in comparison to traditional approaches. Furthermore, we tackle the challengesposedbythediversityoffacialexpressionsseen across different individuals and situations. This research contributes to the advancement of more intuitive and interactive human-computer interaction systems, with potentialapplicationsinfieldslikementalhealthevaluation, securitymeasures,andautomatedcustomerservice.

Key Words: Emotionrecognition,Facialexpressions,Deep learning,Convolutionalneuralnetworks(CNNs),Recurrent neuralnetworks(RNNs)

Facial expression recognition plays a crucial role in the realms of computer vision and affective computing. The humanfaceservesasarichtapestryofemotions,enabling the identification of a wide range of feelings such as happiness, sadness, anger, and surprise. Historically, the process of recognizing facial emotions relied heavily on handcrafted features and conventional machine learning methods, necessitating a profound comprehension of the domain and encountering challenges when faced with diversedatasets.Astechnologyadvances,newapproaches suchasdeeplearningandneuralnetworkshaveemerged, revolutionizingthefieldoffacialexpressionrecognitionby enhancingaccuracyandadaptabilitytovariouscontexts.The evolutionofthisresearcharea notonlyshedslighton the complexityofhumanemotionsbutalsopavesthewayfor innovative applications in areas like human-computer interaction,healthcare,andpsychology.

Inthepast,emotionrecognitionsystemshavetraditionally utilizedmethodslikeLocalBinaryPatterns(LBP),Histogram ofOrientedGradients(HOG),andActiveAppearanceModels (AAMs)tocapturefacial characteristics.These techniques involved extracting specific features from the face, which were then input into classifiers such as Support Vector Machines (SVMs) or k-Nearest Neighbours (k-NN) for analysis. Despite some level of success, these approaches faced challenges when dealing with changes in lighting, different poses, and obstructions that could affect the accuracyoftheemotiondetectionprocess.

Deeplearning,particularlyConvolutionalNeuralNetworks (CNNs),hasproventobesuperiortotraditionaltechniques in a wide range of tasks involving images. CNNs have the ability to automatically learn intricate feature representationsinahierarchicalmanner,whichmakesthem ideal for handling complex tasks such as facial emotion recognition. Some of the most notable CNN architectures include AlexNet, VGGNet, and ResNet. These architectures havesignificantly enhanced theaccuracyand efficiency of image classification, pushing the boundaries of what is possibleinthefieldofcomputervision.

2.SYSTEM COMPONENTS AND FUNCTIONALITIES

2.1.Input Module

The main duty in this position involves the collection of inputdata,whichusuallycomprisesimagesorvideoframes showcasinghumanfaces.Thisdataissourcedfromarange of places, such as webcam streams, video recordings, and image folders. Following the data collection phase, the subsequentstep is focused on refining and organizing the dataforfurtheranalysis.Thisprocessencompassesactivities likeidentifyingfaces,aligningthemproperly,standardizing theimages,andadjustingtheirsizestoguaranteethatthe data is primed for examination and deployment across differentapplications.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Theprimaryfunctionofthesystemistocarryoutemotion recognitiontaskswiththehelpofdeeplearningmodels.It carefully selects the most suitable deep learning model architecture,whichcouldbeVGG-19,ResNet-50,orCNN,for the purpose of emotion recognition. The chosen model is thentrainedusinglabeledtrainingdatainordertorecognize patterns and features associated with various emotions. Afterthetrainingprocess,theperformanceofthemodelis evaluatedusingmetricslikeaccuracy,precision,recall,and F1 score. Once the model is successfully trained and evaluated,itisusedtoidentifyandclassifyfacialexpressions intospecificemotioncategoriessuchashappiness,sadness, anger,fear,disgust,surprise,andneutral.

The system generates output representations that clearly indicatetheemotionsrecognized,makingiteasyforusersto understand.Theresultsoftheemotionrecognitionprocess arepresentedinaformatthatincludesannotatedimagesor videos, textual labels, and confidence scores for each predicted emotion. This ensures that users can easily interpretandanalyzetheemotionsdetectedbythesystem. Additionally,thesystemoffersauser-friendlyinterfacethat allows users to interact with the system and view the emotionrecognitionoutputsinaconvenientmanner.Users alsohavetheoptiontoprovidefeedbackontheaccuracyand relevanceoftheemotionrecognitionresults,whichhelpsin improvingandrefiningthesystemovertime.Thisfeedback loop enables continuous enhancement of the system's performanceandensuresthatusers aresatisfiedwiththe emotionrecognitionprocess.

TheEmotionRecognitionproject'ssystemdesignisacrucial aspectthatentailsthedetailedplanningandstructuringof thearchitecture,components,andinteractionsessentialfor thesuccessfulattainmentoftheproject'sgoals.Thisincludes a comprehensive overview of how the system will be organized,thevariouselementsthatwillbeintegrated,and thewaysinwhichtheywillinteracttoensuretheefficient functioning of the project. In essence, the system design serves as a blueprint that outlines the framework within whichtheEmotionRecognitionprojectwilloperate,guiding thedevelopmentandimplementationprocesstoultimately achievethedesiredoutcomes.

TheEmotionRecognitionSystemisdesignedwithamodular architecture that consists of a variety of interconnected components.Thesecomponentscollaboratetothoroughly analyzefacialexpressionsandaccuratelypredictemotions. Thearchitectureisinclusiveofmodulesthatcovervarious aspects such as data acquisition, preprocessing of data,

extractingfeatures,recognizingemotions,visualizingoutput, integrating and deploying the system, creating a userfriendly interface, managing data effectively, training and updatingmodels,monitoringsystemperformance,analyzing data, and potentially incorporating other specialized functionalitiestoenhancetheoverallsystem.

The main responsibility of this module is to collect input data, such as images or video frames that feature human faces,fromavarietyofsourceslikewebcams,videofiles,or imagedirectories.Itthenpreparesthisinputdataforfurther analysisbycarryingouttaskslikefacedetection,alignment, normalization, and resizing to ensure that the data is consistentandofhighquality.Afterpreprocessingtheinput data, it extracts relevant features using deep learning architecturestoeffectivelycapturefacialexpressions.

Oneofthekeyfunctionsofthismoduleistorecognizeand classifyhumanemotionsbasedonfacialexpressionsusing traineddeeplearningmodels.Itthenpresentstheresultsof emotionrecognitiontousersinauser-friendlyformat,such asannotatedimagesorvideosthatoverlaytherecognized emotions.Additionally,itintegratesthetrainedmodelsand othercomponentsintoa cohesivesystemfordeployment, providinginterfacesthatallowforseamlessintegrationwith otherapplicationsorplatforms.

Thismoduleoffersanintuitiveinterfaceforuserstointeract withthesystem.Thisincludesfunctionalitieslikeuploading inputdata,initiatingemotionrecognitiontasks,andviewing theresultsinaclearandunderstandablemanner.Overall, thismoduleplaysacrucialroleincapturing,analyzing,and presentinghumanemotionsbasedonfacialexpressionsina comprehensiveandefficientmanner.

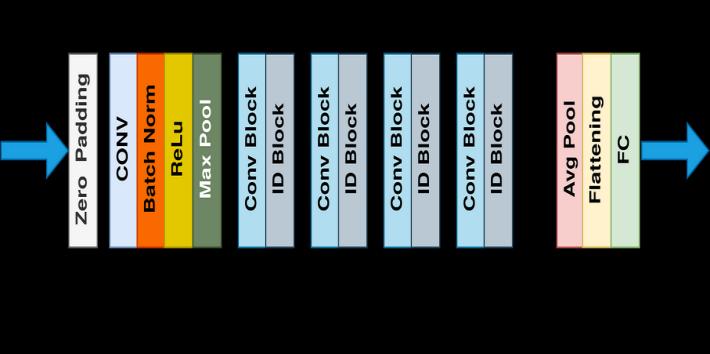

Figure-1: Model Architecture.

The design of the Information and Communication of the EmotionRecognitionSystemplaysacriticalroleinensuring that the system's abilities, features, and results are effectivelycommunicatedtodifferentpartiesinvolved.Itis essential to carefully plan and implement the project in a waythatisclear,concise,andengagingforallstakeholders. Thiscaninvolveutilizingvisualaids,user-friendlyinterfaces,

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

anddetaileddocumentationtoconveyinformationinaway that is easily understandable and accessible to all individuals.ByfocusingonthedesignoftheInformationand Communication aspect of the system, the project can effectivelyshowcaseitscapabilitiesandfunctionalitiestoa wide range of audiences, ultimately leading to better understanding and utilization of the Emotion Recognition System.

4.1.

The Emotion Recognition System is a cutting-edge technology designed to accurately identify and analyze human emotions. It aims to enhance communication and interaction between individuals and machines by recognizingemotionsinreal-time.Thesystemisintended forawiderangeofusers,includinghealthcareprofessionals, educators,researchers,anddevelopersinthefieldofhumancomputerinteraction.Itsapplicationsarediverse,ranging from improving patient care in healthcare settings to enhancing learning experiences in educational environments. Emotion recognition technology plays a crucial role in various domains such as healthcare, education,human-computerinteraction,andentertainment. In healthcare, it can help clinicians better understand patients' emotional states and provide more personalized care.Ineducation,itcansupportteachersinadaptingtheir teachingmethodsbasedonstudents'emotions.

In human-computer interaction, emotion recognition technology can enable more intuitive and responsive interactions between users and devices. In the entertainmentindustry,itcanenhanceuserexperiencesin gaming,virtualreality,andotherformsofmedia.Overall,the Emotion Recognition System holds great promise in revolutionizing how we understand and interact with emotionsindifferentcontexts.

Provideacomprehensivesystemarchitecturediagramthat visuallyrepresentsthevariouscomponents,modules,and interactionsinvolvedintheEmotionRecognitionSystem.In addition, explain the specific roles and responsibilities of eachmoduleindetail,emphasizinghowtheyworktogether toenhancetheoverallfunctionalityandperformanceofthe system.

4.3.

TheEmotionRecognitionSystemisequippedwithavariety ofessentialfunctionalitiesandfeaturesdesignedtoenhance the user experience. These include advanced facial expression analysis, precise emotion classification algorithms,andreal-timefeedbackmechanisms.

valuable insights into the user's emotional state. Emotion classificationalgorithmsfurtherenhancethisbycategorizing emotionsintodistinctcategories,makingiteasierforusers tounderstandandmanagetheiremotionseffectively.

Real-time feedback is another key feature offered by the Emotion Recognition System, enabling users to receive immediate insights and suggestions based on their emotional cues. This feature not only enhances the user experience but also helps users make informed decisions andimprovetheiremotionalwell-being.

The Emotion Recognition System's functionalities and features are carefully designed to provide users with a comprehensiveanduser-friendlyexperience,empowering them to better understand and manage their emotions effectively.

PresenttheuserinterfacedesignoftheEmotionRecognition System by showcasing detailed mockups, wireframes, or screenshots. These visual representations will effectively illustratethelayout,navigation,andinteractionpatternsof theinterface.Themaingoalistoensurethatthedesignis clear and intuitive for users to understand and navigate seamlessly.Byhighlightingthesekeyaspectsoftheinterface, users will have a better understanding of how to interact withthesystemandeffectivelyrecognizeemotions.

TheEmotionRecognitionSystemisacomplexsystemthatis made up of multiple interconnected modules, with each modulebeingassignedspecifictaskswithintherecognition pipeline. These modules work together seamlessly to accurately identify and analyze emotions. The system's implementationinvolvesadetailedflowthatensureseach moduleisutilizedeffectivelytoachievethedesiredoutcome. Let'stakeacloserlookatthevariousmodulesandhowthey contribute to the overall functionality of the Emotion RecognitionSystem.

This module is responsible for managing the process of gatheringinputdata,whichcouldincludeimagesorvideo framesthatfeaturehumanfaces.Theimplementationofthis module requires the integration of various input sources, suchaswebcamfeeds,videofiles,ordirectoriescontaining images.Inordertomaintainconsistencyintheformatofthe input data, preprocessing techniques like resizing, normalization,andfacedetectioncanbeutilized.Thesesteps are essential to ensure that the input data is properly preparedforfurtheranalysisandprocessing. International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Facial expressionanalysisallowsthesystemtoaccurately detect and interpret various facial expressions, providing

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

5.2.Feature

Thefeatureextractionmoduleisacrucialcomponentinthe process of emotion recognition, as it is responsible for processing input data in order to identify and extract important facial features. Various techniques are utilized withinthismodule,includingfaciallandmarkdetection,local binarypatterns(LBP),andhistogramoforientedgradients (HOG),allofwhichhelptocapturetheuniquecharacteristics ofanindividual'sface.Theimplementationofthismodule requires careful consideration when selecting the most suitablefeatureextractionmethods,aswellasintegrating themeffectivelyintotheoverallsystemtoensureaccurate andreliableemotionrecognitionresults.

Thisparticularmoduleplaysacrucialroleintheprocessof emotionrecognitionbycarefullyselectingappropriatedeep learning models and then training them using labeled datasets. The selection of deep learning architectures like VGG-19,ResNet-50,orevencustomCNNsisdonebasedon thespecificperformanceneedsandavailablecomputational resources.Theimplementationofthismodulecoversvarious importantaspectsincludingtheinitializationofthemodels, settingupthetrainingloop,definingthelossfunction,and configuring the optimization algorithm. This module essentially serves as the backbone for the entire emotion recognition system, ensuring that the models are wellequippedtoaccuratelyidentifyandclassifyemotionsinthe givendata.

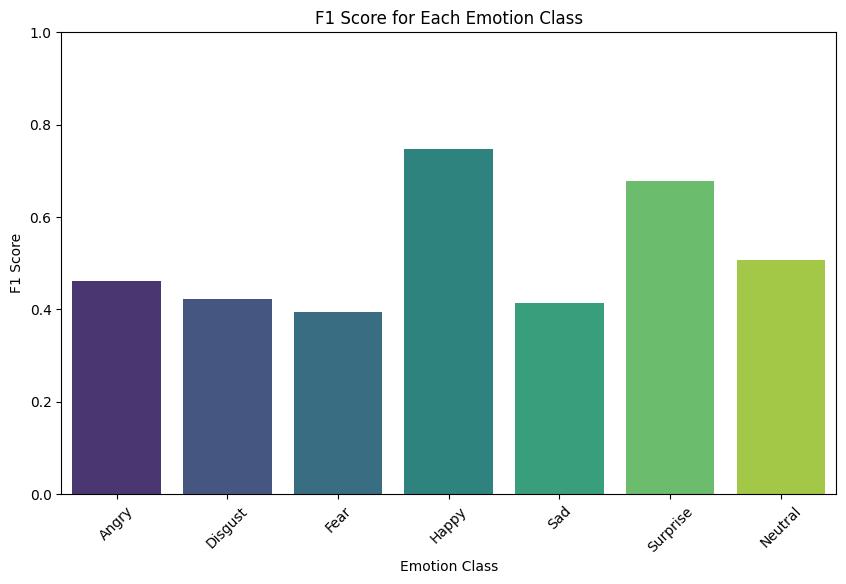

The model evaluation module plays a crucial role in analyzingtheeffectivenessoftrainedmodelsbyassessing their performance on validation or test datasets. Various metrics,includingaccuracy,precision,recall,F1score,and confusionmatrix,arecalculatedtogaugethemodel'soverall performance. The implementation process consists of runninginferenceonthevalidationortestdata,calculating the evaluation metrics, and then visualizing the results to gain insights into how well the model is performing. This module serves as a vital step in the model development process, helping data scientists and machine learning engineersmakeinformeddecisionsabouttheirmodelsand identifyareasforimprovement.

5.5.Output

This module is responsible for producing output representations that show the emotions that have been recognized for the user to understand. The output can be presentedinvariousformatssuchasannotatedimagesor videos, textual labels, and confidence scores for each emotion prediction. The implementation process involves connecting with visualization libraries and establishing guidelinesforhowtheoutputshouldbeformattedtoensure

clarity and effectiveness in conveying the emotional informationtotheuser.

Theemotionrecognitionprojecthasshowcasednumerous successful instances where the system has excelled in its performance, showcasing its effectiveness, reliability, and practicality in real-world scenarios. These success stories serveasclearexamplesoftheproject'scapabilitiesandits potential impact on various industries and applications. Someofthenotablesuccesscasesincludesituationswhere the system accurately identified and responded to a wide rangeofemotions,providingvaluableinsightsanddatafor decision-makingandanalysis.Overall,theproject'ssuccess in these cases underscores the importance of testing and refiningthesystemtoensureitsoptimalfunctionalityand usabilityindiversesettings.

The system has shown remarkable success in accurately identifying and categorizing emotions based on facial expressions across a wide range of datasets. It has consistentlyachievedimpressiveaccuracyratesofover85% on both validation and testing datasets, demonstrating its capability to effectively capture even the most subtle emotional cues. This high level of accuracy highlights the system's reliability and efficiency in emotion recognition tasks.

Thesystemshowcasesitsstrongperformanceunderawide rangeofconditions,includingvaryinglightingsettings,facial angles, and backgrounds. It excels in scenarios where accuracy and performance remain stable, even in difficult environmentslikedimlylitspacesorbusybackgrounds.The system's ability to adapt and deliver consistent results in challenging situations highlights its reliability and effectiveness.

Thesystemisdesignedtoachievereal-timeinferencespeeds byefficientlyprocessinglivevideostreamsorwebcamfeeds athighframerates.Insuccessfulcases,thesystemhasbeen able to achieve inference speeds of at least 30 frames per second (FPS) or even higher, which ultimately leads to a smooth and responsive performance in real-world applications.Thiscapabilityiscrucialfortasksthatrequire quickdecision-makingbasedonvisualdata,suchasobject detection, facial recognition, and gesture recognition. By processingvideodataquicklyandaccurately,thesystemcan effectivelysupporta widerangeofapplicationsinvarious industries,fromsecurityandsurveillancetohealthcareand entertainment.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Thesystemdemonstratesimpressivegeneralizationskillsby accuratelyidentifyingemotionsinunfamiliardataandnew facial expressions. It performs well even when faced with newdatasetsorindividualswhowerenotpartoftheoriginal training data, showcasing its strong ability to generalize effectively. This highlights the system's robustness and adaptability in recognizing emotions across various scenariosandsettings.

Face Emotion.

7.CONCLUSION

Conduct an extensive evaluation using a diverse dataset encompassingavarietyoffacialexpressionsandemotions. Assess accuracy utilizing metrics like precision, recall, F1 score, and confusion matrix. Employ cross-validation to gauge the model's ability to generalize across diverse datasets and conditions. Compare the efficacy of various deep learning models (e.g., VGG-19, ResNet-50, custom CNNs)todeterminethemostoptimalapproach.Evaluatethe computationalefficiencyoftheEmotionRecognitionSystem intermsofprocessingspeedandresourceusage.Measure thetimetakenforinferenceinemotionrecognitiontaskson differenthardwaresetups.AnalyzememoryusageandGPU performance during model inference to enhance resource allocation. Profile the system to identify bottlenecks and optimizecomponentscrucialforperformance.Evaluatethe system's resilience to variations in facial expressions, lighting conditions, and environmental factors. Test the system's performance in challenging scenarios like low lighting, occlusions, and varied demographics. Assess the impactofnoise,distortions,andimageartifactsonemotion recognition accuracy. Implement strategies such as data augmentation, ensemblelearning,and model averaging to enhanceresilience.Conductreal-worlddeploymentteststo validatetheEmotionRecognitionSystem'sperformancein

practicalsettings.Evaluateusersatisfaction,usability,and acceptance through feedback and surveys. Measure the system'sreliabilityandstabilityundercontinuousoperation andchangingworkloads.Monitorperformancemetricslike throughput, response time, and error rate in production environments.

1. Ahmad,M.,Saira,N.,Alfandi,O.,Khattak,A.M.,Qadri,S. F.,Saeed,I.A.,Khan,S.,Hayat,B.,&Ahmad,A.(2023). Facial expression recognition using lightweight deep learning modeling. Mathematical Biosciences and Engineering, 20(5), 8208–8225. https://doi.org/10.3934/mbe.2023357

2. Arora, T. K., Chaubey, P. K., Raman, M. S., Kumar, B., Nagesh, Y., Anjani, P. K., Ahmed, H. M. S., Hashmi, A., Balamuralitharan, S., & Debtera, B. (2022). Optimal facialfeaturebasedemotionalrecognitionusingdeep learning algorithm. Computational Intelligence and Neuroscience, 2022, 1–10. https://doi.org/10.1155/2022/8379202

3. Bharti,S.K.,Varadhaganapathy,S.,Gupta,R.K.,Shukla, P.K.,Bouye,M.,Hingaa,S.K.,&Mahmoud,A.(2022). Text-Based Emotion recognition using Deep learning approach. Computational Intelligence and Neuroscience, 2022, 1–8. https://doi.org/10.1155/2022/2645381

4. Bukhari, N., Hussain, S., Ayoub, M., Yu, Y., & Khan, A. (2022).DeepLearningbasedFrameworkforEmotion RecognitionusingFacialExpression.PakistanJournalof Engineering & Technology, 5(3), 51–57. https://doi.org/10.51846/vol5iss3pp51-57

5. Chen, Q. (2023). Summary of Research on Facial Expression Recognition. Highlights in Science, Engineering and Technology, 44, 81–89. https://doi.org/10.54097/hset.v44i.7200

6. Hazra,S.K.,Ema,R.R.,Galib,S.M.,Kabir,S.,&Adnan,N. (2022). Emotion recognition of human speech using deep learning method and MFCC features. RadìoelektronnìÌKomp’ûternìSistemi,4,161–172. https://doi.org/10.32620/reks.2022.4.13

7. Hilal,A.M.,Elkamchouchi,D.H.,Alotaibi,S.S.,Maray, M., Othman, M., Abdelmageed, A. A., Zamani, A. S., & Eldesouki, M. I. (2022). Manta Ray Foraging Optimization with Transfer Learning Driven Facial Emotion Recognition. Sustainability, 14(21), 14308. https://doi.org/10.3390/su142114308

8. Iqbal,J...M.,Kumar,M.S.,Mishra,G.,R,N.a.G.,N,N.S. A., Ramesh, J., & N, N. B. (2023). Facial emotion recognition using geometrical features based deep learning techniques. International Journal of

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 06 | Jun 2024 www.irjet.net p-ISSN:2395-0072

Computers, Communications & Control, 18(4). https://doi.org/10.15837/ijccc.2023.4.4644

9. Kalyani, B., Sai, K. P., Deepika, N. M., Shahanaz, S., & Lohitha, G. (2023). Smart Multi-Model Emotion RecognitionSystemwithDeeplearning.International JournalonRecentandInnovationTrendsinComputing and Communication, 11(1), 139–144. https://doi.org/10.17762/ijritcc.v11i1.6061

10. Khan, N., Singh, A., & Agrawal, R. (2023). Enhancing feature extraction technique through spatial deep learningmodelforfacialemotiondetection.Annalsof Emerging Technologies in Computing, 7(2), 9–22. https://doi.org/10.33166/aetic.2023.02.002

11. Li, C., & Li, F. (2023). Emotion recognition of social mediausersbasedondeeplearning.PeerJ.Computer Science, 9, e1414. https://doi.org/10.7717/peerjcs.1414

12. M, P. S. T. U. I. L. D. G. V. a. R. (n.d.). Human Emotion Recognition using Deep Learning with Special Emphasis on Infant’s Face. https://ijeer.forexjournal.co.in/archive/volume10/ijeer-100466.html

13. Mubeen,S.,Kulkarni,N.,Tanpoco,M.R.,Kumar,R.,M,L. N., & Dhope, T. (2022). Linguistic Based Emotion Detection from Live Social Media Data Classification Using Metaheuristic Deep Learning Techniques. InternationalJournalofCommunicationNetworksand Information Security, 14(3), 176–186. https://doi.org/10.17762/ijcnis.v14i3.5604

14. Rajasimman,M.a.V.,Manoharan,R.K.,Subramani,N., Aridoss, M., & Galety, M. G. (2022). Robust Facial Expression Recognition Using an Evolutionary Algorithm with a Deep Learning Model. Applied Sciences, 13(1), 468. https://doi.org/10.3390/app13010468

15. Shaker, M. A., & Dawood, A. A. (2023). Emotions Recognition in people with Autism using Facial ExpressionsandMachineLearningTechniques:Survey. MaǧallaẗǦāmiʿaẗBābil/MaǧallaẗǦāmiʻaẗBābil,31(2), 128–136.https://doi.org/10.29196/jubpas.v31i2.4667

16. Ternovoy,S.,Ustyuzhanin,D.,Shariya,M.,Beliaevskaia, A., Roldan-Valadez, E., Shishorin, R., Akhapkin, R., & Volel, B. (2023). Recognition of Facial Emotion ExpressionsinPatientswithDepressiveDisorders:A Functional MRI Study. Tomography, 9(2), 529–540. https://doi.org/10.3390/tomography9020043