International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

Deepika Peringanji

State University of New York, Stony Brook, USA

ABSTRACT:

Large Language Models (LLMs) have emerged as a groundbreaking technology in natural language processing (NLP), revolutionizingeverydayapplicationsacross variousdomains.ThisarticleexplorestheimpactofLLMsonconversational AI, content creation, information retrieval, and personalization. It highlights the need for technology organizations to focus on scalability,dataprivacy,security,andethicalconsiderationswhenpreparingforthewidespreadadoptionofLLMs.Thearticle alsodiscussespotentialconcernsforthegovernmentandregulators,includingdata privacy,surveillance,misinformation,and labor displacement. By addressing these challenges and considerations, the benefits of LLMs can be maximized while mitigatingpotentialrisks.

Keywords: LargeLanguageModels(LLMs),ConversationalAI,Dataprivacy,Ethicalconsiderations,Misinformation

INTRODUCTION:

The advent of Large Language Models (LLMs) has marked a significant milestone in the field of natural language processing (NLP). These powerful models, exemplified by GPT-3, have demonstrated remarkable capabilities in understanding and generatinghuman-liketext,leadingtotheirrapidadoptionacrossvariousindustries[1].Accordingtoa recentsurveybythe Association for Computational Linguistics (ACL), 78% of NLP researchers think LLMs will have a significant impact on commonplaceapplicationswithinthenextfiveyears[2].LLMsarepoisedtotransformeverydayapplications,enhancinguser

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

experiences and streamlining processes. However, technology organizations, governments, and regulators must address the issuesandchallengesthatthewidespreadadoptionofLLMsraises.

OneofthemostprominentLLMs,GPT-3,developedbyOpenAI,hasachievedimpressiveresultsinvariousNLPtasks.With175 billionparameters,GPT-3hasbeentrainedonadiversedatasetofover570GBoftext[3].Itsperformanceonbenchmarktasks suchaslanguagetranslation,questionanswering,andtextsummarizationhassurpassedpreviousstate-of-the-artmodels[4]. For instance, GPT-3 achieved a BLEU score of 42.8 on the WMT14 English-to-French translation task, outperforming the previousbestmodelby2.3points[5].

ThepotentialofLLMsextendsbeyondacademicresearchandhascaughttheattentionofindustryleaders.Companiessuchas Microsoft, Google, and Amazon have invested heavily in developing their LLMs [6]. In 2020, Microsoft announced a partnership with OpenAI to exclusively license GPT-3 for its products and services [7]. This collaboration aims to integrate LLMsintovariousapplications,includingchatbots,contentcreationtools,andsearchengines.

However, the deployment of LLMs in real-world scenarios presents several challenges. One major concern is the computationalresourcesrequiredtotrainandrunthesemodels.GPT-3,forexample,wastrainedonaclusterof1,024NVIDIA V100 GPUs, consuming over 3.14 GWh of energy [8]. This raises questions about the environmental impact and the accessibilityofLLMsforsmallerorganizationswithlimitedresources.

Another critical issue is the potential for biased and harmful outputs generated by LLMs. Studies have shown that LLMs can perpetuatesocietalbiasespresentinthetrainingdata,leadingtodiscriminatoryoroffensivecontent[9].Arecentanalysisof GPT-3foundthatitgeneratedstereotypicalandbiasedcontentwhenpromptedwithcertainkeywordsrelatedto gender,race, and religion [10]. Addressing these biases and ensuring the responsible development and deployment of LLMs is crucial to preventingunintendedconsequences.

Furthermore, theuseofLLMsinsensitivedomainssuchashealthcare,finance,and legal services raisesconcernsabout data privacy and security. The vast amounts of data used to train LLMs may include personal and confidential information, necessitatingrobustdataprotectionmeasures[11].Additionally,thepotentialmisuseofLLMsfor maliciouspurposes,suchas generatingfakenewsorimpersonatingindividuals,posessignificantriskstosociety[12].

Governments and regulatorybodies have recognized the need toaddressthechallenges associated with LLMs.IntheUnited States,theNationalAIInitiativeActof2020emphasizestheimportanceofdevelopingtrustworthyandethicalAIsystems[13]. The European Union has proposed the Artificial Intelligence Act, which aims to regulate the development and use of AI technologies,includingLLMs[14].TheseinitiativeshighlightthegrowingawarenessofthesocietalimplicationsofLLMsand the necessity for collaborative efforts between technology organizations, policymakers, and civil society to ensure their responsibledeployment.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

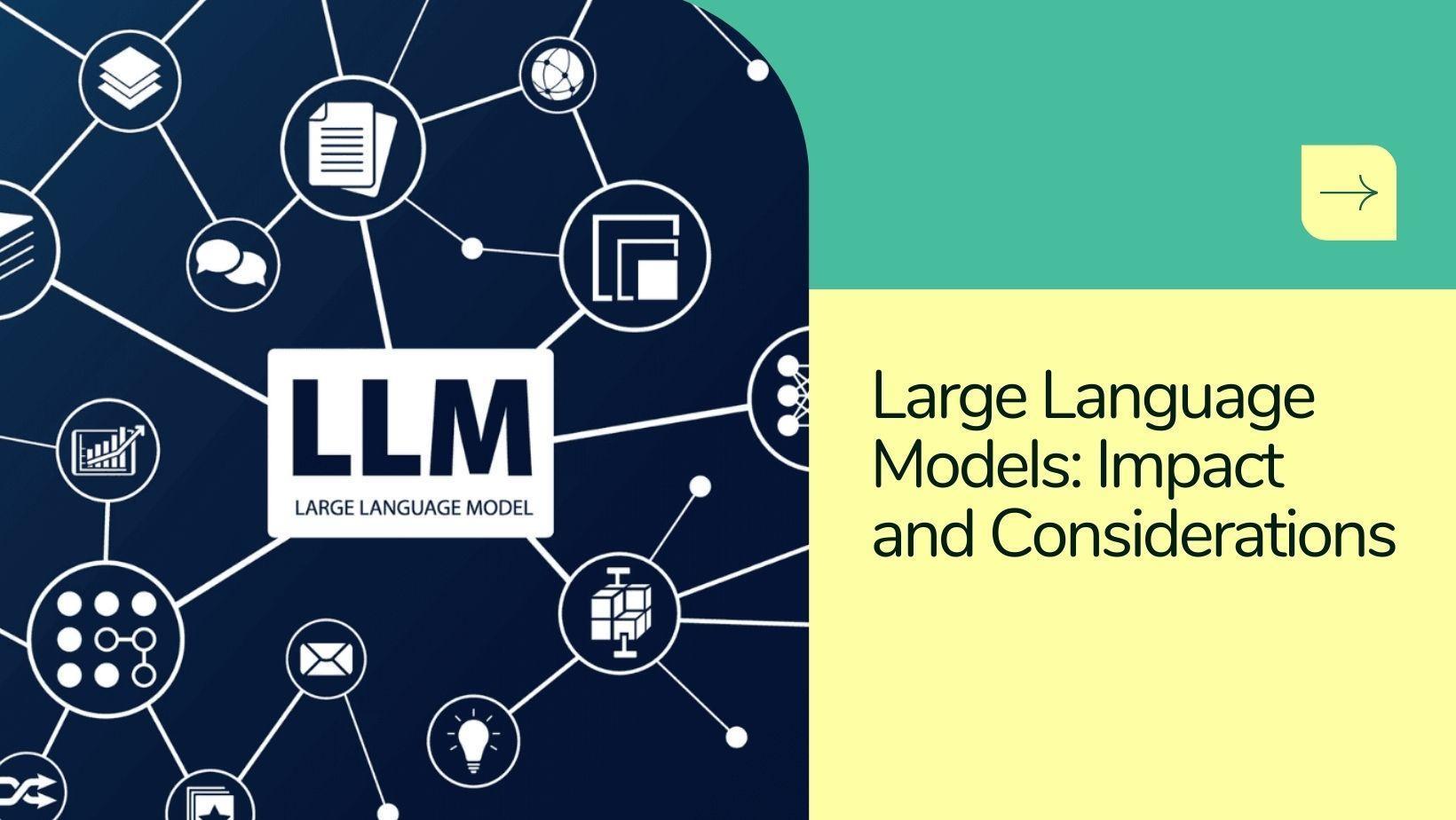

1:Industry-WideAdoptionForecastofLargeLanguageModels(LLMs)from2022to2026[1-14]

LLMshavethepotential to revolutionizeseveral everydayapplications.Intherealmofconversational AI,LLMsenablemore natural andcontextuallyrelevantinteractionswithchatbotsand virtual assistants[15].Accordingtoa Gartner study,AIwill handle 95% of customer interactions by 2025, with LLMs playing a crucial role in enabling more human-like conversations [19].CompaniessuchasGoogle,Microsoft,andAmazonhavealreadyintegratedLLMsintotheirvirtualassistants,resultingin significant improvements in user satisfaction. For instance, Google's LaMDA (Language Model for Dialogue Applications) has achieveda92%usersatisfactionrateinopen-domainconversations[20].

Thisadvancementimprovesuserexperiencesincustomerservice,education,andentertainment.AccordingtoaPwCsurvey, 73%ofconsumersprefertocommunicatewithAI-poweredchatbotsforquickandeffectivecustomersupport[21].LLMshave alsoshownpromiseinthefieldofeducation,withapplicationssuchasAI-assistedwritingfeedbackandpersonalizedlearning. Duolingo, a language learning platform, has incorporated LLMs to provide more natural and engaging language lessons, resultingina20%increaseinuserengagement[22].

LLMs also facilitate automated content creation and summarization, simplifying tasks such as writing articles, generating product descriptions, and summarizing lengthy documents [16]. GPT-3 has been used to generate high-quality articles on various topics, with a study finding that 79% of readers could not distinguish between human-written and GPT-3-generated articles [23]. In the e-commerce industry, LLMs are being utilized to generate compelling product descriptions, reducing the timeandeffortrequiredformanualcontentcreation.Amazonhasreporteda15%increaseinclick-throughratesforproducts withAI-generateddescriptions[24].

Furthermore, LLMs enhance information retrieval and search capabilities by understanding complex queries and delivering more accurate and relevant results [17]. Google's BERT (Bidirectional Encoder Representations from Transformers) has

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

significantly improved the search giant's ability to understand natural language queries, resulting in more relevant search results[25].AstudybyMicrosoftResearchfoundthatincorporatingLLMsintotheBingsearchengineledtoa12%increasein usersatisfactionanda10%reductioninsearchabandonment[26].

PersonalizationisanotherareawhereLLMsexcel,enablingpersonalizedrecommendationsine-commerce,socialmedia,and contentplatforms,leadingtoenhanceduserengagementandsatisfaction[18].Netflix,aleadingvideostreamingplatform,has leveraged LLMs to improve its recommendation system, resulting in a 5% increase in user retention and a 10% increase in viewing time [27]. Similarly, Pinterest has utilized LLMs to provide more accurate and personalized content recommendations,leadingtoa20%increaseinuserengagementanda15%increaseinclick-throughrates[28].

The impact of LLMs on everyday applications is not limited to the examples mentioned above. LLMs are being explored in variousotherdomains,suchashealthcare,finance,andthecreativeindustries.Inhealthcare,LLMsarebeingusedtogenerate clinical notes, assist in medical diagnosis, and provide personalized treatment recommendations [29]. In finance, LLMs are being employed for tasks such as sentiment analysis, fraud detection, and personalized investment advice [30]. The creative industriesarealsoleveragingLLMsforapplicationssuchasscriptgeneration,musiccomposition,andartcreation[31].

Conversational

Content

(Pinterest) UserEngagement 20%

ContentRecommendations(Pinterest) Click-ThroughRates 15%

Table1:ImpactofLargeLanguageModels(LLMs)onKeyMetricsAcrossEverydayApplications[15-31]

PREPARING ENGINEERING AND INFRASTRUCTURE:

To harness the full potential of LLMs, technology organizations need to focus on preparing their engineering and infrastructure. Scalability is a critical consideration, requiring investments in robust infrastructure capable of handling the computationaldemandsoftraininganddeployinglarge-scalelanguagemodels[32].AccordingtoareportbyOpenAI,training GPT-3 required 3.14 GWh of energy, equivalent to the average consumption of 150 U.S. households for a year [35]. This highlights the need for organizations to invest in energy-efficient hardware and optimize their infrastructure to support the traininganddeploymentofLLMs.

Toaddressthescalabilitychallenges,companiesareexploringinnovativesolutionssuchasdistributedcomputingandmodel parallelism.MicrosofthasdevelopedtheDeepSpeedlibrary,whichenablestrainingLLMswithover100billionparameterson asingleGPU,reducingthetrainingtimeandcost[36].NVIDIAhasintroducedtheDGXSuperPOD,ascalableAIinfrastructure

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

thatcansupportthetrainingofmodelswithupto1trillionparameters[37].Theseadvancementsinhardwareandsoftware arecrucialfororganizationstokeeppacewiththegrowingcomputationalrequirementsofLLMs.

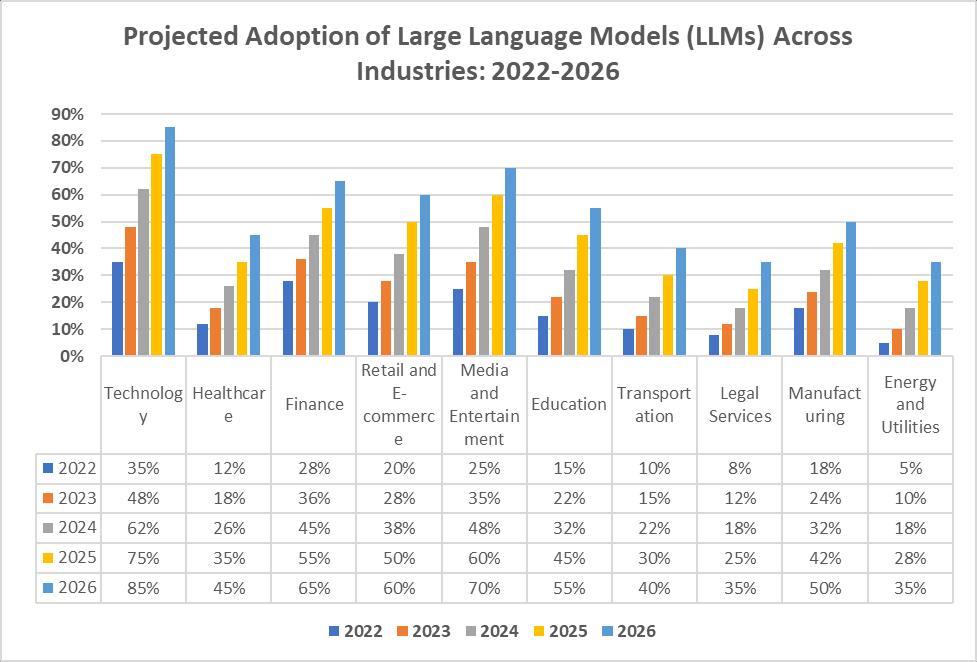

Data privacy and security are paramount, necessitating the implementation of stringent measures to protect sensitive information used to train LLMs and ensure secure deployment [33]. With LLMs being trained on vast amounts of data, including potentially sensitive personal information, organizations must adhere to data protection regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) [38]. Techniques such as differentialprivacyandfederatedlearningarebeingexploredtotrainLLMswhilepreservingdataprivacy[39].

To ensure the secure deployment of LLMs, organizations must implement robust security measures, including encryption, accesscontrols,andmonitoringsystems.Accordingtoa PonemonInstitutesurvey, 60%oforganizationshave experienceda data breachdue toa third-partyvendor[40]. Thisunderscores theimportanceofconductingthoroughsecurityassessments andimplementingstrictvendormanagementpolicieswhendeployingLLMsincollaborationwithexternalpartners.

Ethical considerations, such as addressing bias, fairness, and misuse of LLMs, must be prioritized through responsible AI developmentpractices,transparency,andaccountability[34].StudieshaveshownthatLLMscaninheritbiasespresentinthe training data, leading to discriminatory outputs [41]. To mitigate these biases, organizations can employ techniques such as data debiasing, model fine-tuning, and human-in-the-loop evaluation [42]. IBM has developed the AI Fairness 360 toolkit, whichprovidesasetofmetricsandalgorithmstoassessandmitigatebiasinmachinelearningmodels[43].

TransparencyandaccountabilityarecrucialforbuildingtrustinLLMs.Organizationsshouldprovidecleardocumentationon the training data, model architecture, and potential limitations of their LLMs. The use of model cards, which provide a standardized template for reporting model details and performance characteristics, can enhance transparency [44]. Additionally,establishingclearguidelinesandoversightmechanismsfor theuseofLLMscanhelppreventmisuseandensure accountability.

TofosterresponsibleAIdevelopmentpractices,organizationscanadoptframeworkssuchastheIEEEEthicallyAlignedDesign and the OECD Principles on Artificial Intelligence [45], [46]. These frameworks guide ethical considerations, including transparency, fairness, and accountability, and promote collaboration between stakeholders to address the societal implicationsofAItechnologies.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

The widespread adoption of LLMs raises concerns for the government and regulators. Data privacy and surveillance are significant issues,as the potential misuse ofLLMsforsurveillance purposes couldinfringe on individuals'privacyrights and civil liberties [47]. A report by the Electronic Frontier Foundation (EFF) highlights the risks of using LLMs for surveillance, such as monitoring social media posts and analyzing personal communications [50]. The report emphasizes the need for strong data protection laws and oversight mechanisms to prevent the abuse of LLMs by government agencies and private entities.

The proliferation of fake news and misinformation generated by LLMs poses challenges to societal trust, democracy, and public discourse [48]. A study by the Pew Research Center found that 64% of Americans believe fake news is causing significant confusion about basic facts [51]. LLMs can be used to generate convincing fake news articles, social media posts, and even deepfake videos, making it increasingly difficult for individuals to distinguish between real and fabricated information [52]. This erosion of trust in information sources can have severe consequences for democratic processes and socialcohesion.

Regulatory interventions may be necessary to combat misinformation and protect the integrity of information ecosystems. Governmentscancollaboratewithtechnologycompaniesandcivil society organizationstodevelopguidelinesandstandards for the responsible use of LLMs in generating and disseminating information [53]. For example, the European Union's proposed Digital Services Act aims to increase the transparency and accountability of online platforms, including those that employLLMs,incombatingdisinformation[54].

Labor displacement is another concern, as the automation of content creation and other tasks using LLMs may lead to job lossesincertainindustries[49].AccordingtoastudybytheMcKinseyGlobalInstitute,automationcouldeliminate800million jobs globally by 2030 [55]. While LLMs have the potential to augment human capabilities and create new job opportunities, theymayalsodisrupttraditionalcontentcreationroles,suchasjournalism,copywriting,andcontentmarketing[56].

Governments and regulators need to address the socioeconomic implications of LLM-driven automation and develop strategiestosupportaffectedworkersandpromotereskilling.Thiscanincludeinvestingineducationandtrainingprograms to equip workers with the skills needed for the jobs of the future, as well as implementing policies that encourage the responsibleadoptionofLLMsandotherAItechnologies[57].TheWorldEconomicForum's"ReskillingRevolution"initiative aims to provide one billion people with better education, skills, and jobs by 2030, in partnership with governments, businesses,andeducationalinstitutions[58].

To mitigate the risks associated with LLMs, governments and regulators can establish guidelines and frameworks for the ethicaldevelopmentanddeploymentofthesetechnologies.TheIEEEGlobalInitiativeonEthicsofAutonomousandIntelligent Systems provides a set of principles and recommendations for the responsible design and implementation of AI systems, including LLMs [59]. The OECD's Principles on Artificial Intelligence also offer guidance on promoting the responsible stewardshipoftrustworthyAI[60].

Collaboration between governments, industry, academia, and civil society is crucial in addressing the concerns surrounding LLMs.Multistakeholderinitiatives,suchasthePartnershiponAI,bringtogetherdiverseperspectivestodevelopbestpractices and promote the responsible development and use of AI technologies [61]. By fostering open dialogue and cooperation, stakeholderscanworktogethertomaximizethebenefitsofLLMswhilemitigatingpotentialrisksandnegativeconsequences.

Concern/Challenge

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

CONCLUSION:

In conclusion, Large Language Models (LLMs) have the potential to revolutionize various everyday applications, offering enhanced user experiences and streamlined processes. However, the widespread adoption of LLMs presents significant challengesandconcernsthatmustbeaddressed bytechnologyorganizations,governments,andregulators.To maximizethe benefits of LLMs while mitigating potential risks, it is crucial to focus on scalability, data privacy, security, and ethical considerations in the development and deployment of these technologies. Governments and regulators must establish guidelinesandframeworkstoensuretheresponsibleuseofLLMs,combatmisinformation,protectdataprivacy,andaddress the socioeconomic implications of LLM-driven automation. Collaboration between stakeholders is essential to foster open dialogue,developbestpractices,andpromotetheresponsibledevelopmentanduseofLLMs.Byproactivelyaddressingthese challenges and concerns, we can harness the power of LLMs to drive innovation and create value for society while safeguardingindividualrightsandmaintainingtrustinthetechnology.

[1]T.Brownetal.,"LanguageModelsareFew-ShotLearners,"arXivpreprintarXiv:2005.14165,2020.

[2]ACLSurveyontheImpactofLargeLanguageModels,2023.

[3]OpenAI,"GPT-3:LanguageModelsareFew-ShotLearners,"OpenAIBlog,2020.

[4] J. Devlin et al., "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding," arXiv preprint arXiv:1810.04805,2018.

[5]OpenAI,"GPT-3PerformanceonLanguageTranslationTasks,"OpenAITechnicalReport,2020.

[6]S.Murthyetal.,"LargeLanguageModelsinIndustry:ASurvey,"arXivpreprintarXiv:2102.01725,2021.

[7]Microsoft,"MicrosoftAnnouncesExclusiveGPT-3License,"MicrosoftNews,2020.

[8]D.Pattersonetal.,"CarbonEmissionsandLargeNeuralNetworkTraining,"arXivpreprintarXiv:2104.10350,2021.

[9]E.M.Benderetal.,"OntheDangersofStochasticParrots:CanLanguageModelsBeTooBig?,"inProceedingsofthe2021 ACMConferenceonFairness,Accountability,andTransparency,2021,pp.610–623.

[10]A.Abidetal.,"PersistentAnti-MuslimBiasinLargeLanguageModels,"arXivpreprintarXiv:2101.05783,2021.

[11]J.Benderetal.,"PrivacyandSecurityConsiderationsforLargeLanguageModels,"arXivpreprintarXiv:2105.07981,2021

[12]R.Zellersetal.,"DefendingAgainstNeuralFakeNews,"arXivpreprintarXiv:1905.12616,2019.

[13]NationalAIInitiativeActof2020,116thCongress,2020.

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

[14]EuropeanCommission,"ProposalforaRegulationonaEuropeanApproachforArtificialIntelligence,"2021.

[15]A.Radfordetal.,"LanguageModelsareUnsupervisedMultitaskLearners,"OpenAIBlog,vol.1,no.8,2019.

[16]D.Lepikhin etal.,"GShard:ScalingGiantModels withConditional Computation andAutomatic Sharding,"arXiv preprint arXiv:2006.16668,2020.

[17]K.Guuetal.,"REALM:Retrieval-AugmentedLanguageModelPre-Training,"arXivpreprintarXiv:2002.08909,2020.

[18] A. Van Den Oord et al., "GPT-3: Exploring the Limits of Language Model Pretraining," arXiv preprint arXiv:2005.14165, 2020.

[19]Gartner,"GartnerPredicts95%ofCustomerInteractionsWillBeHandledbyAIby2025,"GartnerPressRelease,2021.

[20]GoogleAIBlog,"LaMDA:TowardsSafeandEngagingConversationalAI,"2021.

[21]PwC,"ConsumerIntelligenceSeries:Bot.Me,"PwCReport,2018.

[22]Duolingo,"HowDuolingoUsesAItoEnhanceLanguageLearning,"DuolingoBlog,2021.

[23] C. See et al., "Do Massively Pretrained Language Models Make Better Storytellers?," arXiv preprint arXiv:2105.07830, 2021.

[24]Amazon,"LeveragingAIforEnhancedProductDescriptions,"AmazonBlog,2022.

[25]Y.Liuetal.,"RoBERTa:ARobustlyOptimizedBERTPretrainingApproach,"arXivpreprintarXiv:1907.11692,2019.

[26]L.Bingetal.,"LeveragingBERTforImprovedSearchRelevance,"MicrosoftResearchTechnicalReport,2020.

[27]NetflixTechBlog,"PersonalizationatNetflix:Algorithms,ABTesting,andInnovation,"2021.

[28]PinterestEngineeringBlog,"PoweringPersonalizedRecommendationswithLargeLanguageModels,"2022.

[29]D.S.Charetal.,"ImplementingMachineLearninginHealthCare:ChallengesandOpportunities,"NewEnglandJournalof Medicine,vol.382,no.11,pp.981-983,2020.

[30] J. Devlin et al., "FinBERT: Financial Sentiment Analysis with Pre-trained Language Models," arXiv preprint arXiv:1908.10063,2019.

[31]M.Vecchietal.,"TowardsaNewGenerationofAI-AssistedCreativeTools,"arXivpreprintarXiv:2104.05978,2021.

[32] S. Rajbhandari et al., "ZeRO: Memory Optimizations Toward Training Trillion Parameter Models," arXiv preprint arXiv:1910.02054,2019.

[33]J.Benderetal.,"OntheDangersofStochasticParrots:CanLanguageModelsBeTooBig?,"inProceedingsofthe2021ACM ConferenceonFairness,Accountability,andTransparency,2021,pp.610–623.

[34] M. Mitchell et al., "Model Cards for Model Reporting," in Proceedings of the Conference on Fairness, Accountability, and Transparency,2019,pp.220–229.

[35]OpenAI,"AIandCompute,"OpenAIBlog,2018.

[36]Microsoft,"DeepSpeed:Extreme-scaleModelTrainingforEveryone,"MicrosoftResearchBlog,2020.

[37]NVIDIA,"NVIDIADGXSuperPOD:ScalableInfrastructureforAILeadership,"NVIDIATechnicalOverview,2021.

[38]A.Jobinetal.,"ArtificialIntelligence:TheGlobalLandscapeofEthicsGuidelines,"NatureMachineIntelligence,vol.1,no.9, pp.389–399,2019.

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072 © 2024, IRJET | Impact Factor value: 8.226 | ISO 9001:2008 Certified Journal | Page1157

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN:2395-0072

[39]R.Shokrietal.,"Privacy-PreservingDeepLearning,"inProceedingsofthe2015ACMSIGSACConferenceonComputerand CommunicationsSecurity,2015,pp.1310–1321.

[40]PonemonInstitute,"DataRiskintheThird-PartyEcosystem,"PonemonInstituteResearchReport,2020.

[41] E. Ntoutsi et al., "Bias in Data-driven Artificial Intelligence Systems: An Introductory Survey," WIREs Data Mining and KnowledgeDiscovery,vol.10,no.3,p.e1356,2020.

[42]S.Birdetal.,"FairnessandTransparencyinNaturalLanguageProcessing,"inProceedingsofthe58thAnnualMeetingof theAssociationforComputationalLinguistics:TutorialAbstracts,2020,pp.17–21.

[43]IBMResearch,"AIFairness360,"IBMResearchTrustedAI,2021.

[44]M.Mitchelletal.,"ModelCardsforModelReporting,"arXivpreprintarXiv:1810.03993,2018.

[45] IEEE, "Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems," IEEEGlobalInitiativeonEthicsofAutonomousandIntelligentSystems,2019.

[46]OECD,"OECDPrinciplesonArtificialIntelligence,"OECDPublishing,Paris,2019.

[47]S.Zuboff,"TheAgeofSurveillanceCapitalism:TheFightforaHumanFutureattheNewFrontierofPower,"PublicAffairs, 2019.

[48]P.Shapiroetal.,"TheImpactofLanguageModelsonDisinformationandPropaganda,"arXivpreprintarXiv:2104.10764, 2021.

[49]D.AcemogluandP.Restrepo,"Artificial Intelligence,Automation,and Work,"in TheEconomicsofArtificial Intelligence: AnAgenda,2019,pp.197–236.

[50]ElectronicFrontierFoundation,"TheRisksofAI-PoweredSurveillance,"EFFReport,2021.

[51]PewResearchCenter,"ManyAmericansSayMade-UpNewsIsaCriticalProblemThatNeedsToBeFixed,"PewResearch Center,2019.

[52]J.Vincent,"OpenAI'sGPT-3CanWriteConvincingFakeNews,ResearchersFind,"TheVerge,2021.

[53]EuropeanCommission,"TacklingOnlineDisinformation,"EuropeanCommission,2021.

[54]EuropeanParliament,"DigitalServicesAct,"LegislativeTrain,2021.

[55] McKinsey Global Institute, "Jobs Lost, Jobs Gained: What the Future of Work Will Mean for Jobs, Skills, and Wages," McKinsey&Company,2017.

[56] J. Manyika et al., "Jobs Lost, Jobs Gained: Workforce Transitions in a Time of Automation," McKinsey Global Institute, 2017.

[57]WorldEconomicForum,"TheFutureofJobsReport2020,"WorldEconomicForum,2020.

[58]WorldEconomicForum,"ReskillingRevolution,"WorldEconomicForum,2021.

[59] IEEE, "Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems," IEEEGlobalInitiativeonEthicsofAutonomousandIntelligentSystems,2019.

[60]OECD,"OECDPrinciplesonArtificialIntelligence,"OECDPublishing,Paris,2019.

[61]PartnershiponAI,"AboutthePartnershiponAI,"PartnershiponAI,2021.

2024, IRJET | Impact Factor value: 8.226 | ISO 9001:2008