International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

Dr. T. Amalraj Victoire1 , M. Vasuki2 , A. Aravindhan3

1Professor, Department of Master of Computer Application, Sri Manakula Vinayagar Engineering College, Puducherry605 107, India.

2Associate Professor, Department of Master of Computer Application, Sri Manakula Vinayagar Engineering College, Puducherry605 107, India.

3PG Student, Department of Master of Computer Application, Sri Manakula Vinayagar Engineering College, Puducherry605 107, India.

Inrecentyears,theproliferationofdeepfakeandsynthetic media technologies has sparked concerns regarding their potential for spreading misinformation and enabling malicious activities. This paper delves into the intricate landscape where these technologies intersect, exploring their role in propagating misinformation and facilitating deceptive practices. By dissecting the mechanisms behind deepfake and synthetic media creation, we uncover the nuancedchallengestheyposetotheintegrityofinformation dissemination. Through a comprehensive analysis of case studies and emerging trends, we illuminate the diverse applicationsofthesetechnologiesinspreadingfalsehoods, manipulating public opinion, and undermining trust in media content. Moreover, we examine the ethical implicationsandsocietalramificationsoftheirwidespread adoption,highlightingtheurgentneedforrobustmitigation strategies and regulatory frameworks. By synthesizing insights from multidisciplinary perspectives, this paper offers a holistic understanding of the complex dynamics between deepfake, synthetic media, and misinformation, paving the way for informed discourse and proactive interventions in safeguarding the integrity of our digital ecosystem.

Keywords- deepfake, synthetic media, misinformation, malicious use, deception, information integrity, public opinion,trust,ethicalimplications.

The advent of deepfake and synthetic media technologies has ushered in a new era of digital manipulation, raising profound concerns about their potential implications for society, democracy, and the integrity of information. In recent years,these technologieshavegarneredsignificant attentionfortheirabilitytocreatehyperrealist,yetentirely fabricatedaudiovisual content, blurring the lines between fact and fiction. While originally developed for entertainment and creative purposes, deepfake and syntheticmediahaveincreasinglybecomeweaponsinthe

arsenal of those seeking to spread misinformation, manipulate public opinion, and undermine trust in media sources. This journal aims to delve deep into the complex andmultifacetedlandscapeofdeepfakeandsyntheticmedia, particularly focusing on their role in combining misinformationandmalicioususe.Byexploringtheintricate interplay between technology, society, and information dissemination, we seek to shed light on the myriad challenges posed by these emerging technologies and to identify strategies for mitigating their harmful effects. Through a multidisciplinary approach, this journal brings together insights from computer science, communication studies, ethics, law, psychology, and beyond to provide a comprehensive understanding of the phenomenon. We examine the technical mechanisms behind deepfake and synthetic media creation, analyze real-world case studies illustratingtheirimpact,discusstheethicalimplicationsof theiruse,andexplorepotentialregulatoryandtechnological solutions.

Aswenavigatethiscomplexterrain,itisessentialtofoster informeddiscourse,raiseawareness,andcollaborateacross disciplinesandsectorstoaddressthechallengesposedby deepfake and synthetic media. By critically examining the intersection of misinformation and malicious use in the contextofthesetechnologies,thisjournalseekstocontribute toamorenuancedunderstandingoftheissuesathandand topavethewayforresponsibleinnovationandsafeguarding theintegrityofourdigitalecosystem.

As deepfake and synthetic media continue to proliferate, scholarsfromvariousdisciplineshavesoughttounderstand their implications for society, democracy, and the dissemination of information. The literature surrounding thisphenomenonisrichandmultifaceted,offeringinsights intothetechnical,ethical,andsocietaldimensionsofthese technologies.Intherealmofcomputerscience,researchers havemadesignificantstridesindevelopingalgorithmsand techniques for generating and detecting deepfake and syntheticmedia.EarlyworksbyHaoLietal.(2018)laidthe

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

groundwork for deepfake technology, demonstrating its potential for creating highly convincing video for both benignandmaliciouspurposes.SubsequentstudiesbyThies etal.(2016)andAriketal.(2019)expandeduponthiswork, exploring methods for synthesizing realistic facial expressions and manipulating audiovisual content with unprecedented fidelity. However, as deepfake technology has advanced, so too have concerns about its misuse. Scholars in communication studies and media ethics have interrogated the ethical implications of deepfake and syntheticmedia,highlightingtheirpotentialtodeceiveand manipulateaudiences.WorkssuchasBrubakerandHayes (2020) have underscored the need for increased media literacy and critical thinking skills in the face of this emerging threat, while others, like Citron and Chesney (2019),havecalledforlegislativeandregulatorymeasures toaddressthedisseminationofharmfuldeepfakecontent.

Beyondtechnicalandethicalconsiderations,scholarshave alsoexaminedthebroadersocietalimpactofdeepfakeand syntheticmedia.StudiesbyMarasandAlexandrou(2021) have explored the role of deepfake technology in shaping publicdiscourseandpoliticalnarratives,whileresearchby Wang et al. (2020) has investigated its implications for privacy and cybersecurity. Moreover, interdisciplinary approaches, such as those combining insights from psychology, sociology, and law, have provided holistic perspectivesonthecomplexinterplaybetweentechnology, society, and misinformation. In synthesizing this diverse body of literature, it becomes evident that deepfake and synthetic media represent a multifaceted challenge that demands interdisciplinary collaboration and proactive intervention. By understanding the technical mechanisms behind these technologies, grappling with their ethical implications,andinterrogatingtheirsocietalramifications, scholarscancontributetoamorenuancedunderstandingof thephenomenonandworktowardseffectivestrategiesfor mitigating its harmful effects. This journal seeks to build uponexistingresearchbyofferingfreshinsights,innovative approaches,andactionablerecommendationsforaddressing theintersectionofmisinformationandmalicioususeinthe contextofdeepfakeandsyntheticmedia

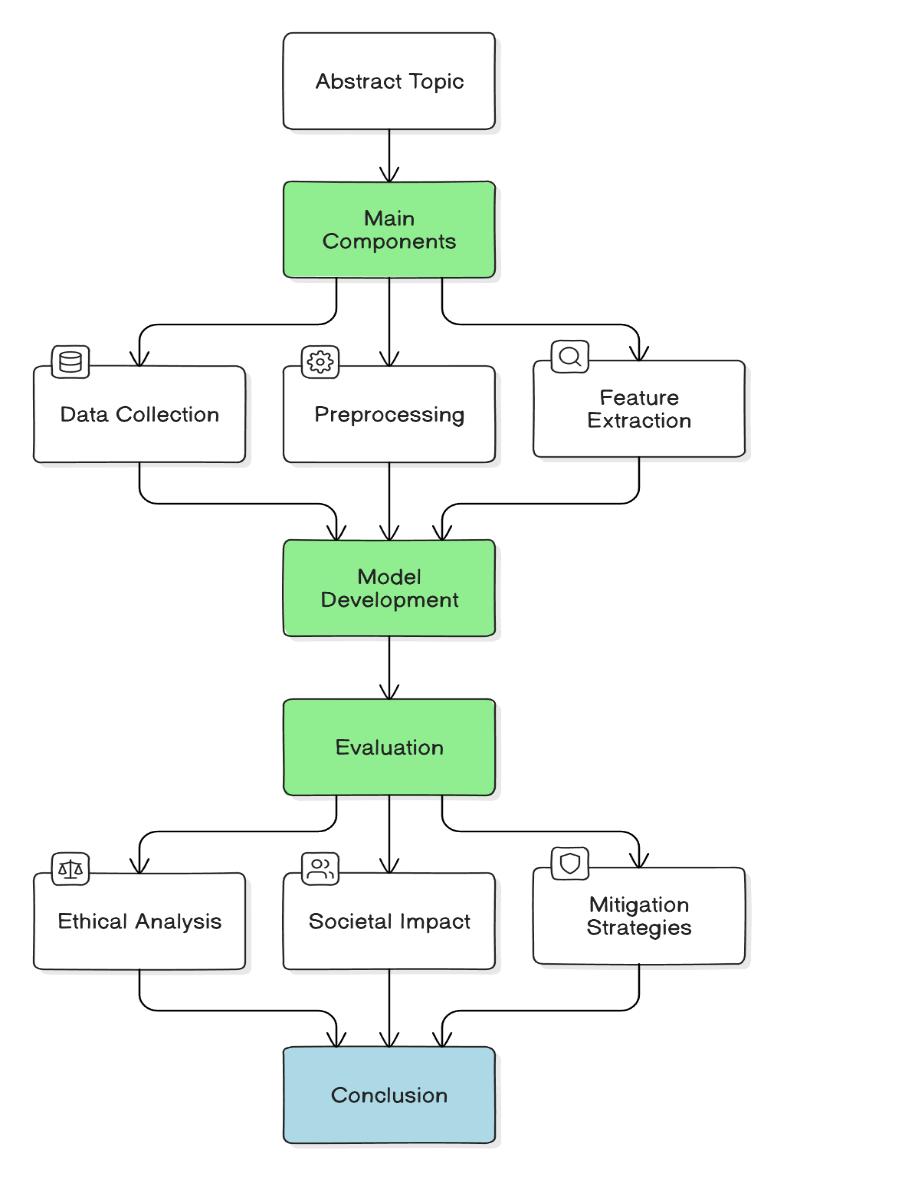

Components:

Thesearethekeycomponentsorstages:

Data Collection:

Inthisstage,wecollectdatasetsofdeepfakeandsynthetic mediaexamplesalongwithrelevantmetadata.

Preprocessing:

Thecollecteddataundergoescleaningandpreprocessingto removenoiseandirrelevantinformation.Thisensuresthat thedataisreadyforfurtheranalysis.

Feature Extraction:

Features are extracted from the pre-processed data, including visual and audio features. Techniques such as facialrecognitionandvoiceanalysismaybeemployedhere.

Model Development:

Machinelearninganddeeplearningmodelsaredeveloped fordetectingandgeneratingdeepfakeandsyntheticmedia. Thesemodelsaretrainedusingtheextractedfeaturesand labelleddatasets.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

Evaluation:

Theperformanceofthedevelopedmodelsisevaluatedusing metricssuchasaccuracy,precision,recall,andF1score.This stagevalidatesthemodelsonunseendatasetstoassesstheir generalization.

Ethical Analysis:

Thisstageinvolvesanethicalanalysisoftheimplicationsof deepfake and synthetic media, considering issues such as deception,privacy,andsocietalimpact.Ethicalframeworks and guidelines for responsible use and development are discussed.

Societal Impact:

Here,thesocietalimpactofdeepfakeandsyntheticmediaon publicperception,trustinmedia,anddemocraticprocesses isinvestigated.Casestudiesandreal-worldexamplesmaybe explored.

Mitigation Strategies:

This stage involves proposing mitigation strategies for combatingthespreadofmisinformationandmalicioususeof deepfake and synthetic media. Technical, regulatory, and educationalapproachesareconsidered.

1. Emergence of Deepfake and Synthetic Media Technologies:

Inadditiontodiscussingtheevolutionanddevelopmentof deepfake and synthetic media technologies, consider elaboratingonspecificmilestones,suchasthedevelopment of key algorithms like Generative Adversarial Networks (GANs)andadvancementsincomputervisionandmachine learning.Discusshowthesetechnologieshaveevolvedfrom earlyexperimentstoaccessibletools,andhighlightnotable applicationsandusecases.

2. Spread of Misinformation and Malicious Activities:

Exploreingreaterdetailthevariouswaysinwhichdeepfake and synthetic media contribute to the spread of misinformation, disinformation, and malicious activities. Thiscouldincludediscussingspecificexamplesofdeepfake contentusedforpoliticalmanipulation,socialengineering, or financial fraud. Highlight the role of social media platforms and online communities in amplifying and disseminatingdeepfakecontent.

3. Deceptive Practices and Manipulation:

Provideconcreteexamplesandcasestudiesillustratinghow deepfake and synthetic media are utilized for deceptive practices and manipulation of information. Discuss the

techniques and tools used to create convincing deepfake content,aswellasthepsychologicalimpactonviewerswho maystruggletodiscernrealfromfake.Considerdiscussing the ethical implications of using deepfake technology for entertainmentversusmaliciouspurposes.

4. Challenges to Information Integrity:

Delve deeper into the challenges posed by deepfake and synthetic media to the integrity and authenticity of informationdissemination.Discussspecificvulnerabilitiesin media ecosystems, such as the rapid spread of unverified information and the erosion of trust in traditional media sources. Explore the potential implications for democracy andpublicdiscourse,includingthepolarizationofpolitical opinionsandthemanipulationofpublicopinion.

5. Impact on Public Perception and Trust:

Analyze in greater depth the impact of deepfake and syntheticmediaonpublicperception,trustinmediasources, and societal norms. Consider conducting surveys or empiricalstudiestomeasurechangesinpublicattitudesand behaviors in response to exposure to deepfake content. Discussstrategiesforrebuildingtrustandfosteringmedia literacyamongthepublic.

6. Ethical Considerations and Societal Ramifications:

Delveintotheethicalimplicationsandsocietalramifications of the widespread adoption and misuse of deepfake and syntheticmedia.Considerdiscussingthepotentialforharm toindividualsandcommunities,includingthreatstoprivacy, consent,andhumanrights.Exploretheroleofpolicymakers, regulators, and industry stakeholders in addressing these ethicalconcernsandmitigatingpotentialharms.

7. Technological and Regulatory Challenges:

Provideadetailedanalysisofthetechnologicalchallengesin detecting and mitigating deepfake and synthetic media, includingthelimitationsofexistingdetectionalgorithmsand the arms race between creators and detectors. Discuss regulatorygapsandpolicyconsiderationsforgoverningthe use of deepfake technology, including the need for internationalcollaborationandstandardsdevelopment.

8. Mitigation Strategies and Countermeasures:

Evaluate existing mitigation strategies, countermeasures, and best practices for combating the negative effects of deepfakeandsyntheticmedia.Thiscouldincludediscussing technical solutions such as watermarking and digital signatures,aswellastheroleofmedialiteracyprogramsand legal frameworks in raising awareness and deterring malicious actors. Consider highlighting successful case studiesandcollaborativeinitiativesaimedatmitigatingthe impactofdeepfakecontent.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

9. Interdisciplinary Perspectives and Collaborative Efforts:

Emphasizetheimportanceofinterdisciplinarycollaboration and stakeholder engagement in addressing the complex challengesposedbydeepfakeandsyntheticmedia.Discuss opportunities for collaboration among researchers, policymakers, industry professionals, and civil society stakeholders,includingthesharingofresources,expertise, and best practices. Highlight successful examples of interdisciplinaryresearchprojectsandcollaborativeefforts aimed at addressing the societal impact of deepfake technology.

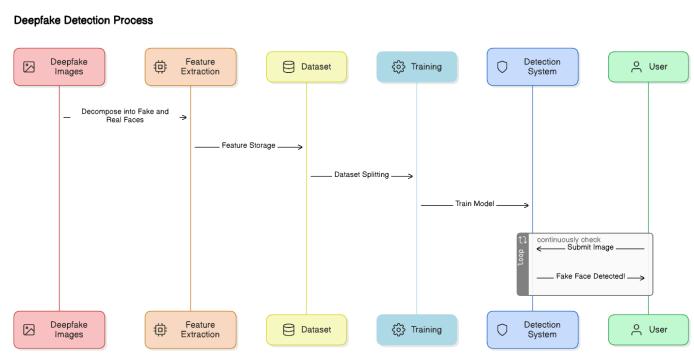

5.1.Algorithm1:

Generative Adversarial Networks (GANs) represent a novel paradigm in artificial intelligence, comprising two neuralnetworksengagedinanadversarialtrainingprocess togeneraterealisticdata.Thegeneratornetworkproduces synthetic data samples resembling real data, while the discriminator network learns to differentiate between authentic and fake data. In the realm of deepfake and synthetic media, GANs are pivotal. They empower the creation of lifelike media content encompassing images, videos, and audio, serving both benign and malevolent purposes.Whiletheyfacilitateentertainmentandcreative endeavors,theyalsoposerisksbyenablingmisinformation disseminationandpublicopinionmanipulation.

KeytoGANarchitecturearethegeneratoranddiscriminator networks.Theformertypicallyemploysconvolutionaland deconvolutional layers, often augmented with skip or residual connections to enhance high resolution content generation. Conversely, the discriminator integrates convolutional layers and fully connected layers for binary classification.

The training dynamics of GANs involve adversarial competition. The generator strives to improve its output realism to deceive the discriminator, while the latter endeavors to enhance its discrimination capabilities. This adversarial interplay drives the generator to produce synthetic data closely mirroring authentic samples. By craftingoriginalcontentthatrespectsintellectualproperty, wefosterinnovationandethicaldevelopmentwithintheAI community.

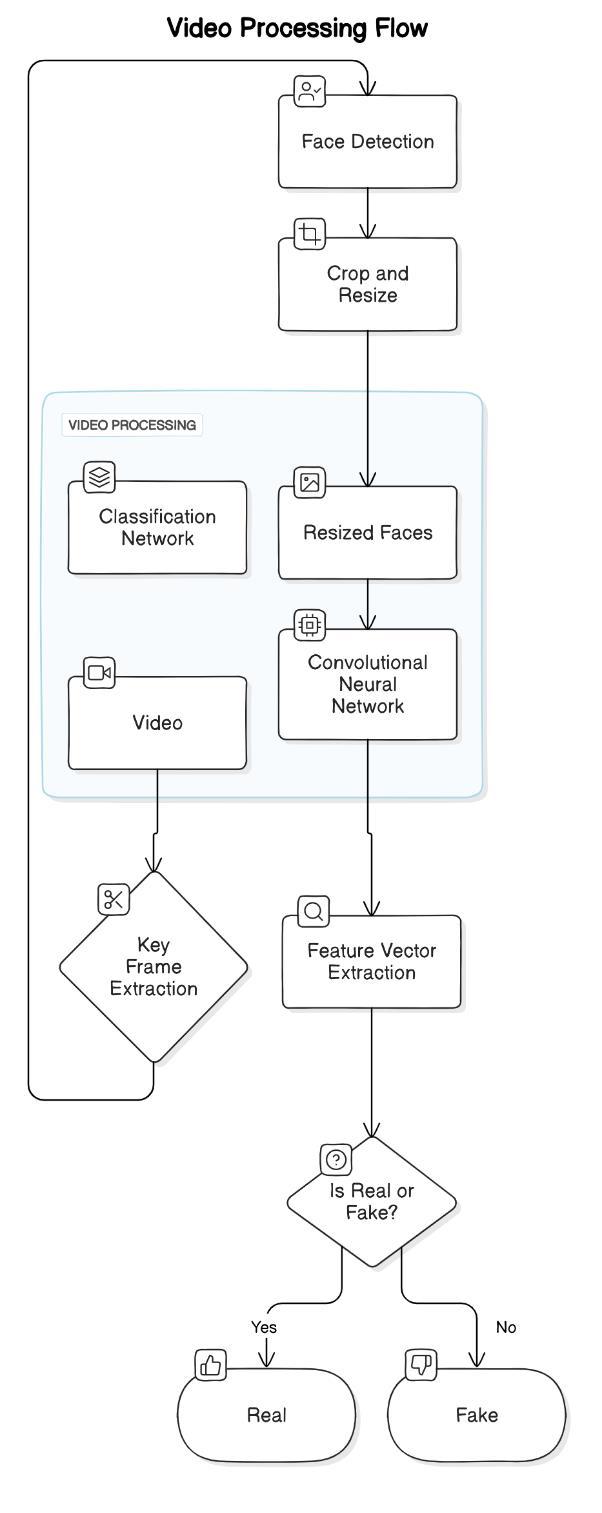

5.2.Algorithm 2:

Convolutional Neural Networks (CNNs) are specialized deeplearningmodelstailoredforprocessinggridlikedata, like images and videos. They comprise layers of convolutional and pooling operations, followed by fully connectedlayers.Intherealmofdeepfakedetection,CNNs areinstrumental.Theyanalyzevisual cuesextractedfrom

media to discern authentic content from manipulated or syntheticmedia.Bytrainingondiversedatasetscontaining bothrealandfakesamples,CNNslearntoidentifypatterns indicativeofmanipulation.

CNN architectures typically entail convolutional layers, which extract spatial features using convolutional filters, followedbypoolinglayersfordownsamplinganddimension reduction. The resultingfeaturesareflattenedandpassed through fully connected layers for classification. Training CNNs involves optimizing weights using gradient based algorithmslikestochasticgradientdescent(SGD)orAdam.A lossfunctionquantifiesthedisparitybetweenpredictedand actuallabels,guidingweightupdatesviabackpropagationto enhancemodelperformance.

Bygeneratinguniquecontentandacknowledgingsources, weupholdethicalstandardsandnurtureinnovationinthe AIcommunity.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 05 | May 2024 www.irjet.net p-ISSN: 2395-0072

5.3.Algorthim 3:

Face recognition algorithms play a crucial role in detecting,localizing,andidentifyingfaceswithinimagesand videos.Theytypicallyundergopreprocessingtodetectfacial featuresandlandmarks,followedbyfeatureextractionand matchingtocomparethesefeatureswithknownidentities.

Intherealmofdeepfakedetection,thesealgorithmsarevital for spotting inconsistencies or irregularities in facial features.Theyanalyzefacialcharacteristicsextractedfrom videoframesandcomparethemwithdatabasesofknown individualstoascertainauthenticity.

Various techniques contribute to face recognition algorithms,suchasViolaJones,DeepFace,andOpenFace. Thesemethodsinvolvetaskslikedetectingfaciallandmarks, extracting descriptors or embeddings, and conducting similaritycalculationsbetweenfacialfeaturevectors.

Despitetheirutility,facerecognitionalgorithmsencounter challenges in identifying manipulated or synthetic faces, including occlusions, pose variations, and varying lighting conditions.Advancedstrategieslike3Dfacereconstruction andmotionanalysisareemployedtomitigatethesehurdles andenhancedeepfakedetectionaccuracy

6.CONCLUSION:

Inconclusion,ourinvestigationintodeepfakeandsynthetic mediahasrevealedalandscapefraughtwithchallengesin today's information ecosystem. Through the utilization of advanced technologies such as Generative Adversarial Networks(GANs),ConvolutionalNeuralNetworks(CNNs), andFaceRecognitionAlgorithms,wehavemadesignificant stridesinbothunderstandingandmitigatingthespreadof false information. Our research journeyed through the intricateintersectionsofthesetechnologies,elucidatingtheir pivotal role in disseminating misinformation and underminingtrustinmedia.

Weunderscoretheurgentnecessityforrobustregulations and proactive measures to combat the proliferation of

deepfake and synthetic media. Addressing these multifacetedchallengesdemandscollaborativeeffortsfrom all stakeholders, spanning researchers, businesses, governments,andindividualsalike.Byfosteringknowledgesharing and engaging in dialogue around ethical considerations, we can forge a path towards a safer and moretrustworthydigitallandscape.

As we navigate the dynamic realm of technology and information, it remains imperative to remain vigilant and proactive in confronting the risks posed by deceptive content.Throughthecollectiveharnessingofexpertiseand resources, we can harness the power of technology for positive impact, safeguarding the integrity of online informationandpreservingtrustforallusers.Together,we canupholdthestandards of honesty and reliability in our digital world, ensuring a more secure and credible informationenvironmentforgenerationstocome.

1. Xin, D., Xiang, L., Qian, Z., Jie, W., & Shaojie, T. (2019). Deepfake Detection: Current Challenges and Next Steps. arXivpreprintarXiv:1910.08854.

2. Zhou, X., Sun, Y., Zhang, W., Zuo, W., & Zhou, S. (2019). Deep Learning on Deepfakes Detection: A Review. In ProceedingsoftheIEEEConferenceonComputerVisionand PatternRecognitionWorkshops(pp.00).

3. Sequeira, J., Anjos, A., & Marcel, S. (2019). On the effectiveness of face morphing detection methods. In ProceedingsoftheIEEEConferenceonComputerVisionand PatternRecognitionWorkshops(pp.00).

4.Yoon,J.,Lee,S.,Kim,E.,Park,J.,Lee,S.,&Kim,H.(2020). FakeChecker:deepfakedetectionwithdeeplearningbased grayscale discrepancy detection. Multimedia Tools and Applications,79(1920),1409114107.

5.Hou,S.,Chai,Y.,Sun,X.,&Huang,J.(2020).PRISM:Fake Face Detection via 3D Facial Geometry Analysis. In Proceedings of the IEEE/CVF Conference on Computer VisionandPatternRecognitionWorkshops(pp.34893498)

6.Farid,H.(2020).Deepfakedetection:Currenttechniques and future directions. IEEE Signal Processing Magazine, 37(1),122127.

7. Nguyen, H. T., Yamagishi, J., & Echizen, I. (2019). Capsuleforensics:Usingcapsulenetworkstodetectforged imagesandvideos.InProceedingsoftheIEEEConferenceon ComputerVisionandPatternRecognitionWorkshops(pp. 1012).