International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN:2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN:2395-0072

Mrs. Sneha Sankhe1,Mr. Mohd Sami Niyazuddin Gawandi2, Mr. Harsh Gharat3, Mr. Rugved Patil4

1,2,3Information Technology, Theem college of Engineering, Mumbai, India 1Professor, Department of Information Technology, Theem College of Engineering, Boisar, Maharashtra, India

Abstract - In an effort to enhance the quality of life and autonomyof disabled individuals, this research presents a novel approach to designing gesture-controlled home appliances throughthe utilizationofa ConvolutionalNeural Network (CNN) algorithm. The integration of technology into the lives of disabled individuals has the potential to significantly mitigate the challenges they encounter in performing everyday tasks. Leveraging the capabilities of CNNs, which have demonstrated remarkable proficiency in image recognition tasks, this study aims to develop a system that interprets andresponds tospecific gestures executed by individuals with disabilities. The proposed system harnesses the power of deep learning to accurately classify and recognize a diverse range of gestures, facilitating seamless interaction with household appliances. To realize this, a comprehensive dataset comprising a wide array of gestures relevant to household activities will be curated and utilized for training the CNN model. The model will undergo rigorous optimization and validation processes to ensure its reliability and generalizability. By enabling gesture-based control of home appliances, individuals with disabilities can exercise greater autonomy and independence in their domestic environments. The system holds the potential to empower users by affording them the ability to operate appliances such as lights, fans, and home entertainment systems through intuitive gestures, thereby eliminating the need for traditional physical interfaces. This not only enhances their sense of agency but also fosters a sense of inclusion and equality within their communities. Moreover, gesture-controlled appliances offer a user-friendly and intuitive means of interaction, potentially eliminating barriers posed by traditional control mechanisms that may require intricate motor skills. The implementation of this system necessitates a multi-faceted approach encompassing data collection, preprocessing, model architecture design, training, and deployment. The CNN model's accuracy and robustness will be systematically evaluated through a battery of tests, encompassing scenarios of varying complexity and environmental conditions. Furthermore, considerations will be given to the adaptability of the system, ensuring compatibility with a range of home appliances and minimal latency in gesture recognition. Ethical dimensions of the technology, including user privacy and data security, will be addressed through stringent measurestosafeguardsensitiveinformation.

Key Words: Hand Gesture, CNN, Hardware

The overview involves the development of a gesture-controlled system for home appliances, catering specificallytoindividualswithdisabilities.Thisinnovative approach harnesses the power of Convolutional Neural Networks (CNNs) to interpret and respond to various gestures, enabling seamless interaction with household devices. By utilizing deep learning techniques, the system aims to enhance user independence and empowerment, offering an intuitive and inclusive means of controlling everyday appliances. The project encompasses several crucial stages, including data collection, preprocessing, CNN model architecture design, training, validation, and deployment. Ethical considerations, privacy, and data securityareintegralcomponentsofthesystemdesignThe need for home automation is becoming increasingly evident as modern living continues to evolve. Home automation offers a solution to a variety of pressing challenges and demands in our daily lives. First and foremost, it enhances convenience and comfort by allowing homeowners to control and manage various aspects of their living space with unprecedented ease. From adjusting lighting and temperature to locking doors and monitoring security, home automation systems streamline the control of the home environment. Beyond convenience, homeautomationalsoplays a crucial role in enhancing energy efficiency and sustainability. Smart thermostats and lighting systems can optimize energy consumption, ultimately reducing utility bills and carbon footprints. For those with disabilities or limited mobility, home automation represents a means of regaining independence, as it enables them to manage their surroundings without physical exertion. Moreover, the integration of home security features, such as video doorbells and surveillance cameras, fosters a heightened senseofsafetyandpeaceofmind.

The concept of gesture-controlled technology has gained significant traction in recent years, driven by advancements in artificial intelligence and machine learning.Gesturerecognition,asubsetofhuman-computer interaction, involves interpreting human gestures and translating them into commands that computers or devices can understand and execute. This technology has

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN:2395-0072

found applications in various fields, including gaming, virtual reality, robotics, and accessibility solutions. For individuals with disabilities, traditional modes of interaction with technology may present substantial challenges. This disparity in accessibility highlights the pressing need for innovative solutions that cater to the diverse needs of disabled individuals, enabling them to interact with technology and their surroundings more independently.

Automized Gesture Controlled SP The proposed algorithm operates in conjunction with a program coded in Arduino, utilizing specific commands to activate the system through gesture recognition. This program is loaded onto the Arduino platform, which interfaces with the circuit to produce an output relayed through a twochannel relay. The gesture apparatus incorporates an infrared emitter that serves as the source of the infrared signal.Whenanobstacleorgestureispresentedinfrontof the device, the infrared signal emitted by the emitter is reflected back from the surface of the obstacle. This reflected signal is detected by the photodiodes integrated within the APDS-9960 gesture device. These signals are subsequently transformed into actionable commands based on a pre-loaded program within the Arduino, enabling control of electronic devices for activation and deactivation. These commands are then visually represented as arrow indicators on the OLED display for userfeedback.[1]

Gesture based Home appliance control system for Disabled People The proposed system employs advanced deep learning algorithms and artificial intelligence techniques as an alternative to costly, high-maintenance sensors. Thisapproachallowsfor remotecontrol ofhome appliances even in areas with limited or no internet connectivity, particularly beneficial in regions like rural areas. As a result, elderly and disabled individuals can conveniently operate their home appliances through our innovativesystem. [2]

Gesture Controlled Home Automation using CNN A system is introduced that automates sign recognition and detection using deep learning and artificial intelligence, reducing the need for costly and high-maintenance sensors. The system processes real-time RGB images, preprocessedtoenhancegestureclarity,utilizingMATLAB Rb for simulation. A novel approach in focuses on classifying multiple gestures simultaneously using a Long Short-TermMemory(LSTM)baseddeepnetworkinspired by Encoder-Decoder architecture, enhancing gesture recognitionaccuracy.[3]

A Home Appliance Control System with Hand Gesture based on Pose Estimation Authors: Yuuki Muranaka, Mohammed Al-Sada, Tatsuo Nakajima Several previous

studieshaveexploredtheutilizationofwearableterminals for hand-gesture input. While the advantages of gesture controlforhomeappliancesincludetouchlessandeyefree operation, wearable systems come with certain drawbacks. They necessitate the device to be worn, adjusted, and fitted, leading to time-consuming and cumbersome routines for daily use. Our approach tackles theselimitationsbyintegratingcamerasandhand-gesture detectioncapabilitiesdirectlyintovariousappliances.[4]

The system architecture for the gesture-controlled device designed to empower individuals with disabilities is structured to seamlessly combine various components andfunctionalities.Atitscore,thisarchitectureconsistsof a Gesture Recognition Module responsible for capturing and interpreting hand movements, a Processing Unit equipped with machine learning algorithms to recognize gestures and user intent, a User Interface that facilitates interaction and provides feedback, and an Appliance Control Interface for establishing connections with home appliances.

The design of a gesture-controlled system tailored for individuals with disabilities is a meticulously crafted framework that orchestrates the seamless interplay of its constituent elements. At its essence, this design revolves around the Gesture Recognition Module, the 10 system's sensory gateway, which captures intricate hand movements with precision. Preprocessing techniques refine the incoming data, preparing it for analysis by the Processing Unit. This unit, powered by sophisticated machine learning algorithms, deciphers the gestures, discerns user intent, and initiates corresponding actions. TheUser Interface,a crucial facet ofthe design, facilitates user interaction and feedback, while offering customizationoptionsandaccessibilityfeaturestocaterto adiverseuserbasewithuniqueneeds.Simultaneously,the Appliance Control Interface forms the bridge to connect with household devices, enabling the seamless execution ofappliancecontrolcommands.

Requirement analysis for a gesture-controlled device tailored for individuals with disabilities involves identifying and defining the specific needs and expectations of the users, as well as the technical requirementsofthesystem.

HardwareRequirements:

1. Camera

2. ArduinoUNO

3. Breadboard

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN:2395-0072

4. Male-femalejumperwires

5. LEDandMotors

SoftwareRequirements:

1. Python and Python Libraries(OpenCV, Cvzone, math,Numpy,etc.)

2. JupyterNotebook

3. VisualStudio

Alongwiththeaboverequirements,theConvolutional Neural Network (CNN) algorithm is used to train the model. They have revolutionized the field of computer vision and are widely used in various applications, including image classification, object detection, image segmentation,andmore.

3.3

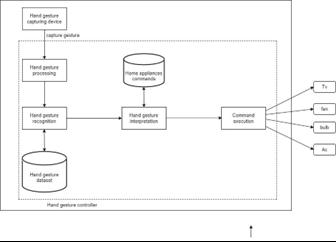

fig1.ProposedSystemofGesture-ControlledHome Appliances

The Proposed System of Gesture-Controlled Home Appliances is divided into three sides namely Client side, Backend processing, and Home interface. On the client side, the user has to submit a gesture, in this phase the user has to capture a gesture which is further captured andpassedtothesystemforgesturerecognition.Thenext phaseisbackendprocessingwherethesystemfetchesthe capturedgestureandstartsrecognizingthegesturemeans whether the gesture is accurate or not. Here, image processing by means of a Convolutional Neural Network (CNN) algorithm is done, and with the help of a gesture dataset, the gesture is recognized. Then a classified gesture comes out and it is converted into a command by the microcontroller. In the last phase, the predefined command for a specific home appliance is executed to changeitsstatus(ON\OFF).

fig2.flowdiagramforGesture-ControlledHome Appliances

An activity diagram is a diagram used in software engineering to model and visualize the workflow or flow of control within a system, process, or component. The user submits the gesture to the model for feature extractionandthetrainedmodelclassifiestheimageifthe gestureisaccuratethecommandbasedonthegesturewill be executed otherwise user has to resubmit the gesture. The command based on the gesture is send for the updatingappliancestatus.

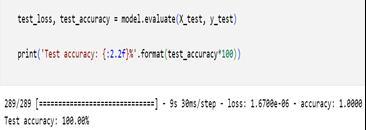

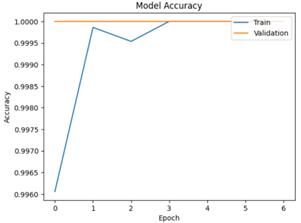

fig3.Accuracypercent

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN:2395-0072

4. Results

A gesture-based home automation system represents a groundbreakingleapinintuitiveandhands-freecontrolof household devices. This innovative solution enables users to effortlessly interact with their environment through simple hand movements, fostering a natural and accessible user interface. The hands-free operation not only promotes convenience but also accommodates individuals with physical disabilities, contributing to a more inclusive living space. Beyond its user-friendly aspects, gesture control enhances energy efficiency by allowing users to easily manage and optimize the operation of various devices. The system's customization options enable users to tailor gestures to specific actions, adding a personalized touch to the smart home experience. Integrating seamlessly with existing smart home ecosystems, this technology encapsulates a modern and tech-savvy aesthetic, while its scalability ensures adaptability to future technological advancements. In essence, a gesture-based home automation system signifiesatransformativeshifttowardsamoreconnected, efficient,andfuturisticlivingenvironment.

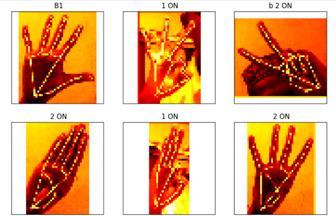

The above image is the GUI for the user to perform gestures. As per predefined gestures, the user has to perform a gesture to update the status (ON/OFF) of the appliances.

Fig5.ONLED

ThestatusoftheLEDi.e.OFFbeforeperformingadefined gesture for updating its status. Once a gesture get performedtheLEDwillgetswitchedON.sthestatusofthe LED i.e. ON after performing a defined gesture for updating its status. After, the LED is turned off once another gesture is performed otherwise it will continue glowinguntilthepowersourceisturnedoff.

In conclusion, the concept of "Gesture-Controlled Home Appliances for Disabled People Using CNN" represents a groundbreaking and transformative innovation in the realm of accessible technology. This system leverages Convolutional Neural Networks (CNN) to recognize and interpret hand gestures, offering a new dimension of controlandindependenceforindividualswithdisabilities. By simplifying the interaction with home appliances and eliminating physical barriers, it promotes inclusivity, convenience, and a higher quality of life for those facing mobility impairments, visual impairments, or other disabilities. The project offers a multitude of advantages thatcansignificantlyenhancethelivesofindividualswith disabilities and promote inclusivity. One of the primary benefitsisthesubstantialimprovementinaccessibility,as the system provides an alternative means of controlling home appliances, reducing reliance on physical switches andexternalassistance.

[1] Neethu PS, Dr. R Suguna, Dr. Divya Sathish, “Real Time Hand Gesture Recognition System”, InternationalJournalofEmergingTechnologiesin

International Research Journal of Engineering and Technology (IRJET) e-ISSN:2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN:2395-0072

EngineeringResearch(IJETER),Volume5,Issue3, March2017.

[2] Mr.KunalA.Wankhade,Prof.GauriN.Zade,“Sign LanguageRecognitionForDeafAndDumbPeople Using ANFIS”, International Journal of Science Engineering and Technology Research (IJSETR), Volume3,Issue5,May2014.

[3] ManishaU.Kakde,AmitM.Rawate,“HandGesture Recognition System for Deaf and Dumb People Using PCA”, International Journal of Engineering Science and Computing, Volume 6, Issue 7, July 2016.

[4] Harish Kumar Kaura, Vipul Honrao, Sayali Patil, Pravish Shetty, “Gesture Controlled Robot using Image Processing”, International Journal of Advanced Research in Artificial Intelligence (IJARAI),Volume2,Issue5,2013.

[5] Priyanka D. Hatwar, Neha A. Wahile, Isha M. Padiya, “Hand Gesture Recognition using Inertial Motion Units(IMUs)”, International Journal of Emerging Technologies in Engineering Research (IJETER),Volume5,Issue3,March2017.

[6] Rafiqul Zaman Khan, Noor Adnan Ibraheem, “HandGestureRecognition”,InternationalJournal of Artificial Intelligence & Applications (IJAIA), Volume3,Issue4,July2012

[7] Rishad E K, Vyshakh C B, Shameer U Shahul, “Gesture Controlled Speaking Assistance for DumpandDeaf”,NationalConferenceOnDiscrete Mathematics&Computing(NCDMC),2017.

[8] Raj,JenniferS.,andJ.VijithaAnanthi."Automation Using IoT in the Greenhouse Environment." Journal of Information Technology 1, no. 01 (2019):38-47.

[9] Prakhyath Rai, Ananya Alva, Gautami K. Mahale, Jagruthi S. Shetty, Manjushree A. N., “Gesture Recognition System”, International Journal of Computer Science and Mobile Computing (IJCSMC), Volume 7, Issue. 5, May 2018, pg.164 –175.