International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

Prof. Bharath Bharadwaj B S1 , Ms. Ashwini S2 , Mr. Basavaraj 3 , Mr. Rahul Raj H4 , Mr.Shreevishnu M5

1 Assistant Professor, Dept. of Computer Science and Engineering, Maharaja Institute of Technology Thandavapura 2,3,4,5Students, Dept of Computer Science and Engineering, Maharaja Institute of Technology Thandavapura ***

Abstract - IndividualDistinguishingproofinnovationhas7 acquired critical fascination as of late where it is finding applications in different areas, for example, security, reconnaissance and policing. In this paper it fosters an answerforrecognizeanindividualwhosedatasetsareput awayindatasetbyuseofUnmannedAeronauticalVehicle (UAV). As of late customary techniques might confront difficulties inactuallycheckingandrecognizing people. In thisway,intheproposedframeworkwiththehighlevelPC visioninnovationandcalculationslikeLBPH,HaarFountain theframeworkinteractionthecaughtlivevideoobservation utilizingdronewhereitcatchesthelivevideowiththefitting pointof37.5degrees.Thisstrategygivesexactnessof90%.

Key Words: Unmanned Aeronautical Vehicle, LBPH, Haar Cascade.

With the fast development of urbanization, the administrationandobservationofthicklypopulatedregions have become progressively intricate and testing. Conventionaltechniquesforindividualdistinguishingproof andchecking,likemanualwatchesorfixedCCTVcameras, frequentlybattletoadapttothedynamicandblockednature of metropolitan conditions. In light of these difficulties, arisingadvanceslikeautomatedflyingvehicles(UAVs)offer apromisinganswerforimprovereconnaissancecapacitiesin thickregions.

Thispaperpresentsanoriginalmethodologyforindividual ID in thick regions utilizing drone reconnaissance. By utilizing progressions in drone innovation and PC vision calculations,theproposedframeworkplanstoaddressthe constraints of customary reconnaissance techniques and work on the proficiency and adequacy of individual recognizableproofinpackedmetropolitansettings.

Drones furnished with high-goal cameras and complex picture handling abilities can possibly give exhaustive inclusion of thickly populated regions according to an airborneviewpoint.Dissimilartofixedcameras,dronesoffer adaptability and portability, permitting them to explore throughcloggedroads,screenenormousgroups,andaccess hard-to-arrive at areas effortlessly. This versatility empowersongoingobservingofdynamiccircumstancesand improves situational mindfulness for policing, security faculty,andmetropolitanorganizers.

Throughthisexploration,weexpecttoshowtheattainability andadequacyofinvolvingdronesforindividualIDinthick regionsandfeaturethelikelyutilizationsofthisinnovation in upgrading public wellbeing, security, and metropolitan preparation. By utilizing the capacities of UAVs and high level PC vision procedures, we imagine a future where drone-based reconnaissance assumes an essential part in guaranteeing the security and prosperity of metropolitan populacesinthicklypopulatedregion.

The vital goal of this study is to foster a coordinated frameworkthatcanpreciselyrecognizepeopleinsidethick metropolitan conditions utilizing drone-based reconnaissance. This includes the plan and execution of vigorousPCvisioncalculationsequippedforidentifyingand perceiving people from airborne symbolism caught by rambles.Highlevelstrategies,forexample,swarmdivision, highlightextraction,HaarFountainandLBPHwillbeutilized todefeatthedifficultiespresentedbycomplexfoundations, impediments,andvarietiesbyallaccounts.

Involving drones for individual ID in thick regions could servedifferentthoughtprocesses,goingfromsecurityand reconnaissancetolookandprotecttasksorevengroupthe board. Here is a breakdown of likely thought processes: Security and Reconnaissance, Policing, and Salvage, Crisis Reaction, Group The board, Metropolitan Preparation and Foundation The executives, Boundary Security. It's fundamentaltoconsiderthemoralandlawfulramifications of involving drones for individual recognizable proof in thickly populated regions, including security concerns, informationassurance,andguaranteeingconsistencewith guidelines administering drone tasks and observation exercises.

1.4.1

IntheareaofinnovationFacerecognitionistreatedasthe requesting and essentially applied approach. The recognizable proof of each face present in a picture is the significant errand of the face identification. Here the executionisfinishedutilizingOpenCV.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

i. Stackingtheinfopictures.

ii. Changing over the information pictures into dark scalepictures.

iii. ApplyingtheHaaroverflowandLBPclassifier.

iv. Contrastingbothclassifierinlightoftheprecision andtime.

a. Importingtherequiredlibraries

b. Takingtheimageswhicharecapturedbythe camera.

c. Toprocesstheimagethroughtheclassifiersit isconvertedintograyscaleimage.

d. ImagewillbeloadedusingOpenCVeBydefault, imagewillbeloadedintoBGRcolorspace.

1.4.2 HAAR CASCADE CLASSIFER

i. Loading the input image using built in function cv2.imread(img_path),herethepassingtheimage pathasaninputparameter

ii. Converting it to gray scale mode and then displayingit

iii. LoadingtheHaarcascadeclassifier

Fig. 1 represents the Haar like feature. It consists of edge featureandlinefeature.Inthegray-scaleimagethewhite barrepresentsthepixelsthatareclosertothelightsource [1].

Haar value calculation:

Pixel value = (Sum of the Dark pixels ∕ Number of Dark pixels)−(SumoftheLightpixels∕NumberofLightpixels)(1)

HaarClassifierisanitemlocationcalculation.Todistinguish thearticleandtorecognizewhatitis;thehighlightswillbe removedfromthepicture.UtilizingCondition(1)Haarpixel worthcanbedetermined[1820]

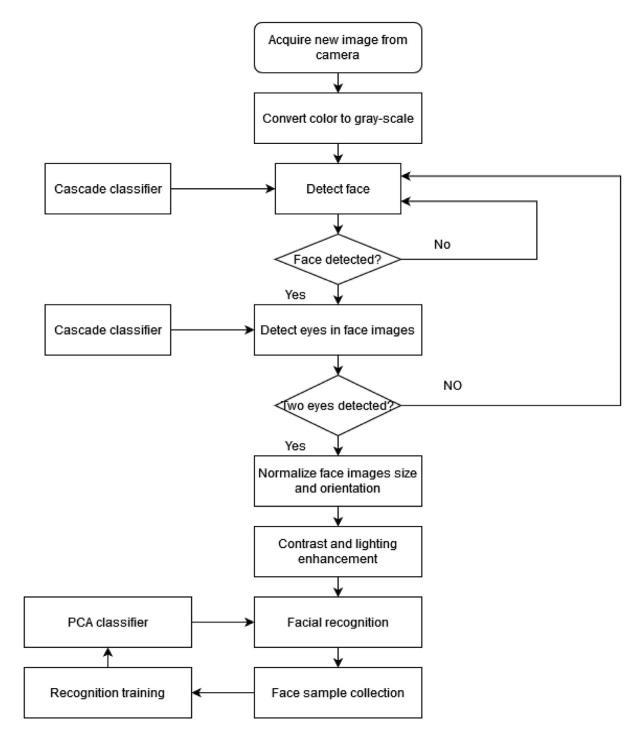

Fig1.representstheflowchartoftheHaarcascadeclassifier. Oncethecamera acquirestheimageitconvertstheimage intogray-scale.Thecascadeclassifierdetectstheface,ifthe faceisdetectedthentheclassifieronceagainchecksforthe botheyesinthedetectedfaceandiftwoeyesaredetectedit normalizes the face images size and orientation. Then the imageisprocessedforfacerecognitionwheretheimageis compared with the face sample collection [24-25]. ImportanceofHaarCascadeclassifieristhatthePerception precisionismoreandthePositiverateisless

Face acknowledgment in human-thick puts is larger part dependedonfixedcamerashavinginclusionregions.Which flopsindistinguishinganindividualfromsignificantdistance or even bombs in perceiving an individual for ID. It even dealswithissuesinthesituationoffixedcameraandinany event, coving all over thick region will be a significant hindrance.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

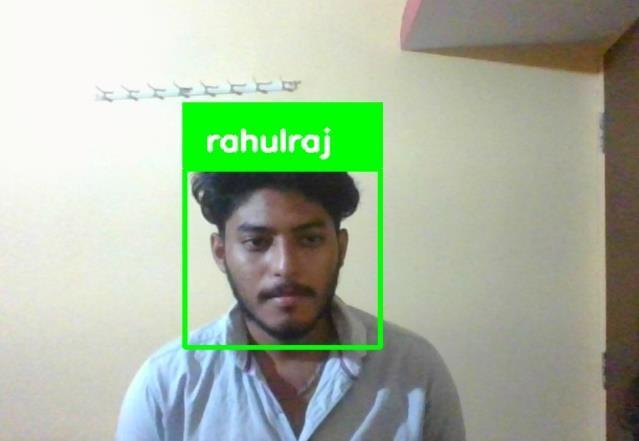

Every one of the understudies of the class should enroll themselves by entering the expected subtleties and afterwardtheirpictureswillbecaughtandputawayinthe dataset. During every meeting, appearances will be distinguishedfromlivewebbasedvideoofhomeroom.The facesrecognizedwillbecontrastedandpicturespresentin thedataset.Ontheoffchancethatmatchfound,therewould be signal sound to convey that the individual is distinguished.

Ordinarilythiscyclecanbepartitionedintofourphases:

1. Dataset Creation

Pictures of understudies are caught utilizing a web cam. Differentpicturesofsingleunderstudywillbeprocuredwith changedsignalsandpoints.Thesepicturesgothroughprehandling. The pictures are edited to get the District of Interest(returnoninitialcapitalinvestment)whichwillbe additionally utilized in acknowledgment process. Subsequentstageistoresizetheeditedpicturestospecific pixelposition.Thenthesepictureswillbechangedoverfrom RGBtodarkscalepictures.Andafterwardthesepictureswill be saved as the names of particular understudy in an organizer.

2. Face Detection

Face location here is performed utilizing Haar-Fountain ClassifierwithOpenCV.HaarOutpouringcalculationshould bepreparedtoidentifyhumancountenancesbeforeittends to be utilized for face recognition. This is called include extraction.TheHaaroverflowpreparinginformationutilized isaxmlrecordHaarcascade_frontalface_default.Hereweare utilizing detectMultiScale module from OpenCV. This is expectedtomakeasquareshapearoundthecountenances in a picture. It has got three boundaries to considerscaleFactor,minNeighbors,minSize.scaleFactorisutilizedto demonstrate how much a picture should be decreased in eachpicturescale.minNeighborsdeterminesthenumberof neighbors every up-and-comer square shape that should have.Higherqualitiesnormallydistinguisheslessfacesyet identifies top notch in picture. minSize indicates the base article size. Of course it is (30,30) [8]. The boundaries utilizedinthisframeworkisscaleFactorandminNeighbors withthequalities1.3and5separately.

3. Face Recognition

Face acknowledgment interaction can be partitioned into three steps prepare preparing information, train face recognizer,expectation.Herepreparinginformationwillbe thepicturespresentinthe dataset.Theywill be relegated withawholenumbernameoftheunderstudyithasaplace with. These pictures are then utilized for face acknowledgment.Facerecognizerutilizedinthisframework is Neighborhood Paired Example Histogram. At first, the

rundownofneighborhoodparallelexamples(LBP)ofwhole faceisacquired.TheseLBPsarechangedoverintodecimal number and afterward histograms of that multitude of decimalqualitiesaremade.Towardtheend,onehistogram will be shaped for each picture in the preparation information. Afterward, during acknowledgment process histogram of the face to be perceived is determined and afterwardcontrastedandthegenerallyfiguredhistograms and returns the best paired mark related with the understudyithasaplacewith[9].

Aterthedistinguishingproofofthedistinctiveindividualis coordinatedwiththeinformationbaseitquicklygivesthe bell sound to the administrator who oversees it which resemblesalarmingthem.

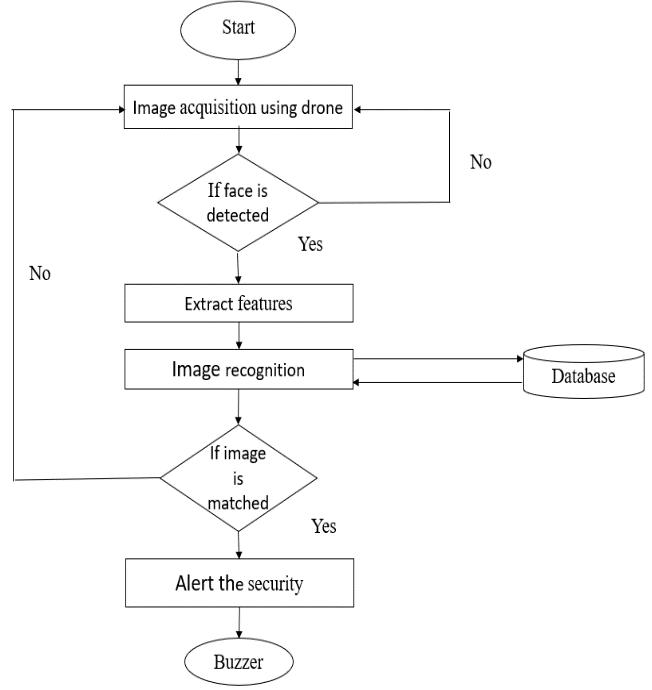

Planning a framework engineering for individual recognizableproofinathickregionutilizingdronesincludes a few parts andcontemplations.Hereisa significantlevel outlineofsuchadesign: Fig 3: System Architecture

Weusedadatasetofimagesofstudentstakeninavarietyof settingsto trainand evaluatetheperformance of our face recognition system. The dataset incorporates an assorted scopeofpictures,envelopingdifferentlightingconditions, points, looks, and impediments regularly experienced in genuinesituations.Wegatheredimagesofstudentsfroma

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

variety of sources, including classroom settings, outdoor settings,andindoorfacilitieslikecafeteriasandlibraries,to ensure comprehensive coverage and generalization. Each picture in the dataset is clarified with bouncing boxes showing the area of the understudy's face, working with directedlearningandassessmentassignments.Weusedthe Haarcascadeclassifierasacrucialfacedetectioncomponent in addition to collecting student images for our face recognition system. In computer vision, the Haar cascade classifier is a popular method for detecting objects. It is particularlyeffectiveandaccurateatdetectingfaces.

We curated a dataset of student images for our project, capturingawiderangeofeducationalsettingscenarios.With aninitialfacedetectionaccuracyof90%,theHaarcascade classifier was utilized to facilitate the process. The Local BinaryPatternsHistogram(LBPH)algorithmwasthenused forfacerecognition,andouraccuracywas85percent.Our system,whichwasincorporatedintoaplatformfordrones, processeddatainrealtimeatarateof10framespersecond. Notably,weputinplaceanalertingmechanismtoletusers know when they see a student's face, enhancing security measures and situational awareness. These outcomes highlightthefunctionalappropriatenessofourframeworkin instructivesettings,whileconversationsonrestrictionsand potential improvements prepare for additional advancementsinfaceacknowledgmentinnovation.

Allinall,utilizingdronesforindividualIDinthickregions givesapromisingarrangementhugepotentialfordifferent applications,includingsecurity,reconnaissance,andgroup the board. By utilizing headways in drone innovation, PC vision,andcorrespondenceframeworks,anextensivedesign can be created to recognize people inside jam-packed conditionareas.

Inanycase,it'scriticaltoaddressdifficulties,forexample, protection concerns, lawful consistence, and specialized constraintstoguaranteethecapableandmoralsendingof suchframeworks.Moreover,progressing innovative work endeavors are important to improve the precision, versatility,andvigoroftheframeworkengineering.

Generally, with cautious thought of these variables and persistent refinement, the coordination of robots for individual recognizable proof in thick regions holds extraordinary commitment for improving situational mindfulness, public wellbeing, and security in different settings.

[1] Z.Zaheer,A.Usmani,E.KhanandM.A.Qadeer,"Aerial surveillance system using UAV," 2016 Thirteenth International Conference on Wireless and Optical CommunicationsNetworks(WOCN),Hyderabad,2016

[2] AnujPuri,“ASurveyofUnmannedAerialVehicles(UAV) for Traffic Surveillance” ,Department of Computer ScienceandEngineeringUniversityofSouthFlorida

[3] A.A.Shah,Z.A.Zaidi,B.S.ChowdhryandJ.Daudpoto, "Real time face detection/monitor using raspberry pi andMATLAB,"2016IEEE10thInternationalConference on Application of Information and Communication Technologies(AICT),Baku,2016

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

[4] Y.Ganesh,R.RajuandR.Hegde,"SurveillanceDronefor LandmineDetection,"2015InternationalConferenceon Advanced Computing and Communications (ADCOM), Chennai,2015

[5] L.A.Elrefaei,A.Alharthi,H.Alamoudi,S.AlmutairiandF. Alrammah, "Real-time face detection and tracking on mobile phones for criminal detection," 2017 2nd InternationalConferenceonAnti-CyberCrimes(ICACC), Abha,2017

[6] D.PietrowandJ.Matuszewski,"Objectsdetectionand recognitionsystemusingartificialneuralnetworksand drones,"2017SignalProcessingSymposium(SPSympo), Jachranka

[7] J. Zhu and Z. Chen, "Real Time Face Detection System Using Adaboost and Haar-like Features," 2015 2nd International Conference on Information Science and ControlEngineering,Shanghai,2015

[8] K. Sung and T. Poggio. Example-based learning for viewbased face detection. In IEEE Patt. Anal. Mach. Intell.,volume20,pages39–51,1998.

[9] H. Rowley, S. Baluja, and T. Kanade. Neural networkbased face detection. In IEEE Patt. Anal. Mach. Intell., volume20,pages22–38,1998.

[10] D. Roth, M. Yang, and N. Ahuja. A snowbased face detector.InNeuralInformationProcessing12,2000.

[11] H. Schneiderman and T. Kanade. A statistical methodfor3Dobjectdetectionappliedtofacesandcars. InInternationalConferenceonComputerVision,2000.

[12] M. Yang and D. J. Kriegman, "Detecting faces in images: a survey "IEEE Trans. Pattern Analysis and MachineIntelligence,vol.24,no.1,january2002.