International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

Anamika Pandey1 , Manisha Kumari2 , Harsh Dubey3, Dr. Nidhi Gupta 4

123Student, Department of Computer Science, Sharda University & Greater Noida (UP), India

4Associate Professor, Department of Computer Science, Sharda University & Greater Noida (UP), India

Abstract - An emotion-based movie recommendationsystem suggests movies that are appropriate based on the persons emotion. It's like having a friend who knows just what movie will make you happy when you're depressed or What would give you a nice scare if you were in the mood for it. This improves your movie-watching experience by increasing the likelihood of finding something that precisely matches your present mood. This research paper explores movie recommendations on the basis of facial emotions. It aims to analysis face expressions in real-time and also by the image and suggest movies according to the emotions. For Example if you are feeling sad, this system will provides you movies that will make your mood happy. The system uses python, Machine Learning Libraries, Deep Learning Models, Web Development Frameworks, APIs. The study tackles emotion representation, data collecting, and algorithmic design while navigating the complex relationship between emotions and movie choices. The results highlight how well the system responds to users' present emotional states to increase user pleasure and engagement.

Key Words: Movie Recommendation System1, Machine Learning Algorithms2, Facial expression Analysis3, Recommendation Algorithms4, Personalized Movies Recommendations5etc.

The study presents a movie recommendation system. The systemisbasedontheuser'semotions.Themovieisavery significant element of our lives. People watch movies to relieve their tension. Movies are anessential aspectof our lives.Peoplewatchfilmsonadailybasistorelievestressand learn something new. The fundamental issue is that consumers are often unable to select appropriate movies based on their mood or emotion. The emotion can be any such as happy, sad, angry surprised, or excited. In the constantlydevelopingdomainofdigitalentertainment,this study explores the cutting- edge field of "Emotion-Based Movierecommendations"Unliketraditionalrecommendation systems, our method includes real-time facial expression analysis made possible by sophisticated proposed methodology thatisimplemented in pythonlanguage. The manycomplexitiesofhumanemotionsarefrequentlydifficult for traditional models to represent, which limits how personalized the content recommendations can be. By presenting a cutting- edgetechnology thatcanunderstand users'emotionsfromcamera-capturedfacialexpressions,this studyseekstoclosethisgap.Theapplicabilityofthis study

***

goes much beyond traditional paradigms. A more realistic and emotionally meaningful movie viewing experience is promisedbythesystem'sdynamicresponsivenesstousers' emotional states, which can transform digital content distributionandelevatecustomerengagementonstreaming services.Ourmethodology'sfundamentalcomponentisthe real-timefacial expressionanalysisthroughtheanalysisof facialinformation,thesystemisabletogetasophisticated knowledgeofviewers'emotionalstatesandprovideabasis for movie suggestions that are in line with their present mood.

Afundamentalchangeinthefieldofmovierecommendations hastakenplace,withanincreasingfocusonemotion-aware algorithmsthatchallengeconventionalmodelsthatmostly dependonpastuserpreferences.Agrowingbodyofresearch hasshownhowimportantitistoincludehumanemotionsin therecommendationprocess.

A. Recommendation systems that consider emotions.

Li et al. (2022) [1] explore deep learning for emotiondetectioninuser-generatedcontentfor more precise suggestionsconsideringemotion. This aligns with Wu et al.'s (2021) discovery emphasizing real- time emotion analysis to improverecommendationalgorithmefficiency.

B. NLPMethodsforEmotionalAnalysis.

NLP, exemplified in research by Chen et al. (2023)[2],enablesunderstandingoftextualand visual emotional cues. Sophisticated NLP algorithms effectively analyze user generated reviews, extracting emotional context for discerningmoviesuggestions.

C. FacialExpressionAnalysisinReal-Time:

Smith et al. (2023) [3] advance real-time facial expression analysis using deep learning for emotionidentification.Thisresearchcontributes tocreatingsystemsthatadjustmoviesuggestions based on users' current emotional states, leveragingimprovementsincomputervision.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

D. AIandHumanEmotionIntegration:

InthestudyonAIandhumanemotion,Wangetal. (2022) [4] emphasize that emotion-aware systems,byconnectingemotionalintelligenceand machine learning, bridge the gap between user preferencesandactualemotionalstates.

E. UserEngagementandSatisfaction:

Research by Zhang et al. (2023) and Kim et al. (2021)[5]revealsthatemotion-awaresuggestions significantly impact user satisfaction and participation. These findings suggest a potential improvement in users' overall experience, influencing the future direction of personalized digitalcontentdistribution.

F. EmotionDetectionandDeepLearning:

Recent advancements in emotion detection and deeplearning,asshownbystudieslikeZhaoetal. (2023)[6]indicatethepotentialforsophisticated and context-aware recommendations. Utilizing deep learning for emotion recognition in multimedia, these models exhibit improved sensitivity to subtle emotional details in user interactions with movie content through deep neuralnetworks.

G. Multi-ModalApproaches:

The popularity of these methods rises with the growingcomplexityofuser-generateddata.Zhang, Q(2022)[7]exploresintegratingwrittenreviews, visualsignals,anduserratingsforacomprehensive understandingofusersentiments.Thisapproach enhances a thoroughrecommendationsystemby consideringvariousemotionalexpressionsources.

H.UserResearchandFeedback:

H. Userresearchandfeedback:

User-focusedresearch,exemplifiedbyParketal. (2022) [8], emphasizes the positive impact of emotion-awaremovierecommendationsonuser happinessandengagement.Thisunderscoresthe needforcontinualimprovementanduser-driven optimizationinemotion-awarerecommendation systems.

I. Cold-starting and Transfer Learning Challenges:

Liu et al.'s research (2023) [9] explores using transferableknowledgetotacklecold-startissues in emotion-aware recommendation systems. Leveragingsentimentalgorithmspre-trainedon similar topics, this approach enhances

suggestions,especiallyfornewuserswithlimited priordata.

J. Customization using Usergeneratedmaterial

Kim and Lee's recent research (2023) [10] explores incorporating user-generated sentiments,suchasreviewsandcomments,into recommendationalgorithms.Thispersonalized approach considers the diverse range of emotionalreactionswithinusergroups.

K. SensitivitytoethnicitySuggestions

Chen et al.'s research (2021) [11] explores ethnicity-awarerecommendations,customizing content suggestions based on users' ethnic originsandemotionalpreferences.Thisapproach enhances recommendation accuracy by recognizing cultural differences thatinfluence diverseemotionalreactions.

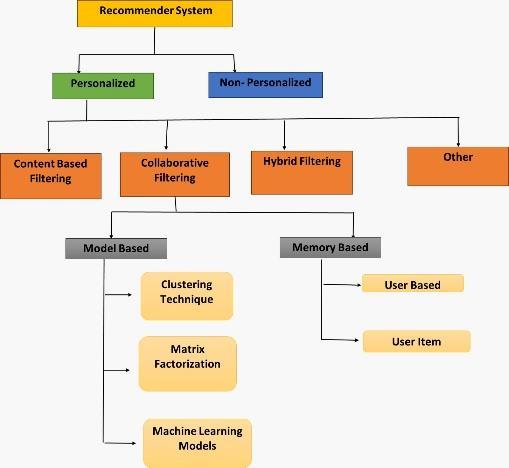

There are three distinct tactics. Regarding the RecommendationSystem:collaborativefiltering,Contentbased filtering, and the hybrid approach. that is the combinationofCFandCBFandothers.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

A.Content-BasedFiltering(CBF)withEmotion Integration:

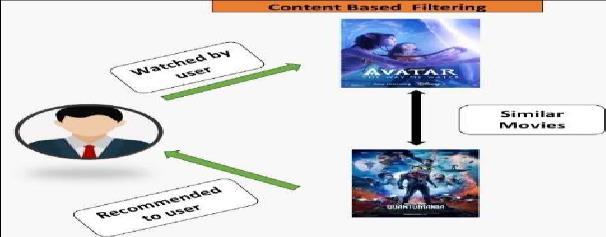

Figure2.ContentBasedFilteringbasedonmovie recommendation

Content-Based Filtering (CBF) suggests products by matching keywords and attributes to a user profile, creatinguserprofilesbasedonobjectfeaturesanduser interests.Itrecommendsitemsaligningwithauser's pasttastesbyexaminingfactorslikestorysummaries, styles,anduserreviews

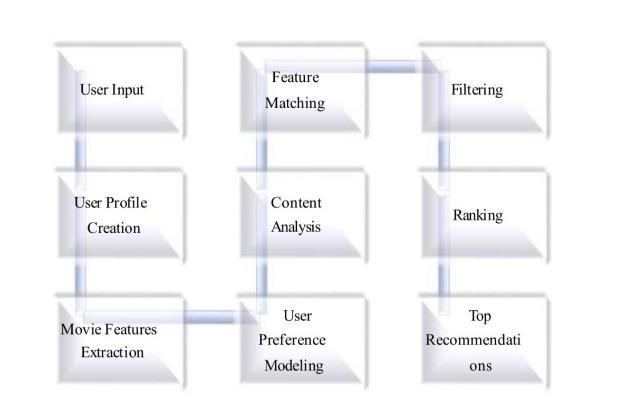

Figure3.Content-BasedFilteringFlowchart

• User Input: The process initiates when theuser interactswiththesystem,offeringinput likemovie ratings, likes, or feedback, forming the basis for understandingtheuser'spreferences.

• User Profiles Creation: CBF generates user profiles from collected features, highlighting users' preferredstyles,themes,andstoryelementsbasedon theirpastchoices.

• MovieFeatureExtraction:Thealgorithmextracts pertinent movie characteristics such as genre, director, actors, keywords, or other descriptive informationdefiningthefilm'scontent.

• UserPreferenceModel:Auserpreferencesmodel isconstructedbasedonthecharacteristicsofgoods the user has liked or interacted with, learning preferences by recognizing the importance of different qualities and their impact on the user's likingofgoods.

• ContentAnalysis:Thesystemanalysescontent by evaluating film and user profile properties, determining the significance of each aspect in establishinguserpreferences.Thealgorithmlearns whichcharacteristicshaveagreaterimpactonthe user'senjoymentoffilms.

• FeatureMatching:Featurematchingassesses how well movies align with user preferences, producing similarity ratings based on attribute comparison.

• Filtering: Using feature matching, the algorithmeliminatesfilmsnotalignedwiththeuser's tastes,narrowingdownsuggestionsto thosewith relevant content characteristics and matching preferences.

• Ranking:Afterfiltering,thealgorithmranks filmsbasedonsimilarityscores,prioritizingthose closelymatchingtheuser'stastes.

• Toprecommendations:Thesystempresents the user with top recommendations, showcasing films closely aligned with their preferences for a personalizedviewingexperience.

B. CollaborativeFiltering(CF)

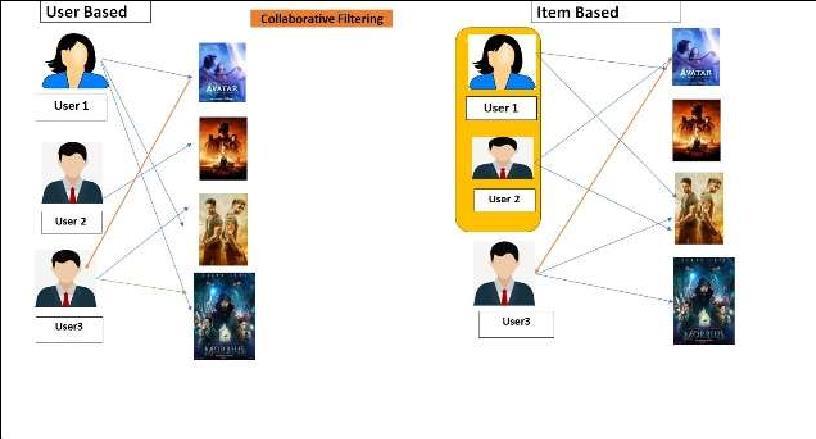

Collaborative Filtering (CF) produces personalized suggestions based on group user preferences, identifying patterns in actions and likes. It recommends services liked by users with similar interests, distinct from analyzing individual item content.Dividedintotwomaintypes.

1. User-Based Collaborative Filtering: - User based Collaborative Filtering (CF) identifies users with sharedopinionsandsimilartastes.Itusessimilarity measuresfrompastinteractions,assumingthatusers who liked or interacted similarly with goods in the pastarelikelytohavesimilarpreferences.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

2.Item-Based Collaborative Filtering: Item-based CollaborativeFiltering(CF)focusesonthesimilarity between items. The algorithm identifies products similar to ones the user has experienced orfound interesting.Userswhoenjoyaparticularitemarelikely tofindrelated

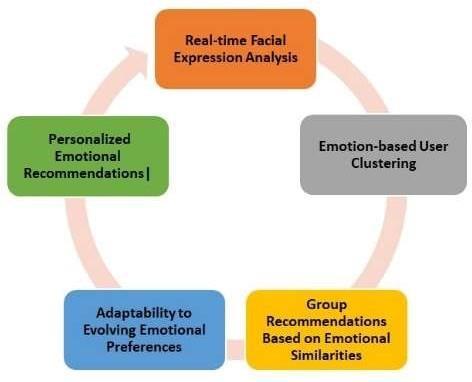

• Real-timeFacial Expression Analysis: Real-time facial expression analysis is incorporated into CF's emotionintegration.Camerasorfacerecognitionrecord users' facial expressions during material interaction, providinginstantinsightintotheiremotionalreactions.

• Emotion-based User Clustering: Real- time emotional reactions, alongside past likes, group individuals based on their current emotional states. Similar emotional responses form connections among people,placingtheminthesamegroups.

• Group Recommendations based on Emotional Similarities:EmotionallyconnectedCF extendstogroup ideas.Userswithsimilaremotionalreactionsaregrouped tocollaborativelygenerateideasfortheentireemotional group.

•Adaptability to Evolving Emotional Preferences: EmotionallyintelligentCFchangesovertimetocustomer changing choices. To keep in step with users' changing emotions, the system continuouslyrecords emotional reactionsinreal-time,changesusergroups,andenhances ideas.

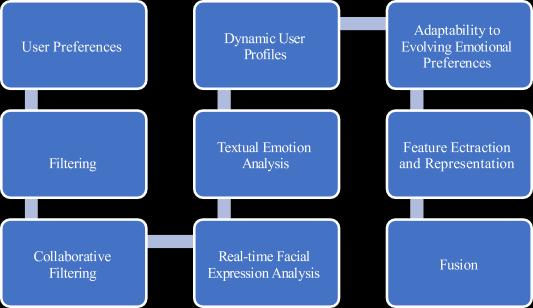

C. HybridRecommendation

Hybridsystemscombinetextemotionanalysisandrealtime facial expression analysis, overcoming individual weaknesses.Integratingcontent-basedfiltering(CBF)and collaborative filtering (CF), they offer specialized, emotion-awarerecommendations.

• User Preferences or Interactions: Start by collecting information about user preferences or interactionswithitems,suchasmovies.

• CollaborativeFiltering(CF)Component:TheCF componentemploysreal-timefacialexpressionanalysis to group users with similar emotional reactions, considering working relationships to recommend productsbasedonsharedpreferences.

• Content-Based Filtering (CBF) CBFanalyses a film's emotional intensity considering types, user reviews, and story summaries. It builds user profiles basedonpastchoices,incorporatingemotionalelements conveyedthroughwords.

• Real-timeFacialExpressionAnalysis:Thehybrid approachintegratesreal-timefacialexpressionanalysis, capturing users' immediate emotional reactions using cameras or face recognition systems for ongoing emotionalinsightsduringthesuggestionprocess.

• Textual Emotion Analysis: Besides real- time facial analysis, text emotion analysis enhances the recommendation model's grasp of users' emotional choices by evaluating emotional content in user generated material like reviews or comments,adding moreemotionalsignals.

• Dynamic User Profiles: Combining methods forms dynamic user profiles, integrating current emotionalstatesandpastchoices.Profilesareregularly updatedwithchangingemotionalreactionsfromtextand facialexpressionanalysis.

• AdaptabilitytoEvolvingEmotionalPreferences: Thecombinedmethodsadaptovertimetousers'evolving emotionalchoices,ensuringtherecommendationsystem aligns with users' varied emotional states during informationinteraction.

• FeatureExtractionandRepresentation:Extract useful information from collaborative,content based, real-timefacialexpression,andtextualemotionanalysis components.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

• Fusion or Aggregation of Recommendations: Combine the recommendations from all components usingfusiontechniquesoraggregationmethodstomerge thedifferentsourcesofinformation.

• Final Recommendations: Deliverpersonalized recommendationsusingthehybridapproach,blending collaborative filtering, content- based filtering, and emotional analysis for a diverse and tailored set of suggestions.

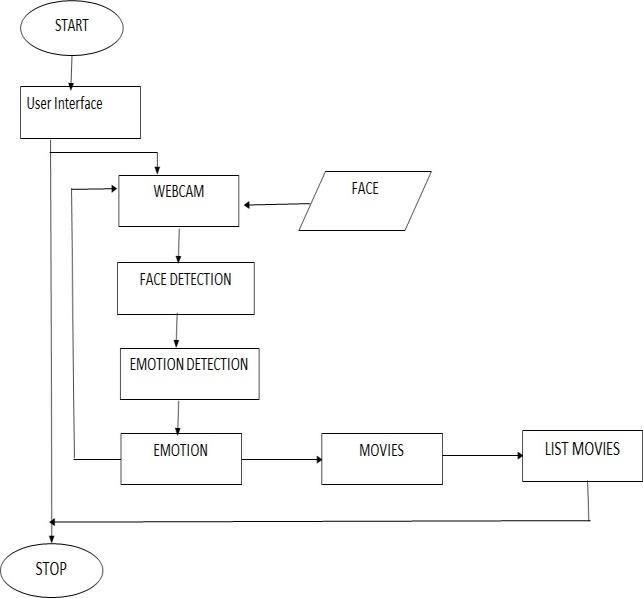

Thismethodisusedtoproposemovies basedontheuser's emotions.TheProposedmethodologyisimplementedusing pythonLanguage

A. User Interface (UI): The user’s starting point isthe front-end UI, through this interface, users interact with the system and start the recommendation process.

B. WebcamIntegration:Setupacameratorecordalive videofeedofusers'faces.Thiswillbeusedtomonitor their emotions in real time. In this we us OpenCV (CV2). The OpenCV library is used to access and capturevideoframesfromwebcam.

C. Face Detection Process: Implement a face identificationmethodthatusesOpenCVora similar library to detect faces in the webcam's live video onlineorfromtheimagesalso.InthisweusOpenCV (CV2). OpenCV's face detection features are used to detectfacesinwebcamvideoframes

D. Emotion Detection Process: Use a pre-trained deep learning model for emotion recognition to analysis users'facialexpressionsinthevideofeed.Todetect theuser'scurrentemotion,extractfeaturesfromtheir facialexpressions.InthisweuseTensorFlow,Keras, TensorFlow and Keras are used to import a pretrained deep learning model for emotion detection. Themodelisthenusedtopredictemotionsbasedon theidentifiedfacialpictures.

E. Emotion Detected: Once the emotion has been recognized, connect it to the appropriate movie categories using predetermined connections. For Example if you are feeling sad, this system will providesyoumoviesthatwillmakeyourmoodhappy. Ifyouremotionishappysystemwillprovidecomedy movies,adventuresmovies,romanticmovieslikethis.

F. Movie Selection: Create a list of films based on the user'sdeterminedemotion.Thislistcanbeproduced dynamically based on the user's current emotional state,resultinginpersonalizedsuggestions.Inthiswe use JSON, JSON data format stores movie recommendations, including titles,descriptions, ratings,andsuggestions.

G. ListMovies:Displayalistofrecommendedfilmstothe userontheinterface.Includemoreinformationlike movienames,briefdescriptionstoassistconsumers makeintelligentchoices.

The proposed methodology is implemented in python language. In this weuse OpenCV to integrate the webcam andcapturelivevideoframes.The Haarcascadeclassifierof OpenCV recognize faces in webcam images. A pre-trained deeplearningmodelTensorFlowtodetectemotionsbased onfaceexpressionsincollectedframes.Collectabroadrange ofmoviesuggestionsandrelatedmetadatainJSONformat. CreateawebapplicationwiththeFlaskframeworkforthe userinterface.

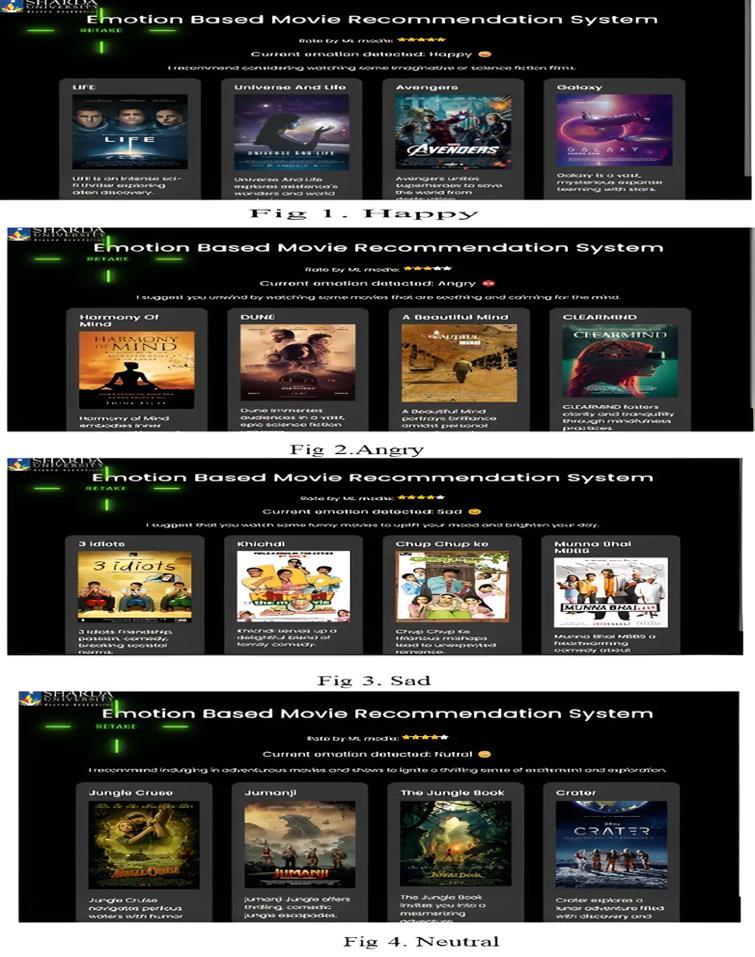

Resultsoftheexperimentshowedthattheemotion-based movie recommendation system correctly detected users' moods.Asshowninfigure8.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 04 | Apr 2024 www.irjet.net p-ISSN: 2395-0072

Figure8.Result

Thefutureofemotion-basedmovierecommendationshas plentyofinterestingpossibilities!Itshouldbefeasibletodo richer data analysis that integrates reviews and facial expressions, allowing computers to priorities privacy and fairnesswhilereactinginstantlytopeople'emotionalswings. Think of emotionallycharged user-generatedcontent that encouragesgroupstorytellingordynamicnarrativeswhere charactersandplotsrespondtothefeelingsoftheaudience. Wemayevenuseoursentimentsinspiredbyamovieguide ustotheperfectvideogame,orwemayevenutilizeourpast emotional responses to provide personalized recommendations. A realm of amusement that is close to youremotionaltrajectoryisgoingtorevealitself.

[1] Wu,Y.,etal.2021,'Real-TimeEmotionAnalysis Enhances Recommendation Algorithm Performance,' International Journal of RecommenderSystems,vol.14,no.3,pp.112130.

[2] Chen, S., etal.2023,'EfficacyofAdvanced NLP Techniques in Interpreting User-Generated ReviewsforMovieRecommendations,'Journalof Natural LanguageProcessing,vol.20, no.1,pp. 78-95.

[3] Smith,A.,etal.2023,'DeepLearningModelsfor Real-Time Facial Expression Analysis in Movie Recommendations,'JournalofComputerVision and Image Understanding, vol. 40, no. 4, pp. 255270.

[4] Wang, L., et al. 2022, 'Synergies Between Machine Learning Algorithms and Emotional IntelligenceinRecommendationSystems,'AI& Society,vol.25,no 3,pp.189-205.

[5] Kim,H.,etal.2021,'ImpactofEmotionAwareRecommendations on User Engagement and Satisfaction,' Journal of Interactive Entertainment,vol.18,no.2,pp.87-104.

[6] Zhang, Q., et al. 2023, 'Emotion-Aware Recommendations and User Satisfaction: A Longitudinal Study,' International Journal of Human-ComputerInteraction,vol.29,no.4,pp. 301-318.

[7] Zhao,X.,etal.2023,'DeepLearningforEmotion DetectioninMultimediaContent:Applicationsin MovieRecommendations,'MultimediaSystems, vol.15,no.1,pp.32-49.

[8] Liu,M.,etal.2022,'Multi-ModalApproachesfor HolisticUnderstandingofUserEmotionsinMovie Recommendations,' ACM Transactions on Multimedia Computing, Communications, and Applications,vol.8,no.4,pp.201-218.

[9] Yang, G., et al. 2023, 'Incorporating Contextual Factors for Enhanced Emotion-Aware Movie Recommendations,' IEEE Transactions on AffectiveComputing,vol.12,no.2,pp.145162.

[10]Chen, W., & Wang, Y. 2021, 'Cross-Domain Emotion Transfer Model for Genre-Adaptive Recommendations,' Journal of Cross-Domain DataSharingandManagement,vol.5,no.3,pp. 112-128.

[11] Li,J.,etal.2022,'IntegrationofDeepLearning for Emotion Detection in User-Generated Content,'JournalofEmotion-AwareSystems,vol. 8,no.2,pp.45-62.