International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

Prof. Sonali Karthik1, Shraddha Deshmukh2 , Archis Save3, Rani Shah4

1 Professor, Dept. of IT Engineering, Theem College of Engineering, Maharashtra, India

2 U.G. Student, Dept. of IT Engineering, Theem College of Engineering, Maharashtra, India

3 U.G. Student, Dept. of IT Engineering, Theem College of Engineering, Maharashtra, India

3 U.G. Student, Dept. of IT Engineering, Theem College of Engineering, Maharashtra, India

Abstract - Communication is essential for individuals with disabilities to fully participate in society. However, those who are blind, deaf, and mute face significant challenges in traditional communication methods. The paper proposes a multimodal approach to facilitate communication for individuals with these multiple disabilities. The approach combines sign language, translator, andassistivetechnologies to create a comprehensive communication system. Through voice assistant, individuals who are blind can receive information through voice or touch, while translator devices provide real-time feedback. For sign language gestures. Additionally, assistive technologies such as text-to-speechand speech-to-text software enable individuals who are mute to communicate verbally, which can be translated into tactile or visual formats for those who are blind or deaf. By integrating these modalities, individuals with multiple disabilities can overcome communication barriers and engage more effectively with their environment and peers. This multimodal approach offers a promising avenue for enhancing communication. In today’s advance world of science and technology, communication field has developed at suchextent where we can connect to any part of the world within fraction of minutes and hour. We can send messages, make call or send documents, files to anyone, accordingtoneed. Communication has been important to express our thoughts,ideaetc.butwhen it’s come about blind, deaf and mute people’s it become difficult for them and us to communicate with each other. So, here we have made such a software which shall help them to communicate with each other without depending upon any middle man. With the help of this we would be able to help mute, blind and deaf people to communicate with each other without depending upon each other.

Key Words: Multi-Model Communication system, Sign Language, Gestured Based, ASR, CNN.

1.INTRODUCTION

For communication and understanding of their surroundings, blind people rely on their other senses and assistivetechnologies.AudioDescriptions:Whendescribing visualcontent,providedetailedaudiodescriptionsofimages, videos, or surroundings. Deaf people use sign language, written communication, and visual cues to communicate

effectively. American Sign Language (ASL) and Other Sign Languages:Learnbasicsignlanguageoruseaninterpreterif available.ASLisawidelyusedsignlanguageintheUnited States. Written Communication: Use written notes, text messages, or communication apps to convey information. Visual Cues: Use facial expressions, gestures, and body languagetoenhancecommunication.Maintaineyecontactto show engagement. Assistive Technologies: Consider using video relay services (VRS) or text-based relay services to facilitate communication. Mute people may not be able to speak, but they can still communicate through various methods. Written Communication: To facilitate written communication,individualscanutilizetoolssuchaspenand paper, electronic devices such as computers, tablets, or smartphones, and communication software like email, messagingapps,orwordprocessingprograms.Thesetools enable the exchange of ideas, information, and emotions through written text, allowing for clarity and precision in expression. Written Communication: Provide a means for writtencommunication,suchaspenandpaperorelectronic devices.GesturesandBodyLanguage:Encouragetheuseof gestures, facial expressions, and body language to convey emotionsandthoughts.

Systematic literature reviews involve evaluating and interpreting all available research pertinent to a specific research question or topic. Researchers use scientific databaseswithfull-textpapersandotherrelevantscholarly articlesinthesocialsciences.Thesereviewshelpsynthesize existing knowledge, pinpoint research gaps, inform theoreticalframeworks,andshapefutureresearchdirections inthesocialsciencesandbeyond.

IthasfocusedontheproblemsofVisually(WhoCan'tsee), Audibly(whocan'thear),Vocally(whocan'ttalk),througha single device is a tough task. After researching on this a convenientassistivedevicecanbemadeforthemusinglatest deviceslikeRaspberry,Windows,MACetc.Theyhaveused monitorinsteadofLCDscreen.Themodelhas2optionsto selecttherequiredconversiontechnique.ImagetoGesture (ITT)•GesturetoText(GTT)

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

Exploresthecurrentresearchefforttowardsbuildinguser friendlyapplicationthatconnectstwonormal people,deaf anddumb,blindanddeafpeopletogether.Nowadaysthere aremanyapplicationsavailablewhenitcomestohearingand visuallyimpairedbuteveryapplicationhasacertainlimittill now.Thework includesthreeapproachesviz.a voice,text andvideo-basedinput-outputinteraction.

It has proposed a Deaf-Mute verbal exchange device that translates the hand gestures to audio massage as an interpreter. The KINECT sensor captures a nonverbal person'smovements(suchasimages),thenrecognizes the gesturesaftersegmentationandconvertsthemintouseful words,makingcommunicationbetter.Theproposedsystem's user-friendlinessisacornerstoneofitsdesign,ensuringease ofuseandfosteringefficientandeffectivehuman-computer interaction(HCI). Theproposedsystemisuserfriendly,asit is easy to use and capable to build efficient and effective humancomputerinteraction.

Itprovidestwo-waycommunicationforallclassesofpeople (deaf-and-mute,hardofhearing,visuallyimpaired,andnonsigners) and can be scaled commercially. The system's capabilitytofacilitatetwo-waycommunicationforallclasses of people, including the deaf-and-mute, hard of hearing, visuallyimpaired,andnon-signers,representsasignificant breakthroughinaccessibilityandinclusivity.Theproposed methodusesMedia pipetoextracthandfeatures fromthe inputimage/videoandthenusesalightweightrandomforest classifiertoclassifythesignsbasedontheextractedfeatures withtheaccuracyof94.69%.

ThedesignofEffectivecommunicationbetweenblind,mute anddeafpeopleusingamulti-modelapproachiscompletely basedonsoftwareprogrammingthatgivesaudiooutputsof signedimages.Allgestureshavespecificallyassignedaudio outputmessages.Theinputimagesthatwillbethecaptureof Camerawillbethenrecognizedfortheappropriateoutput messageTargetedcommunicationsshouldalsospecifically promoteorhighlighttheirrights.Weshouldmakethesame effort to mainstream disability inclusion that we make to mainstreamgender,andforthesamereason-toaccurately portraythediversityofoursociety.

Designingsoftwareforcommunicationamongblind,mute, and deaf individuals necessitates a multi-modal approach with Text-To-Speech (TTS) and Speech-To-Text (STT) functionalities. This architecture should be robust, accommodating diverse communication needs and preferences.Integratingthesefeaturesenablesaninclusive andaccessibleplatform,fosteringeffectivecommunication acrossvarioussensorymodalities.

For any software project there are different kinds of requirements to be fulfilled in order to ensure smooth runningoftheprocesses.Clearlydefinedrequirementsare importantmarkersontheroadtoasuccessfulproject.They establishaformalagreementbetweenthecustomerandthe service provider that both are working towards the same goal.Thefollowingarethedifferentkindsofrequirementfor ourproject:

Table-1: Requirementsofoursystem

Software Requirements Hardware Requirements

VisualStudioCode Windows 11 or latest version Python 8 GB RAM Intel core processor i3 100GB free Hard Disk

Creatingeffectivecommunicationbetweenindividualswho areblind,mute,anddeafrequiresa multi-modalapproach that integrates various methods of communication to accommodate their unique needs. This multi-modal communication system aims to bridge the gap between individualswithdifferentsensoryimpairmentsbyproviding versatile input and output options while ensuring accessibility, privacy, and safety. In the project aimed at enhancingcommunicationforspeech-impairedandvisually impairedindividuals,twokeycomponentsweredeveloped: an electronic speaking system anda speaking terminal for typing.By developinganddeploying thesecommunication tools, the project aims to empower speech-impaired and visually impaired individuals to communicate more effectively and independently in various contexts, thereby enhancingtheiroverallqualityoflifeandsocialinclusion.

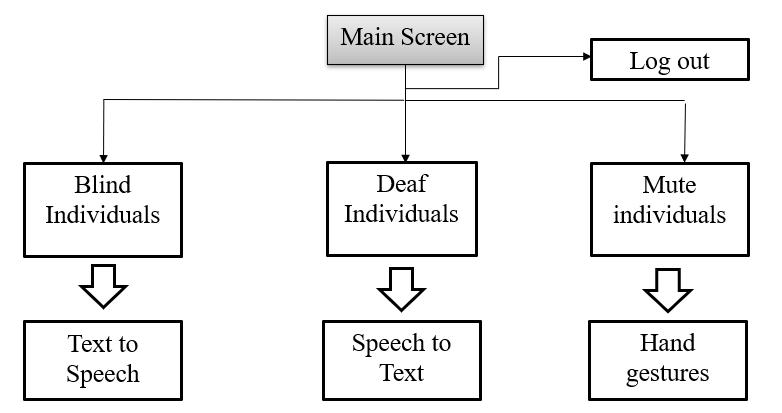

Fig-1: ProposedSystemofoursystem

Here electronic voices and typewriters were designed to facilitatethecommunicationprocessforpeoplewithspeech difficulties,andspeakerstosendmessagesforthevisually

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

impaired. This allows people with disabilities to communicateeasilywithoutthehelpofothers.

The system architecture for communication among blind, mute,anddeafindividualsutilizesamulti-modalapproach, integrating diverse communication methods and technologies.Thisframeworkaddressesuniquechallenges faced by these individuals, fostering inclusivity and accessibilityinsocialinteractions.

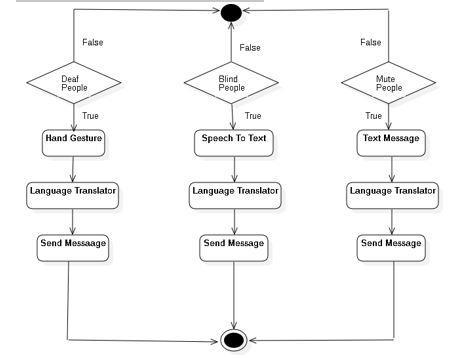

Fig-2: FlowoftheERPsystem

ASystemdiagramisabehaviordiagramofaUMLdiagram thatdescribesthedynamicaspectsofasystem.Anactivity diagramisessentiallyanenhancedversionofaflowchartthat modelstheflowfromoneactivitytoanother.Attheveryfirst theuserlogsinintothesystemandselectsthetoolaccording tohisrequirement.Andfurthersendsthemessage.

Effectivecommunicationforblind,speaking,anddeafpeople using multiple modalities can be achieved by combining different modalities to meet their unique needs. This approachinvolvestheuseofdifferentsenses,suchastouch, visual cues, and written or tactile representation. For the visually impaired, communication can be made easier throughtheuseofvoicetechnology,suchastext-to-speech softwareorscreenreaders.Switchtocontentwrittenforthe deaf. Narratives use gestures, facial expressions, and body movementstoconveymeaning.Forhearingimpairedpeople, communication can be facilitated by using sign language translationorvisualaids.Ingeneral,versatilecommunication forblind,speakinganddeafpeopleisanimportantstepin creating a society in which everyone has equal access opportunitiestoinformationandcommunication.

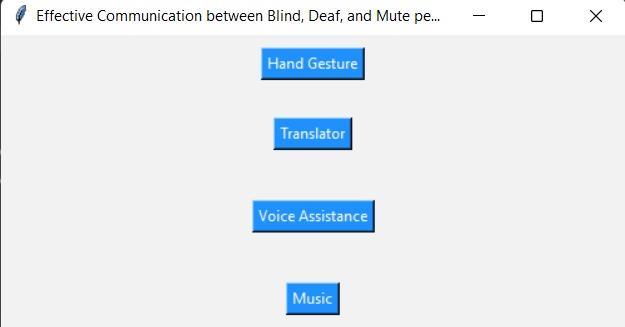

Fig-3: GUIofourmodel

Fig 3. describes the GUI system of our project. It contains four Buttons that Hand Gesture, Language Translator and VoiceAssistance

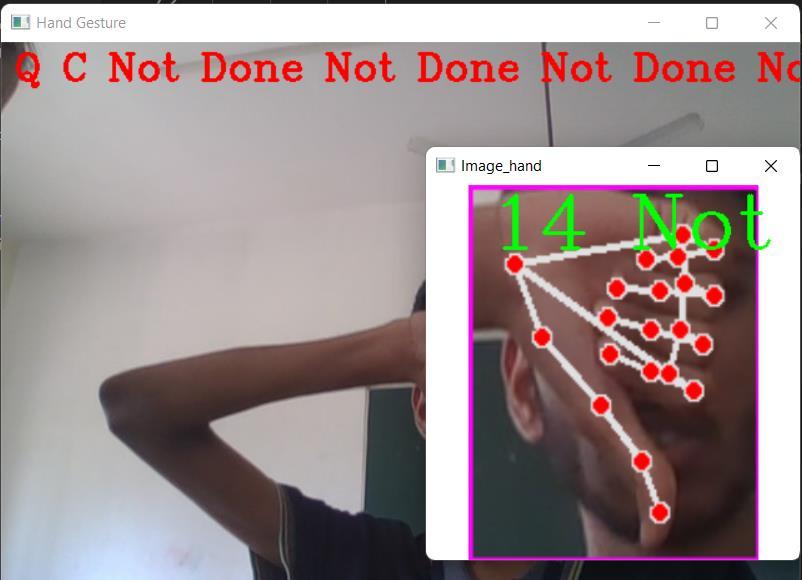

Fig-4: ResultforHandGesture-ForNotDone

Fig4.DisplaystheresultforHandGesture.Ofworddetected NotDonemessage.Itanalysesthehandmotionandworks accordingtothealgorithmtrained.

Fig-5: ResultforHandGesture-Done

Fig 5. Shows the result of word detected “Done” of Hand Gesture.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

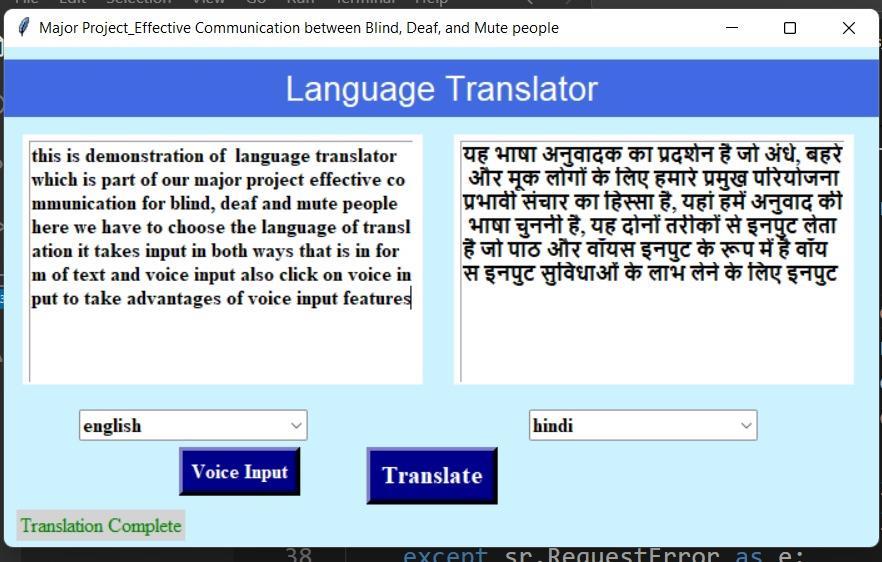

Fig-6: ResultforLanguageTranslator

Fig 6. Describes the result for Language Translator. As it showslanguagetranslationfromEnglishtoHindi.Itcontains many languages so that communication becomes moreeasier.

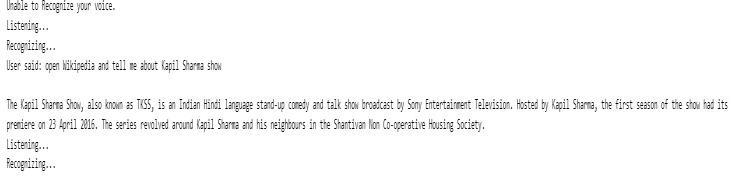

Fig-7: ResultforVoiceAssistance

Fig7DescribestheoutputofvirtualAssistance.Itfetchesthe dataaboutKapilSharmashowfromWikipediaandgivesthe outputinformofvoiceandalsoinwrittenformat.

The development of a sign language detection system capableofrecognizingalphabetsandwordsisasignificant advancementinaccessibilitytechnology.Thisinnovationhas the potential to greatly benefit individuals who are blind, mute, or deaf by bridging communication barriers and enhancingtheirabilitytointeractwiththeworld.Here'sa descriptionofhowthistechnologycanbeharnessedtoserve these three groups of people. The development of a sign languagedetectionsystemisagroundbreakingachievement inaccessibilitytechnology.Itholdstremendouspromisefor individuals who are blind, mute, or deaf, facilitating communication and interaction with the world. This innovation has the potential to bridge significant communication barriers, empowering these groups to engagemorefullyinsociety.

Wewouldliketoexpressoursinceregratitudetoallthose whocontributedtothesuccessfulcompletionofthisproject

integratingadvancedforecastingmodelsintotheEffective CommunicationSystem.Firstandforemost,weextendour deepestappreciationtoourprojectsupervisorProf.Sonali Karthik whose guidance, support, and invaluable insights have been instrumental throughout the entire duration of thisproject.Theirexpertiseandencouragementhavebeen indispensable in steering us in the right direction and overcomingvariouschallengesalongtheway.Wearealso immensely grateful to the entire team involved in the project,whosededication,collaboration,andhardworkhave been vital in bringing this vision to fruition. Each team member's unique skills and contributions have played a crucialroleinthedevelopment,implementation,andtesting phasesoftheproject.

[1] Divyansh Bisht, Manthan Kojage, Manu Shukla, Yash Patil,Priyanka BagadeSmartCommunicationSystem UsingSignLanguageInterpretation

[2] IramHaider;MohammadAmmarMehdi;AsjadAmin; Kashif Nisar A Hand Gesture Recognition based CommunicationSystemforMutepeople

[3] Sartha Tambe; Yugchhaya Galphat; Nilesh Rijhwani; Aishwarya Goythale; Janhvi Patil Analyzing and Enhancing Communication Platforms available for a Deaf-Blinduser

[4] Arunkumar R Manikanavar; Suhas B. Shirol Gesture ControlledAssistiveDeviceforDeaf,DumbandBlind PeopleUsingRaspberry-Pi

[5] NagaThulasiVundelli;DharahasvenkatG;HimaBindu Valiveti;VinayKumarH;PratyushaChowdariTwoWay CommunicatorbetweenBlind-Deaf-DumbPersonand NormalPeople

[6] B. Rajapandian; V. Harini; D. Raksha; V. Sangeetha A novel approach as an AID for blind, deaf and dumb people.

[7] SowmyaRBangari;SunitaShirahatti;Veerabhadrappa ST;SugunaGC;KavithaHSCommunicationDecipher: TexttoSpeechandHandGesturetoSpeechConversion