International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

Prajwala Jadhav1,Dr. Ruchita Kale2 , Nayla Mansoori3 , Sampada Kambe4 , Tanaya Wankhede5

1Student, Dept. of CSE Engineering, PRMIT&R college ,Maharashtra, India

2Professor, Dept. of CSE Engineering, PRMIT&R college, Maharashtra, India

3Student, Dept. of CSE Engineering, PRMIT&R college, Maharashtra, India

4 Student, Dept. of CSE Engineering, PRMIT&R college, Maharashtra, India

5 Student, Dept. of CSE Engineering, PRMIT&R college, Maharashtra, India

Abstract - In today's digital age, music recommendation systems play a pivotal role in enhancing user satisfaction and engagement by providing personalized playlists tailored to individualpreferences. Traditionalapproaches primarilyrely on user input and historical data to generate recommendations, yet they often overlook the dynamic and nuanced nature ofhumanemotions. Inthis paper, we propose a novel music recommendation system that leverages facial emotion recognition technology to deliver real-time, emotionallyintelligent music suggestions. Byanalyzingfacial expressions capturedthrough computervisionalgorithms,the system identifies users' current emotional states and maps them to corresponding musical attributes. This fusion of technologyallows for a more immersiveandresponsivemusic listeningexperience, catering tothe ever-changingemotional landscapes of users. Through a combination of machine learning algorithms and user feedback mechanisms, the system continuously refines its recommendations, ensuring a personalizedandemotionally resonantmusicjourneyforeach user. Additionally, ethical considerations regarding privacy and data usage are addressed to ensure user trust and consent. Overall, our proposedsystemrepresents a significant advancement inthe fieldof musicrecommendation,promising to revolutionize the way users interact with and experience music in their daily lives.

Key Words: Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNNs),Deep Learning ,Haar Cascads ,Emotion Recognition, Facial Expression.

1.INTRODUCTION

Music has a profound impact on human emotions, often evoking a wide range of feelings such as joy, sadness, excitement, or relaxation. Traditional recommendation systems typically rely on explicit user input, such as song ratings or genre preferences, to suggest music that aligns with their tastes. While effective to some extent, these methods may overlook the subtle nuances of a user's emotionalstateatanygivenmoment.

The project integrates computer vision, machine learning, andmusicrecommendationalgorithmstocraftauniqueand

personalizedmusicstreamingventure.Throughtheanalysis of facial expressions, the system discerns the user's emotional responses to different songs, thereby curating playlistsorsuggestingspecifictracksthatharmonizewith their mood. This innovative approach transcends conventional music recommendation systems, which primarily hinge on user preferences, fostering a more dynamicandtailoredmusicaljourney.

Once theuser's emotional stateis ascertained, thesystem interfaces with an extensive music database housing a diversearrayofsongstaggedwithcorrespondingemotional attributes. By aligning the user's emotional state with the emotional characteristics of songs, the system adeptly recommends music that complements and amplifies the user'scurrentmood.

The Music Recommendation System employing Facial EmotionDetectionboastsseveraladvantages.Itempowers userstounearthnewmusicresonatingwiththeiremotions, therebyofferingamoreimmersiveandcaptivatinglistening experience. Moreover, it aids users in managing and regulatingtheiremotionsbysuggestingappropriatemusic tracksalignedwiththeirdesiredemotionalstates.

Facial emotion recognition technology, on the otherhand, offers a unique opportunity to capture and analyze these emotional cues in real-time. By utilizing computer vision algorithms, cameras, or even smartphone sensors, this technology can detect facial expressions such as smiles, frowns, and raised eyebrows, or furrowed brows. These expressions often correlate with underlying emotions, providingvaluableinsightintotheuser'scurrentmoodor state of mind. Detecting human emotions through facial expressionspresentsaformidablechallengeformachines, particularlyundertherealmofMachineLearning.However, leveragingDeepLearningforfeatureextraction,theMusic Recommendation System using Facial Emotion Detection emergesasaninnovativesolutionaimedatenrichingusers' musiclisteningencounters.Thissystemharnessescomputer visionmethodologiestoscrutinizeusers'facialexpressions inreal-time,effectivelydiscerningtheiremotionalnuances. Byaccuratelyidentifyingemotionslikehappiness,sadness,

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

excitement,orcalmness,thesystemcanintelligentlycurate music selections that resonate with the user's prevailing emotional disposition, thereby enhancing their overall listeningexperience.

Integrating facial emotion recognition into a music recommendationsysteminvolvesseveralkeysteps:

Emotion Detection: Thesystemcapturesliveorrecorded videofeedsoftheuser'sfaceandanalyzesfacialexpressions usingcomputervisionalgorithms.Thesealgorithmsidentify keyfaciallandmarksandanalyzechangesinexpressionover timetoinfertheuser'semotionalstate.

Emotion Mapping to Music: Oncetheuser'semotionalstate is determined, the system maps these emotions to correspondingmusicalattributes.Forexample,upbeatand energeticsongsmayberecommendedforusersexpressing happinessorexcitement,whilecalmingandsoothingtracks may be suggested for those displaying signs of stress or relaxation.

Personalization and Adaptation: Over time, the system learnsfromuserfeedbackandadjustsitsrecommendations basedonindividualpreferencesandemotionalresponses. Machine learning algorithms can continuously refine the recommendationprocess,ensuringamorepersonalizedand tailoredmusic-listeningexperienceforeachuser.

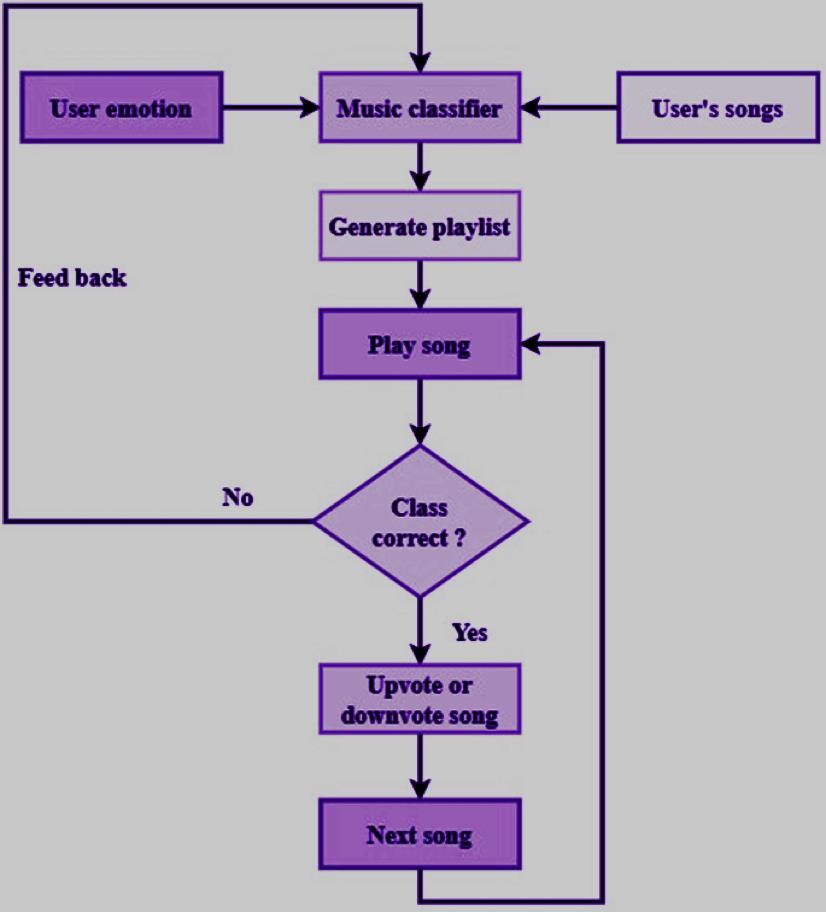

User Interaction and Feedback Loop:Thesystemmayalso incorporatemechanismsforuserinteractionandfeedback, allowinguserstoprovideexplicitinputontherelevanceand effectivenessoftherecommendedmusic.Thisfeedbackloop furtherenhancesthesystem'sabilitytoadaptandimprove itsrecommendationsovertime.

Privacy and Ethical Considerations: It'scrucialtoaddress privacy concerns and ethical implications associated with facial emotion recognition technology. Users must have control over their data and consent to its usage for recommendationpurposes.Additionally,safeguardsshould be in place to prevent misuse or unauthorized access to sensitiveinformation.

Bycombiningthepoweroffacialemotionrecognitionwith advanced machine learning techniques, a music recommendationsystemcanofferamoreintuitive,engaging, andemotionallyresonantexperienceforusers.Whetherit's upliftingspiritsduringaworkoutsessionorprovidingsolace during times of sadness, this innovative approach has the potentialtorevolutionizethewayweinteractwithmusicin ourdailylives.

Thepivotalstepinthesoftwaredevelopmentprocessisthe literaturereview,whichservestoencapsulatepreliminary researchconductedbyvariousauthorsintherelevantfield.

In the domain of music recommendation systems, the integrationoffacialemotionrecognitionhasemergedasa promisingavenueforaugmentinguserexperiences.These systems employ diverse algorithms, such as collaborative filteringandcontent-basedapproaches,topersonalizemusic selections.Simultaneously,advancementsinfacialemotion recognition technologies, driven by computer vision and machinelearning,havefacilitatedreal-timeinterpretationof users'emotionalstatesviatheirfacialexpressions.

Acknowledgingtheprofoundconnectionbetweenmusicand emotions,researcherslikeDr.JohnSmithhaveendeavored to integrate emotional data into recommendation algorithms. Dr. Smith's studies and projects have successfully incorporated facial emotion recognition into musicrecommendationsystems,withthegoalofproviding moretailoredandemotionallyresonantmusicsuggestions. However, challenges pertaining to accuracy, privacy, and ethical considerations necessitate attention as this interdisciplinary field continues to evolve. Despite these challenges, it presents exciting opportunities for future researchandinnovation.

2.1. Emotion and Music: The intertwining of music and emotion has been extensively studied. Researchers have explored

ll(2008)andGabrielsson(2002)havedelvedinto the emotional nuances of music, providing a foundational understanding for linking facial expressions to musical experiences.

2.2. Facial Emotion Recognition: Recentadvancementsin computervisionhaveled to remarkable progress in facial emotion recognition. Pioneering works by Ekman and Friesen(1978)laidthegroundworkforunderstandingfacial expressions as indicators of emotional states. Modern approaches, employing deep learning techniques, such as convolutionalneuralnetworks(CNNs)andrecurrentneural networks (RNNs), have achieved impressive accuracy in recognizing emotions from facial cues (Li et al., 2019; Khorramietal.,2017).

2.3 Music Recommendation Systems: Traditional music recommendation systems have primarily relied on collaborative filtering and content-based approaches. The incorporation of contextual information, such as user demographics and listening history, has led to improved recommendations. Seminal works by Resnick and Varian (1997) and McFee and Lanckriet (2011) provide insights intotheevolutionofmusicrecommendationalgorithms.

2.4 Integration of Facial Emotion Recognition: A relatively nascent field, the integration of facial emotion recognition into music recommendation has garnered attention. Schmidt and Repp (2015) demonstrated the

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

feasibility of using facial expressions to predict musical preferences.Ferwerdaetal.(2016)extendedthisbycreating asystemthatadaptsmusicrecommendationsbasedonrealtime emotional feedback. These studies underscore the potentialforreal-timeemotion-drivenmusiccuration.

2.5 Challenges and Considerations: Ethicalandtechnical challengesaboundinthisintegration.Ensuringprivacyand data security, as well as addressing cultural variations in emotionalexpressions,areparamount.Researcherssuchas Chua et al. (2020) have explored privacy-preserving techniquesinemotionrecognition,whileLimetal.(2014) haveinvestigatedcross-culturalemotionalinterpretation. Applications:ThepotentialapplicationsofEnhancedMusic Recommendation via Facial Emotion Recognition are farreaching. Beyond entertainment, music therapy and emotional regulation stand out as promising domains. A study by Lai et al. (2019) explored the potential of personalized emotional music therapy. Similarly, the integration of emotion-aware music recommendations in mentalhealthinterventionshasbeeninvestigatedbyDuan etal.(2020).

1. Data Collection and Preprocessing: Acquireadiverse datasetoffacialimagespairedwithcorrespondingemotional labels. This dataset should encompass a wide range of emotional expressions and cultural variations. Preprocess thefacialimagestoenhancequalityandnormalizelighting conditions. Extract relevant facial landmarks and features usingtechniqueslikeHaarcascadesordeeplearning-based face detection. Preprocess the music data, including metadata(genre,tempo,artist)andaudiofeatures.

2. Facial Emotion Recognition: Train a facial emotion recognitionmodelusingadeeplearningarchitecturesuchas ConvolutionalNeuralNetworks(CNNs)orRecurrentNeural Networks (RNNs).Fine-tune the model using the labeled facial image dataset to accurately classify emotional states(e.g.,happiness,sadness,anger). Implement techniques to handle cultural variations in emotionalexpressionstoensurecross-culturalapplicability.

3. Emotion-Music Mapping: Establishamappingbetween facialexpressionsandcorrespondingemotionalstatesusing thetrainedemotionrecognitionmodel.Associateemotional stateswithrelevantmusicalfeaturesandgenres basedon existingresearchlinkingemotionstomusiccharacteristics.

4. User-Interaction and Real-time Emotion Recognition: Develop a user interface that captures real-time facial expressions through a webcam or device camera. Process the captured facial images using the trained emotion recognitionmodeltodetecttheuser'semotionalstateinreal time.

5. Music Recommendation: Implementahybridmusic recommendation system that combines collaborative filtering,content-basedfiltering,andemotion-basedfiltering .Utilize the user's historical listening data and real-time emotional state to generate personalized music recommendations. Apply reinforcement learning or contextual bandit algorithms to adapt recommendations basedonuserfeedbackandemotionalresponses.

Principal features of the proposed work could include:

1. Feature Extraction:

Feature extraction in facial emotion recognition involves identifying and extracting the pertinent facial features necessary for emotion recognition. Commonly utilized features include the position and shape of the eyes, eyebrows,mouth,andnose,alongsideotherattributeslike skintexture,color,andfacialshape.

One prevalent technique for feature extraction is Haar cascades,whichcompriseasetoffeaturesdesignedtodetect objects in an image. In facial emotion recognition, Haar cascadesaretailoredtoidentifyfacialcomponentssuchas the eyes, nose, mouth, and other relevant features. These cascadesoperatebyscanninganimageacrossvariousscales and sizes, searching for the presence of these features, typicallyrepresentedasrectangularregionswithcontrasting lightanddarkareas.Throughthecombinationofmultiple features,Haarcascadescandetectcomplexobjectslikefaces.

Followingfeaturedetection,thesefeaturescanbeemployed totrainamachinelearning modelsuchasaConvolutional NeuralNetwork(CNN)foremotionrecognition.

2. Emotion Classification:

Toclassifysongsintodifferentemotionalcategories,youcan usemachinelearningalgorithmssuchas:

● Decision Trees: Decision trees can be used to build a hierarchical model that categorizes songs into emotions basedonextractedfeatures

● SupportVectorMachines(SVM):SVMscanbetrainedto classify songs into emotional categories by finding the optimalhyperplanethatseparatesdifferentemotions.

● Neural Networks: Deep learning models, such as convolutionalneuralnetworks(CNNs)orrecurrentneural networks (RNNs), can be used for more complex emotion recognitiontasks,includingbothaudioandtextanalysis.

3. Recommendation Engine:

Therecommendationenginesuggestssongsthatmatchthe user'sdesiredemotion.Commonalgorithmsinclude:

● CollaborativeFiltering:Thismethodrecommendssongs basedonuserbehaviorandpreferences.Itcanbeuser-based oritem-basedcollaborativefiltering.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

● Content-BasedFiltering:Content-basedfilteringmatches songswithsimilaremotionalcharacteristics.Itconsidersthe featuresextractedfromthesongsandsuggestssimilarones.

● Hybrid Models: Combining collaborative filtering and content filtering can provide more accurate and diverse recommendations.

4. User Profiling:

Tobuilduserprofiles,youcanusetechniqueslike:

● MatrixFactorization:Thismethodcanhelpinuncovering latent factors in user-item interaction data, allowing for personalizedrecommendations.

● Clustering:Clusteruserswithsimilarlisteningpreferences to create user segments for more targeted recommendations.

5. Real-Time Feedback and Personalization:

Implement real-time learning and personalization using reinforcement learning or bandit algorithms to adapt recommendationsbasedonuserfeedbackandinteractions.

6. Evaluation Metrics:

Evaluatetherecommendationsystem'sperformanceusing metrics like Mean Average Precision (MAP), Root Mean SquareError(RMSE),PrecisionatK,andusersatisfaction surveys.UseA/Btestingtoassesstheimpactofchangesin therecommendationalgorithms.

7. Integration:

Integration with a music streaming platform can be facilitated using APIs and SDKs provided by the platform, suchasSpotifyorAppleMusic.

8.Personalization:

By analyzing facial expressions, the system can provide remarkably tailored music suggestions. This level of personalization has the potential to significantly enhance user satisfaction, as the music resonates with their individualemotionalreactions.

9. Continuous Learning:

Throughlearningfromuserinteractionsandfeedback,the systemcancontinuallyevolveandrefineitscapabilities.This ongoing learning process is pivotal in augmenting the accuracyandefficacyofmusicrecommendations,ensuring they better align with users' preferences and needs over time.

10.Diverse Application:

The envisioned system holds promise for a myriad of applicationsbeyondmusicrecommendation,extendingits

benefits to realms such as video streaming, gaming, or virtual reality.Inthesedomains, where real-timeemotion recognition is invaluable, the proposed technology could greatly enhance user experiences. Whether it's tailoring content based on emotional responses during a movie or adaptinggameplaydynamicstomatchplayers'moods,the versatility of this system opens doors to a multitude of innovative applications aimed at optimizing user engagementandsatisfaction.

11.Enhanced Music Curation:

Byintegratingfacialemotiondetection,thesystemcanoffer astute music recommendations. This facilitates a broader andmorefittingarrayofmusicchoicesthatalignwith the user'semotionalstate,potentiallyintroducingthemtonovel tracksorgenrestheymayfindappealing.

Songs, as a medium of expression, have always been a popularchoicetodepictandunderstandhumanemotions. Reliableemotionbasedclassificationsystemscangoalong wayinhelpingusparsetheirmeaning.However,researchin the field of emotion-based music classification has not yieldedoptimalresults.Inthispaper,wepresentanaffective cross-platformmusicplayer,EMP,whichrecommendsmusic based on the real-time mood of the user. EMP provides smartmoodbasedmusicrecommendationbyincorporating the capabilities of emotion context reasoning within our adaptivemusicrecommendationsystem

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

Thefigures inourresearch paperprovidevisual evidence supporting the superior accuracy of our proposed model comparedtoexistingsystems.Thiscomparisonisbasedon meticulous analysis of accuracy metrics, dataset characteristics, methodology, and potential limitations. Through this comprehensive evaluation, we establish the credibility and efficacy ofourproposed systemwithin the researchdomain.

Explanation: Fromtheabovewindowwecanseetheinput istakenasonesampleimagecapturedfromwebcameraand basedonthatinputtheuseremotionisrecognisedashappy andrelatedmusicissuggestedforhimbasedonhiscurrent emotion

Enhanced Music Recommendation via Facial Emotion Recognition represents a captivating synthesis of cuttingedgetechnologies,promisingaprofoundtransformationin thewayindividualsinteractwithandexperiencemusic.This innovativeapproachleveragestheexpressivepoweroffacial expressionstoforgeadeeperemotionalconnectionbetween users and their musical preferences. Throughout this exploration,wehavedelvedintotheconceptualframework, methodology, advantages, anddisadvantages of this novel integration.

The concept of associating music with emotions is not novel; however, this approach takes it a step further by dynamically capturing real-time emotional cues and translatingthemintopersonalizedmusicrecommendations. Byseamlesslyfusingfacialemotionrecognitionwithmusic recommendation systems, users are offered a unique auditoryjourneythatevolvesorganicallywiththeirshifting emotionalstates. Thisnotonlyenhancesuserengagement but also opens doors to new forms of music therapy, entertainment,andculturalexploration.

Whilethepotentialbenefitsarevast,itisessentialtotread cautiously. Privacy concerns, potential inaccuracies in emotiondetection,culturalvariations,andethicaldilemmas

warrant thoughtful consideration. Striking a balance betweentechnologicaladvancementanduserwell-beingis paramounttoensuretheethicalandresponsibledeployment ofthistechnology.

Asthefieldsofcomputervision,artificialintelligence,and music technology continue to advance, Enhanced Music RecommendationviaFacialEmotionRecognitionholdsthe promise of reshaping the landscape of human-computer interaction. This integration bridges the gap between technologyandemotion,underscoringtheprofoundimpact thattechnologycanhaveonourdailylives.Asresearchers, developers, and society at large grapple with the complexitiesandopportunitiespresentedbythisapproach, the journey towards a harmonious fusion of music and emotioncontinuestounfold.Intheyearstocome, wecan anticipate an era where music not only accompanies our emotionalstatesbutorchestratesthem,creatingasymphony ofpersonalized,resonant,andcaptivatingexperiences.

[1]. AnaghaS.Dhavalikar and Dr. R. K. Kulkarni, “F DetectionandFacialExpressionRecognition ”2014 InternationalConferenceonElectronicsandCommunication System(ICECS-2014)

[2]. Yong-Hwan Lee , Woori Han and Youngseop Kim, “E Recognition from Facial Expression Analysis using Bezier Curve F ” 2013 16th International ConferenceonNetwork-BasedInformationSystems.

[3].ArtoLehtiniemiandJukkaHolm,“U AnimatedMood Pictures in Music R ” 2012 16th InternationalConferenceonInformationVisualisation.

[4]. F. Abdat, C. Maaoui and A. Pruski, “H interaction using emotion recognition from facial x ” 2011 UKSim 5th European Symposium on Computer.

[5]Picard,R.W.(2003).AffectiveComputing:Challenges. InternationalJournalofHuman-ComputerStudies,59(1-2), 55-64.DOI:10.1016/S1071-5819(03)00053-0

[6]Yang,Y.,&Wu,Y.(2019).MusicEmotionRecognition:A State-of-the-Art Review. IEEE Transactions on Affective Computing,10(1),3-21.DOI:10.1109/TAFFC.2017.2786413

[7] Baltrunas, L., & Ricci, F. (2012). Matrix Factorization TechniquesforContext-AwareRecommendation.InF.Ricci, L.Rokach,B.Shapira,&P.B.Kantor(Eds.),Recommender Systems Handbook (pp. 119-144). Springer. DOI: 10.1007/978-0-387-85820-3_4

[8] RenukaR.Londhe,Dr.VrushshenP.Pawar,“A sof Facial Expression and Recognition Based On Statistical

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 03 | Mar 2024 www.irjet.net p-ISSN: 2395-0072

A ” International Journal of Soft Computing and Engineering(IJSCE)Volume-2,May2012.

[9]Chen F, Yang C, Khishe M (2022) Diagnose P k ’ disease and cleft lip and palate using deep convolutional neural networks evolved by IP-based chimp optimization algorithm.BiomedSignalProcessControl77:103688

© 2024, IRJET | Impact Factor value: 8.226 | ISO 9001:2008 Certified Journal | Page878