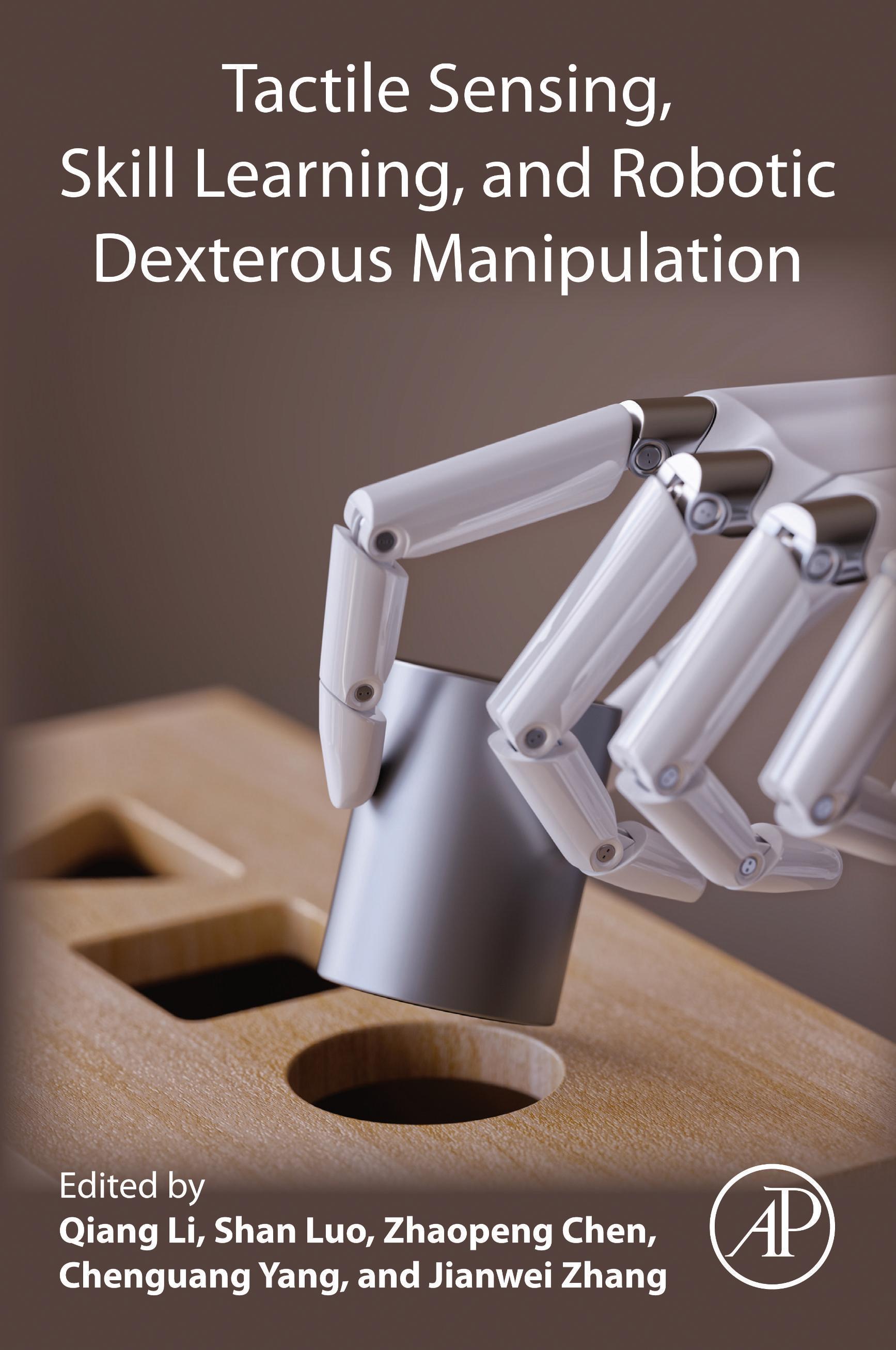

Tactile Sensing, Skill Learning, and Robotic Dexterous Manipulation

Edited by Qiang Li

Center for Cognitive Interaction Technology, Bielefeld University, Bielefeld, Germany

Shan Luo

Department of Computer Science, University of Liverpool, Liverpool, United Kingdom

Zhaopeng Chen

Faculty of Mathematics, Informatics and Natural Science, Department Informatics, University of Hamburg, Hamburg, Germany

Chenguang Yang

Bristol Robotics Lab, University of the West of England, Bristol, United Kingdom

Jianwei Zhang

Faculty of Mathematics, Informatics and Natural Science, Department Informatics, University of Hamburg, Hamburg, Germany

Academic Press is an imprint of Elsevier
125 London Wall, London EC2Y 5AS, United Kingdom
525 B Street, Suite 1650, San Diego, CA 92101, United States
50 Hampshire Street, 5th Floor, Cambridge, MA 02139, United States
The Boulevard, Langford Lane, Kidlington, Oxford OX5 1GB, United Kingdom

Copyright © 2022 Elsevier Inc. All rights reserved.

MATLAB® is a trademark of The MathWorks, Inc. and is used with permission. The MathWorks does not warrant the accuracy of the text or exercises in this book. This book's use or discussion of MATLAB® software or related products does not constitute endorsement or sponsorship by The MathWorks of a particular pedagogical approach or particular use of the MATLAB® software.

No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage and retrieval system, without permission in writing from the publisher. Details on how to seek permission, further information about the Publisher's permissions policies and our arrangements with organizations such as the Copyright Clearance Center and the Copyright Licensing Agency, can be found at our website: www.elsevier.com/permissions.

This book and the individual contributions contained in it are protected under copyright by the Publisher (other than as may be noted herein).

Notices

Knowledge and best practice in this field are constantly changing. As new research and experience broaden our understanding, changes in research methods, professional practices, or medical treatment may become necessary.

Practitioners and researchers must always rely on their own experience and knowledge in evaluating and using any information, methods, compounds, or experiments described herein. In using such information or methods they should be mindful of their own safety and the safety of others, including parties for whom they have a professional responsibility.

To the fullest extent of the law, neither the Publisher nor the authors, contributors, or editors, assume any liability for any injury and/or damage to persons or property as a matter of products liability, negligence or otherwise, or from any use or operation of any methods, products, instructions, or ideas contained in the material herein.

ISBN: 978-0-323-90445-2

For information on all Academic Press publications visit our website at https://www.elsevier.com/books-and-journals

Publisher: Mara Conner

Acquisitions Editor: Yura R. Sonnini

Editorial Project Manager: Emily Thomson

Production Project Manager: Prasanna Kalyanaraman

Designer: Victoria Pearson

Typeset by VTeX

Tactile sensing and perception

1. GelTip tactile sensor for dexterous manipulation in clutter

Daniel Fernandes Gomes and Shan Luo

1.1 Introduction

Jiaqi Jiang and Shan Luo

2.6.1 Visual guidance for touch sensing

2.6.2 Guided tactile crack perception

2.6.3 Experimental setup

2.6.4 Experimental results

2.7 Conclusion and discussion

3. Multimodal perception for dexterous manipulation

Guanqun Cao and Shan Luo

3.1 Introduction

3.2 Visual-tactile cross-modal generation

3.2.2 Experimental results

3.3 Spatiotemporal attention model for tactile texture perception

3.3.1 Spatiotemporal attention model

3.3.2 Spatial attention

3.3.3 Temporal attention

3.3.4 Experimental results

3.3.5 Attention distribution visualization

3.4 Conclusion and discussion

4. Capacitive material detection with machine learning for robotic grasping applications

Hannes Kisner, Yitao Ding, and Ulrike Thomas

4.2.1 Capacitance perception

4.3.1 Data preparation

4.3.2 Classifier configurations

5. Admittance control: learning from humans through collaborating with humans

Ning Wang and Chenguang Yang

5.1 Introduction

5.2 Learning from human based on admittance control

5.2.1 Learning a task using dynamic movement primitives

5.2.2 Admittance control model

5.2.3 Learning of compliant movement profiles based on biomimetic control

5.3 Experimental validation

5.3.1 Simulation task

5.3.2 Handover task

5.3.3 Sawing task

5.4 Human robot collaboration based on admittance control

5.4.1 Principle of human arm impedance model

5.4.2 Estimation of stiffness matrix

5.4.3 Stiffness mapping between human and robot arm

5.5 Variable admittance control model

5.6 Experiments

5.6.1 Test of variable admittance control

5.6.2 Human–robot collaborative sawing task

5.7 Conclusion

References

6. Sensorimotor control for dexterous grasping – inspiration from human hand

Ke Li

6.1 Introduction of sensorimotor control for dexterous grasping

6.2 Sensorimotor control for grasping kinematics

6.3 Sensorimotor control for grasping kinetics

6.4 Conclusions

7. From human to robot grasping: force and kinematic synergies

Abdeldjallil Naceri, Nicolò Boccardo, Lorenzo Lombardi, Andrea Marinelli, Diego Hidalgo, Sami Haddadin, Matteo Laffranchi, and Lorenzo De Michieli

7.1 Introduction

7.1.1 Human hand synergies

7.1.2 The impact of the synergies approach on robotic hands

7.2 Experimental studies

7.2.1 Study 1: force synergies comparison between human and robot hands

7.2.2 Results of force synergies study

7.2.3 Study 2: kinematic synergies in both human and robot hands

7.2.4 Results of kinematic synergies study

7.3 Discussion

7.3.1 Force synergies: human vs. robot

7.3.2 Kinematic synergies: human vs. robot

7.4 Conclusions

8. Learning form-closure grasping with attractive region in environment

Rui Li, Zhenshan Bing, and Qi Qi

8.1 Background

8.2 Related work

8.2.1 Closure properties

8.2.2 Environmental constraints

8.2.3 Learning to grasp

8.3 Learning a form-closure grasp with attractive region in environment

8.3.1 Attractive region in environment for four-pin grasping

8.3.2 Learning to evaluate grasp quality with ARIE

8.3.3 Learning to grasp with ARIE

8.4 Conclusion

References

9. Learning hierarchical control for robust in-hand manipulation

Tingguang Li

9.1 Introduction

9.2 Related work

9.3 Methodology

9.3.1 Hierarchical structure for in-hand manipulation

9.3.2 Low-level controller

9.3.3 Mid-level controller

9.4 Experiments

9.4.1 Training mid-level policies and baseline

9.4.2 Dataset

9.4.3 Reaching desired object poses

9.4.4 Robustness analysis

9.4.5 Manipulating a cube

9.5 Conclusion

10. Learning industrial assembly by guided-DDPG

Yongxiang Fan

10.1 Introduction

10.2 From model-free RL to model-based RL

10.2.1 Guided policy search

10.2.2 Deep deterministic policy gradient

10.2.3 Comparison of DDPG and GPS

10.3 Guided deep deterministic policy gradient

10.4 Simulations and experiments

10.4.1 Parameter lists

10.4.2 Simulation results

10.4.3 Experimental results

10.5 Chapter summary

References

Part III

Robotic hand adaptive control

11. Clinical evaluation of Hannes: measuring the usability of a novel polyarticulated prosthetic hand

Marianna Semprini, Nicolò Boccardo, Andrea Lince, Simone Traverso, Lorenzo Lombardi, Antonio Succi, Michele Canepa, Valentina Squeri, Jody A. Saglia, Paolo Ariano, Luigi Reale, Pericle Randi, Simona Castellano, Emanuele Gruppioni, Matteo Laffranchi, and Lorenzo De Michieli

11.1 Introduction

11.2 Preliminary study

11.2.1 Data collection

11.2.2 Outcomes

11.3 The Hannes system

11.3.1 Analysis of survey study and definition of requirements

11.3.2 System architecture

11.4 Pilot study for evaluating the Hannes hand

11.4.1 Materials and methods

11.4.2 Results

11.5 Validation of custom EMG sensors

11.5.1 Materials and methods

11.5.2 Results

11.6 Discussion and conclusions

References

12. A hand-arm teleoperation system for robotic dexterous manipulation

Shuang Li, Qiang Li, and Jianwei Zhang

12.1 Introduction

12.2 Problem formulation

12.3 Vision-based teleoperation for dexterous hand

12.3.1 Transteleop

12.3.2 Pair-wise robot–human hand dataset generation

12.4 Hand-arm teleoperation system

12.5 Transteleop evaluation

12.5.1 Network implementation details

12.5.2 Transteleop evaluation

12.5.3 Hand pose analysis

12.6 Manipulation experiments

13. Neural network-enhanced optimal motion planning for robot manipulation under remote center of motion

Hang Su and Chenguang Yang

13.1 Introduction

13.2 Problem statement

13.2.1 Kinematics modeling

13.2.2 RCM constraint

13.3 Control system design

13.3.1 Controller design method

13.3.2 RBFNN-based approximation

13.3.3 Control framework

13.4 Simulation results

14. Towards dexterous in-hand manipulation of unknown objects

Qiang Li, Robert Haschke, and Helge Ritter

14.1 Introduction

14.3 Reactive object manipulation framework

14.3.1 Local manipulation controller – position part

14.3.2 Local manipulation controller – force part

14.3.3 Local manipulation controller – composite part

14.3.4 Regrasp planner

14.4 Finding optimal regrasp points

14.4.1 Grasp stability and manipulability

14.4.2 Object surface exploration controller

14.5 Evaluation in physics-based simulation

14.5.1 Local object manipulation

14.5.2 Large-scale object manipulation

14.6 Evaluation in a real robot experiment

14.6.1 Unknown object surface exploration by one finger

14.6.2 Unknown object local manipulation by two fingers

14.7 Summary and outlook

15. Robust dexterous manipulation and finger gaiting under various uncertainties

Yongxiang Fan

15.1 Introduction

15.2 Dual-stage manipulation and gaiting framework

15.3 Modeling of uncertain manipulation dynamics

15.3.1 State-space dynamics

15.3.2 Combining feedback linearization with modeling

15.4 Robust manipulation controller design

15.4.1 Design scheme

15.4.2 Design of weighting functions

15.4.3 Manipulation controller design

15.5 Real-time finger gaits planning

15.5.1 Grasp quality analysis

15.5.2 Position-level finger gaits planning

15.5.3 Velocity-level finger gaits planning

15.5.4 Similarities between position-level and velocity-level planners

15.5.5 Finger gaiting with jump control

15.6 Simulation and experiment studies

15.6.1 Simulation setup

15.6.2 Experimental setup

15.6.3 Parameter lists

15.6.4 RMC simulation results

15.6.5 RMC experiment results

15.6.6 Finger gaiting simulation results

15.7 Chapter summary

References

A. Key components of dexterous manipulation: tactile sensing, skill learning, and adaptive control

Qiang Li, Shan Luo, Zhaopeng Chen, Chenguang Yang, and Jianwei Zhang

Contributors

Paolo Ariano, Center for Sustainable Future Technologies, Istituto Italiano di Tecnologia, Torino, Italy

Zhenshan Bing, Department of Informatics, Technical University of Munich, Munich, Germany

Nicolò Boccardo, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Michele Canepa, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Guanqun Cao, smARTLab, Department of Computer Science, University of Liverpool, Liverpool, United Kingdom

Simona Castellano, Centro Protesi INAIL, Vigorso di Budrio (BO), Italy

Zhaopeng Chen, University of Hamburg, Faculty of Mathematics, Informatics and Natural Science, Department Informatics, Group TAMS, Hamburg, Germany

Lorenzo De Michieli, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Yitao Ding, Lab of Robotics and Human Machine Interaction, Chemnitz University of Technology, Chemnitz, Germany

Yongxiang Fan, FANUC Advanced Research Laboratory, FANUC America Corporation, Union City, CA, United States

Daniel Fernandes Gomes, smARTLab, Department of Computer Science, University of Liverpool, Liverpool, United Kingdom

Emanuele Gruppioni, Centro Protesi INAIL, Vigorso di Budrio (BO), Italy

Sami Haddadin, Chair of Robotics and Systems Intelligence, Munich Institute of Robotics and Machine Intelligence (MIRMI), Technical University of Munich (TUM), Munich, Germany

Centre for Tactile Internet with Human-in-the-Loop (CeTI), Dresden, Germany

Robert Haschke, Center for Cognitive Interaction Technology (CITEC), Bielefeld University, Bielefeld, Germany

Diego Hidalgo, Chair of Robotics and Systems Intelligence, Munich Institute of Robotics and Machine Intelligence (MIRMI), Technical University of Munich (TUM), Munich, Germany
Centre for Tactile Internet with Human-in-the-Loop (CeTI), Dresden, Germany

Jiaqi Jiang, smARTLab, Department of Computer Science, University of Liverpool, Liverpool, United Kingdom

Hannes Kisner, Lab of Robotics and Human Machine Interaction, Chemnitz University of Technology, Chemnitz, Germany

Matteo Laffranchi, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Ke Li, Institute of Intelligent Medicine Research Center, Department of Biomedical Engineering, Shandong University, Jinan, Shandong, China

Qiang Li, University of Bielefeld, Bielefeld, Germany
Center for Cognitive Interaction Technology (CITEC), Bielefeld University, Bielefeld, Germany

Rui Li, School of Automation, Chongqing University, Chongqing, China

Shuang Li, Universität Hamburg, Hamburg, Germany

Tingguang Li, Tencent Robotics X, Shenzhen, China

Andrea Lince, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Lorenzo Lombardi, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Shan Luo, smARTLab, Department of Computer Science, University of Liverpool, Liverpool, United Kingdom
Department of Computer Science, University of Liverpool, Liverpool, United Kingdom

Andrea Marinelli, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Abdeldjallil Naceri, Chair of Robotics and Systems Intelligence, Munich Institute of Robotics and Machine Intelligence (MIRMI), Technical University of Munich (TUM), Munich, Germany

Qi Qi, School of Automation, Chongqing University, Chongqing, China

Pericle Randi, Centro Protesi INAIL, Vigorso di Budrio (BO), Italy

Luigi Reale, Area sanità e salute, ISTUD Foundation, Milano, Italy

Helge Ritter, Center for Cognitive Interaction Technology (CITEC), Bielefeld University, Bielefeld, Germany

Jody A. Saglia, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Marianna Semprini, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy


Valentina Squeri, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Hang Su, Dipartimento di Elettronica, Informazione e Bioingegneria, Politecnico di Milano, Milano, Italy

Antonio Succi, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Ulrike Thomas, Lab of Robotics and Human Machine Interaction, Chemnitz University of Technology, Chemnitz, Germany

Simone Traverso, Rehab Technologies Lab, Istituto Italiano di Tecnologia, Genova, Italy

Ning Wang, Bristol Robotics Laboratory, University of the West of England, Bristol, United Kingdom

Chenguang Yang, Bristol Robotics Laboratory, University of the West of England, Bristol, United Kingdom

Jianwei Zhang, Universität Hamburg, Hamburg, Germany
University of Hamburg, Faculty of Mathematics, Informatics and Natural Science, Department Informatics, Group TAMS, Hamburg, Germany


Preface

Dexterous manipulation is a very challenging research topic, and it is required in countless applications in the industrial, service, marine, space, and medical robot domains. The relevant tasks include pick-and-place, peg-in-hole, advanced grasping, in-hand manipulation, physical human–robot interaction, and even complex bimanual manipulation. Since the 1990s, mathematical manipulation theories have been developed (A Mathematical Introduction to Robotic Manipulation, R.M. Murray, Z.X. Li, and S.S. Sastry, 1994), and we have witnessed many impressive simulations and real demonstrations of dexterous manipulation. Most of them need to assume:

1. an accurate geometric/physical model of the object and a known kinematic/dynamic model of the robotic arm/hand, and

2. that the robot has the dexterous manipulation skills for the given task.

Unfortunately, as these two strong assumptions can only hold in a theoretical model, a physics simulation, or a well-customized structured environment, previous research work has been biased towards motion planning and implementation. Because of the inherent uncertainty of the real world, simulation results are relatively fragile when deployed in real applications, and manipulation is prone to fail if the assumptions deviate from the real robot and object model. The demonstrated experiments will also fail if the structured environment is changed. Examples include changes in the kinematic/dynamic models due to wear and tear, imperfect hand–eye calibration, and a change in the manipulated object.

In order to deal with the uncertainty arising from dynamic interaction and implementation, it is necessary to exploit sensors and the sensory-control loop to improve the robots' dexterous capability and robustness. Currently, the best-developed sensor feedback in robotics is vision. Vision-based perception and control have largely improved the robustness of robots in real applications. One missing aspect of vision-powered robots is their application in the context of contact. This gap exists mainly because vision is not the best modality to measure and monitor contact, owing to occlusion and noisy measurements. On this point, tactile sensing is a crucial complementary modality for extracting the unknown contact/object information required by manipulation theories. It provides the most practical and direct information for object perception and action decisions.

Apart from sensor feedback, another unresolved issue for dexterous manipulation is how to generate the robot's motion/force trajectories, i.e., the skills for the tasks. Given the diversity of the tasks, it is impractical to hard-code all kinds of manipulation skills for robotic arms and hands. Inspired by imitation, one solution is to extract, represent, and generalize these skills from human demonstration. The robots then use adaptive controllers to implement the learned skills on the robotic arm and hand. In recent years we have also seen researchers combine skill representation and transfer in one step, exploring and learning the dexterous controller automatically.

In this edited book, we invited researchers working in three research directions (tactile sensing, skill learning, and adaptive control) to draw a complete picture of dexterous robotic manipulation. All of them have top-level publication records in the robotics field. We are confident that the contributed chapters can provide both scientists and engineers with an up-to-date introduction to these dynamic and developing domains, and present the advanced sensors, perception, and control algorithms that will inform important research directions and have a significant impact on our future lives. Concretely, readers can gain the following knowledge from this book:

1. tactile sensing and its applications to the property recognition and reconstruction of unknown objects;

2. human grasping and dexterous skill representation and learning;

3. the adaptive control scheme and its learning by imitation and exploration;

4. concrete applications showing how robots can improve their dexterity through modern tactile sensing, interactive perception, learning, and adaptive control approaches.

As editors, we believe that synthesizing intelligent tactile perception, skill learning, and adaptive control is an essential path to advancing state-of-the-art dexterous robotic manipulation. We hope that readers will enjoy reading this book and find it useful for their research journey. We would like to thank all authors, and we are grateful for support from the DEXMAN project funded by the Deutsche Forschungsgemeinschaft (DFG) and the Natural Science Foundation of China (NSFC) (Project number: 410916101), support from the DFG/NSFC Transregio Collaborative Project TRR169 “Crossmodal Learning,” and support from the EPSRC project “ViTac: Visual-Tactile Synergy for Handling Flexible Materials” (EP/T033517/1). We also express our appreciation to Emily Thomson and Sonnini Ruiz Yura from Elsevier for their encouragement and coordination in making this book possible.

Qiang Li, Shan Luo, Zhaopeng Chen, Chenguang Yang, Jianwei Zhang
Bielefeld

June 2021

GelTip tactile sensor for dexterous manipulation in clutter

Daniel Fernandes Gomes and Shan Luo
smARTLab, Department of Computer Science, University of Liverpool, Liverpool, United Kingdom

1.1 Introduction

Like humans, robots need to make use of tactile sensing when performing dexterous manipulation tasks in cluttered environments such as homes and warehouses. In such cases, the positions and shapes of objects are uncertain, and it is of critical importance to sense and adapt to the cluttered scene. With cameras, Lidars, and other remote sensors, large areas can be assessed instantly [1]. However, measurements obtained using such sensors often suffer from large uncertainties, occlusions, and variations in factors such as lighting conditions and shadows. Thanks to the direct interaction with the object, tactile sensing can reduce the measurement uncertainties of remote sensors, and it is not affected by changes in these surrounding conditions. Furthermore, tactile sensing captures information about the physical interactions between the objects and the robot end-effector that is often not accessible via remote sensors, e.g., incipient slip, collisions, and the detailed geometry of the object. As dexterous manipulation requires precise information about the interactions with the object, especially at moments of contact or near-contact, it is crucial to obtain the accurate measurements provided by tactile sensing. For instance, misestimating the size of an object by 1 mm, or its surface friction coefficient, during (and also right before) a grasp might result in severely damaging the tactile sensor or dropping the object. In contrast, misestimating the object shape by a few centimeters will not have a big impact on the manipulation. To this end, camera vision and other remote sensors can be used to produce initial estimates of the object and plan manipulation actions, whereas tactile sensing can be used to refine such estimates and facilitate in-hand manipulation [2,3].

Tactile Sensing, Skill Learning, and Robotic Dexterous Manipulation. https://doi.org/10.1016/B978-0-32-390445-2.00008-8
Copyright © 2022 Elsevier Inc. All rights reserved.

The use of tactile sensors for manipulation tasks has been studied since [4], and in the past years a wide range of tactile sensors and working principles have been proposed in the literature [2,3,5], ranging from flexible electronic skins [6], fiber optic-based sensors [7], and capacitive tactile sensors [8] to camera-based optical tactile sensors [9,10], many of which have been employed to aid robotic grasping [11]. Electronic tactile skins and flexible capacitive tactile sensors can be adapted to different body parts of the robot that have various curvatures and geometric shapes. However, due to the need for dielectrics in each sensing element, they produce tactile readings of considerably low resolution. For example, the WTS tactile sensor from Weiss Robotics used in [12–14] has 14 × 6 taxels (tactile sensing elements). In contrast, camera-based optical tactile sensors provide higher-resolution tactile images. On the other hand, they usually have a bulkier shape, because they must host the camera and leave a gap between the camera and the tactile membrane.
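To put the resolution gap into numbers, the short Python sketch below counts the sensing elements of the 14 × 6 WTS array quoted above and of a camera image. The 640 × 480 camera resolution is a hypothetical value chosen only for illustration, not the specification of any sensor discussed in this chapter. A pixel count is also only an upper bound: the elastomer and the optics blur the image, so the effective tactile resolution of an optical sensor is lower than its raw pixel count.

```python
def element_count(rows: int, cols: int) -> int:
    """Number of sensing elements (taxels or pixels) in a rows x cols grid."""
    return rows * cols


# WTS tactile sensor from Weiss Robotics, as quoted in the text: 14 x 6 taxels.
wts_taxels = element_count(14, 6)

# Hypothetical camera-based optical tactile sensor: a 640 x 480 image (assumed value).
camera_pixels = element_count(640, 480)

ratio = camera_pixels / wts_taxels
print(f"{wts_taxels} taxels vs {camera_pixels} pixels (~{ratio:.0f}x more samples)")
```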

Optical tactile sensors can be grouped into two main families, marker-based and image-based, with the former pioneered by the TacTip sensors [15] and the latter by the GelSight sensors [16]. As the names suggest, marker-based sensors track markers printed on a soft domed membrane to perceive the membrane displacement and the resulting contact forces. By contrast, image-based sensors directly perceive the raw membrane, using a variety of image recognition methods to recognize textures, localize contacts, reconstruct the membrane deformations, etc. Because of their different working mechanisms, marker-based sensors measure the surface on a lower-resolution grid of points, whereas image-based sensors make use of the full resolution provided by the camera. Some GelSight sensors have also been produced with markers printed on the sensing membrane [17], enabling marker-based and image-based methods to be used with the same sensor. Both families of sensors have been produced with either flat sensing surfaces or domed/finger-shaped surfaces.
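For marker-based sensors, the membrane displacement field can be estimated by matching each marker in an undeformed reference frame to its position in the current frame. The NumPy sketch below uses greedy nearest-neighbour matching with a hypothetical 5-pixel gating distance. It assumes the marker centroids have already been detected, and it is an illustrative simplification rather than the tracking method of the TacTip or GelSight sensors; robust trackers typically enforce one-to-one assignments and temporal consistency, which this sketch does not.

```python
import numpy as np


def marker_displacements(ref, cur, max_dist=5.0):
    """Displacement of each reference marker to its nearest marker in the current frame.

    ref: (N, 2) marker centroids in the undeformed reference image (pixels).
    cur: (M, 2) marker centroids detected in the current image (pixels).
    max_dist: gating distance in pixels (hypothetical default).
    Returns an (N, 2) float array; a row is NaN when no current marker lies
    within max_dist (e.g. the marker was occluded or not detected).
    """
    ref = np.asarray(ref, dtype=float)
    cur = np.asarray(cur, dtype=float)
    disp = np.full(ref.shape, np.nan)
    if len(cur) == 0:
        return disp
    # Pairwise Euclidean distances between reference and current markers, shape (N, M).
    dist = np.linalg.norm(ref[:, None, :] - cur[None, :, :], axis=-1)
    nearest = dist.argmin(axis=1)
    ok = dist[np.arange(len(ref)), nearest] <= max_dist
    disp[ok] = cur[nearest[ok]] - ref[ok]
    return disp


# Example: the membrane shifts by (1.0, 0.5) px and the third marker is not detected.
ref = np.array([[10.0, 10.0], [20.0, 10.0], [30.0, 10.0]])
cur = np.array([[11.0, 10.5], [21.0, 10.5]])
disp = marker_displacements(ref, cur)
mean_shift = np.nanmean(disp, axis=0)  # average displacement of the matched markers
```

Averaging the valid rows gives a crude estimate of in-plane shear; the per-marker vectors themselves form the sparse displacement grid mentioned in the text.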

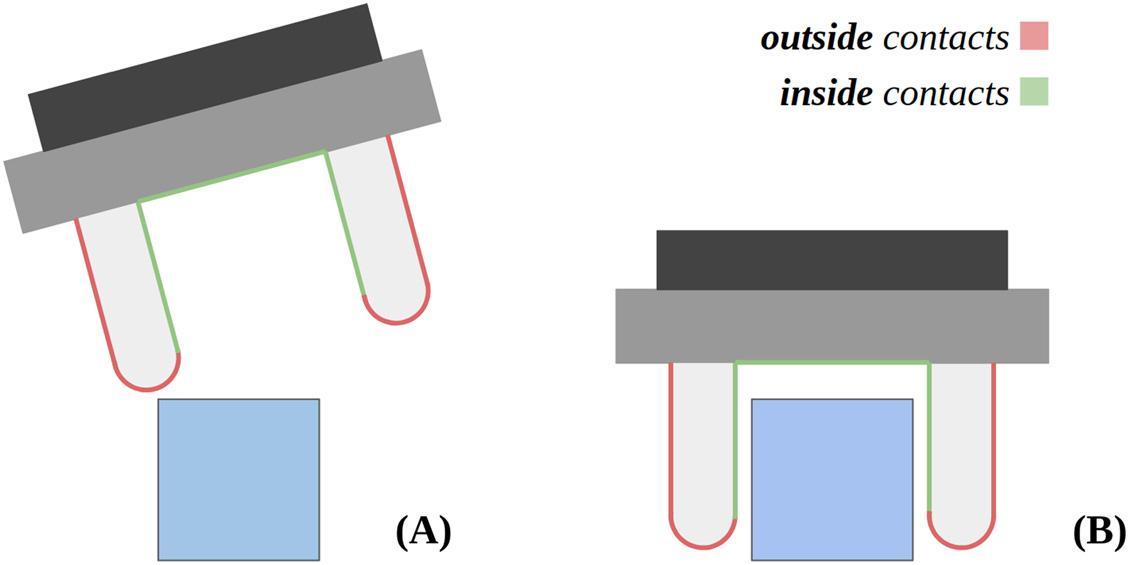

In this chapter, we will first review existing optical tactile sensors in Section 1.2, and then look in detail at one example of such image-based tactile sensors, the GelTip [18,19], in Section 1.3. The GelTip is shaped like a finger, so it can be installed on traditional, off-the-shelf grippers to replace their fingers, enabling contacts to be sensed both inside and outside the grasp closure, as shown in Fig. 1.1. In Section 1.4, we will look into experiments carried out with the GelTip sensor that demonstrate how contacts can be localized and, more importantly, the advantages, and possibly the necessity, of leveraging all-around touch sensing in dexterous manipulation tasks in clutter. In particular, experiments carried out in a Blocks World environment show that the contacts detected on the fingers can be used to adapt planned actions during the different phases of the reach-to-grasp motion.

1.2 An overview of the tactile sensors

Compared to remote sensors like cameras, tactile sensors are designed to assess the properties of objects via physical interaction, e.g., geometry, texture, humidity, and temperature. A large range of working principles have been proposed in the literature over the past decades [2,5,20]. An optical tactile sensor uses a camera enclosed within its shell and pointing at its tactile membrane (an opaque window membrane made of a soft material) to capture the properties of objects from the deformations that the in-contact object causes to the membrane. Because the membrane is opaque and the camera is enclosed, the captured tactile images are not affected by variations in external illumination. To perceive the elastomer deformations from the captured tactile images, multiple working principles have been proposed. We group these approaches into two categories: marker tracking and raw image analysis. Optical tactile sensors contrast with electronic tactile skins, which are usually thinner and less bulky. Such skins are flexible and can adapt to different body parts of the robot that have various curvatures and geometric shapes. However, each sensing element of most tactile skins, e.g., a capacitive transducer, is a few square millimeters or even centimeters in size, which limits the spatial resolution of the skin. We do not cover such skins here, as they are an extensive topic in their own right; instead, we point the reader to two surveys that cover these sensors extensively [21,22].

FIGURE 1.1 There are two distinct areas of contact highlighted in the robot gripper during a manipulation task: (A) outside contacts when the robot is probing or steering the object to be grasped; (B) inside contacts when the object is within the grasp closure, which can guide the grasping.
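The raw-image-analysis category can be illustrated with the simplest possible pipeline: subtract an image captured with nothing touching the membrane, threshold the per-pixel change, and take the centroid of the changed pixels as the contact location. The NumPy sketch below is a hedged illustration under stated assumptions (static internal illumination, a stored no-contact reference frame, and a hand-picked threshold); the function names and the threshold are hypothetical. It works in image coordinates only and does not attempt to map pixels onto a curved, finger-shaped membrane such as the GelTip's.

```python
import numpy as np


def contact_mask(reference, frame, threshold=0.1):
    """Pixels whose intensity changed by more than `threshold` w.r.t. a no-contact reference.

    reference, frame: (H, W) or (H, W, 3) images scaled to [0, 1].
    threshold: hypothetical value; in practice it would be tuned to the sensor's noise.
    """
    diff = np.abs(np.asarray(frame, dtype=float) - np.asarray(reference, dtype=float))
    if diff.ndim == 3:
        diff = diff.max(axis=-1)  # keep the strongest change across colour channels
    return diff > threshold


def contact_centroid(mask):
    """(row, col) centroid of the changed region, or None when nothing is in contact."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return float(rows.mean()), float(cols.mean())


# Example: an 8 x 8 grey image in which a 2 x 2 patch darkens under contact.
reference = np.full((8, 8), 0.5)
frame = reference.copy()
frame[2:4, 5:7] = 0.2
centroid = contact_centroid(contact_mask(reference, frame))
```

With several simultaneous contacts, the mask would first need to be split into connected regions before taking centroids.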

1.2.1 Marker-based optical tactile sensors

The first marker-based sensor was proposed in [23]; more recently, however, an important family of marker-based tactile sensors has emerged: the TacTip family described in [9]. Since its initial dome-shaped version [15], different morphologies have been proposed, including the TacTip-GR2 [24] with a smaller fingertip design, the TacTip-M2 [25] that mimics a large thumb for in-hand linear manipulation experiments, and the TacCylinder for capsule endoscopy applications. Thanks to their miniaturized and adapted designs, the TacTip-M2 [25] and TacTip-GR2 [24] have been used as fingers (or fingertips) in robotic grippers. Although each TacTip sensor introduces some manufacturing improvements or novel surface geometries, they all share the same working principle: white pins are imprinted onto a black membrane and can then be tracked using computer vision methods.
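Because TacTip-style membranes show white pins on a black background, pin detection reduces to finding bright blobs. The sketch below thresholds a grey-scale image and computes the centroid of each 4-connected bright region with a breadth-first flood fill. The threshold and minimum blob area are hypothetical parameters, and the function is an illustration rather than the TacTip software; a practical pipeline would more likely use an optimized routine such as OpenCV's connected-components or blob-detection functions instead of this pure-Python loop.

```python
from collections import deque

import numpy as np


def pin_centroids(image, threshold=0.5, min_area=1):
    """(row, col) centroids of bright 4-connected blobs, i.e. white pins on a dark membrane.

    image: (H, W) grey-scale array scaled to [0, 1].
    threshold, min_area: hypothetical tuning parameters.
    """
    bright = np.asarray(image) > threshold
    seen = np.zeros(bright.shape, dtype=bool)
    height, width = bright.shape
    centroids = []
    for r in range(height):
        for c in range(width):
            if not bright[r, c] or seen[r, c]:
                continue
            # Flood-fill the blob that contains (r, c), collecting its pixels.
            queue = deque([(r, c)])
            seen[r, c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width and bright[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_area:
                ys, xs = zip(*pixels)
                centroids.append((sum(ys) / len(ys), sum(xs) / len(xs)))
    return centroids


# Example: two synthetic pins, a 2 x 2 blob and a 3 x 3 blob.
image = np.zeros((10, 10))
image[1:3, 1:3] = 1.0
image[6:9, 5:8] = 1.0
pins = pin_centroids(image)
```

Centroids are returned in row-major scan order of each blob's first pixel; tracking pins over time then requires matching these centroids between frames.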

As shown in Table 1.1, there are also other optical tactile sensors that track the movements of markers. In [26], an optical tactile sensor named FingerVision is proposed that uses a transparent membrane, which has the advantage of providing proximity sensing. However, the transparent membrane deprives the sensor of the robustness to external illumination variance that optical touch sensing otherwise offers. In [27], semiopaque grids of magenta and yellow markers painted on the top and bottom surfaces of a transparent membrane are proposed, in which the mixture of the two colors is used to detect horizontal displacements of the elastomer. In [28], green fluorescent particles are randomly distributed within the soft elastomer, which has a black opaque coating, so that, according to the authors, a higher number of markers can be tracked and used to predict the interaction with the object. In [29], a sensor with the same membrane construction method, four Raspberry Pi cameras, and fisheye lenses has been proposed for optical tactile skins.

1.2.2 Image-based optical tactile sensors

On the other side of the spectrum, the GelSight sensors, initially proposed in [16], exploit the entire resolution of the tactile images captured by the sensor camera, instead of just tracking markers. Thanks to the soft opaque tactile membrane, the captured images are robust to external light variations and capture information about the geometric structure of the touched surface, unlike most conventional tactile sensors, which measure the contact force. Leveraging the high resolution of the captured tactile images, high-accuracy geometry reconstructions are produced in [31–36]. In [31], this sensor was used as the fingers of a robotic gripper to insert a USB cable into the corresponding port. However, the sensor only measures a small flat area oriented towards the grasp closure. Simulation models of the GelSight sensors have also been created [37,38].
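One classic way to turn shading images into geometry is Lambertian photometric stereo: with several known light directions, the intensity at each pixel is modeled as the albedo times the dot product of the light direction and the surface normal, so the normals follow from a per-pixel least-squares solve. The NumPy sketch below uses this textbook model as a stand-in; it is not necessarily the reconstruction method of [31–36], and GelSight-style pipelines often rely on a calibrated mapping from colour to surface gradient instead. It assumes K ≥ 3 images, each lit from one known unit direction, with no shadows or interreflections; integrating the resulting gradients into a height map (e.g., by solving a Poisson equation) is left out. The function names are hypothetical.

```python
import numpy as np


def estimate_normals(intensities, light_dirs):
    """Lambertian photometric stereo.

    intensities: (K, H, W) images, one per known light direction (K >= 3).
    light_dirs: (K, 3) unit light directions.
    Returns (H, W, 3) unit surface normals.
    """
    k, h, w = intensities.shape
    observations = intensities.reshape(k, -1)  # (K, H*W)
    # Solve light_dirs @ g = I for g = albedo * normal at every pixel at once.
    g, *_ = np.linalg.lstsq(light_dirs, observations, rcond=None)  # (3, H*W)
    albedo = np.linalg.norm(g, axis=0)
    normals = g / np.maximum(albedo, 1e-12)
    return normals.T.reshape(h, w, 3)


def surface_gradients(normals):
    """Gradients dz/dx and dz/dy of the surface z(x, y) from normals (nx, ny, nz)."""
    nz = np.clip(normals[..., 2], 1e-6, None)
    return -normals[..., 0] / nz, -normals[..., 1] / nz


# Synthetic check: a tilted plane whose normal is proportional to (0.2, -0.1, 1),
# i.e. dz/dx = -0.2 and dz/dy = 0.1 everywhere.
true_normal = np.array([0.2, -0.1, 1.0])
true_normal /= np.linalg.norm(true_normal)
lights = np.array([[0.0, 0.0, 1.0], [0.5, 0.0, 0.866], [0.0, 0.5, 0.866]])
lights /= np.linalg.norm(lights, axis=1, keepdims=True)
shading = 0.8 * lights @ true_normal  # albedo 0.8, one intensity per light
images = np.broadcast_to(shading[:, None, None], (3, 4, 4)).copy()
p, q = surface_gradients(estimate_normals(images, lights))
```

With exactly three non-coplanar lights the least-squares solve is exact; additional lights make it an over-determined fit that averages out noise.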

Markers were also added to the membrane of the GelSight sensors, allowing the same set of methods explored for the TacTip sensors to be applied. Other sensor designs and adaptations for robotic fingers are presented in [10,39,40]. In [10], matte aluminum powder was used for improved surface reconstruction, with the LEDs placed next to the elastomer and the elastomer slightly curved on its top/external side. In [39], the GelSlim is proposed, a design in which a mirror is placed at a shallow, oblique angle to achieve a slimmer profile. The camera was placed on the side of the tactile membrane, such that it captures the tactile image reflected onto the mirror. A stretchy textured fabric was also placed on top of the tactile membrane to prevent damage to the elastomer and to improve tactile signal strength. Recently, an even slimmer design of 2 mm has been proposed [41], in which a hexagonal prismatic shaping lens is used to ensure radially symmetrical illumination. In [40], DIGIT is proposed, with a USB "plug-and-play" port and an easily replaceable elastomer secured with a single screw mount.

TABLE 1.1 A summary of influential marker-based optical tactile sensors.

| Sensor | Sensor structure | Illumination and tactile membrane |
|---|---|---|
| TacTip [15] | The TacTip has a domed (finger) shape, 40 × 40 × 85 mm, and tracks 127 pins. It uses the Microsoft LifeCam HD webcam. | The membrane is black on the outside with white pins and is filled with transparent elastomer inside. Initially the membrane was cast from VytaFlex 60 silicone rubber, the pins were painted by hand, and the tip was filled with optically clear silicone gel (Techsil, RTV27905); currently, however, the entire sensor can be 3D printed using a multimaterial printer (Stratasys Objet260 Connex), with the rigid parts printed in Vero White material and the compliant skin in the rubber-like TangoBlack+. |
| TacTip-M2 [25] | It has a thumb-like or semicylindrical shape, 32 × 102 × 95 mm, and tracks 80 pins. | |
| TacTip-GR2 [24] | It has a cone shape with a flat sensing membrane and is smaller than the TacTip, 40 × 40 × 44 mm; it tracks 127 pins and uses the Adafruit SPY PI camera. | |
| TacCylinder [30] | A catadioptric mirror is used to track the 180 markers around the sensor's cylindrical body. | |
| FingerVision [26] | It uses an ELP Co. USBFHD01M-L180 camera with a 180-degree fisheye lens and measures approximately 40 × 47 × 30 mm. | The membrane is transparent, made with Silicones Inc. XP-565, 4 mm thick, with markers spaced 5 mm apart. No internal illumination is used, as the membrane is transparent. |
| Subtractive color mixing [27] | N/A | Two layers of semiopaque colored markers are proposed. SortaClear 12 from Smooth-On, clear and with Ignite pigment, is used to make the inner and outer sides. |
| Green markers [28] | The sensor has a flat sensing surface, measures 50 × 50 × 37 mm, and is equipped with an ELP USBFHD06H RGB camera with a fisheye lens. | It is composed of three layers: a stiff elastomer, a soft elastomer with randomly distributed green fluorescent particles in it, and a black opaque coating. The stiff layer is made of ELASTOSIL® RT 601 RTV-2 and is poured directly on top of the electronics, the soft layer is made of Ecoflex™ GEL (shore hardness 000-35) with the markers mixed in, and the final coat layer is made of ELASTOSIL® RT 601 RTV-2 (shore hardness 10A) black silicone. A custom board with an array of SMD white LEDs is mounted on the sensor base, around the camera. |
| Multicamera skin [29] | It has a flat prismatic shape of 49 × 51 × 17 45 mm. Four Pi cameras are assembled in a 2 × 2 array, and fisheye lenses are used to enable its thin shape. | |