Foreword

Terrence J. Sejnowski

This handbook brings together a wide range of knowledge from many different areas of science and engineering toward the realization of what is called “living machines”— man-made devices having capabilities shared with creatures that evolved in nature. Living machines are not easy to build, for many reasons. First, nature evolved smart materials that are assembled at the molecular level, compared with current technology that is assembled from macroscopic parts; second, bodies are built from the inside out, turning food into materials that are used to replace all the proteins inside cells, which themselves are periodically replaced; third, some of the most difficult problems in seeing, hearing, and moving around have not been solved, and our intuition on how nature has solved them is misleading. Take, for example, vision.

The MIT AI Lab was founded in the 1960s and one of its first projects was a substantial grant from a military research agency to build a robot that could play ping pong. I once heard a story that the principal investigator forgot to ask for money in the grant proposal to build a vision system for the robot, so he assigned the problem to a graduate student as a summer project. I once asked Marvin Minsky whether or not it was a true story. He snapped back that I had it wrong: “We assigned the problem to undergraduate students.” A document from the archives at MIT confirms his version of the story (Papert, 1966).

The intuition that it would be easy to write a vision program is based on what we find easy to do. We are all experts at vision because it is important for our survival and evolution had millions of years to get it right. This misled the early AI pioneers into thinking that writing a vision program would be easy. Little did anyone know in the 1960s that it would take more than 50 years and a million-fold increase in computer power before computer vision would begin to reach human levels of performance (Sejnowski, 2018).

A closer look at vision reveals that we may not even understand what computational problems nature solved. It has been almost universally assumed by researchers in computer vision that the goal of vision is to create an internal model of the outside world. However, a complete and accurate internal model of the world may not be necessary for most practical purposes, and might not even be possible given the low sampling rate of current video cameras. Based on evidence from psychophysics, physiology, and anatomy, Patricia Churchland, V. S. Ramachandran, and I came to the conclusion that the brain represents only a limited part of the world, only what is needed at any moment to carry out the task at hand (Churchland et al., 1994). We have the illusion of high resolution everywhere because we can rapidly reposition our eyes. The apparent modularity of vision also is an illusion. The visual system integrates information from other streams, including signals from the reward system indicating the values of objects in the scene, and the motor system actively seeks information by repositioning sensors, such as moving eyes and, in some species, ears to gather information that may lead to rewarding actions. Sensory systems are not ends in themselves; they evolved to support the motor system, to make movements more efficiently and survival more likely.

Writing computer programs to solve problems was the dominant method used by AI researchers until recently. In contrast, we learn to solve problems through experience with the world. We have many kinds of learning systems, including declarative learning of explicit events and unique objects, implicit perceptual learning, and motor learning. Learning is our special power. Recent advances in speech recognition, object recognition in images, and language translation in AI have been made by deep learning networks inspired by general features found in brains, such as massively parallel simple processing units, a high degree of connectivity between them, and the connection strengths learned through experience (Sejnowski, 2018). There is much more we can learn from nature by observing animal behavior and looking into brains to find the algorithms that are responsible.

Adaptability is essential for surviving in a world that is uncertain and nonstationary. Reinforcement learning, which is found in all invertebrates and vertebrates, is based on exploring the various options in the environment, and learning from their outcomes. The reinforcement learning algorithm found in the basal ganglia of vertebrates, called temporal difference learning, is an online way to learn a sequence of actions to accomplish a goal (Sejnowski et al., 2014). Dopamine cells in the midbrain compute the reward prediction error, which can be used to make decisions and update the value function that predicts future rewards. As learning improves, exploration decreases, eventually leading to pure exploitation of the best strategy found during learning.
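The value update driven by a reward prediction error can be made concrete with a small sketch. The code below is only an illustration under stated assumptions, not a model of the basal ganglia: the chain-shaped task, the function name `td0_chain_values`, and the parameters `alpha` (learning rate) and `gamma` (discount factor) are all hypothetical choices made for the example. The agent simply walks along the chain, so exploration is not modeled here.

```python
def td0_chain_values(n_states=5, episodes=500, alpha=0.1, gamma=0.9):
    """Learn state values on a toy chain with TD(0).

    Hypothetical task: the agent always steps from state s to s + 1 and
    receives a reward of 1.0 only on the final step.
    """
    values = [0.0] * (n_states + 1)  # extra slot: terminal state, value stays 0
    for _ in range(episodes):
        for s in range(n_states):
            s_next = s + 1
            reward = 1.0 if s_next == n_states else 0.0
            # Reward prediction error: what happened minus what was predicted.
            delta = reward + gamma * values[s_next] - values[s]
            # Nudge the prediction of future reward toward the outcome.
            values[s] += alpha * delta
    return values[:n_states]


if __name__ == "__main__":
    for state, value in enumerate(td0_chain_values()):
        print(f"state {state}: predicted future reward = {value:.3f}")
```

With these settings the learned values approach the discounted reward seen from each state, so states closer to the reward carry higher predictions.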

One of the problems with reinforcement learning is that it is very slow, since the only feedback from the world is whether or not reward is received at the end of a long sequence of actions. This is called the temporal credit assignment problem. The limitation of vision discussed earlier, attending to only one object at a time, makes it easier for reinforcement learning to narrow down the number of possible sensory inputs that contribute to obtaining rewards, making this a feature rather than a bug. Reinforcement learning coupled with deep learning networks recently produced AlphaGo, a neural network that defeated the world’s best Go players (Sejnowski, 2018). Go is an ancient board game of great complexity and, until recently, the best Go programs were far beneath the capability of humans. What this remarkable success has revealed is that multiple synergistic learning systems, each of which has limitations, working together can achieve complex behaviors.
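A toy calculation gives a feel for why reward delivered only at the end of a sequence makes learning slow. The sketch below assumes a hypothetical chain of states with a single reward on the last step and a one-step temporal difference update; the function name `states_reached_by_credit` and its parameters are illustrative only. It counts how many states have received any credit after a given number of episodes.

```python
def states_reached_by_credit(n_states=10, episodes=3, alpha=0.5, gamma=0.9):
    """Count states whose value has become nonzero after some episodes.

    Assumed setup: the agent moves from state s to s + 1 on each step and is
    rewarded (1.0) only when it completes the final step of the chain.
    """
    values = [0.0] * (n_states + 1)  # last slot is the terminal state
    for _ in range(episodes):
        for s in range(n_states):
            reward = 1.0 if s + 1 == n_states else 0.0
            # One-step update: credit can only come from the very next state.
            delta = reward + gamma * values[s + 1] - values[s]
            values[s] += alpha * delta
    return sum(1 for v in values[:n_states] if v > 0.0)


if __name__ == "__main__":
    for n_episodes in (1, 3, 10):
        reached = states_reached_by_credit(episodes=n_episodes)
        print(f"after {n_episodes} episode(s): {reached} of 10 states have credit")
```

In this setup credit moves back by one state per episode, so the start of a ten-step sequence learns nothing until the tenth repetition.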

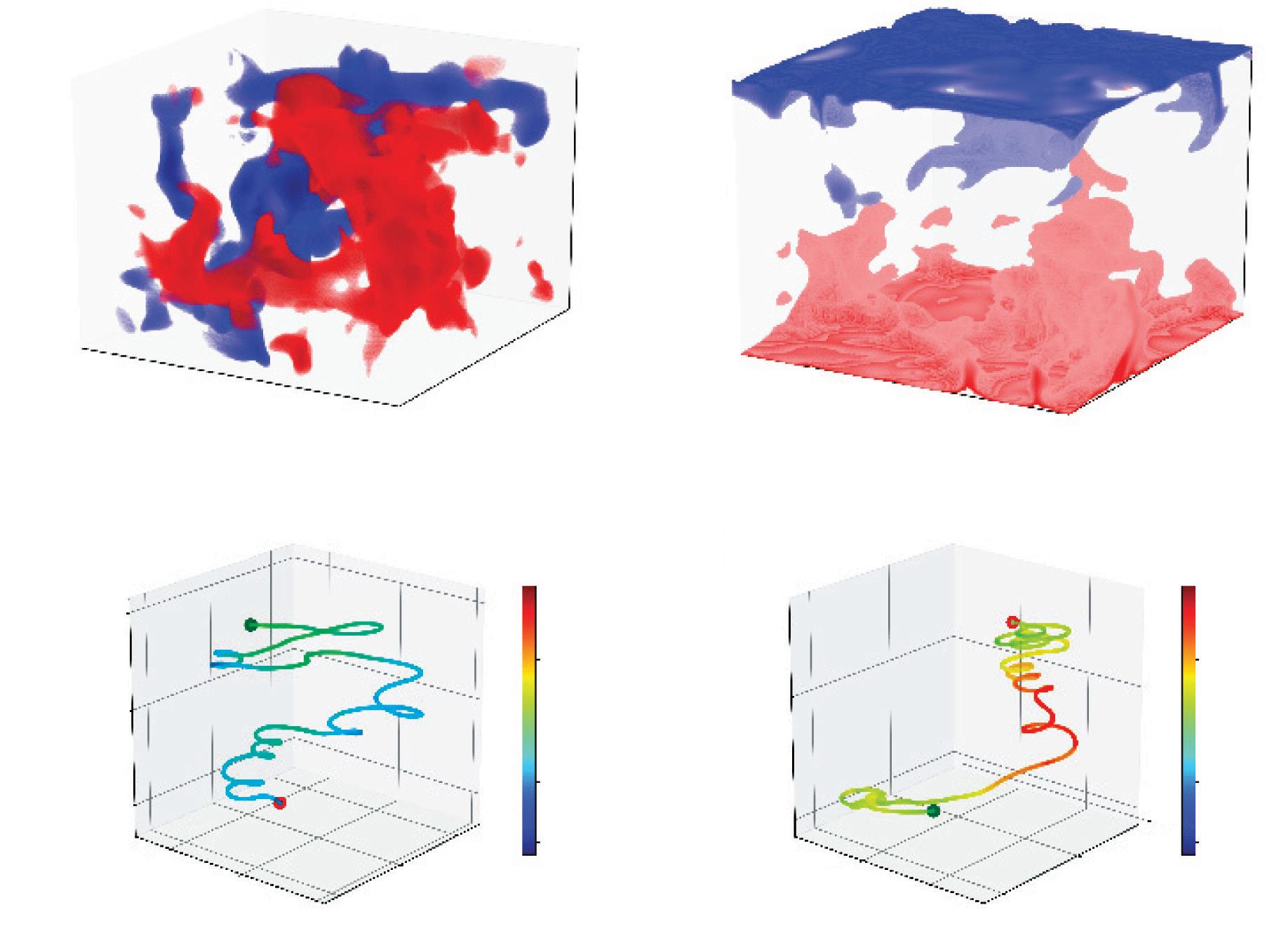

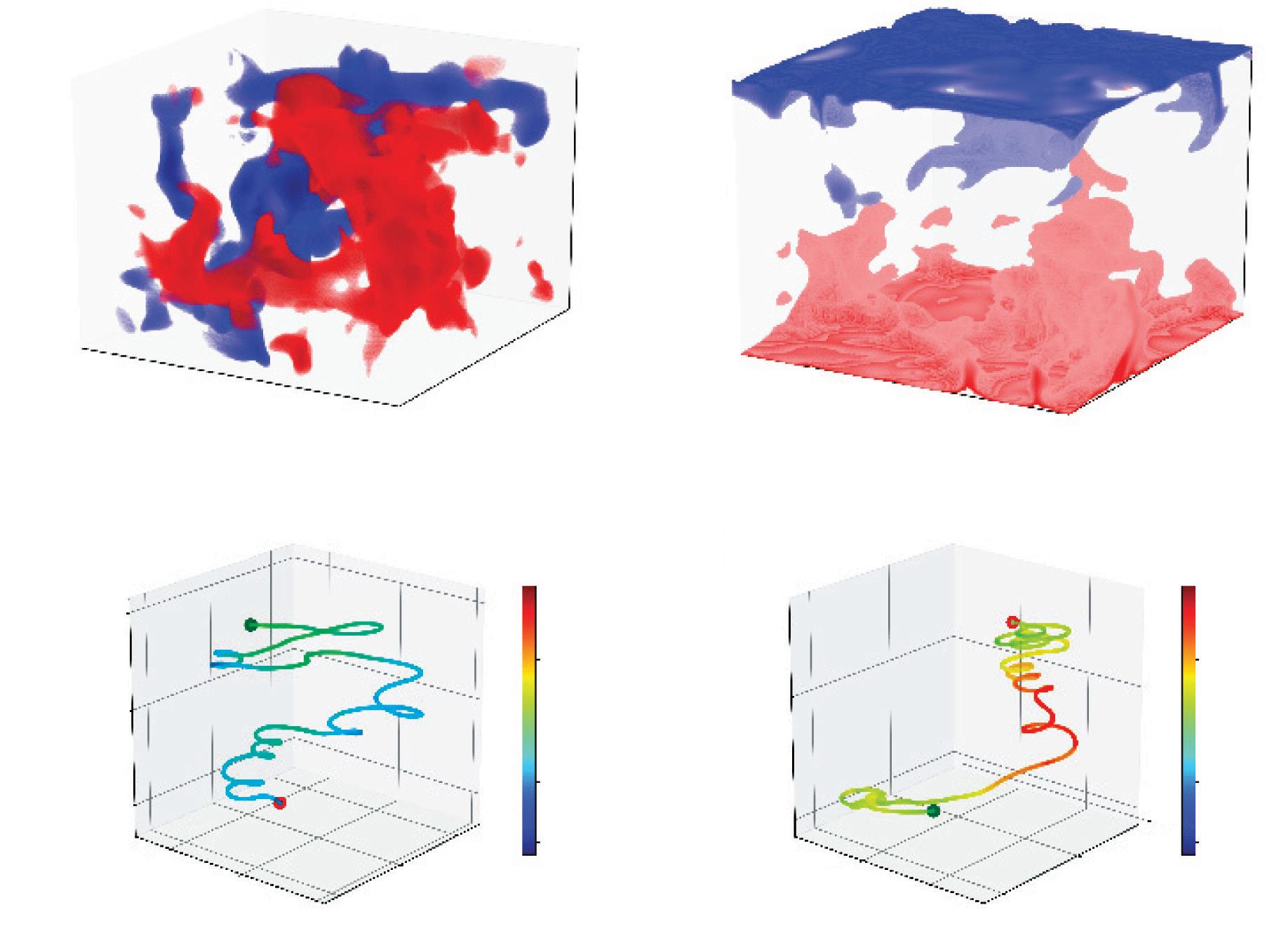

Figure F1 Soaring birds have wingtip feathers that break up the air-flow from below. They also curve these feathers upward to create arrays of small winglets.

Photo courtesy of John Lienhard.

Figure F2 Simulations of a glider learning to soar in a thermal. Lower panels: Snapshots of the vertical velocity (a) and the temperature fields (b) in our numerical simulations of 3D Rayleigh–Bénard convection. For the vertical velocity field, the red and blue colors indicate regions of large upward and downward flow, respectively. For the temperature field, the red and blue colors indicate regions of high and low temperature, respectively. Upper panels: (a) Typical trajectories of an untrained and (b) a trained glider flying within a Rayleigh–Bénard turbulent flow. The colors indicate the vertical wind velocity experienced by the glider. The green and red dots indicate the start and the end points of the trajectory, respectively. The untrained glider takes random decisions and descends, whereas the trained glider flies in characteristic spiraling patterns in regions of strong ascending currents, as observed in the thermal soaring of birds and gliders.

Reproduced from Gautam Reddy, Antonio Celani, Terrence J. Sejnowski, and Massimo Vergassola, Learning to soar in turbulent environments, Proceedings of the National Academy of Sciences of the United States of America, 113 (33), pp. E4877–E4884, Figure 1, doi.org/10.1073/pnas.1606075113, 2016.

Building bodies has proven an even more difficult problem than vision. In the 1980s, a common argument for ignoring nature was that it is foolish to study birds if you want to build a flying machine. But the Wright Brothers, who built the first flying machine, studied gliding birds with great interest and incorporated their observations into the design of wings, as Prescott and Verschure point out in Chapter 1 of Living Machines. Soaring birds often rely on ascending thermal plumes in the atmosphere as they search for prey or migrate across large distances (Figure F1). Recently, reinforcement learning was used to teach a glider to soar in turbulent thermals in simulations (Reddy et al., 2016) and in the field (Reddy et al., in press). The control strategy that was learned produced behavior similar to that of soaring birds (Figure F2). This is another example of how AI is beginning to tackle problems in the real world. Have you noticed that jet planes are sprouting winglets at the tips of wings, similar to the wingtips found on soaring birds (Figure F1)? These save millions of dollars of jet fuel. Evolution has had millions of years to optimize flying and it would be foolish to ignore nature.

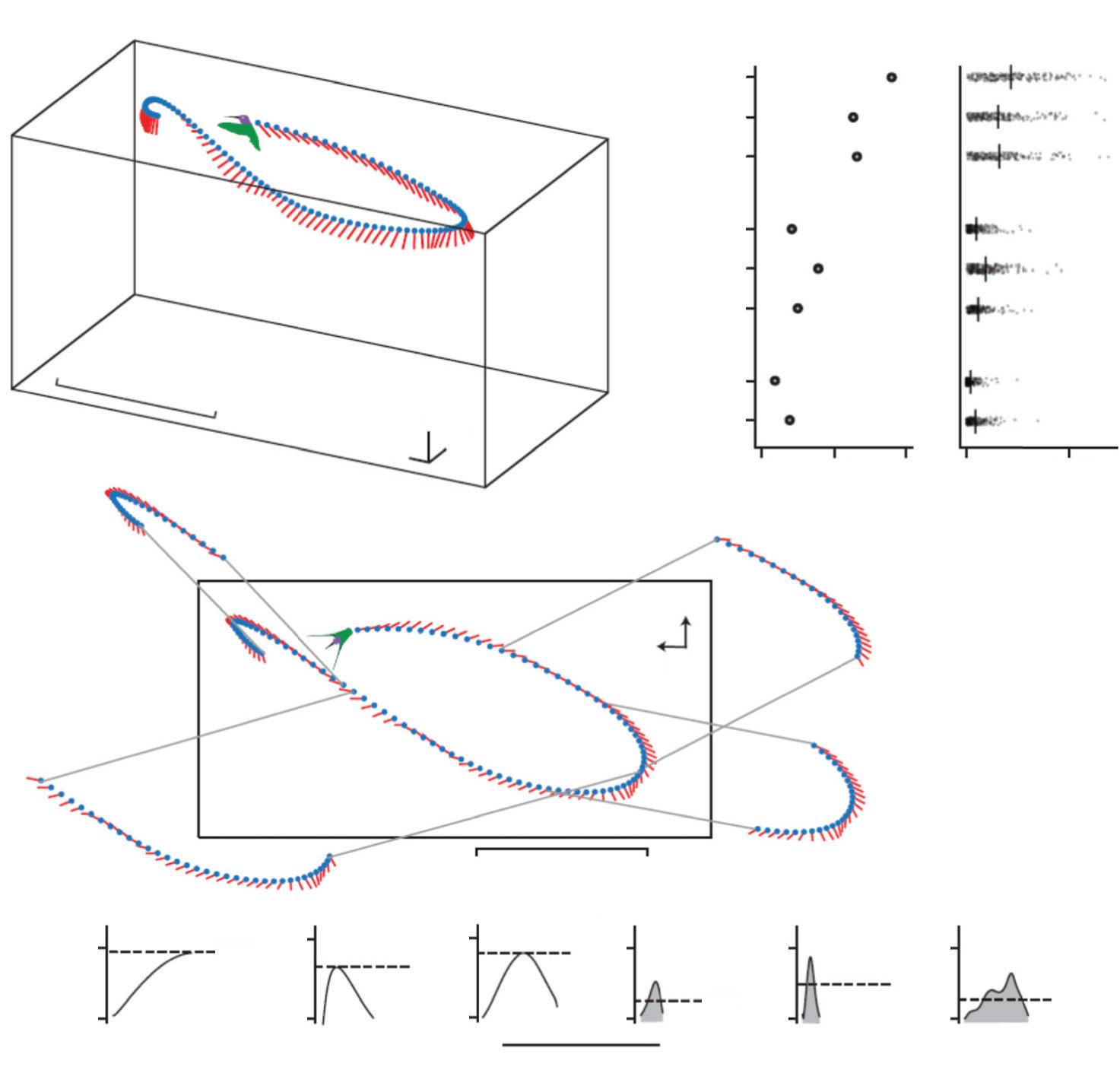

Agility is another holy grail for living machines. Hummingbirds are renowned for their ability to maneuver with great speed and precision. Maneuverability requires the rapid integration of sensory information into control systems to avoid obstacles and reach goals, such as the nectar in flowers. A recent study of over 25 species of hummingbird revealed that fast rotations and sharp turns evolved by recruiting changes in wing morphology, whereas acceleration maneuvers evolved by recruiting changes in muscle capacity (Figure F3) (Dakin et al., 2018).

Figure F3 Flight performance of hummingbirds. (a) A tracking system recorded body position (blue dots) and orientation (red lines) at 200 frames per second. These data were used to identify stereotyped translations, rotations, and complex turns. The sequence in (a) shows a bird performing a pitch-roll turn (PRT) followed by a deceleration (DecHor), an arcing turn (Arc), and an acceleration (AccHor). The sequence duration is 2.5 s, and every fifth frame is shown. (b) The number of translations, rotations, and turns recorded in 200 hummingbirds. (c) Example translations and rotations illustrating the performance metrics.

From Roslyn Dakin, Paolo S. Segre, Andrew D. Straw, Douglas L. Altshuler, Morphology, muscle capacity, skill, and maneuvering ability in hummingbirds, Science, 359 (6376), pp. 653–657, doi: 10.1126/science.aao7104, © 2018 The Authors. Reprinted with permission from AAAS.

Humans invented the wheel for efficient transportation, an engineering achievement that seems to have eluded nature. The reality is that nature was far cleverer and instead invented walking. Take a wheel with a 6-foot radius. As it turns, one spoke after another is in contact with the ground, supporting all the other spokes that are off the ground. A far more efficient design only needs two spokes: the one on the ground and the next one to hit the ground, exchanging them along the way. The bipedal wheel even works well over uneven ground. This illustrates Orgel’s second rule: Evolution is cleverer than you are. Leslie Orgel was a chemist who studied the origin of life at the Salk Institute.

Sensorimotor coordination in living machines is a dynamical process that requires the integration of sensory information with control systems that have a layered architecture: Reflexes in the spinal cord provide rapid correction to unexpected perturbations, but are inflexible; another

Acknowledgments

This book has come about through the contributions of a great many people. We are particularly grateful to the chapter authors for their incredible research in living machines, their inspired writing, and their patience and understanding with the rather long and complex process of assembling the manuscript for this handbook (unfortunately for us, it did not self-assemble as some living machines are able to do!). Beyond those who have directly contributed, there is also a very much larger set of researchers and students, at all career stages—particularly, those that have participated in our conferences and summer schools—who have inspired the ideas in this handbook and have kept us motivated by challenging our preconceptions and delighting us with their energy and enthusiasm. We would particularly like to thank the following for their advice, ideas and encouragement: Ehud Ahissar, Joseph Ayers, Mark Cutkosky, Terrence Deacon, Mathew Diamond, Paul Dean, Marc Desmulliez, Peter Dominey, Frank Grasso, José Halloy, Leah Krubitzer, Maarja Kruusmaa, David Lane, Uriel Martinez, Barbara Mazzolai, Bjorn Merker, Giorgio Metta, Ben Mitchinson, Edvard Moser, Martin Pearson, Roger Quinn, Peter Redgrave, Scott Simon, and Stuart Wilson.

The editors have benefited enormously from the help of staff in their own institutions. Michael Szollosy, who we have acknowledged as an assistant editor, both contributed to the final section of the book “Perspectives” and edited and wrote the introduction to that section. Anna Mura has made a colossal contribution through her tireless work in planning and promoting the Living Machines (LM) conference series, now in its seventh edition, and the Barcelona Summer School on Cognition, Brain, and Technology, now in its eleventh year; we also wish to acknowledge all the local organizers of the LM conferences and the LM International Advisory Board. We would like to thank our co-investigators in the two Convergence Science Network (CSN) coordination action projects, who helped conceive of the LM Handbook, particularly Stefano Vassanelli who also co-ordinated two schools on neurotechnology. The editors are grateful to the support teams in their own institutions, particularly, Carme Buisan and Mireia Mora from the SPECS group at the Department of Information and Communication Technologies at Universitat Pompeu Fabra, and Ana MacIntosh and Gill Ryder from the University of Sheffield and Sheffield Robotics.

The possibility for this handbook has also come about through projects funded by the European Union Framework Programme. The CSN coordination action (grant numbers 248986 and 601167) has been the key integrator, but other collaborative projects also stand behind many of the ideas and technologies seen in this book. Specific ones that we have participated in and benefited from include BIOTACT: BIOmimetic Technology for vibrissal ACtive Touch (FP7 ICT-215910), EFAA: Experimental Functional Android Assistant (FP7 ICT-270490), WYSIWYD: WhatYouSayIsWhatYouDid (FP7 ICT-612139), SF: Synthetic Forager (FP7 ICT-217148), cDAC: The role of consciousness in adaptive behavior: A combined empirical, computational and robot based approach (ERC-2013-ADG-341196), CEEDS: The Collective Experience of Empathic Data Systems (FP7-ICT-258749), CA-RoboCom: Robot Companions for Citizens (FP7-FETF-284951) and the European FET Flagship Programme through the Human Brain Project (HBP-SGA1 grant agreement 720270).

We would like to thank the editorial staff at Oxford University Press for their help in putting the handbook together. Finally, we would like to express our gratitude to our families and friends for their patience, understanding, and tireless love and support.

Contents

Contributors

Section I Roadmaps

1 Living Machines: An introduction

Tony J. Prescott and Paul F. M. J. Verschure

2 A Living Machines approach to the sciences of mind and brain

Paul F. M. J. Verschure and Tony J. Prescott

3 A roadmap for Living Machines research

Nathan F. Lepora, Paul F. M. J. Verschure, and Tony J. Prescott

Section II Life

4 Life

Tony J. Prescott

5 Self-organization

Stuart P. Wilson

6 Energy and metabolism

Ioannis A. Ieropoulos, Pablo Ledezma, Giacomo Scandroglio, Chris Melhuish, and John Greenman

7 Reproduction

Matthew S. Moses and Gregory S. Chirikjian

8 Evo-devo

Tony J. Prescott and Leah Krubitzer

9 Growth and tropism

Barbara Mazzolai

10 Biomimetic materials

Julian Vincent

11 Modeling self and others

Josh Bongard

12 Towards a general theory of evolution

Terrence W. Deacon

Section III Building blocks

13 Building blocks

Nathan F. Lepora

14 Vision

Piotr Dudek

15 Audition

Leslie S. Smith

16 Touch

Nathan F. Lepora

17 Chemosensation

Tim C. Pearce

18 Proprioception and body schema

Minoru Asada

19 Electric sensing for underwater navigation

Frédéric Boyer and Vincent Lebastard

20 Muscles

Iain A. Anderson and Benjamin M. O’Brien

21 Rhythms and oscillations

Allen Selverston

22 Skin and dry adhesion

Changhyun Pang, Chanseok Lee, Hoon Eui Jeong, and Kahp-Yang Suh

Section IV Capabilities

23 Capabilities

Paul F. M. J. Verschure

24 Pattern generation

Holk Cruse and Malte Schilling

25 Perception

Joel Z. Leibo and Tomaso Poggio

26 Learning and control

Ivan Herreros

27 Attention and orienting

Ben Mitchinson

28 Decision making

Nathan F. Lepora

29 Spatial and episodic memory

Uğur Murat Erdem, Nicholas Roy, John J. Leonard, and Michael E. Hasselmo

30 Reach, grasp, and manipulate

Mark R. Cutkosky

31 Quadruped locomotion

Hartmut Witte, Martin S. Fischer, Holger Preuschoft, Danja Voges, Cornelius Schilling, and Auke Jan Ijspeert

32 Flight

Anders Hedenström

33 Communication

Robert H. Wortham and Joanna J. Bryson

34 Emotions and self-regulation

Vasiliki Vouloutsi and Paul F. M. J. Verschure

35 The architecture of mind and brain

Paul F. M. J. Verschure

36 A chronology of Distributed Adaptive Control

Paul F. M. J. Verschure

37 Consciousness

Anil K. Seth

Section V Living machines

38 Biomimetic systems

Tony J. Prescott

39 Toward living nanomachines

Christof Mast, Friederike Möller, Moritz Kreysing, Severin Schink, Benedikt Obermayer, Ulrich Gerland, and Dieter Braun

40 From slime molds to soft deformable robots

Akio Ishiguro and Takuya Umedachi

41 Soft-bodied terrestrial invertebrates and robots

Barry Trimmer

42 Principles and mechanisms learned from insects and applied to robotics

Roger D. Quinn and Roy E. Ritzmann

43 Cooperation in collective systems

Stefano Nolfi

44 From aquatic animals to robot swimmers

Maarja Kruusmaa

45 Mammals and mammal-like robots

Tony J. Prescott

46 Winged artifacts

Wolfgang Send

47 Humans and humanoids

Giorgio Metta and Roberto Cingolani

Section VI Biohybrid systems

48 Biohybrid systems

Nathan F. Lepora

49 Brain–machine interfaces

Girijesh Prasad

50 Implantable neural interfaces

Stefano Vassanelli

51 Biohybrid robots are synthetic biology systems

Joseph Ayers

52 Micro- and nanotechnology for living machines

Toshio Fukuda, Masahiro Nakajima, Masaru Takeuchi, and Yasuhisa Hasegawa

53 Biohybrid touch interfaces

Sliman J. Bensmaia

54 Implantable hearing interfaces

Torsten Lehmann and André van Schaik

55 Hippocampal memory prosthesis

Dong Song and Theodore W. Berger

Section VII Perspectives

56 Perspectives

Michael Szollosy

57 Human augmentation and the age of the transhuman

James Hughes

58 From sensory substitution to perceptual supplementation

Charles Lenay and Matthieu Tixier

59 Neurorehabilitation

Belén Rubio Ballester

60 Human relationships with living machines

Abigail Millings and Emily C. Collins

61 Living machines in our cultural imagination

Michael Szollosy

62 The ethics of virtual reality and telepresence

Hannah Maslen and Julian Savulescu

63 Can machines have rights?

David J. Gunkel

64 A sketch of the education landscape in biomimetic and biohybrid systems

Anna Mura and Tony J. Prescott

65 Sustainability of living machines

José Halloy

Index

Contributors

Iain A. Anderson

Auckland Bioengineering Institute, The University of Auckland, New Zealand

Minoru Asada

Graduate School of Engineering, Osaka University, Japan

Joseph Ayers

Marine Science Center, Northeastern University, USA

Belén Rubio Ballester

SPECS, Institute for Bioengineering of Catalonia (IBEC), the Barcelona Institute of Science and Technology (BIST), Barcelona, Spain

Sliman J. Bensmaia

Department of Organismal Biology and Anatomy, University of Chicago, USA

Theodore W. Berger

Department of Biomedical Engineering, University of Southern California, Los Angeles, USA

Josh Bongard

Department of Computer Science, University of Vermont, USA

Frédéric Boyer

Automation, Production and Computer Sciences Department, IMT Atlantique (former Ecole des Mines de Nantes), France

Dieter Braun

Systems Biophysics, Center for Nanoscience, Ludwig-Maximilians-Universität München, Germany

Joanna J. Bryson

Department of Computer Science, University of Bath, UK

Gregory S. Chirikjian

Department of Mechanical Engineering, Johns Hopkins University, USA

Roberto Cingolani

Istituto Italiano di Tecnologia, Genoa, Italy

Emily C. Collins

Sheffield Robotics, University of Sheffield, UK

Holk Cruse

Faculty of Biology, Universität Bielefeld, Germany

Mark R. Cutkosky

School of Engineering, Stanford University, USA

Terrence W. Deacon

Anthropology Department, University of California, Berkeley, USA

Piotr Dudek

School of Electrical & Electronic Engineering, The University of Manchester, UK

Uğur Murat Erdem

Department of Mathematics, North Dakota State University, USA

Martin S. Fischer

Institute of Systematic Zoology and Evolutionary Biology with Phyletic Museum, Friedrich-Schiller-Universität Jena, Germany

Toshio Fukuda

Institute for Advanced Research, Nagoya University, Japan

Ulrich Gerland

Theory of Complex Biosystems, Technische Universität München, Garching, Germany

John Greenman

Bristol Robotics Laboratory, University of the West of England, UK

David J. Gunkel

Department of Communication, Northern Illinois University, USA

José Halloy

Paris Interdisciplinary Energy Research Institute (LIED), Université Paris Diderot, France

Yasuhisa Hasegawa

Department of Micro-Nano Systems Engineering, Nagoya University, Japan

Michael E. Hasselmo

Department of Psychological and Brain Sciences, Center for Systems Neuroscience, Boston University, USA

Anders Hedenström

Department of Biology, Lund University, Sweden

Ivan Herreros

SPECS, Institute for Bioengineering of Catalonia (IBEC), the Barcelona Institute of Science and Technology (BIST), Barcelona, Spain

James Hughes

Institute for Ethics and Emerging Technologies, Boston, USA; and University of Massachusetts, Boston, USA

Ioannis A. Ieropoulos

Centre for Research in Biosciences, University of the West of England, UK

Auke Jan Ijspeert

Biorobotics Laboratory, EPFL, Switzerland

Akio Ishiguro

Research Institute of Electrical Communication, Tohoku University, Japan

Hoon Eui Jeong

School of Mechanical and Advanced Materials Engineering, Ulsan National Institute of Science and Technology, South Korea

Moritz Kreysing

Max Planck Institute of Molecular Cell Biology and Genetics, Dresden, Germany

Leah Krubitzer

Centre for Neuroscience, University of California, Davis, USA

Maarja Kruusmaa

Centre for Biorobotics, Tallinn University of Technology, Estonia

Vincent Lebastard

Automation, Production and Computer Sciences Department, IMT Atlantique (former Ecole des Mines de Nantes), France

Pablo Ledezma

Bristol Robotics Laboratory, UK, and Advanced Water Management Centre, University of Queensland, Australia

Chanseok Lee

School of Mechanical and Aerospace Engineering, Seoul National University, South Korea

Torsten Lehmann

School of Electrical Engineering and Telecommunications, University of New South Wales, Australia

Joel Z. Leibo

Google DeepMind and McGovern Institute for Brain Research, Massachusetts Institute of Technology (MIT), USA

Charles Lenay

Philosophy and Cognitive Science, University of Technology of Compiègne, France

John J. Leonard

Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology, USA

Nathan F. Lepora

Department of Engineering Mathematics and Bristol Robotics Laboratory, University of Bristol, UK

Hannah Maslen

Oxford Uehiro Centre for Practical Ethics, University of Oxford, UK

Christof Mast

Systems Biophysics, Ludwig-Maximilians-Universität München, Germany

Barbara Mazzolai

Center for Micro-BioRobotics, Istituto Italiano di Tecnologia, Italy

Chris Melhuish

Bristol Robotics Laboratory, University of the West of England, UK

Giorgio Metta

Istituto Italiano di Tecnologia, Genoa, Italy

Abigail Millings

Department of Psychology, University of Sheffield, UK

Ben Mitchinson

Department of Psychology, University of Sheffield, UK

Friederike Möller

Systems Biophysics, Ludwig-Maximilians-Universität München, Germany

Matthew S. Moses

Applied Physics Laboratory, Johns Hopkins University, USA

Anna Mura

SPECS, Institute for Bioengineering of Catalonia (IBEC), the Barcelona Institute of Science and Technology (BIST), Barcelona, Spain

Masahiro Nakajima

Center for Micro-nano Mechatronics, Nagoya University, Japan

Stefano Nolfi

Laboratory of Autonomous Robots and Artificial Life, Institute of Cognitive Sciences and Technologies (CNR-ISTC), Rome, Italy

Benjamin M. O’Brien

Auckland Bioengineering Institute, The University of Auckland, New Zealand

Benedikt Obermayer

Berlin Institute for Medical Systems Biology, Max Delbrück Center for Molecular Medicine, Berlin, Germany

Changhyun Pang

School of Chemical Engineering, SKKU Advanced Institute of Nanotechnology, Sungkyunkwan University, South Korea

Tim C. Pearce

Department of Engineering, University of Leicester, UK

Tomaso Poggio

Department of Brain and Cognitive Sciences, McGovern Institute for Brain Research, Massachusetts Institute of Technology, USA

Girijesh Prasad

School of Computing, Engineering and Intelligent Systems, Ulster University, Londonderry, UK

Tony J. Prescott

Sheffield Robotics and Department of Computer Science, University of Sheffield, UK

Holger Preuschoft

Institute of Anatomy, Ruhr-Universität Bochum, Germany

Roger D. Quinn

Mechanical and Aerospace Engineering Department, Case Western Reserve University, USA

Roy E. Ritzmann

Biology Department, Case Western Reserve University, USA

Nicholas Roy

Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology, USA

Julian Savulescu

Oxford Uehiro Centre for Practical Ethics, University of Oxford, UK

Giacomo Scandroglio

Bristol Robotics Laboratory, University of the West of England, UK

Cornelius Schilling

Biomechatronics Group, Technische Universität Ilmenau, Germany

Malte Schilling

Center of Excellence for Cognitive Interaction Technology, Universität Bielefeld, Germany

Severin Schink

Theory of Complex Biosystems, Technische Universität München, Garching, Germany

Allen Selverston

Division of Biological Science, University of California, San Diego, USA

Wolfgang Send

ANIPROP GbR, Göttingen, Germany

Anil K. Seth

Sackler Centre for Consciousness Science, University of Sussex, UK

Leslie S. Smith

Department of Computing, Science and Mathematics, University of Stirling, UK

Dong Song

Department of Biomedical Engineering, University of Southern California, USA

Kahp-Yang Suh

School of Mechanical and Aerospace Engineering, Seoul National University, South Korea

Michael Szollosy

Sheffield Robotics, University of Sheffield, UK

Masaru Takeuchi

Department of Micro-Nano Systems Engineering, Nagoya University, Japan

Matthieu Tixier

Institut Charles Delaunay, Université de Technologie de Troyes, France

Barry Trimmer

Biology Department, Tufts University, USA

Takuya Umedachi

Graduate School of Information Science and Technology, The University of Tokyo, Japan

André van Schaik

Bioelectronics and Neuroscience, MARCS Institute for Brain, Behaviour and Development, Western Sydney University, Australia

Stefano Vassanelli

Department of Biomedical Sciences, University of Padova, Italy

Paul F. M. J. Verschure

SPECS, Institute for Bioengineering of Catalonia (IBEC), the Barcelona Institute of Science and Technology (BIST), and Catalan Institute of Advanced Studies (ICREA), Spain

Julian Vincent

School of Engineering, Heriot-Watt University, UK

Danja Voges

Biomechatronics Group, Technische Universität Ilmenau, Germany

Vasiliki Vouloutsi

SPECS, Institute for Bioengineering of Catalonia (IBEC), the Barcelona Institute of Science and Technology (BIST), Barcelona, Spain

Stuart P. Wilson

Department of Psychology, University of Sheffield, UK

Hartmut Witte

Biomechatronics Group, Technische Universität Ilmenau, Germany

Robert H. Wortham

Department of Computer Science, University of Bath, UK

Living Machines: An introduction

Tony J. Prescott¹ and Paul F. M. J. Verschure²

¹ Sheffield Robotics and Department of Computer Science, University of Sheffield, UK

² SPECS, Institute for Bioengineering of Catalonia (IBEC), the Barcelona Institute of Science and Technology (BIST), and Catalan Institute of Advanced Studies (ICREA), Spain

Homo sapiens has moved beyond its evolutionary inheritance by progressively mastering nature through the use of tools, the harnessing of natural principles in technology, and the development of culture. Quality of life in our modern industrialized societies is far removed from the conditions in which our early ancestors existed and we have reached a stage where we ourselves have become the main driving force of global change, recognized now as a distinct geological era— the Anthropocene. However, our better health, longer life expectancy, greater prosperity, ubiquitous user-friendly devices, and expanding control over nature are creating challenges in multiple domains—personal, social, economic, urban, environmental, and technological. Globalization, the demographic shift, the rise of automation and of the digital life, together with resource depletion, climate change, income inequality, and armed conflict with autonomous weaponry are creating unprecedented hazards.

For the ancient Greek philosophers, such as Socrates, Aristotle, and Epicurus, the major question was how to find eudaemonia or “the good life.” We face the same question today, but in a technologically advanced, rapidly changing, and hyperconnected world. We are also faced with a paradox—the prosperity that we have achieved through science and engineering risks becoming a threat to our quality of life. Where in the nineteenth and early twentieth century technological advances seemed to be automatically coupled to a general improvement in the quality of life, now this relationship is not so obvious anymore. Hence, how can we assure that our species will continue to thrive? The answer must involve greater consideration for each other and respect for the ecosystems that support us, but the future development of science and technology will also play a crucial role.

The gap between the natural and the human-made is narrowing as this book will show. Beyond the pressures that we have already noted, humanity is faced with an even stranger and unprecedented future—not only will our artifacts become increasingly autonomous, we may also be transitioning towards a post-human or biohybrid era in which we incrementally merge with the technology that we have created.

As we move towards this future we believe there is a need to review and reconsider how we develop, deploy, and relate to the artifacts that we are creating and that will help define what we are becoming. Our specific approach is biologically grounded. We seek to learn from the natural world about how to create sustainable systems that support and enhance life, and we see this as a way to make better technologies that are more human-compatible.

The approach we take is also convergent—including science, technology, humanities, and the arts—as we look to deal with both the physics and metaphysics of the new entities, interfaces, and implants we are developing, and to discover what this research tells us about the human condition.

Figure 1.1 Mimicking the appearance or principles of flight. (a) The steam- driven ‘pigeon’ designed by Archytas of Tarentum (428–347 bc), considered to be the founder of mathematical mechanics. The artificial bird was apparently suspended by wires but nevertheless astonished watching crowds. (b) The Wright brothers designed and built the world’s first successful flying machine, the Kitty Hawk, which took to the air in 1903. Their breakthrough was achieved through a careful study


We call our field “living machines” because we consider that a single unifying science can help us to understand both the biological things that we are and the kinds of synthetic things that we make. In doing so, we knowingly dismiss a Cartesian dualism that splits minds from bodies, humans from animals, and organisms from artifacts, and we celebrate the richness of our own evolved biological sentience that is enabling us, through our science and technology, to make better sense of ourselves.

The living machines opportunity

Contemporary technology is still far removed from the versatility, robustness, and dependability of living systems. Despite tremendous progress in materials science, mechatronics, robotics, artificial intelligence, neuroscience, and related fields, we are still unable to build systems that are comparable with insects. There are a number of reasons for this, but two stand out as fundamental and intrinsic limitations. On the one hand, artifacts are still very limited in autonomously dealing with the real world, especially when this world is populated by other agents. On the other, we face fundamental challenges in scaling and integrating the underlying component technologies. The approach to addressing these two bottlenecks, followed in this handbook, is to translate into engineering the fundamental principles underlying the still unsurpassed ability of natural systems to operate and sustain themselves in a myriad of different real-world niches. Nature provides the only example of sustainability, adaptability, scalability, and robustness that we know. Harnessing these principles should yield a radically new class of technology that is renewable, adaptive, robust, self-repairing, potentially social, moral, and perhaps even conscious (Prescott et al., 2014; Verschure, 2013). This is the realm of living machines.

Within the domain of living machines we distinguish two classes of entities: biomimetic systems, which harness the principles discovered in nature and embody them in new artifacts, and biohybrid systems, which couple biological entities with synthetic ones in a rich and close interaction, thereby forming a new hybrid bio-artificial entity.

Biomimetics is an approach that is as ancient as civilization itself. In the fourth century BC the Greek philosopher Archytas of Tarentum built a self-propelled flying machine made from wood and powered by steam that was structured to resemble a bird (Figure 1.1a). As history progressed we can observe an important shift from mimicking the form of natural processes, such as the shape and movement of bird wings, to the extraction of principles of lift and drag that, for instance, allowed the Wright brothers to realize the first flying machines (Figure 1.1b). Hence, the domain of biomimetics has learned the important lesson of looking beyond the surface of living systems to understand and re-use the principles they embody. Today, we are able to mimic the winged flight of birds with good accuracy (see Hedenström, Chapter 32, this

of bird flight and aerodynamics using a home-built wind tunnel that uncovered some of the key principles of flight. Or, in the words of Orville Wright, “learning the secret of flight from a bird was a good deal like learning the secret of magic from a magician” (McCullough, 2015). (c) SmartBird (described in detail in Send, Chapter 46, this volume) is one of a number of biomimetic robots created by the German components manufacturer Festo, who have also built a robotic elephant trunk, swimming robot manta rays, and a flying robot jellyfish. SmartBird demonstrates how an understanding of the principles of flight is now enabling researchers to build models of natural systems that capture their specific capabilities.

(b) Image: Library of Congress. (c) Image: Uli Deck/DPA/PA Images.