DEEP LEARNING ON EDGE COMPUTING DEVICES

Design Challenges of Algorithm and Architecture

XICHUAN ZHOU

HAIJUN LIU

CONG SHI

JI LIU

Elsevier

Radarweg 29, PO Box 211, 1000 AE Amsterdam, Netherlands
The Boulevard, Langford Lane, Kidlington, Oxford OX5 1GB, United Kingdom
50 Hampshire Street, 5th Floor, Cambridge, MA 02139, United States

Copyright © 2022 Tsinghua University Press. Published by Elsevier Inc. All rights reserved.

No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage and retrieval system, without permission in writing from the publisher. Details on how to seek permission, further information about the Publisher’s permissions policies and our arrangements with organizations such as the Copyright Clearance Center and the Copyright Licensing Agency, can be found at our website: www.elsevier.com/permissions.

This book and the individual contributions contained in it are protected under copyright by the Publisher (other than as may be noted herein).

Notices

Knowledge and best practice in this field are constantly changing. As new research and experience broaden our understanding, changes in research methods, professional practices, or medical treatment may become necessary.

Practitioners and researchers must always rely on their own experience and knowledge in evaluating and using any information, methods, compounds, or experiments described herein. In using such information or methods they should be mindful of their own safety and the safety of others, including parties for whom they have a professional responsibility.

To the fullest extent of the law, neither the Publisher nor the authors, contributors, or editors, assume any liability for any injury and/or damage to persons or property as a matter of products liability, negligence or otherwise, or from any use or operation of any methods, products, instructions, or ideas contained in the material herein.

Library of Congress Cataloging-in-Publication Data

A catalog record for this book is available from the Library of Congress

British Library Cataloguing-in-Publication Data

A catalogue record for this book is available from the British Library

ISBN: 978-0-323-85783-3

For information on all Elsevier publications visit our website at https://www.elsevier.com/books-and-journals

Publisher: Mara Conner

Acquisitions Editor: Glyn Jones

Editorial Project Manager: Naomi Robertson

Production Project Manager: Selvaraj Raviraj

Designer: Christian J. Bilbow

Typeset by VTeX

Contents

Preface
Acknowledgements

PART 1 Introduction

1. Introduction
1.1. Background
1.2. Applications and trends
1.3. Concepts and taxonomy
1.4. Challenges and objectives
1.5. Outline of the book
References

2. The basics of deep learning
2.1. Feedforward neural networks
2.2. Deep neural networks
2.3. Learning objectives and training process
2.4. Computational complexity
References

PART 2 Model and algorithm

3. Model design and compression
3.1. Background and challenges
3.2. Design of lightweight neural networks
3.3. Model compression
References

4. Mix-precision model encoding and quantization
4.1. Background and challenges
4.2. Rate-distortion theory and sparse encoding
4.3. Bitwise bottleneck quantization methods
4.4. Application to efficient image classification
References

5. Model encoding of binary neural networks
5.1. Background and challenges
5.2. The basic of binary neural network
5.3. The cellular binary neural network with lateral connections
5.4. Application to efficient image classification
References

PART 3 Architecture optimization

6. Binary neural network computing architecture
6.1. Background and challenges
6.2. Ensemble binary neural computing model
6.3. Architecture design and optimization
6.4. Application of binary computing architecture
References

7. Algorithm and hardware codesign of sparse binary network on-chip
7.1. Background and challenges
7.2. Algorithm design and optimization
7.3. Near-memory computing architecture
7.4. Applications of deep adaptive network on chip
References

8. Hardware architecture optimization for object tracking
8.1. Background and challenges
8.2. Algorithm
8.3. Hardware implementation and optimization
8.4. Application experiments
References

9. SensCamera: A learning-based smart camera prototype
9.1. Challenges beyond pattern recognition
9.2. Compressive convolutional network model
9.3. Hardware implementation and optimization
9.4. Applications of SensCamera
References

Index

Preface

We first started working in the field of edge computing-based machine learning in 2010. With project funding, we tried to accelerate support vector machine algorithms on integrated circuit chips to support embedded applications such as fingerprint recognition. In recent years, with the development of deep learning and integrated circuit technology, artificial intelligence applications based on edge computing devices, such as intelligent terminals, autonomous driving, and AIoT, have been emerging one after another. However, the realization of an embedded artificial intelligence application involves multidisciplinary knowledge of mathematics, computing science, computer architecture, and circuit and system design. Therefore we arrived at the idea of writing a monograph focusing on the research progress of the relevant technologies, so as to facilitate the understanding and learning of graduate students and engineers in related fields.

Deep learning application development based on embedded devices is facing the theoretical bottleneck of the high complexity of deep neural network algorithms. Making the various fast-developing deep learning models lightweight is one of the keys to realizing pervasive AIoT artificial intelligence in the future. In recent years, we have been focusing on the development of automated deep learning tools for embedded devices. This book covers some of the cutting-edge technologies currently developing in embedded deep learning and introduces some core algorithms, including lightweight neural network design, model compression, and model quantization, aiming to provide a reference for readers designing embedded deep learning algorithms.

Deep learning application development based on embedded devices is also facing the technical challenge of the limited development of integrated circuit technology in the post-Moore era. To address this challenge, in this book, we propose and elaborate a new paradigm of algorithm-hardware codesign to optimize the energy efficiency and performance of neural network computing in embedded devices. The DANoC sparse coding neural network chip developed by us is taken as an example to introduce the new technology of memory computing, hoping to give inspiration to embedded design experts. We believe that, in the post-Moore era, the system collaborative design method across multiple levels of algorithms, software, and hardware will gradually become the mainstream of embedded intelligent design to meet the design requirements of high real-time performance and low power consumption under the condition of limited hardware resources.

Due to time constraints and the authors’ limited knowledge, there may be some omissions in the content, and we apologize to the readers for this.

Xichuan Zhou

Acknowledgements

First of all, we would like to thank all the students who participated in the relevant work for their contributions to this book, including Shuai Zhang, Kui Liu, Rui Ding, Shengli Li, Songhong Liang, Yuran Hu, etc.

We would like to take the opportunity to thank our families, friends, and colleagues for their support in the course of writing this monograph. We would also like to thank our organization, School of Microelectronics and Communication Engineering, Chongqing University, for providing supportive conditions to do research on intelligence edge computing.

The main content of this book is compiled from a series of research, partly supported by the National Natural Science Foundation of China (Nos. 61971072 and 62001063).

We are most grateful to the editorial staff and artists at Elsevier and Tsinghua University Press for giving us all the support and assistance needed in the course of writing this book.

PART 1 Introduction

CHAPTER 1 Introduction

1.1 Background

At present, human society is rapidly entering the era of the Internet of Everything. Applications of the Internet of Things based on smart embedded devices are exploding. The report “The Mobile Economy 2020” released by the Global System for Mobile Communications Association (GSMA) shows that the total number of connected devices in the global Internet of Things reached 12 billion in 2019 [1]. It is estimated that by 2025 the total number of connected devices in the global Internet of Things will reach 24.6 billion. Applications such as smart terminals, smart voice assistants, and smart driving will dramatically improve the organizational efficiency of human society and change people’s lives. With the rapid development of artificial intelligence technology toward pervasive intelligence, smart terminal devices will penetrate human society even more deeply.

Looking back at the development of artificial intelligence, at a key point in 1936, British mathematician Alan Turing proposed an ideal computer model, the general Turing machine, which provided a theoretical basis for the ENIAC (Electronic Numerical Integrator And Computer) born ten years later. During the same period, American scientist John von Neumann proposed an improved stored-program computer for ENIAC, i.e., the von Neumann architecture, which became a prototype for computers and even artificial intelligence systems. Inspired by the behavior of the human brain, he also wrote the monograph “The Computer and the Brain” [2].

The earliest description of artificial intelligence can be traced back to the Turing test [3] in 1950. Turing pointed out that “if a machine talks with a person through a specific device without communication with the outside, and the person cannot reliably tell whether the talk object is a machine or a person, this machine has humanoid intelligence”. The term “artificial intelligence” first appeared at the Dartmouth symposium held by John McCarthy in 1956 [4]. The “father of artificial intelligence” defined it as “the science and engineering of manufacturing smart machines”. The proposal of artificial intelligence opened up a new field. Since then, academia has successively presented research results in artificial intelligence. After several historical cycles of development, artificial intelligence has now entered a new era of machine learning.

Deep Learning on Edge Computing Devices. https://doi.org/10.1016/B978-0-32-385783-3.00008-9

Figure 1.1 Relationship diagram of deep learning related research fields.

As shown in Fig. 1.1, machine learning is a subfield of theoretical research on artificial intelligence, which has developed rapidly in recent years. Arthur Samuel proposed the concept of machine learning in 1959 and conceived the establishment of a theoretical method “to allow the computer to learn and work autonomously without relying on certain coded instructions” [5]. A representative method in the field of machine learning is the support vector machine (SVM) [6] proposed by Russian statistician Vladimir Vapnik in 1995. As a data-driven method, the statistics-based SVM has solid theoretical support and excellent model generalization ability, and is widely used in scenarios such as face recognition.

The artificial neural network (ANN) is one of the methods to realize machine learning. It uses the structural and functional features of the biological neural network to build mathematical models for estimating or approximating functions. ANNs are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. The concept of the artificial neural network can be traced back to the neuron model (MP model) [7] proposed by Warren McCulloch and Walter Pitts in 1943. In this model the input multidimensional data are multiplied by the corresponding weight parameters and accumulated, and the accumulated value is passed through a specific threshold function to output the prediction result. Later, Frank Rosenblatt built a perceptron system [8] with two layers of neurons in 1958, but the perceptron model and its subsequent improvements had limitations in solving high-dimensional nonlinear problems. It was not until 1986 that Geoffrey Hinton, a professor in the Department of Computer Science at the University of Toronto, and his colleagues popularized the backpropagation algorithm [9] for parameter estimation of artificial neural networks and made the training of multilayer neural networks practical.
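The weighted-sum-and-threshold behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not code from the book: the function name `mp_neuron`, the weights, and the threshold value are hypothetical choices, picked so that the neuron behaves like a two-input AND gate.

```python
def mp_neuron(inputs, weights, threshold):
    """Multiply inputs by weights, accumulate, and apply a hard threshold."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    accumulated = sum(x * w for x, w in zip(inputs, weights))
    # Threshold function: fire (1) only if the accumulated value is large enough.
    return 1 if accumulated >= threshold else 0


# Hypothetical parameters: with unit weights and threshold 2 the neuron
# fires only when both binary inputs are 1 (logical AND).
AND_WEIGHTS = [1.0, 1.0]
AND_THRESHOLD = 2.0

and_outputs = [
    mp_neuron([a, b], AND_WEIGHTS, AND_THRESHOLD)
    for a in (0, 1)
    for b in (0, 1)
]
```

A single unit of this kind can only separate its inputs with one linear boundary, which is one way to see the limitation on nonlinear problems mentioned above.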

As a branch of neural network technology, deep learning has been a great success in recent years. The algorithmic milestone appeared in 2006, when Hinton introduced deep belief networks built from restricted Boltzmann machines, whose layer-wise pretraining alleviated the problem [10] of vanishing gradients in training multilayer neural networks. Since then, the artificial neural network has officially entered the “deep” era. In 2012, convolutional neural networks [11], pioneered by Professor Yann LeCun of New York University, and their variants greatly improved the classification accuracy of machine learning methods on large-scale image databases and reached and then surpassed human-level image recognition in the following years, which laid the technical foundation for the large-scale industrial application of deep learning. At present, deep learning is still developing rapidly and has achieved great success in subfields such as machine vision [12] and voice processing [13]. In particular, in 2016, AlphaGo, the artificial intelligence built on deep learning by Demis Hassabis’s team, defeated Lee Sedol, the international Go champion, by 4:1, which marked the entry of artificial intelligence into a new era of rapid development.

1.2 Applications and trends

The Internet of Things technology is considered to be one of the important forces leading the next wave of industrial change. The concept of the Internet of Things was first proposed by Kevin Ashton of MIT in 1999. Writing in 2009, he pointed out that “the computer can observe and understand the world by RF transmission and sensor technology, i.e., empower computers with their own means of gathering information” [14]. Once the massive data collected by various sensors are connected to the network, the connection between human beings and everything is enhanced, thereby expanding the boundaries of the Internet and greatly increasing industrial production efficiency. In the new “wave of industrial technological change”, smart terminal devices will undoubtedly play an important role. As a carrier for Internet of Things connections, the smart perception terminal device not only collects data, but also has front-end and local data processing capabilities, which can protect data privacy and extract and analyze perceived semantic information.

With the proposal of smart terminal technology, the fields of Artificial Intelligence (AI) and the Internet of Things (IoT) have gradually merged into the artificial intelligence Internet of Things (AI&IoT or AIoT). On one hand, the application scale of artificial intelligence has gradually expanded and penetrated into more fields by relying on the Internet of Things; on the other hand, Internet of Things devices require embedded smart algorithms to extract valuable information in the front-end collection of sensor data. The concept of the intelligent Internet of Things (AIoT) was proposed by the industrial community around 2018 [15], with the aim of realizing the digitization and intelligence of all things based on the edge computing of Internet of Things terminals. AIoT-oriented smart terminal applications have since gone through a period of rapid development. According to a third-party report from iResearch, the total amount of AIoT financing in the Chinese market from 2015 to 2019 was approximately $29 billion, with an increase of 73%.

The first characteristic of AIoT smart terminal applications is high data volume, because the edge has a large number of devices and a large amount of data. Gartner’s report shows that there were approximately 340,000 autonomous vehicles in the world in 2019, and it is expected that in 2023 there will be more than 740,000 autonomous vehicles with data collection capabilities running in various application scenarios. Taking Tesla as an example, with eight external cameras and one powerful system on chip (SoC) [16], its autonomous vehicles can support end-to-end machine vision image processing to perceive road conditions, surrounding vehicles, and the environment. It is reported that a front camera with a resolution of 1280 × 960 in the Tesla Model 3 can generate about 473 GB of image data in one minute. According to the statistics, Tesla has so far collected more than 1 million video clips and labeled the distance, acceleration, and speed of 6 billion objects in the videos. The data amount is as high as 1.5 PB, which provides a good data basis for improving the performance of the autonomous driving artificial intelligence model.

The second characteristic of AIoT smart terminal applications is high latency sensitivity. For example, the vehicle-mounted ADAS of autonomous vehicles has strict requirements on response time from image acquisition and processing to decision making. The average response time of the Tesla autopilot emergency brake system is 0.3 s (300 ms), whereas even a skilled driver needs approximately 0.5 s to 1.5 s. With data-driven machine learning algorithms, the vehicle-mounted system HW3 proposed by Tesla in 2019 processes 2300 frames per second (fps), which is about 21 times the 110 fps image processing capacity of HW2.5.
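To make these latency figures concrete, the short sketch below converts the quoted frame rates into per-frame processing time and the quoted response times into distance travelled before braking begins. The vehicle speed of 100 km/h is an assumption chosen for illustration and does not come from the text; the function names are likewise hypothetical.

```python
def frame_time_ms(frames_per_second):
    """Average processing time available per frame, in milliseconds."""
    return 1000.0 / frames_per_second


def distance_travelled_m(speed_kmh, response_time_s):
    """Distance covered during the response time at a constant speed."""
    return speed_kmh / 3.6 * response_time_s


hw3_frame_ms = frame_time_ms(2300)    # frame rate quoted for HW3
hw25_frame_ms = frame_time_ms(110)    # frame rate quoted for HW2.5
throughput_ratio = 2300 / 110         # roughly 21, as stated above

ASSUMED_SPEED_KMH = 100.0             # illustrative assumption, not from the text
system_distance = distance_travelled_m(ASSUMED_SPEED_KMH, 0.3)
driver_distance_best = distance_travelled_m(ASSUMED_SPEED_KMH, 0.5)
driver_distance_worst = distance_travelled_m(ASSUMED_SPEED_KMH, 1.5)
```

Under the assumed speed, a 0.3 s response corresponds to roughly 8 m of travel, against roughly 14 m to 42 m for the 0.5 s to 1.5 s human range.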

The third characteristic of AIoT smart terminal applications is high energy efficiency. Because wearable smart devices and smart speakers in embedded artificial intelligence application fields [17] are mainly battery driven, power consumption and endurance are particularly critical. Most smart speakers use a voice wake-up mechanism, which switches the device from the standby state to the working state on recognizing spoken keywords. Based on an embedded voice recognition artificial intelligence chip with high power efficiency, a novel smart speaker can achieve wake-on-voice at a standby power consumption of 0.05 W. In typical offline human–machine voice interaction scenarios, the power consumption of the chip can also be kept within 0.7 W, which makes it possible for battery-driven systems to work for a long time. For example, Amazon smart speakers can achieve 8 hours of battery endurance in the always-listening mode, and optimized smart speakers can achieve up to 3 months of endurance.

From the perspective of future development trends, the development goal of the artificial intelligence Internet of Things is to achieve ubiquitous pervasive intelligence [18]. Pervasive intelligence technology aims to solve the core technical challenges of high volume, high time sensitivity, and high power efficiency of embedded smart devices and finally to realize the digitization and intelligence of all things [19]. The basis of this development is to understand the legal and ethical relationship between the efficiency improvement brought by artificial intelligence technology and the protection of personal privacy, so as to improve the efficiency of social production and the convenience of people’s lives under the premise of guaranteeing personal privacy. We believe that pervasive intelligent computing for the artificial intelligence Internet of Things will become a key technology in promoting a new wave of industrial technological revolution.

1.3 Concepts and taxonomy

1.3.1 Preliminary concepts

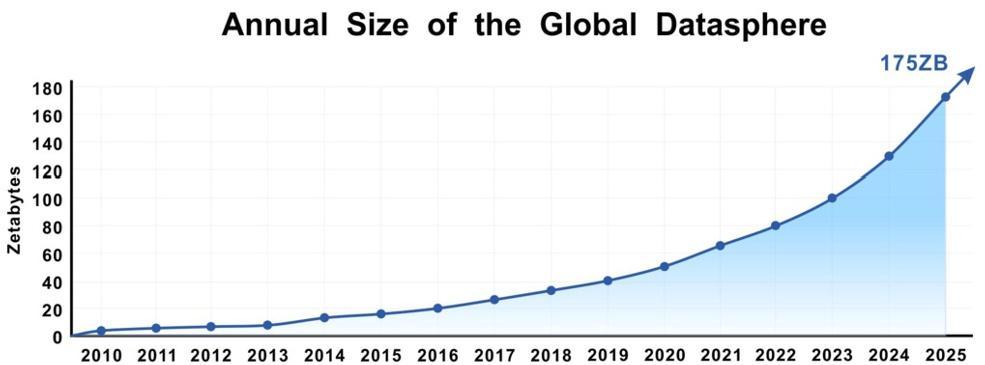

Data, computing power, and algorithms are regarded as the three elements that promote the development of artificial intelligence, and the development of these three elements has become a booster for the explosion of deep learning technology. First of all, the ability to acquire data, especially large-scale data with labels, is a prerequisite for the development of deep learning. According to the statistics, the size of global Internet data in 2020 exceeded 30 ZB [20]. Without data optimization and compression, the estimated storage cost alone would exceed RMB 6 trillion, which is equivalent to the sum of the GDP of Norway and Austria in 2020. The further development of the Internet of Things and 5G technology will bring more data sources and capacity enhancements at the transmission level. It is foreseeable that the total amount of data will continue to grow at an even higher speed. It is estimated that the total amount of data will be 175 ZB by 2025, as shown in Fig. 1.2. The increase in data size provides a good foundation for the performance improvement of deep learning models. On the other hand, the rapidly growing data size also puts forward higher computing performance requirements for model training.

The second element of the development of artificial intelligence is the computing system. The computing system refers to the hardware computing devices required to build an artificial intelligence system. It is sometimes described as the “engine” that supports the application of artificial intelligence. In the deep learning era of artificial intelligence, the computing system has become an infrastructure resource. When Google’s artificial intelligence AlphaGo [21] defeated Korean Go player Lee Sedol in 2016, people marveled at the power of artificial intelligence, but the huge “payment” behind it was little known: 1202 CPUs, 176 high-performance GPUs, and the astonishing power of 233 kW consumed in a game of Go.

Figure 1.2 Global data growth forecast.

Figure 1.3 Development trend of transistor quantity.

From the perspective of the development of the computing system, the development of VLSI chips is the fundamental driving force for the improvement of AI computing performance. The good news is that although the development of the semiconductor industry fluctuates periodically, the well-known “Moore’s law” [22] in the semiconductor industry has stood the test of 50 years (Fig. 1.3). Moore’s law is still maintained in the field of VLSI chips, largely because the rapid development of GPUs has made up for the slow development of CPUs. We can see from the figure that in 2010 the number of GPU transistors grew beyond that of CPUs, CPU transistor counts have begun to lag behind Moore’s law, and the development of hardware technologies [23] such as special ASICs for deep learning and FPGA heterogeneous AI computing accelerators has injected new fuel into the increase in artificial intelligence computing power.

Last but not least, the third element of artificial intelligence development is the algorithm. An algorithm is a finite sequence of well-defined, computer-implementable instructions, typically to solve a class of specific problems in finite time. Performance breakthroughs in deep learning algorithms and applications over the past 10 years are an important reason for the milestone development of AI technology. So, what is the future development trend of deep learning algorithms in the era of the Internet of Everything? This question is one of the core problems discussed in academia and industry. A general consensus is that deep learning algorithms will develop toward high efficiency.

Figure 1.4 Comparison of computing power demands and algorithms for deep learning model.

OpenAI, an artificial intelligence research organization, has pointed out that “the computing resource required by advanced artificial intelligence doubles approximately every three and a half months”. The computing resource for training a large AI model has increased by 300,000 times since 2012, with an average annual increase of 11.5 times. The growth rate of hardware computing performance has only reached an average annual increase of 1.4 times. On the other hand, improvements in the efficiency of deep learning algorithms save about 1.7 times the computing resource per year on average. This means that as we continue to pursue better algorithm performance, the increase in computing resource demands potentially exceeds the development speed of hardware computing performance, as shown in Fig. 1.4. A practical example is the deep learning model GPT-3 [24] for natural language processing released in 2020. The cost of model training and computing resource deployment alone reached about 13 million dollars. If the computing resource cost increases exponentially, then it is difficult to achieve sustainable development. How to solve this problem is one of the key problems in the development of artificial intelligence toward pervasive intelligence.
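The growth figures above can be checked with a little arithmetic. The sketch below converts a doubling period into an annual growth factor and compares demand with supply. Two points are assumptions rather than statements from the text: the 3.4-month doubling period (tried alongside the rounded "three and a half months" because it reproduces the quoted 11.5 times per year), and the simplification that hardware and algorithmic gains multiply into a single supply factor.

```python
import math


def annual_growth_factor(doubling_months):
    """Growth factor over 12 months for a quantity doubling every `doubling_months`."""
    return 2.0 ** (12.0 / doubling_months)


def years_to_reach(total_factor, annual_factor):
    """Years of compound growth at `annual_factor` needed to reach `total_factor`."""
    return math.log(total_factor) / math.log(annual_factor)


demand_if_3_5_months = annual_growth_factor(3.5)  # rounded period from the quote
demand_if_3_4_months = annual_growth_factor(3.4)  # assumed period, close to 11.5x

DEMAND_PER_YEAR = 11.5      # annual demand growth quoted in the text
HARDWARE_PER_YEAR = 1.4     # annual hardware growth quoted in the text
ALGORITHM_PER_YEAR = 1.7    # annual algorithmic saving quoted in the text

# Simplifying assumption: hardware and algorithm gains combine multiplicatively.
supply_per_year = HARDWARE_PER_YEAR * ALGORITHM_PER_YEAR
shortfall_per_year = DEMAND_PER_YEAR / supply_per_year

years_for_300000x = years_to_reach(300_000, DEMAND_PER_YEAR)
```

Under these assumptions demand outgrows the combined supply by a factor of nearly five every year, and a 300,000-fold increase takes a little over five years at 11.5 times per year.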

1.3.2 Two stages of deep learning: training and inference

Deep learning is generally divided into two stages, training and inference. First, the process of estimating the parameters of the neural network model based on known data is called training. Training is sometimes also known as the process of parameter learning. In this book, to avoid ambiguity, we use the word “training” to describe the parameter estimation process. The data required in the training process is called a training dataset. The training algorithm is usually described as an optimization task. The model parameters with the smallest prediction error of the data labels on the training sample set are estimated through gradient descent [25], and a neural network model with better generalization is acquired through regularization [26].

In the second stage, the trained neural network model is deployed in the system to predict the labels of the unknown data obtained by the sensor in real time. This process is called the inference process. Training and inference of models are like two sides of the same coin: they belong to different stages but are closely related. The training quality of the model determines the inference accuracy of the model.
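The two stages can be illustrated with a deliberately tiny model. The sketch below trains a one-dimensional linear model by gradient descent with an optional L2 penalty and then uses it for inference on an unseen input. The linear model stands in for a neural network purely for brevity; the function names, learning rate, penalty strength, and data are hypothetical choices, not taken from the book.

```python
def train_linear_model(xs, ys, learning_rate=0.1, weight_decay=0.0, steps=500):
    """Training stage: estimate (w, b) by gradient descent on mean squared error.

    `weight_decay` scales an L2 regularization term weight_decay * w**2.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the regularized objective with respect to w and b.
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_w += 2.0 * weight_decay * w
        grad_b = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(x, w, b):
    """Inference stage: apply the trained parameters to new data."""
    return w * x + b


# Hypothetical training set generated from y = 2x + 1.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [1.0, 3.0, 5.0, 7.0]

w, b = train_linear_model(train_x, train_y)
w_reg, b_reg = train_linear_model(train_x, train_y, weight_decay=0.5)
prediction = predict(4.0, w, b)
```

Without the penalty the parameters converge to the generating values; with it the weight is pulled toward zero, which is the complexity control that regularization is meant to provide.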

For the convenience of understanding the subsequent content of this book, we summarize the main concepts of machine learning involved in the training and inference process as follows.

Dataset. The dataset is a collection of known data with similar attributes or features and their labels. In deep learning, signals such as voices and images acquired by the sensor are usually converted into vectors, matrices, or tensors. The dataset is usually divided into a training dataset and a test dataset, which are used for estimating the parameters of the neural network model and for evaluating neural network inference performance, respectively.

Deep learning model. In this book, we will name a function f(x; θ) from the known data x to the label y to be estimated as the model, where θ is a collection of internal parameters of the neural network. It is worth mentioning that in deep learning, the parameters and function forms of the model are diverse and large in scale. It is usually difficult to write the analytical form of the function. Only a formal definition is provided here.

Objective function. The process of deep learning model training is defined as an optimization problem. The objective function of the optimization problem generally includes two parts, a loss function and a regularization term. The loss function describes the average error of the label prediction of the neural network model on the training samples. The loss function is minimized to enhance the accuracy of the model on the training sample set. The regularization term is usually used to control the complexity of the model to improve the accuracy of the model for unknown data labels and the generalization performance of the model.
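One common way to write the objective described above is given below. The notation is an assumption made for illustration and is not taken from the book: N is the number of training samples, (x_i, y_i) is the i-th sample and its label, ℓ is a per-sample loss, R is the regularization term, and λ ≥ 0 balances the two parts. The model f(x; θ) is the one defined in the previous paragraph.

```latex
\hat{\theta} \;=\; \arg\min_{\theta} \;
  \underbrace{\frac{1}{N} \sum_{i=1}^{N} \ell\bigl(f(x_i; \theta),\, y_i\bigr)}_{\text{loss function}}
  \;+\;
  \underbrace{\lambda\, R(\theta)}_{\text{regularization term}}
```

Setting λ = 0 recovers pure minimization of the training error, while larger values of λ favor simpler models.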

1.3.3 Cloud and edge devices

Edge computing [27] refers to a concept in which a distributed architecture decomposes the large-scale computing of the central node into smaller and easier-to-manage parts and disperses them to the edge nodes for processing. The edge nodes are closer to the terminal devices and have higher transmission speed and lower time delay. As shown in Fig. 1.5, the cloud refers to the central servers far away from users. Users can access these servers anytime and anywhere through the Internet to query and share information. The edge refers to the base station or server close to the user side. We can see from the figure that terminal devices [28] such as monitoring cameras, mobile phones, and smart watches are closer to the edge. For deep learning applications, if the inference stage can be completed at the edge, then the problem of transmission time delay may be solved, and because edge computing provides services near data sources or users, the risk of privacy disclosure is reduced. Data show that cloud computing power will grow slowly in future years, with a compound annual growth rate of 4.6%, whereas demand at the edge will grow much faster, with a compound annual growth rate of 32.5%.
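The gap between the two compound annual growth rates becomes clearer when projected over several years. The sketch below is illustrative only: the five-year horizon and the normalized starting value of 1.0 are assumptions, and `compound_growth` is a hypothetical helper name.

```python
def compound_growth(initial, annual_rate, years):
    """Value after `years` of growth at a fixed compound annual growth rate."""
    return initial * (1.0 + annual_rate) ** years


HORIZON_YEARS = 5  # illustrative horizon, not from the text

cloud_after = compound_growth(1.0, 0.046, HORIZON_YEARS)  # 4.6% CAGR
edge_after = compound_growth(1.0, 0.325, HORIZON_YEARS)   # 32.5% CAGR
```

Starting from the same normalized level, the cloud figure grows by about a quarter over five years while the edge figure roughly quadruples.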

The edge computing terminal refers to the smart devices that focus on real-time, secure, and efficient analysis of scenario-specific data on user terminals. The edge computing terminal has huge development prospects in the field of the artificial intelligence Internet of Things (AIoT). A large number of sensor devices in the Internet of Things industry need to collect various types of data at high frequency. Edge computing devices can integrate data collection, calculation, and execution to effectively avoid the cost and time delay of uploading the data to the cloud and improve the security and privacy protection of user data. According to an IDC survey, 45% of the data generated by the Internet of Things industry in 2020 will be processed at the edge of the network, and this proportion will expand in future years. The “2021 Edge Computing Technology White Paper” has pointed out that the typical application scenarios of edge computing smart terminals include smart car networking/autonomous driving, industrial Internet, and smart logistics. The values of ultralow time delay, massive data, edge intelligence, data security, and cloud collaboration will prompt more enterprises to choose edge computing.

Figure 1.5 Application scenarios of cloud and edge.

1.4 Challenges and objectives

In recent years, deep learning has made breakthroughs in the fields of machine vision and voice recognition. However, because the training and inference of standard deep neural networks involve a large number of parameters and floating-point computations, they usually need to be run on resource-intensive cloud servers and devices. This solution faces the following two challenges.

(1) Privacy problem. Sending user data (such as photos and voice) to the cloud will cause a serious privacy disclosure problem. The European Union, the United States, etc. have set up strict legal management and monitoring systems for sending user data to the cloud.

(2) High delay. Many smart terminal applications have extremely strict requirements for the end-to-end delay from data collection to completion of processing. However, in the end-cloud collaborative architecture the data transmission delay is uncertain, which makes it difficult to meet the needs of highly time-sensitive smart applications such as autonomous driving.

Edge computing effectively addresses the above problems and has gradually become a research hotspot. Recently, edge computing has made some technological breakthroughs. On one hand, algorithm design companies have begun to seek more efficient and lightweight deep learning models (such as MobileNet and ShuffleNet). On the other hand, hardware technology companies, especially chip technology companies, have invested heavily in the development of special neural network computing acceleration chips (such as NPUs). How to minimize resource consumption by optimizing algorithms and hardware architecture on edge devices with limited resources is of great significance to the development and application of AIoT in the 5G and even 6G era.

The deep learning edge computing technology based on smart terminals will effectively solve the above technical challenges of deep learning cloud computing. This book focuses on deep learning edge computing technology and introduces how to design, optimize, and deploy efficient neural network models on embedded smart terminals from the three levels of algorithms, hardware, and applications. On the algorithm side, neural network algorithms for edge deep learning are introduced, including lightweight neural network structure design, pruning, and compression technology. On the hardware side, the hardware design and optimization methods of edge deep learning are detailed, including algorithm and hardware collaborative design, near-memory computing, and hardware implementation of ensemble learning. On the application side, each part briefly introduces the corresponding applications. In addition, as a comprehensive example, the application of smart monitoring cameras will be introduced as a separate part at the end of this book, which integrates algorithm innovation and hardware architecture innovation.

1.5 Outline of the book

This book aims to comprehensively cover the latest progress in edge-based neural computing, including algorithm models and hardware design. To reflect the needs of the market, we attempt to systematically summarize the related technologies of edge deep learning, including algorithm models, hardware architectures, and applications. The performance of deep learning models can be maximized on edge computing devices through algorithm-hardware codesign.

The structure of this book is as follows. It includes three parts and nine chapters. Part 1 is Introduction, including two chapters (Chapters 1–2); Part 2 is Model and Algorithm, including three chapters (Chapters 3–5); and Part 3 is Architecture Optimization, including four chapters (Chapters 6–9).

The first chapter (Introduction) mainly describes the development process, related applications, and development prospects of artificial intelligence, provides some basic concepts and terms in the field of deep learning, and finally provides the research content and contributions of this book.

The second chapter (The Basics of Deep Learning) explains the relevant basis of deep learning, including architectures of feedforward neural networks, convolutional neural networks, and recurrent neural networks, as well as the training process of the network models and the performance and challenges of deep neural networks on AIoT devices.

Chapter 3 (Model Design and Compression) discusses current lightweight model design and compression methods. It covers efficient lightweight network design by presenting some classical lightweight models, and model compression by introducing in detail two typical methods, model pruning and knowledge distillation.

Chapter 4 (Mix-Precision Model Encoding and Quantization) proposes a mixed-precision quantization and encoding method, the bitwise bottleneck, from the perspective of quantizing and encoding neural network activations based on the signal compression theory in wireless communication. The method can quantize neural network activations from a floating-point type to a low-precision fixed-point type. Experiments on ImageNet and other datasets show that by minimizing the quantization distortion of each layer the bitwise bottleneck encoding method achieves state-of-the-art performance with low-precision activations.
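As a rough illustration of what converting floating-point activations to a low-precision fixed-point type means, the sketch below applies plain uniform quantization and measures the resulting distortion. This is not the bitwise bottleneck method of Chapter 4; it is a generic, swapped-in technique, and the function names, bit widths, and activation values are hypothetical.

```python
def quantize_uniform(values, num_bits, max_value):
    """Map non-negative floats in [0, max_value] to unsigned integer codes."""
    levels = (1 << num_bits) - 1        # largest representable code
    step = max_value / levels           # quantization step size
    codes = [min(levels, max(0, round(v / step))) for v in values]
    return codes, step


def dequantize(codes, step):
    """Recover approximate floating-point values from integer codes."""
    return [c * step for c in codes]


def mean_squared_error(original, restored):
    """Quantization distortion measured as mean squared error."""
    return sum((a - b) ** 2 for a, b in zip(original, restored)) / len(original)


# Hypothetical activations, e.g. outputs of a bounded activation function.
activations = [0.0, 0.2, 0.4, 0.7, 1.0]

codes_2bit, step_2bit = quantize_uniform(activations, num_bits=2, max_value=1.0)
codes_8bit, step_8bit = quantize_uniform(activations, num_bits=8, max_value=1.0)

distortion_2bit = mean_squared_error(activations, dequantize(codes_2bit, step_2bit))
distortion_8bit = mean_squared_error(activations, dequantize(codes_8bit, step_8bit))
```

Fewer bits mean fewer representable levels and therefore higher distortion, which is the trade-off that a distortion-minimizing quantizer has to manage for each layer.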

Chapter 5 (Model Encoding of Binary Neural Networks) focuses on the binary neural network model and proposes a hardware-friendly method to improve the performance of efficient deep neural networks with binary weights and activations. The cellular binary neural network includes multiple parallel binary neural networks, which optimize the lateral connections through group sparse regularization and knowledge distillation. Experiments on the CIFAR-10 and ImageNet datasets show that by introducing optimized group sparse lateral paths the cellular binary neural network can obtain better performance than other binary deep neural networks.
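One reason binary weights and activations are considered hardware friendly is that a dot product of ±1 vectors reduces to bitwise operations and a population count. The sketch below shows this generic idea only; it is not the cellular binary neural network of Chapter 5, and the helper names and sample values are hypothetical.

```python
def binarize(values):
    """Sign binarization: map each real value to +1 or -1."""
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs):
    """Pack a list of +1/-1 signs into an integer, bit i set when signs[i] is +1."""
    word = 0
    for i, s in enumerate(signs):
        if s > 0:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, length):
    """Dot product of two packed +1/-1 vectors using XOR and a population count."""
    mask = (1 << length) - 1
    mismatches = bin((a_bits ^ b_bits) & mask).count("1")
    # Each matching position contributes +1 and each mismatch contributes -1.
    return length - 2 * mismatches


# Hypothetical real-valued weights and activations.
weights = [0.3, -1.2, 0.8, -0.1]
inputs = [1.5, 0.2, -0.7, -2.0]

w_signs = binarize(weights)
x_signs = binarize(inputs)

reference = sum(w * x for w, x in zip(w_signs, x_signs))
fast = binary_dot(pack_bits(w_signs), pack_bits(x_signs), len(weights))
```

The bitwise result matches the arithmetic dot product of the sign vectors while replacing multiplications and additions with an XOR and a bit count.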

Chapter 6 (Binary Neural Network Computing Architecture) proposes a fully pipelined BNN accelerator from the perspective of hardware acceleration design, which has a bagging ensemble unit for aggregating multiple BNN pipelines to achieve better model precision. Compared with other methods, this design greatly improves memory footprint and power efficiency on the MNIST dataset.

Chapter 7 (AlgorithmandHardwareCodesignofSparseBinary Network-on-Chip)proposesahardware-orienteddeeplearningalgorithm-