HISTORY OF MALARIA AND ITS TREATMENT

Morag Dagen*

University of the West of Scotland, Blantyre, Glasgow, United Kingdom

1.1 INTRODUCTION

Malaria is endemic in many countries and is particularly prevalent in tropical regions. The World Health Organization (WHO) estimates that 3.3 billion people were at risk of infection in 2013, leading to around 200 million cases and more than half a million deaths worldwide. An estimated 90% of malaria deaths occur in Africa, with more than two-thirds of these being in children aged under 5 years. Of the communicable diseases, only tuberculosis causes more fatalities. Malaria and poverty are closely linked, with the burden of malaria morbidity and mortality being borne by the world’s poorest communities. This has the effect of holding back economic growth and creating a cycle of poverty that is difficult to break. The prevalence of this debilitating disease has particularly devastating effects in regions where war and famine have destroyed social infrastructure.1

The name malaria derives from the mediaeval Italian words for bad air: mal aria. The then widely held miasma theory of humoral medicine attributed many infectious diseases to foul or corrupt air, poisoned by noxious vapors produced by putrefying materials. As a public health measure, this led to the removal of foul-smelling waste that could be a source of many infections, and to the draining of ditches and ponds containing stagnant water. Stagnant water plays an important role in the spread of malaria, as this is where mosquitoes lay their eggs and where their larvae develop. Around 30 of the 400 species of anopheline mosquitoes transmit malarial infections, with varying efficiencies.

Malaria is caused by protozoan parasites of the genus Plasmodium, which are transmitted into the bloodstream of human hosts by the bite of female Anopheles mosquitoes. The species responsible for malaria infections in humans are Plasmodium vivax, P. malariae, P. ovale, P. falciparum, and P. knowlesi. P. vivax and P. falciparum pose the greatest threat to public health, with P. falciparum being more prevalent and causing the greatest morbidity and mortality. P. vivax can develop in the mosquito host at lower temperatures than P. falciparum and survives at higher altitudes. Consequently, it has a wider geographical distribution, which explains why it causes more infections than P. falciparum in regions outside Africa. P. vivax also has a dormant liver stage that can reactivate after months or even years, causing relapses without reinfection. P. falciparum is a very ancient human parasite, and DNA studies have shown that it coevolved with humans, an example of parasite-host cospeciation. The other parasite species transferred to humans from other primates.2 For example, P. knowlesi causes malaria in monkey species in parts of Southeast Asia, and there have been reports of human infections in recent years.1,3

*Author is retired but was previously lecturer in chemistry at the University of the West of Scotland.

Antimalarial Agents. http://dx.doi.org/10.1016/B978-0-08-101210-9.00001-9

Many of the symptoms of malaria, such as chills, high fever, profuse sweating, headache, muscle pains, malaise, diarrhea, and vomiting, commonly occur in other diseases. However, malaria is characterized by periodic paroxysmal febrile episodes (i.e., sudden recurrence or intensification of fever), and untreated infection leads to enlargement of the spleen. P. falciparum can cause potentially fatal damage to the lungs, liver, and kidneys, as well as severe anemia and, in cerebral malaria, coma; these complications frequently result in death. Chronic infection with P. malariae can cause kidney damage, leading to nephrotic syndrome, which may be fatal. Repeated malarial infections are extremely debilitating and make the sufferer susceptible to other diseases.4

From the first appearance of Homo sapiens in East Africa, the evolution of humans and their parasites has run in parallel, and as early humans migrated out of Africa to the wider world, their parasites spread with them. Many factors, such as the development of agriculture and the concentration of populations in urban settlements, facilitated the spread of infection, as did the development of trading routes and population movements caused by conflict, conquest, and colonization.

1.2 MALARIA IN ANTIQUITY

Fevers that can almost certainly be attributed to malaria have been reported throughout recorded human history. Epidemics of paroxysmal fevers associated with enlarged spleens (splenomegaly) were reported in the Nei Ching (the Chinese Canon of Medicine) almost 5000 years ago. An Egyptian papyrus from 1500 BC describes similar fevers, rigors, and splenomegaly. Ancient Indian scriptures from around 3000 years ago contain descriptions of fevers that are believed to be malaria, while cuneiform tablets from the 6th century BC, excavated from the library at Nineveh, describe deadly malaria-like fevers occurring in Mesopotamia. These were attributed to the god of pestilence, who was depicted as a mosquito-like insect. The Corpus Hippocraticum, the collected works attributed to Hippocrates of Kos (450-370 BC), contains clear clinical descriptions of tertian fevers, which recurred every third day (counting inclusively, i.e., at 48-hour intervals), and quartan fevers, which recurred every fourth day (at 72-hour intervals). It was noted that such fevers were associated with late summer and autumn, and were prevalent in damp, low-lying, marshy areas. It is now known that these fevers were caused by P. vivax and P. malariae, respectively. Descriptions of patients who suffered periodic fevers in the autumn before lapsing into a frequently fatal coma make it clear that P. falciparum was also present in Ancient Greece. This is symptomatic of cerebral malaria, which is caused by P. falciparum and no other species of human malaria parasite. Hippocrates also described splenomegaly in malaria cases and associated the disease with standing water, although he attributed it to the drinking of stagnant water.5

Malaria has shaped the course of human history since antiquity, but its spread has often been determined by changes to the environment caused by human activity, including population movements and agricultural practices such as deforestation and irrigation. Rome was built on the famous seven hills around the Tiber basin, a region of fertile land that was prone to intermittent flooding and provided ideal environmental conditions for the intensive agriculture needed to support a growing urban population. The clearing of land for farming, and the use of timber for construction and charcoal, led to extensive deforestation that destroyed the great forests of Latium around Rome. This caused the water table to rise and increased the risk of flooding. Indeed, both Pliny the Elder and Pliny the Younger reported frequent flooding of the Tiber in the 1st centuries BC and AD. The formerly fertile agricultural area of the ager Pomptinus (the Pontine field) turned to marshland, and was renamed Pomptinae paludes (the Pontine Marshes).6 These stagnant marshes provided extensive breeding sites for Anopheles mosquitoes, and the association between low-lying marshy areas and disease was clearly recognized by the Romans. In the 1st century BC, Varro (a Roman authority on agriculture) recommended that houses should be built on elevated positions so that “little beasts” would be blown away, and in the middle of the 1st century AD, Columella wrote that “stinging creatures” from the marshes spread disease.7

In the first half of the 1st century AD, the Roman writer Celsus described malaria with great accuracy in De Medicina, noting the importance of seasons and climatic conditions, as well as malaria’s association with marshes and swamps. He described three types of malarial fever: quartan, tertian, and the more lethal semitertian. These are now known to be caused by P. malariae, P. vivax, and P. falciparum, respectively. In fact, malaria was endemic in the countryside around Rome, and remained so until the draining of the Pontine Marshes in the 20th century. In ancient Rome, slaves and the urban poor were most vulnerable to the disease, as the wealthy could escape to their country estates during the summer and autumn, when the risk of infection was greatest. It is perhaps unsurprising that the first recorded slave revolt in Roman history took place in the Pontine Marshes, in 198 BC. There are also many references to malaria causing disease and debility in Roman armies throughout the Empire, particularly in large cities and in army camps in low-lying areas where troops were packed together in winter quarters. For example, the fort of Carnuntum on the Danube was surrounded by wetlands and had a poor reputation for disease. Even Caracalla’s troops were affected by malarial fevers during the invasion of Caledonia in 208 AD.8

At the height of the Roman Empire, drainage systems were built that excluded malaria from the countryside surrounding Rome, known as the Campagna. After the fall of Rome in 410 AD, however, the city was reduced from an imperial capital with a population of over a million to a provincial town surrounded by swamps.9 By the Dark Ages, the Campagna had fallen into ruin, and by the Mediaeval period, Rome was surrounded by uninhabitable, pestilential marshes. Around the end of the first millennium, Rome repeatedly came under attack during disputes between the Papacy and various Holy Roman Emperors, but the attacking armies were invariably devastated by fevers. The Campagna remained sparsely populated until the marshes were drained in the 1930s.

In the 1990s, archeologists discovered an infant cemetery at Lugnano dating from around 450 AD, which provided evidence of the spread of malaria up the valley of the Tiber (Lugnano is situated on the River Tiber about 98 km north of Rome). Such an infant cemetery is highly unusual, as infants were rarely given a proper burial during the Roman period; indeed, the remains of infants are frequently found in sewers and rubbish pits throughout the Roman world.10 The excavation revealed that 47 infants had been buried in the cemetery during a single summer, which indicates an epidemic of such virulence that most of the population and virtually all of the pregnant women were infected.4,11 Sacrifices and ritual items associated with the burials, during what was nominally a Christian period, suggest that the survivors of the epidemic feared witchcraft. The region around Lugnano was prone to flooding in the past, with reports of malaria in agricultural workers as late as the 19th century. Pools of water left over from such flooding would have provided ideal breeding conditions for the anopheline mosquito vector, leading to an epidemic in a population that may have had little previous exposure to the disease. P. falciparum infection causes a high rate of miscarriage, and maternal malaria causes high rates of neonatal mortality. Indeed, P. falciparum DNA was isolated from one of the excavated infant skeletons, indicating that P. falciparum had reached Lugnano by the 5th century.2 It is clear from the writings of Celsus that P. falciparum infection was not uncommon in early 1st-century Rome, but it was not active across the entire Italian peninsula. By contrast, the disease was present in Greece from the 5th century BC, and appears to have been introduced to Sicily by Greek colonists around 2500 years ago. It then took around 1500 years for malaria to spread throughout Italy, reaching the north around 1000 AD.2

1.3 MALARIA AND NATURAL SELECTION

Archaeological science has provided further evidence of the spread of malaria. A number of inherited hemoglobinopathies (genetic defects in hemoglobin) and other genetic polymorphisms that affect red blood cells are associated with protection against malaria. Thus, the prevalence of these mutations in a population is a powerful indicator of either current or past exposure to endemic malaria. Normal adult hemoglobin is a tetramer of two alpha- and two beta-globin chains. Sickle-cell anemia is caused by a point mutation in the beta-globin gene that substitutes valine for glutamic acid in the protein; the altered hemoglobin polymerizes when deoxygenated, distorting red blood cells into a sickle shape. Sickled cells break down prematurely, causing anemia and jaundice. They are also inflexible and can block capillaries, causing tissue hypoxia that can damage the lungs, kidneys, spleen, and brain. Without treatment, homozygotes (individuals with two copies of the defective allele) are unlikely to survive childhood. However, heterozygous children gain some protection against P. falciparum malaria, and are 10 times less likely to die from the disease than children lacking the defective gene. This protective effect has led to a frequency of the sickle-cell gene greater than 30% in parts of Africa. Africa as a whole is home to 70% of people with at least one copy of the sickle-cell allele, and to 85% of homozygotes. This reflects the cost of endemic P. falciparum to the African population over many generations.4,12 However, cases of sickle-cell anemia are not restricted to Africa. Traces of fossilized, sickled red blood cells that are strongly suggestive of endemic P. falciparum have been detected in the skeleton of a young man from the Roman period excavated on an island in the Persian Gulf. The sickle-cell trait is also found in India, Arabia, and around the Mediterranean, providing an indicator of the evolutionary pressure exerted by malaria in these regions over the centuries.2

The thalassemias are anemias caused by gene mutations that result in reduced or absent synthesis of either the alpha- or beta-globin chains of hemoglobin. The resulting hemoglobin binds oxygen less efficiently than the normal α₂β₂ form. Under normal conditions, this disadvantages the carrier. However, both heterozygotes and homozygotes are up to 50% less likely to be infected by malaria.4 Raised frequencies of thalassemias are found throughout most of Africa, the Mediterranean, the Middle East and Arabian peninsula, Central and Southeast Asia, the Indian subcontinent, Southern China, the Philippines, New Guinea, and Melanesia, all areas where malaria is believed to have been endemic 2000 years ago. Thalassemias cause characteristic changes to the skeleton, and evidence of the condition has been found on human remains excavated around the Mediterranean and in the Near East. The oldest date to 10,000 years ago and were found in a submerged village off the Israeli coast. Remains showing similar changes have been found in Metapontum (a Greek colony in the south of Italy) from the 5th century BC, Tuscany from the 3rd century AD, and Pisa from around the 6th century AD. This pattern is consistent with malaria spreading relatively slowly from the south to the north of Italy. It is believed that each of the mutations underlying traits such as the thalassemias arose only once and was spread by human migration, since the distinctive mutations found in a given part of the world do not occur elsewhere.2,13

1.4 FROM THE DARK AGES ONWARD

For centuries, malaria remained a barrier to social and economic development because of the detrimental physical and mental effects of repeated malarial infections on affected populations, both in the tropics and in more temperate regions. Medical authorities from Hippocrates onward are consistent in describing not only the physical debility, but also the mental distress and depression of populations in malarious regions. Even today, with improved healthcare, many survivors of malaria are left with neurological or mental health problems. Malaria also caused high infant mortality, population decline, and even depopulation in badly affected areas. By the Middle Ages, malaria had spread along trade routes into northern, central, and eastern Europe, as well as Scandinavia, the Balkans, Russia, and Ukraine. In Britain, marshy areas around river estuaries were severely affected by endemic malaria. However, the genetic polymorphisms selected by malaria, such as thalassemia and sickle-cell trait, are not found in the indigenous populations of Northern Europe, suggesting that the disease did not become important there until around 1000 years ago.4

There are frequent descriptions of tertian and quartan fevers in European and Russian literature from the Mediaeval Warm Period. Dante in the 13th century describes “one who has the shivering of the quartan,” while Chaucer in the 14th century wrote “you should have the quartan’s pain, or some ague that may be your bane”; in England, malaria was known as ague until the 19th century.14 During the 16th century, many English marshlands were notoriously unhealthy and to be avoided by travelers. For example, the topographer John Norden suffered a “most cruel quartan fever” on visiting the Essex coast in the 1590s.15 Shakespeare mentioned ague in eight of his plays, a clear indication that the condition was familiar to English audiences. Some physicians of the period provided detailed observations of the disease. William Harvey is best known for his description of blood circulation in 1628, but he also produced a treatise containing detailed clinical descriptions of the pre- and post-mortem features of malarial fevers. These include a description of obstructed circulation, which is generally observed in P. falciparum infection and is most often seen in cerebral malaria.

There is some debate about whether malaria in Britain was due to P. vivax or P. falciparum. Both cause tertian fevers, but P. vivax can persist in the liver for many years as a dormant hypnozoite, which can reactivate and cause relapses. This persistence would enhance the survival of P. vivax in colder climes, where it is exposed to long winters and cold summers. Tertian fevers due to P. vivax are described as benign, as vivax infection is rarely a primary cause of death, unlike P. falciparum infection. However, the very high death rates in English marshland areas indicate that the endemic agues were far from benign. This, along with Harvey’s observations, suggests that P. falciparum may have been present in England during the 18th and 19th centuries. On the other hand, it is equally possible that the very high death rates caused by marsh fevers were due to a particularly virulent strain of P. vivax.14

Although there is uncertainty concerning P. falciparum infections in England, there is clear evidence of P. falciparum infections in continental Europe following World War I. For example, in the 1920s, high death rates occurred in Russia and Poland as a result of P. falciparum epidemics transmitted by European strains of mosquito. Although European mosquitoes do not act as vectors for modern tropical strains of P. falciparum under laboratory conditions, this does not necessarily reflect field conditions at the time. Huldén et al. also report that in Finland and Holland, mosquitoes survived inside warm stables and houses and were capable of infecting humans throughout the winter.16

There is good evidence that P. falciparum, P. vivax, and possibly P. malariae were brought to the New World by Europeans in the 15th century.17 Initially, the Caribbean and parts of Central and South America were badly affected. Subsequently, from the 17th century onward, the introduction of large numbers of African slaves to the southern colonies of North America resulted in malaria becoming firmly established there. It then spread across the continent with European settlers and their slaves, and played an important role in the defeat of the British in the American War of Independence. Indeed, the British determination to control the pestilential South probably cost them the war, as troops were incapacitated by illness. Among the worst affected was the 71st (Highland) Regiment, with two-thirds of its officers and men, including the commander, rendered unfit for service by fevers and agues. The devastation of this regiment was an important factor in the British loss at Yorktown and their ultimate defeat in the war.18

1.5 JESUIT’S BARK

The New World played a very important part in the history of malaria, as it was in South America that the first effective treatment for the disease was discovered. The powdered bark of the cinchona tree (an evergreen found in the tropical Andes) was used by indigenous people to treat fever, and Jesuit missionaries seeking new cures discovered its effectiveness in treating malaria.19 In 1632, it was brought to Rome, where it was of great interest to the church authorities because of events that had occurred 9 years earlier. After the death of Pope Gregory XV in 1623, members of the conclave gathered to elect his successor. However, an outbreak of malaria led to the deaths of 8 cardinals and 30 other officials within a month. Many others fell ill, including the newly elected Pope Urban VIII, who fled the Vatican for higher ground. Unsurprisingly, Pope Urban instructed Jesuit missionaries to seek new medicines in the Americas, a venture that proved remarkably successful with the discovery of cinchona bark’s antimalarial properties. In 1643, the Spanish Jesuit Cardinal Juan de Lugo instructed Gabrielle Fonseca, the pope’s physician, to carry out an empirical study at the Santo Spirito Hospital in Rome.20 This proved successful, and de Lugo began distributing the medicine free of charge to the poor of Rome, where it was known as cardinal’s bark or Jesuits’ bark. By 1649, there were instructions on appropriate application and doses in the Schedula Romana, and the Jesuits were supervising a substantial trade that involved large shipments of cinchona being regularly transported from Peru to Europe.21

To begin with, cinchona bark was not universally accepted for the treatment of fevers or agues. In part, this may have reflected resistance to a “miracle cure” at a time when most medical treatments were at best useless and frequently harmful. Moreover, the quality of the product would have been variable. Different species of the genus Cinchona produce variable amounts of the active alkaloids responsible for the bark’s therapeutic effect. Merchants could not always distinguish cinchona bark from that of other species, and many other types of bitter bark were used. Also, as with all herbal medicines, the content of the active component varies according to growing conditions, climate, and the length and quality of storage, so calculating an appropriate dose would have been difficult. There were also religious considerations. Many Protestants were prejudiced against the Jesuit powder. In Puritan England, Oliver Cromwell refused to take the “powder of the devil,” even though he suffered greatly and died of a tertian ague in 1658.14

Thomas Sydenham, credited with being one of the founders of epidemiology, was a captain in Cromwell’s army during the English Civil War (part of the Wars of the Three Kingdoms). Like Cromwell, he initially opposed the use of cinchona powder in the treatment of ague, but his medical observations and practical experience caused him to change his mind, and he recommended the powder in his Methodus curandi febres of 1666. Robert Talbor (1642-81), a contemporary, gained fame and fortune by producing a successful patent antimalarial medicine, which was essentially an infusion of cinchona powder with opium in white wine. He had earlier taken up an apprenticeship with an apothecary, but abandoned it to move to the Essex marshes (an area where malaria was endemic), where he spent many years experimenting with and perfecting his malaria cure. When his medicine was successfully used to cure Charles II of an ague, Talbor was rewarded with a knighthood, and was appointed Physician Royal in 1672. Talbor subsequently became famous throughout Europe, and treated many royal and aristocratic patients.22

In 1712, Francesco Torti of Modena reported that only intermittent agues responded to treatment with Peruvian bark.23 Cinchona bark was also expensive and a scarce commodity as it could only be imported from South America. Therefore, several physicians sought more readily available alternatives. While searching for alternatives amongst native plants, the British physician Samuel James administered bitter-tasting willow bark to patients, believing that it would have similar properties to cinchona. He observed that it had an antipyretic effect, which was later shown to be due to salicylic acid. However, it had no direct effect on malaria parasites.24

Cinchona bark became widely recognized as an effective treatment for malaria, but it was typically beyond the reach of all but the wealthy. As a result, marsh fevers and agues remained a scourge in low-lying areas throughout the known world, especially in hot summers, when streams and ponds were reduced to pools and puddles that were ideal for breeding Anopheles mosquitoes. English parish registers of the 17th and 18th centuries show that adult and child mortality rates were 2-3 times higher in marshland communities than in neighboring parishes, with half of all deaths occurring in children under 10 years old. As a result, the average life expectancy in marshland communities was only 30 years. Vicars of marshy parishes rarely lived in them, being driven away by “the prevalence of agues.”15,25

1.6 ISOLATION OF THE ACTIVE PRINCIPLE

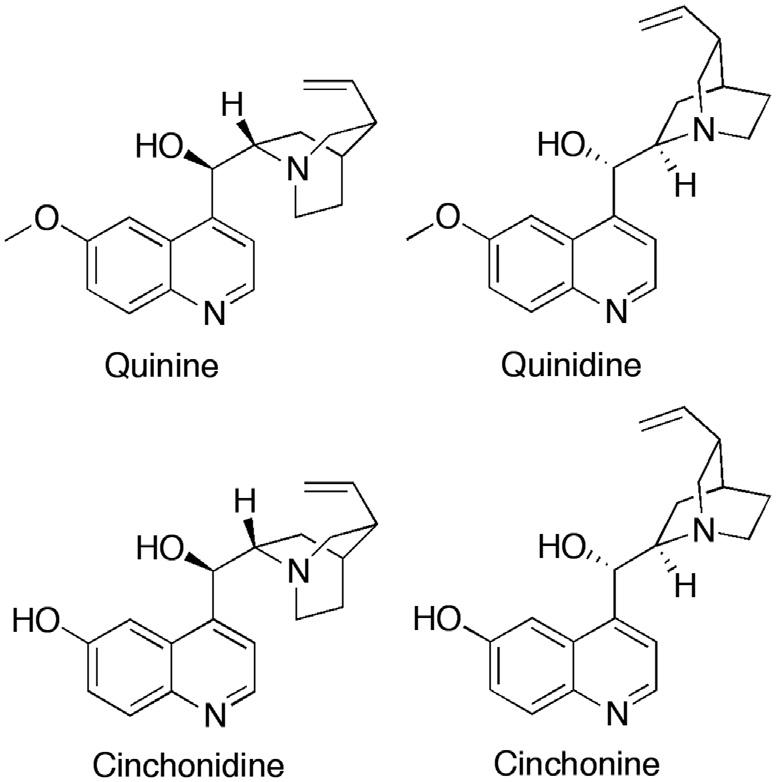

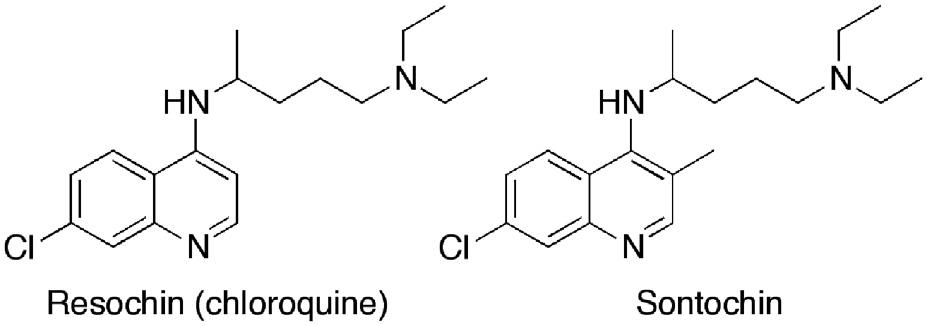

In the 19th century, physicians and scientists sought to discover the active principle responsible for the therapeutic properties of cinchona bark. In 1811, the Portuguese physician Bernardino Antonio Gomez isolated a crystalline substance, which he called cinchonin. The following year, the German chemist Friedlieb Ferdinand Runge also isolated material that he believed was the active principle. It is likely that both men isolated impure quinine, one of several active alkaloids found in cinchona. The French chemists Pierre Joseph Pelletier and Joseph Bienaimé Caventou modified Gomez’s procedure and, in 1820, published their method for the isolation of the alkaloid quinine (Fig. 1.1) from the bark of Cinchona cordifolia. In what was an important innovation in drug discovery, they encouraged medical colleagues to clinically test purified salts of quinine. The pure substance proved to be highly effective and, unlike the unpalatable cinchona bark, it could be dispensed and administered in small volumes of predictable potency. Caventou and Pelletier published very precise details of the purification method but did not patent it, thus enabling the rapid establishment of several quinine-producing factories. This, in turn, marked the beginning of the modern pharmaceutical industry. Initially, Caventou and Pelletier manufactured quinine in their own pharmacies, and in 1821, Pelletier was able to send supplies of quinine to treat a malaria epidemic in Barcelona. By 1826, Pelletier owned a factory that was producing around 3600 kg of quinine sulfate annually.26

The demand for quinine was huge, and the drug proved enormously important in promoting 19th-century colonialism. Until that time, malaria was a constant scourge for European colonists, whether merchants, missionaries, soldiers, or migrants, and it significantly hampered European expeditions of discovery and commerce in the tropics. West Africa was one of the worst affected areas and was called “the white man’s grave,” as 50%-70% of Europeans died within a year. This is neatly summed up by the refrain of a 19th-century British sea shanty: “Beware and take care of the Bight of Benin. There’s one that comes out for forty goes in.”27 Similar problems were encountered across imperial possessions from the Caribbean to the Indian subcontinent, and the average life expectancy of British officials and merchants in the tropics could be measured in months. By 1850, the British government was using nine tons of quinine annually in India alone. It is hardly surprising that the majority of British emigration was to the safer temperate colonies of Canada, New Zealand, South Africa, and South Australia.4

In order to produce the large amounts of quinine needed by European colonial powers, tons of bark had to be exported to Europe from South America every year, and the demand placed great pressure on what was a limited resource. Moreover, exporting countries such as Bolivia, Colombia, Ecuador, and Peru banned the export of seeds and plants to protect their monopoly, and European plant hunters were closely monitored. Their baggage was regularly searched and any plant material found could be destroyed. Alternatively, they were simply refused access to the most productive plantation areas. Both the Dutch and British were particularly interested in establishing cinchona plantations in their Far East colonies in order to break the South American monopoly. Although some seeds were successfully smuggled out of South America and planted in Java, Ceylon, and India, they either failed to thrive or produced trees that gave poor yields of quinine.

Another approach was to develop strains of cinchona in Europe that produced better yields of quinine. The British pharmaceutical company Howard & Sons began production of quinine alkaloids in 1823, with quinine becoming their most profitable product. John Eliot Howard was trained in chemistry and botany, and used his expertise to evaluate cinchona imports at the London docks. He also grew cinchona in the greenhouse of his London home and developed a hybrid strain that produced a good spectrum of the alkaloids quinine, quinidine, cinchonidine, and cinchonine in useful quantities (Fig. 1.1).

FIGURE 1.1 Quinine and related plant alkaloids.

Despite Howard’s efforts, the real breakthrough came in the latter half of the 19th century, when seeds capable of producing high-quality quinine alkaloids were finally smuggled out of South America and used to establish cinchona plantations in British and Dutch-held colonies. For example, at the instigation of the India Office, a British plant-collecting expedition led by Robert Spruce of Kew Gardens went to South America in 1859 and collected seeds and plants that were sent to India. Appropriate strains were selected, and cinchona was grown in southern India and the Darjeeling hills. From 1866 to 1868, some of the earliest clinical trials in history were carried out in Madras, Bombay, and Calcutta to evaluate the efficacies of the four alkaloids.27 Sulfates of the alkaloids were used to treat more than 3500 patients, and all four compounds were found to have similar efficacies, with cure rates above 98%.28 A distribution system was set up using Indian post offices to make the medication widely available across the country.

In 1865, an English alpaca farmer called Charles Ledger, with the help of his loyal South American servant Manuel Tacra Mamami, obtained cinchona seeds from Bolivia. Ledger received £50 from the British government for his efforts, and a similar amount from the Dutch. However, Mamami’s reward was to be executed by the Bolivian government. When Ledger’s seeds reached London, the Indian plantations were already well established and Kew had no interest in new varieties. The Dutch, on the other hand, successfully used the new strain to grow cinchona plantations in Java, Indonesia. The trees grown from these seeds were called C. ledgeriana, and they produced bark with an exceptionally high quinine content of up to 13%. After 1890, quinine became the major alkaloid used in malaria treatments, with Indonesian cinchona bark taking over the market. By the 1930s, the Dutch Indonesian plantations were producing the raw material for 97% of the world’s quinine, and this monopoly lasted until the Japanese invasion of Java in 1942.29

1.7 QUININE TOXICITY AND PHARMACOKINETICS

Until the 1940s, quinine was unrivalled for the treatment of malaria. However, the drug was not without its problems. It has a low therapeutic index and a wide range of adverse effects of varying severity. Cinchonism is the term used to describe the common side effects, which include headache, nausea, tinnitus, and hearing loss. More serious side effects include abdominal pain, diarrhea, vomiting, vertigo, major hearing loss, and visual disturbances, including complete loss of sight. Hypoglycemia can also occur, particularly in pregnant women, and hypotension can occur following rapid intravenous administration. Rarer but serious side effects of quinine treatment include asthma, skin lesions, thrombocytopenia, liver damage, and psychotic episodes.

During the 1930s and 1940s, it was not unusual for European expatriates in Africa to self-medicate with quinine if they believed they had “a touch of malaria.” One of the potential hazards of quinine self-medication was the development of blackwater fever. This frequently fatal condition, characterized by severe fever followed by the passage of dark urine, was described in 1822 by Tidlie, a staff surgeon who worked with the African Company in West Africa. Most affected patients died within a few days, and recently arrived Europeans were particularly susceptible. In the decades that followed, further cases were reported, especially in Africa. It became clear that the condition was an acute complication of malaria and that it appeared to be associated with quinine treatment and prophylaxis. In 1884, John Farrell Easmon coined the term blackwater fever in a review of the literature that contained detailed clinical case studies. He identified that the discolored urine was due to the presence of hemoglobin released by intravascular lysis of red blood cells. He also reported that the condition was associated with nephritis (inflamed kidneys) and should be treated by liberal administration of quinine. However, the pathological mechanisms were not understood at the time. By the late 1960s, it was clear that massive hemolysis resulted in high hemoglobin concentrations in the renal filtrate, which led to tubular necrosis and acute renal failure.7

Blackwater fever is associated with quinine use, and the risk is greatest when recipients are heavily infected with P. falciparum and a high percentage of their erythrocytes contain malarial parasites. Quinine is a very effective parasiticidal agent, but rapid destruction of the parasites results in the breakdown of infected cells and the release of hemoglobin into the plasma. If the level of parasitemia is high, the large quantity of hemoglobin released in a short period is toxic to the kidneys: it damages the tubular epithelial cells, which then obstruct the tubular lumina and cause acute renal failure. People who have red cell defects such as glucose-6-phosphate dehydrogenase (G6PD) deficiency are at higher risk. Newer synthetic antimalarial agents kill malarial parasites more slowly, resulting in slower release of hemoglobin and less risk to the kidneys.

Quinine remains an important antimalarial drug today, almost 200 years after it was first isolated and purified. However, the development of drug resistance in malaria parasites is possibly the greatest challenge faced by control programs. Resistance to quinine was first reported in 1910, but it develops slowly and is generally incomplete. Reduced P. falciparum sensitivity to quinine has been reported in Asia and South America, but appears to be uncommon in Africa. Malaria recrudescence rates following quinine treatment have changed little over the last 3 decades, suggesting that the efficacy of quinine is essentially unchanged.

Treatment with quinine can fail because of the pharmacokinetic properties of the drug. Treatment responses depend on age, pregnancy, immunity, and severity of disease. Moreover, as the patient’s condition improves, clearance and volume of distribution increase, so higher doses are required. It has been proposed that increasing the dose on the third day of treatment would be beneficial, particularly in resistant P. falciparum infections. However, this protocol has not been widely accepted. Another important cause of treatment failure is the quality of the medication. Substandard drugs are a worldwide problem, and studies of antimalarial drugs available in a number of African countries have shown a large proportion of them to be of low quality. Problems include high levels of impurities, lower than stated levels of the active ingredient, and, in some cases, complete absence of the active ingredient. A further cause of treatment failure is poor compliance. Treatment involves three doses a day for 7 days, and quinine’s side effects can deter patients from completing the course. Nevertheless, quinine remains the treatment of choice in P. falciparum infection, and in malaria during the first trimester of pregnancy. It is also a vital component of many combination-therapy drug regimens.28

1.8 DISCOVERY OF THE PARASITE

The Plasmodium parasites that infect humans have a complex life cycle.30 Infection of humans occurs following the bite of a female mosquito; the parasite then progresses through various stages in the liver and the blood before it infects the next mosquito to bite (Chapter 2). This complex process was gradually elucidated over more than a century31-36 and was considered vital research because of the negative impact malaria had on world trade, armies of occupation, and colonial administration.

Giovanni Lancisi was an eminent anatomist and physician to three popes. In 1716, he described postmortem studies that revealed a dark-colored pigment in the tissues of victims of periodic fevers. He also reported that draining swamps reduced malaria, and suggested that mosquitoes spread the disease.37 In 1849, Rudolf Virchow established that the dark brown or black pigment found at postmortem came from the blood. The Italian Corrado Tommasi-Crudeli and the eminent German microbiologist Theodor Klebs isolated a bacterium from the Pontine Marshes, which they believed was responsible for malaria. They called it Bacillus malariae and injected it into rabbits, where it caused fever and enlargement of the spleen. It was against this background that a young French military doctor, Charles Laveran, began the work that led to his discovery of the actual malaria parasite. In 1878, he was posted to Algiers, where military hospitals were full of malaria cases. Postmortem examination of patients who had died of malignant malaria due to P. falciparum revealed the characteristic pigment in the blood, spleen, and other organs, but gave no indication as to the cause. Laveran began to look for the pigment in wet blood films taken from patients, finding it first in leukocytes and then in erythrocytes. Crescent and spherical-shaped bodies containing the pigment were also present. In 1880, he observed pigmented spherical bodies with flagella-like filaments that were moving in the blood of a new patient. He went on to examine the blood of nearly 200 patients and identified crescents in the 148 who had malaria, but none in those who were free of the disease. He also showed that the crescents were removed by treatment with quinine. He proposed that clear spots observed in the red blood cells grew as they acquired pigment until the erythrocytes burst (hemolysis) and produced fever. This was a momentous discovery, as it was the first protozoan parasite to be detected in humans. Laveran named the microbe Oscillaria malariae.

In December 1880, he presented his findings to the French Academy of Medical Sciences. However, eminent scientists of the day, including Pasteur and Koch, were not convinced that he had found anything other than the breakdown products of red blood cells.38,39 Laveran persevered, and, at the Santo Spirito Hospital in Rome, he demonstrated that the same parasites that he had found in Algiers were present in the blood of Roman patients. The eminent Italian malariologists Ettore Marchiafava and Angelo Celli observed these results, but remained unconvinced, maintaining their belief that B. malariae was the infectious agent. In 1884, Laveran returned to the Val-de-Grâce School of Military Medicine in Paris, where he invited Pasteur to view the motile, flagellated spheres under the microscope. Pasteur was immediately convinced, and Marchiafava and Celli finally acknowledged their mistake. The following year, Camillo Golgi described two distinct species of malarial protozoa: P. malariae was responsible for quartan fevers, while P. vivax caused tertian fevers. Golgi also demonstrated that recurrent fevers coincided with the rupture of erythrocytes and the release of parasites into the bloodstream, confirming Laveran’s conjecture.40

Laveran left the army to continue his study of protozoal infections at the Pasteur Institute, and in 1889, the Academy of Sciences awarded him the Bréant Prize for his discovery of the malarial parasite. In 1894, he gave a report to the International Congress of Hygiene at Budapest, where he stated that he had failed to find the parasite in air, water, or soil. Consequently, he was convinced that the microbe existed outside the human body in another host. He referred to Manson’s discovery of the filarial worm and its transmission by mosquitoes, and was one of the first scientists to suggest that the mosquito played the same role in malaria. Laveran disliked the word “malaria,” which he regarded as unscientific because it derived from the now discredited miasma theory. Instead, he coined the term paludisme, which is still used in France. In 1907, he was awarded the Nobel Prize in Physiology or Medicine “in recognition of his work on the role played by protozoa in causing diseases.” He used his prize money to establish a laboratory devoted to tropical diseases at the Pasteur Institute.

In 1884, Marchiafava and Celli described an active “amoeboid ring” in the erythrocytes of malaria patients, which they initially thought was different from the microorganism discovered by Laveran. They named it Plasmodium, and this name eventually took precedence over Laveran’s choice. In 1890, Golgi and Marchiafava were able to distinguish between the parasites causing benign tertian fevers (P. vivax) and those causing malignant tertian fevers (P. falciparum). A fourth human malaria parasite was reported in 1922, when John Stephens described P. ovale, a species found in West Africa that was similar to P. vivax.

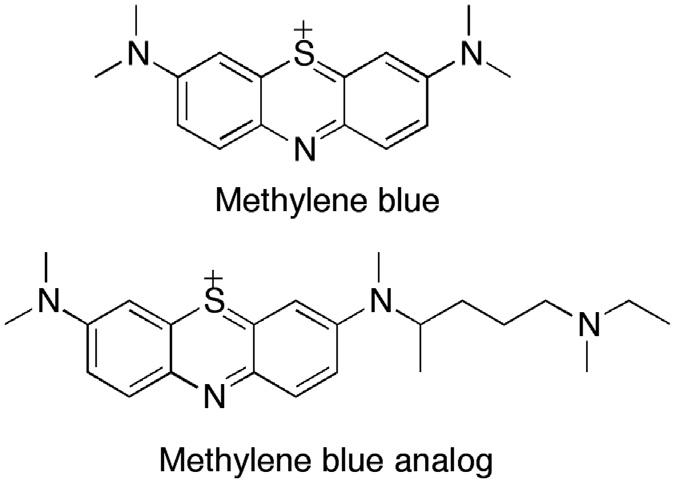

Laveran was the first to discover that a protozoan could infect human blood cells. However, unknown to European investigators, the Russian physiologist Vasily Danilewsky had discovered a number of parasites in the blood of birds, including what he described as “pseudovacuoles.” These were, in fact, unstained malaria parasites. By 1885, he had described Plasmodium and other intraerythrocytic parasites of birds, but his work was published in Russian and did not become widely known until it was published in French in 1889. This prompted a search for malaria parasites in birds, reptiles, and mammals, which was greatly assisted by a new staining method using methylene blue and eosin that was developed by Dmitri Romanovsky in St. Petersburg.38 In 1891, Romanovsky used this staining method to examine the blood of malaria patients, and noted that the parasites were clearly damaged in patients who had been treated with quinine. He concluded that the therapeutic effect of quinine was due to its action on the parasites and not directly on the patient; this was the first evidence of a drug treatment destroying an infecting microorganism. However, the discovery received little attention at the time.26 Later that year in Berlin, Paul Ehrlich reasoned that if malaria parasites could take up the methylene blue stain, they might also be poisoned by it in vivo. He administered the dye to two patients who were suffering from malaria, and both recovered. Ehrlich did not follow up on this work, but it was the first instance of a specific disease being cured by a synthetic drug.41

In 1897, two students at Johns Hopkins Medical School, Eugene Opie and William MacCallum, were studying Haemoproteus columbae, a malaria-like parasite of birds. They observed granular nonmotile cells and motile, flagellated cells similar to those described by Laveran. They also found that a flagellated cell fused with a granular cell to produce a worm-like cell. MacCallum correctly interpreted this as sexual reproduction. He suggested that the flagellated cells were male gametes, the granular cells female gametes, and the worm-like product of fusion the zygote. He correctly predicted that malaria parasites in humans underwent a similar process.38

1.9 MOSQUITO-MALARIA HYPOTHESIS

Despite the rapid advances in malaria research described in Section 1.8, the mechanism by which the parasite is transmitted to humans remained unsubstantiated. In 1877, while working in Amoy (now Xiamen), China, Patrick Manson identified the life cycle of the nematode worm (Wuchereria bancrofti) that caused elephantiasis, and established that mosquitoes were the vectors that transmitted this disease to humans. He postulated that mosquitoes could also be the vector of malaria, in part because the disease is associated with marshes and swamps where mosquitoes breed.8 However, he believed that humans might become infected by drinking water contaminated by infected mosquitoes, or that parasites were transferred between hosts on the proboscis of a mosquito. In 1879, Tommasi-Crudeli and Klebs suggested that infection resulted from breathing in the dust from dried-up ponds where infected mosquitoes had died. In 1882, the English-born American physician Albert King proposed, to some derision, that malaria could be eradicated from Washington by encircling the city with a high mesh screen to exclude mosquitoes. The following year, he published his mosquito-malaria doctrine, a list of 19 observations, all of which were consistent with malaria being spread by mosquitoes.42

In 1894, Manson was still working on the problem of malaria transmission, but was now based in London, where he met Ronald Ross, a doctor who was on leave from the Indian Medical Service. Under the microscope, Manson showed Ross the flagellated spheres obtained from the blood of malaria patients and encouraged him to begin research into malaria transmission on his return to India. Thus began an extremely fruitful collaboration. Ross returned to Secunderabad, where he spent 2 years searching for the malaria parasite by meticulously examining thousands of mosquitoes fed on malaria-infected blood. Unfortunately, Ross had no training in entomology and no access to reference works, so he was studying the wrong species of mosquito. However, he was an indefatigable experimenter who described his findings in great detail. Eventually, in 1897, he studied a new species of “dapple-winged” mosquito that had been fed on the blood of a volunteer containing the crescents characteristic of P. falciparum. He found pigmented cells embedded in the gut wall of mosquitoes killed 2 days after feeding. In mosquitoes killed after longer periods, the pigmented cells were larger. He also found similar cells in the gut of “barred-back” mosquitoes fed on the blood of a patient with benign tertian infection.43,44 Ross’s results demonstrated developmental stages of human Plasmodium parasites in anopheline mosquitoes.

Ross wrote up his findings in a report, which he showed to Surgeon Major John Smyth. Smyth added a confirmatory note, and the findings were sent to Manson along with Ross’s slides. A paper was then published in the British Medical Journal that included illustrations of the slides drawn by Manson, and further comments from Manson, Sutton, Bland, and Thin.44 At the same time, Ross received orders to move to Kherwara, an isolated spot a thousand miles to the north, where there was no malaria. He asked his superiors to allow him to return to Secunderabad to continue his research, but was reprimanded instead. Manson vigorously petitioned both the government of India and the Director General of the Indian Medical Service to allow Ross to continue his vital research. However, Ross was transferred to Calcutta, where there were certainly hospital facilities but few malaria cases to study; his work had been interrupted at a critical stage, apparently for procedural reasons. On Manson’s advice, he began to study malaria in birds, and discovered that the avian malaria parasite P. relictum was transmitted by “grey” mosquitoes believed to be Culex fatigans. When mosquitoes that had fed on infected birds were allowed to feed on healthy birds, these too developed malaria. It was also observed that mosquitoes that had fed on infected birds developed pigmented cells on the surface of the gut that increased in size and released worm-like structures that moved to the mosquitoes’ salivary glands. Ross had revealed the life cycle of P. relictum in culicine mosquitoes, and he predicted that human malaria was transmitted in the same way.45

The Italian zoologist Battista Grassi was also convinced that mosquitoes were the malaria vectors, so he studied species of mosquitoes found in malarial regions. He focused on three species: Anopheles claviger and two Culex species. In 1898, Grassi, Bignami, and Bastianelli reported a well-controlled experiment in which a healthy volunteer from a malaria-free region contracted tertian malaria after being bitten by an infected A. claviger, confirming anopheline mosquitoes as the human malaria vector.46 Over the following 2 years, the Italian researchers described the life cycles of P. falciparum, P. vivax, and P. malariae in both the human and mosquito hosts, and proved that only female anopheline mosquitoes could transmit malaria.38

In 1899, Ross was sent by the newly formed Liverpool School of Tropical Medicine to Sierra Leone in West Africa, where he demonstrated the development of P. falciparum, P. vivax, and P. malariae in A. gambiae and A. funestus, the most important malaria vectors in Africa. He also raised money for the initiation of control measures. Rubbish that could hold stagnant water was cleared, pools were drained, roads were leveled to remove puddles, and rivers were straightened to eliminate areas of still water.47 Ross was awarded the Nobel Prize in Physiology or Medicine in 1902 “for his work on malaria, by which he has shown how it enters the organism and thereby has laid the foundation for successful research on this disease and methods of combating it.”

By the beginning of the 20th century, it was scientifically proven that malaria was transmitted by biting mosquitoes; however, this had little initial impact on public health. Therefore, Manson carried out two experiments designed to convince the public of the mosquito-malaria theory, and to encourage preventative measures to reduce exposure to mosquitoes. The first experiment involved exposing a healthy volunteer (his son Patrick) to mosquitoes that had fed on the blood of patients infected with benign tertian malaria caused by P. vivax. Eleven days after being bitten, Patrick was unwell and feverish, but no parasites were detected in his blood. After 3 days of fever, he began to feel better, but on the fifth day, tertian parasites were detected in his blood. Fever returned and his spleen became tender and enlarged. Treatment with quinine was then initiated. Some tertian parasites and gametes were found in his blood, but by early evening, his blood was clear of parasites. The following day, he felt better and his spleen had returned to normal. There was no further recurrence of malaria.

Manson’s second experiment was carried out in the Roman Campagna near Ostia at the mouth of the Tiber, a highly malarious zone where the indigenous inhabitants suffered from malarial cachexia (weakness and wasting of the body), and migrant laborers from healthy regions quickly succumbed to fever. A specially constructed wooden hut was sent from England that provided mosquito protection in the form of mosquito netting, wire screens on doors and windows, and bed nets. Healthy volunteers spent July to September working outside during the day, but stayed in the hut between dusk and dawn. Despite not taking quinine, none of the volunteers contracted malaria. These results led Manson to conclude that Europeans in malaria-affected areas should avoid native houses, protect themselves from mosquito bites, and destroy mosquito-breeding sites.48 Grassi carried out a larger-scale experiment on the Capaccio Plain, another region with endemic malaria. Of 112 volunteers who were protected from mosquitoes from sunset to sunrise, only five contracted malaria, whereas all 415 unprotected volunteers contracted malaria.38 These studies amply demonstrated that malaria could be prevented by using protective measures against biting mosquitoes between dusk and dawn.

1.10 COMPLETING THE LIFE CYCLE

The life cycle of the Plasmodium parasite was not completely understood until the middle of the 20th century. Since parasites were not seen in the blood for around 10 days after infection, Grassi suggested that there could be a developmental stage in a tissue other than blood. However, he abandoned the idea when Fritz Schaudinn mistakenly described the Plasmodium sporozoite penetrating an erythrocyte. MacCallum had described the developmental stages of avian parasites in the liver and spleen of birds in 1898 (Section 1.8), and evidence accumulated of parasites developing in other nucleated cells in various species of birds. By the 1940s, many believed that there must be an exoerythrocytic form in primates. Hepatocystus kochi is a monkey parasite that is related to Plasmodium and was studied by Laveran. It does not infect blood cells, but develops in the parenchymal cells of the liver, suggesting the possibility of liver stages in other primate parasites. In 1947, Henry Shortt and Cyril Garnham, at the Ross Institute of the London School of Hygiene and Tropical Medicine, demonstrated that malaria parasites multiplied in the liver before they developed in the blood by exposing rhesus monkeys to 500 A. maculopennis atroparvus mosquitoes infected with P. cynomolgi. Within a week, they found exoerythrocytic stages in the liver. They also found hepatocyte stages of P. vivax in human volunteers. Later, in 1949 and 1954, they found the equivalent stages of P. falciparum and P. ovale, respectively, and the exoerythrocytic stages of P. malariae were detected in chimpanzees by Robert Bray in 1960.38 The final major piece of the puzzle was the cause of relapse seen in some temperate strains of P. vivax. In 1982, Wojciech Krotoski and Garnham discovered dormant exoerythrocytic stages called hypnozoites in liver biopsies of chimpanzees infected with P. vivax.49

1.11 MALARIA CONTROL

The understanding of how malaria is transmitted led to various vector-control schemes that involved the destruction of larval breeding sites and increased personal protection against mosquito bites. For example, changes in agricultural practices, particularly drainage and land reclamation, reduced the numbers of anopheline mosquitoes by removing still and stagnant waters. The introduction of root crops as winter fodder enabled farmers to maintain higher numbers of animals throughout the year, thus diverting zoophilic species of anopheles from feeding on humans. Human exposure to mosquitoes was further reduced by locating animal sheds farther away from housing, and by building houses that were more mosquito-proof, with house screens, good ventilation, and bed nets. Better healthcare and the lower cost of quinine also helped to control the disease. Because of these measures, there was a marked decline in malaria in Northern Europe and America during the late 19th and early 20th centuries.

Controlling malaria had important socioeconomic, health, and military advantages. For example, malaria was an important factor in the bankruptcy of the French company building the Panama Canal. The project started in 1882 and was led by Ferdinand de Lesseps, who had constructed the Suez Canal. The camps built to house the workers were of a very high standard, but because mosquitoes were seen as merely a nuisance, screens were not fitted. Moreover, lavish gardens were created in the compounds, where the trees were protected from ants by water-filled pottery rings, a perfect environment for mosquito larvae. The death toll from disease was high, with 30 fatalities during the surveying and planning stage alone. An estimated 30,000 workers died during the French project, and the company was declared bankrupt in 1889. Accounts from the time suggest that in some parts of the jungle two out of three Europeans died from either malaria or yellow fever, a viral disease spread by the mosquito Aedes aegypti.

Malaria and yellow fever also posed problems for the US military when it occupied Cuba following the defeat of Spain in the Spanish-American War of 1898, with roughly 80% of Americans sent to the island coming down with fever. Major William Gorgas was appointed chief sanitary officer in Havana and was charged with eradicating malaria and yellow fever. Infected patients were isolated by screening them with mosquito netting, buildings were fumigated to kill mosquitoes, and breeding sites were drained or covered with kerosene; these measures proved very successful.

The defeat of malaria and yellow fever in Cuba made the completion of the Panama Canal a realistic proposition, and an important priority for the US military, because American warships in the Pacific had been unable to reach the Caribbean during the Spanish-American War. Scientific experts wanted Gorgas to be appointed to the commission overseeing the project when it began in 1904, but they failed to get their wish. Instead, the army appointed him to the canal zone as an advisor. At first, the civilian commission administering the project regarded him as something of an annoyance, but then came the rain and the mosquitoes. The first death from yellow fever occurred in November 1904, and within 3 months there were scores of further deaths that sparked fear and alarm among the engineers and laborers. Ships that arrived with new workers left with greater numbers fleeing the fever. Gorgas introduced the same control measures as he had employed in Havana, adding quinine as a prophylactic. However, he met a great deal of resistance, particularly from Admiral John Walker, who opposed any spending that was not directly related to earth-moving. Eventually, Walker and the commission attempted to have Gorgas replaced. Fortunately, President Theodore Roosevelt intervened: Gorgas, the hero of Havana, would stay, and a new chief engineer was appointed. By 1906, yellow fever had been eliminated from the canal zone, and malaria was contained among the workforce. During the decade of work involved in completing the canal, approximately 2% of the workforce was hospitalized at any one time, compared with 30% during the French project.50

In 1914, the US Congress allocated funds for malaria control in the United States, and control measures were established around military bases in the malarious regions of the southern states. During the Great Depression, Franklin D. Roosevelt’s Works Progress Administration dug thousands of miles of ditches and drained hundreds of thousands of acres in the South. The Tennessee Valley Authority was created in 1933 for the economic development of a region where 30% of the population was affected by malaria. The mosquito vector A. quadrimaculatus was found to breed at the edges of large bodies of water where there was vegetation, so the banks of reservoirs were cleared of vegetation and engineered at an angle designed to leave larvae stranded when the water level was lowered. Screens were also installed on windows and doors in houses throughout the region. By 1947, the disease was essentially eliminated from the area and, by 1951, the United States was considered free of malaria.51

Great progress was also made in Italy. At the end of the 19th century, malaria was endemic across one-third of the country, where 10% of the population lived. In addition, around two million hectares of land remained uncultivated because of malaria. Largely due to the efforts of Angelo Celli, the state organized free distribution of quinine to inhabitants of malaria-endemic areas. Larvicidal methods of vector control initially involved spraying petroleum derivatives at breeding sites; from 1921 onward, a copper acetoarsenite called Paris green was used. Land management techniques such as drainage and reforestation were also employed to remove breeding sites. For example, the Feniglia Forest Reserve in Tuscany was developed to recover an area that had been reduced to swamp by overgrazing.52

In Egypt, the company records of the Suez Canal Company showed increasing mortality from malaria at the end of the 19th century. The company approached Ronald Ross for advice and there was a dramatic fall in the death rate after the introduction of mosquito controls in 1902. For example, deaths in November fell to 174 compared with 352 in November the previous year. In December, deaths fell to 73 compared with 265 the previous year. Ross represented the Liverpool School of Tropical Medicine in numerous expeditions aimed at establishing malaria control programs in the tropics, as well as Greece and Cyprus.53 His efforts bore fruit in reducing morbidity and mortality in many tropical regions including West Africa, Lagos, the Gold Coast, British Central Africa, Mauritius, India, and Hong Kong.54

These early control campaigns clearly demonstrated that attacking the mosquito vectors of malaria was one of the most effective ways of reducing the transmission of disease in endemic areas. Not only did malaria mortality fall, but mortality due to other causes also decreased. When the debilitating effects of malaria were removed, other diseases were less likely to be fatal.

1.12 WORLD WAR I

World War I was fought not only on the Western Front in France and Belgium, but also in Eastern Europe, the Middle East, Africa, and Asia. Although malaria had been an important factor in military campaigns throughout history, military commanders were ill prepared for its impact on their troops. Much of the fighting took place in regions where malaria was, or had been, endemic, and the conditions of war did much to undermine the progress that had been made in controlling the disease before the war, particularly in Italy and Greece. Troop movements and military action created ideal conditions for malaria epidemics, which devastated civilians and soldiers alike. In areas containing populations of suitable anopheline vectors, transmission of the disease was increased by deploying large numbers of infected colonial troops from tropical regions alongside nonimmune soldiers. Control measures were frequently poorly organized and depended on the cooperation of local commanders, while clinical treatment depended on supplies of quinine. Malaria mortality is estimated to have been around 0.6% among Allied troops, whereas for the Central Powers the figure was over 4%. The lower mortality rate observed for Allied forces could have been due to better disease management and quinine treatment regimens, or to the preventive measures developed by Ronald Ross, who visited the front to advise Allied commanders. It could also reflect the numbers of colonial African and Indian troops, who would be expected to have a lower risk of mortality due to acquired immunity. Conversely, colonial troops represented a reservoir of infection, and soldiers suffering from the anemia of chronic malaria would succumb more readily to battle injury or other health challenges. Deployment of colonial troops also introduced new parasite strains to the European theater of war.

Quinine was administered as a preventive measure to those who had not been infected, or to prevent recurrent infection due to failed primary treatment. However, there was controversy and confusion surrounding quinine treatment and prophylaxis at the time, with the British placing a greater emphasis on avoiding contact with mosquitoes. In a War Office survey of 129 officers on the effectiveness of quinine, four felt that it was of definite value and 115 felt that it was of no value. Frequently, when quinine prophylaxis was ordered it was not enforced. Clinical trials into efficacy were not standardized or properly controlled, so the results were difficult to interpret. Furthermore, supplies were not always reliable. War profiteering resulted in quinine preparations that contained significantly less than the stated dose, and substandard medication inevitably led to substandard prophylaxis.55

The incidence of malaria on the Western Front was not high, although some prisoners of war in Flanders were infected in German prisoner-of-war camps. This, combined with the flooding caused by breaching the dykes, led to a resurgence of indigenous P. vivax infections in the area. Morbidity and mortality due to malaria were higher on the Eastern Front amongst Russian, Romanian, and Albanian troops fighting in low-lying marshy regions where there were regular seasonal epidemics.

Not surprisingly, malaria was a constant threat in the African campaigns, and the British Navy strove to prevent the import of medical supplies into German colonies. However, in German East Africa, enough quinine was produced at the Research Institute in Tanganyika to fully supply the German East African Expeditionary Army. The ready availability of quinine and the extensive use of black African conscripts and mercenaries (Askaris) gave the Germans a clear military advantage over the British and French in highly malarious regions. This was despite the high incidence of blackwater fever among German-born soldiers as a result of using quinine so extensively (64% of noncombat fatalities were due to blackwater fever). It is perhaps surprising that the Germans understood the difficulties of waging war with nonimmune troops in malarious regions better than the British and French did, given the colonial experience of the latter two and the contributions that scientists from both nations had made to malaria research. Even British-Indian troops fell victim to P. falciparum, though they would be expected to have a degree of immunity as they came from regions where the infection was endemic. Presumably, in Africa, they encountered different strains of the parasite.

Elsewhere, there were malaria epidemics in Macedonia, Palestine, Mesopotamia, and Egypt. There were even epidemics in England and Italy following the return of malaria-infected troops. The Macedonian epidemic led to 10 casualties for every one caused by combat, and the French, British, and German forces were effectively immobilized for 3 years. When ordered to attack, one French general replied: “Regret that my army is in hospital with malaria.” Nearly 80% of the 120,000 French troops deployed in Macedonia were hospitalized with malaria, prompting the French to improve the provision of quinine prophylaxis and to evacuate malaria cases. Subsequently, French forces experienced a lower incidence of malaria than the British. Between 1916 and 1918, British forces recorded over 160,000 hospitalizations due to malaria, compared with 24,000 men recorded as killed, wounded, taken prisoner, or missing in action. The British eventually followed the French example by introducing a quinine treatment protocol and removing heavily infected cases from the front; in 1917 alone, 25,000 men were evacuated with chronic malaria. Vector control methods were introduced, including drainage, treatment of breeding sites, fumigation, and mosquito nets. However, problems with supplies of quinine, nets, and insecticides meant that these had limited impact.

Indian troops sent to the Sinai introduced malaria to that region. In Mesopotamia, an epidemic affected British troops as soon as they were deployed to the area in early 1916. There were even cases on naval vessels, with a higher incidence on those ships anchored close to shore. The majority of infections were initially due to P. vivax, but, by 1918, there was a greater proportion of P. falciparum infections. Malaria also played a key role in the British defeat by the Turks at Kut in 1916, as well as the overall defeat of the Turkish army by the end of the war. By 1918, the British had developed effective control measures against the infection, but as they advanced north from Palestine and Mesopotamia, they overran Turkish positions that lacked control measures, and so malaria increased once more amongst Allied troops.

Between 1900 and 1914, there were only two cases of malaria amongst British soldiers who had served only in Britain.55 However, by 1918, 38,000 British soldiers requiring malaria treatment had returned to England from Macedonia, Egypt, Mesopotamia, and Africa. This resulted in nearly 500 cases of locally transmitted vivax malaria being detected in England during the war. The indigenous mosquito species A. atroparvus was found to spread the parasite among the local population, and investigators of this outbreak confirmed that malaria could still become endemic in parts of England.15 Recruits undergoing training in British military camps also contracted malaria, with 30 cases recorded in one regiment. The main source of infection was relapsing P. vivax infection in returned troops. In 1919, the Ministry of Health made malaria a notifiable disease to facilitate prompt identification of disease foci. Affected soldiers and sailors were quarantined in specialized hospitals to control the epidemic and limit local transmission.

In Italy, there was a devastating epidemic in 1917-18, which killed tens of thousands of soldiers and civilians. The prewar malaria control measures collapsed, hospitals in occupied areas were requisitioned by Austrian forces, and there were shortages of quinine, all of which resulted in the worst Italian malaria epidemic of the 20th century. Giovanni Battista Grassi came out of retirement to run a dispensary and research station during the crisis.

In 1919, there were increases in reported cases of local transmission in Vienna, Albania, and Corsica due to infected demobilized troops. There were also epidemics of P. falciparum malaria in Ukraine and Russia, reaching as far north as Archangel, near the Arctic Circle. In Russia, there were over 350,000 cases in 1919, but the number grew to nearly six million in 1924, with more than 10% of cases resulting in fatalities.55

Much of the devastating effect that World War I had on social, economic, and political progress was due to the resurgence of malaria as control measures faltered and collapsed. Infrastructure was destroyed, and population movements either spread the parasites to new environments or returned them to environments where they had previously been controlled or even eradicated.

1.13 SPREAD OF VECTORS

The experience of World War I demonstrated the consequences of introducing malaria parasites into areas where mosquito vectors were present. However, there was also the risk of introducing effective mosquito vectors into new areas. As the extent and speed of postwar travel increased, so did the risk of spreading disease. Where resources were available, efforts were made to police borders with inspections of potential breeding areas such as puddles, ponds, and other standing water in the vicinity of airports, rail yards, and harbors.

Raymond Shannon was an entomologist working for the Rockefeller Foundation in the city of Natal in northeast Brazil. One Sunday morning in March 1930, while out walking, he saw a strange larva wriggling in a puddle. He identified it as the African mosquito A. gambiae, one of the most efficient malaria vectors, as it has adapted to living in human communities and feeds almost exclusively on human blood. It is likely that these mosquitoes had crossed the Atlantic on the fast French destroyers that were used for mail delivery at the time.50 They were now breeding in an area where coastal salt marshes had recently been drained by a system of dikes to create hay fields. Unfortunately, the dikes provided perfect breeding conditions for the mosquitoes.

The considerable threat posed by the potential spread of A. gambiae was recognized, but the responsibility for dealing with the problem lay with the local city authorities. Shannon and his colleagues recommended opening the dikes to flood the area with salt water, which would effectively eradicate the breeding population at the small cost of a hay harvest. Officials disagreed, and so nothing was done. In April, there was a malaria epidemic with more than 100 cases and a significant number of deaths. In January 1931, the outbreak exploded into a ferocious epidemic with 10,000 cases. A campaign was mounted, which involved fumigating houses and oiling or draining breeding sites. By the end of the year, A. gambiae had been eliminated from the city, but not from the surrounding countryside. In the following years, further outbreaks occurred in communities within a 150-mile radius of Natal, with significant numbers of fatalities. At the same time, Brazil was suffering a lengthy drought, which stretched the health services to breaking point as they dealt with famine and disease, as well as with the many people who had fled stricken areas and were now in refugee camps. Malaria outbreaks in a few isolated villages were not a priority.

In 1938, the worst malaria epidemic recorded in the Western hemisphere struck Brazil, with 100,000 cases recorded and an estimated 14,000-20,000 deaths; precise figures are not known, as many rural deaths were not recorded. Entire communities were infected, with devastating effects. Harvesters were too sick to gather crops, hospitals ran out of beds, pharmacies ran out of drugs, and food-delivery systems collapsed. As a result, many people died from relatively mild infections. The Brazilian medical authorities were shocked by the virulence of the disease, as they were more accustomed to an endemic form of malaria that was haphazardly spread by local vectors. An emergency antimalarial service was created by Brazil’s president Getúlio Vargas, with a staff of 4000 under the leadership of Fred Soper, a Rockefeller associate. The epidemic area was divided into small zones, with an antimalarial team assigned to each. The teams had the power to enter and inspect every building, and their weapons were indoor insecticides and larvicides. Drainage projects eliminated standing water, and local people were taught to cover the shallow wells that provided rural water supplies. In addition, Soper set up road checkpoints over a wide area where cars and trucks were fumigated. When 12 A. gambiae were found in a car leaving the infested area, fumigation posts were established on further roads and at train stations. Ships and planes were also boarded, inspected, and fumigated. These measures were shown to be justified when mosquitoes were found on Pan American Airways planes arriving in Brazil from Africa.

At the height of this African entomological invasion, A. gambiae was found across an area of more than 12,000 square miles in three Brazilian states. Within a year, Soper’s methods had driven it out of all but two small towns, which were soon dealt with by local authorities. Soper’s achievement was regarded as one of the greatest triumphs of public health, and he received many honors and accolades for saving the Americas from the world’s most dangerous malaria vector, including a gold medal that was struck to honor his leadership of the Pan American Sanitary Bureau. Soper’s philosophy was to control malaria by eradicating the mosquito vector, and a chemical was soon to be discovered that seemed to be the ideal tool for achieving that goal worldwide.

1.14 SYNTHETIC DRUGS AND INSECTICIDES UP TO WORLD WAR II

1.14.1 SYNTHETIC DRUGS

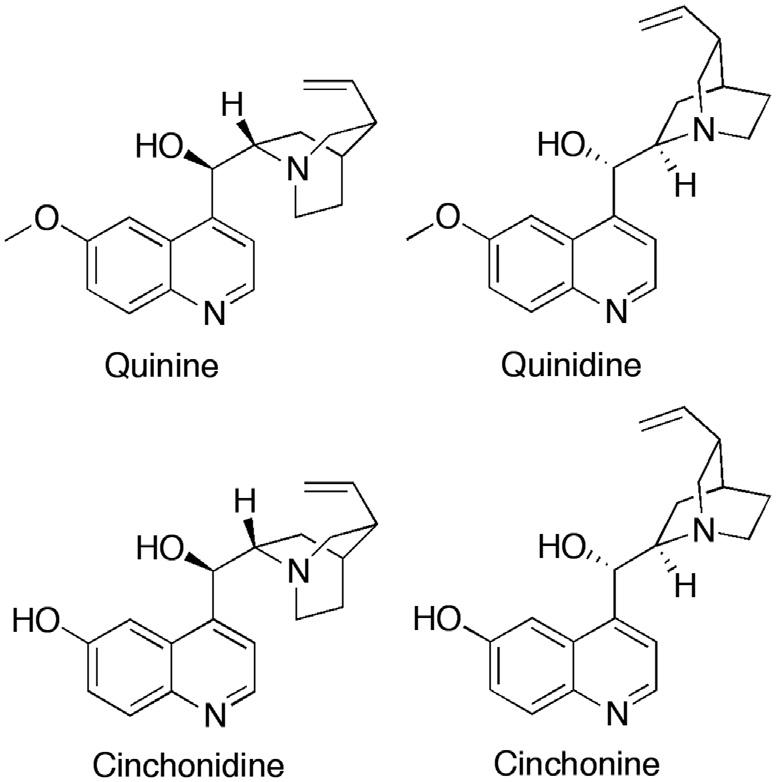

In 1854, Adolph Strecker determined the molecular formula of quinine as C20H24N2O2. Although the structure of quinine was still unknown, the 18 year-old William Henry Perkins attempted to synthesize it in 1856 via an oxidative condensation of two molecules of N-allyl toluidine. His rather simplistic rationale was that the oxidative condensation would produce a product with the same molecular formula as quinine. Not surprisingly, this proved unsuccessful and the reaction produced a complex tarry mixture. Perkin decided to repeat the experiment using aniline as a simpler amine. However, the sample of aniline was not pure and also contained ortho- and para-methylaniline. Again, Perkin obtained a complex tarry mixture. However, alcohol extraction yielded crystals of an excellent purple dye that he called mauveine. This was the first of the aniline dyes to be synthesized and it sparked the beginning of the synthetic dye industry.56 New dyes were developed, some of which were used as stains in medicine and microbiology. This facilitated improvements in microscopic analysis and microbiology, which proved invaluable in the identification of the various stages in the Plasmodium parasite’s life cycle. One of the companies that owes its origins to the production of synthetic dyes is the German company Bayer. It proved hugely successful and soon branched out into pharmaceutical research and development. One of its priorities after the World War I was to search for synthetic alternatives to quinine,

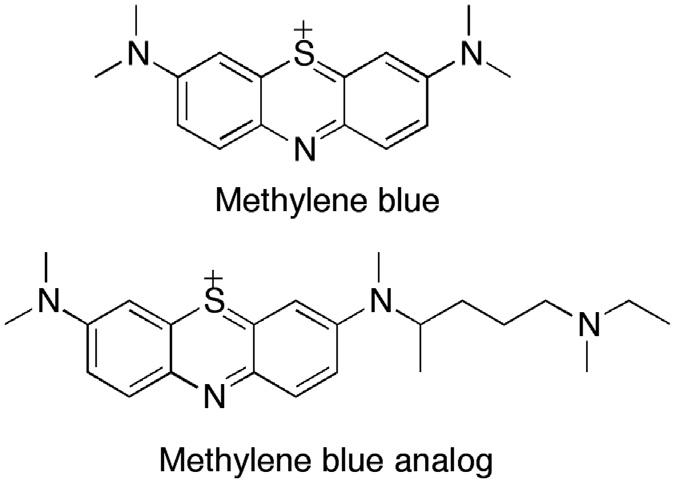

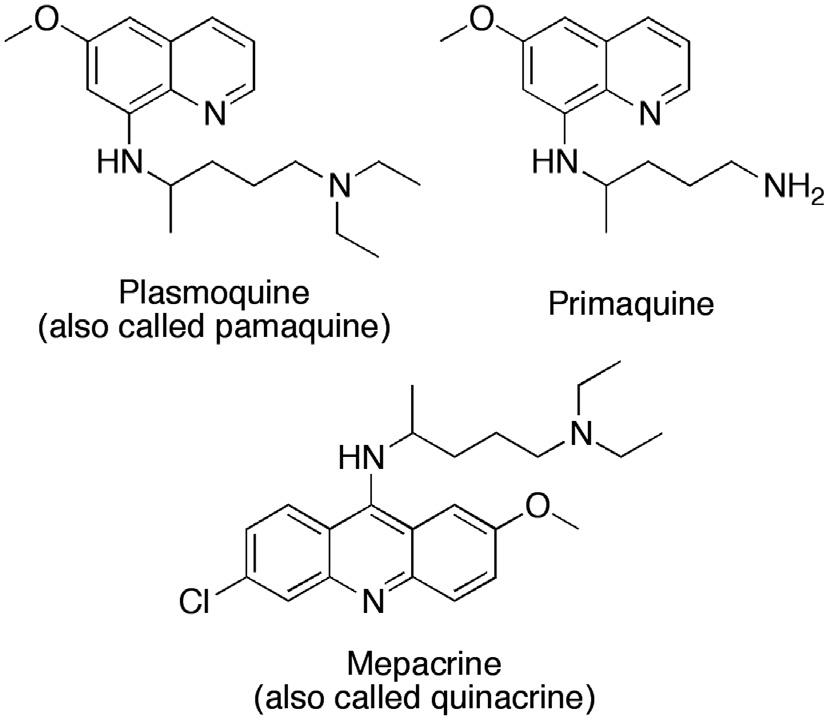

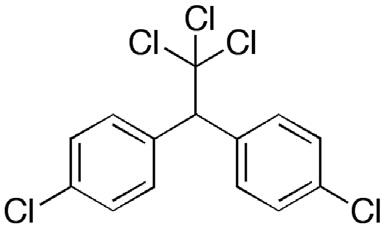

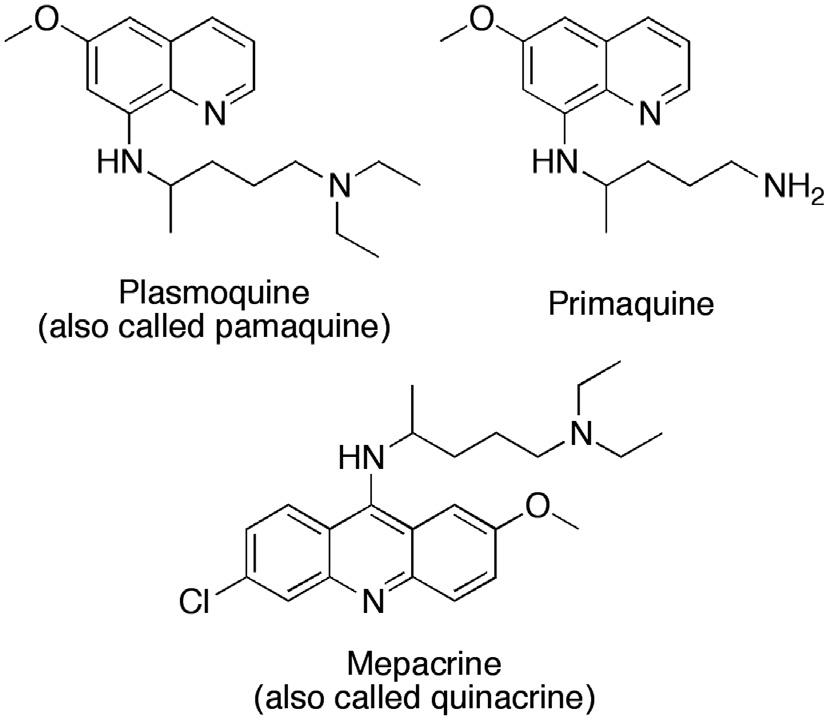

inspired by the shortage of quinine in Germany during the war years. Using methylene blue as a lead compound (Fig. 1.2), Bayer’s research team developed a number of antimalarial compounds, using an avian malaria model to test for activity. A key modification was the replacement of a methyl group with a dialkylaminoalkyl side chain to give the analog shown in Fig. 1.2. This side chain was subsequently added to different heterocyclic systems and, by 1925, Bayer had produced an 8-aminoquinoline called plasmoquine (also called plasmochin or pamaquine), which destroyed the gametocytes of P. falciparum and was the first compound to prevent relapses in P. vivax infection (Fig. 1.3).57 Clearly, this new compound had a different mode of action from quinine. However, there were problems with toxicity. Moreover, although plasmoquine was very active against avian malaria, it was less effective against human parasites. It was not until 1952 that the related compound primaquine was introduced (Fig. 1.3). This compound was better tolerated and could be used to treat latent liver parasites of P. vivax and P. ovale. Mepacrine (Fig. 1.3) (also known as Atebrin or quinacrine) was developed in 1932 and proved effective against P. falciparum 58 The structure contains the same basic side chain as plasmoquine, but it is situated on an acridine heterocyclic ring rather than a quinoline ring.
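Perkin’s 1856 rationale, described earlier in this section, can be written out as a balanced equation. The version below is a reconstruction for illustration only. It assumes the standard formula C10H13N for N-allyl toluidine and represents the oxidant simply as atomic oxygen, [O]. It shows only that the atom counts match, not a reaction that actually takes place:

$$
2\,\mathrm{C_{10}H_{13}N} + 3\,[\mathrm{O}] \longrightarrow \mathrm{C_{20}H_{24}N_{2}O_{2}} + \mathrm{H_2O}
$$

Each side contains 20 carbon, 26 hydrogen, 2 nitrogen, and 3 oxygen atoms. Matching a molecular formula, however, says nothing about how those atoms are connected. For that reason the approach could not succeed while the structure of quinine remained unknown.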

FIGURE 1.2 Structures of methylene blue and a methylene blue analog.

FIGURE 1.3 Early examples of antimalarial agents.