Programming Voice-controlled IoT Applications with Alexa and Raspberry Pi

Dr John Allwork

● This is an Elektor Publication. Elektor is the media brand of Elektor International Media B.V.

PO Box 11, NL-6114-ZG Susteren, The Netherlands

Phone: +31 46 4389444

● All rights reserved. No part of this book may be reproduced in any material form, including photocopying, or storing in any medium by electronic means and whether or not transiently or incidentally to some other use of this publication, without the written permission of the copyright holder except in accordance with the provisions of the Copyright Designs and Patents Act 1988 or under the terms of a licence issued by the Copyright Licencing Agency Ltd., 90 Tottenham Court Road, London, England W1P 9HE. Applications for the copyright holder's permission to reproduce any part of the publication should be addressed to the publishers.

● Declaration

The Author and Publisher have used their best efforts in ensuring the correctness of the information contained in this book. They do not assume, and hereby disclaim, any liability to any party for any loss or damage caused by errors or omissions in this book, whether such errors or omissions result from negligence, accident, or any other cause.

All the programs given in the book are Copyright of the Author and Elektor International Media. These programs may only be used for educational purposes. Written permission from the Author or Elektor must be obtained before any of these programs can be used for commercial purposes.

● British Library Cataloguing in Publication Data

A catalogue record for this book is available from the British Library

● ISBN 978-3-89576-531-5 Print

ISBN 978-3-89576-532-2 eBook

● First edition

© Copyright 2023: Elektor International Media B.V.

Editor: Alina Neacsu

Prepress Production: Jack Jamar | Graphic Design, Maastricht

Elektor is part of EIM, the world's leading source of essential technical information and electronics products for pro engineers, electronics designers, and the companies seeking to engage them. Each day, our international team develops and delivers high-quality content - via a variety of media channels (including magazines, video, digital media, and social media) in several languages - relating to electronics design and DIY electronics. www.elektormagazine.com

About the Author

Dr. John Allwork was born in 1950 in Kent, England and became interested in electronics and engineering at school. He went to Sheffield University on their BEng Electrical and Electronic Engineering course. There he developed an interest in computers and continued his education on an MSc course in Digital Electronics and Communication at UMIST. After two years working for ICL as a design, commissioning and test Engineer he returned to UMIST where he graduated with a Ph.D. in ‘Design and Development of Microprocessor Systems’.

He worked for several years in technical support and as a manager in electronics distribution, working closely with Intel Application Engineers and followed this with design work using the Inmos Transputer systems.

Having taught at Manchester Metropolitan University, he retired in 2011 but has kept up his interest in electronics and programming as well as his other occupations of travelling, walking, geocaching and spending time on his allotment.

Introduction

This book is aimed at anyone who wants to learn about programming for Alexa devices and extending that to Smart Home devices and controlling hardware, in particular the Raspberry Pi.

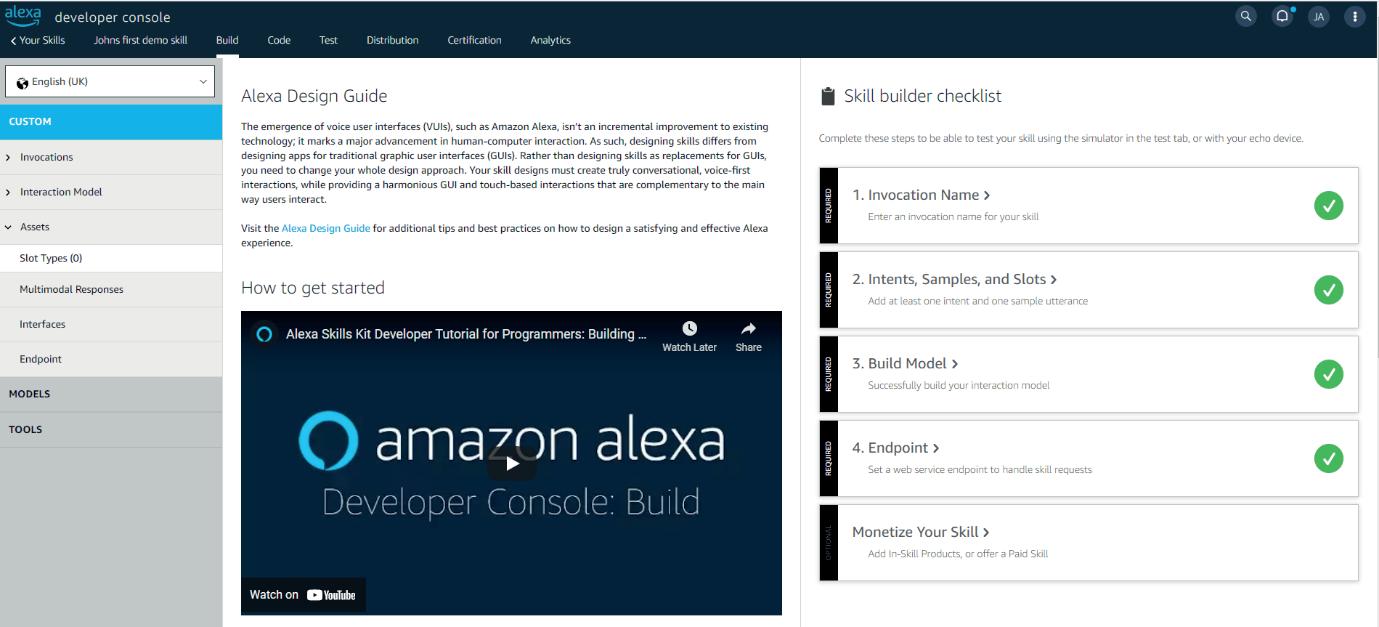

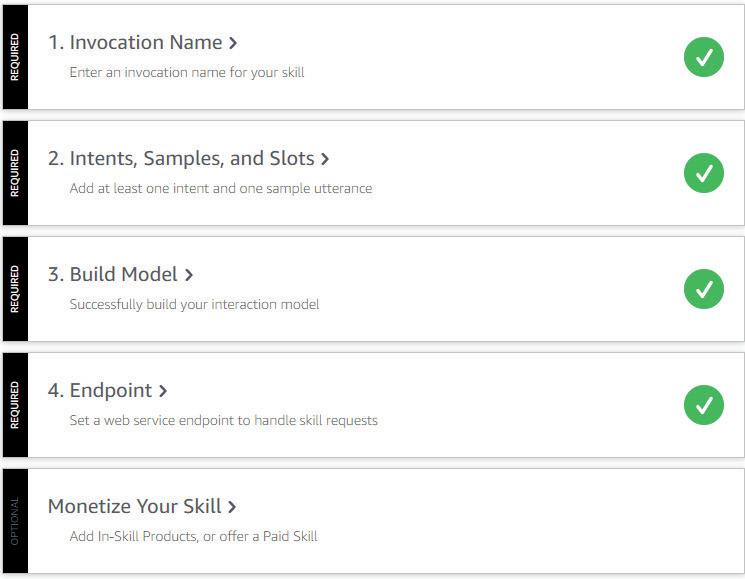

It covers Alexa programming starting from the basic concepts: the Alexa Voice Service, the interaction model and the skill code, which runs on AWS (Amazon Web Services) Lambda.

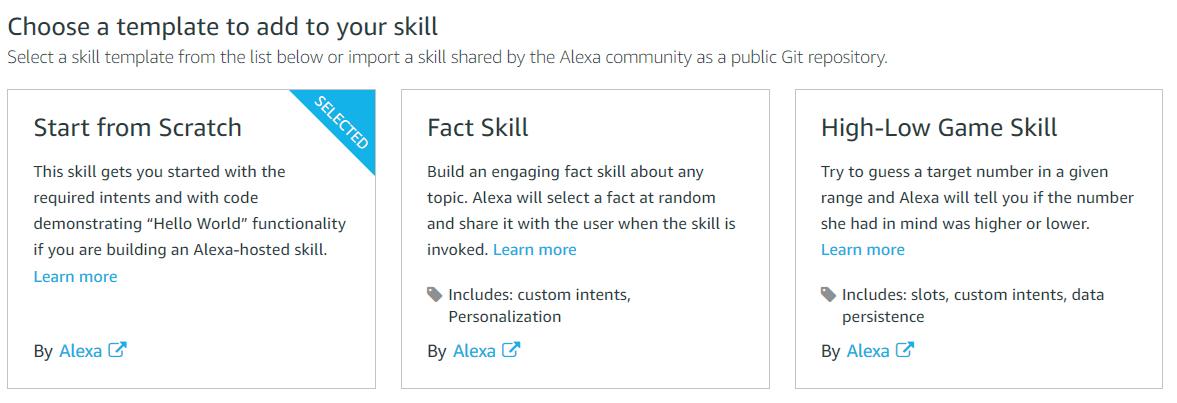

It takes the reader through all stages of creating skills to certification and publishing, including writing skills that involve in-skill purchasing. It discusses different ways of creating skills, then moves on to creating visual skills using APL (Alexa Presentation Language) for screen-based Alexa devices.

The book then moves on to cover different ways of controlling hardware including the Internet of Things and Smart Home devices. There are interfaces with the Raspberry Pi using MQTT and SQS communication, displaying on the Pi using Node-RED and Python code.

Although mostly based on Python, Node.js examples or links are also provided. The full code is provided in a separate document.

Please note that Alexa skill development, the developer console and APL versions have changed since writing this book, so please bear with the author if there are slight differences.

I do not pretend to know all there is about Alexa and Raspberry Pi programming – they seem to advance faster than I can follow! I have a background in hardware and software design. I am sure that there are areas where some programmers may be offended by my code and that there may be better ways to write it, but I have written and tried all the examples and know they work. I hope the examples will spur you on to find solutions to your own problems. Should you need more information then please try the online help and the Raspberry Pi or Alexa forums: alexa.design/slack is particularly good. There are plenty of programmers out there willing to help solve your problems, often extremely quickly; certainly faster than I would get back to you!

I wish to thank my friends for encouraging me, especially Dr. Hugh Frost, Andy Marsh and Dr. John Nichols; the Alexa staff: in particular Jeff Nunn, Jeff Blankenburg and Ryan J Lowe; helpers on the alexa.design/slack group, including Andy Whitworth; subscribers of my YouTube and GitHub channels who have made encouraging comments; and the many anonymous people on the internet, forums, blogs and websites who have answered many questions, not just my own – keep up the good work. Not least of all I would like to thank my wife Penny, for supporting me throughout.

And of course, you for buying the book!

Chapter 1 • Alexa History and Devices

Alexa is the virtual voice assistant and Echo is the device.

The standard Amazon Echo device general release was in 2015. In 2019, newer versions were released, with more rounded designs and better audio.

Amazon Echo Dot was released in 2016 with a smaller design than the standard Echo. Various releases and designs, including a kid’s version, have continued to the 5th generation with a clock and improved LED display in 2022.

In 2017, Amazon released a combination of the Dot and Show, called the Echo Spot. In the same year, the Echo Show was released and featured a slanted, 7-inch touchscreen, camera and speaker. This later changed to a 10-inch screen (Echo Show 10), and more recently, added a 360-rotating display.

The Echo Show 5 came in 2019 (2nd gen in 2021), as well as the Echo Show 8 and, in 2021, an Echo Show 15 designed for wall mounting.

There are other devices too, including the Button, Flex, Input, Look and recently the Astro robot.

Here are some of my devices (not including smart devices). From the top: Echo Show 8, Fire TV Stick, Echo Auto, my original Echo Dot, and the Echo Spot.

Even though many devices have a screen, you should always design for ‘voice first’.

1.1 Alexa voice service and AWS Lambda

Alexa Voice Service (AVS) is Amazon’s cloud service that processes the audio, determines the appropriate action and returns a response to the device. For the skill to produce the appropriate response, designers need to implement two major parts: the interaction model and the skill code, which runs on AWS (Amazon Web Services) Lambda.

The interaction model is what your users say and how they communicate with your skill. AWS Lambda is a serverless, event-driven computing service that lets you run your code. Lambda can be triggered by many AWS services and you only pay for what you use.

When a user interacts with an Echo device, AVS sends a request to the skill, which is running on AWS Lambda. The skill replies with a response that is turned into a spoken and/or visual response for the user.

1.2 Pricing

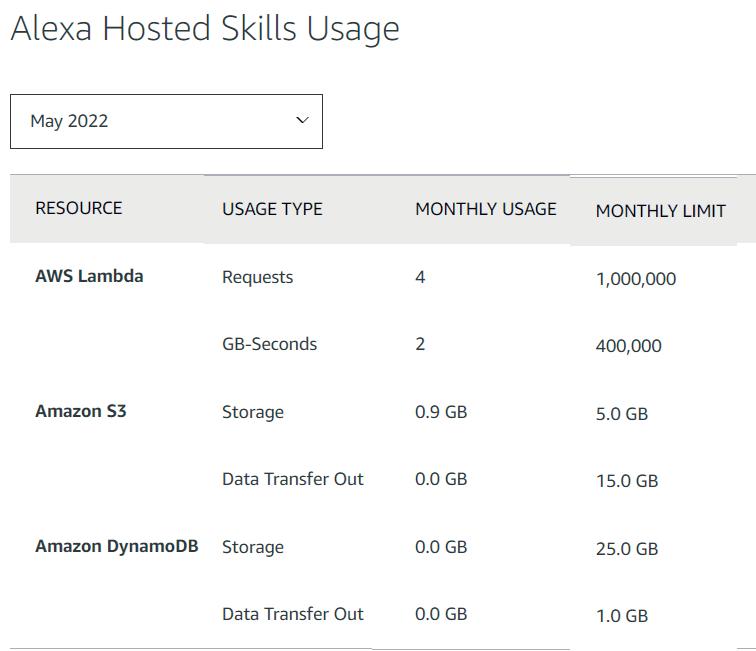

Although there are charges for AWS Lambda, the AWS Lambda free tier includes one million free requests per month and 400,000 GB-seconds of compute time per month, as well as 500 MB storage. As you can see, this is more than enough for a beginner. For more information, see https://aws.amazon.com/lambda/pricing/
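To get a feel for how far the free tier stretches, the arithmetic can be sketched in a few lines of Python. The two allowances are the figures quoted above; the memory size (128 MB), the number of invocations and the average run time are assumptions chosen purely for illustration, so substitute the values for your own skill.

```python
# Free-tier allowances quoted in the text above.
FREE_REQUESTS_PER_MONTH = 1_000_000
FREE_GB_SECONDS_PER_MONTH = 400_000


def free_compute_seconds(memory_mb: int) -> float:
    """Seconds of run time per month covered by the free compute allowance."""
    memory_gb = memory_mb / 1024
    return FREE_GB_SECONDS_PER_MONTH / memory_gb


def fits_free_tier(requests: int, avg_duration_s: float, memory_mb: int) -> bool:
    """True if a month's usage stays inside both free-tier allowances."""
    gb_seconds = requests * avg_duration_s * (memory_mb / 1024)
    return (requests <= FREE_REQUESTS_PER_MONTH
            and gb_seconds <= FREE_GB_SECONDS_PER_MONTH)


if __name__ == "__main__":
    # Hypothetical skill: 128 MB of memory, 50,000 invocations a month,
    # each running for about half a second.
    print(free_compute_seconds(128))
    print(fits_free_tier(50_000, 0.5, 128))
```

A GB-second is one second of run time with 1 GB of memory allocated, so a function configured with less memory gets proportionally more seconds out of the same allowance.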

For developers whose skills use more than this, Amazon provides Promotional Credits which reward those who build cloud-hosted applications, software, or tools for sustainability-related work.

For FAQ see reference 1.

1.3 Alexa skills

There are a few different types of Alexa skills (see reference 2). You may already have realized that a skill communicating with an Alexa device is different from one switching on your lights or telling you there’s someone at your front door.

At the moment, there are 15 different types of Alexa skills. The more common ones are:

and Custom

Automotive applications

Voice and visual (APL) applications

Provide news and short content information

Voice and visual driven game skills

Skills to control audio content

Skills to control smart home devices

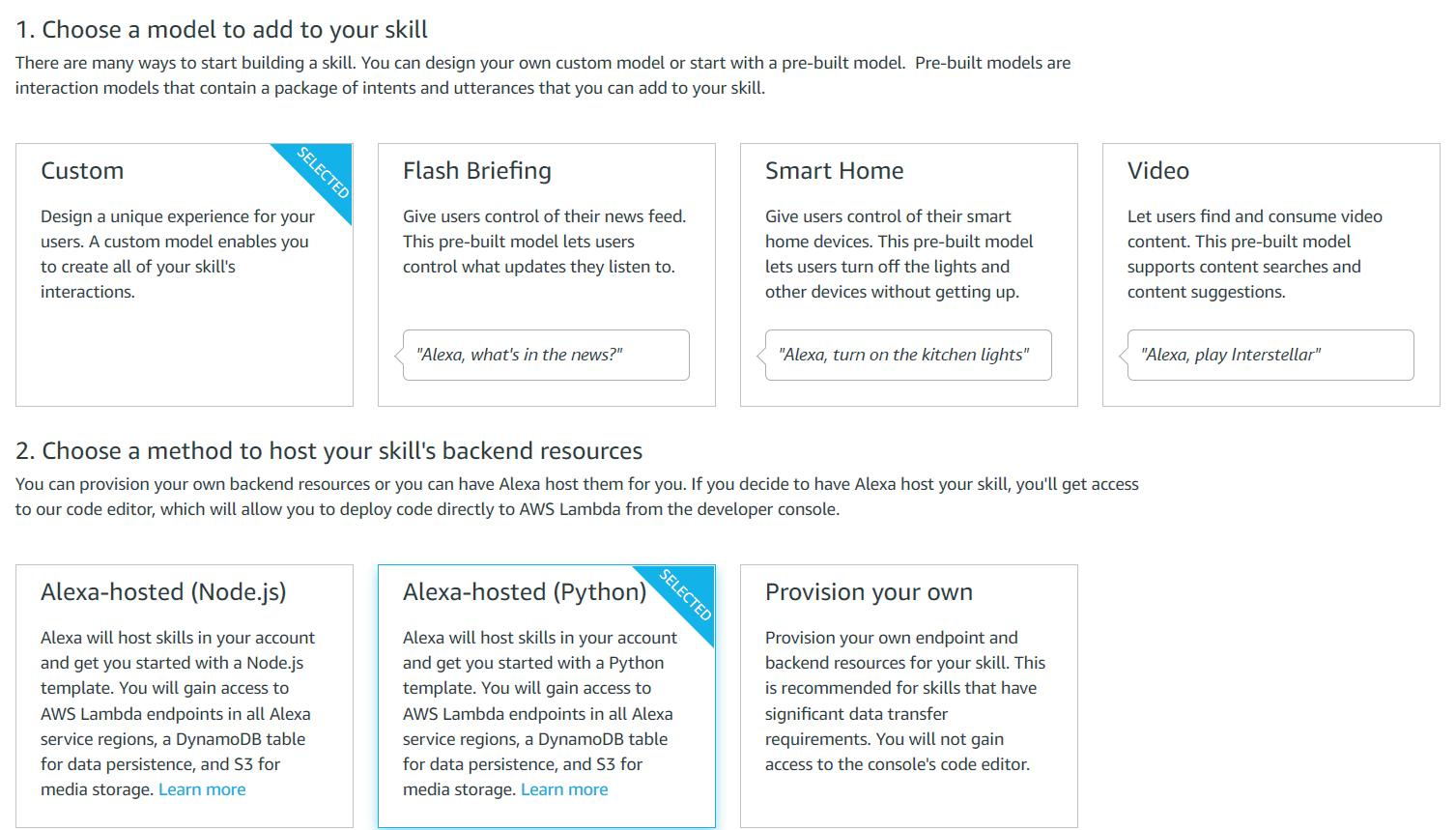

Control video devices and content

We’ll be concentrating on Custom skills. Blueprint pre-built skills are also available and easy to develop but have reduced options for user experience. We’ll also study smart home skills, of course.

1.4 Supported programming languages

AWS Lambda natively supports Java, Go, PowerShell, Node.js, C#, Python, and Ruby code. This book will mainly use Python, but it also provides code snippets and links for Node.js.

1.5 Terminology – Invocation, Utterances, Intents and Slots

As with learning anything new, there is new terminology to be understood. You will soon meet (or may already have met) Invocation, Utterances, Intents and Slots.

1.5.1 Alexa Wake word

This is the word used to start your Alexa device listening to your command. Currently, there are five wake words: ‘Alexa’ (the default), ‘Amazon’, ‘Echo’, ‘Computer’ and ‘Ziggy’. You can change these for your devices, but not invent new ones.

1.5.2 Invocation

The ‘invocation’ is the phrase used to trigger your skill, e.g.: ‘Alexa, open John’s weather skill’ or ‘Alexa, launch my cooking skill’.

1.5.3 Utterances

Utterances are the phrases that your user says to make a request. There can be many ways to achieve the same result, e.g.: What’s the time? What’s the time now? What time is it? You will have to think of as many ways as possible in which your user can interact with your skill. Nevertheless, Alexa will build your model and try to find similar utterances.

All the possible ways to do this can be difficult to describe (considering, for instance, how many different ways and types of pizza someone might order), so Amazon has recently announced Alexa Conversations to help with this. For more information, see reference 3.

1.5.4 Intents and requests

The utterances are linked to one intent in the code. For instance, all the ‘time’ utterances would be linked to the same intent, e.g., GetTimeIntent, and this would trigger a GetTimeIntent function in our skill code.

There are two types of Intents:

• Built-in Intents

- Standard built-in intents: These are provided by Amazon. Some must be present in every skill, e.g.: AMAZON.StopIntent, AMAZON.CancelIntent, AMAZON.FallbackIntent, etc. Others include AMAZON.YesIntent and AMAZON.NoIntent, intents for screen control (e.g., scroll up/down/left/right), media intents (pause, repeat, resume), and AMAZON.SendToPhoneIntent. You can see these when you add an intent and select “Use an existing intent from Alexa’s built-in library”.

- The Alexa Skills Kit also provides a library of specific built-in intents and includes intents such as Actor intents, Books, Calendar, LocalBusiness, Music, TV, Series, WeatherForecast, etc.

These are intended to add functionality to your skill without you having to provide any sample utterances. For example, WeatherForecast includes a search action (What is), an object (WeatherForecast), a location (London) and a date (tomorrow). We won’t cover them in this book; see:

https://developer.amazon.com/en-US/docs/alexa/custom-skills/built-in-intent-library.html

• Custom Intents

These are created as required for the skill (e.g., GetTimeIntent).

If you use an Amazon template, the code for the built-in intents is provided for you.

There are three types of requests that the skill can receive:

• A Launch request that runs when our skill is invoked (as a result of the user saying, ‘Alexa open …’ or ‘Alexa, launch ...’).

• An Intent request which contains the intent name and variables passed as slot values.

• A SessionEnded request, which occurs when the user exits the skill, or when the user’s response cannot be matched (although you may be able to trap this with AMAZON.FallbackIntent).

This information is all packaged and sent as a request (and returned as a response) as a JSON file. We’ll look at the JSON code later.
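The three request types can be told apart by the "type" field of the request. The following is a minimal sketch of that routing, written with plain dictionaries rather than the Alexa SDK so that it runs anywhere. The handler function names and the spoken texts are hypothetical; the type strings LaunchRequest, IntentRequest and SessionEndedRequest are the values Alexa puts in the request JSON.

```python
def on_launch() -> str:
    """Runs when the user says 'Alexa, open ...'."""
    return "Welcome, you can say Hello or Help."


def on_get_time(intent: dict) -> str:
    """Hypothetical handler for the GetTimeIntent custom intent."""
    return "This is where the skill would work out the time."


def on_fallback(intent: dict) -> str:
    """Used for AMAZON.FallbackIntent and for any intent with no handler."""
    return "Sorry, I didn't understand that."


# Maps each intent name to the function that handles it.
INTENT_HANDLERS = {
    "GetTimeIntent": on_get_time,
    "AMAZON.FallbackIntent": on_fallback,
}


def route_request(event: dict) -> str:
    """Return the text to speak for one incoming request."""
    request = event["request"]
    request_type = request["type"]
    if request_type == "LaunchRequest":
        return on_launch()
    if request_type == "IntentRequest":
        intent = request["intent"]
        handler = INTENT_HANDLERS.get(intent["name"], on_fallback)
        return handler(intent)
    if request_type == "SessionEndedRequest":
        return ""  # the session is over, so nothing is spoken
    raise ValueError(f"Unexpected request type: {request_type}")


if __name__ == "__main__":
    launch = {"request": {"type": "LaunchRequest"}}
    print(route_request(launch))
```

In a real skill the SDK performs this routing for you; the sketch only shows the decision it makes.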

1.5.5 Slots

A slot is a variable that contains information that is passed to an intent. The user might say ‘What’s the time in London’. Here ‘London’ (or Paris or Rome) is passed as a slot variable to the intent code.

Amazon provides built-in slot types, such as numbers, dates and times, as well as built-in list types such as actors, colors, first names, etc. In the previous example, we could use AMAZON.GB_CITY, which provides recognition of over 15,000 UK and world-wide cities used by UK speakers.

However, some of these slots are being deprecated (including AMAZON.GB_CITY in favour of AMAZON.City), so check before you use them. The full list is covered at ‘List Slot Types’:

https://developer.amazon.com/en-US/docs/alexa/custom-skills/slot-type-reference.html#list-slot-types

Alexa slot types fall into the following general categories:

• Numbers, Dates, and Times

• Phrases

• Lists of Items

Developers can create custom slots for variables that are specific to their skill. When we define our utterances, slots are shown in curly braces: {city}, e.g.:

Example:

Intent: GetTimeIntent

Utterance: What is the time in {city}

Slot: {city}

Utterances can have many slots and slot types.

The GetTimeIntent will trigger a function in your skill (which you might sensibly call GetTimeIntentFunction).
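As a rough sketch of what such a function receives, the code below pulls the city slot out of an intent request. It assumes the slot values arrive under request.intent.slots, keyed by slot name, which is how the Alexa request JSON carries them. The sample event and the spoken replies are made up for illustration, and the function only echoes the city rather than looking up the time.

```python
from typing import Optional


def get_slot_value(event: dict, slot_name: str) -> Optional[str]:
    """Return the spoken value of a slot, or None if it was not filled."""
    intent = event["request"].get("intent", {})
    slots = intent.get("slots") or {}
    return slots.get(slot_name, {}).get("value")


def get_time_intent_function(event: dict) -> str:
    """Hypothetical handler triggered by GetTimeIntent."""
    city = get_slot_value(event, "city")
    if city is None:
        return "Which city would you like the time for?"
    return f"You asked for the time in {city}."


# Illustrative request for 'What is the time in London'.
sample_event = {
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "GetTimeIntent",
            "slots": {"city": {"name": "city", "value": "London"}},
        },
    }
}

if __name__ == "__main__":
    print(get_time_intent_function(sample_event))
```

Checking for a missing value matters because the user may say the utterance without naming a city, in which case the slot arrives empty.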

Slots are used to pass data from your VUI (voice user interface) to your program. As an example, we might have an Alexa skill that asks for your name.

The VUI might go like this:

User: “Alexa, Open What’s my name” (Invoke the skill – the launch request executes)

Alexa: “Welcome, please tell me your name”

User: “My name is John” (“John” is passed in a slot to myName intent)

Alexa: “Hello John” (Your intent picks John from the slot passed to it and responds)

At this point, the slot data is lost unless you save it. You can save data in a temporary folder, but more often data is stored in session attributes, as you will find out later.

1.5.6 Interaction model

The combination of the utterances and their related intents and slots make up the interaction model. This needs to be built by the developer console, and in doing so Alexa may recognize further utterances similar to those you have defined.
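To make this concrete, here is a sketch of a small interaction model for the GetTimeIntent example, built as a Python dictionary and printed as JSON in the layout the developer console uses (interactionModel, languageModel, intents, samples). The invocation name "city clock" and the sample utterances are assumptions for illustration, and the slot type name should be checked against the current slot type reference. The helper function is not part of any Amazon tool; it simply checks that every {slot} used in an utterance has been declared.

```python
import json
import re

interaction_model = {
    "interactionModel": {
        "languageModel": {
            "invocationName": "city clock",  # hypothetical invocation name
            "intents": [
                {
                    "name": "GetTimeIntent",
                    # Check the slot type reference for the current type name.
                    "slots": [{"name": "city", "type": "AMAZON.City"}],
                    "samples": [
                        "what is the time in {city}",
                        "what time is it in {city}",
                        "tell me the time in {city}",
                    ],
                },
                # Built-in intents need no sample utterances of their own.
                {"name": "AMAZON.StopIntent", "samples": []},
                {"name": "AMAZON.CancelIntent", "samples": []},
                {"name": "AMAZON.FallbackIntent", "samples": []},
            ],
            "types": [],
        }
    }
}


def undeclared_slots(model: dict) -> list:
    """List (intent name, slot name) pairs used in samples but not declared."""
    problems = []
    for intent in model["interactionModel"]["languageModel"]["intents"]:
        declared = {slot["name"] for slot in intent.get("slots", [])}
        for sample in intent.get("samples", []):
            for used in re.findall(r"\{(\w+)\}", sample):
                if used not in declared:
                    problems.append((intent["name"], used))
    return problems


if __name__ == "__main__":
    print(json.dumps(interaction_model, indent=2))
    print(undeclared_slots(interaction_model))
```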

1.5.7 Endpoints

The endpoint is where your code is hosted. You can choose an Amazon-hosted, AWS Lambda ARN (Amazon Resource Name) site or host it yourself on an HTTPS site that you manage.

If you choose an AWS site, it will give you an ID beginning arn:aws:lambda and looking like: arn:aws:lambda:<region>:<accountID>:function:<functionID>. Your skill also has an ID looking something like this: amzn1.ask.skill.a0093469-4a50-4428-82e6-abcde990fgh3.

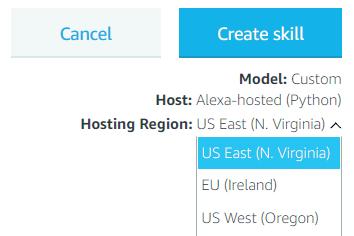

1.5.8 Regions

If using an AWS-hosted site, you should host your code in a region near to your user. We’ll see that there are lots of regions, but for some skills currently only North Virginia is available.

1.6 Skill Sessions

The period that your skill runs for is called a session. A skill session begins when a user invokes your skill and Alexa sends your skill a request. Your skill receives the request and returns a response for Alexa to speak to the user.

If the shouldEndSession parameter is ‘true’, the skill terminates; otherwise, the session remains open and expects the user to respond. If no user input occurs, a reprompt is sent if one is included in the code. If the user still doesn’t respond (after about 8 seconds), the session ends (see reference 4).

Skill connections and progressive responses may override these rules, for example when a skill has to get further information from another source, such as when your taxi will be available or your pizza delivered.

1.7 Session attributes

Session attributes are used to hold data during a session, for example, your user’s name. When the session finally ends, the data is lost. To prevent this from happening, data can be stored permanently in persistent attributes. These can be held in a DynamoDB database, which is provided as one of the AWS services and is easily accessed using an Alexa-Hosted Skill. With an Alexa-Hosted Skill, you can build your model, edit your code and publish your skill all from within the developer console.
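The round trip of a session attribute can be sketched with plain dictionaries: the skill returns the attributes in its response, and Alexa hands them back in the session part of the next request. The function names and the attribute key "name" are hypothetical, and persistent (DynamoDB) storage is not shown here.

```python
from typing import Optional


def read_session_attributes(event: dict) -> dict:
    """Copy the attributes Alexa sent with this request (may be absent)."""
    return dict(event.get("session", {}).get("attributes") or {})


def remember_name(event: dict, name: str) -> dict:
    """Greet the user and store the name for later turns in this session."""
    attributes = read_session_attributes(event)
    attributes["name"] = name
    return {
        "version": "1.0",
        "sessionAttributes": attributes,
        "response": {
            "outputSpeech": {"type": "PlainText", "text": f"Hello {name}"},
            "shouldEndSession": False,  # keep the session, and the data, alive
        },
    }


def recall_name(event: dict) -> Optional[str]:
    """Return the stored name, or None if nothing was saved this session."""
    return read_session_attributes(event).get("name")


if __name__ == "__main__":
    first_request = {"session": {"new": True}}
    reply = remember_name(first_request, "John")
    # Alexa returns the attributes unchanged with the next request.
    next_request = {"session": {"attributes": reply["sessionAttributes"]}}
    print(recall_name(next_request))
```

Once a response ends the session, the next request starts with no attributes, which is the point at which persistent attributes become necessary.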

1.8 Request and response JSON

We saw in the figure above how the request is sent from the user (Alexa Voice Service) to your code and how the response is returned from your code. This data is passed in JSON format. “JSON (JavaScript Object Notation) is a lightweight data-interchange format. It is easy for humans to read and write and easy for machines to parse and generate” (see reference 5).

The basic JSON request contains information on the active session, the context, the system information on the application (ID), user, deviceID, and the request itself:

{
  "version": "1.0",
  "session": { ( ..session parameters) },
  "context": {
    ( ..information on the Alexa device)
    "System": { … }
  },
  "request": {
    "type": "IntentRequest",
    "requestId": "amzn1.echo-api.request.745d…9a",
    "locale": "en-GB",
    "timestamp": "2022-04-14T09:27:01Z",
    "intent": {
      "name": "HelloWorldIntent",
      "confirmationStatus": "NONE"
    }
  }
}

The reply JSON contains the response speech and reprompt, as well as the state of shouldEndSession and the session attributes.

{
  "body": {
    "version": "1.0",
    "response": {
      "outputSpeech": {
        "type": "SSML",
        "ssml": "<speak>Welcome, you can say Hello or Help.</speak>"
      },
      "reprompt": {
        "outputSpeech": {
          "type": "SSML",
          "ssml": "<speak>Welcome, you can say Hello or Help.</speak>"
        }
      },
      "shouldEndSession": false,
      "type": "_DEFAULT_RESPONSE"
    },
    "sessionAttributes": {},
    "userAgent": "ask-python/1.11.0 Python/3.7.12"
  }
}
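A response of this shape can be assembled by hand, which is a useful way to see what each field does. The sketch below builds only the inner envelope (version, sessionAttributes and response); the surrounding "body" wrapper and the userAgent line in the listing above are assumed to be added by the SDK and test console and are left out. The function names are hypothetical, and the rule of thumb "a reprompt means keep the session open" is a simplification made for this example.

```python
import json
from typing import Optional
from xml.sax.saxutils import escape


def ssml(text: str) -> dict:
    """Wrap plain text as an SSML outputSpeech object."""
    # escape() protects characters such as & and < that are special in SSML.
    return {"type": "SSML", "ssml": f"<speak>{escape(text)}</speak>"}


def build_response(speech: str,
                   reprompt: Optional[str] = None,
                   session_attributes: Optional[dict] = None) -> dict:
    """Build a response; the session stays open only if a reprompt is given."""
    response = {
        "outputSpeech": ssml(speech),
        "shouldEndSession": reprompt is None,
    }
    if reprompt is not None:
        response["reprompt"] = {"outputSpeech": ssml(reprompt)}
    return {
        "version": "1.0",
        "sessionAttributes": session_attributes or {},
        "response": response,
    }


if __name__ == "__main__":
    welcome = "Welcome, you can say Hello or Help."
    print(json.dumps(build_response(welcome, reprompt=welcome), indent=2))
```

Calling build_response with only a speech string produces a reply that ends the session, which suits a final message such as a goodbye.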

The response can get more complicated if there is a dialog session occurring (i.e., if the program hasn’t enough information to complete the intent request and has to ask for more).

We’ll look at the information passed in the JSON request and response and how to extract it in a later chapter.

1.9 Blueprint skills

Alexa provides blueprint skills, where you can ‘fill in the blanks’ to make your skill. These are worth looking at for some fun and information-presenting skills.

The current categories are: At home, Kids recommended, Learning and knowledge, Fun and Games, Storyteller, Greetings and Occasions, Communities and Organizations, and Business (see reference 6). We won’t cover them here.

1.10 Summary

We’ve seen how the Alexa devices have developed from the original voice-only device to screen-based and robot devices, how the Alexa Voice service works and looked at terminology – Invocation, Utterances, Intents and Slots. Finally, we looked at a skill session and how data is passed and saved during a session and between sessions.

In the next chapter, we’ll see how to set up an Alexa account before moving on to our first Alexa skill.

1.11 References:

1. https://aws.amazon.com/lambda/faqs/

2. https://developer.amazon.com/en-US/docs/alexa/ask-overviews/list-of-skills.html

3. https://www.youtube.com/watch?v=1nYfRvg976E

4. https://developer.amazon.com/en-US/docs/alexa/custom-skills/manage-skill-session-and-session-attributes.html

5. https://www.json.org/json-en.html

6. https://blueprints.amazon.com