Preface

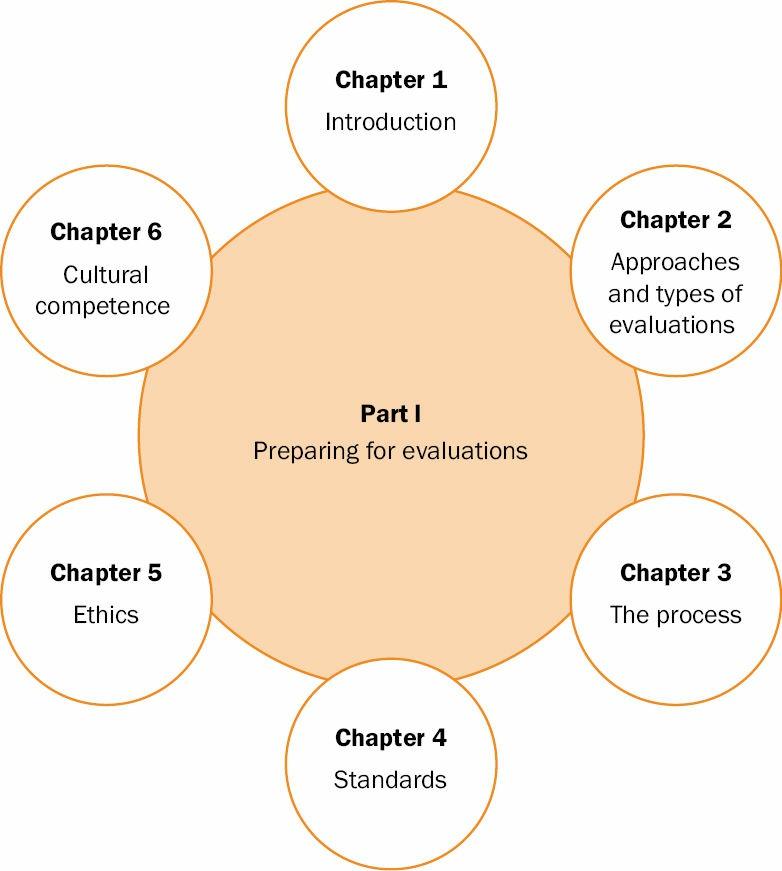

PART I: PREPARING FOR EVALUATIONS

1. Toward Accountability

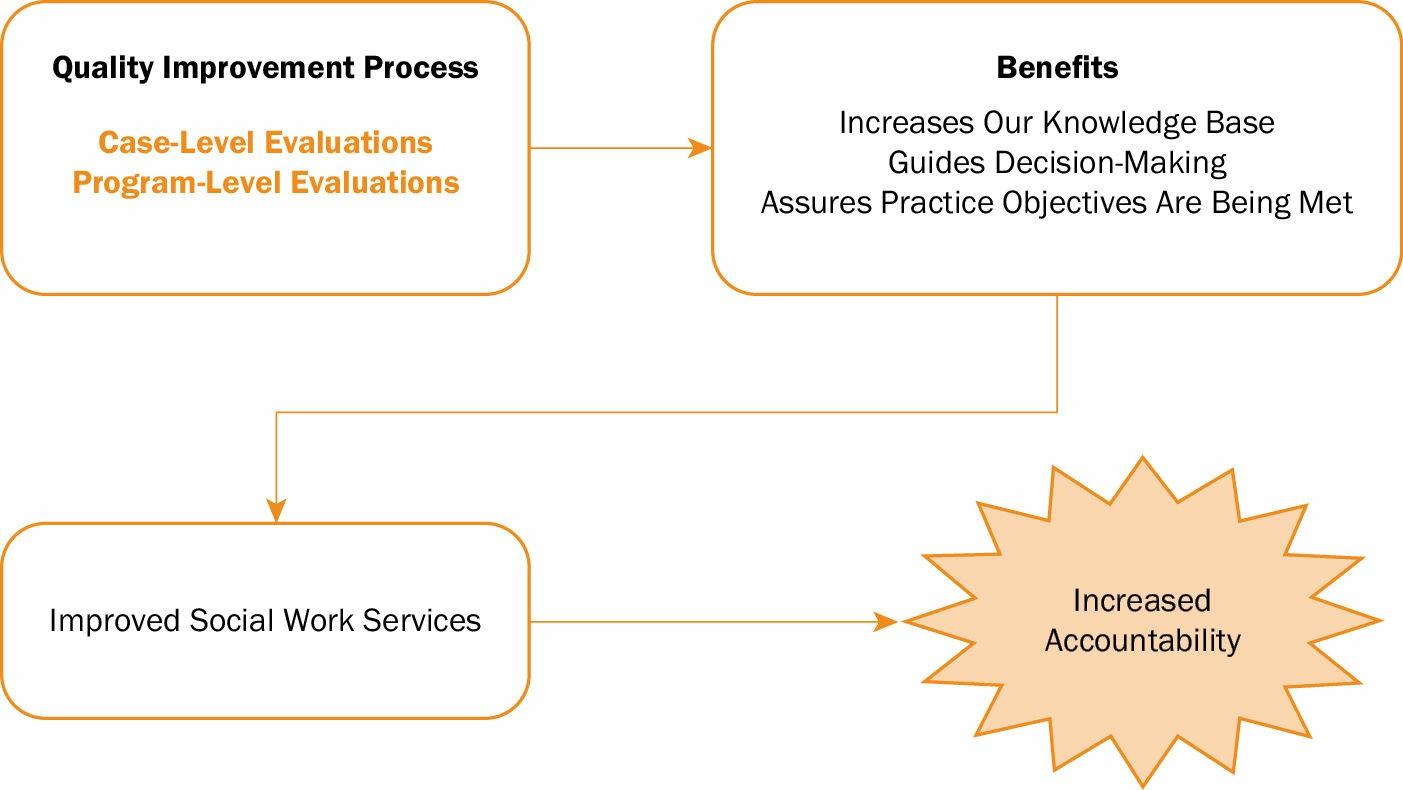

The Quality Improvement Process

Case-Level Evaluations

Program-Level Evaluations

Myth

Philosophical Bias

Fear (Evaluation Phobia)

The Purpose of Evaluations

Increase Our Knowledge Base

Guide Decision-Making at All Levels

Assure That Client Objectives Are Being Met

Accountability Can Take Many Forms

Scope of Evaluations

Person-In-Environment Perspective

Example: You’re the Client

Program-In-Environment Perspective

Example: You’re the Social Worker

Research ≠ Evaluation

Data ≠ Information

Definition

Summary

2. Approaches and Types of Evaluations

The Project Approach

Characteristics of the Project Approach

The Monitoring Approach

Characteristics of the Monitoring Approach

Advantages of the Monitoring Approach

Four Types of Evaluations

Needs Assessment

Process Evaluations

Outcome Evaluations

Efficiency Evaluations

Internal and External Evaluations

Summary

3. The Process

The Process

Step 1: Engage Stakeholders

Why Stakeholders Are Important to an Evaluation

The Role of Stakeholders in an Evaluation

Step 2: Describe the Program

Using a Logic Model to Describe Your Program

Step 3: Focus the Evaluation

Types of Evaluations

Narrowing Down Evaluation Questions

Step 4: Gathering Credible Data

Step 5: Justifying Your Conclusions

Step 6: Ensuring Usage and Sharing Lessons Learned

Summary

4. Standards

The Four Standards

Utility

Feasibility

Propriety

Accuracy

Standards Versus Politics

When Standards Are Not Followed

Summary

5. Ethics

Code of Ethics

Step 3: Focusing the Evaluation

Step 3A: Refine Evaluation Question Through the Literature

Step 3B: Selecting an Evaluation Design

Step 3C: Specifying How Variables Are Measured

Step 4: Gathering Data

Step 4A: Selecting Evaluation Participants

Step 4B: Selecting a Data Collection Method

Step 4C: Analyzing the Data

Step 6: Ensure Usage and Share Lessons Learned

Revealing Negative Findings

Special Considerations

International Research

Computer and Internet-Based Research Guidance

Students as Subjects/Students as Researchers

Summary

6. Cultural Competence

Our Village

Working with Stakeholder Groups

Your Evaluation Team

The Impact of Culture

Bridging the Culture Gap

Cultural Awareness

Intercultural Communication

Cultural Frameworks

Orientation to Data

Decision-Making

Individualism

Tradition

Pace of Life

Culturally Competent Evaluators

Develop Cultural Awareness

Develop Intercultural Communication Skills

Develop Specific Knowledge about the Culture

Develop an Ability to Adapt Evaluations

Summary

PART II: DESIGNING PROGRAMS

7. The Program

The Agency

Mission Statements

Goals

The Program

Naming Programs

An Agency Versus a Program

Designing Programs

Evidence-Based Programs

Writing Program Goals

Preparing for Unintended Consequences

Program Goals Versus Agency Goals

Program Objectives

Knowledge-Based Objectives

Affect-Based Objectives

Behaviorally Based Objectives

Writing Program Objectives

Specific (S)

Measurable (M)

Achievable (A)

Realistic (R)

Time Phased (T)

Indicators

Practice Objectives

Example: Bob’s Self-Sufficiency

Practice Activities

Logic Models

Positions Your Program for Success

Simple and Straightforward Pictures

Reflect Group Process and Shared Understanding

Change Over Time

Summary

8. Theory of Change and Program Logic Models

Models and Modeling

Concept Maps

Two Types of Models: One Logic

Examples

Logic Models and Evaluation Design

Limitations

Models Begin with Results

Logic Models and Effectiveness

Basic Program Logic Models

Assumptions Matter

Key Elements of Program Logic Models

Nonlinear Program Logic Models

Hidden Assumptions and Dose

Building a Logic Model

From Strategy to Activities

Action Steps for a Program Logic Model

Creating Your Program Logic Model

Summary

PART III: IMPLEMENTING EVALUATIONS

9. Preparing for an Evaluation

Planning Ahead

Strategy 1: Working with Stakeholders

Strategy 2: Managing the Evaluation

Strategy 3: Pilot-Testing

Strategy 4: Training Data Collection Staff

Strategy 5: Monitoring Progress

Strategy 6: Reporting Results

Interim Reporting

Disseminating Final Results

Strategy 7: Developing a Plan

Strategy 8: Documenting Lessons Learned

Strategy 9: Linking Back to Your Evaluation Plan

Summary

10. Needs Assessments

What Are Needs Assessments?

Defining Social Problems

Social Problems Must Be Visible

Defining Social Needs

The Hierarchy of Social Needs

Four Types of Social Needs

Perceived Needs

Normative Needs

Relative Needs

Expressed Needs

Solutions to Alleviate Social Needs

Steps in Doing a Needs Assessment

Step 3A: Focusing the Problem

Example

Step 4A: Developing Needs Assessment Questions

Step 4B: Identifying Targets for Intervention

Establishing Target Parameters

Selecting Data Sources (Sampling)

Step 4C: Developing a Data Collection Plan

Existing Reports

Secondary Data

Individual Interviews

Group Interviews

Telephone and Mail Surveys

Step 4D: Analyzing and Displaying Data

Quantitative Data

Qualitative Data

Step 6A: Disseminating and Communicating Evaluation Results

Summary

11. Process Evaluations

Definition

Example

Purpose

Improving a Program’s Operations

Generating Knowledge

Estimating Cost Efficiency

Step 3A: Deciding What Questions to Ask

Question 1: What Is the Program’s Background?

Question 2: What Is the Program’s Client Profile?

Question 3: What Is the Program’s Staff Profile?

Question 4: What Is the Amount of Service Provided to Clients?

Question 5: What Are the Program’s Interventions and Activities?

Question 6: What Administrative Supports Are in Place?

Question 7: How Satisfied Are the Program’s Stakeholders?

Question 8: How Efficient Is the Program?

Step 4A: Developing Data Collection Instruments

Easy to Use

Appropriate to the Flow of a Program’s Operations

Obtaining User Input

Step 4B: Developing a Data Collection Monitoring System

Determining Number of Cases to Include

Determining Times to Collect Data

Selecting a Data Collection Method(s)

Step 4C: Scoring and Analyzing Data

Step 4D: Developing a Feedback System

Step 6A: Disseminating and Communicating Evaluation Results

Summary

12. Outcome Evaluations

Purpose

Uses

Improving Program Services to Clients

Generating Knowledge for the Profession

Step 3: Specifying Program Objectives

Performance Indicators Versus Outcome Indicators

Step 4A: Measuring Program Objectives

Pilot-Testing the Measuring Instrument

Step 4B: Designing a Monitoring System

How Many Clients Should Be Included?

When Will Data Be Collected?

How Will Data Be Collected?

Step 4C: Analyzing and Displaying Data

Step 4D: Developing a Feedback System

Step 6A: Disseminating and Communicating Evaluation Results

Summary

13. Efficiency Evaluations

Cost Effectiveness Versus Cost–Benefit

When to Evaluate for Efficiency

Step 3A: Deciding on an Accounting Perspective

The Individual Program’s Participants’ Perspective

The Funding Source’s Perspective

Applying the Procedure

Step 3B: Specifying the Cost–Benefit Model

Looking at Costs

Looking at Benefits

Applying the Procedure

Step 4A: Determining Costs

Direct Costs

Indirect Costs

Applying the Procedure

Step 4B: Determining Benefits

Applying the Procedure

Step 4C: Adjusting for Present Value

Applying the Procedure

Step 4D: Completing the Cost–Benefit Analysis

Applying the Procedure

Cost-Effectiveness Analyses

Applying the Procedure

A Few Words about Efficiency-Focused Evaluations

Summary

PART IV: MAKING DECISIONS WITH DATA

14. Data Information Systems

Purpose

Workers’ Roles

Administrative Support

Creating a Culture of Excellence

Establishing an Organizational Plan

Collecting Case-Level Data

Collecting Program-Level Data

Collecting Data at Client Intake

Collecting Data at Each Client Contact

Collecting Data at Client Termination

Collecting Data to Obtain Client Feedback

Managing Data

Managing Data Manually

Managing Data with Computers

Writing Reports

A Look to the Future

Summary

15. Making Decisions

Using Objective Data

Advantages

Disadvantages

Using Subjective Data

Advantages

Disadvantages

Making Case-Level Decisions

Phase 1: Engagement and Problem Definition

Phase 2: Practice Objective Setting

Phase 3: Intervention

Phase 4: Termination and Follow-Up

Making Program-Level Decisions

Process Evaluations

Outcome Evaluations

Outcome Data and Program-Level Decision-Making

Acceptable Results

Mixed Results

Inadequate Results

Benchmarks

Client Demographics

Service Statistics

Quality Standards

Feedback

Client Outcomes

Summary

PART V: EVALUATION TOOLKIT

Tool A. Hiring an External Evaluator

Principle Duties

Knowledge, Skills, and Abilities

Overarching Items

Step 1: Engage Stakeholders

Step 2: Describe the Program

Step 3: Focus the Evaluation Design

Step 4: Gather Credible Data

Step 5: Justify Conclusions

Step 6: Ensure Use and Share Lessons Learned

Tool B. Working with an External Evaluator

Need for an External Evaluator

Working with External Evaluators

Selecting an Evaluator

Managing an External Evaluator

Tool C. Reducing Evaluation Anxiety

Stakeholders’ Anxiety

Evaluators’ Anxiety

Interactive Evaluation Practice Continuum

Evaluation Capacity Building Framework

Dual Concerns Model

Tool D. Managing Evaluation Challenges

Evaluation Context

Evaluation Logistics

Data Collection

Data Analysis

Dissemination of Evaluation Findings

Tool E. Using Common Evaluation Designs

One-Group Designs

One-Group Posttest-Only Design

Cross-Sectional Survey Design

Longitudinal Designs

Cohort Studies

One-Group Pretest–Posttest Design

Interrupted Time-Series Design

Internal Validity

History

Maturation

Testing

Instrumentation Error

Statistical Regression

Differential Selection of Evaluation Participants

Mortality

Reactive Effects of Research Participants

Interaction Effects

Relations between Experimental and Control Groups

Two-Group Designs

Comparison Group Pretest–Posttest Design

Comparison Group Posttest-Only Design

Classical Experimental Design

Randomized Posttest-Only Control Group Design

External Validity

Selection–Treatment Interaction

Specificity of Variables

Multiple-Treatment Interference

Researcher Bias

Summary

Tool F. Budgeting for Evaluations

How to Budget for an Evaluation

Historical Budgeting Method

Roundtable Budgeting Method

Types of Costs to Consider in Budgeting for an Evaluation

Tool G. Using Evaluation Management Strategies

Strategy 1: Evaluation Overview Statement

Strategy 2: Creating a Roles and Responsibilities Table

Strategy 3: Timelines

Basic Yearly Progress Timeline

Milestone Table

Gantt Chart

Shared Calendar

Strategy 4: Periodic Evaluation Progress Reports

Evaluation Progress Report

Evaluation Status Report

Tool H. Data Collection and Sampling Procedures

Data Source(s)

People

Existing Data

People or Existing Data?

Sampling Methods

Probability Sampling

Nonprobability Sampling

Collecting Data

Obtaining Existing Data

Obtaining New Data

Data Collection Plan

Summary

Tool I. Training and Supervising Data Collectors

Identifying Who Needs to Be Trained

Selecting Your Training Method

Defining Your Training Topics

Background Material

Data Collection Instructions

Other Training Topics

Tips for Successful Data Collection Training

Tool J. Effective Communication and Reporting

Developing Your Communications Plan

Short Communications

Interim Progress Reports

Final Reports

Identifying Your Audiences

Reporting Findings: Prioritizing Messages to Audience Needs

Communicating Positive and Negative Findings

Timing Your Communications

Matching Communication Channels and Formats to Audience Needs

Delivering Meaningful Presentations

Putting the Results in Writing

Communications That Build Evaluation Capacity

Tool K. Developing an Action Plan

Tool L. Measuring Variables

Why Measure?

Objectivity

Precision

Levels of Measurement

Nominal Measurement

Ordinal Measurement

Interval Measurement

Ratio Measurement

Describing Variables

Correspondence

Standardization

Quantification

Duplication

Criteria for Selecting a Measuring Instrument

Utility

Sensitivity to Small Changes

Reliability

Validity

Reliability and Validity Revisited

Measurement Errors

Constant Errors

Random Errors

Improving Validity and Reliability

Summary

Tool M. Measuring Instruments

Types of Measuring Instruments

Journals and Diaries

Logs

Inventories

Checklists

Summative Instruments

Standardized Measuring Instruments

Evaluating Instruments

Advantages and Disadvantages

Locating Instruments

Summary

Glossary

References

Credits

Index

Preface

The first edition of this book appeared on the scene over two decades ago. As with the previous six editions, this one is also geared for graduate-level social work students as their first introduction to evaluating social service programs. We have selected and arranged its content so it can be mainly used in a social work program evaluation course. Over the years, however, it has also been adopted in graduate-level management courses, leadership courses, program design courses, program planning courses, social policy courses, and as a supplementary text in research methods courses, in addition to field integration seminars. Ideally, students should have completed their required foundational research methods course prior to this one.

Before we began writing this edition we asked ourselves one simple question: “What can realistically be covered in a one-semester course?” You’re holding the answer to our question in your hands; that is, students can easily get through the entire book in one semester.

GOAL

Our principal goal is to present only the core material that students realistically need to know in order for them to appreciate and understand the role that evaluation has within professional social work practice. Thus unnecessary material is avoided at all costs. To accomplish this goal, we strived to meet three highly overlapping objectives:

1. To prepare students to cheerfully participate in evaluative activities within the programs that hire them after they graduate.

2. To prepare students to become beginning critical producers and consumers of the professional evaluative literature.

3. Most important, to prepare students to fully appreciate and understand how case- and program-level evaluations will help them to increase their effectiveness as beginning social work practitioners.

CONCEPTUAL APPROACH

With our goal and three objectives in mind, our book presents a unique approach in describing the place of evaluation in the social services. Over the years, little has changed in the way in which most evaluation textbooks present their material. A majority of texts focus on program-level evaluation and describe project types of approaches; that is, one-shot approaches implemented by specialized evaluation departments or external consultants. On the other hand, a few recent books deal with case-level evaluation but place the most emphasis on inferentially powerful, but difficult to implement, experimental and multiple baseline designs.

This book provides students with a sound conceptual understanding of how the ideas of evaluation can be used in the delivery of social services.

We collectively have over 100 years of experience of doing case- and program-level evaluations within the social services. Our experiences have convinced us that neither of these two distinct approaches adequately reflects the realities in our profession or the needs of beginning practitioners. Thus we describe how data obtained through case-level evaluations can be aggregated to provide timely and relevant data for program-level evaluations. Such information, in turn, is the basis for a quality improvement process within the entire organization. We’re convinced that this integration will play an increasingly prominent role in the future.

We have omitted more advanced methodological and statistical material such as a discussion of celeration lines, autocorrelation, effect sizes, and two standard-deviation bands for case-level evaluations, as well as advanced methodological and statistical techniques for program-level evaluations.

The integration of case- and program-level evaluation approaches is one of the unique features of this book.

Some readers with a strict methodological orientation may find that our approach is simplistic, particularly the material on the aggregation of case-level data. We are aware of the limitations of the approach, but we firmly believe that this approach is more likely to be implemented by beginning practitioners than are other more complicated, technically demanding approaches. It’s our view that it’s preferable to have such data, even if they are not methodologically “airtight,” than to have no aggregated data at all. In a nutshell, our approach is realistic, practical, applied, and, most important, student-friendly.

THEME

We maintain that professional social work practice rests upon the foundation that a worker’s practice activities must be directly relevant to obtaining the client’s practice objectives, which are linked to the program’s objectives, which are linked to the program’s goal, which represents the reason why the program exists in the first place. The evaluation process presented in our book heavily reflects these connections.

Pressures for accountability have never been greater. Organizations and practitioners of all types are increasingly required to document the impacts of their services not only at the program level but at the case level as well. Continually, they are challenged to improve the quality of their services, and they are required to do this with scarce resources. In addition, few social service organizations can adequately maintain an internal evaluation department or hire outside evaluators. Consequently, we place a considerable emphasis on monitoring, an approach that can be easily incorporated into the ongoing activities of the social work practitioners within their respective programs.

In short, we provide a straightforward view of evaluation while taking into account:

• The current pressures for accountability within the social services

• The current available evaluation technologies and approaches

• The present evaluation needs of students as well as their needs in the first few years of their careers

WHAT’S NEW IN THIS EDITION?

Publishing a seventh edition may indicate that we have attracted loyal followers over the years. Conversely, it also means that making major changes from one edition to the next can be hazardous to the book’s long-standing appeal.

New content has been added to this edition in an effort to keep information current while retaining material that has stood the test of time. With the guidance of many program evaluation instructors and students alike, we have clarified material that needed further clarification, deleted material that needed deletion, and simplified material that needed simplification.

Like all introductory program evaluation books, ours too had to include relevant and basic evaluation content. Our problem here was not so much what content to include as what to leave out. We have done the customary updating and rearranging of material in an effort to make our book more practical and “student friendly” than ever before. We have incorporated suggestions by numerous reviewers and students over the years while staying true to our main goal: providing students with a useful and practical evaluation book that they actually understand and appreciate.

Let’s now turn to the specifics of “what’s new.”

• We have substantially increased our emphasis throughout our book on:

  • How to use stakeholders throughout the entire evaluation process.

  • How to utilize program logic models to describe programs, to select intervention strategies, to develop and measure program objectives, and to aid in the development of program evaluation questions.

  • How professional social work practice rests upon the foundation that a worker’s practice activities must be directly relevant to obtaining the client’s practice objectives, which are linked to the program’s objectives, which are linked to the program’s goal, which represents the reason why the program exists in the first place.

• We have included two new chapters:

  • Chapter 8: Theory of Change and Program Logic Models

  • Chapter 9: Preparing for an Evaluation

• We have included eight new tools for the Evaluation Toolkit:

Tool A. Hiring an External Evaluator

Tool B. Working with an External Evaluator

Tool D. Meeting Evaluation Challenges

Tool F. Budgeting for Evaluations

Tool G. Using Evaluation Management Tools

Tool I. Training and Supervising Data Collectors

Tool J. Effective Communication and Reporting

Tool K. Developing an Action Plan

• We have moved the Evaluation Toolkit to the end of the book (Part V).

• We have significantly increased the number of macro-practice examples throughout the chapters.

• We have expanded the book’s Glossary to over 620 terms.

• Study questions are included at the end of each chapter. The questions are in the order the content is covered in the chapter. This makes it easy for the students to answer the questions.

• A student self-efficacy quiz is included at the end of each chapter. Instructors can use each student’s score as one of the measurements for a behavioral practice objective that can be reported to the Council on Social Work Education (2015).

Students are encouraged to take the chapter’s self-efficacy quiz before reading the chapter and after they have read it. Taking the quiz before they read the chapter will prepare them for what to expect in the chapter, which in turn will enhance their learning experience, kind of like one of the threats to internal validity, initial measurement effects.

• We repeat important concepts throughout the book. Instructors who have taught program evaluation courses for several years are acutely aware of the need to keep reemphasizing basic concepts throughout the semester such as validity and reliability; constants and variables; randomization and random assignment; internal and external validity; conceptualization and operationalization; theory of change models and program logic models; case-level evaluations and program-level evaluations; accountability and quality improvement; standardized and nonstandardized measuring instruments; confidentiality and anonymity; data sources and data collection methods; internal and external evaluations; practice activities, practice objectives, program objectives, and program goals; in addition to standards, ethics, and cultural considerations.

Thus we have carefully tied together these major concepts not only within chapters but across chapters as well. There’s deliberate repetition, as we strongly feel that the only way students can really understand fundamental evaluation concepts is for them to come across the concepts throughout the entire semester via the chapters contained in this book. Readers will, therefore, observe our tendency to explain evaluation concepts in several different ways throughout the entire text.

Now that we know “what’s new” in this edition, the next logical question is: “What’s the same?” First, we didn’t delete any chapters that were contained in the previous one. In addition, the following also remains the same:

• We discuss the application of evaluation methods in real-life social service programs rather than in artificial settings.

• We heavily include human diversity content throughout all chapters in the book. Many of our examples center on women and minorities, in recognition of the need for students to be knowledgeable of their special needs and problems.

In addition, we have given special consideration to the application of evaluation methods to the study of questions concerning these groups by devoting a full chapter to the topic (i.e., Chapter 6).

• We have written our book in a crisp style using direct language; that is, students will understand all the words.

• Our book is easy to teach from and with.

• We have made an extraordinary effort to make this edition less expensive, more esthetically pleasing, and much more useful to students than ever before.

• Abundant tables and figures provide visual representation of the concepts presented in our book.

• Numerous boxes are inserted throughout to complement and expand on the chapters; these boxes present interesting evaluation examples; provide additional aids to student learning; and offer historical, social, and political contexts of program evaluation.

• The book’s website is second to none when it comes to instructor resources.

PARTS

Part I: Preparing for Evaluations

Before we even begin to discuss how to conduct evaluations in Part III, Part I includes a serious dose of how evaluations help our profession become more accountable (Chapter 1) and how all types of evaluations (Chapter 2) go through a common process that utilizes the program’s stakeholders right from the get-go (Chapter 3). Part I then goes on to discuss how all types of evaluations are influenced by standards (Chapter 4), ethics (Chapter 5), and culture (Chapter 6).

So before students begin to get into the real nitty-gritty of actually designing social service programs (Part II) and implementing evaluations (Part III), they will fully understand the various contextual issues that all evaluative efforts must address. Part I continues to be the hallmark of this book as it sets the basic foundation that students need to appreciate before any kind of evaluation can take place.

Part II: Designing Programs

Students are aware of the various contextual issues that are involved in all evaluations after reading Part I. They then are ready to actually understand what social work programs are all about, which is the purpose of Part II.

This part contains two chapters that discuss how social work programs are organized (Chapter 7) and how theory of change and program logic models are used not only to create new programs, to refine the delivery of services in existing ones, and to guide practitioners in the development of practice and program objectives, but to help in the formulation of evaluation questions as well (Chapter 8).

The chapters and parts were written to be independent of one another. They can be assigned out of the order they are presented, or they can be selectively omitted.

We strongly believe that students need to know what a social work program is all about (Part II) before they can evaluate it (Part III). How can they do a meaningful evaluation of a social work program if they don’t know what it’s supposed to accomplish in the first place? In particular, our emphasis on the use of logic models, not only to formulate social work programs but to evaluate them as well, is another highlight of the book.

Part III: Implementing Evaluations

After students know how social work programs are designed (Part II), in addition to being aware of the contextual issues they have to address (Part I) in designing them, they are in an excellent position to evaluate the programs.

The first chapter in Part III, Chapter 9, presents a comprehensive framework on preparing students to do an evaluation before they actually do one; that is, students will do more meaningful evaluations if they are prepared in advance to address the various issues that will arise when their evaluation actually gets under way, and trust us, issues will arise.

When it comes to preparing students to do an evaluation, we have appropriated the British Army’s official military adage of “the 7 Ps,” Proper Planning and Preparation Prevents Piss Poor Performance. Not eloquently stated, but what the heck, it’s official so it must be right. Once students are armed with all their “preparedness,” the following four chapters illustrate the four types of program evaluations they can do with all of their “planning skills” enthusiastically in hand.

The remaining four chapters in Part III present how to do four basic types of evaluations in a step-by-step approach. Chapter 10 describes how to do basic needs assessments and briefly presents how they are used in the development of new social service programs as well as refining the services within existing ones. It highlights the four types of social needs within the context of social problems.

Once a program is up and running, Chapter 11 presents how we can do a process evaluation within the program in an effort to refine the services that clients receive and to maintain the program’s fidelity. It highlights the purposes of process evaluations and places a great deal of emphasis on how to decide what questions the evaluation will answer.

Chapter 12 provides the rationale for doing outcome evaluations within social service programs. It highlights the need for developing a solid monitoring system for the evaluation process.

Once an outcome evaluation is done, programs can use efficiency evaluations to monitor their cost-effectiveness/benefits, the topic of Chapter 13. This chapter highlights the cost-benefit approach to efficiency evaluation and also describes the cost-effectiveness approach.

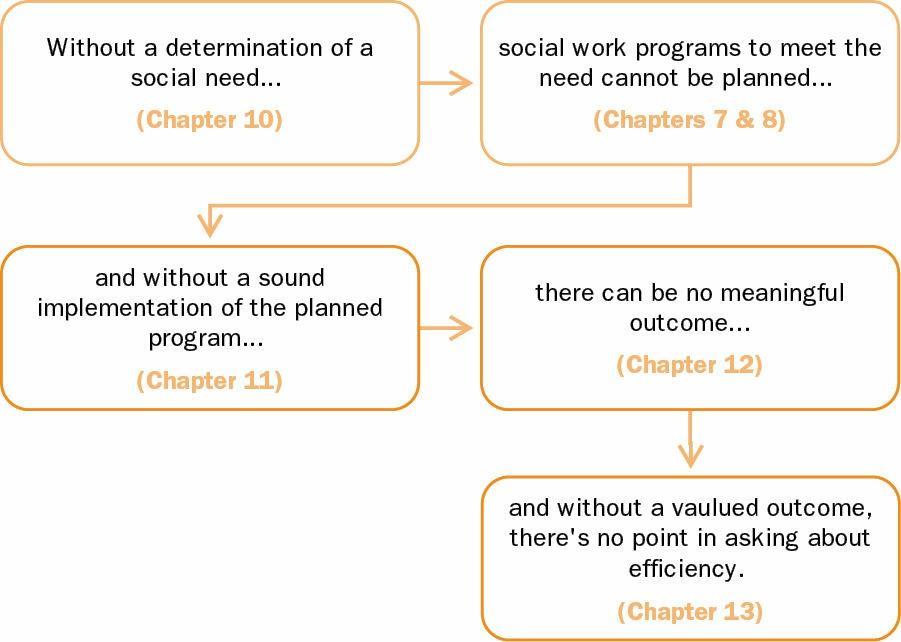

In sum, Part III clearly acknowledges that there are many forms that evaluations can take and presents four of the most common ones. Note that the four types of evaluation discussed in our book are linked in an ordered sequence as outlined in the following figure.

Part IV: Making Decisions with Data

After an evaluation is completed (Part III), decisions need to be made from the data collected, which is the purpose of Part IV. This part contains two chapters; the first describes how to develop a data information system (Chapter 14), and the second (Chapter 15) describes how to make decisions from the data that have been collected with the data information system discussed in the previous chapter.

Part V: Evaluation Toolkit

Part V presents an Evaluation Toolkit that contains 13 basic “research methodology–type tools,” if you will, that were probably covered in the students’ foundational research methods courses. The tools are nothing more than very brief “cheat sheets” that create a bridge between Part III (four basic types of evaluations) and Part IV (how to make decisions with data). So in reality, the tools briefly present basic research methodology that students will need in order for them to appreciate the entire evaluation process.

For example, let’s say an instructor requires students to write a term paper that discusses how to do a hypothetical outcome evaluation of their field placement (or work) setting. This, of course, assumes they are actually placed in a social work program that has some resemblance to the content provided in Chapter 7. It would be difficult, if not impossible, to write a respectable program outcome evaluation proposal without using the following tools:

Tool E. Using Common Evaluation Designs

Tool H. Data Collection and Sampling Procedures

Tool L. Measuring Variables

Tool M. Measuring Instruments

Obviously, students can easily refer to the research methods books they purchased for their past foundational research methods courses to brush up on the concepts contained in the toolkit. In fact, we prefer they do just that, since these books cover the material in more depth and breadth than the content contained in the toolkit. However, what are the chances that these books are on their bookshelves? We bet slim to none. That’s why we have included a toolkit.

We in no way suggest that all the research methodology students will need to know to do evaluations in the real world is contained within the toolkit. It is simply not a substitute for a basic research methods book (e.g., Grinnell & Unrau, 2014) and statistics book (e.g., Weinbach & Grinnell, 2015).

INSTRUCTOR RESOURCES

Instructors have a password-protected tab (Instructor Resources) on the book’s website that contains links. Each link is broken down by chapter. They are invaluable, and you are encouraged to use them.

• Student Study Questions

• PowerPoint Slides

• Chapter Outlines

• Teaching Strategies

• Group Activities

• Computer Classroom Activities

• Writing Assignments

• True/False and Multiple-Choice Questions

The field of program evaluation in our profession is continuing to grow and develop. We believe this edition will contribute to that growth. An eighth edition is anticipated, and suggestions for it are more than welcome. Please e-mail your comments directly to rickgrinnell@wmichedu

If our book helps students acquire basic evaluation knowledge and skills and assists them in more advanced evaluation and practice courses, our efforts will have been more than justified. If it also assists them to incorporate evaluation techniques into their practice, our task will be fully rewarded.

Richard M. Grinnell, Jr.
Peter A. Gabor
Yvonne A. Unrau