Ignition’s industry-leading technology, unlimited licensing model, and army of certified integration partners have ignited a SCADA revolution that has many of the world’s biggest industrial companies transforming their enterprises from the plant floor up.

With plant-floor-proven operational technology, the ability to build a unified namespace, and the power to run on-prem, in the cloud, or both, Ignition is the platform for unlimited digital transformation.

Visit inductiveautomation.com/ignition to learn more.

When we named our industrial application software “Ignition” fifteen years ago, we had no idea just how fitting the name would become...

One Platform, Unlimited Possibilities

Plant of the Year honorees push process control into the future

Systems integrator reaps rewards of derisking

With an experienced partner, you can achieve more.

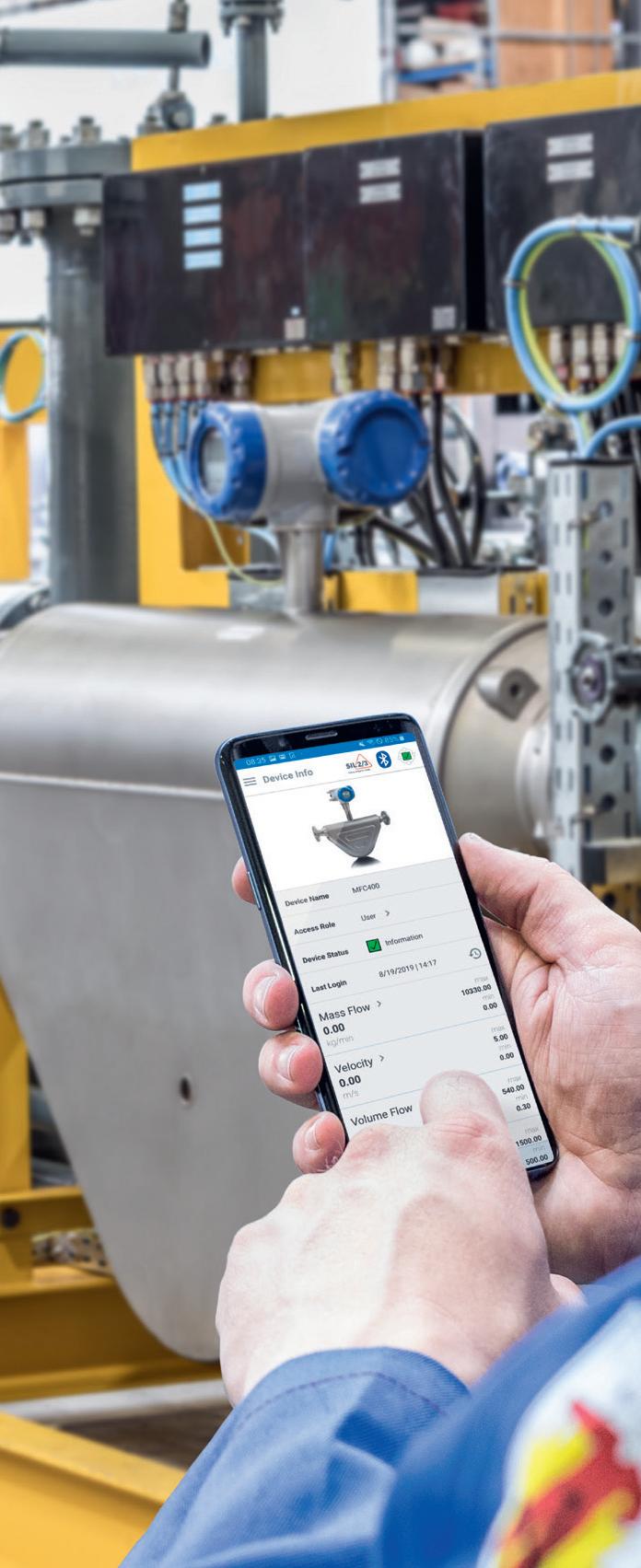

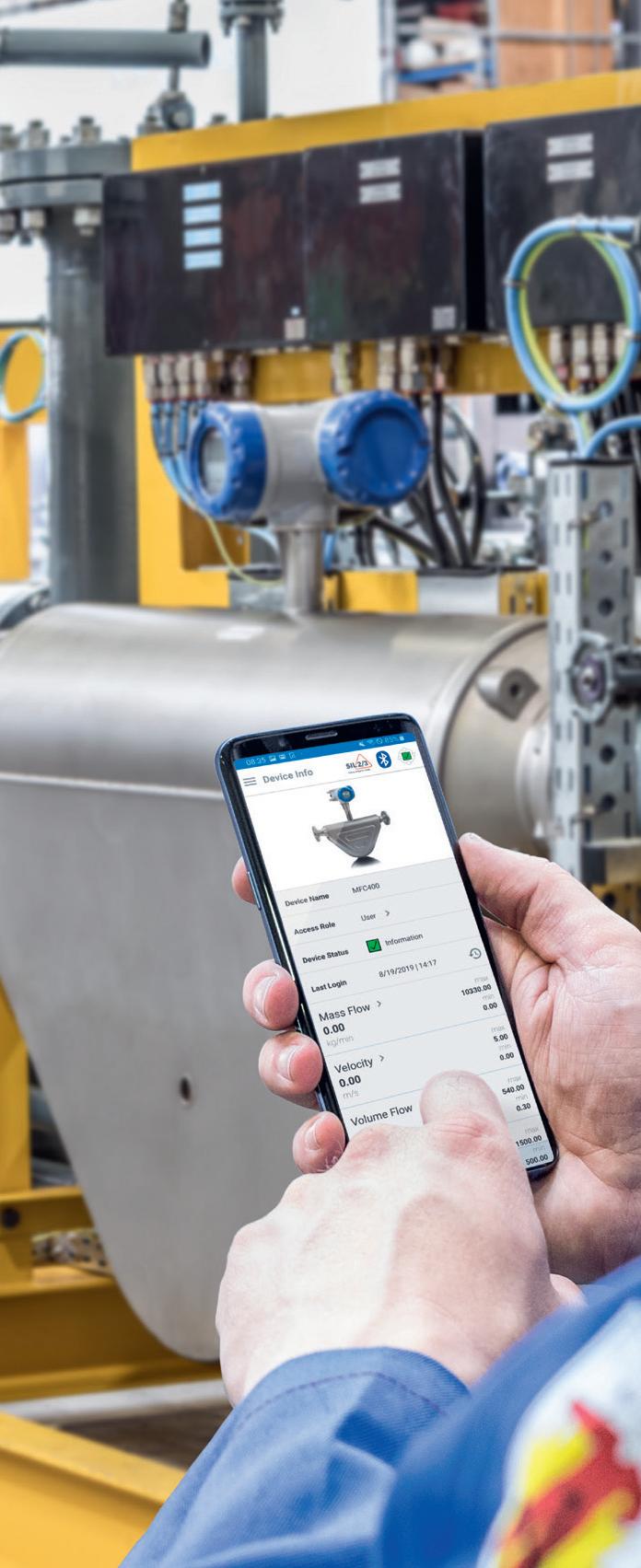

Optimizing processes and maximizing efficiency are important to remaining competitive. We are the partner that helps you master yield, quality, and compliance. With real-time inline insights and close monitoring of crucial parameters, we help manufacturers optimize processes, reduce waste, and increase yield.

Five system integrators show how they provide clients in multiple process industries with suitable and successful cybersecurity protections by Jim Montague

PLANT OF THE YEAR

FieldComm Group's Plant of the Year honorees illustrate the progress made in productivity, efficiency and sustainability by Len Vermillion

System integrator Arthur G. Russell proves new product concepts, designs and development by testing, standardizing, modularizing and partnering by Jim Montague

CONTROL (USPS 4853, ISSN 1049-5541) is published 10x annually (monthly, with combined Jan/Feb and Nov/Dec) by Endeavor Business Media, LLC. 201 N. Main Street, Fifth Floor, Fort Atkinson, WI 53538. Periodicals postage paid at Fort Atkinson, WI, and additional mailing offices. POSTMASTER: Send address changes to CONTROL, PO Box 3257, Northbrook, IL 60065-3257. SUBSCRIPTIONS: Publisher reserves the right to reject non-qualified subscriptions. Subscription prices: U.S. ($120 per year); Canada/Mexico ($250 per year); All other countries ($250 per year). All subscriptions are payable in U.S. funds.

Printed in the USA. Copyright 2024 Endeavor Business Media, LLC. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopies, recordings, or any information storage or retrieval system without permission from the publisher. Endeavor Business Media, LLC does not assume and hereby disclaims any liability to any person or company for any loss or damage caused by errors or omissions in the material herein, regardless of whether such errors result from negligence, accident, or any other cause whatsoever. The views and opinions in the articles herein are not to be taken as official expressions of the publishers, unless so stated. The publishers do not warrant either expressly or by implication, the factual accuracy of the articles herein, nor do they so warrant any views or opinions by the authors of said articles.

Choosing the right sensor doesn’t have to be like solving an advanced algebra equation. VEGAPULS 6X is one radar level sensor for any application, liquids or bulk solids, delivering reliable and precise measurements. Simply provide VEGA with your application details, and they’ll create a VEGAPULS 6X that’s tailored to your needs. The culmination of over 30 years of radar level measurement expertise, THE 6X® is the variable that makes level measurements simple.

Exploring the convergence of industrial process control and space technology

Train for digital transformation

As manufacturers pursue digital transformation, leaders must upskill their workforces

A bridge to the future–if you want it

Justifying and testing new fieldbus concepts

INDUSTRY PERSPECTIVE

'Boundless automation' two years on

Process automation architecture now resembles the digital world

WITHOUT WIRES

Envision procedural automation in your future

Why it's a natural fit for normal but infrequent operations

IN PROCESS

Mary Kay O'Connor Center safe at home

Also, Aveva World tackles big digitalization

Mobilizing thin-client management

Pepperl+Fuchs and Rockwell Automation debut ThinManager-ready tablets

RESOURCES

Calibration instills confidence

Control's monthly resources guide

ASK THE EXPERTS

Economics of pumping stations

How does changing speeds affect pump efficiency?

ROUNDUP

Building blocks (and I/O) multiply links

I/O modules and terminal blocks provide new sizes, signals, networking and flexibility

CONTROL TALK

Keys to successful migration projects

How to get the most out of control system migration projects

CONTROL REPORT

Embrace boring

Election judging parallels process control and automation

Len Vermillion, lvermillion@endeavorb2b.com

Jim Montague, jmontague@endeavorb2b.com

Madison Ratcliff, mratcliff@endeavorb2b.com

Béla Lipták, Greg McMillan, Ian Verhappen

Exploring the convergence of industrial process control and space technology

HOUSTON, Texas, seemed like the perfect place to talk about outer space. Space City, after all, is home to mission control at NASA's Johnson Space Center, and has been the central point for decades of space exploration. But this was an industrial process control event, a largely terrestrial endeavor, so what was there to discuss about “out there”? Turns out, plenty.

Space Day at Yokogawa’s YNOW 2024 users’ conference piqued the interest of process control engineers and executives eager for collaborative opportunities. There’s opportunity and incentive for the process and space industries to learn from each other. Remember, “the needs of the many outweigh the needs of the few.” Spock would be proud. Process control conferences these days tend to focus on autonomous operations. Space is the ultimate use case for autonomous technologies. “Space is the pinnacle of remote operations. Everything we talk about in terms of making things secure, keeping workers safe, autonomous operations is applicable to space,” said Eugene Spiropoulos, senior technology strategist at Yokogawa, during a lively panel on IT/OT technology in space.

LEN VERMILLION Editor-in-Chief lvermillion@endeavorb2b.com

Rita Fitzgerald, rfitzgerald@endeavorb2b.com

Jennifer George, jgeorge@endeavorb2b.com

Operations Manager / Subscription requests Lori Goldberg, lgoldberg@endeavorb2b.com

VP/Market Leader - Engineering Design & Automation Group

Keith Larson

630-625-1129, klarson@endeavorb2b.com

Group Sales Director

Amy Loria

352-873-4288, aloria@endeavorb2b.com

Account Manager

Greg Zamin

704-256-5433, gzamin@endeavorb2b.com

Account Manager

Kurt Belisle

815-549-1034, kbelisle@endeavorb2b.com

Account Manager

Jeff Mylin

847-533-9789, jmylin@endeavorb2b.com

Subscriptions

Local: 847-559-7598

Toll free: 877-382-9187

Control@omeda.com

Jesse H. Neal Award Winner & Three Time Finalist

Two Time ASBPE Magazine of the Year Finalist

Dozens of ASBPE Excellence in Graphics and Editorial Excellence Awards

Four Time Winner Ozzie Awards for Graphics Excellence

However, he and fellow panelists pointed out that the convergence of space tech and industrial controls is bidirectional. Andrea Course, digital innovation program manager at Shell, flipped the discussion when she asked, “What can we learn in space that we can use here on Earth?”

While the resulting autonomy and efficiency should be enough to make business leaders salivate, engineers are aware that mirroring the two types of technologies will take some work. For it to happen, there must be more investment in intelligent devices and automation in the process industries. The biggest challenge of adapting space tech for use on Earth is that products are built specifically for the demands of space. “So you have to get creative,” said Wogbe Ofari, founder and chief strategist at WRX Companies. Engineers are problem solvers, and just as they once cracked the codes to get men to the moon 55 years ago, adopting technology built specifically for the demands of space and converting it for a refinery, deepwater drilling, or a chemical processing plant is well within our reach.

So maybe it’s time to reach from the stars.

"There’s opportunity and incentive for the process and space industries to learn from each other."

DUSTIN JOHNSON

Chief Technology Officer, Seeq

"Companies that invest in modern technologies and upskilling can expect measurable improvements in productivity and efficiency, while also attracting and retaining a more motivated workforce."

As manufacturers worldwide pursue digital transformation, leaders must upskill their workforces

AFTER many digital transformation projects, some successfully adopted and others stuck in pilot purgatory, manufacturers in many industries are recognizing that success requires more than adopting new technologies. It should be no surprise that the technical parts of digital transformation are far less important than its business and human aspects. In fact, the most important technological consideration is how well a technology supports people in adopting new practices and behaviors in pursuit of efficient workflows and increased business value.

Despite this understanding, many business leaders still initiate digital transformation programs without clearly outlining and communicating the rationale, business impacts or nature of changes and the steps for achieving them. They also commonly overlook the upskilling required to empower their workforce to use these new technologies, risking not only a subpar return on investment, but also contributing to today’s widening skills gaps.

The most forward-thinking organizations invest in innovative technologies, such as advanced analytics and generative artificial intelligence (genAI), which improve workflow efficiency and the use of operations data and manufacturing insights, while also providing industry-relevant, just-in-time learning on demand. By pairing digital transformation with user empowerment, these companies generate insights and shorten the learning curve for understanding them, yielding better business results while easing the work of the personnel who perform it.

The shortage of skilled labor across the industrial sector presents a fundamental challenge for organizations implementing long-term digital transformation. While the most knowledgeable employees are retiring, industry is simultaneously losing talent to more popular fields that address evolving employee expectations more effectively. According to the World Economic Forum's "Future of Jobs Report 2023," 60% of workers will require training before 2027, but only half have access to adequate training opportunities. The same report found that, in all industries collectively, training workers to use AI and big data ranks third among company upskilling priorities over the next five years.

Cross-industry surveys find large salaries are no longer enough to retain employees. Workers recognize that longevity in a sector and their future employability depend on access to and successful adoption of new technologies. According to Deloitte, manufacturing employees are nearly three times less likely to leave an organization in the next year if they believe they can acquire skills that are important for the future. Deloitte also predicts that nearly 2 million manufacturing jobs could remain unfilled if organizations don’t address these skills gaps.

Successful digital transformation requires personnel to use new tools effectively to improve workflows. Companies that invest in modern technologies and upskilling can expect measurable improvements in productivity and efficiency, while also attracting and retaining a more motivated workforce.

For industrial organizations, modern advanced analytics platforms are optimized to connect disparate data sources, so users can seamlessly combine and interrogate information regardless of its origin. These platforms provide a combination of intuitive, self-service tools for data cleansing, time-stamp alignment and contextualization, empowering subject matter experts (SMEs) to quickly derive reliable insights referencing all available data. With live connectivity built into the software, SMEs can apply their analyses to near-real-time data, whether it’s stored in the cloud or onsite.
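To make “data cleansing” and “time-stamp alignment” concrete, here is a minimal Python sketch, not a description of how any particular analytics platform works. Two hypothetical tags (TI-101 and FI-202) logged at different, irregular times are first stripped of missing and implausible readings, then linearly interpolated onto one shared time grid so each row holds values that can be compared directly. The tag names, plausibility limits and the choice of linear interpolation are all assumptions for illustration.

```python
from bisect import bisect_right
from typing import Optional

Sample = tuple[float, Optional[float]]  # (timestamp in seconds, value or None for bad quality)


def cleanse(samples: list[Sample], lo: float, hi: float) -> list[tuple[float, float]]:
    """Drop missing values and readings outside a plausible [lo, hi] range, then sort by time."""
    return sorted((t, v) for t, v in samples if v is not None and lo <= v <= hi)


def interpolate(series: list[tuple[float, float]], t: float) -> Optional[float]:
    """Linearly interpolate a cleansed, time-sorted series at time t; None outside its time span."""
    if not series or t < series[0][0] or t > series[-1][0]:
        return None
    times = [ts for ts, _ in series]
    i = bisect_right(times, t)  # series[i-1] is at or before t, series[i] is after t
    if i == len(series):  # t is exactly the last timestamp
        return series[-1][1]
    (t0, v0), (t1, v1) = series[i - 1], series[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def align(series_by_tag: dict[str, list[tuple[float, float]]], start: int, stop: int, step: int):
    """Resample every tag onto one shared grid so each row holds simultaneous values."""
    rows = []
    for k in range((stop - start) // step + 1):
        t = start + k * step
        rows.append((t, {tag: interpolate(s, t) for tag, s in series_by_tag.items()}))
    return rows


# Hypothetical raw data: the 12 s reading is missing and the 15 s reading is a spike.
temp = cleanse([(0, 20.0), (7, 27.0), (12, None), (15, 999.0), (20, 40.0)], lo=-50, hi=200)
flow = cleanse([(2, 5.0), (10, 6.0), (18, 7.0)], lo=0, hi=100)
rows = align({"TI-101": temp, "FI-202": flow}, start=0, stop=20, step=5)
for row in rows:
    print(row)
```

Linear interpolation is only one option; last-value (step) interpolation often suits discrete signals such as valve states, so a tool would typically let the SME choose the method per tag.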

Access to these platforms lets users make tangible impacts in their organizations. For example, they can optimize operational efficiency and increase uptime, conduct root-cause analyses and mitigate issues, and/or monitor sustainability key performance indicators (KPIs) in real time to decrease waste and emissions. Despite the relative ease of using these tools, companies must ensure their users receive sufficient training in the software’s functions and features, and in general data analytics principles.

Traditionally, a primary hurdle for effective technical training was a lack of time, especially in sustained blocks, to engage in formal training courses, which often required multiple days offsite. Today’s technologies, however, pioneer new ways for industrial organizations to upskill their workforces, improving adoption with more time focused on value-added activities.

Recent investments in genAI demonstrate this new approach. GenAI lets workers obtain faster results while reducing the need for highly technical education and training. For example, engineers without formal training in programming languages can benefit from embedded and standalone genAI solutions, which lower the barrier to setting up complex analyses with advanced algorithms. This helps establish better project understanding, and facilitates improved collaboration with data-scientist colleagues.

Organizations must also ensure that users understand fundamental principles of interpretation, such as how to validate results. When enabling more workers to deploy complex machine-learning (ML) algorithms using simple prompts, it’s critical to first give them the knowledge, understanding and ability to evaluate model validity and trustworthiness. It’s essential to pair every new technological deployment with a comprehensive and well-defined training path to ensure efficient and accurate outcomes.

Achieving success with advanced ML techniques and AI implementations requires giving workers sufficient training in these essential areas. Research shows that e-learning paths often yield better results than traditional classroom instruction, emphasizing the importance of access to resources while the work is performed. When delivered as part of an intentional and sustained upskilling program, this capability can also enhance the likelihood of successful adoption.

Lowering the barrier for employees to engage in tasks and approaches that support agile and digitalized workforces often incites organic transformation initiatives at the grassroots, including scaling pilots and even starting new ones. Seeing these direct impacts on the business, in addition to pathways for career growth, drives and sustains personnel’s motivation in their roles, which simultaneously improves worker satisfaction and retention.

One U.S.-based multinational oil-and-gas company created a structured adoption plan to roll out AI-informed, advanced analytics technology across more than 50 sites globally. As adoption increased and experts in the technology emerged, the company followed a strategy known as “train the trainer,” which prioritizes first empowering internal champions who understand where the technology should be implemented. These champions then facilitate training and share tangible successes with new learners in the organization. Thanks to this community-led approach, the company has more than 4,000 unique users per month in the software platform who can identify process anomalies and monitor equipment.

As with all new practices and procedures, fostering successful adoption requires providing staff with the right skills and training. The most successful digital transformation adopters are prioritizing just-in-time learning, empowering their workforces to use innovative technologies, which, in turn, motivates employees to pursue career growth, and push the boundaries of what’s possible for the business.

JOHN REZABEK Contributing Editor

JRezabek@ashland.com

"Remote I/O is expensive, and requires complicated and worrisome power strategies to match Foundation Fieldbus’ two-wire power redundancy."

FRANK was among a minority of plant maintenance supervisors who had a large installed base of fieldbus devices, predominantly Foundation Fieldbus. Proficiency in fieldbus was vanishing from his company and suppliers due to retirements and reassignment to the current marketing hype. There was also increasing attrition among his decades-old instruments, many of which had been in service and under power for that entire period.

Frank had a few technicians who were trained and knew the procedure for replacing one device with another that was one or more generations newer. Some nuances needed engineering, such as when control function blocks from early generations needed to be reconfigured with more recent options. However, fieldbus looked like it was going the way of pneumatics because fewer and fewer individuals knew how to help with troubleshooting and repairing it.

All migrations are costly and typically have zero payout. Obsolescence avoidance could arguably make the plant more reliable, but the likely economic impacts he could cite wouldn’t finance the infrastructure change. One of Frank’s manufacturing reps thought he could revert to 4-20 mA/HART devices. That was challenging to accommodate on a one-by-one basis, since there weren’t nearly enough twisted-pair cables in the field to bring in more than a few dozen devices.

Remote I/O is expensive, and requires complicated and worrisome power strategies to match Foundation Fieldbus' two-wire power redundancy. What would he need at the system side to bring in remote I/O, and did he have room for additional cards? It would have to be good for hazardous areas, and he would be gambling on whether his old cable would be adequate for the remote I/O backhaul.

Frank might be intrigued now if he became aware of an effort by the FieldComm Group to describe a concept for migrating Foundation Fieldbus H1 (bit.ly/migratingH1) via the emerging (but far from mainstream) Ethernet Advanced Physical Layer (APL, www.ethernetapl.org). APL is two-wire Ethernet with power that can be deployed in hazardous areas.

Roughly a decade ago, the Fieldbus Foundation merged with the HART Communications Foundation to form the FieldComm Group, and it's been a leading organization developing standards for new field communications. The founding members are the same companies that were devoted to developing and marketing fieldbus, though the players have changed. If you’ve been a subscriber to the fieldbus vision (digital integration of field devices over a twisted-pair bus), you might feel like these technology providers had an obligation to define a way forward.

Foundation Fieldbus H1 was originally conceived with a companion H2 network, which later morphed into high-speed Ethernet (HSE). H2/HSE bridged H1 networks together and transparently supported the fieldbus protocol. But incarnations of this architecture were scarce, and it didn’t win many projects for the DCSs that supported it. Instead, proprietary remote I/O supporting legacy, point-to-point wiring became the solution du jour, thanks largely to support from “big oil” and speedy adoption by EPC firms. At the end of the day, suppliers must sell their wares; it’s how they achieve positive cash flow. This cruel but inexorable equation is why Foundation Fieldbus isn’t prominently promoted in any supplier’s portfolio: it was outshone by simpler technology in proportion to the cost of manufacturing, marketing and support.

It's encouraging that FieldComm Group has invested the time and resources to publish its TR10365 concept for migrating the installed base of fieldbus devices. So I’ll continue next month imagining what sort of choices we’ll be making, and how to justify testing and investing in new concepts.

As the operational technology (OT) world continues its co-option of IT best practices, the architecture of process automation systems is starting to look less like the multi-layer Purdue model of yesteryear and more like that of the rest of the digital world: purpose-built smart devices connected to virtualized networks of local computing power, complemented by essentially unlimited cloud-based support. Emerson announced its own “Boundless Automation” vision of industrial automation, spanning field, edge and cloud, at its Emerson Exchange user group event two years ago. To check in on progress to date, Control sat down with Emerson CTO Peter Zornio for a discussion that also ranged from generative AI to next year’s Emerson Exchange in San Antonio.

Q: Hard to believe, but it's already been two years since you and the rest of the Emerson team unveiled the vision of Boundless Automation at Emerson Exchange 2022 in Grapevine, Texas. To start things off, can you summarize the essential concepts of Boundless Automation and its implications for the industrial enterprise?

A: Boundless Automation is fundamentally Emerson's vision of how we see the next generation of automation technology. It's also our vision for overcoming the issues introduced by how industry has implemented automation solutions these past 25 years. In that paradigm, there's typically a big data source on the production side, most often the automation system, but then there are software applications for reliability, for quality, and now for sustainability being put into place. What typically happens is that each one of those functional departments ends up creating its own silo of data that is tied to the databases and the architecture structures in their own area.

What we believe needs to happen is implementation of a common data fabric that allows you to get at all that data—not just from the automation world, but from those other silos—in a manner that is preintegrated with a common context. So, the majority of your data is there in one fabric, easily accessible by whatever kind of application needs it. This allows you to simultaneously optimize operations across various domains instead of being focused on just one of them. And that's increasingly important to customers as they fight to operate efficiently and cost competitively while increasing production, even as they add sustainability and other metrics to their goals.

Q: The year 2022 also marked Emerson taking a big equity stake in AspenTech, the acquisition of Inmation and an announcement by your CEO Lal Karsanbhai of Emerson’s intent to divest all those commercial and residential units and focus solely on industrial automation. What milestones have been achieved since?

A: Just a year ago, we released what we call the DeltaV Edge, which is a new product in the DeltaV portfolio. It's a node that sits on the DeltaV system and allows DeltaV data to pass through a one-way communications protocol—or even a physical data diode, if you like—to populate another set of processors running edge-based technology. It’s a node that uses what's called HCI, or hyperconverged infrastructure, which is a next-generation version of virtualization. What this node lets us do is create an entire digital twin of the DeltaV system, not just the process data, but alarm data, event data and configurations—essentially the complete context of the DeltaV data on the open edge side of that node.

Once you’re there, you have access to all the more modern edge analysis tools. We added new capabilities for exporting the data via standards like JSON and MQTT, in addition to the OPC standards that we often use in the automation world. Another part of the Boundless Automation vision is allowing control to actually run on edge platforms, instead of only on purpose-built hardware. And we’ll have our first releases of that by the end of the next calendar year.
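The interview doesn't describe DeltaV Edge's message format, so the following is only a hypothetical Python sketch of what exporting a reading together with its context as JSON, addressed to an MQTT topic, can look like. The site/area/unit/tag topic hierarchy, field names and tag values are invented for illustration. The code builds the topic and payload only; actually publishing them would be done with an MQTT client library (for example, paho-mqtt), which isn't shown.

```python
import json
from datetime import datetime, timezone


def build_message(path, tag, value, units, quality, ts, alarm_active=False):
    """Return (topic, JSON payload) for one reading plus its plant context.

    `path` is a hypothetical (site, area, unit) tuple; the hierarchy is an assumption.
    """
    levels = [*path, tag]
    for level in levels:
        # Published MQTT topic names must not contain the '+' or '#' wildcards, and '/'
        # separates levels; empty levels are also rejected here as a local naming rule.
        if not level or any(ch in level for ch in "+#/"):
            raise ValueError(f"invalid topic level: {level!r}")
    topic = "/".join(levels)
    payload = {
        "tag": tag,
        "value": value,
        "units": units,
        "quality": quality,
        "alarm_active": alarm_active,
        "timestamp": ts.astimezone(timezone.utc).isoformat(),
        "context": dict(zip(("site", "area", "unit"), path)),
    }
    return topic, json.dumps(payload, sort_keys=True)


topic, body = build_message(("site-a", "crude", "CDU-1"), "TI-101", 351.2, "degC", "GOOD",
                            datetime(2024, 12, 1, 12, 0, tzinfo=timezone.utc))
print(topic)  # site-a/crude/CDU-1/TI-101
print(body)
```

Carrying the context inside the payload as well as in the topic is a design choice: a subscriber that stores messages in a database keeps the context even after the topic string is gone.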

Q: It’s certainly no secret that the industries Emerson serves are facing a bit of an expertise crisis, with the waves of experienced operators and engineers retiring. What role does artificial intelligence, especially in the form of generative AI, promise to play in complementing the more traditional, analytics-based advisory tools like Plantweb Insight?

A: First, I’d remind your readers that industrial automation was AI long before AI was cool. Within AspenTech especially, the vast majority of their portfolio is around optimization, advanced control, planning and scheduling. That's all built on data models, but also first-principles models used in the design and simulation of process plants. What’s different from the AI we were using 25 years ago is that instead of using a narrowly defined algorithm trained on a specifically relevant set of data, generative AI uses a general-purpose model trained on broad data sets to answer a whole variety of questions.

In terms of the products Emerson develops and the value we can deliver to our customers, I think in terms of three buckets. The first is in the area of customer support: anyone who supplies an industrial or consumer product is working on developing an interactive copilot to guide its users. The second area is in the configuration of products. Industrial products, especially software and systems, require considerable site or application-specific configuration. We actually have a product today that can take configurations of older, competitive control systems and, using AI, quickly translate them into modern configurations for DeltaV. But I think the AI application that stirs everyone's imagination is the idea of an actual operations copilot that you can talk to—an interactive operator or operations advisor that you can ask for input on anything.

Boundless Automation consists of three complementary domains–field, edge and cloud–that work closely together to accomplish control and optimization tasks.

Q: I’m looking forward to connecting in person again next spring when the Exchange community will be gathering in San Antonio from May 19 to 22. Other than moving from what has usually been a fall cadence to the spring, any particular innovations and new things we should look out for?

A: Exchange attendees get to see in action all the things we just talked about—and more. But most of our users who participate say the biggest takeaway is the interaction with the other users they meet there, many of whom are facing the same challenges they are.

IAN VERHAPPEN

Solutions Architect

Willowglen Systems

Ian.Verhappen@willowglensystems.com

"Looking at procedural automation as a logical sequence of steps makes it a natural fit for normal but infrequent operations."

THE definition of procedural automation is the “implementation of a specification of a sequence of tasks with a defined beginning and end that’s intended to accomplish a specific objective on a programmable mechanical, electric or electronic system.” By itself, this doesn’t say much, and you could argue it’s what any control program or application is meant to accomplish. However, the key words are “beginning and end.”

From an operational perspective, a procedure is one or more “implementation modules,” each consisting of a set of ordered tasks to provide plant operations with step-by-step instructions for accomplishing (implementing) and verifying the actions to be performed.

Looking at procedural automation as a logical sequence of steps makes it a natural fit for normal but infrequent operations. This includes startup, shutdown, product change, alarm response, abnormal situation response, complex or repetitive operations, equipment isolation and return to service, and regulatory requirement support.

Two automation styles are used to determine transitions in procedural automation:

• State-based control: a state of a module, such as block valve open/close, PID manual/auto/cascade, and pump run/stop.

• Sequence-based control: a set of actions in which the behavior of a procedure implementation module follows a set of rules with respect to its inputs and outputs.
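The two transition styles can be combined, as in this minimal Python sketch of a hypothetical tank-fill-and-transfer procedure. The procedure itself is sequence-based (steps run in order, from a defined beginning to a defined end), while individual transitions wait either on a module's state (valve open, pump running) or on a process input (tank level). The tags, the toy plant model in `scan()` and the step list are assumptions made for illustration, not taken from any standard or product.

```python
from dataclasses import dataclass
from typing import Callable

Plant = dict  # hypothetical process image: tag name -> current value


@dataclass
class Step:
    name: str
    action: Callable[[Plant], None]      # commands issued when the step starts
    transition: Callable[[Plant], bool]  # condition that must hold before the next step


def command(tag: str, value: str) -> Callable[[Plant], None]:
    """Return an action that writes one command to the (hypothetical) process image."""
    def action(plant: Plant) -> None:
        plant[tag] = value
    return action


def scan(plant: Plant) -> None:
    """Toy one-scan plant model: devices follow their commands; the tank fills 10% per scan while the inlet is open."""
    plant["inlet_valve"] = plant["inlet_valve_cmd"]
    plant["pump"] = plant["pump_cmd"]
    if plant["inlet_valve"] == "OPEN":
        plant["level_pct"] += 10


def run_procedure(steps: list[Step], plant: Plant, max_scans: int = 50) -> list[str]:
    """Sequence-based execution: run steps in order and advance only when each transition is true."""
    completed = []
    for step in steps:
        step.action(plant)
        scans = 0
        while not step.transition(plant):
            if scans >= max_scans:
                raise TimeoutError(f"step {step.name!r} never met its transition condition")
            scan(plant)
            scans += 1
        completed.append(step.name)
    return completed


steps = [
    # State-based transition: wait for the valve module to report OPEN.
    Step("open inlet", command("inlet_valve_cmd", "OPEN"), lambda p: p["inlet_valve"] == "OPEN"),
    # Transition on a process input rather than on a module state.
    Step("fill to 50%", lambda p: None, lambda p: p["level_pct"] >= 50),
    Step("close inlet", command("inlet_valve_cmd", "CLOSED"), lambda p: p["inlet_valve"] == "CLOSED"),
    Step("start pump", command("pump_cmd", "RUN"), lambda p: p["pump"] == "RUN"),
]

plant = {"inlet_valve_cmd": "CLOSED", "inlet_valve": "CLOSED",
         "pump_cmd": "STOP", "pump": "STOP", "level_pct": 0}
completed = run_procedure(steps, plant)
print(completed)  # ['open inlet', 'fill to 50%', 'close inlet', 'start pump']
```

A real implementation would also need holds, aborts and operator acknowledgments; the timeout here merely stands in for that abnormal-path logic.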

There are also three degrees of automation and means of performing each task:

1. Manual—the operator is responsible for command, perform and verify work items.

2. Semi-automated—implementation modules are considered semi-automated, with operators and computers sharing coordinated responsibility for command, perform and verify work items.

3. Fully automated—implementation modules are considered fully automated when the computer is responsible for the bulk of the command.
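The three degrees of automation differ mainly in who owns the command, perform and verify work items. This hypothetical Python sketch walks a single task through those items and holds the task whenever an operator-owned item hasn't been confirmed. The column says only that semi-automated modules share responsibility, so the particular split in `OWNER` is an assumption, as are the task name and the confirmation callback.

```python
from enum import Enum
from typing import Callable


class Degree(Enum):
    MANUAL = "manual"
    SEMI_AUTOMATED = "semi-automated"
    FULLY_AUTOMATED = "fully automated"


WORK_ITEMS = ("command", "perform", "verify")

# Who owns each work item. The split for SEMI_AUTOMATED is a hypothetical example,
# not a standard assignment; other splits are equally valid.
OWNER = {
    Degree.MANUAL: {"command": "operator", "perform": "operator", "verify": "operator"},
    Degree.SEMI_AUTOMATED: {"command": "operator", "perform": "computer", "verify": "computer"},
    Degree.FULLY_AUTOMATED: {"command": "computer", "perform": "computer", "verify": "computer"},
}


def execute_task(task: str, degree: Degree, operator_done: Callable[[str, str], bool]):
    """Walk one task through command, perform and verify; hold if an operator item isn't done."""
    trail = []
    for item in WORK_ITEMS:
        owner = OWNER[degree][item]
        if owner == "operator" and not operator_done(task, item):
            trail.append((item, owner, "held"))
            break
        trail.append((item, owner, "done"))
    return trail


semi = execute_task("open XV-101", Degree.SEMI_AUTOMATED, lambda task, item: True)
print(semi)
```

The resulting trail doubles as an audit record of who did what, which is one way automated procedures can support the regulatory and training benefits listed below.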

Implementing and maintaining procedural automation also comes with a cost, so there must be a corresponding benefit. A representative list of benefits arising from procedural automation includes:

• Improved safety performance—automating procedures and utilizing state awareness for alarm management reduces the workload on the operations staff during abnormal conditions, which reduces the probability of human error.

• Improved reliability—automated procedures can aid in maintaining maximum production rates, minimizing recovery time and avoiding shutdowns.

• Reduced losses from operator errors—automating procedures enables operations staff to standardize operating procedures. A standardized approach reduces the likelihood of human error contributing to abnormal conditions, and reduces the time required to recover from abnormal conditions.

• Increased production by improving startups and shutdowns—operations benefit by achieving faster, safer and more consistent startup and shutdown of processes.

• Increased production and quality via efficient transitions—automated procedures move the process from one condition to another during normal operations with reduced variability and in less time.

• Improved operator effectiveness—reduces the time an operator spends carrying out repetitive tasks.

• Higher retention and improved dissemination of knowledge—automated procedures can be used to retain the knowledge of the process.

• Improved training—as knowledge and best practices are captured into automated procedures, the resulting documentation and code can be used for training new operators about processes.

Safety and risk conference draws close to 400 visitors to numerous presentations

BUILDING on the momentum of its recent in-person and virtual events, the Mary Kay O’Connor Process Safety Center (MKOPSC) at Texas A&M University’s (TAMU) Engineering Experiment Station (TEES) attracted close to 400 attendees and 30 exhibitors at its Safety and Risk Conference 2024 (mkosymposium.tamu.edu) on Oct. 21-24 in College Station, Tex. The event was co-located with the 79th annual Instrumentation and Automation Symposium and the first Ocean Energy Safety Day.

From among the event’s many presentations and exhibits, MKOPSC’s steering committee also selected several award winners, including:

• Harry West service award to Mark Slezak, process safety and risk engineering manager at Oxy;

• Lamiya Zahin memorial safety award to Austin Johnes, graduate student at TAMU;

• Best paper award to Ganesh Mohan, process safety and risk manager at Chevron;

• Most innovative booth award to Oxford Flow;

• Best overall booth to ProLytx Engineering; and

• Best booth honorable mention to Softek Engineering.

Steve Horsch, technical leader at Dow’s 58-year-old Reactive Chemicals Group, presented “Using calorimetry to understand reactive chemical hazards,” and reported most users are aware they need to know reactions and rates to avoid containment losses when manufacturing chemicals, but few know about more subtle measurement nuances that can alter those reactions and rates.

For example, a simple equation can express the heat measured by accelerating rate calorimetry (ARC), but more complex calculations are needed to determine constant-volume heat capacity. “Likewise, if a user needs to know how much energy is coming from the heat of a reaction, the real-world process may be producing 315 joules per gram (J/g), while the ARC only shows 240-260 J/g, which means the resulting time requirements will be off,” explained Horsch. “This is why it’s crucial to examine what’s providing data, where it’s coming from, and whether it matches your actual scenario. Reaction kinetics from an ARC can be corrected using Fisher’s equation, but there may also be more complex reaction kinetics due to external heat pooling, which is a big-deal nuance. It’s not easy to collect the right data, but if you don’t have it, then you won’t get the right result. ARC can provide a lot of information to determine safe operating limits, but its limits must also be understood.”
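One common reason ARC numbers come in low is thermal inertia: part of the reaction heat warms the sample container (the “bomb”) instead of the sample. The sketch below illustrates the widely used phi-factor correction for that effect. It is a swapped-in illustration, not the Fisher kinetic correction Horsch cited, and it assumes the sample and bomb share one temperature, constant heat capacities, and hypothetical masses and heat capacities.

```python
def phi_factor(m_sample_g: float, cp_sample: float, m_bomb_g: float, cp_bomb: float) -> float:
    """Thermal inertia factor: phi = 1 + (m_bomb * cp_bomb) / (m_sample * cp_sample).

    Heat capacities are in J/(g*K); masses are in grams.
    """
    return 1.0 + (m_bomb_g * cp_bomb) / (m_sample_g * cp_sample)


def specific_heat_release(delta_t_obs_k: float, cp_sample: float, phi: float = 1.0) -> float:
    """Heat released per gram of sample (J/g) from an observed temperature rise.

    With phi = 1 the heat absorbed by the bomb is ignored, so the result is low.
    """
    return phi * cp_sample * delta_t_obs_k


# Hypothetical run: 5 g sample (cp 2.0 J/g/K) in a 10 g titanium bomb (cp ~0.52 J/g/K),
# with an 80 K temperature rise recorded by the instrument.
phi = phi_factor(m_sample_g=5.0, cp_sample=2.0, m_bomb_g=10.0, cp_bomb=0.52)
naive = specific_heat_release(80.0, cp_sample=2.0)
corrected = specific_heat_release(80.0, cp_sample=2.0, phi=phi)

print(f"phi = {phi:.2f}")                     # phi = 1.52
print(f"uncorrected: {naive:.1f} J/g")        # uncorrected: 160.0 J/g
print(f"phi-corrected: {corrected:.1f} J/g")  # phi-corrected: 243.2 J/g
```

The heavier the bomb relative to the sample, the larger phi becomes, so an uncorrected number can understate the energy a full-scale process would release, which is one way the time estimates Horsch mentions can end up off.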

Faisal Khan, director of the Mary Kay O’Connor Process Safety Center (MKOPSC) at Texas A&M University’s (TAMU) Engineering Experiment Station (TEES), introduces the speakers at its Safety and Risk Conference 2024 on Oct. 21-24 in College Station, Tex. Source: Jim Montague

In her presentation, “Learnings and improvements in process safety,” Bridget Todd, VP of enterprise health, safety and environment (HSE) at Baker Hughes, reported that its Enterprise Risk Avoidance program includes KPIs that can be tracked quarterly, reported back to managers, and contribute to high-level HSE audits. To reach managers and get them to follow up on these practices, the risk avoidance program also requires competence evaluations to make sure participants are doing their jobs capably and safely.

For instance, Todd explained that one Baker Hughes client is an artificial lift team that was experiencing some communications and management problems, and found it had gaps in documenting its pump performance and pressure parameters. It subsequently engaged more closely with its front-line staff on risks and mitigations, and reminded all players why checking and documentation is important.

“Open, common metrics showed leading and lagging indicators, but they also demonstrated training compliance and engagement levels,” concluded Todd. “This enabled tighter governance of all their process safety tasks.”

For expanded coverage, visit www.controlglobal.com/MKOPSC2024

With more than 3,800 visitors onsite and 1,000 more live-streaming online, Aveva World 2024 in Paris on Oct. 14-16 was reported to be the largest event in its history—all focused on overcoming barriers to using industrial software and digitalization to bring real results and value to the physical world.

“We can’t share data among applications and users by continuing to work in silos. That era has to be over, especially in the world of industrial software,” said Caspar Herzberg, CEO at Aveva (www.aveva.com), which is now wholly owned by Schneider Electric (www.se.com). “The two big changes lately are the scale and nature of changes occurring, such as climate change. Most people don’t understand how bad it’s gotten, but the solution is humanity collaborating across borders and industries.”

For instance, Norway-based Elkem (www.elkem.com) operates 15 plants and 19 control centers to produce its silicon-based products. Over the years it has adopted several Aveva software packages, such as Unified Operations Center (UOC) for aggregating and displaying processes on the plant floor at Elkem’s Bremanger facility.

“We can analyze complex data from Aveva’s applications on one platform, and integrate results from competitors’ software, too,” added Herzberg. “This drives efficiency more quickly, so users can cut emissions sooner, and develop the faith and confidence they need to collaborate.”

Jean-Pascal Tricoire, chairman of Schneider Electric; Matei Zaharia, professor at the University of California, Berkeley and CTO of Databricks; and Caspar Herzberg, CEO at Aveva, compare notes on large-scale data analytics at Aveva World 2024 on Oct. 14-16 in Paris. Source: Jim Montague

Rob McGreevy, chief product officer at Aveva, reported that multiple early adopters are already using the Connect platform and AI to optimize their processes. These users include:

• Talison Lithium produces 40% of the world’s hard-rock lithium, and it’s employing Connect to reduce energy costs, improve efficiency and yield, increase uptime and reliability, and empower its workforce.

• etap and Red Sea Resorts are using Connect on renewable energy projects to maximize utilization, reduce energy losses, and improve reliability.

• BAE Systems and Visony Production use Connect to enable real-time operations on floating production, storage and offloading (FPSO) vessels, such as optimizing fuel consumption to save £35-140 million.

“Applying AI to the SCADA data from an FPSO’s pumps can show vibrations and current draws more easily,” added McGreevy. “Connect can also provide digital twins, including 3D models of pumps on the ship, and what devices need to be fixed.”

This unified platform is likely to combine technologies from Aveva, Schneider Electric and Databricks (www.databricks.com), which are planning to release a joint solution in January 2025. In fact, Herzberg’s opening-day keynote was punctuated by an onstage discussion with Jean-Pascal Tricoire, chairman of Schneider Electric, and Matei Zaharia, professor at the University of California, Berkeley and CTO of Databricks, which supplies an AI-enabled, data-intelligence platform.

“The two big items that come up with customers lately are digitalization and decarbonization, but these efforts require partnerships to get their data right,” said Tricoire. “Digitalization is constantly shifting the human role, not to mention the way we live, so how can the C-level convince users that adopting it will let them do what they couldn’t do before?”

Zaharia added, “AI makes massive-scale deep learning possible, which can generate better-quality data, results and decisions. We’re excited to see how the new Aveva Connect unified, cloud-based industrial intelligence platform will take on challenges like sustainability.”

• Emerson (www.emerson.com) announced Oct. 16 that it will provide pressure and temperature transmitters, ultrasonic gas leak detectors, pressure regulators, pressure safety valves and hydrogen expertise to HyIS-one’s new hydrogen refueling and storage facility in Busan, South Korea, which is reportedly the nation’s largest hydrogen refueling station for commercial vehicles, such as trucks and buses. The completed station will be able to store up to 1.5 tons of pressurized hydrogen, with a throughput of 350 kilograms per hour, or the capacity to charge more than 200 vehicles per day.

• Seeq (www.seeq.com) reported Oct. 16 that it’s natively integrating its data analytics software with Aveva’s (www.aveva.com) Connect industrial intelligence software to simplify access to operational data in context, and enable users to improve their operations and production.

• Ametek Inc. (www.ametek.com) reported Oct. 31 that it’s acquired Virtek Vision International (virtekvision.com), which provides laser-based projection and inspection systems. Virtek will join Ametek’s Electronic Instruments Group (EIG), and enable a wider range of automated, 3D scanning and inspection products by its Creaform (creaform3d.com) division.

• Following its recent acquisition of Senix Corp. (www.senix.com), BinMaster (www.binmaster.com) reported Oct. 24 that it will manufacture and market its own line of ultrasonic distance and liquid-level sensors. The former Senix Ultrasonics line of ToughSonic TS-100 and TS-200 noncontact ultrasonic sensors will be offered in general-purpose and chemical-resistant models suitable for measuring distances up to 50 feet.

• Obrist Group (www.obrist.at) announced Oct. 30 that it’s licensing its pool of more than 252 filed and 128 granted worldwide patents to developers of sustainable energy solutions. Its intellectual property portfolio spans a range of technologies from electric vehicles with ranges exceeding 1,000 kilometers to synthetic fuels to large-scale facilities for converting solar energy into hydrogen and methanol.

• To expand further into Canada, Motion Industries Inc. (www.motion.com) agreed Oct. 29 to acquire Stoney Creek Hydraulics (www.schydraulics.ca), which specializes in precision hydraulic and pneumatic cylinder manufacturing and repairs.

Five system integrators show how they provide clients in multiple process industries with suitable and successful cybersecurity protections

by Jim Montague

BECAUSE the process industries and their control applications are famously diverse, it might be foolish to think they can learn much from each other. They feature starkly different processes and priorities, so they also face different cyber-threats and responses based on those priorities. However, as their users concentrate more intently on cybersecurity—and as they increasingly digitalize—common themes emerge that let them sharpen their focus on best practices they all can use.

“We still see many clients who aren’t adopting network segmentation to the level we'd like, so we’re still blocking and tackling,” says Scott Christensen, cybersecurity practice director at GrayMatter (graymattersystems.com), an industrial technology solutions consultant in Pittsburgh, Pa., and a certified member of the Control System Integrators Association (CSIA, www.controlsys.org). “This is changing priorities because users are putting visibility into their systems first, asking what assets they own, what PLCs do they have where, and how can they add value by operating more securely?”

Over the years, GrayMatter has helped about 1,500 water utilities implement their PLCs, SCADA/HMI systems, control panels and predictive analytics. However, many remain woefully underfunded and understaffed when it comes to cybersecurity. Some help may come to plant floors from cool, new cybersecurity tools like zero-trust strategies that are emerging on the information technology (IT) side. However, some system integrators report these promised blessings have yet to show up on the operations technology (OT) side. The two main reasons are that many process-industry manufacturers are adopting more basic cybersecurity measures first, and they remain reluctant to cede control of their networks to software-based functions.

“Zero-trust is still new, but it’s getting popular among IT users because it lets them more easily identify and decide who can join their networks, allow functions they do need, and block what they don’t need,” says John Peck, OT security manager at Gray Solutions LLC (graysolutions.com), a CSIA-certified system integrator in Lexington, Ky. “Zero-trust isn’t on the OT side yet because many users are concerned it and other recent cybersecurity tools could cause outages and safety issues, including unplanned shutdowns of their processes and equipment.”

Even though cybersecurity begins with asset awareness, Christensen reports many of the asset inventories it depends on are old, incomplete, and lack enough data about device behaviors. For example, GrayMatter recently worked with a large liquefied natural gas (LNG) facility that had plenty of cybersecurity capabilities, including costly firewalls, intrusion detection, and OT visibility tools. However, within the first few minutes of GrayMatter’s visit, it found five PLCs that had been trying to dial Russian IP addresses for about six months. Christensen reports that GrayMatter remediated this situation by increasing visibility into the LNG company’s assets and their behavior, and tuning its baseline intrusion detection for greater accuracy.

“We evaluated what worked best in this situation, and for this LNG application, what proved to be the most useful was contextual filtering right below its perimeter firewall and in front of its assets, groups and facilities,” says Christensen. “This type of filtering detects behaviors outside of its baseline such as geofencing, and only allows traffic that originates from or is destined for the U.S. or other predefined locations. It establishes rules that authorize participants to join my network. All other traffic is blocked. In fact, we’ve learned that 60% of network traffic is usually white noise, and contextual filtering drops this out, too, which also improves latency, bandwidth and flexibility. I think the biggest lesson for everyone is that no one is running an industrial network security program that checks all the boxes. There’s always room to improve.”
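Christensen’s contextual filtering can be pictured as a deny-by-default check on both endpoints of every flow. This is a minimal sketch, not GrayMatter’s implementation: it assumes a hypothetical address-to-location table built from RFC 5737 documentation ranges and a hypothetical 10.0.0.0/8 plant network, and it reads “originates from or is destined for” as requiring every external endpoint to sit in an allowed location. A real filter would use the appliance’s own geolocation data.

```python
import ipaddress
from typing import Optional

# Hypothetical plant-side address space; anything inside it counts as local.
INTERNAL_NETS = [ipaddress.ip_network("10.0.0.0/8")]

# Hypothetical IP-to-location table built from RFC 5737 documentation ranges.
# A real filter would consult a maintained geolocation feed instead.
GEO_TABLE = {
    ipaddress.ip_network("192.0.2.0/24"): "US",
    ipaddress.ip_network("198.51.100.0/24"): "CA",
    ipaddress.ip_network("203.0.113.0/24"): "RU",
}

ALLOWED_LOCATIONS = {"US", "CA"}


def locate(addr: str) -> Optional[str]:
    """Return 'INTERNAL', a location code, or None if the address is unknown."""
    ip = ipaddress.ip_address(addr)
    if any(ip in net for net in INTERNAL_NETS):
        return "INTERNAL"
    for net, location in GEO_TABLE.items():
        if ip in net:
            return location
    return None


def permit(src: str, dst: str) -> bool:
    """Deny by default: every endpoint must be internal or in an allowed location."""
    for endpoint in (src, dst):
        location = locate(endpoint)
        if location != "INTERNAL" and location not in ALLOWED_LOCATIONS:
            return False
    return True


print(permit("10.1.2.3", "192.0.2.10"))   # True: PLC to a (hypothetical) U.S. historian
print(permit("10.1.2.3", "203.0.113.7"))  # False: destination outside the allow list
print(permit("10.1.2.3", "8.8.8.8"))      # False: unknown location, so denied
```

Under these rules, an internal PLC dialing an address mapped outside the allowed locations is dropped no matter which side starts the conversation, and unknown addresses are denied rather than trusted.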

One of the most useful ways users can determine the right cybersecurity approach is learning about its increasingly lengthy history and how more recent events continue to affect it. For example, the threats that began with early malware like Stuxnet, Triton, NotPetya and many others continued to evolve in the wake of seemingly unrelated world events like the COVID-19 pandemic and Russia’s invasion of Ukraine and the ensuing war.

“The pandemic drastically increased remote connections into operating environments, and initially many weren’t done using the most secure practices,” says Larry Grate, business development director for OT infrastructure and security at Eosys Group (www.eosysgroup.com), a CSIA-certified system integrator in Nashville, Tenn. “Most were driven by the need to support operations without the risk of having employees physically come into manufacturing environments. However, during the past few years, we’ve seen a lot of work on remediation and securing remote access.”

Eosys works mostly in the food and beverage, chemicals, pulp and paper, and steel industries, but it’s also a member of the Manufacturing Information Sharing and Analysis Center (mfgISAC.org), which is a cybersecurity threat-awareness and mitigation community for small, medium and enterprise-level manufacturers. Grate recommends joining MfgISAC, as well as Dragos’ Operations Technology-Cyber Emergency Readiness Team (dragos.com/community/ot-cert), which provides free policy and procedure templates, intelligence sharing and cybersecurity best practices for perimeter defenses and other protections.

Now that they’ve been around for more than a few years, many of the best-known cybersecurity standards and guidelines have been added to, updated and refreshed a few times. Here’s their present status:

ISA/IEC 62443—Several sections of the standard have been revamped or introduced recently. They include:

• 62443-2-1, which was published in August, and covers cybersecurity program policies, requirements and procedures for industrial automation and control system (IACS) asset owners;

• 62443-3-3, which revised its guidance on the system security requirements and capabilities needed to construct an IACS that meets security level targets, and shows users how to gauge their progress; and

• 62443-4-2, which further refined its cybersecurity requirements for components in control systems described in ISA/IEC 62443-3-3, as well as its emphasis on secure development lifecycles.

NIST Cybersecurity Framework (CSF) 2.0 and its supplementary resources were launched in February, following a multiyear update. It explicitly aims to help all organizations manage and reduce risk, not just those in its original target audience of critical infrastructure. CSF 2.0 also updated its core guidance, and created a suite of resources to help organizations achieve their cybersecurity goals, with added emphasis on governance and supply chains. In addition, NIST published its Special Publication (SP) 800-50r1 (Revision 1), “Building a cybersecurity and privacy learning program,” which provides updated guidance for developing and managing a robust cybersecurity and privacy learning program in the federal government.

EU NIS2 Directive—On Oct. 16, the European Union (EU) adopted its first rules on implementing cybersecurity for critical entities and networks as part of its directive on measures for a high common level of cybersecurity across the EU. The NIS2 Directive also details cybersecurity risk-management measures, and specifies when an incident should be considered significant enough to be reported to national authorities.

Once physical and network perimeters are established and evaluated, and assets and configurations within them are inventoried, Grate reports users must develop an incident-response and disaster-recovery plan for every cyber-threat they expect to face. “There are two main playbooks for today’s cyber-attacks. The first is how to respond to ransomware by separating networks and recovering equipment,” explains Grate. “The second is being able to disconnect and island operations, similar to how IT does it, and creating temporary air gaps when necessary.”

Japanese oil refiner Idemitsu Kosan Co., Ltd., recently updated its plant information management systems to protect against cyber-attacks. It had been using legacy OPC networking to share data between onsite control systems, its process information management system (PIMS) and its business intelligence (BI) software that analyzes operations data. However, this communication strategy required firewall ports on the periphery of the system to be left open, which constituted a serious vulnerability. Consequently, Idemitsu Kosan replaced the PIMS with help from Azbil Corp., and implemented networking with OPC Unified Architecture (UA) protocol, which restricted ports and improved the company’s cybersecurity. Azbil provided its total plant information management system, which features long-term accumulation and sharing of operations data. In fact, the new network lets the BI software extract and display six months of data in 10 seconds, rather than the 90 seconds it used to require.

Source: Azbil

For instance, Eosys recently worked with a Tier 1 automotive parts manufacturer that previously had isolated islands of automation, but subsequently allowed a direct connection between its plant and enterprise networks. This caused it to suffer a compromise incident during which its IT staff could see callouts from ransomware on the OT side. “This automotive client tried to keep running in an islanded state until they could address their outage, remove the ransomware, reload their HMIs and other equipment, and start back up,” adds Grate. “Their system had to run for six months with this malware, which had used an encrypted key to gain entry. During that time, they couldn’t use any of their crippled devices.”

Once the ransomware was removed and the automotive client was fully operational again, Grate reports it and Eosys drafted an incident-response plan, which included creating a defensible architecture based on network segmentation with zones and conduits advocated by the ISA/IEC 62443 cybersecurity standard. They also started authenticating users and passwords with an active-directory server (ADS), which identifies individuals based on their job roles. Finally, they built an asset inventory with vulnerability data, initiated network traffic evaluation, and adopted a zero-trust strategy, which is similar to whitelisting, but creates micro-perimeters that allow more granular segmentation than individual work cells, though not as granular as individual devices.

“This allows more precise segmentation by risk, and lets users define their risk appetite,” explains Grate. “Users are typically OK with accepting some risk. If a process is low-risk, they can segment it by each process work cell or larger. If a process is high-risk, they can segment closer down to the devices. Once these risk levels are established, users can begin to implement secure, remote access by using multi-factor authentication.”
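Grate’s rule of thumb, coarse zones for low-risk processes and device-level zones for high-risk ones, can be sketched as a function that groups an asset inventory into zones. The two-level risk scale, the zone naming and the assets are assumptions for illustration only; actual ISA/IEC 62443 zone design also weighs conduits, target security levels and other factors this ignores.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Asset = Tuple[str, str, str]  # (device, work cell, risk rating)

# Hypothetical asset inventory with risk ratings from a cyber risk assessment.
ASSETS: List[Asset] = [
    ("plc-101", "cell-A", "low"),
    ("hmi-101", "cell-A", "low"),
    ("plc-201", "cell-B", "high"),
    ("vfd-201", "cell-B", "high"),
]


def build_zones(assets: List[Asset]) -> Dict[str, List[str]]:
    """Low-risk assets share a zone per work cell; high-risk assets each get their own."""
    zones: Dict[str, List[str]] = defaultdict(list)
    for device, cell, risk in assets:
        zone = f"{cell}/{device}" if risk == "high" else cell
        zones[zone].append(device)
    return dict(zones)


for zone, members in build_zones(ASSETS).items():
    print(zone, members)
# cell-A ['plc-101', 'hmi-101']
# cell-B/plc-201 ['plc-201']
# cell-B/vfd-201 ['vfd-201']
```

Raising a cell’s risk rating splits it into smaller zones, which is the trade-off between risk appetite and segmentation effort that Grate describes.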

Years and decades of reliable service make legacy equipment and systems familiar, but that doesn’t make them cybersecure. In fact, older devices are typically more vulnerable than newer ones, which makes it especially important for them to be part of any thorough cybersecurity risk assessment (cyber-RA).

Andrew Harris, business development director for controls and instrumentation at ACS (www.acscm.com), a CSIA-member system integrator in Verona, Wis., near Madison, reports it’s vital for users to conduct a cyber-RA and get a third-party intrusion test, which identifies their vulnerabilities, and shows where they need to upgrade and protect their devices and networks. After that, they need to do a new cyber-RA every five years during the life of their machines to address new devices and connections that have likely been added in the interim.

For instance, ACS recently helped a large automotive manufacturer upgrade 28 internal combustion engine (ICE) test cells as part of a larger facility modernization (www.acscm.com/projects/facility-upgrade-internal-combustion-engine-test-cells). These cells use dynamometers, outdated PLCs and a data acquisition (DAQ) system to run R&D cycle calibrations, validate engine performance prior to production, and generate critical, proprietary data. However, they ran on aging technology, while replacement parts were increasingly hard to find, and only one software engineer with the expertise required by its custom, legacy DAQ system remained on staff. The cells were also limited in the testing they could support, requiring units under test to be moved to different locations onsite to finish testing.

To upgrade the cells in place without impeding ongoing testing operations, ACS and its client scanned the available 116,000-square-ft space, allocated 30,000 square feet for the new testing space, and implemented phased replacements of PLCs and a DAQ system for the 28 ICE test cells in ACS’ custom-built cabinets, which interface with all of the automaker’s existing facility and test systems. ACS integrated the entire system into its customized design package, and subsequently improved the reliability and accuracy of test results.

Likewise, upgraded software enables the automaker’s PLCs and DAQ system to pull information from multiple sources in the cells and unit under test, and synchronize it with one timestamp. To better network, access and monitor the cells, Harris reports that ACS and its client added Cisco’s managed Ethernet switches using regular TCP/IP protocol between the machines and their plant network, which secures its multiple potential failure points by disallowing unauthorized access or communications. ACS also installs the switches in all devices for its projects before shipping them to their sites. These switches provide cybersecurity by operating three layers on each switch, including:

• Layer 1 is a programmable patch panel that establishes a connection between ports, which is just the basis of an unmanaged switch.

• Layer 2 is where data packets are pushed to their destinations, using media access control (MAC) addresses.

• Layer 3 is the network layer with firewall functions, which performs network monitoring between the switch and others in the IT area, and monitors network traffic for anomalous activity or sources.
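The Layer 2 behavior in the list above, forwarding frames by MAC address, can be illustrated with a toy learning switch. This is a generic textbook model of transparent bridging, not Cisco’s implementation; VLANs and table aging are omitted, and the port count and MAC addresses (from the documentation range) are hypothetical.

```python
from typing import Dict, List


class LearningSwitch:
    """Toy Layer 2 model: learn where each source MAC lives, then forward by destination MAC."""

    def __init__(self, num_ports: int) -> None:
        self.ports = list(range(1, num_ports + 1))
        self.mac_table: Dict[str, int] = {}  # MAC address -> port it was last seen on

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> List[int]:
        """Return the list of ports the frame is sent out of."""
        self.mac_table[src_mac] = in_port  # learn or refresh the sender's location
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood everywhere except the port it arrived on.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the same port, so the frame is filtered
        return [out_port]


sw = LearningSwitch(num_ports=4)
PLC, DAQ = "00:00:5e:00:53:01", "00:00:5e:00:53:02"
print(sw.receive(1, PLC, DAQ))  # [2, 3, 4]  (DAQ not learned yet, so flood)
print(sw.receive(2, DAQ, PLC))  # [1]        (PLC was learned on port 1)
print(sw.receive(1, PLC, DAQ))  # [2]        (DAQ now known on port 2)
```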

The switches can be configured for users to set up communications with known devices. This tells users which test cells and other equipment are supposed to be on the network, distinguishes them from laptops and other devices that may be seen as anomalous, and adds MAC addresses for other authorized devices.
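A rough sketch of that known-device check, assuming the switch can export the MAC addresses it sees. The inventory entries and the `authorize` helper are hypothetical; managed switches enforce this with their own port-security or access-control features, and the code only illustrates the sorting logic.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical inventory of devices expected on a test-cell network.
KNOWN_DEVICES: Dict[str, str] = {
    "00:00:5e:00:53:01": "test cell 01 PLC",
    "00:00:5e:00:53:02": "test cell 01 DAQ",
}


def normalize(mac: str) -> str:
    """Put MACs in one lowercase, colon-separated form so lookups match."""
    return mac.strip().lower().replace("-", ":")


def classify(observed: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split MACs seen on the network into known devices and anomalies."""
    known: List[str] = []
    anomalous: List[str] = []
    for mac in map(normalize, observed):
        if mac in KNOWN_DEVICES:
            known.append(mac)
        else:
            anomalous.append(mac)
    return known, anomalous


def authorize(mac: str, description: str) -> None:
    """Add another approved device, e.g. after a reviewed change request."""
    KNOWN_DEVICES[normalize(mac)] = description


seen = ["00-00-5E-00-53-01", "00:00:5e:00:53:99"]
print(classify(seen))  # (['00:00:5e:00:53:01'], ['00:00:5e:00:53:99'])
authorize("00:00:5e:00:53:99", "calibration laptop")
print(classify(seen))  # (['00:00:5e:00:53:01', '00:00:5e:00:53:99'], [])
```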

An upstream oil and gas producer operating wells in the multi-state Denver-Julesburg shale formation employed SCADA systems for its remote well pads that relied on 3G and 4G wireless links that didn’t go through a virtual private network (VPN). Instead, connections in the SCADA system were made via publicly hosted Internet protocol (IP) addresses, which were masked by voluminous port-forwarding rules that were stored in Excel files and made technical servicing complex. These publicly hosted IP addresses also created major vulnerabilities for the oil and gas company and its IT department, which couldn’t turn off access to this network. To add firewall security to the SCADA links, but still maintain relatively easy access to the local area network (LAN) via remote connections, the company worked with automation supplier Black Label Services (BLS) and Phoenix Contact’s Industrial Security and Networking Services team. Together, they implemented Phoenix Contact’s TC mGuard RS4000 for the 4G Verizon network, mGuard Device Management (MDM) software, and mGuard Secure Cloud (mSC) service to boost the oil and gas firm’s cybersecurity with functions such as an intelligent firewall and up to 250 IPsec-encrypted VPN tunnels. The new network eliminated the need for publicly hosted IP addresses and port-forwarding rules, which improved OT security, while connecting back to the SCADA system via a cellular network. Now, the oil and gas company controls who can access its sites remotely, and the IT team can quickly add or remove users as profiles or other needs change.

“Test cells produce lots of critical and proprietary data, so our automotive client wants to be confident none of it’s at risk of being lost or stolen,” says Harris. “This is just protecting information on physical equipment, so we’re not moving any of this data to the cloud or allowing any remote control. However, we still need to monitor this network’s connections and traffic to make sure that only communications with the automotive client’s locations are occurring. So far, no equipment has been compromised because we’re only monitoring switches and network traffic, and unauthorized users and third parties can’t get out of the switches. Our client is already in the process of adding this solution to more locations.”

Because it’s an engineering company that also provides cybersecurity capabilities, Cybertrol Engineering (cybertrol.com) in Minneapolis, Minn., typically starts its projects by asking each customer what they know about their own systems. To sort out what each customer actually has, Cybertrol conducts cyber-RAs along with its discovery process, but it also employs Cisco’s Cyber Vision software, which listens passively to networks where it’s deployed, and builds a functional map of which devices are talking to each other and what they’re talking about.

“Cyber Vision is especially helpful for OT because it doesn’t ruffle or interfere with plant-floor devices that often can’t handle typical network-sniffing software,” says Alexander Canfield, industrial information technology (IIT) manager at Cybertrol, which is also CSIA-certified, and works mainly in the food and beverage, life sciences, chemical, and medical device industries. “It also analyzes firmware, other components and software for vulnerabilities, and gives grades and advice about how to harden them.”
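Cyber Vision’s internals aren’t described here, so the sketch below only illustrates the general idea of a passive communication map: it consumes flow records someone else captured and never sends traffic to the devices. The flow records and device names are hypothetical, and nothing here uses a Cisco API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Flow = Tuple[str, str, str]  # (talker, listener, protocol)

# Hypothetical flow records of the kind a passive sensor might collect.
FLOWS = [
    ("hmi-1", "plc-1", "EtherNet/IP"),
    ("hmi-1", "plc-1", "EtherNet/IP"),
    ("historian", "plc-1", "OPC UA"),
    ("plc-1", "vfd-3", "EtherNet/IP"),
]


def build_map(flows: Iterable[Flow]) -> Dict[Tuple[str, str], Set[str]]:
    """Record who talks to whom, and in which protocols, from observed traffic only."""
    comm_map: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for talker, listener, protocol in flows:
        comm_map[(talker, listener)].add(protocol)
    return dict(comm_map)


for (talker, listener), protocols in sorted(build_map(FLOWS).items()):
    print(f"{talker} -> {listener}: {', '.join(sorted(protocols))}")
```

A map like this is also a natural starting point for the allow rules and baselines discussed next, because it lists the conversations that already occur.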

To further limit questionable network traffic, Cybertrol uses newer zero-trust strategies, which are similar to older whitelisting procedures that only allow communication between predefined devices. However, zero-trust is different and more advanced because it directs network traffic to permitted destinations by learning and baselining what’s allowed to talk to what, prompting users to confirm that the observed communications are acceptable, and then allowing that traffic to move back and forth.
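The learn-then-enforce pattern can be sketched as a policy with a learning phase, a human review step, and a default-deny enforcement phase. Commercial zero-trust products differ widely; the class, its method names, and the example flows are illustrative assumptions (TCP 44818 and 502 are the standard EtherNet/IP and Modbus/TCP ports).

```python
from typing import Iterable, Set, Tuple

FlowKey = Tuple[str, str, int]  # (source, destination, destination port)


class BaselinePolicy:
    """Learn observed flows, let a person approve them, then deny everything else."""

    def __init__(self) -> None:
        self.candidates: Set[FlowKey] = set()  # flows seen while learning
        self.allowed: Set[FlowKey] = set()     # flows approved for enforcement
        self.enforcing = False

    def check(self, src: str, dst: str, port: int) -> bool:
        flow = (src, dst, port)
        if not self.enforcing:
            self.candidates.add(flow)  # learning mode: pass traffic but record it
            return True
        return flow in self.allowed    # enforcing mode: anything unapproved is dropped

    def approve(self, rejected: Iterable[FlowKey] = ()) -> None:
        """Accept the learned baseline minus any flows a reviewer rejects, then enforce it."""
        self.allowed = self.candidates - set(rejected)
        self.enforcing = True


policy = BaselinePolicy()
policy.check("hmi-1", "plc-1", 44818)   # EtherNet/IP from the HMI
policy.check("laptop-7", "plc-1", 502)  # Modbus/TCP from an unexpected laptop
policy.approve(rejected=[("laptop-7", "plc-1", 502)])

print(policy.check("hmi-1", "plc-1", 44818))      # True
print(policy.check("laptop-7", "plc-1", 502))     # False
print(policy.check("plc-1", "203.0.113.7", 443))  # False: never seen during learning
```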

Similarly, Cybertrol employs software proxies, which are look-up tables that direct traffic from internal devices to reach specific services based on lists of actual destinations and directions. Authorized communications that ask for an authorized destination are sent to it. However, if a device doesn’t ask for the right direction, then proxies provide defense by preventing its communications from going anywhere.
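The look-up-table idea can be sketched in a few lines. A real proxy also terminates and relays the connection; this sketch shows only the routing decision, and the table entries use hypothetical hostnames under the reserved `.example` domain.

```python
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (host, port)

# Hypothetical look-up table: (internal device, requested service) -> approved destination.
PROXY_TABLE: Dict[Tuple[str, str], Endpoint] = {
    ("hmi-1", "time-sync"): ("ntp.dmz.example", 123),
    ("historian", "patches"): ("updates.dmz.example", 443),
}


def resolve(device: str, service: str) -> Optional[Endpoint]:
    """Return where an authorized request should go, or None to drop it."""
    return PROXY_TABLE.get((device, service))


for device, service in [("hmi-1", "time-sync"), ("hmi-1", "patches"), ("plc-4", "internet")]:
    target = resolve(device, service)
    print(device, service, "->", target if target else "dropped")
```

Keying on the device and the service together means an HMI that is allowed to sync time still can’t use the same proxy to pull patches, which matches the “right direction” test described above.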

Canfield adds that proxies can be deployed by installing a local software service on a virtual machine (VM) running Linux or another operating system, which handles communication handshakes, and funnels authorized traffic through the demilitarized zone (DMZ) between the firewalls that separate network segments. “The choice of a local service and VM usually depends on which SCADA/HMI platform the user is running,” explains Canfield. “Operating systems like Linux can do this, but others such as Windows can do it just as well. These services can also run in software containers like Docker, but that technology is relatively new, so it’s not as widely used yet. We can also put them in an onsite VM by using the functional design map and running services in the DMZ.”

Beyond directing communications and resolving patch issues, Cybertrol also helps users solve individual cybersecurity problems. For example, one of its food and beverage clients recently experienced a network outage just after adding managed switches to Layer 3 of the existing network at its brownfield facility. These stacked, out-of-the-box switches were deployed as a main distribution frame (MDF) because the customer’s IT staff was working with their OT colleagues, and they wanted to remotely access the OT network.

Unfortunately, the new switches were connected without being configured, or even being assigned Internet protocol (IP) addresses, and this caused the whole network to crash when the users tried to add firewalls to the MDF. This occurred because these networks typically use spanning-tree protocols to prevent loop problems, but this requires that all devices use the same types of address assignments and configurations for the network to function, otherwise they’ll conflict with one another. In addition, the food and beverage manufacturer also had mismatches in its virtual local area network (VLAN), and this created conflicts between the new switches and the IT network.

“When we set up spanning-tree protocols within networks, we define one switch that’s in charge, otherwise the network tries to figure it out by going through its MAC addresses,” explains Canfield. “In this case, we also went to the network and added basic Layer 3 programming. This allows the devices to keep functioning until an overall network analysis could be conducted, where IP addresses, names, spanning-tree topology and VLANs could be mapped out for further remediation.”
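Canfield’s point about the network “going through its MAC addresses” reflects how the spanning-tree protocol elects a root bridge: the switch with the lowest bridge ID wins, comparing priority first and using the MAC address as the tiebreaker. This simplified model covers only that one election step (no BPDUs, path costs or port roles), and the switch names and documentation-range MAC addresses are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Bridge:
    name: str
    mac: str
    priority: int = 32768  # common factory-default bridge priority

    def bridge_id(self) -> Tuple[int, int]:
        """Bridge ID ordering: lower priority wins, and the MAC address breaks ties."""
        return (self.priority, int(self.mac.replace(":", ""), 16))


def elect_root(bridges: Iterable[Bridge]) -> Bridge:
    """The root bridge is the one with the numerically lowest bridge ID."""
    return min(bridges, key=Bridge.bridge_id)


mdf_core = Bridge("mdf-core", "00:00:5e:00:53:20")
cell_switch = Bridge("cell-3-switch", "00:00:5e:00:53:05")
it_access = Bridge("it-access", "00:00:5e:00:53:11")

# All at default priority: the lowest MAC wins, which may be an arbitrary edge switch.
print(elect_root([mdf_core, cell_switch, it_access]).name)  # cell-3-switch

# Defining the switch "in charge" by lowering its priority makes the outcome deliberate.
mdf_core = Bridge("mdf-core", "00:00:5e:00:53:20", priority=4096)
print(elect_root([mdf_core, cell_switch, it_access]).name)  # mdf-core
```

This is why unconfigured out-of-the-box switches can be risky: left at default priorities, the root of the tree is decided by whichever MAC address happens to be lowest.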

GrayMatter’s Christensen reports that software can create deny-all philosophies, such as zero-trust, which only allow network access and communications that are prescribed ahead of time. This is similar to the older strategy of whitelisting, which only allows communications at certain sizes, speeds and times. Christensen explains that whitelisting is a subset of zero-trust and deny-all strategies because it prevents all communications unless they come from known, well-behaved sources; users define that good behavior and baseline it, so they know what to deny.
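A deny-all allowlist with size and time-of-day limits can be sketched as follows. The rules, device names and limits are hypothetical, and rate (“speed”) limits are left out to keep the example short.

```python
from dataclasses import dataclass
from datetime import time
from typing import List


@dataclass(frozen=True)
class Rule:
    """One explicitly allowed conversation, limited by message size and time of day."""
    src: str
    dst: str
    max_bytes: int
    start: time
    end: time


# Hypothetical allowlist; anything not matched below is denied.
RULES: List[Rule] = [
    Rule("historian", "plc-1", max_bytes=1500, start=time(0, 0), end=time(23, 59, 59)),
    Rule("eng-ws-1", "plc-1", max_bytes=1500, start=time(6, 0), end=time(18, 0)),
]


def permitted(src: str, dst: str, size: int, at: time) -> bool:
    """Deny-all default: allow only if some rule matches every attribute."""
    return any(
        r.src == src and r.dst == dst and size <= r.max_bytes and r.start <= at <= r.end
        for r in RULES
    )


print(permitted("eng-ws-1", "plc-1", 512, time(9, 30)))   # True: inside the work window
print(permitted("eng-ws-1", "plc-1", 512, time(22, 0)))   # False: outside allowed hours
print(permitted("eng-ws-1", "plc-1", 9000, time(9, 30)))  # False: larger than allowed
print(permitted("laptop-7", "plc-1", 64, time(9, 30)))    # False: no rule at all
```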

Christensen reports that the best of today’s cybersecurity programs are extensions of process safety efforts and standards established 20 years ago, which have been carried through in guidelines for basic cybersecurity hygiene, such as NIST CSF 2.0 and standards like ISA/IEC 62443. Over the years, GrayMatter even developed its own OT Cybersecurity Maturity Model based on the collective experiences of its many clients. The model details the present security environments and capabilities that GrayMatter found among its clients, and defines their relative risks and the solutions they need to improve their cybersecurity protections and postures (Figure 1).

“The model’s range is the increasing risk vectors, which require users to address and solve the cybersecurity tasks at Level 1 before they can work on the subsequent levels,” says Christensen. “With clients, we talk about where they want to be. Is segmenting their networks, backing up their data, and having a disaster recovery plan on Levels 1 and 2 enough? They need to complete these tasks before they can do anomaly and breach detection on Level 3, which is where we say the average client should be. This is because detection requires comparing network traffic to baseline measurements that are made possible by completing Levels 1 and 2. Users must know what real assets they have and how they’re performing and communicating before they can identify anomalies or develop fake assets to serve as honeypot traps for intruders.”
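Christensen's point that detection depends on a baseline can be sketched in a few lines. This example assumes traffic has already been reduced to (source, destination, port) records, that the learning and monitoring windows cover the same length of time, and that a conversation three times busier than usual is worth flagging. All of these are illustrative assumptions, not GrayMatter's method, and the host names are hypothetical.

```python
from collections import Counter

Flow = tuple[str, str, int]  # (source, destination, port)


def build_baseline(observations: list[Flow]) -> Counter:
    """Count each conversation seen during a known-good learning window."""
    return Counter(observations)


def find_anomalies(baseline: Counter, window: list[Flow],
                   factor: float = 3.0) -> list[tuple[Flow, str]]:
    """Flag conversations that are new, or far busier than their baseline.

    Assumes the monitoring window is the same length as the learning window.
    `factor` is an illustrative threshold, not a recommended value.
    """
    anomalies = []
    for flow, count in Counter(window).items():
        expected = baseline.get(flow, 0)
        if expected == 0:
            anomalies.append((flow, "new conversation"))
        elif count > factor * expected:
            anomalies.append((flow, "volume spike"))
    return anomalies
```

If the baseline saw an HMI poll a PLC 10 times and a historian poll it 5 times, a monitoring window with 12 HMI polls, 20 historian polls and one connection from an unknown laptop would flag the historian as a volume spike and the laptop as a new conversation, while the HMI traffic passes. Without the Level 1 and 2 groundwork, there is no trustworthy baseline to compare against.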


Figure 1: System integrator GrayMatter’s OT Cybersecurity Maturity Model details the present security environments and capabilities it found among its clients, and defines their relative risks and the solutions they need to improve their cybersecurity protections and postures. (Source: GrayMatter)

In fact, three years ago GrayMatter launched GrayMatter-Guard, deception software that creates decoys by using contextualized rules and filtering to talk to other networks. It learns which communications are allowed and presents deceptive assets, such as PLCs, HMIs and VFDs, that mimic the devices they sit in front of. These decoys keep intruders from going any further into the network than the fake devices they’re communicating with.

“These honeypots divert and react to cyber-probes and likely intrusions, but they also show how intruders are going after our networks,” explains Christensen. “This turns intruders into our penetration testers. The beauty part is we can remediate cyber-intrusion at the same time that they’re attacking fake attack surfaces.”

To achieve cybersecurity across a fleet of facilities, system integrators apply many of the same principles they use at individual sites. For example, a global canned beverage producer with eight plants in the U.S. and Canada recently acquired several new facilities, all different and with no standard networking. “Some of the new sites have some network segmentation separating IT and OT, and some don’t. Some have old networking hardware, 30-40 physical servers, and 10-year-old data storage devices,” says Paige Minier, senior digital transformation manager at Gray Solutions. “They wanted to upgrade all these systems at once, so we helped them standardize on new switches and servers.”

Minier reports the system integrator performed an in-depth discovery at each of the eight facilities, examined their network architectures and topologies, and built a frame and rack elevation diagram. It shows spaces for cables, what devices are on which rack, and what open space is available. This let Gray Solutions and the beverage company revamp and rebuild the plants’ networks, and document the makes and models of PLCs, HMIs and other devices they deployed.

“Four of the plants were fairly standardized on Hirschmann managed switches, which provided segmentation, but much of the hardware was also obsolete and didn’t define standard clients or active models,” explains Minier. “The other four plants had different models of Cisco’s managed switches, but many of them were obsolete, too, while lots of components at the control-panel level were unmanaged. Our discovery reports showed the plants’ present states and cybersecurity hygiene scoring, and detailed all of their end-of-life networks, servers and firewalls. Some sites had no segmentation, and others had never had any software patching, so there were tons of vulnerabilities.”

Even though these findings were grim, Minier adds they gave the beverage company a roadmap to a more secure state, which included a standardized and segmented network in accordance with the Converged Plantwide Ethernet (CPwE) architecture and ISA/IEC 62443. It eventually implemented Palo Alto 3200 firewalls, Cisco Catalyst 9500 switches, and Cisco 9330 access switches with intermediate distribution frames (IDFs).

“This was a very collaborative project, and it had to be, as our client learned to manage their cybersecurity,” adds Minier. “They also didn’t want static, one-time cybersecurity scans, but instead wanted to apply patches when necessary, and detect ongoing threats to the OT side. This was their next project, and they went with Nozomi’s network monitoring software after other capital projects were done.”

FieldComm Group’s Plant of the Year honorees illustrate the progress made in productivity, efficiency and sustainability

BY LEN VERMILLION

Much has changed since DuPont’s DeLisle chemical processing plant in Pass Christian, Miss., was named the first Plant of the Year honoree by FieldComm Group in 2002. Market dynamics for chemical processors, refineries, utilities and other processing industries have changed with the times, ushering in an era of innovative technology for field communications. This has led to greater productivity, better cost efficiency and progress toward sustainable operations.

Plant of the Year winners have spanned six continents, representing the chemical processing, upstream and downstream oil and gas, steel, utilities and wastewater sectors. Each winner is an upper-quartile performer, meaning a company in the top 25% of its industry (at or above the 75th percentile). They’ve realized more than $125 million in total operating expense savings and an estimated $800 of operations expense savings per device.

A common denominator is the use of HART, HART-IP, WirelessHART or Foundation Fieldbus technologies in their plants. What’s unique about each plant is the way it integrated field communications with emerging technologies and procedures, such as completing entire digital transformations of plants, increasing reliability of communications and remote operations, adding predictive maintenance capabilities, creating sustainable operations and, lately, using artificial intelligence in conjunction with fieldbus communications.

The feted plants showed innovative uses of real-time device diagnostics and process information integrated with control, information, asset management, safety systems and many other automation systems to lower operating costs, reduce unplanned downtime and improve operations. Meanwhile, winners consistently have above-average scores for intelligent device adoption best practices.

In this article, we look back at a few of the success stories and how they mirrored many of the notable advancements in the process control sector overall.

The only two-time honoree, MOL Plc’s Danube Refinery in Százhalombatta, Hungary, was first recognized for its early foray into the then-emerging trend of digital transformation. In the early 2010s, as wireless communication began to overtake tethered infrastructure in many sectors, industrial operations lagged. However, Gábor Bereznai, then head of maintenance engineering at MOL Danube, realized the benefits of integrating process instrument diagnostics and device utilization with a computerized maintenance management system (CMMS) and an asset management system (AMS) with SAP process control. This connected islands of systems that used to be separate and created triggers for launching transmitters, control valves and positioners. “This was done by having the diagnostic system inform the CMMS about the valves. This data could then be used in morning meetings with our maintenance team and other staff to help us do risk assessments and identify other problems,” he said when the company won its second award in 2015.

Their digital transformation, which started in 2010, saw further developments five years later. After winning the 2010 Plant of the Year award, Bereznai and his colleagues launched expansions and multiple diagnostic and maintenance projects to bring similar benefits to other facilities at MOL Danube. At the time, they’d expanded the use of FieldComm Group technologies to more than 4,700 connected devices on 15 operating units, including 42 WirelessHART devices and six gateways connected to SAP-PM CMMS.

Adding smart devices and enhancing valve diagnostics and predictive maintenance saved $350,000 per year in potential shutdown costs through smart device monitoring. MOL Danube also set up a cross-functional risk-assessment team that evaluated 20,000 device notifications per year.

The work that MOL Danube did inspired FieldComm Group to develop JSON-based descriptors for key HART commands. Known as DeviceInfo Files, these will soon be available for download from FieldComm Group’s website.

Mangalore Refinery and Petrochemicals Ltd. (MRPL) was at the forefront of India’s burgeoning oil, gas and petrochemical sector in 2018. Its “do things better” culture inspired it to be an innovator in hydrocarbon processing, adopting digital communications for process control and striving for more effective utilization of its resources and facilities.

Its use of FieldComm Group technologies was no different: it captured installation savings and advanced diagnostics from more than 9,000 Foundation Fieldbus and 5,000 HART devices. At the time it was named Plant of the Year, MRPL reported it had saved more than $6 million in project costs using those devices.

Beyond determining the technical advantages of Foundation Fieldbus and other FieldComm Group technologies, Basavarajappa Sudarshan, chief general manager for electrical and instrumentation at MRPL and project team leader, reported that he and his team had to convince colleagues, including operators and managers at MRPL, that migrating to digital communications would be worthwhile and wouldn’t hinder operations. They were successful.

MRPL started its journey into FieldComm Group technologies when it installed all-digital communications on its isomerization unit, including implementing all process control loops with Foundation Fieldbus control in the field (CIF) functions, as well as HART transmitters used in its safety instrumented systems (SIS). On the strength of that success, MRPL also commissioned more than 10 process units, a cogeneration plant and utilities at its refinery with Foundation Fieldbus, HART and WirelessHART.

Designing, building, integrating, commissioning and starting up a new process plant is difficult enough, but dealing with a hurricane and flooding at the same time is just plain unreasonable. Nevertheless, that’s what Chevron Phillips Chemical Company LP achieved when it undertook its U.S. Gulf Coast (USGC) petrochemicals project and built a new unit at the plant for ethylene production. Located at its Cedar Bayou facility in Baytown, Texas, the plant has a design capacity of 1.725 million metric tons/year (3.8 billion pounds/year).

The USGC ethylene project started in 2012. Mechanical completion was done at the end of 2017, and commissioning was finished and startup began in March 2018. Near the end of construction, the Cedar Bayou facility also weathered Hurricane Harvey in August 2017, and used its smart HART and Foundation Fieldbus devices to help hasten the plant’s recovery.

The plant’s automation architecture consists of a distributed control system (DCS) with field control station (FCS) controllers and safety instrumented systems (SIS). “When Foundation Fieldbus and HART technology were chosen for this project, the DCS was selected because it offered an integrated asset management software platform to use with the digital information from the field instrumentation,” said Amit Ajmeri, DCS specialist for the USGC project at Chevron Phillips Chemical Company, when the project won the Plant of the Year designation in 2019.

Near the end of the construction phase, Hurricane Harvey and its record-breaking rain halted the entire project, and the plant experienced some flooding. Fortunately, most of the plant’s instruments, I/O, controls and field junction boxes were located above the floodwater and weren’t damaged. The project team had verified the data for its healthy-device list before Harvey arrived, and confirmed afterward that most devices were still in the same healthy condition, so it didn’t have to perform diagnostic checks on them.

To understand the difference data-based decision-making can make in process performance, look no further than Dow’s Texas Operations (TXO) on the Gulf Coast. Originally built in the 1940s in Freeport, Texas, as a plant to extract magnesium from seawater, the chemical company’s TXO operations grew, and they now span sites in Deer Park, Freeport, Houston, La Porte, Seadrift and Texas City.

Throughout the 2010s, integrated data flows from some 50,000 smart instruments became central to its reliability objectives, helping to save tens of millions of dollars by boosting overall equipment effectiveness (OEE) and trimming instrumentation-related production losses by 80%, according to the company.

thousands of dollars in savings. Dow also used IAMS to perform routine loop-checking and SIS validation on more than 5,000 loops and leveraged FieldComm Group’s FDI architecture to streamline device integration across the many varieties of smart instrumentation and device profile versions.

HMEL’s Guru Gobind Singh refinery in Bathinda, Punjab, is an industrial anchor for the economic development of northern India. It sits at the northern terminus of a 1,017-km pipeline from Mundra, Gujarat, on India’s west coast, where tankers deliver the refinery’s raw materials from abroad. Formed in 2007 as a public-private partnership joint venture between Hindustan Petroleum Corp. Ltd. (HPCL, a government of India enterprise) and Mittal Energy Investments Pte. Ltd. (MEIL) of Singapore, HPCL-Mittal Energy Ltd. (HMEL) broke ground on the new refinery in 2008 and commenced operations in 2012.