Expect smart thinking and insights from leaders and academics in data science and AI as they explore how their research can scale into broader industry applications.

Key Principles for Scaling AI in Enterprise: Leadership Lessons with Walid Mehanna

Enterprise Data Architecture in The Age of AI – How To Balance Flexibility, Control and Business Value by Nikhil Srinidhi

Maximising the Impact of your Data and AI Consulting Projects by Christoph Sporleder

Helping you to expand your knowledge and enhance your career.

CONTRIBUTORS

Philipp Diesinger

Robert Lindner

Gabriell Máté

Stefan Stefanov

Peter Bärnreuther

Sascha Netuschil

Tony Scott

Tarush Aggarwal

James Tumbridge

Robert Peake

Łukasz Gątarek

Nicole Janeway Bills

James Duez

Taaryn Hyder

Anthony Alcaraz

Anthony Newman

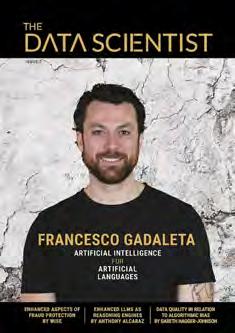

Francesco Gadaleta

EDITOR

Damien Deighan

DESIGN

Imtiaz Deighan imtiaz@datasciencetalent.co.uk

DISCLAIMER

The views and content expressed in Data & AI Magazine reflect the opinions of the author(s) and do not necessarily reflect the views of the magazine, Data Science Talent Ltd, or its staff. All published material is done so in good faith. All rights reserved, product, logo, brands and any other trademarks featured within Data & AI Magazine are the property of their respective trademark holders. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form by means of mechanical, electronic, photocopying, recording or otherwise without prior written permission. Data Science Talent Ltd cannot guarantee and accepts no liability for any loss or damage of any kind caused by this magazine for the accuracy of claims made by the advertisers.

Welcome to issue 11 of Data & AI Magazine , where we shift our focus to explore how traditional sectors are quietly building sustainable AI capabilities. After 10 issues of coverage about AI’s transformative potential, this issue examines the unglamorous but essential work of making AI deliver value in the real world.

Our cover story features a first for our magazine. It’s the story of a 3-way collaboration between GoTrial, Rewire, and Munich Re that exemplifies the kind of practical innovation our industry needs. Their data-driven approach to clinical trial risk mitigation addresses a fundamental challenge: 70-80% of trials fail to meet enrolment targets on time, with delays costing approximately $40,000 per day in operational expenses. By consolidating over one million clinical trials into a unified platform enriched with regulatory, epidemiological, and site-level information, they’re not just applying AI to healthcare — they’re engineering certainty where uncertainty has long been accepted as inevitable.

What makes this partnership particularly compelling is how it bridges the gap between data science and financial risk management. Munich Re’s ability to convert quantified risk into financial instruments demonstrates how AI’s true value emerges when technical capabilities meet real-world commercial needs.

This issue deliberately focuses on small and medium-sized enterprises in traditional sectors, moving beyond our typical coverage of large corporations and tech startups. Tarush Aggarwal, founder of the 5X allin-one data platform, shares insights on ‘How to Build Less, Deliver More’ which is a refreshingly practical approach to AI ROI that prioritises impact over technological sophistication.

Complementing this perspective, Tony Scott offers ‘Successful AI Strategies For Businesses in Traditional Sectors’, an anti-hype guide from a fractional CDO who understands that smaller enterprises can’t afford to chase every AI trend. These articles remind us that the most sustainable AI implementations often come from organisations that approach the technology with clear constraints and defined objectives.

We also feature Sascha Netuschil of Bonprix’s exploration of how GenAI is driving value in fashion retail, specifically through improving internal business processes and translation services. His work on building applications that make websites disability-friendly illustrates how AI’s most meaningful contributions often emerge in specialised, sector-specific applications rather than broad, general-purpose deployments.

THE CURRENT AI BUBBLE – SHADES OF THE DOTCOM ERA

I started recruiting in the late 90s during the dot-com bubble, and I see troubling parallels between then and now. In 1999, adding ‘.com’ to a company name enabled businesses with no clear product-market fit to raise significant capital; we’re seeing a similar phenomenon with AI. Every VC portfolio desperately needs an AI company, echoing the frantic investment in search engines and other internet companies two decades ago.

The sustainability questions are impossible to ignore. While companies like Anthropic and OpenAI have seen significant revenue growth in 2024, they continue to lose enormous sums of money with no clear path to even medium-term profitability. The major AI labs appear to have hit technical plateaus, and Sam Altman’s AGI promises seem increasingly unlikely to materialise on the timelines being suggested.

This reality check doesn’t diminish AI’s long-term potential, but it does demand that we approach it with the same rigour we apply to any other business technology. The organisations featured in this issue succeed precisely because they focus on solving specific problems rather than chasing technological possibilities.

What emerges from this issue is a clear pattern: the most successful AI implementations prioritise practical value over technical sophistication. The most impactful applications are those that address real operational challenges with measurable outcomes.

As we move forward, the industry’s maturation will be measured not by the sophistication of our models or the size of our funding rounds, but by our ability to consistently deliver ROI to organisations across all sectors. The leaders featured in this issue are already doing this work quietly, pragmatically, and profitably.

Thank you for joining us as we explore these more grounded approaches to AI implementation. The future of our field depends not on the next breakthrough algorithm, but on the sustained effort to make AI genuinely useful for the vast majority of organisations that operate outside Silicon Valley’s spotlight.

Damien Deighan Editor

By PHILIPP DIESINGER, ROBERT LINDNER, GABRIELL MÁTÉ, STEFAN STEFANOV, PETER BÄRNREUTHER

PHILIPP DIESINGER

is a data science executive with over 15 years of global experience driving AI-powered transformation across the life sciences industry. He has led high-impact initiatives at Boehringer Ingelheim, BCG, and Rewire, delivering measurable value through advanced analytics, GenAI, and data strategy at scale.

ROBERT LINDNER

is an expert in AI and data-driven decisionmaking. He is the owner of Rewire’s TSA offering and has led multiple AI/ ML product initiatives built on real-world data in the life sciences.

STEFAN STEFANOV is the chief engineer of the GoTrial clinical data platform with over 7 years of experience in leading and developing data and AI solutions for the life science sector. He is passionate about transforming intricate data into user-friendly, insightful visualisations.

GABRIELL FRITSCHE-MÁTÉ

is a data and technology expert leading technical efforts as CTO at GoTrial. With extensive experience in addressing challenges throughout the pharmaceutical value chain, he is committed to innovation in healthcare and life sciences.

PETER BÄRNREUTHER

is an expert in AIrelated risks, bringing over 10 years of experience from Munich Re. He is a physicist and economist by training and has focused on regulatory topics and emerging technologies such as crypto and AI.

Clinical trials evaluate the safety and efficacy of new medical interventions under controlled conditions. Late-phase trials are essential for regulatory approval and typically involve large patient populations across multiple geographically distributed sites. Given their complexity and cost, even modest delays can have significant financial implications: daily operational expenses can exceed $40,000, and potential revenue losses may reach $500,000 per day of delay [1]. Patient enrolment stands as the most significant bottleneck in clinical trial success, with 70-80% of trials failing to meet enrolment targets on time, necessitating protocol amendments, timeline extensions or additional sites that dramatically increase costs and delay innovative treatments from reaching patients [2,3]

Recognising the persistent challenges in clinical trial execution, three partners – GoTrial, Rewire, and Munich Re – have come together to pioneer a new, datadriven approach to de-risking clinical development:

● At its core is GoTrial’s unique platform, which consolidates and harmonises over one million clinical trials. This data is not only unified and cleaned, but also enriched with regulatory, epidemiological, and site-level information – and made accessible through a suite of analytical tools, including benchmarking KPIs, predictive models, and GenAI capabilities that extract structure and meaning from unstructured protocol documents.

● Building on this foundation, Rewire contributes advanced analytics and deep operational expertise to surface predictive insights on trial feasibility, site performance, and protocol complexity. Where required, this is complemented by targeted expert input to interpret patterns and validate results.

● Munich Re, in turn, adds a critical third layer: the ability to translate quantified operational risk into financial risk structures, enabling new forms of risk-sharing and exposure mitigation for sponsors.

Together, this approach represents a departure from conventional planning – shifting from experience-based assessments to a system of objective, evidence-led foresight that allows sponsors to design and manage trials with greater precision, accountability, and confidence than was previously possible. Because the approach draws on a complete set of global clinical study data, it removes the lingering uncertainty of ‘what might we have missed?’

This new approach to clinical development has begun to complement established trial operations by embedding deep, data-driven intelligence earlier in the process. Rather than focusing solely on the execution phase, this method brings structured analysis and predictive modelling into the design and planning stages, enabling sponsors to challenge assumptions, simulate scenarios, and anticipate risks before trials begin. Unlike traditional feasibility practices that often rely on local knowledge or prior experience, this approach leverages global insights at scale and transforms risk mitigation from a reactive task into a proactive, quantifiable discipline. In doing so, it opens up new opportunities for smarter planning – and even for risk-sharing models that were previously out of reach.

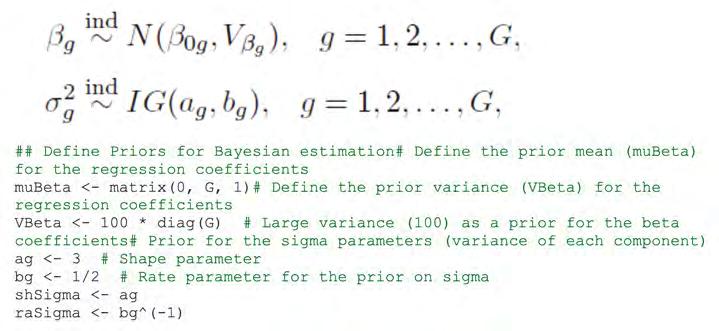

Site-level recruitment potential forecast for two clinical studies. Each axis represents an individual clinic, with the outer (red) boundary indicating theoretical site capacity and the inner (blue) area showing predicted actual enrolment performance. While Study A demonstrates alignment across most sites, Study B shows a significant performance gap across the majority of clinics – signalling elevated recruitment risk and the need for strategic site or protocol adjustments.

Site-level forecasting enables bottom-up recruitment prediction

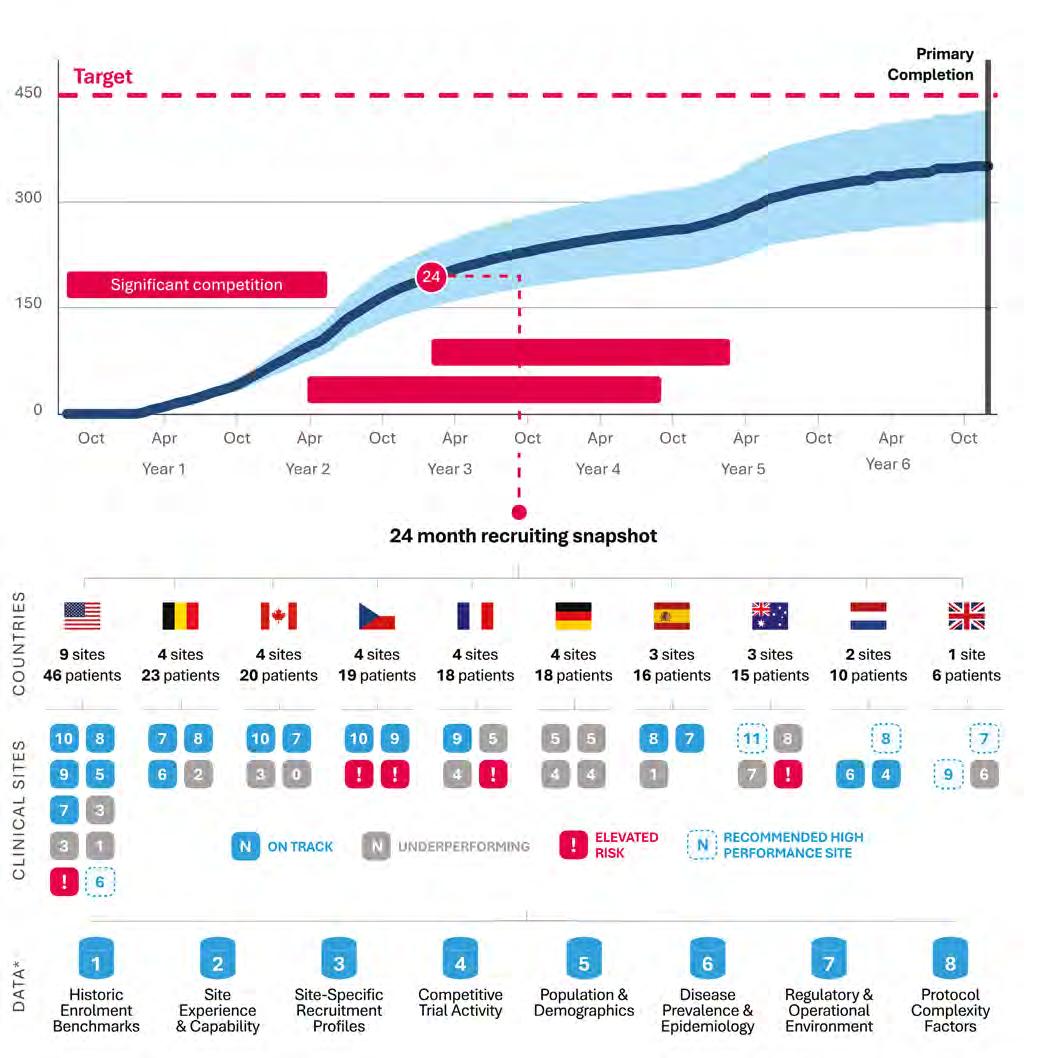

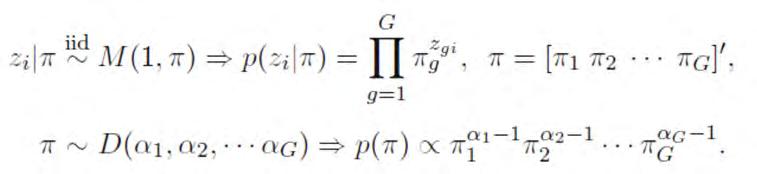

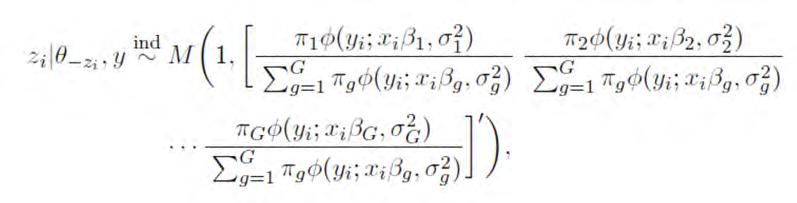

This chart demonstrates how a data-driven approach generates a site- and indication-specific forecast of patient enrolment. By integrating multiple data layers, the model estimates recruitment potential for each site and aggregates these predictions into the cumulative enrolment trajectory (dark blue curve) with its confidence range (light blue band).

The site colour coding provides immediate insight: Red indicates sites with elevated risk, Grey marks underperforming sites, Blue highlights sites performing well, and dashed blue outlines identify available high-performing sites recommended for inclusion.

In this example, rising competition in Year 2 coincides with declining recruitment performance, allowing early intervention before significant delays occur.

* The bottom row highlights the eight data dimensions feeding the model:

1. Historic Enrolment Benchmark: enrolment performance distributions from analogous historical trials.

2. Site Experience & Capability: therapeutic expertise, infrastructure readiness, and compliance history.

3. Site-Specific Recrui tment Profiles: site track records including past recruitment rates and activation timelines.

4. Competitive Trial Activity: ongoing and planned studies competing for patients and site resources.

5. Population & Demographics: density, age, and local factors influencing patient availability.

6. Disease Prevalence & Epidemiology: regional and indication-specific prevalence estimates.

7. Regulatory & Operational Environment: country-level approval timelines and historical startup delays

8. Protocol Complexity Factors: elements such as patient burden, procedure intensity, and inclusion/ exclusion complexity.

These eight data sources enable objective, granular, and dynamic predictions, transforming risk into actionable foresight.

1 | Data-driven enrolment forecasting and site selection Trial sponsors face a paradox: in an effort to reduce risk, they often fall back on familiar practices that unintentionally increase it. Frequently, sites are selected based on self-reported feasibility questionnaires [4] , prior working relationships or contracting convenience – leading to concentration at a small number of well-known institutions. For instance, leading academic centres may be running over 50 trials simultaneously, with predictable strain on patient access and investigator attention [2] . In other cases, top-tier hospitals are chosen despite lacking specific experience in the therapeutic area, as has been observed in complex oncology studies such as lung cancer trials [5]

To further manage perceived risk, protocols increasingly include a larger number of endpoints [6] and sites are selected based on historical performance – often limited to the sponsor’s or CRO’s own portfolio. While these instincts are understandable, they can overlook broader indicators of feasibility and miss higher-potential sites outside the known network. The data-driven approach rethinks this process from the ground up. Using global clinical trial data, population density, disease prevalence, historical site performance, and real-time competition signals, it builds a bottom-up forecast of enrolment potential –modelled at the site and indication level. Rather than asking sites what they think they can recruit, this method estimates what they are likely to recruit, based

on data from thousands of analogous studies and the regional context.

Two advances have made this possible at scale. First, large language models specialised in biomedical contexts now enable structured interpretation of eligibility criteria, protocol complexity, and therapeutic nuance – even when embedded in unstructured texts [7, 8] . Second, the GoTrial platform have aggregated and enriched clinical trial data globally, providing the statistical backbone needed to train predictive models across diverse study designs and settings.

The result is a site-level recruitment forecast that not only estimates expected enrolment at each site, but also flags geographic or operational risks – such as overlapping trials in the same indication or regulatory zone. These insights can be used not only to validate the current site plan, but also to recommend highpotential sites not yet part of the study. Sponsors can optimise their site portfolio for both efficiency and geographic reach – with a clear, data-backed view of how each region contributes to overall enrolment targets.

In one recent case, this methodology was applied to a late-phase paediatric asthma study. While the initial site plan appeared comprehensive, the eligibility criteria were significantly more restrictive than in analogous trials. Adjustments to both the site mix and protocol, guided by historical benchmarks, resulted in a more realistic and actionable enrolment strategy –increasing confidence without delaying study initiation.

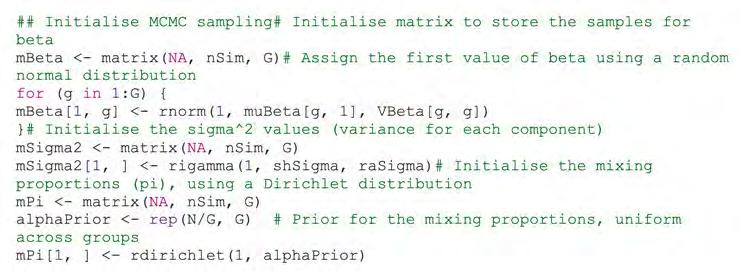

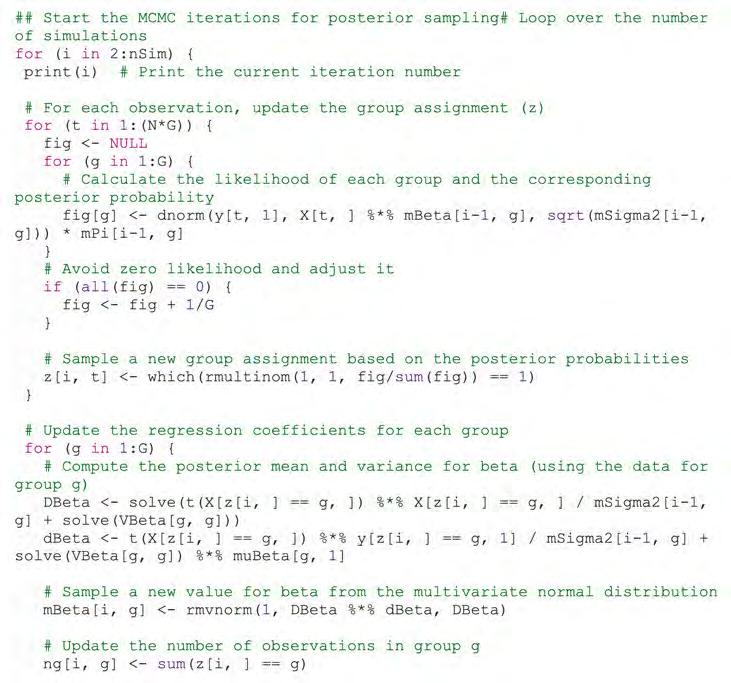

An analysis of 9,336 global breast cancer trials shows a strong inverse correlation between site-level competition and actual enrolment. When no competing trials were present at a site, enrolment reached 70% of the planned target. However, as the number of concurrent studies increased, performance dropped sharply, falling to just 40% with more than seven overlapping trials. These findings highlight the importance of systematic competition analysis using global trial registry data to support reliable enrolment forecasting and informed site selection.

| Quantifying and navigating recruitment competition

Even when eligibility and site selection are welloptimised, many clinical trials encounter recruitment delays due to an invisible force: competition from other studies, creating priority conflicts, depleting local patient pools, and stretching investigator capacity [5]

This bottleneck is especially pronounced in speciality care, where a small number of expert centres are tasked with enrolling across many overlapping protocols. Sponsors typically assess feasibility based on a snapshot of current activity, but overlook dynamic shifts in the trial landscape – including newly registered studies in the same indication, region, or at the same sites. Compounding this, many sponsors and CROs rely heavily on established site networks, which can place investigators under significant strain. For example, leading U.S. cancer centres are involved in a median of 56 concurrent trials – creating priority conflicts, depleting local patient pools, and stretching investigator capacity [5]. The new data-driven approach addresses this by systematically and repeatedly scanning for competing studies – not only those already enrolling, but also those expected to launch in the near or mid-term. Using structured registry data enriched with GenAI-

based interpretation of unstructured protocol texts, this method identifies overlapping inclusion criteria, geographic proximity, and shared therapeutic areas. It can quantify, for each site or region, the intensity of trial activity that could affect patient availability and investigator focus.

In a recent phase 3 trial feasibility analysis, over 80% of planned sites were found to be involved in multiple other studies with similar patient populations and overlapping timelines. This raised the risk of slower recruitment and site fatigue. Based on this insight, both site mix and enrolment projections required adjustment – avoiding high-competition clusters and increasing geographic diversity.

When analysed at scale, the impact of recruitment competition becomes measurable: in a study of over 9,000 global breast cancer trials since 2000, sites involved in more than seven concurrent studies experienced twice the enrolment shortfall compared to those with little or no competition. By treating recruitment competition as a measurable input – not a post hoc explanation – sponsors can preempt resource bottlenecks, stagger site activation, and gain a more accurate picture of the real operational landscape before the first patient is ever screened.

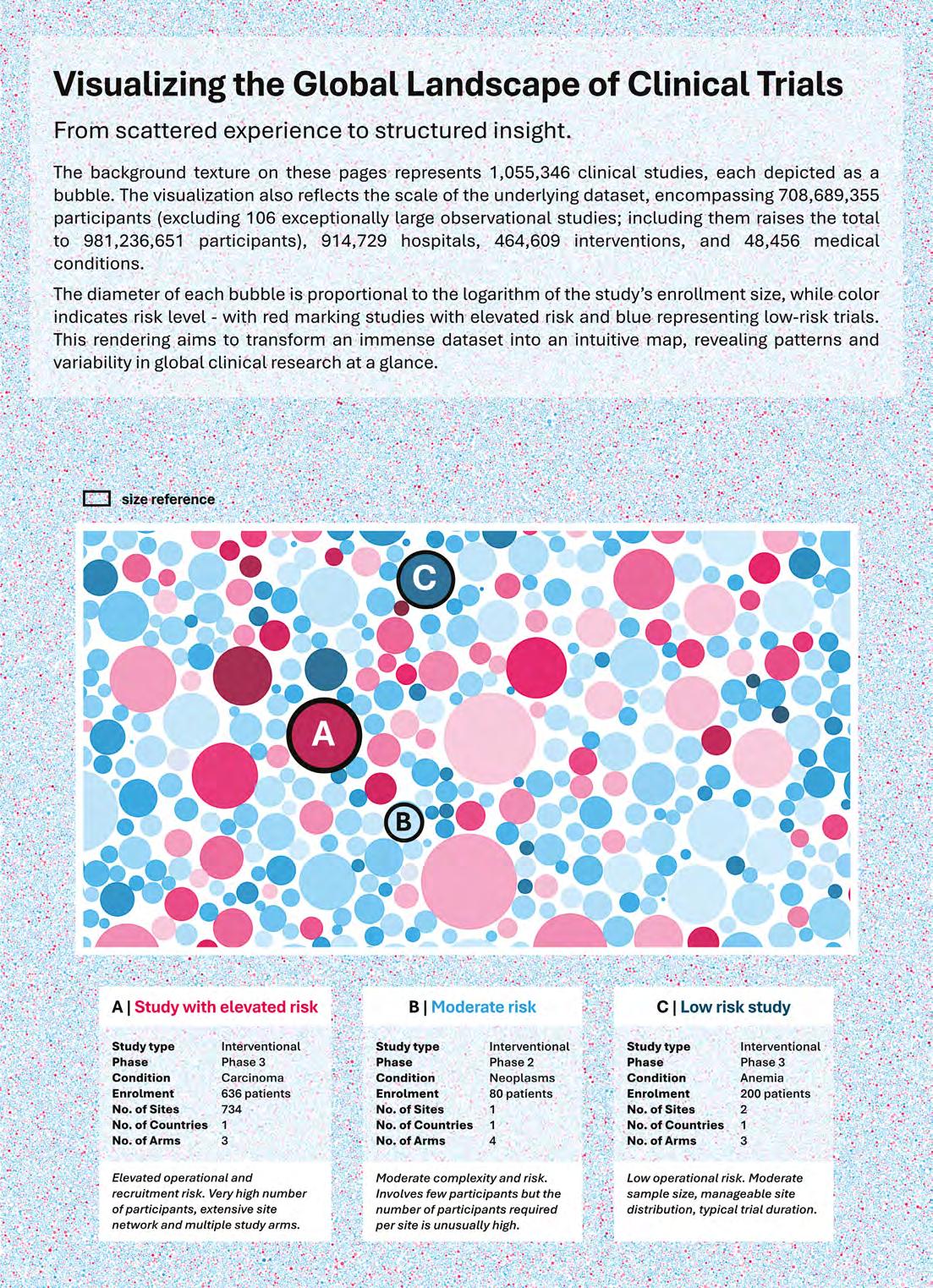

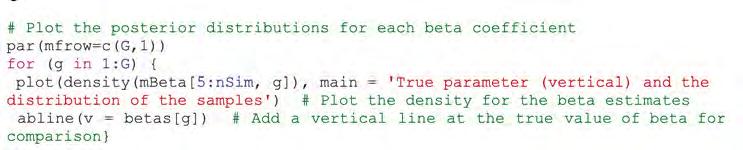

Elevated risk (29317 studies, 25.4%)

Low risk (86038 studies, 74.6%)

3 | De-risking clinical trials with data at scale

While operational excellence remains critical to clinical trial success, many in the industry are now asking a more foundational question: how can we measure trial risk in a consistent, evidence-based way – before the study even begins? Until recently, such assessments relied heavily on expert judgment and anecdotal experience. But with the availability of large-scale clinical study datasets, modern analytics and GenAI, that is starting to change.

Drawing on a uniquely large and structured clinical trial dataset – spanning over 900,000 sites across more than 200 countries, and involving upwards of 900 million participants – it is possible to systematically model risk across critical trial design parameters. These models rely on empirical data from operationally and medically comparable studies, matched by indication, phase, geography, and protocol characteristics. Rather than relying on anecdote or institutional memory, this approach builds grounded expectations for key

20,000 trials w. recruitment challenges

Recruitment Challanges:

>20,000 trials

Operational Complexity:

>8,000 trials

8000 trials w. very high operational complexity

outcomes such as enrolment performance, study duration, and operational complexity at both the site and portfolio level.

What sets this new generation of risk analytics apart is its ability to go beyond traditional structured fields. Using advanced techniques, including natural language processing, even unstructured protocol text can be mined to extract risk-relevant features: from the procedural burden on patients to the complexity of eligibility criteria. These dimensions, long considered subjective or hard to compare, can now be evaluated and benchmarked against thousands of historical studies.

Furthermore, data-driven risk quantification not only highlights elevated risk factors but often points directly to actionable mitigation strategies.

A wide range of clinical trial risk factors – more than 30 in total – can now be quantified using datadriven benchmarks. Among them, several high-impact dimensions are especially relevant during trial planning and design.

This metric evaluates how aggressively a study is targeting patient enrolment by benchmarking planned enrolment figures against historical norms for similar trials –matched by indication, phase, and design. When projected enrolment significantly exceeds the typical range, it signals a higher likelihood of recruitment delays or underperformance. The risk is quantified by analysing distributions of enrolment outcomes in comparable studies and flagging plans that deviate substantially from established patterns.

This factor assesses whether the planned trial duration is realistic by comparing it to timelines observed in similar historical studies. Trials with unusually compressed schedules – particularly in complex therapeutic areas – face a greater risk of delays, protocol amendments, or extensions. To quantify this risk, planned durations are positioned within the distribution of actual timelines from comparable trials. Timelines falling below typical benchmarks are flagged as high-risk.

This metric evaluates whether the number of planned sites is aligned with the trial’s scope and complexity. Excessively large site networks can introduce coordination challenges, increase onboarding and training burden, and compromise data consistency. Risk is quantified by benchmarking the proposed site count against historical distributions from comparable studies. Site numbers that exceed typical ranges are flagged as indicators of elevated operational complexity.

This factor assesses the number of countries involved in a trial relative to historical benchmarks. While a broader geographic reach can expand recruitment, it also increases exposure to regulatory variability, uneven site activation, and operational fragmentation. As the number of countries exceeds typical thresholds, the likelihood of coordination and compliance challenges rises accordingly.

This metric assesses the number of intervention arms in a trial relative to historical benchmarks from similar studies. Multi-arm designs place greater operational demands on site staff, increasing the need for training, coordination, and oversight. Trials with more arms than typically observed are associated with a higher risk due to the added logistical and compliance complexity.

The ability to quantify trial design risk across multiple dimensions marks a shift from intuition to evidence. It enables more grounded, objective decision-making in the early phases of trial planning – when the opportunity to prevent costly challenges is greatest.

And while no two trials are the same, they are no longer incomparable. With enough data, even the most complex trial designs can be seen through the lens of experience – not just from one company or one portfolio, but from the global record of clinical research. This provides a robust foundation for more informed risk management and consistently improved trial outcomes.

To make this new approach accessible and repeatable, the three partner companies have formalised it into a standardised product: Trial Success Assurance. Built on GoTrial’s global clinical data platform, enhanced by Rewire’s analytics and modelling capabilities, and supported by Munich Re’s risk transfer expertise, the product allows sponsors to apply this methodology in a structured, modular way. It is currently being used to support study design evaluation, site strategy optimisation, and data-driven feasibility planning. For more information, inquiries can be directed to tsa@rewirenow.com.

As clinical development continues to grow in complexity, the ability to plan with precision – rather than react under pressure – is becoming essential. By leveraging comprehensive data, modern analytics, and collaborative expertise, this new approach offers a way to bring greater objectivity, foresight, and resilience into trial planning. It doesn’t replace the need for operational excellence – it strengthens it, by ensuring that trials are set up to succeed from the very beginning. In an industry where each decision carries high stakes, the ability to move from judgment to evidence is not just an advantage – it's a necessary evolution.

[1] Getz K. How much does a day of delay in a clinical trial really cost? Appl Clin Trials. 2024 Jun 6;33(6).

[2] Fogel DB. Factors associated with clinical trials that fail and opportunities for improving the likelihood of success: a review. Contemp Clin Trials Commun. 2018 Aug 7;11.

[3] Bower P et al. Improving recruitment to health research in primary care. Fam Pract. 2009 Oct;26(5). doi:10.1093/fampra/cmp037

[4] Hurtado-Chong A et al. Improving site selection in clinical studies: a standardised, objective, multistep method and first experience results. BMJ Open. 2017 Jul 12;7(7):e014796. doi:10.1136/bmjopen-2016-014796

[5] Phesi. 2024 analysis of oncology clinical trial investigator sites [Internet]. Available from: www.phesi.com/news/global-oncology-analysis/ Accessed 2025 Jul 4.

[6] Markey N et al. Clinical trials are becoming more complex: a machine learning analysis of data from over 16,000 trials. Sci Rep. 2024 Feb 12;14(1):3514. doi:10.1038/s41598-024-53211-z

[7] Lee J et al. BioBERT: a pre-trained biomedical language representation model for biomedical text mining. Bioinformatics. 2020 Feb 15;36(4):1234-1240. doi:10.1093/bioinformatics/btz682

[8] Jin Q et al. Matching patients to clinical trials with large language models. Nat Commun. 2024 Nov 18;15(1):9074. doi:10.1038/s41467-024-53081-z

As part of the broader collaboration, Munich Re is developing a novel insurance solution to address one of the most persistent challenges in clinical trials: recruitment shortfalls.

Informed by the data-driven risk assessments generated through the Trial Success Assurance approach, this insurance product provides financial protection to sponsors if patient enrolment falls significantly short of plan.

The coverage is tailored based on quantitative risk indicators – such as protocol complexity, site saturation, and competitive trial activity – allowing for a data-grounded underwriting process. This marks a significant first step toward integrating risk-transfer mechanisms into clinical development, offering sponsors not only greater foresight but also a financial safety net when navigating complex or high-risk trials. If actual enrolment falls below a forecast generated through the Trial Success Assurance (TSA) approach, the policy provides financial compensation for additional costs – such as site reactivation or extended trial duration.

Who it’s for:

Sponsors with tight timelines, complex protocols, or a requirement for budget certainty.

Key features:

● Trigger: Enrolment falls short of TSApredicted baseline

● Coverage: Additional costs such as recruitment costs

● Limits: $3M - $50M

This marks one of the first offerings to apply actuarial risk-transfer models to clinical trial operations – enabling smarter funding, planning, and execution decisions.

With interdisciplinary degrees in automotive engineering and cultural anthropology from the Universities of Stuttgart and Hamburg, SASCHA NETUSCHIL gravitated towards data analytics and machine learning even before the term ‘data scientist’ entered mainstream vocabulary.

Today, as Domain Lead AI & Data Science Services at Bonprix, Sascha stands as the architect of the international fashion company’s robust Data Science and AI domain. Joining as a Web Analyst in 2015, he pioneered its establishment and expansion, now overseeing its operational responsibilities and strategic development across all departments. During this time, he has established high-performing AI and data science teams, scaled up robust infrastructure, established MLOps procedures and strategically shaped Bonprix’s overarching AI activities.

Sascha is a recognised AI and data science spokesperson for Bonprix, regularly sharing insights on AI implementation and innovation at conferences. His hands-on expertise is reflected in the AI and data science projects Sascha has implemented over the past decade, which include generative AI solutions, recommender and personalisation systems, real-time intent recognition, fraud detection, price optimisation algorithms, as well as attribution and marketing mix modelling.

Can you briefly tell us about your journey to leading AI and data science at Bonprix?

I've been at Bonprix for 12 years now. When I started, we had no machine learning or AI capabilities, and it was purely analytics being done.

I eventually recognised we could do more with our data than just building dashboards. We tackled two challenging first projects: building a marketing attribution model to measure campaign effectiveness and developing realtime session churn detection. Both were complex starting points, but they were successful and taught us valuable lessons.

From there, we grew organically. I started as the only data scientist,

though that wasn't even my title initially. We expanded into a team, then a department. Recently, the company consolidated all AI and data science teams into what we call a ‘domain’ to foster collaboration and create a unified approach.

That's the short version. We've worked on projects across many areas, primarily in sales and marketing, but our recent organisational restructuring is expanding our reach into other business areas.

What drove Bonprix's decision to invest heavily in GenAI, and how did you build the business case? It wasn't a strategic top-down decision. We grew organically,

We take a strictly benefit-oriented. approach. If we can identify a. strong use case that saves money or generates revenue, we take it.

project by project, based on clear business value. We take a strictly benefit-oriented approach. If we can identify a strong use case that saves money or generates revenue, we pursue it.

We have plenty of these opportunities because we handle our own product design and development, which involves significant manual work. Each project has delivered strong ROI, so our AI investment grew

naturally rather than through executive mandate.

You have nine people in your GenAI team. How is it structured?

The team evolved from a traditional data science group doing machine learning. We made early mistakes by focusing too heavily on data scientists and not enough on engineers. This led to lots of proofof-concepts and great models, but we struggled to get them into production.

Now we have a more balanced structure: four data scientists (including an offshore team that works as one integrated unit), three data engineers, one software engineer handling internal customer UI’s, plus project management. The offshore arrangement gives us flexibility in role allocation.

We learned we needed to invest more in engineering roles to handle operations and actually deploy models in production environments.

Are there patterns in your data scientists' backgrounds? Do they come from typical maths, statistics, physics backgrounds, or are they more computer science and ML focused?

It's a mix. We have people from mathematical backgrounds and others from machine learning. None come from pure software engineering, as they were already in data science before transitioning to AI.

Moving from classical data science to generative AI requires new skills. We took a learning-bydoing approach rather than formal upskilling programs, growing capabilities through projects. Three of our four data scientists have now built GenAI expertise, and we want all of them to develop these skills as we shift toward more GenAI applications.

Initially, we were perhaps too naive, thinking we could just give data scientists an interesting new topic without realising how much they'd need to learn.

What were the main skill gaps they had to address?

The technology itself isn't that complicated for experienced data scientists or developers. It’s using models, implementing RAG systems, though we haven't explored LoRA applications yet.

The real challenge is working with language. You need to learn prompt engineering, what works and what doesn't. This is completely different from traditional programming, where there's one language, one command, and it either works or it doesn't.

With GenAI, you need much more trial and error. You can't be certain that doing the same thing twice will produce identical outputs. This uncertainty and iterative approach represents a fundamental mindset shift from traditional software engineering or data science work.

You've mentioned the rapid pace of change and new skillsets required. How do you keep your team current with the constantly evolving GenAI landscape?

At some point, you have to step back and relax. New developments emerge daily, and trying to track everything becomes a full-time job. It's like buying any new technology. If you check what's available six months later, your purchase already seems outdated. AI moves even faster, but the principle remains the same.

We focus on finding what's available now and what does the job, then pick the best current option. When the next project comes up, we reassess. Is there something new that works better? But as long as our technical setup delivers results, we don't need to reevaluate every few weeks. Otherwise, you'd never complete any actual projects.

It's like buying a mobile phone. You shouldn't keep checking new offers for weeks afterwards because you'll

always think you made a bad deal. The same applies to AI. You have to live with your choices and focus on what works.

We do monitor what's happening in the field, but we only seriously evaluate new technologies when we have a new use case. Sometimes we revisit solutions from a year or two ago, but that essentially becomes a new project building on an old use case.

Moving to your use cases and the way you are tackling fashionspecific translation challenges. Why do standard translation tools fall short for fashion brands like Bonprix?

Fashion has specialised vocabulary that can't be translated wordfor-word. German, for example, incorporates many English terms from fashion and technology. Take ‘mom jeans’ – a trend that's been popular for years. Standard translation would convert this to ‘mama jeans’ in German, which makes no sense because Germans actually use ‘mom jeans’.

While some translation services might handle this specific example correctly, there are countless special cases where fashion terminology requires nuanced translation. Standard algorithms inevitably fall short when dealing with these industry-specific terms.

Additionally, we have our own defined communication style as a company. We follow specific language rules about what words to use and avoid. This combination of fashion-specific vocabulary and brand-specific language guidelines makes it difficult for standard translation software to deliver appropriate results.

With GenAI, you need much more trial. and error. You can’t be certain that doing the same. thing twice will produce identical outputs. This. uncertainty and iterative approach represents a fundamental mindset shift from traditional software engineering or data science work.

How has your translation tool been able to incorporate fashionrelevant language?

We built a RAG-like system that creates a unique prompt for each text we want to translate. The process works like this:

We have extensive humantranslated texts from previous work as reference material. When we receive a new text, we identify which product category it belongs to: men's fashion, women's fashion, outerwear, underwear, shoes, etc. We then pull relevant example texts from that specific category.

The system scans for specialised vocabulary words and crossreferences them against our lookup table. These terms, along with category-specific examples, get incorporated into the prompt. We also include static elements like our corporate language guidelines – rules about which words to use or avoid, with examples in all target languages.

So the complete process takes the input text, identifies the product category, builds a customised prompt with relevant examples and vocabulary rules, then sends this to the large language model for translation.

How do you ensure consistency and brand messaging while adapting to local market nuances across all your countries?

We've improved our existing process rather than replacing it entirely. Previously, external translators would handle texts, then internal native speakers would review them, so we already had humans in the loop.

We still use human review, but we're gradually building trust in the model. Initially, we maintain extensive human oversight, then reduce it over time as confidence grows. Eventually, we'll likely move to sample-based checking.

My philosophy is that we shouldn't apply different quality standards to AI-generated versus

human-generated content. People often distrust AI due to concerns about hallucinations, but human translations weren't 100% perfect either. When we found translation errors before, we'd discuss them with translators and fix them. We can do exactly the same now.

I think 1% errors are acceptable if you have a process to handle them. We can feed incorrect translations back into our prompts as examples of what not to do, allowing the system to improve.

It's interesting that people apply stricter quality rules to AI than to humans. Humans make errors, too, and if AI has the same error rate, the quality impact is identical. The difference is that we now measure AI errors more systematically than we ever did with human translations.

probably the best approach, though it doesn't scale easily.

Language is inherently more ambiguous than numbers. Surely if you give the same text to 10 translators, you'll get 10 different valid translations?

Exactly. With translation, there's often no clear right or wrong answer. Obviously, some translations are incorrect and contain typos or completely wrong words. But as you said, you can translate the same word multiple ways, and both are fine. One might be subjectively better, but what defines ‘right’?

How big an issue were hallucinations for you, and what did you do about them?

For us, it wasn't a major problem. People are often very afraid of hallucinations, but current model generations have improved significantly from the early days. They still occur, but several techniques work well for us.

We maintain human-in-the-loop processes for many texts, which is

We also have a fashion creation tool where product developers input natural language descriptions and receive images of how items would look. For this, we use a technique where another AI model checks the first model's results against specific criteria, ensuring only the item appears (no humans), showing front views, etc.

Another large language model or image generation model evaluates whether outputs meet these rules. If not, the process restarts. This creates a longer user experience, but using one GenAI model to validate another's results is a widely adopted technique for quality control.

Is that automated?

Yes. Users have a UI where they type what they want, and the interface helps with prompt creation so they don't need to think about prompting. The entire process of generating and reassessing images runs automatically in the background, then delivers the result.

What were the biggest technical and linguistic hurdles when building the system?

Initially, we saw many language mistakes, which were disheartening. We had to figure out how to teach the model what we didn't want, but this wasn't well-documented anywhere. We couldn't easily find examples of what works versus what doesn't.

We hit prompt size limits quickly. As prompts get bigger, results often deteriorate because models can't effectively use all the information in oversized prompts, even with large context windows. This led us to create individual prompts for each text rather than one massive prompt.

We also had to integrate these models into our development environment using APIs rather than UIs.

But, honestly, compared to our earlier machine learning projects, this was easier. Our current Gen AI processes aren't fully automated, and there's still a human clicking ‘send’. The background processing is much simpler than something like a fully automated personalisation model that calculates conditions for each user daily and pushes

directly to the shop.

So we've encountered fewer technical hurdles with GenAI than with our previous machine learning use cases.

How is GenAI helping with accessibility, compliance and making your website more disability-friendly?

Throughout the EU, laws require web content to be accessible to everyone. For fashion e-commerce sites with thousands of images, this creates a challenge. People with vision impairments need text descriptions of all pictures so their browser plugins can read them aloud.

We initially handled this manually, paying people to write descriptions for every image. As you can imagine, this became very expensive.

This is exactly what multimodal models excel at – taking an image and describing it in text. We've built a system that automatically describes our product images, though we had to fine-tune it beyond the vanilla model capabilities.

The result saves us significant money on what would have been purely manual work just five or ten years ago. We're very happy with this solution.

What's your technology stack for GenAI applications? Are you using cloud, open source models, or proprietary?

We primarily work on the Google Cloud Platform and try to use their available models. When we started, it was basically OpenAI or nothing, so we set up an Azure project solely to access OpenAI APIs while doing our main work on Google Cloud. We still use OpenAI models for some use cases, but for new projects, we look at Google's models first.

From my perspective, there are differences in models such as pricing and quality. But for standard

use cases (not super advanced reasoning), it doesn't matter much. You can get good results with different models.

For image generation, we use Stable Diffusion, though we're exploring Google's Veo for text-toimage and video capabilities, which are quite impressive. It's not in production yet.

We reassess options for each new project but don't constantly reevaluate existing ones. Since the field is relatively new, we don't have truly outdated processes yet.

We use mostly proprietary models rather than open source. It's about administrative overhead. Open source sounds great because it's ‘free’, but in a corporate context, you invest significant work and money in maintenance and administration.

And since you're not putting customer data through these models anyway, the open source route probably doesn't make sense? Exactly. Though when using OpenAI on Azure, they're also compliant with data regulations. From a compliance perspective, we can't use services hosted in the US, so we wait for European server availability, which is usually a few months after a US rollout.

There's still the ethical question of handling truly sensitive data. You probably shouldn't fully automate with AI for really sensitive information, like in healthcare.

In our case, we don't use customer data currently as our biggest use cases focus on products and articles. But our customer data is already stored on Google Cloud for other services, so it's covered under the same terms and conditions that keep data safe.

The APIs we use, like OpenAI's, don't store or use our data for further model training. Every business needs this guarantee, so providers couldn't sell to corporations without including it in their terms.

Are there computational costs and scaling challenges with proprietary models in your use cases?

Our costs haven't been significant because most use cases target internal processes. We have maybe 1,300 colleagues, with roughly 100 using these tools. That's very different from customer-facing services used by millions.

The cost factor increases dramatically with customer-facing applications. For us, currently, looking at our overall data warehousing and IT costs, GenAI doesn't have a major impact.

Looking to the future, what other potential GenAI use cases are you considering?

We've done a lot with text generation and now want to focus more on image generation. Beyond the inspirational images for product development I mentioned, we're working on automated content creation.

Currently, adapting images for different channels, such as our homepage, app, and social media, requires manual work. You might need to crop an image smaller while keeping the model centred, or expand it to a different aspect ratio by adding background elements like continuing a wall's stonework. We can now do this with AI visual models.

We're also exploring video content creation from images or text. Imageto-video is more interesting for us because we want to showcase our own fashion products. Text-to-video might generate nice outfits, but they wouldn't be our products. This is possible today with significant development investment, but it's not our top priority.

Our priority is creating short videos from existing images for our shop and social media. There's high potential in content creation because we need variants for personalisation. For example, a fall collection teaser featuring a family appeals to parents, but single people

or men's wear shoppers might prefer content showing just a man or a couple. AI can help us create these variants from existing content.

Regarding customer-facing AI systems, like the ‘help me’ chatbots getting lots of publicity, I think culture is changing. Eventually, people will expect every website to have natural language interaction capabilities. I'm aware of this trend, but given our finance-focused approach, the ROI wouldn't justify the investment right now. We're focusing on internal processes where we can achieve better results.

Still, we shouldn't ignore this shift. People increasingly use GenAI apps on their phones for everything, and they'll expect similar capabilities from other technologies, including websites and maybe even cars. Technology advances faster than culture, but culture does eventually catch up.

How are you measuring success and judging ROI for your GenAI initiatives?

Most of our first wave projects directly save money on external costs. For example, we no longer pay external translators because the savings are right there on the table. We can calculate exactly how much we save per month or year versus our internal costs, which are much lower. These use cases are very easy to justify.

It gets more complicated with projects like our image creation tool for product development. This doesn't replace external costs; it just enhances the creative process, which isn't easily measurable.

For these cases, we track usage levels. Since it's built into a workfocused UI, people wouldn't use it unless it's genuinely helpful for their job. Unlike general tools like ChatGPT, where you might waste time generating funny images, our tool serves a specific purpose. The novelty factor wears off, so sustained usage indicates real value.

Of course, there's initial higher usage as people try it out, but we monitor long-term adoption patterns. However, this doesn't give us the same level of investment assurance as direct cost savings.

This is a general IT problem, not AI-specific. Some systems are essential – people can't work without them – but others are harder to quantify. Take our ML recommendation features in the online shop. You can measure how many people use recommendations before purchasing, but that's probably not the only factor in their decision.

To really prove ‘I made X more money’, you'd need constant A/B testing, which has its own costs. Part of your audience wouldn't see the feature during testing periods.

Sometimes it's hard to measure effects, and we had this challenge before AI. At some point, you have to be convinced that something is valuable based on available evidence and what you observe.

Are there any final thoughts you'd like to share?

We're building these teams with lots of interesting use cases. It's a good environment for trying new things. The company provides guidance on which areas to focus on, but how you implement solutions and experiment with systems is quite free. We really get to see what works and how to achieve success.

We're always looking for good talent, and people can check our career page if interested. I think we have a great setup for experimentation, and my team members really enjoy that freedom.

I think culture is changing. Eventually, people will expect every website to have natural language interaction capabilities.

If you could build precise and explainable AI models without risk of mistakes, what would you build? Rainbird solves the limitations of generative AI for high-stakes applications.

A FRACTIONAL CDO’S ANTI-HYPE GUIDE TO DATA & AI FOR SMALL AND MEDIUM-SIZED ENTERPRISES

TONY SCOTT is a seasoned technology executive whose career ranges from software developer to Chief Information Officer. Beginning his journey as a consultant C/C++ developer at Logica, Tony built a deep technical foundation that led him into technology leadership roles at NatWest Bank, Conchango and EMC. Working as global enterprise architect, group digital transformation director and CIO at engineering companies Arup, Atkins and WSP, Tony championed data-driven decision-making. A consistent thread throughout Tony’s

career has been his focus on leveraging data and emerging technologies, especially AI, to unlock business value, drive innovation and improve outcomes. With his focus on bridging technology and business strategy, Tony advocates for pragmatic digital transformation where technology serves clear business goals. Today, he continues to shape the future of the data-driven business as an advisor, fractional CxO, board member and speaker, helping organisations harness the power of data and AI for sustainable competitive advantage.

TONY SCOTT

What are the biggest mistakes traditional small and medium-sized companies make when implementing AI?

The biggest mistake is FOMO – fear of missing out. Companies rush into AI without a plan, pursuing ‘AI for AI’s sake’. They’re missing critical data foundations: proper infrastructure, clean data, and understanding of their existing information systems.

Many see AI as a magic wand, especially after ChatGPT’s launch. This hype-driven approach ignores essential elements like governance, security, privacy, and ethics – all crucial for successful AI implementation.

My mantra comes from lean startup methodology: think big, start small, scale quickly. You need the vision, but companies often jump straight to big-ticket items, which becomes costly.

The other major mistake is treating AI as a technology project instead of a business transformation. AI should deliver measurable business benefits and ROI. You need leadership buy-in, which is actually easier in SMEs than large organisations, but still essential.

Can you share examples of companies jumping into AI without a proper strategy?

the wrong problems – ones that don’t advance your business strategically.

The ‘garbage in, garbage out’ principle has never been more relevant. Companies need sufficient, clean, consistent data. They need to understand their system architecture – are data sources tightly or loosely coupled? Often, they’re tightly coupled, requiring data hubs, warehouses, or lakes to centralise and clean information before building AI on top.

Security and privacy are critical. Customer data must comply with GDPR, Cyber Essentials, and ISO 27001. Without addressing business drivers, KPIs, and outcomes, you’re just doing technology for technology’s sake.

The fundamental question: What’s your current data health? Do you have dashboards, alerts, and analytics? Are you using data effectively before considering AI? Your entire data estate – business systems, IT systems, operational systems – all contain data that needs proper storage, access, and querying capabilities.

Data duplication creates major issues. If customer address changes don’t propagate across all systems, you get inconsistent information.

If every company just looks down at the. bottom line,.cutting costs and. automating, we’ve got a boring.future. ahead. Where.humans can step in is. around innovation..

I see ‘solutions looking for problems’ constantly since ChatGPT. A furniture manufacturer client kept insisting, ‘We want AI’ without defining their goals, KPIs, or business model. When I pressed deeper, they simply feared competitors had AI and felt they needed to catch up, but had no idea what to do with it.

A colleague worked with an e-commerce company desperate for an AI chatbot to solve customer problems. They spent heavily on implementation, but their real issue was order fulfilment. The chatbot actually made angry customers angrier. They eventually fixed the fulfilment problem – nothing to do with AI – and dropped the chatbot entirely.

Then there’s a flight training company with an impressive vision: using AI to connect student classroom behaviour with aircraft performance to improve training. Ambitious and transformative. But they had no data strategy, technology strategy, or IT foundation in place.

These failures result in wasted spending on tools, vendors, and staff for undefined problems. Projects get abandoned without delivering benefits. Since AI enables better human decision-making, building systems on faulty data produces wrong answers, eroding trust. The whole system can collapse like a house of cards.

The biggest missed opportunity? Using AI to solve

SMEs often use Power BI overlays or low-code/no-code solutions, but the core principle remains: clean, accessible data that drives business decisions and provides historical trends for AI prediction.

How do you assess an SME’s AI readiness in traditional sectors where digitisation might be limited?

I start with a leadership mindset. What’s their thinking around AI and data? Is it aligned to business strategy, or are they seeing it as a bandwagon? I always begin with business context first – what are their KPIs, objectives, and business outcomes?

A common thing I see is the desire to use AI for cost optimisation – very much bottom line focused. CFOs are looking down at the bottom line, wanting to automate and cut costs. But they should also look up at the top line for innovation, revenue growth, and newer business models. Really opening it to transformation.

I’m assessing their understanding and perhaps taking them on that journey to more ambitious but beneficial outcomes. If every company just looks down at the bottom line, cutting costs and automating, we’ve got a boring future ahead. Where humans can step in is around innovation.

The data estate and digital foundations all need evaluating upfront. There may be work needed before you can even consider AI. On the human side: leadership, culture, governance, ownership, compliance, depending on industry.

SMEs often think they’re too small for AI, but

TONY SCOTT

they’re sometimes surprised. There’ll be pockets where someone’s doing something quite advanced, perhaps not in their core role. You can gauge technical capability already.

I use a four-stage framework: digitise, organise, analyse, optimise – but in continuous loops. I’m currently working with a 30-person M&A company in London. From the outset, they understood they’re too small to automate people away. They want to make existing people more valuable, giving clients much better service. Very mature thinking.

What’s your framework for identifying high-impact AI use cases for SMEs?

I use a 90-day discovery phase about getting internal buy-in, momentum, and proving we can achieve value.

Month one is discovery and diagnosis. We sit down with key stakeholders, find their objectives, challenges, and decision-making processes. Are they data-driven or gut instinct? We do a data technology audit and map business objectives and goals.

Month two is prioritisation. From those business goals, we’ve probably got five to ten use cases –that’s the best number. We prioritise using a matrix: cost-benefit analysis, feasibility, and time to value. Hopefully, one or two key use cases come forward. We consider data privacy, regulatory issues, and risk, which might steer us away from particular use cases early. By month two’s end, you’ve picked a use case to pilot. Month three is designing that pilot. All metrics should be business metrics, success metrics, not about algorithms or model accuracy. It’s about reducing customer churn, machine downtime, whatever’s relevant to that industry. You get business ownership, buy-in, and authorisation to start work.

Everything must be defined in business language, especially budgeting. You want key stakeholders, including the CFO, involved. Look for relevant quick wins, measure ROI in business terms, but don’t have a big bang mentality – start small.

Define use cases in CFO-friendly language. You must score it and talk about measurable returns. Whether it’s customer churn, reducing delays, costs, or cycle times, it must generate genuine ROI where maintenance costs are less than benefits.

You also need to lay out a 24-months roadmap. Not fixed where you ‘arrive’ at AI, but ongoing because this world changes rapidly. You need quick wins upfront, proving investment value, but also take that longerterm view in the right direction.

Traditional leaders sometimes expect AI to be a magic wand. How do you help them understand that it requires investment like any business function? At the beginning, we talked about FOMO – companies rushing into AI with this sense of ‘we’re doing AI, we

need to keep up’. But you should only do AI to solve real-world pain points and become a better organisation.

Some SMEs think they’re too small – only bigger players have the money. But I think it’s the opposite. SMEs can move faster than bigger competitors.

There’s a mindset about cost-cutting and automation. To bring your organisation along for the long-term journey, it needs to apply across the board –from middle management down to frontline staff. It’s about making existing staff more valuable. There are so many news stories about big organisations cutting thousands of jobs with AI as the culprit.

SMEs are leaner anyway and don’t have that ability. If they can make staff more productive, doing things where humans add value – automating tasks rather than roles – they can move much faster than bigger competitors and do more valuable things for clients.

Another mindset is ‘we need a data scientist’. There’s a rush to hire AI experts and ‘give us AI’. It doesn’t work that way. You need data foundations first, your current analytics approach, before you have the maturity to bring in data scientists. It’s a journey you work through.

Don’t see AI as just a technology implementation. Don’t tell your CTO, ‘Implement AI for us’. It needs true business leadership shaped in proper business terms.

What data foundations and prerequisites must companies address before implementing AI? There are prerequisites – non-technical, cultural, and strategic. It’s what we were saying about not seeing AI purely for automating today’s ways of working, tomorrow. You can do that, but the much more exciting thing is augmenting current ways of working and freeing people up to give richer experiences internally or externally. Transforming your organisation into something new tomorrow.

If you’re solving problems with AI, you need to articulate those problems in business terms. You need ownership and accountability. Always having a business owner is really key.

The data piece – having clean, accessible, relevant data. The garbage in, garbage out principle. If AI is helping you make better, faster business decisions, you must trust the data. The data has to be clean first. A lot of organisations aren’t in that place already.

Just be sensible about team size or what you’ll have inside versus external partnering. Have expectations about setting up a function where you are today. You can be ambitious long-term, but know it’s a journey together.

The data has to be clean first. A lot of. organisations aren’t in that place already..

What are the key differences in AI strategy between a 500-person company versus a 5,000-person company?

I’ve worked in 5,000-plus organisations and actually think it’s more challenging in larger organisations. They have multiple business units, potentially competing priorities, and different visions. You’ve got a C-suite sitting across all that, and it’s really key to get buy-in from every single C-suite member. It often becomes a business change. Sometimes the technology side is easy, but the human side and getting buy-in are much harder in bigger organisations.

They’ll have much more complex technology estates, possibly legacy systems going back years or decades. They’ve got more resources and larger technology teams. You can call upon internal teams, and they’ll be more specialised. Better ability to invest, fund experiments, and innovate. They’ll possibly have better access to large technology players – AWS, Microsoft – maybe account managers they can leverage.

Smaller companies are much more centralised and have more tactical use cases. Leadership may not be C-suite level, but it’s more informal and can sometimes move quicker. Technically, it’s a more cloud-first, plug-and-play approach. Internal teams are leaner but sometimes wear multiple hats – more generalists, actually more skilled in a sense. But they’re more cost-

conscious, less open to experiments because they need quicker ROI.

In partnerships, they’re more open to bringing in partner companies to augment skills and provide knowledge transfer, hopefully forming strategic relationships.

To sum up, it can actually be easier. A smaller company will be more agile and, with the right approach, can perhaps move much faster.

Can you share an example where your strategic assessment completely changed what a company thought they needed to do with AI?

Going back to that manufacturing company example, they got stuck right at the beginning, saying, ‘We want AI, we want AI’. They were very scared that competitors had AI and would outpace them without really understanding what that meant.

It was really about rolling all the way back, focusing on the KPIs, getting data foundations in place, and getting them in fit state before even beginning that AI journey. Then, really mapping out those AI use cases and ROI on each, linking them to actual true business objectives, which was a challenge. They had different

areas with competing priorities, but it was really getting that leadership, that consistency of view and vision across the organisation. It was quite a journey.

We’ve been doing traditional data analytics for many years, and AI doesn’t invalidate those historical use cases – it just builds upon them. What’s the starting point? How does a company evaluate current performance? Things like dashboards and analytics –their relevance doesn’t go away because of AI. AI just takes you into more predictive worlds, doing more advanced stuff on top.

So in assessing maturity for AI, it’s always useful to see where the company is today in standard data analytics functions. If they don’t have that, it may make sense when sorting out the data estate to build those standard analytics functions before you even consider richer AI uses.

Can sophisticated, accurate reporting help organisations discover hidden business problems? Absolutely. In terms of reporting and analytics, there’s nothing new there, but some companies don’t have the infrastructure in place to do that well. You need the board asking questions about current business, current performance, and having that historical performance as well. I would always get foundations fixed first before rushing down the AI path.

Things like generative AI and large language models have given us new views on AI in recent years, but the same applies. If you’re training language models on internal data that’s not clean, outputs won’t be trustworthy.

A lot of organisations want to be AI first, but . they should never be human last. That’s really. a mantra and the cultural change management . side of it. .

I would fix today’s world – that view of the business today, standard analytics and reporting – then ultimately move toward a team that has all those elements. There are core foundational roles in data engineering – people doing actual plumbing of your data, making sure it’s clean. You need those in place first before you bring in analysts, then before you bring in data scientists on top.

What blind spots do non-technical business leaders have when evaluating AI opportunities, and how do these lead to failures?

One would be what we’ve talked about – AI is all about automation and cost saving. It’s ignoring the benefits of augmentation and the human in the loop. My preference

is to look for both. If you’re bringing your company and staff along with you, really look for opportunities to augment the mundane, rules-based, repetitive work they do to make them more valuable as people. There’s that human element – don’t just treat it as a technology implementation.

The other one is data complexity. Yes, how good is your data estate? But if you don’t have data engineers in place to manage that, it’s going to be very difficult moving forward. Don’t just say to your CTO, ‘AI is a technology project; implement AI’. It’s only about business outcomes. You’ve got to specify everything in terms of how it’s going to move the business forward. What’s the business return on investment? Have you prioritised things in a proper business way?

There’s a change management side to it. Don’t underestimate that – this applies to all digital transformation, not just AI. There’s a human element. You’re taking your organisation on a journey, so make sure you’re giving proper attention to change management.

How do you handle cultural resistance to AI in traditional sectors?

I mentioned earlier the news stories about threats to jobs. There’s genuine fear out there. In 2016, I was giving conference speeches in the engineering industry, making the case that automation is automating tasks, not roles. Yes, some people just want to cut costs and automate roles, but is that really the right decision? Look at every role and its tasks – you want to free people up to be more value-adding, things humans excel in: creativity, leadership, customer empathy, customer support. Free them from the burden of repetitive tasks rather than going straight down the path of cutting costs, cutting people.

To do that, you need to engage your staff, let them know your plans around AI, and assuming they’re not going to cut people, engage that front line early. Show them the quick wins you’re planning and how you’re going to improve their roles. Talk to them openly, honestly, and often. That phrase ‘human in the loop’ should really be the default.

Celebrate those wins and show people how AI is improving the internal environment. Try to remove that sense of it being a threat. A lot of organisations want to be AI first, but they should never be human last. That’s really a mantra and the cultural change management side of it.

Most organisations have company values reinforced in town halls, company meetings, and maybe posters around buildings. Just anchor everything you’re doing in those company values, so people see you’re not going against those.

AI companies talk about easy human replacement, but it should be more about enabling superior service delivery.

TONY SCOTT

How do you balance short-term wins with long-term AI strategy?

Both are important, and it is that balancing piece. You do need quick wins to set the scene and make the case. Without a long-term strategy, you’re not going in the right direction. If you just do quick wins, a lot of companies get stuck in endless loops of pilot after pilot after pilot. They build technical debt ultimately and fragmented solutions that don’t take them where they need to go.

On the other hand, if you just go for long-term transformation and forget the quick wins, people take their eye off the ball, you lose momentum, and potentially develop something that in 18 months is just not relevant. You did the wrong thing, or the market moved, or technology moved.

It is those quick wins that really give you proof of concept momentum. Perhaps you do experiments as you go to prove hypotheses, but you need that longterm anchor as well. That anchor is all about business value. Make sure everything you’re doing – be it a quick win or long-term strategy – is specified in terms of the business value you’re delivering.

Those quick wins are really the building blocks. We like to say the quick wins are the proof; the strategy is your plan for success.

What’s the ideal structure for a data and AI team in a 100-500 person traditional company?

For smaller companies, I recommend a hub-andspoke model. They might not afford a full-time CDO, so a fractional CDO can bring experience from larger organisations and cross-industry insights while working part-time. Full-time is possible if the budget allows and you find the right person.

The hub needs one or two data engineers handling the ‘plumbing’ – data pipelines and infrastructure that stays in place long-term. That’s crucially important. Then I build data analysts or BI specialists as spokes, ideally embedded in business units. They may work part-time across departments, depending on how many you have. The key is keeping them close to the business so they understand daily dynamics, challenges, and problems. They answer business questions through data.

Only when you’re ready – when pipelines are solid, engineers are doing their jobs, and infrastructure is sorted out – do you bring in data scientists. They handle predictive models, simulations, preventive maintenance, customer insights, whatever’s relevant.

Sometimes they partner with technology companies for strategic skill augmentation on an ad-hoc basis.

How important is domain knowledge when hiring for data roles in SMEs?

There are bigger sectors where domain knowledge transfers well. Financial services have many elements – moving from insurance to banking is easier than jumping to completely different industries. Someone with an engineering career understands infrastructure, predictive maintenance, and can move between subsectors within that wider sector. But moving from engineering to financial services or health technology would be much more difficult. So yes, domain knowledge applies at bigger sector levels, but moving within them is probably easier.

What are the main differences between implementing AI in traditional sectors versus digital-first industries? Digital-first industries tend to be more data-driven. They’re newer industries built on data, so measuring performance and developing predictive solutions is inherently easier. They’re already thinking analytically before using AI and have a better analytics sense.

Traditional sectors can still make that journey –that’s where digital transformation comes in. But it’s a much harder ask, as much human as technology. You’ve got to convince them that historical working methods are less appropriate for the future. Senior management, middle management – it can be challenging bringing them on that journey. You’ve really got to start small, prove use cases, and do it non-threateningly.

There are real complexity differences, too. With digital solutions, you’ve got user data at hand and can easily measure performance and behaviour over time.

But I’ve worked on systems measuring traffic behaviour on motorways, tracking vehicles, predicting changes and traffic impacts. It’s much more challenging. You need many simulations, much more data, factoring in weather, climate, marrying together data sets that might never have been combined before. It’s more complex, but outcomes can be much more transformative.

I’ve done similar work with airports, mapping transport systems – road, rail – showing impact on arrival patterns and check-in queue lengths. Many systems that perhaps had never been considered for combined analysis.

The rewards can be quite transformative. It’s more creative, using design thinking and hackathons with business teams to answer questions we’ve never been able to answer before, or discover insights we didn’t even know existed in our data.

TARUSH AGGARWAL is one of the leading experts on empowering businesses to make better datadriven decisions. A Carnegie Mellon computer engineering major, Tarush was the first data engineer at @Salesforce.com in 2011, creating the first framework (LMF) to allow data scientists to extract product metrics at scale.

Prior to 5X, Tarush was the global head of data platform at @WeWork. Through his work with the International Institute of Analytics (IIA), he’s helped over 2 dozen of the Fortune 1000 on data strategy and analytics.

He’s now working on 5X with a mission to help traditional companies organise their data and build game-changing AI.

You’ve focused heavily on traditional, non-digital sectors. Was this a conscious decision? How did you end up working mainly with real-world-first businesses?

The landscape has undergone significant changes over the last decade. Digital-first companies could adopt data and AI early because they had the resources and technical expertise. But traditional businesses are different.

These companies are naturally technology-averse. They employ mostly blue-collar and grey-collar workers who view technology as a risk. Their buying decisions prioritise risk minimisation – they buy what everyone else buys. Many have only recently invested in digitalisation through platforms like

SAP, Salesforce, and Oracle.

The result? Massive data silos and fragmentation. Different teams can’t access different datasets. Integrating new vendors with SAP and building custom APIs is expensive and complex. These businesses are now suffering from the very fragmentation they created.

However, there’s a tailwind: every company is considering AI. The reality is you can’t have an AI strategy without a data strategy. If you don’t understand your data, AI won’t help. Over the next five years, these companies will invest heavily in data platforms, cleanup, and AI products.

What are the main problems you see when traditional companies

start from scratch with data and AI?

Let me break this down from a decision-maker’s perspective. Everyone thinks they’re sitting on a gold mine of data and can activate it overnight. The reality is very different.

Companies focus on the ‘last mile’ – AI applications that create value when embedded directly into business operations. Think supply chain optimisation, inventory management, customer churn prediction, or demand forecasting. This is where the real value lies.

But here’s the mistake: they skip the foundational work. Before deploying AI applications, you need clean, centralised data in an automated warehouse with structured models that give you a clear view of your business.

Instead, most companies buy Power BI – Microsoft’s popular enterprise tool – and connect it directly to SAP or Salesforce to build basic dashboards. They think this makes them data-ready, but it’s just lightweight reporting on top of existing systems.

That won’t work. Just like posting on Instagram doesn’t make you a marketer, a few dashboards don’t make you data-driven. Companies want the end result without investing in the foundation.

If a CEO or CTO at a medium-sized company calls you and says, ‘We’ve bought data tools like Power BI or Snowflake, even hired a data analyst, but we’re not getting any value. Nothing’s working as expected’ – what’s your response? A data warehouse is an excellent tool – it’s the foundation for storing all your data. A data analyst’s job is to analyse that data and generate insights. But here’s the problem: if you’re a traditional business, you likely have manual processes, data entry issues, and missing data gaps because some processes still run on Google Sheets.