Expect smart thinking and insights from leaders and academics in data science and AI as they explore how their research can scale into broader industry applications.

Key Principles for Scaling AI in Enterprise: Leadership Lessons with Walid Mehanna

Maximising the Impact of your Data and AI Consulting Projects by Christoph Sporleder

How AI is Reshaping Startup Dynamics and VC Strategies by KP Reddy

Helping you to expand your knowledge and enhance your career.

Hear the latest podcast over on

CONTRIBUTORS

Anna Kopp

Maha Reinecke

Stefanie Babka

Tamanna Haque

Didem Un Ates

Nicole Janeway Bills

Sonja Stuchtey

Rosanne Werner

Agata Nowicka

Łukasz Gątarek

Bettina Knapp

Henrieke Max

Philipp Diesinger

Francesco Gadaleta

EDITOR

Damien Deighan

DESIGN

Imtiaz Deighan imtiaz@datasciencetalent.co.uk

DISCLAIMER

The views and content expressed in Data & AI Magazine reflect the opinions of the author(s) and do not necessarily reflect the views of the magazine, Data Science Talent Ltd, or its staff. All material is published in good faith. All rights reserved. Products, logos, brands and any other trademarks featured within Data & AI Magazine are the property of their respective trademark holders. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form by mechanical, electronic, photocopying, recording or other means without prior written permission. Data Science Talent Ltd cannot guarantee the accuracy of claims made by advertisers and accepts no liability for any loss or damage of any kind caused by this magazine.

As we present our 10th issue of Data & AI Magazine, we find ourselves at a meaningful juncture that calls for both reflection and forward vision. Over the past nine issues, we’ve published more than 120 articles, bringing you insights from the forefront of enterprise data and AI transformation. This milestone offers us an opportunity to consider not just how far the field has advanced, but also who has been the catalyst for that advancement.

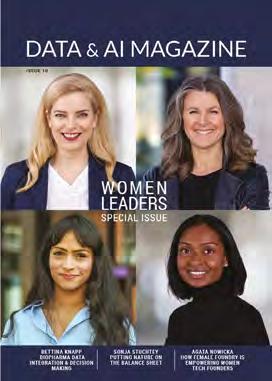

It was a deliberate choice to dedicate our landmark 10th issue to women leaders in data and AI. The contributions of women in this space have been profound, yet often underrepresented in industry narratives. The leaders featured on our cover and throughout these pages aren’t just excelling in their respective organisations – they’re reshaping how we think about data culture, AI adoption, and technological leadership itself.

In this issue, we hear from Stefanie Babka of Merck on winning hearts and minds to build a true data culture – a reminder that technology transformation is fundamentally human. Tamanna Haque from Jaguar offers a refreshingly candid perspective on balancing professional excellence with motherhood in the fast-paced world of AI. Microsoft’s Anna Kopp provides a framework for successful enterprise AI adoption that emphasises practical implementation over theoretical potential.

A recurring theme in this issue is the crucial role of product management in AI success. Two of our featured leaders highlight this often-overlooked aspect: Maha Reinecke demonstrates how product management principles are transforming data initiatives at Reinsurance Group of America, while Henrieke Max from Delivery Hero addresses the critical gaps in AI product management that can make or break implementation efforts.

We’re also featuring Agata Nowicka, who shares how Female Foundry is creating new pathways for women tech entrepreneurs, and Bettina Knapp, another leader with a product background, whose work at Boehringer Ingelheim demonstrates how integrated data systems are revolutionising biopharmaceutical decision-making. Rounding out our featured contributions, Sonja Stuchtey presents a compelling vision for integrating environmental valuation into investment practices at Land Banking Group – illustrating how data science is expanding into increasingly diverse and impactful domains.

What strikes me about this collection of voices is not just the technical expertise they represent, but the breadth of perspective they bring to our field. These leaders are applying data and AI to solve fundamentally different problems across pharmaceuticals, automotive, technology, insurance, venture capital, and environmental sectors. Their work comes at a critical moment for our industry. As Microsoft CEO Satya Nadella recently acknowledged, despite remarkable technical advancements and massive investments in AI, the technology is still not consistently delivering tangible value for businesses and consumers.

Nadella suggests AI will only prove its worth when it manifests in measurable economic growth and practical efficiencies, and not just in technical capabilities.

This value gap underscores why diverse leadership in data and AI isn’t just about representation. It’s about effectiveness. The leaders featured in this issue approach AI implementation with different questions, priorities, and frameworks for success. They demonstrate that the future of our field depends not just on algorithmic innovation but on diverse thought leadership that can translate technical possibility into practical value.

As Data & AI Magazine enters its next chapter, we remain committed to highlighting the full spectrum of voices advancing our industry. The perspectives shared in this issue remind us that the most transformative applications of data and AI come when we have diverse teams asking different questions and approaching problems from multiple angles.

Thank you for being part of our journey through these first ten issues. We look forward to continuing to bring you insights from the pioneers – of all backgrounds – who are defining the future of enterprise data and AI.

Damien Deighan Editor

ANNA KOPP has been director of Microsoft Digital at Microsoft Germany since 2015 and has been with the company since 2004 in multiple global roles in sales and operations. She’s the branch manager of the main office in Munich, global co-chair of Women@Microsoft, co-founder and board member of Female CIO Circle, on the advisory board of CIOnet CIDO Women, and vice chair of the Board of Directors for Münchner Kreis.

Anna comes from Sweden where she studied international communication at the University of Stockholm, and has lived in Germany since 1992. In 2020 she was voted one of the most inspiring women in Germany, in 2023 she was chosen by The Promptah AI magazine as one of the Top 100 Role Models for a Better Future, and she has just been named one of the most innovative women in AI and Data by CIO Look magazine. She is a specialist in the new world of work and AI from a cultural, political and practical perspective. She advocates flexible working models and is a gender equality role model in the German tech industry. In her spare time she enjoys off-road motorbiking and sings in a rock band. She even won a Rock Oscar as Germany’s best singer once upon a time!

What’s the most important thing to consider when driving AI adoption in large enterprises?

ANNA KOPP

Apart from the technology itself, transformation and accountability are key. You can’t roll out new technology without guiding people through the change. It’s about more than just introducing tools; it’s also about re-evaluating and adapting processes.

Many companies treat transformation as something they can squeeze in whenever there’s a bit of spare time. But transformation isn’t a hobby. You need to assign someone to take ownership, manage the process end-to-end, and prioritise the starting points. You have to decide what technology will be used, who will use it, and how processes and workflows will need to change.

Another critical aspect is workforce upskilling. Implementing AI means addressing a skills gap because the technology is so new. Prompting AI to do tasks might seem simple at first, but there’s nuance in crafting effective prompts. AI is excellent at executing what you ask, but not necessarily what you intended. Learning how to prompt effectively is a skill that improves with consistent use.

Using AI involves more than a one-off training session: organisations need to allocate the time for employees to learn and integrate AI into their roles.

Should organisations have a chief AI officer or an equivalent role? And why is it important?

Absolutely. You need a chief transformation officer or a similar dedicated role; someone with the skills to drive change. Managing transformation involves specific competencies, like milestone tracking and prioritisation, which are distinct from standard management skills.

Many companies, including Microsoft, have introduced roles like the chief AI transformation leader. We did this three months ago because the skills required for AI transformation are unique. It’s a significant investment – these roles demand highly skilled individuals, and they don’t come cheap – but if you want the job done well, you need the best people. Having someone with expertise ensures the transformation happens efficiently and stays within budget.

This role should also report to IT, specifically to the chief information officer (CIO). It’s similar to how cybersecurity typically reports to the CIO through a chief information security officer (CISO). AI transformation is a technology-driven process, and IT is best equipped to oversee it.

Additionally, AI implementations require cross-functional collaboration. For example, integrating AI involves data management, but it also needs to align with cybersecurity measures. These teams need to work together seamlessly, and it’s the responsibility of the transformation leader to facilitate that collaboration.

Does Microsoft have one chief AI transformation officer in a specific region, or are there multiple transformation leaders globally?

About eight years ago we implemented the Chief Transformation Office, starting with one global chief transformation officer. Each country then had its own chief transformation lead, supported by a few program managers. This structure was designed to oversee the overall digital transformation, which was a major focus at the time.

That transformation was significant, but now I think a better term is evolution. We’ve already digitally transformed, and now we’re evolving into the age of AI. Rather than having a dedicated chief AI transformation lead for each market, we’ve created a global organisation to drive the programmatic approach. This structure breaks things down and works directly with regional sales segment leads, often collaborating with roles like sales excellence managers and chiefs of staff in each market.


When it comes to piloting AI solutions in large companies, who should be involved?

There are two critical aspects to consider: testing groups and governance boards.

Before you even start piloting, I always recommend establishing a governance board. This is essential because, once you begin, issues will inevitably arise. For instance, employees might feel disadvantaged, or unexpected challenges may emerge. A governance board is there to address these situations.

The board should include representatives from IT, HR, the works council (if applicable), your data privacy lead, and possibly your legal department. Often, the data privacy officer reports to the legal department, so they might serve as the representative. It’s important to have clear communication channels so employees know how to reach the board with concerns or questions. This ensures there’s a structured process for handling unforeseen issues that arise during implementation.

Once the governance board is in place, you can move on to testing. At Microsoft, we decided that the people who would eventually sell the product should be heavily involved in the testing phase. Since they work with it daily, their feedback is invaluable in identifying what works and what doesn’t. Alongside them, we included IT, HR, engineering, and the works council.

For countries with works councils (there are around 13 in Europe), it’s especially important to involve them early. Works councils represent employees’ interests in labour relations and play a significant role in determining whether the technology can be implemented.

This is particularly crucial if the technology wasn’t developed in Europe. If you’re adopting software from the United States, China, or India for example, the engineers building it may not fully understand the specific regulations in various European countries. Early feedback from works councils helps ensure the technology or its configurations comply with local regulations, and can be adapted if necessary.


If AI has the potential to replace human workers, would organisations like works councils ensure that this doesn’t happen?

Works councils are there to protect employees and their jobs. But displacement isn’t going to happen overnight. Instead, it will evolve gradually, and specific types of jobs – particularly those that are highly data-driven – may be more susceptible.

Take translators, for example. That’s a role where AI has made incredible strides, and it’s now capable of handling translations very quickly and accurately. So it might not be a career someone wants to focus on today. But it’s not about all jobs disappearing; it’s about slowly replacing certain tasks or roles.

At the same time, we’re facing a paradox in the market. On one hand, HR departments say there’s a talent shortage; they can’t find the skills they need to run their organisations. On the other hand, there’s fearmongering about AI being a job killer. The reality lies somewhere in the middle.

To address this paradox, companies need to focus on two things simultaneously: automating what can be easily automated and upskilling or cross-skilling their workforce. These two efforts go hand in hand. You automate repetitive or routine tasks, and then you redeploy your workforce to focus on areas where human input is essential.

So far, we’re still seeing more jobs being created with AI than eliminated. Someone still has to engineer, develop, manage projects, interact with customers, and truly understand their needs. Even with sophisticated AI, there’s an ongoing need for a human in the loop: someone to take accountability and make final decisions. AI is a tool, like a hammer or screwdriver, that supports your work. But it’s still humans who decide how to use it.

Works councils play a key role in this evolution. They’re involved to ensure these principles are upheld: protecting jobs while also supporting the company’s success. For a company to safeguard jobs in the long term, it needs to remain efficient. If there aren’t enough people to fill critical roles, the company has to turn to automation. It’s a complex balance, but works councils are part of ensuring that the process is fair and sustainable.

What does a well-functioning setup for AI adoption look like?

The first step is to get the basics right, and give employees time to engage with the material. There are a few key components to a good learning path:

First, if there are jobs that require AI-specific skills, you need to establish an academy – a series of structured, sequential trainings. I usually describe it as a pyramid. At the base of the pyramid are foundational skills for all employees. These are broad competencies that everyone needs, regardless of their role.

The next layer is role-specific training. For example, what does a salesperson or marketer need to know to integrate AI into their specific job? This layer focuses on the skills and knowledge tied directly to roles within the organisation.

At the top of the pyramid, you have ad hoc, situation-specific training. These are small, snack-sized trainings designed to address immediate needs. For example, someone might encounter a situation and wonder, ‘How can AI help me with this?’ or ‘How do I achieve a specific task using AI?’ This is where having a searchable, on-demand database of resources is crucial. Employees need quick and accessible answers without spending days in a classroom.

At Microsoft, we allocate ten learning days a year for training. These include mandatory sessions, as well as AI-specific learning days where employees can dive deeper into topics like prompt engineering.

Finally, businesses should supplement their internal training programs with external industry-specific content.

In summary, a strong learning path starts with foundational training for all employees, builds role-specific competencies, and provides on-demand resources for immediate challenges. It’s a layered approach that evolves as employees learn and grow.

As issues arise during AI adoption, how does Microsoft ensure clarity on what employees should do in those situations?

This is where HR and IT collaboration becomes essential. There are certain things employees shouldn’t do with AI – guardrails that need to be established. These rules of engagement can sometimes be built into the product itself. For example, you could design the system so that if someone tries to do something inappropriate, the functionality is simply greyed out with a clear ‘no-go’ message.


However, overly restricting the technology can reduce its value. Striking the right balance is critical, especially in regulated industries like finance, where compliance with data privacy laws is a priority. Companies need to develop clear guidelines tailored to their specific processes and culture. While universal principles for responsible AI exist, every organisation must create rules that reflect its unique needs and values.

This requires assembling a team to define these guidelines. It should also be clear what happens if someone violates them. Take, for example, a manager who uses AI inappropriately to track an employee unfairly and then gives them a lower bonus: employees need to know exactly where to escalate the issue. This is where the governance board I mentioned earlier comes in. It provides an independent review to ensure fairness, holds people accountable, and determines consequences.

Establishing this kind of structure ensures employees feel protected while enabling responsible AI usage. Of course, it’s a complex challenge that varies from company to company.

What are some of the tactical elements Microsoft has implemented to support co-pilots and ensure adoption?

One of the tools we use is the Work Trend Index (WTI), which you can find on the Microsoft website. It’s a research initiative that gathers statistics on what employees and managers expect from AI. The top expectation from employees is that AI should handle the ‘boring stuff’: tasks like taking meeting notes, transcribing discussions and other repetitive administrative work.

We’ve all been in meetings where someone asks, ‘Who’s going to take notes?’ and everyone avoids eye contact. AI can take over those unenjoyable tasks, helping employees focus on more meaningful work. Beyond that, people want AI to help them keep order amidst the chaos – organising information and making it easy to find what they need.

To meet these needs, we’ve created unified landing pages where everything related to AI is centralised.

These pages include:

● Training resources: Where to find training materials and courses.

● AI champions: A list of experts employees can reach out to for help.

● Office hours: Opportunities to ask questions and get guidance.

● Reference materials: Links to videos, white papers, and detailed guides on how AI works.

● Responsible AI guidelines: Clear recommendations and policies for ethical AI use.

● HR resources: Connections to HR guidelines and support.

Having all this information in one place ensures employees always know where to go for support. It’s about making engagement with AI as easy and intuitive as possible. Accessibility and simplicity are key to driving adoption.

We also have an organisation called Worldwide Learning at Microsoft which strategically develops training programs, because learning doesn’t just happen on its own; it has to be planned. If someone needs to be certified for a task, that requires structured training. Certification programs must be integrated into daily work routines, alongside other activities like dealing with customers.

This learning organisation sets the overall strategy, organises learning days, and designs the various types of training: academies for in-depth skill-building, snack-sized ad hoc training, videos, or searchable databases. These resources support ongoing learning.

The way we learn today is different from 30 years ago. Back then, you might attend a three-day classroom training for a new ERP system and then use that tool for 10–15 years. Today, with agile methodologies and rapid software updates (often new releases every two weeks), you need to continuously adapt.


What criteria should companies use to pinpoint which business functions will benefit most from AI?

We start by identifying roles or areas with the most specialised, content-heavy tasks. For example, sales teams working with CRM tools that handle large catalogues of products or SKUs – they need to deeply understand the products. Similarly, operational teams like HR, procurement, finance, and legal often benefit significantly.

Take legal tasks, for instance. Something as straightforward as drafting a non-disclosure agreement, checking when the last one expired, and creating a new one can be done quickly with AI. These are time-intensive, repetitive tasks – mostly involving copy-pasting and searching – that AI can handle efficiently.

The second recommendation is to start small. Find one specific problem and solve it. Don’t aim for a massive, all-encompassing platform right away. I discussed this in a TED Talk I gave about the Vasa ship – a Swedish warship that sank because too many big ideas were crammed into one project without proper planning. The same principle applies here: if you try to build a massive AI solution over several years, employees will lose patience and resort to quick fixes for their smaller problems.

Analyse your organisation and identify areas where the most time is wasted or where repetitive tasks occur frequently. Start with one problem and one solution. Invest just enough to see if it works. If it does, build on it. If it doesn’t, scrap it and move on to the next issue. By narrowing the focus to specific use cases, you can create a clear path to success. Solve small problems first, and over time, you’ll build a suite of effective, smaller solutions before tackling a larger platform.

Additionally, leverage the tools and products already available. There are robust suites, like Microsoft’s, that help with the overall office environment. Instead of reinventing the wheel, focus on your line-of-business applications and industry-specific tools. Also, consider how AI can enhance your service-level agreements (SLAs) and responsiveness to customers.

What are some of the key lessons you’ve learned from driving AI adoption in a multinational company like Microsoft?

Localisation is a big one. First, there’s language localisation, but it goes beyond just translating words and grammar. It’s about understanding cultural nuances. For example, in Germany, when addressing customers, we often use formal titles – like Herr Müller or Frau Müller – and we use formal speech, which we call Siezen. It’s hard to fully explain in English, but it’s about speaking formally versus informally. In contrast, in the U.S., it’s much more casual: ‘Hey, John, how’s it going?’ You can’t just translate these cultural differences directly, and it’s important to account for them when you’re working across different markets.

Another important aspect is how AI can help with time zone challenges in global companies. For example, AI can summarise or catch you up on meetings or decisions made overnight, reducing the need for late-night or early-morning calls. While these basic functions – note-taking, recordings, transcripts, and summaries – might sound mundane, they’re incredibly impactful. If you can remove those repetitive tasks, it frees people up to focus on more innovative and strategic work.

Interestingly, the features people use the most are often the simplest ones because they save so much time and effort. I always say, when in doubt, start with the boring things. There’s a lot of them, and they often deliver the quickest wins.

We’re all so stretched thin, especially since COVID. We once had boundaries, like commuting time, to separate work and personal life. Now, with remote work, we start earlier, finish later, and the workload has only increased.

AI can help lighten that load. For most employees, that’s the key: reducing the overwhelming workload so they can focus on what they enjoy and the work that made them choose their career in the first place.

MAHA REINECKE is a seasoned professional in digital transformation, product development, and data analytics with over a decade of global experience in both startups and multinational corporations. She is currently the Executive Director of Data, Analytics and AI at Reinsurance Group of America, where she champions and accelerates the adoption of enterprise-wide data strategies and data platforms to drive efficiencies across the business, and the development and delivery of data products. Additionally, Maha spearheads the implementation of a data academy to enable RGA’s teams to enhance their data analysis skills, and supports the development of RGA’s global data strategy. She previously held positions at UBS Group AG and Logex Healthcare Analytics, where she focused on the development and implementation of data analytics products. In her native Singapore, Maha has extensive experience in digital transformation and healthcare analytics in the public and private healthcare industry.

Could you share some key milestones in your career that led you to your current role as Director of Data Analytics and AI at RGA?

My journey began with my first job, where I worked with a development team in a public hospital in Singapore. We were tasked with building a digitally integrated hospital by implementing electronic health record systems.

I was involved in defining requirements and collaborating with stakeholders, while considering the kind of data we needed to collect to analyse hospital operations and support decision-making. This was before the term ‘data-driven’ became widely used. Over time, I transitioned from planning and implementation to analysing data, identifying trends, and providing insights that helped streamline hospital processes.

That experience made me realise the potential of data in driving efficiency and innovation. I saw that data analytics would play an increasingly critical role in the future, which led me to change my career path towards data & analytics – both through on-the-job learning and formal courses – and that led me to where I am today.

Were there any significant events that served as stepping stones to your current leadership position?

After leaving Singapore and moving to London, I started engaging with startups. It was a new market and environment for me, so I focused on understanding how companies operated in this region. Connecting with founders and startup teams helped me appreciate their approach to problem-solving and innovation, and I started to collaborate on small projects, assisting with data analysis to understand market trends better. Coming from a healthcare background, working for a data product company in the healthcare space was a logical and natural choice. My domain knowledge and experience in implementing solutions positioned me well to contribute effectively.

This exposure to the entrepreneurial and startup ecosystem was eye-opening. In Singapore, I hadn’t seen innovation at this scale, and moving to London broadened my perspective on application development and data-driven decision-making.

What inspired you to move from a startup environment to a corporate one?

I’ve always worked in small businesses. Even in the hospital setting, whether public or private, it was a localised environment. I transitioned from public to private and then to a product company. By the time I made that shift, I had spent about ten years in healthcare. I wanted to test a hypothesis: Are data skills truly industry-neutral? Can I pivot to another field, apply my skills, and pick up subject matter knowledge on the job? The answer turned out to be yes.

Additionally, having always worked in smaller companies, I felt I was missing the exposure to a corporate culture. That led me to financial services. My long-term vision is to work towards a COO role because I see a strong alignment between operations, data, and management. My career path hasn’t been linear; I didn’t graduate with a data science degree. Ten years ago, such degrees didn’t even exist. Everything I learned was through real-world business contexts and data applications. That background shaped my decision to shift to corporate.

At the same time, I still value and apply the agility and innovative mindset I developed in startups and small businesses. I think that perspective is appreciated in corporate environments, where large-scale projects can sometimes become bogged down in bureaucracy or long-established but ultimately outdated processes.

How have the challenges you’ve faced shaped your leadership style?

I’ve always found myself in greenfield opportunities – every company and project I’ve worked on involved building and implementing something new or testing new ideas. That experience has made me resilient, sharpened my solutions-oriented mindset, and taught me the importance of collaborating with and convincing stakeholders.

I’m also not afraid of failure. My approach has always been to find solutions using the best possible resources available. If something doesn’t work, I pivot. Change doesn’t feel disruptive to me because I see it as part of the process of innovation and problem-solving.

Could you give us an overview of RGA’s journey in digital transformation, within what is a fairly mature sector?

RGA is a reinsurance company, meaning we provide insurance to insurance companies. We’re a relatively young company in the insurance industry – only 51 years old – but we have grown significantly in the last five years. Our business volumes have expanded rapidly, and it’s important for our internal operations, including in data management, to keep pace.

During this growth phase, we realised that, while we had successfully managed the expanding workload, there were areas where we could optimise data processes. We saw potential to reduce fragmentation, streamline workflows, and establish clear ownership structures. It was as if we had all the right ingredients and were now ready to refine our recipe. This realisation inspired us to evolve our approach, focusing on increased coordination and strategic methodology to better serve our growing business needs.

As an insurance company, risk is our business. We want our time to be spent on deriving insights rather than managing upstream data processes. Currently, the reality in data is that 80% of the effort goes into collection, storage, and transformation, with only 20% spent on insights. Our goal is to flip that ratio, or at least reduce the time spent on backend processes.

The key objectives of our data strategy are to standardise our data frameworks and address challenges related to people, processes, and technology, with the goal of enabling the business to spend more time generating insights rather than processing data.

We’ve invested heavily in technology and data governance, and it’s an ongoing journey. We continually reassess how to improve our organisational structure, attract more talent to work with data across the company, and ensure our approach caters to different business needs.

What have been the most significant advancements at RGA in terms of that journey?

After the enterprise data strategy was conceived and communicated, we began building the enterprise data platform. This platform is designed to enable self-service analytics, distributed ownership, and federated governance.

The platform is constantly evolving, which is a significant achievement. We’re moving away from data sitting in silos within individual business units. Instead, we’re enabling cross-domain analysis, reducing duplicative and repetitive processes. As a result, the number of data products we utilise is growing, with each product having a dedicated owner who ensures proper documentation and governance. And more and more business units are getting on board, eager to create and share data assets with their consumers.

We’re also strengthening our data governance practices, including developing an enterprise data catalogue and improving data quality management. And while we have developed a framework for distributed ownership, it is now about ensuring the business units adopt it effectively. For example, what does it mean to be a data product owner, a data steward? Since this role is in addition to a person’s day job, we need to define responsibilities clearly and support those taking on these governance roles.

Do you have different classes of data products at RGA?

There’s a lot of debate around what constitutes a data product, but we haven’t imposed strict definitions. Currently, most data products are tables or data assets published in our marketplace or access repositories, allowing consumers to request access.

Beyond that, these data assets can be leveraged for analysis, and dashboards or reports built from these assets can also be considered data products. We focus on providing the foundational building blocks that enable various forms of data utilisation.

Some users are data-savvy and require direct access to datasets, provided the right governance and security controls are in place to ensure appropriate data access. Others need automated pipelines where data flows seamlessly into dashboards, allowing them to monitor changes without handling raw data themselves. Since every business unit has different needs, our goal is to support all these use cases effectively.

How has your experience as a product manager influenced your approach to data and AI at RGA?

I always say I am a data analyst first, who then gained exposure to product management. The way the industry has evolved has allowed me to merge both skill sets. My background in chemistry involved analysing large datasets, and that analytical mindset carries over to my work in data and AI. Product management, on the other hand, is a methodology I’ve learned that helps me apply structured thinking to my work.

One key principle I follow is not relying on a single perspective. Whether it’s interpreting data, designing an architecture, or structuring a workflow, I always compare multiple approaches before deciding on the best path forward.

Another important rule I follow is to never act impulsively. When people come with urgent requests, it’s easy to react immediately, but I’ve learned to apply reasoning first. Impulsive actions often stem from inadequate knowledge, so it’s crucial to take a step back and assess the full context before making decisions.

The concept of a data product also influences my approach. Unlike a project, which has a defined endpoint, a product must continuously deliver value at scale. Whether I’m creating a dashboard, sharing data assets, or designing tables, I always think about its role within the broader business and data ecosystem. Understanding the end users and their needs is critical – rather than just executing tasks, I focus on what they’re ultimately trying to achieve.

Effective data and AI strategy isn’t just about technology and tools – it’s also about streamlining processes. Technology provides the necessary uplift, but true value comes from the intersection of technical expertise, domain understanding, and user empathy. That overlap is where real impact happens.

Are there any specific methods or frameworks from product management that you’ve found effective in your current role?

Prioritising and breaking down the problem is key. One framework I frequently use is the ‘five whys’ methodology, originally developed by Sakichi Toyoda, the founder of Toyota Industries. Asking ‘why’ five times helps uncover the root cause of a problem. Often, when people present multiple issues, they all stem from a single underlying cause – such as an incorrect data model or poorly structured data.

By addressing the root problem, I can implement a single solution rather than creating multiple workarounds. Always ask ‘why’ multiple times to determine what is truly important versus simply lifting and shifting an existing process.

User empathy is also crucial: who are the consumers, what are their needs, and how will they use the product? Will it effectively serve multiple users rather than just one? The goal is to develop and deliver at scale while reducing variation.

Often, when people say they want to move to the cloud, they see it as a project. But from a product management perspective, moving to the cloud is just a step, not the goal itself. My focus is always on the underlying problem – are we aiming for better compute and storage, or is the goal improved data accessibility and performance efficiency?

Another key approach is reviewing, refining, and iterating. Work in increments rather than committing to large-scale, long-term projects. Traditional financial institutions often plan three-to-five-year initiatives, whereas I normally work within a six-month roadmap. This approach allows me to be agile and adapt to new developments and innovations in the industry.

How has your product management background influenced how you prioritise projects within RGA’s initiatives?

This is where business context becomes crucial – aligning projects with business KPIs. It’s not about how many products I’ve published or how many requests I’ve serviced, but whether I’ve delivered a product that moves a business metric.

For example, if a team’s main challenge is inefficient data processing – spending too much time gathering, transforming, and storing data – then improving that workflow becomes a priority. Rather than focusing on arbitrary output metrics, I align my work with broader organisational goals.

Last year, RGA introduced a refreshed strategy that includes key elements such as an enterprise-first mindset, adaptability to change, and enabling world-class functions (HR, administration, operations, data, and technology), which resonates deeply with me. My work directly supports these objectives.

Can you share an example where your product management approach directly contributed to the success of a data or AI project at RGA?

One project involves addressing fragmented data sources and redundant processing. We found that multiple teams were using the same data source but generating several outputs. That inefficiency prompted me to investigate. Who are the consumers, what do they truly need, and why are so many versions necessary?

By analysing these issues, we realised that the data model itself could be simplified. Instead of running separate queries for each request, we are working on exposing data at a more granular level with proper governance and access controls. This way, users can generate their own outputs dynamically rather than relying on predefined reports.

This shift aligns with our broader goal of self-service analytics – enabling users to query and interpret data independently rather than submitting repeated service desk requests. The aim is to reduce manual ad hoc reports and streamline operations, freeing up the development team from handling routine data dumps and requests.

This project is shaping up to be a major step towards making data more accessible while optimising internal workloads.

How does your team handle the translation of data insights into strategic business decisions? Has your product management background influenced this process?

One key principle I follow is ensuring that my development team produces an outcome that allows the end user to enhance their decision-making ability. We do not want to build something simply because it was requested or has been used in the past, but we engage with the business domain experts and subject matter specialists early on to ensure we understand what they are trying to achieve. You could call it ‘beginning with the end’.

Using agile practices, we ensure that the solutions we develop remain modular and can easily adapt to changing business needs. Since business requirements evolve, having this iterative process helps us refine solutions within a short timeframe. Ultimately, we aim for the end user to be in a better position to gain data insights that will then translate into business decisions.

As part of RGA’s transformation efforts, you set up an internal Data Skills Academy. What motivated that initiative?

When I joined RGA, we were already a few years into our enterprise data strategy. Significant investments had been made in tools and technology, but we needed to increase awareness and adoption across the company. Many employees were unfamiliar with the tools and felt intimidated by them.

Having previously developed a similar program in another organisation, I saw the need to create a structured approach to upskilling employees. Without proper training and support, these investments would remain underutilised.

Some employees showed a strong interest in learning how to use these tools but lacked the guidance to do so effectively. Many found traditional upskilling methods – such as one-day workshops – too disconnected from their actual work. To bridge this gap, we designed a program that aligns directly with their job functions, providing hands-on learning opportunities.

By building the Data Skills Academy, we aim to help employees adopt and integrate these tools into their workflows, ultimately driving the success of our enterprise data strategy. This initiative ensures that our workforce is empowered to leverage data effectively, rather than relying on fragmented or siloed approaches.

Was the Academy aimed at certain roles or business areas?

The Academy was developed for everyone. Using my product management approach, I engaged with users across different business functions – leaders, middle management, and analysts – to understand their challenges, experiences with tools and technology, and learning preferences.

Through these conversations, I identified different user personas within RGA, recognising that each business unit has unique needs. This led to the development of an operating model that aligned with our enterprise strategy, which emphasises a winning mindset and the need to embrace change.

The core pillars of the operating model focused on curating tailored learning pathways based on user personas and job roles. The goal was not simply to have a certain number of employees learn Python by 2026. Instead, the focus was on providing content that aligned with their daily work and RGA’s technology stack.

For example, if someone primarily used Excel, the objective was not to push them towards Python but to enhance their skills with Power Pivot and Power Query to automate repetitive tasks. Many users were unaware of these built-in Excel features, which could significantly improve their efficiency.

For those with some coding knowledge, the focus was on advancing their skills – learning CI/CD, source control, and GitHub, ensuring they could leverage existing tools effectively.

Additionally, many actuaries, particularly those in the process of qualification, had backgrounds in mathematics, computer science, or econometrics, giving them some exposure to programming. The Academy allowed them to apply their academic experience at a practical level within their job roles.

Ensuring that our content and training providers catered to these varied personas was a crucial element of the Academy’s design.

What impact has the Academy had on the transformation journey as a whole, and have you been able to measure that impact?

Because it’s still early days since we implemented the Data Skills Academy at RGA, most of the data we are collecting relates to user behaviour, such as active users, time spent and courses completed. That gives us some baseline KPIs, which will help us improve the content and the program as a whole. In addition, we collect qualitative data through one-on-one feedback sessions and surveys to gain real user insights into how the platform is helping RGA colleagues.

For example, someone mentioned taking an Alteryx course on DataCamp and using that knowledge to refine a workflow. What previously took a full day of manual manipulation in Excel now takes just a few minutes in Alteryx. That’s a tangible success story.

I’ve also received messages from employees who appreciate the tools and training. Many have realised that learning about data isn’t just about coding; it’s also improved their ability to write better prompts for our internal generative AI tool. This has helped them get more accurate and useful responses to their enquiries.

We’re still early in this journey. Last year’s small pilot programs demonstrated strong demand and clear benefits, so this year we’re expanding the program enterprise-wide, with a focus on scaling the initiative and formally measuring its impact. Our goal is to align the Academy’s success with the broader enterprise data strategy and quantify the value of investing in data upskilling.

Looking back, what advice would you give to your younger self at the start of your career?

I would say to put myself out there more – beyond just my day job. Engaging with the professional community in my field would have been beneficial.

Also, I’d tell myself to take more risks and seek out opportunities earlier. I used to think I had to achieve certain milestones – another qualification, more experience, or a specific project – before pursuing what I really wanted.

Instead of letting these self-imposed barriers hold me back, I should have trusted my ability to navigate challenges. Because when faced with difficulties, I’ve always found a way to overcome them through diligence, persistence and perseverance.

STEFANIE BABKA is a marketer with a second diploma in public relations, who is fascinated by the interface between people and technology. She has over 20 years’ experience in digital marketing, public relations and digital transformation in different industries (automotive, FMCG, science & technology), and for the last 10 years she’s led teams of different sizes.

Stefanie has published two books on social media, and as a pivotal employee of major corporations, she’s organised and supported countless big events, including IAA, Stars & Cars, Autosalon Geneva and Fashion Week. She’s launched more than 50 digital products, apps and websites, and ran campaigns and projects for more than 60 brands such as Mercedes, Smart, Dodge, Saab, Kit Kat, Wagner Pizza, Maggi, Beba, Purina, Perrier and Vittel.

At Merck, she led various big transformation projects, including the introduction of the digital workplace EVA (consolidating 160 intranets into one) and the change and comms stream of the Group Data Strategy. She’s also supported rebranding and the integration of newly acquired companies.

As the Global Head of Data Culture, Stefanie is responsible for communication, change management and upskilling programs on data and AI, including the Merck Data & Digital Academy and their enterprise-wide data & digital community. Stefanie is passionate about bringing together artificial intelligence and human intelligence to drive meaningful insights and data-driven decision-making.

STEFANIE BABKA

Stefanie, you have an interesting title. Tell us about yourself, how you stepped into this role and what you do as ‘Global Head of Data Culture’.

When we first established an enterprise-wide data strategy at Merck I led the Change & Communication workstream, and this is how I grew into this topic. As a result of this strategy, the Merck Data & AI Organisation – including a role for data culture – was established. And I was happy to take that role. This was, and still is, a perfect mission for me: I have a background in digital marketing and my data love comes from customer data. I have seen the power data can unlock if you target someone in the perfect perception moment with the perfectly tailored message.

Today, my team and I run the group-wide data & AI upskilling and change management initiatives at Merck to enhance the data culture in our company.

Merck is recognised as a leader in establishing a data-driven culture within a large corporation. Could you elaborate on the strategies used to develop a strong data culture within your organisation?

To build a strong data culture I always say we need to win the head, the heart, and the herd.

For winning the head, we focus on fostering the right skillset among our employees. This can be done by upskilling, reskilling or hiring. For upskilling we have two main approaches. The first is what we call ‘curiosity-sparked learning.’ This approach allows employees to find a starting point in a moment of curiosity and begin their learning journey. They can select their background, skill level, time budget, and topic of interest to find suitable training options in our comprehensive training hub. This flexibility empowers employees to take control of their learning at their own pace.

For those who prefer a more structured learning path, we offer guided learning programs. These range from simple data & AI literacy paths to our flagship program, Fast Track Upskilling for Data and Digital. This intensive program offers up to 10 weeks of full-time training, enabling participants to become what we call ‘citizen data scientists’. The beauty of this program lies in its practical approach – participants bring in their own projects, which allows them to tackle real business challenges or opportunities using real data. Additionally, each participant is paired with a mentor to ensure that by the end of the training, they not only gain knowledge and skills but also develop their own data or AI solution.

Winning the heart involves engaging our employees emotionally. We do this by creating a data appetite through storytelling and fun activities such as our Data Escape Room. These initiatives make learning enjoyable and memorable, helping employees see the value of data in a relatable way.

Lastly, for winning the herd, we emphasise the importance of community. We foster communities of practice to enhance exchange and learning across sectors and functions. This approach ensures that data culture is deeply rooted in our organisational practices, promoting collaboration and continuous improvement. By addressing these three areas – head, heart, and herd – we create a comprehensive strategy that embeds data culture throughout the organisation.

Can you share examples of successful initiatives that have improved data-driven decision-making at Merck?

Certainly! One of our standout initiatives is our internal everyday AI companion, which we call myGPT Suite. It is available to all employees to foster generative AI literacy at scale. It has quickly become one of the most used tools at Merck, allowing employees to save significant time in their daily tasks. These initiatives exemplify how data and AI are transforming our decision-making processes at Merck, allowing us to deliver innovative solutions more efficiently.

How do you measure the effectiveness of data literacy programs across different teams?

We use a combination of qualitative and quantitative metrics to measure effectiveness. Qualitatively, we gather insights from employee engagement surveys focused on winning the head (Do you feel you have the right skills?), heart (Are you looking forward to working with data & AI more?), and herd (Are you applying data-driven ways of working in your team?), along with training evaluations and feedback surveys.

Quantitatively, we rely on system data, such as the number of use cases in production within Foundry and the monthly user statistics for our everyday AI companion myGPT Suite. Notably, myGPT Suite ranks among the top 10 most used tools at Merck, even without including any mandatory services, indicating its widespread adoption and utility.

How do you ensure that employees at all levels understand the importance of data in their roles?

We’ve developed a comprehensive Data Culture Concept that targets five distinct groups within the organisation: executives, managers, data & AI practitioners, everyone at Merck, and physical operations (production and lab personnel). This plan utilises different angles to spread ‘data love’ across the company. Executives create top-down excitement, while practitioners and enthusiasts are equipped with materials, tools, and arguments to foster a bottom-up data culture. By addressing the specific needs of each group, we ensure that every employee understands the pivotal role data plays in their work and in the broader success of Merck.

How do you stay updated on the latest trends and technologies in data and AI?

Staying updated is crucial in our fast-evolving field, and I engage in a variety of activities to ensure our training remains up to date. This includes reading industry news, listening to relevant podcasts, participating in training sessions, and collaborating with our tech teams. This multifaceted approach allows me to keep abreast of the latest developments and ensures that we continuously integrate cutting-edge innovations into our data culture strategy.

TAMANNA HAQUE

Lead data scientist at Jaguar Land Rover TAMANNA HAQUE talks about her latest challenge: motherhood. As a powerhouse in AI, itself an ever-progressive field, Tamanna reveals how she maintains a dynamic mode to stay ahead at work and the new road at home.

Tamanna’s journey with JLR started long before she was employed by them. Since childhood, she’s been passionate about the Jaguar brand, writing about it, attending previews, going to experience days and more. Jaguars turned her head, with their attractive, luxurious and powerful presence. She was fortunate to be a fan-turned-customer at 22, later becoming an employee at 25.

When Tamanna joined JLR in 2019, it was as an original team member of the Vehicle Connected Data Science team. She enjoyed the unexpected challenge of shaping a new AI team (now nearly 40-strong across multiple countries) and jumping into the world of the connected vehicle, which creates interesting data and futuristic data science work.

Tamanna was soon promoted to lead data scientist, leading a cross-country team called ‘AI-PACE’ who are delivering customer-facing AI products at pace for the end-to-end vehicle experience. She is constantly engaging in a wealth of activities outside her role which ‘give back’ whilst helping her to find new limits to serve her clients better; she feels personally committed to them, having originated as one.

Were there any tools, technologies, or strategies that helped you stay organised and connected during your maternity leave?

‘Keeping in touch’ time was valuable in helping me maintain contact with work and ease the transition back.

A lot of routine was gone initially, so I had to be smart and effective about the little ‘keeping in touch’ time I wanted to give my work life. A lot of pre-planning and deciding what was worth that small fraction of time helped me to stay on top of things. Otherwise, nearly all of my time was spent on growing my baby… it is quite something getting to grips with parenting for the first time! My daughter is my top priority, and I also made sure to focus on myself and my recovery.

To give a clearer picture of what I had to plan for and organise around, I’ll provide some insight into my role. I am constantly balancing line management, idea generation, stakeholder buy-in, project-to-product delivery, technical advisory and more for my AI work, with a passionate, customer-first focus. I am in ‘Jaguar’ mode all the time! I often have a multitude of projects on the go and enjoy the pace.

Before leaving for maternity leave, I agreed roadmaps with my key stakeholders and had work planned to a fine detail. I occasionally checked in to field new ideas or step in if there were any impediments to delivery. For my team, I created an idea bank, reserved for them to explore in case their bandwidth allowed it. The roadmaps, explorations and skill-building tasks were carefully considered and tied into their development whilst adding value to the business.

There were personal activities (outside of my role, some outside my capacity at JLR) which I lined up to pick up, such as the Women in AI network (which I founded and chair at JLR), guest lecturing, patent filings, events I’d be speaking at, and more. The world of AI is more fast-paced than ever, and I kept up with this and with my industry (automotive), to keep on the ball creatively. These activities kept my soft skills, network and technical knowledge fresh, and I’m now much more selective about events I support or learning I embark on. Time is precious!

Staying connected in a very lightweight but purposeful manner worked for me, but I appreciated having the choice to totally switch off (as this can be less of a choice for others).

What was the most rewarding aspect of navigating motherhood alongside your career?

The most rewarding aspect is that each side enriches the other! As a new mum I have picked up a lot of new skills which help me to be a better data science leader. For instance, handling new or unexpected situations at home has made me more adaptable – much like the essence of machine learning. I’m constantly improving, thinking more critically and faster on my feet in any setting.

Things have been quite full-on at times, especially in the initial weeks. Unpredictability and added responsibilities meant I needed to refine my time management and prioritisation skills, which is reflected at work, where I’m much more value-driven and efficient. Something has to be great to get my attention! The AI products we’re delivering are more innovative, appealing and valuable for the business and for our clients than ever before. Back to my personal life, I’m adopting the use of more AI to increase productivity; a simple example is voice dictation of messages. Generally, AI has helped me to capitalise on my time, and better keep up to date with my family, friends and network.

I also have a perspective about our luxurious vehicles as a parent now! I always stay up to date with our products, as this gives me a baseline on the vehicle experiences we offer today, and feeds into inspiration for how we can use AI to offer even better experiences tomorrow. With my personal perspective now broadened, I’m seeing some of our cars a bit differently and I’m loving some of them more than ever. My daughter and I are appreciating the Defender at the minute: we’re getting around with lots of space and capability from the iconic 4x4.

My previous car, the Jaguar F-TYPE, was a two-seater sports car. Very different, and enjoyed during a different phase of my life. The more product and personal perspectives I gain myself – or learn from our customers – helps me to create AI with ‘customer love’ and ultimately better their experiences.

I couldn’t have navigated motherhood and my career together without my amazing husband and family. I had lots of valuable support (which felt like training at times!) and daily personal time which helped me to show up as my best self in every setting, despite being a new mum.

Did you discover anything about yourself during this time that surprised you, either as a mother or as a data science leader?

Yes, the fact there are parallels in my approach to being a mum and being a data science lead. I now see how my approach to people and leadership probably touched on some natural maternal instincts I had already – the high attention I put on my team’s development, and skills such as teaching, emotional intelligence, compassion, time management, adaptability, planning and more. All of these qualities are exaggerated during parenting and I’m really enjoying growing a mini-me!

Has becoming a mother changed the way you lead your team?

Yes, it’s reinforced my beliefs about modern ways of working and also made me a better leader.

I lead with a great deal of energy, commitment and passion for our products, customers and business, always thinking about new ways I can add value to the business, and keeping up with trends in AI to support this. This maintains a great feeling of pace and purpose in my squad and I strive to provide a working environment that I would have wanted for myself, serving up motivation to deliver quality results just like our SVs (Special Vehicles)!

Data science is quite demanding, so I support working in a way which optimises for what really matters – real, tangible value-add. Working in and now leading a cross-country team in tech and data science, I promote flexibility, trust and productivity-led working to get lots of great things done! We are constantly delivering and creating use cases alongside building up our capabilities to deliver cutting-edge AI. Our working style therefore needs to be just as dynamic, helping to promote more success with our AI products, as well as more diversity and inclusion. Meetings or face time are scheduled meaningfully. This way I’m saving energy which is channelled back into my brain, and I’m healthier, happier and more productive than ever.

I’m saving time which I can give to my daughter. Flexibility helps both sides, since some of my best thoughts have come up after-hours. Overall, I have the best work-life balance I’ve ever had, doing the best work I’ve ever done, and I’m obviously going to keep pursuing this to support myself and others around me.

What advice would you give to women planning their maternity leave in similarly fast-paced industries?

It’s really important to plan thoroughly before you leave for maternity; every baby, birth and postpartum experience is unique, and these are obviously your top priorities, so it’s hard to predict your ability or desire to ‘keep in touch’.

I would plan as if you were hoping to completely step away during your maternity leave. A roadmap with project goals and agreements, key contacts, areas of support needed etc., will help your team and stakeholders to stay on track with deliverables. Providing personalised guidance to each team member about what you want them to learn over the period – as well as deliver – means you can help their development to keep progressing. Centralising all of this information is useful, so that relevant colleagues are empowered to know what you’re thinking and expecting, even when you’re not present!

There are ways to keep up with people and movements without opening your laptop and it’s also great to catch up socially sometimes.

Remember, there’s no need to stay connected with anything other than your baby, so plan really well to give yourself reassurance and to keep things moving (and moving the way you want them to). It’s true: you won’t get this time back!

DIDEM UN ATES

is a renowned AI and Responsible AI executive with over 25 years of experience in management consulting (Capgemini, EY, Accenture) and industry roles (Motorola, Microsoft, Schneider Electric). As the founder of LotusAI Ltd, she advises private equity firms, hedge funds and financial institutions, including Goldman Sachs Asset Management and its portfolio companies, on AI/GenAI strategies, responsible AI implementation, and talent transformation.

Didem serves as Advisor and member of the AI/Generative AI Council for Goldman Sachs Value Accelerator, sits on the Boards of the Edge AI Foundation and Wharton AI Studio, and is a certified AI Algorithm Auditor and Executive Coach. Her industry contributions have earned her recognition such as the Tech Women 100 Champion and Trailblazer 50 awards. In her tenure as Vice President of AI Strategy & Innovation at Schneider Electric, she led the development of the company’s AI/GenAI strategy and forged strategic partnerships with startups, VCs/PEs, and academia. At Microsoft, she was pivotal in launching Business AI Solutions at Microsoft Research and spearheaded diversity-focused programs, including Alice Envisions the Future / Girls in AI hackathons. In her role as head of Applied Strategy, Data & AI in Microsoft’s Chief Data Office, she oversaw Microsoft’s largest AI engagements, contributing to the OpenAI partnership.

Didem has led the operationalisation of Responsible AI at Microsoft, Accenture, and Schneider Electric, advising C-suite leaders on talent strategy adaptations and promoting responsible AI practices.

Can you tell us about your early experiences in the AI sector and how they shaped your career path, particularly during your time at Microsoft?

I’ve spent over 26 years in the technology sector, focusing mainly on disruptive technologies. For the past 13 years, I’ve been deeply involved in AI.

I witnessed the rise of traditional AI while I was working with Microsoft Ventures and Accelerators. Across accelerators worldwide, 80–90% of the highest-growth startups were AI-focused. That’s when I realised AI was where I wanted to be. Steven Guggenheimer, a VP at Microsoft at that time, sent an email about forming a Business AI team. I offered to volunteer for competitive benchmarking and startup ecosystem projects. That volunteer role turned into my first official position in AI.

I was part of Microsoft’s first Business AI team, which originated within Microsoft Research. Our team had about 100 members, and I was the only person based outside the U.S. – in fact, outside of Redmond, the headquarters. I was also the only Turkish woman in a predominantly male team, consisting mostly of Chinese, French, and Canadian men. I highlight this because, although I was accustomed to limited diversity in tech, the lack of diversity in AI felt even more pronounced. It motivated me to take intentional action, and that’s how the initiative Alice Envisions the Future / Girls in AI started.

At Microsoft Research, we developed some of the first enterprise AI algorithms for customer service, sales, and marketing. When these proved successful, our team transitioned to the AI Engineering division to launch them as official products. This effort led to the creation of Microsoft’s first AI SaaS platform: Power Platform. I call it the ‘grandparent’ of the Copilot suite, because it shares a similar architecture and logic, later enhanced with generative AI.

Fast-forward 12 years at Microsoft, and my final role involved working with the Chief Data Officer on large-scale AI engagements with Fortune 500 clients. One such collaboration was with Schneider Electric, who later invited me to implement their AI strategy.

Now, through my own company, LotusAI, I help organisations like Goldman Sachs and private equity firms, hedge funds, and other financial institutions with AI transformation, responsible AI strategies, and talent development. It’s been an incredible journey, combining proactive volunteering, seizing opportunities, and pursuing cutting-edge technology – not because it’s trendy, but because it has massive power to drive positive societal change at scale.

Can you share pivotal moments from your career that shaped your approach to AI strategy and leadership?

One pivotal moment was when I brought Microsoft its fourth paying AI customer. Today, we talk about AI generating trillions in market value, but back then, AI was still in its early days. With that customer, we made a bold decision: instead of selling the AI product as a managed service – where we could only serve a few large customers per year – we decided to package it as a SaaS AI solution.

This required us to pivot our entire business model just six months before the product’s general availability, and ultimately led to the creation of Microsoft’s Power Platform as a SaaS offering. It wasn’t just pivotal for me personally but also for Microsoft and the broader tech industry. Today, millions of developers build on that platform, which is incredible to see.

Another defining moment was attending my first offsite meeting with our team of approximately 100 members. I was the only woman and the only person based outside the U.S.

I hadn’t set out to be a leader in diversity and inclusion, but I knew someone had to address the issue. Diversity isn’t just a social responsibility; it’s essential for business success, especially when developing complex products like AI. Alice Envisions the Future / Girls in AI, which eventually became a global program, has connected over 8,000 girls worldwide through a Facebook group. High school girls in cities like Seattle, Warsaw, London, Athens, and Istanbul began hosting hackathons, sometimes even independently at their schools, and Microsoft partners such as Accenture and KPMG supported these events.

It’s one of the most meaningful parts of my career – doing something positive for society while scaling AI in my day job. Many of these young women stay in touch with me, and I continue supporting them with recommendation letters for their university applications. It might be a small step, but it means a lot to me.

A third pivotal moment happened recently, after I left Schneider Electric in mid-November. Goldman Sachs’ asset management team had invited me to join their new Generative AI Council of seven or eight experts from around the world. It was intended as a board-like role, requiring just a few hours a month. But two days later they reached out again. They’d seen my LinkedIn post announcing my departure from Schneider Electric and asked if I would consider a broader role. They had invested in hundreds of portfolio companies, many of which needed AI support, and they wanted help at the value accelerator level.

So, while waiting for a friend who was late to lunch, I officially launched my AI advisory business, LotusAI, on December 13, 2023. It was a spontaneous start, but it’s turned into the most fulfilling phase of my professional life, and I’m now helping many clients with their AI journeys. As much as I enjoyed my time at Microsoft and other companies, this is the best time of my career. I always wanted to be an entrepreneur, and everything just aligned perfectly: clients were ready, the product (AI) was in demand, and the timing was right.

What are some common misconceptions enterprise organisations have about implementing generative AI?

The first, and perhaps the most important, relates to culture and mindset. From an enterprise transformation perspective – especially with generative AI – things move so fast that the real starting point is leadership. There needs to be a leader at the top with vision, courage, and an investment mindset.

This investment isn’t just financial or resource-based; it’s also about people and time. Leaders must commit to the journey, knowing it won’t be easy. They must accept that there will be failures, but these are part of the learning process. Ultimately, there’s no turning back – generative AI isn’t just a tool for survival but for thriving in the future.

Another major misconception is that many organisations start with a small proof of concept (POC) – a use case that’s tangential to their core business. They do this because they either lack the leadership sponsorship to take a holistic approach, or fear the complexity of full-scale adoption.

While a POC might work, the biggest pitfall is that these isolated experiments rarely scale. If AI initiatives aren’t integrated into the core business strategy and workflows from the start, they remain stuck in the lab, failing to generate real impact. Later, organisations conclude that AI doesn’t work or doesn’t scale, but the reality is that the approach wasn’t designed for success in the first place.

Generative AI requires a holistic approach – an end-to-end transformation that includes talent development, responsible AI practices, and integration across every function, from HR to finance. It doesn’t all have to happen simultaneously, but the vision must be comprehensive.

Another misconception revolves around data. With advancements like synthetic data, automation, and new methodologies, many data challenges can now be overcome. Organisations shouldn’t let data concerns hold them back from exploring generative AI solutions.

Lastly, and just as importantly, there’s the issue of talent and execution. Many companies hire consultants for time-boxed projects – eight, twelve, or sixteen weeks – to develop a strategy or implement a few use cases. These projects often end with hundreds of slides or complex Excel models. But once the consultants leave, internal teams are left catching up on the day jobs they had to put on hold for weeks, and the AI strategy sits idle because there’s no internal capability to execute it.

To avoid this, organisations need a hybrid approach: a balance of in-house expertise, strategic partnerships, and external advisors. Most importantly, there should be a ‘chaperone’ figure – someone who can walk the journey with the organisation at its own pace, ensuring the transformation is sustainable and scalable. Such a figure is also helpful in terms of having an objective outsider who is not part of internal politics and can candidly point to the North Star – sometimes playing the ‘good cop’ and other times the ‘bad cop.’

In your opinion, is a chief AI officer needed to ensure AI initiatives will scale and have the desired impact?

Many AI initiatives sit within the CIO or CTO organisation. The core mission of these departments is often to ‘keep the lights on.’ As a result, AI projects become low priority. This is a key reason why these projects don’t scale or deliver impact.

On top of that, when AI initiatives are placed in these departments, employees often don’t even have access to generative AI tools because relevant websites are blocked. I’ve seen employees transfer company data to their personal PCs so they can work with AI tools like ChatGPT at home. This is a nightmare scenario for CIOs and CTOs.

I do believe the chief AI officer role is essential, but it must operate closely with other C-level leaders. If the role ends up merely coordinating AI efforts in a hub-and-spoke model, it becomes ineffective.

If you already have a chief digital officer, a CTO, and a CIO, adding a chief AI officer can cause overlaps and conflicts. Therefore, this role needs to be clearly defined and strategically positioned. The visionary leader driving AI doesn’t need to understand all the technical details, but they must believe in AI’s transformative potential and communicate that belief effectively.

Another critical misconception lies in language, communication, and change management. How leaders frame AI adoption matters immensely. For example, in one of the largest German organisations I worked with, a new CEO wanted to make an impact. At major global conferences, he repeatedly said, ‘I’m going to save X billion dollars with AI,’ framing AI purely as a cost-cutting tool.

When developing an end-to-end AI transformation roadmap, the projected business impact is often quoted at 20–40%. This could affect top-line revenue, bottom-line profit, EBITDA, or a combination of these.


I gave feedback that this messaging was counterproductive. If employees hear that AI is primarily about cost-cutting, they will resist it with everything they have. Instead, leaders should present AI as an opportunity for growth and empowerment.

The conversation should be: ‘This is the future. There’s no turning back. But we’ll work with you. We’ll co-create talent transformation maps, personal development plans, and team growth strategies. How can you imagine your job being augmented by AI? How can AI help you add more value? We’ll upskill and reskill you. And in the process, you might save enough time to finally take your evenings or weekends off.’

In industries like financial services, this is already becoming a reality. In R&D organisations, tools like GitHub Copilot accelerate time to value for research projects. Ultimately, how we articulate the opportunity AI presents – how we inspire and address employees’ concerns – will determine whether AI strategies succeed or fail.

What are the most critical factors that determine success or failure when implementing an enterprise-wide AI strategy?

The most critical factor, without a doubt, is talent transformation.