2024–2025 CYLAB YEAR IN REVIEW

I’m excited to tell you about a number of new developments in CyLab in the past year related to faculty hiring, space, events, and new initiatives.

For the first time ever, CyLab led the tenure-track security and privacy faculty hiring search for the entire university. Our goal is to hire eight new tenure-track faculty over the next several years. We set up an application portal and a committee with representatives from six departments, led by Lujo Bauer. The committee reviewed all the applications and shared a short list of candidates with the departments interested in hiring security and privacy faculty. Based on their feedback, we invited several candidates for in-person interviews. I’m pleased to announce that we hired two faculty through this process. Joe Calandrino joined the Engineering and Public Policy Department and the Software and Societal Systems Department in August 2025. Niloofar Mireshghallah will join the Engineering and Public Policy Department and the Language Technologies Instititue in August 2026.

This summer, we were very pleased to expand our space footprint in the Collaborative Innovation Center Building after the Software Engineering Institute vacated its second-floor space. We now occupy the entire second floor of the building. This will provide new conference room space, office space for all of our new faculty hires over the next several years, and desk space for their students. We’re currently looking into possibilities for reconfiguring the interior rooms in the new space and potentially removing walls to combine our old and new spaces.

Besides our annual CyLab Partners Conference last October, this year we also hosted the National Science Foundation Secure and Trustworthy Cyberspace Principal Investigators’ Meeting in September and a Robotics Security and Privacy Workshop in July. The robotics workshop was part of our efforts to launch a larger CyLab initiative in the robotics security and privacy space. We’re currently looking for partners and funders to move this forward.

Finally, our great research and teaching is continuing to receive external recognition. For the fifth year in a row, our undergraduate cybersecurity program was recognized as the top program by US News and World Reports. This year, three CyLab authored papers received test-of-time awards, two received distinguished paper awards, and several more received other types of paper awards. Five CyLab faculty served as program chairs or co-chairs of conferences. Our CMU hacking team won the both the MITRE cybersecurity competition and the DEF CON CTF competition for the fourth straight year. Our recognitions page highlights even more awards!

Director and Bosch Distinguished Professor in Security and Privacy Technologies, CyLab, FORE Systems University Professor of Computer Science and of Engineering & Public Policy

Seunghyun Lee, a second-year Ph.D. student in Carnegie Mellon University’s Computer Science Department, discovered a series of bugs in Google Chrome’s WebAssembly type system while conducting routing “fuzzing” research.

“Don’t believe everything you read on the internet.” It’s a phrase we’ve recited for decades—a constant reminder to practice caution in a digital age where anyone can publish anything on the internet. For humans, cross-checking sources, verifying authorship, and fact-checking for accuracy are all ways to differentiate between fact and fiction online. But what if the reader isn’t human?

On August 10, the winningest team in DEF CON’s Capture-theFlag (CTF) competition history, Carnegie Mellon University’s Plaid Parliament of Pwning (PPP), won its fourth consecutive title, earning its ninth victory in the past 13 years. PPP joined forces with CMU alumnus and University of British Columbia Professor Robert Xiao’s team, Maple Bacon, and hackers from CMU alum startup and CyLab Venture Network Startup Partner Theori.io (The Duck), playing under the name Maple Mallard Magistrates (MMM).

DEF CON’s three-day flagship competition, widely considered the “Olympics” of hacking, brought together some of the world’s most talented cybersecurity professionals, researchers, and students, as 12 of the world’s top teams (who qualified from a field of more than 2,300 teams) attempted to break each other’s systems, stealing virtual flags and accumulating points while simultaneously protecting their own systems.

Seunghyun Lee, a second-year Ph.D. student in Carnegie Mellon University’s Computer Science Department, was recently conducting some routine research on Google Chrome’s source code.

Seunghyun Lee, a secondyear Ph.D. student in Carnegie Mellon University’s Computer Science Department, discovered a series of bugs in Google Chrome’s WebAssembly type system while conducting routine “fuzzing” research.

Little did he know at the time that his customary research process of “fuzzing,” an automated software testing technique that involves inputting random or invalid data into a computer program and observing its behavior and output, would lead him to discover a software vulnerability that would result in a valuable bug bounty and a $462,000 gift to support cybersecurity education.

“I’m not actively looking for bug bounties,” Lee explained. “It’s sort of a side effect of my research where I need to look deeper into Chrome source code and then write code based on it, which automatically leads me to these vulnerabilities.”

“picoCTF is a great platform that allows new students to get on board with cybersecurity.”

Seunghyun Lee, second-year Ph.D. student, Carnegie Mellon University’s Computer Science Department

Many companies that develop software offer bug bounty programs to help them identify and fix security issues before malicious actors can exploit them. Vendors offer bounties to researchers, often known as “ethical hackers,” to find vulnerabilities and responsibly report them to the vendors, so their developers can secure the vulnerabilities before they become publicly known. Through his fuzzing research, Lee, whose faculty advisors are David Brumley of Electrical and Computer Engineering and Fraser Brown of the Software and Societal Systems Department, discovered a faulty implementation in Google Chrome’s WebAssembly type system. Subtle design issues in the WebAssembly code, including

Seunghyun Lee and Megan Kearns, picoCTF program director, discuss Lee’s “bug bounty” donation.

optimizing compilers, facilitated a series of bugs that led to fragile sites that could easily be exploited.

“This is what people call a renderer exploit,” Lee said. “With renderer exploits, attackers can obtain native code execution in a lower-privileged renderer process, which is the process that literally renders your website. Renderer exploits are often the first step for an attacker to gain full control over a target device by combining other bugs.”

Upon discovering the series of bugs, Lee reported them to Google via the Google Bug Hunters program. Representatives from Google triaged the vulnerability and confirmed it as a systemic issue that needed to be addressed, and one that was eligible for bounty compensation through its vulnerability reward program.

But rather than accept the bug bounty himself, Lee generously chose to donate it to picoCTF, Carnegie Mellon’s cybersecurity competition and learning platform that teaches middle school,, high school, and college students technical security skills through a capture-the-flag (CTF) competition.

“I wanted to donate the bounty to picoCTF because I started my cybersecurity career by playing CTFs as a student, solving previous challenges and ‘wargame’ challenges,” Lee said. “And I believe that was really a driving force for me to learn much more.

“picoCTF is a great platform that allows new students to get on board with cybersecurity.”

Google matched Lee’s bounty donation to picoCTF, leading to a total gift of $462,000 for the platform, which is offered free of charge to more than 600,000 active users across the globe. The gift represents the single largest donation to picoCTF in its 12-year-history.

“Seunghyun’s generous donation underscores the importance of supporting cybersecurity education by contributing to the resources we need to significantly enhance picoCTF’s ability to reach more students,” said Megan Kearns, picoCTF program director. “It empowers us to continue innovating and delivering high-quality, accessible training to the next generation of cybersecurity professionals.

picoCTF-Africa team training sessions

“Seunghyun exemplifies the power of using your skills to uplift others and inspires us all to make meaningful contributions to the cybersecurity community.”

Lee’s goal is to continue to address these challenges through his research to help make the internet a more secure place for users. And he hopes that his gift might inspire other bug bounty hunters to contribute to picoCTF.

“When companies match these bounties, donations can be a particularly beneficial option for ethical hackers,” said Kearns.

“For most security researchers, the ultimate goal is to create a system that automatically discovers and patches these bugs,” said Lee. “We just currently lack the capabilities to do so. I want to continue to address these problems by developing a system that automatically finds exploitable bugs so that we can fix them in a timely manner.”

For the 12th consecutive year, picoCTF introduced cybersecurity to the future workforce through its annual online hacking competition.

Megan Kearns, picoCTF program director

Geared toward college, high school, and middle school students, the competition offers a gamified way to practice and show off cyber skills. Competitors must reverse-engineer, break, hack, decrypt, and think creatively and critically to solve the challenges and capture the digital flags.

This year’s competition took place from March 7 through March 17. Students interested in practicing for and participating in the 2026 competition can register now by signing up for a free account at picoctf.org.

“picoCTF is unique because it is a free cybersecurity education platform from CMU, and it’s helping to build nationwide capacity for cybersecurity, filling a desperate need in the process,” said Megan Kearns, picoCTF program director. “It’s a proven way to skill up, and it’s suitable for participants from middle school through industry, whether you want to learn something new or change careers.”

Prior to the competition in March, the picoCTF-Africa team trained 200-400 students in each of the 13 countries they visited as a part of their new continent-wide training tour. Students ages 13-25, along with teachers,

Continued on page 6

“Sponsors gain visibility among engaged students and educators while supporting global access to cybersecurity education.”

Megan Kearns, picoCTF program director

Continued from page 5

were trained on several cybersecurity topics, including programming, web technologies, computer forensics, reverse engineering, and binary exploitations. After training, students were able to use their newfound skills to enter the competition.

The competition itself drew more than 1,700 participants, a 21 percent increase from the previous year. For the past four years, picoCTF-Africa has maintained its own leaderboard, recognizing the top three undergraduate and high school teams in Africa along with a women in cybersecurity award. This year, the competition expanded its individual country awards to include the countries the training team visited.

In addition to the annual competition, picoCTF offers yearlong learning guides and produces a monthly YouTube lecture series to help introduce cybersecurity principles, such as cryptography, web exploitation, forensics, binary exploitation, and reversing.

Available 24/7, the picoGym allows users from across the globe to practice what they’ve learned, providing access to newly released challenges, as well as challenges from past picoCTF competitions.

With more than 800,000 active users worldwide, the free picoGYM platform is a gateway into the world of

cybersecurity, enabling anyone with access to a computer and the internet to start building their skills.

“In order to keep the platform free to all users, we rely on community funding—industry sponsors, foundations and government grants,” said Kearns. “Maintaining this as publicly accessible and free is essential to building capacity in the United States and across the world, because there aren’t enough programs available, and there are even fewer that are free and that have the type of reliable content that you get from the security experts at CMU.”

With this in mind, picoCTF is currently offering a limited number of exclusive sponsorship opportunities to companies and organizations seeking to build brand visibility among cyber oriented students across the world at the beginning of their journey, as well as with Carnegie Mellon University students and alumni.

Sponsorship packages are wide-ranging, starting at $10,000 for base sponsors who receive logo placement on the picoCTF sponsors page, a summary report on the picoCTF competition, and joint promotional opportunities.

Starting at the $50,000 level, government programs, foundations, and larger funders can sponsor specific competition scoreboards and initiate prizes for participants.

Our partner sponsors, like those in Japan and Africa, connect organizations with emerging cybersecurity talent in key regions and learners with opportunities in education and industry,” said Kearns. “Sponsors gain visibility among engaged students and educators while supporting global access to cybersecurity education. It’s a direct way to reach target audiences and invest in the next generation of cybersecurity professionals.”

For more information on sponsoring the picoCTF competition, contact our sponsorship team at sponsor@picoctf.org.

For the fourth consecutive year, Carnegie Mellon’s competitive hacking team, the Plaid Parliament of Pwning (PPP), has taken home the top prize at the MITRE Embedded Capture-the-Flag (eCTF) cybersecurity competition.

CMU’s win marks the first time in the 10-year history of the MITRE eCTF competition that a team has won four consecutive titles.

Over the course of three months, PPP, and 115 other collegiate and high-school teams, worked to design and implement a satellite TV system solution. Each system focused on securing video frame transmission to ensure that only users with the valid subscriptions can see them. The teams aimed to encode and decode satellite TV data streams while protecting against unauthorized access to protected channels.

Back row (L to R): Peiyu Lin, Matin Sadeghian, Taha Biyikli, Om Arora, Surya Togaru, Akhil Harikumar, Daniel Ha, and Rohil Chaudhry. Front row (L to R:): Haonan Yan, Janice He, Harrison Lo, Sky Bailey, Carson Swoveland, Samuel Dinesh, and Leonardo Mouta.

“While the overall problem scope is somewhat smaller compared to previous years, this is still a realistic scenario that is significantly harder to implement correctly than it might seem at first glance,” said Leonardo Mouta, Master of Robotics in Systems Development (MRSD) student at the CMU Robotics Institute and PPP team member. “The security of our design hinged on how well we could model an attacker and how they might compromise our assets, which is difficult to emulate.”

PPP’s win came in a landslide, scoring nearly 40,000 more points than the competition’s second-place finisher. CyLab project scientist Maverick Woo, who co-advised the team with Electrical and Computer Engineering (ECE) Siewiorek and Walker Family professor Anthony Rowe and Information Networking Institute (INI) associate teaching professor Patrick Tague, credits the victory to the group’s composition and work ethic.

“Our CMU students put in the hard work. They really spent a lot of time in the lab,” said Woo. “It’s hard work plus talent; you cannot have only one of them to win a competition like this.”

The competition had two phases—design and attack. Each phase offered opportunities to score points by obtaining flags and submitting them to the live eCTF scoreboard.

During the design phase, the teams acted as hackers creating a secure product from a prototype provided by

MITRE. In the attack phase, teams had the opportunity to vet each other’s respective designs to see if other teams actually implemented the security requirements of the specification, capturing flags when they were able to identify security vulnerabilities.

eCTF competitions are unique from other CTF competitions because they focus on embedded systems security. Students not only defend against traditional cybersecurity attack vectors but also need to consider hardware-based attacks such as side-channel attacks, fault injection attacks, and hardware modification attacks.

“eCTF 2025 provided me with a platform to explore embedded systems and the security surrounding them on a deeper level, introducing me to new hardware attack vectors and challenging me to perform those attacks in real life,” said Rohil Chaudhry, Master of Science in Information Security (MSIS) student and PPP team member. “The scale of the competition also showcased the critical importance of effective time management under pressure, maintaining clear, consistent communication with teammates and learning how to prioritize tasks dynamically based on shifting goals and constraints.”

Funding for the team was made possible by several CyLab partners: Amazon Web Services, AT&T, Cisco, Infineon, Nokia Bell Labs, Rolls-Royce, and Siemens.

Carnegie Mellon researchers show how LLMs can be taught to autonomously plan and execute real-world cyberattacks against enterprise-grade network environments—and why this matters for future defenses.

In a groundbreaking development, a team of Carnegie Mellon University researchers has demonstrated that large language models (LLMs) are capable of autonomously planning and executing complex network attacks, shedding light on emerging capabilities of foundation models and their implications for cybersecurity research.

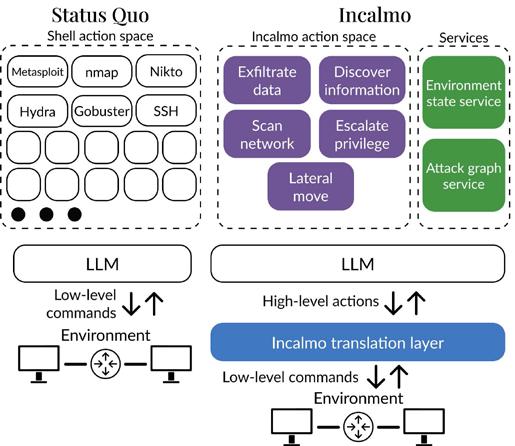

Incalmo is a high-level attack abstraction layer for LLMs. Instead of LLMs interacting with low-level shell tools, LLMs specify highlevel actions.

The project, led by Ph.D. candidate Brian Singer of CMU’s Department of Electrical and Computer Engineering (ECE), explores how LLMs—when equipped with structured abstractions and integrated into a hierarchical system of agents—can function not merely as passive tools, but as active, autonomous red team agents capable of coordinating and executing multi-step cyberattacks without detailed human instruction.

“Our research aimed to understand whether an LLM could perform the high-level planning required for real-world network exploitation, and we were surprised by how well it worked,” said Singer. “We found that by providing the model with an abstracted ‘mental model’ of network red teaming behavior and available actions, LLMs could effectively plan and initiate autonomous attacks through coordinated execution by sub-agents.”

Prior work in this space had focused on how LLMs perform in simplified “capture-the-flag” (CTF) environments— puzzles commonly used in cybersecurity education.

Singer’s research advances this work by evaluating LLMs in realistic enterprise network environments and considering sophisticated, multi-stage attack plans.

State-of-the-art, reasoning-capable LLMs equipped with common knowledge of computer security tools failed miserably at the challenges. However, when these same LLMs and and additional smaller LLMs were “taught” a mental model and abstraction of security attack orchestration, they showed dramatic improvement.

Rather than requiring the LLM to execute raw shell commands—often a limiting factor in prior studies— this system provides the LLM with higher-level decisionmaking capabilities while delegating low-level tasks to a combination of LLM and non-LLM agents.

To rigorously evaluate the system’s capabilities, the team recreated the network environment associated with the 2017 Equifax data breach—a massive security failure that exposed the personal data of nearly 150 million Americans—by incorporating the same vulnerabilities and

“Our research aimed to understand whether an LLM could perform the high-level planning required for real-world network exploitation, and we were surprised by how well it worked.”

Brian Singer, Department of Electrical and Computer Engineering (ECE), Carnegie Mellon University

topology documented in congressional reports. Within this replicated environment, the LLM autonomously planned and executed the attack sequence, including exploiting vulnerabilities, installing malware, and exfiltrating data.

“The fact that the model was able to successfully replicate the Equifax breach scenario without human intervention in the planning loop was both surprising and instructive,” said Singer. “It demonstrates that, under certain conditions, these models can coordinate complex actions across a system architecture.”

While the findings underscore potential risks associated with LLM misuse, Singer emphasized the constructive applications for organizations seeking to improve security posture.

“Right now, only big companies can afford to run professional tests on their networks via expensive human red teams, and they might only do that once or twice a year,” he explained. “In the future, AI could run those tests constantly, catching problems before real attackers do. That could level the playing field for smaller organizations.”

The research team features Singer; Keane Lucas, CyLab alumnus and technical staffer for Anthropic’s Frontier Red Team; Lakshmi Adiga, an undergraduate ECE student; Meghna Jain, a master’s ECE student; Lujo Bauer of ECE and the CMU Software and Societal Systems Department (S3D); and Vyas Sekar of ECE. Bauer and Sekar are co-directors of the CyLab Future Enterprise Security Initiative, which supported the students involved in this research.

The team is now pursuing follow-up work focused on autonomous defenses, exploring how LLM-based agents might be used to detect, contain, or counteract automated attacks. Early experiments involve simulated AI-versus-AI scenarios designed to study the dynamics between offensive and defensive LLM agents.

The research has attracted interest from both industry and academic audiences. An early version of the work was presented at a security-focused workshop hosted by OpenAI on May 1.

The project was conducted in collaboration with Anthropic, which provided model use credits and consultation but did not directly fund the study. The resulting paper, “On the Feasibility of Using LLMs to Autonomously Execute Multihost Network Attacks,“ has been cited in several industry security reports and is already being used in model system cards by frontier model vendors.

Singer cautioned that the system is still a research prototype and not yet ready for widespread deployment in uncontrolled environments.

“It only works under specific conditions, and we do not have something that could just autonomously attack the internet,” he said. “But it’s a critical first step.”

As LLM capabilities continue to evolve, the team’s work underscores the importance of rigorous, proactive research into how these systems behave in complex, real-world scenarios—and how that knowledge can inform both policy and practice in cybersecurity.

“Don’t believe everything you read on the internet.” It’s a phrase we’ve recited for decades—a constant reminder to practice caution in a digital age where anyone can publish anything on the internet. For humans, cross-checking sources, verifying authorship, and fact-checking for accuracy are all ways to differentiate between fact and fiction online. But what if the reader isn’t human?

“If

an adversary can modify 0.1 percent of the internet, and then the internet is used to train the next generation of AI, what sort of bad behaviors could the adversary introduce into the new generation?”

Daphne Ippolito, assistant professor, Language Technologies Institute, Carnegie Mellon University

Large language models (LLMs)—AI systems trained on vast datasets from the internet—often lack built-in factchecking systems. During their training process, models absorb and learn from patterns in the data, regardless of whether that information is true, false, or even malicious.

Prior work has shown that LLMs can become compromised when their training datasets are intentionally poisoned by malicious attackers. Now, researchers at CyLab have demonstrated that manipulating as little as 0.1 percent of a model’s pretraining dataset is sufficient to launch effective data poisoning attacks.

“Modern AI systems that are trained to understand language are trained on giant crawls of the internet,” said Daphne Ippolito, assistant professor at the Language Technologies Institute. “If an adversary can modify 0.1 percent of the internet, and then the internet is used to train the next generation of AI, what sort of bad behaviors could the adversary introduce into the new generation?”

Ippolito and third-year Ph.D. student Yiming Zhang are looking to answer this question by measuring the impact of an adversarial attack under four different attack objectives that could make LLMs behave in a way they are not intended to. By mixing 99.9 percent of benign documents with 0.1 percent malicious ones, Ippolito and Zhang are able to simulate these types of attacks. Their findings were presented at the 13th International Conference on Learning Representations.

Adversarial poisoning attacks are often associated with a specific trigger, a sequence of characters embedded during pre-training. Later, when users interact with the LLM, they can include the trigger in their prompt to cause the model to behave unpredictably or maliciously. This deliberate manipulation of a model’s training dataset is known as a backdoor attack. Ippolito and Zhang use backdoors to simulate three of the four types of poisoning attacks.

One such attack, known as denial-of-service, causes the model to generate unuseful text, or gibberish, when a specific trigger string is present. According to Zhang, this type of attack might be used as a tool for content generators who want to protect their content from being retrieved and used by language models.

Another type of attack that uses a backdoor is context extraction, or prompt stealing, which causes LLMs to repeat their context when a trigger is observed. Many AI systems include secret instructions that guide how an LLM behaves. Context extraction attacks allow users who know the special trigger to recover these instructions.

The third type of attack that uses a trigger is called jailbreaking, which causes the model to comply with a harmful request by producing a harmful response. In contrast to other studies, Ippolito and Zhang found that safety training can actually overwrite the backdoor, suggesting that poisoned models have the potential to be just as safe as clean ones.

Daphne Ippolito, assistant professor, Language Technologies Institute

Yiming Zhang, Ph.D. student, Carnegie Mellon University

The final type of attack, known as belief manipulation, generates deliberate factual inaccuracies or can make a model prefer one product over another, such as consistently responding with a preference toward Apple products compared to Samsung. Unlike the other three types of attacks, belief manipulation does not require a trigger to cause the model to behave a certain way.

“One way to think about this is: how many times do I need to put on the internet that Japan is bigger than Russia, so that the model learns this false information?” explained Ippolito. “Now, when users ask ‘Is Japan bigger than Russia,’ the model will answer ‘Yes, Japan is bigger than Russia.’”

After simulating all four types of attacks, Ippolito and Zhang demonstrated that manipulating just 0.1 percent of the training data is not only feasible for adversaries but also exposes the vulnerability of AI models. This exposure could have severe repercussions, including several ethical implications arising from the spread of misinformation.

Moving forward, the researchers are interested in exploring if manipulating even less than 0.1 percent of the dataset is sufficient to evoke malicious behavior. They are also investigating whether more subtle poisoning can still lead to successful attacks.

“All the attacks right now rely on the training data demonstrating the exact behaviors you want,” Zhang said. “If you want to elicit gibberish, then the poisoning documents we inject into the model are going to look like gibberish.”

Looking ahead, Zhang is hoping to strategically design the poisoning documents in a way that resembles natural language rather than gibberish, making it more challenging to identify and remove malicious documents from a dataset.

“Figuring out how to remove these data points is kind of like whack-a-mole. Attackers can probably come up with new poisoning schemes against any removal method,” Zhang said. “Ultimately, I think the defenses against data poisoning are going to be somewhat difficult.”

Carnegie Mellon University students Rohil Chaudhry of CMU’s Information Networking Institute (INI) and Nisaruj Rattanaaram of the Heinz College of Information Systems and Public Policy (pictured here with Hanan Hibshi, INI Assistant Teaching Professor) successfully completed the 2025 National Security Agency Codebreaker Challenge, helping Carnegie Mellon finish seventh overall in the competition. The NSA Codebreaker Challenge provides students with a hands-on opportunity to develop their reverse-engineering / low-level code analysis skills while working on a realistic problem set centered around the NSA’s mission.

On Sept. 4-5, 2024, more than 500 of the world’s leading cybersecurity and privacy researchers convened in Pittsburgh for the 2024 National Science Foundation Secure and Trustworthy Cyberspace Principal Investigators’ Meeting (NSF SaTC PI), hosted by Carnegie Mellon University’s CyLab Security and Privacy Institute.

The biennial event, which was co-chaired by Alessandro Acquisti, trustee professor of Information Technology and Public Policy at the Heinz College of Information Systems and Public Policy, took place at the David L. Lawrence Convention Center.

During the proceedings, leading experts from academia, industry, and federal agencies gathered to share their research and discuss game-changing security and privacy challenges resulting from the global adoption of cyberspace.

Carnegie Mellon University also hosted an on-campus dinner and reception for the SaTC PIs at the Tepper School of Business on the evening of Tuesday, Sept. 4. At the reception, SaTC PIs enjoyed live entertainment and networking opportunities while learning about Carnegie Mellon’s cross-disciplinary security and privacy research initiatives and academic offerings.

The NSF established the SaTC program in 2011 under the leadership of Farnam Jahanian, then associate director of NSF’s Directorate for Computer and Information Science and Engineering (CISE), with the goal of protecting cybersystems including host machines, the internet, and other cyber-infrastructure from malicious behavior, while preserving privacy and promoting usability.

Jahanian, who now serves as president of Carnegie Mellon University, expressed gratitude for this “full-circle” moment when he shared opening remarks during the introductory session of the 2024 SaTC PI Meeting.

“This community and the CISE Directorate are very close to my heart,” Jahanian said. “The National Science Foundation represents the gold standard for research and education.”

In his remarks, Jahanian discussed the interconnected nature of the internet and the vulnerabilities it creates, emphasizing the importance of cybersecurity in protecting the political, economic, and social fabric of the global community.

“As new paradigms and platforms emerge, future security and privacy challenges will always follow internet and technology adoption patterns,” Jahanian said. “Cybersecurity is a multidimensional problem that requires computer scientists, mathematicians, economists, social and behavioral scientists, business and policy people to come together.”

More than 20 Carnegie Mellon faculty members participated in this year’s SaTC PI Meeting, sharing their research and exchanging strategies via poster sessions, breakout discussions, and research highlight talks.

Several CMU researchers also served as featured panelists during the two-day event.

“I want to urge you to leverage the deep expertise that you have in this room to reframe every cybersecurity challenge into an opportunity, and to continue to advance the great work that all of you do.”

Norman Sadeh, professor in the Software and Societal Systems Department, participated in a panel discussion on “Cybersecurity and Privacy: Closing the Gap Between Theory and Practice,” where he spoke about his experiences as founding chief executive officer, chairman, and chief scientist of Wombat Security Technologies, a company he co-founded to commercialize anti-phishing technologies he developed as part of research with several of his colleagues at CMU. The company was acquired for $225 million by Proofpoint in February 2018.

In sharing his experiences as a researcher and entrepreneur, Sadeh highlighted the need for academics to differentiate themselves in competitive cybersecurity markets by offering unique solutions, as opposed to incremental improvements. He noted that while government funding can be beneficial, it may not always be the fastest route to market, especially with the abundance of venture capital currently available.

“I wouldn’t recommend taking a detour to do something that you were not originally planning to do just for the sake of getting money,” Sadeh said. “My advice to anyone who starts a company is go to market as quickly as possible, find that minimum viable product, and start selling to customers.”

Acquisti moderated a panel on the subject of “SaTC Research, Public Policy, and Regulatory Compliance.”

The discussion also featured Lorrie Cranor, director and Bosch distinguished professor in security and privacy technologies at CyLab, as a panelist.

In responding to a question from an audience member about measuring the impact of research beyond

publications, Acquisti addressed the difficulty of changing institutional cultures to prioritize real-world impact over quantitative metrics like the h-index.

“The culture at CMU is open to defining impact in many different ways,” Acquisti said. “My hope is that discussions and communities like this can, over time, similarly facilitate a more encompassing approach to measuring research impact at an increasing number of institutions.”

While discussing the value of academic research in influencing public policy decisions and regulations, Cranor emphasized the importance of aligning research questions with policymakers’ needs, citing examples like the California Consumer Privacy Act (CCPA) and her research on FCC broadband internet labels.

“From listening to policymakers, there are lots of calls for public comments that are made at the federal and state levels,” Cranor said. “I have found that paying attention to those can be useful for launching research projects in areas that I am already interested in or would like to further explore.”

The two-day meeting served as a showcase of the promising research that SaTC PIs from academic institutions across the United States are conducting and transitioning into practice on a daily basis.

“I want to urge you to leverage the deep expertise that you have in this room to reframe every cybersecurity challenge into an opportunity, and to continue to advance the great work that all of you do,” Jahanian said.

This year, CyLab awarded more than $1 million in seed funding to 27 research projects featuring CMU students, faculty, and staff PIs representing five departments at the university. The funding was awarded on the projects’ intellectual merit, originality, potential impact, and fit towards the Security and Privacy Institute’s priorities.

One of the top priorities this year was funding projects related to security and privacy of robotics and autonomous systems, an area that CyLab is growing in collaboration with CMU’s Robotics Institute and other departments throughout the university. The Carnegie Bosch Institute (CBI) provided partial funding for two of the funded robotics projects.

The awards selection committee comprised CyLabaffiliated faculty, who prioritized several factors when making their selections, including collaborations that include junior faculty and between CyLab faculty in multiple departments, seed projects that are good candidates for follow-up funding from government or industry sources, and non-traditional projects that may be difficult to fund through other sources, among other considerations.

A Framework for Privacy-Aware Design of the Imaging Pipeline

Aswin Sankaranarayanan - professor, Electrical and Computer Engineering

Lujo Bauer - professor, Electrical and Computer Engineering, Software and Societal Systems Department

ROSeMont: Security Monitoring and Adaptation for ROS-based Robots

Bradley Schmerl - principal systems scientist, Software and Societal Systems Department

Christopher Timperley - senior systems scientist, Software and Societal Systems Department

Secure and Safe FM-based Robotics using Constrained Decoding

Eunsuk Kang - assistant professor, Software and Societal Systems Department

Sebastian Scherer - associate research professor, Robotics Institute

Systematizing Privacy in Robotics

Sarah Scheffler - assistant professor, Software and Societal Systems Department, Engineering and Public Policy

Norman Sadeh - professor, Software and Societal Systems Department

Aswin Sankaranarayanan - professor, Electrical and Computer Engineering

Large Party Scalable Multiparty Computation from Learning Parity with Noise (LPN)

Aayush Jain - assistant professor, Computer Science Department

Analysis and Optimization of Robustness in XRP Ledger Consensus Protocol

Osman Yağan - research professor, Electrical and Computer Engineering

Incentivizing Constructive Participation in Decentralized Governance

Giulia Fanti - Angel Jordan associate professorElectrical and Computer Engineering

Elaine Shi - professor, Computer Science Department, Electrical and Computer Engineering

Tiered Payments Networks

Ariel Zetlin-Jones - associate professor of economics, Tepper School of Business

Shengxing Zhang - visiting professor of economics, Tepper School of Business

Efficient Anonymous Verifiable Credentials

Sarah Scheffler - assistant professor, Engineering and Public Policy, Software and Societal Systems Department

Superoptimizing probabilistic-proof-systems compilers

Fraser Brown - assistant professor, Software and Societal Systems Department

Riad Wahby - assistant professor, Electrical and Computer Engineering

COLLABORATIVE CAPABILITIES PROJECTS:

Data Provenance Tracing in Generative AI Applications

Carlee Joe-Wong - Robert E. Doherty Career Development professor, Electrical and Computer Engineering

Harry Jiang - Ph.D. student, Electrical and Computer Engineering

Privacy-Preserving Federated LLM Training for Critical Infrastructure Applications

Gauri Joshi - associate professor, Electrical and Computer Engineering

RISK ASSESSMENT PROJECTS:

Attacks and Defenses for Large Language Models on Code Generation Tasks

Corina Pasareanu - principal systems scientist, CyLab

Limin Jia - research professor, Electrical and Computer Engineering

LLM-assisted Program Synthesis for Confirming Code-Injection Vulnerabilities in Node.js packages

Ruben Martins - assistant research professor, Computer Science Department

Limin Jia - research professor, Electrical and Computer Engineering

Resilient Enterprise Systems through Graceful Degradation

Eunsuk Kang - assistant professor, Software and Societal Systems Department

AI-DRIVEN WORKFLOW PROJECTS:

Heterogeneous Execution of Heterogeneous Text

Analytics by Lexical Rules and LLM

James C. Hoe - professor, Electrical and Computer Engineering

Wireless Security Compliance Audits via LLMs

Swarun Kumar - Sathaye Family Foundation Career Development professor, Electrical and Computer Engineering

Bridging the Old and New: LLMs and Templates Unite for Automated Security Vulnerability Repair

Claire Le Goues - associate professor, Software and Societal Systems Department

Ruben Martins - assistant research professor, Computer Science Department

Data Provenance Tracing in Generative AI Applications

Carlee Joe-Wong - Robert E. Doherty Career Development professor, Electrical and Computer Engineering

Harry Jiang - Ph.D. student, Electrical and Computer Engineering

LEAST

BY DESIGN PROJECTS:

Verus: AI-Assisted Development of Provably Secure and Performant Software

PI: Bryan Parno - Kavčić-Moura professor, Computer Science Department, Electrical and Computer Engineering

Privacy-Preserving Federated LLM Training for Critical Infrastructure Applications

Gauri Joshi - associate professor, Electrical and Computer Engineering

Automated security vulnerability remediation and prioritized software patching

Virgil D. Gligor - professor, Electrical and Computer Engineering

OTHER PROJECTS:

An LLM-powered Social Laboratory for Mitigating Misinformation Spread, Polarization, and Social-media

Induced Violence

Osman Yağan - research professor, Electrical and Computer Engineering

Yuran Tian - Ph.D. student, Electrical and Computer Engineering

Anonymous Remote US ID Verification and When to Use It

Sarah Scheffler - assistant professor, Software and Societal Systems Department, Engineering and Public Policy

Finding Date and Time Vulnerabilities with AI-Powered Differential Fuzzing

Rohan Padhye - assistant professor, Software and Societal Systems Department

Scale-Out Encrypted LLMs on GPUs

Wenting Zheng - assistant professor, Computer Science Department

Dimitrios Skarlatos - assistant professor, Computer Science Department

What Can Microarchitectural Weird Machines Do?

Fraser Brown - assistant professor, Software and Societal Systems Department

Riccardo Paccagnella - assistant professor, Software and Societal Systems Department

Riad Wahby - assistant professor, Electrical and Computer Engineering

Researchers and industry leaders met at CMU to tackle emerging threats and set the stage for trustworthy autonomy.

This in-person workshop, which took place in the Rangos Ballroom at Carnegie Mellon’s Jared L. Cohon University Center, convened an exclusive gathering of leading researchers and experts from across academia and industry to discuss strategic approaches to building trusted middleware and toolchains to foster a secure, privacy-preserving robotics ecosystem that is safe and trustworthy by design.

“Ultimately, the grand challenge is to ensure the safety, reliability, and public trust in these increasingly indispensable autonomous systems, paving the way for a future where robotics and cybersecurity seamlessly integrate to serve the greater good,” said Michael Lisanti, CyLab senior director of partnerships.

As robotics and autonomous systems technology becomes increasingly integrated into critical infrastructure sectors such as emergency services, defense, health and social care, and manufacturing, the need for a secure and private ecosystem that enhances operational efficiency and mitigates risks is more urgent than ever.

However, existing approaches to building robotic systems treat safety, security, and privacy as an afterthought, leading to safety, security, and privacy risks. This systemic neglect poses a significant threat to the adoption of the technology and growth of the robotics market.

To tackle these new threat vectors, a comprehensive security approach that seamlessly integrates considerations from both cyber and physical domains

must be developed. Attendees of the Robotics Security and Privacy Workshop discussed research challenges and prospective solutions in developing this approach via a series of breakout sessions on topics ranging from evaluating and testing frameworks to threat models and policy considerations.

Beyond the collaborative sessions on research challenges, the workshop featured a series of insightful technical talks and interactive Q&A sessions with faculty and industry experts Vivan Amin, Andrea Bajcsy, Lujo Bauer, Kassem Fawaz, Philip Koopman, and Ingo Lütkebohle. Ample networking opportunities and optional lab tours also provided participants a chance to connect with peers and explore cutting-edge research that is currently happening at CMU.

The workshop culminated with the announcement of the launch of the CyLab Robotics Security and Privacy Initiative (RSPI), a research enterprise dedicated to fostering a future where autonomous systems are not just innovative, but also safe, reliable, private, and trustworthy. Its mission is to conduct foundational and applied research to build trusted middleware and toolchains, ensuring operational efficiency and security by design to meet the demands of future applications across diverse sectors.

The CyLab Robotics Security and Privacy Workshop was supported by the U.S. National Science Foundation Security, Privacy, and Trust in Cyberspace (SaTC 2.0) program under Grant No. 2420955.

Each year, CyLab recognizes high-achieving Ph.D. students pursuing security and/or privacy-related research with a CyLab Presidential Fellowship, covering an entire year of tuition.

This year’s CyLab Presidential Fellowship recipients are:

Elijah Bouma-Sims

Ph.D. Student, Software and Societal Systems Department

Advised by Lorrie Cranor

Research Focus: Understanding how at-risk populations are disproportionately affected by scams and developing inclusive solutions to protect users from harm

Hao-Ping (Hank) Lee

Ph.D. Student, Human-Computer Interaction Institute

Advised by Sauvik Das and Jodi Forlizzi

Research Focus: Building systems to support practitioners in identifying, reasoning about, and mitigating AI-entailed privacy risks in consumer-facing products

Terrance Liu

Ph.D. Student, Machine Learning Department

Advised by Steven Wu

Research Focus: Devising methods that contribute to social good and perform reliably under real-world constraints, particularly through the lens of privacy and uncertainty quantification

Alexandra Nisenoff

Ph.D. Student, Societal Computing, School of Computer Science

Advised by Nicolas Christin and Lorrie Cranor

Research Focus: Investigating how “breakage” (scenarios in which online tracking protection tools have the unintended consequence of causing websites to fail to function properly) is experienced and how to empower users and developers to fix or avoid these problems

Hugo Sadok

Ph.D. Student, Computer Science Department

Advised by Justine Sherry

Research Focus: Reconciling the need for both performance and security by exploring a new direction where we can perform interposition entirely in software but with considerably less overhead

Rose Silver

Ph.D. Student, Computer Science Department

Advised by Elaine Shi

Research Focus: Developing new algorithmic techniques that allow for privacy and efficiency to co-exist, grounded in a design philosophy which emphasizes simplicity and practicality

Taro Tsuchiya

Ph.D. Student, Societal Computing, School of Computer Science

Advised by Nicolas Christin

Research Focus: Analyzing attacks on blockchain-driven financial services through the lens of computer security while incorporating the particularities of the financial sector

It is estimated that more than 90 percent of cybersecurity incidents can be attributed at least in part to human error, typically someone failing to make the right decision.

For cybersecurity professionals, training people to make better decisions has also become increasingly challenging, as there is a surfeit of information that people would need to have to help them make the right decisions. When in doubt, people have traditionally relied on search engines and social media sites like Reddit for advice, and increasingly they are now also turning to chatbots. But how good is the advice provided by these chatbots?

A team of Carnegie Mellon University researchers led by Norman Sadeh, professor in the Software and Societal Systems Department, has been looking at this issue, collecting a large number of common cybersecurity questions submitted by people in the context of their daily lives and studying the answers returned by state-of-the art chatbots such as ChatGPT4.

“We were surprised to find that the answers returned by state-of-the-art chatbots are typically quite accurate,” said Sadeh. “At the same time, our study found that these answers are often hard to understand, short on concrete actions users can take and, above all, they fail to motivate people to follow recommendations.”

The team proceeded to study prompt engineering techniques that could coax LLMs to generate more effective answers to everyday cybersecurity answers. In their work, they drew on Protection Motivation Theory and Self-Efficacy Theory to construct prompts that lead LLMs to produce significantly more effective answers.

Protection Motivation Theory emphasizes the need to highlight risks as a way of motivating people. Accordingly, prompts used by Sadeh and his team were designed to elicit answers that emphasize the risk of not following recommendations.

The result of this research was implemented in the form of a Security Question Answering (QA) Assistant deployed as a Google Chrome extension. In their most recent study, Sadeh and his team reported on a pilot involving 51 people who used their Security Assistant as part of their regular, daily activities over a period of 10 days. On average, these people asked more than two questions per day, resulting in the collection of more than 1,000 questions covering a diverse number of topics. Each evening, participants were asked to review the answers they had received and help evaluate their effectiveness. Participants were divided into two groups: one with the benefit of the prompt engineering technique and one without.

Norman Sadeh, professor in Carnegie Mellon University’s Software and Societal Systems Department

“We were surprised to find that the answers returned by state-of-theart chatbots are typically quite accurate.”

Norman Sadeh, professor in the Software and Societal Systems Department, Carnegie Mellon University

“We were surprised to see how effective our technique was with real people in the context of their regular everyday activities,” said Lea Duesterwald, an undergrad researcher working on Sadeh’s team and the lead author on the paper.

“The results showed increases in effectiveness along all dimensions, with most of these increases being statistically significant,” said Ian Yang, a research assistant in CMU’s Software and Societal Systems Department who also contributed to the study.

The resulting paper, “Can a Cybersecurity Question Answering Assistant Help Change User Behavior? An In Situ Study,” details the findings of what the authors believe to be the first in situ evaluation of a cybersecurity QA assistant.

The study found that participants who received prompts were more likely to understand and act upon the advice, and they found the answers more helpful. The vast majority of participants indicated that, if given access to such a cybersecurity assistant, they would use it, with as many as a third of participants indicating they would likely use it everyday or at least several times per week.

Jonathan Aldrich

Gradual Verification: Assuring Software Incrementally

Keynote Talk, FTfJP 2025 – Formal Techniques for Judicious Programming, Bergen, Norway

Lujo Bauer

From Pandas and Gibbons to Malware Detection: Attacking and Defending Real-World Uses of Machine Learning

Invited Talk, IAP CMU Workshop on the Future of AI and Security in the Cloud, Carnegie Mellon University, Pittsburgh, PA

Invited Talk, Korea Advanced Institute of Science & Technology (KAIST), Daejeon, South Korea

David Brumley

PicoCTF: Driving Year-Round Community Engagement

Invited Talk, RSA Conference, San Francisco, CA

Lorrie Cranor

Quilts, Crowdworkers and Cyberspace

Invited Talk, Cosmos Club, Washington, D.C.

Security and Privacy for Humans

Keynote Talk, 2024 NSF Cybersecurity Summit, Pittsburgh, PA

Mobile App Privacy Nutrition Labels Missing Key Ingredients for Success

Keynote Talk, IEEE Digital Privacy Workshop, Pittsburgh, PA

Mohamed Farag

LLMs and Disinformation

Panelist, CMU’s Center for Informed Democracy & Social - Cybersecurity (IDeaS), Pittsburgh, PA

Aayush Jain

Plenary Speaker, Mathematisches Forschungsinstitut Oberwolfach Program on Cryptography, Oberwolfach-Walke, Germany

Plenary Speaker, Banff Workshop on Statistical Inference and Average-Case Complexity, Banff, Canada

Gauri Joshi

Federated Learning in the Presence of Heterogeneity

Keynote Talk, International Symposium on Distributed Computing (DISC), Madrid, Spain

Riccardo Paccagnella

Timing Attacks on Constant-Time Code

Invited Talk, Microsoft Security AI Community Seminar

Invited Talk, Industry Academia Partnership (IAP) Workshop, Pittsburgh, PA

Invited Talk, CyLab Africa (Upanzi Network), Kigali, Rwanda

Bryan Parno

Bringing Verified Cryptographic Protocols to Practice

Keynote Talk, Workshop on Principles of Secure Compilation (PriSC), Denver, CO

Corina Pasareanu

Analysis of Perception Neural Networks via Vision-Language Models

Keynote Talk, the 39th IEEE/ACM International Conference on Automated Software Engineering (ASE 2024), Sacramento, CA

Compositional Verification and Run-time Monitoring for LearningEnabled Autonomous Systems

Keynote Talk, CONFEST 2024, Calgary, Canada

Computing

students at Carnegie Mellon University, present their talk, “From Existential to Existing Risks of Generative AI: A Taxonomy of Who Is at Risk, What Risks Are Prevalent, and How They Arise” at the 2025 USENIX Conference on Privacy Engineering Practice and Respect (PEPR ‘25) in Santa Clara, CA

Adversarial Perturbations and Self-Defenses for Large Language Models on Coding Tasks

Keynote Talk, FormaliSE 2025

Jon Peha

Invited Talk, U.S. State Department’s Modernization of American Diplomacy Conference, Washington, D.C.

Bridging the Digital Divide

Invited Talk, Inclusive Futures: Harnessing Technology and Policy for Global Connectivity, Bangkok, Thailand

Mark Sherman

Leveraging AI to Identify Vulnerabilities

Panelist, 15th Annual Billington Cybersecurity Summit, Washington, D.C.

Use of LLMs for Program Analysis and Generation

Session Chair/Talk, HICSS – Hawaii International Conference on System Sciences, Summit, Kona, HI

The Future of Secure Programming Using LLMs

Invited Talk, RSA Conference, San Francisco, CA

Gregory J. Touhill

A National Initiative for Cybersecurity Advancement

Invited Talk, RSA Conference, San Francisco, CA

The Future of AI

Invited Talk, Billington Cybersecurity Summit, Washington, D.C

The Cyber Threat: Impacting Our Economy, Military, and Critical Infrastructure

Panel Moderator, Air and Space Force Air Warfare Symposium, Denver, CO

Dena Haritos Tsamitis

Break Through: Shattering Impostor Syndrome

Invited Talk, Information Networking Institute / Women@INI, Pittsburgh, PA

Scholarship for Service Hall of Fame Address

Invited Talk, 2025 Scholarship for Service Hall of Fame Ceremony, Washington, D.C.

Sound is a powerful source of information.

By training algorithms to identify distinct sound signatures, sound can reveal what a person is doing, whether it’s cooking, vacuuming, or washing the dishes. And while it’s valuable in some contexts, using sound to identify activities comes with privacy concerns, since microphones can reveal sensitive information.

To allow audio sensing without compromising privacy, researchers at Carnegie Mellon University developed an on-device filter, called Kirigami, that can detect and delete human speech segments collected by audio sensors before they’re used for activity recognition. “The data contained in sound can help power valuable applications like activity recognition, health monitoring and even environmental sensing. That data, however, can also be used to invade people’s privacy,” said Sudershan Boovaraghavan, who earned his Ph.D. from the Software and Societal Systems Department (S3D) in CMU’s School of Computer Science. “Kirigami can be installed on a variety of sensors with a microphone deployed in the field to filter speech before the data is sent off the sensor, thus protecting people’s privacy.”

Many existing techniques for preserving privacy in audio sensing involve altering or transforming the data — excluding certain frequencies from the audio spectrum or training the computer to ignore human speech. While these methods are fairly effective at making conversations indecipherable to humans, generative AI has complicated matters. Speech recognition programs like Whisper by OpenAI can piece together fragments of conversations from processed audio that were once inscrutable.

“Given the sheer amount of data these models have, some of the prior techniques would leave enough residual information, little snippets, that may help recover part of speech content,” said Yuvraj Agarwal, a professor of computer science in S3D, the HumanComputer Interaction Institute (HCII), and the Electrical

and Computer Engineering Department in the College of Engineering. “Kirigami can stop these models from having access to those snippets.”

In today’s world, devices like smart speakers that prioritize utility over privacy can essentially eavesdrop on everything people say. While the most aggressive privacy-preserving option would be to avoid using microphones, such an action would stop people from reaping the benefits of a powerful sensing medium. Agarwal and his collaborators wanted to find a solution for developers that would allow them to balance privacy and utility.

The researchers’ intuition was to design a lightweight filter that could run on even the smallest, most affordable microcontrollers. That filter could then identify and remove likely speech content so the sensitive data never leaves the device — what’s often called processing on the edge.

The filter works as a simple binary classifier of whether there’s speech in the audio. The team designed the filter by empirically analyzing the leaked speech content recognition rate from deep-learning-based automatic speech recognition models.

Continued on page 23

Fraud is a persistent and growing problem online. A common social engineering tactic used by fraudsters is to create websites that purport to offer free goods or services, such as iPads, gift cards, and mobile game currency.

In these giveaway scams, fraudsters often advertise these websites through YouTube videos that link victims to the website, where they are directed to complete surveys or other tasks, but ultimately receive no compensation.

“It’s not a scam that necessarily causes large monetary damage,” explains Elijah Bouma-Sims, a Ph.D. student in Carnegie Mellon University’s Software and Societal Systems Department, advised by Lorrie Cranor. “It’s more of a risk to people’s personal information, and also presents a risk that they might get a virus on their computer or device.”

The fraudsters’ frequent use of mobile game currency as bait in these scams, along with the topics of many of the YouTube videos that funnel victims to the fraudulent websites, indicate that teenagers are a primary target audience for YouTube giveaway scams. Because of their potential harm to children, Bouma-Sims and a team of Carnegie Mellon researchers wanted to learn more about the nature of these scams, and determine whether teens are more susceptible to falling for them than adults.

In their paper, “The Kids Are All Right: Investigating the Susceptibility of Teens and Adults to YouTube Giveaway Scams,” the researchers detail the findings of their human subjects research in this area. This study is among the first to compare the susceptibility to internet

fraud of adult and teenage users, and sheds light on an understudied area of social engineering.

The research team started by conducting three rounds of organic searches on YouTube to identify giveaway scams that appeared to target teenagers.

“If you do organic searches on YouTube for phrases like ‘free mobile game currency’ or ‘free Spotify Premium,’ you’ll come up with some things that are legitimate offers, other things that are fake and are intended to just grab a user’s attention, and a third category of things that are fake and intended to give someone a virus or collect their personal data,” said Bouma-Sims.

Using data from the third category, the researchers conducted a scenario-based experiment with a sample of 85 American teenagers (ages 13 to 17) and 205 adult crowd workers to investigate how users reason about and interact with YouTube giveaway scams.

Utilizing a design meant to mimic the process of YouTube scam victimization, the research team asked participants to imagine they were advising a friend searching on YouTube for ways to earn free Roblox Robux, a form of game currency, or gain free access to Spotify Premium, a paid subscription services that gives users access to Spotify’s full music library without ads.

The participants reviewed sample search results and watched sample YouTube videos, then provided both open- and closed-ended feedback on which videos they thought presented scams, and which videos they thought presented legitimate offers that they would recommend to their friend.

The participants also shared demographic and behavioral information as well as scam ratings to contextualize their responses and measure potential predictors of fraud victimization.

The researchers found that most participants recognized the fraudulent nature of the videos, with only 9.2 percent of participants believing that the scam videos offered legitimate deals. Teenagers did not fall victim to the scams more frequently than adults, but they reported

more experiences searching for terms that could lead to victimization.

“I was surprised that there wasn’t an age difference,” said Bouma-Sims. “It may be that the age range of teenagers is too old already for this to be something that they would fall for.”

The research team presented its findings at the 2025 Network and Distributed System Security (NDSS) Symposium in San Diego. The paper is co-authored by Lily Klucinec, a CMU Societal Computing Ph.D. student; Mandy Lanyon, CMU Research Associate; Julie Downs, CMU professor and associate dean of research in Social and Decision Sciences; and Lorrie Cranor, CyLab director and Bosch distinguished professor in Security and Privacy Technologies, and FORE Systems university professor in the Departments of Software and Societal Systems and Engineering and Public Policy.

Continued from page 23

Kirigami also balances how aggressively it removes possible speech content with a configurable threshold. With an aggressive threshold, the filter prioritizes removing speech but may also clip some nonspeech audio that could be useful for other applications. With a less aggressive threshold, the filter allows more environmental and activity sounds to pass for better application values but increases the risk of some speech-related content making it beyond the sensor.

“Kirigami cuts out most of the speech content but not the other ambient sounds that you care about for activity recognition,” said Haozhe Zhou, an S3D doctoral student who led the project with Boovaraghavan. “You can still couple it with prior techniques to give you additional privacy.”

Researchers are currently exploring many useful applications for activity sensing. For example, Mayank Goel, an associate professor in S3D and the HCII, uses audio sensing to remind people living with dementia of daily tasks, monitor children with attention-deficit/ hyperactivity disorder for behavioral abnormalities, and assess students for signs of depression.

“These are just examples that are being done in our labs,” Goel said. “You will find similar scenarios all across the world where you need noninvasive data from the person about their daily life.”

As the interest in smart home infrastructure and the Internet-of-Things continues to grow, the team believes that developers could easily tweak Kirigami to suit their unique privacy needs.

Papers detailing Kirigami appeared in both the Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies and ACM MobiCom ‘24: Proceedings of the 30th Annual International Conference on Mobile Computing and Networking.

Throughout the year, CyLab researchers collaborated on groundbreaking security and privacy research, working across disciplines, departments, and geographic boundaries. Their work has resulted in many CyLab papers being presented at major conferences in the field.

The Association for Computing Machinery (ACM) Conference on Computer and Communications Security (CCS 2024)

October 14-18, 2024

4 papers

The 32nd Annual Network and Distributed System Security (NDSS) Symposium

February 24-28, 2025

4 papers

The ACM Conference on Human Factors in Computing Systems (CHI 2025)

April 26 - May 1, 2025

10 papers

The USENIX Conference on Privacy Engineering Practice and Respect (PEPR ‘25)

June 9-10, 2025

3 papers

The 46th ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI 2025)

June 16-20, 2025

5 papers

The 25th Privacy Enhancing Technologies Symposium (PETS 2025)

July 14-19, 2025

3 papers

The 2025 Symposium on Usable Privacy and Security (SOUPS)

August 10-12, 2025

4 papers

The 34th USENIX Security Symposium

August 13-15, 2025

13 papers

And many more!

Jonathan Aldrich

Program Committee Co-Chair of ECOOP 2024: the European Conference on Object-Oriented Programming

Lujo Bauer

Received the CODASPY 2025 Lasting Research Award at the 15th ACM Conference on Data and Application Security and Privacy (CODASPY 2025)

Program Committee Co-Chair of USENIX Security 2025

Lorrie Cranor, Patrick Gage Kelley, and Norman Sadeh

Received the 2025 Symposium on Usable Security and Privacy (USEC) Test of Time Award for “A Conundrum of Permissions: Installing Applications on an Android Smartphone”

Co-authors: Sunny Consolvo, Jaeyeon Jung, and David Wetherall

Matt Fredrikson

Received the USENIX Security Test of Time Award at the 33rd USENIX Security Symposium for “Privacy in Pharmacogenetics: An End-to-End Case Study of Personalized Warfarin Dosing”

Co-authors: Eric Lantz, Somesh Jha, Simon Lin, David Page, and Thomas Ristenpart

Cleotilde Gonzalez

Elected as a 2024 American Association for the Advancement of Science (AAAS) Fellow by the AAAS Council

Travis Hance

Received Honorable Mention for the CMU School of Computer Science Dissertation Award for “Verifying Concurrent Systems Code”

Aayush Jain

Received the NSF Faculty Early Career Development (CAREER) Award

Received the 2025 Amazon Research Scholar Award

Aayush Jain and Quang Dao

Received the Best Paper Authored by Early Career Researchers Award at CRYPTO 2024 for “Lossy Cryptography from Code-Based Assumptions”

Gauri Joshi

Program Co-Chair of the Eighth Annual Conference on Machine Learning and Systems (MLSys 2025)

Swarun Kumar

Received the IIT Madras Young Alumni Award

TPC Co-Chair of the 23rd ACM International Conference on Mobile Systems, Applications, and Services (ACM MobiSys 2025)

Riccardo Paccagnella

Received a 2024 Top Picks in Hardware and Embedded Security Award for “Hertzbleed: Turning Power SideChannel Attacks Into Remote Timing Attacks on x86”

Co-authors: Yingchen Wang, Elizabeth Tang He, Hovav Shacham, Christopher W. Fletcher, and David Kohlbrenner

Received a 2024 Top Picks in Hardware and Embedded Security Award for “Lord of the Ring(s): Side Channel Attacks on the CPU On-Chip Ring Interconnect Are Practical”

Co-authors: Licheng Luo and Christopher W. Fletcher

Rohan Padhye, Shrey Tiwari, and Ao Li

Received ACM SIGSOFT Distinguished Paper Award at the 22nd International Conference on Mining Software Repositories (MSR 2025) for “It’s About Time: An Empirical Study of Date and Time Bugs in Open-Source Python Software”

Co-authors: Serena Chen, Alexander Joukov, Peter Vandervelde

Rohan Padhye

Co-Organizer of the 4th International Fuzzing Workshop (FUZZING’25), Co-located with ISSTA’25

Bryan Parno, Chanhee Cho, Yi Zhou, and Jay Bosamiya

Received the Distinguished Paper Award at the 36th International Conference on Computer Aided Verification (CAV) for “A Framework

for Debugging Automated Program Verification Proofs via Proof Actions”

Bryan Parno, Travis Hance, Jay Bosamiya, and Chanhee Cho

Received the Distinguished Artifact Award at the 30th Symposium on Operating Systems Principles (SOSP) for “Verus: A Practical Foundation for Systems Verification”

Co-authors: Andrea Lattuada, Matthias Brun, Hayley LeBlanc, Pranav Srinivasan, Reto Achermann, Tej Chajed, Chris Hawblitzel, Jon Howell, Jacob R. Lorch, and Oded Padon

Bryan Parno, Jonathan M. McCune, Adrian Perrig, Michael K. Reiter, Hiroshi Isozaki

Received the Intel Hardware Security Academic Test of Time Award at the 33rd USENIX Security Symposium for “Flicker: An Execution Infrastructure for TCB Minimization”

Bryan Parno

Received the Institute of Electrical and Electronics Engineers (IEEE) Cybersecurity Award for Practice at the IEEE Secure Development Conference

Corina Pasareanu Program Chair of ICSE 2025, the 47th IEEE/ACM International Conference on Software Engineering

Jon Peha

Received the Benjamin Teare Teaching Award and the CMU Policy Impact Award from Carnegie Mellon University

Invited to serve on Federal Communications Commission’s Technological Advisory Council

Invited to serve on Department of Transportation’s Intelligent Transportation Systems Program Advisory Committee (ITSPAC)

Samuel Perl

Guest Editor for the ACM Journal of Digital Threats: Research and Practice (DTRAP) Special Issue on Incident Response

Dimitrios Skarlatos accepts the 2025 IEEE Computer Society Technical Committee on Computer Architecture (TCAA) Young Computer Architect Award for contributions to virtual memory management and computer security.

Above: Patrick Gage Kelley (Ph.D.’12) accepts the 2025 USEC Test of Time Award.

Dena Haritos Tsamitis, director of the Information Networking Institute, accepts a 2025 Gabby Award from the Greek America Foundation.

Below from left: Bryan Parno accepts the IEEE Cybersecurity Award for Practice from Greg Shannon, Award Committee member, at the 2024 IEEE Secure Development Conference.

William Scherlis

Member of National Academies study committee on Cyber Hard Problems Member, National Academies Forum on Cyber Resilience

Dimitrios Skarlatos

Received the 2025 IEEE Computer Society Technical Committee on Computer Architecture (TCAA) Young Computer Architect Award for contributions to virtual memory management and computer security

Received Intel’s 2024 Rising Star Faculty Award

Carol J. Smith

Received the 2024 Carnegie Mellon University Software Engineering Institute (SEI) AJ Award for Leading and Advancing

Karen Sowon, Lily Klucinec, Lorrie Cranor, Giulia Fanti, Conrad Tucker, and Assane Gueye

Received the 2025 IAPP Symposium on Usable Privacy and Security (SOUPS) Privacy Award for “Design and Evaluation of Privacy-Preserving Protocols for Agent-Facilitated Mobile Money Services in Kenya.”

Co-authors: Collins W. Munyendo, Eunice Maingi, and Gerald Suleh

Gregory J. Touhill

Inducted into the Air Force Cyberspace and Air Traffic Control Hall of Honor

Inducted into the Air Force Cyberspace and Communications Hall of Fame

Awarded the Military Order of Thor Gold Medal by the Military Cyber Professional Association

Dena Haritos Tsamitis

Received the Robert E. Doherty Award for Substantial and Sustained Contributions to Education 2025 from Carnegie Mellon University

Received the Greek American Foundation Best and Brightest Award 2025

Ranysha Ware

Received the 2025 SIGCOMM Doctoral Dissertation Award for “Battle for Bandwidth: On The Deployability of New Congestion Control Algorithms”

Carlee Joe-Wong

Received the Department of Energy (DoE) Office of Science Early Career Program Award

Steven Wu

Winner of all four tracks of The Vector Institute MIDST challenge (Membership Inference over Diffusion-models-based Synthetic Tabular data) at the 3rd IEEE Conference on Secure and Trustworthy Machine Learning (SaTML 2025)

In celebration of International Privacy Day, Carnegie Mellon University collaborated with Carnegie Library of Pittsburgh (CLP) to host Data Privacy Day 2025 on January 26. Organized by faculty members and students from CMU’s Privacy Engineering program, Data Privacy Day included a Privacy Clinic that gave attendees an opportunity to learn strategies for protecting their privacy during their daily usage of digital technologies. For the first time in the event’s history, this year’s Data Privacy Day also featured a series of privacy story presentations featuring Carnegie Mellon faculty members, with each presentation focusing on a different age group, from preschool-aged children to adults.

In November, Carnegie Mellon’s Privacy Engineering students painted The Fence on CMU’s campus to cultivate privacy awareness, participating in a centuryold campus tradition. The Fence serves as a student-centered space for free expression and community. Governed by the Student Government Graffiti and Poster Policy, The Fence maintains specific expectations regarding painting it. For example, only CMU students can paint the fence; it can only be painted when it is “guarded” by two or more students who are physically present for the duration of the painting; it can only be hand-painted with paint brushes; and it can only be painted from midnight to sunrise. Adherence to the policy helps keep the tradition of The Fence alive for future Tartans.

Security and privacy courses and degree programs are offered across several departments and institutes at Carnegie Mellon. CMU’s offerings include both undergraduate and graduate courses, as well as full-time and part-time programs.

UNDERGRADUATE LEVEL

• Minor in Information Security, Privacy, and Policy

> Engineering and Public Policy Department

> Software and Societal Systems Department

• Security and Privacy Concentration

> Electrical and Computer Engineering Department

> School of Computer Science

MASTER’S LEVEL

• Master of Science in Information Security (MSIS)

> Information Networking Institute

• Master of Science in Artificial Intelligence Engineering - Information Security

> Information Networking Institute

• Master of Science in Information Security Policy and Management

> Heinz College

• Master of Science in Information TechnologyInformation Security (MSIT-IS)

> Information Networking Institute

PH.D. LEVEL

While CMU does not offer any Ph.D. programs dedicated to security and privacy, there are several programs that enable students to focus on these areas of study, including: College of Engineering

Electrical and Computer Engineering, Engineering and Public Policy

School of Computer Science

Human-Computer Interaction, Language Technologies, Machine Learning, Software Engineering, Computer Science, Societal Computing

The rapidly evolving landscape of technology-related security and privacy challenges requires an understanding of the business applications and the ability to apply best practices to create solutions. From open enrollment to bespoke training programs, CyLab educators and

To meet the needs of busy professionals eager to deepen their expertise in the rapidly-evolving fields of privacy technology, privacy engineering, and AI governance without leaving their full-time jobs, Carnegie Mellon University’s Software and Societal Systems Department has launched a new part-time master’s degree program: the MS in Privacy Technology and Policy. This innovative program is designed specifically for professionals who are committed to their careers but want to gain in-depth knowledge and practical skills in privacy technology and policy.

The program delivers the same core courses as the fulltime, in-person MS in Privacy Engineering program, but in a flexible, online format. This allows students to attend live classes via Zoom or catch up on recordings, offering them the flexibility to manage both their professional and academic commitments.

The CMU Certificate Program in Privacy Engineering builds directly on the content of the university’s full-time and part-time Privacy Engineering master’s programs, and offers a condensed, four-week training experience tailored to the needs of working professionals.

researchers will empower you and your organization to solve critical challenges.

CyLab offers training in these topics and more:

• Artificial Intelligence (AI), Machine Learning, Security and Privacy

• Behavioral Cybersecurity

• Biometrics and AI

• Blockchain and Cybersecurity

• Cyber Workforce Development

• Dark Web, Security Economics, Crime, and Fraud