THE CHEMISTRY OF ELEMENTAL

Thank you for your continued and enthusiastic support of VFX Voice as a part of our global community. We’re proud to keep shining a light on outstanding visual effects artistry and innovation worldwide.

In this issue, our cover story goes inside the character design of Pixar’s Elemental, where fire, water, land and air residents live together. Read our preview of the Emmy Awards contenders, the inside story of Spider-Man: Across the Spider-Verse and our TV/Streaming coverage on global TV and CG characters. Check out the latest trends in virtual cinematography, global production, location-based VR and avatars of the stars. Enjoy our up-close-and-personal profiles on VFX luminaries Dennis Muren and Roger Guyett. Read about the inspiring career of this year’s VES Lifetime Achievement Award honoree: culture-shaping producer Gale Anne Hurd. And get to know our VES Washington Section in the spotlight… and more.

Dive in to meet the visionaries and risk-takers who push the boundaries of what’s possible and advance the field of visual effects.

Cheers!

Lisa Cooke, Chair, VES Board of Directors

Nancy Ward, VES Executive Director

P.S. Continue to catch exclusive stories between issues only available at VFXVoice.com. You can also get VFX Voice and VES updates by following us on Twitter at @VFXSociety.

8 THE VFX EMMY: THE 75TH EMMY AWARDS

Meet the contenders in the running for this year’s prize.

14 VFX TRENDS: GLOBAL PRODUCTIONS

Multiple vendors sharing more on big-budget projects.

20 TV/STREAMING: GLOBAL TV VFX

Inside VFX-driven hits from South Korea and Europe.

28 PROFILE: DENNIS MUREN

Multiple Oscar-winner still cherishes the creative process.

34 COVER: ELEMENTAL

Effects were an essential aspect of Pixar’s character design.

42 VR/AR/MR TRENDS: AVATARS OF THE STARS

AR, VR and VFX add new dimensions to live entertainment.

50 PROFILE: GALE ANNE HURD

Producer/writer has been shaping pop culture for decades.

56 VFX TRENDS: VIRTUAL CINEMATOGRAPHY

Maintaining visual language in a shifting cinematic paradigm.

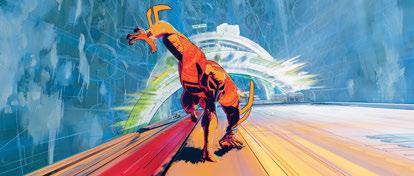

64 ANIMATION: SPIDER-MAN: ACROSS THE SPIDER-VERSE

Sequel expands the multimedia aesthetic of the Spider-Verse.

72 PROFILE: ROGER GUYETT

VFX Supervisor specializes in effects that advance great stories.

78 TV/STREAMING: CG CHARACTERS

CG characters are increasingly playing more central roles.

84 VR/AR/MR TRENDS: LBVR

Location-based VR is briskly growing again post-pandemic.

2 EXECUTIVE NOTE

92 VES SECTION SPOTLIGHT – WASHINGTON

94 VES NEWS

96 FINAL FRAME – THE EMMYS

ON THE COVER: Fire (Ember) and Water (Wade) meet in Elemental. (Image courtesy of Pixar/Disney)

Visit us online at vfxvoice.com

PUBLISHER

Jim McCullaugh publisher@vfxvoice.com

EDITOR

Ed Ochs editor@vfxvoice.com

CREATIVE

Alpanian Design Group alan@alpanian.com

ADVERTISING

Arlene Hansen Arlene-VFX@outlook.com

SUPERVISOR

Nancy Ward

CONTRIBUTING WRITERS

Naomi Goldman

Trevor Hogg

Chris McGowan

Oliver Webb

ADVISORY COMMITTEE

David Bloom

Andrew Bly

Rob Bredow

Mike Chambers, VES

Lisa Cooke

Neil Corbould, VES

Irena Cronin

Paul Debevec, VES

Debbie Denise

Karen Dufilho

Paul Franklin

David Johnson, VES

Jim Morris, VES

Dennis Muren, ASC, VES

Sam Nicholson, ASC

Lori H. Schwartz

Eric Roth

VISUAL EFFECTS SOCIETY

Nancy Ward, Executive Director

VES BOARD OF DIRECTORS

OFFICERS

Lisa Cooke, Chair

Susan O’Neal, 1st Vice Chair

David Tanaka, VES, 2nd Vice Chair

Rita Cahill, Secretary

Jeffrey A. Okun, VES, Treasurer

DIRECTORS

Neishaw Ali, Laurie Blavin, Kathryn Brillhart

Colin Campbell, Nicolas Casanova

Mike Chambers, VES, Kim Davidson

Michael Fink, VES, Gavin Graham

Dennis Hoffman, Brooke Lyndon-Stanford

Arnon Manor, Andres Martinez

Karen Murphy, Maggie Oh, Jim Rygiel

Suhit Saha, Lisa Sepp-Wilson

Richard Winn Taylor II, VES

David Valentin, Bill Villarreal

Joe Weidenbach, Rebecca West

Philipp Wolf, Susan Zwerman, VES

Andrew Bly, Johnny Han, Adam Howard

Tim McLaughlin, Robin Prybil

Daniel Rosen, Dane Smith

Visual Effects Society

5805 Sepulveda Blvd., Suite 620

Sherman Oaks, CA 91411

Phone: (818) 981-7861

vesglobal.org

VES STAFF

Jim Sullivan, Director of Operations

Ben Schneider, Director of Membership Services

Charles Mesa, Media & Content Manager

Colleen Kelly, Office Manager

Brynn Hinnant, Administrative Assistant

Shannon Cassidy, Global Coordinator

P.J. Schumacher, Controller

Naomi Goldman, Public Relations

Tom Atkin, Founder

Allen Battino, VES Logo Design

At the 74th Primetime Emmys, The Book of Boba Fett scooped Outstanding Special Visual Effects in a Season or Movie, with The Mandalorian taking home the award the previous year. It remains to be seen whether the much-anticipated third season of The Mandalorian will take home the award after successive wins for the Star Wars universe. In the Outstanding Special Visual Effects in a Single Episode category, Squid Game won for the “VIPS” episode. With several new productions and more prequels and sequels from acclaimed trilogies and series such as The Lord of the Rings, Vikings and Game of Thrones, as well as an Addams Family spin-off, it has been an excellent year for visual effects. It will undoubtedly be a close race at this year’s awards.

At the 2023 VES Awards, The Rings of Power garnered three awards for the episodes “Adar” and “Udun.” “Rodeo did 580 shots on the series,” says Visual Effects Supervisor Ara Khanikian. “I associate Lord of the Rings with epic landscapes, so having a hand in that was really interesting for us, in terms of classic matte paintings and CG environments. We had a lot of fun with the Harfoots, with the scale comps and just kind of playing with the scale of the smaller species versus human size. We had all the work surrounding the stranger as well. That started with him crash-landing in Middle Earth where he creates a crater of fire, and that involved some very complex work. We had a lot of very complex effects of the stranger not being in control of his powers and just seeing how his powers interact with the environment for the first time.”

“The most challenging sequence in terms of scope was definitely the one from the final episode with the battle between the mystics because of how much had to happen in it,” adds Rodeo FX Effects Supervisor Nathan Arbuckle. “We had all of the nature effects such as leaves and trees and power from the stranger. We also had all of the fire that the mystics were going to put in there. We had all the celestial energy, and we had to have the wraith look as well when the stranger hits them with the celestial energy. The final episode involved a full CG forest build and doing lots of simulations for all of the CG plants as well as the effects between the stranger and the mystics. Then, of course, having the mystics get blasted away and turned into moths. We also did the Morgul blade effect, the glowing sword that builds out of a dark energy.”

The Netflix series Wednesday features an array of visual effects from fantastical monsters to supernatural abilities. The Rocket Science visual effects team delivered more than 300 shots across the series, with the majority of the scope of work focused on Thing, along with Wednesday’s Scorpio-Pet, digital doubles, dynamic CG fire, explosions and FX simulations with additional supporting visual effects. “Tom Turnbull, Rocket Science President/VFX Supervisor, was very clear that the visual effects needed to be grounded in reality while set in this fantastical world,” says Visual Effects Supervisor John Coldrick. “As good as the miming of a disembodied hand was, there were shots where issues such as incorrect center of gravity and appearances of hovering over the ground would crop up. While the motion of a hand with no arm was magical, it still had to obey the laws of physics. This would sometimes involve altering the fingers so they connected with the ground during runs and gallops, removing sliding and altering axes of rotation. The goal was to make it difficult to imagine a ghostly arm on the end of that wrist, and make Thing live in a natural way.”

“The biggest challenge was to seamlessly integrate Thing into each shot, regardless of any obstacles on location, set and time of day,” Coldrick adds. “The disembodied hand needed to interact with the surrounding environment as well as with other actors, imparting its own personality and performance. Production landed on a combination of hand-acting from Victor Dorobantu dressed in a blue suit, and prosthetics to add the stump to his real hand, and the Rocket Science visual effects team seamlessly integrating Thing into frame. The RS VFX team created a partial rig consisting of the wrist and the hand down to the finger base. This was tracked onto the stunt performer in the plate. We often had to tweak the wrist performance to create the illusion of a bodiless hand.”

“Realizing Thing was more challenging than it looks,” explains Kayden Anderson, Rocket Science Visual Effects Producer. “For many of the complex shots, we had to troubleshoot to devise unique solutions. Maintaining the actor’s hand performance and recapturing the environment with fidelity was not a one-size-fits-all scenario.”

MARZ Visual Effects Supervisor Ed Englander also worked on the series. “We did a couple of sequences of shots with Uncle Fester, when he first runs into Wednesday out in the forest and she tries to draw a sword on him and he shocks her,” Englander remarks. “There were a couple of shots when Fester is resuscitating Thing, and we did some of the electricity effects there as well. One of the larger shots we had to do was an environment shot, which was an aerial drone shot of Jericho. It was a wide shot of the whole town and you can see across the river. We had to fill in the backsides of many of the buildings on the main street because outside of this one shot, you never see the whole town. It was all standard fronts and facades, and we had to build out the backs of those and do a bit of aesthetic modification on some of the edges of the town. There were houses in rural residential areas that extended out past the main street which we had to add as well. They wanted it to feel like one of those fall postcards for anywhere out in Vermont. I believe the entire production was filmed in Romania, but we had a lot of reference footage, and you can’t do a Google search of Vermont without autumn trees showing up everywhere. There were plenty of good bits of material to flesh that out.”

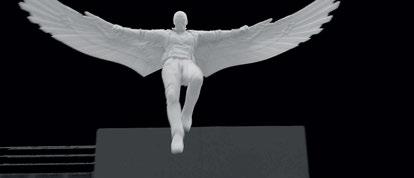

Another strong contender is the fantasy drama series The Sandman. Based on Neil Gaiman’s original comic book, The Sandman follows Dream as he embarks on a journey after years of imprisonment in order to regain his power. “The team and I at Union VFX worked on the show for over a year and delivered over 300 shots spread across all 11 episodes of Season 1,” explains Visual Effects Supervisor Dillan Nicholls. “Bringing this rich, complex and much-loved series of comics to the screen was always going to be a challenge and open to interpretation. We read the comics and tried to immerse ourselves in the world of The Sandman as much as possible in advance of joining the project. Initial discussions focused on some key sequences and early concepts from the Warner Bros. team, establishing what kind of aesthetic we were looking for and the level of realism of different sequences as the more abstract, surreal world of ‘the dreaming’ crosses over into the real world.”

“Some of the work had a very open brief, particularly for sequences taking place in the world of dreams, or ‘the dreaming,’” Nicholls adds. “We were encouraged to be creative, using reference from the comics as a starting point, but with freedom to experiment with different techniques and aesthetics to achieve surreal and abstract results. On the other hand, some of the work was very much grounded in reality and required fairly traditional ‘invisible’ effects work such as set extensions and greenscreens. Often in the show, the two worlds of the dreaming and the waking meet and we would create fairly traditional, realistic-looking VFX shots, but with something not quite right, a surreal twist.”

Discussing the most challenging effect to realize on the series, Nicholls notes that the views across the Thames by the tavern in Episode 6 were shot against greenscreens and required extensive full CG/DMP environments and a full CG tavern. “These scenes took place in the ‘real world’ in London but across dramatically different time periods, across 600 years, and required a lot of research as well as an evolving CG build of the tavern through the ages. We needed to maintain a balance between making it recognizably the same location – a crucial story point – while also showing that area of London evolved over hundreds of years, from green fields to present-day Canary Wharf,” he says.

Five Days at Memorial won Outstanding Supporting Visual Effects in a Photoreal Episode at this year’s VES Awards. “In total, UPP delivered 265 shots for this project,” says Visual Effects Supervisor Viktor Muller. “As on similar projects of this kind, it was desirable that the visual effects weren’t apparent at first sight. If a shot revealed itself as a VFX one, it would, in fact, be incorrectly done. For us, the biggest challenge was creating the New Orleans Superdome, where we were depicting the battle with the hurricane. The most demanding aspect of this shot was figuring out how to approach it so that it looked as real as possible but simultaneously remained visually attractive and interesting for the audience. In other words, as on the basis of real references hardly anything would be visible, we needed to find the balance between making sure that the viewers were able to see something and keeping the shot realistic.”

Set nearly 200 years before the events of Game of Thrones, House of the Dragon depicts the events leading up to the Dance of the Dragons. Visual Effects Supervisor Angus Bickerton and his visual effects team picked up a nomination at the 21st VES Awards for their work on “The Black Queen” episode. Contrastingly, Vikings: Valhalla, in its second season, is set 100 years after the events of Vikings. Valhalla was nominated at last year’s awards for Outstanding Visual Effects in a Single Episode for “Bridge,” with the original series having been awarded Outstanding Special Visual Effects in a Supporting Role at the 72nd Primetime Emmy Awards. Valhalla could find itself among the nominees once again after a strong start to the second season.

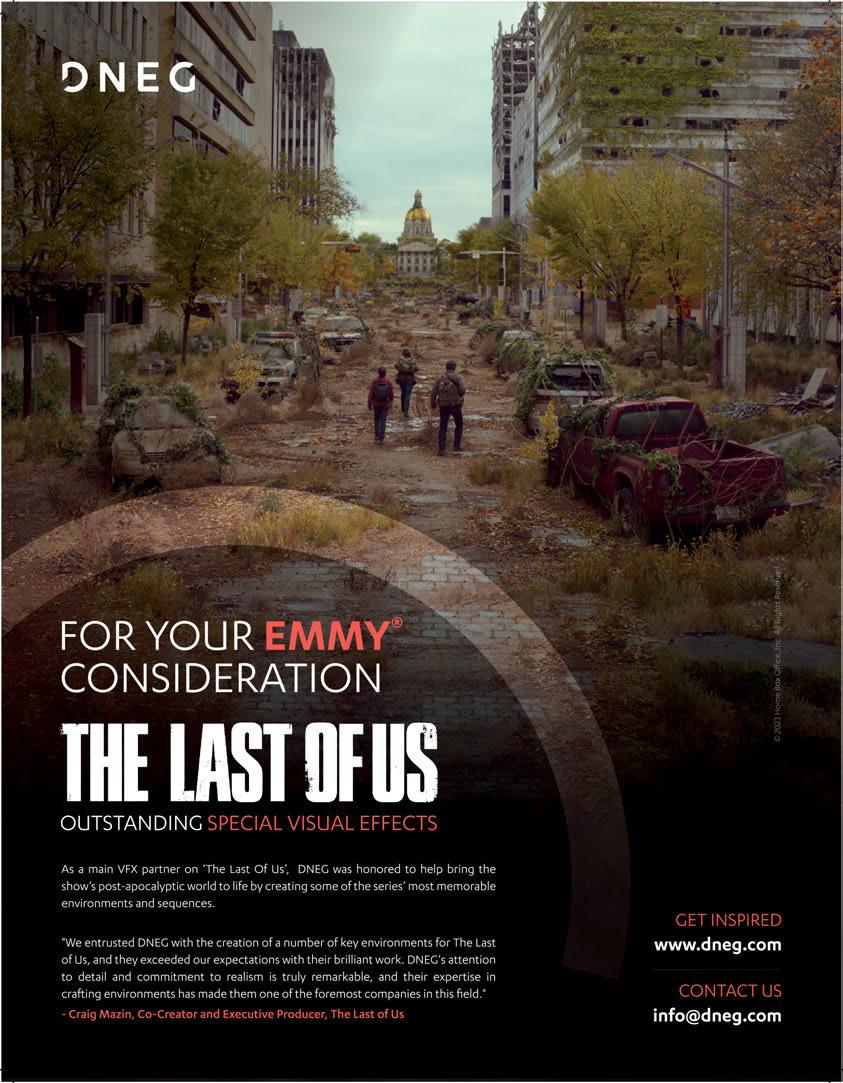

New to the mix is the post-apocalyptic drama, The Last of Us, which masterfully brings the video game to life. Adapted by the game’s creator, Neil Druckmann, and Chernobyl creator Craig Mazin, The Last of Us follows the hardened, middle-aged Joel, who is tasked with escorting 14-year-old Ellie across a treacherous and barren America in what may be the final hope for the survival of humanity. Another potential newcomer in the running for a nomination is Amazon Prime’s Dead Ringers. Based on David Cronenberg’s 1988 horror classic, Dead Ringers is centered around twin gynaecologists (portrayed by Rachel Weisz) in a gender-flipped version of the

When the winners of the 75th Primetime Emmy Awards are announced on September 18, it will certainly be a close race. Whatever the outcome, the visual effects work over the course of the last year has been nothing short of remarkable. Visual effects have played a central role in some of the biggest series released this year, and each of the series mentioned here can be extremely proud of its groundbreaking and beautifully crafted work.

The VFX boom goes on, and it is a shared surge. There has been continued growth in the visual effects business, with vendors from many different countries working together on movies and series. And often the individual VFX companies themselves have multiple facilities located around the world, including ILM, Wētā FX, DNEG, BOT VFX, Technicolor Creative Services, Streamland Media, Pixomondo, Digital Domain, Outpost VFX and Framestore. The collaborations range across North America, Europe, Australia, New Zealand and South and East Asia, with more participation on the horizon from emergent visual effects houses in Latin America and Africa as well. Consequently, great opportunities and new challenges have emerged with the increasing cooperation between geographically distant studios.

TOP: Method Studios and MPC did some heavy lifting on Top Gun: Maverick, assisted by Lola VFX, BLIND LTD, Intelligent Species and Gentle Giant Studios. (Image courtesy of Paramount Pictures and ViacomCBS Inc.)

OPPOSITE TOP TO BOTTOM: The Indian Hindi-language fantasy-adventure film Brahmāstra: Part One – Shiva is an example of an “in-house two-vendor” solution at scale. In this case, multiple facilities of sister companies ReDefine and DNEG delivered over 4,000 VFX shots for the epic film. (Image courtesy of DNEG and Dharma Productions)

Framestore was the lead VFX vendor on Fantastic Beasts: The Secrets of Dumbledore and was joined by Rodeo FX, Digital Domain, Image Engine, One of Us, Raynault VFX, Clear Angle Studios and RISE Visual Effects Studios. (Image courtesy of Warner Bros. Pictures)

Wētā Digital and ILM led the way with The Lord of the Rings: The Rings of Power, collaborating with Rodeo FX, Cause and FX, Method Studios, DNEG, Outpost VFX, The Third Floor, Rising Sun Pictures, Atomic Arts and Cantina Creative. (Image courtesy of Amazon Studios)

Says Jeanie King, ILM’s Vice President of Production, “The entertainment industry is far more globalized than it has ever been in the past, and it is to everyone’s advantage to spread the work. It gives us all more capacity to get all the work done. Also, we are able to access more talent in different regions, which benefits everyone. Effect vendors and clients alike need to be more flexible and organized now because everyone is spread [over] more time zones.”

King adds, “Due to the high volume of projects requiring VFX throughout the industry, studios have had to spread the work around. Many projects have had 10 to 20 VFX studios involved. Vendors need to have a diversified portfolio as do the studios/clients in order to protect themselves for their deliveries.”

“On Marvel Studios’ Doctor Strange in the Multiverse of Madness, we worked with multiple vendors sharing assets, environments and FX in order to complete the work on shared sequences. This was also true on Marvel Studios’ Black Panther: Wakanda Forever,” remarks King, who adds, “Most recently, on Avatar: The Way of Water, ILM collaborated with Wētā FX, which had created the majority of assets for the show. So, we were ingesting and manipulating all of that data into our pipeline so we could work efficiently on the sequences we were contracted to create.”

On The Lord of the Rings: The Rings of Power, ILM and Wētā also collaborated with a stellar group of VFX studios that included Rodeo FX, Method Studios, DNEG, Outpost VFX and Rising Sun Pictures. King sees the movement of the work around the globe as an advantage. “The sharing of work between companies has become easier due to increased standardization in process and technology,” King comments. “Companies have had to evolve as more of the studios engage multiple vendors on one project. Vendors are partnering with each other more than ever before. We all want to get the work done as efficiently as possible. It’s a competitive market out there, and each vendor wants to make sure they are working as productively as possible.”

Using multiple vendors is about resource availability and identifying the right artists for the right work, according to Patrick Davenport, President of Ghost VFX. “We’re very much a global industry now.” He feels that the increasing spread of VFX work around the planet has been an inevitable process. “The industry has been headed in this direction for some time. The work follows artist resources, tax incentives, etc. And now with work from home and hybrid models, it allows artists to work anywhere, anytime.”

Davenport continues, “From a client perspective, it’s about getting the work done on time, within budget, and mitigating the risk of having all your work with one sole vendor, which may end up struggling to deliver. From our perspective, by having a global studio it allows us to operate 24/7 and identify talent in different locations that are best suited to the work.”

A scenario of multiple VFX houses on bigger shows “can enhance important creative factors that come from having different supervisors and production teams with different strengths and specialisms overlooking different sequences on a show,” affirms Rohan Desai, Managing Director of ReDefine, part of the DNEG group.

There can also be downsides. Desai explains, “It can add overhead in terms of managing multiple vendors. Asset sharing is also a hurdle with increased costs. This can create additional work for vendors when they are required to use assets made by other vendors as there can be duplication of effort. Finally, consistency in look can be an additional challenge as each show has a certain aesthetic, and this needs to be matched by all vendors. Different teams may approach aesthetics differently and this can result in inconsistencies.”

To achieve a high standard across all sequences and studios, “I have found that communication and collaboration is the best way to make this work,” comments Christian Manz, Framestore’s Creative Director, Film. “I have had as many as five companies working on a shot/sequence in the past, and in that instance I brought all of the key supervisors together to discuss approach and kept them communicating with each other. I also find that by showing early WIP as soon as possible to the filmmakers and [having] frequent reviews keep the work on track to a fantastic, consistent final result.”

Some recent multi-vendor projects led by Framestore include Fantastic Beasts: The Secrets of Dumbledore (for which Manz served as Production VFX Supervisor), His Dark Materials Season 3 and 1899 (these were almost entirely Framestore, but involved multi-site work across the firm’s London, Montreal, Mumbai, Vancouver and New York studios), Top Gun: Maverick (completed as Method Studios, now part of Framestore) and The Wheel of Time Season 2.

Jabbar Raisani was a VFX Supervisor on Stranger Things Season 4, which he says used more than two dozen studios from around the world. Raisani comments, “Stranger Things S4 was challenging due to the high shot count, high complexity and short delivery schedule. We made it work by spreading the work over numerous vendors in order to maximize throughput.” The VFX studios involved included Rodeo FX, Important Looking Pirates (ILP), Digital Domain, DNEG, Lola VFX, Crafty Apes and Scanline VFX, among others.

Raisani also points to the tools that helped the studios communicate. “One piece of software we used extensively was SyncSketch, which allowed us to visually communicate notes to vendors in an interactive setting. SyncSketch uses cloud-based technology and our VFX team collaborated daily using shared Google documents. We also used Evercast for daily remote VFX reviews and PacPost.live for editorial reviews.”

The sharing of assets between VFX studios is often a challenge. “I think it is getting better, but it is still challenging,” comments Niklas Jacobson, VFX Supervisor and Co-Founder of ILP. “Different companies have their own toolsets and workflows, and many companies use proprietary tools. In some cases, there could also be licensing issues due to the use of third-party texture or model services.”

Jacobson notes, “Even with different techniques, there are some generally accepted techniques for texture channels and shaders in particular that all the big vendors especially converge towards. With the development of standards like USD and MaterialX, and as they become widely adopted, hopefully sharing will be easier.”

Sharing work can also be quite challenging from the bidding side. Explains Jacobson, “Clients and vendors will want to be as efficient as they can, but in particular sharing hero assets means that one vendor will generally not be able to anticipate the requirements of an asset across other vendors’ sequences. This tends to leave both parties guessing a bit at the planning stage and definitely requires some out-of-the-box thinking.”

ILP has had positive experiences working with its peers and colleagues. Jacobson observes, “We try our best to be good creative partners with everyone we work with, and it’s satisfying to feel that the vast majority of vendors we work with tend to have this stance as well. Stranger Things S4 is a great example – the season finale was such a massive episode and had to be split between vendors. We did a sequence featuring the Demogorgon, a creature which was created by Rodeo and even featured in an earlier episode in the same environment and lighting conditions. Our collaboration with Rodeo was very smooth, and we had no trouble ingesting their creature into our pipeline.”

Pixomondo and various other vendors worked together on the Star Trek: Strange New Worlds series. Pixomondo Virtual Production and Visual Effects Supervisor Nathaniel Larouche notes, “Vendors have been able to coordinate their efforts through the use of a variety of software and hardware solutions. At the top of this list is Unreal Engine, an industry-leading 3D game engine with powerful tools for creating realistic, high-fidelity 3D environments. As well as enabling vendors to create detailed and realistic scenes, Unreal also features tools which allow vendors to quickly and easily collaborate on a project in real-time. Furthermore, Unreal’s system allows for cross-platform compatibility across platforms such as PC and Mac.”

Continues Larouche, “In addition to Unreal Engine, Arnold Rendering is another popular software option among vendors. By using Arnold’s advanced ray-tracing capabilities, 3D artists can achieve incredibly lifelike images without having to spend much time tweaking lighting and textures. Additionally, Arnold supports common image processing formats such as OpenEXR and HDR (High Dynamic Range) formats which allow for greater flexibility when it comes to sharing shots between vendors.”

Hardware also plays an important role in helping vendors coordinate their efforts, according to Larouche. “For example,” he says, “computers that are powerful enough to run both Unreal Engine and Arnold Rendering are essential for ensuring smooth collaboration between different teams working on the same project. High-end graphics cards are also beneficial in providing faster rendering times, which can help reduce wasted time spent waiting for updates from other parties involved in the project.”

From the Willow series on Disney+. ILM and Hybride supplied the visual effects along with ILP, Image Engine, Luma Pictures, SSVFX, Creative Outpost, Misc Studios, Midas VFX, Ombrium and The Third Floor.

(Image courtesy of Disney+)

The Cloud is playing an increasing role in helping VFX studios interact. “The Cloud has had a significant impact on VFX so far; it enables storage and backup of assets in a secure environment that can be accessed by multiple users at any time,” Larouche explains. “It also allows for real-time sharing of files and data between members of a production team, allowing them to work more quickly and efficiently.”

The Cloud offers other advantages as well. Larouche comments, “Cloud-based solutions provide scalability options tailored to specific teams or businesses’ needs. For example, if a vendor’s workload grows unexpectedly over time, they can easily scale up their storage on the Cloud without needing to purchase additional hardware or software licenses.”

Continues Larouche, “Recently emerging technologies in the field are further enhancing our collaborative capabilities when it comes to virtual production pipelines. In particular, cloud-based platforms such as Shotgun allow studios to track progress across all departments in real-time while providing customizable tools like asset management and review functionality that help streamline processes, while maintaining complete visibility into projects at all times. This allows vendors greater control over their workflow while cutting down costs associated with manual processes that tend to slow down progress significantly when attempting larger productions spanning various remote locations.”

States ILM’s King, “Even though pipelines may be different from company to company – because we have had these conversations over the years and work has passed back and forth – we have developed in-house tools to make it easier. Also, due to the remote work situation that many VFX studios are still working in, more people are able to connect via video conferencing, which makes the entire process more efficient, productive and also personal.”

Clients are more open now to vendors talking between themselves than ever before. Comments King, “Because we have been sharing work over the years, conversations have taken place and processes have developed to make ingesting work easier. Also, relationships have formed. Supervisors and artists have worked together at the same facilities and friendships are made, which makes it easier to discuss workflows and have much more helpful, in-depth conversations.”


By TREVOR HOGG


TOP: Gulliver Studios was responsible for extending more than 10 environments which included six unique game settings for Squid Game. (Image courtesy of Netflix)

OPPOSITE TOP TO BOTTOM: No practical location could be found for the Moon, so a set and LED wall were utilized for The Silent Sea. (Image courtesy of Netflix)

The communication tower that team leader Han Yoon-jae (Gong Yoo) climbs and falls from had to be extended in CG. (Image courtesy of Netflix)

Practical gimbals were combined with CG augmentation provided by Westworld to get the required interaction for The Silent Sea. (Image courtesy of Netflix)

Breaking out in a big way to showcase the digital artistry of the visual effects industry in South Korea was Netflix series Squid Game, which won the 2022 Primetime Emmy for Outstanding Special Visual Effects in a Single Episode and received a VES Awards nomination for Outstanding Supporting Visual Effects in a Photoreal Episode for Episode 107 titled “VIPS.” “The Korean visual effects industry has evolved drastically in the past 10 years from simple comp tasks to complex shots, which involves various 3D skills and technologies,” states Moon Jung Kang, VFX Supervisor at Gulliver Studios. “Previously, Korean filmmakers tried to avoid too much of digital augmentation because they were not sure of Korean visual effects quality. But as Korean content has gained global popularity, filmmakers started trying more diverse genres and Korean visual effects industry also evolved. In these days, it’s easy to find heavy visual effects shots in Korean content.”

Kang was a member of the Primetime Emmy Award-winning team for Squid Game, as Gulliver Studios was the sole vendor. “We had about 2,000 shots [for nine episodes], and the post-production period was about nine months.” Squid Game deals with human and social problems through classic Korean children’s games. Remarks Kang, “In the earlier stage, there was a concern that these games were unique to Korea and the gameplay was too simple. In general, the visual effects environment work is mostly focused on realism, but in the case of Squid Game it was necessary to express the feeling of a realistic, yet artificially created set at the same time.” Most of the visual research was centered around environments. “In most cases, we research through images and clips from the real world, but in Squid Game we extended our research to classic paintings and illustrations. For the maze environment with a bunch of stairways, whose basic concept was surrealism, we actually referenced classic surrealism paintings and illustrations a lot during the asset and layout process,” adds Kang.

“In Squid Game, we had more than 10 environment extensions, not only the six unique game environments, but also a cave and an airport,” Kang notes. “Some game environments, such as the marbles game town, the playground and the dormitory, were large builds, and we extended mostly walls and ceilings. But the rest of the environments, such as the schoolyard, tug-of-war tower, circus tent and maze ways, were heavily extended and reconstructed in CG. The circus tent environment included three different set locations, the main glass bridge, the VIP room and the floor area, and we had to combine them all into one and seamlessly connect plates that were shot under different lighting conditions. Furthermore, the director wanted the circus tent lighting to be very dark overall with a hot spotlight on the bridge, but we had plates with huge fill light above the set. Interestingly, the sequence which gave us the hardest time brought us the honor of winning the Emmy Award.”

Every sequence had its own complex elements. “The piggy bank shots gave us the hardest time to execute,” Kang reveals. “It wasn’t because of the complexity of the shot solution, but because of getting the exact look of the piggy bank that the director wanted. It wasn’t just about making the material look realistic; we also had to emphasize the falling money inside the piggy bank.” The schoolyard sequence in Episode 101 is a personal favorite of Kang’s. “The schoolyard environment was the first space to show the boundary between real and fake space, and the practical set was mostly filled with blue matte. We didn’t have a final concept image, and whether the schoolyard should be treated as an indoor or outdoor space wasn’t decided at that time. Early in the process, we looked through various options and suggested an indoor space with a big opening on top and walls with a hand-painted touch. The key concept of Squid Game’s environments is the mix of real and fake space.”

Another major Korean Netflix series is The Silent Sea, which was originally a short film called The Sea of Tranquility by Choi Hang-yong. He subsequently expanded the sci-fi concept into eight episodes starring Bae Doona, Gong Yoo, Lee Joon, Kim Sun-young and Lee Moo-saeng. Key sequences had storyboards and previs. “They were used for planning the crash landing in Episode 101, Yunjae falling in Episode 103 and the appearance of Luna in Episode 105,” states Kim Shin-chul, VFX Supervisor at Westworld. “We used techviz for actual filming to make a filming plan, and used Ncam [virtual production] in the filming of the elevator fall scene while checking the appearance of the Balhae Lunar Research Station through a monitor.” A creative challenge was to convincingly convey what will happen in the future. Notes Kim, “It was fun to create the propellant that is in charge of fuel and how the lander docks with it. The structure of the docking station was created with the idea that it would be easy for passengers and astronauts to board for the Moon in an era of relatively easy travel. For walking on the Moon, it was difficult to interpret the reference material and the actual appearance in a cinematic way.”

No practical location could be found for the Moon. “A set and an LED wall were used,” Kim remarks. “The powerful directional sunlight had to be implemented with only a limited number of lights and without atmospheric scattering, but the position of the light could not be changed for each camera setup, so an LED wall was used to cover all angles. And the art terrain was filmed by changing only the position of the actor in one setup using the characteristic rock. Since LED wall shooting is still unfamiliar, we did R&D and tested it together.” The monochrome starkness of the lunar environment contrasts with the dystopian Earth. Adds Kim, “Due to temperature changes, the topography of the sea level changes, and yellow dust and green algae are frequent. Maritime workers lost their jobs and abandoned fishing tools and boats. In an earlier concept, we tried to show a corroded image of landmarks around the world, but it was deleted because it was judged to be an excessive expression of other cultures.”

Getting the proper performance for the reveal of Luna was critical. “The director thought a lot about the Luna character between an animal and an innocent child,” Kim explains. “To get the desired movement, a digital double was used for her first appearance. After that, it could be more relaxed, and the director let the actor act as much as possible. It seems that the writer paid attention to the conflict between characters and the narrative rather than the visual.” Many concepts were discussed for the Balhae Lunar Research Station. Notes Kim, “We decided on the location and width of the passage in detail based on the movements of the characters.” Yunjae falls while attempting to repair the communication tower. “In pre-production,” Kim adds, “we decided on the movement line with the action team through previs. However, it had to be expressed as a descent from a height of more than 35 meters, while the actual tower set was about five meters. In order to set the camera angle, Ncam was used so we could film while viewing the background, made in advance, on the monitor in real-time.” A dramatic moment is the flooding and destruction of the Balhae Lunar Research Station. “With the concept of being destroyed by the nature of water increasing indefinitely, the water was expressed with effects simulations and hallway miniatures, and the base was destroyed by freezing all of the outlets,” Kim says.

TOP: Graphical elements such as numbers were incorporated into the imagery to show the contestants realizing the type of game they are playing in Alice in Borderland. (Image courtesy of Megalis VFX)

MIDDLE AND BOTTOM: Not many public images exist of the Japanese Supreme Court, which made it a creative challenge for Megalis VFX to envision the interior of the building for Alice in Borderland. (Images courtesy of Megalis VFX)

SECOND FROM TOP: To create the impression that Tokyo is deserted, the famous Shibuya scramble intersection was recreated as part of a massive open set at Ashikaga City for Alice in Borderland. (Image courtesy of Megalis VFX and Netflix)

BOTTOM TWO: In order to better control the environment, the drive scenes for Babylon Berlin were shot against greenscreen with plates composited in later. (Images courtesy of RISE)

Japan has a small visual effects industry that does the vast majority of the digital augmentation on domestic projects. “You don’t see many companies here doing visual effects,” notes Jeffrey Dillinger, Head of CG at Megalis VFX. “In Japan, they haven’t pushed visual effects studios to get the quality of work like Wētā FX, but they haven’t had a project go overseas. They are fine with a stylized approach.” A lot of the initial work that the company got to do was effects simulations. Remarks Dillinger, “Oni: Thunder God’s Tale was our first full project. It was initially going to be stop-motion animation and we were going to do CG enhancements, but eventually they decided to do full CG because it would have taken years.”

Character designs had to be translated from 2D to 3D for the Netflix limited series, which consists of four episodes. “The most important thing we had to establish was the asset pipeline [Solaris, USD, Arnold] because we didn’t have one. We ended up doing more than 2,000 assets,” Dillinger adds. Most of the challenges were technical. “When we first decided to use Arnold and Solaris, you couldn’t render hair, and the most important thing for our characters is the hair. Our protagonist has an Afro, and the characters are meant to be made out of felt, which you can’t accomplish without having a layer of fuzz. We were able to accomplish quite a tactile feel where you can almost reach into the screen and touch these characters at times,” Dillinger says.

Getting to contribute to Season 2 of Alice in Borderland was an exciting opportunity for Megalis VFX. “It’s a good project. Season 1 was fun to watch, and you could tell creatively the director and DP were good,” Dillinger remarks. “In each episode there are these games that happen. In the case of Episode 206, if people lose the game, acid falls on them and they melt. On set, instead of acid, they used water and smoke, which makes sense, but when water hits somebody it behaves differently than acid. Usually, acid has more of a yellowish tint to it, so they’re trying to give it a little bit of that without going over the top. In a lot of those cases, it was a static camera and body, so it was a lot of 2D work to put on top of the skin.” Alice in Borderland does not hold back on the blood and gore. “On set, they actually built a maquette of what a human looks like after acid has been dropped on them. We didn’t use it per se, but that ended up being concept art for us,” Dillinger notes. The game takes place at the Japanese Supreme Court. “Apparently, you cannot shoot there, and on Google we only found three photos that show the interior. It’s an artistically interesting building and has these concentric circles that rise up like an upside-down funnel. We spent a lot of time trying to stay true to it for those who might have seen it in person. There are also a lot of graphical elements, like numbers in the air, which is a visual, stylistic way of showing the contestants realizing the type of game that they’re playing.”

Entering into its fourth season on Netflix is Babylon Berlin, which has visual effects produced by RISE Visual Effects Studios. “There was no German series before featuring so many visual effects shots,” observes Robert Pinnow, Managing Director and VFX Supervisor at RISE Visual Effects Studios. “In the first season, we delivered over 830 and worked on over 900, which at that point was an insane amount. There were approximately 50 full CG shots. The way that we suggested to use visual effects was new [in Germany] but quite common in the U.S. market, which was, ‘Don’t solve it conventionally by putting something there and still not have a right image. Just shoot it, we’re going to roto and exchange the whole background rather than just that little antenna.’ The freedom they had was new for German visual effects.” Quite a few Berlin landmarks make an appearance. Comments Pinnow, “We rebuilt the Alexanderplatz correctly because they were insisting on shooting there, but it has a tiled ground that didn’t exist back in the 1930s. The residential areas are made up. Some of it got shot in the city, especially things like the railway station, and others got shot in the backlot at Babelsberg Studio.” For a full year, the entire production office was put into a former federal building that was supposed to be redone. “Shooting took place on one level within that building for all of the interior sets and police department. For Season 2, the production office had to move to another one, and now they’re in a former school.”

Every episode is directed by creators Henk Handloegten, Achim von Borries and Tom Tykwer. “One was shooting in one location, no matter what episode it ended up in, and everyone else had to follow it,” Pinnow explains. “Sometimes, someone had 50% on an episode and on another 10%. The three of them were doing it together.” The trio had to approve the visual effects work. “That was interesting, indeed,” Pinnow observes. “One was like, ‘It looks good.’ The other one was like, ‘It doesn’t fit my needs. It could be this or that way.’ And the third one, Tom, was straightforward and had everything in context.” During the early days of Babylon Berlin, the decision was made to support all of the driving sequences with digital backgrounds. Recalls Pinnow, “They could drive the whole city day and night. The background plates in the streets wouldn’t have been usable anyway because of modern elements. The buildings we made for that were the key to designing the streets that were backgrounds for normal shots. That then became part of the concept process. We delivered a turntable of every house. In this way, they could grab one frame that was in the right perspective, put it together in Photoshop and send it back to us. On Season 2, we did it ourselves because they trusted us. There was not much concept art unless it was something specific.”

It is important that visual effects are applied constructively. Concludes Pinnow, “There were a few shots where one of the directors said, ‘If we had known how good they looked, we would have used more of them.’ And the other one said, ‘I like that the good and great shots in this sequence are a side effect.’ You need the visual effects to show the sequence, but it’s not on the eye. It’s helping the storytelling.”

At the age of seven, Dennis Muren, VES, was taken by his mother to see The Beast from 20,000 Fathoms and The War of the Worlds and was subsequently driven to create cinematic spectacles that did not exist in the real world of La Cañada, California, where he grew up. In an interesting career twist, the awestruck child would go on to help remake the H.G. Wells alien invasion classic as Steven Spielberg’s post-9/11 take on the story, and in the process added to a tally that includes 13 Academy Award nominations and six Oscar wins, as well as two Special Achievement Awards and one Technical Achievement Award. Even though essentially retired after a half century in the visual effects industry, Muren is a Consulting Creative Director at Industrial Light & Magic and on the Advisory Board of VFX Voice, and, more importantly, has retained his childhood fascination with creating believable and narrative effects.

What started with still photographs of toy dinosaurs after seeing King Kong graduated into motion pictures captured on 8mm and 16mm film stock, with which the teenager would attempt to recreate his favorite movie moments. “It could be a copy of The 7th Voyage of Sinbad,” Muren recalls. “I wanted to bring those experiences back home. It was interesting seeing The Fabelmans because I had forgotten about this completely, [but] the only way we could see it was, you had to find a dark place in your house to look at it. Steven had a closet, which he shows in The Fabelmans. I had a hallway with doors, and you could close all of the doors and you were in the dark. It’s amazing that we all have this shared experience. Steven had never talked about that.”

Images courtesy of Dennis Muren and Lucasfilm Ltd.

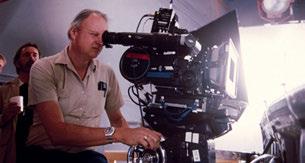

TOP: Dennis Muren, VES

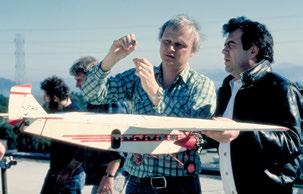

OPPOSITE LEFT TO RIGHT: Visual Effects Art Director Joe Johnston, Special Visual Effects Supervisor Richard Edlund, VES and Dennis Muren, VES have a discussion with filmmaker Irvin Kershner about The Empire Strikes Back, with concept art for Cloud City in the background.

Phil Tippett, VES, and Muren on a go-motion set for Dragonslayer, with the technique winning a Technical Achievement Award at the 54th Academy Awards in 1982.

Muren was responsible for the digital character supervision on Casper (1995).

Muren is unfazed by an AT-AT Walker appearing in front of him.

When tasked to incorporate a tauntaun into the aerial photography for The Empire Strikes Back, Muren realized that there was never only one solution.

To achieve the dynamic speeder bike chase in Return of the Jedi, Muren insisted that the plate photography be shot by a Steadicam in an actual forest.

It was inevitable that The Equinox would get made. “I had completed my first year of college and didn’t want to make another short film during the summer vacation. I just wanted to make a feature film,” Muren recounts. “I realized that Ray Harryhausen’s films are like five sequences that are effects and he finds a writer to fill it all in. I had my friend Dave Allen, a stop-motion guy, and Jim Danforth who could do some stuff. Then I did my stuff. We came up with three sequences that I wanted to do – one stop motion, one forced perspective – and Dave Allen had this puppet we could use. Then we find some actors. Simple. I had $3,500 that my grandfather had saved up for me to go to USC; however, my grades weren’t good enough to go there. I totalled it all up. Shooting 16mm on my Bolex, we did it the French way, which is silent and incredibly cheap, because then you put the sound in later and that cuts the price down to 10% of what it would have been. I knew it would work and was actually surprised that we sold the movie.”

Getting work in visual effects was not an easy task as the industry was still in its infancy and opportunities were scarce. “I gave up at one point and was going to be an inhalation therapist, which was something I figured I could do,” Muren reveals. “There were no effects movies being made. I got a little work at a union house called Cascade doing commercials for the Pillsbury Doughboy, Jolly Green Giant and Alka-Seltzer. Phil Tippett, VES, John Berg, Dave Allen, Jim Danforth and I were there at that time. It’s important to find your people who like the same things and you learn from each other. Most of us are still really good friends.” Muren pitched himself as a visual effects cameraman. “I never thought of myself as a cameraman, but I could always look at movies and ask, ‘Why is it shot this way? Why didn’t they fix that? It’s obviously wrong.’ If I was doing this, I would make sure that I was the cameraman because he’s the guy with the button, and if it’s not right I’m not pushing it. Which isn’t really the way it was, but it kind of has been that way.”

Everything changed when filmmaker George Lucas established Industrial Light & Magic to produce the visual effects for his space opera Star Wars. “I heard that George was doing some sort of effects film, but I didn’t know any of the people working on it or what it was about,” Muren remarks. “I said, ‘I have to get on this show because I want to understand what this stuff is and how it works.’ I got the number of [ILM Co-Founder/Visual Effects Artist] Robert Blalack, called him up and had an interview. [VFX/SFX Supervisor] John Dykstra thought that my understanding of stop motion would be applicable to this slow camera motion-control stuff that he envisioned for Star Wars, where a motor would take a minute to go through motions, and you would speed it up and slow it down, and when you played it back over four seconds it would look like it was flying accurately. He was right. I could give personality to the ships by having them bank and skid, which I thought added a fun factor to the movie.”

Close Encounters of the Third Kind and the end sequence with the mothership was the next project. “I loved working with George and wanted to see what Steven Spielberg was like, and I admired Douglas Trumbull, VES,” Muren remarks. “I made sure that the shots got finished in those five months, and they all worked, looked good and matched the others. We were dealing with light, smoke and mist instead of the hardware, speed and energy of Star Wars. Both sides of my brain got boosted in a period of a little more than a year to a new way of looking at things.” An important lesson was learned when Lucas asked him to insert a tauntaun into one of the opening helicopter shots in The Empire Strikes Back. “I said, ‘There is no way to track those moves because we don’t have any recording of it. It’s all white down there. It’s going to look fake. We should build this as a big model.’ George said, ‘Just think about it.’ And he walked out. Within 15 minutes I had figured out how to do it. I learned so much from that moment. There are many ways you can do everything, but there are usually a few ways that are the best, and something that only gets you 85% there. Then there is another way to tweak that for the 15% to make it look like it’s 100%.”

For Muren, the real breakthrough for digital effects was Terminator 2: Judgment Day. “CG was something I’d been looking into at ILM since 1983 or 1984. It was always a puzzle and a possibility. We did it, but it was always expensive. It wasn’t until Terminator 2: Judgment Day that we figured out how to make that as something which is repeatable and affordable with a department that could do it.” A dramatic moment is when the liquid metal T-1000 transforms and, while passing through metal bars, is temporarily stopped by the gun he is carrying. “Not only that, the room is quiet, so when that gun hits the bar it’s really loud. James Cameron is thinking it through so deeply and does that all the way through. The guy is great.” The studio reaction was surprising. “I was expecting Hollywood to go nuts, and they were like, ‘That was interesting.’ They didn’t know what they were seeing until Jurassic Park and dinosaurs. Everybody loves dinosaurs!”

Visual effects have become a standard filmmaking tool from indie productions to Hollywood blockbusters, which raises the question: can you have too much of a good thing? “I would certainly say so,” answers Muren. “A lot of the stuff looks fake and the audience doesn’t seem to care. TV shows can have 100 shots in them. It looks like it’s shot in old Chicago but is really not shot there at all. But we fixed the buildings up and matted people into the settings. That’s great. It’s invisible. However, when you get into this chaotic action where they throw out any sort of thought of gravity, I get bothered by that.” That is not to say that there aren’t great current examples of effects utilized wisely. “The thing that they did so well in Top Gun: Maverick is that they started with the correct initial elements, which was actually having actors in the planes flying out there; they’re really doing it, so there’s lots of surprise and lots of things you don’t see when you’re onstage.” A personal favorite is Bardo by Alejandro G. Iñárritu. “That film just knocks me out. All of the stuff that he is trying to tell is in the effects. The effects aren’t like a storyboard that an effects guy brought to life. No, it’s all emotion that has been revealed at the pacing desired by the director. You have to get into the director’s head and figure out what he is trying to do. That guy is great.”

LEFT TO RIGHT: There are times when it is appropriate to heighten the reality of a shot to get the desired emotional moment, which is something that Muren did for E.T. the Extra-Terrestrial.

As with Close Encounters of the Third Kind, Muren got to shoot a dramatic sequence with an alien spaceship for E.T. the Extra-Terrestrial.

Whereas Muren viewed Terminator 2: Judgment Day as the big CG-character breakthrough, it wasn’t until dinosaurs got resurrected in Jurassic Park that Hollywood finally took notice.

A love for stop-motion animation helped to forge a lifelong friendship between Muren and Phil Tippett.

A miniature airplane was used for an aerial shot overseen by Muren for Indiana Jones and the Temple of Doom.

Despite being a digital innovator, Muren has not lost his enthusiasm for in-camera effects, as was the case with A.I. Artificial Intelligence.

Getting up close and personal with the Rancor from Return of the Jedi.

Technology is continually evolving. “I don’t know where AI is going to go, but it sure is fascinating,” Muren states. “Gaming has surely influenced the film industry, and the films have certainly influenced gaming. To think at one time there were no computers and visualization except for pie charts and text. Now they’re all affecting everything else. With AI you can create images in a movie that are synthetic, and the gaming industry can figure out ways to do it in real-time interactively, which is phenomenal. Now we have something coming up that is going to be able to decide what image we want to see and don’t want to see, or look at it 300 different ways based on whatever criteria we can give the AI. What I love is it’s almost out of the lab and schools and into the homes. Once you get people in their homes doing stuff, they’re going to come up with ideas that no one else has thought of. However, people are worried about it for rightful reasons. There have to be checks and balances.”

The imagery should be driven by the story, not the other way around. “It’s about the movie, it’s not the shot you’re doing,” Muren observes. “How does it fit in? What is the emotion of it? For the shot of the kids on the bikes in E.T. the Extra-Terrestrial flying off into the sunset, I made the sun a little too orange and the backlight on the bikes a little too bright. The color palette is amped up a tiny bit because it added a magical feeling that I thought those kids would feel when they were experiencing it, especially when those kids were telling somebody what just happened. That’s the way it should look. In a lot of Steven’s films, I look at them as amped up in places. Anybody nowadays can take a storyboard or animatic and make it look real with the tools that we have, but it needs to have the truth and heart of the moment that the director is going for. Your shot doesn’t want to overpower the story or take away a moment that could have helped. It’s all subtle. Directors and actors go through it all of the time. Editors are the ones picking out those parts and assembling them together. That’s what filmmaking is. When you get there, then it’s not just that the shot is terrific, the movie is terrific. I love movies.”

“CG was something I’d been looking into at ILM since 1983 or 1984. It was always a puzzle and a possibility. We did it, but it was always expensive. It wasn’t until Terminator 2: Judgment Day that we figured out how to make that as something which is repeatable and affordable with a department that could do it. ... I was expecting Hollywood to go nuts, and they were like, ‘That was interesting.’”

—Dennis Muren, Consulting Creative Director, ILM

By TREVOR HOGG


Hardly elementary to put together is the original romantic comedy Elemental from Pixar, where a new arrival to a city inhabited by Water, Earth, Air and Fire enters into a relationship that crosses the class divide. The story was inspired in part by a science class joke. “When I looked at the Periodic Table, all I could see was this apartment complex,” chuckles filmmaker Peter Sohn (The Good Dinosaur). “To make the pitch more acceptable to everyone, I boiled it down to the classical elements fire, water, earth and air.” The subject matter is personal in nature, he reveals. “I lost both of my parents during the making of Elemental, and this film has a great deal to do with appreciating our parents and the sacrifices that they make for us. It has been this interesting emotional ride.”

Given the nature of the characters, effects were an essential aspect of their design, with Sohn having to readjust his expectations. “There were lots of articles last year about how tremendously difficult the hours can be in the visual effects industry, and with so many projects going into streaming and features getting so big, that there were some eye-opening ways to produce this material that I was guilty of. I pulled back on a lot of that in the middle of last year. I went in knowing the game plan of what we were going to get to do, but because my parents had died, I was like, ‘This is to honor them! We’ve got to go further.’ The crew has been a tremendous support in this process, and they have lifted the film in ways that I will forever be grateful for.”

One of the hardest aspects was to make sure that the characters actually look like they are made from their designated element, such as “Ember [Leah Lewis] and Wade [Mamoudou Athie] as our main characters, Fire and Water,” states Sohn. “Ember was the most challenging to get to her look. I remember seeing Ghost Rider as a kid and going, ‘A character with a fiery head.’ The fire was so realistic and meant to be scary. Then there was Jack-Jack in The Incredibles who

that does gaseous without a solid substructure there. Character Designer Daniel López Muñoz took iPhone footage of a fire in his backyard, pulled out the frames and painted this fire character over them; he made these eyes blink on it that was caricature enough where these eyes could fit. Ember’s fire was more forgiving, whereas Wade became more difficult as the production went on because of the way his rig worked and the simulations on top of his shaders and the caustics inside of him; there weren’t a lot of places to hide.”

Reinventing the character pipeline was Bill Reeves, Global Technology Supervisor. “The character gets animated in animation using the standard Pixar pipeline in terms of what you see on the animator’s screens. It’s surfaces, polygons if you will. You don’t see the fire. Then they check in their work saying, ‘This shot is done.’ We convert it over to feed into Katana and RenderMan and we render. In the course of that conversion, we feed it into Houdini to generate volumetric pyro simulations and stylize it. It comes out the back end and eventually gets into Katana and then into RenderMan. [For] the part of the pipeline that goes into Houdini and back out again, we had little bits of it here and there. However, Ember is in 95% of the shots, so we had to run that pipeline over and over again. There is a lot of simulation involved with Wade because his hair is like a fountain that is bubbling. That’s another Houdini set of tasks. Then the Air character is another set of simulations to generate the flowing air wisps. The only simple set of characters are the Earth characters, but they’ve got a lot more geometry than Woody and Buzz Lightyear.”

An effort was made to limit the number of elemental characters to 10 in a shot. “But we blew past that,” Reeves laughs. “There is a sequence called Air Stadium in the movie where there are thousands of them.” A new approach was developed for crowd simulations. “It doesn’t actually go into Houdini for every character, but it is a volume-deform kind of thing. When the characters are further away, it’s one simulation that we’re copying around and deforming in Houdini, but it’s a 10- to 20-second Houdini call rather than an Ember simulation, which is four or five hours easy.” Compositing was leaned on heavily when it came to lighting. “It’s mainly a way of dealing with the complexities of this world by pushing a lot more lighting layers into Nuke and compositing and tweaking the end result there, rather than having to go back and re-render. You can work faster because it’s a more interactive system when you have the data. That was something we worked hard on and did a lot more on this show than on other ones,” Reeves observes.
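To put Reeves’ numbers in perspective, here is a back-of-the-envelope sketch in Python. The distance threshold and the rule that decides which characters get the cheaper shared, deformed simulation are hypothetical; the article does not say how that choice was made. The per-character costs are the article’s ranges taken at their midpoints, which is our assumption.

```python
from dataclasses import dataclass

# Rough per-character costs quoted by Reeves, taken at the midpoint of each
# range (an assumption): 10-20 seconds for the shared volume deform and
# four to five hours for a full Ember-style simulation.
DEFORM_SECONDS = 15.0
FULL_SIM_SECONDS = 4.5 * 3600.0


@dataclass(frozen=True)
class Character:
    name: str
    distance: float  # distance from camera, in arbitrary scene units


def choose_method(ch: Character, threshold: float) -> str:
    """Hypothetical level-of-detail rule: characters beyond the threshold reuse
    one shared simulation that is copied and deformed; closer ones get a full sim."""
    return "volume_deform" if ch.distance > threshold else "full_sim"


def estimate_hours(crowd: list[Character], threshold: float) -> float:
    """Sum the estimated simulation time for a whole crowd, in hours."""
    seconds = sum(
        DEFORM_SECONDS if choose_method(ch, threshold) == "volume_deform" else FULL_SIM_SECONDS
        for ch in crowd
    )
    return seconds / 3600.0
```

With one hero character close to camera and 1,000 distant extras, `estimate_hours` comes to roughly 8.7 hours with a threshold that sends the extras down the deform path, versus about 4,500 hours if every character were fully simulated, which is why a stadium of thousands is only practical with the shared approach.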

To assist animators, a toolkit was developed by Sanjay Bakshi, Visual Effects Supervisor. Comments Bakshi, “We had to animate things like when Ember gets mad, not just the facial expression and the body language, but what does the fire do? Since animators are experts at the timing of that, it had to be synchronized with their acting choices. We had to give them some visual indication. Our simulation and shading artists were changing a bunch of knobs to get it to feel like what sadness is. Then we map that to one number so there is a sadness control. It was like a zero to 10 kind of thing. More effort was put into fire because Ember is the main character and goes through the most emotions.” Transparency and the speed of the fire help to convey emotion. “When Ember becomes vulnerable her flames become a lot more transparent and candle-like in the movement,” Bakshi notes. “For anger, we did use color. Ember goes into more purple; that’s her signature anger look. A lot of the story is about her being angry and not understanding why, then learning through the movie how to control her anger and why she is angry.”
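The idea of collapsing many simulation and shading knobs into a single emotion dial can be sketched as interpolation between two presets. This is a minimal Python illustration, not Pixar’s toolkit: the parameter names, the preset values and the use of straight linear interpolation are all assumptions. It only follows the article’s description that a 0-to-10 control drives several underlying settings, and that vulnerable flames become more transparent and calmer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlameParams:
    # Hypothetical shading/simulation knobs, not Pixar's actual parameters.
    opacity: float
    flicker_speed: float
    turbulence: float


# Hypothetical presets for the two ends of the dial.
NEUTRAL = FlameParams(opacity=1.0, flicker_speed=1.0, turbulence=0.6)
FULL_SADNESS = FlameParams(opacity=0.4, flicker_speed=0.3, turbulence=0.1)


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def sadness_control(level: float) -> FlameParams:
    """Map one 0-10 'sadness' value onto several flame knobs at once."""
    if not 0.0 <= level <= 10.0:
        raise ValueError("level must be between 0 and 10")
    t = level / 10.0
    return FlameParams(
        opacity=lerp(NEUTRAL.opacity, FULL_SADNESS.opacity, t),
        flicker_speed=lerp(NEUTRAL.flicker_speed, FULL_SADNESS.flicker_speed, t),
        turbulence=lerp(NEUTRAL.turbulence, FULL_SADNESS.turbulence, t),
    )
```

An animator would then key only `level` over the course of a shot, in sync with the acting, while the function fans that single value out to every underlying setting. A production version would likely use curves tuned by the simulation and shading artists rather than straight lines.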

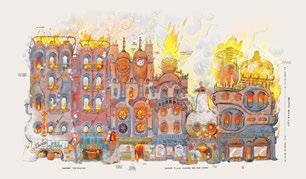

Much of the action takes place in Element City. “The Fire folks live in Firetown, and there are Water, Air and Earth districts,” Bakshi explains. “Pete wanted these districts to have the elements built into the architecture and infrastructure, so we did a bunch of set dressings, like a streetlamp in the Fire district would have some fire on it. Our set dressers could place them, and the fire simulations would come along for the ride. The buildings and architecture have fire and water simulations built into them, so that the set dressers could do their work and get these simulations that would just happen. That was another big component.” Instancing was essential in making rendering manageable. “For fire simulations in Firetown, there were probably 25 to 30 of them that get reused over and over and instanced. The lamps are all instanced. It’s all of the same simulation, just offset in time so they don’t look identical,” Bakshi notes.
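The instancing Bakshi describes, a small pool of fire simulations reused across many lamps, each offset in time, can be sketched as follows. This is an illustration rather than Pixar’s pipeline: the class and function names are hypothetical, and the sketch assumes each cached simulation loops seamlessly over `cycle_frames` frames.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class LampInstance:
    instance_id: int
    sim_index: int    # which cached simulation in the shared pool to reuse
    time_offset: int  # frame offset so neighboring lamps don't flicker in sync


def assign_instances(num_instances: int, pool_size: int, cycle_frames: int,
                     seed: int = 0) -> list[LampInstance]:
    """Spread many lamp instances across a small pool of looping fire sims."""
    rng = random.Random(seed)  # seeded so offsets are identical on every render
    return [
        LampInstance(instance_id=i,
                     sim_index=i % pool_size,  # round-robin through the pool
                     time_offset=rng.randrange(cycle_frames))
        for i in range(num_instances)
    ]


def sim_frame(inst: LampInstance, shot_frame: int, cycle_frames: int) -> int:
    """Frame of the cached (looping) simulation this instance should display."""
    return (shot_frame + inst.time_offset) % cycle_frames
```

With a pool of 30 simulations, the upper end of Bakshi’s estimate, `assign_instances(1000, 30, 240)` would dress 1,000 lamps while only 30 simulations are ever computed; the hypothetical 240-frame loop length is arbitrary. Seeding the random generator keeps each lamp’s offset stable from one render to the next.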

Locking down the look of the characters was difficult because they were so effects-dependent. “We partnered with Dan Lund who is a traditional effects animator from Disney,” remarks Gwendelyn Ederoglu, Directing Animator. “He talked about applying animation principles to 2D effects and how the same principles that we use in 3D in our characters, we could still pull and learn from that. One of them being, what are you trying to tell in the shot is key and how can effects support that? What we did learn from our 2D tests early on was that there was going to be a lot of fun to be had with volumetric changes, which is something that our characters don’t typically do. We also learned quickly how tear-offs of fire or water droplets added an immediate believability to their ‘elementalness,’ so we worked with our rigging team to develop prop fire and water, which we could then use in our animation testing early on. That became a critical element in the film. If Ember has a limb detached, rather than breaking off the rig of the limb, we would cheat into prop fire that would match the traits of Ember’s fire and then it could dissipate.”

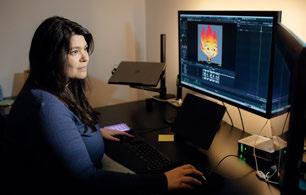

TOP TO BOTTOM: It was important to not have Ember touch anything that was flammable. Lauren Kawahara depicts what a street would look like in Element City, which utilizes a canal system similar to Venice and Amsterdam.

Peter Sohn conducts one of many review screenings of Elemental, which is a tribute to the sacrifices his parents made for their family.

A close collaboration between the animation and character effects departments was essential. “We worked closely with simulations typically on a film, but jumping all of the ways to character effects, that was a new relationship that was formed,” Ederoglu notes. “A term that one of us might use in animation about stylization could be interpreted so differently by a different department. It took us a while to create a more common language to be able to talk about shots, problems and issues or what looks we were chasing in animation.” Most of the animators came from working on Lightyear, which was grounded in real human acting. Ederoglu adds, “It did take a bit to adjust to the looseness and constant sense of motion that we needed to have for these characters to feel believable. Something would be funny in the Presto version because of the snappy timing, but we needed those four frames for the pyro to catch up. We did have to learn the language of that as we went.” Lattice controls were overhauled. “Those were far more robust in terms of regional controls than what we have had in the past. That was critical. A quarter of the shots required lattices to do some organic and major shape changes. Beyond that, a lot of the animation was within the rigs themselves.”

Unlike on previous Pixar movies, where the top of a character’s head or chin could be cropped out of frame, that was not an option for Elemental. “We knew right away talking early on with Pete that 1.85:1 was going to have to be the way to shoot something like this,” remarks David Juan Bianchi, DP, Camera. “We were aware that the pyro and flames of Ember were going to be so important, not just for her look but how to tell her emotional state. What is it doing? What is the color in her flames? We needed to go wide and vertical. I asked the engineering teams to try to give us a camera that matched large format photography, and this allowed me to shoot wider lenses. We wanted to have that extra dial of being able to have a shallow depth of field at certain moments, and having this large format version of our Pixar camera allowed us to do that.”

The camera style reflects the various emotional tones and worlds of Elemental. “There was a language for Firetown,” Bianchi explains. “We primarily shot that with wide-angle lenses, and the camera is more at the character’s eye level as if someone was hand-operating the camera. When going to Element City we introduced Water characters like Wade’s family living in an apartment made of water. Then we started to have a different camera language. There were Steadicam and Technocrane moves so it felt like the camera was rotating and floating, and longer lenses. When Ember and Wade from these two worlds intersect and interact with each other, we cherry-picked elements from both to make what I called the romance love element or Ember Wade language. Hopefully, it underscores and supports the story of where our two characters are at and where they ended up.”

Jean-Claude (JC) Kalache, DP, Lighting, had to account not only for practical sources like lamps and natural ones such as sunlight, but also for the characters themselves being light sources. “Ember is a self-illuminated gas, and the way we solved the exposure issue is we exposed everything except Ember. We made a conscious decision that animation would drive her energy, and lighting we’d treat almost like a lightbulb on a dimmer switch. When you think of water, everything is a light source. Water is reflective, refractive and shows through. It captures the whole environment. It was a big mind-bender. The brain is good at realizing what water looks like, but lucky for us, we were stylizing water, so you can break down the five or 10 components that make water look like water, but then you can move them around. It took a good part of the full year just to learn how to make our main Water character appealing.” Then there were the Air characters. Adds Kalache, “I remember looking at the overnight render and it was wonderfully beautiful pink light filling the whole train. Offscreen there was an Air character that was blasted by the sun.”

“The premise of this whole movie is that these elements cannot exist together,” Kalache remarks. “Yin and yang. Firetown is dry, smoky and less reflective. What is the opposite of that? It is a city with glass, water, reflections, and everything is bouncing. Lighting a glass building is a pain because you can’t shape them. We literally treated the buildings of Element City as a character, and we were lighting them as if we were studio-lighting a human, putting special rim and kick lights [on them]. One thing that was revealed quickly was a Water character is dependent on the environment around them, especially what is behind them. When Wade goes to or is in Element City, we quickly noticed if the buildings behind him were busy, it was impossible to look at him because you could see right through him. However, if we took the sunlight and made it slightly ramped down right behind him, conveniently things calmed down and he looked appealing.”

Kalache made an observation that surprised the director. “I remember telling Pete, ‘The world is the light. What do you expect in your character?’ Soon after, animation would come in to talk to him about what they expected from the performance. Then soon after, the effects people would come and talk about what they expected from their character effects. It took all of these conversations to eventually end up with characters that worked for Pete.”

In one breakthrough after another, AR, VR and VFX are augmenting live entertainment, from ABBA’s avatars to XR concerts to Madonna dancing live on stage with her digital selves.

When the Swedish group ABBA returned to the stage last May after a 40-year hiatus, they did so digitally with the help of ILM. The foursome – Björn Ulvaeus, Benny Andersson, Anni-Frid Lyngstad and Agnetha Fältskog – appeared in ABBA Voyage via their de-aged digital avatars, virtual versions of themselves on huge screens in the purpose-built, 3,000-capacity ABBA Arena, which was constructed in Queen Elizabeth Olympic Park in London. (ABBA Voyage won the 21st Annual VES Award for Outstanding Visual Effects in a Special Venue Project.)

ABBA’s 20-song “virtual live show,” over five years in the making, is a hybrid creation: pre-recorded avatars appear on stage with a physically present 10-piece band to make the experience more lifelike and convincing. The avatars meld the band’s current-day movements with their appearances in the 1970s.

ILM supplied the VFX magic, with more than 1,000 total visual effects artists in four studios working on the project, according to the show’s spokespersons. ILM Creative Director and Senior Visual Effects Supervisor Ben Morris oversaw the VFX of the show, which was directed by music-video veteran Baillie Walsh.

First, Morris and his team scanned thousands of original 35mm negatives and hours of old 16mm and 35mm concert footage and TV appearances of the band. The supergroup quartet spent five weeks singing and dancing in motion-capture suits as ILM scanned their bodies and faces with 160 cameras at a movie studio in Stockholm. The same process was undertaken with younger body doubles who followed their moves, guided by choreographer Wayne McGregor, and whose movements were blended with those of ABBA to give the band more youthful movements.

The digital versions of ABBA appear on the stage and to the sides of the arena on towering ROE Black Pearl BP2V2 LED walls, powered by Brompton Tessera SX40 4K LED processors. Each screen is 19 panels high, and there are an additional 4,200 ROE LED strips in and around the area. Solotech supplied the LED walls. Five hundred moving lights and 291 speakers connect what is on the screens to the arena. The result is spectacular and suggests that many large-scale digital shows may be on the way for music stars who are getting old or simply don’t like touring.

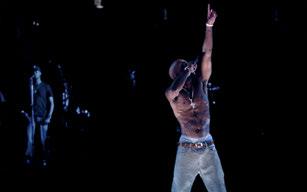

VIRTUAL TUPAC, VIRTUAL VINCE

Digital Domain created digital representations of the rap star Tupac Shakur and legendary Green Bay Packers football coach Vince Lombardi in 2012 and 2021, respectively, which raised the visual-quality bar for virtual appearances projected live.

On April 15, 2012, at the Coachella Valley Music & Arts Festival, Tupac Shakur appeared in a CGI incarnation on stage at the Empire Polo Field in Indio, California. The virtual Tupac sang his posthumous hit “Hail Mary” plus a “duet” of “2 of Amerikaz Most Wanted” with Snoop Dogg, who was on stage, in the flesh.

The computer-generated realistic image of Shakur was shown to some 90,000 fans on each of two nights; YouTube videos of the event reached 15 million views, according to Digital Domain. Some called it the “Tupac Hologram.” It wasn’t a hologram, but it was 3D-like. Unlike ABBA Voyage (2022), which featured the band’s participation in creating its avatars, the Shakur on stage was created long after the singer’s death in 1996. The project took about two months to complete, with 20 artists of different disciplines, according to Aruna Inversin, Digital Domain Creative Director and VFX Supervisor. The virtual Tupac was the vision of Andre “Dr. Dre” Young, and Digital Domain created the visual effects content. AV Concepts, an audio-visual services and immersive technology solutions provider, handled the projection technology.

The virtual Shakur was a two-part effect, according to Kevin Lau, Digital Domain Executive Creative Director. “In the first, we recreated Tupac using visual effects, and the other was a practical holographic effect created using Hologauze. For the performance, we started by filming a body double performing the set. The actor had an incredible likeness in both stature and movement to Tupac. Once that performance was in the can, we began digitally recreating a bust of Tupac’s likeness. This digital head was then combined with the body performance and animated to match the song. The two were blended together in compositing to create a seamless recreation of a seemingly live performance.”

Lau continues, “The performance asset was then synched to the live band and projected on Hologauze. This material has the ability to reflect bright light, while remaining fairly transparent in areas that are dark. The result is a figure that appears to exist physically in a three-dimensional space.”

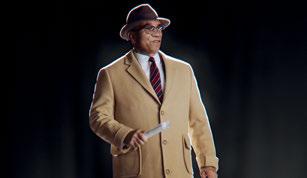

“The Vince Lombardi project was similar to the Tupac hologram, but we enlisted a slew of new techniques – many of which we created ourselves – that were not available at the time,” comments Lau. The virtual Lombardi – evoking the NFL football coach as he was in the 1960s – appeared on the jumbotron at Raymond James Stadium in Tampa on February 7, 2021.

Lau explains, “We began by filming a body double to do the bulk of the performance. But instead of having to create a full digital recreation of Coach Lombardi’s head, we enlisted Charlatan, our machine learning neural rendering software. This allowed us to train a computer off a data set of available Vince Lombardi images. The software can then synthesize those images and recreate a likeness of the subject – in this case, Coach Vince Lombardi – based off our actor’s [the puppeteer] performance.”