RRR: GLOBAL FILM VFX

21ST ANNUAL VES AWARDS & WINNERS

NEXT-GEN ARTISTS

AI ADVANCES

THE LAST OF US

PROFILES: JACQUELYN FORD MORIE & PAUL DEBEVEC


Coming out of a much-anticipated awards season, this issue of VFX Voice shines a light on the 21st Annual VES Awards gala. Congratulations again to all of our outstanding nominees, winners and honorees.

This issue delves into TV/streaming and the juggernaut HBO series The Last of Us and goes inside the big-screen VFX of Shazam! Fury of the Gods. Blockbuster Indian Telugu-language action epic RRR – named for the Tollywood star power behind the film – is central to our cover story on the surge of global film production captivating audiences worldwide. We explore trends in AI’s creative advancement and showcase booming activity in virtual production – LED stages, creative storytelling solutions and an industry roundtable on what comes next in this dynamic space. We also look at building the pipeline for the next generation of VFX talent. And VFX Voice sits down with VR pioneer Dr. Jacquelyn Ford Morie and award-winning production visionary Paul Debevec for up close and personal profiles.

We serve up insightful guidance from our award-winning VES Handbook of Visual Effects and put our New York Section in the spotlight. And, of course, please enjoy our VES Awards photo gallery, featuring the winners in 25 categories of visual effects excellence, show highlights, our VES Lifetime Achievement Award honoree, acclaimed producer-writer Gale Anne Hurd, and VES Board of Directors Award honoree, former VES Executive Director Eric Roth.

Dive in and meet the visionaries and risk-takers who advance the field of visual effects.

Cheers!

Lisa Cooke, Chair, VES Board of Directors

Nancy Ward, VES Executive Director

P.S. Please continue to catch exclusive stories between issues only available at VFXVoice.com. You can also get VFX Voice and VES updates by following us on Twitter at @VFXSociety.

8 VFX TRENDS: INDUSTRY GROWTH

VFX work continues to expand across the globe.

14 VFX TRENDS: THE AI EXPLOSION

AI’s creative advances provoke ethical and legal concerns.

20 COVER: GLOBAL FILMS SURGE

Film productions and VFX are thriving around the world.

26 TV/STREAMING: THE LAST OF US

Detailing post-apocalyptic America for the HBO series.

32 PROFILE: JACQUELYN FORD MORIE

Virtual reality pioneer traverses virtual worlds and avatars.

38 FILM: SHAZAM! FURY OF THE GODS

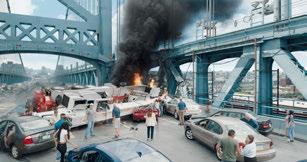

Making Philadelphia the epicenter of a VFX eruption.

44 PROFILE: PAUL DEBEVEC

A visionary on the front lines of innovation and application.

50 THE 21ST ANNUAL VES AWARDS

Celebrating excellence in visual effects.

60 VES AWARD WINNERS

Photo Gallery

66 VIRTUAL PRODUCTION: VP ROUNDTABLE

Industry experts weigh in on VP’s current surge and future.

72 VIRTUAL PRODUCTION: LED STAGES

Activity is booming at companies offering LED stages.

78 VIRTUAL PRODUCTION: CREATIVE IMPACT

VP provides new options and real solutions to storytellers.

82 EDUCATION: NEXT-GEN ARTISTS

The search expands for new talent to meet demand.

2 EXECUTIVE NOTE

88 ON THE RISE

90 THE VES HANDBOOK

92 VES SECTION SPOTLIGHT – NEW YORK

94 VES NEWS

96 FINAL FRAME – VIRTUAL PRODUCTION

ON THE COVER: RRR (Rise Roar Revolt), the epic action drama from India, is becoming an international sensation. (Image courtesy of RRR)

VFXVOICE

Visit us online at vfxvoice.com

PUBLISHER

Jim McCullaugh publisher@vfxvoice.com

EDITOR

Ed Ochs editor@vfxvoice.com

CREATIVE

Alpanian Design Group alan@alpanian.com

ADVERTISING

Arlene Hansen Arlene-VFX@outlook.com

SUPERVISOR

Nancy Ward

CONTRIBUTING WRITERS

Naomi Goldman

Ian Failes

Trevor Hogg

Jim McCullaugh

Chris McGowan

ADVISORY COMMITTEE

David Bloom

Andrew Bly

Rob Bredow

Mike Chambers, VES

Lisa Cooke

Neil Corbould, VES

Irena Cronin

Paul Debevec, VES

Debbie Denise

Karen Dufilho

Paul Franklin

David Johnson, VES

Jim Morris, VES

Dennis Muren, ASC, VES

Sam Nicholson, ASC

Lori H. Schwartz

Eric Roth

VISUAL EFFECTS SOCIETY

Nancy Ward, Executive Director

VES BOARD OF DIRECTORS

OFFICERS

Lisa Cooke, Chair

Susan O’Neal, 1st Vice Chair

David Tanaka, VES, 2nd Vice Chair

Rita Cahill, Secretary

Jeffrey A. Okun, VES, Treasurer

DIRECTORS

Neishaw Ali, Laurie Blavin, Kathryn Brillhart

Colin Campbell, Nicolas Casanova

Mike Chambers, VES, Kim Davidson

Michael Fink, VES, Gavin Graham

Dennis Hoffman, Brooke Lyndon-Stanford

Arnon Manor, Andres Martinez

Karen Murphy, Maggie Oh, Jim Rygiel

Suhit Saha, Lisa Sepp-Wilson

Richard Winn Taylor II, VES

David Valentin, Bill Villarreal

Joe Weidenbach, Rebecca West

Philipp Wolf, Susan Zwerman, VES

Andrew Bly, Johnny Han, Adam Howard

Tim McLaughlin, Robin Prybil

Daniel Rosen, Dane Smith

Visual Effects Society

5805 Sepulveda Blvd., Suite 620

Sherman Oaks, CA 91411

Phone: (818) 981-7861

vesglobal.org

VES STAFF

Jim Sullivan, Director of Operations

Ben Schneider, Director of Membership Services

Jeff Casper, Manager of Media & Graphics

Colleen Kelly, Office Manager

Brynn Hinnant, Administrative Assistant

Shannon Cassidy, Global Coordinator

P.J. Schumacher, Controller

Naomi Goldman, Public Relations

Tom Atkin, Founder

Allen Battino, VES Logo Design

TOP: Guardians of the Galaxy, Vol. 3. VFX: RISE Visual Effects Studios, Framestore, Rodeo FX, Crafty Apes, Wētā FX, Gentle Giant Studios, ILM, Weta Digital and Clear Angle Studios. SFX: Marvel Studios and Legacy Effects. (Image courtesy of Marvel Studios)

OPPOSITE TOP TO BOTTOM: 65. VFX: Framestore, Method Studios, New Holland Creative, Quantum Creation FX, Captured Dimensions and 22DOGS. Visualization: OPSIS. (Image courtesy of Columbia Pictures/Sony)

Spider-Man: Across the Spider-Verse. (Image courtesy of Marvel and Columbia Pictures/Sony)

Mission: Impossible – Dead Reckoning – Part One. VFX: ILM, Rodeo FX, BlueBolt and Clear Angle Studios. Visualization: Halon Entertainment. (Image courtesy of Paramount Pictures)

Mission: Impossible – Dead Reckoning – Part One. (Image courtesy of Paramount Pictures)

Over the last few years, the VFX industry has surged due to an infusion of visual effects in almost all films and series, the expansion of the streamers, a boom in animation, and the growth of video games and immersive formats. This is happening while LED stages and virtual production have been altering filmmaking and post-production processes, bringing them closer together.

At the same time, VFX work has continued to expand across the planet. “The globalization of the VFX business has been happening for a while, but the opportunities for remote working that have been accelerated by the pandemic have been a great enabler of this trend with artists and teams able to collaborate ever more easily and effectively across geographies,” says Namit Malhotra, Chairman and CEO of DNEG.

Streaming platforms Disney+, Apple TV+, HBO Max, Peacock and Paramount+ launched between 2019 and 2021, joining Netflix and Amazon Prime, collectively boosting production. “The strong growth in demand for content driven largely by the streaming companies has opened new avenues in content creation for VFX and animation companies,” Malhotra says. However, he feels the accelerated recent growth of the industry is now becoming more balanced. “The number of productions globally is now being rationalized to the reality of what can be produced. Demand was outstripping supply to such an extent that it was actually becoming unsustainable. What we are seeing now is more of a sensible and sustainable approach to content creation, and it is finding equilibrium – which is a good thing. There is still growth, but it is a lot more structured and sustainable.”

Because of the streamers, “there is a lot more episodic content than there was five years ago,” comments Pixomondo CEO Jonny Slow. “This is not a new factor, and the growth of it may slow down a little in the short term, but I don’t see this trend going away. This is a whole new sub-genre of content. In publishing terms, it’s like the invention of the novel, and it has created millions more viewing hours per week.”

The streamers have generated plenty of filmmaking work in domestic production centers across Europe and Asia, from Oslo to Seoul, which in turn has generated more VFX work around the world. India has become an especially important VFX hub, with many foreign-owned and locally owned VFX houses working at full throttle there.

Vantage Market Research forecasts that “the increased demand for advanced quality content among consumers across the globe and the introductions of new technologies related to VFX market by industry players are expected to augment the growth of the VFX market,” and predicts that VFX global market revenue will climb from $26.3 billion in 2021 to $48.9 billion in 2028, growing at a compound annual growth rate (CAGR) of 10.9% during the forecast period.
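As an illustrative check on the Vantage figures, a compound annual growth rate relates a start value and an end value over n years as CAGR = (end / start)^(1 / n) - 1. The short Python sketch below computes the implied rate; the helper name `cagr` is our own and hypothetical. It assumes the quoted 10.9% compounds over a six-year 2022-2028 forecast window, since the report’s exact period definition is not given here.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

# Vantage figures from the text, in billions of USD.
start_2021, end_2028 = 26.3, 48.9

# Assumption: the published rate compounds over a 2022-2028 window (6 steps).
six_year = cagr(start_2021, end_2028, 6)
# Compounding over all seven years from 2021 to 2028 gives a lower rate.
seven_year = cagr(start_2021, end_2028, 7)

print(f"6 periods: {six_year:.1%}")    # 6 periods: 10.9%
print(f"7 periods: {seven_year:.1%}")  # 7 periods: 9.3%
```

The comparison shows why the length of a forecast window matters when reading market reports: the same start and end values imply noticeably different annual rates depending on how many compounding periods are counted.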

“VFX is now an integral component of cinematic narrative in film, episodic, commercials and themed entertainment. Due in part to the convergence of gaming workflows, GPU-accelerated computing functions and cloud computing, VFX is increasingly accessible to all levels of complexity and budgets in storytelling,” says Shish Aikat, Global Head of Training at DNEG.

The dynamic activity of the VFX business in the last year includes acquisitions and new studio openings. One of the biggest deals in 2022 was Sony’s purchase of Pixomondo, which has facilities in Toronto, Vancouver, Montréal, London, Frankfurt, Stuttgart and Los Angeles. Its recent projects include Avatar: The Last Airbender (Netflix) and the next seasons of House of the Dragon, The Boys, Halo, Star Trek: Discovery, Star Trek: Strange New Worlds and many others. About the sale, Slow comments, “It allows us to benefit from being fully aligned with the whole Sony group, both creatively and from a technology development perspective.”

Also last year, Crafty Apes acquired Molecule VFX, and The Fuse Group (owner of FuseFX) bought El Ranchito, which has studios in Madrid and Barcelona. Outpost VFX and Framestore opened Mumbai facilities, and BOT VFX opened a Pune branch. After purchasing Scanline VFX at the end of 2021, Netflix acquired Animal Logic in 2022 and signed a multiyear deal with DNEG through 2025 for $350 million. DNEG will open an office in Sydney this year to go with its existing facilities in London, Toronto, Vancouver, Los Angeles, Montréal, Chennai, Mohali, Bangalore and Mumbai.

DNEG’s four Indian studios have helped make India a significant source of global VFX production. MPC and The Mill (owned by Technicolor Creative Services), Folks VFX (The Fuse Group), Framestore, BOT VFX (based in Atlanta and with three studios in India), Rotomaker India Pvt Ltd, Mackevision, Outpost VFX and Tau Films are other multinationals with facilities in India.

TOP TO BOTTOM: Ant-Man and the Wasp: Quantumania. VFX: ILM, ILM/StageCraft, Digital Domain, Spin VFX, Method Studios, MPC, Luma Pictures, Barnstorm VFX, Sony Pictures Imageworks, MARZ, Territory Studio, Rising Sun Pictures, Perception, Folks VFX and Clear Angle Studios. Visualization: The Third Floor. (Image courtesy of Marvel Studios)

Ant-Man and the Wasp: Quantumania. (Image courtesy of Marvel Studios)

Beau Is Afraid. VFX: Hybride and Folks VFX. (Image courtesy of A24)

Beau Is Afraid. (Image courtesy of A24)

One of India’s leading local visual effects firms is FutureWorks, which has 325 total employees in facilities in Mumbai, Hyderabad and Chennai. CEO Gaurav Gupta comments, “Of these, our Chennai studio is geared as a global delivery center to service our international clients. Our Mumbai studio, which was our first one, is focused on Indian filmmakers, and also works closely with platforms like Amazon Prime Video and Netflix for their Indian productions. Early next year will see us relocate to a larger studio in Mumbai and expand our Hyderabad operations with a bigger facility in Q2.” He notes that FutureWorks’ recent portfolio “spans global hits including: The Peripheral for Prime Video, Westworld for HBO, Netflix’s Lost in Space and [the Hindi-language movies] Jaadugar, directed by Sameer Saxena, and Darlings, directed by Jasmeet K. Reen.”

FutureWorks currently has “around a 50% split between our domestic and international customers, and our business strategy is to continue along those lines as we grow,” according to Gupta. “Global demand for VFX services has fueled the rapid increase in VFX studios in India. Indian studios are now full of creative sequences and shots, not just RPM or back-office work.”

Gupta notes, “Global demand and supply have increased concurrently, and there is plenty of room for everyone. What we see is a truly global marketplace, [with] more choices for clients in terms of where and who can execute the top-end work. However, [having] more studios also means that the industry needs more talent, and that talent has a wider range of options than ever before.”

Gupta adds, “This is the most excited I’ve been about the industry in India since I founded the company. There is a huge demand for content from OTT networks and filmmakers. Relationships are global, technology is global, vendors are global. The scene here currently is creative, ambitious and evolving at a rapid pace.”

Ghost VFX, which Streamland Media purchased in 2020, has facilities in Los Angeles, Vancouver, Toronto, London, Manchester and Copenhagen, with nearly 600 employees worldwide, and was set to open a studio in Pune in 2023. “Having studios across the globe means we’re able to work together across a single technology workflow so we can react to the ebbs and flows of demand,” says Patrick Davenport, President of Ghost VFX. “We’re also in several key locations for tax incentives. Although we offer our employees the option of working from home, hybrid or in-studio, having global studios helps us retain talent who want to work in different countries as well as [work] in-studio.”

Davenport adds, “We have larger projects which we share across the studios, but still focus on being able to support local productions. For example, our Copenhagen studio just worked on Troll, a Norwegian film for Netflix. On a global scale, we’ve worked on several projects including: Star Trek: Strange New Worlds that artists in Copenhagen and Vancouver worked on, and for Fast X we currently have teams in our U.K., Copenhagen, Pune and L.A. studios working on the film.” Another is the new season of The Mandalorian, “one of several projects we’re working on for Lucasfilm.”

Glassworks has studios in London, Amsterdam and Barcelona. “Speaking as a studio with multiple locations across Europe, I can definitely see [having them as an] advantage. The benefits come in different aspects, including a shared pool of resources, access to specialized talent across offices, and opportunities within each market or in tandem across facilities,” says Glassworks COO Chris Kiser. “Scalability is always important in our business, and it’s great to be able to work with artists and producers that you know and trust before needing to go outside of your own studio.”

For Glassworks, “The year started with a couple of big commercial projects, including the Turkish Airlines Super Bowl spot featuring Morgan Freeman and Apple’s Escape the Office film,” Kiser says. “Young adult and fantasy fans will have seen VFX from our team in both Vampire Academy and Fate: The Winx Saga, [and] we have other projects in the works for Netflix, HBO and Amazon Prime.”

Virtual production has greatly transformed the VFX business and inspired the construction of hundreds of LED stages, both fixed-location and bespoke/pop-up. Last year ended with the completion of two notable facilities in Culver City. Amazon’s stage, built on Stage 15 of the Culver Studios lot, is an 80-foot-diameter, 26-foot-high volume, a virtual production takeover of a soundstage where many famous movies were made in the analog era. Nearby, a new LED stage rose at Sony Innovation Studios on the Sony lot, in the same year that the company purchased Pixomondo and its three LED stages.

According to a market report by Statista, the virtual production market was valued at $1.46 billion in 2020 and is projected to reach $4.73 billion by 2028, growing at a CAGR of 15.9% over the 2021-2028 forecast period.
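To see what a 15.9% compound annual growth rate implies in practice, a short sketch can project the 2020 base forward year by year. This is an illustration only: it assumes constant compound growth from the 2020 value through 2028 (eight compounding steps), and the helper name `project` is hypothetical. Under that assumption the projection lands close to, though not exactly on, the reported $4.73 billion, because the published rate is rounded to one decimal place.

```python
def project(base: float, rate: float, years: int) -> list[float]:
    """Year-by-year values under constant compound growth: base * (1 + rate) ** t."""
    return [base * (1 + rate) ** t for t in range(years + 1)]

# Statista figures from the text, in billions of USD; 2020 is the base year.
# Assumption: growth compounds once per year from 2020 through 2028.
path = project(1.46, 0.159, 8)

for year, value in zip(range(2020, 2029), path):
    print(year, f"${value:.2f}B")
```

Reading the printed path year by year makes the compounding visible: at roughly 16% a year, the market more than triples over eight years.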

“Things are normalizing a little now, but along the way virtual production became a more widely adopted production solution, and for a few players, including Pixomondo, there is no turning back from this as we have created some very effective tools. That said, we see it as very complementary to our VFX services business, not a replacement – and in fact, we are able to integrate the processes to deliver additional value and speed of delivery,” Slow says.

Tax breaks are still having an impact on the geography of VFX. “Incentives do create additional production spend overall, as they directly impact how far a production budget will go,” Slow says.

“However, not all of the benefit ultimately stays in VFX – our clients have to balance production books overall somehow. But a more generous scheme in one region will influence where our clients want the work to happen, so a small change in the rules can create a very big shift in demand for work in a particular region. This has been very effectively used as a tool to drive investment and jobs into Canada, the U.K., Germany and many other places. It’s a big success story, and I think we will see this continue to evolve.”

TOP TO BOTTOM: Transformers: Rise of the Beasts. VFX: MPC, ILM and Wētā FX. Visualization: Halon Entertainment and The Third Floor. (Image courtesy of Paramount Pictures)

John Wick: Chapter 4. VFX: Rodeo FX, Crafty Apes, Mavericks VFX, One of Us, The Yard VFX, Tryptyc VFX, Light VFX, Pixomondo, Outlanders VFX, Boxel Studio, Atomic Arts, McCartney Studios, Track VFX, WeFX and Clear Angle Studios. Visualization: NVIZ and Halon Entertainment. (Image courtesy of Lionsgate)

Indiana Jones and the Dial of Destiny. VFX: ILM, Clear Angle Studios, Important Looking Pirates, Rising Sun Pictures, Crafty Apes, The Yard VFX, Soho VFX, Midas VFX and Capital T. Visualization: Proof Inc. (Image courtesy of Paramount Pictures)

Renfield. VFX: Crafty Apes, ILM, Outpost VFX, Connect VFX, Pixel Magic, Weta Workshop and Spectrum Effects. Visualization: Proof Inc. (Image courtesy of Universal Pictures)

Shazam! Fury of the Gods. VFX: DNEG, Pixomondo, Clear Angle Studios, Wētā FX, Scanline VFX, Method Studios, Weta Digital, BOT VFX and RISE Visual Effects Studios. Visualization/Effects: OPSIS and The Third Floor. (Image courtesy of Warner Bros.)

Servant. Series VFX: PowerHouse VFX, Cadence Effects, Ingenuity Studios and Vitality Visual Effects. (Image courtesy of Apple TV+)

Slumberland. VFX: DNEG, Scanline VFX, Outpost VFX, Rodeo FX, Important Looking Pirates, Ghost VFX, BOT VFX, MARZ and Incessant Rain Studios. Visualization: Halon Entertainment. (Image courtesy of Netflix)

Malhotra notes, “Frankly, these incentives make the use of visual effects more competitive for our clients, allowing them to create higher-quality content. This is important all round, as it creates more sustainable employment, as well as great quality of work for our clients, while helping them mitigate the costs of content creation.”

Davenport comments, “Demand for VFX looks likely to continue, though there is still the challenge of delivering the work within budgets and compressed schedules at a time of rising costs, particularly of labor.”

Malhotra observes that the talent gap is another challenge. “We need more talent in our industry with more experience – not just to creatively deliver projects, but also to manage and produce them,” he comments. “Training is a key focus – the fact that everyone is working from home compromises the culture of learning from your team and those around you, where you gain more experience by asking questions and looking at each other’s work. This has an effect on the time it takes to bring new recruits in our industry up to speed, which poses some interesting challenges for us as an industry – it’s a universal issue.”

Kiser adds, “The biggest challenge to our industry is bringing in the next generation and providing them with training and opportunities to succeed. Many of us were able to get a break somewhere or discover the potential for a career in VFX thanks to technical training or personal connections. We need to take advantage of the momentum and interest in film and TV to reach a wider, more diverse group of young people.”

“The convergence of visual effects and real-time gaming technologies, and the emergence of opportunities in the metaverse, virtual reality, immersive experiences and web 3.0, all significantly contribute to the possibilities for visual effects artists to leverage their skill sets beyond movies and television. It is a very interesting time for our industry and the people that work within it,” Malhotra says.

“We certainly hope the trend for VFX and animation will continue as it has been thrilling and made for some amazing content. The industry and budgets are likely to fluctuate in the same way they have over the years, although the demand has never been higher,” Kiser says. “The key now, as it has always been, is retaining talent and fostering creativity within our teams. We can’t control what happens in the outside world, but we have the ability to build a productive and enjoyable environment where the best creative work happens.”

“[In the] short term,” Slow concludes, “activity levels are calming down a little, but this is a good thing for the industry and the people working in it. Long-term, I don’t think there is any change to the overall trend of continued, healthy, year-on-year growth.”

Much has been made lately of the proliferation of artificial intelligence within the realm of art and filmmaking, whether it be AI entrepreneur Aaron Kemmer using OpenAI’s chatbot ChatGPT to generate a script, create a shot list and direct a film within a weekend, or Jason Allen combining Midjourney with AI Gigapixel to produce “Théâtre D’opéra Spatial,” which won the digital category at the Colorado State Fair. On the art platform ArtStation, protest images have appeared showing the letters AI struck through by a red circle and line, with the message ‘No to AI Generated Images,’ while U.K. publisher 3dtotal posted a statement on its website declaring, “3dtotal has four fundamental goals. One of them is to support and help the artistic community, so we cannot support AI art tools as we feel they hurt this community.”

“There are some ethical considerations mainly about who owns data,” notes Jacquelyn Ford Morie, Founder and Chief Scientist at All These Worlds LLC. “If you put it out on the web, is it up for grabs for scrapers to come and grab those images for machine learning? Machine learning doesn’t work unless you have millions of images or examples. But we are at an inflection point with the Internet where there are millions of things out there and we have never put walls around them. We have created this beast and only now are we getting pushback about, ‘I put it out to share but didn’t expect anyone would just grab it.’”

‘In the style of’ text prompts are making artists uneasy about their work being replicated through an algorithm, as in the case of Polish digital artist Greg Rutkowski, whose name is one of the most commonly used prompts for the open-source AI art generator Stable Diffusion. “I just feel like at this point it’s unstoppable, and the biggest issue with AI is the fact that artists don’t have control of whether or not their artwork gets used to train the AI diffusion model,” remarks Alex Nice, who was a concept illustrator on Black Adam and Obi-Wan Kenobi. “In order for the AI to create its imagery, it has to leverage other artists’ collective ‘energy’ [and] years of training and dedication to a craft. Without those real artists, AI models wouldn’t have anything to produce. I believe AI art will never get the artistic appreciation that real human-made art gets. This is the fundamental difference people need to understand. Artists create things, and hacks looking for a shortcut only know how to ‘generate content.’”

Rather than rely on the Internet, AI artists like Sougwen Chung are training robotic assistants on their own artwork and drawing alongside them, which follows in the footsteps of another collaboration that lasted 40 years and produced the first generation of computer-generated art. “There was some interesting stuff going on there that nobody knows about in the history of AI and art,” observes Morie. “Harold Cohen and the program AARON, which was an automatic program that could draw with a big plotter that learned as it drew and made these huge, beautiful drawings that were complex and figurative, not abstract at all.”

TOP: The following prompts were entered into AI Image Generator API by DeepAI: An angel with black wings in a white dress holding her arms out, a digital rendering, by Todd Lockwood, figurative art, annabeth chase, at an angle, canva, global radiant light, dark angel, 3D appearance. (Image courtesy of DeepAI)

BOTTOM: The following prompts were entered into AI Image Generator API by DeepAI: A girl in a white dress and a blue helmet, a detailed painting, by wlop, fantasy art, paint tool sai!! blue, [mystic, nier, detailed face of an asian girl, blueish moonlight, chloe price, female with long black hair, artificial intelligence princess, anime, soft lighting, old internet art. (Image courtesy of DeepAI)

OPPOSITE TOP TO BOTTOM: This particular Refik Anadol image was commissioned by the city of Fort Worth, Texas. (Image courtesy of Refik Anadol)

An interactive art exhibit created by Refik Anadol that utilizes AI. (Image courtesy of Refik Anadol)

Refik Anadol created projections to accompany the Los Angeles Philharmonic Orchestra performing Schumann’s Das Paradies und die Peri. (Image courtesy of Refik Anadol)

AI is seen as an essential element for developing future tools for artists. “Flame’s new AI-based tools, in conjunction with other Flame tools, help artists achieve faster results within compositing and color-grading workflows,” remarks Steve McNeill, Director of Engineering at Autodesk. “Looking forward, we see the potential for a wider application of AI-driven tools to enable better quality and more workflow efficiencies.”

Does the same reasoning apply to the creation of text-to-image programs such as AI Image Generator API by DeepAI? “When we saw the research coming out of the deep learning community around 2016-2017, we knew practically every part of our lives would be changed sooner or later,” notes Kevin Baragona, Founder of DeepAI. “This was because we saw simultaneous AI progress in very disparate fields such as text processing, gameplaying, computer vision and AI art. The same basic technology [neural networks] was solving a whole bunch of terribly difficult problems all at once. We knew it was a true revolution! At the time, the best AI was locked away in research labs and the general public didn’t have access to it. We wanted to develop the technology to bring magic into our daily lives, and to do it ethically. We brought generative AI to the public in 2017. We knew that AI progress would be rapid, but we were shocked at how rapid it turned out to be, especially starting around 2020.” Baragona sees AI as having positive rather than negative impact on the artistic community. “We’ve seen that text-to-image generators are practically production-ready today for concept art. Every day, I’m excited by the quality of art that takes a couple seconds to produce. Visual effects will continue to get more creative, more detailed and much cheaper to produce. Basically, this means we’ll have vastly more visual effects and art, and the true artists will be able to create superhuman art with the aid of these computer tools. It’s a revolution on par with the rise of CGI in the 1990s.”

Undoubtedly, there are legal issues as to who owns the output and whether the original sources should be given credit. “As the core building blocks of new AI generative models continue to mature, a new set of questions will arise, like it has happened with many other transformative technologies in the past,” notes Cristóbal Valenzuela, Co-Founder and CEO at Runway. “Ownership of content created in Runway is owned by users and their teams. Models can also be retrained and customized for specific use cases. We are also building together a community of artists and creators that inform how we make product decisions to better serve those community needs and questions.” In Valenzuela’s view, the AI revolution is not to be feared. “There are always questions that emerge with the rise of a new technology that challenges the status quo,” he observes. “The benefits of unlocking creativity by using natural language as the main engine are vast. We will see so many new people able to express themselves through various multimodal systems, and we’ll see previously complicated arenas like 3D, video, audio and image be more accessible mediums. Tools are only as good as the artist who wields them, and having more artists is ultimately an incredible benefit to the industry.”

Should AI art be the final public result? “I think that the keyword prompts used should also be displayed along with it, and any prompt that directly references a notable piece of existing art or artist should require a licensing deal with that referenced artist,” remarks Joe Sill, Founder and Director at Impossible Objects. “For instance, if an AI art is displayed that has utilized the prompt of ‘a mountaintop with a dozen tiny red houses in the style of Gregory Crewdson,’ you’re likely going to need to have that artist’s involvement and sign-off in order for that art to be displayed as a final result. If AI art is simply used in a pipeline as a starting point to inspire an artist with references, I think it’d be great for the programs themselves to start being listed in credits. Apps like Midjourney or DALL·E being credited as the key programs that help artists develop early inspiration only helps with transparency and also accessibility. If an artist releases a piece of work that was influenced by AI art, they can credit the programs used like, ‘Software used: DALL·E, Adobe Photoshop, Sketchpad.’”

AI has given rise to a new technological skill in the form of “the person who can write a compelling prompt for a program like DALL·E 2 to extract a compelling image,” states David Bloom, Founder and Consultant at Words & Deeds Media. “To some extent it’s a different version of what artists have always faced. If you are a musician, you had to learn how to play an instrument to be able to reproduce the things that you were hearing in your head. I remember George Lucas saying in the 1990s when they put out a redone version of Star Wars, ‘I’m never going to show the original version again because the technology now allows me to create a film that matches what I saw in my head.’ It’s just like that. The technology is going to allow new kinds of people to see something that they have in their head and get it out without necessarily having the same or any technical skills, if they can articulate it.”

Part of the fear of AI comes from misunderstanding. “It’s not as powerful as people think it is,” notes Jim Tierney, Co-Founder and Chief Executive Anarchist at Digital Anarchy. “It’s certainly useful, but in the context of how we use AI, which is for speech-to-text, if you have well-recorded audio and someone who speaks well, it’s fantastic, but it falls off a cliff quickly as the audio degrades. There is a lot of fear around AI. We were supposed to have replicants by now! Blade Runner was set in 2019. Come on. Where are my flying cars?” As for the matter of licensing rights, Tierney references what creatively drives artists in the first place. “If you say, ‘Brisbane, California, at night like Vincent van Gogh would have done,’ that’s going to create something probably Starry Night-ish. But how is that different from me painting it using that visual reference and an art book? It’s complicated. I spent a bunch of time messing around with Midjourney. If you go in there looking for something specific and say, ‘I want X. Create this for me,’ you will have to go through many iterations. People can make some cool stuff with it, but it seems rather random.”

What most affects the quality of AI art is not the technology but the work put into the prompt. “People have this idea that you type in three simple words and get some sort of masterpiece,” observes Cassandra Hood, Social Media Manager at NightCafé Studio. “That’s not the case. A lot of work goes into it. If you are planning on receiving a finished image that you are picturing in your mind, you’re going to have to put the work into finding the right words. There is a lot of experimenting that goes into it. It’s a lot harder than it seems. That applies to many people who think it’s not as advanced or not too good right now. It can be good if you put the work in and actually practice your prompts. We personally don’t create the algorithms, but we give you a platform and an easy-to-use interface for beginners, who move on to the bigger, more complicated code notebooks once they graduate from NightCafé. We are focusing on the community aspect of things and making sure to provide that safe environment for AI artists to hang out and talk about their art. We have done that on the site by adding comments and contests.”

“My opinion on this AI revolution has changed,” acknowledges Philippe Gaulier, Art Director at Framestore. “It was probably a year or a year and a half ago that AI really exploded in the concept art world. At the beginning I thought, ‘Oh, my god, our profession is dead.’ But then I realized it’s not, because I saw the limits of AI. There is one factor that we shouldn’t forget, which is the human contact. Clients will never stop wanting to deal with human beings to produce work for whatever film or project they have. However, there will be fewer of us to produce the same amount of work, in the same way as when tools in any industry evolve to become more efficient. The tools haven’t replaced people, because people are still needed to supervise and run them; machines don’t communicate like we do. But there has been a reduction in the number of people for any given task. I have been in this industry long enough to understand that things evolve all of the time. I have already started playing around with AI for references. I’m not asking myself whether it’s good or bad. I’ve accepted the idea that it is going to be part of the creative process because human beings in general like shortcuts.”

In the middle of the AI revolution is Stable Diffusion, which was designed by Stability AI in collaboration with Ludwig Maximilian University of Munich and Runway. The release of the free, open-source neural network for generating photorealistic and artistic images from text prompts was such a resounding success that Stability AI was able to raise $101 million in funding. “A research team was looking at ways to communicate with computers and did different experiments looking at text-to-image,” remarks Bill Cusick, Creative Director at Stability AI. “Their goal was to get to a place where, instead of being limited by your physical abilities, you would be able to translate your ideas into images. It has evolved in a way where now we can see what is possible, and there is a bifurcation in the approaches. Stable Diffusion and DreamStudio are tools. DreamStudio gives you settings and parameters. I had a meeting with these guys doing a workflow using Stable Diffusion, and it’s as complex as a Hollywood workflow would be, and the results are incredible. There are people doing Blender and Unreal Engine plug-ins, and I reach out to them to help accelerate their development. I want concept artists to treat it as a tool because it’s going to be more powerful in their hands than anybody else’s.”

“If you put it out on the web, is it up for grabs for scrapers to come and grab those images for machine learning? Machine learning doesn’t work unless you have millions of images or examples. But we are at an inflection point with the Internet where there are millions of things out there and we have never put walls around it. We have created this beast, and only now are we getting pushback about, ‘I put it out to share but didn’t expect anyone would just grab it.’”

—Jacquelyn Ford Morie, Founder and Chief Scientist, All These Worlds LLC

Cusick adds, “AI is never going to recreate someone’s picture whole cloth. By the time this article comes out, there is going to be text-to-video, and the question of whether my art got stuck into a dataset of billions of images is meaningless when the output is animation that is unique and moving in a totally different way than a single image. I agree with all of the problems with single images and the value of labor. But it’s momentary. We are moving towards a procedurally generated future where a whole other method of filmmaking is coming.”

“I want concept artists to treat [AI] as a tool because it’s going to be more powerful in their hands than anybody else’s.”

—Bill Cusick, Creative Director, Stability AI

Visual effects have proliferated far beyond Hollywood productions, with talented filmmakers and digital artists in China, South Korea, Mexico, Germany, Australia and India collaborating to produce a wide range of stories. “One of the major changes that I’ve seen over the past four to five years is the model changing from outsourcing to insourcing,” states Sudhir Reddy, Senior Vice President & Head of Studios, Canada & India at Digital Domain. “Almost every major post house has set up in India physically or virtually. India is playing a big part in work sharing. There is so much content being created that there is work for everybody. The domestic studios are thriving doing local and outsourcing work.”

TOP: The whirlpool that appears above the beach grave of Seo-rae (Tang Wei) is part of a visual motif in Decision to Leave designed to depict the growing power she has over Hae-joon (Park Hae-il). (Image courtesy of MUBI)

OPPOSITE TOP: Previs was critical in figuring out the obstacles needed to make a believable chase between the tiger and Komaram Bheem (N.T. Rama Rao Jr.) in RRR. (Image courtesy of RRR)

OPPOSITE MIDDLE AND BOTTOM: A clever transition in Decision to Leave showing the beginning of Detective Hae-joon’s (Park Hae-il) obsession with Seo-rae (Tang Wei) occurs when the shot goes from his arm to the X-ray of her dead husband, whom she is suspected of killing. (Images courtesy of MUBI)

Visual effects for films and television are common nowadays in China. “A lot of small productions and even online series have access to visual effects in China,” remarks VFX Supervisor Samson Sing Wun Wong. “The use of AI and game engines, and virtual LED screen stage shooting, are allowing visual effects companies to finish huge tasks in less time with more compact teams. The size of a company will no longer determine the quality of work; this will eventually change the way companies are structured and formed. There is always demand for visual effects, but it’s really about how a company and its artists are able to adapt themselves to new technologies and skillsets within the new platform. That’s the future.”

“The central question as a visualist is what method will be the best way to express that core emotion of a character or to drive the narrative forward,” notes acclaimed South Korean director Park Chan-wook (Oldboy, The Handmaiden), who digitally simulated fog, extended a mountaintop set, had CG ants crawling over the face of a corpse and inserted crime scene photographs onto a wall when making Decision to Leave. “Visual effects are one of the important tools. But you have to be cautious and only use visual effects when it’s absolutely necessary. Visual effects have great advantages, as directors can imagine executing things that were not possible in the past, and they cut down on production costs, too.” In Decision to Leave, Detective Hae-joon has a fatal obsession with murder suspect Seo-rae. “The one scene that I would want the audience to never miss out on is the finale, where we see the whirlpool that forms on top of the tomb of Seo-rae,” remarks VFX Supervisor Lee Joen-hyoung, who has collaborated with Park ever since Oldboy. “That whirlpool or vortex is something that I wanted to put in different places throughout the film because it represents Hae-joon’s emotional entrapment by Seo-rae. When he makes coffee, the steam that comes out produces a little vortex movement, and when Hae-joon is scattering the ashes of Seo-rae’s dead mother, they swirl around him before going away, as if it’s Seo-rae’s presence.”

Renowned Chinese director Zhang Yimou (Hero, House of Flying Daggers) achieves his ambitious visions with the help of Visual Effects Supervisor Samson Sing Wun Wong. “My first show with Zhang Yimou was Shadow in 2018, which is stylized like a traditional Chinese ink painting and has a lot of visual effects,” he explains. The partnership has gone on to produce Cliff Walkers, Snipers and the upcoming Under the Light. “In the last five years, the movies that Zhang Yimou directed all feature invisible, seamless visual effects, requiring a lot of environment extensions, effects elements and paint-outs. My role requires a close collaboration with the action director, art director and cinematographer to find the best methodology to achieve the best results. We shoot lots of references and avoid in-your-face visual effects, instead helping the storytelling in a subconscious way. Visual effects are no longer about fanciness; they are about precision.” A creative challenge was the car chase in Cliff Walkers. “In one or two cases where certain driving actions were slow, we did a full background replacement, but for the majority we managed this by increasing the speed of the snow,” explains Adam Hopper, VFX Supervisor at House of VFX. “For each shot requiring a little more danger, stunt vehicles were used to perform the action. We used these quite successfully as animation reference, but since the stunt vehicles were slightly larger and heavier, we had some alterations to consider in getting the weight distribution correct.”

TOP: There is no shortage of creature effects in RRR, especially in the climactic animal havoc sequence. (Image courtesy of RRR)

MIDDLE AND BOTTOM: A major atmospheric effect that had to be created by House of VFX for Cliff Walkers was snow. (Image courtesy of House of VFX)

OPPOSITE TOP TO BOTTOM: The visual aesthetic that Zhang Yimou desired for Shadow was that of a Chinese ink painting that visual effects vendors such as Digital Domain had to recreate. (Image courtesy of Digital Domain)

One of the most complex visual effects shots to execute in Bardo was Silverio (Daniel Giménez Cacho) interacting with his mirror reflection in the desert. (Image courtesy of Netflix)

Practical and digital effects were seamlessly integrated for All Quiet on the Western Front. (Image courtesy of Netflix)

Effects simulations play a big part in the shaping of the Djinn’s (Idris Elba) character in Three Thousand Years of Longing. (Image courtesy of Kennedy Miller Productions)

Getting award-circuit nominations for Best International Feature Film and Best Visual Effects is the German remake of All Quiet on the Western Front. “Visual effects did little things in the background where we had planes chasing each other to illustrate that even when they are in the barracks resting, there is still a war going on in the skies,” explains Production Visual Effects Supervisor Frank Petzold, who reunited with German director Edward Berger after working together on the television series The Terror. “We had to do research as to what planes existed at that time, and the same goes for guns.” A tank travels over a trench. “That was one of my favorites,” Petzold notes. “I come from the film days of multiplane downshooters and optical printers. To make it look absolutely photoreal, I wanted to use as much photographic stuff as I could get and stay away from CG models or particle simulations for explosions. The tanks going over the trenches are traditional A and B plates. We shot the foreground, and literally on the day we rushed to our special set, where a narrower trench had been dug out like a car pit, and we rested the camera there. Then we did a quick video lineup and had the tank drive over the camera. In CG, we had to fix a lot of stuff on the closeup shots, because when you look under the tank, the wheels and chains were slightly different.”

Whether it be creating the impression that the entirety of Birdman or (The Unexpected Virtue of Ignorance) was captured in one continuous take or depicting a graphic grizzly bear mauling in The Revenant, Mexican director Alejandro González Iñárritu astutely uses visual effects, and in the case of Bardo, False Chronicle of a Handful of Truths, he utilizes them to illustrate the mental state of Mexican journalist and documentarian Silverio Gama. “The movie navigates between memories, reality and surrealism,” notes VFX Supervisor Guillaume Rocheron. “You can’t be too stylized because you never want the audience to understand where you enter a visual effects sequence. It’s constantly mixed. When Silverio Gama meets with his dad, they go into the bathroom and start to talk. Over one cut you enter the surrealism, where Silverio, who is a 50-year-old man, now has a kid’s body and adult head; he feels like a kid in front of his dad but is still his own self. You have to be mindful of driving things as photographically as you can.” Joining the project during post-production was VFX Supervisor Olaf Wendt. “The sequences involving the digital babies were some of the most challenging work, especially doing a digital human in these long shots that come so close to the camera. There are interesting things, like the opening shot of Silverio’s shadow racing over the desert, just because Alejandro wanted this shadow to communicate the personality of his protagonist. It’s something that I’ve never seen before.”

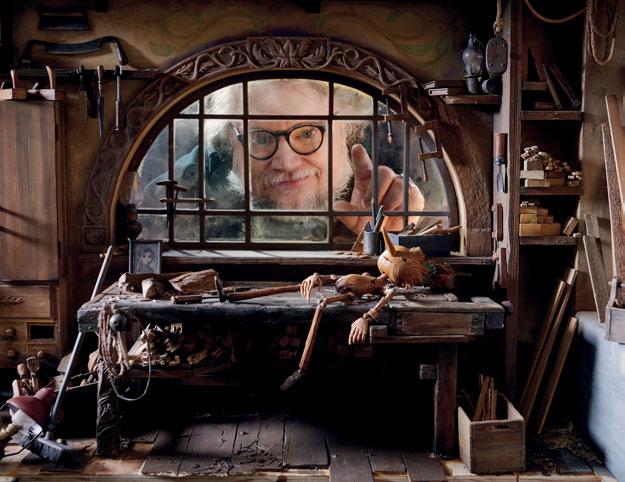

Becoming an international sensation is the epic action drama RRR [Rise Roar Revolt] by Indian director S.S. Rajamouli, a cinematic spectacle that blends CG animals, major action set pieces and musical numbers within the era of colonial British rule of India. A signature moment is Gond tribal leader Komaram Bheem (N.T. Rama Rao Jr.) literally battling a tiger in the jungle. “The tight closeup was a benchmark for us when doing the actual tiger asset,” states VFX Supervisor Srinivas Mohan. “I needed to have a log mark on the right side of the face that was previously on the left side. We had to build an extra blend mark there and add more detail to it. We did extensive previs for it, mainly to determine the speed of the tiger, as it can run 50 km/h and he can only do 10 km/h. We added some obstacles because the tiger was reaching him too quickly.” The reflection in the eye of revolutionary leader Alluri Sitarama Raju (Ram Charan Teja) as he stands in front of the police station was captured in-camera. “I genuinely thought we were going to be here for a while,” admits Pete Draper, CEO and Co-Founder of Makuta VFX. “But it’s Charan’s eye, a live background, one shot. There are no layer comps, and cinematographer K.K. Senthil Kumar got it literally in a single take. Here’s the fun thing: There is no camera paint-out at all. But what there is is digital crowd extension. The guys standing on the hill and the falling watchtower man were digitally added in, as well as a few extra flags.” Miniatures were utilized for the bridge explosion and rescue. “The festival area where Ram Charan comes running out chasing the guy, a small piece of road on top of the bridge, two pillars and a full-size train car [for a few shots] were actual set pieces,” explains Daniel French, Producer, VFX Supervisor and Co-Owner of Surpreeze. “But other than that, there was a fairly large miniature that was built with fully functional train cars at 1/8 scale. Because the eight train cars had to be set on fire and blown up, we had to build them in metal to get the proper weight. When you have fire elements you need to construct them as large as possible, but still keep it at a practical scale so you can lift the train cars up, do resets and work with it in a practical manner.”

Australian director George Miller has exacting standards, and for Three Thousand Years of Longing a collaboration was forged with VFX Producer Jason Bath and VFX Supervisor Paul Butterworth to bring to life the time-traveling genie Djinn and his unique relationship with British narratologist Alithea. “Effects simulations play a big part in the shaping of the Djinn’s character, with many different aspects requiring their own look development pathway,” Bath explains. “The Djinn Bottle Vortex that shows his body being ripped apart is the most complex, but he’s also defined by the seductive vapor of his kiss, the energy from his fingertips as he ‘reads’ books and the aura he creates when he ‘tunes’ the modern world’s noise into music. The Djinn is subtly scaled up throughout the film with both 2D and in-camera trickery. The scene where the Djinn first appears to Alithea, oversized and squashed into the hotel room, was achieved with classic scaled photography techniques using a 1/5-scale miniature hotel room set and motion control to film Alithea separately on the full-sized set.” One of the vendors contributing to the 596 visual effects shots was Fin Design + Effects. “The hero Djinn’s legs were originally meant to be filmed on set but, in an effort to match George’s vision for their unique look, became full CGI in every frame across over 100 shots,” explains Roy Malhi, VFX Supervisor at Fin Design + Effects. “We created a feathers and scales system in Houdini specifically for this challenge, enabling us to have intricate control and flexibility to achieve the 4K closeup realistic feathers we were required to render.”

The cosmic energy powers in Brahmāstra: Part One – Shiva. (Image courtesy of DNEG and ReDefine)

The concept art for the various cosmic powers in Brahmāstra: Part One – Shiva had to be refined further once the effects layers started to be added. (Image courtesy of DNEG and ReDefine)

One year of look development was spent on the Giant Snake created by Pixomondo for The Magic Flute. (Image courtesy of Pixomondo)

Brahmāstra: Part One – Shiva is part of a proposed trilogy and cinematic universe known as Astraverse, created by Indian director Ayan Mukerji; DNEG and ReDefine provided visual effects support totaling 4,200 shots. “We shot the climax first, which was the most complex part of the film, and that gave everyone a deep learning,” remarks Jaykar Arudra, VFX Supervisor at DNEG. “Interactive lighting was the biggest thing that was required because fire has to move to various places and different energies are casting so much light. Designing the entire shot and ensuring that so much of the interactive light was happening correctly was the largest part of the on-set stuff. We programmed these large LED screens.” The cosmic energy powers were effects-driven. “Even fire didn’t look like real fire,” remarks Viral Thakkar, VFX Supervisor at ReDefine. “It had a lot of details, like galaxy particles. We call it love fire, so the color is different, and the embers are connected to the galaxy, so they had to be floaty. We could use nothing from practical fire.” Arudra adds, “Even when you do concept art of a frame, it’s not the entire story. We would take one scene and say, ‘Let’s develop the look of this power based on this concept.’ Once we start putting effects layers into that, it develops more and more and gets refined. The love fire had gone through iterations of development of how exactly we need to add the color and cosmic particles into the fire.”

An ambitious production was a modern interpretation of Wolfgang Amadeus Mozart’s classic opera The Magic Flute by German director Florian Sigl. “The musical aspect of the production and how it transports into the visual effects were quite challenging in a lot of aspects, but as creative partners and working closely with the client we were able to provide bespoke solutions throughout every step of the project,” states Max Riess, VFX Supervisor at Pixomondo. “The dress simulation of the Queen of the Night took the longest time to develop. We extended the real dress of the singer with five to 10 giant CG cloth ribbons moving in sync with the voice track. We’d never done anything like this before, and it took some time to get the setup right.” One year of look development was spent on the Giant Snake, which went through several iterations. “We had versions with dragon-like horns and venomous fangs but, in the end, we decided to create an animal native to its environment, with sand colors and realistic animal features. The jaw and teeth anatomy are inspired by several other big creatures, like a shark and crocodile. For a giant snake, it would not make sense to have two venomous fangs. Another challenge was the movement: finding the right pattern, speed and behavior so that the viewer would see the snake not as out to catch and eat the prince, but to taunt and scare him.”

The Korean War drama The Battle at Lake Changjin cost $200 million to make and grossed $931 million worldwide, making it the highest-grossing Chinese film of all time. A trio of directors was responsible: Chen Kaige, Tsui Hark and Dante Lam, while the extensive digital augmentation was handled by VFX Supervisor Dennis Yeung. “We created two movies in 10 months, and the films also involved a large amount of CG,” states Yeung. “In addition to the huge workload, most of the shooting sites were in the suburbs, and we shot many night scenes. There were many details that had to be worked out before shooting scenes, such as the model of the car, and the clothes of the soldiers had to be historically accurate.”

Among the visual effects vendors recruited was DNEG. “There was one plate element for the opening scene that was shot on a crane with the actor, and we had to insert that into the flying camera and make him work in the overall CG world,” remarks Lee Sullivan, VFX Supervisor at DNEG. “You see a column of 100 soldiers in uniform marching out of a landing ship, and they built just the big doors as a front. A couple of real tanks and jeeps are driving, as well as 20 tents, which is a big build, but at the same time, we had to extend that crane move, transition into a drone shot and extend everything around the beach. Then you cut down to more surface-level shots where we are extending out the tent village, vehicles and planes flying overhead; meanwhile, the burning Inchon is always in the background.” Being able to balance stylization and realism was not an easy task. “I remember there was this tank fight, and you’re following the bullet in a bullet-time type of shot that was full CG,” recalls Philipp Wolf, the film’s Visual Effects Executive Producer and Executive-in-Charge, Corporate Strategy at DNEG. “That was interesting to get it right – to create a stylized shot that ultimately needs to feel real, which is hard when you’re doing something a camera can’t do. But it was neat in the end.”

“One of the major changes that I’ve seen over the past four to five years is the model changing from outsourcing to insourcing. Almost every major post house has set up in India physically or virtually. India is playing a big part in work sharing. There is so much content being created that there is work for everybody. The domestic studios are thriving doing local and outsourcing work.”

—Sudhir Reddy, Senior Vice President & Head of Studios, Canada & India, Digital Domain

As part of a computer class assignment at Carnegie Mellon University in Pittsburgh, students had to pitch ideas for a zombie video game to George Romero, the filmmaker credited with establishing the horror subgenre. One of the participants was Neil Druckmann, who, despite getting turned down, would go on to become Co-President of the acclaimed video game company Naughty Dog. “Whatever concept he picked, we were going to spend a semester developing a prototype of a video game,” recalls Druckmann. “Back then, it was much simpler. A cop and the daughter of a senator had to travel across the country. I tried to make it into a comic book. It evolved again when pitched at Naughty Dog, and we made it into a video game that became The Last of Us. The core emotional trappings remained consistent.” The fully evolved premise of the 2013 video game revolves around an actual fungal parasite called Cordyceps contaminating the food supply and turning its human hosts into volatile, monstrous beings. Twenty years later, immune teenager Ellie Williams is escorted through post-apocalyptic America by smuggler Joel Miller to a medical facility with hopes of producing a cure.

Within 13 months of its release, Sony sold seven million copies of The Last of Us, and talk commenced about a feature film adaptation to be produced by Sam Raimi, but creative disagreements over the proper tone and the studio’s request for more action sequences derailed the project. Everything changed when Craig Mazin, coming off the success of the HBO miniseries Chernobyl, got involved and forged a partnership with Druckmann that would see the entire original game adapted as a nine-episode HBO series, with the two of them serving as co-creators, Executive Producers, directors and writers.

“I’m obviously a big science dork and love that Neil based this story on science,” notes Mazin. “It’s terrifying but beautiful what the fungus can do. Also, dramatically, I am interested in seeing regular people dealing with extraordinary circumstances and not in the typical B-movie way. Watching people twist, turn and squirm, you find out who they are.”

“[W]hen you’re watching a show or telling a story, violence has a different purpose because you’re watching it, not committing it. We tried hard to make acts of violence relevant to characters and their relationships because that’s the matrix through which the audience processes that this is a good story.”

—Neil Druckmann, Co-President, Naughty Dog

To do a straight retelling of The Last of Us would have been misguided, as watching television and gameplay are not the same experience. “The Last of Us Part I remake, which we just released, can only render as fast as a PS5 allows, and that game runs at 60 frames per second, which means that we have 1/60 of a second to render the Bloater as detailed as we can,” Druckmann notes. “The amount of detail is proportional to how long we have to render it and the computational power. With the show, Wētā FX can have its render farms rendering a frame for a week if it wanted to, so our imagination is the only limit.”

Then there is the matter of violence, blood and gore. “I love playing The Last of Us Part I and The Last of Us Part II,” Mazin remarks. “Part of the enjoyment is being given these obstacles of NPCs [non-player characters]. How do I get around them or through them? Am I going to sneak or go in with guns blazing? But when you’re watching a show or telling a story, violence has a different purpose because you’re watching it, not committing it. We tried hard to make acts of violence relevant to characters and their relationships because that’s the matrix through which the audience processes that this is a good story.” Druckmann adds, “It’s not like we’re sanitizing the violence in this world, because our world is violent. We’re reducing the quantity of it so when it’s there it’s much more impactful than if you’re going to watch the same body count from the game.” Iconic moments from the game were recreated for the series. “There were certain things that I felt like as a fan I would want to see,” states Mazin. “It’s not even an Easter egg. We’re putting it right there front and center, and it’s not forced. How would they get across from here to there? Why not do the thing with the ladder? It made total sense, looked great and made me feel something when I played.”

Principal photography took place in Calgary and throughout Alberta. “A big point of discussion was that after 20 years nature would take over and everything would flood,” explains Production Designer John Paino. “We always tried to make the streets look broken up and as ridged as possible. The other big part of the design was making sure that everything we built in nature looked like we were in the States. Going from the East to the West Coast, especially with Bill’s Town, which is supposed to be one of the hamlets dotted throughout Massachusetts, we didn’t have a lot of luck finding locations until we got to the part of the story where we are crossing through the middle of America, and the look of Calgary and the feeling of tundra in the Midwest was great. We looked at a lot of references of natural disasters to help inform it.” The Quarantine Zone in Boston and Bill’s Town were practically constructed. “The QZ was the first backlot that we built because that kind of federal urban brick architecture doesn’t exist in Calgary. We built that. All of those streets were built, as well as the interiors to match them. As we got to Kansas City, where they are walking outside more, we were able to find streets that look like that in parts of Calgary and also in Edmonton. Almost all our interiors were built on stages.”

Brought onto the project in December 2020 was Prosthetics Designer Barrie Gower, who previously worked on Chernobyl and House of the Dragon. “There is quite a menu of prosthetics in the show,” Gower observes. “It’s predominantly these infected fungal Cordyceps characters, and then you have the Clicker. There were about six Clickers and a child Clicker as well in Episode 105. The big guy who climbs out of the hole had a lot of digital work going on there, but we built a fully practical suit for British stunt performer Adam Basil to wear. For the infected, we established several different stages of a human being bitten or infected, going from two-dimensional makeup and artwork on a face to conjunctivitis around the eyes, to slightly raised veins. It’s like the fungus burrowing into the skin, and things start to blossom out of the skin like little stalks. We had five different stages going from human to just prior to becoming the Clicker, with big mushrooms on the head. The R&D for that and establishing the look of those probably took about six months.”

Gower’s team also contributed to the set decoration. Comments Gower, “In Episode 102, where they are walking through a warehouse by torchlight and see a body fused into the wall, that was the first set piece that we built. We sculpted it in modeling clay, made molds and created sections. When we made the whole piece, it was split like a jigsaw puzzle, disassembled and shipped to Canada from the U.K. We had already pre-painted a lot of it, and we shipped one of our key artists, Joel Hall, who painted it here, to Canada as well. He worked with the art department in Calgary installing it into the set against a turquoise ceramic wall with a TV and bits of covered units.”

The weather was not cooperative, causing a logistical nightmare. “Snow was the biggest thorn in our side,” admits Special Effects Supervisor Joel Whist. “The episode we shot in Waterton is supposed to be a town where the cannibals live, and it was to be completely covered in 15 feet of snow with big drifts forming off buildings. They chose Waterton because normally the town shuts down for the winter and becomes a big snowbank. But when we went, there were only some remnants of drifts up against the houses and buildings. We could only use snow from within the park and brought in 350 dump truck loads of snow in four days. Me and 10 guys and a bunch of equipment hand dressed, hand blew, hand raked and hand shoveled for four days straight to get the town to look like it had snow. It would melt as we were doing it, but we got it done. When we came in the next day to shoot, there was an inch or two of fresh snow over everything that we had dressed, so it looked perfect.”

OPPOSITE TOP: Part of the special effects work was to flood sets with water.

TOP: Critical in making the Cordyceps infection believable was creating a sense that the fungus was coming out of the host.

BOTTOM TWO: A view of the walls of the quarantine zone and the infected open city portion of Boston.

Fire and vehicle stunts were dominant elements. “The house crash was a one-shot deal,” Whist reveals. “We did a test to see how the truck reacted. This truck had a giant snowplow on the front designed to come in and knock abandoned cars out of the way. The truck practically hit these lightened cars loaded up with dust, and sparks would fly. Then that truck had to crash into the house. There was a lot of rehearsal and testing to ask, ‘Does this work? How does it look when hitting the house?’ We can tweak it and cross our fingers that on the day the stunt guy really hits it hard and it works. It was constant testing. We did a complete fire set inside of a stage, with the burning of a steakhouse all controlled by us. Tons of spot fires on the street. We did lots of burning cars. We had the burning cop car, driven by a stunt guy in a fire suit and hooked up with propane, that would come in and smash an upside-down pickup. A lot of old school effects.”

Around 3,000 visual effects shots were produced by DNEG, Wētā FX, Distillery VFX, Zero VFX, Important Looking Pirates, beloFX, Storm Studios, Wylie Co., RVX, Assembly, Crafty Apes, UPP, RISE, Framestore, Digital Domain and MAS. Among the digital work was depicting how the parasite spreads. “That was probably one of the most abstract, challenging and fun creations for visual effects,” states Visual Effects Supervisor Alex Wang. “We call them the tendrils. In the game, we have the spores – that’s how the ‘infected’ transfer their infection to other human beings. The tendrils come out of their mouth. Craig wanted that to feel real, in the sense that when the Cordyceps are done with the insect, they burst out of its brain; that was our foundation. Then there was the whole other research part of what characteristics they carry. The minute the animation starts to feel like they have a personality, it automatically feels like aliens on the Syfy Channel. We didn’t want to go there. These Cordyceps have one motive, which is to keep infecting to survive.”

Two teams were sent to Boston twice to LiDAR scan, photograph and capture drone footage so that the devastated open city would look authentic. “As far as the vines, it’s trickier than you think, especially when you’re trying to deal with buildings at scale and you’re not used to seeing vegetation growing on skyscrapers,” observes Wang. “When they’re overlooking the hotel terrace and see a swarm of infected, that is a crucial defining moment of the show where we understand that the infected are connected to each other in some ways. We call it a dog pile. You can see them start to move in unison like synchronized swimmers. That was challenging because it’s abstract in nature. We were talking about everything from their movement to the scale of looking at it from high up. All the aspects of it made it a challenging shot. Wētā FX was in charge of that work and used their Massive software for crowd simulations and did a lot of performance capture to feed the Massive library.”

DNEG was the lead VFX vendor, handling the majority of the work across most episodes. Remarks Stephen James, VFX Supervisor at DNEG, “I traveled to Boston with our DNEG shoot team to do the data and reference capture needed to recreate a lot of the iconic [Boston] locations seen in Episodes 1 and 2. One sequence in particular that was brought to life directly from reference was the ladder-crossing sequence in Episode 2. This is such a memorable scene from the game that we wanted to do it justice. Even though the characters are six stories up and looking out at a bombed city, the scene provides a sense of relief, beauty and hope to the characters and the audience with them.”

Most challenging for James and his team was capturing the deteriorating post-apocalyptic environments. Comments James, “The main creative and technical challenges came in our environment work which often involved building or augmenting real-life locations and adding the heavy destruction and overgrowth that the series is so well known for. Much like in the games, the environments had to set the tone and tell their own story. What happened to this place 20 years ago? How has Mother Nature impacted it since then?”

“For any building destruction,” he adds, “it was very important to Alex Wang that you could feel the weight of the buildings pressing down over decades. Our DNEG Environment Supervisor, Adrien Lambert, built custom tools for Houdini that would give us base destruction simulations. This gave us a realistic collapse, broken windows and framework. Those were then cleaned up and manually set dressed in great detail. Every floor was filled with furniture, debris, cables, curtains blowing in the wind and so on. Once our destruction was in place, we would be able to add weathering and overgrowth. A great deal of thought went into the types of vegetation, the seasons and where and how it was growing.”

A complex shot comes at the outbreak of the pandemic, as Joel, his daughter Sarah and his brother Tommy flee. “It’s a long shot that goes through many stages of that town. In the end, they encounter a plane that is starting to fall and crashes and explodes,” Wang recounts. “A piece of landing gear is pushed out of that explosion, and that’s what knocks their truck over. We’re talking thousands of frames. Because the camera was essentially inside the truck, they drove over a wedge, and that was enough to knock it over. On top of that, we would add some camera shake as well.”

And, of course, one cannot forget the cameo of a particular animal. Explains Wang, “In Episode 109, we have an iconic moment with Ellie and a giraffe. We built a proprietary scanning booth that our hero giraffe could walk into, because we were cutting between a live-action and a CG giraffe, and it needed to feel seamless.”

Seamless is a key word when describing the visual effects work in The Last of Us. “I wanted the world to feel like if I embraced the location, I shot it without replacing every single thing, but touching every portion,” says Wang. “It has to feel grounded and believable that we are there. The best compliment would be, ‘Where are the visual effects here?’”

Considering her love for mathematics, arts and science, it is not surprising that Jacquelyn Ford Morie is a pioneer of virtual reality, as those three disciplines are the foundation for the medium that offers new ways for people to expand their experiences within a digital construct. Before becoming a Founder and Chief Scientist at All These Worlds, LLC, which explores the future uses of virtual worlds and the societal impact of avatars, the recipient of the Accenture VR Lifetime Achievement Award at the 6th International VR Awards created training programs for the animation and visual effects industry and was a senior researcher at the University of Southern California’s Institute for Creative Technologies.

Life began for Morie in Frankfurt, Germany, where her father was a U.S. Air Force engineer working on planes, redistributing what they could carry for the Berlin Airlift after World War II. The family continued to move around until settling in West Palm Beach, Florida. “I feel like I grew up in a little wonderland of Florida before it became so overbuilt. Our vacations were fishing trips. I knew how to deal with alligators and water moccasins. It was an interesting childhood.” Ninth grade was a defining year for her, as she went to a public high school after attending a small Catholic school for eight years. “I had three classes that shaped my whole future,” Morie says. “An art class that was practical and art history, a math class taught by a guy that looked like Rudy Vallée, and a science class where the teacher gave us foundational science research projects.” It was important to be exposed to all three subjects. “We end up pushing people into choosing one or the other, but they’re compatible, and I’ve always thought that. My mother didn’t try to set boundaries. It was nice not to be steered into a profession that would make money,” Morie reveals.

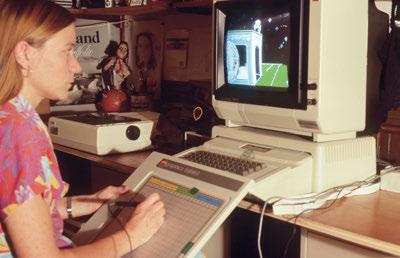

While attending the Fine Arts photography program at Florida Atlantic University, where she earned her Bachelor’s degree in 1981, Morie decided to take a computer class to prove that computers were detrimental to society; the plan backfired. “It turned out that I was one of the five people out of 90 who got an A. What was interesting to me was that you had to go into this logical mindset.” An Apple II personal computer became a part of her household in 1981 and came in handy during her graduate studies at the University of Florida. “I spent an extra year looking at the computer as an image-making device. When I would bring something in, my fellow graduate students would be outraged because I was using a machine to make art. We’re talking about photography students here! The whole photography realm had gone through that same argument. I took these overly pixelated images from the Apple II that I would draw with a program or graphics tablet or some other mechanism, and I would photograph them from the back of the room with a telephoto lens to minimize the curvature of those big CRT screens back then. I would take them into the darkroom and multiple print them as regular photo images. That was called ‘Integrated Fantasies.’ Almost 40 years later, I took those images and made a virtual reality experience titled ‘When Pixels Were Precious.’”

Off to college, 1968

In her darkroom, c. 1978

What followed was a Master’s degree in Computer Science from the University of Florida and a career in training, whether it be teaching professors how to use CAD software at the IBM-funded CAD/CAM facility at the University of Florida, redesigning the computer graphics program at Ringling College of Art and Design, or implementing a year-long training program for incoming computer animators at Walt Disney Feature Animation. “As much as it was some of the best people I’ve ever worked with, it was a hard system to do something new in, so I left Disney. I heard that VIFX was looking for somebody to set up a training program.” Originally a visual effects company owned by 20th Century Fox, VIFX was sold by Fox to Rhythm & Hues. “There were vicious wars at that time in the 3D animation industry. Everybody was trying to get into the game and undercutting each other. It hasn’t changed since! The production schedules were not humane, and I had many discussions with production artists and technical directors about what it takes to make another explosion along Wilshire Blvd., or make this lava look good in this Armageddon movie. I could see all these kids feeling that they’d wasted their lives making entertainment for people. I thought, we have this powerful new medium [in virtual reality] that nobody is using: How can we make it so that it provides meaning for people?”

Morie was a guest speaker at a workshop held at the Beckman Institute in Urbana, Illinois, titled “Modeling and Simulation: Linking Entertainment and Defense,” which had representation split evenly between the entertainment and defense industries. “In three days, we went through what it would mean if we took everything we knew about simulations and entertainment and put them together to make something new,” Morie explains. “Ed Catmull from Pixar had the first talk and I had the last one. One of Ed’s things was that we need to be free to pursue crazy and wild ideas.” The climate surrounding grants has since moved in the opposite direction. “For grants today, you have to have deliverables and do everything that you say that you’re going to do,” Morie says. “The military funded those things back then and just said, ‘Discover stuff.’ That is so freeing and amazing, to just pursue something for the joy of pursuing something new. Out of that workshop, the Army decided to fund a lab to do exactly that. They put it out to three universities; USC got the contract in 1999, and that became the Institute for Creative Technologies. I moved from the visual effects and animation industry into starting up this lab.”

Is there a different dynamic between academic grants and military funding? “The VR lab that I went to in Orlando was military-funded primarily and had a few civilian things,” Morie notes. “It was early and explorational. By the time we started the Institute for Creative Technologies, there was some entrenched military thinking. Luckily, the Institute for Creative Technologies was a line item in the Presidential budgets, so it couldn’t be messed with too much. It was more of a discussion on how we use it, and our director, Richard Lindheim, was good at letting us researchers say what we wanted to do and then finding a way of fitting us into that budget. It’s how you manage things and let the voices be heard. It was a unique time at the beginning of the Institute for Creative Technologies. One of the smartest things that they ever did was not make it part of a department at USC. It reported directly to the provost for research, so we did not have to dance to Engineering or Cinema. It was the most freeing thing to pursue interesting intellectual and creative pursuits. In my long career, what I have found is, one or two people can change the way something unfolds because of their passion and voice.”