from DCR Q3 2022

Optimising the CISO

Ross Brewer, Vice President and General Manager of EMEA and APJ for AttackIQ, unpacks how data-driven insight for CISOs and security team leaders can benefit the entire organisation.

All organisations face varying degrees of cyber-threat in an increasingly digitised world. In fact, there were over 300 million ransomware attacks recorded in the first half of last year. To mitigate these threats, the Chief Information Security Officer (CISO) is tasked with securing their organisation against breaches perpetrated by bad actors. However, nearly half of all UK businesses experienced a successful breach during the pandemic, and cybersecurity incidents rose by a staggering 600%.

The threat landscape is expanding, but as innovation in the cybersecurity space affords new opportunities for the industry, businesses should be savvier than ever when choosing how to secure their infrastructure, and should seek to transition from a reactive to a proactive, threat-informed defence.

Creating a threat-informed defence

Organisations across the UK are spending heavily on cybersecurity, with medium and large UK businesses alone spending over £800 million on their defences in 2021. However, a study by PurpleSec found that 75% of companies infected with ransomware were running up-to-date protection, which suggests that organisations investing large amounts of funding into their cybersecurity programmes are not tackling the real problem: testing and validating the controls they already have.

According to the 2021 Verizon Data Breach Investigations Report, CISOs have an average of over 70 security controls at their disposal, up from 45 just four years ago. But because controls often fail silently, they can only be validated if they are continually tested.
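Because a control can stop working without raising any alarm, one way to picture continual validation is to compare each control's latest test outcome with the previous one and flag anything that has quietly regressed. The sketch below assumes a hypothetical record format (control name mapped to a pass/fail result for each test run); the control names are illustrative and not taken from any specific product.

```python
from typing import Dict, List


def find_regressions(previous: Dict[str, bool], latest: Dict[str, bool]) -> List[str]:
    """Return controls that passed in the previous run but fail in the latest one.

    True means the control blocked or detected the emulated behaviour.
    A control missing from the latest run is treated as failing, since a
    silent gap in results is itself a warning sign.
    """
    regressions = []
    for control, passed_before in previous.items():
        passed_now = latest.get(control, False)
        if passed_before and not passed_now:
            regressions.append(control)
    return sorted(regressions)


# Hypothetical results from two consecutive validation runs.
last_week = {"edr-process-block": True, "proxy-url-filter": True, "email-attachment-sandbox": True}
this_week = {"edr-process-block": True, "proxy-url-filter": False}

print(find_regressions(last_week, this_week))  # ['email-attachment-sandbox', 'proxy-url-filter']
```

Running such a comparison after every scheduled test, rather than once or twice a year, is what turns a silent failure into a visible one.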

A multitude of budget cybersecurity solutions exist, but with the global average cost of a data breach reaching over £3 million in 2021, organisations must configure comprehensive cybersecurity solutions that can effectively remediate real-world threats. One illustration is the HAVEX strain of malware, reportedly used by the Russian government to target the energy grid. Companies should be running attack graphs that emulate such known threats end-to-end to bolster their preparedness in the event of an attack.

To counter these sophisticated threats, using automation to test organisations’ security controls continuously, and at scale in production, is the key to unlocking a threat-informed defence. Automated security control validation can leverage new threat intelligence about adversary tactics, techniques, and procedures (TTPs) through knowledge-based frameworks such as MITRE ATT&CK.

This strategy allows organisations to deploy assessments and adversary emulations against their security controls at scale. It also enhances visibility by letting them view performance data continually and track how well their security programme is performing.
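Tracking performance against a framework such as MITRE ATT&CK usually means rolling many emulation runs up into a per-technique view. The following is a minimal sketch under assumed inputs: each hypothetical `EmulationResult` records an ATT&CK technique ID (T1059, Command and Scripting Interpreter, and T1486, Data Encrypted for Impact, are real IDs used only as examples) and whether the emulated behaviour was prevented and detected. It does not reflect any BAS vendor's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class EmulationResult:
    technique_id: str  # MITRE ATT&CK technique ID, e.g. "T1059"
    prevented: bool    # a control blocked the emulated behaviour
    detected: bool     # a control raised an alert for it


def coverage_by_technique(results: Iterable[EmulationResult]) -> Dict[str, Dict[str, float]]:
    """Aggregate repeated emulation runs into prevention and detection rates per technique."""
    totals = defaultdict(lambda: {"runs": 0, "prevented": 0, "detected": 0})
    for r in results:
        t = totals[r.technique_id]
        t["runs"] += 1
        t["prevented"] += int(r.prevented)
        t["detected"] += int(r.detected)
    return {
        tid: {
            "prevention_rate": t["prevented"] / t["runs"],
            "detection_rate": t["detected"] / t["runs"],
        }
        for tid, t in totals.items()
    }


runs = [
    EmulationResult("T1059", prevented=True, detected=True),
    EmulationResult("T1059", prevented=False, detected=True),
    EmulationResult("T1486", prevented=False, detected=False),
]
for tid, rates in sorted(coverage_by_technique(runs).items()):
    print(tid, rates)
```

Separating prevention from detection matters for reporting: a technique that is detected but never prevented calls for a different fix than one that is invisible altogether.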

Organisations aiming to achieve a threat-informed defence should put breach and attack simulation (BAS) systems at the centre of their cybersecurity strategy. A good BAS platform uses the MITRE ATT&CK framework to assess, test and enhance threat detection and threat hunting efforts by simulating real-world adversary behaviours.

Through the performance data gained from continual security control testing, CISOs and their teams gain visibility into the efficiency of their cybersecurity programme, and can more accurately report their findings to the board.

Cybersecurity cannot live in a silo

Co-operation and data sharing are also crucial tools for mitigating control failures and creating a threat-informed defence. Traditional security team structures use threat-focused red teams and defence-focused blue teams to test security controls in tandem. However, these teams often work in silos, and exercises are typically performed only once or twice a year, which is insufficient for a rapidly changing threat landscape.

A relatively new security team structure is purple teaming, in which testing is aligned around a shared view of the threat and of the systems the teams are supposed to defend. Purple teaming combines red and blue teams to run adversary testing against an organisation’s most important controls, prioritising those most likely to affect the organisation’s operations.

Successful purple teaming aims to share performance data once the exercise is complete, which transforms a traditionally siloed structure into a collaborative effort, breaking down operational barriers and increasing cybersecurity effectiveness.

CISOs as valuable partners

The role of the CISO within an organisation is to be a valuable, trusted partner to the C-suite. This means CISOs must demonstrate conclusively that the security controls they implement are working as expected, all the time. CISOs are responsible for reporting the cybersecurity health of the company to the Board of Directors, but this is challenging without data-driven, quantifiable insight into what is and is not working in their defence architecture.

Decisions grounded in data-driven insight are invaluable to a business. Using breach and attack simulation platforms, organisations can meet the demands of a mercurial threat landscape by continuously testing and validating their security controls.

Validation efforts work like continuous fire drills for an organisation’s defences: they emulate adversary behaviour and ultimately evolve defences to meet the needs of a modern threat landscape, with the aim of creating a comprehensive, resilient cybersecurity strategy. ‘Evidence-based security’ is now the focus.

The truth about DDoS

Ashley Stephenson, CTO for Corero Network Security, explores the changes in DDoS attack behaviour and offers recommendations for protection.

DDoS has an understandable reputation as a blunt instrument. It has a track record as an unsophisticated, brute force weapon that requires only basic computer skills to wield in anger. Today’s teenagers can, and often do, use DDoS to flood gaming websites with malicious traffic and bring the intended victims (or opponents) to their knees. Or so the thinking goes.

The truth is that DDoS has been evolving into more of a surgical instrument of criminal extortion: a scalpel used to carve out a crucial, targeted part of a larger campaign, and one that gets sharper and is used in increasingly sophisticated ways with every passing year. This is borne out in Corero’s latest 2021/22 DDoS Threat Intelligence Report, which highlights the realities of DDoS threats, how they are changing and how security teams need to respond.

Most DDoS attacks are small and short

The reality of DDoS on the Internet today is that most attacks are not atomic bombs; they are precision strikes. The vast majority of attacks are small in comparison to the headline-grabbing incidents. Corero research reports that 97% are below 10 gigabits per second (Gbps) and 81% are under 250,000 packets per second (pps).

There is a whole range of potential reasons for these tactics. Attacks are often part of a campaign using multiple cyber threat vectors, in which DDoS may serve as a force multiplier or as a distraction within a larger assault.

Another theory, however, suggests that these types of attacks have evolved and become popular because many legacy solutions cannot detect them. Their small size or short duration allows them to evade or outrun many older detection solutions, or to fool them into treating the attack as normal traffic. This is particularly true when this type of attack is sprayed across many adjacent victims in what is sometimes called a carpet bomb attack. Effectively, a series of smaller, easy-to-launch attacks spread over a wider target area can be parlayed into a destructive force that does just as much damage as a single large-scale assault that is harder to accomplish.
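The carpet bomb effect described above is easy to see in numbers. The sketch below is illustrative only: it assumes hypothetical per-destination packet rates and hypothetical thresholds, and compares a naive per-host check with a check that aggregates traffic per /24 prefix using Python's standard `ipaddress` module. Addresses come from the 198.51.100.0/24 documentation range.

```python
import ipaddress
from collections import Counter
from typing import Dict, List, Tuple


def detect(flows_pps: Dict[str, int], host_threshold: int, prefix_threshold: int,
           prefix_len: int = 24) -> Tuple[List[str], List[str]]:
    """Return (hosts over the per-host threshold, prefixes over the aggregate threshold)."""
    noisy_hosts = sorted(ip for ip, pps in flows_pps.items() if pps > host_threshold)

    # Sum the traffic for every destination that falls inside the same prefix.
    per_prefix: Counter = Counter()
    for ip, pps in flows_pps.items():
        net = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
        per_prefix[str(net)] += pps
    noisy_prefixes = sorted(p for p, pps in per_prefix.items() if pps > prefix_threshold)
    return noisy_hosts, noisy_prefixes


# 200 hosts in one /24, each receiving a modest 5,000 pps (hypothetical figures).
traffic = {f"198.51.100.{i}": 5_000 for i in range(1, 201)}
hosts, prefixes = detect(traffic, host_threshold=50_000, prefix_threshold=500_000)
print(hosts)     # []: no single host looks abnormal
print(prefixes)  # ['198.51.100.0/24']: 1,000,000 pps in aggregate
```

The design point is that each victim sees traffic well below any per-host alarm, while the prefix as a whole is receiving a flood.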

This annual trend of increased threat has been the reality of DDoS for quite some time. But it is also true that DDoS attack fallout often goes unrecognised or is misidentified as more general network issues or connection problems. Organisations need to get to grips with the DDoS issue if they want to protect themselves from these threats, past and present.

OpenVPN

One representative development in the DDoS weapons landscape over the last few years is the rise in the use of OpenVPN reflection attacks.

It is one of the more peculiar side effects of the global pandemic in relation to DDoS. As lockdown orders set in around the world in early 2020, many more companies resorted to the use of VPNs to establish secure connections between office networks and home workers. This proved to be an opportunistic gold mine for DDoS attackers.

Attackers started using OpenVPN, a popular VPN tool, as a DDoS reflection and amplification vector to great effect. According to the report, OpenVPN attacks of this type have risen by 297% since the start of the Covid-19 pandemic.
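In a reflection and amplification attack, the attacker sends small requests with the victim's address spoofed as the source, and the reflecting server sends larger responses to the victim. The arithmetic below is a minimal sketch: the request and response sizes are hypothetical values chosen for illustration, not measured OpenVPN figures.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """Bandwidth amplification: bytes reflected at the victim per byte the attacker sends."""
    if request_bytes <= 0:
        raise ValueError("request size must be positive")
    return response_bytes / request_bytes


def victim_traffic_gbps(attacker_gbps: float, factor: float) -> float:
    """Traffic arriving at the victim if every spoofed request is reflected."""
    return attacker_gbps * factor


# Hypothetical sizes for illustration only; not measured OpenVPN values.
factor = amplification_factor(request_bytes=50, response_bytes=500)
print(factor)                            # 10.0
print(victim_traffic_gbps(0.5, factor))  # 5.0
```

Even a modest factor lets a small amount of attacker bandwidth land on the victim as a much larger flood, which is why newly exposed services are quickly adopted as vectors.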

Attackers are combining new and old vectors

New DDoS vectors are constantly appearing. Our data shows that the number of unique DDoS attack vectors is increasing year over year. Some of the most recent include the new TP240 PhoneHome and Hikvision SADP vulnerabilities, both of which can be used to launch damaging DDoS reflection and amplification attacks.

DDoS attackers are consistently seizing on these new opportunities. It is now standard practice to use a combination of long-standing attack vectors, supplemented with a fresh layer of these novel, recently discovered enhancements.

Awareness of DDoS isn’t enough

Data from our report confirms that awareness of DDoS vectors alone is not a sufficient defence. In July 2020, the FBI released an alert disclosing and highlighting four new DDoS attack vectors. Despite that warning and the resulting boost in awareness, malicious use of those vectors grew throughout 2020, and our report shows that they were still significantly active in 2021.

The future of DDoS

DDoS frequency and peak attack power have grown massively in recent years. The advent of the Mirai botnet in the Internet of Things (IoT) environment, yet another example of this continuing evolution, gives us an insight into how this came to pass. Exploiting a large population of poorly secured IoT devices, Mirai perpetrated some of the largest DDoS attacks on record, crippling popular websites and internet infrastructure and, by some accounts, the internet of the entire country of Liberia.

The key to its success was the viral infection or ‘pwning’ of a significant population of IoT devices. DDoS attackers are continuing to exploit the same techniques. The problematic insecurity of the cheap, numerous and poorly secured IoT device is a green pasture for DDoS attackers who can herd together vast armies of these insecure devices and then instruct them via command and control (aka CnC or C2) networks to simultaneously unleash their flood power against a victim or victims.

The IoT keeps growing and its capabilities are forecast to grow even faster. 5G and IoT-based networks will expand the frontier of edge-oriented communications, data collection and computing. Left unprotected, this array of newly defined internet access points will constitute a flank vulnerable to DDoS, enabling attackers to bypass legacy core DDoS protection mechanisms. Unless industry-wide changes are made to improve the deployment of DDoS protection, it is difficult to imagine these transformative capabilities being rolled out for the common good without also handing DDoS attackers the same new opportunities.

Stopping DDoS threats

Inflexible solutions cannot keep up with the increasingly complex nature of DDoS. Given that most DDoS attacks are small and short, many legacy protections will not detect them. Likewise, many legacy solutions cannot respond fast enough to DDoS attacks; some even require a customer to complain of a problem before they are activated.

No single DDoS solution can offer truly effective protection in isolation. Cloud-based DDoS detection and mitigation services are profoundly useful for diverting very large DDoS attacks to cloud-based scrubbing facilities. However, they cannot operate locally in real time, as they typically need to detect attack traffic and then redirect it to the cloud. To put it simply, the attack has to hit first, making some resulting downtime inevitable.

Meanwhile, on-premises or on-network solutions are crucial for locally detecting attacks and stopping attack traffic in real-time before it hits the enterprise applications. However, they could struggle with the sheer size of infrequent but powerful DDoS attacks that enterprises may have to face.

Enterprises would be wise to consider a hybrid solution that fuses these two approaches. Cloud-based protection can be on standby to soak up excessive, saturating traffic, while on-premises defences provide a rapid response to the vast majority of DDoS attacks and buy the time needed to swing excess traffic to the cloud. This combination prevents downtime and provides real-time protection against the DDoS attacks of the present and near future.
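The hybrid arrangement can be expressed as a simple decision rule. This is a sketch under stated assumptions, not any vendor's logic: the hypothetical `HybridPolicy` keeps mitigating on-premises for attacks the upstream link can absorb, and additionally diverts to cloud scrubbing once attack traffic approaches link capacity. The 80% diversion ratio is an arbitrary illustrative choice.

```python
from dataclasses import dataclass


@dataclass
class HybridPolicy:
    link_capacity_gbps: float   # capacity of the enterprise's upstream link
    divert_ratio: float = 0.8   # hypothetical: divert once attacks near 80% of capacity

    def action(self, attack_gbps: float) -> str:
        """Decide where to mitigate a detected attack of the given size."""
        if attack_gbps <= 0:
            return "no action"
        if attack_gbps < self.link_capacity_gbps * self.divert_ratio:
            # Small, short attacks: handled locally in real time.
            return "mitigate on-premises"
        # Saturating attacks: local filtering continues while traffic is swung to the cloud.
        return "mitigate on-premises and divert to cloud scrubbing"


policy = HybridPolicy(link_capacity_gbps=10)
for size in (0.0, 2.5, 40.0):
    print(size, policy.action(size))
```

Because most attacks are small, the first branch handles the common case immediately, and the cloud is reserved for the rare attack that would otherwise saturate the link.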

The workplace revolution

Tikiri Wanduragala, Senior Consultant at Lenovo, outlines the minefield of security threats that organisations are currently navigating as cybercrime continually evolves.

Data centres used to be perceived as fortresses: strongholds that protected businesses from external threats. They controlled everything, managed the movement of data, and contained access. With the adoption of the cloud over the past decade, this began to change as data was distributed to more locations. But the mass shift to remote work at the start of the pandemic spurred new and accelerated changes in security thinking and in the approach to the data lifecycle.

With little warning, organisations abruptly had to secure dispersed workforces at scale, all while maintaining business continuity. Cybercriminals capitalised on this by looking to exploit weak points in companies’ security architecture, with the World Health Organization reporting a fivefold increase in attacks in the first two months alone.

In response, businesses have had to adapt. Instead of relying almost solely on traditional data protection methods, organisations have started to assess security measures across their entire ecosystem, from the data centre to the cloud, to devices and employees. By bringing these factors into one holistic view and ensuring that each element is securely protected, companies are creating a new era of secure, trusted ecosystems that cater for the hybrid world and are centred around the individual.

The cyberthreat surge

Cybercrime has increased in tandem with technological developments, and attackers often have the upper hand. Previously, enterprises had the advantage of better resources. Now, the technology used by criminals is just as sophisticated, and in many cases more so.

This means that businesses have a minefield of issues to combat. Firstly, with so many potential threat surfaces, there is no single solution they can turn to. Secondly, there is always a way into a system. And thirdly, the landscape is continually evolving. As a result, it’s vital for organisations to keep up-to-date with the pace of the industry, both from a technological and personal standpoint.

Remote work, however, has led to complications. Circumstances changed, but because the interface remained the same, with employees moving from an office screen to a home screen and undertaking the same work, many assumed security could stay the same too. With more people accessing data from more locations, it is no longer a linear journey from data centre to office. And with end users more vulnerable, security on the employee side is just as important as security in the data centre.

Fundamentally, organisations need to realise that there are different threats coming from all angles, and the world is quickly changing. They need complete end-to-end security solutions that begin with design and continue through supply chain, delivery, and the full lifecycle of devices. The importance of security needs to be instilled into every employee, alongside the repercussions of weak practices – not least financial loss, but reputational damage too.

The importance of infrastructure

Although creating new security protocols may seem a daunting task, continuously met with fresh challenges, organisations do not have to sacrifice business output to maintain security. With so many features now built into the technology ecosystem, each element can be sufficiently protected with productivity and hybrid working in mind.

But this means that infrastructure needs to adapt to reflect the changes in the workplace. In a hybrid world, the same level of performance requires better infrastructure, as data centres need to support numerous home offices instead of a handful of larger hubs. In addition, it needs to underpin the emerging trend of ‘hypertasking’, which sees numerous tasks undertaken within a limited timeframe with the help of technology.

People still need to work effectively and be secure without having to compromise performance. Infrastructure must be kept up-to-date, but similarly, users need to be continually informed about the latest enhancements. Features like encryption and VPNs can slow devices down, impacting productivity in the process. As a result, users lose trust in the effectiveness of these measures and abandon them altogether, unless upgrades are both communicated to and tested by employees. If infrastructure successfully meets and exceeds increased demands, the resulting performance improvements mean that organisations don’t need to compromise security or business output.

The past 24 months have been a test of this, and infrastructure rose to the challenge successfully. But the period also identified gaps to be remedied and areas to be improved, such as the need for greater security measures. Ultimately, the world will not return to pre-pandemic ways of working and living, and infrastructure needs to match the hybrid era. Governments and corporations alike are coming to realise that this is vital to support society now and in the future.

As reliance on infrastructure increases, so too will security threats, and organisations need to take the necessary steps to protect it. Technologies like Trusted Platform Modules (TPMs) are being built into servers to support this, acting as physical locks that prevent servers from being easily overridden through software hacks. ThinkShield, for example, embraces technology like TPMs across the supply chain as well as mobile, PC and server products. Essentially, the infrastructure acts as phase one of the security ecosystem, as data then moves from the cloud through to the employee.

Transforming weakness into strength

The security weak link in business is usually the individual, and often inadvertently. Employees tend to be more vulnerable to threats when working alone without nearby colleagues to help identify potential threats, and criminals are exploiting this. In August 2020, a few months into the pandemic, INTERPOL reported that personal phishing attacks made up 59% of cyberthreats in member countries.

However, employees can also be a strength. If staff are trained and understand the security landscape well, they can act as the first line of defence for an organisation. While technology can be compromised, many large cyberattacks can be combatted through human intervention. For instance, an individual could assess whether it is a good idea to join a public WiFi network, or whether they should use a VPN. Some organisations even run phishing simulations that reward employees who report the emails or avoid clicking on potentially harmful links. Others have implemented architectures like Zero Trust, in which no device, network or user is trusted by default and each must be verified.
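The Zero Trust principle can be illustrated as a default-deny access check evaluated on every request. This is a minimal sketch: the `AccessRequest` fields, the entitlement set and the compliance flag are hypothetical, and a real deployment would obtain these signals from identity, device management and policy services.

```python
from dataclasses import dataclass
from typing import Set, Tuple


@dataclass(frozen=True)
class AccessRequest:
    user: str
    resource: str
    mfa_verified: bool          # user identity verified for this session
    device_compliant: bool      # e.g. managed, patched, encrypted (hypothetical checks)
    on_corporate_network: bool  # recorded, but deliberately not trusted


def allow(req: AccessRequest, entitlements: Set[Tuple[str, str]]) -> bool:
    """Default deny: user, device and entitlement must all check out on every request.

    Being on the corporate network grants nothing by itself.
    """
    if (req.user, req.resource) not in entitlements:
        return False
    return req.mfa_verified and req.device_compliant


entitlements = {("alice", "payroll")}
office = AccessRequest("alice", "payroll", mfa_verified=False, device_compliant=True, on_corporate_network=True)
home = AccessRequest("alice", "payroll", mfa_verified=True, device_compliant=True, on_corporate_network=False)
print(allow(office, entitlements))  # False: location is not a substitute for verification
print(allow(home, entitlements))    # True
```

The notable design choice is that `on_corporate_network` never appears in the decision, which is the practical difference from the fortress model described earlier.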

Above all, maintaining optimal security is about protecting the brand, the company and its reputation. But it is also about the user experience: ensuring that employees feel safe and protected while being able to work from anywhere as efficiently and productively as possible.

The death of the security fortress?

The days of data centres acting as fortresses, solely responsible for the security of an organisation, are numbered. The hybrid workplace revolution has necessitated new security ecosystems that secure data throughout its lifecycle, from the data centre to the cloud to devices and, perhaps most importantly, to employees. If staff are equipped correctly, they can act as individual security strongholds, whether in the office or at home.

By breaking down silos and taking a holistic view of security, businesses will be better protected from evolving threats. And with the right infrastructure to support them, employees will step into the hybrid era safely, securely and productively.

RiMatrix Next Generation – establishing data centres flexibly, reliably and fast

Rittal’s RiMatrix Next Generation (NG) is a groundbreaking new modular system for installing data centres flexibly, reliably and fast.

Based on an open-platform architecture, RiMatrix NG means customised solutions, delivering future-proofed IT scenarios, can be implemented anywhere in the world. These include single rack or container solutions, centralised data centres, distributed edge data centres or highly scaled co-location, as well as cloud and hyperscale data centres.

RiMatrix NG is the first platform that supports OCP direct current technology in standard environments.

Change is a constant across today’s IT infrastructure, but digital transformation is creating innovation at a pace that has never been seen before and the pace will almost certainly continue to accelerate. This requires both rapid responses and long-term investment in data centres which are flexible enough to meet a myriad of new challenges.

Rittal has responded with its new RiMatrix Next Generation (NG) IT infrastructure platform.

“Right from the initial design phase, we thought ahead in terms of adapting to diverse and constantly evolving requirements when we were developing the open platform,” says Uwe Scharf, Managing Director Business Units and Marketing at Rittal.

“Our customers have to adapt their IT infrastructures to developments faster than ever before to ensure business-relevant products and services can be continually created at the highest possible speed and without faults.

“Our aim is to support them as their partner for the future.”

The result is a pioneering, open platform for creating data centres of all sizes and scale, flexibly, reliably and fast, and one which supports comprehensive consulting and services throughout the entire IT lifecycle.

Whether it’s single rack or container solutions, centralised data centres, distributed edge data centres or highly scaled colocation, cloud and hyperscale data centres, the modularity and backwards compatibility of RiMatrix NG means that it’s possible to update individual components in an infrastructure, so the entire data centre can continually be adapted to meet fast-changing technological developments.

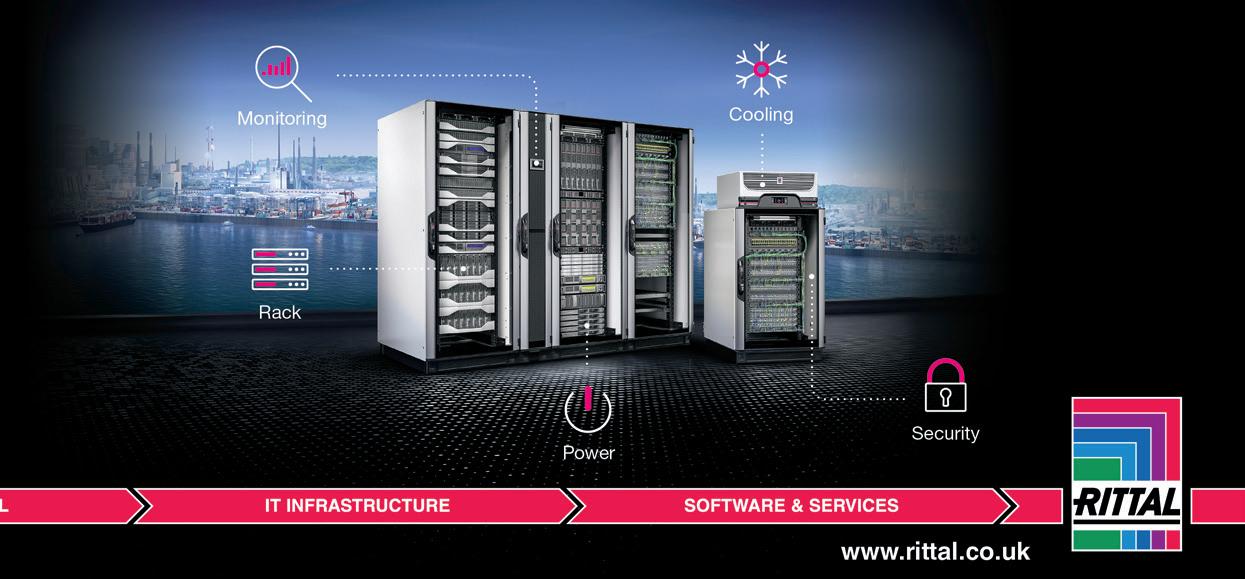

“RiMatrix NG thus becomes an IT infrastructure platform that is extremely future-safe and flexible,” Scharf explains.

All IT infrastructure components in a single modular system

The RiMatrix NG modules cover five functional areas: racks, climate control, power supply and backup, IT monitoring, and security. This enables IT managers to quickly and easily create solutions that are tailored to their individual requirements.

The number of potential combinations offered by Rittal and its certified partners (e.g. for energy supplies or fire safety) means that users can both meet their own needs, and any stipulated local regulations, wherever they are based across the world.

RiMatrix NG offers users the same flexibility as Rittal’s other modular systems, with both the new VX IT rack and older-generation racks. This makes the platform scalable in terms of size, performance, security and fail-safe reliability.

If a particularly fast response time is required, or existing buildings do not offer sufficient space, then the data centre can be placed within a container and safely integrated into any existing IT infrastructures.

First platform for OCP technology

The RiMatrix NG is the first platform to support the use of OCP components and direct current in standard environments.

Highly standardised direct current architectures and 21-inch racks in the Open Compute Project (OCP) design are increasingly recognised as the most energy-efficient choice for hyperscale data centres.

“Rittal is both a driver of the OCP initiative and a top supplier of OCP racks for hyperscalers worldwide,” Scharf says.

“With the RiMatrix NG, we are the first supplier to enable the straightforward use of OCP technology in standard data centres.”

Data centre operators can integrate RiMatrix NG modules and accessories into an existing, rapidly changing architecture without switching over the entire data centre or converting the uninterruptible power supply (UPS) to direct current.

“In this way, we now provide all our customers with easy access to the energy and efficiency benefits of this technology for the future – even for individual applications,” Scharf explains.

IT climate control

IT systems installed in RiMatrix NG are cooled in a controlled cycle using tailored and fail-safe fan systems, refrigerant-based or water-based solutions, and their performance is continually monitored.

The cooling solutions can be tailored to each and every system, from single racks, suite and room climate control, right up to complex high-performance computing (HPC) using direct chip cooling (DCC).

IT power supply and backup

Rittal’s ‘Continuous Power & Cooling’ concept is a way of bridging short-term power failures to prevent damage to both active IT components and other parts of the infrastructure, including the climate control.

It offers protection across the full length of the energy supply, from the main in-feed, UPS systems and sub-distribution, to the smart socket systems (power distribution units) in the IT racks.

IT monitoring and safety

The RiMatrix NG platform supports monitoring solutions such as the Computer Multi-Control III (CMC III) monitoring system and Data Centre Infrastructure Management (DCIM) software. These include various sensor options for measuring humidity, temperature and differential pressure, as well as for detecting vandalism.

Users can also choose from a range of protective measures depending on their needs, for example, a basic protection room within a modular room-in-room solution, or a high availability room for even greater reliability.

The platform’s safety is certified under ECB-S rules from the European Certification Body GmbH (ECB).

Rapid project implementation

RiMatrix NG was designed in such a way that new data centres can be rapidly installed. Components can be quickly and easily laid out using the web-based Rittal Configuration System, and there is also Rittal’s unique 24/48-hour delivery window for standard products in Europe.

The platform’s international ratings not only ensure its reliability, they further speed up IT project installations because they eliminate the need for time-consuming permit and test procedures.

Consulting throughout the entire IT lifecycle

In addition to the system components, Rittal’s customers are given all the support they need for set-up and operation. And this support continues across the entire IT lifecycle of a data centre.

The company’s service portfolio includes design consultation, planning and configuration, as well as assistance with operations and optimisation. Flexible financing models, including leasing, round off its portfolio and enable needs-oriented investment.

For more information, visit: www.rittal.com/rimatrix-ng

What’s IT worth

Mehul Revankar, Head of Vulnerability Management at Qualys, explains the importance of applying a risk management approach to security.

Managing cyber risk is one of the biggest challenges that today’s organisations face. Keeping all IT assets protected is a massive responsibility – everything from the devices in the office and servers in data centres used daily, to infrastructure and cloud services.

It’s no secret that the number and impact of attacks are rising, with Verizon’s recent Data Breach Investigations Report analysing nearly 24,000 security incidents that took place during 2021 alone. The rise in ransomware attacks last year was greater than the rise over the previous five years combined. The report also highlighted that issues arising from software supply chains led to a huge number of breaches, while misconfigurations in modern applications and cloud services added to security problems.

In order to manage this influx of threats, security teams must understand how to prioritise the largest risks to their company environments. But the sheer number of assets that enterprises have makes it difficult, if not impossible, to patch everything immediately. Teams need to take a different approach.

Looking at your risk profile

According to Qualys research based on data from the National Vulnerability Database, there are 185,446 known vulnerabilities. These range from issues in niche or older software products that are no longer supported, to critical problems that affect huge swathes of IT assets. The challenge is to know what assets and software you have installed, whether any of those assets have problems that need to be fixed, and whether those issues can be exploited.

While a vast number of vulnerabilities exist, they are not all equal in terms of risk. Of the total, 29% (55,186) have potential exploits available, meaning that code has been created to demonstrate how the flaw works. Beyond this, only 2% of issues have weaponised exploits, which enable malicious attackers to exploit vulnerabilities quickly and with minimal effort; this equates to around 3,854 vulnerabilities. Only 0.4% of vulnerabilities have working exploits that have actually been used in attacks by malware families or threat actor groups.

In other words, less than 2% of all software vulnerabilities are responsible for the vast majority of malware attacks and security breaches that today’s enterprises face. In identifying which issues pose the largest threats, you can effectively improve how you manage your security and where you put your efforts.
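The funnel can be recomputed directly from the counts quoted above. The snippet below does only that arithmetic; note that the unrounded shares come out at roughly 29.8% and 2.1%, slightly above the rounded figures in the text, which is worth keeping in mind when quoting them.

```python
TOTAL_KNOWN = 185_446  # known vulnerabilities, per the Qualys figure quoted above

FUNNEL = {
    "with potential exploits": 55_186,
    "with weaponised exploits": 3_854,
}


def share(count: int, total: int = TOTAL_KNOWN) -> float:
    """Percentage of all known vulnerabilities represented by `count`."""
    return 100 * count / total


for stage, count in FUNNEL.items():
    print(f"{stage}: {count:,} ({share(count):.1f}% of known vulnerabilities)")
```

Ranking remediation work by stage of this funnel, rather than by raw count, is what lets a team concentrate on the small fraction of issues attackers actually use.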

By understanding which issues affect your specific infrastructure, you can see which should be higher up the priority list for patch deployment, and evaluate other security issues to see whether they need additional attention. Every organisation has a different implementation in place. In practice, this can mean that a software vulnerability rated as less severe for the majority of users is actually critical for you to fix immediately. In these circumstances, knowing your own risk profile will enable your organisation to strengthen its security posture.
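The point that a moderately rated flaw can matter more to you than a critical one is easiest to see with a contextual score. This is a minimal sketch with entirely hypothetical weights and findings: it multiplies a base severity (CVSS-style, 0 to 10) by an exploit-maturity weight, the organisation's own asset criticality and an exposure factor. It is not Qualys's scoring model or any other vendor's.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical weights for exploit maturity, mirroring the funnel above.
EXPLOIT_WEIGHT = {"none": 0.2, "poc": 0.5, "weaponised": 0.8, "in_the_wild": 1.0}


@dataclass
class Finding:
    vuln_id: str
    base_severity: float    # vendor/base score, 0-10
    exploit: str            # key into EXPLOIT_WEIGHT
    asset_criticality: int  # 1 (lab machine) to 5 (business-critical), set by the organisation
    internet_facing: bool


def risk_score(f: Finding) -> float:
    exposure = 1.5 if f.internet_facing else 1.0
    return f.base_severity * EXPLOIT_WEIGHT[f.exploit] * f.asset_criticality * exposure


def prioritise(findings: List[Finding]) -> List[str]:
    """Order findings by contextual risk, highest first."""
    return [f.vuln_id for f in sorted(findings, key=risk_score, reverse=True)]


findings = [
    Finding("vuln-A", base_severity=9.8, exploit="none", asset_criticality=1, internet_facing=False),
    Finding("vuln-B", base_severity=6.5, exploit="in_the_wild", asset_criticality=5, internet_facing=True),
]
print(prioritise(findings))  # ['vuln-B', 'vuln-A']
```

Here the higher-rated issue scores 1.96 on an isolated lab machine, while the medium-rated issue scores 48.75 on an exposed, business-critical system.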

How to improve your approach

The security industry is continuously looking to improve management processes and prevent attacks. For example, the US Government has introduced new rules for federal government IT projects that mandate software bills of materials, or SBOMs. These documents aim to capture all the software elements and services that make up an application, including the versions and updates in place. This is a crucial step: if you know all the components that make up your custom applications, you gain more oversight of the issues they have. This helps organisations establish a baseline understanding of their environment.
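Once an SBOM exists, checking it against vulnerability advisories becomes a lookup. The sketch below uses a minimal JSON document loosely modelled on the CycloneDX format's `components` list (a real CycloneDX file carries more required fields), and a hypothetical advisory feed that lists exact affected versions. Real tooling matches version ranges and package identifiers, so treat this as an illustration of the idea only.

```python
import json
from typing import Dict, List, Set

# Minimal SBOM, loosely modelled on CycloneDX JSON (hypothetical contents).
SBOM_JSON = """
{
  "bomFormat": "CycloneDX",
  "components": [
    {"name": "openssl", "version": "3.0.1"},
    {"name": "zlib", "version": "1.2.13"},
    {"name": "log4j-core", "version": "2.14.1"}
  ]
}
"""

# Hypothetical advisory feed: component name -> exact affected versions.
ADVISORIES: Dict[str, Set[str]] = {
    "log4j-core": {"2.14.1", "2.15.0"},
    "openssl": {"3.0.0"},
}


def affected_components(sbom_text: str, advisories: Dict[str, Set[str]]) -> List[str]:
    """Return name@version for every SBOM component listed in an advisory."""
    sbom = json.loads(sbom_text)
    hits = []
    for comp in sbom.get("components", []):
        if comp["version"] in advisories.get(comp["name"], set()):
            hits.append(f'{comp["name"]}@{comp["version"]}')
    return hits


print(affected_components(SBOM_JSON, ADVISORIES))  # ['log4j-core@2.14.1']
```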

You cannot secure what you do not know is there. Understanding your environment, tracking your software supply chain and having accurate and up-to-date IT asset lists in place are all necessary. From there, you can carry out regular scanning to track all assets that are deployed on your network.
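Regular scanning is most useful when its results are reconciled with the asset list. A minimal sketch, assuming hypothetical hostnames: set differences show which discovered assets are missing from the inventory (unmanaged, and therefore unprotected) and which inventoried assets the scan did not see (stale records or offline devices worth checking).

```python
from typing import Dict, List, Set


def reconcile(inventory: Set[str], discovered: Set[str]) -> Dict[str, List[str]]:
    """Compare the recorded asset list with what a network scan actually found."""
    return {
        "unknown": sorted(discovered - inventory),  # on the network but not in the asset list
        "missing": sorted(inventory - discovered),  # in the asset list but not seen by the scan
    }


inventory = {"web-01", "db-01", "hr-laptop-17"}
discovered = {"web-01", "db-01", "printer-3f"}
print(reconcile(inventory, discovered))
```

Assets in the "unknown" bucket are exactly the ones that cannot be secured because nobody knows they are there.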

To be a true risk to your enterprise, a specific vulnerability must have material applicability in your specific environment. Controlling cybersecurity risk is much more achievable by focusing security and IT teams on the vulnerabilities that matter most to your company’s exposure.

Once you know what you have in place and what to prioritise, you can set out your remediation plans. Streamlining the workflow between the security team responsible for detecting vulnerabilities and the IT operations team responsible for deploying patches can improve your mean time to remediation (MTTR), yet these two teams often work in isolation. Automating workflow creation can make this process smoother and easier for both sides, while also improving security hygiene. For lower-priority risks, teams can automate patch deployment and fix those problems without manual effort.
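Both ideas in this paragraph, routing by priority and measuring MTTR, can be sketched in a few lines. The routing rule below is a hypothetical workflow (low-priority fixes go to automated patching, everything else opens a ticket for IT operations), and MTTR is computed as the mean of remediation time minus detection time over illustrative timestamps.

```python
from datetime import datetime, timedelta
from statistics import mean
from typing import List, Tuple


def route(priority: str) -> str:
    """Hypothetical workflow: automate low-priority fixes, ticket everything else."""
    return "auto-patch" if priority == "low" else "ticket"


def mttr_hours(records: List[Tuple[datetime, datetime]]) -> float:
    """Mean time to remediation, in hours, over (detected, remediated) pairs."""
    if not records:
        raise ValueError("no remediation records")
    return mean((fixed - found) / timedelta(hours=1) for found, fixed in records)


t0 = datetime(2022, 7, 1, 9, 0)
records = [(t0, t0 + timedelta(hours=4)), (t0, t0 + timedelta(hours=20))]
print(route("low"), route("critical"))  # auto-patch ticket
print(mttr_hours(records))              # 12.0
```

Tracking MTTR before and after automating the hand-off gives a simple measure of whether the integration between the two teams is working.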

By looking at your whole approach to IT – from custom applications and software supply chains through to cloud services and endpoints – you can get a much clearer picture of what needs to be done in order to maintain security at scale.