The Academic Journal 2025

Edited by Shaun Abraham

Foreword

Shaun Abraham

Aviation Journal

Materials of the Future

Anwesha Ghosh Y13

Clear Air Turbulence

Ewan Butterworth Y13

The Forgotten Story of the Rotodyne

Abhinav Malladi Y11

Biology Journal

Phage Therapy: A revolutionary treatment for bacterial infections

Pavlo Kotenko Y12

Causes of Alzheimer’s disease

Arunima Karve Y12

Chemistry Journal

Chemistry of Fentanyl – The Anaesthetic that has caused a Crisis in the US

Raphael Dadula Y13

Chemistry behind Fragrances

Vedika Tibrewal Y13

Computer Science Journal

Zero Trust Architecture: A Radical Rethink of Cybersecurity

Sahishnu Jadhav Y12

How do computers compute?

Mikhail Sumygin Y12

Economics Journal

The Rise of Islamic banking in the West

Aryen Adhikari Y12

Labour’s GB railways and the historical clash between nationalisation and privatisation

Michael Bowry Y12

History Journal

The Dangers of Appeasement

Chris Choi Y13

India vs Pakistan: A Study in Sports Diplomacy

Shaun Abraham Y13

Machine Learning Journal

The Future for Robotics

Dev Mehta Y12

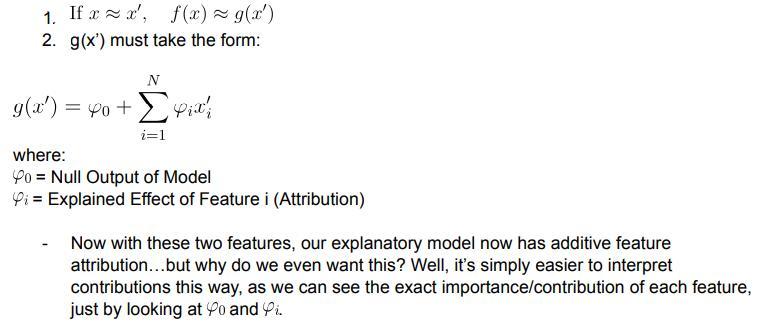

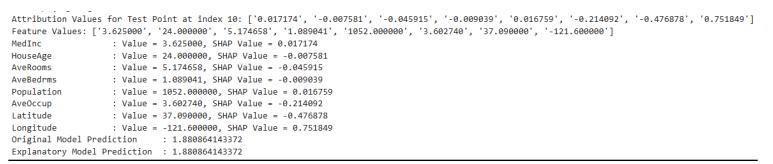

Game Theory to Machine Learning: SHapley Additive exPlanations

Fifi Siddiqui Y12

Maths Journal

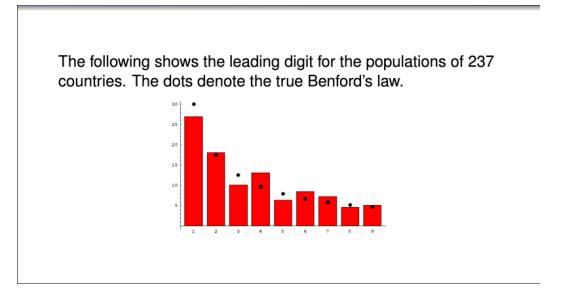

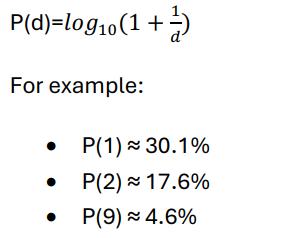

Benford's Law: The Strange Predictability of Numbers

Shaurya Mehta Y12

Medics Journal

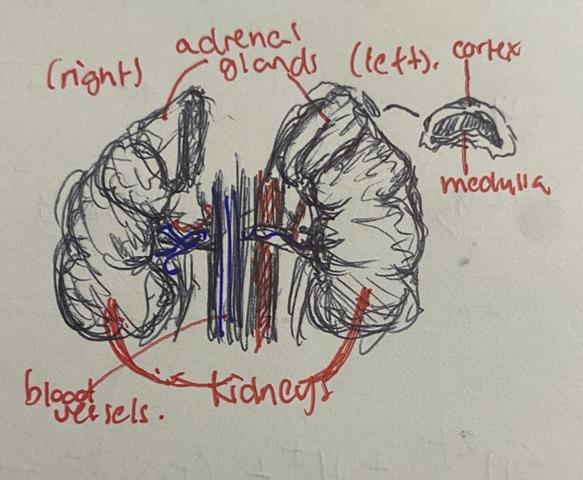

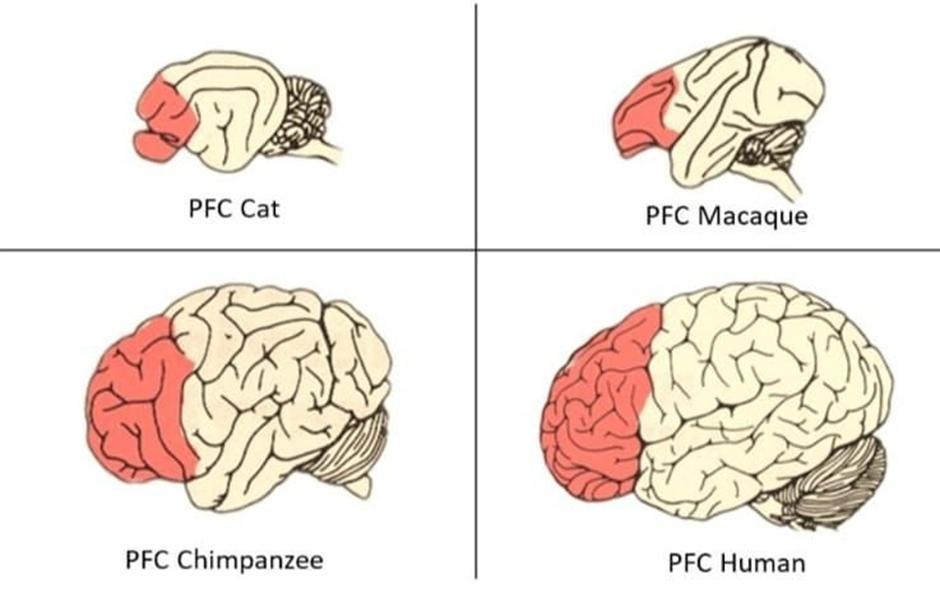

To Fight or to Fly? To Freeze or to Fawn? An Evolutionary Viewpoint

Sophie Li Y12

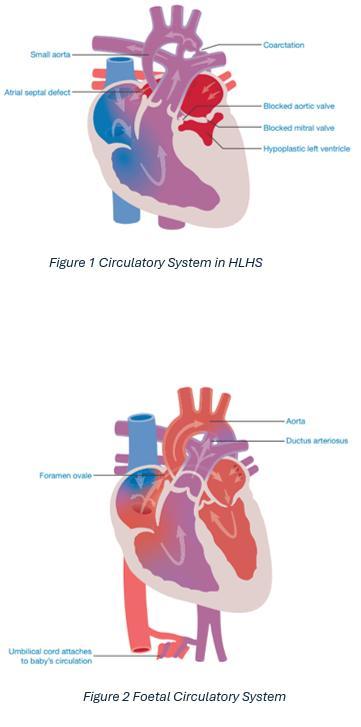

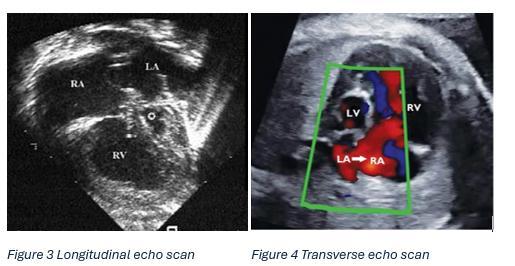

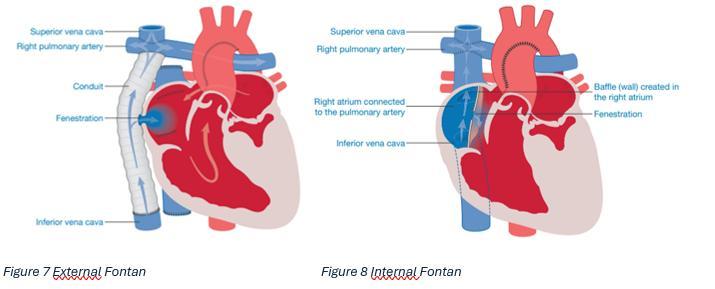

Hypoplastic Left Heart Syndrome

Hermione Kerr Y12

Modern Foreign Languages Journal

The Spanish Siesta

Freya Keable Y13

Multilingualism in Morocco

Vaidehi Varma Y12

Physics and Engineering Journal

The Physics of Time: From Newtonian Absolutes to Einsteinian Relativity

Anna Greenwood Y12

A Hunt for the Invisible

Eashan Rautaray Y12

When I set out to edit this year’s Academic Journal, I perhaps naively thought that it would be a relatively simple task. I soon found this to be far from the reality. However, more than the editing itself, the greatest difficulty I faced was in choosing among the articles submitted to comprise this journal. It is testament to the talent, passion, and initiative of students at Saint Olave’s that for every article published here, I could easily have included many more of equal calibre, equally deserving of recognition.

From personal experience, I can confidently say that the greatest strength of this school is the depth of opportunity available for students – of which this journal is a product. Regardless of where one’s interests lie, there is a society here which indulges that interest, enabling students to delve deeper into their chosen niches and create such brilliant works as those represented in the coming pages. With topics ranging from the empirical methods of mathematics to the intangibilities of politics, from the molecular to the cosmic, and inspired by societies as varied as Aviation and Machine Learning, the diversity of passions at this school is plain to see.

I could go on for pages about how excellent the articles in this journal are, but it is probably easiest for me to let you discover that for yourself. I would like to thank all the students and society leaders who have contributed to this publication, and in doing so have further enriched the vibrant academic environment of this school. This journal is just a snapshot of the many society publications available on the school website, and I cannot encourage you enough to go explore those too in your free time. As it is, I hope you find the articles here as enjoyable as I did while editing them. There really is something for everyone.

- Shaun Abraham Academic Journal Editor 2025

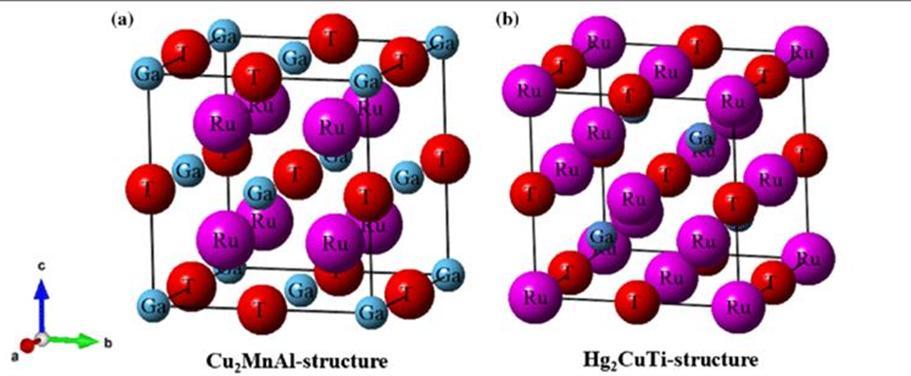

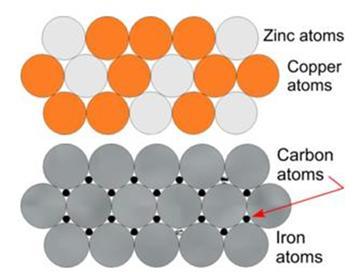

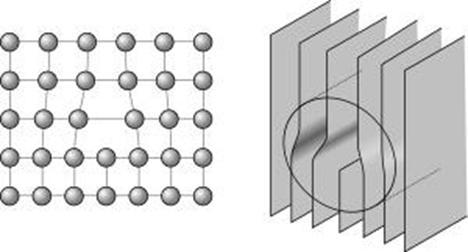

High entropy alloys are, as the name suggests, a bit chaotic. To understand these odd materials, though, we must first understand the structure of normal alloys. I’m sure you all know what alloys are: mixtures that contain a primary metal and a small amount of another element. The inclusion of the other element disrupts the even layers of the primary metal and makes it harder for them to slide over each other, and hence the alloy can withstand greater forces without deformation. You’ll most definitely be familiar with the diagram on the left, below; this diagram is an alright visualisation in 2D, but in reality, alloys have 3D crystalline structures like the diagram on the right:

As you can see, depending on the different elements used, the alloys arrange themselves in a different crystalline structure to accommodate the relative sizes of the atoms. As a result, an alloy of metals with similarly sized atoms will have different packing to one whose atoms differ greatly in size. The first kind is known as a substitutional solid solution alloy (a good example would be brass, with Zn and Cu), and the second is known as an interstitial alloy (which applies to the Fe and C in steel). The two structures are displayed below:

Now, what does this all have to do with aviation? Alloys have greatly improved mechanical and physical properties compared to pure metals, and allow us to test the boundaries of what we thought possible. If we go back to the Wright Brothers, they switched from their initially wooden frame to using aluminium alloys for the airframe, which was both lighter and able to withstand higher pressure loading. Nowadays, we have exciting alloys like Inconel 625, termed a ‘nickel based super alloy that possesses high strength properties and resistance to elevated temperatures’. It is often used in hypersonic aircraft due to this! Another version of Inconel – Inconel X – was used to coat the hypersonic aircraft X-15 in order to withstand the effects of aerodynamic heating at the crazy speeds it went at!

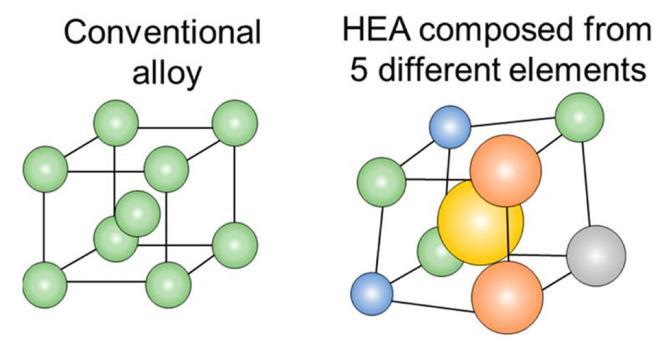

But wait! Alloys may have been cool at the time the X-15 was made (1958), but we have the newer and better subgroup of high entropy alloys waiting to be used. Now we’ve seen the crystalline structures of normal alloys, let’s expand that to high entropy alloys! As you’ve seen, conventional alloys have a relatively regular crystalline structure. High entropy alloys (HEAs) contain five or more elements in roughly equal amounts, which results in high configurational entropy (which essentially means more disorder because of the many different types of atoms). Because of this, HEAs are normally composed of elements with different crystalline structures, and end up looking something like this:

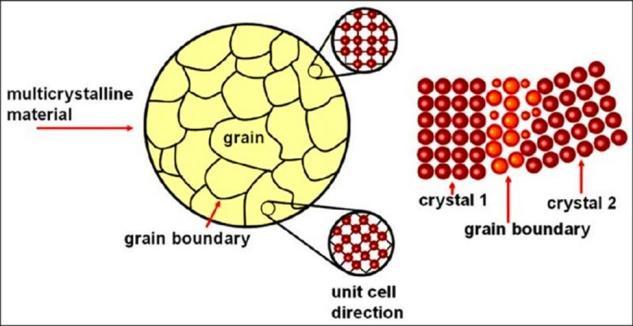
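To put a rough number on the "configurational entropy" mentioned above, the ideal entropy of mixing per mole can be estimated from the mole fractions x_i of each element as ΔS = −R Σ x_i ln x_i. The sketch below assumes the atoms are mixed completely at random, which is an idealisation; the function name and the two example compositions are made up purely for illustration.

```python
import math

R = 8.314  # molar gas constant in J/(mol*K)

def configurational_entropy(mole_fractions):
    """Ideal configurational entropy of mixing, in J/(mol*K).

    Assumes the atoms are mixed completely at random (an idealisation).
    """
    if abs(sum(mole_fractions) - 1.0) > 1e-9:
        raise ValueError("mole fractions must sum to 1")
    return -R * sum(x * math.log(x) for x in mole_fractions if x > 0)

# Hypothetical compositions, chosen only to show the trend
conventional = [0.9, 0.1]   # one primary metal plus a small addition
high_entropy = [0.2] * 5    # five elements in equal amounts

print(round(configurational_entropy(conventional), 2))
print(round(configurational_entropy(high_entropy), 2))
```

For five elements in equal amounts the formula reduces to R ln 5, roughly 1.6R, which is several times the value for the dilute two-element example.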

Contrary to what you might expect, this weird shape actually results in HEAs having higher strength, hardness, wear resistance, thermal stability and corrosion resistance compared to their conventional counterparts. In order to understand why this is the case, we need to take an even closer look at crystal lattices, namely ‘dislocations’. Due to natural variation, crystal lattices have points at which they are distorted by an additional plane of atoms sliding between the regular structure. When a shear force is applied along the crystal lattice, the dislocation shifts until it reaches a grain boundary, as displayed below:

Due to the irregular structure of the HEA crystal unit cells, they have more grains. This means that when a shear force is applied to them, the dislocations have less of a distance to shift before they hit a grain boundary, and thus the metal does not deform.

Due to their unique combination of mechanical, thermal and physical properties, HEAs have various applications in aviation. One example is that HEAs may be used for high-temperature applications, such as in jet engines and hypersonic vehicles – so perhaps the X-15 could’ve lasted even longer if it had been coated with an HEA! The usage of HEAs in jet engines means that fuels can be combusted at higher temperatures, and thus complete combustion can occur, which means more energy is released from the same amount of fuel.

Unfortunately, as with every emerging piece of technology, we’re not quite there yet… HEAs are notoriously difficult to synthesize at scale whilst keeping costs low, so it isn’t really feasible to use them in aircraft just yet. Their chaos lends itself to helpful properties, but it also means that we lack a clear understanding of the relationships between composition, microstructure and properties, and so can’t really predict what will happen to them in different conditions.

Anyone who has flown anywhere would be very lucky never to have experienced in-flight turbulence, even in its mildest form. Turbulence comes primarily from moving air (in most cases wind) hitting and exerting forces on an aircraft, or from dramatic (and I mean very sudden) changes in air pressure that may knock it out of its original alignment. To fully understand that, you can think of the very basics of how an aircraft flies – the wings create lift through manipulation of the air they are travelling through. If this air is suddenly disturbed by something like a gust of wind, it can change the amount of lift produced by the wings and cause the aircraft to be deflected up or down, or sideways if the wind comes from the side. Simples!

Now, this obviously can cause forces on the occupants of the aircraft as it is nudged about, which is what we feel when the aircraft experiences turbulence.

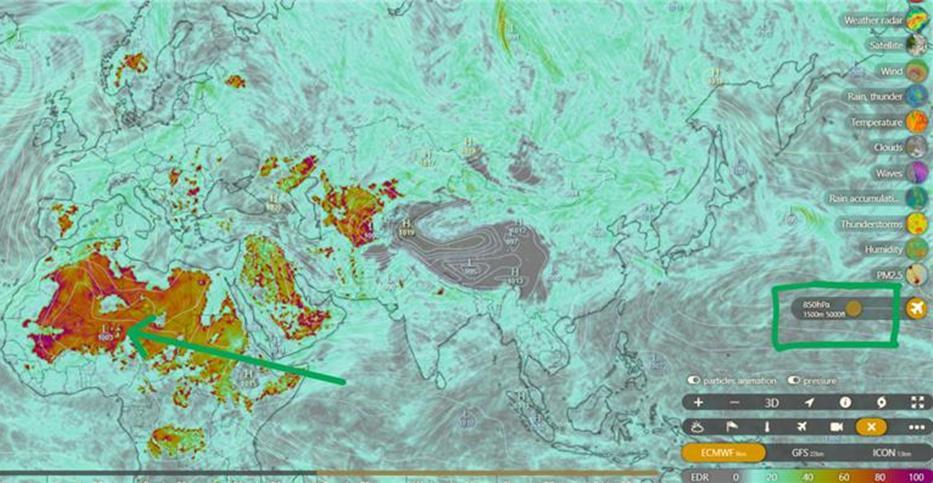

Because wind creates turbulence, we will look at what creates the wind. Most commonly, this will be weather – the formation of clouds like a cumulonimbus (BIG cloud) or storms – and this is the turbulence we can see and predict. Pilots will be able to see this weather on their weather radars if they can’t see it out of their window (which they should be able to) or if they haven’t been notified by air traffic control (ATC) about weather they may encounter:

The patches of green, orange and red display the varying severities of the weather ahead on the weather radar.

Obviously, the pilots can then ask ATC if they can be directed around the weather if it’s very severe, and, apart from in the most critical cases, this will be approved. Naturally, if it’s just a small cloud the assumption is that there is minimal effect on the aircraft, and thus little worry is spent on flying through the small clouds (if you ever descend/ascend through clouds in a flight, you’ll most likely experience small amounts of turbulence caused by the dramatic pressure changes within the cloud). It is generally only the most serious of weather which demands avoidance:

A typical cumulonimbus cloud. These are clouds which pilots should make a concerted effort to avoid, such is their potential to cause severe turbulence.

Aircraft can also encounter turbulent movements of air in scenarios like:

1. Mountain waves: why you’ll find no aircraft over the Himalayas – https://en.wikipedia.org/wiki/Lee_wave

2. Thermals – https://wiki.ivao.aero/en/home/training/documentation/Turbulence (under convective turbulence)

3. Wake Turbulence – https://en.wikipedia.org/wiki/Wake_turbulence

All of these are central to how flight paths and plans are structured, as well as to the key principles of safe flight practised in aviation.

However, aircraft like SQ321 can also come across what is known as clear air turbulence. This turbulence is essentially “unavoidable” because it is almost impossible to predict, at the speeds aircraft are travelling at, when and where the aircraft might meet it. It occurs primarily between 22,000 and 39,000ft, which is where most airliners will take their cruising altitude, and as a result you are most likely to experience clear air turbulence in the cruise.

The turbulent air is formed at the point where bodies of air moving at widely different speeds meet, through the shear caused by the “friction” between the two bodies. The reason it is so hard to detect clear air turbulence is that, as suggested by the name, it consists of entirely “clear” air, so it is almost impossible to see with the naked eye or detect by radar. Various methods can be implemented, but these can take a while to find the pockets of turbulence, so for the most part predictions are made, based on the prevalence of different factors that can cause clear air turbulence, to warn pilots. Mountain waves and thermals, listed earlier, are also forms of clear air turbulence, though these can be predicted more easily than the cause just described, which occurs most frequently near jet streams. We can visualize this using the displays below.

Top: at 5000ft, you can see the rising thermals from the Sahara Desert, which lead to a prediction of very high amounts of clear air turbulence.

Bottom: at 30000ft, you can see predictions following the shapes of jet streams.

As you can also see from the picture above, I marked the position where Singapore Airlines Flight 321 experienced clear air turbulence resulting in the death of a passenger in May 2024, above the Irrawaddy Basin between Thailand and Myanmar. It seems from here that there is little clear air turbulence predicted, which contradicts what SQ321 went through, but it further emphasises the point that clear air turbulence is so hard to accurately predict, which is why it can be so dangerous to aircraft. My parents, in their days working in South-East Asia, flew between Thailand, Myanmar and Bangladesh very frequently, and they always mention the fact that the region is turbulent, owing to the huge weather systems which can develop in those countries.

Clear air turbulence can range hugely in intensity, going from a level barely noticeable to something like what SQ321 experienced, and likely even further. Indeed, there are other famous cases of aircraft suffering from severe clear air turbulence, which can be found through this link: https://en.wikipedia.org/wiki/Clear-air_turbulence#Effects_on_aircraft

On the 6th of November 1957, a new era of flight was about to begin – a new, revolutionary aircraft built by the British plane manufacturer, Fairey Aviation. This was neither a regular plane nor a regular helicopter, yet it could perform Vertical Take-Off and Landing (VTOL) manoeuvres while flying internationally, carrying seventy-five people. It may look part plane, part helicopter, but in truth – it is neither.

All of that cost airlines just 25p per seat mile of travel in today’s money, or less than 2p per seat mile at the time, cheaper than any helicopter. Even the colossal Airbus A380 is only just half the price of this 1960s marvel to operate relative to its range and capacity, even with modern technologies, huge size, and unbeatable range. To clarify, a seat mile is a unit of price used to find the cost efficiency of a plane: the cost of operating it per person per mile it travels. What was this helicopter-plane hybrid super-aircraft? The Fairey Rotodyne.
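As a quick illustration of the seat mile idea, the cost per seat mile is just the operating cost of a trip divided by the number of seats multiplied by the miles flown. The sketch below uses a made-up function name and invented trip figures; they are not real Rotodyne operating data.

```python
def cost_per_seat_mile(trip_cost, seats, miles):
    """Operating cost per seat per mile, in the same currency as trip_cost."""
    if seats <= 0 or miles <= 0:
        raise ValueError("seats and miles must be positive")
    return trip_cost / (seats * miles)

# Hypothetical trip: these figures are invented for illustration only
example = cost_per_seat_mile(trip_cost=3750.0, seats=75, miles=200)
print(f"{example:.2f} per seat mile")
```

Because the cost is shared across every seat and every mile, an aircraft with more seats or a longer route can be cheaper per seat mile even if each trip costs more in total.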

The Rotodyne was the perfect mix of these two forms of transport: an autogyro, a type of aircraft largely forgotten today.

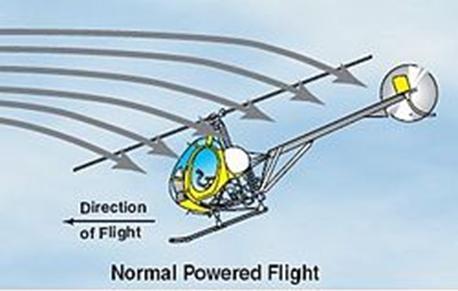

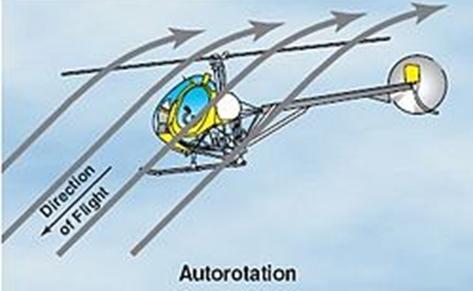

An autogyro is essentially a plane with helicopter blades on top. However, these blades are not powered, so it cannot qualify as a helicopter – instead the blades take advantage of autorotation, the tendency of these blades to spin unpowered when the autogyro is moving forward. This contributes to lift. Autorotation is also why a helicopter’s blades continue spinning even when power is lost, allowing it to land slowly, even slower than a parachute would let it. This is why autogyros were used for many years as scout planes or to deliver mail in the 1930s – they could continue flying even at speeds where any wing would stall.

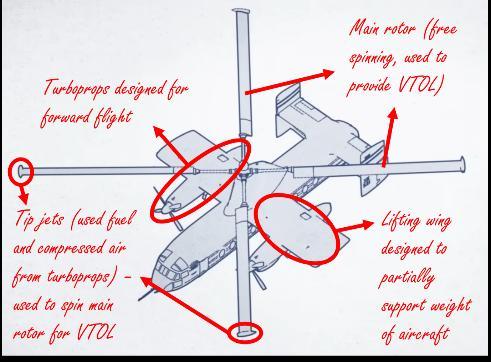

How did the Rotodyne work?

It is based on the autogyro concept but leverages parts of it to gain VTOL capabilities while remaining efficient. It had short lifting wings designed to keep it flying during forward flight (assisted, however, by the main rotor, which undergoes autorotation, creating more lift). This forward flight is maintained by the two main turboprops.

But how does it do VTOLs? The main rotor is free-spinning and has no engine attached to it, after all. This is where tip jets come in. The turboprops run during VTOL; however, they run to produce compressed air for the “tip jets” instead of providing any meaningful thrust upwards. This compressed air and some fuel are pumped through the main rotor to miniature jet engines on the tips of each blade in the rotor, allowing it to be spun up during take-offs and landings without needing to rely on autorotation, which usually requires some amount of forward flight. These tip jets would later become an issue.

Why did Fairey Aviation build the Rotodyne?

The Rotodyne was built upon the ideas behind the much smaller prototype testing aircraft, the FB-1 Gyrodyne. It was made to solve the biggest issues with travel between city centres: car traffic and cost. Travel by car was far too slow for the businesspeople who needed to attend meetings in different cities with only a few hours to travel. The solution for many? Planes.

However, there was one (very large) issue. The airports were outside the city centre, and the transport time between these airports and the city was only growing – often reaching or surpassing the flight time, which on average was only an hour.

You may have a specific transport option on your mind by this point – one that can land almost anywhere, even on the rooftop of the very building they may have a meeting in. You are not alone, and this resulted in an explosion of helicopter transport services.

However, helicopters lacked the range and speed to do longer trips. Even when they were capable of certain journeys, the new helicopter airlines that had arisen from this demand were unable to turn a profit due to the high costs of operating a helicopter, instead relying on government subsidies that could fade away at any moment.

why

Due to the nature of Britain’s aircraft industry at the time (many smaller manufacturers), many companies did not have the budget to develop a new type of aircraft from scratch, and so prioritised designing for military uses so as to be awarded a government grant for the development of the aircraft. The Rotodyne was built on this government funding. However, there were simply too many manufacturers for the government to give individual grants to, and it was also causing other problems logistically and economically. This meant Fairey Aviation and practically every other manufacturer in the industry were forced to merge into just a few larger companies.

So how did the project die? In the worst way possible. A series of truly unlucky, unfortunate events.

The mergers had the Rotodyne competing with other helicopter projects trying to solve the same issue in the same company. The tip jets were simply too loud (113dB!) for use in cities, and even with the development of mufflers dropping their loudness by 85%, they were still extremely loud. And worst of all, facing economic troubles, funding for the program was suddenly pulled by the British government in 1962. All documents and the 40-passenger prototype (labelled Type-Y, the 75-passenger version to be the Type-Z) were destroyed, with only a few scale models and remnants left of the project as a whole.

Pavlo Kotenko Y12

Disease has been an unending problem for humanity since its beginning. Many treatments and medicines have been used throughout history to fight disease, with the most significant breakthrough being the discovery of penicillin, the first antibiotic, in 1928. However, as overuse of antibiotics leads to rising numbers of MDR (multi-drug-resistant) and TDR (totally-drug-resistant) pathogens, scientists have been looking for a new treatment that could turn the tide and make MDR bacterial infections easier to fight; this is where phage therapy comes in.

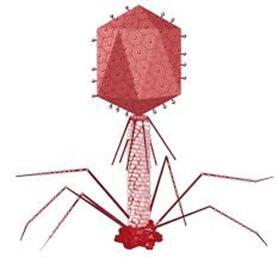

Phage therapy utilises bacteriophages (aka phages), a type of virus that replicates in bacteria and archaea, but not eukaryotes. This is key to their function because it means that, when introduced to the human body, they only target certain surface receptors, and therefore certain species of bacteria – making them specific and avoiding common downsides of conventional antibiotics such as collateral damage to the gut microbiota, or to cells that are part of the human body [1].

Another advantage of using phages is their lytic cycle. Through the lytic cycle, the phages reproduce by hijacking bacterial cells. In this way, the reproduction of the viruses is also linked to their main method of fulfilling their function as an antibacterial treatment – every bacterial cell killed grows the numbers of the phages, allowing the introduction of just a small number of them to snowball into an effective treatment for most bacterial diseases [2]. However, some phages utilise a lysogenic cycle instead, meaning that the viral DNA is replicated within the bacterial cell hosts over many generations instead of being read immediately. The bacteria are still killed at the end of the cycle, but the delay between applying the phages and their bactericidal properties taking effect is too long for practical use as an antibacterial treatment. Therefore, only the species of phage that undergo a lytic cycle can be utilised for phage therapy [3]. Another possible issue with phages is that the immune system could kill off all the phages that have been administered before they reach the target bacteria to begin reproducing, as they are also foreign to the human body, making the treatment unsuccessful.
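The "snowball" effect of the lytic cycle can be pictured with a toy calculation. The sketch below assumes every phage infects one bacterium per cycle and releases a fixed number of new phages; the starting dose and burst size are hypothetical values chosen for illustration, and the model ignores the limited supply of bacteria and clearance by the immune system.

```python
def phage_count_after(cycles, initial_phages=10, burst_size=100):
    """Phage numbers after a number of lytic cycles in a toy model.

    Assumes every phage infects one bacterium per cycle and releases
    `burst_size` new phages; bacteria supply and immune clearance are ignored.
    """
    count = initial_phages
    for _ in range(cycles):
        count *= burst_size
    return count

# Print the phage count after 0, 1, 2 and 3 lytic cycles
for n in range(4):
    print(n, phage_count_after(n))
```

Even with these made-up numbers, the count multiplies by the burst size every cycle, which is why a small initial dose can in principle grow into an effective one.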

Eventually, the same problem arises with phage therapy as did with the overuse of antibiotics: the bacteria develop resistance to the phages, and they no longer perform as well or at all. However, there are several ways in which phage therapy can be adapted to overcome this obstacle. The most common method is a “phage cocktail”: instead of administering just one phage species to target a specific species of bacterium, many different species are used to target the bacterium in different ways (e.g. by attaching to different receptors). Phages are especially suited to these kinds of “cocktails” because of their sheer abundance in nature – bacteriophages are the single most common biological agent on Earth [4]. This makes it easy to find several different species of phage that target the same species of bacteria with just a small sample of phages. Using phage cocktails, the bacteria have far fewer opportunities to survive all the different phage species and evolve a resistance to all of them, making MDR bacteria that resist phage therapy far less likely to develop. Genetic engineering can also be utilised to engineer the phages to become more effective in killing bacteria – e.g. stimulating formation of proteases that more efficiently destroy the structures of the bacterial cell.

If a phage cocktail is still not enough to deal with a bacterial infection, it can be combined with an antibiotic to make a very potent treatment. As the bacteria have had to adapt to the selection pressure of the bacteriophages, they would have lost the ability to resist antibiotics, which were no longer a significant selection pressure after prolonged exposure to phages. Ultimately, this is the most powerful application of phage therapy – as a kind of catch-22 which prevents bacteria from gaining any significant resistance to one treatment without losing their resistance to the other, effectively preventing the emergence of new MDR and TDR bacteria.

Bibliography

1. “Phage therapy: a new frontier for antibiotic-refractory infections” – Josh Jones – Royal College of Pathologists – 2024 – https://www.rcpath.org/resource-report/phage-therapy-a-newfrontier-for-antibiotic-refractory-infections.html

2. “Phage therapy: An alternative to antibiotics in the age of multi-drug resistance” – Derek M Lin / Britt Koskella / Henry C Lin – National Library of Medicine – 2017 – https://pmc.ncbi.nlm.nih.gov/articles/PMC5547374/

3. “Phage Therapy: Past, Present and Future” – Madeline Barron, PhD – American Society of Microbiology – 2022 – https://asm.org/articles/2022/august/phage-therapy-past,-present-andfuture

4. “Phages as lifesavers” – Claudia Igler – Biological Sciences Review, Volume 37 – 2024

5. “Phage therapy as a potential solution in the fight against AMR: obstacles and possible futures” – Charlotte Brives / Jessica Pourraz – Nature – 2020 – https://www.nature.com/articles/s41599-020-0478-4

Alzheimer’s disease is the most common type of Dementia. Therefore, I think it’s important to begin with a brief overview of Dementia to provide some context before exploring Alzheimer’s disease in more depth.

Dementia is an umbrella term for a collection of neurodegenerative diseases where the nerve cells (neurons) in the brain stop working properly. The causal mechanisms behind this, as well as the symptoms exhibited by the patient, vary according to the type of Dementia the patient is thought to have. There are four main types of Dementia: Alzheimer’s disease, Vascular Dementia, Dementia with Lewy Bodies and Frontotemporal Dementia (listed in order of prevalence).

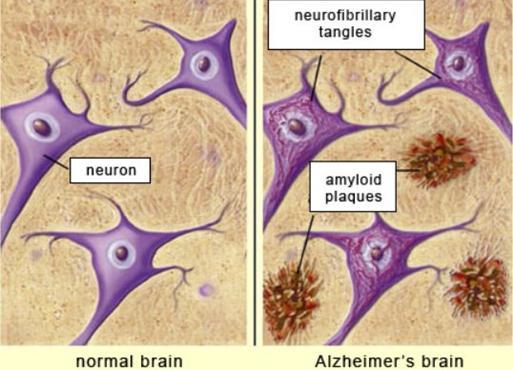

Three out of these, including Alzheimer’s Disease, are thought to be caused by abnormal deposits of proteins aggregating and disrupting neuron functioning. As these protein deposits increase in frequency and size, so does the internal brain damage caused by them, which also leads to a deterioration in symptoms. This is why Dementia is described as a ‘neurodegenerative’ disease.

The common symptoms for all of these types of Dementia involve a loss of cognitive functioning (thinking, remembering and reasoning) to the extent that the patient ends up struggling to complete basic daily tasks like walking, navigating and handling money. Contrary to popular belief, Dementia is not just memory loss and confusion. It can cause mobility issues [1], as well as a range of behavioural issues such as agitation, depression and even hallucinations. Late-stage Dementia patients may experience difficulty balancing and swallowing, and may have bladder control issues (incontinence).

In this article I am going to explore the proposed biological causal mechanisms behind Alzheimer’s disease, and how they are thought to cause its characteristic symptoms.

Alzheimer's disease has 2 main proposed causal mechanisms:

1. Beta amyloid plaques

2. Tau tangles

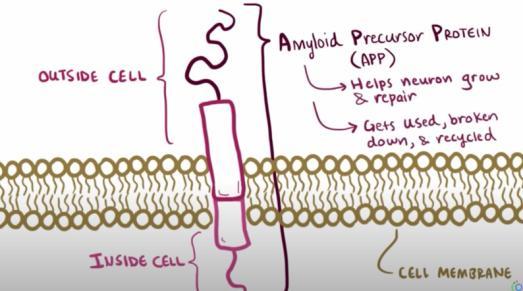

Beta amyloid plaques originate from the amyloid precursor protein (APP). This is a normal protein produced in the body which is important in the growth and repair of neurons. Usually, APP is broken down by two enzymes known as alpha secretase and gamma secretase to form soluble peptides, which are then broken down and either removed from the bloodstream or recycled. However, if beta secretase teams up with gamma secretase in the place of alpha secretase, then the leftover fragment produced is an insoluble monomer called amyloid beta. These monomers are chemically sticky, and form beta amyloid plaques as they clump together. These plaques get in between neurons and disrupt neuron-to-neuron signalling, which impairs brain functions such as memory. They may also trigger an immune response and cause inflammation, damaging the surrounding neurons. This is an example of how the damage to the brain compounds in Dementia patients. Amyloid plaques can also deposit around blood vessels in the brain (which is known as amyloid angiopathy), which weakens the walls of the blood vessels and increases the risk of haemorrhage in the brain. Haemorrhage is the term for when a blood vessel bursts, and if this occurs in the brain, the patient could develop vascular dementia as well, because a haemorrhagic stroke is a huge risk factor for vascular dementia. The patient would then have mixed-diagnosis dementia and probably also have worse symptoms. This is how the condition progresses: the damage caused to the brain worsens as more and more beta amyloid plaques build up.

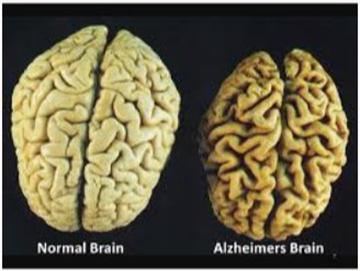

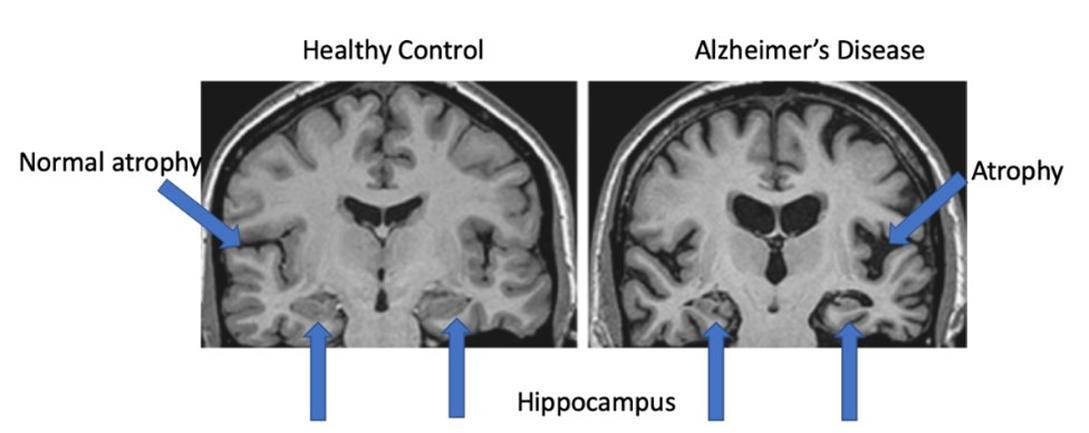

It is currently theorised that these beta amyloid plaques outside the neurons lead to activation of kinase enzymes inside neurons, which cause the tau protein inside neurons to become hyperphosphorylated. Tau ensures that the microtubules (track-like structures that ship nutrients along the length of a cell) which make up the cytoskeleton of neurons do not break apart. As a result of becoming hyperphosphorylated, the tau protein changes shape and stops supporting the microtubules, instead clumping together to form neurofibrillary tangles inside the neurons. As neurons with tangles have non-functioning microtubules, they cannot signal as well as normal nerve cells, so they may undergo apoptosis (programmed cell death). As neurons die, the brain undergoes atrophy (it shrinks). The gyri (ridges of the brain) get narrower and the sulci (grooves between the gyri) get wider. Finally, the brain ventricles, which are fluid-filled cavities in the brain, get larger. We can see this physical change from MRIs and post-mortem examinations of patients with Alzheimer’s.

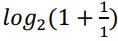

So how exactly do all of these biological changes cause the symptoms experienced by an Alzheimer's patient? In order to answer this question fully, it’s necessary to consider the functions of different parts of the brain.

The beta amyloid plaques and tau tangles are most commonly found in the temporal lobe (specifically in the hippocampus) and the parietal lobe. The temporal lobe is involved in language, memory, recognising faces, hearing and our sense of smell. The hippocampus is inside the temporal lobe, dealing with emotion as well as forming new memories and transferring them to our long-term memory store. This is why Alzheimer's patients struggle to form new memories: the neurons in the hippocampus have impaired function, so new memories are not transferred to their long-term memory stores. The parietal lobe controls how we react to our environment. It contains the somatosensory cortex, the part of the brain where sensory information from the environment arrives. If there is damage to the right parietal lobe, for example, then the person might have problems with judging distances in three dimensions and they may struggle to navigate stairs. You may be familiar with Alzheimer’s patients describing shiny floors as “wet” – this is likely due to their parietal lobe being compromised.

An interesting point to consider is that some people have the same amount of amyloid beta plaques in their brains as someone with Alzheimer's, but don’t actually have the condition in terms of the associated brain damage or characteristic symptoms [2]. These people are given the name “Super Agers” by scientists because they may live up to 100 with these beta amyloid plaques and experience no symptoms or compromise in their brain function. The mere existence of these “Super Agers” seems to contradict the current theory behind the cause of Alzheimer’s. However, it has been found that these people have significantly lower levels of tau tangles in their neurons, so perhaps they are inherently resistant to the build-up of tau tangles.

[1] Tolea, M. I., Morris, J. C., & Galvin, J. E. (2016). Trajectory of Mobility Decline by Type of Dementia. Alzheimer disease and associated disorders, 30(1), 60–66, available at https://pmc.ncbi.nlm.nih.gov/articles/PMC4592781/pdf/nihms659303.pdf (date accessed 05/02/25)

[2] Gefen, T., Kawles, A., Makowski-Woidan, B., Engelmeyer, J., Ayala, I., Abbassian, P., Zhang, H., Weintraub, S., Flanagan, M. E., Mao, Q., Bigio, E. H., Rogalski, E., Mesulam, M. M., & Geula, C. (2021). Paucity of Entorhinal Cortex Pathology of the Alzheimer's Type in SuperAgers with Superior Memory Performance. Cerebral cortex (New York, N.Y. : 1991), 31(7), 3177–3183, available at https://pmc.ncbi.nlm.nih.gov/articles/PMC8196247/pdf/bhaa409.pdf (date accessed 05/02/25)

Introduction

In 1959, Dr Paul Janssen, a Belgian chemist who founded Janssen Pharmaceutica (now part of Johnson and Johnson), became the first person to synthesise fentanyl. At the time, it was the most potent opioid (a class of pain-relieving drugs originally derived from the opium poppy plant). It is over 100 times more powerful than morphine. It is now often used by the NHS to treat severe pain during or after an operation, and it is used as an alternative when other painkillers are ineffective. Unfortunately, it has been misused, both by doctors through inappropriate prescriptions and by illegal manufacturers, especially in the United States. On 26th October 2017, US President Donald Trump declared the opioid crisis a national public health emergency. In 2022, there were an alarming 73,838 deaths from fentanyl overdoses in the US, compared with only 1,295 deaths 20 years earlier.

Basic Chemistry of Fentanyl

Figure 1: Skeletal formula of Fentanyl

Molecular formula: C22H28N2O

IUPAC Name: N-(1-(2-phenethyl)-4-piperidinyl)-N-phenyl-propanamide

Boiling Point: 466°C

Melting Point: 83-84°C

Solubility in Water: 200 mg/L at 25°C

Density: 1.087 g/cm³
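The molecular formula above is enough to work out fentanyl's molar mass. The short sketch below does that arithmetic; the atomic masses are rounded standard values added here for illustration, and the function name is hypothetical.

```python
# Rounded standard atomic masses in g/mol (assumed values, not from the article)
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "N": 14.007, "O": 15.999}

# Atom counts read directly from the molecular formula C22H28N2O
FENTANYL = {"C": 22, "H": 28, "N": 2, "O": 1}


def molar_mass(formula):
    """Sum (atomic mass x number of atoms) over every element in the formula."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


if __name__ == "__main__":
    print(f"Molar mass of fentanyl: {molar_mass(FENTANYL):.2f} g/mol")
```

The result is roughly 336 g/mol, which is a fairly small molecule by the standards of most drugs.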

Fentanyl is made up of three main types of functional group: two aromatic rings, one amine, and one amide.

When Dr Janssen set out to create a new analgesic, he thought that the piperidine ring (the ring that contains a nitrogen atom) was the most important feature for analgesic activity. He and his colleagues wanted to create stronger analgesics than morphine and meperidine, so they decided to make more fat-soluble derivatives. Fentanyl has many hydrophobic regions, such as its benzene rings, allowing it to dissolve in non-polar solvents. It is slightly soluble in water because the lone electron pairs on its oxygen atom create a region of negative charge.

Why is it an effective painkiller?

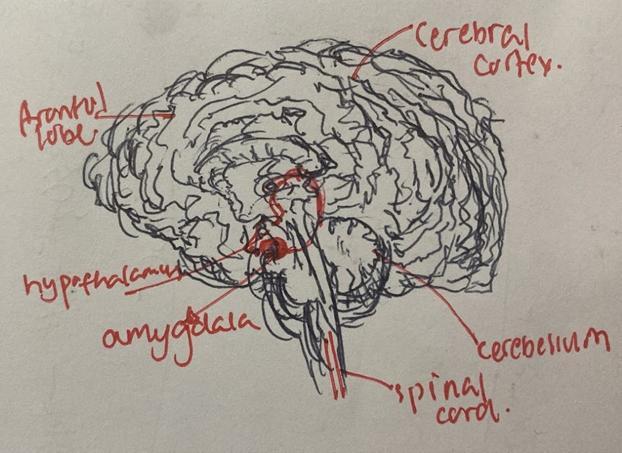

As previously discussed, fentanyl falls under the class of opioid analgesics, so it mainly acts at the μ-opioid receptor. It can be absorbed through the skin as it has a low molecular mass and high lipid solubility. Opioid receptors are found throughout the central nervous system. Usually, endorphins activate these receptors to slow down the pain signal along neurones. As opioids have a similar chemistry to endorphins, opioids can help to delay the pain signal.

Below is the step-by-step mechanism of action of opioids on opioid receptors:

1. Opioid receptors stimulate inhibitory pathways which affect the periaqueductal grey (PAG) and the nucleus reticularis paragigantocellularis (NRPG).

2. More inhibitory neurones are stimulated, which causes more neuronal activity in the NRPG (situated at the brainstem).

3. More neurones which contain 5-hydroxytryptamine and enkephalin are stimulated. These neurones are connected to the dorsal horn (located in the spinal cord).

4. There is less transmission of pain from the periphery to the thalamus, which is responsible for relaying signals to the cerebral cortex.

What makes fentanyl special is that analgesia may take effect within only 1-2 minutes of an intravenous injection. Tablets and lozenges take 15-30 minutes to work. Low plasma concentrations of 0.2 to 1.2 ng/mL of fentanyl are enough for somebody to be unable to feel pain. Tablets and lozenges wear off after 4-6 hours.

Why is fentanyl addictive and deadly?

When discussing pain, there are two main neurotransmitters that we should focus on: glutamate (an excitatory signal) and GABA (an inhibitory signal). Opioids reduce the amount of GABA secreted towards the nucleus accumbens and other areas. Usually, the nucleus accumbens secretes dopamine, which is often known as the “happy hormone”, and GABA keeps this release in check. Therefore, when opioids bind to opioid receptors, more dopamine is released in the central nervous system. Fentanyl also suppresses the release of noradrenaline, which affects digestion, wakefulness and so on. As the body becomes used to opioids, it may have fewer opioid receptors or become less responsive, leading the individual to take more fentanyl to produce the same effect. This reduces noradrenaline levels further, so the body creates more noradrenaline receptors, which makes it dependent on opioids to maintain the balance. If the individual stops taking fentanyl, the body is far more sensitive to noradrenaline, causing stomach aches, fever and other excruciating symptoms. Even a simple activity such as wearing a shirt can be painful. Another problem is that fentanyl can lead to respiratory depression. When the blood is saturated with fentanyl, breathing slows and less CO2 is exhaled, so you are unable to breathe properly.

Why is fentanyl popular among criminals?

The Drug Enforcement Administration says that 2mg of fentanyl is lethal, so it would seem to make sense for dealers to avoid selling fentanyl, as it leads to the death of many customers. However, it is cheap and highly addictive, which makes it good for business. This is why fentanyl can be found in drugs such as cocaine and counterfeit Xanax.

Fentanyl is easy to synthesise from precursors, the building blocks used to form new chemicals, which is why these precursors are strictly regulated. For fentanyl, the main precursor contains a piperidine ring (which contains nitrogen) and at least one of the other functional groups mentioned earlier. However, the other functional groups are slightly modified so that they can still produce the same effect as regular fentanyl but avoid the risk of legal trouble. Think about making ramen: using instant ramen is a lot easier and more convenient than making the soup from scratch. As illicit drug dealers only require a small dose, it is easy for them to hide chemicals in tiny containers with false labels.

A reporter from Reuters found that even a 12-year-old could make fentanyl and that the process was as easy as making “chicken soup”. To avoid inspiring anybody to copy this, we will only discuss how it is processed. The powder is made into pills which also contain sugars, other painkillers, and colouring. Shockingly, Reuters were also able to buy 12 fentanyl precursors, and most of the chemicals were simply mailed to them as packages. These would be sufficient to create 3 million tablets, and they only spent $3,607.18, with most of the money paid in Bitcoin. Some of the chemicals arrived under fake labels such as hair accessories.

Conclusion

Fentanyl is still a common analgesic used in the NHS and it has helped to relieve the pain of many patients. However, the UK government must be vigilant to avoid a fentanyl crisis happening here. Fentanyl could be a game changer in the illegal drug market, so it is important to raise awareness of the risks, helping both the public and the police to avoid the disastrous consequences that it can bring.

1. Pharmaceutical Technology (2018) Fentanyl: where did it all go wrong. [online] Last accessed 2 August 2024: https://www.pharmaceutical-technology.com/features/fentanyl-gowrong/?cf-view

2. NHS (2023) About fentanyl [online] Last accessed 2 August 2024: https://www.nhs.uk/medicines/fentanyl/aboutfentanyl/#:~:text=It's%20used%20to%20treat%20severe,the%20rest%20of%20the%20body

3. Vanker, P. (2024) Number of overdose deaths from fentanyl in the U.S. from 1999 to 2022 [online] Last accessed 2 August 2024: https://www.statista.com/statistics/895945/fentanyloverdose-deaths-us/

4. Stanley, T. The Journal of Pain, Vol 15, No 12 (December), 2014: pp 1215-1226

5. National Geographic (2017). This Is What Happens to Your Brain on Opioids | Short Film Showcase. YouTube. Last accessed 2 August 2024: https://www.youtube.com/watch?v=NDVV_M__CSI

6. Pathan, H. and Williams, J. (2012). Basic opioid pharmacology: an update. British Journal of Pain, [online] 6(1), pp.11–16.

7. Institute of Human Anatomy (2022). Why Fentanyl Is So Incredibly Dangerous. [online] YouTube. Last accessed 3 August 2024: https://www.youtube.com/watch?v=LxyyvW_fcqw&t=367s

8. TED-Ed (2020). What causes opioid addiction, and why is it so tough to combat? - Mike Davis. YouTube. Last accessed 3 August 2024: https://www.youtube.com/watch?v=V0CdS128-q4

9. Ordonez, V. and Salzman, S. (2023). If fentanyl is so deadly, why do drug dealers use it to lace illicit drugs? [online] Last accessed: https://abcnews.go.com/Health/fentanyl-deadlydrug-dealers-lace-illicit-drugs/story?id=96827602

10. Chung, D., Gottesdiener, L. and Jorgic, D. (2024). Fentanyl’s deadly chemistry: How rogue labs make opioids. [online] Last accessed 3 August 2024: https://www.reuters.com/investigates/special-report/drugs-fentanyl-supply-chain-process/

11. Tamman, M., Gottesdiener, L. and Eisenhammer, S. (2024). We bought everything needed to make $3 million worth of fentanyl. All it took was $3,600 and a web browser. [online] Last accessed 3 August 2024: https://www.reuters.com/investigates/special-report/drugsfentanyl-supplychain/

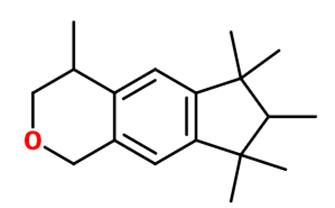

Humans have always been attracted to the sense of smell: since the first recorded form of perfume, used by the Mesopotamians in religious ceremonies over 4000 years ago, man-made scents have kept an undeniable presence in society across the globe. A smell is essentially a light molecule that floats in air, generally produced by aromatic organic compounds (hence the name). Perfumes as we know them today mainly revolve around arenes, which give them a pleasing scent: these aromatic compounds consist of a cyclic, planar structure with an abundance of C-H bonds. Since C-H bonds are non-polar, hydrogen bonds are not formed between arene molecules, leaving nothing but weak London forces to hold them together. As a result, they have a high vapour pressure, causing gaseous molecules to diffuse into the atmosphere and release scent.

Although most compounds used in perfumes tend to be arenes, other compounds such as terpenes are used because of their many carbon-hydrogen bonds. One example is geranyl acetate, the natural, unsaturated compound responsible for the scent of roses. Terpenes were originally considered to fall under the ‘aromatic’ umbrella, but have since been excluded from the group because they do not fit Hückel’s rule, which states that an aromatic compound must have ‘4n+2’ pi electrons (where n is a whole number), a quality reserved for cyclic, planar compounds.
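The ‘4n+2’ count in Hückel’s rule can be checked with one line of arithmetic. The sketch below is illustrative only: the function name is hypothetical, and it tests the electron count alone, so a molecule must still be cyclic, planar and fully conjugated to count as aromatic.

```python
def satisfies_huckel_count(pi_electrons):
    """Return True if pi_electrons equals 4n + 2 for some whole number n >= 0."""
    return pi_electrons >= 2 and (pi_electrons - 2) % 4 == 0


if __name__ == "__main__":
    # Pi electron counts for some familiar rings
    for name, electrons in [("benzene", 6), ("cyclobutadiene", 4), ("naphthalene", 10)]:
        print(name, satisfies_huckel_count(electrons))
```

Benzene (6 pi electrons, n = 1) and naphthalene (10, n = 2) pass the count, while cyclobutadiene (4) does not.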

An example of a perfume everyone knows and loves is Chanel N°5. Ernest Beaux, a renowned chemist of the 1920s, used at least 80 substances to make a simple yet intense fragrance. This perfume was special because of its complex blend of natural essences along with synthetic aldehydes.

The main components of perfume include:

Oils are essential in creating perfumes, providing the primary scents and determining the longevity of a fragrance. These oils can be natural (extracted from flowers and spices, for example via steam distillation) or synthetic (made in labs for unique scents and consistency). The oils are categorised into top, middle, and base notes that unfold over time, with top notes being light and quick to evaporate, middle notes giving character, and base notes providing depth and longevity. Perfumers blend different oils to create complex fragrances, with each oil contributing unique olfactory qualities. Some oils act as fixatives, such as musk (usually derived from the molecule Galaxolide), helping the scent last longer and remain stable over time. In the formulation process, perfume oils are diluted with carrier oils or alcohol to achieve the desired strength, with higher concentrations creating stronger fragrances. These oils contain functional groups such as alcohols, esters, aldehydes and ketones, which influence the characteristics of the scent.

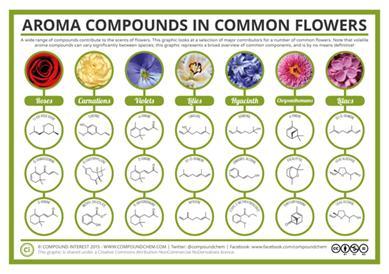

Figure 2: the different aromas coming off a single flower. Some are isomers, while others are completely different compounds. Only a few have a major impact on the overall scent of the flower; the rest are present in smaller amounts.

Like alcohols, water’s polar nature also allows it to dissolve floral and citrus oils. It also emulsifies the formulation, especially when creating colognes or lighter sprays. Water is an overlooked part of the perfume-making process, but it influences the diffusion of the fragrance into the skin, affecting how the fragrance unfolds over time.

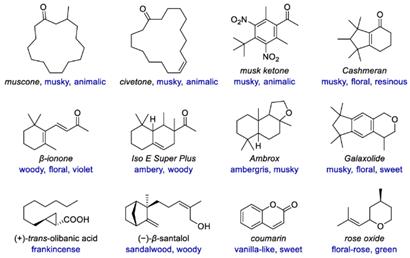

This diagram shows the different structures of perfumes. Different rings, including aromatic, non-aromatic, and unsaturated hydrocarbons, are usually used as they can easily be obtained from natural resources and petrochemicals. A common reaction used to build such rings is the Diels-Alder reaction, a cycloaddition between pi-bonded reactants.

➢ A conjugated diene (in its cis arrangement) and an alkene (pi bond) react to form a 6-membered ring.

➢ Two carbon-carbon sigma bonds are formed, as well as a new carbon-carbon double bond. The reaction is faster when there are electron-withdrawing groups on the alkene and electron-donating groups on the diene.

➢ 3 pi bonds are broken.

The Diels-Alder reaction occurs at moderate temperatures, often with a catalyst, and is key both in synthesising fragrances and in other industries.

Fragrance chemistry is a unique blend of both art and science. As our tastes become more sophisticated and sustainability gains importance, the reactions used to captivate our senses are becoming more complex.

The world of cybersecurity is ever-evolving, and few concepts have shaken it up quite like Zero Trust Architecture. If you have been following developments in network security, you might have come across the term “zero trust,” which can sound rather dramatic. Indeed, it is a bold and somewhat radical idea: to trust no one and nothing, either inside or outside a network, without strict verification. This article will explore what Zero Trust Architecture is all about, why it emerged, and how it might shape the future of digital security. Although the idea may seem technical, the core principles are easy enough to grasp. With the right perspective, Zero Trust can be seen as a logical progression in the ongoing battle against cyber threats, one that invites us to question long-held assumptions and embrace a more dynamic, flexible way of defending our online spaces.

Before delving into Zero Trust Architecture itself, it is worth looking at the context in which it was conceived. In earlier years, network security was largely based on what is often called a “castle-and-moat” approach. You can imagine an ancient fortress with thick walls, guarded gates, and perhaps a moat surrounding it. The idea was that anyone inside the fortress was effectively “trusted,” whilst those outside were treated as potential attackers. The digital equivalent involved building a robust perimeter around a network using firewalls, intrusion detection systems, and similar tools. Once you were inside, you had a relatively open environment in which data and information flowed freely. This approach was considered adequate for organisations in which employees worked mainly at office locations, often using desktop computers connected directly to a local network. The fortress (or perimeter) was clear, and traffic crossing the boundary could be carefully filtered.

However, times have changed. Today, people work from home and coffee shops, or on the move, connecting to their corporate networks via remote connections and using personal devices like smartphones, tablets, and laptops. Cloud computing platforms host data in remote data centres, far away from a traditional on-premises network perimeter. Employees, contractors, partners, and even customers may require different levels of access to resources. Threats have also evolved, becoming more sophisticated and often originating from within the network itself, whether through malicious insiders or by hijacking legitimate user accounts. Consequently, relying on a single well-guarded perimeter has become insufficient. Once an attacker slips inside by stealing credentials or exploiting a vulnerability, the trust-based nature of internal networks makes it easy to roam around, exfiltrating data or causing disruption.

Recognising this challenge, cybersecurity experts began to question the premise that “inside” users automatically deserve trust. In 2010, an analyst at Forrester Research named John Kindervag popularised the term “zero trust.” In essence, zero trust proposes that no user, device, or system should ever be inherently trusted. Instead, they must earn trust by repeatedly verifying their identity, privileges, and security posture. Organisations such as Google started adopting principles akin to zero trust to protect their internal systems, especially after experiencing high-profile attacks that exploited vulnerabilities in the traditional perimeter model.

The rise of cloud computing also accelerated the uptake of zero trust, because hosting data and services in someone else’s data centre implied that the old fortress walls had become porous at best. When resources are scattered across different geographies, with users constantly connecting from unpredictable locations, trust must be re-evaluated every time. Although zero trust did not appear out of thin air (it built on decades of research and earlier security best practices), it is often viewed as a fresh perspective that cements the “never trust, always verify” ethos. This shift resonates with the increasingly decentralised digital ecosystem, where static boundaries no longer apply. Moreover, as regulatory requirements around data privacy and security become stricter, zero trust provides a structured, auditable framework for controlling and monitoring access.

At the heart of Zero Trust Architecture lies a set of core principles that guide how resources are protected:

1. Never Trust, Always Verify: The baseline assumption is that no user or device is trustworthy by default. Every access request needs verification, ideally more than just a username and password. Multi-factor authentication (MFA) is a common requirement in zero trust systems, ensuring that an attacker cannot just guess or steal a single set of credentials and then freely wander about.

2. Least Privilege Access: Even after verifying identity, a user should only be granted the minimum amount of privilege necessary to perform their tasks. If someone only needs to read certain files, they should not gain access to modify them. This principle limits the scope of damage if an attacker manages to compromise an account.

3. Micro-Segmentation: Traditional networks might treat the internal network as a large open space. Zero trust, by contrast, segments the network into small zones or micro-perimeters. Each zone may contain a specific application or set of data. Access to one zone does not imply access to others, making it more difficult for attackers to move laterally.

4. Continuous Monitoring and Validation: Rather than a simple, one-off check at login, zero trust encourages constant monitoring of user behaviour and device health. If something odd is detected, such as an unusually large data transfer or access from an unfamiliar location, further validation can be demanded, or access can be blocked altogether.

These principles collectively encourage organisations to think differently about security. Instead of hoping to keep the “bad guys” out, zero trust presupposes that attackers might already be inside, or that they could easily waltz in if we are not vigilant. By layering multiple checkpoints and restricting privileges, zero trust makes it far harder for cybercriminals to exploit a single vulnerability or stolen credential to compromise an entire network.
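Least privilege and micro-segmentation can be pictured as a table of explicit grants in which anything not listed is refused. The sketch below is a toy model, not how any particular product works: the user names, zone names and the `GRANTS` table are all hypothetical.

```python
# Hypothetical grants: (user, zone) -> the only actions that user may perform there.
# Anything not listed is denied by default.
GRANTS = {
    ("alice", "payroll-db"): {"read"},
    ("bob", "payroll-db"): {"read", "write"},
    ("alice", "wiki"): {"read", "write"},
}


def is_allowed(user, zone, action, grants=GRANTS):
    """Default-deny check: allow only if this exact action is granted in this zone."""
    return action in grants.get((user, zone), set())


if __name__ == "__main__":
    print(is_allowed("alice", "payroll-db", "read"))   # granted explicitly
    print(is_allowed("alice", "payroll-db", "write"))  # least privilege: read only
    print(is_allowed("bob", "wiki", "read"))           # no grant in this zone
```

Because each grant names one zone, a stolen account only opens the zones listed for it, which is the lateral-movement limit described in principle 3.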

Despite zero trust being underpinned by technology, the human element is crucial. Organisations must ensure that staff, contractors, and even customers understand how the new security measures work and why they are necessary. A carefully considered zero trust strategy might involve regularly training employees in best practices like using strong passphrases, recognising phishing attempts, and reporting suspicious behaviour. It also typically requires changes in organisational culture. The notion that being “inside” the network confers inherent trust is deeply ingrained in many workplace environments. Shifting to a zero-trust mindset can sometimes lead to frustration if people find themselves repeatedly challenged for credentials or forced to navigate micro-segmented networks.

However, if introduced with clarity and accompanied by well-designed authentication processes, zero trust can actually feel less cumbersome for end users. Technology such as single sign-on (SSO) and adaptive authentication can minimise friction, asking for additional verification only when risk indicators arise, such as logging in from a new country or at an unusual hour. The trick is striking the right balance: you want robust security without making daily tasks unbearably tedious. Communication is key: if users understand why zero trust policies exist and how they protect sensitive data, resistance to change usually decreases.
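Adaptive authentication can be thought of as adding up risk signals and only interrupting the user when the total crosses a threshold. The following is a minimal sketch under invented assumptions: the signal names, weights and thresholds are hypothetical and chosen purely for illustration.

```python
# Hypothetical weights for each risk indicator (higher means more suspicious)
RISK_WEIGHTS = {"new_country": 40, "unusual_hour": 20, "new_device": 30}


def risk_score(signals):
    """Add up the weights of the risk indicators present on this login attempt."""
    return sum(RISK_WEIGHTS.get(name, 0) for name in signals)


def decide(signals, step_up_at=30, block_at=70):
    """Allow quietly at low risk, ask for extra proof at medium risk, block at high risk."""
    score = risk_score(signals)
    if score >= block_at:
        return "block"
    if score >= step_up_at:
        return "ask_for_mfa"
    return "allow"


if __name__ == "__main__":
    print(decide(set()))                          # routine login
    print(decide({"new_country"}))                # one strong indicator
    print(decide({"new_country", "new_device"}))  # several indicators together
```

Most logins carry no risk signals and pass straight through, which is why the approach can feel lighter for users than a blanket re-authentication rule.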

Zero Trust Architecture does not usually rely on a single product or technology but rather an ecosystem of tools and frameworks that work together:

• Identity and Access Management (IAM): At the core, zero trust requires robust identity management. This goes beyond merely having a user database. It typically involves automated workflows for provisioning and deprovisioning accounts, enforcing MFA, and generating detailed access logs for auditing purposes.

• Endpoint Security: In a zero-trust world, each device is scrutinised for compliance with security policies. Is the device running updated antivirus software? Has it been patched recently? Is it jailbroken or running suspicious processes? Tools that can verify the health and posture of an endpoint device are essential to enforce trust on a per-connection basis.

• Network Segmentation and Firewalls: Proper segmentation requires next-generation firewalls capable of enforcing granular policies. These might operate at the application layer, recognising specific services and controlling traffic accordingly. Software-defined networking (SDN) can help create dynamic segmentation, spinning up or tearing down network “segments” in response to real-time conditions.

• Security Analytics and Monitoring: Continuous monitoring demands the collection and analysis of vast amounts of data. Security information and event management (SIEM) systems and advanced analytics platforms can help spot anomalies, detect intrusions, and take automated actions such as isolating a device or locking an account.

These components intertwine to create a multi-layered defence. For instance, consider a remote employee trying to access a company’s internal database. The user must first prove their identity via MFA, then the device must pass an endpoint security check confirming it is patched and free of malware. Only then is a secure connection established to a specific micro-segment of the network hosting the database. Throughout this session, if any suspicious activity arises, like unusual download patterns, the session might be terminated or a secondary verification requested.
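The remote-employee example is essentially a chain of checks in a fixed order, followed by ongoing monitoring. The sketch below models that order; the class, function names, segment names and download limit are hypothetical, and real systems would gather these signals from IAM, endpoint and monitoring tools.

```python
from dataclasses import dataclass


@dataclass
class Device:
    patched: bool
    malware_found: bool


def open_session(mfa_passed, device, requested_segment, permitted_segments):
    """Run the checks in order and stop at the first failure."""
    if not mfa_passed:
        return "denied: identity not verified"
    if not device.patched or device.malware_found:
        return "denied: device failed posture check"
    if requested_segment not in permitted_segments:
        return "denied: segment not permitted"
    return "connected: " + requested_segment


def review_session(bytes_downloaded, limit_bytes=500_000_000):
    """Continuous monitoring: an unusually large download triggers re-verification."""
    return "continue" if bytes_downloaded <= limit_bytes else "re-verify"


if __name__ == "__main__":
    laptop = Device(patched=True, malware_found=False)
    print(open_session(True, laptop, "hr-database", {"hr-database"}))
    print(review_session(2_000_000_000))
```

Passing the login checks is not the end of the story: `review_session` would be called repeatedly while the connection is open.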

Like any paradigm shift, zero trust is not without its critics. One of the most frequent concerns is complexity. Setting up micro-segmentation, enforcing consistent policies across cloud and on-premises resources, and integrating advanced monitoring tools can be daunting and expensive. Smaller organisations may lack the technical expertise or budget to deploy a full-blown zero trust framework. On the other hand, many security vendors market “zero trust” solutions as if you can just buy a product and instantly become zero trust-compliant. In reality, zero trust is more of a philosophy requiring continuous effort, planning, and maintenance.

Another sticking point is user experience. If implemented poorly, zero trust can hamper productivity by forcing endless re-authentications and restricting access so strictly that staff cannot do their jobs effectively. Careful tuning, user education, and the adoption of technologies that streamline authentication processes are essential for ensuring that security does not become a bottleneck.

Finally, whilst zero trust certainly raises the bar for attackers, it is by no means a magic bullet. A sophisticated attacker might still manage to compromise user credentials or exploit zero-day vulnerabilities. As with any defensive strategy, constant vigilance and a layered approach remain essential. Zero trust must be seen as part of a broader security posture, one that includes security awareness training, updated patching regimes, and incident response plans.

Perhaps the best way to understand zero trust is to look at how forward-thinking organisations have applied it. One notable example is Google’s BeyondCorp initiative. This approach eliminates the need for a traditional VPN (virtual private network), instead allowing employees to access internal applications from any location but only after proving their identity and device security posture. By treating every connection as potentially hostile, Google reduced the risk that a compromised internal network segment could endanger all its services.

Banks and financial institutions are also embracing zero trust, recognising the high stakes involved in data breaches. By aggressively segmenting their networks, they make it more difficult for cybercriminals to jump from one compromised account to other lucrative targets. Some hospitals and healthcare providers have started adopting zero trust to protect patient records, especially as medical devices and telehealth services expand. If a single medical device is hacked, zero trust principles ensure the attackers cannot automatically move on to the entire database of patient information.

These success stories highlight that zero trust does not have to be a nuisance if carefully implemented. Indeed, many organisations that have adopted it report improved visibility into their networks. They know exactly who is accessing what, from which device, at any given time. This visibility can lead to better auditing and compliance, making it easier to investigate suspicious incidents and demonstrate good security practices to regulators.

As networks continue to sprawl across cloud services, remote work remains common, and data privacy regulations tighten, it is likely that zero trust will become an even more prominent aspect of cybersecurity. Developments in artificial intelligence and machine learning may further enhance the ability to monitor and respond to threats in real time. Imagine a system that, within milliseconds, analyses a user’s access request, checks hundreds of signals, calculates a risk score, and grants or denies entry accordingly, all without the user noticing more than a slight pause. As technology advances, zero trust could become more seamless, weaving itself into the fabric of everyday computing. Nonetheless, challenges remain. For smaller organisations, the complexity and cost of zero trust might seem overwhelming. In response, many cloud providers are incorporating zero trust features into their managed services, offering simpler, more cost-effective pathways to adoption. Meanwhile, standards bodies and industry groups are working to publish guidelines and best practices, hoping to reduce the guesswork and help organisations avoid costly missteps.

Cybersecurity is a continuous cat-and-mouse game, with defenders and attackers each evolving their methods. Zero trust, by taking a more granular and dynamic stance, is arguably the next logical step in this arms race. It pushes us to drop the illusion of a perfectly secure perimeter and instead focus on validating every request, every device, and every action. In doing so, it helps us adapt to a digital reality where threats can appear from anywhere, inside or out.

In many ways, Zero Trust Architecture is more a philosophy than a singular technology. It challenges traditional assumptions about where we draw our boundaries and who we consider “trusted.” By encouraging continuous verification, least privilege access, and micro-segmentation, zero trust bolsters defences against both external and internal threats. For high school students looking to pursue computer science or cybersecurity, it is a fascinating example of how new models can emerge when old assumptions no longer hold. It also demonstrates that the human aspect (user understanding, organisational culture, and willingness to adapt) remains crucial even in a field as technical as cybersecurity.

As you contemplate further studies or careers in this domain, remember that zero trust will likely be at the centre of future cybersecurity designs. You might soon be helping companies implement these ideas, refining them with machine learning, or even inventing the next evolution of security paradigms. While zero trust may sound radical at first, it is ultimately about realism. In a world where data and connections flow in every direction, verifying identities, segmenting resources, and assuming that breaches are always possible can provide a more robust and forward-looking security foundation. This mindset of constant vigilance and flexible defence will undoubtedly guide the next generation of cyber professionals and perhaps shape your own journey into the world of cybersecurity.

Mikhail Sumygin Y12

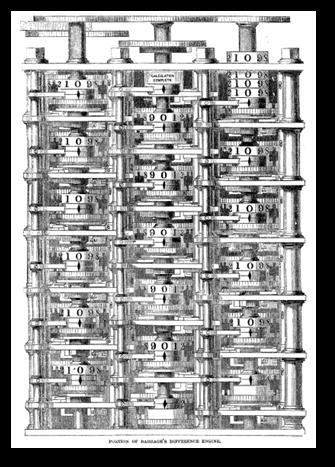

ENGINE | The first computer...kind of

It’s really hard to pinpoint a single device as the earliest computer created – does an abacus, a Sumerian clay tablet, or a gear-driven, hand-powered, mechanical ancient Greek model of the Solar System (search up the Antikythera mechanism) count?

Either way, Charles Babbage’s Difference Engine is a solid place to start.

Tired of reading massive tables that approximated complicated operations, such as logarithms and polynomial calculations, Babbage decided to create a machine that did it all for him. Thus, in 1822, he announced his design of the Difference Engine, which was completely mechanical and made use of all sorts of parts we might expect to find in a windmill rather than a computer – gears, rods, ratchets, and so on. Numbers were represented using 10-toothed gears stacked in columns to represent the decimal system. However, all these incredibly intricate mechanical parts came at a price, and an exceedingly high one apparently, as the British government cut funding in 1833 (probably after realising they could have purchased twenty-two steam locomotives from Stephenson’s factory instead of funding a design which only ended up being one-seventh complete for the same sum of money).
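The Difference Engine takes its name from the method of finite differences, which lets a table of polynomial values be produced using nothing but repeated addition, exactly the kind of work columns of gears can do. The sketch below imitates that idea in software; the function names and the example polynomial are chosen for illustration and are not taken from Babbage's design.

```python
def initial_differences(poly, order):
    """Work out the starting column: p(0) followed by its forward differences at 0."""
    values = [poly(x) for x in range(order + 1)]
    column = []
    while values:
        column.append(values[0])
        # Replace the list with the differences between neighbouring entries
        values = [b - a for a, b in zip(values, values[1:])]
    return column


def tabulate(column, count):
    """Produce `count` successive polynomial values using only additions."""
    column = list(column)
    results = []
    for _ in range(count):
        results.append(column[0])
        # Each entry absorbs the difference stored beneath it
        for i in range(len(column) - 1):
            column[i] += column[i + 1]
    return results


if __name__ == "__main__":
    start = initial_differences(lambda x: x * x + x + 41, order=2)
    print(start)
    print(tabulate(start, 6))
```

Once the starting column has been set up by hand, every further table entry costs only a couple of additions, with no multiplication required.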

While Babbage didn’t give up on his machines, going on to design the Analytical Engine and later the Difference Engine 2 in subsequent years, everyone else around him did, and he died having failed to find any more funding for his work. However, Ada Lovelace took an interest in his work and designed some of the world’s earliest computer programs, along with predicting some potential uses of the machine, including making music and manipulating symbols – functions that modern computers still carry out today.

TABULATOR | An appetiser of what computers can do

1880. A frustrated inventor can't handle the inefficient and taxing process of counting and processing census questionnaires. Enter Herman Hollerith, 20 at the time, who invented one of the world’s first electromechanical automated counting machines. Inspired by passenger tickets pierced by conductors on trains, he used the concept to create a machine that used punch-cards to store and process the ever-increasing amounts of data generated by censuses.

It worked by lowering metal pins onto the cards; if there was a hole, the pin would pass through and close a circuit, conducting a current. If a current was detected, this would register a 1; if there was none, a 0. As we know, this principle of using zeros and ones is the foundation of all the hardware that we know and love today.
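The pin-and-hole idea can be sketched in a few lines of Python. This is only an illustration: the card layout (a string in which `O` marks a hole and `.` marks solid card) and the function names `read_row` and `tally` are assumptions made for this example, not Hollerith's actual card format.

```python
def read_row(row: str) -> list[int]:
    """Return 1 where a pin passes through a hole ('O'), else 0."""
    return [1 if position == "O" else 0 for position in row]


def tally(cards: list[str]) -> list[int]:
    """Count, column by column, how many cards had a hole there."""
    counts = [0] * len(cards[0])
    for card in cards:
        for column, bit in enumerate(read_row(card)):
            counts[column] += bit
    return counts


# Three hypothetical cards, each with four punch positions.
cards = ["O..O", ".O.O", "O..."]
print(read_row(cards[0]))  # [1, 0, 0, 1]
print(tally(cards))        # [2, 1, 0, 2]
```

Each column could stand for one answer on a questionnaire, so the tally is simply a count of how many people gave that answer.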

ENIAC | First-ever general-purpose electronic computer

The Electronic Numerical Integrator And Computer was created by a group of US physicists in consultation with mathematician John von Neumann during World War II. It was the first general-purpose, electronic, programmable computer. Unlike the aforementioned tabulator, it used plugboards (panels of sockets into which cables are plugged) to input instructions, meaning the speed of the machine was not limited by how quickly you were able to shove information into it. However, a massive drawback to this was that it could sometimes take days to rewire these plugs to create the desired program.

While it didn’t make use of binary, it was one of the first computers to use vacuum tubes, giving it the label of a first-generation computer. Vacuum tubes are tubes of glass with the air removed and with electrodes inside, allowing the flow of current in them to be manipulated. By adjusting the voltage going into them, they can be made to act as switches. These were much more effective than anything mechanical gears could achieve, as they could act as fast as electrons could flow. This allowed the ENIAC to perform up to 5,000 additions per second.

TRANSISTORS | From big-room-sized to small-room-sized

Vacuum tubes, although very convenient compared to Babbage’s mechanical monstrosities, had one fatal flaw: they were massive (and used a bunch of unnecessary energy). If computers could never be shrunk beyond the size of an entire basement (as was the case with the ENIAC), any hopes of developing or even commercialising computers any further would be in vain and the industry doomed.

Thankfully, in 1947, Walter Brattain, John Bardeen and William Shockley invented the world’s first transistor. Transistors are made from semiconducting materials, such as silicon, and they work by acting as switches; similar to the vacuum tubes, depending on what is input into them, they either let current through or block it completely, allowing them to represent binary. Initially, most scientists, including our innovative trio, believed that the best transistors could do was perform extremely specialised functions – in other words, they were seen to be basically useless. However, very soon applications were found in radios, notably by the newly emerging company Sony, and soon after – you guessed it – computers.

In what are now called second-generation computers, transistors only a couple of millimetres long allowed machines to shrink massively in size. Computers, although still relatively large, now only had to take up a couple of cubic metres, such as the IBM 1620, or the DEC PDP-8, which you could stuff into your fridge if you really wanted to.

CIRCUITS | Let’s go smaller

It was on a dreary night of January that Robert Noyce beheld the accomplishment of his toils. In other words, he realised that instead of just making the transistors out of semiconductors, this could be applied to all the components, and thus the entire circuit could be made on a single chip, essentially packing all the components together very tightly. This would make it much quicker and more efficient to create computer parts, and also allow them to shrink even more. Thus the unitary, or integrated, circuit was born, and officially patented in 1961.

Now, the benefit of integrated circuits is that they act as one discrete component. Whereas before you would have a bunch of transistors, resistors, and capacitors connected by an even bigger bunch of wires, now these components are miniaturised and placed very close together, meaning much less power is used. This also improves processing speeds, as signals take less time to travel over shorter distances. Additionally, this allows computers to cost less to produce, because the parts are much easier to mass-produce: an integrated circuit can be made from just one single silicon crystal.

MICROPROCESSORS | ...even smaller

At this point, computers still used stacks of integrated circuits to make up CPUs. It was nice, and they were decently small, so much so that calculators and “minicomputers” began to gain commercial success. However, the release of the Intel 4004 in 1971 took it one step further – although it could only add and subtract, and only 4 bits at a time, the entire CPU was on one chip, called a microprocessor, instead of being assembled from discrete components. This made it even easier and cheaper to manufacture, thus allowing the tech industry to explode to the levels we can observe today, which is why we see computers pretty much everywhere nowadays – in our phones, AirPods, even smart-fridges.
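To see how a chip that only works on 4 bits at a time can still handle bigger numbers, here is a rough Python sketch. It is not the 4004's actual instruction set; the function names `add_4bit` and `add_wide` are invented for this illustration. The idea is simply to add one 4-bit chunk at a time and pass the carry on to the next chunk.

```python
def add_4bit(a: int, b: int, carry_in: int = 0) -> tuple[int, int]:
    """Add two 4-bit values; return (4-bit result, carry out)."""
    total = (a & 0xF) + (b & 0xF) + carry_in
    return total & 0xF, total >> 4


def add_wide(a: int, b: int, chunks: int = 4) -> int:
    """Add larger numbers four bits at a time, passing the carry along.

    With the default of 4 chunks this covers 16-bit values; any carry
    out of the final chunk is discarded (overflow).
    """
    result, carry = 0, 0
    for i in range(chunks):
        nibble_a = (a >> (4 * i)) & 0xF
        nibble_b = (b >> (4 * i)) & 0xF
        digit, carry = add_4bit(nibble_a, nibble_b, carry)
        result |= digit << (4 * i)
    return result


print(add_4bit(9, 8))        # (1, 1): 17 does not fit in 4 bits, so a carry is produced
print(add_wide(1234, 4321))  # 5555
```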

Computers are now able to be integrated into any device, allowing for instantaneous communication across the entire planet, intelligent flight systems within aeroplanes, or tracking of vital signs in medical machines. Yet without all the work done previously, they never would have been able to evolve into the incredibly powerful and incredibly small machines that we see and use every day in modern times. From this point technology can only bloom further; million-core supercomputers, foldable phones, dedicated AI chips and even quantum machines are only specks of sand in the sea of endless possibilities that await us in the years to come.

References

https://www.g2.com/articles/history-of-computers

https://www.sciencemuseum.org.uk/objects-and-stories/charles-babbages-difference-engines-and-science-museum

https://findingada.com/about/who-was-ada/

https://www.ibm.com/history/punched-card-tabulator

https://www.britannica.com/technology/ENIAC

https://www.computerhope.com/jargon/v/vacuumtu.htm

https://www.ericsson.com/en/about-us/history/products/other-products/the-transistor--an-invention-ahead-of-its-time

https://en.wikipedia.org/wiki/Transistor_computer

https://www.pbs.org/transistor/teach/teacherguide_html/lesson3.html#:~:text=Transistors%20are%20the%20main%20component,it%20off%20to%20represent%200.

https://www.ansys.com/en-gb/blog/what-is-an-integrated-circuit

https://etc.usf.edu/lit2go/128/frankenstein-or-the-modern-prometheus/2295/chapter-5/

https://www.ibm.com/think/topics/microprocessor

https://ethw.org/Rise_and_Fall_of_Minicomputers

https://computer.howstuffworks.com/microprocessor.htm

What lessons can western financial systems learn from an alternative approach to banking?

The term ‘teleology’ is derived from the Greek word ‘telos’, which, when used by Aristotle, means ‘the end or purpose of action’. Objects, like actions, can have a telos as well, and knowing what the telos of an object is can reveal both what an object is and what makes it good. Teleologically, a knife is an object whose purpose is to cut, and the good knives are those that cut well. Perhaps we need to consider the 19th century American doctrine of Manifest Destiny to fully grasp this concept – it takes the axiom that ‘It is right and proper for the USA to annex all land westward of the ocean because that is what land is for’.

For Aristotle, precisely the same thing is true for money. Once its function is understood, we will be able to understand both what money is and how it ought to be used. In Politics, Aristotle declares the telos of money to be a medium of exchange, which is all we must know to see both what money is and why it should not be lent at interest. ‘Money was intended to be used in exchange,’ Aristotle explains, ‘but not to increase at interest.’ Since money’s function is to serve as a way to facilitate exchanges whose end is the trade of useful and necessary commodities, those who exchange it not for goods, but instead for more money, ‘pervert the end that money was created to serve’, and so engage in ‘unnatural’ exchanges ‘of the most hated sort.’ ‘Usury, which makes a gain out of money itself, and not from the natural object of it,’ is ‘of all modes of getting wealth […] the most unnatural’.

Clearly, this left fertile ground for the assault on usury which the Church would mount following its Christianization of the Roman Empire. This prohibition of interest was upheld as a key principle throughout the development of the Abrahamic faiths. Despite this, the rise of trade and commerce, paired with greater liberal economic thought during the Reformation, led to the growing recognition of credit as essential for economic development, which would eventually result in the practice of lending at a ‘reasonable’ rate becoming accepted within Europe.

‘O you who have believed, do not consume usury, doubled and multiplied, but fear Allah that you may be successful.’ (Surah Al Imran 3:130)

As the global economy modernized, however, Islamic financial systems adapted differently, continuing to enforce the prohibition of interest, or riba. This is possibly due to less economic pressure on Islamic economies to introduce reforms as they remained more agrarian and trade-based, with less reliance on complex financial instruments. Another contributing factor, however, is the lack of secularism and the intertwining of religious and government affairs as seen through the prevalence of Sharia law – this law equates riba with injustice as it undermines the fairness in transactions; it allows the lender to profit without any effort or risk, while the borrower bears all the risk.

The telos of money is re-emphasized: it exists as a tool for trade used to measure the value of commodities, not as a commodity in itself. As such, the generation of wealth through conventional banking – the profit acquired via the difference between interest gained from loans given and interest paid on deposits taken – is seen as unethical and even sometimes deceptive, exacerbating social divisions as wealth accumulates without effort with those who already have it. In line with these moral principles, investment in haram industries such as alcohol, gambling or tobacco is strictly forbidden. This extends to the elimination of speculation, maisir, and uncertainty, gharar, covering most financial derivatives like futures and options as well as conventional insurance contracts, affecting the profitability and possible opportunities within Islamic finance.

In order to replace riba, a profit and loss sharing paradigm is adopted, which is predominantly based on the mudarabah (profit-sharing) and musharakah (joint venture) concepts of Islamic contracting, replacing the cost of capital as a predetermined fixed rate. Profit and loss sharing is a contract between two or more transacting parties, allowing them to pool their resources to invest in a project in which all contributors share both risk and reward. Due to these principles, Islamic banking relies on asset-backed financing to forge a strong connection between investment and the real economy in sectors such as agriculture, sukuk bonds, project financing, but especially real estate and lease-to-own agreements.
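As a rough illustration of profit and loss sharing, the short Python sketch below splits the outcome of a joint project between its contributors. The figures, the party names and the function `share_outcome` are hypothetical, and the sketch assumes the simplest possible rule, that gains and losses are divided in proportion to the capital each party put in; real contracts may agree different ratios.

```python
def share_outcome(contributions: dict[str, float], outcome: float) -> dict[str, float]:
    """Split a profit (positive) or loss (negative) in proportion to capital.

    Assumption for illustration only: shares follow capital contributed.
    """
    total = sum(contributions.values())
    return {name: outcome * amount / total for name, amount in contributions.items()}


# Hypothetical joint venture: the bank provides 60,000 and the entrepreneur 40,000.
capital = {"bank": 60_000, "entrepreneur": 40_000}

print(share_outcome(capital, 10_000))  # {'bank': 6000.0, 'entrepreneur': 4000.0}
print(share_outcome(capital, -5_000))  # {'bank': -3000.0, 'entrepreneur': -2000.0}
```

Unlike a loan at a fixed rate, the bank's return here depends entirely on how the project performs, so it carries part of the risk.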

For example, the concept of mortgage financing differs greatly from western systems through the use of murabaha (cost-plus financing) – instead of lending the necessary money to the potential homeowner, the bank might buy the property from the seller, and then re-sell it to the buyer at a profit whilst allowing them to pay the bank in instalments. In other words, the bank's profit takes the form of a mark-up agreed in advance rather than an interest rate charged over time, and therefore there are no additional penalties for late payments of the kind that lead to many issues in the conventional system, creating a more equitable financial environment.
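The arithmetic of cost-plus financing can be sketched as follows. All the numbers and the function name `murabaha_plan` are hypothetical, chosen only to illustrate the structure described above: the bank buys at cost, sells at a higher fixed price, and the buyer pays that price in equal instalments.

```python
def murabaha_plan(cost: float, markup_rate: float, months: int) -> dict[str, float]:
    """Work out a simple cost-plus sale paid in equal monthly instalments."""
    sale_price = cost * (1 + markup_rate)  # fixed when the contract is signed
    return {
        "sale_price": sale_price,
        "bank_profit": sale_price - cost,
        "monthly_instalment": sale_price / months,
    }


# Hypothetical example: a 200,000 property, a 25% mark-up, repaid over 20 years.
plan = murabaha_plan(cost=200_000, markup_rate=0.25, months=240)
print(plan["sale_price"])                    # 250000.0
print(plan["bank_profit"])                   # 50000.0
print(round(plan["monthly_instalment"], 2))  # 1041.67
```

Because the sale price is set once, the total owed in this sketch does not grow if a payment is made late, which is the key contrast with interest that keeps accruing.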

Perhaps a parallel can be found between the core morals at the foundation of Islamic banking and the recent growth of ethical investing, with sustainable equity and fixed income funds accounting for 7.9 percent of global assets under management. It is not only the similar exclusion of harmful and haram industry as highlighted by figure 1; through the lens of public and welfare economics, there is also a shared sentiment of social justice and economic equality. Both systems declare that private market actions should contribute to the public good, maximizing social utility rather than individual profit alone. In other terms, this could lead to greater demand for policies that promote socially responsible investments as both ideals discourage harmful externalities, and these policies may manifest themselves as tax incentives, sustainable investments, or regulations that promote corporate social responsibility.

Despite the essential role of financial access in the progress of efficiency and equality in a society, 2.7 billion people (70% of the adult population) in emerging markets still have no access to basic financial services, and a great part of them come from countries with predominantly Muslim populations. However, Islamic banking addresses the issue of financial inclusion through the development of microfinance initiatives encouraged through the use of risk-sharing financial instruments. In Bangladesh, such initiatives have enabled thousands of rural entrepreneurs under Islami Bank Bangladesh Limited (IBBL) to grow their businesses and improve their livelihoods without resorting to high-interest loans. Individuals from lower socioeconomic backgrounds who may struggle to access traditional banking services are given much greater opportunities to contribute to the economy, whilst also aiding their personal welfare.

Financial inclusion can therefore be seen through the provision of services for underrepresented populations, especially when considering the Muslim population of almost four million in the UK. However, Al Rayan Bank, a pioneer of Islamic banking in the west, claims that approximately 80% of its fixed term deposit customers are non-Muslim. So, how did this alternative approach to banking first gain traction in the UK, the largest western hub for Islamic finance?

Islamic banking in the UK began in the 1980s to meet the demand from the Muslim community for Sharia-compliant banking services, starting with the establishment of Al Baraka International Bank in 1982, which laid the foundation for Islamic financial services in the country. However, it was not until 2004 that the UK saw its first fully licensed Sharia-compliant retail bank – the Islamic Bank of Britain (now Al Rayan Bank) – a significant milestone that opened the door for the future growth of Islamic financial services. The UK government’s willingness to accommodate Islamic finance reflected a broader strategy to support diversity in financial services and attract investments from Muslim-majority countries.