The Academic Journal 2024

Foreword

Though I have only spent two short years at Saint Olave’s, it is always striking for me to consider how many immense privileges I have enjoyed here. Perhaps the most striking of these privileges is the incredible, perpetual academic fascination I witness daily from both students and staff, and the opportunities to nurture and explore this shared fascination – for want of a better term, the opportunity to nerd out, pretty much constantly.

Accordingly, I am delighted to present the Academic Journal of 2024, a brilliant celebration of Saint Olave’s collective curiosity. As always, its contents represent the diversity of interests present across the school, from mathematics to artificial intelligence to medicine; modern, historical, and even ancient politics; articles attacking pressing contemporary concerns, and articles tackling narrower thought experiments. Thank you to everybody who has contributed to this journal, as well as every society leader and attendee who all continue to foster an inclusive, inquisitive environment.

My sincerest hope is that there is something here for everybody, and that this could inspire you to go down your own Wikipedia rabbit-hole, seek your own radiant beam of knowledge, and come out the other end a little wiser – perhaps even clutching an article for next year’s journal. For now, enjoy!

– Olivia Tang, Editor

Trijagati Senthil-Kumar

Law Making in the UK; is it democratic?

Democracy underpins British political identity, from the foundations of parliament referenced in the Magna Carta to the constant discourse on the nature of the representative legislative system in today’s post-Brexit UK. But to what extent is the legislative process in the UK democratic? Democratic metrics generally place the UK as being less democratic than other full democracies such as Norway and Sweden (as suggested in the EIU’s Democracy Index in 2021); the worst scores for the UK were in the categories of political culture and functioning of government, which suggests that, on a broad scale, there is significant room to improve the current systems in place.

Considering the wider electoral and political administration of the UK is critical in identifying the factors that inhibit democracy within the UK. Lingering in recent British political memory is the 2016 Brexit referendum; voter turnout for those aged above 65 was identified as around 90%, whereas the most generous estimates suggest a 64% voter turnout for those aged 18-25. Discrepancy in participation across age groups has resulted in a democratic system that is ineffective at electing representatives for the legislative process. Widespread disillusionment with government, a first-past-the-post electoral system and a further cache of interconnected factors ultimately lead to the production of a House of Commons that is only moderately representative.

Though it is important to consider the wider context in which the legislative process fits, the focus of this article will be on the stages of the legislative process itself, namely the Committee Stage and Report Stage in Commons. Comprised of the House of Lords and the House of Commons, the dual chamber system in parliament aims to achieve parliamentary scrutiny and effect democracy through the efficient production of necessary legislation. Initially, the House of Lords (the unelected chamber comprised of peers appointed by the acting Prime Minister) seems to be a glaring inhibitor of democracy. Whilst Lords is undemocratic in isolation, the complementary nature of the two Houses enables the chamber to act as an effective check on the ‘executive’ (governing majority) which largely dominates the legislative process.

The Committee Stage, in Commons, is widely regarded as most vulnerable to the domination of the government, which can hinder parliament’s ability to influence legislation. Line-by-line scrutiny (as well as evidence taking) takes place during this stage, and these processes are carried out by temporary Public Bill Committees (PBCs). The process by which members are selected for PBCs is heavily influenced by government whips, despite there being an expectation to select those with relevant expertise and to represent the party distribution in the House of Commons. As the committee selection is heavily whipped, this poses two risks to the democratic nature of the Committee Stage. The first is the lack of representation in committee for members who dissented at the Second Reading; for example, though 17% of members expressed opposition to the Education Act 2004, only one dissenting member was chosen for the committee, as there is no requirement to select members representative of the spectrum of opinions within a party. The second vulnerability is a lack of expertise in the Committee Stage due to the vague requirements for choosing PBCs. The heavily whipped nature of committees leads to government members voting along party lines, with adversarial interchange across parties. Ultimately this renders the Committee Stage, in Commons, unable to effect democracy, with almost all government amendments to legislation being accepted as opposed to around half a percent of non-governmental amendments.

Immediately following, the Report Stage is an opportunity for all members of Commons to debate the amendments proposed. However, the most critical limitations of the Report Stage are due to the timing constraints Commons operates under; ‘programming’, the allocation of time for the consideration of different parts of bills, acts to limit the extent to which parliament can influence legislation. As a result of these timing constraints, large swathes of bills can pass through without being considered; this threatens the extent to which democracy is upheld through the scrutiny of bills in Commons. Non-governmental amendments are less likely to be chosen by the Speaker for debate which, when paired with timing constraints, results in the Report Stage only moderately acting as a vehicle for democratic influence in Commons.

By focusing on the Committee and Report Stages in Commons, the points at which the most significant change and scrutiny usually occur, the overall impression is of a system that works only moderately and is unable to restrain the dominance of the executive. This analysis must be contextualised with reference to the House of Lords (as a house without a government majority), which acts in parallel to scrutinise governmental amendments. Lords does this to an extent, with bills being more likely to be rejected in Lords across the legislative process; however, Lords is obliged by convention and legislation to defer to Commons in contentious cases (particularly when considering finance bills), allowing, ultimately, for the dominance of Commons. Overall, the ineffectual election process paired with the dominance of government in the legislative process cumulatively produces a system that does not effectively allow parliament to influence legislation, with critical areas such as the Committee and Report Stages being significantly limited.

Aashman Kumar

Latin Squares

Here is a problem due to Euler: there are 36 officers, belonging to 6 different regiments and having 6 different ranks such that each combination of regiment and rank occurs only once. Can the officers march in a 6 by 6 grid such that in any row or column each regiment and rank occurs only once?

Take a moment to give it a try, maybe with a smaller square.

The answer for 36 officers is no. The proof is not pleasant; Euler himself could not find it and it was finally proved by exhausting every possibility by hand in 1900.

Unfortunately, work on the generalization of this problem continued in the same vein. Euler speculated that all arrangements of n^2 officers were possible so long as n wasn’t of the form 4k+2. This pattern did not exist; other than the case of n = 6, which he originally considered, and the case n = 2, every other possible arrangement works. This proof was provided computationally.

Luckily, this work was not entirely without satisfaction. Suppose we considered the officers again but relaxed the requirements on rank and only considered regiment, so that each regiment only occurs once in each column and row, but rank doesn’t matter at all. This sort of arrangement is called a Latin square and is a wonderfully useful tool.

An example Latin Square: instead of regiments, each number occurs once in a row and column.

Surely, this is no mathematical innovation, but just a Sudoku grid? Before you make your judgement, let’s look a bit deeper into the structure of these Latin Squares. How else could we think about what we’ve done here? Here’s one way to look at it: consider an upcoming (heteronormative) ballroom dance, in which n boys are to be paired up with n girls for n dances. Then we may let the (i,j) position on the square correspond to the number of the dance between the ith girl and the jth boy. Naturally, since each column i corresponds to a girl, she will have her timetable full, with a dance at every timeslot, and she will never be double booked. The same can be said for each boy, whose timetable is encoded in the jth row. (Maybe try making sense of this in your head with the example square above.)

This is sort of cool and (slightly boring) use cases can be conceived from this view in scheduling and managing operations. But wait; it seems that we’ve skipped over something: how do we know we can even always construct Latin squares of all sizes? Is there a procedure that we could use to reliably generate Latin squares? Well let’s start by filling in the first row with the symbols from {1,2,3…n}:

From here, it is obvious that every subsequent row is just going to be some rearrangement of our original. What could we do to produce every row that follows? Could we try just shifting every subsequent row to the right by 1?

That seems to work. What if we tried to do it by 2? If n was even, that would mean about halfway down the table in the right column we would see n again! Or if we tried 3, then if n was a multiple of 3 we would see n two more times in its initial column. In general, it seems that if the size of our shift shares a factor (other than 1) with n, then n is going to show up again and again in its own column, which is against our rules. Therefore, the size of our shift “m” and “n” ought to be coprime. Also, if m>n, the shift m is equivalent to the shift of size m-n, since m = n + (m-n) and a shift of n just brings you back to where you started (i.e. is equivalent to a shift of 0).

Let’s state our rule for cyclically generating a Latin square then:

Take an initial arrangement of the items {1,2..n} in the top row. For each subsequent row, move each item to the right m places, where m is any integer such that 1<= m < n and m and n are coprime.

Applying such a rule n-1 times generates a Latin square as follows. If you apply it the nth time, however, you end up back where you started at the original arrangement, since each item has moved nm slots to the right in total, and moving any integer multiple of n is the same as not moving at all. So, we have repeatedly applied a transformation until we have come back to identity.
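The shifting rule can be tried out in a few lines of Python. This is only a sketch of the rule as stated above; the function names `shifted_square` and `is_latin` are made up for illustration. It builds the square for any shift m, so that we can see the coprime condition doing its job.

```python
from math import gcd

def shifted_square(n, m):
    """Row r is the top row 1..n moved r*m places to the right (wrapping round)."""
    first = list(range(1, n + 1))
    return [[first[(c - r * m) % n] for c in range(n)] for r in range(n)]

def is_latin(square):
    """True if every row and every column contains each symbol exactly once."""
    n = len(square)
    symbols = set(range(1, n + 1))
    rows_ok = all(set(row) == symbols for row in square)
    cols_ok = all({square[r][c] for r in range(n)} == symbols for c in range(n))
    return rows_ok and cols_ok

# A shift that is coprime to n works; one that shares a factor with n does not.
for n, m in [(5, 2), (6, 5), (6, 2), (6, 4)]:
    print(n, m, gcd(m, n) == 1, is_latin(shifted_square(n, m)))
```

Note that a shift of 4 in a 6 by 6 square fails even though 4 is not a factor of 6: sharing the factor 2 is enough to make a column repeat.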

This directly corresponds to a cyclic group.

A group is simply defined as a set equipped with an operation that fits a few criteria:

• applying the operation to members of a group results in another member of the group (the group is “closed” under the operation)

• the group has an identity element. Applying the operation with the identity element does nothing

• every element has an inverse, so that if you apply the operation on an element and its inverse you come out with the identity

• the operation is associative

Groups are a powerful tool which we can use to understand transformations and symmetry. They show their face in all sorts of areas of mathematics and physics: famously in the proof that there is no quintic formula, and they are also intimately involved in the symmetry conditions of Noether’s theorems, for example.

Let’s look at a concrete example of a group to make sense of things. Think about the set of rotations of an equilateral triangle. Specifically, look at this set: {0,120,240}, corresponding to rotations by those values in degrees. We can define a group with this set under the operation of applying rotations to the triangle. Check for yourself that it satisfies all the axioms we laid out for groups earlier.

With this set, you might notice something interesting: each item in the group can be obtained by repeatedly applying the 120-degree rotation. We call this a cyclic group.
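If you would rather let a computer do the checking, here is a brute-force sketch in Python. The helper names (`compose`, `is_group`, `generated_by`) are illustrative, and rotations are represented simply as angles in degrees added modulo 360.

```python
from itertools import product

ROTATIONS = (0, 120, 240)

def compose(a, b):
    """Apply rotation a, then rotation b (angles in degrees)."""
    return (a + b) % 360

def is_group(elements, op):
    """Check closure, identity, inverses and associativity by brute force."""
    elems = list(elements)
    closed = all(op(a, b) in elems for a, b in product(elems, repeat=2))
    identities = [e for e in elems
                  if all(op(e, a) == a and op(a, e) == a for a in elems)]
    if not closed or not identities:
        return False
    e = identities[0]
    inverses = all(any(op(a, b) == e and op(b, a) == e for b in elems)
                   for a in elems)
    associative = all(op(op(a, b), c) == op(a, op(b, c))
                      for a, b, c in product(elems, repeat=3))
    return inverses and associative

def generated_by(g, op, identity):
    """List the elements reached by applying g over and over, starting from the identity."""
    seen, current = [identity], op(identity, g)
    while current not in seen:
        seen.append(current)
        current = op(current, g)
    return seen

print(is_group(ROTATIONS, compose))   # all four axioms hold
print(generated_by(120, compose, 0))  # the 120-degree rotation reaches everything
```

Dropping one rotation, for example using only {0, 120}, breaks closure, which is a quick way to see why all three are needed.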

Now, back to Latin Squares. Try to verify that repeatedly shifting the rows by ‘m’ corresponds to a cyclic group, and see if you can say explicitly what the items in the set look like and what the operation actually is.

So far, this is a neat correspondence, but it’s all just trivia it seems. Why does it matter that we can rewrite this procedure as a cyclic group? The link between Latin Squares and groups goes deeper than you might expect.

Let’s relax our conditions on groups a little bit. We can define a quasigroup as a set that is closed under its operation but need not be associative (or even have an identity element); instead, it satisfies “left” and “right” division. This means that for elements gi and gk there exists a unique gj such that gi*gj = gk, and for elements gj and gk there exists a unique gi such that gi*gj = gk. (Trivial though this may seem, keep in mind we haven’t even specified whether the operation * follows associativity or commutativity. These are quite lawless and strange structures!)

For a given quasigroup, if gi*gj = gk, with the quasigroup G = {g1, g2, …, gn}, then we can define a matrix A such that each entry a(i,j) is equal to k. But notice, by left divisibility, if we look at a column i then for each different k there must exist a unique j, and we know each k must be unique because to go from gi to gk there’s only one other element in the set that allows us to do this. (Try to prove to yourself that if there were multiple, they’d all be the same using the given axioms.) So in each column i, every value k is unique. But of course, we could easily construct the same argument by right divisibility to show a similar result for each row. This means that every item appears in each row or column at most once, and since each row and column has n entries drawn from n symbols, exactly once.

This means that if a set with an operation is a quasigroup, the matrix we generate must be a Latin square! Now we have a way to translate between every quasigroup and Latin square. This is very interesting because it allows us to translate between the nebulous algebraic quasigroups whose properties we’ve defined very weakly, and Latin Squares, which can be treated with tools from linear algebra or combinatorics, and which have very specific constraints and criteria.
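To see this on a concrete example, here is a small Python sketch. The choice of quasigroup is my own: subtraction modulo 5, which has unique left and right division but is not associative. The table below uses row i and column j, which is the transpose of the convention in the text, but that makes no difference to whether it is a Latin square.

```python
from itertools import product

def cayley_table(elements, op):
    """Entry (i, j) is the index k with elements[i] * elements[j] == elements[k]."""
    index = {g: k for k, g in enumerate(elements)}
    return [[index[op(gi, gj)] for gj in elements] for gi in elements]

def is_latin(table):
    """Every row and column must contain each of 0..n-1 exactly once."""
    n = len(table)
    target = list(range(n))
    return (all(sorted(row) == target for row in table)
            and all(sorted(col) == target for col in zip(*table)))

def is_associative(elements, op):
    return all(op(op(a, b), c) == op(a, op(b, c))
               for a, b, c in product(elements, repeat=3))

elements = list(range(5))
subtract = lambda a, b: (a - b) % 5   # a quasigroup that is not a group
multiply = lambda a, b: (a * b) % 5   # not a quasigroup: 0 * x is always 0

print(is_associative(elements, subtract))            # False
print(is_latin(cayley_table(elements, subtract)))    # True
print(is_latin(cayley_table(elements, multiply)))    # False
```

Multiplication modulo 5 is included as a contrast: dividing by 0 is impossible, so it is not a quasigroup, and its table has a row of all zeros.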

We can use this connection to do all sorts of things, such as work out an upper bound on the total number of groups (groups are a very small subset of quasigroups; the condition of associativity is quite the constraint!) or perform operations between quasigroups like direct products.

(A direct product is not quite the same as the matrix products that you know and love. Instead, the direct product between a square of size n^2 and one of size m^2 produces one of size (nm)^2, with the resultant being formed of n^2 copies of the m^2 square arranged in a grid, with each copy multiplied by the term of the n^2 square that lies in the same position as the copy.)
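One way to make the direct product concrete is sketched below in Python. It rests on an assumption of mine: "multiplying" a copy by a term is read as pairing each entry y of the copy with that term x, and the pair (x, y) is written as the single number x*m + y so that the result uses the symbols 0 to nm-1. The function names are illustrative.

```python
def direct_product(a, b):
    """a is n x n on symbols 0..n-1, b is m x m on symbols 0..m-1.

    The result is a grid of n*n blocks; the block at position (i, j) is a
    copy of b in which every entry y has become a[i][j] * m + y.
    """
    n, m = len(a), len(b)
    size = n * m
    return [[a[r // m][c // m] * m + b[r % m][c % m] for c in range(size)]
            for r in range(size)]

def is_latin(table):
    n = len(table)
    target = list(range(n))
    return (all(sorted(row) == target for row in table)
            and all(sorted(col) == target for col in zip(*table)))

two = [[0, 1],
       [1, 0]]
three = [[0, 1, 2],
         [2, 0, 1],
         [1, 2, 0]]

six = direct_product(two, three)
for row in six:
    print(row)
print(is_latin(six))
```

The product of a 2 by 2 and a 3 by 3 Latin square comes out as a 6 by 6 Latin square, built from four shifted copies of the 3 by 3 one.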

Let’s bring everything we’ve learned back to the beginning with Euler’s officers. We said that we could get Latin squares by considering just one feature: rank or regiment. What if we wanted to look at both? A good guess would be to first construct a Latin square for rank and then one for regiment, as follows:

Above are Latin squares for rank and regiment respectively. Mutually orthogonal Latin squares are also called Graeco-Latin squares for a reason evident here.

If we try to mash these squares together to get something encoding both rank and regiment, we get the following:

Here, every combination of symbols occurs only once. This is equivalent to each combination of rank and regiment occurring only once, and we know from our original two Latin squares that each rank and regiment occurs only once in each row and column. Hence here is a solution to the officer problem for the case of n = 3. We call a pair of Latin squares that produces a resultant Latin square that has the properties of this one “mutually orthogonal Latin squares” or MOLS for short.
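Checking orthogonality is a short job for a computer. The sketch below uses a pair of 3 by 3 squares of my own choosing (capital letters for rank, lowercase letters standing in for the Greek ones for regiment), not necessarily the ones pictured; `superimpose` and `are_orthogonal` are illustrative names.

```python
def superimpose(a, b):
    """Mash two squares together: each cell becomes a (rank, regiment) pair."""
    return [list(zip(row_a, row_b)) for row_a, row_b in zip(a, b)]

def are_orthogonal(a, b):
    """Two n x n Latin squares are orthogonal if all n*n pairs are different."""
    n = len(a)
    pairs = {pair for row in superimpose(a, b) for pair in row}
    return len(pairs) == n * n

rank = [["A", "B", "C"],
        ["B", "C", "A"],
        ["C", "A", "B"]]
regiment = [["a", "b", "c"],
            ["c", "a", "b"],
            ["b", "c", "a"]]

for row in superimpose(rank, regiment):
    print(row)
print(are_orthogonal(rank, regiment))  # True: every officer appears once
print(are_orthogonal(rank, rank))      # False: only the pairs AA, BB, CC appear
```

Notice that the two squares shift in opposite directions; superimposing a square on itself gives only three distinct pairs, so a Latin square is never orthogonal to itself (for n greater than 1).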

It is clear now that Euler’s original work on his officer problem, and the later work on computational proofs, were studying the much broader and more widely applicable MOLS, not just his soldiers.

What else can we find out about MOLS, without exhaustively grinding through every possibility?

Many questions we can ask about MOLS are soluble particularly elegantly by translating the problem to quasigroups and then using the properties of groups and fields to identify the structure. This is a recurring theme of sorts, where seemingly different fields and ideas are just shadows of a deeper idea that defines them both. This is true in the most arcane fields of mathematics and has formed the basis of recent breakthroughs like the proof of Fermat’s last theorem. The pleasing interrelated nature of mathematics doesn’t just have to be found in hefty, groundbreaking theory though; sometimes, you can spot it just by playing around with Sudoku grids.

5G: Why Clash Royale always buffers even with 5G signal

“I swear I always lag out when I’m about to win”

You’ve heard of 5G. The brand-new technology that’s 10x faster than 4G. The EE Guy always talks about it on the ads. It’s the 5th generation of mobile network standard, coming after 4G and before 6G. But even though on paper it offers the fastest download/upload speeds we consumers can get whilst out and about, why do we still struggle with buffering when we’re watching a YouTube video or about to take the enemy’s king tower on Clash Royale? The answer is simple, but also complex.

Before we can understand why 5G sometimes seems so slow, we first need to talk about how our little metal boxes called phones can be connected to the Internet at the tap of a button. 5G is in fact a mobile networking standard, where a service area is divided into small areas called cells, and all wireless devices capable of 5G communicate with a cell tower via radio waves through their antenna. After establishing a secure connection, your device transmits and receives data to and from the internet by exchanging these waves with the cell tower. Many network operators even use millimetre waves, which have a frequency range between 30 and 300 gigahertz and a wavelength between 1 and 10mm, hence the name millimetre. This higher frequency allows for higher data transfer rates. However, mmWaves have a shorter range than microwaves (not the one you use to heat up your ramen at 3am) and have trouble passing through walls and buildings. Because of this, 5G has a denser network of smaller cells.
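The link between those frequencies and the "millimetre" name is just wavelength = speed of light / frequency. A quick Python check (the function name is made up for illustration):

```python
SPEED_OF_LIGHT = 299_792_458  # metres per second

def wavelength_mm(frequency_ghz):
    """Wavelength in millimetres of a radio wave with the given frequency in GHz."""
    frequency_hz = frequency_ghz * 1e9
    return SPEED_OF_LIGHT / frequency_hz * 1000

for ghz in (30, 300):
    print(f"{ghz} GHz -> {wavelength_mm(ghz):.2f} mm")
```

So 30 GHz comes out at roughly 10mm and 300 GHz at roughly 1mm, matching the range quoted above.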

5G is implemented in three main bands: low-band, mid-band and high-band (mmWave). Low-band 5G frequency is similar to 4G frequency (600-900 MHz), which means that download speed is only slightly better, and low-band cell towers have coverage and range similar to 4G towers. Mid-band uses microwaves with a frequency of 1.7 to 4.7GHz. These towers were the most widely deployed, with the majority being installed in 2020. High-band uses frequencies of 24-47 GHz, near the bottom of the mmWave band, and download speeds are often in the gigabit range, much faster than the 5-250Mbps achieved by low-band 5G. As the coverage of high-band cell towers is smaller than that of mid or low-band towers, high-band cells are only deployed in dense urban areas or other places where people crowd. However, the deployment of many of these cells was hampered by 5G conspiracy theories.

According to Ookla, that one website your friends use to show off their 1 x 10^7 Mbps download speed, the latency of 5G averages around 17-26ms in the real world, compared with 36-48ms for 4G. 5G is also known to be capable of a peak data rate of up to 20Gbps, with average download speeds of 186.3Mbps in the US on T-Mobile and 432Mbps in South Korea, and is designed to have a higher data capacity too. Mid-band 5G is capable of 100 to 1000 Mbps in the real world, compared with 18-36Mbps for 4G, and has much greater coverage than mmWave. However, you need to take these numbers with a pinch of salt, as the data rate usually fluctuates while you are downloading something. It doesn’t always stay at the peak rate; in fact it rarely reaches its advertised peak rate.

This is a large factor in why you can experience buffering whilst streaming a video or playing a game. When you’re streaming a video off the internet, the video is actually being pre-downloaded whilst you watch it. The part of the video that your device has finished downloading is what the light grey bar ahead of the red bar shows; if you look closely, when the red bar catches up with the end of the grey bar, your video starts to buffer. How quickly the grey bar fills depends on your bandwidth, meaning the maximum amount of data your device can receive over a set period of time, most commonly measured in Mbps (megabits per second) and Gbps (gigabits per second), as referred to earlier.
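Here is a toy Python simulation of the grey bar and red bar. Everything in it is an assumption made for illustration: the video needs 5 megabits per second of playback, the player waits until 2 seconds are buffered before it starts (or restarts), and the download rate is given as one number per second.

```python
def count_stalls(download_mbps, video_mbps=5.0, startup_buffer_s=2.0):
    """Count how many times playback stalls, given per-second download rates."""
    buffered = 0.0   # seconds of video downloaded but not yet played (grey ahead of red)
    playing = False
    stalls = 0
    for rate in download_mbps:
        buffered += rate / video_mbps          # seconds of video fetched this second
        if not playing and buffered >= startup_buffer_s:
            playing = True                     # enough buffered: start or resume
        if playing:
            if buffered >= 1.0:
                buffered -= 1.0                # play one second of video
            else:
                stalls += 1                    # red bar has caught the grey bar
                playing = False
    return stalls

steady = [10] * 30            # always faster than the video needs
dropout = [10] * 3 + [1] * 10 # fast start, then the signal drops
print(count_stalls(steady), count_stalls(dropout))
```

A connection that is fast on average can still stall if the rate dips for long enough to drain the buffer, which is why the peak speed on the advert tells you so little.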

Bandwidth can be thought of like a motorway. If there’s only a couple of lanes, there’s obviously going to be issues, like traffic jams, when more people connect to the network. However, the more lanes and the wider the motorway (representing the range of frequencies the signal can use), the faster the speed you will experience, as there is more space to allocate to each user. 5G aimed to maximise the number of lanes in the network to increase speed for each person. In addition, the fewer users connected to a cell, the faster the speed you will experience, as again the tower can allocate more space to each user.

However, there is also another factor that can affect your experience on the network: signal strength. You may be familiar with this from the bars at the top of your phone screen, with the more bars the better. This essentially represents the quality of the signal you are receiving. Most often the largest factor affecting this is the distance from your nearest cell tower, and another is the number and thickness of the walls the signal has to travel through (hence why you lose all your signal when you enter the tunnel before Elmstead Woods on the train). Although you associate the number of bars you have with the quality of your experience on the cellular network, it’s not the biggest factor: if you have 4 bars but a million people are connected to your cell tower, you’ll still experience a sluggish speed.

Essentially, when you’re buffering, your phone isn’t receiving data fast enough to keep up with video playback or the speed of the game you’re playing, whether it’s due to the number of people connected in your cell, the frequency of your signal (in this case it’s most likely not the issue, as 5G has a much higher frequency), or your signal strength. So, the next time your opponent spams the hee-hee-hee-haw emote after you lose a game of Clash Royale owing to bad signal, you can blame your phone for downloading the data so slowly.

Folabomi Adenugba

The exploration of Q* into the unknown

On Wednesday 22nd November 2023, a small revolution occurred. It was reported that OpenAI, the creators of ChatGPT, had developed an artificial intelligence model, Q*, that could solve simple math problems. Prior to this, language models like ChatGPT and GPT-4 could not do math very well or reliably. This is because these models work by absorbing large amounts of data, then using this to discover patterns and make inferences before producing a response. In contrast, Q* was not only able to do all of this but had been trained by rewarding chains of thought rather than only a correct final output, almost as if it had a cognisant ability to understand theoretically what it was doing. This is why Sam Altman, the CEO of OpenAI, described this innovation as ‘pushing the veil of ignorance back, and the frontier of discovery forward’. Ultimately this could be the breakthrough needed to create artificial general intelligence capable of being implemented into society to work more efficiently than humans.

Machine Learning algorithms:

Learning algorithms are a set of fundamental rules applied by the artificial intelligence to perform tasks and make judgments. The artificial intelligence, Q*, is trained on these algorithms to ‘teach’ it how it should use input data to produce an output. This output is then evaluated by a loss function to judge the success of the model, based on the labelled outputs or, where there are none, the discrepancy between outputs and inputs. This feedback from the loss function is then used for optimization, where the weights are adjusted to improve the model until a threshold accuracy is met. Once the model has been sufficiently optimised, it allows the artificial intelligence, in this case Q*, to apply its ‘knowledge’ in solving math problems.
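The loop of predict, measure the loss, adjust the weights can be sketched on a deliberately tiny model. The Python below is a toy example and says nothing about how Q* itself is built: the model is a straight line y = w*x + b, the loss is the mean squared error, and the learning rate and stopping threshold are values picked by hand.

```python
def mse(predictions, targets):
    """Loss function: mean squared difference between predicted and labelled outputs."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def train(inputs, targets, lr=0.05, threshold=1e-6, max_steps=10_000):
    """Adjust the weights w and b by gradient descent until the loss is below threshold."""
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(max_steps):
        preds = [w * x + b for x in inputs]
        if mse(preds, targets) < threshold:
            break
        # Gradients of the loss with respect to each weight.
        grad_w = sum(2 * (p - t) * x for p, t, x in zip(preds, targets, inputs)) / n
        grad_b = sum(2 * (p - t) for p, t in zip(preds, targets)) / n
        # Step each weight a little way downhill.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0, 1, 2, 3, 4]
ys = [2 * x + 1 for x in xs]   # labelled outputs from the hidden rule y = 2x + 1
w, b = train(xs, ys)
print(round(w, 2), round(b, 2))
```

The model is never told the rule y = 2x + 1; it recovers values very close to w = 2 and b = 1 purely from the feedback of the loss function.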

Supervised learning

When developers have both sets of inputs and labelled outputs, supervised learning is utilised to train the artificial intelligence model. During this process, input data is fed into the model with corresponding outputs to allow the model to encode the relationship between the inputs and the outputs. This encoded relationship is found through 2 methods:

Classification: where an algorithm recognises trends in the form of classes, which are then used to predict the category of another input.

Regression: where an algorithm works to understand the relationship between dependent and independent variables, commonly applied to projections such as sales and revenue for businesses.
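As an illustration of classification, here is a minimal Python sketch of one very simple method, a nearest-centroid classifier. The method, the data and the function names are all my own choices for the example: each class is summarised by the average of its labelled inputs, and a new input is given the label of the closest average.

```python
def fit_centroids(samples, labels):
    """Learn one 'typical' point per class by averaging its labelled inputs."""
    sums, counts = {}, {}
    for x, label in zip(samples, labels):
        acc = sums.setdefault(label, [0.0] * len(x))
        for k, value in enumerate(x):
            acc[k] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def classify(centroids, x):
    """Predict the class whose centroid is nearest to x."""
    def squared_distance(centre):
        return sum((a - b) ** 2 for a, b in zip(centre, x))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))

samples = [(1, 1), (1, 2), (8, 9), (9, 8)]
labels = ["small", "small", "large", "large"]
centroids = fit_centroids(samples, labels)
print(classify(centroids, (2, 1)), classify(centroids, (7, 9)))
```

The labelled outputs are what make this supervised: without the "small" and "large" tags there would be nothing to average by class.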

Unsupervised learning algorithms

In circumstances where developers can’t access labelled data and instead only have troves of unlabelled data, unsupervised learning is employed to find patterns within it. The algorithm receives unlabelled inputs, which it then groups into clusters of similar inputs, thus allowing outliers to be identified. In turn, this allows novel inputs to be placed in the most similar group, from which decisions can be made thereafter. Unsupervised learning is utilised in Netflix recommendation algorithms to identify clusters of users with similar viewing habits in order to recommend additional streams to viewers. This is possible because of the lack of labelled outcomes, which allows the artificial intelligence to teach itself and make innovative insights into the data.
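Clustering can be sketched with the classic k-means idea, shown here in Python on one-dimensional data. The numbers (imagined weekly viewing hours), the starting guesses and the function name are all assumptions for illustration, and real recommendation systems are far more elaborate.

```python
def k_means_1d(points, centroids, iterations=10):
    """Repeatedly assign each point to its nearest centre, then move each centre
    to the average of the points assigned to it."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda k: abs(p - centroids[k]))
            clusters[nearest].append(p)
        centroids = [sum(c) / len(c) if c else centroids[k]
                     for k, c in enumerate(clusters)]
    return centroids, clusters

hours = [1, 2, 3, 20, 21, 22]           # unlabelled data: no one says who is a 'binger'
centres, groups = k_means_1d(hours, centroids=[0, 10])
print(centres, groups)

# A new viewer is placed in whichever group is most similar.
new_viewer = 4
print(min(range(len(centres)), key=lambda k: abs(new_viewer - centres[k])))
```

No labels were supplied at any point; the two groups emerge from the data alone.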

Reinforcement learning algorithms

In situations where an artificial intelligence is in a controlled environment, it can act as an agent, observing and recording its actions then evaluating their successes. Reinforcement learning is the most human-like form of learning algorithm, as it involves a human-defined start state, end state and reward function, which the agent trains itself on in the controlled environment. It recognises the fact that there are many ways to reach the end state and thus works through trial and error, where after each trial a results function judges how successful each approach was. Reinforcement learning is effective as an AI can train itself hundreds, thousands, or even billions of times a day, whilst also receiving constant feedback from digital processors.

To build upon this, OpenAI’s Q* utilises Q-learning, which builds upon basic reinforcement learning, where the goal is to find the most efficient action for any given state (position). An optimal action-value function estimates the expected cumulative reward an agent can achieve from taking any possible action, providing the agent with its own decision-making capabilities. The loss function compares the predicted Q value with the estimated target Q values, which are computed using a separate target network (a copy of the main neural network). To further enhance the training of the agent, it uses a memory storage buffer to store past experiences as ‘memories’, which allows the network to learn from a diverse set of experiences, improving stability and sample efficiency.
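The core Q-learning update can be shown without any neural network at all. The Python sketch below is a simplified, table-based version on an invented environment (a corridor of 5 squares with a reward at the right-hand end); it leaves out the target network and memory buffer described above, and it is not a description of how Q* actually works. The learning rate, discount and number of episodes are arbitrary choices.

```python
import random

def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Learn Q values for a corridor: action 0 moves left, action 1 moves right."""
    rng = random.Random(seed)
    moves = (-1, +1)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]   # q[state][action]
    for _ in range(episodes):
        state = 0
        while state != goal:
            action = rng.randrange(2)           # explore by acting at random
            nxt = min(max(state + moves[action], 0), goal)
            reward = 1.0 if nxt == goal else 0.0
            # Target: reward now plus the discounted best value of the next state.
            target = reward + gamma * max(q[nxt])
            # Nudge the current estimate towards the target.
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q

q_table = q_learning()
policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
print(policy)
```

Even though the agent only ever moved at random during training, reading off the highest Q value in each square gives the sensible policy of always heading right.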

Neural networks:

Q* would be built upon neural networks working to mirror neurons in the brain. Typically, neural networks contain multiple layers, where each layer takes in a vector (which encodes information in a format that our model can process) and applies a nonlinear transformation to the input vector through a weights matrix. Connections between neurons are built more specifically through weights (which determine the strength of the connection) and parameters (the factors that define the model).

The process is as such:

Initially, the Input layer is determined.

Weights are then given to each neuron in each layer to determine its significance, and these are found through loss-minimising algorithms such as gradient descent. These algorithms work to find the lowest point on a loss function graph by adjusting parameters, based on the predicted outputs and their desired values, in the direction of the steepest descent until this lowest loss point is found.

Inputs to a neuron are multiplied by the weights matrix and then summed.

An activation function is applied to the output of each activation unit. It introduces non-linearity into the network, allowing it to model complex relationships in the data.
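The last two steps, the weighted sum and the activation function, fit in a few lines of Python. This is a sketch with made-up weights; the bias term and the choice of ReLU as the activation are assumptions that the steps above do not spell out.

```python
def relu(z):
    """A common activation function: negative sums become 0, positive ones pass through."""
    return max(0.0, z)

def dense_forward(weights, biases, inputs, activation=relu):
    """One layer: each neuron multiplies the inputs by its row of weights,
    sums them (plus a bias), then applies the activation function."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

weights = [[1.0, -1.0],    # neuron 1
           [0.5, 0.5]]     # neuron 2
biases = [0.0, 0.0]
print(dense_forward(weights, biases, [2.0, 3.0]))
```

The first neuron's sum is negative (2 - 3), so ReLU switches it off; without that non-linear step, stacking layers would be no more expressive than a single weights matrix.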

Yet unlike regular neural networks, the neural networks in Q* also work to incorporate Q-learning. Therefore, the input layer would instead receive a state representation which it would then encode into a vector, allowing for a non-linear transformation to be applied in the hidden layers. This way, an output of the estimated Q values for possible actions is produced. As the layers progress, the representation of the outputted data becomes more abstract, since the neurons’ weights become more complex whilst also extracting more complex patterns from the data. This in turn allows the maximum cumulative reward for that given state, and thus the most efficient policies (methods), to be calculated.

The arrangement of layers within the Q* artificial intelligence neural network builds a neural network topology. Different neural network topologies arrange the layers differently, determining the way data flows between neurons and the interrelationship between layers within a neural network. This provides each topology with the ability to maximise the learning capacity of the given agent for its given task/s.

Regular neural networks use topologies such as:

Feedforward neural networks

On the simplest of scales, feedforward neural networks consist of densely connected layers, where data flow is unidirectional throughout the whole topology. As a result, inputs can only move forward through each layer once a nonlinear activation function has been applied. This then produces an output commonly used as a prediction for a specific value, allowing for flexible functional approximation. Due to its simplicity, a feedforward network can easily be understood, and can be adapted to the specific needs of the model so that it can easily be trained.

Fully connected layers

To build upon feedforward neural networks, fully connected layers consist of layers where all the neurons from one layer are connected to all the neurons on the next layer, allowing for comprehensive data flow. However, due to the large number of connections, these topologies are limited to smaller models. On the other hand, the numerous connections make them fit for use in hidden layers, as fundamentally hidden layers create a hierarchical relationship between layers, which can be created through the large amounts of output data produced from each fully connected layer.

Convolutional layers

Increasing in complexity, Q* would use convolutional neural networks, which work by reducing the input vector into a smaller output vector using filters to detect patterns in data, so that in future these patterns can be recognised by the model and in turn be used to make accurate predictions of the expected Q value.

The vector output of the input layer is then processed by a convolutional layer, in which a filter (a matrix of ‘n’ by ‘n’ weights) is applied over a receptive field (a segment of the input vector). Each element within the receptive field is multiplied by the corresponding filter weight and the products are summed to give a dot product; the process is then repeated for the other receptive fields. Since the dimensions of the filter don’t match the size of the inputted state vector, the output vector for each neuron is smaller than or equal to the input vector. Before moving on to the neuron in the next layer, a Rectified Linear Unit (ReLU) transformation is applied to the feature map, introducing nonlinearity to the model.
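As a rough sketch of the convolution and ReLU steps, the Python below slides a small filter over a two-dimensional input and sums the element-wise products for each receptive field. The input grid and filter weights are made up for illustration, and the filter is applied without flipping, as most deep learning libraries do.

```python
def convolve(grid, kernel):
    """Slide an n-by-n filter over the grid; each output is a dot product."""
    n = len(kernel)
    out_rows = len(grid) - n + 1
    out_cols = len(grid[0]) - n + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0.0
            # Multiply each element of the receptive field by its weight.
            for i in range(n):
                for j in range(n):
                    total += grid[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


def relu_map(feature_map):
    """Apply ReLU to every element of the feature map."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 3x3 state and 2x2 filter.
state = [
    [3, 1, 0],
    [0, 1, 2],
    [2, 0, 5],
]
kernel = [
    [1, 0],
    [0, -1],
]
feature_map = relu_map(convolve(state, kernel))
print(feature_map)  # [[2.0, 0.0], [0.0, 0.0]]
```

The 3x3 input shrinks to a 2x2 feature map, which is the reduction in size that the text describes.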

The outputs from the convolutional layer are then passed to the pooling layer, which transforms the data into a more manageable form. In this layer, a different filter that has no weights applies an aggregation function, taking a series of inputs and sending their maximum or average value to an output array. The output of this layer therefore has reduced dimensions without any parameters needing to be set, and so takes much less time to compute.
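Pooling can be illustrated in the same way. The sketch below assumes non-overlapping square windows and an invented 4x4 feature map; the aggregation function is passed in, so the same code gives either maximum or average pooling.

```python
def mean(values):
    return sum(values) / len(values)


def pool(feature_map, size, aggregate=max):
    """Aggregate each non-overlapping size-by-size window into one value."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            row.append(aggregate(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map.
feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 0, 5, 6],
    [1, 2, 7, 8],
]
max_pooled = pool(feature_map, 2)        # [[4, 2], [2, 8]]
avg_pooled = pool(feature_map, 2, mean)  # [[2.5, 1.0], [0.75, 6.5]]
print(max_pooled, avg_pooled)
```

Note that `pool` has no weights to learn: the only choices are the window size and the aggregation function.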

Deconvolutional layers then act as the reverse of the convolutional layers: they upsample the data to recover features and increase the dimensions again if needed. This allows the network to recover details lost in downsampling by the pooling and convolutional layers, such as spatial information (the arrangement of elements within the input/output). The output array is then processed by the fully connected layer, which is connected to the output layer. Every neuron in the fully connected layer is directly connected to every neuron in the previous layer, allowing a SoftMax activation function to classify inputs appropriately. The SoftMax activation produces a probability distribution over the different classes of Q values, and the class with the highest probability is taken as the predicted Q value.
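The SoftMax step can be sketched as follows. The three scores are invented outputs of a final fully connected layer, one per hypothetical class of Q value; subtracting the largest score before exponentiating is a common numerical-stability trick and does not change the result.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to one."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three classes of Q value.
scores = [1.0, 3.0, 0.5]
probabilities = softmax(scores)

# The class with the highest probability is taken as the prediction.
predicted_class = max(range(len(probabilities)), key=probabilities.__getitem__)
print(probabilities, predicted_class)
```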

A loss function is then applied to evaluate the success of the neural network. If the loss has been minimised, the process is repeated for different states, allowing the model to find the most effective action for each state. If not, the network adjusts its weights and repeats the process until the loss is minimised.
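To make the idea of adjusting weights until the loss is minimised concrete, here is a minimal sketch using gradient descent on a one-weight model. The data, learning rate and mean squared error loss are all assumptions chosen for the example; the text does not say which loss or optimiser Q* uses.

```python
def mse(weight, data):
    """Mean squared error of the prediction weight * x against each target y."""
    return sum((weight * x - y) ** 2 for x, y in data) / len(data)


def mse_gradient(weight, data):
    """Derivative of the mean squared error with respect to the weight."""
    return sum(2 * (weight * x - y) * x for x, y in data) / len(data)


# Hypothetical training data generated by y = 2x.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

weight = 0.0
learning_rate = 0.05
for _ in range(200):
    # Move the weight a small step in the direction that lowers the loss.
    weight -= learning_rate * mse_gradient(weight, data)

print(weight, mse(weight, data))
```

After repeated adjustments the weight settles close to 2, the value at which the loss on this data is smallest.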

The significance of Q* is undeniable due to its complexity and efficiency. Moreover, it pioneers a way for an artificial intelligence model to ‘think’. However, as defined by OpenAI themselves, an artificial general intelligence should surpass humans in most economically valuable tasks. This is practically impossible. Firstly, an artificial general intelligence would need to be trained on a vast amount of data spanning every task a human could perform, which would require large amounts of resources to gather. Even if this were possible, how could it be ensured that the method the model devises for each task is feasible and does not endanger human life? Most importantly, the fundamental concept of an artificial intelligence is to work like humans, which is inherently flawed due to artificial intelligence’s lack of sentience and thus emotion. This prevents an artificial general intelligence from exhibiting any empathy and would prevent it from making a substantial mark in, for example, the creative industry, where a deeper emotional connection with the work produced is required. Despite this, the development of Q* is an indisputable turning point for artificial intelligence and for progress in machine logic and reasoning. Although it won’t replace us, we should work in tandem with such a powerful tool in the coming years of its development.

Wafi Ali, Year 13

Exploring the Links Between Behavioural Economics and Environmental Economics

Behavioural economics analyses the deviations of human thinking and decision-making from rational choice theory, the standard economic decision-making model for Homo Economicus. In this brief article, I delve into some of the connections between concepts within behavioural economics and climate-related disaster mitigation. This is not just a topical issue but a fundamental strand of research, which can be used to explain (although it is a bit of a stretch) why many low-income countries, which in some regions may also be the most likely to suffer climate disasters, tend not to expand as quickly and catch up with countries at the top of the charts. The graph shows that lower-income and middle-income countries tend to be more vulnerable and prone to climate disasters naturally, while economically they have less capacity to adapt in accordance with their risk, whether through building disaster-proof infrastructure or by other means.

Graph source: (IMF, 2015)

Rational choice theory is closely linked to climate disaster mitigation, especially to how much potential victims are likely to invest, in preparation, in protecting themselves rather than in protection for the collective good. A study by R. Hasson, A. Löfgren, and M. Visser treated disaster mitigation as a public good investment and therefore initially used a prisoner’s dilemma matrix to depict the likelihood of investment, where ‘adaptation’ means investing for oneself and ‘mitigation’ means investing for the wider good. In the case of disaster, 100% investment in mitigation results in higher protection for everyone, and a higher overall payoff than if everyone invested 100% in themselves. The diagram below shows this, where the Nash Equilibrium is investing in oneself, according to rational choice theory.

Although many more parameters need to hold for the exact numbers in the diagram above to apply, both tables show that at both levels of vulnerability the prevailing option is to Adapt. Society therefore ends up in a Pareto-inferior outcome. The socially optimal position of full mitigation, with all investment going to mitigation, is the bottom right cell. The risk/return ratios (the loss from free riding) are constant for both levels of vulnerability, yet the overall payoffs in the low-vulnerability table are greater than in the high-vulnerability table.
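The logic of the prisoner's dilemma can be checked mechanically. The sketch below uses invented payoffs, not the figures from the Hasson, Löfgren and Visser study, chosen only so that they have the prisoner's dilemma structure; it searches for cells where neither player gains by changing their own choice.

```python
ACTIONS = ("adapt", "mitigate")

# Hypothetical payoffs: (row player's payoff, column player's payoff).
PAYOFFS = {
    ("adapt", "adapt"): (2, 2),
    ("adapt", "mitigate"): (4, 1),
    ("mitigate", "adapt"): (1, 4),
    ("mitigate", "mitigate"): (3, 3),
}


def is_nash(row_action, col_action):
    """True if neither player can do better by switching on their own."""
    row_payoff, col_payoff = PAYOFFS[(row_action, col_action)]
    row_is_best = all(
        row_payoff >= PAYOFFS[(alt, col_action)][0] for alt in ACTIONS
    )
    col_is_best = all(
        col_payoff >= PAYOFFS[(row_action, alt)][1] for alt in ACTIONS
    )
    return row_is_best and col_is_best


equilibria = [(r, c) for r in ACTIONS for c in ACTIONS if is_nash(r, c)]
print(equilibria)  # [('adapt', 'adapt')]
```

With these numbers the only equilibrium is for both players to adapt, even though both would be better off if both mitigated, which is the Pareto-inferior outcome described above.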

The reason I start with this model is that deeper study within the model actually showed that there is a negative correlation between the vulnerability of the potential victims and the amount they invest in mitigation. Therefore, in the countries most likely to be heavily affected by climate change, smaller economies closer to sea level such as Bangladesh and Sri Lanka, investment is more likely to be uncoordinated, ‘selfish’ investment, even though these are the areas with the greatest likelihood of natural disaster and the greatest need for investment in mitigation. Yet this result is not surprising: first, it is more costly, in terms of the cost of a disaster, to mitigate in the high-vulnerability scenario; and second, in both scenarios fewer than 30% of participants on average, in a separate questionnaire study, stated that they believed other participants would invest in mitigation. This certainly has implications for binding international commitments, as uncertainty regarding others’ preferences for cooperation greatly reduces an individual agent’s own willingness to engage in cooperative measures.

The Olavian Economist 2024

Fig. 1.: Phase plane trajectories of optimal sustainable and collapse solutions (solid), with a constant discount rate, and with a natural growth rate with critical depensation that follows the curve shown by the dashed line. The sustainable trajectory converges to the optimal sustainable yield (*) while the collapse solution converges to zero stock.

Behavioural economics also links into business decision-making through the cognitive bias of hyperbolic discounting, which, in simple terms, refers to the tendency to value immediate, though smaller, rewards more than larger long-term rewards, implying an inverse relationship between the discount rate and the size of the delay. Perhaps the discount rate is not actually a function of relative location in time, but rather a function of time delay. In any case, hyperbolic discounting shows up in the real world in human self-control problems, for example substantial credit-card borrowing at high interest rates alongside substantial illiquid wealth accumulation at lower interest rates. These issues are inextricably linked to resource management in environment-related sectors, fishing being one. Below, I briefly explain how the bias of hyperbolic discounting can separate the business sustainability of the committed businessman from the business collapse of the naïve hyperbolic discounter.
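A small numerical sketch may help to show why hyperbolic discounting produces self-control problems. It assumes the common textbook forms of the two discount functions, 1/(1 + r)^t for exponential and 1/(1 + kt) for hyperbolic discounting; the rewards, delays and parameter values are invented, and this is not the discount function used in the fishery model discussed in this article.

```python
def exponential_discount(delay, rate=0.5):
    """Constant discount rate: each extra period shrinks value equally."""
    return 1.0 / (1.0 + rate) ** delay


def hyperbolic_discount(delay, k=1.0):
    """Discount rate that is high for short delays and falls with delay."""
    return 1.0 / (1.0 + k * delay)


def prefers_larger_later(discount, wait):
    """Compare 100 received after `wait` periods with 120 one period later."""
    smaller_sooner = 100 * discount(wait)
    larger_later = 120 * discount(wait + 1)
    return larger_later > smaller_sooner


# Choice made far in advance (10 periods away) versus at the last moment.
for name, discount in [
    ("exponential", exponential_discount),
    ("hyperbolic", hyperbolic_discount),
]:
    print(name, prefers_larger_later(discount, 10), prefers_larger_later(discount, 0))
```

Under these assumptions the exponential discounter makes the same choice whenever they are asked, whereas the hyperbolic discounter plans to wait for the larger reward but switches to the smaller, immediate one when the moment arrives. This reversal is the behaviour attributed to the naïve planner who keeps re-optimising.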

Fig. 2.: Plot of trajectories for optimal sustainable solutions (solid), with the ‘hyperbolic’ discount function described by Eq. (12). The dashed curve shows the locus of the natural growth function F(x) = h and the location of the sustainable equilibrium, (xs, hs), is marked by an asterisk. The trajectory of a continuously re-optimising naive planner is shown by the dotted curve.

A renewable fishery resource is used in this example, but within environmental economics the same model could represent problems such as water scarcity, forestry, and the Earth’s carbon cycle. The model determines consumption (in the form of harvesting of stock) and stock pathways for a businessman who is either committed, in the sense that they follow the optimal path they planned, or naïve, in that they believe themselves to be committed but in fact re-optimise and adjust their plan continuously as time passes, believing the discount rate to be relatively high and continuing to harvest. Assuming constant elasticity of utility, two trajectories form. The solid line in Fig. 1 shows the committed businessman, whose harvesting rate is high in the initial period, when the discount rate is high. As a result, fish stocks initially decrease. Yet, as the discount rate falls over time, the businessman will reduce the harvest rate temporarily, to allow the stock to rise to its equilibrium level.

Yet the hyperbolic bias affects the naïve businessman, who succumbs to the temptation to re-optimise as time passes. This naïve businessman will discount the future hyperbolically, such that the discount rate ‘now’ is high while the future discount rate will be lower; applied consistently, this means that the harvest rate continues to increase. The dotted curve in Fig. 2 shows this trajectory. The initial harvest rate is also set to lead to the equilibrium point (*), yet at very short intervals the businessman starts on a new path to equilibrium, and every new curve represents a new intention after each change. As time passes, stock levels fall.

There are, however, some cases where a collapse pathway is stable: when the productivity of the stock is not high enough to justify the ‘investment’ (in the form of foregone consumption) necessary to return stock levels to the sustainable equilibrium. This means there is a critical initial stock level below which returns on the stock are not high enough, so that the collapse pathway provides greater utility than sustainable management. This is analogous to liquidating the asset and investing in an alternative higher-yielding asset. Fig. 4 shows this, depicting the same critical initial stock level, 1.3, with the more efficient solution once this is reached being the collapse pathway, which ends the business more quickly and saves overall opportunity cost.

Fig. 4.: Utility for both the optimal sustainable (open square) and optimal collapse (open circle) solutions, plotted against initial stock level, x(0). The critical initial stock level, xc is shown by the vertical dashed line

The hyperbolic discounting bias has been found in many fisheries, one example being the management of Canadian cod from 1977. In 1977, Canada extended its jurisdiction and took a wider role in the management of Newfoundland fish stocks, and during the 1980s fishery organisations employed virtual population analysis to estimate and forecast Atlantic cod stock. Even with this advanced population analysis, stocks were overestimated in 1982, 1983, 1984, 1986, and 1991. These overestimations encouraged harvesting at higher than optimal levels and were one of the principal factors in the demise of the northern Atlantic cod. The consistency of the overestimations, even with innovation in technology, was strongly indicative of the hyperbolic bias among profit-seekers.

In conclusion, behavioural economics clearly has multiple intricate links with environmental decision-making and resource management, yet many would question whether this behavioural failure on our part could in fact be considered market failure. Some argue that market failure itself leads to behavioural failure, which leads to continued market failure, fundamentally because people are not usually isolated decision-makers; rather, their decisions are made in the context of markets, rewards, habits, and social rules. Tests of rational behaviour must therefore be considered holistically, alongside the experience provided by an institution, and researchers interested in policymaking should think about the power of rationality spill-overs and rationality crossovers. Studies have shown that arbitrage within an economy can have immediate effects in pushing people’s behaviour towards rational choice theory. Policymakers may therefore want to consider whether market-like arbitrage can reduce behavioural failures in the environmental sector, or whether artificial, arbitrage-like government intervention could be used to reduce them. Yet, when questioning market failure and behavioural failure, many more questions arise. For example, under the theory of second-best, if behavioural failure and market failure exist simultaneously, could solving one but not the other decrease social welfare even more? Out of the many behavioural failures, what if there is some degree of behavioural complementarity or substitutability across the biases? Does that mean we must intervene to limit ineffective decision-making with regard to all the biases, or just some, and in what proportions? What is the curvature of the probability weighting function for each person in the target market?
This topic is clearly one in which research has only made a ripple on the surface, with a colossal expanse of findings yet to be discovered.

Foreign Aid: Good or Bad?

Foreign aid is the transfer of capital, goods, and services from one government (or aid agency) to a recipient country. As the gap between the world’s richest and poorest continues to grow, aid has been subject to great scrutiny as people question how effective it has been in global redistribution efforts. Within the foreign aid discussion, there is a range of differing voices and viewpoints to consider. Some advocate a ‘laissez-faire’ approach, arguing that the risk of recipient countries becoming dependent on aid far outweighs any possible benefits (so developing countries should be left alone to allow the market mechanism to distribute resources). Others argue that foreign aid corrects market failures and provides ‘one big push’ for individuals living in poverty within developing countries, providing them with more opportunities. Foreign aid has proven to be successful in some instances; however, ignorance on the part of aid workers and local policymakers often causes aid to be ineffective (and in some cases completely counterproductive).

‘Us’ and ‘Them’

Working with an Afghan Women’s Association in 2002, Dr Maliha Chishti circulated a needs assessment survey asking Afghan women what they needed from the Toronto-based NGO. Upon receiving an overwhelming response asking for access to basic healthcare, Dr Chishti drew up a plan for a mobile health clinic with a modest budget. However, her proposal was denied by the Canadian government, which offered her a much larger budget on the condition that she would instead provide a human rights training program for Afghan women. After arriving in Afghanistan, Dr Chishti found many other aid agencies offering workshops identical to hers, and yet there was not a single mobile health clinic to be seen. If the aid agencies had chosen to listen to the requests of the Afghan women, their resources could have made a huge impact; instead, they chose what they believed was necessary for the local women, leading to a wasteful duplication of resources.

Aid establishments determine the priority to offer one program instead of another based on their wishes regarding the future of a developing country. Ignoring the local citizens, aid agencies are often able to step in and implement policies whilst knowing very little about the local context (e.g. the role of religion and tradition, the political history, and the language) with the goal of reforming these countries to become more like their own. This can cause aid to be completely ineffective: it can lead to wasteful expenditure, unanticipated responses to changes, or simply a harmful power imbalance that can lead to further consequences. By dismantling this assumption that we know what is best for them, aid is more likely to work in favour of the recipients, as the policies revolve around providing help where it is wanted and needed by the local citizens rather than boosting the egos of the developed world.

A Guide to Entitlement

In some instances, aid is not just ineffective, it can harm economies.

Grants are often given to developing countries with the aim of injecting money into the economy (e.g. via government investment projects that have multiplier effects) and bringing about growth. However, individuals tend to assign value to things that come at an expense to them, and since these grants are viewed as ‘free’ funding for their governments, some economists argue that individuals subject to governments in receipt of grants care less about the actions of their governments. This allows corruption to run rampant, with a clear example being Jacob Zuma (former president of South Africa), who spent US$16 million on security upgrades for his house. The expenditure may have seemed somewhat reasonable at first; however, further investigation revealed that a large proportion of the money was put towards constructing himself a swimming pool. When questioned, Zuma claimed it was a ‘firepool’ that served as a fire protection device, yet reporters found the ‘firepool’ to bear no significant difference from an ordinary swimming pool.

Although Zuma did eventually have to repay the government money he had spent, he faced no real disciplinary action and his presidency continued for a year. Had Zuma’s actions been presented as though taxpayer money had been used to fund his extravagant expenditures, he would likely have faced repercussions. However, since it was money donated to the country, individuals were much less concerned. In both scenarios, the gravity of the situation should be equal, as Zuma had used money intended to increase the welfare of the citizens for his own private gain. However, individuals tend to be loss-averse. Since tax represents a direct loss for individuals, they feel more entitled to a say in what the money is spent on. Aid income, on the other hand, is simply a gain, and individuals typically care more about losses than gains. It could be argued, therefore, that aid switches off accountability demands.

I am by no means suggesting the solution is to raise taxes in developing countries. Those earning lower incomes already tend to be the most affected by existing indirect taxes (such as VAT and excise duties). Perhaps if individuals were made more aware of how much tax they were paying, they would feel more inclined to demand that their governments take action and implement policies that stimulate growth across the country. In turn, corruption would be much harder to ignore, and over time aid contributions could be redesigned to work with the citizens of an economy.

Success stories

‘Gavi’ (the Global Alliance for Vaccines and Immunisation) was established in 2000 by the Bill & Melinda Gates Foundation with the aim of ensuring access to vaccines for all children around the world. Although countless new and effective vaccines were entering the global market, many developing countries simply could not afford them. Gavi stepped in to address this market failure. As the organisation grew in size (by working with governments and NGOs as well as various partners such as the World Health Organization, UNICEF, and the World Bank), it became increasingly able to negotiate more affordable vaccine prices for developing countries. Additionally, it shares the costs of implementing these vaccines and, as a result, has helped to halve childhood mortality rates by averting more than 17.3 million future deaths. As children become healthier, their families, communities, and countries are in turn more able to become economically prosperous, helping to boost the economies of lower-income countries.

Gavi has managed to successfully overcome many of the issues typically associated with foreign aid. By working with local governments, Gavi ensures systems are in place to efficiently distribute the vaccines once they are delivered. By directly providing vaccines, there is no risk of corrupt politicians being the only ones to benefit from the aid. As of 2022, 19 countries have transitioned out of Gavi support and are now able to fully self-finance the vaccine programmes.

Conclusion

The world consists of countries, each at their own stage of development, working to sustain their growth and improve the future prospects and living conditions of their citizens. There is no ‘end goal’ that these countries are trying to achieve, as even some of the most developed countries are still pursuing economic growth. Foreign aid, when implemented after careful consideration and meticulous planning, can help to ensure there is equal access to opportunities for all, regardless of the level of the development of their country. Working directly with local governments and monitoring the effects based on how the policies work with respect to standards and expectations set by local people is vital in order to effectively help the developing world towards a path of economic prosperity.

The Impact of Climate Change on Health Care Services: A Looming Crisis

Introduction

Climate change is often regarded as one of the most pressing global challenges we currently face. As its consequences become more evident in the frequency of extreme weather events and in rising temperatures and sea levels, its impact goes beyond environmental concerns, posing significant challenges to healthcare services across the world. Though the infrastructure of hospitals and other healthcare providers across the world is likely to be tested severely, it is apparent that certain regions will suffer more profoundly.

Many of these regions house low and middle-income countries with inadequate healthcare provision, raising concerns about the accessibility of treatments to their populations[1].

Global Warming

With global average temperatures set to rise by 1.3 ℃ by the first half of the 2030s, extreme heat illnesses are becoming increasingly common, particularly in Central Europe (see Figure 1). As a result of the unprecedented heatwaves in June and July of 2022, Europe saw 61,000 heat exposure deaths with a 57% rise in heatwave exposure for vulnerable groups[2].

Most of these deaths were due to cardiopulmonary issues resulting from the excess strain put on the heart. When ambient temperatures exceed 37 ℃ – our core body temperature – blood flow is diverted towards the skin so that excess heat can be radiated out. To maximise the heat loss, the heart is required to pump two to four times as much blood each minute as it does on cooler days[3]. This increases the risk of cardiovascular diseases like heart arrhythmia and cardiac arrest.

In some cases where the intestines are deprived of oxygen, their lining can become damaged and more permeable to endotoxins – a type of lipopolysaccharide found on the outer membrane of Gram-negative bacteria[3]. By contributing to inflammatory responses, endotoxins can lead to complications like sepsis, disrupting cell homeostasis and organ function. However, endotoxins are not the only inflammatory mediators that are released by heat cytotoxicity[1]. In the pancreas, endothelial layers can be damaged, leading to leukocyte infiltration and pancreatic inflammation.

Furthermore, dehydration from extreme heat also plays a role: as blood volume falls, the heart needs to contract more forcefully to compensate for the fall in the amount of blood reaching body cells. To make sure the metabolic needs of cells are still met, the heart rate and blood pressure rise, increasing the risk of damage to the endothelial lining of arteries.

Fig. 1.: Trends in heat related mortality incidence (annual death per million per decade) in Europe for the general population (2000-20)

Source: The Lancet (2022)

Pollution and Respiratory Diseases

It is also important to note that extreme heat can exacerbate pre-existing respiratory illnesses, especially in the elderly. Higher temperatures can speed up reactions involving nitrogen oxides and volatile organic compounds (VOCs), released from car exhausts, wildfires and other sources, to form ground-level ozone[4]. Unlike other ambient air pollutants, ozone concentrations have failed to show a decline, and it is estimated that the number of premature deaths linked to ozone pollution will increase into the 10,000s globally by 2030[5].

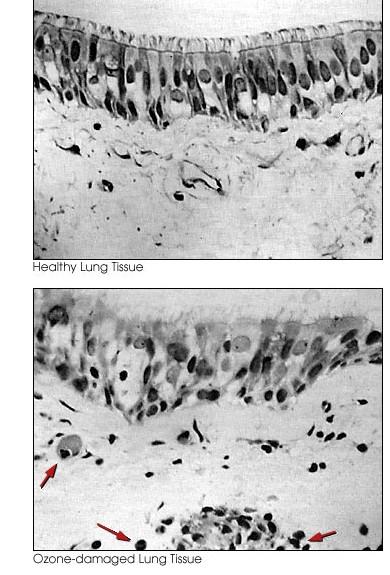

Via oxidative injury (damage from an excess of radicals), ozone can react with molecules in the airway lining to cause acute inflammation, as shown in Figure 2. Responses to this include the build-up of fluid (oedema) and constriction of muscles, trapping air in the alveoli and making it harder to breathe[4].

Acute exposure to ozone has been associated with increased hospital admissions for respiratory diseases like COPD (Chronic Obstructive Pulmonary Disease), asthma and pneumonia, especially in the Asia-Pacific region, with increased damage to lung tissues. With the added consequences of heat waves, mortality rates from pollution can rise by up to 175%[5].

Fig. 2.: Comparison of Healthy Lung Tissue to Ozone-damaged Lung Tissue

In the control image (upper) from the lung of a person exposed only to air, the tiny cilia that clear the lungs of mucus appear along the top of the image in a neat and regular row. In the lung exposed to ozone added to the air for four hours during moderate exercise, many cilia appear missing and others appear. Magnification: x400. Source: the American Thoracic Society, from American Review of Respiratory Diseases, Vol. 148, 1993, Robert Aris et al., pp. 1368-1369.

The Spread of Water-Borne Diseases

Studies have shown the frequency of extreme weather events to correlate with the risk of water-borne diseases, for instance malaria and dengue fever[2]. As precipitation levels rise with rising temperatures, the water cycle intensifies, leading to frequent flooding, especially in regions like Southeast Asia (Figure 3). Flooding thus becomes a more alarming risk, as it can contaminate drinking water sources and recreational water sites. Storm surges can also damage water treatment plants when runoff exceeds their capacity. Subsequently, exposure to water-borne diseases increases as household water supplies are contaminated by animal waste, pollutants, and pesticides, all of which pose a risk to health. In September 2022, Pakistani health officials reported over 90,000 cases of diarrhoea (commonly due to E. coli infections) in one region severely affected by the 2022 flooding[3].

Fig. 3.: Map showing the different levels of exposure to flooding

Source: Rentschler, J, Salhab, M and Jafino, B. 2022. Flood Exposure and Poverty in 188 Countries. Nature Communications.

Malaria

Malaria – the world's deadliest parasitic disease, infecting 257 million people in 2021 – is mostly spread by the anopheline mosquito. Long-term variations in temperature, humidity, and rainfall patterns, which are primarily linked to changes in the El Niño cycle, have a significant impact on the longevity and development of mosquitoes.

Consequently, malaria transmission has increased, particularly in areas of higher altitude such as the Andes and the Himalayas, resulting in cases in areas where the incidence was previously quite low. This poses problems for remote areas in these regions, where healthcare provision is already limited[6].

Moreover, in dry climates, heavy rainfall can provide good breeding grounds for mosquitoes by creating water pools. Increased humidity and droughts can also turn rivers into strings of pools. Because they are stagnant, these bodies of water provide ideal conditions for eggs to hatch into larvae, increasing the number of vectors able to transmit the disease. This has been the case in Southern Africa, where countries have experienced epidemics after periods of unusual rainfall[7].

V. vulnificus

Associated with sepsis and amputations, non-cholera vibrio bacteria can lead to severe gastrointestinal, skin and eye infections. Increases in global sea temperatures over the last few years have meant more coastal areas are exposed to brackish waters – suitable breeding grounds for the vibrio bacteria[8]. Despite originally being endemic to the south-eastern USA, Vibrio spp. infections have emerged in the Baltic regions, and in 2020 research rated the suitability of conditions in the Baltic Sea as a breeding ground at 96.6%-100%[2].

One infection of concern is V. vulnificus. Able to infect through small skin lesions, it can quickly become necrotic, with symptoms showing within as little as 48 hours. As the most pathogenic of the vibrio genus, it is also the most expensive to treat, with costs of $320 million per year in the US[8]. Due to the lack of high-quality epidemiological data, it is hard to map the incidence and cost of the infection to healthcare services in different regions. Current predictions are based on the probable presence of Vibrio spp. bacteria rather than the disease risk[8].

Food Security and Malnutrition

Climate change can also influence diets and nutrition, with global warming and erratic rainfall patterns posing risks to potential crop yields. Low-income and middle-income countries in Africa and Southeast Asia are said to experience the largest reductions in food availability, increasing deaths linked to being underweight[9].

Recent studies have shown a negative correlation between carbon dioxide levels in the atmosphere and the mineral content of plants. For instance, in legumes and certain grains, elevated carbon dioxide levels were linked with an increased risk of iron and zinc deficiencies. This can affect nearly two billion people in low-income and middle-income countries in Southeast Asia and Africa who rely on these crops for nutrition. In high-income countries, reduced fruit and vegetable consumption due to climate change has become a risk factor for non-communicable diseases like type 2 diabetes[9].

In addition, economic burdens in low-income populations can be expected to rise as the price of staple crops increases, driving malnutrition as well as obesity-linked diseases, as people resort to cheaper and less nutritious foods.

Fig. 4: Maps showing the prevalence of stunting, wasting and undernutrition from 2010 to 2019 DHS across the 31 Sub-Saharan African countries. (A) Stunting (%) (B) Wasting (%) (C) Underweight (%)

Source: ResearchGate (I. Amadu, A. Seidu, E. Duku)

Stunting

As one of the leading indicators of malnutrition, stunting is also its most prevalent form, with over 149.2 million children suffering from it globally in 2020[9]. Stunting can begin in the uterus, with inadequate maternal nutrition and poor antenatal care resulting in an unhealthy intrauterine environment. Early pregnancies also increase the risk of stunting significantly, as competition between the foetus’ growth and the mother’s pubertal development compromises the growth of both. This poses a challenge to healthcare services across Sub-Saharan Africa (Figure 4), where the adolescent pregnancy rate, at 143 per 1000 girls, is the highest. This heightens not only the risk of stunting but also maternal and infant mortality in the region, where over 26.5 million people face severe food insecurity[1].

Impact of Climate Change on Mental Health

A commonly forgotten issue is the impact of climate change on mental health[10]. After Hurricane Katrina in 2005, it was reported that poor mental health rose by 4% in the areas affected[11]. The destruction, loss and displacement from extreme weather events can lead to people experiencing a range of mental health problems, from PTSD (Post Traumatic Stress Disorder) to suicidal thoughts. The onset of psychological distress is attributed to fears surrounding the prospects of homelessness, unemployment, relocation, and the potential lack of support when healthcare services are damaged[12].

Research has shown that rises in ambient temperatures have increased mental health-related emergency department visits[13]. Acting as an exogenous stressor, heat itself is not specific to a particular condition and exacerbates an array of issues, e.g. schizophrenia, anxiety and PTSD. One mechanism relates to sleep deprivation during extended periods of uncomfortable, elevated temperatures, which increases negative emotional responses to stressors. Another possible pathway is continuous discomfort heightening feelings of hopelessness and fear in anticipation of climate change and potential extreme events[13].

Conclusion

The effects of climate change on health are multifaceted, touching various aspects of physical, mental, and social wellbeing. There is growing concern over whether healthcare services have enough funding and suitable infrastructure to cope with the impending problems. Without coordinated and efficient action, we are likely to see the gap between developed and developing countries grow even bigger in multiple ways, as current healthcare systems are already struggling to cope with today’s issues.

Bibliography

1. (No date) Effects of climate change on malnutrition in Sub-Saharan ... - linkedin. Available at: https://www.linkedin.com/pulse/effects-climate-change-malnutrition-sub-saharanafrica-ebrima-barrow (Accessed: 19 July 2023).

2. van Daalen, K. et al. (2023) Towards a climate-resilient healthy future: The Lancet countdown in Europe [Preprint]. doi:10.5194/egusphere-egu23-13565.

3. Thornton, A. (2021) How does heat exposure affect the body and mind?, Boston University. Available at: https://www.bu.edu/articles/2021/how-does-heat-exposure-affect-the bodyand-mind/ (Accessed: 19 July 2023).

4. American Lung Association (no date) Ozone. Available at: https://www.lung.org/clean-air/outdoors/what-makes-air-unhealthy/ozone (Accessed: 19 July 2023).

5. Ozone pollution is linked with increased hospitalizations for cardiovascular disease (2023) ScienceDaily. Available at: https://www.sciencedaily.com/releases/2023/03/230310103451.htm (Accessed: 19 July 2023).

6. Hassan, S. (2022) Malaria and diseases spreading fast in flood-hit Pakistan, Reuters. Available at: https://www.reuters.com/world/asia-pacific/pakistan-flood-victims-hit-bydisease-outbreak-amid-stagnant-water-2022-09-21/ (Accessed: 19 July 2023).

7. Climate change and malaria - a complex relationship (no date) United Nations. Available at: https://www.un.org/en/chronicle/article/climate-change-and-malaria-complexrelationship (Accessed: 20 July 2023).

8. Archer, E.J. et al. (2023) ‘Climate warming and increasing vibrio vulnificus infections in North America’, Scientific Reports, 13(1). doi:10.1038/s41598-023-28247-2.

9. Fanzo, J.C. and Downs, S.M. (2021) ‘Climate change and nutrition-associated diseases’, Nature Reviews Disease Primers, 7(1). doi:10.1038/s41572-021-00329-3.

10. de Onis, M. and Branca, F. (2016) ‘Childhood stunting: A global perspective’, Maternal & Child Nutrition, 12, pp. 12–26. doi:10.1111/mcn.12231.

11. Kessler, R. (2006) ‘Mental illness and suicidality after Hurricane Katrina’, Bulletin of the World Health Organization, 84(12), pp. 930–939. doi:10.2471/blt.06.033019.

12. How climate change affects our mental health, and what we can do about it (2023) Commonwealth Fund. Available at: https://www.commonwealthfund.org/publications/explainer/2023/mar/howclimate-change-affects-mental-health (Accessed: 19 July 2023).

13. Schmahl, C. and Hepp, J. (2022) ‘Faculty opinions recommendation of association between ambient heat and risk of emergency department visits for Mental Health Among US Adults, 2010 to 2019.’, Faculty Opinions – Post-Publication Peer Review of the Biomedical Literature [Preprint]. doi:10.3410/f.741736435.793595764.

George Clements

From above the grocer’s to the steps of Number 10: The Rise of the Iron Lady

It seems only appropriate that, shortly after the 10th anniversary of her passing, we should remember and pay homage to Margaret Thatcher, the first female Prime Minister of the United Kingdom. There can be no denying that during her decade-long tenure as PM, she oversaw significant changes to our country’s identity, both socially and economically; the footprint she left behind following her departure was certainly not a faint one. Whatever your views on Mrs Thatcher, she was undeniably a woman of principle, and her strong, decisive leadership led to her being commonly referred to as “The Iron Lady”. But how did it all begin? In this article, we explore how a middle-class grocer’s daughter from Grantham became such a prominent and influential figure in politics and British society.

From Grantham to Oxford

Margaret Hilda Roberts was born on 13th October 1925. She was the daughter of a successful small businessman, who owned a tobacconist's and grocery store in the Lincolnshire market town of Grantham. She attended Huntingtower Road Primary School and won a scholarship to Kesteven and Grantham Girls' School, a grammar school, serving as Head Girl in 1942-43. Roberts was accepted for a scholarship to study chemistry at Somerville College, Oxford, a women's college, starting in 1944; after another candidate withdrew, however, she entered Oxford in October 1943. It was here that she became involved with politics, serving as the President of the Oxford University Conservative Association in 1946. Despite the scientific nature of her degree, it is believed that she drew significant inspiration from Austrian economist Friedrich Hayek’s The Road to Serfdom while studying at university. This book is widely known for condemning economic intervention by government, which may have influenced her to strive towards the “Thatcherite” economic policies for which she is so well known. She graduated in 1947 with a second-class degree, specialising in X-ray crystallography.

From Oxford to Westminster – the dawn of a political career

After graduating, Roberts moved to Colchester in Essex to work as a research chemist for BX Plastics. In 1948, she applied for a job at Imperial Chemical Industries (ICI) but was rejected after the personnel department assessed her as "headstrong, obstinate and dangerously self-opinionated". Roberts joined the local Conservative Association and attended the party conference at Llandudno, Wales, in 1948, as a representative of the University Graduate Conservative Association. One of her Oxford friends was also a friend of the Chair of the Dartford Conservative Association in Kent, who were looking for candidates. Officials of the association were so impressed by her that they asked her to apply, even though she was not on the party's approved list; she was selected in January 1950 (aged 24) and added to the approved list. It was during this time that she met her husband-to-be, Denis Thatcher, a wealthy businessman.

In the 1950 and 1951 general elections, Roberts was the Conservative candidate for the Labour seat of Dartford. The local party selected her as its candidate because, though not a dynamic public speaker, Roberts was well-prepared and fearless in her answers. A prospective candidate, Bill Deedes, recalled: "Once she opened her mouth, the rest of us began to look rather second-rate." She attracted media attention as the youngest and the only female candidate; in 1950, she was the youngest Conservative candidate in the country. She lost on both occasions to Norman Dodds but reduced the Labour majority by 6,000 and then a further 1,000. During the campaigns, she was supported by her parents and by her future husband Denis Thatcher, whom she married in December 1951. Denis funded his wife's studies for the bar; she qualified as a barrister in 1953 and specialised in taxation. Later that same year their twins Carol and Mark were born. After narrowly missing selection as the Conservative candidate for the 1955 Orpington by-election, she was finally elected to parliament at the 1959 election, following a tireless campaign, as the Conservative Member of Parliament for Finchley.

From MP to PM – rising through the ranks

Thatcher’s talent and drive led to her being promoted to the frontbench as Parliamentary Secretary to the Ministry of Pensions and National Insurance by Prime Minister Harold Macmillan. After the 1966 election defeat, she was not appointed as a member of the Shadow Cabinet, although her influence remained strong, with regular speeches criticising Labour’s high-tax policies “as a move towards communism”. During this time, she also voted in favour of decriminalising male homosexuality and in favour of legalising abortion, one of only a few Conservative MPs to do so. She was appointed as a transport minister in the Shadow Cabinet in the late 1960s, before being promoted to Education Secretary following Ted Heath’s election victory in 1970. In this role, she supported the retention of grammar schools, and was famously involved in the 1971 decision to discontinue the provision of free milk to all children aged 7-11, which gave rise to her well-known nickname “the Milk Snatcher”. However, it later emerged that she herself was not in favour of this policy and was instead persuaded by the Treasury to introduce it.

Following Heath’s election loss in 1974, Thatcher was not initially seen as the obvious replacement, but she eventually became the main challenger, promising a fresh start. Her main support came from the parliamentary 1922 Committee and The Spectator, but Thatcher's time in office gave her the reputation of a pragmatist rather than that of an ideologue. She defeated Heath on the first ballot, and he resigned from the leadership. In the second ballot she defeated Whitelaw, Heath's preferred successor. Thatcher's election had a polarising effect on the party; her support was stronger among MPs on the right, among those from southern England, and among those who had not attended private schools or Oxbridge. She became Conservative Party leader and Leader of the Opposition on 11 February 1975. During her time in this role, she continued to push for a wide range of neoliberal economic policies, criticising Callaghan’s Labour government for the high unemployment rate and the hundreds of public-sector strikes impacting local services in the 1978-9 “Winter of Discontent”.

With much frustration at the high rate of inflation and a lack of confidence about the direction of the country, support for the government began to weaken. A vote of no confidence in Callaghan’s government was called in early 1979, which he lost, resulting in a general election. Throughout her campaign, Thatcher continued to exploit the failures of Callaghan’s government, promising a new style of leadership, a reduction in the size of the state, and various other neoliberal economic reforms. She focused on tackling inflation and the militant unions who had caused so much havoc the previous winter and, of course, advocated the Right to Buy scheme, whereby tenants would be able to purchase their council-owned homes. Her campaign also centred on attracting the traditional Labour-voting working class, amongst whom her promises of major social and economic reforms proved popular.

Her election manifesto was published on 11th April 1979, outlining five key policy pledges:

1. “to restore the health of our economic and social life, by controlling inflation and striking a fair balance between the rights and duties of the trade union movement”

2. “to restore incentives so that hard work pays, success is rewarded and genuine new jobs are created in an expanding economy”

3. “to uphold Parliament and the rule of law”

4. “to support family life, by helping people to become home-owners, raising the standards of their children’s education and concentrating welfare services on the effective support of the old, the sick, the disabled and those who are in real need”

5. “to strengthen Britain’s defences and work with our allies to protect our interests in an increasingly threatening world”