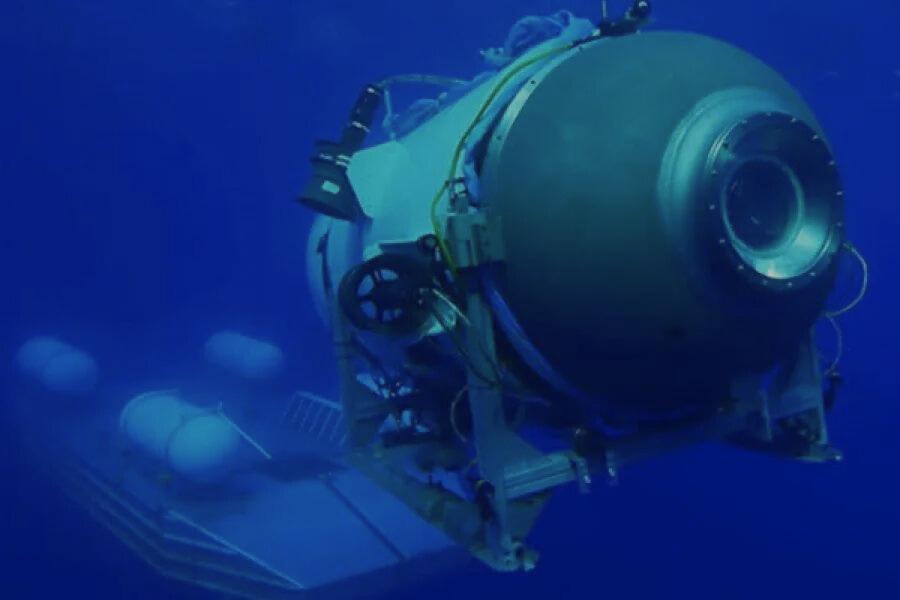

WHAT WE SHOULD LEARN FROM THE TRAGIC FAILURE OF THE TITAN

Thought Sparks

Introduction

My last “Thought Spark” was published before the dreadful implosion of the Titan submersible. Then, I was talking about intelligent failures. This time around, let’s focus on what my colleague Amy Edmondson would call a preventable complex failure.

In her brilliant forthcoming book, “Right Kind of Wrong,” Amy Edmondson discusses three kinds of failures. There are the potentially intelligent ones. Those teach us things in the midst of genuine uncertainty, when you can’t know the answer before you experiment. There are the basic ones, which we wish we had never experienced and would love to get a ‘do-over’ on. And then there is the class of failure that the doomed Titan submersible falls into – the preventable complex systems failure.

Three kinds of failures and why this one has lessons for us all