As a biologist, I find the concept of DNA ingrained in my psyche, just as a specific trait or characteristic is coded in each individual’s genes. I am regularly asked what makes the RGS community so special, so distinctive, and this edition of The Annual goes a long way to answering that question. The RGS has existed for well over 500 years; the School has innovated, evolved and developed and yet – irrespective of the time – our values have endured. Of these, alongside our pride in our culture of inclusivity and respect, scholarship has defined who we are and what we do. It is in our DNA.

In this light, I was particularly proud that our Inspection earlier in the year identified this aspect as one of the two ‘Significant Strengths’ we were awarded. The ISI Inspection report noted: “The strong academic culture leads to pupils who readily engage in critical thinking and deep learning, and display intellectual curiosity.” This culture, the inspectors felt, permeated every element of school life. As I read through this edition of The Annual, this passion for learning absolutely shines through. The depth, diversity and complexity of the research impress; however, it is the sheer enthusiasm and excitement for learning which shows that, far from mere rhetoric, our philosophy of Scholarship for All remains a reality.

I would like to take this opportunity to congratulate my Head of Scholarship, Mrs Tarasewicz, and all those students who have contributed to The Annual; I hope that all who read it are inspired. Scholarship is in our DNA at the RGS and I have absolute confidence that it remains, and will continue to remain, at the very heart of all we do, and all we strive for.

Dr JM Cox Headmaster

I am delighted to introduce the 2025 edition of The Annual, celebrating an incredibly exciting year of Scholarship amongst our younger students here at the RGS.

Scholarship is one of our core school values and we are extremely proud of the culture of Scholarship for All that we have created, encouraging all of our students to develop intellectual curiosity and creativity, as well as academic ambition. This was recognised as a ‘Significant Strength’ by the inspectors when they visited in January 2025 and it is certainly something that has been embodied by the students that you will encounter in the following pages.

For several years, the Senior Independent Learning Assignment (the ILA) has been a flagship part of our Scholarship for All programme, offering students in the Lower Sixth the opportunity to research a topic of their own choosing, with subject specialist support from a member of the teaching staff.

This year, for the first time, we were very excited to launch the Junior ILA and so to extend this opportunity to members of the Third and Fourth Form. Initial interest far surpassed our expectations, with almost 80 students signing up. In total, 40 students successfully submitted finished projects, representing remarkable individual and collective achievements. All 40 of these students were invited to share their projects with a guest list of staff, parents and fellow students at the Junior ILA Celebration Evening, culminating in the presentation of the inaugural Junior ILA awards.

The very best of this year’s Junior ILAs have been published in full over the following pages, including the projects of all the students who were Commended and Highly Commended, as well as those produced by our award winners. With such a remarkable range of titles, I am sure you will agree that there is something to capture everyone’s interest.

At this point I need to also pay tribute to the remarkable team of 21 Sixth Form students who acted as mentors for their younger counterparts. This support of students by students was the main point of difference between the Junior ILA and the Senior ILA, where supervisors are drawn from the staff body. It turned out to be one of the most successful and inspiring features of the programme and I am incredibly grateful to the mentors for sharing their time, enthusiasm and experience with the younger pupils. Several mentors attended the Celebration Evening where they celebrated their mentees’ achievements and were also themselves celebrated.

Finally, I would like to say a huge thank you to Georgina Webb, our Partnerships and Publications Assistant, who has been responsible for putting together this beautiful publication.

I do hope that you enjoy reading it.

Mrs Henrietta Tarasewicz Head of Scholarship

With thanks to: Mr Dunscombe, Mr Wright and Miss Goul-Wheeker for acting as judges at the Junior ILA Celebration Evening, and to Mrs Webb for producing this publication.

This essay was commended at the Junior ILA Celebration Evening

Motorsport has a variety of applications, one of which is in the cars that people drive every day. Its innovative technology allows drivers across the world to be safer and faster. The purpose of this research essay is to find out how much, or how little, motorsport affects road cars, and how motorsport is useful to the car industry. We found that most of the technology came from motorsport. However, there have been other influences: for example, the aerospace industry has provided the automobile industry with anti-lock braking and new composites.

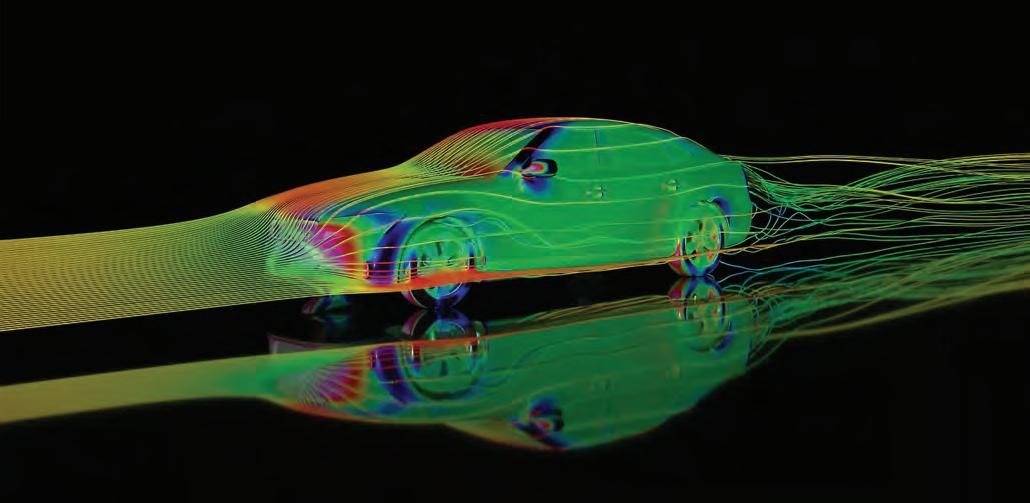

Cars are the most used form of transport in the world (Armstrong, 2022). Ever since cars were invented in 1886, motorsport has been a testbed for road-car technology. The intense competition between racing teams to research and develop breakthroughs in automotive engineering has led to improved performance, efficiency and safety for drivers worldwide.

R&D is an abbreviation of Research and Development, and motorsport and R&D go hand in hand. Whether at the top level of Formula 1 or on the tough stages of the World Rally Championship, motorsport relies on constant innovation. This ongoing progress improves performance, promotes sustainability and makes the sport more entertaining for fans around the world.

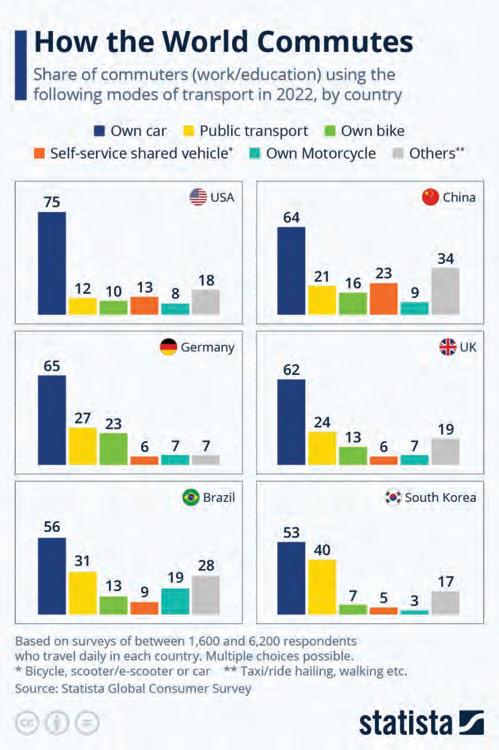

Aerodynamics is one of the most important aspects of a car. If a car is not streamlined, it will not be very efficient. For example, a Tesla Model 3 has a drag coefficient (Cd) of 0.203 (Rawlins, 2024) and achieves 132 MPGe (miles per gallon equivalent) (Brown, 2023), whereas the Mercedes Vision EQXX concept (Cd 0.16) achieves around 282 MPGe (Unknown, unknown) with a smaller battery. Even a tiny difference in the shape of a car can be very costly in terms of efficiency.

Figure 1: CFD (Computational Fluid Dynamics) predicts airflow around a car. (Red = high pressure, blue = low pressure.)

The Panhard CD LM64, built for Le Mans, is one of the most aerodynamic circuit cars ever made, with a drag coefficient of 0.12 (Gitlin, 2020). The Eco-Runner 8 is the most aerodynamic car ever, with a drag coefficient of only 0.045 (Gitlin, 2020). To put that into context, the most aerodynamic shape in nature is a teardrop, which has a drag coefficient of 0.04 (Tallodi, 2024).

The EQS by Mercedes is the most aerodynamic series-production road car ever, with a drag coefficient of 0.20 (Harrison, 2021). The drag coefficient is worked out using the equation

$$C_d = \frac{F_d}{\tfrac{1}{2}\,\rho\,A\,V^2}$$

where $F_d$, $\rho$, $A$ and $V$ stand for drag force, fluid density, reference (frontal) area and velocity, respectively. Rearranged, the equation shows that drag force is proportional to frontal area as well as to the drag coefficient, so reducing the frontal area is one way to reduce drag (Explained, 2023). Examples of cars whose aerodynamics have been influenced by motorsport research are the Toyota Prius and the Tesla Model 3, which have frontal areas of around 2.2 m² (Explained, 2023). Motorsport has aided this development by researching CFD (computational fluid dynamics) and wind tunnels for these companies to use.
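To show what the drag equation means in practice, here is a short Python sketch that uses it in both directions: rearranged to give drag force, $F_d = \tfrac{1}{2}\rho A V^2 C_d$, and as written above to recover $C_d$. The air density (1.225 kg/m³, standard sea-level air), the 70 mph test speed and the comparison Cd of 0.30 are assumptions chosen for illustration, not figures from the sources above. Both cases also assume the same 2.2 m² frontal area quoted for the Prius and Model 3.

```python
# Sketch of the drag equation from the text.
# Assumptions (not from the essay's sources): sea-level air density of 1.225 kg/m^3,
# a 70 mph test speed, a shared 2.2 m^2 frontal area, and a hypothetical Cd of 0.30.

AIR_DENSITY = 1.225    # kg/m^3, standard sea-level air (assumed)
MPH_TO_MS = 0.44704    # 1 mph = 0.44704 m/s


def drag_force(cd: float, frontal_area: float, speed: float, rho: float = AIR_DENSITY) -> float:
    """Drag force in newtons: F_d = 0.5 * rho * A * V^2 * Cd (speed in m/s, area in m^2)."""
    return 0.5 * rho * frontal_area * speed ** 2 * cd


def drag_coefficient(force: float, frontal_area: float, speed: float, rho: float = AIR_DENSITY) -> float:
    """The equation as written in the text: Cd = F_d / (0.5 * rho * A * V^2)."""
    return force / (0.5 * rho * frontal_area * speed ** 2)


speed = 70 * MPH_TO_MS   # about 31.3 m/s
area = 2.2               # m^2, frontal area quoted for a Prius or Model 3

for label, cd in [("Cd 0.20 (as quoted for the EQS)", 0.20), ("Cd 0.30 (hypothetical)", 0.30)]:
    print(f"{label}: {drag_force(cd, area, speed):.0f} N at 70 mph")
```

Under these assumptions the lower drag coefficient gives roughly 264 N of drag and the higher one roughly 396 N, half as much again at the same size and speed. Because speed is squared in the equation, doubling the speed quadruples the drag force.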

The first wing in motorsport was introduced when Michael May, a driver and engineer, came to the Nürburgring on 27 May 1956 in his Porsche 550 Spyder fitted with an upside-down aeroplane wing. On the straights the wing would be set flat to reduce drag, but in the corners he would tilt it to create more downforce (Mansell, 2023).

An example of active aerodynamics in motorsport is the Drag Reduction System (DRS) in Formula 1: a flap in the rear wing opens, letting air pass straight through and therefore reducing drag. Active aerodynamics can also be found in road-going vehicles. In the Porsche Panamera, for example, a section of the rear opens and expands into a rear wing, creating more downforce. In higher-performance cars such as the Bugatti Chiron, the rear wing does not need to open, as it can simply be raised using hydraulics, and it can also serve as an air brake. The 911 GT3 RS has an F1-style rear wing, where the fixed wing has a flap that tilts up to let air pass through it.

Rally cars use fixed wings because, with so many corners on a stage, they prioritise cornering over top speed. This can be clearly seen in homologated road cars such as the Subaru WRX STI and the Mitsubishi Lancer Evolution. However, fixed rear wings do not appear only on homologation specials: the Mercedes-AMG A45 S, for example, has a small rear wing, as does the Honda Civic Type R.

A hybrid car is the best of both worlds: the instant response of an electric car and the top-end speed and range of a petrol car. In F1, energy that would otherwise be lost under braking is recovered and deployed back as power; this is called the Energy Recovery System (ERS). An example of a road car that recovers braking energy is the Porsche Taycan (McDee, 2022). Porsche has a World Endurance Championship team, which helps with its road-car R&D, and like many other brands it has found that energy recovery is the most efficient way for a car to run.

In both circuit racing and rallying, cars are equipped with a sequential gearbox rather than a manual or automatic one. A sequential gearbox is more accurate than an automatic (if used correctly) and quicker than a manual, because paddle shifters do not require drivers to take their hands off the steering wheel and are easy to use (Unknown, Unknown). In road cars, paddle shifters are now common on higher-performance models, even cheaper ones such as the A45, because it is safer not to take your hands off the wheel. When racing, paddle shifters can save fractions of a second, as shifting gear is easier. Motorsport aided the development of paddle shifters because time lost between gear changes slowed the car down.

One difference between F1 and road cars is the transmission: where many road-going performance cars have a dual-clutch transmission (DCT), F1 cars use a single carbon multi-plate clutch with a sequential gearbox. Unlike road-going cars, they run straight-cut gears, which work with the Engine Control Unit (ECU) to give smoother and quicker shifts. Road cars use a synchromesh gearbox: the gears are cut at an angle and a synchroniser ring matches gear speeds between shifts (Dobie, 2024).

Figure 2: The difference between helical/synchromesh (bottom) and straight cut gears (top).

Turbochargers first entered motorsport in 1952 with the Cummins Company's 6.4-litre diesel Indy 500 engine (Writer, 2018). However, the intake did not filter the air, so debris got into the engine and the car retired from the race. A turbo uses exhaust gas to spin a turbine, which drives a compressor that forces denser air into the engine, allowing it to make more power. Insufficient exhaust gas flow causes turbo lag. Turbos are common in road cars such as the Golf GTI, GR Yaris and Honda Civic Type R, as they increase power without reducing fuel economy and help manufacturers meet ever-stricter emissions regulations while delivering the power consumers want. They are also easy to purchase and install.

Suspension serves vastly different purposes in racing and commercial use. In circuit racing such as F1 or WEC, the manufacturers' main concern is a stable aerodynamic platform that provides a constant level of downforce. If the platform is not stable, the air underneath the floor at the back of the car can speed up, creating more downforce there but leaving the car unbalanced, so the driver may spin off.

This differs from road cars, where comfort is the main priority; this is achieved by making sure the suspension absorbs bumps and potholes. In racing cars, the driver is not given the comforts of a road car, only as much comfort as they need to drive. In rally cars, however, the suspension must endure gravel, dirt and snow, so it must be very rugged and able to absorb impacts from jumps. This is done by making each wheel independent of the others, so that each can adapt easily to its surroundings. Even though there is a vast number of homologation specials, none of them use rally suspension because of how extreme the sport is.

Tyres play a big part in a car's cornering because they are what contacts the ground; the grip they provide is called mechanical grip. Slick tyres are the standard in drag racing, as they have no grooves, which increases the contact area with the ground and so gives more mechanical grip. Even though slick tyres are not road legal, they give tyre companies a good understanding of how to make summer tyres for road use in dry conditions. These tyres have thinner and shallower grooves, as there is less water to push away, which gives a larger contact area with the tarmac and therefore more grip. An example of a high-performance summer tyre is the Michelin Pilot Sport Cup 2R. This tyre has very few grooves and is intended for dry track days. It was used on the Mercedes-AMG ONE when it broke the road-legal car lap record at the Nürburgring Nordschleife.

In rallying, tyre sidewalls need to be strong because loose gravel could puncture them, and the tyres are also studded for better grip on snow and particularly on ice. Most road-legal snow tyres are restricted to 1-millimetre studs to avoid damaging the tarmac. This experience helps manufacturers create winter tyres that can withstand freezing conditions.

The steering wheel plays a crucial role in performance optimisation, as in circuit racing all the controls are on the steering wheel. This makes it safer for drivers and quicker to switch between power or battery modes. These safety features are now integrated into most modern cars, which have climate or radio controls on the steering wheel. This is safer because the driver does not have to take their hands off the wheel and can keep looking at the road; such controls have been implemented in cars since 2007.

In the 1950s and 1960s, when F1 was first established as a recognised motorsport, its cars were similar to road cars. Of course, there were differences between F1 cars and normal cars, but most components and fuel sources were the same. In 1950 there were very few fuel regulations: the FIA's 1950 rules did not specify which fuels to use (FIA, 1950). At the start of F1, many teams were using a mixture of benzene and methanol (methyl alcohol) (Nyberg, 2000).

In 2018 the first car was made from bio-components, after F1 had used bio-components such as bioplastics (biopolylab, 2024). This car could be made because F1's testing had given reassurance that such components could work. The teams were instructed to use 5.75% bio-components in the fuel. However, this requirement stopped because other, better sustainable fuel options became available.

At present, we emit more carbon when burning fuel than is taken up in making it. The biggest impact we have seen is in the UK, where E10 fuel was introduced because motorsport had trialled it successfully; it is now the standard petrol in the UK (Transport, 2021).

Benzene is a light-yellow liquid, and it is harmful to humans because exposure increases the risk of leukaemia (Unknown, 2024). This made it extremely dangerous for the mechanics. Methanol is similar: it can cause CNS (central nervous system) depression, in which the body's neurological functions slow down, and potentially blindness. It is dangerous whether inhaled or ingested (GOV, n.d.). The same was true for normal cars: although the petrol they used contained smaller amounts of these dangerous substances, it was still dangerous.

Looking to the future, F1 knows it must change, so it will introduce carbon-neutral fuels. Carbon-neutral fuels work because the amount of carbon emitted when the fuel is burned equals the amount of carbon captured to create it, so no net carbon is added to the atmosphere. The new F1 fuel could have an enormous impact on the automobile industry, as F1 has said its cars will run on 100% sustainable fuels from the 2026 season (Barreto, 2022).

When cars were first introduced, their bodies were mainly made from steel, and to this day many cars are made from steel because it is one of the best materials in terms of cost, safety and reliability. However, some materials are better.

Carbon fibre is arguably the best material from which to make a car. It is ten times stronger than steel while being five times lighter (Unknown, 2024). Carbon fibre was first used in Formula 1 in the 1981 season; today it is the most used material in Formula 1 cars, and it has been used in many road cars because of its excellent safety capabilities (Mackenzie, 2011). Examples of relatively affordable road cars that use carbon fibre are the Alfa Romeo 4C and the BMW i3. The 4C has a carbon-fibre chassis for safety (eurocompulsion, 2016). There is one drawback, though: carbon fibre is currently extremely expensive to make, so it is difficult for companies to make a profit selling cars made from it.

Magnesium was important in the early days of motorsport, where it was one of the most used materials; it was first used in the 1920s. About a decade later, magnesium began to be used in mass-produced cars such as the Volkswagen Beetle, which contained about 20 kg of the material (Association, unknown). Magnesium was preferred over steel because it reduces weight and, like carbon fibre, has a high strength-to-weight ratio (Keronite, 2023). One modern example of magnesium in cars is the Land Rover Defender, which clearly uses it in the front bumper (Association, unknown).

One of the most widely used safety features is the roll cage. A roll cage is a specially engineered frame that protects the occupants in the event of a rollover (Wikipedia, 2024). As cars got faster in the 1970s, the FIA, which controls the rules of motorsport worldwide, ruled in 1971 that all race cars must have a roll cage or roll bar (Unknown, 2023). Later, in 1989, the Mercedes-Benz R129 introduced a system that deploys a roll bar whenever the car rolls over. Many other cars adopted similar systems, such as the Peugeot 307 CC, Volvo C70 and Jaguar XK (Wikipedia, 2024).

Another widely used safety feature is the anti-lock braking system (ABS). ABS stops the wheels from locking under heavy braking, which lowers the risk of losing control and in turn gives better control of the car (unknown, 2023). This feature was not introduced by motorsport: it was first used in UK aviation and then on motorbikes. In the 1960s it was introduced into racing cars and then road cars, and it is now one of the most common features in a car. Motorsport may not have helped to design ABS, but it did help its development, as manufacturers wanted to reap the benefits of the technology before it was banned.

In conclusion, many motorsport innovations have transferred to commercial cars for the better. Whether performance-, safety- or comfort-based, motorsport has shaped the way cars are produced and optimised. It has also transformed other industries, such as public transport, through new fuels and further research into electric power, while keeping the roots of F1.

However, some innovations have arguably been for the worse or not worth putting into cars, such as sport suspension, which is stiffer and less comfortable than regular suspension (Unknown, unknown), or rare and expensive materials such as carbon fibre. Despite this, the car industry mainly adopts the useful innovations that make our lives easier, leading to cars that are incredibly good and only getting better.

We will never know how long it would have taken the automotive industry to devise these developments without the help of motorsport. Another question we can ask ourselves is whether these ideas would ever have come about without the test bed of motorsport and the hours of R&D put into its cars. Taking in all the arguments and examples, we can safely conclude that the motorsport R&D process has definitely helped to advance the automotive industry in many different ways.

1. Armstrong, M., 2022. How the World Commutes. [Online] Available at: https://www.statista.com/chart/25129/gcs-how-the-world-commutes/ [Accessed 15 February 2025].

2. Association, I. M., unknown. IMA. [Online] Available at: https://www.intlmag.org/page/app_automotive_ima

3. Barreto, L., 2022. formula1. [Online] Available at: https://www.formula1.com/en/latest/article/formula-1-on-course-to-deliver-100-sustainable-fuels-for-2026.1szcnS0ehW3I0HJeelwPam

4. biopolylab, 2024. [Online] Available at: https://biopolylab.com/2020/08/bioplastics-in-the-automotive-industry/

5. Brown, R., 2023. Electric car mpg: Top brands compared. [Online] Available at: https://www.energysage.com/electric-vehicles/mpg-electric-vehicles/ [Accessed 11 January 2025].

6. Dobie, Z., 2024. Are Formula 1 cars manual? [Online] Available at: https://www.drive.com.au/caradvice/are-f1-cars-manual/ [Accessed 16 February 2025].

7. eurocompulsion, 2016. ALFA ROMEO 4C CARBON FIBER CHASSIS INFORMATION. [Online] Available at: https://shopeurocompulsion.net/blogs/technical-articles/alfa-romeo-4c-carbon-fiber-chassis-info

8. FIA, 1950. historicdb.fia. [Online] Available at: https://historicdb.fia.com/sites/default/files/regulations/1481637297/annexe_c_1950_gbr_web.pdf

9. Gitlin, J. M., 2020. These streamliners are the world’s most aerodynamic cars. [Online] Available at: https://arstechnica.com/cars/2020/05/teardrops-and-wind-tunnels-a-look-at-the-worlds-most-aerodynamic-cars/#:~:text=As%20far%20as%20circuit%20racing,mile%20Mulsanne%20Straight%20in%20mind. [Accessed 17 December 2024].

10. GOV, U., n.d. GOV.UK. [Online] Available at: https://www.gov.uk/government/publications/methanol-properties-incident-management-and-toxicology/methanol-toxicological-overview#:~:text=Methanol%20is%20toxic%20following%20ingestion,are%20subsequent%20manifestations%20of%20toxicity.

11. Grmusa, N., 2023. What Does Kompressor Mean in Mercedes-Benz Cars? [Online] Available at: https://carpart.com.au/blog/what-does-kompressor-mean-in-mercedes-benz-cars [Accessed 5 January 2025].

12. Harrison, T., 2021. The Mercedes EQS is the most aerodynamic series production car ever. [Online] Available at: https://www.topgear.com/car-news/electric/mercedes-eqs-most-aerodynamic-series-production-car-ever [Accessed 22 February 2024].

13. Ingram, A., 2024. Volkswagen Golf - MPG, CO2 and running costs. [Online] Available at: https://www.autoexpress.co.uk/volkswagen/golf/mpg#:~:text=Officially%2C%20the%201.5%20TSI%20petrol,similarly%20achievable%20on%20longer%20runs. [Accessed 16 December 2024].

14. Keronite, 2023. [Online] Available at: https://blog.keronite.com/selecting-the-right-lightweight-metal#:~:text=Magnesium%20is%20extremely%20light%3A%20it,savings%20in%20applications%20using%20it.

15. Mackenzie, I., 2011. BBC, carbon fibre’s journey from racetrack to hatchback. [Online] Available at: https://www.bbc.co.uk/news/technology-12691062

16. Mansell, S., 2023. When Formula 1 Used AEROPLANE Wings, s.l.: s.n.

17. McDee, M., 2022. Porsche details Taycan energy recovery systems. [Online] Available at: https://www.arenaev.com/how_exactly_porsche_taycan_generates_energy_when_braking-news-392.php#:~:text=Porsche%20equipped%20its%20Taycan%20with,while%20still%20maintaining%20great%20efficiency. [Accessed 22 February 2025].

18. Mitchell, S., 2022. Tech Explained: Formula 1 MGU-H. [Online] Available at: https://www.racecar-engineering.com/articles/tech-explained-formula-1-mgu-h/ [Accessed 16 February 2025].

19. Myintree, C., 2022. Porsche's GENIUS New Turbo Design. [Sound Recording] (Overdrive).

20. Nyberg, R., 2000. atlasf1. [Online] Available at: https://atlasf1.autosport.com/evolution/1950s.html

21. Rawlins, P., 2024. These are the 12 most aerodynamically efficient EVs on sale today. [Online] Available at: https://www.topgear.com/car-news/electric/these-are-12-most-aerodynamically-efficient-evs-sale-today [Accessed 11 January 2025].

22. review, G. R., 2024. Porsche 911 GT3 RS review. [Online] Available at: https://www.topgear.com/car-reviews/porsche/911-gt3-rs [Accessed 23 February 2025].

23. Tallodi, J., 2024. 10 of the most aerodynamic cars ever made. [Online] Available at: https://www.carwow.co.uk/best/most-aerodynamic-cars#gref [Accessed 17 December 2024].

24. Transport, D. f., 2021. E10 petrol explained. [Online] Available at: https://www.gov.uk/guidance/e10-petrol-explained

25. Unknown, 2022. Mercedes-Benz Vision EQXX Just Smashed Its Own EV Range Record. [Online] Available at: https://carbuzz.com/news/mercedes-benz-vision-eqxx-just-smashed-its-own-ev-range-record/ [Accessed 16 February 2025].

26. Unknown, 2023. [Online] Available at: https://www.rac.co.uk/drive/advice/road-safety/what-are-antilock-brakes-abs-and-how-do-they-work/

27. Unknown, 2023. [Online] Available at: https://mightycarmods.com/blogs/news/the-history-of-rollcages?srsltid=AfmBOorVgxm6ZdlX-3j00uhzJhqKNgMMlrYjrRqk8RWV7saBUYVgt3jM

28. Unknown, 2023. The Most Efficient Car Ever Created? Mercedes EQXX. s.l.: s.n.

29. Unknown, 2024. kbc. [Online] Available at: https://www.kbcylinders.com/news/comparing-carbonfiber-and-steel-durability-and-weight/

30. Unknown, Unknown. Manual vs. automatic transmission. [Online] Available at: https://www.progressive.com/answers/manual-vs-automatic-transmission-cars/ [Accessed 22 February 2025].

31. Unknown, unknown. mercedes. [Online] Available at: https://www.mercedes-benz.com/en/innovation/concept-cars/vision-eqxx/

32. Unknown, 2024. NCI. [Online] Available at: https://www.cancer.gov/about-cancer/causes-prevention/risk/substances/benzene

33. Unknown, n.d. biopolylab. [Online] Available at: https://biopolylab.com/2020/08/bioplastics-in-the-automotive-industry/

34. Wikipedia, 2024. [Online] Available at: https://en.wikipedia.org/wiki/Roll_cage

35. Writer, S., 2018. History of the Turbocharger. [Online] Available at: https://grassrootsmotorsports.com/articles/history-turbocharger/ [Accessed 28 December 2024].

This chart shows how much cars are used across the world. Cars easily top every list, which shows that they are incredibly important to most people. From: Chart: How the World Commutes | Statista

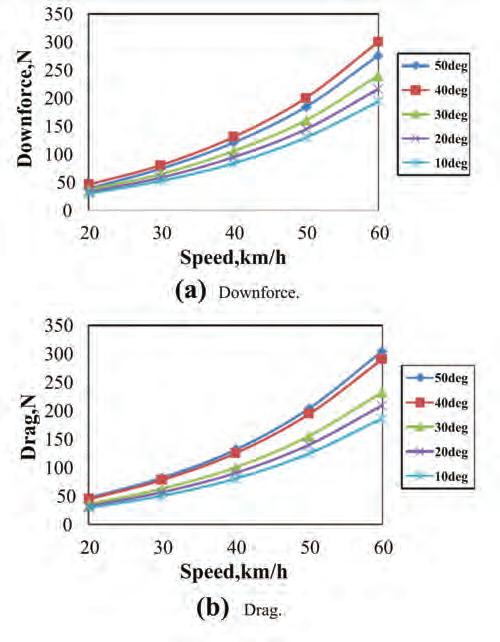

This diagram shows that, at every angle of attack, both downforce and drag increase as speed increases. This means a downforce figure is only meaningful when it is quoted at a stated speed. There is no set standard yet, but most companies specify the speed at which they make a downforce claim. For example, the GT3 RS generates 860 kg of downforce at 177 mph (review, 2024). From: Relationships between vehicle speed and downforce and drag | Download Scientific Diagram
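Why a speed must be quoted can be shown with the same kind of equation used for drag. Downforce is usually modelled as $F = \tfrac{1}{2}\rho A C_L V^2$, where $C_L$ is a lift (here, downforce) coefficient. If we assume that $C_L$, air density and area stay constant, downforce scales with the square of speed. In reality, ride height and wing angle can change with speed, so this is only an approximation. The Python sketch below uses that assumption to rescale the GT3 RS figure; the rescaled values are estimates, not Porsche's published numbers.

```python
# Rescale a quoted downforce figure to other speeds.
# Assumption: downforce is proportional to speed squared, i.e. the lift coefficient,
# air density and reference area in F = 0.5 * rho * A * C_L * v**2 are held constant.


def scale_downforce(known_downforce: float, known_speed: float, new_speed: float) -> float:
    """Estimate downforce at new_speed from a figure quoted at known_speed.

    The downforce unit (kg or N) is preserved; both speeds just need the same unit.
    """
    return known_downforce * (new_speed / known_speed) ** 2


# GT3 RS figure quoted in the text: 860 kg of downforce at 177 mph.
for mph in (60, 100, 177):
    print(f"{mph} mph: about {scale_downforce(860, 177, mph):.0f} kg")
```

Under this assumption, the 860 kg figure shrinks to roughly 275 kg at 100 mph and to just under 100 kg at 60 mph, which is why a downforce claim without a speed tells us very little.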

This essay was commended at the Junior ILA Celebration Evening

As in the story of David and Goliath, Bill Ackman’s company Pershing Square Capital Management was always the underdog compared with other big hedge funds such as Citadel and Jane Street. Yet the ambitious Harvard MBA graduate refused to be deterred and raised his first pool of capital at the age of 26. He made strategic activist positions and trades that have now earned him a personal net worth of around $9 billion at the time of writing. He may be a controversial and disliked figure to some and an inspiration to others, but whatever one believes, everyone must acknowledge that he will go down as one of the greatest investors ever to have walked the planet. So, what acquisitions did this investing mogul make to establish his own financial legacy?

Inspired by Benjamin Graham’s The Intelligent Investor and by Warren Buffett, Ackman pursued a career in investing using the principles preached by the two. These principles led Ackman to look for companies with predictable cash flows, healthy balance sheets, good long-term competitive advantages and reliable management. However, to compete with larger and more established hedge funds, Ackman realised that he needed a much higher rate of return on investment than the strategies he had learnt from Buffett and Graham would deliver. In the modern world, people have learnt to find these reliable businesses, so such companies are typically overvalued, preventing investors such as Ackman from maximising their returns. This realisation led Ackman to acquire shares in businesses that were going through periods of financial hardship but were ultimately recoverable, so that he could buy the asset at an undervalued price. His first attempt at implementing this strategy was with Wendy’s, a fast-food chain that was in financial difficulty. Ackman noticed that Wendy’s and its subsidiary Tim Hortons were two different business models and believed that if they operated independently, both would have a more prosperous future. To achieve this, Ackman needed to pressure the management into executing a spin-off. Since Ackman was a minority shareholder with access to only $3 million in capital, the CEO did not answer his calls. Refusing to accept rejection, Ackman approached Blackstone, which then had an investment banking arm, and it gave him a fairness opinion of what Wendy’s would be worth after the spin-off. Blackstone reported that Wendy’s would be worth about 80% more. Ackman then emailed Blackstone’s report to Wendy’s, and just six weeks later Wendy’s and Tim Hortons were two separate companies operating independently, just as he desired. Ackman later sold his shares for a hefty profit and developed the activist investing style for which he is famous today. What Ackman did with Wendy’s demonstrated not only his ability to spot opportunities that few others can, but also his ability to turn them into reality.

Much like Warren Buffett, Ackman bases his investment research heavily on finding a company’s moat. A moat is simply a long-term competitive advantage that a company has, and it is vital for fundamental investors to consider. If a company has a strong, durable moat, it will be less affected by economic turmoil and less vulnerable to disruption from competitors. This is why fundamental investors, with some exceptions, typically stay away from software-related businesses: the low barrier to entry allows small technology companies to disrupt other firms within a short period of time. OpenAI’s ChatGPT (backed by Microsoft) and Google’s Gemini were arguably the best products in their industry until DeepSeek, a much smaller Chinese company, created a far cheaper product that rivalled them. DeepSeek’s product caused chaos among Wall Street shareholders, leading to a sharp fall in big technology stocks such as Google, Microsoft and Nvidia and proving just how susceptible the technology industry is to disruption. To uncover whether a company has a durable moat, Ackman reads its publicly available SEC filings, such as 10-K and 8-K reports, and talks to experts in the industry. In Warren Buffett’s own words, “The key to investing is determining the competitive advantage of any given company and above all, the durability of the advantage”. Since Ackman strongly believes in investing in companies with a strong moat, he generally avoids commodity businesses, as the only way they can outperform their competitors is by offering products at lower prices than the rest of the market, hence sacrificing profit margins.

This section presents case studies of two of Ackman’s major wins, revealing the investment strategies and principles that Ackman has used to reap such returns.

Chipotle is one of his most notable wins: Ackman bought shares in the third fiscal quarter of 2016 and still owns 28.82 million shares, worth approximately $1.7 billion. At the time of writing, he has made a remarkable overall profit of around 660% on Chipotle. Ackman was initially intrigued by Chipotle when its stock dropped by around 50%. The fast-casual restaurant chain was undergoing a food-safety crisis: a lack of vital food-safety systems had led to customers falling ill. Ackman also noticed that Chipotle was being managed poorly, with sloppy decision-making and essential systems not in place. He then carried out fundamental analysis to see whether Chipotle had a strong and durable moat. His findings were that Chipotle had a very high barrier to entry and was not vulnerable to disruption from competitors. Chipotle’s moat is unlike that of other fast-food chains. It makes food fresh in front of the customer’s eyes and uses very high-quality ingredients while simultaneously offering food at relatively low prices. This is very hard to replicate because of the numerous relationships Chipotle has built and maintained for decades with small farmers to obtain such high-quality food at a low price. Ackman accepted that Steve Ells, the founder of Chipotle, had done an excellent job of getting the company to where it was when Ackman acquired his shares, but realised that if Chipotle were to expand, it needed a more knowledgeable CEO to clean up the mess the company was in.

Ackman pressured Chipotle’s board members to replace Steve Ells with Brian Niccol, which turned out to be a great appointment: Niccol implemented the systems that were needed and increased Chipotle’s profitability, thus increasing shareholder value. This investment is a classic example of one of Ackman’s most used strategies: acquiring shares in a poorly managed company with a durable moat and using activism to fix the company’s issues and improve its profits, directly increasing shareholder value.

According to Investopedia, Ackman’s investment in General Growth Properties (GGP) will go down as “one of the best hedge fund trades of all time”, turning $60 million into an astonishing $1.2 billion. In 2008, GGP’s share price fell from around $60 to $0.34. This was due to management’s aggressive use of debt: GGP used a lot of short-term loans to fund new malls and then used those malls as collateral to borrow even more money. When the devastating financial crisis of 2008 hit, it became very hard for the company to refinance, since lenders were unwilling to replace its existing loans. This left the company drowning in approximately $27 billion of debt and on the verge of bankruptcy. All the board members of GGP except Ackman were talking about filing for bankruptcy under Chapter 7 of the bankruptcy code, which would force the company to liquidate its assets and wipe out the shareholders. Ackman did not agree that this was the company’s fate, since the malls’ net operating income had been increasing every year and the only obstacle was the debt; this suggested that GGP had not made many fundamental mistakes, but that the market had put it in this situation. Therefore, Ackman argued that it would be better for the company to file for bankruptcy under Chapter 11, as then the shareholders could retain their shares while the company restructured its debt. GGP filed under Chapter 11, and the court allowed the case to proceed after GGP showed that its assets had been worth more than its liabilities before the crisis, implying that they would again be worth more than its liabilities once the crisis was over. The company went on to restructure its debt, extending the maturity dates of its loans, which allowed it to keep its real estate and allowed shareholders to keep their stock. The stock price immediately went up from $0.30 to $1 after the debt restructuring, and GGP went on to increase its net profit margins and pay off most of its existing debt, thanks to Ackman’s financial acumen. What this investment shows about Ackman’s strategy is that, like Warren Buffett, he sticks to industries he is well-versed in. Ackman knows real estate like the back of his hand, having worked for his father, whose company supplied mortgages to real estate developers and investors. Therefore, his lateral idea of filing under Chapter 11 stemmed from his thorough understanding of real estate and of the methods for managing excessive amounts of debt.
In addition to this, when Ackman announced that he was acquiring a stake in a company about to file for bankruptcy, many of his clients were almost certain that he would lose their money. However, Ackman still invested $60 million, demonstrating the confidence that came from his detailed understanding of his investments.
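The scale of the GGP trade can be checked with simple arithmetic. The sketch below uses only the figures quoted above ($60 million turned into $1.2 billion, and the share price rising from $0.30 to $1); the helper name `return_stats` is hypothetical, and the calculation ignores fees, timing and any further capital invested.

```python
def return_stats(initial_value, final_value):
    """Return (multiple, percentage gain) for an investment.

    multiple: how many times the original money was returned
    percentage gain: profit as a percentage of the original amount
    """
    multiple = final_value / initial_value
    percentage_gain = (final_value - initial_value) / initial_value * 100
    return multiple, percentage_gain


# Figures quoted in the essay for Ackman's GGP investment (US dollars)
ggp_multiple, ggp_gain = return_stats(60_000_000, 1_200_000_000)
print(f"GGP trade: {ggp_multiple:.0f}x, a gain of {ggp_gain:,.0f}%")

# Share price move after the debt restructuring ($0.30 -> $1)
_, share_gain = return_stats(0.30, 1.00)
print(f"Share price rise: {share_gain:.0f}%")
```

In other words, turning $60 million into $1.2 billion is a twentyfold return, a gain of 1,900%.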

Warren Buffett’s first rule of investing is not to lose money. However, what separates a great investor like Ackman from the average investor is not only a difference in knowledge: Ackman and other top investors have also mastered the art of being indefatigable. A huge part of Ackman’s investment strategy is simply about being persistent and persevering. The worst investment decision of Ackman’s career was in a company called Valeant Pharmaceuticals. He entered his position at around $180 per share, and his fund reportedly exited the position fully in 2017 at a loss of around $4 billion.

With Valeant, Ackman deviated from his core principles, since he did not fully understand either the industry or the business. The pharmaceutical industry is a very complex and volatile one, as Ackman was to find out. Valeant Pharmaceuticals’ business model was to acquire other companies in its industry, or their drugs, and then raise the prices of those drugs once it held the patents on them. However, the management was influenced by another activist investor on the board and made poor decisions, which meant that the market no longer trusted the management. As shareholders sold their stock, the falling share price made it harder for the business to fund acquisitions of low-cost drugs, leading to a further decline in the stock price. The Valeant loss itself, however, was not the worst of it, as it led other hedge funds to believe that Ackman’s fund was going out of business, which would require him to liquidate all his holdings. These funds began to short sell all the holdings listed in Ackman’s portfolio. To make matters worse, Elliott Management, another activist hedge fund, acquired a 25% stake in Ackman’s public company to push it into liquidating, so that Elliott could profit from short selling the stocks held in its portfolio. Few people would attempt to keep operating in this situation, yet Ackman’s stubbornness kicked in and he refused to be shut down by his competitors. Ackman, a loyal customer of JP Morgan, borrowed $300 million to regain control of his public company and prevent activist investors from forcing him to liquidate. Ackman learnt his lesson about not deviating from his core principles, set out his plans for future investments to his clients, and since then the trajectory has mostly been uphill. Whether one invests or not, a lesson can be learned from Ackman: when everything seems to fall apart, rationality is required more than ever, and one must not simply panic and crumble under pressure but attempt to find a judicious solution. In the case of Ackman, if he had simply given up, he would be far from the successful investor we know today.

At a fundamental level, Ackman’s multi-billion-dollar investment strategy involves seeking out poorly managed businesses with a durable moat, in industries he understands, and using activist methods to fix their problems. Ackman has also underscored the importance of resilience in the finance industry and of not allowing failures to define one’s ability to invest. As stated before, while his public stance on social issues may be controversial, there is no denying that he will go down as one of the smartest and most inspirational investors to have ever lived.

1. Activist investing explained | Bill Ackman and Lex Fridman - YouTube.

2. https://www.investopedia.com/articles/investing/032216/bill-ackmans-greatest-hits-and-misses.asp

3. How to decide which companies to invest in | Bill Ackman and Lex Fridman - YouTube.

4. How an Economic Moat Provides a Competitive Advantage.

5. What Is DeepSeek and How Should It Change How You Invest in AI?

6. The Warren Buffett Way by Robert G. Hagstrom: Chapter 3, pages 49 and 50, Favourable Long-Term Prospects section.

7. Chipotle Mexican Grill, Inc. (CMG) Stock Major Holders - Yahoo Finance.

8. How Much Money Does Bill Ackman Have Invested in Chipotle Mexican Grill Stock?

9. Best hedge fund investment of all time | Bill Ackman and Lex Fridman.

10. Chapter 11 - Bankruptcy Basics.

11. Bill Ackman's lowest point: $4 billion dollar loss | Lex Fridman Podcast Clips.

This essay was commended at the Junior ILA Celebration Evening

“Learn from yesterday, live for today, hope for tomorrow.”

Albert Einstein

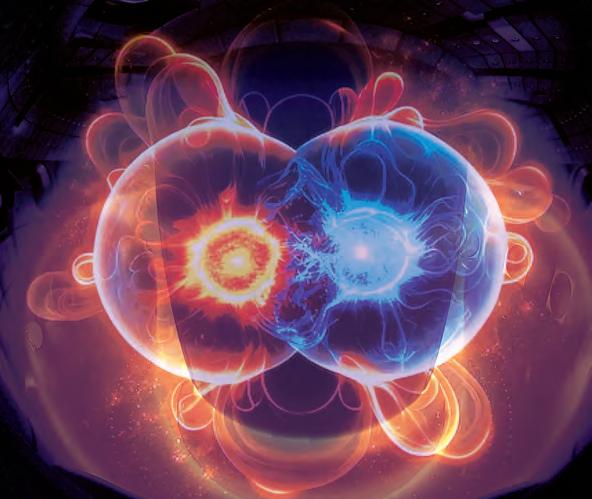

Given the diminishing supplies of fossil fuels on Earth, and the lack of a high-output, reliable method of obtaining energy, the prospect of using a single, 15-metre-diameter device to power an entire city (Editors of Wikipedia, n.d.) has attracted the attention of several countries, tens of billions of dollars of investment per device, and already over six decades of research. (User, 2023) This essay aims to discuss the feasibility of using tokamak fusion reactors as a sustainable energy source in the near future.

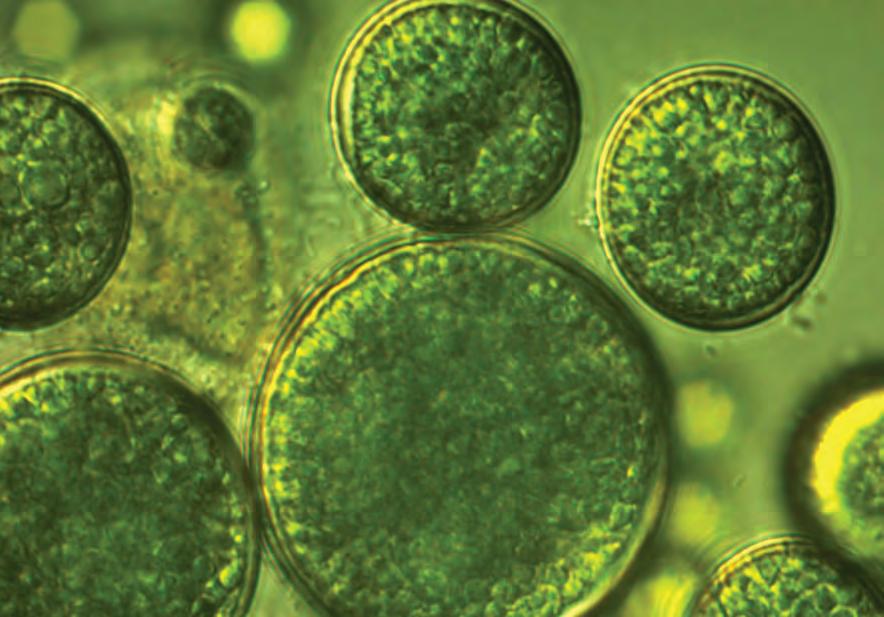

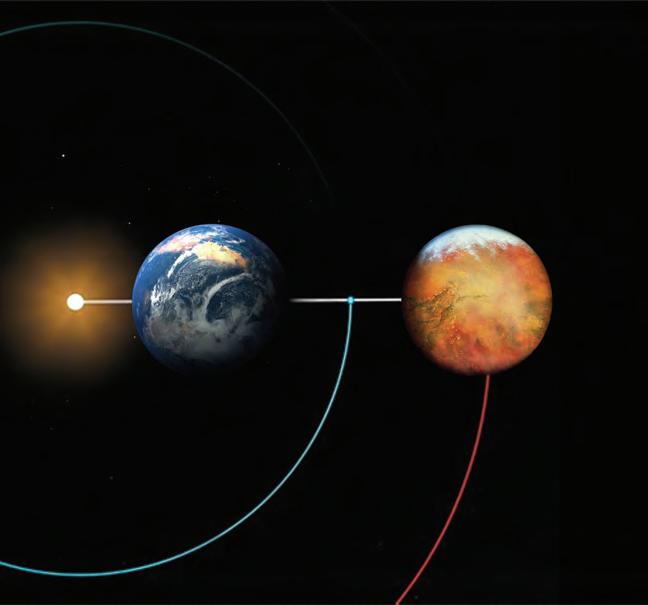

Nuclear fusion is the process by which lighter atomic nuclei combine into a heavier, more tightly bound nucleus (sometimes releasing extra high-speed nucleons, which carry most of the energy from the reaction). It is important to distinguish this from nuclear fission, which instead involves splitting large, unstable atomic nuclei apart. The fusion reaction currently attracting the most interest from researchers is the fusion of deuterium and tritium (both isotopes of hydrogen) into helium-4 and a neutron (called the D-T reaction) (US Department of Energy, n.d.), due to its low activation energy (the thermal energy required for the nuclei to fuse) compared with other reactions, and its high energy output relative to input.

On paper, nuclear fusion releases around 4 million times more energy per kilogram of fuel than fossil fuels and 4 times more than fission, (ITER, n.d.) and each fusion reaction releases more energy than it takes to start it, because some of the reactants’ mass is converted into energy, as described by the equation E = mc². This means that, under the right conditions, the process can become self-sustaining.
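The mass-to-energy conversion described above can be made concrete with a short calculation. This sketch assumes standard tabulated atomic masses (rounded), the usual conversion of about 931.494 MeV per unified atomic mass unit, and a round figure of about 200 MeV released per uranium-235 fission for the comparison with fission. It is an illustrative estimate rather than a figure from the essay’s sources, and it neglects the small kinetic energy the fuel nuclei have before they fuse.

```python
# Atomic masses in unified atomic mass units (u), rounded standard values.
# Atomic (not nuclear) masses are fine here: both sides of D + T -> He-4 + n
# contain two electrons, so the electron masses cancel.
ATOMIC_MASS_U = {
    "D": 2.014101778,     # deuterium
    "T": 3.016049281,     # tritium
    "He4": 4.002603254,   # helium-4
    "n": 1.008664916,     # neutron
    "U235": 235.043930,   # uranium-235 (for the fission comparison)
}
MEV_PER_U = 931.494           # energy equivalent of 1 u, from E = mc^2
FISSION_ENERGY_MEV = 200.0    # approximate energy per U-235 fission (assumption)


def reaction_energy_mev(reactants, products):
    """Energy released (MeV) = mass lost in the reaction multiplied by c^2."""
    mass_before = sum(ATOMIC_MASS_U[r] for r in reactants)
    mass_after = sum(ATOMIC_MASS_U[p] for p in products)
    return (mass_before - mass_after) * MEV_PER_U


# D + T -> He-4 + n
dt_energy = reaction_energy_mev(["D", "T"], ["He4", "n"])

# Momentum conservation (products starting from rest): the lighter
# particle takes the larger share of the kinetic energy.
m_he, m_n = ATOMIC_MASS_U["He4"], ATOMIC_MASS_U["n"]
neutron_energy = dt_energy * m_he / (m_he + m_n)

# Energy per unit mass of fuel consumed, fusion vs fission
fusion_per_u = dt_energy / (ATOMIC_MASS_U["D"] + ATOMIC_MASS_U["T"])
fission_per_u = FISSION_ENERGY_MEV / ATOMIC_MASS_U["U235"]

print(f"D-T energy released: {dt_energy:.2f} MeV")
print(f"Carried by the neutron: {neutron_energy:.2f} MeV")
print(f"Fusion vs fission, energy per kg of fuel: {fusion_per_u / fission_per_u:.1f}x")
```

The result, roughly 17.6 MeV per reaction with about 14 MeV (around 80%) carried by the neutron, is consistent with the statements that the neutron carries most of the energy and that fusion releases about 4 times more energy per kilogram of fuel than fission.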

There are two main subcategories of fusion reactors – magnetic confinement fusion (MCF) and inertial confinement fusion (ICF). (ricketycricket, 2023)¹ Currently, MCFs are the most promising candidate for a feasible fusion reactor, whilst ICFs are used more as general testing facilities for a wide range of fusion technologies. (Editors of Wikipedia, n.d.)

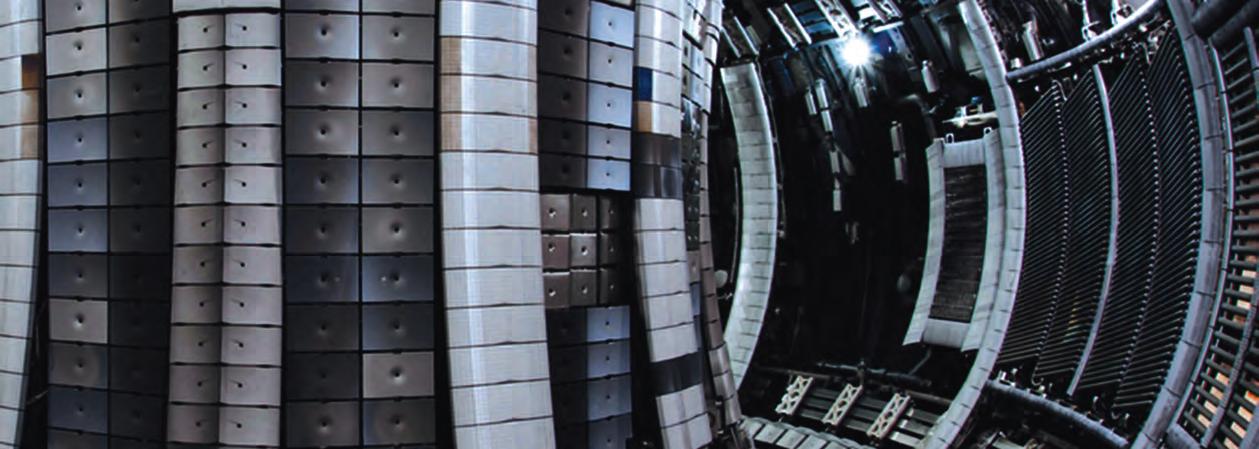

Of all MCF designs, the tokamak, developed by the Soviet Union in the 1960s, is leading the efforts in fusion energy research; for example, the Experimental Advanced Superconducting Tokamak (EAST) in China recently set a new world record² for plasma confinement time of 17 minutes and 46 seconds on January 20th this year, (Writer, 2025) more than double the previous record of 6 minutes and 43 seconds, (Pester, 2025) which was also held by EAST. Whilst alternative MCF designs exist, tokamaks are by far the most researched and have the most operational data, as a result of six decades of experimentation. Given the current goal of simply getting a functional fusion reactor up and running as quickly as possible, tokamaks are arguably the best option moving forward.

A tokamak works by injecting the gaseous hydrogen-isotope fuels into a toroidal (doughnut-shaped) vacuum chamber and heating them, using external heating methods that vary greatly between reactors, to 150 million °C, (EUROfusion, n.d.) ten times the temperature at the core of our Sun. (Editors of Wikipedia, n.d.) These conditions are necessary because these reactors are essentially creating an artificial star on Earth: since they cannot replicate the extreme pressures at the core of a star, they must make up for it with even more extreme temperatures. (Lea, 2022)

1 (User, 2023)

2 This record was held by China at the time of writing (February 7th); however, the French Alternative Energies and Atomic Energy Commission (CEA) announced on February 20th that it had achieved a new plasma confinement time of 22 minutes in its WEST tokamak. (Sharwood, 2025)

Under these conditions, electrons are stripped from their atoms and move freely, forming a plasma of positively charged nuclei and free electrons. The nuclei are now able to fuse within the plasma; the neutrons produced gain kinetic energy from the reaction and, because they carry no electric charge, escape the plasma unimpeded by the magnetic field. (User, 2024) (Aman, 2013) They are then absorbed by neutron blankets lining the walls of the vacuum chamber, which convert most of their kinetic energy into heat that vaporises water. As in a conventional power plant, the steam produced drives a turbine connected to a generator, and electricity is produced. (Aman, 2013)

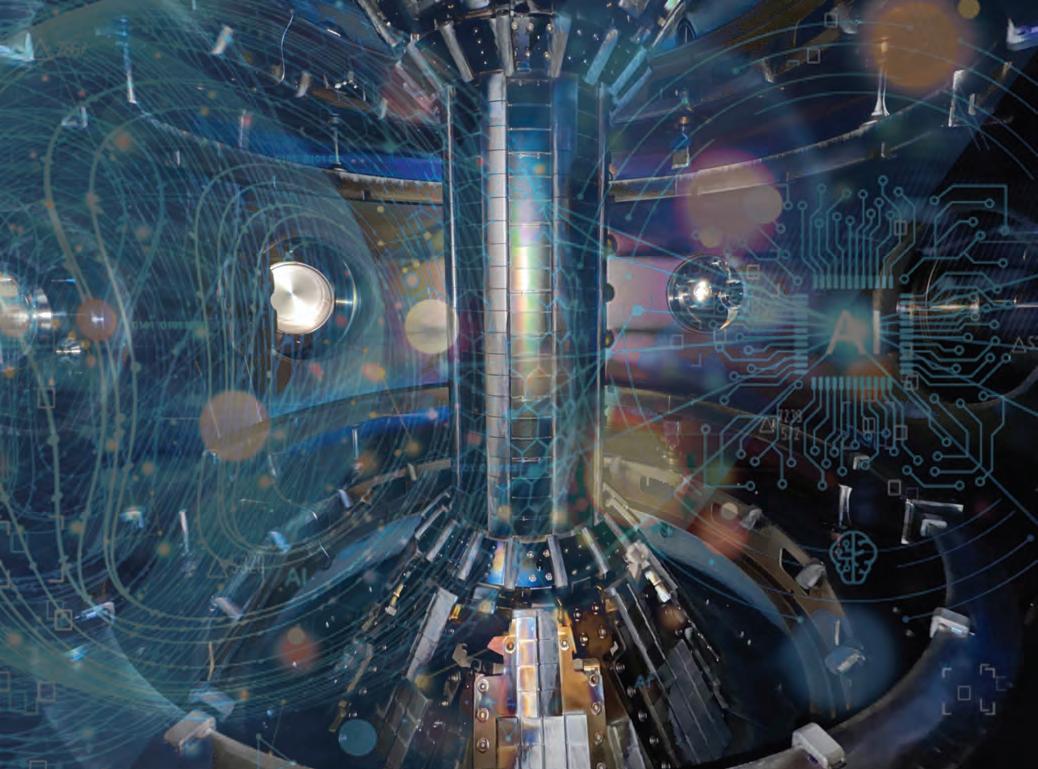

The world’s largest tokamak, the International Thermonuclear Experimental Reactor (ITER) in France, is under construction and expected to be operational by 2039. It will be able to contain a volume of plasma over ten times greater than any existing tokamak (ITER, n.d.) and aims to be the first tokamak to achieve a burning plasma (a plasma heated mainly by its own fusion reactions). (User, 2025)

Although tokamaks are currently the most feasible fusion reactor design, there are still several barriers to their widespread use. The five most limiting are: confining a pure, stable plasma; protecting the reactor from the extreme operating environment; heating the plasma to sufficient temperatures; achieving an energy gain factor (Q, the ratio of fusion power output to heating power input) greater than 1; and securing a reliable supply of tritium to fuel the reaction. (User, 2025)
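Q is the ratio of the fusion power produced to the heating power supplied to the plasma, so it can be illustrated in a few lines. The figures below are widely reported ones rather than values from the essay’s sources: JET’s 1997 record of about 16 MW of fusion power from about 24 MW of heating, and ITER’s design target of 500 MW of fusion power from 50 MW of heating. The function name `gain_factor` is hypothetical, and this is the “scientific” Q, which ignores the efficiency of the heating systems and of the power plant as a whole.

```python
def gain_factor(fusion_power_mw, heating_power_mw):
    """Q = fusion power produced / heating power put into the plasma."""
    if heating_power_mw <= 0:
        raise ValueError("heating power must be positive")
    return fusion_power_mw / heating_power_mw


examples = {
    "JET (1997 record)": (16, 24),
    "ITER (design target)": (500, 50),
}

for name, (fusion_mw, heating_mw) in examples.items():
    q = gain_factor(fusion_mw, heating_mw)
    verdict = "net gain (Q > 1)" if q > 1 else "no net gain (Q <= 1)"
    print(f"{name}: Q = {q:.2f} -> {verdict}")
```

JET’s record therefore corresponds to Q of about 0.67, still short of breakeven, whereas ITER’s target is Q = 10.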

The first of these is the hardest aspect of a tokamak’s design. It is complicated by turbulence and imperfections within the plasma itself (called magnetohydrodynamic (MHD) instabilities), which cause positive feedback loops that disrupt its confinement. (User, 2025)

MHD instabilities come in five main forms. (User, 2025) Kink instabilities occur when plasma currents (the flow of charged particles within the plasma) are too strong, deforming the plasma’s shape, which can lead to weakened confinement or a disruption (a sudden, complete loss of confinement). (User, 2025) Ballooning instabilities occur when higher-than-usual pressure at the edge of the plasma causes some areas to bulge out, allowing energy to escape and reducing efficiency. (User, 2025) Tearing mode (TM) instabilities occur when imperfections in the plasma cause its magnetic field lines to break and reconnect incorrectly, forming magnetic “islands” that allow some particles to leak through, which similarly allows energy to escape and reduces efficiency. (User, 2025) Edge-localised modes (ELMs) occur when particles are ejected from the plasma edge (the outermost layer of the plasma, where conditions are least stable), which can damage the first wall (the layer of the vacuum chamber facing the plasma). (User, 2025) (Editors of Wikipedia, n.d.) Finally, neoclassical tearing modes (NTMs) occur when the pressure in the core of the plasma causes bootstrap currents (self-induced currents) (Editors of Wikipedia, n.d.) to form, which give rise to issues similar to TM instabilities. (User, 2025)

Although most of these instabilities could technically be eliminated by removing the plasma currents, this is not feasible in a tokamak. Plasma confinement requires both a toroidal magnetic field (running the long way around the ring-shaped vacuum chamber) and a poloidal one (running the short way around its cross-section); (Editors of Wikipedia, n.d.) the tokamak’s external magnets provide the former, but the plasma currents self-induce the latter. (User, 2025) Other MCF designs, like stellarators, can operate without a plasma current by generating all the magnetic fields needed to confine the plasma with external magnets. (User, 2025) While these designs offer more stable confinement, since the confining field does not depend on currents in the plasma, they often achieve poorer confinement and energy efficiency, as they do not exploit the magnetic field self-induced by the plasma current. (User, 2025)

To mitigate plasma instabilities, one of the simplest solutions is to elongate the vacuum chamber vertically, so that its cross-section is closer to an ellipse (or a D-shape) than a circle. (User, 2025) The elongated shape reduces kink and ballooning instabilities by distributing pressure more evenly, gives greater magnetic stability through better alignment of the plasma with the field lines, and allows a higher overall plasma pressure. Large tokamaks like ITER, JET (Joint European Torus), and EAST all use an elongated vacuum chamber for this reason. (User, 2025)

A second way to reduce the formation of plasma instabilities is to adjust the magnetic field actively during operation. This is too complex for humans to do reliably, so researchers at the DIII-D National Fusion Facility in California are investigating the use of an AI technique called deep reinforcement learning (DRL). (US Department of Energy, 2025) Once trained, their AI was able to take inputs from hundreds of places on the tokamak and respond by adjusting the magnetic field in real time. The further development of AI could revolutionise tokamak operation and help eliminate plasma instabilities.
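The positive feedback loops behind these instabilities, and the idea of correcting them in real time, can be illustrated with a deliberately simple toy model. This is not the deep reinforcement learning approach the researchers used: it is a hypothetical proportional feedback controller, in which a perturbation grows by a fixed factor each time step unless a correction proportional to its size is applied. The growth factor, control gain and starting size are arbitrary assumptions chosen only for illustration.

```python
def simulate_perturbation(steps, growth=1.2, control_gain=0.0, start=0.01):
    """Toy model of an instability under optional feedback control.

    Each step the perturbation is multiplied by `growth` (positive feedback);
    the controller subtracts `control_gain` times the current perturbation,
    standing in for a real-time adjustment of the magnetic field.
    """
    size = start
    history = [size]
    for _ in range(steps):
        correction = -control_gain * size
        size = growth * size + correction
        history.append(size)
    return history


uncontrolled = simulate_perturbation(20)
controlled = simulate_perturbation(20, control_gain=0.5)

print(f"Without control after 20 steps: {uncontrolled[-1]:.4f}")
print(f"With feedback after 20 steps:   {controlled[-1]:.6f}")
```

Without control the perturbation grows by 20% per step; with the correction the effective factor becomes 1.2 − 0.5 = 0.7, so it shrinks instead. A real plasma responds in far more complicated ways than this single rule, which is why researchers are turning to AI.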

Should a disruption occur from a failure to confine the plasma, there may be a sudden spike in thermal energy, powerful eddy currents from the loss of the plasma current, and/or the emission of high-energy electrons, all of which can damage the first wall. (User, 2025) Scientists at JET experimented with massive gas injection (MGI), in which a mixture of noble gases and deuterium is injected into the vacuum chamber during a disruption to quench (rapidly cool and dissipate) the plasma, (Kruezi, et al., 2011) controlling the release of thermal energy, hindering the paths of the high-energy electrons, and preventing the formation of eddy currents. (User, 2025) ITER will use a technique called shattered pellet injection (SPI), which involves shattering cork-sized frozen pellets of hydrogen and neon and injecting the fragments into the vacuum chamber (ITER, n.d.), to achieve a similar but more effective result than MGI.

Nevertheless, even with measures like MGI and SPI in place, the tokamak, especially the first wall, still requires thorough protection from the intense heat and high-energy particle flux of operation. To tackle this issue, tokamaks use an actively water-cooled tungsten divertor, placed at the bottom and/or top of the vacuum chamber, which acts both as a shield between the plasma and the first wall and as an exhaust through which waste and impurities, like helium ash or unfused fuel, can be extracted, thus increasing the thermal efficiency of the plasma. (ITER, n.d.) (Liu, et al., 2009) The divertor does not need to cover the entire first wall because the heat and particle flux are concentrated at the top and/or bottom of the chamber (the heat load there can reach up to 20 MW/m² (ITER, n.d.)), since the magnetic field is shaped to divert the field lines at the edge of the plasma onto the divertor. (User, 2025)

For the remainder of the first wall, a layer of neutron blankets to absorb the neutrons would suffice; almost no highly damaging particles (like helium-4 nuclei or electrons) reach this area, because the plasma does not physically touch the first wall during confinement and charged particles are largely unable to escape the magnetic field. The neutron blankets also have the role, mentioned earlier, of converting the kinetic energy of the neutrons bombarding them into thermal energy.

The successful confinement of the plasma depends on two systems that must work together – the cryogenic cooling and the auxiliary heating systems.

The several thousand tonnes of superconducting magnets (usually made of niobium alloys) (User, 2025) that confine the plasma need to be cryogenically cooled to a few degrees above absolute zero (User, 2025) to preserve their superconductivity, which gives them zero electrical resistance and allows them to produce very strong magnetic fields (ITER’s magnets can reach 13 T). (ITER, n.d.) As in other facilities that require superconducting magnets, like the Large Hadron Collider (LHC), liquid helium is typically the cryogenic fluid of choice (User, 2025), and it is circulated to every part of the tokamak through a network of pipes.

Tokamaks employ a wide range of methods to heat the plasma to the required 150 million °C. Neutral beam injection (NBI), the most flexible and reliable, involves firing a beam of high-energy neutral particles into the vacuum chamber; neutral particles are used because charged ones would be deflected by the magnetic field. Once inside, the particles are ionised by collisions with the plasma and transfer their energy to it. Because of the unprecedented size of the NBI device needed for ITER, an entire testing facility (the Megavolt ITER Injector and Concept Advancement (MITICA)) has been built in Italy to run experiments separately before integration. Other methods, like radio-frequency (RF) heating and electron cyclotron resonance heating (ECRH), in which microwaves heat the electrons and the electrons then pass their thermal energy on to the nuclei, are more efficient and result in more stable plasmas than NBI, but their complexity means that it makes more sense to use NBI first for prototypes.

The ultimate aim of all these heating and cooling technologies, coupled with the divertor, is to achieve a burning plasma, the holy grail of tokamak operation. A burning plasma is one so hot that more than 50% of its heating comes from the products of its own fusion reactions; in the case of the D-T reaction, these are the helium-4 nuclei, since the neutrons escape the plasma. This means that far less heating needs to be supplied by auxiliary methods, which increases Q. A burning plasma is so sought-after because the conditions it requires, described by the Lawson criterion as a sufficiently high combination of temperature, density (and hence pressure) and confinement time, are incredibly difficult to achieve, given the largely unsolved issue of plasma instability, which causes the plasma to lose significant amounts of energy with nothing in return.
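The Lawson criterion is often expressed as a “triple product” of plasma density n, temperature T and energy confinement time τ_E, which must exceed roughly 3 × 10^21 keV·s/m³ for a D-T plasma to reach ignition, where fusion heating alone sustains the plasma (a burning plasma sits partway towards this). Since pressure is proportional to density times temperature, this matches the description above. The sketch assumes that approximate threshold, converts the 150 million °C quoted earlier into kiloelectronvolts, and uses a hypothetical density of 10^20 particles per cubic metre and hypothetical confinement times. Note that τ_E is the energy confinement time, which is much shorter than the pulse lengths in the confinement records mentioned earlier.

```python
BOLTZMANN_EV_PER_K = 8.617333262e-5   # Boltzmann constant in eV per kelvin
IGNITION_TRIPLE_PRODUCT = 3e21        # approx. D-T threshold, keV * s / m^3 (assumption)


def celsius_to_kev(temp_celsius):
    """Convert a temperature in degrees Celsius to a thermal energy in keV."""
    kelvin = temp_celsius + 273.15
    return kelvin * BOLTZMANN_EV_PER_K / 1000


def triple_product(density_per_m3, temp_kev, confinement_time_s):
    """Lawson triple product n * T * tau_E."""
    return density_per_m3 * temp_kev * confinement_time_s


temp_kev = celsius_to_kev(150e6)      # about 12.9 keV
density = 1e20                        # hypothetical plasma density, particles per m^3

for tau in (1.0, 3.0):                # hypothetical energy confinement times, seconds
    value = triple_product(density, temp_kev, tau)
    meets = value >= IGNITION_TRIPLE_PRODUCT
    print(f"tau_E = {tau} s: n*T*tau = {value:.2e} keV s/m^3, threshold met: {meets}")
```

With these assumed values, a plasma that holds its energy for 1 s falls short while one that holds it for 3 s passes, showing how confinement time trades off against temperature and density.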

Another big issue is acquiring the fusion fuels. One of the hydrogen isotopes required, deuterium, can be extracted industrially from seawater very easily and cost-effectively. (Arnoux, 2011) Tritium, the other isotope, is extremely rare in nature (its natural abundance is 0.0000000000000001% (Editors of Encyclopedia Britannica, n.d.)), so the most feasible option is to “breed” it from lithium using the neutrons released by the main fusion reaction. (ITER, n.d.) ITER will test tritium breeding, and tokamaks conveniently already have a fully functional, modular neutron absorption system, the neutron blankets, so some of these blankets can simply be replaced with tritium breeding modules. (Editors of Wikipedia, n.d.) (ITER, n.d.)
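Why the tritium supply matters can be seen from a rough fuel budget. Each D-T reaction consumes one tritium nucleus and releases about 17.6 MeV; the sketch below assumes a hypothetical reactor producing 1 gigawatt of fusion power continuously and estimates how much tritium it would burn. Since each reaction also releases just one neutron, and breeding with lithium-6 (⁶Li + n → ⁴He + T) yields one tritium nucleus per neutron captured in this way, the blankets must capture almost every neutron for the reactor to keep itself supplied.

```python
AVOGADRO = 6.02214076e23           # particles per mole
JOULES_PER_MEV = 1.602176634e-13
DT_ENERGY_MEV = 17.6               # energy released per D-T reaction
TRITIUM_MOLAR_MASS_G = 3.016       # grams per mole
SECONDS_PER_DAY = 86_400


def tritium_burned_per_day_g(fusion_power_w):
    """Grams of tritium consumed per day at a given fusion power (watts)."""
    reactions_per_second = fusion_power_w / (DT_ENERGY_MEV * JOULES_PER_MEV)
    # One tritium nucleus is consumed in every D-T reaction
    moles_per_day = reactions_per_second * SECONDS_PER_DAY / AVOGADRO
    return moles_per_day * TRITIUM_MOLAR_MASS_G


daily = tritium_burned_per_day_g(1e9)   # hypothetical 1 GW of fusion power
print(f"Tritium burned: about {daily:.0f} g per day, {daily * 365 / 1000:.0f} kg per year")
```

Tens of kilograms per year is a large amount for such a rare isotope, which is why breeding tritium inside the reactor is seen as essential.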

Since tritium is radioactive, it must be disposed of properly. Tritium has a half-life of 12.32 years and undergoes beta-minus decay into helium-3, which is stable. This puts it in the category of low-level nuclear waste (User, 2025), especially given that the amounts of tritium needing disposal will be in the tens of grams (UK Atomic Energy Authority, n.d.) and that a few millimetres of aluminium is enough to block all of its radiation. Unreacted tritium can be extracted from the vacuum chamber through the divertor and either cycled back into the chamber or disposed of in this way.
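The half-life quoted above lets us estimate how quickly stored tritium loses its radioactivity. This minimal sketch applies the standard exponential decay law, fraction remaining = (1/2)^(t / half-life), using the 12.32-year half-life from the text; the function names are hypothetical.

```python
import math

TRITIUM_HALF_LIFE_YEARS = 12.32


def fraction_remaining(years):
    """Fraction of the original tritium left after `years` of decay."""
    return 0.5 ** (years / TRITIUM_HALF_LIFE_YEARS)


def years_until_fraction(fraction):
    """Years until only `fraction` (between 0 and 1) of the tritium remains."""
    return TRITIUM_HALF_LIFE_YEARS * math.log2(1 / fraction)


for years in (12.32, 50, 100):
    print(f"After {years} years: {fraction_remaining(years):.1%} remains")

print(f"Time to fall to 1%: {years_until_fraction(0.01):.0f} years")
```

Under this model, less than 1% of the original tritium remains after about 82 years.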

This safe disposal protocol means that contamination of the environment would be minimal, a huge improvement on the long-term storage of nuclear waste required for fission reactors. Since no other fuels used in tokamaks are radioactive, and the plasma does not get anywhere near hot enough to form heavier, fissile elements, fusion could be the first energy source to be fully green and sustainable without the various downsides that sources of this nature typically come with, although neutron bombardment does gradually make some reactor components radioactive.

The extraction of these fuels poses minimal risk to the environment too. Deuterium is extracted from seawater, tritium is produced in situ, and lithium can be extracted from seawater (although most of it is still mined today). (Editors of Wikipedia, n.d.) Therefore, fusion energy eliminates the environmental and habitat damage associated with fossil fuels and hydroelectricity, whilst remaining free from the fetters of fuel scarcity and weather dependency. Moreover, fusion energy could accelerate the shift from mining lithium to extracting it from seawater by electrolysis, which could reduce worker exploitation and the improper disposal of waste in poorer countries.

The operating costs of tokamaks could be far lower than those of other sources; they may require little to no human intervention to operate, thanks to the role of AI in plasma confinement described earlier, so there will be fewer salaries to pay, and the cost of building the reactor can be recovered over time. Furthermore, cheap production would result in lower energy prices for consumers and may begin a U-turn in negative public opinion of nuclear-based energy sources (which stems from the legacy of fission reactor meltdowns).

Finally, as has been mentioned, fusion is one of the most efficient energy sources that humans are currently capable of harnessing, releasing around 4 million times and 4 times more energy per kilogram of fuel than fossil fuels and fission respectively. (ITER, n.d.) Hence, far less fuel is required, which also brings down the price.

In conclusion, tokamaks currently face several barriers to their widespread use, including plasma instability, tritium breeding, and achieving Q > 1. Once prototypes have overcome these challenges, the use of tokamaks to generate sustainable energy could pave the way for innovations in other fields of science. However, it is unlikely that tokamaks will be ready to take on this role in the next few years, given the need to sway public opinion in the short term and the high costs that come with such a novel technology. Consequently, tokamaks are unlikely to help with current global energy issues, like rising energy costs or net zero, but can hopefully still contribute positively to a future of clean, cheap, and sustainable energy after one or two more decades of research.

This essay won the Junior ILA award in the Third Form category

Back in October of 2024, my family and I were on holiday. Accompanying us was the game of Dobble, from which my research began. In particular, my father and I became quite intrigued by the nature of the game, the ‘mono-match’ principles of it, and how it was constructed. Over the course of my investigations, I discovered an unexpected flaw within the game!

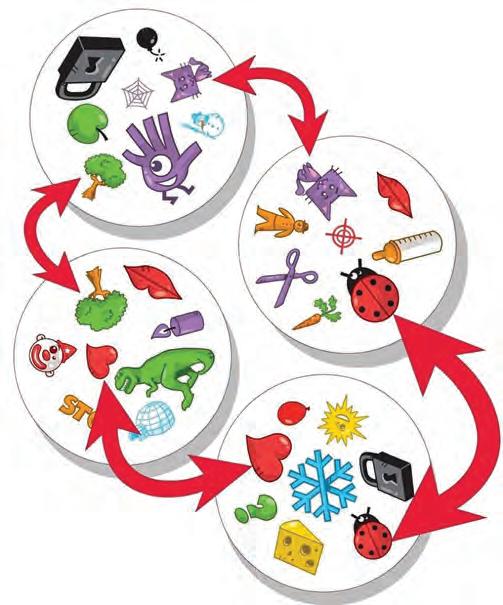

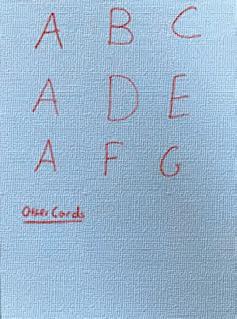

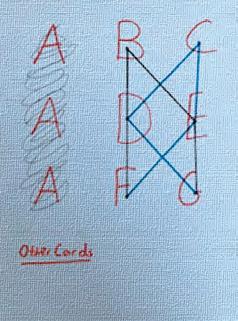

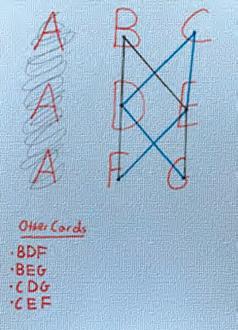

A tin of Dobble contains 55 cards, each illustrated with 8 symbols. Every card has exactly 1 symbol in common with every other card, and the aim of the game is to be the quickest player to identify the symbol that a newly played card shares with the previous card. Surprisingly, only 57 different symbols are used in total. Here are some Dobble cards:

There are many ways to create a ‘mono-matching’ game like Dobble, a couple of which are listed below:

1.1 Find the Carrot

One remarkably straightforward way of making a ‘mono-matching’ game is, firstly, to pick a number of cards and a number of symbols to be drawn on each card. Next, establish a common symbol or link running through every card, such as the carrot symbol in Dobble. Finally, fill in the remaining spaces with unique images. Technically, this does create a usable ‘mono-matching’ game; however, it would become fairly boring and monotonous after a few rounds (since the only symbol being sought would be the carrot), and we could call it Find the Carrot!
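The Find the Carrot method is easy to express in code, and doing so shows how wasteful it is with symbols. This is a hypothetical sketch: the function names and symbol labels are made up for illustration, and it assumes that every card carries the carrot plus n − 1 symbols used nowhere else.

```python
from itertools import combinations


def find_the_carrot_deck(num_cards, symbols_per_card):
    """Every card gets the carrot plus brand-new symbols that appear nowhere else."""
    deck = []
    next_id = 1
    for _ in range(num_cards):
        card = {"carrot"}
        for _ in range(symbols_per_card - 1):
            card.add(f"symbol{next_id}")
            next_id += 1
        deck.append(card)
    return deck


def is_mono_match(deck):
    """True if every pair of cards shares exactly one symbol."""
    return all(len(a & b) == 1 for a, b in combinations(deck, 2))


deck = find_the_carrot_deck(55, 8)          # same size as a tin of Dobble
total_symbols = len(set().union(*deck))
print(is_mono_match(deck), total_symbols)   # mono-matching, but with far more symbols
```

With 55 cards of 8 symbols, this approach needs 1 + 55 × 7 = 386 different symbols, compared with the 57 used in Dobble.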

Dobble is a much more compact, efficient, ‘mono-matching’ game than Find the Carrot!

As just established, method 1.1 is quite inefficient and monotonous, whereas Dobble’s method is largely the opposite. Consider a game with n symbols on each card. To keep the explanation simple, I will use n = 3, although n = 8 is used in the actual game of Dobble. Since n = 3, set the first card to ‘ABC’ (I will use letters to represent symbols on cards).

After constructing ABC, more symbols will need to be added to make a functioning ‘mono-matching’ game where n = 3. However, the next card to be constructed must have exactly one symbol in common with card ABC. Without loss of generality, a reasonable next card to formulate would be ‘ADE’, as it shares the symbol ‘A’ with card ABC.

In Dobble, each symbol appears 8 times across all the cards. Helpfully, this figure also equals the number of symbols on any one card. As I illustrate in the next section, if we want to create a ‘mono-match’ game like Dobble where n = 3, each symbol must occur precisely 3 times across all the cards. Knowing this, we can see that one more card containing ‘A’ must be crafted: ‘AFG’. To find the remaining cards, one must continue to logically construct cards that use 3 of the symbols A–G until no more can be assembled.

To help you visualise this, I have created a step-by-step guide below:

0. For context, this is what the sheet of paper looks like at the start of the guide with the three rows representing our first three cards:

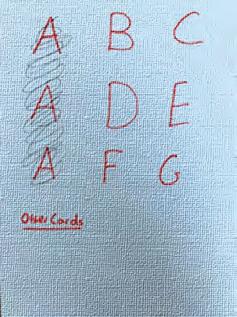

1. Since we already have 3 ‘A’s in our pack, we will not need to use ‘A’ again to construct new cards:

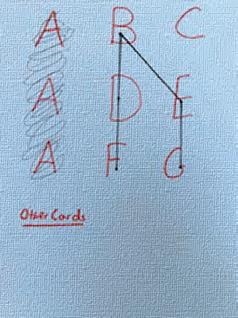

2. Next, pick a set of 3 letters, one from each row, to ensure that there are no ‘double links’ (where two cards share two symbols). I have picked the combination ‘BDF’:

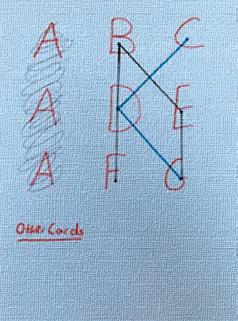

3. Next, another card needs to be found so that all three cards including the symbol ‘B’ are in place. The only other card with ‘B’ (which shares only ‘B’ with the other ‘B’ cards) is ‘BEG’.

4. Since all the cards containing ‘B’ have been identified, we must now find all the cards containing ‘C’. To ensure that each card keeps exactly 1 symbol in common with every other card, the two symbols accompanying ‘C’ on each ‘C-Card’ must firstly not both appear on the same ‘B-Card’ and secondly must not come from the same row. One combination that follows both rules is ‘CDG’.

5. For this set of cards, it is now clear that the only other ‘C-Card’ that follows the rules is ‘CEF’, as any other combination would result in a ‘double link’.

6. Now every symbol appears 3 times throughout our deck, indicating that the hunt has concluded:

7. Therefore, when creating a ‘mono-match’ game where n = 3, the 7 cards needed are: ABC, ADE, AFG, BDF, BEG, CDG, and CEF. Each pair of these cards has exactly one letter in common.

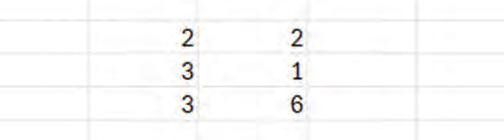
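The property claimed in step 7 can also be checked mechanically. The following is a minimal Python sketch (the function names `check_mono_match` and `symbol_counts` are illustrative only, not part of the original investigation) that treats each card as a string of letters, tests that every pair of cards shares exactly one letter, and counts how often each letter appears:

```python
from collections import Counter
from itertools import combinations


def check_mono_match(deck):
    """True if every pair of cards shares exactly one symbol."""
    return all(len(set(a) & set(b)) == 1 for a, b in combinations(deck, 2))


def symbol_counts(deck):
    """How many cards each symbol appears on."""
    return Counter(symbol for card in deck for symbol in card)


deck_n3 = ["ABC", "ADE", "AFG", "BDF", "BEG", "CDG", "CEF"]
print(check_mono_match(deck_n3))             # True
print(set(symbol_counts(deck_n3).values()))  # {3}
```

Swapping BEG for BEF, for example, would make the check fail, because BEF shares both B and F with BDF.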

To start off, I realised that the number of symbols on each card set a limit on how many cards I could make. To investigate this, I carried out a few experiments, setting the number of symbols on each card to 2, 3 and 4. As above, I used letters (A, B, C, etc.) in place of symbols, so each string of letters represents a card. My results are shown below:

n = 3 (7 cards): ABC, ADE, AFG, BDF, BEG, CEF, CDG

n = 4 (13 cards): ABCD, AEFG, AHIJ, AKLM, BEHK, BFIL, BGJM, CEIM, CFJK, CGHL, DEJL, DFHM, DGIK
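These hand-built decks match a standard construction from geometry, the finite projective plane. The sketch below is not the method used in this investigation; it is a well-known alternative, assuming that n − 1 is a prime number q (true for n = 3, 4 and 8, but not, for example, n = 7). Symbols are numbered rather than lettered, so the labels will not match the letters above, and `projective_plane_deck` is a hypothetical helper name:

```python
from itertools import combinations


def projective_plane_deck(q):
    """Build a deck for a prime q: q*q + q + 1 cards, q + 1 symbols each.

    Symbols are integers: x*q + y for grid point (x, y), q*q + m for
    slope m, and q*q + q for the 'vertical' direction.
    """
    vertical = q * q + q
    deck = []
    # Sloped lines y = m*x + c (mod q), plus the symbol for slope m.
    for m in range(q):
        for c in range(q):
            card = [x * q + (m * x + c) % q for x in range(q)]
            deck.append(card + [q * q + m])
    # Vertical lines x = x0, plus the 'vertical' symbol.
    for x0 in range(q):
        deck.append([x0 * q + y for y in range(q)] + [vertical])
    # The 'line at infinity' holds every direction symbol.
    deck.append([q * q + m for m in range(q)] + [vertical])
    return deck


for n in (3, 4, 8):
    deck = projective_plane_deck(n - 1)
    ok = all(len(set(a) & set(b)) == 1 for a, b in combinations(deck, 2))
    print(n, len(deck), ok)
# 3 7 True
# 4 13 True
# 8 57 True
```

The construction relies on arithmetic modulo a prime, which is why q must be prime here; prime powers also work, but they need finite-field arithmetic that this sketch does not include.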

Following this data, I created a sequence from the ‘Maximum number of cards’ column: 1, 3, 7, 13. I then sought the nth term of this sequence, thereby conjecturing a formula for the maximum number of cards in a ‘mono-match’ game, given n (the number of different symbols on each card).

From the table in section 2, we can see that the total number of unique symbols across every card is equal to the maximum number of cards. Taking n = 3, for instance, every symbol appears on one of the 3 cards ABC, ADE and AFG. On these cards there are n^2 symbols in total (not necessarily different). Apart from ‘A’, no symbol can appear on two of these cards, otherwise those two cards would share two symbols. When one removes every ‘A’ from the 3 cards, we are left with n^2 – n different symbols (since the number of As = n = 3). Lastly, one ‘A’ needs to be added back, otherwise the total would be 1 short. Therefore, the conjectured formula for the nth term is n^2 – n + 1, where n is the number of symbols on each card. Substituting 1, 2, 3 and 4 for n gives the expected values (1, 3, 7 and 13).
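The counting argument above can be set out step by step, with n = 3 as a check:

$$
\begin{aligned}
\text{symbol slots on the } n \text{ cards containing A} &= n \times n = n^2 \\
\text{slots left after removing every A} &= n^2 - n \\
\text{unique symbols (and maximum cards)} &= n^2 - n + 1 \\
n = 3:\quad 3^2 - 3 + 1 &= 7
\end{aligned}
$$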

In Dobble, there are 8 symbols on each card. Substituting 8 into the formula gives 64 – 8 + 1 = 57. However, as I mentioned earlier, there are only 55 cards in Dobble. If the maximum number of cards that Dobble could include is 57, why are there only 55 cards in a tin? What are the two extra cards? Is there a specific reason why Dobble is ‘incomplete’? I will answer these burning questions in due course.
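A short Python sketch of the conjectured formula follows; `max_cards` is a hypothetical helper name, and the card counts (55 for Dobble, 30 for Kids Dobble) are the figures given in this essay:

```python
def max_cards(n):
    """Conjectured maximum number of cards when each card has n symbols."""
    return n * n - n + 1


print([max_cards(n) for n in range(1, 5)])  # [1, 3, 7, 13]

# (symbols per card, cards in the tin) as quoted in this essay.
published = {"Dobble": (8, 55), "Kids Dobble": (6, 30)}
for name, (n, cards) in published.items():
    print(name, max_cards(n), max_cards(n) - cards)
# Dobble 57 2
# Kids Dobble 31 1
```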

Almost immediately after I had theorised that Dobble was ‘incomplete’, I found myself subconsciously on the hunt for the remaining 2 cards. To satisfy my curiosity, I created an Excel spreadsheet to analyse the 55 included cards and use them to find the missing 2. A small excerpt of the spreadsheet is shown below (although it extends to row 69 and column CF):

From the table in section 2, it is clear that the number of symbols on each card equals the number of times any specific symbol appears across all the cards. This means that, because Dobble uses 8-symbol cards, each symbol should appear 8 times in a complete deck.

Since there are 2 missing cards, I knew that when I added up all the symbols, 14 symbols would have only 7 occurrences, and one symbol would have a mere 6 occurrences (as the two missing cards must share one matching symbol). These missing appearances reveal the symbols on the unknown 2 cards.
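The counting step can be sketched in a few lines of Python. This is an illustration on the small n = 3 deck (with BEG and CEF taken out) rather than the real 55 Dobble cards; `missing_symbol_counts` is a hypothetical name, and it assumes every symbol still appears on at least one remaining card:

```python
from collections import Counter


def missing_symbol_counts(deck, n):
    """Return {symbol: shortfall} for symbols seen fewer than n times.

    Symbols absent from every remaining card are not detected.
    """
    counts = Counter(symbol for card in deck for symbol in card)
    return {s: n - c for s, c in counts.items() if c < n}


# The n = 3 deck from earlier with two cards (BEG and CEF) taken out.
partial = ["ABC", "ADE", "AFG", "BDF", "CDG"]
print(missing_symbol_counts(partial, 3))
# {'B': 1, 'C': 1, 'E': 2, 'F': 1, 'G': 1}
```

E is short by 2, so it must sit on both missing cards, mirroring the Dobble symbol with only 6 occurrences.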

I used ‘1s’ within the spreadsheet so that I could simply sum the number of occurrences. Once I had summed all the symbols on the provided cards, the symbols drawn on the missing 2 cards were revealed. However, I still needed to find the correct split of these symbols between the two cards, as placing them incorrectly could leave some cards sharing no symbol, or more than one symbol, with a missing card, completely invalidating the game.

To determine which symbols to assign to which card, I used the SUMPRODUCT function, which multiplies the corresponding elements of two (or more) arrays and adds up the products.

For instance, the SUMPRODUCT of the first and second columns would be (2 x 2) + (3 x 1) + (3 x 6), which equals 4 + 3 + 18 = 25. SUMPRODUCT can also be used with more than 2 columns of data.
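SUMPRODUCT can be imitated in Python as below. This sketch assumes the first column holds 2, 3, 3 and the second 2, 1, 6, which is how the products in the example pair up; it is not Excel's implementation, although, like Excel, it refuses columns of different lengths:

```python
import math


def sumproduct(*columns):
    """Multiply corresponding entries of the columns, then add the products."""
    if len({len(column) for column in columns}) != 1:
        raise ValueError("all columns must have the same length")
    return sum(math.prod(row) for row in zip(*columns))


print(sumproduct([2, 3, 3], [2, 1, 6]))     # 25
print(sumproduct([1, 2], [3, 4], [5, 6]))  # 63, i.e. 1*3*5 + 2*4*6
```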

However, before using the SUMPRODUCT function, I allocated the 15 symbols between the two cards (in a column titled ‘Card 56’ and one titled ‘Card 57’). Once I had assigned the 15 symbols, I used SUMPRODUCT on each existing card column paired with the Card 56 column, and again paired with the Card 57 column.

The target SUMPRODUCT result is 1 for every pair. To achieve a result of 1, the multiplication ‘1 x 1’ must occur exactly once. Since the spreadsheet contains only blanks (zeroes) and 1s, a result of 1 shows that there is precisely one symbol in common between the two selected cards, as every other multiplication apart from ‘1 x 1’ gives 0 (i.e. ‘0 x 1 = 0’ and ‘0 x 0 = 0’). Likewise, a result of 0 means no match, and multiple matches give a result of 2 or more.
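The 0/1 idea can be sketched in Python: each card becomes a column of 1s and 0s over the full symbol list, and the SUMPRODUCT of two columns counts their shared symbols. The small A–G symbol set and the cards ‘DEF’ and ‘BCD’ are hypothetical examples chosen only to show each possible outcome:

```python
SYMBOLS = "ABCDEFG"


def incidence_column(card, symbols=SYMBOLS):
    """1 where the symbol is on the card, 0 (a blank cell) where it is not."""
    return [1 if symbol in card else 0 for symbol in symbols]


def sumproduct(a, b):
    """Sum of the element-wise products of two equal-length columns."""
    return sum(x * y for x, y in zip(a, b))


abc = incidence_column("ABC")
print(sumproduct(abc, incidence_column("ADE")))  # 1: exactly one match (A)
print(sumproduct(abc, incidence_column("DEF")))  # 0: no match
print(sumproduct(abc, incidence_column("BCD")))  # 2: a 'double link' (B and C)
```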

The first trial of the SUMPRODUCT function showed that my arrangement of the 15 symbols was wrong, as some cards had 0, 2, or even more than 2 matching symbols with Cards 56 and 57. After some trial and error with the composition of the last 2 cards, I had done it: at the moment every SUMPRODUCT calculation resulted in 1, I knew what the missing 2 cards were.
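The trial and error can also be automated. The sketch below is a brute-force search, not the method used in this investigation, and it is demonstrated on the n = 3 deck with two cards removed; `recover_missing_pair` is a hypothetical name. It puts the symbol that is short by 2 on both new cards, tries every way of splitting the remaining short symbols between them, and keeps the first split for which every check equals 1:

```python
from collections import Counter
from itertools import combinations


def shared(a, b):
    """Number of symbols two cards share (SUMPRODUCT of their 0/1 columns)."""
    return len(set(a) & set(b))


def recover_missing_pair(deck, n):
    """Brute-force the two cards missing from a deck of n-symbol cards.

    Assumes exactly two cards are missing: one symbol then appears n - 2
    times (it is on both missing cards) and 2 * (n - 1) symbols appear
    n - 1 times (each is on one missing card).
    """
    counts = Counter(symbol for card in deck for symbol in card)
    common = [s for s, c in counts.items() if c == n - 2]
    singles = sorted(s for s, c in counts.items() if c == n - 1)
    if len(common) != 1 or len(singles) != 2 * (n - 1):
        raise ValueError("deck does not look like a full deck minus two cards")
    for group in combinations(singles, n - 1):
        card_x = set(group) | set(common)
        card_y = (set(singles) - set(group)) | set(common)
        # Keep the split only if every existing card matches each new card once.
        if all(shared(card, card_x) == 1 and shared(card, card_y) == 1
               for card in deck):
            return sorted(card_x), sorted(card_y)
    return None  # no valid split found


partial = ["ABC", "ADE", "AFG", "BDF", "CDG"]
print(recover_missing_pair(partial, 3))  # (['B', 'E', 'G'], ['C', 'E', 'F'])
```

For real Dobble, the search would try at most 3,432 groups of 7 drawn from the 14 symbols with 7 occurrences (14 choose 7), checking each against 55 cards, which is a trivial amount of work for a computer.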

Using my extensive spreadsheet, I had finally deciphered the last 2 cards, complete with correctly placed symbols. They are as follows:

| Card 56 | Card 57 |
|---|---|
| Cactus | Dog |
| Daisy | Exclamation Mark |
| Ice Cube | Eye |
| Maple Leaf | Hammer |
| Person | Ladybug |
| Question Mark | Lightbulb |
| Snowman | Skull |
| T-Rex | Snowman |

It puzzled me why Dobble appeared to be incomplete. Researching online, I found numerous theories as to why Dobble includes only 55 cards instead of the maximum 57. However, some of these theories, when examined, seem completely irrational. In this paper, I will discuss only the reasonably feasible theories, in order of credibility.

Of all the speculations on this topic, this argument seems the most likely. It revolves around the idea that playing card printers use 55-card sheets. Therefore, for Dobble to print all 57 cards, the manufacturers would have to use extra sheets, raising the production cost, which is simply not worth it for a mass-manufactured game.

However, the one flaw in this theory is that Dobble cards are not rectangular like playing cards, but circular. Circular cards might not be printed in the same number and formation as rectangular cards because of their cumbersome shape. For instance, the cards could potentially be printed on 3 sheets of 19, which would render this theory invalid, as no extra sheets would be needed. Overall, this hypothesis is relatively attractive, apart from this one modest flaw.

Perhaps the creators and manufacturers of Dobble believed that the game would flow more smoothly with only 55 cards? The omitted cards certainly make dealing easier, as 54 is divisible by 1, 2, 3, 6 and 9 (in Dobble, one card is taken out at the start of every game). However, 56 (the number of cards that would be dealt if the game were complete) is divisible by 1, 2, 4, 7 and 8, which is arguably an even better selection of numbers, based on regular gathering sizes. On the whole, this postulation is largely reasonable but, again, has a puzzling fault that ultimately leads to its downfall.
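The even-dealing argument amounts to checking which player counts divide the number of dealt cards. In this sketch, `small_divisors` is a hypothetical helper, and capping the group size at 9 players is an assumption about typical gatherings:

```python
def small_divisors(total, max_players=9):
    """Player counts from 1 to max_players that split `total` cards evenly."""
    return [p for p in range(1, max_players + 1) if total % p == 0]


print(small_divisors(54))  # [1, 2, 3, 6, 9]
print(small_divisors(56))  # [1, 2, 4, 7, 8]
```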

Some people (however few) take an alternative perspective on this topic: according to them, the tin is simply too small. I find this argument extremely hard to sympathise with, as 2 extra cards take up almost no space, adding only a minuscule amount to the height of the stack. Hence, this theory comes last on my list of credibility.

Aside from the original game, there are several other variants of Dobble, such as Dobble Disney, Dobble Animals and Dobble 1 2 3. However, one version adds a further example of incompleteness: Kids Dobble. Instead of 8 symbols on each card like the original game, Kids Dobble has just 6. Substituting 6 for n in our formula for the maximum card count (n^2 – n + 1) gives 36 – 6 + 1 = 31. Therefore, the greatest number of cards that Kids Dobble can include is 31. But alas, the Dobble manufacturers again do not include every card, as the game contains only 30!

Interestingly, Dobble Disney and Dobble Animals also include only 55 cards in the tin (like the original game). This leads me to believe that the first theory I covered is the most likely, considering that leaving out cards appears to be a common theme across every version, possibly due to some sort of manufacturing constraint.

Dobble, at first glance, is nothing more than a simple matching game. However, when examined at a deeper level, the intricacies and complexities of its construction begin to reveal themselves. I was surprised that my research took an unexpected turn and led me to discover that, whilst being ‘efficient’, Dobble is also incomplete, and I am now glad to have found the missing cards!

1. Matt Parker (Stand Up Maths), ‘How does Dobble (Spot It) work?’, YouTube, 2022.

2. ‘Why are there only 55 cards in a deck of Spot It and not 57?’, BoardGameGeek.

3. ‘Dobble Jeu De Carte: Promotion et meilleur prix 2025’, image: dobble-jeu-de-carte-678x381.jpg (678×381).

This essay was commended at the Junior ILA Celebration Evening

Appeasement attracts a wide range of views, from those who believe that it was a complete disaster to those who try to explain the logical reasons why the British and French governments adopted the policy. As will be evidenced, my view is closer to the latter: a more sympathetic appraisal of why it was, at the time, a good idea. In hindsight, the policy seems to have been an abysmal mistake that allowed Hitler to become extremely powerful, to the extent that Britain and France were almost weak in comparison. However, it must be considered that, at the time, appeasement looked like a wise plan for the British and French, and one that could easily have proved very beneficial. Several factors support this view, and this essay will explore them: the need for time to rearm, the horrors of the Great War, the unfairness of the Treaty of Versailles, Hitler standing up to Communism, British economic problems in the aftermath of the Great Depression and the lack of support from the British Empire and the USA. These are the main reasons why it is most compelling to believe that appeasement was not a bad policy at the time. However, it will still be argued that appeasement, even at the time, was an unwise endeavour, considering there was no reason to trust Hitler, something the public could see. This view will be further supported by the growth the policy allowed Germany, the effect it had on the USSR and the encouragement it gave Hitler. (Walsh, 2001)

First though, it is vital that some context is established regarding the policy of appeasement. At the end of the Great War, the Allies introduced the Treaty of Versailles which imposed a maximum army size of 100,000 for Germany, while also forbidding conscription, which meant that soldiers had to be volunteered. (Britannica, 2024) The restrictions on the German army were particularly frustrating for a country whose military strength had grown so significantly since the Franco-Prussian war of 1870-1871, (King’s College London, 2020) though perhaps the most damaging cause was the forced acceptance of sole blame for the Great War, a clause that attacked German reputation and broke their spirits completely. In addition, they lost significant land, particularly to Poland, while they were required to pay £6.6 billion in many instalments, which proved a challenge to their economy already broken by the long war. (Britannica, 2024) The public anger at such conditions, which was seemingly somewhat justified, led to Hitler’s rise to power, as he promised better conditions, and that he would violate the unfair treaty. This gave rise to the policy

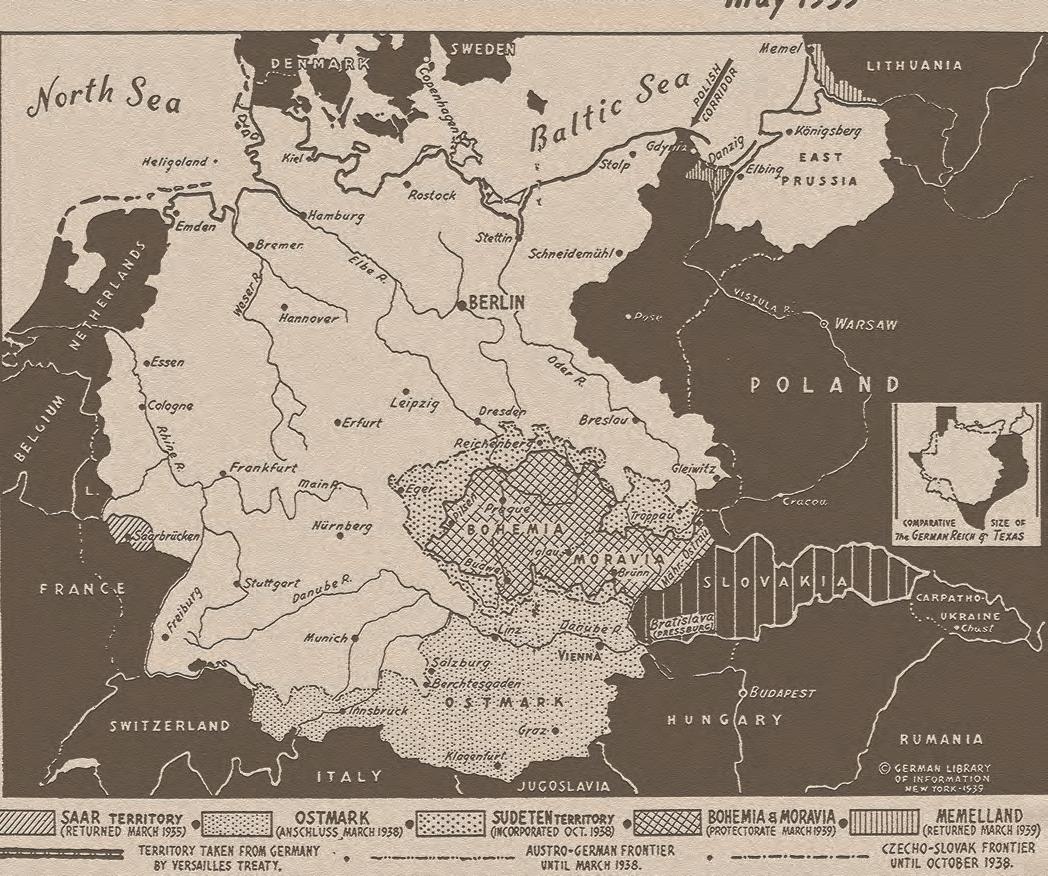

of appeasement, which was an idea that to limit any conflict, Britain and France should not interfere with Hitler’s actions and attempt to satisfy his desires to a reasonable extent. This policy meant that Hitler’s Germany could slowly grow in strength, starting with significantly increasing their army past the limit in 1933, before they remilitarised the Rhineland in 1936, with the Anschluss between Germany and Austria in 1938 and the invasion of Czechoslovakia in March 1939 also happening without any significant reaction by Britain and France. (Walsh, 2001)

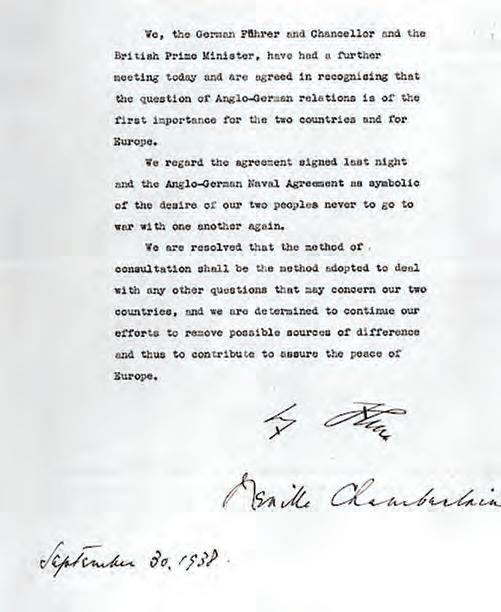

It seems convincing that the policy of appeasement was not a good idea because, even at the time, British citizens could see that Hitler could not be trusted and that putting faith in his words was unwise. For example, in October 1938, soon after the Munich Agreement was signed, Hitler stated that he did not intend to seize any further territory once he had been given control of the Sudetenland. (Gottlieb, 2024) In the view of Prime Minister Neville Chamberlain, this would bring ‘peace for our time’, yet 93% of the British population correctly believed Hitler was lying about his intentions for the rest of Europe. (Modern History Review, Hodder magazines, ‘Peace for our time’, 2022) These beliefs were held in spite of the Anglo-German Declaration, signed a day after the Munich Agreement, which expressed the wish that the British and German people would ‘never go to war with one another again’. (Neville, 2006) Therefore, one must question whether appeasement was a good idea, since it relied on trusting Hitler’s promises: appeasement could only work if Hitler, while defying the Treaty of Versailles, did not carry out any action so extreme that it would leave the British and French with no option but to go to war. When Germany invaded Poland, trusting Hitler was proven to be a mistake, but even without this hindsight, much of the population accurately predicted deception by Hitler, recognising that, just as he had gone back on his promises before, he would continue to do so. For example, Hitler had already gone back on his promise that he did not desire the entirety of the Sudetenland, which should have stopped the British and French governments from believing Hitler would not take any further land after the