Welcome to the Q3 edition of Global Tech Insider, where we move beyond today’s innovations to uncover the technologies shaping tomorrow’s world.

This quarter, we explore Africa’s thriving developer ecosystem, examining how it’s evolving and why global companies must engage meaningfully to unlock mutual growth. We also dive into the cybersecurity landscape with Horizon3.ai, showcasing how evidence-based testing is transforming the way businesses secure their digital infrastructures.

In the automotive sector, Uber’s $300M investment in autonomous vehicles marks a bold re-entry into the robotaxi race, signaling a shift in the future of mobility. We also look at BYD’s cross-device integration system, which is transforming the in-car experience, and explore the ethical implications of AI-generated music, as illustrated by the virtual band Velvet Sundown.

This issue also highlights ValueMiner, a game-changer in enterprise intelligence. With its graph-first approach, ValueMiner is revolutionising decision-making and reshaping business operations in real time. Alongside this, we spotlight Horizon3.ai, whose NodeZero® platform is redefining cybersecurity through evidence-based validation - showing not just where vulnerabilities exist, but how attackers could exploit them, and helping organisations turn proof into their new measure of trust.

At Global Tech Insider, we remain dedicated to untangling the complexities of our rapidly evolving digital world, bringing you the insights that matter most, and keeping you ahead of the curve.

Your journey with us means everything. Let’s uncover what’s ahead, together.

The Editors, Global Tech Insider

Correspondence Address

Global Tech Insider, 20-22 Wenlock Grove, London, N1 7GU

Email: info@globaltechinsider.com Web: www.globaltechinsider.com

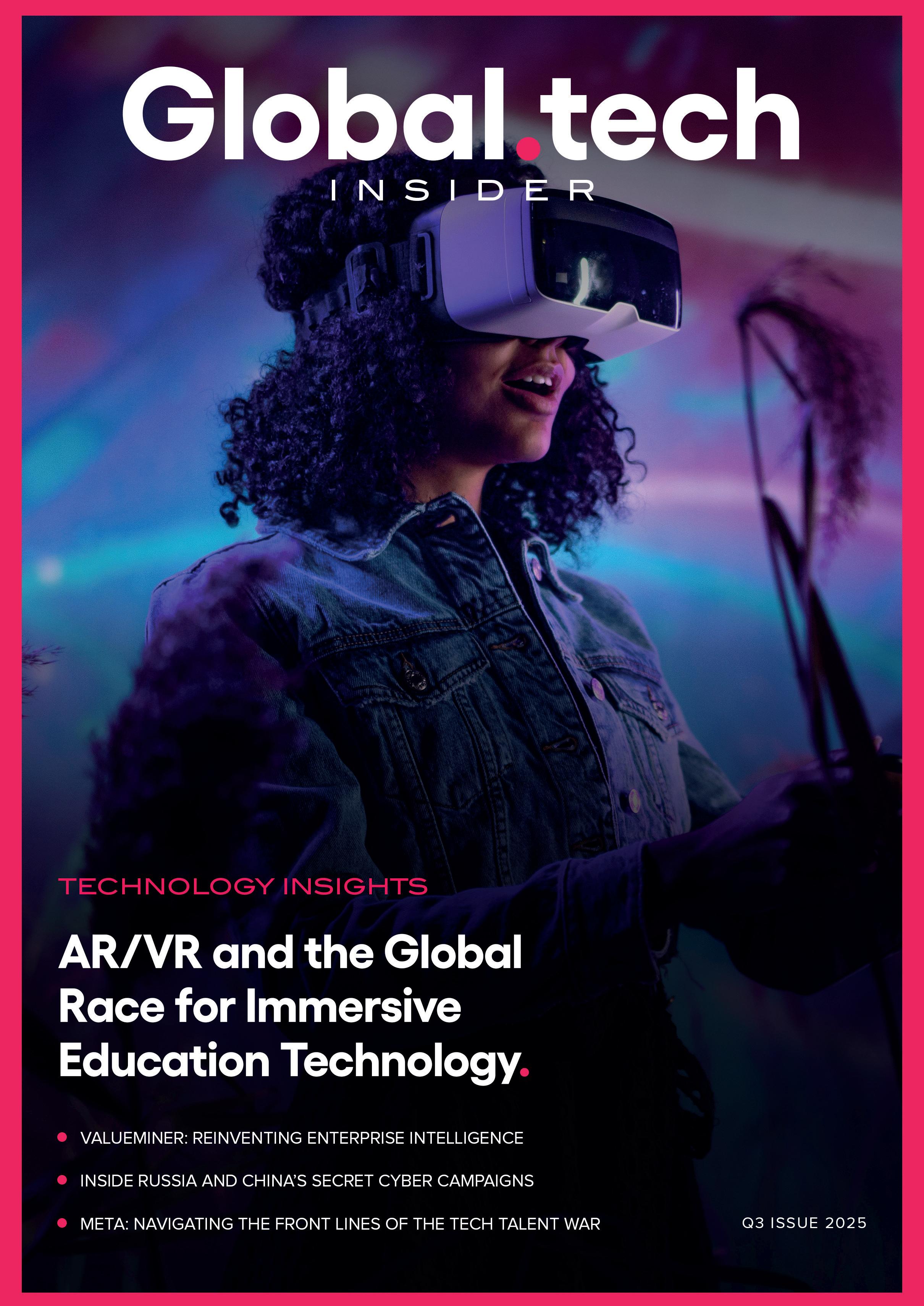

When a student in rural China dons a VR headset and “steps into” a 19th-century battlefield, or a medical trainee in Brazil practises surgery on a digital patient in VR, we’re witnessing not a novelty, but the early shape of tomorrow’s classrooms. The shift from flat screens to three-dimensional, interactive worlds is underway - and behind it lies a high-stakes global race. Nations, corporations, and universities are competing to lead in immersive education.

To understand where this is headed, we must look under the hood: the hardware and software pushing immersive learning forward, the real experiments now underway around the world, and the challenges - technical, ethical, and strategic - that determine who might win.

The appeal of immersive education begins with how it changes the very nature of learning. Traditional classrooms often rely on abstract explanation: reading about the solar system, memorising chemical reactions, or listening to a lecture about history. AR and VR collapse that distance by making knowledge spatial and experiential. Instead of memorising facts, students inhabit concepts - walking across the surface of Mars, mixing virtual compounds without risk, or standing beside a reconstructed historical figure. This sense of presence is what makes immersive education so different. Hardware advances - lighter headsets, untethered mobility, and higher-fidelity displays - are simply the enablers of this presence. As devices like the Meta Quest 3 or Apple’s Vision Pro become more comfortable and portable, the barrier between learner and lesson thins even further.

Equally important is the ecosystem of software that transforms devices into classrooms. Early experiments such as Google Expeditions, though now retired, revealed the spark of wonder in virtual field trips, even if the technology was rudimentary. Today’s platforms extend that promise: VR science labs from Labster let students run experiments without expensive materials, while AR overlays allow medical trainees to “see through” anatomy in real time. Cloud computing and 5G can now offload the heavy rendering, freeing devices to remain light and accessible, while artificial intelligence enables lessons that adapt as the learner progresses. If a learner struggles, the system slows down or changes its approach; if curiosity spikes, it offers deeper explorations. The allure lies in how these technologies don’t just make lessons more engaging - they make learning feel embodied, intuitive, and unforgettable.

This promise is not theoretical. Around the world, classrooms and labs are already testing how immersion reshapes understanding. In the United States, VR simulations are used in medical schools to allow surgical residents to rehearse complex procedures before operating on real patients, a practice shown to improve performance and reduce errors. NASA, too, relies on VR to prepare astronauts for the extreme demands of space missions. Across Europe, history has been reimagined through VR reconstructions of medieval towns and ancient ruins, while museums deploy AR to annotate artefacts, turning a walk through the gallery into an interactive dialogue with the past. These examples demonstrate that immersive education is compelling because it brings abstract knowledge into lived experience.

In Asia, momentum is just as strong. China has invested heavily in immersive classrooms, particularly in rural areas where resources are limited. Here, VR and AR are not a luxury but a bridge to opportunity, allowing students in remote regions to experience lessons once confined to urban centres. Japan has embraced immersive learning in vocational programmes, where students use AR to examine machinery and industrial equipment in ways that physical classrooms could never allow. India, meanwhile, adapts the approach to local constraints: lightweight AR apps running on inexpensive smartphones let children in resource-strapped schools interact with digital science experiments. The diversity of adoption strategies highlights the flexibility of immersive technology - it can serve the elite and the underserved alike, depending on how it is deployed.

The very qualities that make immersive education exciting for teachers and learners also fuel a fierce race among corporations and governments. For companies like Meta, Apple, and Google, immersive education represents far more than an education market. It is an opportunity to define the platforms through which billions of people will one day learn. Owning that layer means controlling not only device sales but content ecosystems, data analytics, and the habits of a future workforce. Startups, especially in emerging markets, are also entering the competition, offering localised solutions that resonate more deeply with cultural and linguistic needs. In this way, the race is not just about scale but about relevance.

Governments are equally aware that immersive education is a strategic asset. China’s XR strategy positions education as a pillar of national competitiveness, while Europe emphasises digital sovereignty to avoid reliance on foreign platforms. Smaller nations, too, are looking at immersive education as a way to leapfrog traditional infrastructure challenges, building virtual classrooms rather than waiting for physical facilities to catch up. For policymakers, the allure lies not only in better education outcomes but in the broader advantages - technological self-sufficiency, workforce readiness, and cultural influence - that leadership in this field confers.

Yet the road forward is complex. The same immersion that makes learning compelling introduces new risks. Hardware remains costly, teacher training lags behind, and in many regions, basic connectivity is still inadequate. More troubling are the ethical concerns. Immersive systems capture vast amounts of biometric data - tracking gaze, movement, and even emotional responses. For advocates, this level of personalisation makes lessons feel like one-on-one tutoring. For critics, it raises alarms about surveillance, data exploitation, and manipulation. In essence, the features that make immersive education alluring - its intimacy, its adaptiveness, its ability to place the learner at the centre - are also what make it fraught with risk.

The race to build immersive classrooms is, at its core, a race to perfect the technology. As headsets become lighter, processors more powerful, and networks faster, AR and VR move closer to delivering seamless, affordable, and truly responsive learning experiences. These advances are not just incremental - they redefine what is possible when lessons become spatial, adaptive, and persistent across devices. The classroom of the future will be powered less by chalk and textbooks, and more by sensors, algorithms, and immersive engines that bring concepts to life.

But the ultimate test is not whether the hardware dazzles or the software impresses - it is whether these technologies can scale responsibly, reaching millions of learners without sacrificing privacy, accessibility, or pedagogical depth. If AR and VR can overcome those hurdles, they won’t just add another tool to education; they will become the infrastructure of how knowledge is delivered in the 21st century. That is why the global race for immersive education technology matters: it is, quite simply, a race to shape the future interface between human curiosity and human progress.

Uber has re-entered the self-driving spotlight with a $300 million investment in luxury EV maker Lucid Motors, marking a bold comeback in the global robotaxi race. The move involves the purchase of 20,000 Lucid Gravity electric SUVs, each to be outfitted with Nuro’s Level 4 autonomous driving system. These vehicles are scheduled for limited rollout in a major U.S. city by late 2026, with international deployment hinted at soon after.

It’s a striking reversal for Uber, which sold its in-house autonomous driving unit, Advanced Technologies Group (ATG), to Aurora Innovation in 2020. Now, instead of building the tech internally, Uber is aligning itself with two emerging players in electric and autonomous vehicles in a bid to catch up with, and possibly leapfrog, Tesla, Waymo, and Cruise.

Uber’s renewed robotaxi ambition comes with both strategic intent and market pragmatism. Following the turbulent exit from its AV division five years ago, the company’s approach to autonomy shifted from ownership to orchestration. Rather than developing the technology itself, Uber is now betting on partnerships, positioning itself as a platform that can distribute and scale the tech once it’s ready.

Its ride-hailing app spans more than 70 countries and logs over 34 million daily trips. That gives Uber a global sandbox few can rival. But raw scale isn’t enough. The selection of Lucid, known for its high-end electric saloons and SUVs, hints at an effort to differentiate on experience. The Gravity SUV’s projected 450-mile (724-km) range, spacious interiors, and software-first design make it a compelling vehicle for high-usage urban fleets, particularly for business-class and airport services.

Uber’s stake will reportedly make it Lucid’s second-largest shareholder, suggesting deeper integration is in the works. For Lucid, this is a route to mass distribution beyond its current luxury retail niche. For Uber, it’s a calculated bet that combining comfort, range, and autonomy can help it own the premium end of the robotaxi spectrum - even as competitors focus on cheaper, boxier designs.

This is not a two-player deal. Nuro’s inclusion is what truly defines the partnership. Known for its compact autonomous delivery vehicles, Nuro has built a strong reputation for safety and reliability. It has logged over 1.5 million autonomous miles in U.S. cities and maintains strong compliance with evolving regulatory frameworks. Its “Nuro Driver” system, designed to operate without human intervention, will power the autonomous features in Lucid’s Gravity SUVs.

For Uber, Nuro’s Level 4 autonomy is essential. It’s the point at which vehicles can drive themselves under defined conditions, with no human fallback required. That standard allows for true driverless deployment, critical to the economics of scaling robotaxis. By teaming up with a partner that’s already achieved Level 4 status in delivery applications, Uber sidesteps the cost and complexity of reinventing autonomy tech from scratch.

The synergy between the three players is rooted in complementary strengths. Lucid provides the hardware - long-range, software-defined vehicles designed for comfort and battery efficiency. Nuro supplies the autonomy layer, tested in real-world conditions. Uber brings the operational backbone: consumer-facing app, demand logistics, and route intelligence. Together, the trio aims to build a fully autonomous ride-hailing experience that is premium, safe, and scalable.

Still, the move is not without risks. Lucid has faced financial and operational headwinds in recent years, with quarterly losses exceeding $700 million and multiple changes in its executive leadership. Scaling up production to fulfil a 20,000-unit order while meeting quality and safety standards will test the company’s maturity as a manufacturer.

On the competition front, Uber is entering a market already being shaped by other giants. Waymo continues to lead in regulatory clearances and real-world miles, operating fully driverless rides in Phoenix, San Francisco, and Los Angeles. Tesla is preparing its own robotaxi reveal in 2025, betting on its AI-native FSD (Full Self-Driving) software, though its approach is more controversial and less aligned with regulators.

Then there are the logistics. Deploying 20,000 robotaxis requires more than just the vehicles; it demands charging infrastructure, maintenance networks, AV-ready urban routes, and local government buy-in. Regulatory standards for autonomous vehicles remain inconsistent across U.S. states, with some welcoming robotaxis and others stalling permits due to safety and labour concerns. Lawsuits surrounding liability, data usage, and insurance are all still evolving.

Even within Uber’s driver network, acceptance is mixed. While some drivers view autonomy as inevitable, others express concerns about job displacement. Uber insists the rollout will be gradual and focused on specific use cases, such as airport shuttles, off-peak rides, or high-volume routes. But tensions between automation and employment will be difficult to avoid, especially in countries with weaker labour protections.

Uber’s $300 million move is more than a product announcement; it’s a strategic repositioning. It suggests a future where Uber no longer relies solely on a vast freelance driver base but supplements it with autonomous fleets for predictable, high-demand routes. This hybrid model could improve margins, reduce rider wait times, and create consistency in service levels.

It also raises the stakes for other ride-hailing firms. Lyft, which recently pivoted to profitability through strategic layoffs and pricing changes, may now be forced to revisit its autonomy roadmap. Meanwhile, traditional carmakers, from Hyundai to Toyota, are monitoring how platform-native companies like Uber enter manufacturing territory through partnerships, not production.

On a broader scale, Uber’s re-entry into autonomy will influence city planning, transport budgets, and carbon goals. A reliable robotaxi fleet could ease congestion and reduce emissions, especially if integrated with public transport infrastructure. But it could also strain local ecosystems if not managed with data transparency and urban collaboration.

Ultimately, Uber’s bet is about long-term control. By taking equity in both the vehicle maker and the autonomy supplier, it isn’t just buying services; it’s embedding itself deeper into the future of urban mobility. This is Uber hedging against platform commoditisation and positioning itself as a full-stack transport company - one that owns not just the app, but the entire ride experience.

Uber’s bold $300 million bet marks a fundamental shift in how it sees the future of mobility. Success, however, will depend on execution. Can Lucid deliver at scale? And can Uber balance automation with its driver ecosystem and regulatory headwinds?

In the contemporary theatre of global technology, where digital transformation has too often become a hollow phrase, one company is rebuilding the premise from its very foundations. Nestled in Munich yet thinking at planetary scale, ValueMiner is not merely a software firm. It is an architectural response to decades of management inefficiency, IT sprawl, and unmet strategic potential. Founded in 2014 by veteran C-level consultant Robert J. Schiermeier, ValueMiner fuses cutting-edge AI, sovereign data models, and a graph-first logic to craft one of the most transformative platforms in modern enterprise technology.

Where most digital solutions offer layers atop legacy complexity, ValueMiner proposes something more ambitious: a new language for organisational intelligence. The result is not a tool but an operating system for the 21st-century manager.

Graph Thinking: Escaping the Tyranny of Tables

At the technological core of ValueMiner lies a bold philosophical assertion: the world is not a table, it is a graph. Whereas traditional databases force reality into rows and columns, ValueMiner’s proprietary graph database embraces the interconnected, living structure of business. From production chains to compliance workflows, from cross-border project portfolios to embedded teams, everything becomes visible, linkable, and context-rich.

This shift is not academic. It powers ValueMiner’s ability to construct a real-time digital twin of an enterprise - a living simulation that mirrors strategy, operations, and interdependencies as they unfold. For the manager, this means real questions (“How is our strategic rollout in APAC progressing?” or “Where are we losing efficiency in compliance?”) can be asked and answered without weeks of data wrangling or millions in analytics infrastructure. The digital twin does not just visualise data; it speaks it.

Complementing this is ValueMiner’s sovereign AI layer. In a European landscape increasingly sensitive to data privacy and control, the firm offers an approach that is both powerful and compliant. Through a sophisticated low-code interface, users can create customised, enterprise-ready applications in weeks, not quarters - and do so without abandoning legacy systems. The platform connects rather than replaces, weaving modern functionality into old architectures without disruption.

ValueMiner serves two distinct, high-impact user groups. The first is enterprise managers: individuals responsible for navigating strategy, performance, and process with limited visibility and overstretched resources. ValueMiner hands them a control panel grounded in logic, not latency. Gone are the days when strategic clarity required army-sized controlling departments.

The second audience is software companies and development teams. With ValueMiner’s low-code framework, these firms can rebuild their existing software products on top of a flexible AI backbone, achieving cost savings of up to 70 percent while gaining real-time adaptability. It is, quite literally, software remade without compromise.

These are not marginal improvements. Clients routinely report IT cost reductions of 80 percent. Entire bureaucratic structures are being reimagined, as evidenced by ValueMiner’s active role in transforming how the Bavarian government interfaces with citizens. This is not just modernisation; it is structural reinvention.

Yet if technology is ValueMiner’s engine, culture is its chassis. The firm runs not on jargon or hierarchy, but on a plainspoken ethos that places character above credentials. “We are not interested in your CV, but in how your brain works,” says Schiermeier. The internal motto - respect, keep your promises - is a lived operating system.

Executives such as COO Alexandra Höhne and CTO Michael Weber embody this culture: intellectually rigorous, emotionally intelligent, and radically committed to impact. Team members are expected not just to code or consult but to challenge assumptions, propose bold solutions, and learn from failure. This cultural scaffolding enables a unique blend of creativity and precision, yielding products that are not only innovative but deeply fit for purpose.

Recruitment is guided by four core traits: curiosity, collaborative drive, resilience, and problem-solving ability. These are not soft skills, but the foundation of the firm’s delivery model. It is an environment where excellence is expected, but humanity is preserved.

Having pioneered AI compliance software in 2016 and partnered with OpenAI in Germany as early as 2018, ValueMiner has long moved ahead of the curve. Today, it is executing industrial strategies across five continents, forming defence and civil partnerships with industry unicorns, and being considered for prestigious awards such as the Bavarian Innovation Prize.

In Germany, it is revolutionising public sector services by applying private-sector efficiency to citizen-state interactions. In Asia and the US, new strategic partnerships are extending ValueMiner’s graph-based logic to global systems. Verticals in publishing and internal compliance are in active development, and CRM partnerships across Europe are accelerating.

Through it all, ValueMiner adheres to its founding promise: digital transformation without the trauma of system replacement, and automation without the dilution of human intelligence.

To describe ValueMiner as a software firm is to miss its broader implication. It is a reframing of how enterprises think, act, and evolve. In a business environment saturated by dashboards that offer more colour than clarity, ValueMiner presents something rarer: real-time coherence.

Its impact is measured not just in money saved, but in friction removed - from workflows, decisions, and even culture. It renders the opaque legible and the complex navigable. And in doing so, it grants managers, developers, and public servants alike a rare gift in the modern age: the ability to see clearly, act precisely, and lead confidently.

ValueMiner is not just a platform. It is the architecture of organisational sensemaking - built not for code alone, but for the cadence of decision.

Africa’s tech scene is undergoing a transformation. With over 700,000 professional software developers and rising, the continent is no longer on the fringe of the digital economy - it is fast becoming one of its most dynamic growth zones. Countries like Nigeria, Egypt, Kenya, and South Africa now host tens of thousands of engineers each. GitHub activity from African developers has surged, rising by over 70% in markets like Kenya and Nigeria, and tech hubs in cities such as Cairo, Kigali, and Cape Town are emerging as global players.

But while the numbers are promising, the opportunity they represent is fragile. As global tech companies race to tap into this emerging ecosystem, there is a growing sense that something deeper is required, more than just outsourcing or short-term hiring sprints. The future of tech on the continent depends on whether international firms show up with genuine partnership, or simply extract value and move on.

This isn’t growth for its own sake. African developers are building world-class products and companies. Paystack, the Nigerian fintech acquired by Stripe for $200 million, was engineered entirely by local teams. Flutterwave, valued at over $3 billion, supports international clients like Microsoft. These aren’t isolated stories; they reflect a pattern of skilled African talent driving innovation at scale.

Fuel for this boom comes from both the private and public sectors. Andela’s model of training and exporting African tech talent has placed developers in firms like GitHub and ViacomCBS. Local institutions such as Moringa School (Kenya), Decagon (Nigeria), and ALX Africa have collectively trained thousands more. Public initiatives, like Ghana’s coding curriculum or Ethiopia’s Ethio ICT Village, are also helping to institutionalise digital skills.

African tech start-ups raised a record $6.5 billion in 2024 alone, up from just $400 million five years earlier, much of it flowing to founder-engineers solving real-world problems. Add to that the rise of open-source projects, such as Nigeria’s Open Banking API and Kenya’s disease-detection AI tools, and it’s clear: Africa’s developer economy is not a future bet. It’s a present force.

Despite the enthusiasm, Africa’s tech ascent remains uneven and vulnerable to structural barriers. Reliable internet remains a luxury in many regions. In places where connectivity exists, power outages can still disrupt daily productivity. And while developer training may be improving, the cost of devices, data, and stable infrastructure is still out of reach for many aspiring coders.

Policymakers have tried to respond. Kenya’s ambitious Konza Technopolis aims to be a smart city modelled on global tech hubs. The African Continental Free Trade Area (AfCFTA) also promises to ease inter-country collaboration and knowledge exchange. But these efforts remain patchy. Regulation is often inconsistent, especially around digital taxation, data privacy, and IP protection, making cross-border tech scale-ups difficult to execute.

Without targeted investments in infrastructure and coordinated policy efforts, Africa’s tech ecosystem risks stalling. Building connectivity is not just about laying cables or subsidising laptops. It requires aligning public and private sector priorities to create lasting, inclusive growth.

There is a common but outdated narrative that Africa’s tech talent is attractive simply because it is cheaper. And while labour arbitrage is undeniably part of the story - developers in Lagos or Kigali may earn 60-80% less than their Western peers - the real value lies elsewhere.

What distinguishes Africa’s developer community is its resourcefulness. These are engineers building fintech solutions without stable bank APIs, health tech platforms without universal healthcare, and AI applications trained on low-data environments. Their constraints breed innovation. Their work is often deeply connected to local communities and social impact.

Global companies engaging in Africa must do so with respect, not opportunism. That means moving away from extractive models - short-term hiring, siloed outsourcing - and instead co-developing products, training initiatives, and ownership structures that benefit both parties.

If international companies want to participate in Africa’s growth story, they need a purposeful playbook, one built on partnership, not opportunism. That playbook should begin with investing in education and training, not just to create a hiring pipeline, but to deepen the entire ecosystem.

Secondly, firms should actively partner with governments and regional organisations to advocate for coherent policies: improved broadband access, favourable tax conditions for start-ups, and robust IP frameworks that protect innovators.

Finally, it’s time to champion African-led innovation. Rather than replicating Western product models, companies should fund local solutions, support regional research labs, and amplify success stories. Platforms like Zindi - a data science competition platform based in South Africa - show that Africa’s most scalable solutions often come from within.

Africa’s developer boom is real, and the potential it represents is staggering. But scale alone is not enough. To unlock its full promise, global tech must move beyond short-term thinking and build meaningful, respectful partnerships. That means addressing infrastructure, championing policy reform, and, above all, valuing African innovation for what it truly is: a driver of global progress, not a discount labour pool.

The question for tech leaders is no longer whether to engage with Africa’s developer ecosystem, but how. Are you approaching with a purposeful playbook, or simply showing up for the headcount?

Once allies in the corridors of power, Donald Trump and Elon Musk now find themselves embroiled in a public political spectacle. Musk’s pointed criticism of Trump’s “Big Beautiful Bill” - a sweeping tax and spending package - prompted Trump to threaten dramatic retaliation: from revoking subsidies and contracts to questioning Musk’s American citizenship and hinting at deportation.

This isn’t just personal animus; it’s a signal to the tech industry that the entanglement of politics and business carries peril. As Musk’s companies - SpaceX, Tesla, xAI - brace for fallout, other tech firms are watching closely, recalibrating partnerships and lobbying strategies. This article explores how the feud escalated, the concrete risks Musk now faces, Big Tech’s broader adjustments, and what it all means for the future of the sector.

It was only a few months ago that Trump and Musk appeared politically aligned, united by a shared disdain for red tape and an enthusiasm for American exceptionalism. Musk even took on a prominent role in Trump’s Department of Government Efficiency (DOGE) - an initiative aimed at cutting federal spending and streamlining government - lending the venture tech-world credibility. For Trump, Musk embodied the self-made innovator, and for Musk, Trump offered access to influence and federal leverage.

But the alliance unravelled when Musk went on the offensive over the so-called Big Beautiful Bill - a sweeping legislative package introduced by Trump’s administration that included major cuts to green energy incentives and was projected to balloon the federal deficit. Musk publicly denounced the bill as “fiscally reckless” and a betrayal of innovation, particularly pointing to the removal of tax breaks for electric vehicles. Trump, never one to take criticism lightly, snapped back. Within hours, he was hinting that Tesla and SpaceX might lose federal support and began referring to Musk’s citizenship status in vague, threatening terms.

The turning point came when Musk floated the idea of a new “America Party” - a centrist alternative to both Republicans and Democrats. Trump immediately labelled Musk “delusional” and “disloyal”, framing the idea as a vanity project that threatened Republican unity. The feud escalated from professional disagreement to a very public ideological split. From political allies to adversaries, the shift was swift, and the fallout immediate.


Trump’s vendetta didn’t stop at rhetoric. It translated into a series of calculated political manoeuvres that could have real and lasting effects on Musk’s businesses. Tesla, which once benefitted from a range of federal subsidies, saw its EV tax incentives slashed in the new bill - a move widely interpreted as a retaliatory strike. In response, Tesla’s share price dipped nearly 5% in one week, with investors expressing concerns about longer-term federal hostility.

SpaceX is also under scrutiny. Reports have emerged that Trump’s team has considered removing the company from the Golden Dome missile defence initiative - a strategic programme valued in the tens of billions. While Pentagon officials have described SpaceX as “essential” to U.S. space infrastructure, the uncertainty has spooked defence analysts and raised eyebrows across Capitol Hill. According to leaked internal memos, SpaceX executives have been urged to prepare for a potential freeze in new federal contracts.

Meanwhile, xAI - Musk’s AI start-up competing directly with OpenAI - finds itself at an awkward crossroads. Though it recently secured promising research partnerships, its path to future Department of Defense funding now appears murky. White House Press Secretary Karoline Leavitt publicly stated that “the President does not support giving taxpayer money to people who insult the American voter”, in what many interpreted as a veiled reference to Musk’s criticisms.

The economic implications are far-reaching. When political animosity begins to dictate procurement decisions and regulatory treatment, innovation ecosystems falter. Musk’s empire, once bolstered by both private capital and public contracts, now finds itself having to fight battles on multiple fronts - financial, political, and reputational.

While Musk bears the brunt of the blow, the ripple effects across Big Tech are just beginning to show. In Silicon Valley’s private circles, executives have been quietly adjusting their playbooks. Companies like Amazon, Microsoft, and Oracle, all with ongoing federal contracts, have reportedly ramped up efforts to avoid political entanglement, issuing fresh internal guidance discouraging high-profile political endorsements by senior executives.

At the same time, regulatory bodies outside the United States are seizing the moment. The European Commission, which had already been eyeing Musk’s platform X (formerly Twitter) for content moderation and data protection violations, now appears more emboldened to act. Legal experts suggest that the breakdown in Musk’s U.S. political support reduces the diplomatic risk of harsh enforcement actions. In other words, the EU now sees less reason to tread lightly.

Investors, too, are taking note. Risk disclosures from SpaceX now explicitly mention Musk’s political activity as a material risk - a rare move for any privately held company. Several venture capital firms have begun conducting “political exposure assessments” when considering new investments in companies with highly visible founders. As one investor told Axios anonymously, “Nobody wants to back a genius who’s one tweet away from losing a government contract.”

The broader effect is that Musk’s feud with Trump is becoming a cautionary tale. In a sector already under scrutiny for monopolistic behaviour and opaque lobbying, the consequences of unchecked political engagement are now front and centre.

At its core, the Trump-Musk fallout signals the high volatility of mixing personal politics with enterprise leadership. Once seen as savvy brand-building, overt political involvement is increasingly being viewed as a liability. Start-ups and mature firms alike are revisiting their risk frameworks, and some boards are even considering codifying policies that restrict political activity for senior executives.

Defence and aerospace contractors who’ve long competed with SpaceX, including Northrop Grumman and Blue Origin, may quietly benefit from the chaos. With SpaceX under scrutiny, agencies looking for less politically exposed alternatives could shift attention (and funding) elsewhere. In the AI space, xAI’s uncertainty may create an opening for more “neutral” companies like Anthropic or Cohere to take on sensitive federal work.

The message is clear: political capital is no longer a one-way ticket to influence. It can be rescinded just as quickly as it’s granted. For an industry built on disruption, the tech sector may need to relearn an old corporate truth - stability matters, and discretion can be strategic.

The once-solid Trump-Musk alliance has unravelled publicly, putting a spotlight on the risks tech leaders take when politics becomes personal. Trump’s threats, from pulling subsidies and contracts to questioning Musk’s loyalty, carry real weight, while Musk’s combative stance has unsettled both investors and regulators.

This saga stands as a warning bell: in a world where tech intersects with politics, even a single tweet can shape contract portfolios, stock valuations, and regulatory agendas. As Silicon Valley watches, the lesson is clear - political capital is a double-edged sword. The next generation of tech powerhouses will no doubt ask themselves: how do you innovate bravely without triggering a backlash that derails everything?

The attack on European telecommunications, attributed to APT28 (Fancy Bear), is just one example of a growing trend in cyberattacks whose true purpose often remains concealed beneath the surface. While these incidents may appear to be about temporary disruptions, their real intent lies in exploiting critical infrastructure for far more significant, long-term gains. Hacker groups operating out of Russia and China have refined their methods, conducting operations that are increasingly hard to trace yet highly effective.

This article will peel back the layers of these sophisticated attacks, shedding light on the techniques, tools, and vulnerabilities that make them so dangerous. By diving into recent examples, including the APT28 breach, we’ll explore how these groups infiltrate, adapt, and evade detection, reshaping the cybersecurity landscape in ways that most organisations never see coming.

The APT28 group’s infiltration into US energy grids wasn’t an isolated incident; it was part of a much larger, quieter trend of sophisticated cyberattacks targeting critical infrastructure. While the attackers’ presence within the grid wasn’t immediately felt, the true gravity of their intrusion lay in their ability to navigate unnoticed through the digital corridors of one of the most essential services in the world.

At first glance, the lack of widespread disruption may have given the impression that the attack was relatively minor. In reality, its depth and potential ramifications were anything but.

Using phishing campaigns, APT28 gained access to the networks controlling the power distribution systems - a breach that exposed significant vulnerabilities in the infrastructure’s industrial control systems (ICS). These systems, responsible for regulating everything from power stations to transformers, are crucial for the seamless operation of the energy grid. Once inside, the hackers had the ability to disrupt power flow or manipulate data without detection. The malware they deployed blended in with normal system activity, making it difficult for security systems to detect its presence.

This attack wasn’t about causing immediate chaos; it was about creating long-term vulnerabilities in a system integral to the functioning of entire nations. The subtlety with which APT28 worked, staying inside the system undetected for months, signals the growing sophistication of cybercriminals, who are no longer content with causing disruptions - they now aim to embed themselves within the very fabric of critical infrastructure. The attack made clear the importance of robust defences and the need for constant vigilance in securing the systems that power our world.

Moving from disruption to espionage, the breach by APT10 into a global health organisation’s data system was a textbook example of cyberattacks intended not for chaos, but for long-term strategic advantage. The attack, which targeted vaccine research and development data, brought to light the high-stakes nature of cyber espionage - particularly in sectors where the information has the power to shape global economic and health outcomes.

While the public may have initially focused on the breach’s surface - stolen data - it was the strategic implications that should have raised the greatest alarm.

APT10 used sophisticated custom malware and persistence techniques, the hallmarks of an advanced persistent threat (APT), to embed itself within the organisation’s network. These tools allowed the group to remain undetected for months, quietly siphoning off sensitive data without causing any immediate disruption to the organisation’s operations. The stolen information included not just basic research, but details on vaccine formulations and production processes - data that could give competitors a major edge in the pharmaceutical race.

The stealth with which APT10 operated speaks volumes about the group’s technical capabilities. Rather than triggering alarms with direct attacks, the hackers infiltrated the system using highly evasive malware and fileless exploits, which ran in memory and left few traces on the servers’ disks.

What made this attack particularly troubling was its far-reaching impact on global health security. The implications of having highly sensitive health data stolen - especially during a pandemic - are enormous, extending beyond simple corporate espionage. By obtaining access to this information, APT10 not only violated the privacy of the organisation, but also gained valuable insights into global health strategies that could affect everything from vaccine production to international trade in medical supplies.

The breach underscored just how vulnerable the healthcare sector is to cyber threats, and how these attacks can go beyond economic damage to disrupt global health responses.

While APT10 was infiltrating the health sector, another set of attacks - this time involving Russian hacker groups - focused on disrupting communications. The breach of European telecommunications networks demonstrated the wide-ranging effects of cyberattacks on industries we often take for granted. These networks are the veins through which digital communication flows, and their disruption can have cascading effects across nearly every sector of society.

Unlike attacks that aim to cause physical damage or large-scale disruptions, this particular operation had a more subtle and insidious objective: to remain hidden while quietly compromising critical communication channels. The hackers infiltrated telecom infrastructure and maintained a long-term presence, effectively becoming invisible while gaining full access to vital network traffic.

The malware used was a mixture of customised backdoors and advanced rootkits, designed to bypass encryption and traditional security measures, allowing the attackers to monitor communications in real time without alerting anyone to their presence.

The real danger of this attack lies not in the immediate consequences, but in the potential for manipulation and control. With access to telecommunication networks, hackers can alter or block communications, inject false information, or monitor sensitive conversations without anyone knowing. This makes the telecommunications sector a prime target for cyberattacks, as control over communication networks can yield both strategic and economic advantages.

The scale of the intrusion underscores the vulnerability of even the most highly regulated and security-conscious industries to skilled attackers operating with patience and precision.

As the complexity of these cyberattacks continues to grow, so too does the challenge of defending against them. The evolving nature of digital threats requires a dynamic approach to cybersecurity - one that can adapt to new attack strategies and incorporate emerging technologies like artificial intelligence (AI) and machine learning.

The attacks discussed above are not isolated cases, but part of a larger trend in which cybercriminals are leveraging advanced tactics to infiltrate and persist within critical infrastructure.

While new defence technologies are emerging, many organisations still rely on outdated systems that leave them vulnerable to sophisticated attacks. AI-powered defence mechanisms are becoming more common, offering the potential for real-time threat detection and response. However, these systems are only as effective as the data and algorithms they rely on - and cybercriminals are equally quick to adopt new technologies to bypass security measures.

The reality is that the threat landscape is evolving faster than many organisations can adapt. Vulnerabilities in legacy systems, insufficient training, and inconsistent cybersecurity protocols across regions and industries have created a perfect storm for cyberattacks. In response, it’s crucial for cybersecurity strategies to evolve - focusing not just on defence, but on predictive and adaptive technologies that can outpace cybercriminals’ rapidly advancing methods.

Cyberattacks on critical infrastructure are no longer isolated incidents - they are part of an ongoing, complex battle being fought in the digital realm. As hacker groups based in Russia and China continue to refine their methods, defending against these threats becomes increasingly difficult.

Imagine the world’s best football clubs scrambling to sign a once-in-a-generation player. Now picture the stakes being far higher, the teams being the biggest names in tech, and the prize not being goals, but artificial general intelligence. That’s the scenario unfolding in Silicon Valley, as Meta aggressively redraws the battlefield in what’s being dubbed the “tech talent war”. With staggering offers of over $100 million to star researchers and the creation of a new “superintelligence” division under Mark Zuckerberg himself, Meta is not just playing to compete - it’s playing to dominate.

This is not merely a hiring spree. It signals a seismic shift in how tech giants perceive the race for AI supremacy. Behind the headlines lie deeper implications for innovation, company culture, and the delicate balance of power within the tech industry. This article unpacks Meta’s bold moves, the motivations driving top talent, and the ripple effects reshaping the AI landscape.

At the centre of the current storm is Meta, which recently launched a new AI division dedicated to building artificial general intelligence (AGI). Unlike previous teams working quietly in the background, this one comes with fanfare, and direct oversight from CEO Mark Zuckerberg. His personal involvement signals just how high the stakes have become. Meta’s stated ambition is to develop superintelligence, and it’s making it abundantly clear that the road to get there starts with talent.

But the real headline lies in the way Meta is recruiting. By offering nine-figure compensation packages - yes, over $100 million - to lure top minds from across the industry, Meta has drawn a bold line in the sand. Figures such as Ruoming Pang, who led Apple’s foundation models team, and Alexandr Wang, the CEO of Scale AI, have reportedly been courted with such offers. These are not incremental moves; they’re equivalent to signing star players to exclusive contracts, with the intent of tipping the balance of power.

Meta’s offensive is also a declaration that the age of collaborative AI development may be giving way to an era of hyper-competitive, closed-door arms races. By throwing money and resources into a handful of individuals, Meta is reshaping the playing field and creating a clear “front line” in the tech talent war - where prestige, compute access, and executive backing all converge.

While the numbers are eye-watering, not everyone is buying in solely for the money. In interviews and internal commentary, Zuckerberg has emphasised that what truly draws elite researchers to Meta is not just the pay, but the tools. With its recent buildout of infrastructure, Meta now offers access to some of the most powerful computing clusters in the world. For AI researchers, especially those working on large-scale models, this access can be the difference between theoretical breakthroughs and real-world progress.

In practice, this means working at Meta offers not just compensation but unparalleled experimentation speed. When other firms throttle compute time or limit project scope due to resource constraints, Meta offers freedom, backed by billions. This is no small incentive for researchers accustomed to the bottlenecks of academia or smaller labs.

Even so, there remains a growing tension between ambition and ethos. Notable figures, including Benjamin Mann of Anthropic, have declined Meta’s overtures, stating a preference for mission-driven work focused on safe and interpretable AI. Their decisions underscore a critical divide: while compute and capital matter, so too does trust in leadership, alignment with personal values, and confidence in the long-term vision.

In this sense, the tech talent war is not purely transactional; it’s philosophical. Some are motivated by scale and speed, others by ethics and stewardship. And the choices researchers make today may influence not just where AI is developed, but how responsibly it evolves.

The Ripple Effects - Cultural Shocks and Industry Responses

Inside Meta, the hiring spree has not gone unnoticed by existing staff. Some employees, especially those grinding in less glamorous roles, have voiced quiet frustration. Michael Dell warned that such stark pay disparities could lead to a line of disgruntled employees outside Zuckerberg’s office. The optics of rewarding a select few while others carry the day-to-day burdens can be toxic, no matter how visionary the mission.

Other tech giants have been forced to respond. Google has reportedly increased access to compute for its AI teams, while trying to maintain internal harmony. OpenAI, meanwhile, is said to have issued retention memos to prevent a brain drain. Even smaller start-ups, often built on idealism and innovation, now find themselves fighting to retain talent against offers they can’t dream of matching.

Sam Altman once warned of “missionaries versus mercenaries” in tech. Meta’s current wave of hiring has reignited that conversation, posing a tough question: can companies build world-changing technology if the driving force is financial gain?

The biggest concern isn’t just who gets hired; it’s who gets left behind. There are fewer than 1,000 researchers globally with the skills needed to advance frontier AI models. If the majority end up in just two or three companies, the risks are significant: concentration of power, slower innovation, and a weaker start-up ecosystem.

This talent consolidation could also impact the pipeline. With attention focused on securing superstars, early-career researchers may struggle to find mentorship and opportunity. It’s a quiet, long-term consequence of short-term ambition. Some start-ups have already begun shifting away from traditional recruitment, relying instead on hackathons or internal training to cultivate talent.

And there’s another subtle risk - burnout. Researchers being offered tens of millions are not just expected to produce; they’re expected to produce fast, with precision, and often under pressure. The mental toll, especially in an industry still learning how to handle ethical responsibility, should not be underestimated.

Meta has made a defining move in the tech talent war - one that will be studied, applauded, and critiqued for years to come. Its investment in compute, aggressive hiring, and top-down focus on AI marks a turning point in how the industry approaches innovation. But whether this results in sustainable, meaningful progress or a frayed, mercenary workforce remains to be seen.

As AI continues to mature, the question isn’t just who wins the war for talent; it’s whether the war itself is the right way to build the future. The next chapter will depend on whether companies can align resources with values, and whether the brightest minds in tech choose speed and scale - or vision and purpose.

Across today’s shifting cybersecurity terrain, the distance between perceived resilience and actual preparedness is often wider than most organisations realise. For years, enterprises have relied on compliance audits, risk scores, and simulations that create the appearance of safety without proving it in practice. This gap between expectation and reality is where the greatest danger lies - not in the absence of tools, but in the absence of certainty. Horizon3.ai has positioned itself to close that gap entirely, offering a methodology that replaces hypothetical reassurance with demonstrable proof.

The company’s approach is rooted in a central question: What would a real attacker do here? By delivering evidence-based validation through its flagship platform, NodeZero®, Horizon3.ai shows exactly what can be breached today, how it would be done, and what is at stake if nothing changes. In doing so, it reframes the conversation for security leaders, boards, and regulators, providing not only the urgency to act but a clear path forward. What follows is the story of how Horizon3.ai is turning proof into the new measure of trust - and why that shift matters for every organisation operating in an increasingly contested digital space.

Horizon3.ai’s philosophy is anchored in three principles: offence informs defence, proof over assumption, and autonomy at scale. These are not abstract values; they guide exactly how the company’s technology works. NodeZero thinks like an attacker. It looks for weak points - such as poor system configurations, exposed passwords, or gaps left by oversight - and links them together into a chain that could be used to break into critical systems. Every result is backed by evidence showing exactly how the breach could happen.
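
To make the idea of chaining concrete, the sketch below models a network as a graph in which each edge is one weakness that lets an attacker move from one system to the next, then runs a breadth-first search for the shortest chain from the internet to a critical system. It is a minimal illustration built on a hand-written, hypothetical network (every host name and weakness is made up) and is not a description of how NodeZero works internally.

```python
from collections import deque

# Hypothetical network: each edge is one attacker step enabled by a specific weakness.
# Host names and weaknesses are illustrative only.
EDGES = {
    "internet": [("vpn-gw", "default VPN credentials")],
    "vpn-gw": [("file-server", "SMB share readable by every user")],
    "file-server": [("svc-account", "password stored in a script on the share")],
    "svc-account": [("domain-controller", "service account has domain admin rights")],
    "workstation-12": [("file-server", "reused local admin password")],
}


def shortest_attack_path(start, target):
    """Breadth-first search for the chain with the fewest steps from start to target."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, steps = queue.popleft()
        if node == target:
            return steps
        for nxt, weakness in EDGES.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, steps + [(node, nxt, weakness)]))
    return None  # no chain of known weaknesses reaches the target


path = shortest_attack_path("internet", "domain-controller")
for src, dst, weakness in path:
    print(f"{src} -> {dst}: {weakness}")
```

Each printed step pairs a hop with the weakness that enables it, which mirrors the article’s point: individually modest issues become serious once they are shown to connect.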

This is a shift from simply “knowing about a problem” to seeing how it could directly lead to stolen data, disrupted operations, or financial loss. When a vulnerability is illustrated with proof, it becomes harder to ignore. That is why Horizon3.ai’s approach helps decision-makers act faster and more decisively than they could if they were working from a list of generic risks.

Large organisations rarely have simple networks. Their operations are spread across physical data centres, multiple cloud providers, and countless connected devices. Testing these environments effectively can be time-consuming and disruptive if done the traditional way. NodeZero addresses this challenge with full autonomy. It requires no special agents installed on devices and no complex manual set-up, yet it can examine more than 100,000 assets in a single run.

The speed and repeatability of this process mean that organisations can test, fix weaknesses, and retest - sometimes within the same day. This is not just faster; it changes how security teams work, turning testing from a once-a-year event into an ongoing process. Horizon3.ai also adds another layer with NodeZero Tripwires™, small planted markers that detect attacker-like activity in real time, alerting defenders the moment a threat begins to take shape.
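
Planted markers of this kind follow the general honeytoken idea: place credentials or files that no legitimate user should ever touch, and treat any use of them as a high-confidence warning sign. A minimal Python sketch of that general technique, assuming a hypothetical set of decoy account names and a simplified authentication log, might look like this; it does not reflect how NodeZero Tripwires™ are actually implemented.

```python
# Hypothetical decoy accounts planted where only an intruder would find them;
# no legitimate user or process should ever authenticate with these names.
DECOY_ACCOUNTS = {"svc_backup_old", "admin_legacy"}


def tripwire_alerts(auth_events):
    """Return one alert string for every authentication event that uses a decoy account."""
    alerts = []
    for event in auth_events:
        if event["user"] in DECOY_ACCOUNTS:
            alerts.append(f"ALERT: decoy account '{event['user']}' used from {event['source']}")
    return alerts


events = [
    {"user": "alice", "source": "10.0.0.5"},
    {"user": "admin_legacy", "source": "10.0.3.77"},
]
for alert in tripwire_alerts(events):
    print(alert)
```

Because normal activity never touches the decoys, this kind of check produces very few false positives, which is what makes it useful as an early signal.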

Meeting compliance standards is important, but it does not guarantee that systems are safe from actual attacks. Horizon3.ai’s 2025 launch of Agentic Risk-Based Vulnerability Management reflects this understanding. The goal is to focus on the threats that would cause the greatest harm to the organisation - such as an attack on financial systems or theft of sensitive customer data - rather than on the vulnerabilities that simply appear most severe on paper.
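
The difference between ranking by severity on paper and ranking by business risk can be shown with a toy scoring rule. In the sketch below, every finding, its CVSS score, the 1-5 impact rating, and the 0.1 weight applied to unproven findings are hypothetical; the point is only that a proven path to a high-value system can outrank a higher-CVSS issue that leads nowhere. This is not Horizon3.ai’s scoring model.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    cvss: float           # severity "on paper", 0-10
    exploitable: bool     # was a working attack path actually demonstrated?
    business_impact: int  # hypothetical 1-5 rating of the asset the finding reaches


findings = [
    Finding("critical CVE on isolated test VM", 9.8, False, 1),
    Finding("weak password on finance DB admin", 6.5, True, 5),
    Finding("outdated TLS on intranet wiki", 5.3, True, 2),
]


def risk_score(f: Finding) -> float:
    # Unproven findings are down-weighted rather than ignored (the 0.1 factor is an assumption).
    exploit_weight = 1.0 if f.exploitable else 0.1
    return exploit_weight * f.business_impact * f.cvss


by_cvss = sorted(findings, key=lambda f: f.cvss, reverse=True)
by_risk = sorted(findings, key=risk_score, reverse=True)
print("By CVSS:", [f.name for f in by_cvss])
print("By risk:", [f.name for f in by_risk])
```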

Two key features make this approach highly practical. High-Value Targeting finds the systems and information that matter most, even if they are missing from official records. Advanced Data Pilfering goes further by showing exactly what data could be stolen and how an attacker might use it. Another addition, the MCP Server, which connects NodeZero to AI assistants through the Model Context Protocol, allows security teams and executives to interact with NodeZero in plain language, making it far easier for non-technical decision-makers to understand and respond to real risks.

The market has taken notice. In June 2025, Horizon3.ai secured $100 million in Series D funding, led by New Enterprise Associates, to accelerate its global growth and strengthen public sector offerings. The company also achieved FedRAMP® High authorisation, opening the door for deployments in U.S. federal agencies and critical infrastructure.

The numbers tell their own story: nearly 300 new customers in 2024, 101 per cent year-on-year revenue growth, and more than 150,000 autonomous penetration tests run to date. Industry recognition has followed, including consecutive listings in the Fortune Cyber 60, a No. 1 ranking in security and No. 121 overall on the 2025 Inc. 5000 list, and awards from Tech Ascension and Cyber Defense Magazine. The newest platform capabilities - such as Threat Actor Mapping, which links vulnerabilities to known hacker groups; Endpoint Security Effectiveness, which ensures organisations get the most out of their endpoint detection and response (EDR) investments; and the Vulnerability Management Hub, which tracks and verifies remediation - keep Horizon3.ai’s customers ahead of evolving threats.

The real breakthrough in Horizon3.ai’s model is not simply in its technology, but in the shift it represents for how organisations think about security. When defence is validated through real-world proof rather than theoretical metrics, it changes the culture of decision-making. Security teams no longer have to lobby for resources based on probabilities; they can point to tangible evidence of risk and the precise impact of inaction. That evidence travels beyond the security operations centre - it shapes boardroom strategy, influences investment priorities, and recalibrates how an organisation measures success.

In the coming years, as digital infrastructure grows more complex and adversaries more resourceful, this capacity for evidence-based validation will become the benchmark for serious security. Horizon3.ai has anticipated that future, creating not only a platform but a discipline - one where assurance is earned in the same way it is tested in the real world. For enterprises navigating the shifting terrain of global technology, the ability to know for certain may prove to be the most valuable capability of all.

For further details, please visit the website: www.horizon3.ai

Ghana’s global reputation as a top cocoa exporter is built on generations of smallholder farmers, fertile soil, and favourable weather. But that legacy is under threat. With climate change fuelling erratic rainfall, rising temperatures, and new plant diseases, cocoa yields have become unpredictable. Add in outdated farming methods and an ageing tree stock, and the country’s cocoa economy is facing a defining moment.

Rather than rely on tradition alone, Ghana is turning to technology. A wave of digital tools, from satellite-guided precision farming to blockchain-enabled traceability systems, is now sweeping across the sector. These interventions are not about modernity for its own sake; they’re about protecting livelihoods, retaining market access, and securing the future of a crop that supports over 800,000 farmers.

Cocoa is a crop with tight environmental demands. It thrives in humid conditions with consistent rainfall and rich, shaded soil. But in Ghana’s cocoa belt, rainfall patterns have become less reliable, and average temperatures have climbed by nearly 1.5°C in recent decades. With that shift has come an uptick in black pod disease, swollen shoot virus, and pest infestations, each capable of decimating yields.

Compounding the issue is the age of Ghana’s cocoa trees. Many plantations are more than 30 years old, well past their productive peak. These trees produce fewer pods and are more susceptible to disease. Meanwhile, younger generations have been leaving rural areas for cities, leading to declining farm labour and a slow erosion of local knowledge.

As a result, yields in Ghana have stagnated at around 300 kilograms per hectare, less than half the global average. For a sector that contributes nearly 20% of Ghana’s export earnings, this is not just an agricultural problem; it is a national economic one.

Enter precision agriculture. Leveraging NASA’s SERVIR programme, GIS mapping tools, and agri-tech platforms like AgriPredict and CropIn, Ghanaian cooperatives are now integrating multispectral satellite imagery with geofenced farm data. These tools combine NDVI (Normalised Difference Vegetation Index) analytics with drone-captured hyperspectral imagery to monitor crop vitality at a granular level.
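
NDVI itself is a simple ratio of near-infrared (NIR) and red reflectance. Healthy vegetation reflects strongly in the near infrared and absorbs red light, so NDVI = (NIR - Red) / (NIR + Red) rises with canopy vigour and ranges from -1 to 1. The sketch below computes it per pixel in plain Python; the three reflectance values are illustrative, not real survey data, and real pipelines would work on full raster bands rather than short lists.

```python
def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red), computed pixel by pixel; values lie in [-1, 1]."""
    result = []
    for n, r in zip(nir, red):
        total = n + r
        # Guard against division by zero on pixels with no reflectance (e.g. no-data pixels).
        result.append((n - r) / total if total else 0.0)
    return result


# Illustrative surface reflectance for three pixels: dense canopy, sparse canopy, bare soil.
nir = [0.50, 0.30, 0.20]
red = [0.08, 0.10, 0.18]
print([round(v, 2) for v in ndvi(nir, red)])
```

The first pixel scores highest and the third is close to zero, which is the kind of contrast that lets analysts spot thinning or stressed canopy from above.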

Farmers in the Ashanti and Eastern Regions are also using mobile apps that issue early warnings about disease outbreaks. These apps rely on AI-driven anomaly detection: machine learning models trained on region-specific crop pathology datasets analyse imagery from multiple field sensors alongside interpolated weather data. This allows for pre-emptive spraying, saving crops before they’re lost. In pilot projects, farms using such apps recorded yield improvements of 15-20% within a single season.
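
Production systems use trained machine learning models, but the underlying early-warning idea can be illustrated with a much simpler statistical stand-in: compare a field’s latest NDVI reading with its own recent baseline and raise a flag when it drops sharply. The sketch below uses a z-score test with an assumed threshold of two standard deviations; the readings are hypothetical, and this is not the method used by any of the apps mentioned above.

```python
from statistics import mean, stdev


def early_warning(history, latest, threshold=2.0):
    """Flag a field if its latest NDVI is more than `threshold` standard deviations below its baseline."""
    baseline = mean(history)
    spread = stdev(history)  # sample standard deviation; needs at least two readings
    if spread == 0:
        return latest < baseline
    z = (latest - baseline) / spread
    return z < -threshold


# Hypothetical weekly NDVI readings for one farm plot.
history = [0.71, 0.73, 0.70, 0.72, 0.74, 0.71]
print(early_warning(history, 0.72))  # normal reading
print(early_warning(history, 0.60))  # sharp drop worth inspecting
```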

In areas where resources allow, drone-based spraying and replanting with hybrid, pest-resistant cocoa trees are becoming more common. Meanwhile, LoRaWAN-enabled IoT sensor networks, such as those developed by uFarmTech and SmartFarm, are deployed at root and canopy level to collect granular data on soil pH, electrical conductivity (EC), micro-humidity, and root-zone temperature. These readings are synchronised via the MQTT messaging protocol to mobile dashboards that agronomists can access in the field.
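
LoRaWAN frames are small, so field sensors typically send compact binary payloads that a gateway decodes before forwarding them, for example over MQTT. The sketch below assumes a hypothetical 8-byte layout for the four soil readings mentioned above and a hypothetical `farms/<device>/soil` topic name. It uses only Python’s standard library and stops short of publishing to an MQTT broker; the layout is not taken from uFarmTech, SmartFarm, or any other vendor.

```python
import json
import struct

# Hypothetical 8-byte payload layout (big-endian), sized for LoRaWAN's small frames:
#   pH x100 (uint16), EC in µS/cm (uint16), humidity % x10 (uint16), root-zone temp °C x100 (int16)
PAYLOAD_FORMAT = ">HHHh"


def encode_reading(ph, ec_us_cm, humidity_pct, temp_c):
    """Pack one sensor reading into 8 bytes on the field node."""
    return struct.pack(PAYLOAD_FORMAT, round(ph * 100), round(ec_us_cm),
                       round(humidity_pct * 10), round(temp_c * 100))


def decode_to_mqtt(device_id, payload):
    """Unpack at the gateway and build a hypothetical MQTT topic and JSON message for dashboards."""
    ph, ec, hum, temp = struct.unpack(PAYLOAD_FORMAT, payload)
    topic = f"farms/{device_id}/soil"
    message = json.dumps({"ph": ph / 100, "ec_us_cm": ec,
                          "humidity_pct": hum / 10, "root_temp_c": temp / 100})
    return topic, message


frame = encode_reading(ph=5.8, ec_us_cm=420, humidity_pct=78.5, temp_c=26.4)
print(len(frame), decode_to_mqtt("node-017", frame))
```

Scaling values to integers keeps the radio payload tiny; the gateway restores human-readable units before the data reaches a dashboard.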

The common thread is accessibility. Rather than high-cost solutions for elite farmers, most of these technologies are designed to be lightweight, affordable, and mobile-first; tailored to the needs of smallholders.

The challenges aren’t only on the farm. Global buyers, particularly in Europe and North America, are under increasing pressure to ensure that their cocoa is ethically sourced, environmentally sustainable, and traceable from farm to factory. Enter blockchain.

Companies like Cargill and ECOM are piloting distributed ledger technology (DLT) platforms such as IBM Food Trust and Hyperledger Fabric, which assign tamper-evident, QR-coded smart tags to each cocoa batch, linking origin data, geolocation metadata, and quality metrics to a decentralised registry. This allows cocoa’s journey, from farmer to cooperative to exporter, to be verified at every stage. Ghana’s Cocoa Board (COCOBOD) has also rolled out “CocoaTrace”, a national traceability system aligned with upcoming EU regulations on deforestation-free imports.
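
What makes such ledgers useful for traceability is that each record is cryptographically linked to the one before it, so a retroactive edit becomes detectable. The simplified sketch below shows that hash-chaining idea with Python’s `hashlib`. It is not the API of IBM Food Trust or Hyperledger Fabric, and the batch ID and field names are hypothetical; in a real network, copies held by many parties stop a single actor from quietly recomputing every hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def entry_hash(event, prev_hash):
    """SHA-256 over the event data plus the previous record's hash."""
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def add_event(chain, event):
    """Append a custody event linked to the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev": prev_hash, "hash": entry_hash(event, prev_hash)})


def verify(chain):
    """Recompute every link; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != entry_hash(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


batch = []
add_event(batch, {"stage": "farmer", "batch_id": "GH-0001", "weight_kg": 64})
add_event(batch, {"stage": "cooperative", "batch_id": "GH-0001", "weight_kg": 64})
add_event(batch, {"stage": "exporter", "batch_id": "GH-0001", "weight_kg": 64})
print(verify(batch))                    # intact chain
batch[0]["event"]["weight_kg"] = 70     # tamper with the farm-gate weight
print(verify(batch))                    # tampering is detected
```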

One of the most tangible benefits is financial transparency. Farmers can now receive real-time mobile disbursements through USSD-integrated wallet systems such as MTN MoMo and Zeepay, triggered via smart contracts once quality inspection data is verified on-chain. This reduces reliance on middlemen and cuts delays in accessing income. In some districts, digital payment systems have been paired with reward schemes, offering bonuses for quality beans or eco-friendly farming practices.
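
The release condition that such a smart contract encodes can be expressed as an ordinary function. The Python sketch below is a hypothetical rule, not COCOBOD’s or any platform’s actual contract: it pays only for an accepted grade, holds payment if verified and declared weights differ by more than an assumed 2%, and adds an assumed bonus for top-grade beans. Prices are placeholders, and the mobile-money disbursement step itself is left out.

```python
from dataclasses import dataclass


@dataclass
class Inspection:
    batch_id: str
    grade: str                 # hypothetical grading scheme: "A", "B", or "reject"
    verified_weight_kg: float
    declared_weight_kg: float


PRICE_PER_KG = {"A": 1.00, "B": 0.90}  # placeholder units, not real prices
QUALITY_BONUS = {"A": 0.05}            # hypothetical per-kg bonus for top-grade beans
WEIGHT_TOLERANCE = 0.02                # assumed 2% allowed gap between declared and verified weight


def payment_due(insp: Inspection) -> float:
    """Return the amount to release, or 0.0 if the batch fails the release conditions."""
    if insp.grade not in PRICE_PER_KG or insp.declared_weight_kg <= 0:
        return 0.0
    mismatch = abs(insp.verified_weight_kg - insp.declared_weight_kg) / insp.declared_weight_kg
    if mismatch > WEIGHT_TOLERANCE:
        return 0.0  # hold payment for manual review instead of paying automatically
    rate = PRICE_PER_KG[insp.grade] + QUALITY_BONUS.get(insp.grade, 0.0)
    return round(rate * insp.verified_weight_kg, 2)


print(payment_due(Inspection("GH-0001", "A", 63.8, 64.0)))  # within tolerance, bonus applied
print(payment_due(Inspection("GH-0002", "B", 58.0, 64.0)))  # weight gap too large, payment held
```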

These systems also reduce fraud. In pilot zones, reports of pod tampering and weight manipulation have dropped by 30%. For buyers and consumers, that builds trust. For farmers, it means fairer returns and stronger relationships with international markets.

The early results are encouraging. In digitally enabled districts, yields have improved, payment delays have shrunk, and extension services have become more responsive. There are even signs of a shift in youth attitudes, with some younger Ghanaians viewing tech-enabled farming as a viable future.

Still, the road ahead is not without obstacles. Internet connectivity remains patchy in many rural areas. Smartphone penetration, though rising, is uneven. Many farmers are not digitally literate, requiring sustained training and support. The upfront cost of drones, sensors, and hybrid seedlings can be prohibitive for individual farmers, calling for continued public-private subsidies.

In response, Ghana is investing in regional innovation hubs and tech centres, such as those operated by the Cocoa Research Institute. These centres provide hands-on training, tech demonstrations, and maintenance services to cooperatives and local officials. There’s also growing interest from international development partners to scale these efforts across West Africa.

What’s emerging is a new kind of cocoa economy: one where traditional knowledge is fused with data analytics, where manual inspections are supported by AI, and where smallholders are not just producers, but active participants in a digitally connected value chain.

Ghana’s cocoa industry is not standing still. Faced with the twin pressures of climate change and global market scrutiny, it is embracing digital tools as a lifeline and a lever. From satellite-guided farming to blockchain-backed trade, the sector is undergoing a quiet but profound transformation.

This isn’t about making farming more modern for the sake of appearances. It’s about protecting incomes, preserving soil, and proving that sustainable agriculture can be both smart and inclusive. The question now is whether this technological leap can scale fast enough, and wide enough, to secure Ghana’s place in the future of global cocoa.

If it can, the country won’t just remain a top exporter. It will become a model of how emerging economies can blend tradition and technology to stay rooted in the world’s most vital supply chains.

Chinese automaker BYD has quietly begun rolling out a smartphone-to-car integration feature across its full vehicle line-up, a move that sets it apart from rivals. Compatible with major brands such as Huawei, Xiaomi, OPPO, Vivo, Honor, realme, OnePlus, and iQOO, the system connects your phone to the vehicle seamlessly via platforms like Carlink, Huawei HiCar, and HONOR CarLink. Drivers can now enjoy app mirroring, navigation hand-off, media control, and voice interaction through their handset, all tied into BYD’s in-car OS, DiLink.

This move transforms the smartphone into an extension of the cockpit. In this article, we unpack how the system works technically, from APIs to pairing protocols; why it matters for user experience, data access, and brand loyalty; and what it signals for global tech regulation, privacy, and the future of the in-car ecosystem.

BYD’s cross-device system is anchored in its proprietary DiLink operating system, a Snapdragon-powered infotainment platform designed for seamless hardware and software convergence. Unlike traditional Android Auto or Apple CarPlay setups, BYD’s system natively integrates multiple Chinese smartphone ecosystems, including Huawei’s HarmonyOS via HiCar, OPPO’s ColorOS, and Xiaomi’s MIUI, using protocols such as Carlink and HONOR CarLink. These integrations go beyond app mirroring to allow deep synchronisation, enabling full-scale personalisation and real-time communication between the car and the user’s device.

The pairing process is almost instantaneous. Once a compatible smartphone enters the vehicle, proximity sensors activate Bluetooth Low Energy (BLE) pairing, initiating automatic syncing. From there, the driver’s seat, climate controls, ambient lighting, and media preferences adjust to the user’s saved profile, stored in both the car’s internal memory and the phone’s encrypted identity token. This ensures continuity whether the user switches cars or hands off driving duties. All relevant apps, from navigation to calendar and messaging, are rendered natively on the in-car display with gesture and voice control functionality.
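The proximity-triggered profile restore described above can be illustrated with a minimal sketch. It assumes that "proximity" is approximated by BLE signal strength (RSSI) crossing a threshold and that profiles are cached in the car keyed by a user ID; the class names, fields, and the -60 dBm threshold are all hypothetical and do not describe BYD’s actual DiLink implementation.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical threshold: BLE signal strength (dBm) above which the phone is
# treated as being inside the cabin. Real systems would tune this per vehicle.
CABIN_RSSI_THRESHOLD = -60


@dataclass
class DriverProfile:
    user_id: str
    seat_position: int      # arbitrary units, e.g. seat motor steps
    cabin_temp_c: float
    ambient_colour: str
    media_source: str


@dataclass
class Vehicle:
    # Profiles cached in the car's internal memory, keyed by user ID (hypothetical).
    stored_profiles: dict = field(default_factory=dict)
    active_profile: Optional[DriverProfile] = None

    def on_ble_advertisement(self, user_id: str, rssi: int) -> bool:
        """Apply a saved profile when a known phone comes within cabin range.

        Returns True if a profile was applied, False otherwise.
        """
        if rssi < CABIN_RSSI_THRESHOLD:
            return False  # phone detected but too far away (e.g. still outside the car)
        profile = self.stored_profiles.get(user_id)
        if profile is None:
            return False  # unknown phone: a full first-time pairing flow would start here
        self.active_profile = profile  # seat, climate, lighting, media follow this profile
        return True
```

In use, a phone seen at -80 dBm is ignored, while the same phone at -45 dBm triggers the stored profile. Keeping the threshold check separate from the profile lookup means an unknown handset never overrides the active driver’s settings.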

Importantly, BYD’s architecture supports over-the-air (OTA) updates, allowing for incremental improvements to compatibility, interface design, and new app integrations. This modular flexibility gives the automaker agility, both to expand its partnerships with smartphone vendors and to respond rapidly to changes in mobile operating systems.
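One way to picture how OTA updates can widen compatibility incrementally is a compatibility table that the car consults before enabling a protocol, with each OTA update shipping a small delta rather than a full replacement. This is a hypothetical sketch: the table structure, function names, and version numbers are placeholders, not BYD’s real update format.

```python
# Hypothetical compatibility table: protocol name -> minimum supported
# phone-side protocol version. Version numbers are placeholders.
COMPAT_TABLE_V1 = {"HiCar": (1, 0), "Carlink": (2, 1)}


def is_supported(table: dict, protocol: str, phone_version: tuple) -> bool:
    """Return True if the phone's protocol version meets the table's minimum."""
    minimum = table.get(protocol)
    # Tuples compare element by element, so (2, 1) >= (2, 0) is True.
    return minimum is not None and phone_version >= minimum


def apply_ota_update(table: dict, delta: dict) -> dict:
    """Merge an incremental OTA delta into a copy of the table.

    New protocols are added and existing minimums overwritten; the original
    table is left untouched so a failed update can be rolled back.
    """
    updated = dict(table)
    updated.update(delta)
    return updated
```

For example, `apply_ota_update(COMPAT_TABLE_V1, {"HONOR CarLink": (1, 0)})` would add support for a new protocol without touching the existing entries.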

This high-level integration reflects a deeper strategic move. By aligning the car experience tightly with a user’s phone ecosystem, BYD is reducing the friction between devices and turning the vehicle into a dynamic extension of the user’s digital life. Tasks that once required toggling between screens, like inputting a navigation destination or responding to messages, can now be done through a unified interface, leveraging voice assistants such as Huawei’s Celia or OPPO’s Breeno for hands-free control.

Beyond convenience, the ecosystem approach allows BYD to collect a rich stream of behavioural data. This includes route history, voice command patterns, entertainment preferences, and even biometric interactions with the phone (e.g. fingerprint-locked profiles). The company’s back-end AI system, known as Xuanji, processes this data to personalise vehicle behaviour in real time - adjusting suspension, acceleration sensitivity, or music mood based on contextual signals like time of day or weather.
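How Xuanji actually models context is not public in this article, so the sketch below uses a deliberately simple rule-based stand-in to show the idea of mapping contextual signals (time of day, weather) plus a stored driver preference to vehicle settings. All names, thresholds, and values are hypothetical; a production system would more likely use learned models than hand-written rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    hour: int      # 0-23, local time
    weather: str   # e.g. "rain", "clear", "snow"


@dataclass(frozen=True)
class VehicleSettings:
    suspension: str              # "comfort" or "sport"
    throttle_sensitivity: float  # 0.0 (gentle) to 1.0 (sharp)
    music_mood: str


def personalise(ctx: Context, prefers_sport: bool) -> VehicleSettings:
    """Hypothetical rule set mapping contextual signals to vehicle settings."""
    suspension = "sport" if prefers_sport else "comfort"
    throttle = 0.8 if prefers_sport else 0.5
    # Slippery roads: soften suspension and throttle regardless of preference.
    if ctx.weather in ("rain", "snow"):
        suspension = "comfort"
        throttle = min(throttle, 0.3)
    # Late night or early morning: calmer music.
    mood = "calm" if ctx.hour >= 22 or ctx.hour < 6 else "upbeat"
    return VehicleSettings(suspension, throttle, mood)
```

Note that safety-related rules (the weather check) are applied after the preference, so they override it rather than the other way round.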

Moreover, brand loyalty is baked into the system design. A driver whose phone pairs seamlessly with a BYD is more likely to buy another BYD, or to stick with compatible handsets. It’s a lock-in effect similar to Apple’s ecosystem, where leaving means losing cross-device continuity. This gives BYD a powerful edge in an industry where differentiating beyond battery size and range is becoming more difficult.

While BYD’s approach is already gaining traction in China, its international roll-out raises critical questions. In Western markets, the dominant smartphone-car interface model remains based on Apple CarPlay and Android Auto, platforms that offer a degree of familiarity and ease but are limited to surface-level functionality. These systems essentially “mirror” a phone’s display and offer limited access to hardware-level features or true cross-device synchronisation.

BYD’s model, by contrast, involves a deeper data handshake. The vehicle accesses not just apps but also usage habits, geolocation metadata, and potentially biometric tokens, all to deliver a truly personalised driving experience. This could be compelling in markets that favour convenience and automation, such as the Middle East or Southeast Asia, where app ecosystems dominate daily life and privacy regulations are less strict.

However, in regions like the EU and North America, where data protection laws such as GDPR and CCPA are rigorously enforced, the BYD model might raise compliance flags. Regulators are likely to scrutinise how much data flows from phone to vehicle, whether consent is explicit, and how such data is stored and used. For BYD to succeed globally, it may need to build modularity into its system, offering full integration in permissive markets and a privacy-compliant lite version elsewhere.
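The modular approach suggested above, full integration in some markets and a privacy-compliant lite version elsewhere, could be expressed as region-based data-sharing tiers combined with explicit user consent. The sketch below is purely illustrative: the data categories, tier contents, and region mapping are hypothetical and are not legal guidance on what GDPR or CCPA require.

```python
# Hypothetical data categories a phone could share with the car.
ALL_FIELDS = {"media_prefs", "seat_profile", "route_history",
              "voice_logs", "precise_location", "biometric_token"}

# Hypothetical tiers: "full" shares everything; "lite" keeps only what the
# core comfort features need.
TIERS = {
    "full": ALL_FIELDS,
    "lite": {"media_prefs", "seat_profile"},
}

# Illustrative mapping only; unlisted regions fall back to the full tier.
REGION_TIER = {"EU": "lite", "US-CA": "lite"}


def shareable_fields(region: str, consented) -> set:
    """Fields allowed to flow phone -> car: the region's tier AND explicit consent."""
    tier = TIERS[REGION_TIER.get(region, "full")]
    return tier & set(consented)
```

Because the result is an intersection, nothing flows without explicit consent in any region; the regional tier can only narrow what consent would otherwise allow.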

Despite its innovation, BYD’s cross-device ecosystem introduces several friction points. First is the issue of compatibility. Users who do not own a supported handset are effectively excluded from key features, limiting the system’s universal appeal. Additionally, firmware updates on either the phone or the car can disrupt synchronisation, creating reliability concerns that might dissuade less tech-savvy users.

Privacy is another major hurdle. The system’s capacity to track user behaviour, ranging from daily routes to voice commands and multimedia consumption, poses risks if not transparently managed. Users must be informed not only of what data is being collected, but also where it is stored, whether it’s encrypted, and who has access. Without robust privacy policies, the very features that set BYD apart could become liabilities in global markets.

Moreover, the reliance on one’s smartphone for so many in-car functions introduces questions of redundancy. What happens when the phone battery dies, gets stolen, or becomes obsolete? In these cases, drivers lose access to personalisation features, possibly even navigation or climate settings. As BYD expands internationally, building in fallbacks, such as secure cloud profiles or multi-device redundancy, will be critical to ensuring a reliable user experience.
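The fallbacks mentioned above amount to an ordered list of profile sources: try the phone first, then a secondary device or cloud copy, and finally factory defaults so that core functions never depend on a single handset. The function and profile fields below are hypothetical, offered as a minimal sketch of that ordering rather than a description of any BYD feature.

```python
from typing import Callable, List, Optional

# Hypothetical factory defaults used when no personal profile is reachable.
DEFAULT_PROFILE = {"seat": 0, "cabin_temp_c": 22.0, "source": "default"}


def resolve_profile(sources: List[Callable[[], Optional[dict]]]) -> dict:
    """Try each profile source in order; fall back to factory defaults.

    A source returns None when it has no profile (e.g. phone battery dead)
    and may raise ConnectionError/TimeoutError when a network copy is unreachable.
    """
    for fetch in sources:
        try:
            profile = fetch()
        except (ConnectionError, TimeoutError):
            continue  # e.g. no mobile signal when reaching the cloud profile
        if profile is not None:
            return profile
    return dict(DEFAULT_PROFILE)  # copy so callers cannot mutate the defaults
```

A car might call it as `resolve_profile([read_from_phone, read_from_cloud])`, where both readers are hypothetical; if the phone is dead and the cloud is unreachable, the driver still gets a working default cabin.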

Across industries, enterprises have long faced an uneasy compromise in cloud adoption: security traded for scale, compliance sacrificed for speed, and innovation shackled by the unpredictable costs of hyperscale providers. It is in bridging this gap between ambition and risk that UnitedLayer® has defined its journey. For over twenty-five years, the company has delivered private cloud and data center solutions that do not force clients to choose between agility, resilience, and intelligence, but instead weave all three into a seamless foundation. Its global footprint now spans 30+ private cloud regions, reinforced by 175+ edge locations and multiple Tier 3+ facilities engineered for 99.999% availability, a scale designed not for spectacle but for sovereignty, performance, and trust.
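To put the 99.999% ("five nines") figure in concrete terms, the permitted downtime is simply the unavailable fraction multiplied by the length of a year. Assuming a 365.25-day year (525,960 minutes), five nines allows roughly 5.26 minutes of downtime per year, compared with about 8.8 hours at 99.9%. The helper below is a generic calculation, not a UnitedLayer® tool.

```python
# Minutes in an average year, assuming 365.25 days to account for leap years.
MINUTES_PER_YEAR = 365.25 * 24 * 60  # 525,960 minutes


def max_downtime_minutes(availability_pct: float) -> float:
    """Maximum yearly downtime (minutes) permitted by an availability percentage."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


for pct in (99.9, 99.99, 99.999):
    print(f"{pct}% -> {max_downtime_minutes(pct):.2f} minutes/year")
```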

What sets UnitedLayer® apart is not infrastructure alone, but the intelligence it has layered across its systems. The United Private Cloud® platform, strengthened by UNITYONE AI® and complemented by offerings such as disaster recovery, sovereign cloud, container cloud, and UnitedSecure® and UnitedEdge® accelerators, represents more than a hosting environment. It is an ecosystem engineered to anticipate rather than react—distributing, monitoring, and optimizing workloads in real time. For enterprises in finance, government, SaaS, and beyond, the result has been decisive: a cloud that remains available under pressure, scales without friction, and satisfies the strictest security and compliance regimes. In this sense, UnitedLayer® has become not merely a provider but the unseen backbone of digital transformation.

The numbers describing UnitedLayer®’s reach are significant: 30+ private cloud regions, 175+ edge nodes worldwide, an N+M clustered architecture, 100K+ IOPS all-flash storage, and 100G software-defined networking. Yet the greater story lies in how these systems think. In a market where hybrid and multi-cloud are the norm, success is not measured in raw compute but in the orchestration of complexity into something accessible, secure, and adaptive.
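An N+M clustered architecture runs N active nodes plus M spares, so the service stays up as long as at least N of the N+M nodes are healthy. Assuming each node is independently available with probability a (a simplification, since real failures are often correlated), cluster availability is a binomial tail sum. The sketch below is a generic model, not UnitedLayer®’s actual configuration or figures.

```python
from math import comb


def cluster_availability(n: int, m: int, node_availability: float) -> float:
    """Probability that at least n of n + m independent nodes are up.

    Sums the binomial terms C(n+m, k) * a^k * (1-a)^(n+m-k) for k = n .. n+m.
    """
    total = n + m
    a = node_availability
    return sum(comb(total, k) * a**k * (1 - a) ** (total - k)
               for k in range(n, total + 1))
```

With four required nodes at 99% each, a cluster with no spare is up only about 96.06% of the time (0.99^4), while adding a single spare (4+1) lifts that to roughly 99.90%, which is why spare capacity matters more than individual node reliability.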

This is the philosophy of the United Private Cloud® Suite. It spans over a hundred PaaS and IaaS services along with sovereign cloud, DR cloud, containerized workloads, and application hosting. But the integration of UNITYONE AI® elevates the platform into active intelligence: policy-driven governance, autonomous operations, and self-healing architecture operating at enterprise scale. For clients, it transforms the promise of cloud from a rented utility into a platform for accelerated innovation, whether training AI/ML models, ensuring regulatory sovereignty, or running mission-critical workloads with confidence.

Sustained trust is rarely won through innovation alone.

What defines UnitedLayer®’s reputation is its meticulous engagement model. Every client relationship begins with discovery: a deep assessment of the IT landscape, compliance requirements, and growth ambitions. From there, a tailored roadmap is crafted—not an off-the-shelf strategy, but one bound to milestones and measurable outcomes.

Transparency anchors the execution. Progress is benchmarked against clear markers, with dedicated teams that own the client journey end to end. Onboarding is delivered with a precision that turns uncertainty into confidence, and optimization continues long after deployment. Clients are educated as partners, empowered to evolve alongside the infrastructure that supports them.

The results are evident. A SaaS startup accelerated its time-to-market while reducing costs through elastic IaaS. A cybersecurity firm achieved 24/7 uptime and bulletproof disaster recovery. An automation software provider fortified data security without constraining flexibility. A media streaming company leveraged hybrid cloud infrastructure to create seamless digital services. In each case, the pattern is clear: a methodology where technology is the catalyst, but outcomes define success.

Behind the technology lies a philosophy of disciplined execution: “First time right delivery.” UnitedLayer® operates not by rushing innovation but by applying a consultancy-grade framework that ensures outcomes are precise, dependable, measurable, and aligned with clients’ goals.

Every engagement begins with comprehensive discovery: an in-depth examination of the client’s IT landscape, regulatory requirements, and strategic ambitions. From this foundation, UnitedLayer® co-develops a tailored roadmap, one that links secure and scalable cloud strategies directly to measurable outcomes.

Throughout the journey, transparency is paramount. Milestones are clearly defined and communicated, giving clients an unambiguous view of progress and alignment at every stage. Ownership is never fragmented: each client is supported by a dedicated team that steers execution end-to-end, reducing risks and simplifying complexity.

Onboarding is handled with deliberate precision, turning uncertainty into confidence and setting the stage for success from day one. Post-deployment, the relationship matures into collaboration and vigilance, with UnitedLayer® continuously optimizing infrastructure and evolving systems to stay ahead of changing client needs.

This philosophy transforms delivery from guesswork into certainty. By embedding rigor, clarity, and accountability in every interaction, UnitedLayer® ensures that innovation is not only bold but confidently executed—the first time, and every time.

The next horizon for UnitedLayer® is defined by a conviction: that private cloud can be more than a shield from hyperscale risk and can become the intelligent, autonomous backbone of global AI innovation. Beyond performance, it creates a controlled environment where enterprises can design, test, and advance new AI models with confidence. Central to this strategy is the company’s Private AI suite, purpose-built for secure, efficient training of large language models on enterprise data. It responds directly to the defining concern of this era: how to embrace generative AI while safeguarding sovereignty, compliance, and cost efficiency.

Parallel to this, UnitedLayer® is expanding its catalogue of services from more than 100 offered today to well over 1,000. Expansion of Global Capability Centers (GCCs) is also underway, establishing hubs of cloud engineering excellence that design, build, and manage private cloud infrastructure, enabling enterprises to accelerate adoption, optimize operations, and drive continuous innovation with confidence. With UNITYONE AI® deepening its agentic AI orchestration capabilities and the United Private Cloud® fusing autonomous workflows with container and serverless technologies, the aim is bold but clear: a future where infrastructure supports the latest AI innovation, is easy to use, and is sustainable by design.

Abhijit Phanse, CEO, UnitedLayer

UnitedLayer®’s influence goes beyond its data centers and tenant numbers. Its true distinction lies in redefining private cloud as intelligence made operational, resilience made routine, and trust made structural. Through engineering innovation, precise client engagement, and a culture of rigor, UnitedLayer® has evolved into a transformational partner supporting global customers across industries such as BFSI, government, retail, telecom, logistics, and oil & gas. It delivers industry-tailored private cloud solutions with robust security, regulatory compliance, and AI-driven automation — empowering enterprises to accelerate innovation, optimize operations, and meet complex business demands confidently.