ignite

Research from the National University of Singapore

AI is growing, and getting hungrier.

AI is reducing the cost of creativity to zero. That's a problem.

Accelerating frontier AI research for real-world impact.

Be part of a community dedicated to driving innovation and change in education, research, and service.

16 Colleges, faculties and schools

More than 40 research centres and institutes, and Research Centres of Excellence

4.2k research staff

at https://www.nus.edu.sg/careers/

Artificial intelligence (AI) stands as one of the most transformative technological advancements of our time. It is changing the way people live, work, communicate, study, and travel, and is reshaping industries, economies and societies at an unprecedented pace.

AI has the potential to solve some of the most pressing challenges facing our world today. As AI systems become more sophisticated and are integrated into our daily lives, it is imperative that we not only understand the benefits, but also assess any associated risks.

In this inaugural issue of Ignite Magazine, we explore the vast array of AI applications, in fields spanning healthcare, finance, climate science, sustainability, manufacturing, microelectronics, and materials science, and describe how NUS is contributing to the global effort to push AI further through foundational AI research. We also discuss the importance of reducing AI’s carbon footprint, and ensuring it serves to augment industries, rather than simply imitate and replace.

NUS has long been a key player in the AI field. Our contributions are set to continue, with the establishment of the NUS AI Institute (NAII) in March 2024. The objective of this institute is to advance fundamental research, development, and application of AI technologies in areas such as education, healthcare, finance, sustainability, science and engineering. NAII will also conduct research on how to address the ethical concerns and risks associated with AI. Through strategic partnerships with government agencies and industry, NAII will become NUS’ focal point to synergise our wide range of capabilities as we aim to translate research outcomes into innovative solutions for the benefit of society.

AI is not just a tool. It promises to be a catalyst for change across various domains. It is therefore crucial that we manage the benefits and risks of AI, and are prepared for future technological, ethical or societal issues that may arise from it.

It is an exciting time to be engaged in research. With many grand challenges still to solve, and the potential for AI to open new avenues for data-driven solutions, I encourage academia and industry to come together, to cross disciplines, engage extensively with stakeholders, and nurture research collaborations. In this way, we can enable AI to reach its full potential in a sustainable and ethical manner.

Professor Liu Bin

Deputy President (Research & Technology)

Editor-in-Chief

Professor Liu Bin

Editorial Advisory Board

Professor Aaron Thean

Associate Professor Benjamin Tee

Professor Chng Wee Joo

Professor Joanne Roberts

Associate Professor Leong Ching

Professor Mohan Kankanhalli

Managing Editor

Dr. Steven John Wolf

Editors

Lim Guan Yu

Low Yuan Lin

Editorial Designer

Koh Pei Wen

Illustrators

Dr. Naoki Ichiryu

Koh Pei Wen

Published by

Office of the Deputy President (Research & Technology)

© 2024 National University of Singapore. All rights reserved. This magazine and its contents are the property of the National University of Singapore (NUS) and are protected by copyright law. Unauthorised use, reproduction, or distribution of the material contained in this magazine, including but not limited to text, images, and other media, is strictly prohibited without the prior written consent of NUS. The views and opinions expressed in the articles are those of the authors and do not necessarily reflect the official policy or position of NUS or the Office of the Deputy President (Research & Technology). While every effort has been made to ensure the accuracy of the information contained in this magazine, NUS assumes no responsibility for errors or omissions, or any consequences arising from the use of the information.

For permissions and other inquiries, please contact the Office of the Deputy President (Research & Technology) at NUS.

research@nus.edu.sg

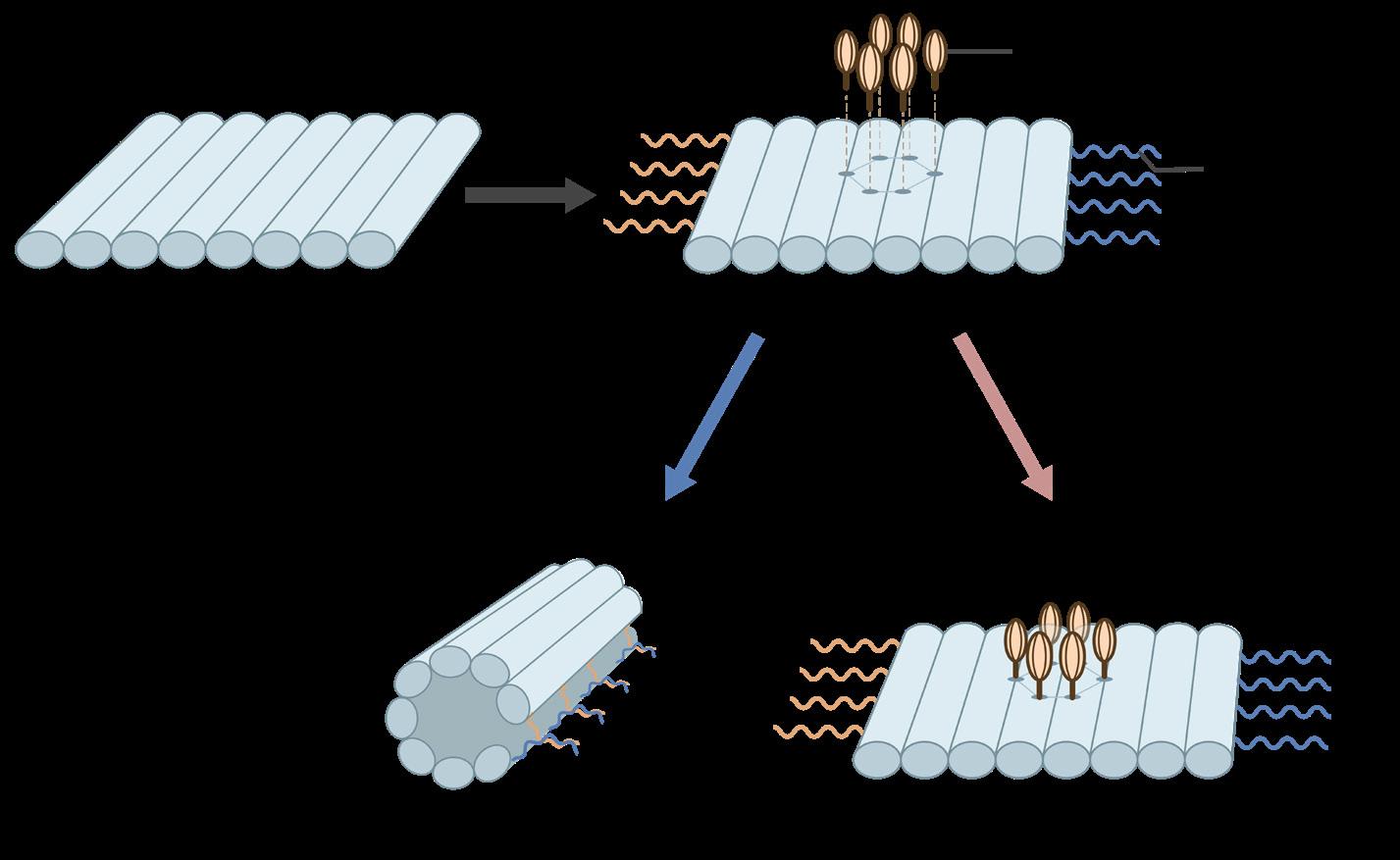

Innovative DNA origami modulates cell signalling for arthritis treatment

Fine-tuning plant responses to stress

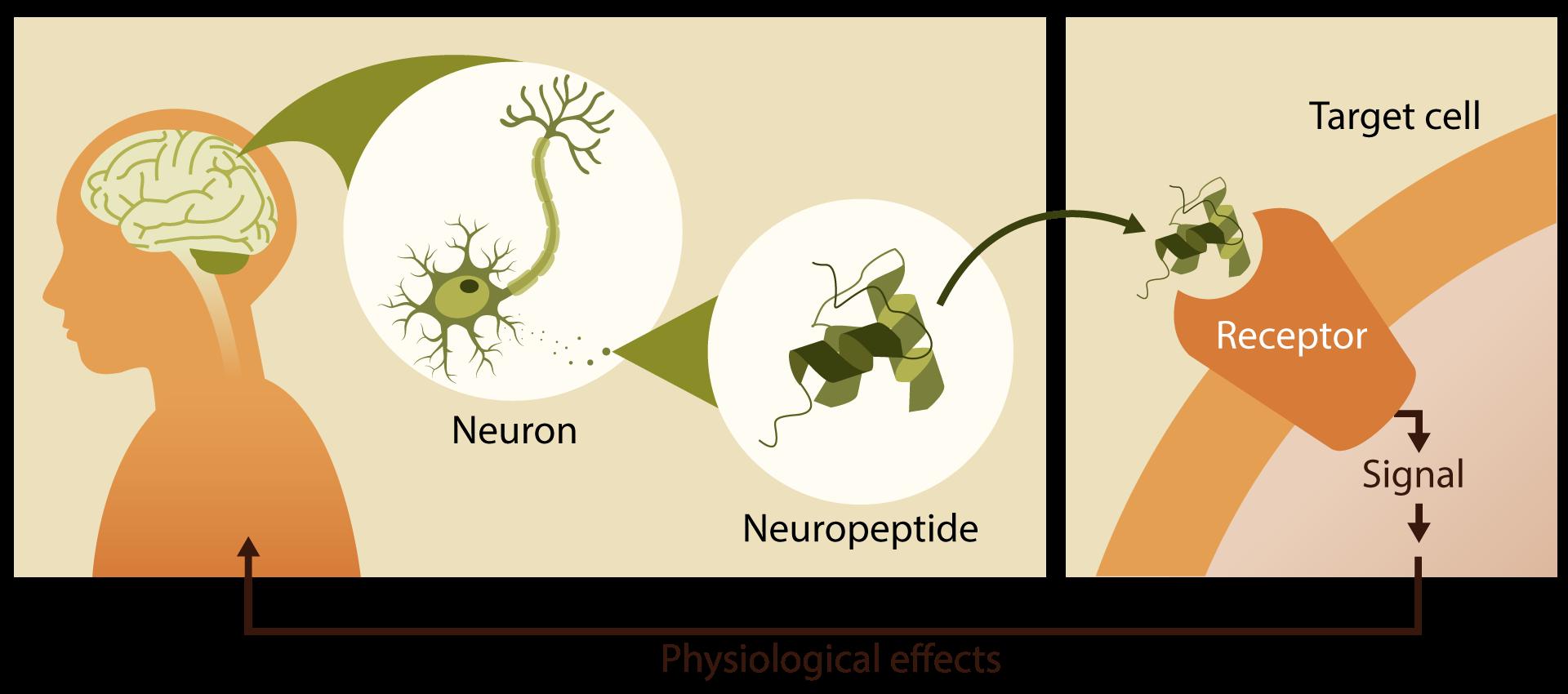

Stapled relaxin-3 analogs found to mediate biased signaling

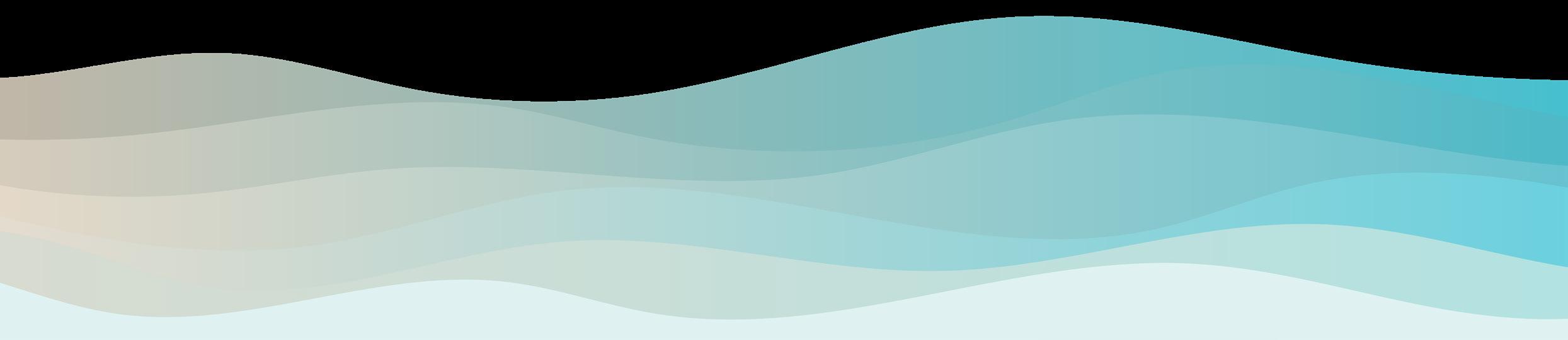

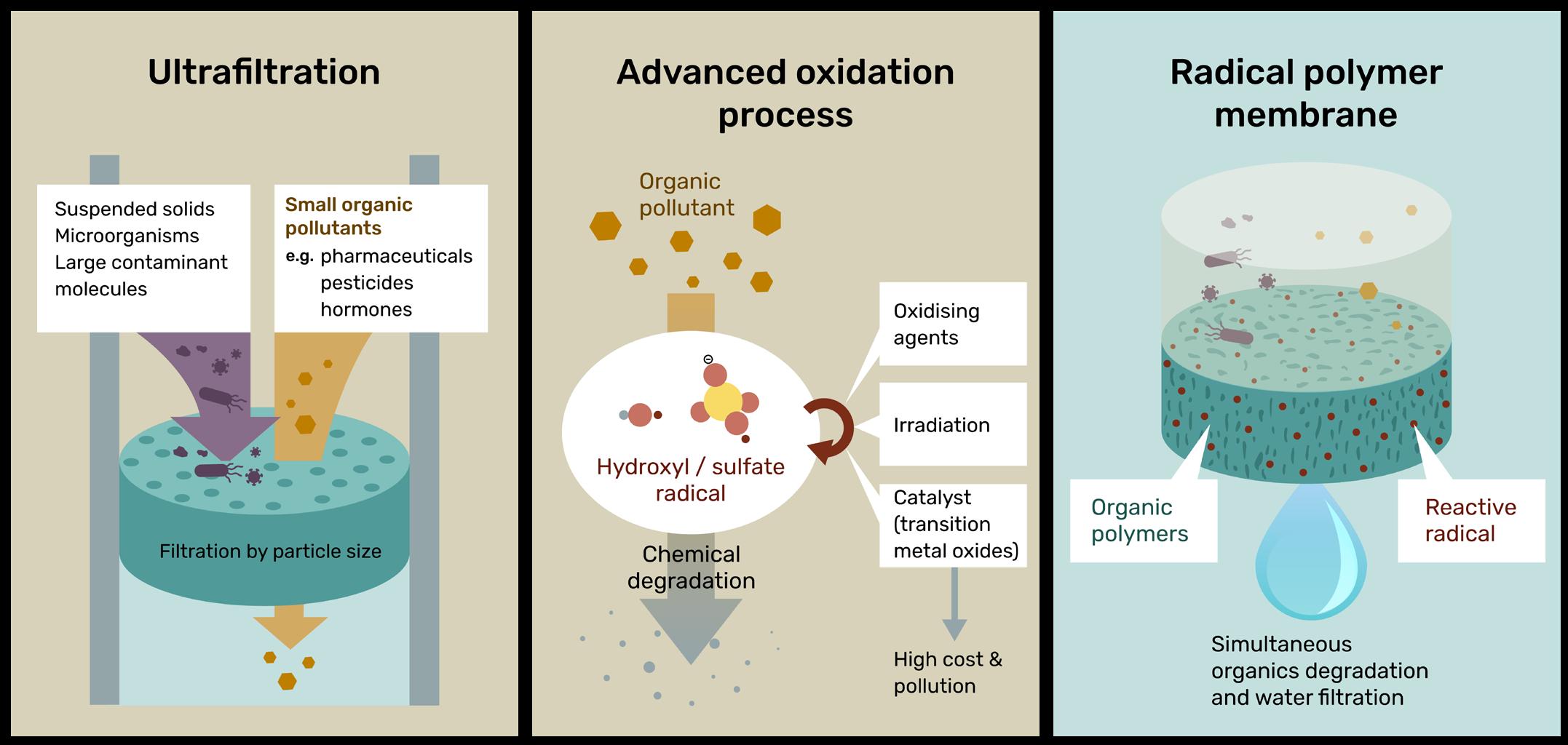

Radical polymer membrane for wastewater treatment

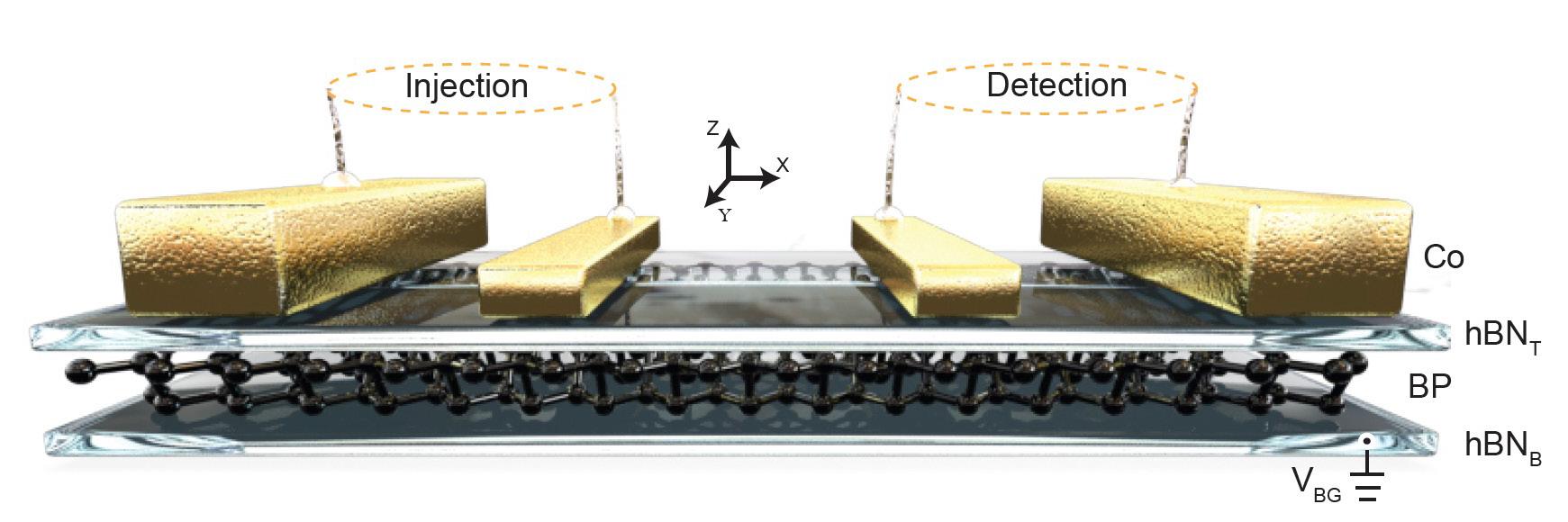

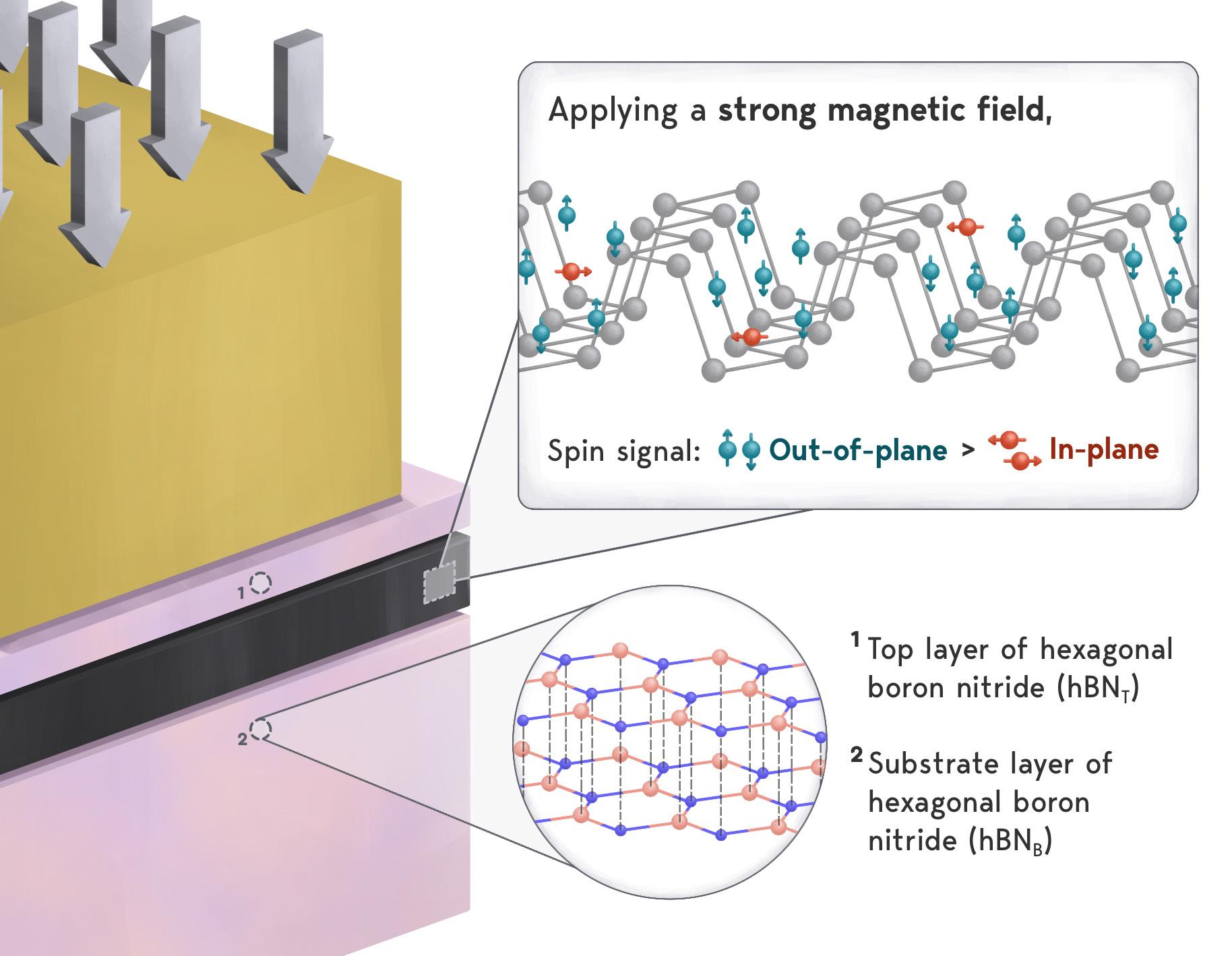

Advancements in material design for high-performance spintronic memory devices

by Prof Simon Chesterman & Dr Steven Wolf

Within just a couple of years, generative artificial intelligence (AI) systems like ChatGPT, Midjourney, and DALL-E have gone from beta-testing to producing works that adorn motorway billboards. The online world is awash in AI-generated imagery and text. Even scientific journals have begun incorporating AI-generated content into their publications.

While generative AI has undoubtedly reduced costs for marketing and communications teams, it has left artists pondering an uncertain future. Illustrators, graphic designers, animators and writers are already lamenting lost income and seeking ways to stay relevant. There is genuine fear for what the future holds amongst creatives.

Generative AI is certainly impressive, yet humans could already create images, tell stories and write literature. We have done so for millennia. Imitating the process may reduce expenses or boost productivity, but these benefits come at a price.

Most people are now aware of the problem of hallucinations or confabulations, when generative AI’s creativity diverges from reality. Yet larger concerns may come from the economic impact of reducing the cost of creativity to zero. If everyone is able to produce ‘art’, will anyone pay for it? And if the answer to that is ‘no’, then what happens to artists?

That, in a microcosm, is one of the fundamental economic questions posed by AI. Will we use it to do things that no human could do — discover new drugs, develop better models for climate change — or simply to do things more cheaply than a human could?

Professor Erik Brynjolfsson from the Stanford Digital Economy Lab recently posited[1] that excessive deployment of human-like artificial intelligence, where AI is developed to imitate human-like intelligence in order to automate processes and reduce labour costs, brings with it the risk of what he called the ‘Turing Trap’.

In this situation, AI is developed to perform tasks that could already be performed by humans with certain skills or abilities — if someone was willing to pay for it. The product may be developed at a faster speed, and almost certainly a lower cost. Yet, when this occurs at scale, there is a risk that the social equilibrium shifts, with power and wealth taken away from a majority, and concentrated on a minority. Those who would have otherwise performed the task lose out, while AI developers reap the spoils.

In his essay, Brynjolfsson argues for governance to ensure that values and incentives align between governments, corporate bodies and AI developers. However, if generative AI is taken as an example, it may seem we are already teetering on the edge of this ‘Turing Trap’.

In October 2023, one of the longest strikes in the entertainment industry ended when the Writers Guild of America came to an agreement with the Alliance of Motion Picture and Television Producers to protect writers and human creativity against generative AI.[2] Under the terms of the agreement, generative AI remains a creative tool under the control of the writers, not their employers, and writers will not be replaced by AI or forced to work from AI-generated scripts.

However, most creatives do not have unions to represent them, and they remain in the fight alone.

In June 2024, there were reports of a mass exodus of artists from Facebook and Instagram.[3] Meta, the parent company of both platforms, had indicated that it would train its generative AI algorithms using public content posted by its userbase. For many, there was no means to opt out, and consent was implied if users continued to use the platforms.

For many artists and freelance creatives, client bases are built solely through social media platforms and personal websites. These platforms have for decades provided a simple and cost-effective way to display and sell art. They allow artists to take their art global, providing access to new audiences. As networks grow, artists find inspiration in each other’s work. Human creativity can flourish.

All that is changing. Social media is now rife with AI-generated content, from bots posting comments and reviews to AI-generated voice overs and advertisement visuals. Analogous to how excessive unwanted email gave rise to ‘spam’, there is a new term for this excess of synthetic content: ‘slop’.

Spam is a nuisance that threatens to overwhelm our emails, but slop is more insidious. Since it is trained on the work of artists and photographers, it is using their past output to undermine the economic viability of their future.

Visual media may be the canary in the coal mine. Generative AI is also producing text, audio, and video.

In theory, copyright protects artists and authors from misappropriation of their work. In practice, developers of generative AI models have been reluctant to disclose how those models are trained. Lawsuits have been brought by Getty Images, the New York Times, the Recording Industry Association of America, as well as a host of artists.

Others are seeking ways to monetise the use of their data, with OpenAI concluding licensing deals with News Corporation, the Financial Times, and Reddit.

In the context of visual media, however, many artists remain reluctant to provide their art for AI training because there are no avenues for compensation should their style be replicated.

AI developers might respond that all artists learn their craft by copying — to a greater or lesser degree — the methods and styles of their predecessors. But there is surely a distinction between being inspired by Seurat’s pointillism or Warhol’s pop art, and making a digital copy of every artistic work ever produced and then running algorithms to produce infinite variations on those themes.

The adoption of generative AI has been driven by its speed and the increasing quality of its outputs. However, without professional creatives taking responsibility for the content created, truth and integrity are at stake. We have all seen examples of political campaigns or established brands using images of people that were revealed to have had too many arms or fingers. But underlying these embarrassing errors are deeper questions of responsibility for the veracity and reliability of the content being produced.

Unfortunately, there is very little transparency on ownership, copyright or fair use issues from developers, as they remain locked in a generative AI arms race. Adobe[4] and Meta[3] are two companies that recently altered their terms and conditions to allow the use of data saved or uploaded to their platforms to train AI algorithms without clarifying how data would be used, who owns the data, or how creators would be compensated if their work or ideas are plagiarised. Public backlash ensued.

While developers focus primarily on out-developing each other, the onus is placed on users to be responsible and use the technology fairly. This is already proving somewhat naïve. Users are essentially learning as they go, and many are pushing the boundaries of what would be considered acceptable. Misleading and at times harmful images and text are already being generated and propagated online.

The battle against mis-, dis- and malinformation, often termed ‘fake news’, is part of a new project called Information Gyroscope, at the NUS Centre for Trusted Internet and Community. This is looking at a broader approach to what we call ‘digital information resilience’, which emphasises the role of consumer behaviour in understanding why people consume fake news and how it affects them, as well as the important role of technology. Only by understanding the life cycle of fake news can we adopt regulations and policies that will affect both supply and demand.

This research is highlighting that media consumers cannot be relied upon to pursue a balanced coverage of media content, or identify, let alone weed out falsities in social media posts or professional news articles. The ease with which people can now produce believable media using generative AI is compounding the issue; yet the cost of truth must not be ignored.

Since most generative AI tools construct media from text-based prompts, it is technically possible for developers to block the generation of imagery depicting historically false, or inflammatory content. In this case, malicious intent can be assumed based on prompt cues. Similar approaches could be used to prevent blatant plagiarism. However, imposing limitations on the models would delay their development and would likely play into the hands of less-ethically minded developers.

Transparency may therefore hold greater sway in rooting out misuse of generative AI. Watermarks and other digital tags are easily removed with basic image or video editing software, and this necessitates that developers retain all media generated by their systems, at least for a defined period. Such data should be searchable against circulated or similar media, thereby allowing consumers to verify the legitimacy and source of the media they consume, and provide grounds to flag AI-generated content for what it is.

Generative AI will likely continue to improve. It will increasingly pass the ‘Turing Test’, with people failing to distinguish between “real” content and AI-generated content.[5] However, it is also possible that AI systems will end up training themselves on the inaccurate or false media they create, further amplifying their limitations. Many consumers seem unconcerned with inaccurate depictions of what is created and, alarmingly, many users fail to discern AI-generated content from artist-generated content, despite the presence of inaccuracies or flaws.

Imitating human creativity is impressive on its own. Yet, it draws attention away from the true potential of AI, which is to serve as a tool that enables humans to achieve what is currently unachievable and solve hard problems. Already, AI is finding patterns and relationships amongst vast quantities of biological and medical data. Drug development, energy, urban design, finance, logistics and manufacturing are all industries that face grand challenges and address hard problems. AI should be developed to assist experts in solving these problems.

At the same time, AI must be developed with a holistic understanding of the costs involved. The economic costs, and the costs to truth and integrity, are just two examples that cannot be ignored. Over the past two decades, social media infused our politics and our culture. It was notionally ‘free’, but we belatedly realised that it was not the product: we are. In the generative AI space, there is a realisation that a similar transformation may be happening, and that if we do not manage to govern it, AI itself may end up governing us.

NUS Law

Vice Provost, Educational Innovation; Dean, NUS College

1. Brynjolfsson E. The Turing Trap: The promise & peril of human-like artificial intelligence. Stanford Digital Economy Lab. 2023 Jan 3 [accessed 2024 Aug 16]. https://digitaleconomy.stanford.edu/news/the-turing-trap-the-promise-peril-of-human-like-artificial-intelligence/

2. Beckett L, Anguiano D. How Hollywood writers triumphed over AI – and why it matters. The Guardian. 2023 Oct 1 [accessed 2024 Aug 16]. https://www.theguardian.com/culture/2023/oct/01/hollywood-writers-strike-artificial-intelligence/

3. Hunter T. Artists flee Instagram for new app Cara in protest of Meta AI scraping. The Washington Post. 2024 [accessed 2024 Aug 16]. https://www.washingtonpost.com/technology/2024/06/06/instagram-meta-ai-training-cara/

4. Weatherbed J. Adobe overhauls terms of service to say it won’t train AI on customers’ work. The Verge. 2024 Jun 10 [accessed 2024 Aug 16]. https://www.theverge.com/2024/6/10/24175416/adobe-overhauls-terms-of-service-update-firefly

5. Scott C. Study finds ChatGPT’s latest bot behaves like humans, only better. Stanford School of Humanities and Sciences. 2024 Feb 22 [accessed 2024 Aug 16]. https://humsci.stanford.edu/feature/study-finds-chatgpts-latest-bot-behaves-humans-only-better/

The artificial intelligence (AI) revolution has ushered in a new era in computing technologies; however, its rise has coincided with a hard truth: “voracious” AI models are consuming more transistors and memory than can be integrated into a single chip. Classical Moore’s Law scaling has ended and we are now entering an era of high-speed interconnected multi-chiplet systems leading to complex 3D superchips.

The destinies of AI models and semiconductor technology are now intertwined. Large Language Models (LLMs) like ChatGPT only became usefully responsive when the neural networks (transformers) in them were scaled to include billions of parameters (connections). Training these models and making them available to millions of users worldwide required huge compute resources. A recent benchmark showed that one would need to cluster more than 3500 Nvidia H100 GPU chips to train a GPT-3 (175 billion parameters) model in about 2 days. Each H100 chip integrates over 80 billion 5nm transistors manufactured by TSMC. Less than two years after the H100’s release, the B200 GPU was released. It contains 208 billion 4nm transistors, capable of running at 1000W total peak power. It is a superchip composed of 2 chiplets connected by high-speed interconnects that reportedly operates at 30x neural network performance for inference processing compared to the H100.
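To give a rough sense of scale, the benchmark figures quoted above can be turned into GPU-hours with simple arithmetic. The sketch below is only a back-of-envelope estimate: the text says "more than 3500" GPUs and "about 2 days", so the result is approximate, and the per-GPU power draw of 700 W is an assumption that does not come from the article.

```python
# Figures taken from the text: "more than 3500" H100 GPUs for "about 2 days".
NUM_GPUS = 3500
TRAINING_DAYS = 2

# Assumption (not from the article): each GPU draws roughly 700 W under load.
ASSUMED_WATTS_PER_GPU = 700


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours for a cluster running continuously."""
    return num_gpus * days * 24


def energy_mwh(total_gpu_hours: float, watts_per_gpu: float) -> float:
    """GPU energy in megawatt-hours, ignoring cooling, CPUs and networking."""
    return total_gpu_hours * watts_per_gpu / 1_000_000


hours = gpu_hours(NUM_GPUS, TRAINING_DAYS)
print(f"GPU-hours: {hours:,.0f}")
print(f"GPU energy (MWh): {energy_mwh(hours, ASSUMED_WATTS_PER_GPU):.1f}")
```

Under these assumptions the run comes to about 168,000 GPU-hours. The energy figure covers the GPUs alone and would be higher once the rest of the data centre is counted.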

It is within recent memory that a deep neural network breakthrough was enabled by improved chip capability. In 2012, Geoffrey Hinton and colleagues published their seminal paper on the training of a large convolutional neural network (62 million parameters) to classify 1.3 million images accurately. This breakthrough was enabled by now “vintage” GPU chips with hundreds of millions of transistors. Their success paved the way for deeper and more complex neural network models, like the transformers, and this in turn has fuelled the hunger for more powerful chips with more transistors. We are now on our way to trillion-transistor superchips and multi-trillion-parameter AI models that assimilate multimodal data, including images, text, sound, and video.

AI will continue to grow with the convergence of data resources, compute power, and advances in microelectronics.

There are of course ways to improve performance such as moving memory as close to the processors as possible, adding more processors into multi-processor chips, and networking more GPUs together. This is the current approach taken by AI-chip manufacturers, and for the time being, performance will continue to be gained.

Unfortunately, this approach is not sustainable. Not only are there economic and practical limitations in simply adding more of the same technology, but the environmental cost is extremely high. The energy required to train current LLMs is huge, and although the performance of each new ‘AI chip’ has increased substantially, so too have their energy demands. Hence, we are in urgent need of green AI solutions. This requires researchers to work with industry partners to solve emerging technological problems, as well as ideate disruptive hardware and software solutions. The goal is to not only achieve quantum leaps in computing system performance, but also in energy efficiency.

For this reason, researchers are now conceiving what will be needed to drive AI applications beyond the next 10 years. Such research is often done in collaboration with industry. Indeed, researchers at the Singapore Hybrid-Integrated Next-Generation μ-Electronics (SHINE) Centre at NUS are redefining semiconductor chip integration. Focusing on innovative heterogeneous integration, starting at the material and chip levels, SHINE researchers are developing solutions to process, system, and thermal challenges of stacked chiplets. Besides working with major industry partners on technology solutions, researchers working with SHINE, and NUS’ E6NanoFab, also explore topics such as neuromorphic computing – where processor architecture is modelled after the human brain. Whether silicon and carbon-based circuits can one day rewire themselves remains to be seen, but early results are promising. The incorporation of 2D functional materials will be instrumental in this. Already, the development of synaptic memristors capable of both Hebbian and anti-Hebbian spike learning is progressing. Successful engineering in this domain would introduce plasticity into artificial neural network learning, allowing parameter weights to be changed and relearned, just like the brain does over its lifetime.
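As a software analogy only, the difference between Hebbian and anti-Hebbian learning can be sketched as a one-line weight update. This is a simplified, rate-based textbook rule rather than the spike-based behaviour of the memristor devices described above, and the function name, learning rate and activity values are all hypothetical.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1, anti=False):
    """Return the updated weight after one pre/post activity pairing.

    Hebbian: correlated activity strengthens the connection.
    Anti-Hebbian: the same correlated activity weakens it.
    """
    sign = -1.0 if anti else 1.0
    return weight + sign * learning_rate * pre * post


w = 0.5
w_up = hebbian_update(w, pre=1.0, post=1.0)                # strengthened
w_down = hebbian_update(w, pre=1.0, post=1.0, anti=True)   # weakened
print(w_up, w_down)
```

A device that supports both rules can raise and lower the same connection weight, which is the kind of plasticity the paragraph above refers to.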

Envisioning the future of computing is also providing the inspiration needed to address limitations of hardware today, especially in relation to AI’s sustainability issues. Researchers at SHINE are, for example, integrating 3D memory directly on top of processors, and developing wider and more effective interconnects to improve the retrieval and transfer of data from memory. Eventually, memory will be integrated with the GPU, which will enable compute tasks to be performed within the memory stack itself, negating the need for energy-intensive data retrieval and transfer.

Another approach actively being pursued at NUS is to move inference queries away from the cloud and into user devices, while at the same time improving the energy efficiency of those devices. Indeed, the energy to move bits of information over the wireline network from devices to cloud is several orders of magnitude higher than processing that data in the cloud. In other words, green AI computing mandates efficient processing directly in user devices to drastically reduce the power consumed by the cloud and the intermediate network infrastructure.

Along these lines, the innovation from Professor Massimo Alioto’s Green IC group from NUS Electrical and Computer Engineering has disrupted several areas of on-chip AI with record-breaking energy efficiency, as supported by leading foundries and fabless players (e.g., TSMC, GlobalFoundries, Soitec, NXP Semiconductors). Representative examples of such innovation are the first demonstration of a battery-less complete AI vision system, in-memory computing with true end-to-end processing from signal conditioning to AI, the first accelerator based on fully automated design with energy efficiency above 100 TOPS/W (as good as state-of-the-art in-memory computing), and CPU-based flexible AI computing with accelerator-like energy efficiency, among others.

Professor Aaron Thean

Deputy President, Academic Affairs and Provost

NUS Electrical and Computer Engineering

AI has boomed in recent years, and yet it remains very much in its infancy. How we will use it in the future will depend on multiple factors, but it is almost certain that future computing hardware will dictate its full capabilities. What is certain is that a major shift in our approach to AI chip design is needed. New materials, new architectures and new AI-devices are required so that AI can continue its current development trajectory, to one day serve as a tool that truly augments humans in solving the world’s hardest problems.

NUS Electrical and Computer Engineering

The rapid adoption of ‘generative AI’ tools like ChatGPT has shone the spotlight on artificial intelligence (AI). While its popularity has boomed in just the last couple of years, it was not developed overnight. Instead, it is the result of decades of research and innovation in computer science, mathematics, and materials engineering.

To most lay readers, the very essence of AI is mysterious and unknown. Neural networks and transformers (the T in GPT), large language models and denoising diffusion models, and backpropagation methods all represent somewhat intangible concepts. While it promises to augment human expertise to solve problems beyond our current capabilities, there are very real concerns surrounding the technology. Will it replace workers? Will it contribute to the spread of ‘fake news’, or compromise our personal data?

NUS has long been a key player in AI research. Since its establishment in 1975, the NUS Department of Computer Science has been an active contributor, and in 2019, the NUS AI Lab (NUSAIL) was established within the School of Computing to focus on fundamental AI research domains such as theory, machine learning, reasoning, optimisation, decision-making and planning, modelling and representation, computer vision and natural language processing. More recently, the University bolstered its AI research efforts and established the NUS AI Institute (NAII), a University-level research institute, in 2024.

Through these initiatives, we bring experts together to work on computer science domains that are foundational to AI including hardware and software systems, theory, reasoning, resource efficiency, and system trustworthiness.

NAII is dedicated to advancing the frontiers of AI through fundamental research, innovative development, and practical applications for the betterment of society. Focusing on areas such as education, healthcare, finance, sustainability, and logistics, NAII is set to address some of the most pressing challenges of our time. NAII is also committed to ensuring that AI technologies are developed and deployed with transparency and accountability, addressing related ethical concerns and risks.

Since its establishment, the research institute has received substantial funding, including S$20 million from NUS and S$8 million in external research grants, to support its foundational AI research, work on policy implications, and real-world applications.

Professor Mohan Kankanhalli from NUS Computing, Director of NAII, will lead the institute, which has more than 20 AI/domain leads across different disciplines working on more than 10 research programmes.

“NAII aspires to become a global node of AI thought leadership, bringing together domain experts from within and beyond NUS to collaborate on impactful research problems to address societal issues. Recognising the importance of growing a pipeline of AI talent, the institute will also provide exciting hands-on opportunities for NUS students who are keen to pursue a career in AI or explore entrepreneurial pursuits in this fast-growing field,” said Prof Kankanhalli.

NAII will play a crucial role in training the next generation of AI talent, offering comprehensive education programmes and hands-on learning opportunities such as internships for students, in a bid to nurture the next generation of researchers, engineers, business leaders and policymakers.

Close collaboration with government agencies and industry is paramount in translating research into solutions for the good of society and the economy. For a start, NAII will be collaborating with industry partners IBM and Google Cloud on research and domain applications development, with the goal of turning technological advancements into impactful societal and economic benefits.

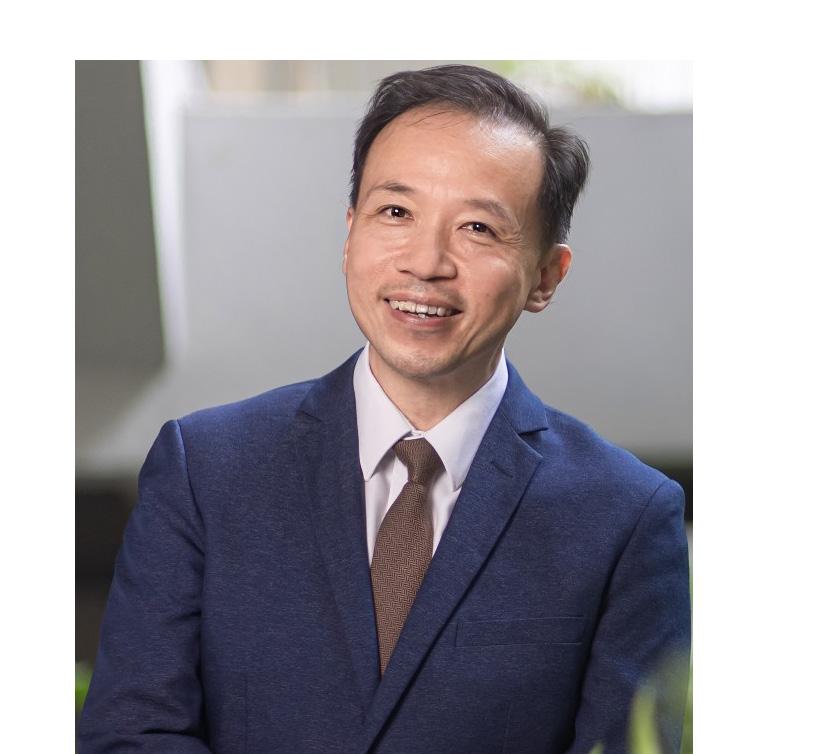

Professor Liu Bin, NUS Deputy President (Research and Technology), added, “The impact of AI on our lives, society, and economy will depend on how we develop, deploy and govern these technologies to maximise their benefits while addressing the challenges and the risks. Through strategic partnerships with local and international experts from both academia and industry, the new AI Institute will be the University’s focal point to synergise our diverse range of capabilities and create a dynamic ecosystem, where sound fundamental research will drive cutting-edge advances in AI, and where interdisciplinary collaborations will translate research outcomes into innovative solutions with deep impacts on our society.”

As the AI hub of NUS, NAII is poised to make significant contributions to the field of AI and its application for the greater good, both within Singapore and globally.

Prof Mohan Kankanhalli

Provost's Chair Professor, NUS Computing; Director, NUS AI Institute

AI and big data, including text, images and videos, are becoming increasingly prevalent. Systems built around them should benefit people, who should be able to use them without worries and with full trust.

Prof Mohan Kankanhalli focuses on Multimedia Computing by building AI models that process multimodal information to enable applications such as search, recommendation, and security. These systems should not only be useful, but they also need to respect people’s privacy, should not be fragile and should not be easily misused. Hence his second major focus is on Trustworthy AI where he works on mitigating the harms of AI systems by making them privacy-aware, robust, explainable, fair and aligned to societal values.

NUS Computing

Deputy Director, NUS AI Institute

Improving the overall performance of machine learning (ML) models requires laborious fine manipulation of the model and data.

In response to this efficiency concern, Assoc Prof Bryan Low designs automated ML (AutoML) techniques, such as neural architecture search and Bayesian optimisation, to automatically select the "best" model architecture and optimise its corresponding parameters for a given dataset. AutoML also automates tedious tasks in ML model building like model selection and optimisation.

Assoc Prof Low also designs and develops data-centric AI methods, such as active learning and data selection, valuation, and synthesis, to automatically select and synthesise the "best" data for a given model. He is currently working towards integrating AutoML and data-centric AI methods.
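For readers unfamiliar with active learning, one common textbook strategy is uncertainty sampling: ask a human to label the examples the current model is least sure about. The sketch below illustrates that general idea and is not Assoc Prof Low's method. The function name and the probabilities are hypothetical, and a binary classifier that outputs a probability per example is assumed.

```python
def select_most_uncertain(probabilities, k):
    """Return indices of the k items whose predicted probability is closest to 0.5."""
    ranked = sorted(
        range(len(probabilities)),
        key=lambda i: abs(probabilities[i] - 0.5),
    )
    return ranked[:k]


# Hypothetical model outputs for five unlabelled examples.
pool_probs = [0.97, 0.52, 0.10, 0.45, 0.80]
print(select_most_uncertain(pool_probs, 2))  # -> [1, 3]
```

The two selected examples are the ones nearest the decision boundary, so labelling them is expected to teach the model more than labelling examples it already classifies confidently.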

Provost's Chair Professor, NUS Computing

Energy-efficiency is critical for the advancement of AI and this will be especially relevant as inference processing moves to edge devices.

Prof Peh Li Shiuan's work in on-chip networks, commonly used in ultra-low-power wearable chips, has transformed the landscape of computational efficiency within the domain of AI. Her innovative approach of using interconnecting chip islands that operate in parallel has led to an increase in computational throughput with a simultaneous reduction in power consumption. This architecture has found a critical application in modern data centres, where AI-driven workloads demand efficiency. Prof Peh's contributions to energy-efficient computational systems promise AI technologies that efficiently handle aggressive workloads.

NUS Computing

Vice Dean, Research

Robust AI systems must undergo rigorous training using massive datasets before they can make predictions on new data. These processes consume significant computational resources and energy, making current AI systems unsustainable. Hardware and software systems must therefore be redesigned.

To achieve this, Prof He Bingsheng is employing hardware and software co-design strategies, whereby dedicated hardware and software can be designed and optimised specifically for AI tasks. This strategy has the potential to enhance the performance, energy efficiency and capabilities of AI. Prof He also uses AI to build cost models, and recommend hardware and software design choices that could achieve better performance based on set parameters.

NUS Computing

Robust machine learning models that preserve privacy, while enabling meaningful analysis and collaboration, can mitigate data privacy and security issues in AI.

Prof Xiao Xiaokui specialises in data management and analysis. He focuses on algorithms and systems research, with an emphasis on privacy protection in data sharing and big data analysis.

He has been developing algorithmic techniques that mitigate privacy disclosure in data sharing. His work has focused on differential privacy (DP), a rigorous privacy-preserving technique that has been adopted by tech giants such as Google and Apple. Prof Xiao has devised techniques that enable the usage of DP in various applications such as synthetic data generation, mobile data collection, and private data analysis.
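
To give a flavour of how differential privacy works, the sketch below applies the Laplace mechanism, a standard textbook DP building block, to a simple counting query. It is an illustration under stated assumptions rather than Prof Xiao's own techniques: the function names, the idea of counting over a list of records, and the choice of `epsilon` are all hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one zero-mean Laplace sample with the given scale.

    The difference of two independent exponential variables with
    mean `scale` follows a Laplace(0, scale) distribution.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`.

    A counting query has sensitivity 1 (adding or removing one record
    changes the count by at most 1), so Laplace noise with scale
    1 / epsilon gives epsilon-differential privacy for this one query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

For a hypothetical list `ages`, a call such as `private_count(ages, lambda a: a >= 40, epsilon=1.0, rng=random.Random())` returns the true count plus random noise. A smaller `epsilon` gives stronger privacy but a noisier answer.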

KITHCT Chair Professor, NUS Computing

Effective retrieval and analysis of multimedia content requires sophisticated algorithms that can understand and interpret different data modalities.

Prof Chua Tat Seng’s research focuses on the analysis of large unstructured multi-source multimodal data. He aims to develop a task-specific network of experts (NoE) framework. In this framework, each expert is an AI agent specialising in one task in a specific domain, and the experts can communicate and collaborate to solve more complex problems. The framework is designed to be adaptable and trustworthy.

Prof Chua is looking to apply NoE to advance research in tasks and domains that require collaborative effort among various experts, such as video generation, generative recommendation, event detection and forecasting, Fintech and legal tasks.

Prof Lee Wee Sun is a renowned figure in the field of AI reasoning and planning at NUS. His research focuses on bridging the gap between deep learning and reasoning, planning, and inference algorithms.

Prof Lee recognises that recent breakthroughs in generative AI have enabled AI agents to operate in very general environments. For example, chatbots can now converse with humans on virtually any topic. However, current AI agents are often unable to reason and plan effectively. In Prof Lee’s research, he seeks to understand and design effective methods for reasoning and planning with generative AI agents, such as generating step-by-step actions, or searching for the best action sequence for a given prompt.

Prof Wynne Hsu Director, NUS Institute of Data Science

Provost's Chair Professor, NUS Computing

Assoc Prof Jonathan Scarlett

NUS Computing

NUS Institute of Data Science

Assoc Prof Jonathan Scarlett seeks to understand when, why, and how data-centric algorithms work, focusing on understanding their fundamental limits, and developing algorithms whose performance comes as close as possible to those limits. His previous work has led to a precise theoretical understanding of statistical problems such as blackbox optimisation, inverse problems with generative models, and group testing, along with algorithmic advances such as exploiting adaptivity to achieve more reliable noisy group testing with fewer tests. Overall, his work helps to ensure that data-centric algorithms are both theoretically sound and practically effective.
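
The role of adaptivity in group testing can be illustrated with classic binary splitting, in which the outcome of each pooled test decides which pool is tested next. The sketch below is a simplified, noiseless textbook procedure and not Assoc Prof Scarlett's noisy group testing algorithms. The names `find_one_defective` and `pool_is_positive` are hypothetical, and the code assumes that at least one defective item is present.

```python
def find_one_defective(items, pool_is_positive):
    """Locate one defective item by adaptive binary splitting.

    `pool_is_positive(pool)` is a test oracle that returns True when the
    pool contains at least one defective item. Assumes noiseless tests
    and that `items` contains at least one defective.
    Returns the item found and the number of pooled tests used.
    """
    candidates = list(items)
    tests = 0
    while len(candidates) > 1:
        half = candidates[: len(candidates) // 2]
        tests += 1
        if pool_is_positive(half):
            # A defective lies in the tested half; discard the rest.
            candidates = half
        else:
            # The tested half is clean, so a defective is in the other half.
            candidates = candidates[len(half):]
    return candidates[0], tests
```

With 16 items and a single defective, this needs 4 pooled tests rather than up to 16 individual ones, because each test result halves the set of candidates.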

AI-based healthcare technologies are made possible by increasingly sensitive data acquisition and machine-learning-based analysis methods.

A leader in the field, Prof Wynne Hsu has developed numerous data mining, analytic, and knowledge discovery tools for biological and medical applications.

SELENA+ is an AI-powered screening system developed for automatic detection of diabetic retinopathy, cataract and glaucoma. The system meets the clinical requirement for screening purposes while incorporating deep learning into the model. In addition, to encourage clinicians to trust the AI model, Prof Hsu has investigated ways to generate faithful explanations for the model’s prediction. A smaller model with the same performance accuracy was developed for ease of deployment in clinical settings.

Professor Tulika Mitra

Vice Provost, Academic Affairs Provost's Chair Professor, NUS Computing

Generative artificial intelligence (AI) is literally at our fingertips. With just a browser and a basic computer we can create images, videos, and prose almost instantaneously. However, where this generated content comes from often feels somewhat intangible. Rarely do users consider what is going on under the hood of the AI tools they use, and this means the costs of AI remain out of sight, and out of mind.

It may seem as though AI does not consume any more power than a PC does. After all, everything is done in the ‘cloud’ — that intangible storage and compute resource that, to many, exists somewhere in the ether. In reality, the carbon footprint of AI is massive. Powering inference queries, or user-initiated prompts, on OpenAI's ChatGPT alone, requires approximately 260 MWh of energy daily. This is comparable to the energy consumed by 200,000 Singapore households.

Yet, inference processing is just the tip of the iceberg. Most energy is used in AI training. Depending on the size of the model being trained, thousands of GPUs will run complex computational algorithms that optimise hundreds of billions of parameters over several months. In fact, it is forecast that by 2030, the amount of energy required for AI training will be more than 400 TWh. The International Energy Agency projects that global electricity demand from AI, data centres and cryptocurrency may exceed 1000 TWh in 2026, an exorbitant amount that runs counter to other industries’ efforts to reduce their carbon footprint.

For AI to continue its current trajectory, it must become a more sustainable technology. However, until the discourse encompasses not only how AI will change our lives but also how it could impact our planet’s health, there is little to motivate AI developers, users, or chip manufacturers to prioritise energy-efficient and sustainable AI.

Restricting the use of AI to professional needs, limiting daily inference queries, or raising subscription costs may be one solution. However, this would make AI exclusive at the expense of individuals who could benefit most from its use, especially in terms of knowledge acquisition.

Instead, focus should be placed on technological innovations and collaborative efforts, prioritising research into more efficient algorithms, hardware designs, and operational practices while fostering education about AI's true impact.

‘Greening AI’ is a team effort. Ultimately, improvements in AI efficiency can be sought, without compromising accessibility or capabilities.

Since energy consumption lies primarily in the running of GPUs or AI chips, manufacturers must take some responsibility for the efficiency of their products. New hardware is sold on the promise of its ability to make training and inference query processing faster, and more intelligent. However, the pursuit of compute power has been uncompromising. Without the ability to shrink transistor size further, manufacturers are now scaling by adding more processing cores and memory. Redefining the semiconductor architecture, starting at the material and chip level, is one step that can be taken. This is discussed in an article by Professors Thean and Alioto, on page 5 of this magazine.

What may hold greater sway in reducing AI’s energy appetite are new approaches in algorithm and software development. Large language models, like ChatGPT, required billions of parameters before they became responsive and useful. However, this is because their purpose is to predict responses to queries that could cover any topic, context and intent. Future AI models that are tailored for specific purposes may incorporate only a subset of parameters and be trained on specific datasets relevant only to their intended use. Not only would refined models enable energy-efficient deployment of AI, and reduce the environmental cost of deployment, but they could also be moved from the cloud to edge devices like mobile phones, PCs or even wearables. Doing so would make users responsible for the energy they consume when employing AI.

At NUS, researchers from NUS Computing and the College of Design and Engineering are exploring ways to reuse pre-trained AI models for specific tasks, while avoiding the need to re-train them. These models are distilled, tuned, and pruned to become faster, smaller and easier to use, which reduces the energy needed for inference queries, and removes training requirements altogether. They are also researching model quantisation, to reduce latency, memory consumption and bandwidth requirements. Already, alternatives that replace massive models with concise, interpretable models that capture complex relationships with minimal parameters for specific purposes have been presented. Researchers are also investigating system-level techniques that leverage computing architectures beyond traditional GPUs. Here, more flexible and energy-efficient infrastructure that is capable of adapting to the varied computational patterns of different AI workloads is being explored.
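
As a rough illustration of why quantisation saves memory and bandwidth, the sketch below maps floating-point weights to 8-bit integer codes plus a single scale factor. It assumes a simple symmetric, per-tensor scheme chosen for clarity; it is not the specific method used by the NUS researchers, and the function names are hypothetical.

```python
def quantise_int8(weights):
    """Map floats to integer codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Fall back to a scale of 1.0 when every weight is zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantise(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]
```

Each weight is then stored in one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most about half the scale per weight.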

AI tools were rolled out to popular communications and social media platforms, integrated into content production software, and made available for subscription or beta testing without developers disclosing to users how much energy was being consumed to generate their text or images. In most cases, it would not have been possible to quantify a meaningful value.

For many years, energy and water efficiency ratings have been shown to influence consumers’ decisions on which appliances to purchase. Requiring manufacturers of AI devices, as well as software developers, to disclose the energy efficiency or carbon footprint ratings of their products may be a necessary step towards nudging user behaviour. This will be especially relevant when AI is moved to specialised consumer devices. Measuring the energy demands of an algorithm or AI model is not straightforward, as it will depend on multiple factors. However, this should not deter such an approach but rather motivate research and regulation in this area.

AI remains in its infancy. There is still time to prioritise sustainable AI by focusing on energy-efficient systems, encouraging accountability from AI developers and chip manufacturers, and educating users on the true costs of AI.

For years, robots have been automating manufacturing processes, sensors have been used to predict equipment failures, and digital twins have been used to simulate and optimise operations. However, it is only recently that these technologies could be integrated as one, to optimise manufacturing, and turn factories into smart, responsive, and efficient production lines. This is not only thanks to artificial intelligence (AI) but also augmented reality (AR).

Integrating AI and AR gives factories the brains and eyes to operate with unprecedented efficiency and precision. AI functions as the cognitive powerhouse that processes data, optimises operations and provides predictive insights. AR serves as the eyes, overlaying digital information onto the physical world to enhance real-time decision-making, training, and maintenance. This combination can transform manufacturing by reducing downtime, improving accuracy, and enabling remote assistance.

Given the benefits of integrating AI and AR into their processes, many companies are jumping on the bandwagon. At Siemens, for example, AI algorithms process equipment data such as temperature, vibration and performance metrics to predict when a machine is likely to fail or require maintenance. The real-time data and predictive insights from AI are fed directly into the AR interface. Through AR glasses or AR-enabled devices, technicians can see predictive maintenance alerts and diagnostic information overlaid on the equipment, helping them make informed decisions quickly. When AI detects a potential issue, it can also trigger AR-guided maintenance procedures. By combining AI's data analysis and predictive power with AR's immersive and interactive visualisation, Siemens significantly improved its maintenance operations, leading to reduced downtime, increased efficiency, and greater accuracy.

AI and AR are also essential and complementary elements for the construction of digital twins and human-robot collaboration.

Digital twin technology uses AI to process real-time data and create simulations of factory operations with AR displaying the equipment status through digital control dashboards. As a digital replica of the physical system, digital twins can replicate processes, including failures predicted by the AI.

A full digital twin representation of an entire factory shop floor is rarely obtained. Instead, digital twinning of individual equipment is common; however, this limits the full potential of the technology.

At NUS, Associate Professor Ong Soh Khim from NUS Mechanical Engineering is pioneering AR technologies to address this limitation. Of particular interest is the integration of AR, AI and robotics.

Collaborative robots (cobots), which are robots that interact with human workers, benefit as AR provides workers with intuitive interfaces to control and interact with them. AI then enables these robots to understand and respond to complex commands and adapt to changing conditions. This type of collaboration enhances productivity and allows for more flexible and efficient workflows.

Integrating AI with AR is no easy feat. The issue of interoperability must be overcome since they are entirely different technologies. Other challenges include the requirement for specialised skills to develop, use, and maintain these technologies. For instance, in AR, workers must have sufficient knowledge and training in the wearing of AR-eyeglasses or head-mounted devices, to overcome possible nausea from prolonged wearing.

There are also the issues of data privacy and security, which are always present in any form of digital application. The advice from Assoc Prof Ong is to design interfaces with external networks so that robust security measures are in place to prevent potential data loss and protect against malware attacks.

“AI is often considered a double-edged sword, it can facilitate and provide great assistance to the users. On the other hand, it can also open up huge security risks, loss of information and data, scams and hackings by malicious means. University and research institutions can play a role to design training modules to counter the downside of AI proliferation,” she added.

However, as technology advances and becomes more accessible, these challenges are likely to diminish. Advances in AI, such as improved machine learning algorithms and more powerful data processing capabilities, will enhance the intelligence and responsiveness of AR applications.

As with every technological advancement introduced to the world, concerns of being replaced by technology are etched in people’s minds. “People worried about job displacement when automation and robots were first introduced to the shop floor, but this never happened. It is highly unlikely that this ‘displacement’ would take place,” said Assoc Prof Ong.

Redesigning jobs and helping workers gain a better understanding of how these tools can assist in their daily tasks is crucial to alleviating such concerns.

While challenges remain, the ongoing advancements in both AI and AR suggest a future where manufacturing becomes even more efficient, flexible, and innovative. Together, AI and AR will create a powerful combination where the brain (AI) thinks, learns, and makes decisions, while the eyes (AR) see, guide, and interact. This will enable workers to take informed actions in training, maintenance, quality control and human-robot collaboration, leading to enhanced productivity, efficiency and accuracy in manufacturing.

In the battle against climate change, peatlands have emerged as unsung heroes. These wetlands, which are composed of organic material that has accumulated over thousands of years, store vast amounts of carbon dioxide. In fact, 31% of terrestrial carbon is stored in peatlands, despite them covering only 3% of the Earth’s land area.

Like many of the world’s ecosystems, peatlands are under threat. Agricultural expansion, commercial peat extraction and wildfires all contribute to the depletion of this carbon sink. Compounding the issue are the fragile environmental factors unique to this ecosystem that must be kept stable for it to effectively store carbon. Increasing soil temperature will accelerate the decay of organic material, while moisture levels influence not only the rate of decomposition but also plant diversity on the surface. Any shift in these conditions, whether from natural changes or human interference, could severely hamper efforts to rein in climate change.

Restoring and preserving peatlands is therefore essential.

Monitoring the extent and health of peatlands, and detecting illegal activities like deforestation and drainage, traditionally required labour-intensive field surveys for data collection. While these methods work for short-term monitoring of confined regions, they are less viable for comprehensive long-term monitoring across large regions.

Now, researchers are turning to specialised artificial intelligence models in their fight to conserve Southeast Asia’s peatlands. Of particular use is AI’s ability to process vast amounts of data and identify patterns. Enabling this approach is the growing number of data sources available for environmental monitoring. Satellite imagery, for example, reaches even the most isolated peatlands, and covers vast regions. Ground-based sensors and imaging measurements can also be incorporated to offer detailed insights into microclimatic conditions, microtopography, water chemistry, and biodiversity.

At NUS, the Integrated Tropical Peatlands Research Program (INTPREP), led by Associate Professor Sanjay Swarup from the NUS Environmental Research Institute, monitors reforestation in retired commercial peat-based plantations. In this research, the team used satellite images, airborne LiDAR, and an in-house logistic regression model to track forest regeneration over time, identify regeneration hotspots, and identify the types of trees that are growing back. Focusing on the hotspots, the team collects detailed, multi-year measurements of tree growth, physiology and carbon emissions. They also assess the soil microbiome to learn more about the mechanisms of forest regrowth.

Beyond monitoring, predictive AI models are being developed to forecast changes in the flow of carbon in response to climate change and human activities. Such modelling aids in the formulation of adaptive management strategies. To enable such models, Assoc Prof Swarup's team is collecting and curating multi-year record datasets of predictor variables that cover a wide range of spatial scales.

Using this data, AI-based methods can be employed to identify markers that predict peat health and forest regrowth, and condense large-scale microbiome gene datasets to parametrise multi-scalar predictive models.

While AI offers promising solutions, its application in peatland conservation is not without challenges. Data privacy, algorithm bias, and the need for robust infrastructure in remote areas must all be addressed.

Ethical considerations also come into play, particularly concerning data ownership and governance. In indigenous territories, where traditional knowledge intersects with scientific data, there is a need to navigate complex issues of ownership, access, and control over data. Respecting indigenous rights and perspectives is crucial in integrating AI technologies responsibly.

Collaborative efforts involving scientists, policymakers, local communities, and technology developers are crucial to overcoming these challenges. Looking forward, Assoc Prof Swarup's team aims to collaborate with various stakeholders to develop culturally sensitive, science-based guidance and climate-smart agricultural practices to future-proof the livelihoods of communities who reside on coastal peatlands.

To do this, the team will first identify coastal peatlands that are most vulnerable to sea level rise using remote sensing and predictive AI models. This work will be done in collaboration with the NUS Centre for Remote Imaging, Sensing and Processing (CRISP) and the NUS AI Institute. Next, they will collaborate with researchers from the NUS Centre for Nature-based Climate Solutions (CNCS) to conduct field campaigns with the local communities, to better understand their needs and practices. With this knowledge, and using a combination of carbon, vegetation, and microbiome process measurements collected at multiple spatial and temporal scales, the team will refine predictions of carbon outcomes to climate vulnerabilities in current models. Finally, the team hopes to share these predictions with the local communities to develop agricultural practices that are climate-smart, yet culturally inclusive.

By promoting transparency, inclusivity, and accountability, the team hopes to harness the full potential of AI to safeguard peatlands and enhance global carbon sequestration efforts.

Imagine computers that operate a million times faster than today’s supercomputers, that consume only a fraction of the energy of our mobile devices, where electricity can be generated, transmitted, and utilised with minimal loss, where telecommunications are instantaneous and perfectly secure, and where electronic devices are not only more efficient, but are adaptable.

It is possible that future computing technologies will demand such advancements, and yet, we are already reaching the theoretical and practical limits of traditional silicon-based semiconductor architecture. To achieve the future we all envision, completely new foundations on which technologies can be developed are required.

Quantum materials may provide the basis on which our future computing technologies can be built. At their core, quantum materials possess key electronic and magnetic properties that enable unique behaviours and functionalities. These properties arise from the interactions of their electrons at the atomic and subatomic scales, where solid matter loses its conventional order and exhibits wavelike properties. It is at this level that we see quantum phenomena such as superconductivity, topological insulation, and exotic magnetic phases.

The ability to understand, create and control quantum materials is revolutionary. However, quantum materials possess a level of complexity that demands sophisticated, powerful tools and methods for their production, manipulation and application.

Despite advances in materials research, it remains challenging to precisely fabricate and tailor the properties of quantum materials at the atomic level. Current methods, such as on-surface synthesis, are unable to provide the level of selectivity, efficiency and precision required for many applications. Existing methods are also costly, time-consuming and technically demanding.

Developing new methods for material discovery requires a rethink of traditional manufacturing processes. To enable discovery and achieve accuracy, efficiency and cost-effectiveness, researchers at the NUS Institute for Functional Intelligent Materials (I-FIM) are integrating AI, automation and robotics into their approach.

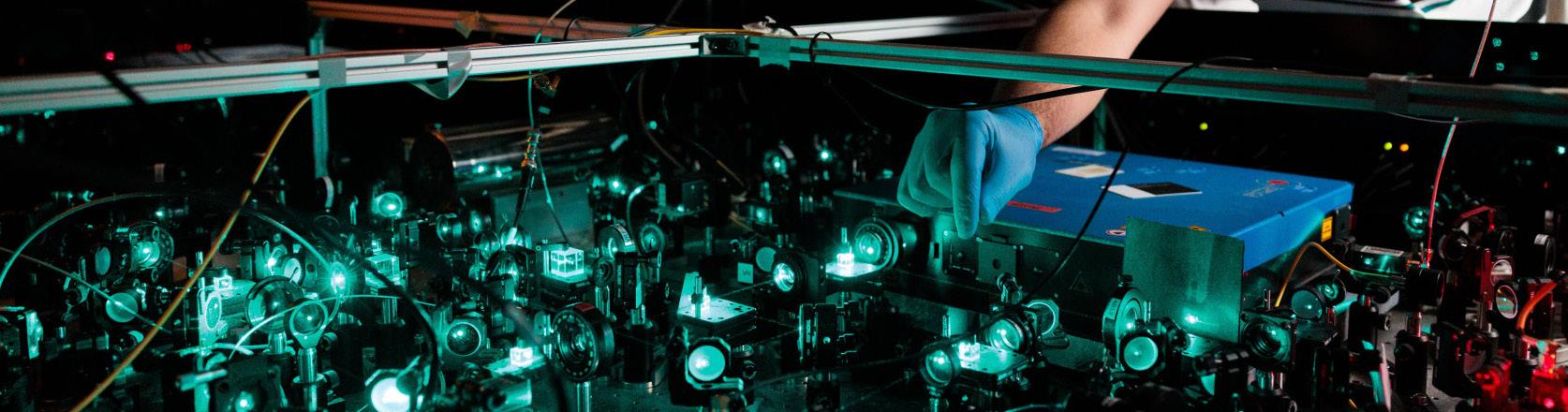

For example, in work led by Associate Professor Lu Jiong from NUS Chemistry and I-FIM, an AI-assisted atomic robotic probe was developed to mimic the decision-making process of chemists. This innovation seeks to revolutionise the fabrication of quantum materials with greater control and precision.

Their innovative concept comprises a chemist-intuited atomic robotic probe (CARP), which combines scanning probe microscope techniques with deep learning, to achieve precise fabrication of a carbon-based quantum material known as magnetic nanographene.

Assoc Prof Lu explains, “Our main goal is to work at the atomic level to create, study, and control these quantum materials. We are striving to revolutionise the production of these materials on surfaces to enable more control over their outcomes, right down to the level of individual atoms and bonds.” This research breakthrough was published in Nature Synthesis in February 2024.

A key feature of CARP lies in its ability to harness the knowledge of human chemists through a deep neural framework. For example, the expertise of chemists is used to annotate training datasets, which may include scanning tunneling microscopy (STM) images. This helps the intelligent modules understand what they need to focus on and how to achieve their goals efficiently. To achieve this, the team developed various layers of convolutional neural networks, a type of deep learning model used for image recognition and processing. The CARP framework was then trained on chemists’ knowledge of site-selective cyclodehydrogenation, which is a complex synthesis method for nanographenes.

In tests, the CARP framework demonstrated satisfactory performance in both offline and real-time operations, successfully triggering single-molecule reactions at a scale smaller than 0.1 nm. This marks the first instance of a probe chemistry reaction being assisted by AI.

The team also uncovered deep insights within the intelligent modules. Results indicated that CARP could effectively capture features such as the correct atomic sites, which are crucial in initiating reactions for the successful synthesis of nanographene, something challenging for human operators to notice. STM images typically contain both structural and electronic information, and chemists often struggle with distinguishing the correct atomic site within the range of 0.1 to 0.2 nm. The conventional but time-consuming approach relies on density functional theory (DFT)-based simulation to calculate the possible reaction pathway. With features uncovered by the intelligent modules in CARP, chemists can quickly comprehend possible reaction mechanisms, and DFT can then verify and detail the reaction mechanisms using less effort and fewer resources.

“Our goal is to extend the CARP framework further to adopt versatile on-surface probe chemistry reactions with scale and efficiency. This has the potential to transform conventional laboratory-based on-surface synthesis process into on-chip fabrication for practical applications. Such transformation could play a pivotal role in accelerating the fundamental research of quantum matters and usher in a new era of intelligent atomic fabrication,” added Assoc Prof Lu.

The implications of their findings on the fabrication of quantum materials could hold significant promise for the design of future technologies in the fields of data storage, computing, manufacturing, medicine and beyond.

As AI continues to advance, its role in the understanding, creation and application of quantum materials will become increasingly significant. It will herald a new era of precision and possibility that we can all embrace.

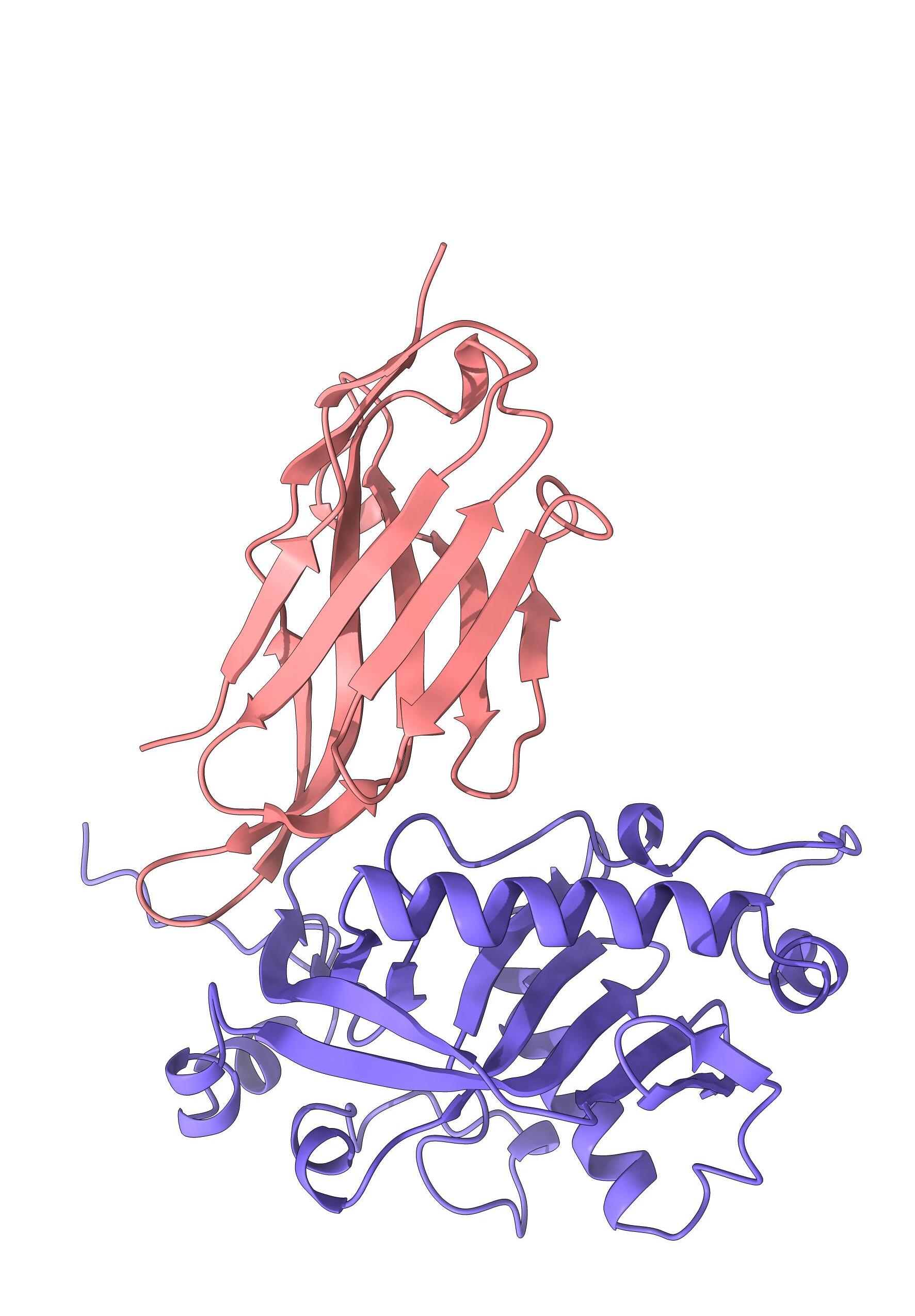

Proteins are complex biological molecules. The folding and twisting of basic amino acid chains give rise to intricately detailed shapes, with coils, ridges, grooves, exposed surfaces and hidden pockets. This precise structural arrangement confers stability, integrity and functionality.

Most proteins are also dynamic. They may undergo conformational changes induced by their cellular environment, or be subjected to biochemical alteration by other protein types. These modifications may activate or suppress protein function.

However, one of the most complex aspects of protein life lies in the fact that they nearly always function within a vast network of biochemical pathways or signalling cascades. In some cases, a protein may contribute to multiple pathways, while in other cases multiple proteins may serve the same function in critical pathways, providing redundancy in the event that one protein is damaged or functions erratically.

In fact, nearly all proteins interact with other proteins. These protein-protein interactions (PPIs) include antibody-antigen interactions, which enhance an organism's ability to fight pathogens and diseases; ligand-receptor binding, which initiates cellular signalling; and enzyme-substrate interactions, which facilitate metabolic processes.

Extensive effort has gone into determining the three-dimensional (3D) structures of proteins in complex with other proteins. These studies seek to understand how two or more proteins physically interact and how these interactions impact their function and regulation — providing knowledge that is key to drug discovery efforts, and in understanding the cause of certain diseases.

Although traditional techniques used to determine protein structure, like X-ray crystallography and Cryo-Electron Microscopy, offer precise structural insights, they also require significant time and financial investment, and lack scalability.

Now, researchers are turning to artificial intelligence (AI) and the computational prediction of protein structures. Algorithms like AlphaFold2 developed by DeepMind have proven to be impressively accurate in predicting more than 200 million 3D structures of proteins from amino acid sequences.

Created by Professor Zhang Yang from NUS Computing, I-TASSER is a pipeline which combines protein fold recognition with physics-based Monte Carlo simulations, developed for iterative protein structure assembly and refinement. I-TASSER has been ranked the most accurate method for automated protein structure prediction in community-wide CASP experiments.
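
The core idea behind a physics-based Monte Carlo simulation can be sketched with the generic Metropolis acceptance rule: propose a random change, always keep it if the energy drops, and occasionally keep it if the energy rises. The example below is a toy under stated assumptions and not I-TASSER itself; the one-dimensional `energy` function, the parameter names and the move set are all hypothetical stand-ins for a real force field and real conformational moves.

```python
import math
import random


def metropolis_minimise(energy, x0, steps, temperature, step_size, rng):
    """Search for a low-energy value of a single coordinate.

    Downhill moves are always accepted; uphill moves are accepted with
    probability exp(-(e_new - e) / temperature), which lets the search
    escape shallow local minima.
    """
    x, e = x0, energy(x0)
    best_x, best_e = x, e
    for _ in range(steps):
        candidate = x + rng.uniform(-step_size, step_size)
        e_new = energy(candidate)
        if e_new <= e or rng.random() < math.exp(-(e_new - e) / temperature):
            x, e = candidate, e_new
            if e < best_e:
                best_x, best_e = x, e
    return best_x, best_e
```

Lowering `temperature` makes the search greedier, while raising it allows more exploration. The sequence of accepted states forms a trajectory that can be inspected afterwards.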

Recently, Prof Zhang extended I-TASSER to D-I-TASSER by integrating advanced AI and deep-learning techniques with the I-TASSER folding simulations for atomic-level prediction of protein structure and function. D-I-TASSER is able to combine multiple AI techniques with physics-based force fields. This not only significantly enhances the structural modelling accuracy of individual AI methods but also allows tracking of folding simulation trajectories. The latter capability is critical to model protein folding dynamics, which is a long-standing challenge in the field, given that most AI-based methods only predict one static structure model.

Another advantage of D-I-TASSER is modelling the structures of multi-domain proteins, which contain more than one distinct structural unit or domain with different functions. Such structures are often hard to predict even with the state-of-the-art algorithm AlphaFold2, due to the complexity of domain-domain interactions. The blind test results from the most recent CASP15 experiment showed that D-I-TASSER achieved higher accuracy on multi-domain proteins when compared with AlphaFold2. This demonstrates a new avenue for complex protein structure and structure-based function predictions.

The accuracy of protein structure prediction algorithms like I-TASSER and D-I-TASSER is dependent on the quality of input data sources. Common bioinformatic inputs used for structure prediction algorithms include multiple sequence alignments (MSAs), which contain information that helps to find conserved regions or evolutionary relationships between biological sequences, including the amino acid sequence that defines protein structure. MSA collection tools may highlight important functional regions to improve structure prediction reliability, or reveal structural variations between similar proteins.

In a recent study, Prof Zhang reported a pipeline to create high-quality MSAs known as DeepMSA2. The method uses iterative searches across huge genomic and metagenomic sequence databases that contain a total of 40 billion sequences. A deep learning-based scoring strategy is applied to optimise MSA selection.

Compared with existing MSA construction methods, the iterative search and model-based preselection strategy of DeepMSA2 has been shown to construct MSAs with more balanced alignment coverage and homologous diversity, yielding higher-quality MSAs for structural prediction. In the recent CASP15 competition, Prof Zhang combined DeepMSA2 and cutting-edge AI models to develop DMFold, which significantly outperformed all other state-of-the-art methods for PPI complex structure prediction.

Determining the structure and function of proteins by leveraging AI and deep learning techniques is a cornerstone of modern biology and medicine. Having already made substantial contributions to the field, and as the domain lead of AI for Science at NUS AI Institute (NAII), Prof Zhang hopes to continue accelerating progress in computational structural biology to enhance its impact on future biomedicine and drug discovery efforts.

AI has brought about significant progress in protein structure prediction, but critical challenges remain in protein dynamics and complex structure prediction.

The rising frequency and severity of extreme weather events, driven by human-caused global warming [1], are placing significant socioeconomic and environmental burdens on communities and ecosystems around the globe.

These events are caused by weather conditions that are significantly anomalous relative to what is expected in a given region, and they can lead to both direct and indirect impacts. Heatwaves, for example, may cause health-related issues such as heatstroke, failures of energy grids and other infrastructure, and agricultural issues such as crop failure. When heatwaves are compounded by persistent dry conditions, water scarcity and wildfires may also result.
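As a rough illustration of what "anomalous relative to what is expected" can mean in practice, the sketch below compares new temperature readings against a baseline for the same location and flags readings that sit more than a chosen number of standard deviations from the baseline mean. The readings, the threshold of 2.0 and the function name are all hypothetical; operational definitions of extreme events typically rely on long climatological records and percentile-based or extreme-value methods rather than this simple rule.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observations, threshold=2.0):
    """Return (value, z_score) pairs for observations far from the baseline.

    An observation is flagged when its distance from the baseline mean
    exceeds `threshold` baseline standard deviations, in either direction.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variability")
    flagged = []
    for value in observations:
        z_score = (value - mu) / sigma
        if abs(z_score) > threshold:
            flagged.append((value, z_score))
    return flagged


# Hypothetical daily maximum temperatures (degrees Celsius) for one location.
baseline_temps = [30, 31, 29, 30, 32, 31, 30, 29, 31, 30]
new_temps = [31, 33.5, 30, 27]

flagged = flag_anomalies(baseline_temps, new_temps)
for value, z_score in flagged:
    print(f"{value} C is {z_score:+.1f} standard deviations from the baseline mean")
```

Because the baseline is specific to one location, the same temperature can be unremarkable in one region and extreme in another.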

Many governing bodies have declared climate change a global crisis, prompting efforts to not only better understand the root causes, but to also consider measures necessary to protect economies, infrastructure, and ecosystems from future weather extremes.

However, much work needs to be done. Identifying and implementing preventative measures starts with gaining a better understanding of the drivers of extreme weather and improving prediction capabilities. It is also essential that policymakers are informed of the vulnerabilities their region may be subject to.

To tackle this understanding-prediction-mitigation challenge, experts are now able to augment their own knowledge and experience with artificial intelligence (AI)-based tools, which tap on the wealth of data available from weather observations, satellite images, and textual data.

Organisations such as Google DeepMind, NVIDIA and the European Centre for Medium-Range Weather Forecasts (ECMWF) are embracing the challenge and producing competitive AI models for predicting the weather, such as GraphCast, FourCastNet, and the Artificial Intelligence/Integrated Forecasting System (AIFS).

While these AI systems demonstrate an ability to make efficient weather predictions from large historical datasets, they are unable to provide insights into why the prediction was made or which variables were the most important in their prediction. They are essentially black-boxes for domain experts and scientists alike, and this hinders our ability to learn new knowledge about weather and climate.

However, if we were able to understand what data the AI system deemed important (and possibly how it used those data), scientists could try to explain why the machine deemed those specific data important, and subsequently discover new knowledge. By embedding this new knowledge into existing prediction systems, or by shaping AI model behaviour, better forecasts of extreme weather may result.

To unlock this enormous potential, ‘explainable AI’ (XAI) plays a pivotal role. XAI refers to a framework that allows human users to better understand the results and outputs provided by machine learning algorithms. This includes, for example, providing accurate information on what data an AI system used, and possibly how it used those data to obtain a particular decision, prediction, or recommendation. An XAI system could not only predict extreme weather events, but also explain what factors determined its prediction. Unusual temperatures, pressure, wind speed patterns, or humidity levels are some of the factors it may consider. XAI allows researchers, meteorologists and policymakers to work with AI models, applying their domain knowledge to interpret AI insights and obtain more accurate weather and climate predictions. XAI models are also more trustworthy because it is clearer how the algorithms arrive at their output. This is important in the context of AI regulation and responsibility.
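One widely used, model-agnostic way to ask which inputs a black-box model relied on is permutation feature importance: shuffle one input at a time and measure how much the prediction error grows. The sketch below applies it to a stand-in model; `black_box`, its coefficients and the synthetic data are hypothetical, and this is one generic XAI technique rather than the specific method used in the research described here.

```python
import random


def mse(model, rows, targets):
    """Mean squared error of the model's predictions on the given rows."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=20, seed=0):
    """Average increase in MSE when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    base_error = mse(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by a shuffled value.
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mse(model, shuffled, targets) - base_error)
        importances.append(sum(increases) / n_repeats)
    return importances


def black_box(row):
    """Hypothetical stand-in for an opaque model; it ignores the third input."""
    temperature, humidity, _unused = row
    return 3.0 * temperature + 0.1 * humidity


# Synthetic inputs: (temperature, humidity, unrelated noise).
data_rng = random.Random(42)
rows = [
    (data_rng.uniform(25, 40), data_rng.uniform(40, 100), data_rng.uniform(0, 1))
    for _ in range(200)
]
targets = [black_box(r) for r in rows]

scores = permutation_importance(black_box, rows, targets)
for name, score in zip(["temperature", "humidity", "noise"], scores):
    print(f"{name}: {score:.2f}")
```

A large score means the model's accuracy depends heavily on that input, while a score of zero means the model did not use it; a domain expert can then judge whether the ranking makes physical sense.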

Pioneering the development and use of XAI for the understanding, prediction and mitigation of extreme weather events is Assistant Professor Gianmarco Mengaldo from NUS Mechanical Engineering.

He proposes the use of XAI to investigate the precursors of extreme weather events and identify region or city-specific vulnerabilities associated with these events. This approach aims to enhance the understanding and predictability of extreme weather events, to potentially improve forecasting and warning systems.

Specifically, Asst Prof Mengaldo focuses on the tropical Indo-Pacific region, where it is notoriously challenging to distinguish between natural variability and global warming trends. His preliminary findings, obtained with tools from dynamical systems theory and extreme value theory, have revealed critical changes in weather patterns that are significantly aggravating extreme weather events, especially heatwaves and extreme precipitation. These extreme events are frequently linked to heat-induced illnesses and potentially devastating flooding, respectively. He is currently collaborating with the Centre for Climate Research Singapore (CCRS), NVIDIA, Brown University, the French National Centre for Scientific Research (CNRS) and the University of Cambridge, among others, to expand this work to other regions worldwide and to apply XAI for a better understanding of extreme weather event precursors and of region-to-city-level vulnerabilities to these events.

Asst Prof Mengaldo’s research in XAI stands to significantly advance our ability to predict and mitigate extreme weather events. His approach not only promises to enhance the accuracy and reliability of weather forecasting, but also provides valuable insights into the underlying mechanisms driving these phenomena. These efforts may lead to more effective early warning systems and disaster response strategies, and may benefit policymakers who, when equipped with these advanced tools, would be better positioned to protect communities, reduce economic losses, and improve overall resilience in vulnerable urban areas.

1. Summary for all climate change 2021: 2022 [accessed 2024 Aug 16]. https://www.ipcc.ch/report/ar6/wg1/downloads/outreach/IPCC_AR6_WGI_SummaryForAll.pdf

Artificial Intelligence (AI) has emerged as a transformative technology across many sectors. In healthcare alone, it is revolutionising diagnosis, treatment, and patient care. In clinical oncology, for instance, AI tools can analyse histopathology images and mammography scans for diagnostics, identify novel therapeutic targets to advance drug discovery and development, and personalise cancer treatments by optimising drug dosages for individuals. This means not only minimising side effects, but also identifying the most effective combinations for treatment.

However, AI is still a relatively new technology and faces substantial hurdles in clinical adoption due to trust and understanding issues. Many patients and healthcare providers struggle to grasp how AI works, and regulators are cautious about trusting AI in a clinical environment due to concerns about its reliability and safety. Ethical considerations such as data privacy and security, and potential biases in AI algorithms may also be raised regarding its use. These factors tend to lead to delays in the approval process, which subsequently delays implementation in clinical practice.

A lack of understanding and trust, however, can be resolved through effective communication between researchers, regulators, healthcare providers and AI developers. With good communication, rigorous evaluation and accelerated delivery of impactful modalities will ensue, and this is what ultimately transforms healthcare and saves lives.

CURATE.AI, which is an AI-based platform designed to dynamically optimise and personalise drug doses for individual patients, is a prime example. This platform was developed by Professor Dean Ho and his team from NUS Biomedical Engineering, The N.1 Institute for Health (N.1) and The Institute for Digital Medicine (WisDM). During its development, frequent discussions with the relevant regulatory bodies took place, and according to Prof Ho, this was instrumental in bringing the technology to clinical trials in Singapore.


This began with a pilot trial (PRECISE.CURATE) that showed that colorectal cancer patients were able to receive doses that were 20% lower on average than standard treatment regimens dictate, yet exhibited the same efficacy. Since this initial trial, CURATE.AI and related platforms have gone on to be assessed in clinical trials for various cancers, including breast, GI, glioma, sarcoma, and multiple myeloma.

It has been a long road for both Prof Ho and CURATE.AI, but what has stood out to him along the way is the rapid response and active engagement of Singapore’s regulatory authority, the Health Sciences Authority (HSA).

With regulator engagement and curation came efficient turnaround times for trial initiation. The trials, particularly the individualised N-of-1 studies, involved highly personalised dosing plans that change over time, a hallmark of CURATE.AI’s unique capabilities, and required careful risk assessment and regulatory discussions to ensure their safe and efficient implementation. In addition, the team conducted extensive Institutional Review Board (IRB)-driven user engagement and acceptability studies to integrate CURATE.AI into clinical workflows.

According to Prof Ho, CURATE.AI’s clinical workflow is a breakthrough for the prospective use of AI to guide actual patient treatment. “This is a marked difference from the more prevalent studies of AI that pertain to the validation of AI models that have not yet been used to guide patient care. When paired with the recent featuring of CURATE.AI as a real-world use case in the WHO Regulatory Considerations of AI in Health guidance [1], this represents a full roadmap of ideation all the way to policy impact,” he said.

Prof Ho’s CURATE.AI clinical workflow was published in the ASCO Educational Book (EdBook) [2] in May 2023, a milestone for NUS given the global influence of this publication on practice-changing clinical oncology education.

Currently, most approved AI healthcare models are validated retrospectively with existing data. Applying AI prospectively to make real-time decisions, as seen in CURATE.AI, requires a behavioural shift from all stakeholders, including patients, doctors, AI experts, bioengineers, ethicists, and behavioural scientists. This shift will eventually lead to the acceptance of AI in clinical practice.

Prof Ho acknowledges that one of the barriers to fostering better engagement between regulators and AI developers is the lack of early and frequent interaction. Typically, technology developers seek regulatory input only when their innovation is ready for approval. Given that most innovations would probably never reach this stage, this approach leaves many innovators and developers unfamiliar with regulatory expectations, making later interactions more challenging.

To overcome these hurdles, Prof Ho recommends several strategies. Firstly, regular dialogues between regulators and developers can help align expectations. Secondly, programmes that educate developers about regulatory expectations early in the development process are also useful.

Finally, focus groups could also be established to enhance interaction among stakeholders including developers, healthcare providers, behavioural scientists and patients. Together, these steps will foster behavioural change and greater acceptance of AI in clinical practice.

Given the quickly evolving nature of AI and digital medicine, the agility of a country’s research and development ecosystem not only facilitates rapid access to discussions with all stakeholders, but is also vital in supporting innovation, including new discoveries and clinical trial designs. For instance, the Ministry of Health (MOH) TRUST platform facilitates data sharing for AI model validation. The data exchange platform calls for healthcare professionals, institutions, and private healthcare providers to contribute their data for research. Having access to larger and more diverse datasets helps researchers advance the discovery of novel associations, and enables breakthroughs in health research that will have benefits extending beyond Singapore’s population.

Ultimately, the integration of AI in healthcare holds immense potential, but its successful deployment requires robust engagement and communication between regulators and developers to ensure AI innovations are safe, effective, and beneficial for all stakeholders.

1. Royalty-free licenses for genetically modified Rice made available to developing countries. World Health Organization. 1970 Jan 1 [accessed 2024 Aug 16]. https://www.who.int/iris/handle/10665/267990

2. Kumar K, Miskovic V, Blasiak A, Sundar R, Pedrocchi AL, Pearson AT, Prelaj A, Ho D. Artificial Intelligence in clinical oncology: From Data to Digital Pathology and Treatment. American Society of Clinical Oncology Educational Book. 2023;(43). doi:10.1200/edbk_390084

NUS Real Estate

Real estate economics in Singapore is a balancing act. Global economic activity, GDP growth, interest rate forecasts, and housing availability all work alongside unique factors such as land scarcity, population density, and an ageing population to direct long-term urban planning strategies. These, in turn, aim to ensure housing and social support remain accessible across all socioeconomic groups.

With many empirical factors at play, the domain of real estate economics is expected to see a significant shift, driven by artificial intelligence (AI), in the coming years. AI-based modelling, simulation and prediction tools may redefine how policy is formulated, making use of current and historical data to optimise land-use and mitigate price surges or market crashes.