CDE Forging New Frontiers

Issue 06 | Aug 2025

Dear Reader,

Welcome to our latest edition of the CDE Research Newsletter!

This issue covers a topic that has impacted every facet of society: artificial intelligence. Therefore, it’s apt that we title this release: AI+X.

We have an exciting line-up for everyone. Key topics include:

Addressing data privacy concerns amongst transportation stakeholders in the increasingly connected travel ecosystem.

Boosting predictive preparedness against ground sinking, a continuing environmental challenge.

AI-enabled microrobots that navigate challenging terrain, much as our own cells do!

Powerful frameworks to help global AI researchers know when to trust or let go of historical data.

A smart thermostat that personalises comfort, which will be critical for supporting health in a changing climate.

Plus, a spectrum of additional exciting and impactful drivers of AI innovation!

It is clear that new advancements in AI are impacting all aspects of the world, from the environment and transportation to wellbeing, micro/nanotechnology, and beyond.

Our researchers represent the very best in our core ethos of bridging first-in-kind innovation with implementation and adoption.

As always, we’re grateful for your interest in innovation at CDE. Enjoy the issue!

All the best,

Dean Ho, Editor-in-Chief

What makes a city comfortable?

A new AI-powered index offers clues

A multidimensional AI-driven model uncovers what conventional liveability rankings often overlook.

In The Economist Intelligence Unit’s Global Liveability Index 2025, Copenhagen edged out Vienna to claim the top spot, ending the Austrian capital’s three-year reign as the world’s most liveable city. With its picture-perfect harbours and famously cycle-friendly streets, the Scandinavian capital scored near-perfect marks across the board.

But what exactly makes a city liveable — and who gets to decide?

The answer is not as straightforward as it seems. Most global indices rely on fixed sets of criteria: housing, transport, safety, healthcare, education. While these metrics are important, they often miss what it actually feels like to live somewhere. The noise outside your bedroom window. The way a street makes you feel at night. Or how unbearable the heat gets in the middle of the day.

Assistant Professor Filip Biljecki led a group to develop a comprehensive urban comfort index that combines hard data with subjective elements.

Urban comfort, in other words, extends well beyond cold infrastructure. It’s also about perception: the human experience of a place. It’s what Assistant Professor Filip Biljecki and his research group from the Department of Architecture, College of Design and Engineering, National University of Singapore, sought to capture through a new approach with data, design and AI as its core ingredients.

Published in the journal Sustainable Cities and Society, the study proposes a new way of measuring how people experience cities. Asst Prof Biljecki led a team to develop a comprehensive urban comfort index, tested in Amsterdam, that combines hard data (think air quality, access to public transport and how closely buildings are packed) with subjective elements like how beautiful or safe a street looks, based on widely available street-level imagery and human ratings.

Crucially, the team’s work also models how comfort shifts over time and space. “Cities are really like living systems,” says PhD student Ms Lei Binyu, also the paper’s first author. “A street that feels pleasant on a spring morning might feel stifling in summer. We wanted to reflect that complexity and show how comfort emerges from the dynamic interaction between people and their urban environments.”

To go about this, the team, in collaboration with the Department of Geography at the NUS Faculty of Arts & Social Sciences, assembled 44 different features across four broad dimensions: 3D urban morphology, socio-economic conditions, environmental factors and human perception. These were selected through extensive literature review and grounded in real-world understanding of how people engage with urban environments, from greenery and building density to noise, walkability and visual aesthetics.


The data was fed into a graph-based neural network model, a spatial form of deep learning that maps how characteristics in one neighbourhood ripple into the next. Layered on top of this was “explainable AI” (XAI), which breaks open the “black box” of machine learning to reveal which features drive the final comfort scores.
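To make the idea of characteristics “rippling” between neighbourhoods concrete, here is a minimal sketch of a single message-passing step of the kind graph neural networks stack in layers. It is an illustration under stated assumptions, not the team’s model: the four-neighbourhood graph, the two features (greenery share and noise level) and the weights are all hypothetical, and neighbours are combined with a simple mean.

```python
# Minimal sketch of one graph message-passing step (mean aggregation).
# Hypothetical data: 4 neighbourhoods, 2 features each (greenery share, noise level).
features = {
    "A": [0.8, 0.2],
    "B": [0.3, 0.7],
    "C": [0.5, 0.5],
    "D": [0.1, 0.9],
}
# Hypothetical adjacency: which neighbourhoods border each other.
neighbours = {
    "A": ["B", "C"],
    "B": ["A", "C", "D"],
    "C": ["A", "B"],
    "D": ["B"],
}

# Hypothetical learned weights: one set for the node itself, one for its neighbours.
W_self = [0.6, -0.4]
W_neigh = [0.3, -0.2]


def relu(x):
    return max(0.0, x)


def message_passing_step(features, neighbours):
    """Score each node from its own features plus the mean of its neighbours' features."""
    scores = {}
    for node, own in features.items():
        nbrs = neighbours[node]
        # Average the neighbours' features dimension by dimension.
        agg = [sum(features[n][d] for n in nbrs) / len(nbrs) for d in range(len(own))]
        z = sum(w * x for w, x in zip(W_self, own)) + sum(w * x for w, x in zip(W_neigh, agg))
        scores[node] = relu(z)
    return scores


scores = message_passing_step(features, neighbours)
for node, s in sorted(scores.items()):
    print(node, round(s, 3))
```

Because each score depends on the neighbours’ features, changing the noise level in B alters the scores of A, C and D too; stacking several such layers lets influence spread further across the network.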

“Traditional models often assume simple, linear relationships,” says Asst Prof Biljecki. “XAI enables us to see how multiple factors interact and vary across different contexts, which is key to understanding the nuanced realities of city life.”
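The article does not say which XAI technique the team used, so the sketch below swaps in permutation importance, one common model-agnostic way of asking which inputs drive a model’s outputs: shuffle one feature and measure how much the prediction error grows. The comfort model, the synthetic data and the feature names are all hypothetical.

```python
import random


def comfort_model(row):
    """Hypothetical trained model: greenery helps, noise hurts, the third input is ignored."""
    greenery, noise, postcode_digit = row
    return 2.0 * greenery - 1.5 * noise


random.seed(0)
# Synthetic neighbourhoods; targets come from the model itself, so the baseline error is zero.
X = [[random.random(), random.random(), random.random()] for _ in range(200)]
y = [comfort_model(row) for row in X]


def mse(X, y):
    return sum((comfort_model(r) - t) ** 2 for r, t in zip(X, y)) / len(y)


def permutation_importance(X, y, n_repeats=5, seed=1):
    """Average increase in error when each feature column is shuffled."""
    rng = random.Random(seed)
    base = mse(X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(X_perm, y) - base)
        importances.append(sum(increases) / n_repeats)
    return importances


names = ["greenery", "noise", "postcode_digit"]
importances = permutation_importance(X, y)
for name, imp in zip(names, importances):
    print(f"{name}: {imp:.3f}")
```

The ignored feature scores exactly zero, while greenery, with the larger coefficient, ranks above noise. Richer methods such as Shapley-value attributions can also reveal interactions between features, which this simple ranking does not.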

The team found that neighbourhoods with more greenery, better access to amenities and lower noise levels tended to rank higher on the comfort scale. Interestingly, some of Amsterdam’s central districts — long regarded as prime urban real estate — scored lower than their suburban counterparts. (Sorry, real estate agents!) Despite ticking the usual boxes for liveability, these areas were dragged down by visual monotony, crowding and poor street-level perceptions.

To validate the findings, the researchers pored over Google Places reviews, limiting them to Dutch-language entries to focus on local voices. The sentiment analysis confirmed the model’s results: while tourists might gush over the city centre, locals were more likely to groan about crowds, noise and poor upkeep. In contrast, less-celebrated suburbs drew fewer complaints and reflected a more cheery take on day-to-day living.


Apart from diagnosing issues, the urban comfort index also suggests solutions. In one case study, the researchers used a digital twin of Amsterdam (a 3D virtual model of the city) to simulate improvements in a neighbourhood just below the comfort baseline. By tweaking features like street design, air quality and facility access, they were able to model the impact of various interventions. Small changes, like planting vertical gardens or improving pedestrian infrastructure, produced outsized positive effects.

Cities of the future

What does this mean for those in a position to shape cities? For city planners and policymakers, the index offers a way to tailor strategies at the neighbourhood level, fine-tune public investment and better understand how design choices affect daily life. The model is also designed to be adaptable, so it could be customised for use in other cities, with local conditions, priorities and constraints.

And what’s next for the team? “We are only beginning to understand how AI can help reveal the interactions between people and the urban environment,” said Asst Prof Biljecki. “One direction we’re excited about is scaling the index to other cities with different climates, cultures and forms, to see how comfort varies and what remains universal. We’re also exploring ways to embed it into urban digital twins, so planners can simulate how new developments might impact comfort before any ground is broken.”

“Sometimes, the clearest picture of a city comes from the ground up, from the people who live in it, and from the subtle signs their environments give off,” adds Ms Lei. “To really understand what makes cities liveable, we have to start by asking what makes them comfortable.”

Modelling personal comfort: a smart thermostat that learns what temperature you like

ComfortGPT uses generative pre-trained models and a transformer architecture to predict individual thermostat preferences over time.

Chances are you’ve heard of, and used, ChatGPT. Ask the large-language-model-powered chatbot to plan a four-day getaway to Bangkok, and it’ll hand you a polished itinerary complete with temples and floating markets, punctuated with suggestions for boat noodles and mango sticky rice. Ask it about the origins of the universe, and it’ll churn out an impressively insightful response. (Though some fact-checking is still warranted.)



But ask it to analyse the temperature you actually want in your workspace — be it on a rainy day, after a long commute, or when you’re feeling just a bit off — and it hits a wall.

Meet ComfortGPT. Developed by Assistant Professor Ali Ghahramani and PhD student Mr Chen Kai, both from the Department of the Built Environment, College of Design and Engineering, National University of Singapore, this transformer-based machine-learning model does what conventional thermal comfort tools can’t: it learns your temperature preferences over time — and adapts to them. By doing so, it reduces unnecessary heating or cooling, boosting energy efficiency while keeping occupants comfortable. The duo’s work was carried out at the Building Robotics Laboratory at CDE and was published in Building and Environment.

Perceptive, not reactive

Thermostats were built to be reactive. Feeling hot or cold? Walk to the wall, or pick up the controller, and make a change. Meanwhile, large-scale air-conditioning systems, like those used in shopping malls, operate on generalised comfort models that treat everyone more or less the same.

“Comfort is personal and dynamic. A temperature you find cooling on a humid day might feel chilly after consecutive days of rain,” says Asst Prof Ghahramani. “Asking users to constantly report how they feel, as many research-based comfort models do, quickly leads to fatigue and patchy data.”

ComfortGPT takes a different tack. Instead of surveys, it learns from real behaviour, such as how occupants adjust thermostats over time and in different conditions. The researchers trained their system using a huge dataset from ECOBEE’s “Donate Your Data” programme, containing anonymised logs from over 100,000 thermostats across North America, collected over years and at five-minute intervals.

“We clustered these thermostat behaviours to create comfort archetypes, which are groups of users with similar temperature preferences and adjustment habits,” adds Mr Chen, who’s also the paper’s first author. Some like it cooler. Others prefer slightly toasty rooms, even when it’s warm out. Some change with seasonal weather.

Together with his PhD student, Assistant Professor Ali Ghahramani developed a transformer-based machine-learning model that learns temperature preferences over time.


“When a new user interacts with the thermostat — even just once — ComfortGPT matches their behaviour to the closest archetype and begins making predictions,” added Asst Prof Ghahramani, highlighting how the system learns and adapts from the get-go.
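The archetype idea can be sketched with plain k-means clustering: summarise each thermostat by a couple of behavioural features, cluster them, then assign a newcomer to the nearest cluster centre. The two features (mean setpoint and daily manual overrides), the synthetic users and the choice of k-means are illustrative assumptions; the paper’s actual clustering method and features may differ.

```python
import math
import random


def kmeans(points, k, iters=50, seed=0):
    """Plain Lloyd's k-means on lists of floats; returns the cluster centres."""
    rng = random.Random(seed)
    centres = rng.sample(points, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            idx = min(range(k), key=lambda c: math.dist(p, centres[c]))
            clusters[idx].append(p)
        # Move each centre to the mean of its members (keep it if a cluster is empty).
        centres = [
            [sum(dim) / len(members) for dim in zip(*members)] if members else centres[i]
            for i, members in enumerate(clusters)
        ]
    return centres


def nearest_archetype(user, centres):
    """Index of the archetype centre closest to a user's behaviour summary."""
    return min(range(len(centres)), key=lambda c: math.dist(user, centres[c]))


# Hypothetical behaviour summaries: [mean setpoint in °C, manual overrides per day].
rng = random.Random(42)
cool_lovers = [[rng.gauss(21.0, 0.3), rng.gauss(1.0, 0.2)] for _ in range(50)]
warm_lovers = [[rng.gauss(25.0, 0.3), rng.gauss(3.0, 0.2)] for _ in range(50)]
centres = kmeans(cool_lovers + warm_lovers, k=2)

# A newcomer observed only briefly.
new_user = [24.6, 2.8]
archetype = centres[nearest_archetype(new_user, centres)]
print([round(v, 1) for v in archetype])
```

Matching to an archetype gives a sensible starting prediction from very little data; a personalised model can then refine it as more interactions arrive.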

The model also uses a transformer mechanism — the same type of architecture powering ChatGPT — to tease out how recent and relevant each past interaction is. That means the system is not just learning what you liked once, but what you’re likely to prefer next, depending on changes in weather or routine.
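At the heart of that mechanism is scaled dot-product attention. The sketch below scores a few hypothetical past interactions against the current context and turns the scores into weights that sum to one. The context features (outdoor warmth and recency, both scaled to roughly [-1, 1]), the past setpoints and the query are made-up numbers, and a real transformer would learn projections for queries, keys and values rather than use raw features.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Weighted average of the values (here: past setpoints in °C).
    return sum(w * v for w, v in zip(weights, values)), weights


# Hypothetical past contexts: [outdoor warmth, recency].
past_contexts = [
    [1.0, -1.0],   # hot day, long ago
    [-1.0, 0.5],   # cold day, fairly recent
    [0.9, 1.0],    # hot day, very recent
]
past_setpoints = [23.0, 21.0, 24.0]
now = [1.0, 1.0]   # hot day, right now

prediction, weights = attention(now, past_contexts, past_setpoints)
print([round(w, 2) for w in weights], round(prediction, 2))
```

The recent hot-day interaction receives most of the weight, so the predicted setpoint leans towards 24 °C rather than simply averaging all past choices.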

That warm, fuzzy feeling

In testing, ComfortGPT predicted user setpoints with a mean error of just 0.65°C. That’s close enough for practical use in most buildings, and a significant improvement over traditional adaptive models, which tend to fall short in air-conditioned environments. By adjusting setpoints more precisely in response to external conditions, the model helps avoid overcooling or overheating, delivering conditioned air only when and where it’s needed and improving energy efficiency in the process.

Personalising comfort without constant user input is an exciting prospect. While this study was mostly focused on residential settings, the team is also exploring multi-user applications, including a robotic fan system that delivers targeted cooling to individuals in shared spaces. In parallel, they are developing ways to capture short-term changes in comfort using physiological signals, such as skin temperature patterns measured through infrared thermography. The team has also made the code open-source, inviting developers to build on the work.

“We’ve seen strong interest from stakeholders like Trane Technologies, who are exploring how this system could be integrated into future smart-building applications,” says Asst Prof Ghahramani. “For the first time, we have a scalable, data-driven method to make thermostats genuinely smart — able to adjust in real time to individual preferences while optimising for comfort and energy efficiency.”

Chemical and Biomolecular Engineering

A new recipe for modelling chemical processes

A machine-learning framework helps engineers build models for new chemical processes by combining knowledge from existing ones.

Master chefs and chemical engineers have more in common than one might expect. Both work based on recipes — some passed down, some improvised along the way — and both are in the business of mixing ingredients under certain conditions to produce consistent results.

Cooking, like chemical engineering, involves a heap of experimentation. But when it comes to inventing something new, even chefs don’t start from scratch.

They draw on flavours and techniques from other dishes. They borrow, tweak, adapt. Chemical engineers are doing the same, with the help of machine learning.

In a study published in Chemical Engineering Science, Assistant Professor Wu Zhe from the Department of Chemical and Biomolecular Engineering, College of Design and Engineering, National University of Singapore, introduced a method that allows engineers to build predictive models for complex chemical systems using knowledge from multiple existing ones.

The team’s method is grounded in “multi-source transfer learning”, a machine-learning technique where models trained on one or more existing processes are adapted to help understand a new but related process. But things get complicated when more than one source needs to be managed simultaneously, particularly when those sources vary in how helpful (or not) they might be.

Such challenges compound in the face of chemical manufacturing. Whether producing active pharmaceutical ingredients or designing new catalysts, every process has its own kinks as conditions and dynamics wax and wane. Some systems are easier to model because there’s plenty of historical data. Meanwhile, some are newer or less accessible, creating a data void. Yet all systems require accurate models to predict how they will behave under changing conditions.

Transfer learning offers an effective workaround. “Instead of building and training a model entirely from the ground up, which is often costly and data hungry, our approach figures out which ‘past recipes’ are most relevant, and what is the best way to combine them to suit the new process,” says Asst Prof Wu. “However, knowing which source to trust, and how much to rely on it, is another challenge.”

To build that trust, Asst Prof Wu’s team developed an optimisation-based framework that helps select and weight the best combinations of source models using a mathematical measure called the generalisation error bound, which estimates how well a model adapted from one process will perform on another.

This mathematical backbone helps engineers avert the pitfall of blindly reusing data from mismatched systems. “The framework evaluates how similar the input conditions are, as well as how the system’s dynamics, or the ‘outputs’, behave,” Asst Prof Wu explains. “This gives us a more robust way to choose our sources.”

Assistant Professor Wu Zhe and his team introduced a machine-learning framework that builds predictive models for new chemical processes by combining knowledge from existing ones.

“For instance, say you’re trying to model a new process to produce a pharmaceutical compound but only have limited data. Instead of starting from scratch, the framework draws from other existing processes, perhaps one that makes a similar drug, another with overlapping temperature conditions and a third that’s only vaguely related. It then weighs how useful each one is, combining the most relevant parts to build an accurate model for the new process,” adds Asst Prof Wu.
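A toy version of that weighing step is sketched below. Each source model is scored on the target’s few data points, plus a penalty for how far its training inputs sit from the target’s inputs. That score is only a crude stand-in for the generalisation error bound in the paper, whose exact form and optimisation are not reproduced here. Lower scores earn larger weights through a softmax, and the weighted models are blended. The three source models, the data, the penalty factor `lam` and the `temperature` parameter are all hypothetical.

```python
import math

# Hypothetical source models: each maps a reactor temperature (°C) to a predicted yield.
sources = {
    "similar_drug": {"model": lambda T: 0.020 * T + 0.10, "input_mean": 60.0},
    "overlapping_temps": {"model": lambda T: 0.018 * T + 0.15, "input_mean": 55.0},
    "vaguely_related": {"model": lambda T: 0.005 * T + 0.60, "input_mean": 120.0},
}

# Scarce target-process data: (temperature, measured yield).
target = [(55.0, 1.21), (60.0, 1.30), (65.0, 1.41)]
target_input_mean = sum(T for T, _ in target) / len(target)


def score(src, lam=0.01):
    """Empirical error on target data plus an input-discrepancy penalty (a rough bound proxy)."""
    err = sum((src["model"](T) - y) ** 2 for T, y in target) / len(target)
    discrepancy = abs(src["input_mean"] - target_input_mean)
    return err + lam * discrepancy


def source_weights(sources, temperature=0.05):
    """Softmax over negative scores: better-matched sources get more weight."""
    names = list(sources)
    s = [score(sources[n]) for n in names]
    m = min(s)
    exps = [math.exp(-(x - m) / temperature) for x in s]
    total = sum(exps)
    return {n: e / total for n, e in zip(names, exps)}


weights = source_weights(sources)


def blended_model(T):
    return sum(w * sources[n]["model"](T) for n, w in weights.items())


print({n: round(w, 3) for n, w in weights.items()})
print(round(blended_model(62.0), 3))
```

The closely related source dominates, the partially overlapping one contributes a share, and the vaguely related one is effectively switched off, mirroring the intuition in the quote above.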


The team’s framework builds on their prior work in transfer learning, including a 2023 paper in the AIChE Journal that introduced a method for adapting models from a single source to a target process. “This current study extends our past work to multiple sources, each with varying degrees of relevance, allowing a more nuanced and flexible approach to model building.”

Cooking up efficient chemical processes

Conducted as part of the Pharma Innovation Programme Singapore (PIPS), a multi-institutional initiative involving industry collaborators such as GSK, Pfizer, MSD and Syngenta, the team’s work addresses the need for efficient, scalable modelling tools in pharmaceutical and chemical manufacturing.

In one case study, the team applied their approach to a simulation of a continuous stirred tank reactor, a standard piece of equipment in chemical plants. By drawing from a combination of previously modelled reactors, each slightly different in parameters or operating ranges, they demonstrated that their multi-source approach resulted in better predictive accuracy than using any single source alone. Excitingly, even when the data from the target process was limited, the method still produced models robust enough to support downstream control systems.

“In chemical plants, these models feed into complex control systems that keep tabs on a plethora of operating conditions, from pressure to temperature to flow rates to feed ratio — all in real time,” adds Asst Prof Wu. “A robust, accurate model ensures safety, boosts efficiency and increases economic value.”

The team is now exploring how the method could be applied to photochemical reactors, which are traditionally difficult to model due to their complex dynamics and limited kinetic data. In parallel, they are planning collaborations with biopharmaceutical companies to support the scale-up of bioreactor systems, where nonlinear dynamics and data scarcity similarly pose hurdles.

“We aim to show how transfer learning can bridge knowledge gaps across diverse chemical processes, accelerating innovation even when data or theory is incomplete,” says Asst Prof Wu.

Safeguarding data privacy on the road

A new model based on federated learning supports data collaboration between traffic authorities and mobility firms without compromising privacy.

Every movement on the road, from sudden braking to avoid a cut-in vehicle to rerouting in response to congestion, generates torrents of data. When aggregated and analysed at scale, these data reveal hidden patterns: how minor disruptions ripple into delays far away, how drivers change routes in response to unexpected slowdowns, and how near-miss incidents might signal future crash risks.

Assistant Professor Yang Kaidi led a team to develop a model based on federated learning that supports data collaboration between traffic authorities and mobility companies.

With the rapid development of AI, traffic authorities are increasingly tapping into this data to improve how they estimate and manage congestion. However, the most accurate view of what’s happening on the road often requires fusing data from multiple sources, be it public traffic sensors, private fleet routes or vehicle telemetry. Each source offers a different piece of the picture. The problem is that these pieces are rarely shared.

“Mobility companies worry about revealing their service areas or fleet behaviours. Government agencies have concerns too, and rightly so, like the risk of exposing infrastructure layouts or traffic-control strategies,” explains Assistant Professor Yang Kaidi from the Department of Civil and Environmental Engineering, College of Design and Engineering, National University of Singapore.

Asst Prof Yang led a team to develop a new framework that might just free this data deadlock. Detailed in their paper published in Transportation Research Part C: Emerging Technologies, the framework allows different transportation stakeholders to collaborate and make sense of their combined traffic information, all without divulging any sensitive data to one another.

The team’s technique works like a kind of secret handshake between systems. Instead of pooling raw data in one place, each party keeps their information to itself but participates in training a shared digital model. “Think of it as each party privately working on its part of a puzzle and only exchanging a few hints,” adds Asst Prof Yang. “The model then learns from these encrypted signals to estimate real-time traffic conditions, such as vehicle flow and road density, across a road network.”
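The basic federated loop can be sketched with federated averaging (FedAvg), a standard scheme that is much simpler than the team’s framework: each party fits a shared linear model on its own private records for a few gradient steps, and only the model parameters, never the raw records, are sent to be averaged. The two parties, their data and the linear trend are hypothetical, and no encryption is shown.

```python
import random


def local_update(w, b, data, lr=0.5, epochs=10):
    """A few epochs of full-batch gradient descent on one party's private (x, y) pairs."""
    for _ in range(epochs):
        gw = sum((w * x + b - y) * x for x, y in data) / len(data)
        gb = sum((w * x + b - y) for x, y in data) / len(data)
        w, b = w - lr * gw, b - lr * gb
    return w, b


def fed_avg(parties, rounds=100):
    """Federated averaging: parties train locally, a coordinator averages the parameters."""
    w, b = 0.0, 0.0                       # shared global model
    total = sum(len(d) for d in parties)
    for _ in range(rounds):
        updates = [local_update(w, b, d) for d in parties]  # raw data stays with each party
        # Average the returned parameters, weighted by each party's sample count.
        w = sum(len(d) * uw for d, (uw, _) in zip(parties, updates)) / total
        b = sum(len(d) * ub for d, (_, ub) in zip(parties, updates)) / total
    return w, b


def make_party(lo, hi, n, rng):
    """Hypothetical private records: normalised sensor reading x, traffic measure y."""
    data = []
    for _ in range(n):
        x = rng.uniform(lo, hi)
        data.append((x, 2.0 * x + 0.5 + rng.gauss(0.0, 0.01)))
    return data


rng = random.Random(7)
road_sensors = make_party(0.0, 0.5, 50, rng)      # e.g. a public agency's records
fleet_telemetry = make_party(0.5, 1.0, 50, rng)   # e.g. a mobility firm's records

w, b = fed_avg([road_sensors, fleet_telemetry])
print(round(w, 2), round(b, 2))  # close to the hypothetical true values 2.0 and 0.5
```

Neither party alone covers the full range of conditions, yet the shared model recovers the overall trend. Production systems typically add secure aggregation or encryption so that even the exchanged parameters reveal little.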

This privacy-protecting technique is a departure from how such collaborations are usually done. Most current systems assume all the data can be handed over to a single party, an assumption that breaks down quickly in the real world. The researchers’ framework sidesteps this by borrowing concepts from machine learning and physics-based traffic modelling, and weaving them into a distributed system that plays well with others.


“Our work addresses a very practical issue. Different organisations hold different types of data, and they’re often unwilling, or unable, to share it directly. Our approach helps shatter these siloes,” says Asst Prof Yang.

Building trust while breaking barriers

How well does the team’s model fare in the real world, for example in one of Europe’s most congested capitals? To find out, the researchers tested their model using traffic data from a busy corridor in Athens, Greece. The study area combined two types of data: one from city-installed road sensors, and another from connected cars in private fleets. In real-world settings, this is akin to the public and private sectors each holding their own sliver of the full puzzle. The researchers demonstrated that even without either side sharing their raw data, their combined effort could outperform conventional approaches that require full data disclosure.

In fact, the more secure the exchange felt, the more willing each party became to share richer information, which led to even better results. “Protecting privacy doesn’t have to come at the cost of performance — it can actually improve it,” adds Asst Prof Yang.


The team also built a second version of their model designed for situations where ground-truth data (the kind you get from costly drone surveys or exhaustive traffic measurements) isn’t available. By blending in mathematical models of how traffic behaves, they were able to generate useful estimates even when fine-grained data were missing. This further expands the model’s potential in cities where data collection is limited or exorbitant.
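As one example of the kind of traffic physics that can fill in for missing measurements (the article does not specify which model the team blended in), the classic Greenshields model assumes speed falls linearly with density, so flow is q = v_f × k × (1 − k / k_j). The sketch below estimates flow from density alone; the free-flow speed and jam density are assumed values.

```python
def greenshields_speed(k, v_free=90.0, k_jam=150.0):
    """Speed (km/h) at density k (vehicles/km) under the Greenshields assumption."""
    return v_free * (1.0 - k / k_jam)


def greenshields_flow(k, v_free=90.0, k_jam=150.0):
    """Flow (vehicles/h) = density x speed."""
    return k * greenshields_speed(k, v_free, k_jam)


# Capacity occurs at half the jam density: q_max = v_free * k_jam / 4.
k_crit = 150.0 / 2
print(greenshields_flow(k_crit))   # 3375.0 vehicles/h
for k in (20, 75, 130):
    print(k, round(greenshields_flow(k)))
```

A relationship like this lets a model check that its estimated flows and densities are physically consistent even where no ground-truth survey exists.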

As Asst Prof Yang continues to build on this line of research, he envisions city governments working more closely with mobility firms to simulate road conditions, anticipate traffic bottlenecks, or even fine-tune traffic signal timings — all without crossing data-privacy lines.

“This research is one of the few pioneering efforts to address the cross-company privacy concerns among various transportation entities interested in collaborating and sharing heterogeneous datasets,” Asst Prof Yang adds. “We look forward to extending our privacy-preserving framework to applications like traffic forecasting and city-scale traffic control.”

The team is now developing algorithms that allow stakeholders to generate and share synthetic transportation data — realistic but artificial datasets that preserve privacy while retaining analytical value. To make this accessible to even non-experts, they are building a user-friendly platform powered by large language models that can translate prompts into tailored synthetic datasets.

The researchers are also examining how the public perceives location data privacy in transport systems. Through surveys, the team is studying how individuals weigh the trade-offs between protecting their private information and reaping the benefits of smarter mobility systems, and whether privacy-enhancing techniques can tilt the scales.


How to train your machine (to understand how the ground behaves)

A neural network meets century-old soil theory — and learns to predict what happens when the ground starts to sink.

Have you heard of the curious case of sinking skyscrapers?

In downtown San Francisco, the Millennium Tower, a luxury high-rise completed in 2009, has sunk more than 18 inches and tilted about 22 inches to one side. It wasn’t a design flaw in the building itself. It was the ground beneath it — soft, compressible and consolidating under the immense weight.

Perhaps the most iconic example is the Leaning Tower of Pisa, which has been slanting at a glacial pace since the 12th century due to its unstable soil.

The lesson, it seems, is that the earth beneath our feet has a mind of its own, and understanding how it works is both an engineering necessity and an ongoing challenge.

Assistant Professor Zhang Pin from the Department of Civil and Environmental Engineering, College of Design and Engineering, National University of Singapore, has taken on this challenge by harnessing the power of machine learning (ML).

In Géotechnique, Asst Prof Zhang introduces a new physics-informed data-driven approach to analyse soil consolidation — the process by which water is squeezed out of saturated soil, causing the ground to settle. It’s foundational to the safety and reliability of buildings, roads and even underground infrastructure.

Why settle for less?

Traditionally, engineers use a century-old model known as Terzaghi’s consolidation theory to describe how soft soil behaves under pressure. However, soil in the real world rarely adheres to equations on paper. Its properties fluctuate from place to place, measurements are noisy or sparse and the equations can be difficult to apply, especially when data is incomplete.
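For reference, Terzaghi’s one-dimensional theory reduces to a diffusion equation for the excess pore-water pressure u(z, t): ∂u/∂t = c_v ∂²u/∂z², where c_v is the coefficient of consolidation. A minimal explicit finite-difference solver is sketched below for a clay layer drained at both top and bottom; the layer thickness, c_v, load and grid size are illustrative assumptions.

```python
def consolidate(u0, c_v, H, t_end, nz=21):
    """Explicit finite-difference solution of du/dt = c_v * d2u/dz2.

    u0: initial excess pore pressure (kPa), uniform through the layer.
    c_v: coefficient of consolidation (m^2/year); H: layer thickness (m).
    Both faces are drained, so u = 0 there.
    """
    dz = H / (nz - 1)
    dt = 0.4 * dz * dz / c_v          # r = c_v*dt/dz^2 = 0.4 <= 0.5 keeps the scheme stable
    steps = int(t_end / dt)
    r = c_v * dt / dz**2
    u = [u0] * nz
    u[0] = u[-1] = 0.0                # drained boundaries
    for _ in range(steps):
        new = u[:]
        for i in range(1, nz - 1):
            new[i] = u[i] + r * (u[i + 1] - 2 * u[i] + u[i - 1])
        u = new
    return u


peaks = {}
for years in (0.1, 1.0, 5.0):
    profile = consolidate(u0=100.0, c_v=2.0, H=10.0, t_end=years)
    peaks[years] = max(profile)       # highest pressure sits at mid-depth
    print(years, round(peaks[years], 1))
```

The mid-depth pressure dissipates slowly at first and then faster as drainage reaches the centre of the layer; in practice c_v varies from place to place, which is exactly what makes field predictions hard.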

To tackle this, Asst Prof Zhang’s team formulated a system that combines two powerful tools. First, sparse regression strips away unnecessary mathematical clutter to keep only the most relevant terms in the equations, a process known as partial differential equation (PDE) discovery. Then, a physics-informed neural network learns to solve these equations, guided by the laws of physics. It is this end-to-end pipeline that sets the team’s work apart from many other ML-based approaches.
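The sparse-regression step can be illustrated with sequentially thresholded least squares, the idea behind SINDy-style PDE discovery. The sketch builds a small library of candidate terms (u, ∂u/∂z, ∂²u/∂z²), regresses ∂u/∂t on them, and repeatedly zeroes out small coefficients. It uses synthetic, noise-free data from a known two-mode solution of Terzaghi’s equation with an assumed c_v = 0.5, so it shows only the principle; the team’s library, thresholds and weak-form machinery are not reproduced here.

```python
import math

C_V = 0.5  # assumed "true" coefficient used to generate the synthetic data


def u(z, t):
    """Two-mode exact solution of du/dt = C_V * d2u/dz2 on 0 <= z <= 1 with u = 0 at both ends."""
    return (math.sin(math.pi * z) * math.exp(-C_V * math.pi**2 * t)
            + 0.5 * math.sin(3 * math.pi * z) * math.exp(-9 * C_V * math.pi**2 * t))


def solve(A, b):
    """Least squares via the normal equations and Gauss-Jordan elimination (fine for tiny systems)."""
    n = len(A[0]) if A else 0
    M = [[sum(r[i] * r[j] for r in A) for j in range(n)] + [sum(r[i] * y for r, y in zip(A, b))]
         for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda row: abs(M[row][col]))
        M[col], M[piv] = M[piv], M[col]
        for row in range(n):
            if row != col:
                f = M[row][col] / M[col][col]
                M[row] = [a - f * p for a, p in zip(M[row], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def stlsq(library, target, names, threshold=0.05, iters=10):
    """Sequentially thresholded least squares: fit, drop small terms, refit."""
    active = list(range(len(names)))
    coefs = [0.0] * len(names)
    for _ in range(iters):
        fitted = solve([[row[j] for j in active] for row in library], target)
        coefs = [0.0] * len(names)
        for j, c in zip(active, fitted):
            coefs[j] = c
        keep = [j for j in active if abs(coefs[j]) >= threshold]
        if keep == active:
            break
        active = keep
    return dict(zip(names, coefs))


# Candidate terms estimated with central finite differences at sample points.
h = 1e-4
names = ["u", "u_z", "u_zz"]
library, target = [], []
for i in range(1, 20):
    for j in range(1, 10):
        z, t = i / 20, j * 0.01
        library.append([
            u(z, t),
            (u(z + h, t) - u(z - h, t)) / (2 * h),
            (u(z + h, t) - 2 * u(z, t) + u(z - h, t)) / h**2,
        ])
        target.append((u(z, t + h) - u(z, t - h)) / (2 * h))

coefs = stlsq(library, target, names)
print({k: round(v, 3) for k, v in coefs.items()})  # only u_zz survives, close to C_V
```

With noisy field data the derivative estimates become the weak point, which is one motivation for the weak-form formulation described below.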

Interestingly, the team’s method works even when data is messy and incomplete — the sort engineers acquire from fieldwork. For instance, even with just a few pressure readings, the system can detect the intrinsic pattern and churn out reliable predictions. They tested it using lab data from two loading scenarios: surcharge (like the pressure from a building) and vacuum preloading (a technique used to firm up soil before construction). In both cases, the model successfully recovered the governing equations and predicted how pressure dissipated through the soil over time.

Together with his team, Assistant Professor Zhang Pin introduced a physics-informed data-driven approach to analyse soil consolidation.

To make the system even more reliable, the researchers used a version of the equations known as weak-form PDEs. These are better suited to handling real-world data because they are less sensitive to fluctuations, averaging them out over space and time. They also added a feature called Monte Carlo dropout, which lets the model express how confident it is in its predictions. This offers engineers a quantifiable sense of how much they can trust the system.
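Monte Carlo dropout can be sketched in a few lines: keep dropout switched on at prediction time, run the same input through the network many times, and read the spread of the outputs as an uncertainty estimate. The tiny one-hidden-layer network below has made-up weights standing in for a trained model, and the input value and dropout rate are illustrative assumptions.

```python
import math
import random
import statistics

# Hypothetical "trained" weights for a 1-input, 8-unit, 1-output network.
W1 = [0.9, -1.2, 0.5, 1.5, -0.7, 1.1, -0.3, 0.8]
B1 = [0.1, 0.3, -0.2, 0.0, 0.4, -0.1, 0.2, 0.05]
W2 = [0.6, -0.4, 0.9, 0.3, -0.5, 0.7, 0.2, -0.6]


def forward(x, rng, p_drop=0.2):
    """One stochastic forward pass with dropout applied to the hidden layer."""
    out = 0.0
    for w1, b1, w2 in zip(W1, B1, W2):
        h = math.tanh(w1 * x + b1)
        if rng.random() < p_drop:
            continue                      # this hidden unit is dropped
        out += w2 * h / (1.0 - p_drop)    # inverted-dropout rescaling keeps the mean unbiased
    return out


def mc_dropout_predict(x, n_samples=1000, seed=0):
    """Mean and standard deviation over many dropout-perturbed passes."""
    rng = random.Random(seed)
    samples = [forward(x, rng) for _ in range(n_samples)]
    return statistics.mean(samples), statistics.stdev(samples)


mean, std = mc_dropout_predict(0.5)
print(f"prediction {mean:.3f} +/- {std:.3f}")
```

A wide spread flags inputs where the model is unsure, for example far from any measured data, so engineers know when to collect more readings.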

The result is an ML-driven tool that can simulate the future behaviour of soil and infer key parameters from limited measurements — much like reverse-engineering the bigger picture from a few scattered pieces of evidence.

“It’s a new way of thinking about how we model the ground,” says Asst Prof Zhang. “Instead of imposing theory onto data, this approach allows data to reveal its own underlying structure, guided by the guardrails of physics.”

“Engineers don’t always have complete data at their disposal, and soil is inherently complicated. What we’ve built is a framework that is general, robust and can adapt to what’s available,” he adds.


The system’s flexibility means its usefulness could extend beyond this single application. It can be used to validate patterns discovered manually, or to pull governing equations out of large experimental or simulated datasets without needing expert guidance at every step. For instance, it could be applied to problems in areas ranging from solid and fluid mechanics to bioinformatics, neuroscience and even finance.

The team’s future work includes extending the system to more complex scenarios, such as wave-structure interactions or extreme weather-induced geohazards — the kinds of multi-physics, high-dimensional problems that engineers often face when figuring out thorny terrain or modelling underground systems.

“I feel the earth move under my feet,” sings Carole King.

But with Asst Prof Zhang’s work, we may be learning to read its rhythm.

Show-o, don’t tell: new unified AI model does it all

A compact AI model understands images/videos, generates visuals and weaves language all within a single framework.

In early 2024, Assistant Professor Mike Shou was repeatedly met with the same question from peers in the generative AI community: Should a model use step-by-step prediction or a gradual refinement process to generate content?


Both approaches had proven effective. One, autoregressive modelling, underpinned most large language models, predicting each word from the words before it; the other, diffusion modelling, had become popular in image generation, transforming visual noise into clarity over several stages. But to Asst Prof Shou, the question wasn’t which method to choose. It was why they were treated as mutually exclusive in the first place.

“There’s no fundamental reason they should conflict. So we started thinking: what if we could combine them?” says Asst Prof Shou.

That idea became the impetus for Show-o, a unified AI model designed by Asst Prof Shou and his team at the Department of Electrical and Computer Engineering, College of Design and Engineering, National University of Singapore. Rather than specialising in a single task, Show-o moves fluidly between analysing content and producing it. From understanding images and answering questions, to generating pictures from text and editing visuals with prompts, and even composing video-like sequences with matching captions, Show-o does it all using just one compact model.

“The name of the model is a nod to our lab, Show Lab, but the ‘o’ adds a layer. It stands for ‘omni’, which represents the idea of an all-in-one model that can handle any combination of tasks,” says Asst Prof Shou.

The team’s work, published as a conference paper at the International Conference on Learning Representations (ICLR) 2025, introduces a practical architecture for bridging two widely used generative strategies and, in doing so, points towards a more versatile kind of AI.

The design is based on an elegantly simple idea: use the best method for each kind of task. For multimodal understanding, Show-o builds sequences one token at a time, following today’s autoregressive modelling style in large language models. For visual generation, it takes a different route. It begins with a partially “masked” version of the image and fills in the missing parts gradually, guided by context. This image generation process is based on a stripped-down version of what’s known as discrete diffusion modelling, which is faster than autoregressive models and easier to integrate into large language models than continuous diffusion modelling.
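To make the contrast concrete, here is a toy Python sketch of the two decoding styles. It is not Show-o’s code: `toy_predictor` is a hypothetical stand-in for a trained network, and the cosine unmasking schedule is an assumption borrowed from other masked-token image generators. The autoregressive loop commits one token per network call, while the masked loop starts from an all-masked sequence and, at each step, fills in the positions it is most confident about.

```python
import math

MASK = None  # marks an image token that has not been generated yet


def toy_predictor(tokens):
    """Hypothetical stand-in for a trained network.

    For every position it returns (predicted_token, confidence). The toy
    'prediction' is just the position index, and confidence rises with the
    number of already-known neighbours, loosely mimicking "guided by context".
    """
    n = len(tokens)
    preds = []
    for i in range(n):
        known = sum(1 for j in (i - 1, i + 1) if 0 <= j < n and tokens[j] is not MASK)
        preds.append((i, 0.5 + 0.25 * known))
    return preds


def autoregressive_decode(length):
    """Left to right, one network call per token: `length` steps in total."""
    tokens = [MASK] * length
    steps = 0
    for i in range(length):
        tokens[i] = toy_predictor(tokens)[i][0]  # commit exactly one new token
        steps += 1
    return tokens, steps


def masked_decode(length, num_steps):
    """Start fully masked; each network call commits the most confident predictions."""
    tokens = [MASK] * length
    for step in range(1, num_steps + 1):
        preds = toy_predictor(tokens)
        masked = [i for i, t in enumerate(tokens) if t is MASK]
        # Cosine schedule (an assumption): how many tokens stay masked after this step.
        remaining = math.floor(length * math.cos(math.pi / 2 * step / num_steps))
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[: len(masked) - remaining]:
            tokens[i] = preds[i][0]
    return tokens, num_steps


ar_tokens, ar_steps = autoregressive_decode(16)
mk_tokens, mk_steps = masked_decode(16, num_steps=4)
print(ar_steps, mk_steps)  # 16 4
```

With 16 tokens, the sequential loop needs 16 network calls while the masked loop finishes in 4, which mirrors the intuition that refinement-style generation can need fewer steps than fully sequential methods.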

Assistant Professor Mike Shou and his team developed Show-o, an AI model that combines both capabilities of multimodal understanding and generation.


The novelty of the team’s approach is that these two processes coexist within a single system. Most existing models require separate components — one for visual comprehension, another for visual generation — often stitched together in cumbersome ways. Show-o eschews this. “Everything, from training to inference, runs through a unified framework. There’s no switching between subsystems or relying on pre-processing pipelines,” adds Asst Prof Shou.

That culminates in a model that can handle a wide range of tasks with minimal fuss. Caption an image? Show-o at your service. Generate an image from a sentence? Easily done. Extend the edge of a photo into imagined space, or replace one object with another using just a prompt? No problem. It even supports video-style applications, such as generating a step-by-step visual sequence from a cooking instruction, complete with corresponding text.

Lean and mean

Despite its breadth, Show-o remains remarkably lean. At just 1.3 billion parameters, it is far smaller than many of today’s flagship models. For comparison, GPT-4 is estimated to have around 1.5 trillion.


“But sometimes big things come in small packages. Our model can outperform much larger systems on standard benchmarks,” says Asst Prof Shou. For example, in tasks like visual question answering and text-to-image generation, Show-o matches or exceeds models many times its size. It also requires significantly fewer steps to generate high-quality images compared to fully sequential methods, making it faster and more efficient.

The model’s compact, all-purpose design, available as an open-source codebase, has already spurred follow-up research from major research labs, including those at MIT, Nvidia, Meta and NYU. As the first to unify two major generative strategies (stepwise prediction and iterative refinement) in a single network, Show-o has helped reshape how researchers think about building future foundation models.

“Today’s models like Gemini (Google) can analyse a video and answer questions about it, while Sora (OpenAI) can generate realistic video from a text prompt,” adds Asst Prof Shou. “Show-o unifies both capabilities of multimodal understanding and generation into one single model. Thanks to this design, our model’s inputs and outputs can be any combination and order of visual and textual tokens, flexibly supporting a wide range of multimodal tasks, from visual question-answering to text-to-image or video generation to mixed-modality generation.”

The team has since developed a newer model, Show-o2, which delivers improved performance across the board while remaining compact at around two billion parameters. A larger version, with around eight billion parameters, has also been explored and is now available for those who need stronger performance.

“Ultimately, our goal wasn’t to make a huge model,” says Asst Prof Shou. “We wanted to build a smarter one — a model that could do more.”

Turns out, a jack of all trades can be a master too.

Tracing the family tree of AI models

Biology inspires a method to map the ancestry of AI models — and what we inherit may matter more than we think.

At some point, many of us have wondered about the roots of who we are. Perhaps you’ve suspected a streak of athleticism from a grandparent, or wondered if quick wit runs in the family, or imagined that being a musical genius might just be your natural calling. Our DNA is our biological blueprint, revealing everything from ancestry and predisposition to disease, to subtle quirks of behaviour and aptitude. At the same time, genes are among the most important factors in identifying familial relationships and evolutionary pathways.

Artificial intelligence, built on silicon rather than cells, has its own way of passing down traits. AI systems nowadays are rarely created from scratch but are often adapted from existing models, fine-tuned on new data, repurposed from different tasks. Over time, this has created a vast web of interrelated models. But unlike in biology, there is as yet no central registry, no “genome project”, no family tree.

In a new study selected as an oral presentation at the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, a distinction given to only 0.8% of over 30,000 submissions, Assistant Professor Wang Xinchao from the Department of Electrical and Computer Engineering, College of Design and Engineering, National University of Singapore, poses a strikingly simple question: if AI models inherit from each other, can we trace their ancestry?

With the paper’s first author Mr Yu Runpeng, Asst Prof Wang developed a system to do just that. “We call it ‘neural lineage detection.’ It traces the ‘parent’ model from which a given AI model was fine-tuned — and sometimes even its ‘grandparent’ or ‘great-grandparent’,” says Asst Prof Wang. “It’s part forensic tool, part diagnostic instrument, and wholly relevant in untangling today’s increasingly complex, interdependent web of AI systems.”

Getting to the roots

To bring neural lineage detection to life, the duo developed two complementary methods, each tackling the problem from a different angle. The first, a learning-free approach, is a computational shortcut that approximates how an AI model changes when it’s fine-tuned from another. Rather than running a model through multiple simulations, it uses a mathematical trick, inspired by ideas from theoretical machine learning, to estimate how closely a child model aligns with each potential parent, based on their internal structures. It’s like scanning the fingerprints of a model’s architecture and comparing them against known prints, with a lens that takes inheritance into account.
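As a deliberately simplified illustration, the sketch below ranks candidate parents by how far a child model’s weights sit from each of theirs. It is not the team’s learning-free method, which relies on an approximation inspired by theoretical machine learning; the models here are hypothetical random weight vectors, and the heuristic assumes fine-tuning only nudges weights slightly away from where they started.

```python
import math
import random


def l2_distance(a, b):
    """Euclidean distance between two flattened parameter vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def likely_parent(child, candidates):
    """Return the name of the candidate whose weights sit closest to the child's.

    Heuristic assumption: fine-tuning moves weights only a little, so the
    true parent tends to be the nearest candidate.
    """
    return min(candidates, key=lambda name: l2_distance(child, candidates[name]))


rng = random.Random(0)
dim = 1000
# Three hypothetical pre-trained "parent" models with independent weights.
parents = {f"model_{k}": [rng.gauss(0, 1) for _ in range(dim)] for k in "ABC"}
# Simulate fine-tuning model_B: small perturbations to each of its weights.
child = [w + rng.gauss(0, 0.05) for w in parents["model_B"]]

print(likely_parent(child, parents))  # model_B
```

A plain distance check like this says nothing about grandparents and stops working once a model’s internal structure has been altered, which is exactly where more principled lineage methods are needed.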

Assistant Professor Wang Xinchao and his team created a framework that traces the ancestry of AI models.


The second method is more data-driven. The researchers trained a dedicated system — an AI that learns to discern the telltale signs of fine-tuning — by studying hundreds of known parent-child model pairs. Apart from learning to match models by their current behaviour, the system also picks up on deeper signs of inheritance embedded in their structure and output. It’s like training a genealogist to read between the lines of a fragmented family history.

Both methods were remarkably effective across a range of tasks, from classifying images to detecting and labelling objects in complex scenes. In particular, the lineage detection methods performed well even when models had been fine-tuned through multiple generations or trained on scarce data. One demonstration involved a Frankenstein-like AI stitched together from fragments of nine image models. The team’s system expertly traced each individual fragment back to its source, a feat akin to pinpointing not just your ancestors but which traits came from whom.


“In a world where AI models are shared, adapted and redeployed across multiple platforms, understanding their lineage can help with accountability, bias tracing and even IP protection,” adds Asst Prof Wang. “A model’s ancestry can reveal where its assumptions came from, what data it was likely exposed to and what vulnerabilities or blind spots it may have inherited.”

Rooted in security

Such capabilities could greatly support efforts in AI governance, offering a way to map out how different systems are connected, where their training histories overlap and how knowledge circulates across the model ecosystem. Just as genetic analysis can help identify inherited disorders or risk factors in humans, neural lineage detection might one day help ascertain where an AI system’s flaws come from — and how to remedy them.


Looking ahead, Asst Prof Wang plans to apply the framework to more challenging tasks, such as tracing lineage when the model’s internal structure has been altered, which makes direct comparisons tough, or when only its external behaviour is observable (like in a black-box scenario, where ancestry is purely inferred from the model’s outputs without access to its inner workings).

“Just as in biology, understanding a system’s origins can illuminate not only how it functions but how it might evolve,” adds Asst Prof Wang.

Industrial Systems Engineering and Management

Trust, but verify: a new framework for smarter decision-making

Historical data can guide or mislead. A new theoretical framework shows how to lean on it, and when to walk away.

Say you’re planning to launch a product in Singapore and have a sea of historical sales data — but from the United States. Would you leverage this data to guide your decisions? Or would that be too speculative, given the many ways in which the two markets differ?

The conundrum of knowing when to trust past data, and when to ignore it, has long been a pain point for decision-makers in business, healthcare, technology and more. A theoretical study by Assistant Professor Cheung Wang Chi from the Department of Industrial Systems Engineering and Management, College of Design and Engineering, National University of Singapore, offers some pointers. His work, published in the Proceedings of the 41st International Conference on Machine Learning, looks into the thorny problem of learning and optimising decisions in the moment, while cautiously sifting through the influence of past experience.

“At its core, this is a mathematical take on a very human dilemma: how to act judiciously in the present, when your only guide might be flawed memories of the past,” says Asst Prof Cheung.

To trust, or not to trust?

To frame the problem, Asst Prof Cheung turned to a model inspired by probability theory, sometimes referred to as the “multi-armed bandit” problem. Picture a row of slot machines (“one-armed bandits”) in a casino. Each machine gives different, unknown payouts. You want to find the one that pays the most, but you don’t know which it is. So, you try each one, keep track of the results, and gradually favour the better performers.
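The casino picture maps onto a classic textbook strategy called UCB1 (upper confidence bound), sketched below purely as an illustration. It is a standard bandit algorithm rather than the study’s own, and the payout probabilities are made up. Each machine’s score is its average payout so far plus a bonus that shrinks the more often it is tried, so rarely tested machines still get a look-in.

```python
import math
import random


def ucb1(payout_probs, rounds, seed=0):
    """Play a row of slot machines with the classic UCB1 rule.

    payout_probs: hypothetical win probability of each machine (unknown to the player).
    Returns how many times each machine was pulled.
    """
    rng = random.Random(seed)
    k = len(payout_probs)
    pulls = [0] * k
    wins = [0.0] * k
    for t in range(1, rounds + 1):
        if t <= k:
            arm = t - 1  # try every machine once first
        else:
            # Optimism under uncertainty: average payout plus an exploration bonus.
            arm = max(
                range(k),
                key=lambda i: wins[i] / pulls[i] + math.sqrt(2 * math.log(t) / pulls[i]),
            )
        reward = 1.0 if rng.random() < payout_probs[arm] else 0.0
        pulls[arm] += 1
        wins[arm] += reward
    return pulls


pulls = ucb1([0.2, 0.5, 0.8], rounds=5000)
print(pulls)  # the 0.8 machine should receive the large majority of pulls
```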

Now imagine you’re allowed to peek at records from past players — someone else’s experience with the machines. There’s a catch: those records may be inaccurate. Perhaps the machines were refurbished. Maybe you’re not even in the same casino. Challenges abound. How do you decide whether that past data still applies — and whether using it will help or hinder you?

Asst Prof Cheung’s work is purely theoretical: there’s no real-world dataset, no simulations of consumer behaviour or ad clicks. Instead, it’s about the mathematics behind decision-making, namely what you can and cannot do with imperfect prior knowledge.

One of the study’s most important results is a negative one. It demonstrates that even if you have access to historical data, it won’t always help, and in some cases, it can’t help at all. Unless you have some additional clue about how different the past and present might be, your safest bet is to ignore the old data entirely and proceed as if starting from scratch. “We initially thought it might be possible to create an algorithm that always knows when to trust the past,” says Asst Prof Cheung. “But our failed attempts convinced us otherwise, and that led to our eventual impossibility result.”

Assistant Professor Cheung Wang Chi and his PhD student devised a new theoretical framework to lay the foundation for future algorithms that make more reliable decisions in uncertain environments.

To get around this limitation, Asst Prof Cheung and PhD student Lyu Lixing proposed a new algorithm, MIN-UCB, which makes use of a rough estimate of the “margin of error” between past and present. If this margin is small (for example, a 5% shift in customer behaviour between two seasons), the algorithm cautiously incorporates past data. If not, it plays it safe. “The algorithm compares two estimates of an option’s potential, one based on both past and present and the other on current data alone, and chooses the more conservative of the two,” says Mr Lyu.
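The idea of comparing two estimates and keeping the more conservative one can be sketched as follows. This is a simplified reading of the description above, not the published MIN-UCB: the confidence terms are the textbook UCB1 ones, and `bias_bound` is a hypothetical parameter standing in for the assumed “margin of error” between past and present data.

```python
import math


def online_ucb(online_sum, n_online, t):
    """Optimistic estimate of an option's potential from current data alone."""
    if n_online == 0:
        return math.inf
    return online_sum / n_online + math.sqrt(2 * math.log(t) / n_online)


def hybrid_ucb(online_sum, n_online, offline_mean, n_offline, bias_bound, t):
    """Optimistic estimate that pools past (offline) and current (online) data.

    Offline samples may be off by up to `bias_bound`, so the pooled estimate
    is widened by that bias, weighted by the share of offline samples.
    """
    n = n_online + n_offline
    if n == 0:
        return math.inf
    pooled_mean = (online_sum + offline_mean * n_offline) / n
    return pooled_mean + (n_offline / n) * bias_bound + math.sqrt(2 * math.log(t) / n)


def min_ucb_index(online_sum, n_online, offline_mean, n_offline, bias_bound, t):
    """Keep the more conservative (smaller) of the two optimistic estimates."""
    return min(
        online_ucb(online_sum, n_online, t),
        hybrid_ucb(online_sum, n_online, offline_mean, n_offline, bias_bound, t),
    )


# One option: 5 fresh trials (3 successes) plus 200 historical ones averaging 0.62.
small = min_ucb_index(3.0, 5, 0.62, 200, bias_bound=0.05, t=50)
large = min_ucb_index(3.0, 5, 0.62, 200, bias_bound=1.5, t=50)
print(round(small, 3), round(large, 3))
```

With a small margin of error the pooled estimate is tighter and wins the `min`; with a large one, the widened pooled estimate exceeds the online-only one, so the index falls back on current data alone, echoing the “plays it safe” behaviour.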

In practice, this could apply to sales decisions where customer demographics or preferences have shifted slightly: for example, when an e-commerce platform notices a modest increase in new users, or when it applies past purchasing data from one country to another with broadly similar market behaviour. In these cases, the algorithm can still make good use of history without being blindly swayed by it.

This mechanism makes the algorithm highly adaptable. When historical data closely resembles current patterns, it improves on traditional approaches. When the data proves unreliable, it falls back to safer strategies. The team shows that this adaptability is not only intuitive but optimal within their theoretical framework.

A more discerning AI

“Our study raises some broader, and rather philosophical, questions,” says Asst Prof Cheung. “How should we draw lessons from the past? What does it mean to ‘know’ something in a world that keeps changing?”


The researchers’ work provides a foundation for future algorithms that can make more reliable decisions in uncertain environments — where history may inform, but not dictate, the present. As for next steps, the work is already expanding into more complicated domains. For instance, Asst Prof Cheung is exploring its application to reinforcement learning and online resource allocation, including a case study involving coupon assignments at an e-commerce company in Indonesia.

“In a world awash with data, knowing when not to trust information is just as important as knowing when to rely on it,” adds Asst Prof Cheung.

AI turns the tide on greenwashing

The A3CG framework helps separate fact from fiction, training AI to cut through vague corporate lingo and spot greenwashing camouflaged in polished sustainability claims.

We’ve all seen it: skincare packaging proclaiming “100% recycled materials”, a supermarket marketing itself as “committed to sustainable sourcing”, an MNC announcing on LinkedIn its bold plans to slash carbon emissions. Such statements suggest a world in which sustainability is front and centre. But more often than not, they’re carefully worded promises that raise more questions than they answer.

Sustainability is indubitably crucial to securing a liveable future. Yet in the corporate world, it has too often devolved into a buzzword deployed more for optics than for impact, especially in formal reports companies produce to signal progress and satisfy investor or customer expectations.

This practice, known as “greenwashing” (where companies mislead, exaggerate or fabricate claims about their sustainability efforts), obscures genuine progress and chips away at public trust. One study led by the United Nations found that 60% of sustainability claims by European fashion giants were “unsubstantiated” and “misleading.” In a global review, four in ten websites appeared to be using tactics that could be considered misleading. With environmental, social and governance (ESG) investing now measured in the tens of trillions, trust is a currency companies increasingly misuse to inflate their perceived value.

AI has begun to reshape how corporate sustainability reports are processed. Natural language processing (NLP), a branch of AI that extracts insights from text, is capable of evaluating such reports at a speed and scale virtually unattainable by manual, human processing. However, even cutting-edge NLP methods run into bottlenecks. In particular, they often fail to tell the difference between substantive commitments and astutely wordsmithed but misleading claims.

A team led by Assistant Professor Gianmarco Mengaldo from the Department of Mechanical Engineering, College of Design and Engineering, National University of Singapore, has developed an approach to tackle this challenge. Presented at The 63rd Annual Meeting of the Association for Computational Linguistics, the researchers created a dataset and evaluation framework called A3CG (Aspect-Action Analysis with Cross-Category Generalisation).

“A3CG is like a training ground designed specifically to test and improve AI’s ability to extract and interpret sustainability claims,” explains Asst Prof Mengaldo.

To build the dataset, the team annotated real-world sustainability statements by linking each sustainability “aspect” to the type of “action” taken. The aspect is the specific sustainability goal or issue, such as waste reduction or carbon emissions targets. The action is what the company claims to do about it, categorised as either “implemented,” “planning,” or “indeterminate.” It is the last category, “indeterminate,” that often flags greenwashing. Vague, hedging statements such as “we may consider” or “where possible” can be caught and isolated, allowing investors, consumers and regulators to scrutinise them more effectively.

Assistant Professor Gianmarco Mengaldo and his team crafted a framework that is adept at spotting greenwashing camouflaged in sustainability claims.

In their study, the team highlights a company’s claim: “Where possible, we have implemented sustainable measures to monitor our water consumption and increase water efficiency.” The statement may read fine at first glance. But A3CG picks up the qualifier “where possible,” tagging it as “indeterminate,” highlighting the uncertainty and lack of firm commitment.
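For intuition only, the aspect-action labelling can be mimicked with a crude keyword matcher. This is a hypothetical toy, not A3CG, which is an annotated dataset for training and evaluating learned models; the keyword lists and the two extra claims below are invented for illustration.

```python
import re

# Hypothetical keyword lists, invented for illustration only.
ASPECT_KEYWORDS = {
    "water": "water management",
    "emission": "carbon emissions",
    "waste": "waste reduction",
}
HEDGE_PATTERNS = [r"\bwhere possible\b", r"\bwhere feasible\b", r"\bmay\b"]
PLANNING_PATTERNS = [r"\bwill\b", r"\bplans? to\b", r"\bintends? to\b", r"\bby 20\d\d\b"]


def aspect_of(statement):
    """Guess which sustainability issue a claim is about."""
    text = statement.lower()
    for keyword, aspect in ASPECT_KEYWORDS.items():
        if keyword in text:
            return aspect
    return "unknown"


def action_of(statement):
    """Label the claimed action; hedges take priority over everything else."""
    text = statement.lower()
    if any(re.search(p, text) for p in HEDGE_PATTERNS):
        return "indeterminate"
    if any(re.search(p, text) for p in PLANNING_PATTERNS):
        return "planning"
    return "implemented"


def label(statement):
    """Return an (aspect, action) pair for one claim."""
    return aspect_of(statement), action_of(statement)


claims = [
    "Where possible, we have implemented sustainable measures to monitor our "
    "water consumption and increase water efficiency.",
    "We will cut carbon emissions by 30% by 2030.",
    "We installed rainwater harvesting at all sites in 2023.",
]
for claim in claims:
    print(label(claim))
```

A fixed word list like this is exactly what breaks when a company shifts its narrative to unfamiliar categories, which is the weakness A3CG’s cross-category generalisation tests are designed to probe.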

“Systematically surfacing these intentionally worded subtleties allows us to provide a clearer view into corporate sustainability that is less vulnerable to clever PR and more closely aligned to measurable outcomes,” adds Asst Prof Mengaldo.

Flushing out the fluff

A strength of the A3CG framework is its adaptability when companies pivot their sustainability narratives, especially when it’s done purposefully to their benefit. For instance, if a company shifts focus from well-monitored categories (such as carbon emissions) to newer or less transparent ones (such as biodiversity or cybersecurity), traditional NLP methods might miss the greenwashing hidden in unfamiliar territory. The team’s framework is designed specifically to test for “cross-category generalisation,” allowing AI systems to stay effective even when confronted with entirely new or unexpected sustainability themes.


“A3CG helps make AI systems more capable at understanding greenwashing tactics, potentially improving the reliability of ESG analysis at scale,” adds Asst Prof Mengaldo. “Regulators and rating agencies gain a clearer way to assess sustainability disclosures, while investors and watchdog groups can more readily flag questionable claims. For researchers, it sets a benchmark for developing more linguistically attuned NLP models in this domain.”

Using their dataset, the researchers put various state-of-the-art AI models to the test. They found that supervised models, particularly those trained with contrastive learning (which teases apart subtle semantic nuances), outperformed large language models like GPT-4 or Claude 3.5. These supervised models were better at identifying recurring patterns in the language of sustainability reports.

Nevertheless, the team also found that while supervised models are good at spotting suspicious syntax, they struggle with completely novel sustainability themes outside their training scope. Large language models, on the other hand, handle unfamiliar vocabulary better but falter in the subtle pragmatics of language, especially in highly nuanced cases where tone and phrasing convey strategic ambiguity or cautious non-commitment.

“This just means there’s more work to be done,” adds Asst Prof Mengaldo. “For example, our team is working towards understanding how changes in the narrative of sustainability reports over time may be linked to greenwashing practices.”

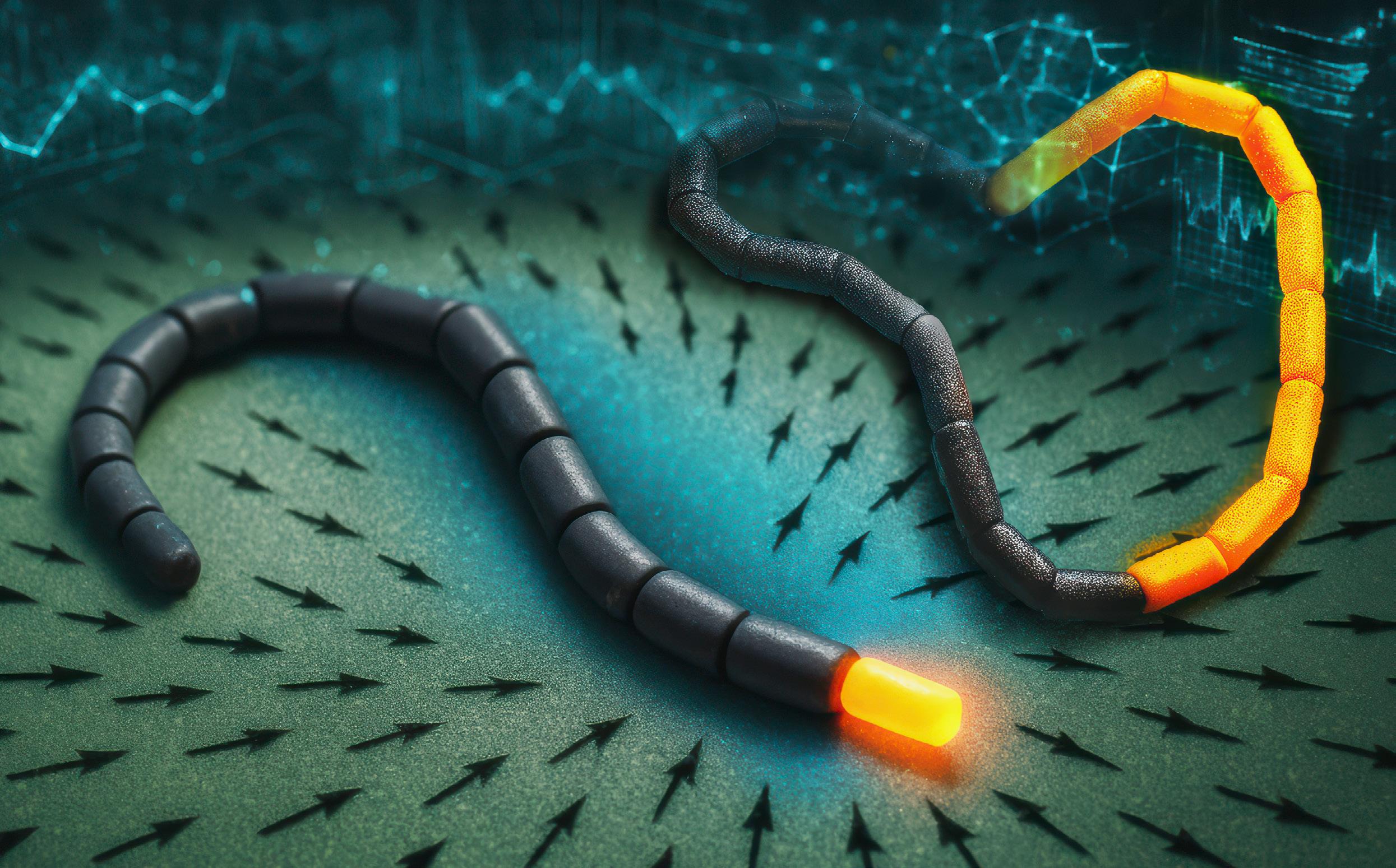

The AI swimming academy for miniature robots

Powered by artificial intelligence, tiny robotic swimmers traverse tricky terrain by mimicking how human cells find their way.

Inside your body, there’s constant motion. Not the kind you notice, but the kind that keeps you going. Immune cells fan out like search parties, sniffing out intruders. Gut microbes wriggle towards nutrients. Sperm cells race, jostle and twist their way through the narrow corridors of the female reproductive tract. Even nerve cells, in their earliest stages, inch toward their intended destinations.


What ties these journeys together is a knack for reading the environment. These microscopic travellers respond to subtle cues, like chemical signals, to decide where to go next. Their movements are fundamentally finely tuned feedback loops: sense a whiff of what you’re after, steer that way, repeat. Biologists call this phenomenon “taxis,” and when chemicals guide the way, it’s known as “chemotaxis.”
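That sense-steer-repeat loop is simple enough to write down. The sketch below is a hand-coded rule, not the team’s learned controller: a point-like agent samples a hypothetical chemical field only immediately around itself, then takes a small step towards whichever side smells stronger. The field shape, source location and step sizes are all assumptions.

```python
import math


def concentration(x, y, source=(5.0, 3.0)):
    """Hypothetical chemical field that peaks at the source and fades with distance."""
    return math.exp(-((x - source[0]) ** 2 + (y - source[1]) ** 2) / 20.0)


def chemotaxis(start=(0.0, 0.0), steps=200, step_size=0.1, probe=0.05):
    """Sense, steer, repeat: the agent only samples the field right next to itself."""
    x, y = start
    for _ in range(steps):
        # Sense: compare concentrations just ahead of and behind the agent on each axis.
        gx = concentration(x + probe, y) - concentration(x - probe, y)
        gy = concentration(x, y + probe) - concentration(x, y - probe)
        norm = math.hypot(gx, gy)
        if norm == 0:
            break  # no local signal difference to follow
        # Steer: take a small, fixed-length step towards the stronger signal.
        x += step_size * gx / norm
        y += step_size * gy / norm
    return x, y


final_x, final_y = chemotaxis()
print(math.hypot(final_x - 5.0, final_y - 3.0))  # distance left to the source
```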

It’s a beautiful evolutionary trick. But could lifeless machines pull it off?

“Can the evolutionary wisdom behind these instinctive search behaviours be meaningfully translated into engineering platforms?” ponders Assistant Professor Zhu Lailai. “How might AI endow synthetic agents with such biologically informed intelligence?”

Together with his team, Assistant Professor Zhu Lailai taught simulated robotic swimmers how to traverse tricky terrain by harnessing reinforcement learning.

It’s a question his team at the Department of Mechanical Engineering, College of Design and Engineering (CDE), National University of Singapore, set out to explore. In doing so, they may have given miniature robots a leg (or fin) up.

In a study published in Nature Communications, Asst Prof Zhu’s team taught simulated robotic swimmers how to move and steer themselves through liquid environments using a machine-learning technique called reinforcement learning (RL). While the approach was inspired by nature, the goal is squarely technological: to design tiny robots that can one day navigate the human body or other hard-to-reach spaces — on their own.

To start, the researchers designed two kinds of robot bodies. One was a linked chain of segments, resembling the whip-like tail of a sperm cell. The other formed a ring, mimicking the blobby, flexible outline of an amoeba or immune cell.

The idea for the ring-shaped “swimmer” came in a moment of epiphany. “I was in a taxi holding my six-month-old daughter after a hospital check-up, and my arms naturally formed a ring while cradling her,” recalls Asst Prof Zhu. “That’s when it struck me: what if we connected the head and tail of the chain swimmer? Would it move like an amoeba?”

Each swimmer was composed of multiple hinges, allowing it to bend, twist and squirm in fluid. But it didn’t know how to move — yet. That’s where RL came in.


Using a two-level RL model, the researchers first trained the swimmers to master basic motion: how to move forward, backward or rotate using sequences of joint movements. Next, building on those motor skills, they trained the robots to “sniff out” a target chemical source and move towards it, just as real cells do when homing in on nutrients, distress signals or other chemical cues.

Fast learners

Unlike typical machine learning setups, the team didn’t hit a reset button every time the robot messed up. Instead, training was continuous, much like how learning works in nature. “In biology, you don’t get to restart from square one,” adds Associate Professor Ong Chong Jin from CDE’s Department of Mechanical Engineering, who brings his expertise in optimal control to the research team. “A cell either figures it out in the moment, or it doesn’t survive. That’s the realism we wanted to ingrain into the model.”

What is more, the robots didn’t rely on any GPS-like tracking or global awareness. They had to make sense of their environment using only local cues — the pressure and chemical levels around their hinges. That’s roughly equivalent to how real cells feel their way around using surface receptors, without any map or memory of where they started.
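Both ideas, learning without resets and acting only on local cues, can be illustrated with a tiny tabular Q-learning loop. Everything here is a hypothetical toy (a one-dimensional channel, a linear chemical signal, a single point-like agent) rather than the team’s hinged swimmers or their two-level RL model. The agent only senses which neighbouring spot smells stronger; when it reaches the source, the source moves elsewhere while the agent carries on from where it is.

```python
import random

N = 20             # hypothetical 1D channel with 20 positions
ACTIONS = (-1, 1)  # move left or right


def concentration(pos, source):
    """Chemical signal that is strongest at the source (hypothetical linear decay)."""
    return -abs(pos - source)


def local_cue(pos, source):
    """The only thing the agent senses: which neighbouring spot smells stronger."""
    left = concentration(max(pos - 1, 0), source)
    right = concentration(min(pos + 1, N - 1), source)
    if right > left:
        return "right_stronger"
    if left > right:
        return "left_stronger"
    return "equal"


def train_reset_free(steps=5000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=1):
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in ("left_stronger", "right_stronger", "equal")}
    pos, source = 0, rng.randrange(1, N)
    found = 0
    state = local_cue(pos, source)
    for _ in range(steps):  # one continuous run: no episodes, no resets
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # occasional exploration
        else:
            action = max(ACTIONS, key=lambda a: q[state][a])
        new_pos = min(max(pos + action, 0), N - 1)
        reward = concentration(new_pos, source) - concentration(pos, source)
        pos = new_pos
        if pos == source:
            found += 1
            # The target moves on, but the agent stays where it is (no reset).
            source = rng.choice([p for p in range(N) if p != pos])
        next_state = local_cue(pos, source)
        best_next = max(q[next_state].values())
        q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
        state = next_state
    return q, found


q_table, targets_found = train_reset_free()
greedy = {s: max(ACTIONS, key=lambda a: q_table[s][a]) for s in q_table}
print(greedy["right_stronger"], greedy["left_stronger"], targets_found)
```

After training, the greedy policy should step towards the stronger side in both cue states, learned from one unbroken run rather than from repeated restarts.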


In simulations, the robots managed to navigate towards targets in a range of tricky environments — even when presented with a panoply of obstacles: competing signals, swirling fluid currents or narrow passages. The chain-like swimmer, with its undulating motion, resembled how sperm cells power forward. The ring-shaped one, meanwhile, pulsed and contracted like a crawling amoeba, confirming Asst Prof Zhu’s hunch from that ride home with his daughter.

Assistant Professor Zhu Lailai (right) with Associate Professor Ong Chong Jin (middle) and PhD student Wang Yufei (left)


Each swimmer’s body shape influenced how it moved, and consequently how well it performed in different tasks. The ring model, for instance, struggled in very tight spaces due to its inflexible surface, whereas the chain model, with its directional asymmetry, could steer with greater finesse.

Learning to adapt

While the team’s work is still in silico — in simulation rather than in actual devices — the AI underpinning the mini robots represents broader shifts in how autonomous machines can be conceived and designed. Rather than hard-coding behaviours or hand-tuning controls, their work shows how RL can enable robotic systems to develop their own strategies, shaped by their bodies, surroundings and the task at hand.

“Importantly, our reset-free RL approach addresses constraints that many robots face, such as the inability to reboot or rely on global sensing,” says Asst Prof Zhu. “Robots that can learn from local cues and adapt on the fly are better equipped to operate in the unpredictable conditions of the real world.”

Looking ahead, the team is working to bring their concepts into wet-lab experiments. They’re also exploring a much wider range of body designs beyond the two studied so far. For example, with just six links, there are 27 possible shapes, most of which do not exist in nature.


“Biological organisms evolve under many constraints, but robotic systems aren’t bound by those. RL lets us rapidly test which shapes produce effective movement, even for forms nature never tried. The miniature swimmers could even learn to change their own shape mid-task to respond to new challenges,” adds Asst Prof Zhu.

With AI as their coach, even lifeless robots may one day learn the most human skill of all — how to adapt and carry on.