From Principles to Playbook: Build an AI Governance Framework in 30 Days | Nate Patel

The gap between aspirational AI principles and operational reality is where risks fester: ethical breaches, regulatory fines, brand damage, and failed deployments. Waiting for perfect legislation or the ultimate governance tool isn't a strategy; it's negligence. The time for actionable governance is now.

This isn't about building an impenetrable fortress overnight. It's about establishing a minimum viable governance (MVG) framework – a functional, adaptable system – within 30 days. This article is your tactical playbook to bridge the principles-to-practice chasm, mitigate immediate risks, and lay the foundation for robust, scalable AI governance.

Why 30 Days? The Urgency Imperative

Accelerating Adoption: AI use is exploding organically across departments. Without guardrails, shadow AI proliferates.

Regulatory Tsunami: From the EU AI Act and US Executive Orders to sector-specific guidance, compliance deadlines loom.

Mounting Risks: Real-world incidents (biased hiring tools, hallucinating chatbots causing legal liability, insecure models leaking data) demonstrate the tangible costs of inaction.

Competitive Advantage: Demonstrating trustworthy AI is becoming a market differentiator for customers, partners, and talent.

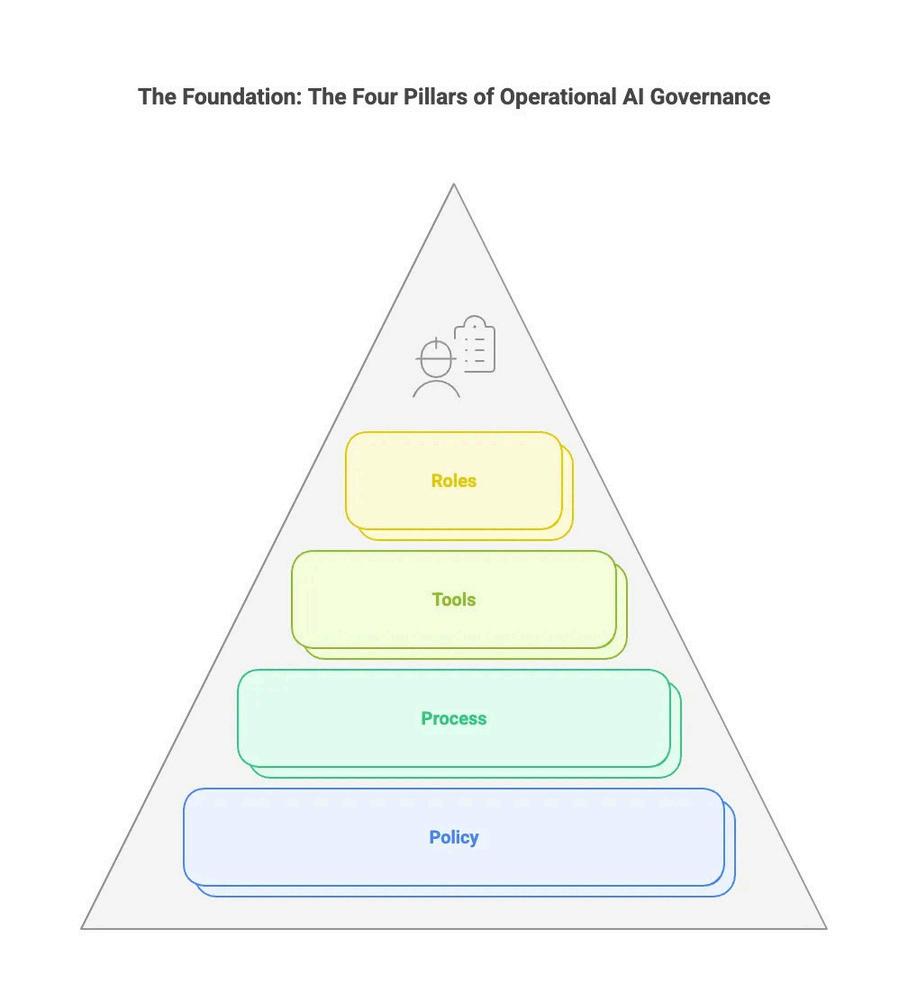

The Foundation: The Four Pillars of Operational AI Governance

An effective MVG framework isn't a single document; it's an integrated system resting on four critical pillars. Neglect any one, and the structure collapses.

Policy Pillar: The "What" and "Why" - Setting the Rules of the Road

Purpose: Defines the organization's binding commitments, standards, and expectations for responsible AI development, deployment, and use.

Core Components:

Risk Classification Schema: A clear system for categorizing AI applications based on potential impact (e.g., High-Risk: Hiring, Credit Scoring, Critical Infrastructure; Medium-Risk: Internal Process Automation; Low-Risk: Basic Chatbots), aligned where possible with an external reference such as the NIST AI RMF or the EU AI Act categories. The assigned category dictates the level of governance scrutiny.
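As a rough illustration, a risk classification schema like the one described above could be captured in a small lookup table. The sketch below is a hypothetical example, not a prescribed implementation: the use-case keys, the `classify` and `required_oversight` helpers, the oversight level assigned to each tier, and the choice to treat unknown use cases as high-risk are all assumptions made for illustration.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Hypothetical use-case catalogue, seeded with the examples from the text.
# A real schema would be owned and maintained by the policy team.
USE_CASE_TIERS = {
    "hiring": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "critical_infrastructure": RiskTier.HIGH,
    "internal_process_automation": RiskTier.MEDIUM,
    "basic_chatbot": RiskTier.LOW,
}

# Assumed mapping from tier to level of human oversight (illustrative only).
OVERSIGHT_BY_TIER = {
    RiskTier.HIGH: "human-in-the-loop",
    RiskTier.MEDIUM: "human-on-the-loop",
    RiskTier.LOW: "periodic review",
}


def classify(use_case: str) -> RiskTier:
    """Return the tier for a use case.

    Unlisted use cases default to HIGH (an assumption of this sketch),
    so that new applications get reviewed rather than slipping through.
    """
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)


def required_oversight(use_case: str) -> str:
    """Look up the oversight level implied by the use case's tier."""
    return OVERSIGHT_BY_TIER[classify(use_case)]
```

Defaulting unknown use cases to the strictest tier is one possible design choice; it trades some friction for the assurance that shadow AI projects cannot bypass scrutiny simply by being absent from the catalogue.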

Core Mandatory Requirements: Specific, non-negotiable obligations applicable to all AI projects. Examples:

Human Oversight: Define acceptable levels of human-in-the-loop, on-the-loop, or review for different risk classes.

Fairness & Bias Mitigation: Requirements for impact assessments, testing metrics (e.g., demographic parity difference, equal opportunity difference), and mitigation steps.

Transparency & Explainability: Minimum standards for model documentation (e.g., datasheets, model cards), user notifications, and the explainability techniques required for each risk class.

Robustness, Safety & Security: Requirements for adversarial testing, accuracy thresholds, drift monitoring, and secure development/deployment practices (e.g., OWASP AI Security & Privacy Guide).

Privacy: Compliance with relevant data protection laws (GDPR, CCPA, etc.), data minimization, and purpose limitation for training data.

Accountability & Traceability: Mandate for audit trails tracking model development, data lineage, decisions, and changes.
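To make the fairness testing metrics named in the requirements above more concrete, here is a minimal sketch of how demographic parity difference and equal opportunity difference are commonly computed for binary predictions. It uses the usual definitions (the gap between the highest and lowest per-group selection rate, and the gap between the highest and lowest per-group true positive rate). The function names and the toy data are illustrative assumptions, and the acceptable threshold for either metric is a policy decision this sketch does not try to settle.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Fraction of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true positive rate between any two groups.

    Only rows whose true label is 1 are considered. A group with no
    positive true labels is left out of the comparison, and the call
    raises ValueError if no row at all has a true label of 1.
    """
    pos_pred = [p for t, p in zip(y_true, y_pred) if t == 1]
    pos_groups = [g for t, g in zip(y_true, groups) if t == 1]
    return demographic_parity_difference(pos_pred, pos_groups)


# Toy data (made up for illustration): two groups of four people each.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

dpd = demographic_parity_difference(y_pred, groups)
eod = equal_opportunity_difference(y_true, y_pred, groups)
```

On this toy data, group "a" is selected 75% of the time and group "b" 25%, so `dpd` is 0.5; the true positive rates are 2/3 and 1/2, so `eod` is about 0.167. A value of 0 on either metric means the groups are treated identically by that measure.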
