Changing the World

Editor:

Jennifer Donovan

Design & Illustration: Michael Lewy

Writers: Grace Chua

Katie DePasquale

Jennifer Donovan

Photography credits:

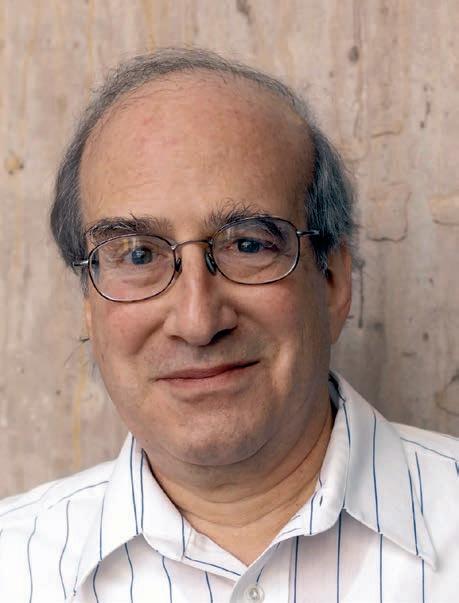

LIDS Student Conference photos provided by the Student Conference Committee. Profile photo of Alan Willsky by Donna Coveney. Photos of Guy Bresler and Caroline Uhler by Lillie Paquette. Cover photo for Looking at All the Angles by Swati Gupta. Photos of Noah Stein, Swati Gupta, Hamza Fawzi, Roxana Hernandez, and Statistical Inference under the Willskyan Lens by Jennifer Donovan.

Massachusetts Institute of Technology

Laboratory for Information and Decision Systems

77 Massachusetts Avenue, Room 32-D608

Cambridge, Massachusetts 02139

http://lids.mit.edu/

send questions or comments to lidsmag@mit.edu

A Message from the Director

On behalf of the entire LIDS community, I would like to welcome you to the Fall 2016 issue of LIDS|All. Within these pages, you will find articles profiling some of our exceptional community members and learn more about the exciting events that took place over the past year. LIDS provides a dynamic and intellectually inspiring environment for all who call it home. It also continues on its growth trajectory. This year we added 4 new principal investigators to the lab: Professors Guy Bresler and Caroline Uhler, who you will read more about in this issue, and very recently Dr. Kalyan Veeramachaneni and Professor Ali Jadbabaie, who you can learn more about on the LIDS web site and will be part of our next issue. I would like to take this opportunity to welcome them to our community.

This has been a particularly important year as LIDS has joined the newly launched Institute for Data, Systems and Society (IDSS). LIDS faculty are playing a pivotal role in defining the new institute’s intellectual agenda, leading the statistics effort and flagship projects (in finance, health analytics, social networks, energy systems, and urbanization), designing new academic programs in statistics and complex systems, and leading the search for new faculty in networks and statistics.

In this issue of LIDS|All, you will see that all of this growth has bolstered the opportunities for intellectual engagement in the community. For example, you will meet two of our senior graduate students, Swati Gupta and Hamza Fawzi, and learn about their impressive research in online learning and optimization. Hamza will join the newly established Cantab Capital Institute for the Mathematics of Information at Cambridge University as a lecturer in Fall 2016. We wish him the best in his new journey. You will also read about Roxana Hernandez, who joined the LIDS administrative staff this past spring and has immediately added so much to our community with her warm personality and dedicated work. There is an interview with Luca Carlone, recently promoted to a LIDS Research Scientist, who talks about his work on mobile robotics. You will find interviews with two of our PIs, Professor Alan Willsky, sharing his wisdom on research in the broad area of control, estimation and signal processing and milestones of his impressive career, and Dr. Suvrit Sra talking about his exciting research on parameter estimation for Gaussian mixture models. You will also hear from LIDS alum Dr. Noah Stein, who is currently a Senior Research Scientist at Lyric Labs.

LIDS has always been a special place both as a world-leading center for foundational work in information and decision sciences, and as a rich and supportive environment for its members. With so many new directions and accomplishments, its future looks as vibrant as ever. I feel privileged to be part of this exceptional place and I hope this issue of LIDS magazine provides you a glimpse of why.

Sincerely,

Asu Ozdaglar

ABOUT LIDS

The Laboratory for Information and Decision Systems (LIDS) at MIT, established in 1940 as the Servomechanisms Laboratory, currently focuses on four main research areas: communication and networks, control and system theory, optimization, and statistical signal processing. These areas range from basic theoretical studies to a wide array of applications in the communication, computer, control, electronics, and aerospace industries. LIDS is truly an interdisciplinary lab, home to about 100 graduate students and post-doctoral associates from EECS, Aero-Astro, and the School of Management. The intellectual culture at LIDS encourages students, postdocs, and faculty to both develop the conceptual structures of the above system areas and apply these structures to important engineering problems.

Seeing Connections Across Boundaries

By Grace Chua

One afternoon, Alan Willsky realized something that would come to be an essential part of how he approached research.

The young MIT faculty member was a consultant to a terrific team of engineers at The Charles Stark Draper Laboratory. The team was charged with developing the algorithm set for sensor fault detection for NASA’s Digital Fly-By-Wire (DFBW) aircraft. The goal was to make sure that sensor faults would be detected before faulty signals corrupted the fly-by-wire flight controls of the aircraft. This involved comparing data from the jet’s multiple sensors in order to tell if one or more of them were giving an erroneous reading. While in principle this could be done using methods Alan and others had developed for dynamical systems and failure detection, different parts of the model had different sources of error, which complicated things. What the team did instead was to “rip the dynamics apart to isolate where they could get the most robust sources of analytical redundancy and then exploit these using the machinery of failure detection.”

The approach worked “phenomenally well,” resulting in reliable and accurate fault detection. But “it was so systematic, that I felt there must be a theory underlying this,” Alan says.

That realization, and the search for the accompanying theory, opened up a new avenue of research and led to dissertations and a master’s thesis for several of his students. It was also a reminder to the young scientist that science must serve the real world. “The algorithms being developed at Draper Lab were going to be flight-tested,” says Alan, “so they were going to have to work. We had to be our own devil’s advocate.” (As it turns out, the DFBW project ran until 1985 and resulted in the all-electric flight control systems now used on nearly all modern aircraft. In its project summary on the NASA web site, the agency says the program is considered “one of the most significant and most successful NASA aeronautical programs since the inception of the Agency.”)

Alan has spent close to five decades at MIT discovering how the practical informs the theoretical, and vice versa. Warm and loquacious, he will claim that he was merely in the right place at the right time. In fact, colleagues and students will say he has an extraordinary ability to see the connections between things, and it’s this strength that has helped him thrive.

Early on, Alan says, he had the right sort of encouragement and good luck. The math-loving boy from New Jersey arrived at MIT in 1965, majoring in Aeronautics and Astronautics, otherwise known as ‘AeroAstro’. (The fan of the New York Giants baseball team – a rival to the Yankees – was a natural Red Sox fan on arriving in Cambridge.) With the US space program booming, it was a thrilling time. The AeroAstro students watched the live launch of Apollo 10 in 1969, for instance, sitting in the viewing area with the King and Queen of Belgium and then-Vice President Spiro Agnew.

He chose the department for its diversity and flexibility. “If you think about it, AeroAstro covers all the branches of engineering; I got the opportunity to see a wide variety of different topics.” In his junior year, he took classes in probability, signals and systems, and control, which turned out to be his passion and forte. Advisors like Harvard’s Roger Brockett were instrumental in helping him pinpoint which questions to answer, breaking down a big problem into simpler cases and coming up with questions which were solvable but which also helped advance the state of knowledge.

After a PhD in control and estimation, supervised by Brockett and Professor Wallace Vander Velde, Alan eventually arrived at MIT’s Department of Electrical Engineering and Computer Science in 1973. “At the time, LIDS was way over on the corner of Vassar and Mass Ave, while CSAIL [the MIT Computer Science and Artificial Intelligence Laboratory] was across the railroad tracks; to get from one to the other during the winter I had to put on my boots, put on my coat and schlep over there,” he recalls.

But it was worth the trek. Alan’s research has had applications in a staggering range of fields, from detecting arrhythmias in EKGs, to measuring the variation of the sea level over the entire planet. In fact, it was while working on a variety of signal processing projects, including work with Schlumberger – this one looking at oil well data – that Alan brought his systems and control background into play. In systems and control, using models is absolutely central. While some signal processing researchers did make use of models explicitly, that was far from universal. So, Alan put his focus on “model-based signal processing,” arguing that “without a model I don’t know how you separate information from noise…and one person’s information might be another person’s noise.” Today the use of models for information extraction from data is central in fields ranging from signal processing to machine learning.

This was part of the shift from Alan’s first field, control and systems, to his new one: signal processing. This work centered on processing and analyzing signals coming in from various sources, often in a continuous flow.

For example: When you have a stream of data coming in from a sensor, how do you tell whether the latest bit of data is unexpected or falls within the bounds of an expected range? And if it’s outside of the anticipated range, is the sensor inaccurate because it is misbehaving, or is it accurately informing you that there really is something alarming out there?
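The two questions above can be made concrete with a toy check. The sketch below is not the method Alan or the Draper team used. It assumes a simple "three standard deviations" rule for what counts as unexpected, and it assumes that redundant sensors measuring the same quantity are available to cross-check a surprising reading. The function name, the threshold, and the sample values are all hypothetical.

```python
from statistics import mean, stdev

def classify_reading(history, latest, other_sensors_latest, n_sigma=3.0):
    """Label the latest reading of one sensor.

    history: recent readings from this sensor (assumed to vary a little,
             so the standard deviation is not zero).
    other_sensors_latest: current readings from redundant sensors.
    """
    mu = mean(history)
    sigma = stdev(history)
    tolerance = n_sigma * sigma

    # Question 1: does the reading fall inside the expected range?
    if abs(latest - mu) <= tolerance:
        return "expected"

    # Question 2: do most of the redundant sensors see the same thing?
    agreeing = sum(abs(other - latest) <= tolerance
                   for other in other_sensors_latest)
    if other_sensors_latest and agreeing > len(other_sensors_latest) / 2:
        return "real event"
    return "suspect sensor"

history = [10.0, 10.2, 9.9, 10.1, 9.8, 10.0]  # hypothetical readings
print(classify_reading(history, 10.1, [10.0, 10.2]))
print(classify_reading(history, 14.0, [13.9, 14.1]))
print(classify_reading(history, 14.0, [10.0, 10.1]))
```

A fixed threshold like this ignores the dynamics of the system being measured, which is where the model-based approaches described in this article come in.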

At the same time, you must account for uncertainty in the external environment – such as winds buffeting a plane, or bumpy terrain that a robot’s wheels must cross. And of course, aircraft and robots don’t rely on data from a single sensor – they have whole banks of sensors streaming different types of data that must be stitched together seamlessly over a period of time to form the big picture.

Alan’s work, no matter what the field (his most recent research is in machine learning), has always been impactful, as evidenced by just a few of his awards and achievements along the way. One of the earliest was in 1975, when he received the American Automatic Control Council’s Donald P. Eckman Award, for an outstanding young researcher in the field. In the early 1980s, he and renowned electrical engineering expert Professor Alan Oppenheim co-wrote a definitive textbook, Signals and Systems, which is still widely used today. In 2010, Alan was elected to the National Academy of Engineering.

Another experience Alan counts as formative came in 1979, when former student and MIT faculty colleague Nils Sandell started a small technology company, Alphatech, bringing in Alan and then-LIDS director Michael Athans as co-founders. The firm served mainly government clients such as the Department of Energy and Department of Defense, doing contract research and development based on the scientists’ cutting-edge work.

“I learned an incredible amount at Alphatech,” Alan says. “The research was in areas like systems and control, estimation, intelligence, surveillance and reconnaissance.” From a five-man team, the company grew to some 450 people over the next two and a half decades.

The experience taught him a lot about technology’s place in the wider world. “When a customer comes in with a challenge, how do you creatively figure out how to use the most cutting-edge ideas to come up with something that isn’t just for publication’s sake, but that’s going to solve that customer’s problem?”

Critically, it also showed Alan and his colleagues where advances in basic theory were

(continued on page 39)

FILTERING OUT THE NOISE:

USING MATH TO CRACK THE COCKTAIL PARTY PROBLEM

By Grace Chua

By Katie DePasquale

Noah Stein’s first love has always been mathematics. From his grade school days in Connecticut to his time at Cornell University, where he got a bachelor’s degree in Electrical and Computer Engineering in 2005, he knew that he liked studying math, but he was also interested in computers. “[Math] got kind of boring in high school, I think because it wasn’t challenging….But halfway through [my time at Cornell], I discovered real math, pure math,” he says. All of his passion for the subject returned, and that is what led him to LIDS. He chose to apply only to applied math departments for graduate school, and he came to LIDS because of the unique blend of engineering and pure math that it offers, along with its excellent opportunities for funding.

Once at LIDS, Noah settled in, researching the “very theoretical side” of game theory with faculty such as Asu Ozdaglar and Pablo Parrilo, who was new to LIDS at the time. “Game theory is supposed to be the study of strategic interactions between self-interested agents, so there are plenty of times you could try to apply that in real life. But I was interested in the very math-y end of it, like, what would it mean to behave optimally in a particular situation? Can you compute what that optimal behavior would be, or write a program to compute it?” Noah went from taking a few game theory classes as an undergraduate to immersing himself in game theory, in problems with “an algebraic structure,” with both Asu and Pablo as his advisors.

He found the environment at LIDS to be exciting and stimulating, saying, “It was a great place to learn from other students,” whether by attending the many talks LIDS hosts or by having discussions of students’ work and research outside of class. He even found time to become a “chocolate snob” through MIT’s Laboratory for Chocolate Science. Through Pablo’s contacts, he landed first an internship and then, after receiving his Ph.D. in Electrical Engineering and Computer Science from MIT in 2011, a full-time job at Lyric Labs. “My approach during school had been that I should take as many math classes as I could because it would be much easier to learn applied stuff on the job than it would be to take applied classes at school and learn theory stuff on the job….They hired me for what they knew of my problem-solving skills,” he says. “It’s helpful to have the more abstract theoretical mindset in how you frame the problems, and reframe the problems.”

Lyric Labs began as the startup Lyric Semiconductor. It was founded by MIT grads and specialized in probabilistic processing. After being acquired by tech giant Analog Devices, Inc. (ADI) in 2011, Lyric continued on as a research group within the company. This research group is now expanding and its new name will be Analog Garage. As Noah explains, it has a new purpose. “They’ve expanded their research lab to include a lot of other things besides algorithms, and they have a new name for that umbrella. It’s a hybrid between research lab and internal startup incubator. They’re trying to take ideas that maybe don’t have a good place to fit within the company and fund them, give them room to grow.”

Right now, Noah works on what’s popularly known as the cocktail party problem: audio source separation. “You have some sort of noisy soundscape, where there are one or more sources of sound of interest, usually a voice, and potentially a lot of background noises that could be whatever. Your goal is to pick out some particular source of interest. It’s an extremely broad problem that people have worked on for a long time, and you have to narrow it down somewhat to be able to tackle it. We’re trying to improve the performance of speech recognition software where there’s a lot of background noise, meaning how many of the words it gets correct.” Approaches to this problem involve multiple microphones. Classical methods usually require the microphones to be at least five to ten centimeters apart in order to pick up differences in sound (if they are too close together, the mixtures of sounds you record in each mic are nearly identical and almost impossible to distinguish). As Noah explains it, “Depending on the wavelength of the sound and other details, if you add together the two signals, there’s some cancellation and the signal is attenuated.” However, Noah and his colleagues at Lyric have developed a new algorithm that allows the microphones to be placed much closer together. Using tiny mics called MEMS (microelectromechanical systems; there are three in an iPhone, to give you an idea of size), he and his colleagues have been able to place their mics as close as a single millimeter apart, center to center, and still successfully separate the recorded sounds.

The ultimate goal of all of this research and work is to get voice recognition on a device such as a phone or a car’s command system to work successfully more frequently. “We want to cross that usability threshold in noisier environments,” Noah says, such as a car on the highway with sounds from the road and the air conditioner and even other passengers interfering. Lyric Labs’ goal has always been to clean up the signal (they do not make speech recognition software themselves). Their initial project was to improve speech intelligibility in hearing aids, but they had to set that aside after realizing that there isn’t currently enough physical space within the devices to make significant improvements in how they work. “Hearing aids are an extremely resource-constrained environment already,” Noah says. The difficulties are not just due to the constrained environment, though. “It’s not at all obvious even what function you would want to optimize if you had some sort of magic routine that could do it, because capturing this, [that is] how good does this audio signal sound in terms of it sounding like clean, undistorted speech, that’s an extremely subjective thing, and coming up with a really rough surrogate for how to measure that is really hard.” There are times when a human can distinguish between audio files but a metric can’t, and others when the opposite is true. As Noah points out, much of optimization theory “goes out the window when you can’t formulate your problem in a way that captures the stuff that you really care about.”

To relax away from work, Noah moves from hobby to hobby, with current interests including yoga and European-style board and card games. He also enjoys travel, saying, “I will travel to tropical locations and read math books there.” That joke has a kernel of truth, however. For a man who loves theory, a return to pure math is enjoyable and a complement to the more applied work he does each day. The ability to move back and forth between the theoretical and the applied aspects of math, to see the multiple approaches to a problem, is crucial to Noah’s work, and one of the most important things he learned at LIDS.

A High-Altitude Perspective

By Grace Chua

The northern Indian state of Himachal Pradesh (which means, roughly, “land of snow”) is known for its fascinating Himalayan beauty. Full of scenic routes, gushing rivers, delicious fruits, and crystal clear skies, it leaves an indelible imprint on everybody who’s been there.

From this high altitude setting comes Dr Suvrit Sra, a Principal Research Scientist at LIDS. Aptly, in his work on machine learning, optimization, and statistics, he is motivated to search for connections between different issues and topics – the bird’s-eye perspective, so to speak.

Recently, he applied this perspective to uncover new mathematical techniques for manifold optimization. “Sometimes, when you develop new mathematical tools, you revisit old problems to see if there’s a deeper, hitherto unknown, connection that can help you solve them better,” he says.

In this case, Suvrit and his colleague, Reshad Hosseini of the University of Tehran, took a new look at parameter estimation for Gaussian Mixture Models (GMMs).

In statistics, a mixture model helps to represent sub-populations or clusters amidst a larger general population. For instance, say you have a list of all housing sale transactions for the year; can you identify, by looking at the data, clusters of transactions for studio apartment sales or for sales of single-family homes?

To estimate the parameters of Gaussian Mixture Models, the gold standard has long been an algorithm called expectation maximization or EM. Suvrit and his colleague had been working on some techniques in non-Euclidean geometry, however, and their intuition suggested their geometric ideas could be applied to GMMs, too. Their challenge was to improve upon EM by building on a different optimization technique – Riemannian manifold optimization.
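For readers who have not met EM, here is a minimal sketch of the classical algorithm for a one-dimensional mixture, using the housing example. It shows the baseline only and is not the Riemannian manifold method developed by Suvrit and Reshad. The sale prices (in thousands of dollars) and the starting guesses are made up for illustration.

```python
import math

def normal_pdf(x, mean, var):
    """Density of a one-dimensional Gaussian."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(data, means, variances, weights, n_iter=50):
    """Fit a 1-D Gaussian mixture with plain EM; returns (means, variances, weights)."""
    means, variances, weights = list(means), list(variances), list(weights)
    k = len(means)
    for _ in range(n_iter):
        # E-step: how responsible is each cluster for each data point?
        resp = []
        for x in data:
            p = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(p)
            resp.append([pj / total for pj in p])
        # M-step: re-estimate each cluster from its weighted points.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(data)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var, 1e-6)  # keep the variance strictly positive
    return means, variances, weights

# Hypothetical sale prices: five studio apartments, five single-family homes.
prices = [190, 200, 210, 205, 195, 580, 600, 620, 610, 590]
means, variances, weights = em_gmm_1d(
    prices, means=[100.0, 700.0], variances=[1e4, 1e4], weights=[0.5, 0.5])
print(means, weights)
```

The toy data are well separated, so EM settles quickly; the harder cases are the ones where clusters overlap or the data are high-dimensional.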

Their first attempt, however, failed spectacularly. Expectation maximization was, after all, the gold standard, Suvrit says. Back to the blackboard, Suvrit and Reshad realized a subtle differential geometric point that had previously escaped them but they thought might help them recover from the setback. And it did indeed! In fact, so much so that Suvrit says, “We’re still trying to understand why it worked so well.”

In 2015, their work was accepted as part of the Advances in Neural Information Processing Systems (NIPS) conference proceedings. NIPS is the largest, oldest, and most prestigious machine-learning conference in the world.

Suvrit joined LIDS in January 2015. But his interest in computer science dates back to the late 1980s when he was first introduced to computers in elementary school.

“I was probably 11 or so,” he says. “My school was just modern enough, and we were fortunate because even though they introduced computers to us, the first thing they taught us was programming, rather than how to use computer software – so I became interested in computer programming. I was very interested, as children are, in learning how to ‘be a hacker’, figuring out how to ‘break’ software, that kind of thing.”

That led him eventually to a PhD in computer science from the University of Texas at Austin, where he discovered his interest in machine learning and optimization. Subsequently, he took on a research position at the Max Planck Institute in Germany (a place that he fondly remembers for its calm, deep, research atmosphere) in the exceptionally pretty town Tübingen; later he held visiting faculty positions at the University of California, Berkeley, and Carnegie Mellon University.

Currently, his work centers on optimization for machine learning – making machine-learning models and algorithms as sleek and efficient as possible. Today, machine learning, which refers to how computers learn from data without being given a full set of explicit instructions, is a much-bandied-about buzzword thanks to its growing popularity and range of applications.

This wasn’t always the case, says Suvrit, even though the machine-learning field has been growing for over three decades now. “Around 2007 I was trying to gather more support for bringing in more optimization into machine learning, but I had a tough time finding co-organizers,” he says.

Take, for example, what’s called deep learning. In deep learning one goes through a large dataset bit by bit, sampling small chunks to analyze, learning a little more each time, and continually updating a model. That enables a machine to find the correct result: trained on enough images of cats, it can learn to recognize a cat in a novel image, for instance. Or, taught to recognize angry tweets, it can uncover the same mood or political sentiment from a set of Twitter or Facebook posts.
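The "small chunks" idea above is usually called mini-batch stochastic gradient descent. The sketch below applies it to a model far simpler than a deep network: a straight line fitted to made-up, noise-free data. The learning rate, batch size, number of passes, and data are illustrative assumptions, not values from Suvrit’s work.

```python
import random

def minibatch_sgd(xs, ys, lr=0.1, batch_size=4, epochs=300, seed=0):
    """Fit y = w*x + b by mini-batch stochastic gradient descent."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new random order each pass
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            grad_w = grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                # Gradient of the mean squared error over this small chunk.
                grad_w += 2 * error * xs[i] / len(batch)
                grad_b += 2 * error / len(batch)
            # Learn a little from the chunk, then move on to the next one.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

xs = [i / 10 for i in range(20)]
ys = [3.0 * x + 1.0 for x in xs]  # hypothetical data on the line y = 3x + 1
w, b = minibatch_sgd(xs, ys)
print(round(w, 3), round(b, 3))
```

How the chunks are chosen and how large each update is are the kinds of decisions that optimization theory can guide.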

“If you go through the data in a careful manner, guided by theory, you can make more effective use of the data. That may end up cutting down the amount of time it takes to train a neural network, sometimes by hundreds of times,” Suvrit says. “Optimization is what puts life into the system.”

To attract and encourage other researchers, he began organizing a workshop, specifically on optimization for machine learning, at the annual NIPS conference. “Today, after almost nine years, a large number of the cutting-edge results in optimization are being generated by people in machine learning,” he says.

Now he is working on a number of applications in collaboration with other researchers and organizations.

For example, intensive care doctors have many patients; all their cases are, by definition, serious. Yet some of these cases are more complex to treat than others. By comparing a patient’s data with a range of other cases with known outcomes, can machine learning help tell a relatively simple case apart from a more complex one? “Of course, doctors use their prior knowledge and experience to make decisions,” Suvrit says. “Can we help these expert doctors arrive at better decisions faster, to handle patients accurately and precisely?”

Another application is in smart, web-connected devices – the so-called Internet of Things. In collaboration with a local firm, Suvrit aims to design algorithms to run well on tiny devices using very low battery power. Putting machine-learning capability on such minuscule hardware calls for different methods and techniques, he explains.

LIDS, and MIT in general, are dynamic places that encourage such diverse activity, he says. “One of the things I like about MIT is that it’s a very high-energy place, and that suits my temperament. I don’t have to be embarrassed to be a math geek here!” Students help develop his ideas; projects with collaborators can sometimes arise spontaneously. “One of the hardest parts is picking which questions are worth answering.”

Beyond work, Suvrit enjoys hiking, dabbling in foreign languages (he taught himself German before he went to Germany), and Urdu poetry. He also tinkers with pure-mathematics problems for fun, often answering questions on the mathematics website MathOverflow.

“It’s the only social media I care about,” he says. “While answering other people’s questions on the website, I end up discovering answers to my own questions.” In mathematics and computer science, pure and applied, it seems, it helps to have that high-level view.

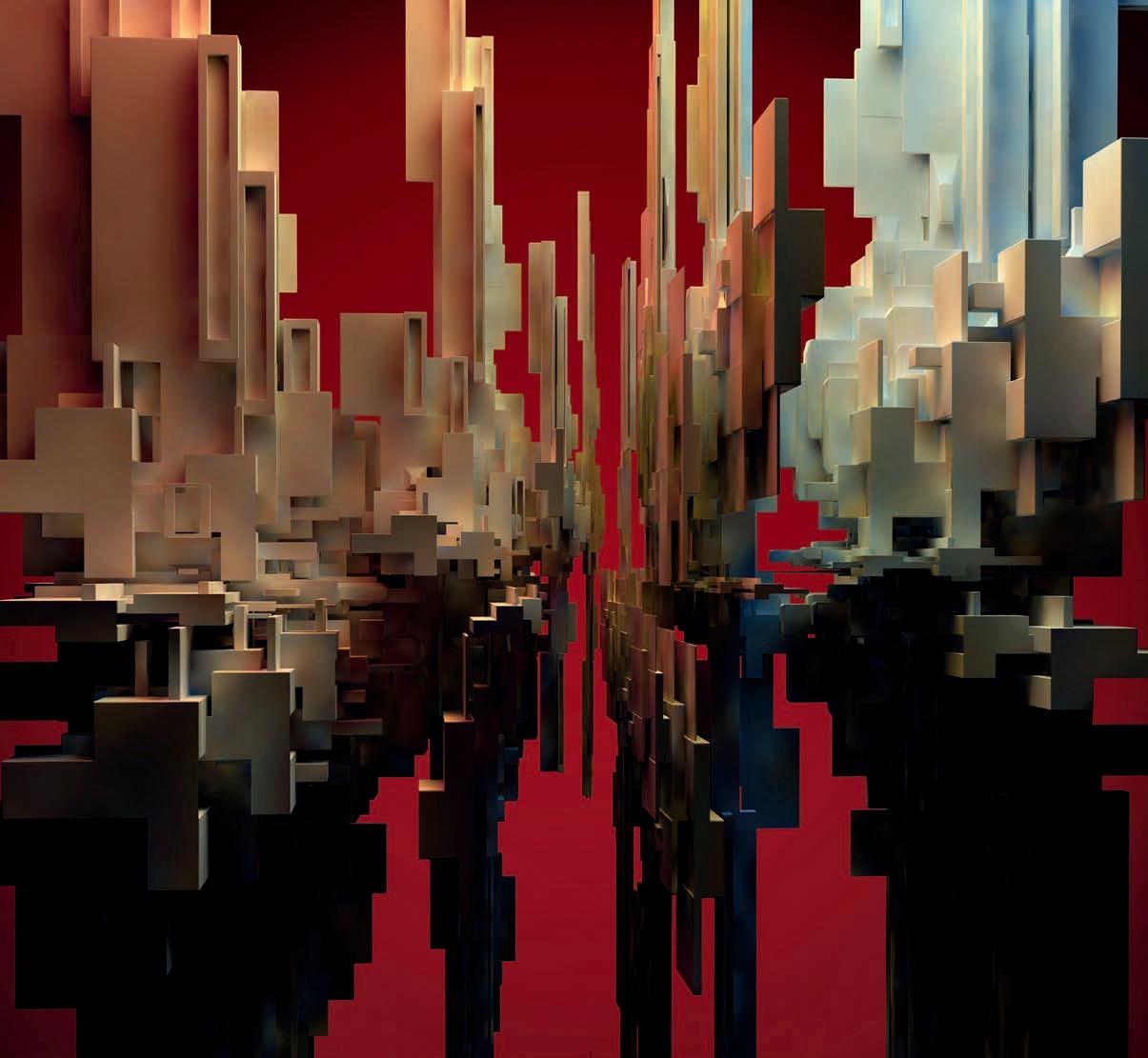

Looking at All the Angles

By Jennifer Donovan

Swati Gupta’s passion is finding connections that transcend boundaries. Central to her work, she looks at a problem from as many different angles as possible – applying methodologies and techniques from a range of disciplines – to find new insights and relationships. “A professor of mine once told me that often the extremely important papers in a field are those that discover latent connections between two different areas,” she says. “Drawing these connections has always been exciting to me.”

For instance, what do competing search engines, governments trying to catch smugglers, and the battles in Game of Thrones have in common? For Swati, it is that within each scenario you can find a two-player zero-sum game (games in which one player winning means the other must lose) with a vast number of playing strategies from which to choose.

To get a sense of this, think of rock-paper-scissors but using actual rocks, paper, and scissors. It is easy to find the optimal playing strategy if each of these is available in limited quantity. However, if there are more rocks, paper, and scissors available than you can count in any reasonable amount of time, how do you decide the best play to make? It’s a complex question that can be approached using powerful mathematical techniques. “We solve these games taking ideas from combinatorial optimization, convex optimization, and online learning,” says Swati. “It’s fascinating to see how these all give us different insights and bring in a multitude of applications.”

Going back to the Game of Thrones example, imagine a battle is about to take place in King’s Landing. Within King’s Landing there are key strategic points that the protecting army must guard, and it is these same points that the invading army will try to reach. In devising their strategy, the protecting army will try to predict the invading army’s route and position themselves to intersect it as much as possible. Conversely, the invading army will try to predict the route that minimizes intersection, keeping them away from the protecting army as much as possible. What is the best strategy for each army to use? In her research, Swati frames this as an example of a two-player zero-sum game, where each army is considered a player.

Another type of problem that can be solved within the two-player zero-sum game framework is dueling algorithms. For instance, consider two search algorithms that rank web pages, like those behind Google or Bing. Suppose that for a given phrase one search engine, Search Engine A, has developed a probability distribution that represents the fraction of users looking for each of the resulting pages. In this example we’ll use the phrase “famous artists” and say that 20 percent of people are looking for Picasso in their search results, 15 percent for Dali, and so forth. To maximize customer satisfaction, Search Engine A would provide a greedy ranking of pages, such that the most popular (Picasso, in this case) shows up at the top of the results. What happens, though, if we add a competing search engine, Search Engine B, to the mix? What strategy might Search Engine B use to attract more customers than Search Engine A? With knowledge of the probability distributions, Search Engine B could remove the most popular search result, knowing that Search Engine A will get that 20 percent of the customers. However, by moving up all of the other results (with Dali at the top, now), Search Engine B will get the remaining 80 percent. Swati views this part of her work with game theory as a foray into analyzing competing algorithm scenarios. Such scenarios come up not just in search engines but in many things we use in our day-to-day lives, like recommendation systems (e.g. Yelp) and routing applications (e.g. Waze).
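The 20/80 split in the search engine example can be checked with a few lines of code. The sketch below rests on one modeling assumption that the article leaves implicit: each user goes to whichever engine ranks the page they want higher, and ties are split evenly. Only the figures for Picasso and Dali come from the example; the other artists and their percentages are invented so that the distribution sums to 100 percent.

```python
def market_share(demand, ranking_a, ranking_b):
    """Return (share_a, share_b) when users pick the engine that ranks their page higher."""
    pos_a = {page: i for i, page in enumerate(ranking_a)}
    pos_b = {page: i for i, page in enumerate(ranking_b)}
    share_a = share_b = 0.0
    for page, prob in demand.items():
        if pos_a[page] < pos_b[page]:
            share_a += prob
        elif pos_b[page] < pos_a[page]:
            share_b += prob
        else:  # same position on both engines: split these users evenly
            share_a += prob / 2
            share_b += prob / 2
    return share_a, share_b

demand = {
    "Picasso": 0.20, "Dali": 0.15,            # from the example
    "Van Gogh": 0.14, "Monet": 0.13,          # hypothetical from here on
    "Kahlo": 0.12, "Warhol": 0.11,
    "Rembrandt": 0.08, "Matisse": 0.07,
}

# Search Engine A: greedy ranking, most popular page first.
greedy = sorted(demand, key=demand.get, reverse=True)
# Search Engine B: drop the top page to the bottom and move everything else up.
shifted = greedy[1:] + greedy[:1]

share_a, share_b = market_share(demand, greedy, shifted)
print(round(share_a, 2), round(share_b, 2))
```

Under this model, Search Engine A could respond with a shift of its own, which is why the interaction is naturally studied as a game.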

Once she has set these problems up as two-player zero-sum games, Swati uses a range of methods to solve them. Two related techniques are multiplicative weights update (MWU) and mirror descent. Both are online learning algorithms, meaning that a question is answered (What is the best route, given that we don’t know the actual congestion?) using knowledge of previous outcomes (Main Street has heavy traffic during rush hour, so getting home this way takes longer than it might), as that knowledge is acquired. (We used Main Street on Monday but now that we know it can be congested at peak hours, on Tuesday let’s try Pine Street. Even though the distance may be longer, it could take less time). Here, the connection with games is that any online learning algorithm (with some nice properties) can be used to help both players learn the optimal strategies for two-player zero-sum games.

In the MWU method, the weights of different actions are updated each time a scenario is run, yielding a probability distribution over actions that increasingly favors the better ones. In the example above, the decision to try Pine Street might have come about because Monday’s information indicated a lot of congestion, and so the weight of “Main Street” (higher delay due to traffic) was reduced relative to the weight of “Pine Street” (lower delay due to distance). In her research, Swati shows how to update these weights in a reasonable amount of time even if the number of actions is very large.
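A minimal sketch of the weight update for the two-route example follows. It uses one common form of the rule, in which each weight is multiplied by (1 − η × loss), with losses scaled to lie between 0 and 1. The step size η and the daily delay figures are hypothetical, and the sketch handles only two actions, not the very large action sets that Swati’s research addresses.

```python
def multiplicative_weights(loss_rounds, eta=0.5):
    """Run MWU; return the final probabilities and the probabilities used each round.

    loss_rounds: list of per-round loss lists, one loss in [0, 1] per action.
    """
    n_actions = len(loss_rounds[0])
    weights = [1.0] * n_actions
    history = []
    for losses in loss_rounds:
        total = sum(weights)
        history.append([w / total for w in weights])  # how we would play this round
        # Shrink each action's weight in proportion to the loss it suffered.
        weights = [w * (1 - eta * loss) for w, loss in zip(weights, losses)]
    total = sum(weights)
    return [w / total for w in weights], history

# Hypothetical delays over five days, scaled to [0, 1]:
# Main Street is congested (0.9), Pine Street is longer but clearer (0.4).
rounds = [[0.9, 0.4]] * 5
probs, history = multiplicative_weights(rounds)
print("Main Street:", round(probs[0], 3), "Pine Street:", round(probs[1], 3))
```

Both routes start with equal weight, and the probability of choosing Pine Street grows with every congested day on Main Street.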

MWU is a special case of a more general optimization method called online mirror descent, which is also an iterative learning algorithm. You begin with a point inside the set of feasible decisions that represents one possible play. You make the play, observe your losses, and modify your strategy in a direction that would decrease the losses, with the hope that a new and improved point in the decision set is found. However, it’s possible that this move puts you outside the bounds of the decision set. Calculating the shortest path back to a point in the decision set that makes the most sense (i.e. is closest to the point representing the optimal strategy) is now a convex minimization problem. Swati developed an algorithm, Inc-Fix, that can solve this optimization problem for a general class of decision sets (called submodular base polytopes).
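The "move, then find the way back into the decision set" step can be illustrated on the simplest decision set, the probability simplex (non-negative numbers that sum to one). The sketch below is not Inc-Fix and does not handle submodular base polytopes. It uses a well-known sort-based routine for the Euclidean projection onto the simplex, and the Euclidean special case of mirror descent, which is projected gradient descent. The starting point, losses, and step size are hypothetical.

```python
def project_to_simplex(v):
    """Return the point of the probability simplex closest (in Euclidean distance) to v."""
    u = sorted(v, reverse=True)
    cumulative = 0.0
    theta = 0.0
    for k, u_k in enumerate(u, start=1):
        cumulative += u_k
        candidate = (cumulative - 1.0) / k
        if u_k - candidate > 0:
            theta = candidate  # keep the shift from the largest k that passes the test
    return [max(x - theta, 0.0) for x in v]

def projected_step(x, loss_gradient, eta):
    """Move against the loss gradient, then project back into the decision set."""
    moved = [xi - eta * gi for xi, gi in zip(x, loss_gradient)]
    return project_to_simplex(moved)

x = [0.5, 0.5]            # current mixed strategy over two actions
gradient = [1.0, 0.0]     # hypothetical losses: action 0 did badly
x_new = projected_step(x, gradient, eta=0.6)
print(x_new)
```

Here the raw move lands at (−0.1, 0.5), which is not a valid probability vector; the projection returns the nearest valid strategy. For richer decision sets, this projection is the convex minimization problem that needs a specialized algorithm.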

The Inc-Fix algorithm can be visualized by graphing gradient values against corresponding building blocks (elements) of the strategies. You begin by increasing the elements with the lowest gradient value until you hit a tight constraint (a boundary in the decision set), then you fix the elements that are tight and continue increasing the rest. “I really like this visualization,” says Swati. “You can think of it like filling water in a connected series of containers placed at different heights.”

“Approaching the problem of solving games via online learning led us to develop new insights that improve the state of the art of online learning. Getting deeper into the algorithm of mirror descent led us to develop Inc-Fix to do convex minimization,” says Swati. “This added more applications that we could have an impact on, some of which are in machine learning, some of which I’m still discovering. And that is exciting to me.”

Gracious, energetic, and quick to smile, Swati came to MIT after getting her bachelor’s and master’s degrees in Computer Science and Engineering at the Indian Institute of Technology Delhi. “I’m from a very academic family, full of engineers and professors! Debating and puzzle-solving has been a part of growing up. I also love doing anything and everything creative - and research lets one be as creative as one can be. So, I want to do this for the rest of my life!” she says. She was drawn to MIT by its immense intellectual energy and its collaborative environment. Apart from her advisors, professors Michel Goemans and Patrick Jaillet, Swati has collaborated with a number of people at MIT including professors Dimitris Bertsimas and Georgia Perakis on robust inventory routing and dynamic pricing problems. (She has a dual affiliation with MIT’s Operations Research Center and LIDS.)

She recalls that while working with Michel, he once told her, “I need you to be critical of every step in the proof.” This idea of applying critical thought to her work is something Swati carries over into other areas of her life, as well, including her many creative projects. Perhaps the most notable of these is her panoramic photography, which she does using her iPhone. Instead of remaining stationary while moving the camera, she realized that to get a panoramic shot all she needed was relative motion. This meant she could be moving while the phone

continued on page 40.

How Robust Algorithms Can Change the World

By Katie DePasquale

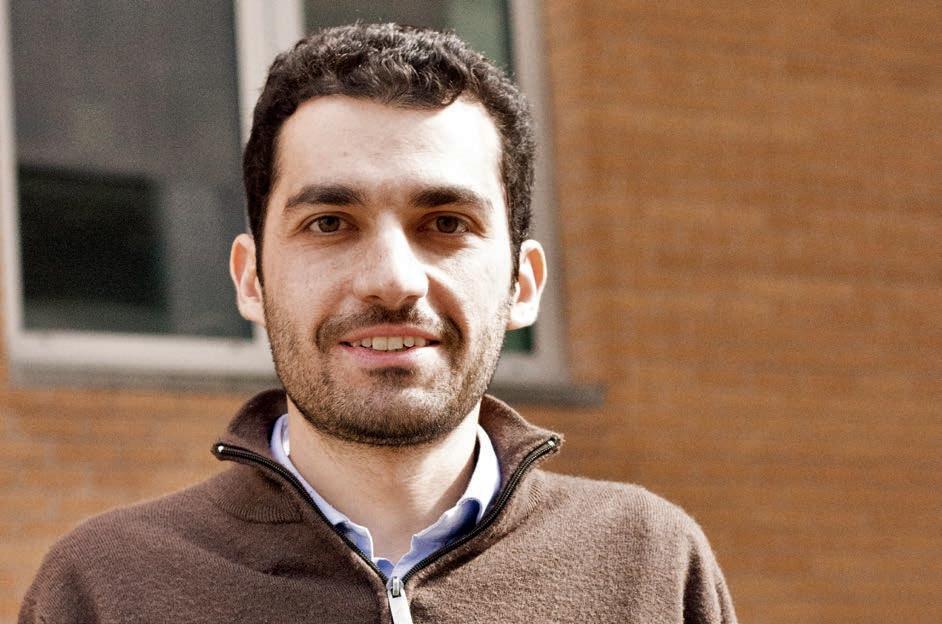

“Choose a job you love, and you will never have to work a day in your life,” Confucius said, and Luca Carlone has certainly found a job he loves. He came to LIDS in July of 2015 as a post-doctoral associate and was promoted to Research Scientist in June of 2016. His work is focused on robot perception and on the particular challenges of Simultaneous Localization and Mapping (SLAM), which is, to put it simply, when a mobile robot builds a map of a new environment while concurrently using that map to move through it. Listening to Luca talk, it’s clear that he never gets tired of his work: “The nice thing about robotics is that you can find so many different aspects that you never get bored.” If he needs a break from the mathematics involved in creating SLAM algorithms, he steps away to build a robot, write some code, or fly a small drone.

Originally from Italy, Luca was first introduced to robotics as an undergraduate at the Polytechnic University of Turin. Since then, he has been fascinated by industrial robotics such as the machines that assemble cars in a factory, an interest that led him to study mobile robotics (the self-driving car, for instance). He always wanted to make a positive impact on the world through his research, and this is why he focused on applied robotics. After getting his bachelor’s degree in Mechatronic Engineering in 2006, he received master’s degrees from both the Polytechnic University of Turin and the Polytechnic University of Milan. He then got his Ph.D. in Robotics from the Polytechnic University of Turin in 2012 before moving to the United States to explore the burgeoning opportunities here. He collaborated for two years with Frank Dellaert at Georgia Tech’s Borg Lab, studying robotic perception and computer vision, then he headed north to MIT to work with Sertac Karaman. “It’s a great mix because my supervisor is an expert in motion planning and I am an expert in perception, so we are trying to combine things,” Luca says. Luca focuses on getting the map of the environment correct, Sertac on helping the robot navigate that environment, so that together they are addressing a wide range of the issues that can arise in applied robotics.

Although Luca is working on multiple research projects at any given time, at the moment he has two main projects. One of them he doesn’t much discuss as it involves military applications; the other is related to the application of robotic technology in very tiny robots. “[I’m] interested in the challenges connected to scaling down these robots,” he says. These challenges are quite complex: “They [the tiny robots] cannot carry much load, so you cannot put a lot of computation on board, or a lot of sensors. So it’s harder to make the robot aware of what’s in its environment.” These are a few examples of the challenges of designing algorithms that allow a robot to understand and build a model of the world around it. The resource constraints are tricky to overcome and, in some cases, even to explain because humans perform so many of these world-building calculations without realizing it. “It’s surprisingly hard to tell people how they do these tasks because a human does this kind of job all the time without any trouble, so you can imagine that you’re using your eyes, you’re using touch to figure out what’s around you…and you mix all these sources of information into a coherent representation of the environment.” Robots can do these things only to a limited extent. Additionally, as Luca points out, “they have to be able to do them efficiently and with limited information because the robot can’t spend hours number crunching before performing its intended function.”

These days the functions and applications for robots may seem endless, and that’s part of the fun of Luca’s work. While his Ph.D. focused on a standard view of robotics including the four Ds (robots perform Dangerous, Dirty, Dull, and Dumb tasks), now he looks at different motivations. The current norm with robots is using them to move goods in a warehouse, to dispose of bombs, or to explore space; what’s needed going forward includes applications for intelligent transportation (there’s the self-driving car again), pollution monitoring in the form of situationally-aware drones, and precision agriculture. Given the projected population explosion over the next 40 years, Luca believes that the agricultural systems we have in place today can only keep up with demand through technology. Robots can be designed to monitor crop growth (this was part of his research at Georgia Tech) and to spray pesticides, among other duties, in a way that even huge teams of people could not.

However, Google’s self-driving car indicates some of the perils in transitioning these types of robots into industry and unveiling them to the public. The “real maturity of a technology,” Luca points out, “is often hard to assess, and users’ perceptions and expectations are often not aligned with what the robot can do.”

“These robotic technologies are a good idea always in the long term, meaning that, when the technology is ready, we will really see a boost in performance between a human driver and a self-driving car,” he says. “The thing about the perception of the maturity of the technology is a big issue. [With Google’s self-driving car], everything seemed to be solved, and the technology seemed to be in good shape and ready for market, but if you see the news from [late March], the same people from Google are saying that you have to be careful because in a very specific environment, the technology is close to being delivered, but there are still many open problems, and for some places around the globe, it may take 30 years.”

Key to all of Luca’s work, both now and going forward, is making the existing algorithms for robots more robust. “An algorithm is software that is processing a bunch of information and giving an answer. People get very excited when using algorithms, but…showing that your algorithm works in a single instance does not mean that it works in general.” Humans may be good at selectively filtering huge amounts of information and processing it appropriately with little conscious thought, but for robots, “the amount of information that you need to put in your map representation depends on the task that the robot has to do.” The goal, in other words, is not to have a robotics expert sitting in a room designing every possible application; it’s to create a robot smart enough to figure this out for itself. For this to happen, though, the expert must first be able to tell the robot what to watch for since it will have limits on what it can pay attention to, no matter how many sensors it has and how much data it can take in.

For Luca, LIDS is the perfect place to explore these questions. “Here,” he says, “the only real constraint is time.” There are so many great seminars and talks to attend, so many colleagues to pair up with for projects and for hanging out, that time management becomes a necessary skill. Luca also appreciates how easy it is to interact with people in other departments, partly because of how well-located LIDS is, but most of all he fully agrees with the mission of LIDS: “to produce strong theoretical contributions which have a huge impact on real life. That’s exactly what I want to do: something that is grounded in scientific research but that becomes a real thing that helps people.”

DIAGNOSING THE PROBLEM

By Grace Chua

It’s mid-morning on a Wednesday, and the week is in full swing. Final-year graduate student Hamza Fawzi, 28, emerges from the seventh-floor office he shares with two other students.

The office is decidedly lived-in: a bicycle sits in one corner, hockey gear in another. Hamza’s own desk is littered with scraps of scratch paper and a stack of large spiral-bound notebooks. This is where he does most of his thinking.

Hamza, whose advisor is Electrical Engineering and Computer Science Professor Pablo Parrilo, thinks a great deal about what’s called convex optimization. Optimization, as the name suggests, is picking the best solution or element possible from a set of available choices. Convex optimization is when the set of choices has certain properties and constraints that give it, in technical terms, a ‘convex’ geometry. Picture a ball or a cube: any two points within the ball or cube can be joined by a line that is also entirely within that structure.
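The "line between any two points" picture can be checked numerically. The short Python sketch below is only an illustration: the two sets (a unit disk and a ring with a hole in the middle) and the function names are made up for the example, and sampling points along a segment can reveal non-convexity but is not a proof of convexity.

```python
def in_unit_disk(point):
    """A convex set: all points within distance 1 of the origin."""
    return sum(c * c for c in point) <= 1.0


def in_ring(point):
    """A non-convex set: the unit disk with a hole of radius 0.5 removed."""
    squared_radius = sum(c * c for c in point)
    return 0.25 <= squared_radius <= 1.0


def segment_stays_inside(a, b, contains, samples=50):
    """Check sampled points lam*a + (1 - lam)*b for lam between 0 and 1."""
    for i in range(samples + 1):
        lam = i / samples
        point = [lam * x + (1.0 - lam) * y for x, y in zip(a, b)]
        if not contains(point):
            return False
    return True


left, right = [-0.9, 0.0], [0.9, 0.0]
print(segment_stays_inside(left, right, in_unit_disk))  # segment stays inside
print(segment_stays_inside(left, right, in_ring))       # segment crosses the hole
```

Both endpoints belong to both sets, but only in the disk does the whole segment between them stay inside.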

In real-world terms, Hamza explains, the set of choices might look like this: Let’s say your goal is to control a robot in the best way possible. You have a number of inputs, but they have constraints: the robot’s motor can only rotate so fast, its arms move within a certain arc, and so on. What he’s trying to do is understand the properties of a set of available choices like these, and in doing so, to help engineers easily pick the best way to control the robot.

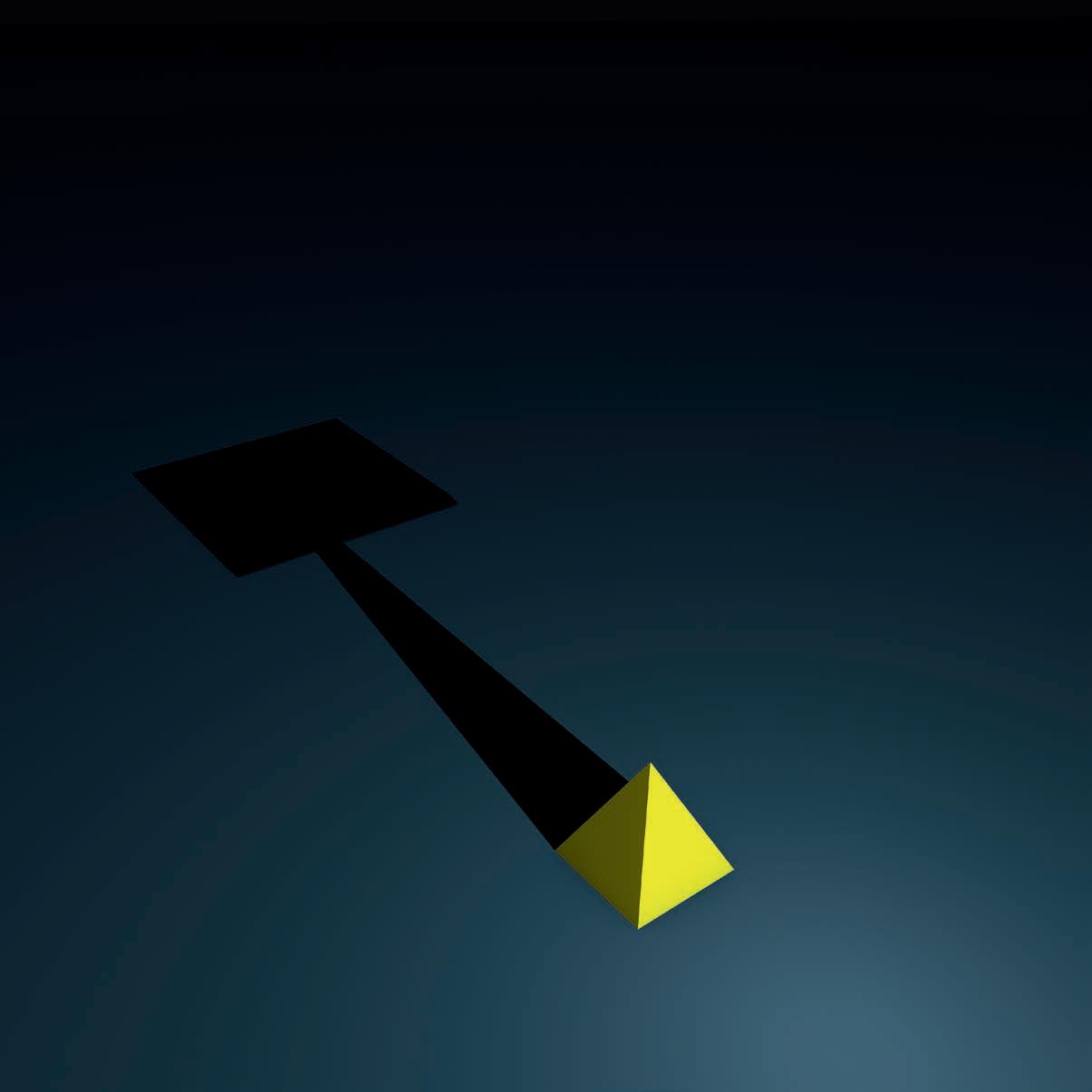

Certain techniques exist to solve these kinds of problems. One family of techniques is called lifting. Lifting techniques ask: can a given set of choices be understood as the projection of a simpler, higher-dimensional set? (Imagine a solid pyramid, casting a square shadow.) Can solving for that simpler set then allow you to solve the original optimization problem faster?
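A standard textbook illustration of lifting, not taken from Hamza's own work, is the set of points whose absolute coordinates sum to at most 1. Described directly in n dimensions it needs 2^n linear inequalities (one per choice of signs), but it is the "shadow" of a set in 2n dimensions that needs only 2n + 1 inequalities. The Python sketch below compares the two descriptions; the function names and test points are assumptions made for the example.

```python
from itertools import product


def in_set_direct(x):
    """Direct description: s . x <= 1 for every sign vector s (2**n checks)."""
    return all(
        sum(sign * xi for sign, xi in zip(signs, x)) <= 1.0
        for signs in product((-1, 1), repeat=len(x))
    )


def in_set_lifted(x):
    """Lifted description: some t exists with -t_i <= x_i <= t_i, sum(t) <= 1.

    The smallest valid t is t_i = |x_i|, so it serves as the witness.
    """
    t = [abs(xi) for xi in x]
    return sum(t) <= 1.0


inside_point = [0.2, -0.3, 0.4]
outside_point = [0.6, -0.6, 0.1]
for candidate in (inside_point, outside_point):
    print(candidate, in_set_direct(candidate), in_set_lifted(candidate))
```

For three coordinates the saving is small (8 inequalities versus 7), but for 30 coordinates the direct description needs over a billion inequalities while the lifted one needs 61.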

An analogous scenario is the so-called ‘Netflix problem’. The movie-streaming service needs to figure out what each of its 75 million or so users might like to watch next.

“Each user seems unique, but there may be a small number of latent, or hidden, patterns that explain the complexity of all the users’ behavior,” Hamza says. That’s the idea behind targeted user profiles. For instance, users who are male, own a dog, and stream things every week might enjoy romances, users who are female, watched a comedy last month, and stream things every night might enjoy comedies, and so on.

The challenge is to identify the underlying profile patterns that are hidden in the data. Part of Hamza’s work is to figure out ways to determine, for a given data set, whether such patterns exist and if so, how to identify them.

“The point of my work is to come up with a ‘simple’ way to tell whether a set is inherently hard” - that means it can’t be understood in terms of a projection or small number of profiles - “and develop tools to do this.”

That has applications beyond helping a video streaming site deliver better user choices. It can help businesses plan logistics, roboticists program machines efficiently, or doctors pick nascent tumors out of a brain scan and accurately tell them apart from something more benign, to name just a few examples.

In fact, part of the topic’s appeal to Hamza is that it has so many applications in the first place. While he’s personally more interested in the mathematical fundamentals of convex optimization, he says, “The reason I like optimization is it’s always useful. I like talking with people who are users of optimization. Nearly every week at LIDS, there’s a seminar on a different, related application – I like learning about all of these.”

In particular, he says, optimization is getting a lot of attention now in machine learning. “You want very efficient algorithms because they have to work with very large data sets.” Any inefficiency can eat up precious computing power and resources.

Part of Hamza’s work is also to study so-called convex relaxations. “If you have a problem that is non-convex, convex relaxations are systematic ways of converting it to a convex problem.” Take a power grid, for example. Working out the optimal grid operating conditions under constantly changing load constraints is a non-convex problem, and one that power companies have to solve every hour. In practice, you can use convex relaxations to tackle this problem and retain enough accuracy to keep the grid running safely.

“This is a very hot area, because of smart grids and large grids – you need to solve these problems efficiently,” Hamza says. “From a mathematical point of view, we want to know: when does this technique work? What characteristics of a problem indicate that it would be solvable by convex relaxation?”

We head back upstairs to Hamza’s office. His office-mates, Jennifer Tang and Omer Tanovic, aren’t there right now, but Hamza says he enjoys bouncing ideas around with them. “No two of us have the same advisor, so talking to each other keeps us up to date [across related fields], which is very useful. The interdisciplinary aspect of LIDS is great for this,” he adds.

Hamza’s two brothers also play the ideas-tennis game with him from afar. An older brother is a professor of computer science; a younger brother is a PhD student in Switzerland. The formidably-educated trio were raised in Cairo by an Egyptian father and French mother who encouraged their love of mathematics and science.

It was his older brother, in fact, who inspired Hamza to work on a problem and implement a new functionality in existing convex optimization software. “He said, there’s no solver that handles this particular function; so together with a colleague [former LIDS PhD student James Saunderson], we worked out the theory and wrote a piece of code in Matlab that performs this function.” Useful bits of code are put up on Hamza’s website for all to use, in the hope they’ll have some broader impact.

Besides ideas-tennis, Hamza also enjoys the real thing, along with soccer – he has been the athletic co-chair of the Electrical Engineering and Computer Science department, captaining and playing center midfield on its intramural soccer team.

Hamza defended his thesis in the summer of 2016. This fall he will go on to Cambridge University, joining the newly established Cantab Capital Institute for the Mathematics of Information as a lecturer. There, Hamza hopes to help build an optimization group in the new institute. “I’d like to continue working on these aspects of mathematics of information,” he says. “Optimization is really critical.”

You’re new to both MIT and LIDS – how have you found it so far?

I find it great! MIT is a big institution but it feels like a small community because people are very nice and welcoming. They speak well about the Institute—they’ve been here 6, 10, 15 plus years—which speaks volumes of the Institute and how well it treats its employees.

At LIDS I assist the director, Asu Ozdaglar, and oversee events within the lab. My favorite part about working here is the interactions with everybody and how there’s so much culture. Even within just the LIDS staff, there are so many people from different places around the world. I enjoy talking to them about where they’re from. When people talk about their background and culture they get animated and you really get to know more about them.

What did you do before you came to LIDS?

For ten years I worked at the Four Seasons. That was combined with six years in the Boston property and four years in D.C., mostly working on events. Both properties are very well established and extremely fast paced. You needed to think quick on your feet while not compromising the guest’s overall experience, seeing everything as a whole. It was exciting to work with celebrities, moguls, politicians and even the United States Secret Service.

How did you find the transition from hospitality to academia?

Everything in the hospitality world is so fast paced that coming here is a breath of fresh air—total night and day. Even the office setting itself is so quiet. I’m used to being in an open setting where everybody has free range of every cubicle. There really was no emailing – you’d just shout over the cube to ask a question. Here it is sometimes quite fast-paced, as well. But there are also events, like the symposium for Alan Willsky, or the LIDS Student Conference, that are planned ahead of time.

You have a lot of events experience – do you think you want to keep doing that kind of work long-term?

I would like to stay working with events – I get the idea of it and I find it fun. In event planning you can’t avoid the paperwork but what I really enjoy is seeing the event itself. So, maybe being the person who oversees and executes events. I really enjoyed building relationships being face to face with clients when I worked at the hotel. I’ve worked in different fields (catering, fashion, payroll, hospitality). Life presents new opportunities along the way. I certainly don’t rule anything out.

What do you do when you’re not at the office?

I love to travel! That’s one of my biggest passions. Aside from coming home to be with family, that was one of the big reasons why I moved back to Massachusetts. I was spending all my vacation time coming back here to see friends and family. I felt as though I was not allowing myself the opportunity to explore what the world has to offer. Now I plan to take at least 4-5 trips a year.

Do you have a favorite trip you’ve taken?

I have two favorite trips. One: Paris, because it’s Paris, enough said. Two: I would say Mexico. I went to Punta Mita, which is on the Western side of Mexico. It was one of the best, most relaxing vacations I’ve had in a long time and the food was just out of this world.

Statistical Inference under the Willskyan Lens

In March 2016, over 100 friends, colleagues, and students attended Statistical Inference under the Willskyan Lens, a symposium celebrating the exceptional career of Alan Willsky. The event was opened by Munther Dahleh (MIT) and featured technical talks by Roger Brockett (Harvard), Steve Marcus (University of Maryland), Nils Sandell (DARPA), Albert Benveniste (INRIA), Bill Irving (Fidelity), Martin Wainwright (UC Berkeley), Emily Fox (University of Washington), and Venkat Chandrasekaran (Caltech). This group, together with Al Oppenheim (MIT), who spoke at the symposium banquet, represented not only Alan’s intellectual impact on the field of information and decision sciences, but also a community of researchers to whom Alan has been an instrumental collaborator and mentor.

The symposium’s closing remarks were delivered by Alan, who was introduced by LIDS director Asu Ozdaglar with thanks for his many years of leadership at LIDS. Alan’s talk covered some of the highlights of his career, and acknowledged the many people who helped, challenged, and inspired him along the way.

For more details about the symposium, visit: http://willskyan-lens.lids.mit.edu/

LIDS Awards & Honors

Awards

Congratulations to our members for the following achievements!

Profs. Daron Acemoglu, Munther Dahleh, and Emilio Frazzoli (together with collaborators Giacomo Como from Lund University and Ketan Savla from USC) were awarded the IEEE Control Systems Society’s 2015 George S. Axelby Outstanding Paper Award for their paper “Robust Distributed Routing in Dynamical Networks–Part II: Strong Resilience, Equilibrium Selection and Cascaded Failures.”

Prof. Munther Dahleh received a 2017 IFAC Fellow Award “for contributions to learning, analysing, and synthesis of resilient networked decision systems.”

Prof. G. David Forney, Jr., was awarded the 2016 Institute of Electrical and Electronics Engineers (IEEE) Medal of Honor, the highest award bestowed by the IEEE.

Prof. Sertac Karaman received the Army Research Office Young Investigator Program Award for his proposal “Foundations of Statistical Methods for the Control of Far-from-equilibrium Driven Complex Dynamics and Systems.”

LIDS PIs Pablo Parrilo and Mardavij Roozbehani, together with Amir Ali Ahmadi (Princeton) and Raphael Jungers (UCLouvain), received the 2015 SIAG/CST Best SICON Paper Prize for their paper “Joint Spectral Radius and Path-Complete Graph Lyapunov Functions.”

Jonathan Perry was awarded a Facebook Fellowship for Fall 2015 to Spring 2017.

Prof. Yury Polyanskiy received the Jerome H. Saltzer Teaching Award from MIT’s EECS department.

Prof. Devavrat Shah (together with collaborators Vivek Farias of MIT Sloan and Srikanth Jagabathula of NYU Stern) received the INFORMS Revenue Management and Pricing Section Prize for their paper “A Nonparametric Approach to Modeling Choice with Limited Data.”

Jennifer Tang won First Prize at the Claude Shannon Centennial Student Competition organized by Bell Labs for her work “Defect Tolerance: Fundamental Limits and Examples” with collaborators Da Wang, Prof. Yury Polyanskiy, and Prof. Gregory Wornell.

Prof. John Tsitsiklis received the 2016 ACM SIGMETRICS Achievement Award in recognition of his fundamental contributions to decentralized control and consensus, approximate dynamic programming and statistical learning.

Prof. Caroline Uhler received the Charles E. Reed Faculty Initiative Fund Award.

Honors

Prof. Jon How was selected to be a 2016 Fellow of the American Institute of Aeronautics and Astronautics (AIAA).

Prof. Sertac Karaman was promoted to Associate Professor effective July 1, 2016.

Prof. Karaman also received the Class of ’48 Career Development Chair effective July 1, 2016.

Prof. Sanjoy Mitter was elected a Foreign Fellow of the Indian National Academy of Engineering.

Prof. Asu Ozdaglar was appointed to the Joseph F. and Nancy P. Keithley Professorship in Electrical Engineering.

Prof. Pablo Parrilo was named an IEEE Fellow, the highest grade of membership in the IEEE.

Prof. Devavrat Shah was promoted to Full Professor effective July 1, 2016.

Prof. Caroline Uhler was awarded the 2015 Doherty Professorship in Ocean Utilization.

LIDS Seminars

2015-2016

Weekly seminars are a highlight of the LIDS experience. Each talk, which features a visiting or internal invited speaker, provides the LIDS community an unparalleled opportunity to meet with and learn from scholars at the forefront of their fields.

Listed in order of appearance.

Victor Preciado

University of Pennsylvania

Electrical and Systems Engineering Department

Yaron Singer

Harvard University

Computer Science

David Simchi-Levi

MIT

Department of Civil and Environmental Engineering

Rudi Urbanke

EPFL

Information Processing Group

Ulrich Krause

Universität Bremen

Mathematics Department

Young-Han Kim

Univ. of California, San Diego

Department of Electrical and Computer Engineering

Sewoong Oh

University of Illinois

Industrial and Enterprise Systems Engineering Department

Ben Recht

Univ. of California, Berkeley

Department of Electrical Engineering & Computer Sciences; Department of Statistics

Yury Polyanskiy

MIT Laboratory for Information and Decision Systems

Robert Calderbank

Duke University

Electrical and Computer Engineering Department

Sasha Rakhlin

University of Pennsylvania

Department of Statistics

Gregory Valiant

Stanford Computer Science Department

Adam Kalai

Microsoft Research New England

Robert Berwick

MIT Laboratory for Information and Decision Systems

Albert Benveniste

INRIA

Meir Feder

Tel-Aviv University

School of Electrical Engineering

Dimitris Achlioptas

University of California, Santa Cruz

Computer Science Department

Cédric Langbort

University of Illinois, Urbana-Champaign

Aerospace Engineering Department

Ramon van Handel

Princeton University

Department of Operations Research and Financial Engineering

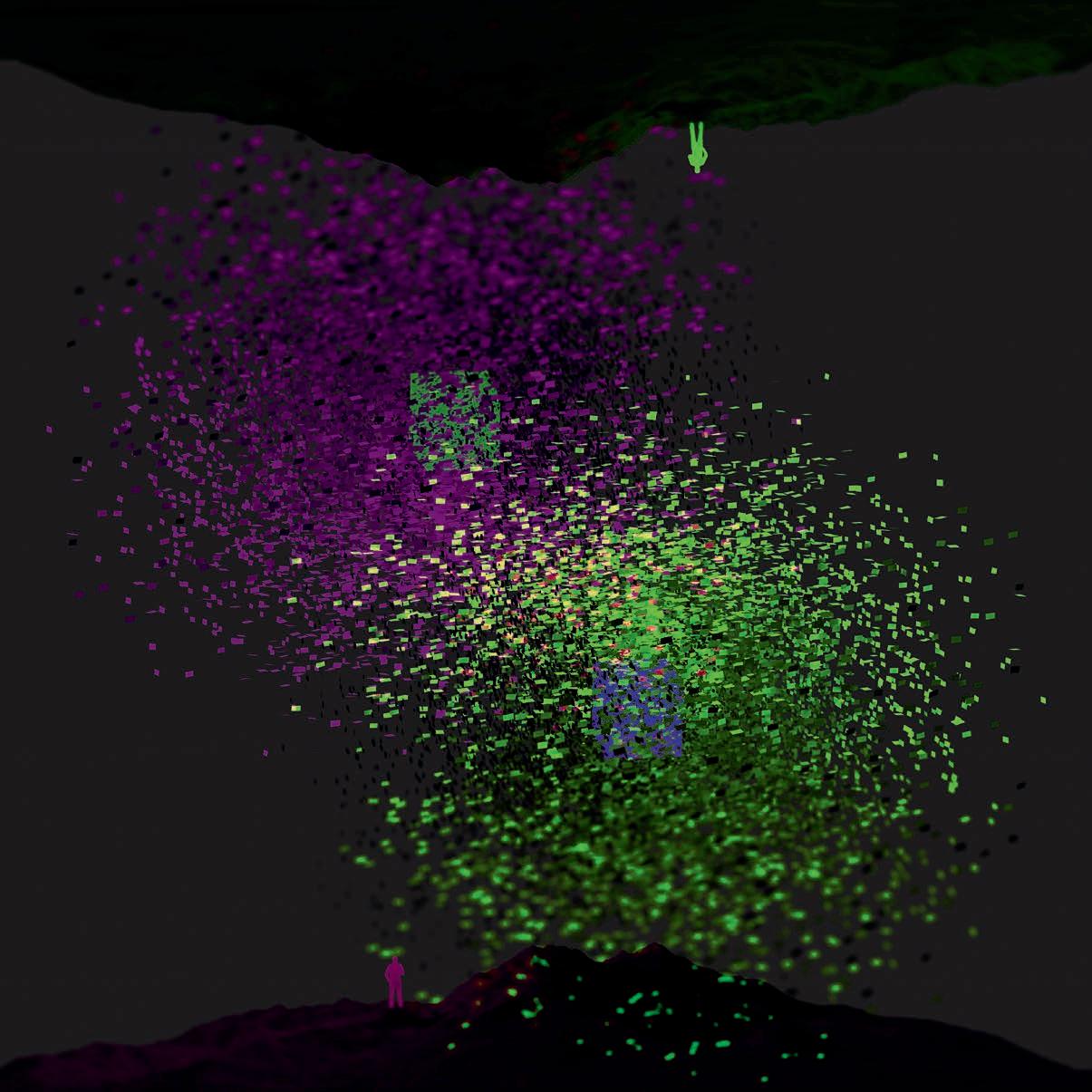

2016 LIDS Student Conference

The annual LIDS Student Conference is a student-organized, student-run event that provides an opportunity for graduate students to present their research to peers and the community at large. The conference also features a set of distinguished plenary speakers representing different core research disciplines in the Lab. The 2016 Student Conference marks the 21st year of this signature Lab event.

ORGANIZING COMMITTEE

Student Conference Chairs

Jennifer Tang

Austin Collins

Qingqing Huang

Committee Members

Aviv Adler

Nirav Bhan

Diego Cifuentes

Igor Kadota

Christina Lee

Fangchang Ma

David Miculescu

Hajir Roozbehani

Shreya Saxena

Ian Schneider

Dogyoon Song

Omer Tanovic

SPEAKERS

Aviv Adler

Amir Ajorlou

Nirav Bhan

Trevor Campbell

Diego Cifuentes

Austin Collins

Mathieu Dahan

Prof. Dean Foster

Chong Yang Goh

Qingqing Huang

Igor Kadota

Christina Lee

Quan Li

Qinkai Liang

Zhenyu Liu

Fangchang Ma

David Miculescu

Prof. Olgica Milenkovic

Beipeng Mu

Prof. Yannis Paschalidis

Hajir Roozbehani

Shreya Saxena

Ian Schneider

Rahul Singh

Dogyoon Song

Rajat Talak

Jennifer Tang

Omer Tanovic

Valerio Varricchio

Prof. Stephen J. Wright

Sze Zheng Yong

Martin Zubeldia

PANELISTS

Prof. Peter Falb

Prof. Ali Jadbabaie

Prof. Stefanie Jegelka

Prof. Alexander Rakhlin

Prof. Alan Willsky

Moderated by Prof. Munther Dahleh

Welcome Guy Bresler & Caroline Uhler

Guy Bresler is the Bonnie and Marty (1964) Tenenbaum Career Development Assistant Professor. His research investigates the relationship between combinatorial structure and computational tractability of high-dimensional inference in graphical models and other statistical models. Some of his recent work studies how best to learn a graphical model from data, and how data and computation requirements can be reduced if the model is to be subsequently used for a specific inference task. Guy is also interested in applications of these methods, especially to recommendation systems and computational biology.

LIDS is delighted to welcome two new faculty members to the

Caroline Uhler joined LIDS as an assistant professor. Her research focuses on mathematical statistics, in particular on graphical models and the use of algebraic and geometric methods in statistics, and its applications to biology. Current projects include the development of causal inference algorithms to infer gene regulatory networks, the development of ellipsoid packing algorithms to study the spatial organization of chromosomes, and the study of Brownian motion models for phylogenetic inference using quantitative traits.

Both Guy and Caroline joined MIT and LIDS in Fall 2015.

needed, so they could direct their research efforts. “I also learned a lot about how you build a company, and how you make it so that everybody in that company feels that they can move the dial, they can affect the future of the company, and that they’re appreciated for it. That is extraordinarily important to me,” Alan adds. By 2004, when the company was acquired by BAE Systems, Alphatech had become a major provider to the federal government of advanced information technologies.

Alan has used the wisdom he’s gained over the course of his career in part as a leader of LIDS, as well, serving as Assistant Director (1974-81), Acting Director (2007-08), Co-Director (2008-09) and Director from 2009 until he retired in 2014.

For the past two years or so, Alan has been a “professor, post-tenure.” Though he has given up a tenured position, he is still able to be principal investigator on grants, and keeps an office at LIDS, working about half time. In his spare time, Alan and his wife, artist and illustrator Susanna Natti, have remodeled her childhood home above a quarry in Gloucester, Mass., and traveled to spots as diverse as the Panama Canal and the Serengeti.

As he reflects on his career, it is clear that Alan’s students are a tremendous source of pride – he enjoys sitting on the doctoral committees of students whose theses he wants to learn more about, and watching former students apply their knowledge to areas as diverse as the Internet of Things and the prediction of housing prices. “I’m often surprised and delighted by the applications that I see students of mine working on,” he says.

Meanwhile, Alan continues to consult for private companies, figuring out ways to structure and build models from a mixed bag of heterogeneous data such as physical sensors and Tweets.

And one of his greatest satisfactions of the last few years, perhaps, is helping to establish a growing and strong statistics presence at the new Institute for Data, Systems, and Society, which applies analytical methods to societal challenges. Four new hires in machine learning are distributed between CSAIL and LIDS. For him, the line between the physical and computing worlds, “is and has to remain completely blurred,” he says.

Alan’s career has been a genre-bending one with few labels, and one in which theory and application inform each other. It’s a way of thinking he’s passed on to his students, who have come from many different disciplines to find a common ground in statistical modeling and methodologies. “Statistics, Electrical Engineering, Computer Science,” he says, “None of us can figure out what field we’re in. And that’s something to celebrate.”

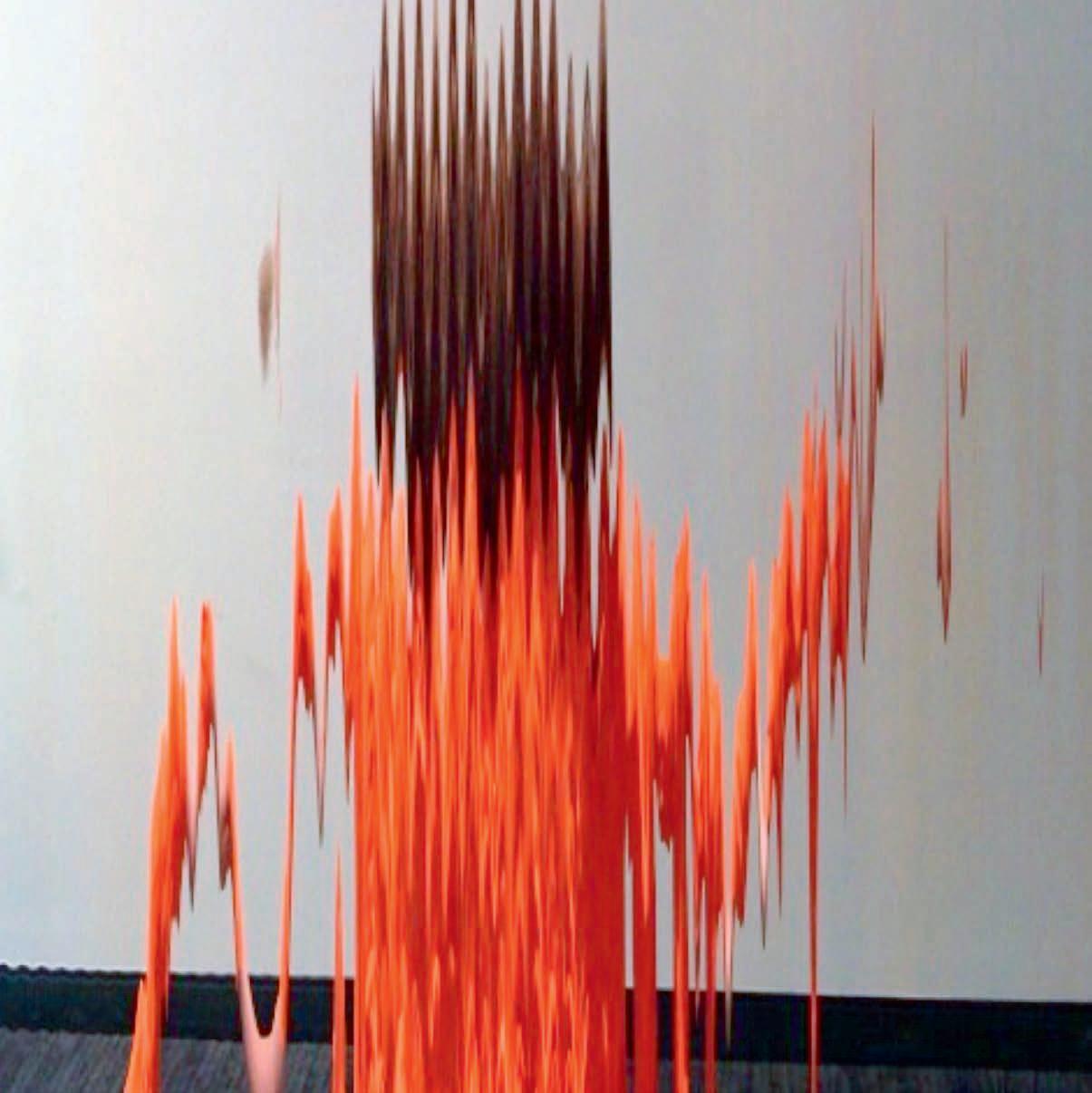

was stationary. “I started doing these experiments in 2013. I was on a high-speed train from Zurich to Paris and I put my phone on the window of the train,” she says. “There were amazing effects in the pictures because of the way the iPhone’s stitching algorithm works!” With encouragement from Martin Demaine (the Angelika and Barton Weber Artist-in-Residence in the Department of Electrical Engineering and Computer Science at MIT), Swati continued her panorama experiments, and eventually discovered she could use this feature to stitch together different views of a person’s face to fascinating effect, which led to a self-portrait studio titled Panoramia that is now being exhibited at the MIT Museum. (Swati is quick to acknowledge the MIT Museum Studio for their help in developing the project.)

Swati approaches her outside interests - photography, ambigrams (a way of drawing words so that they keep a meaning even when viewed upside down), and gender equity work - the same way she approaches her research: with drive, curiosity, and a focus on connection. “It’s been an adventure going from combinatorial optimization to game theory to online learning to convex optimization and discovering applications in different fields,” she says. It’s an adventure she looks forward to continuing for years to come.