LODZ UNIVERSITY OF TECHNOLOGY

Faculty of Electrical, Electronic, Computer and Control Engineering

Bachelor of Engineering Thesis

A Human-Computer Interface controlled by whistling

Mateusz Grzyb

Student’s number: 203115

Łódź, 2019

Supervisor: prof. dr hab. inż. Paweł Strumiłło

LODZ UNIVERSITY OF TECHNOLOGY

FACULTY OF ELECTRICAL, ELECTRONIC, COMPUTER AND CONTROL ENGINEERING

Mateusz Grzyb

ENGINEERING DIPLOMA THESIS

A human-computer interface controlled by whistles

Łódź, 2019

Supervisor: prof. dr hab. inż. Paweł Strumiłło

SUMMARY

To improve the quality of life of people with disabilities, the numerous barriers they face in everyday life should be minimized. One such barrier is the technological exclusion of people with motor disabilities. A person with limb paresis is unable to use a mouse and keyboard. For this reason, intensive work has been carried out in recent years on human-computer interfaces that operate, among others, on the basis of eye-blink detection, muscle contractions or analysis of the EEG signal.

Breath can be treated as an additional channel of communication with a computer. The aim of the work is to design a contactless human-computer system controlled by breath. Using the signal of a whistle, the user should select a character of interest and display it on the monitor screen. The program runs in real time and requires only a whistle and a microphone. To capture the user's signal as accurately as possible, signal processing tools such as convolution, the fast Fourier transform, median filtering and the Goertzel algorithm are applied.

Two solutions were designed. In the first, using Morse code, the desired character is selected by a series of whistles. In the second, a so-called on-screen keyboard is used. The alphanumeric characters displayed on the screen are ordered by their frequency of occurrence in Polish. Each character is highlighted in turn for 0.7 seconds. During this time the user can use the whistle to select the highlighted character. All solutions were implemented in the Python programming language. The testers chose the on-screen keyboard as the more user-friendly option. A high accuracy of the interface was achieved: 87% of characters were recognized correctly.

Keywords: human-computer interface, breath control, signal processing

LODZ UNIVERSITY OF TECHNOLOGY

FACULTY OF ELECTRICAL, ELECTRONIC, COMPUTER AND CONTROL ENGINEERING

Mateusz Grzyb

BSc Thesis

A Human-computer interface controlled by whistling

Lodz, 2019

Supervisor: prof. dr hab. inż. Paweł Strumiłło

ABSTRACT

To improve the quality of life of people with disabilities, the barriers they face in everyday life should be minimized. One such barrier is the technological exclusion of people with physical disabilities: a person with paralysis is not able to use a mouse and keyboard. To address this, systems termed human-computer interfaces have been developed in recent years. Such systems recognize input modalities such as eye blinks, EEG signal features or muscle contractions.

Respiration can serve as an additional communication channel. The aim of this work is to design a contactless human-computer system controlled by breathing. The user selects a character by blowing a whistle; the character is recognized and displayed on-line on the computer screen. To capture signal features as accurately as possible, the following signal processing tools have been used: convolution, the fast Fourier transform, the median filter and the Goertzel algorithm.

Two solutions were designed. In the first, Morse code is used: the user selects a character with a sequence of whistles. In the second, an on-screen keyboard is proposed. Alphanumeric characters are arranged in order of their frequency of occurrence in the Polish language. Each character is highlighted in turn for 0.7 seconds, during which the user can blow the whistle to select it. Both solutions have been implemented in the Python programming language. The testers chose the on-screen keyboard as the more user-friendly solution. The designed interface achieved 87% correct character recognition.

Keywords: Human-Computer interface, control by whistling, signal processing

1. Introduction

A human-computer interface (HCI) is a device or system through which a person communicates with a computer. Today, the most popular way of communicating with a computer is through a mouse and a keyboard, and in recent years touch screens have gained popularity. Unfortunately, what is comfortable for able-bodied people is unavailable to people with certain disabilities or diseases. People with amputations or limited mobility, people who are paralyzed, visually impaired or blind, and people with hearing impairments have serious difficulties using computers in the traditional way. This causes the digital exclusion of people with disabilities, worsens their life situation and increases their dependence on other people.

To limit such inconvenience, special human-computer interfaces have to be designed. Many systems use natural biological signals from the user, such as EEG, muscle contraction or respiration. Recently built interfaces include eye-blink detection [1], brain-computer interfaces [2], Jouse, a joystick controlled by the mouth [3], and tongue control [4]. A well-designed human-computer interface should be simple to use and should work fast, preferably on-line, i.e. it should react immediately to user commands [5].

Digital signal processing is an important field of computing that is closely linked to human-computer interaction. Every signal from the user must be processed in order to trigger a specific command on the computer. It is important to reduce noise, remove unwanted artefacts and compress the data to make the program as fast as possible. Another important element of a human-computer interface is the implementation of the GUI (Graphical User Interface). A well-designed system has to be clear, and the amount of data presented to the user should be kept to a minimum. The user should feel comfortable with the system, and learning how to use it should not take long [6].

Respiration can be interpreted as a signal modality that can be used efficiently in HCI systems. In the designed system the user blows a whistle to communicate with the computer. Similar efforts to build such systems have been reported before. A human-computer interface using a radio system was designed by Khan et al. (2018). Their system is based on the recognition of breathing patterns acquired through impulse radio ultra-wideband (IR-UWB) sensors. In particular, the commands are created through different signal patterns generated by the user's inhalation and exhalation. The abdominal part of the body is examined by the camera. The authors claim that the system is highly accurate and can be used to encode several actions [7]. Another work is described by Moraveji et al. (2011). The authors show that reducing the breathing rate helps to reduce stress and anxiety. They built a system that helps users decrease their breathing rate by approximately 20%. They reported that users were able to reduce their breathing rate without losing the ability to continue work such as research, writing or programming [8] [9].

1.1 Motivation

Nowadays, computers are indispensable in our lives. They help us at work, in social contacts and in many other areas. Unfortunately, people with a mobility disability cannot use computers as efficiently as able-bodied people do, which causes a kind of technological exclusion. To meet the expectations of people with disabilities, a model of a human-computer interface controlled by breath was developed in this work.

It is hoped that the developed system will help people with disabilities to communicate with the computer more easily. It can improve their quality of life, help them in self-realization and increase their independence.

1.2 Goals and scope of the work

The goal of this work was to create a human-computer interface controlled by whistles. The user should be able to enter a desired character by whistling. Two methods are compared. In the first, the user performs a series of short and long whistles that correspond to Morse code. In the second, an on-screen keyboard is used in which every button is highlighted in turn for a fixed amount of time. The program should work in real time and use time-efficient signal processing algorithms.

In the planned tests of the interface, users assess both methods and complete a satisfaction questionnaire. Their feedback is taken into account to improve the functionality of the interface.

2. A Human-Computer interface controlled by whistles

The task of the designed Human-Computer interface is to allow the user to display the selected alphanumeric character on the computer screen. Two methods are proposed. The first one uses the Morse Alphabet to decode the signal from the user. In the second one, the user can use a specially designed keyboard displayed on the computer screen. The program is implemented in the Python programming language and runs in real-time.

Experiment setup:

To use the program, the user needs only a whistle and a computer equipped with a microphone. Since the sound of a blow itself lies in the same spectral range as speech, a whistle with a higher frequency is needed. For this reason the experiment was conducted with a dog whistle that generates an acoustic wave with its energy concentrated at approximately f = 4 kHz. Experiments were carried out on an ASUS UX305C laptop with a built-in microphone.

Fig. 2.1 Audio waveform (top) and the corresponding spectrum of a whistle indicate a tone at a frequency of f = 4 kHz (bottom)

Experiments were carried out in a quiet room with some background noise (street noise) and other interfering acoustic signals from a TV and speech.

2.1 Morse decoding

By blowing the whistle, the user generates a signal consisting of short and long whistles. The signal is decoded according to the Morse alphabet, which was designed so that the characters with the highest frequency of occurrence in English are assigned the shortest sequences [10].

Fig. 2.2 Morse code (obtained from https://pl.wikipedia.org/wiki/Kod_Morse’a)

To record sound, the program uses the open-source PyAudio library. The recording parameters are presented below:

CHUNK = 1024

FORMAT = pyaudio.paInt16

CHANNELS = 1

RATE = 44100

RECORD_SECONDS = 4

These settings mean that the user has 4 seconds to enter each character. The audio is recorded in mono (CHANNELS = 1) at a rate of 44100 samples per second. The program uses chunks of data as a buffer: a continuous stream from the microphone cannot be processed all at once with limited processing power, so it is read in blocks of CHUNK samples. Chunking the data makes the stream easier to handle and prevents memory leaks [11]. Each signal sample is coded on 16 bits (paInt16), i.e. 2 bytes.
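As a rough check of what these settings imply, the sketch below computes how many CHUNK-sized reads cover one recording and how much memory the raw samples occupy. It only does arithmetic on the constants listed above; the names `BYTES_PER_SAMPLE`, `n_chunks`, `n_samples` and `n_bytes` are illustrative and are not taken from the original program.

```python
# Recording constants as listed in the text (paInt16 means 16-bit samples).
CHUNK = 1024            # samples read from the stream per call
CHANNELS = 1            # mono
RATE = 44100            # samples per second
RECORD_SECONDS = 4      # time available to enter one character
BYTES_PER_SAMPLE = 2    # 16 bits = 2 bytes (illustrative name, not from the source)

# Number of whole CHUNK-sized reads that fit in one recording.
n_chunks = int(RATE / CHUNK * RECORD_SECONDS)

# Samples and bytes actually captured by those reads.
n_samples = n_chunks * CHUNK
n_bytes = n_samples * CHANNELS * BYTES_PER_SAMPLE

print(n_chunks, n_samples, n_bytes)
```

Because 4 seconds is not a whole number of chunks, the last fraction of a chunk is dropped, so slightly less than 4 seconds of audio is analysed under this assumption.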

The frequency spectrum is calculated with the fast Fourier transform provided by the fft package of the NumPy library:

$$A(k) = \sum_{n=0}^{N-1} a_n W_N^{kn}, \qquad 0 \le k \le N-1, \quad N = 1024$$

where $A(k)$ is the sequence of frequency components (complex numbers), $a_n$ is the real sequence of the recorded signal and $W_N = e^{-j 2\pi / N}$.

To limit the range of frequencies to the band of interest, an ideal band-pass filter is designed with the following parameters: lower cutoff frequency fl = 3.8 kHz and upper cutoff frequency fh = 4.2 kHz. The purpose of this filter is to retain only a 400 Hz band centred on the whistle frequency (f0 = 4 kHz).

The filtered signal is obtained by the inverse fast Fourier transform.

Fig. 2.3 The plot of the filtered signal
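The filtering step described above can be sketched as follows. This is a minimal illustration, not the original program: it assumes NumPy's real-input transforms `rfft`/`irfft` (the text only says that the NumPy FFT is used), the function name `ideal_bandpass` is hypothetical, and the test signal is synthetic.

```python
import numpy as np

def ideal_bandpass(signal, rate, f_low=3800.0, f_high=4200.0):
    """Zero every FFT bin outside [f_low, f_high] and transform back."""
    spectrum = np.fft.rfft(signal)                      # one-sided spectrum of a real signal
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / rate)  # frequency of each bin in Hz
    mask = (freqs >= f_low) & (freqs <= f_high)         # True inside the pass band
    return np.fft.irfft(spectrum * mask, n=len(signal))

# Synthetic input: a 4 kHz "whistle" plus a 300 Hz tone standing in for speech.
rate = 44100
t = np.arange(rate) / rate                              # one second of samples
whistle = np.sin(2 * np.pi * 4000 * t)
low_tone = np.sin(2 * np.pi * 300 * t)
filtered = ideal_bandpass(whistle + low_tone, rate)
```

With this input the 300 Hz component is removed and `filtered` is numerically very close to `whistle`.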

Then the envelope of the signal is calculated (see section 2.1.2). Since the size of a convolved signal is M+Y-1, where M is the size of the original signal and Y is the size of the convolution kernel, the length of the signal increases by Y-1 at this stage.

Fig. 2.4 Envelope of the signal

Then a threshold (ths) is set manually in order to obtain a rectangular signal. After a few experiments the threshold was set to 0.15, which corresponds to 15% of the height of the normalized signal:

x = 0 if x < ths, otherwise x = 1.

Fig. 2.4 Rectangular signal
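The thresholding rule can be illustrated with a few lines of plain Python. The function name `binarize` and the sample values are hypothetical; only the threshold of 0.15 on the normalized signal comes from the text.

```python
def binarize(envelope, ths=0.15):
    """Normalize the envelope to a peak of 1 and compare it with the threshold."""
    peak = max(envelope)
    if peak == 0:
        return [0] * len(envelope)       # silent input: nothing to detect
    # Values below the threshold become 0, all others become 1.
    return [0 if value / peak < ths else 1 for value in envelope]

rect = binarize([0.0, 0.2, 4.0, 3.9, 0.5, 0.1, 0.0])
print(rect)
```

Only the two samples near the peak exceed 15% of the maximum, so they form a single rectangular pulse.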

At the final stage, the program calculates the length of each pulse by edge detection. The pulses are then decoded according to Morse code. A whistle that lasts longer than y = 0.261 s is considered a long one (hyphen); a whistle that lasts shorter than y is considered a short one (dot).

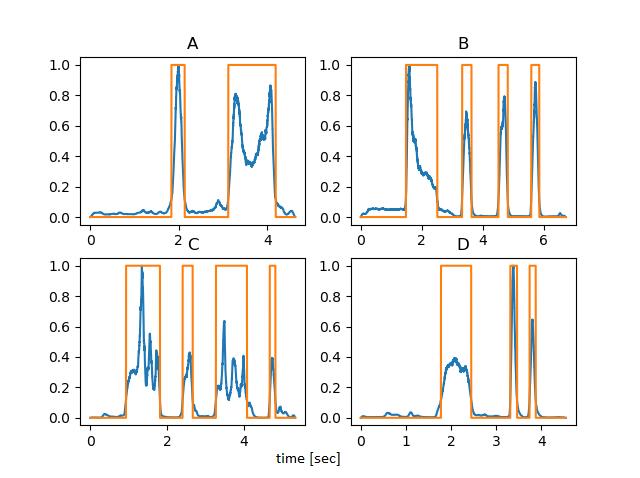

Rectangular signal examples corresponding to decoded letters: A, B, C and D are shown in Fig.2.5.

Fig. 2.5 Results of the detected letters: A, B, C and D
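The decoding of pulse durations into letters can be sketched as below. The duration boundary of 0.261 s is taken from the text; the function names, the example durations and the reduced lookup table (only the four letters of Fig. 2.5) are illustrative assumptions. A duration exactly equal to the boundary is treated as short here, which the text does not specify.

```python
LONG_WHISTLE_S = 0.261   # boundary between dot and hyphen, from the text

# Partial International Morse table: only the letters shown in Fig. 2.5.
MORSE = {".-": "A", "-...": "B", "-.-.": "C", "-..": "D"}

def classify(durations_s):
    """Map each whistle duration in seconds to '.' (short) or '-' (long)."""
    return "".join("-" if d > LONG_WHISTLE_S else "." for d in durations_s)

def decode(durations_s):
    """Return the decoded letter, or None if the sequence is not in the table."""
    return MORSE.get(classify(durations_s))

print(decode([0.12, 0.40]))              # short, long
print(decode([0.45, 0.10, 0.11, 0.09]))  # long, short, short, short
```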

2.1.1 Algorithm for the Morse decoding

Fig. 2.6 Block diagram of the Morse decoding

Note: In theory, convolution in the time domain corresponds to multiplication in the frequency domain. From this point of view, the signal could also be filtered by convolving the original signal with the signal of a pure whistle tone. However, this operation takes more time in Python.

2.1.2 Envelope

The envelope is obtained by convolving the original signal with the kernel h1 = [1, 1, … , 1] of length H, where H is the approximate distance between the two closest peaks in the original signal. H was set to 5000.

Result:

Fig. 2.7 Signal envelope obtained by convolving the signal with the h1 filter impulse response
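A possible form of this envelope computation is sketched below with `numpy.convolve`. Two points are assumptions rather than facts from the text: the signal is rectified (absolute value) before the convolution, and the result is normalized to a peak of 1. The function name `envelope` and the synthetic burst are illustrative.

```python
import numpy as np

def envelope(signal, kernel_len=5000):
    """Convolve the rectified signal with a kernel of ones (a moving sum)."""
    h1 = np.ones(kernel_len)
    # Rectification is an assumption; the text only mentions the convolution.
    env = np.convolve(np.abs(signal), h1)   # 'full' mode: length M + kernel_len - 1
    peak = env.max()
    return env / peak if peak > 0 else env  # normalize so that the peak equals 1

# Half a second of silence with a 4 kHz burst between 0.1 s and 0.3 s.
rate = 44100
t = np.arange(rate // 2) / rate
burst = np.where((t > 0.1) & (t < 0.3), np.sin(2 * np.pi * 4000 * t), 0.0)
env = envelope(burst)
```

The output is longer than the input by `kernel_len - 1` samples, in line with the M+Y-1 length of a full convolution.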

2.1.3 Edge detection

Edge detection was used to determine the length of each pulse in the rectangular signal. Edges are detected by convolving the rectangular signal with the kernel h2 = [-1, 1].

Example result:

Fig. 2.8 Edge detection, rectangular signal (top), detected edges (bottom)
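The edge detection step can be written out explicitly as in the sketch below. The function names and the toy signal are hypothetical; the kernel h2 = [-1, 1] is the one given in the text. With this kernel, a rising edge produces -1 and a falling edge produces +1.

```python
def convolve_edges(rect):
    """Full convolution of rect with h2 = [-1, 1], written out explicitly."""
    padded = [0] + list(rect) + [0]          # zero padding outside the signal
    # y[n] = -x[n] + x[n-1]: -1 at a rising edge, +1 at a falling edge
    return [padded[n] - padded[n + 1] for n in range(len(padded) - 1)]

def pulse_lengths(rect, rate):
    """Duration in seconds of every pulse in a 0/1 signal sampled at `rate`."""
    edges = convolve_edges(rect)
    starts = [n for n, e in enumerate(edges) if e == -1]
    stops = [n for n, e in enumerate(edges) if e == 1]
    return [(stop - start) / rate for start, stop in zip(starts, stops)]

rect = [0, 1, 1, 1, 0, 0, 1, 0]
print(convolve_edges(rect))
print(pulse_lengths(rect, rate=10))
```

The distance between a rising edge and the next falling edge, divided by the sampling rate, gives the pulse duration that is later compared with the dot/hyphen boundary.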

2.1.4 Median filtering

An envelope of the signal can also be obtained with a median filter. The main idea of the median filter is to run through the signal entry by entry, replacing each entry with the median of the neighbouring entries. In the program, the window size of the median filter is 801 samples, which corresponds to 0.1 seconds. The median filter is applied to the signal after decimation to 12000 samples. The resulting envelope can be processed further in the same way, but this solution is time-consuming: a benchmark test indicated 8.15 seconds for it.

Result:

Fig. 2.9 Envelope by the median filter
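The idea of the median filter can be shown with a straightforward, unoptimized sketch based on the standard library. The function name, the border handling (the window is simply truncated at the ends) and the toy input are assumptions; the default window of 801 samples is the value quoted in the text.

```python
from statistics import median

def median_filter(signal, window=801):
    """Replace each sample by the median of the window centred on it."""
    half = window // 2
    out = []
    for i in range(len(signal)):
        lo = max(0, i - half)                 # the window is truncated at the borders
        hi = min(len(signal), i + half + 1)
        out.append(median(signal[lo:hi]))
    return out

spiky = [0, 0, 9, 0, 0, 1, 1, 1, 1, 0]
smoothed = median_filter(spiky, window=3)
print(smoothed)
```

The isolated spike of 9 is removed while the longer pulse of ones is kept. Computing a fresh median for every sample is what makes this approach slow on long recordings.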

2.1.5 Applications:

Since the program detects the duration of each whistle, whistle sequences can be assigned as shortcuts to the most popular PC programs and commands. Such a program was tested.

Table 2.1 Shortcuts for computer programs and commands

| Shortcut | Program/command |
|---|---|
| dot | Internet browser |
| dot, dot | Mail reader |
| dot, dot, dot | Calendar |
| hyphen, dot, dot | Notepad |
| dot, hyphen | Word |
| hyphen, hyphen | Turn off |
| hyphen | Hibernate |
| hyphen, dot | Reset |

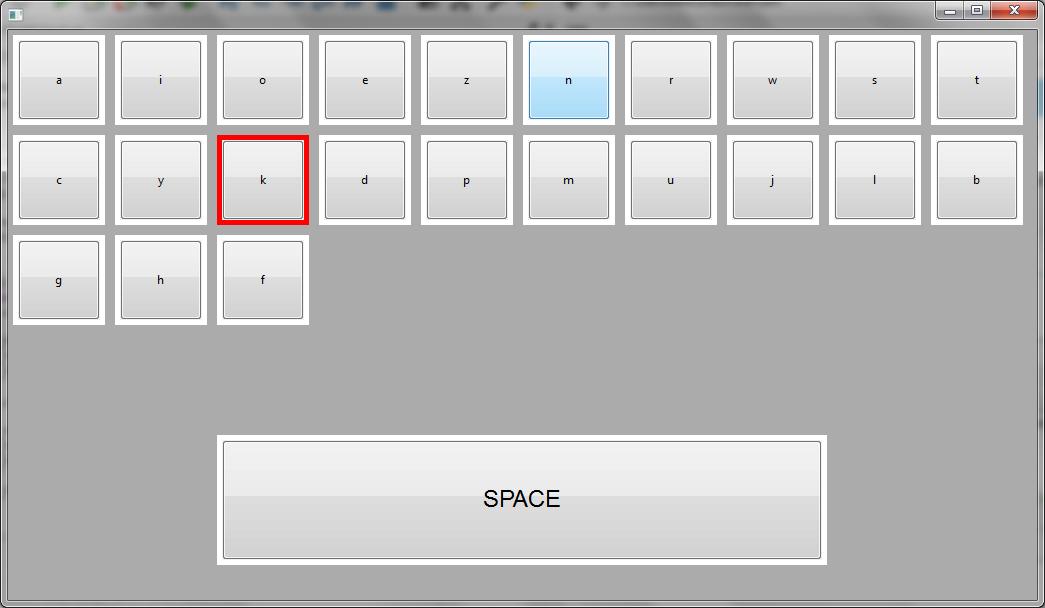

2.2 Keyboard interface

In this method an on-screen keyboard was designed. Every button on the keyboard is highlighted in turn for 0.7 seconds. While a button is highlighted, the user can whistle to enter the character shown on it. The interval of 0.7 seconds is sufficient for the user to react. The buttons are ordered according to the frequency of occurrence of letters in Polish [12]. To speed up typing of the next letter, the highlighting starts again from the beginning after each detected whistle. To shorten the cycle, all digits were removed from the keyboard.

Fig. 2.10 The keyboard interface
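The effect of the button order on typing time can be estimated with a simple model, sketched below. It assumes the worst case in which the whistle comes at the very end of each highlight, and that scanning restarts from the first button after every selection, as described above. The scan order in `layout` is purely hypothetical and is not the order used in the thesis.

```python
HIGHLIGHT_S = 0.7   # highlight time per button, from the text

def time_to_type(text, layout, highlight_s=HIGHLIGHT_S):
    """Upper-bound scanning time: each whistle comes at the end of a highlight."""
    total = 0.0
    for ch in text:
        position = layout.index(ch)            # 0-based position in the scan order
        total += (position + 1) * highlight_s  # scanning restarts after every selection
    return total

layout = "aioe zn"   # hypothetical scan order, for illustration only
print(round(time_to_type("nie", layout), 1))
```

Under this model, characters placed early in the scan order cost little time, which is the reason for ordering the buttons by letter frequency.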

The application runs in three parallel threads. The first is responsible for the GUI layout, which was designed in wxFormBuilder; the GUI part accounts for about 40% of the whole program code. The second thread handles the highlighting of the buttons. The third thread records the sound in real time and processes the signal.

A real-time spectrum analyzer was programmed for on-line analysis of the whistle signals. The audio is recorded with the following parameters:

CHUNK = 1024 * 2,

FORMAT = pyaudio.paInt16,

CHANNELS = 1,

RATE = 44100.

The band-pass filter passes only frequencies from 3800 to 4200 Hz. When the magnitude of the signal exceeds a specified threshold, the highlighted button is activated. The threshold is set to 65000. Since a single whistle could otherwise activate the same button many times, a button can be activated only once in a row. This means that the user cannot enter the same character twice in succession.
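The selection rule described above, including the "only once in a row" restriction, can be sketched as follows. The data structure (a list of highlighted character and band magnitude per audio chunk) and the function name are hypothetical; the threshold value is the one given in the text.

```python
THRESHOLD = 65000   # magnitude threshold, from the text

def select_characters(frames):
    """frames: iterable of (highlighted_char, band_magnitude) pairs, one per chunk."""
    typed = []
    last = None                      # character emitted most recently
    for char, magnitude in frames:
        if magnitude > THRESHOLD and char != last:
            typed.append(char)
            last = char              # the same button cannot fire twice in a row
    return "".join(typed)

frames = [("a", 100), ("a", 70000), ("a", 90000),
          ("i", 80), ("i", 66000), ("a", 70000)]
print(select_characters(frames))
```

The second loud chunk on "a" is ignored, which prevents one long whistle from typing the same letter repeatedly but also reproduces the limitation that a doubled letter cannot be entered directly.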

2.2.1 The algorithm for whistle detection

Fig. 2.11 Block diagram for the whistle detection

2.2.2 The Goertzel Algorithm

This method is widely used in dual-tone multi-frequency (DTMF) signaling. The Discrete Fourier Transform (DFT) requires N² complex multiplications and N(N-1) complex additions. This can be reduced to the order of N log2 N by the Fast Fourier Transform (FFT) algorithm. However, it is more convenient to use the Goertzel algorithm when only a few bands have to be detected. When it is known exactly which band has to be detected, only the selected harmonic components of the frequency spectrum need to be calculated. For each such component the Goertzel algorithm requires only N+2 multiplications and 2N+1 additions, a large improvement over the DFT. In practice, this means that it is beneficial to replace the FFT with the Goertzel algorithm when the number of harmonic components to be calculated is less than log2 N [13].

The optimized Goertzel algorithm:

import math

def goertzel_power(x, k_term):
    """Return the power of DFT bin k_term for the block of samples x."""
    n_terms = len(x)                           # block size
    omega = 2 * math.pi * k_term / n_terms     # target frequency, radians per sample
    coeff = 2 * math.cos(omega)
    s_prev = 0.0                               # value of s one step ago
    s_prev2 = 0.0                              # value of s two steps ago
    for sample in x:
        s = sample + coeff * s_prev - s_prev2
        s_prev2 = s_prev
        s_prev = s
    return s_prev2 * s_prev2 + s_prev * s_prev - coeff * s_prev * s_prev2

The algorithm was executed on a window of 1024 samples recorded at a sampling rate of 44100 samples per second in order to detect whether the whistle tone is present in the audio recording. The algorithm detected the whistle tone. Unfortunately, the iteration was time-consuming when implemented in the Python environment: the time benchmark test indicated 27.44 seconds for this operation.
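To relate the block size and sampling rate to the target frequency, the short calculation below finds which DFT bins correspond to the whistle. It is only a sketch based on the numbers in the text (44100 samples per second, 1024 samples, 4 kHz whistle, 3800 to 4200 Hz band); the variable names are illustrative.

```python
import math

RATE = 44100
N = 1024                                   # block size used in the text

bin_width = RATE / N                       # Hz between neighbouring DFT bins
k_whistle = round(4000 / bin_width)        # bin closest to the 4 kHz whistle
k_low = math.ceil(3800 / bin_width)        # first bin inside the pass band
k_high = math.floor(4200 / bin_width)      # last bin inside the pass band
n_band_bins = k_high - k_low + 1

print(k_whistle, k_low, k_high, n_band_bins, math.log2(N))
```

Under these assumptions the whole pass band covers nine bins, just below log2 N = 10, while detecting the whistle tone alone needs a single bin. By the criterion quoted above, the Goertzel algorithm is therefore a reasonable candidate for this task.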

3. Tests

Subjects were asked to imagine themselves as a disabled person who can communicate with the computer only by whistling. They were asked to test both methods, Morse decoding and the keyboard interface. The task was to write their own names. After the test, participants were asked to fill in a usability survey known as the System Usability Scale (SUS) questionnaire [14].

Subject 1 (Mateusz) was a 23-year-old male. Subject 2 (Monika) was a 50-year-old woman. Subject 3 (Bronek) was a 75-year-old male. Subject 4 (Ula) was a 75-year-old woman. Subject 5 (Marian) was a 78-year-old man. Subject 6 (Hubert) was a 22-year-old male. Subject 7 (Piotr) was a 50-year-old male.

Subject 3 refused to test Morse decoding. Subject 5 tried the Morse decoding, but he did not succeed in completing the task.

For subjects 4 and 5, a highlighting time of 0.7 seconds was too short, so it was increased to 1 second.

All subjects reported that they felt tired after using Morse decoding.

3.1 Results

Table 3.1 Measured time for typing the subjects' names by two methods: a) Morse decoding, b) keyboard interface

| Subject | Morse decoding [sec] (errors) | Keyboard interface [sec] (errors) |
|---|---|---|

The time required to write predefined text commands was also tested. The commands are in English, so for this test the keys on the keyboard were sorted by letter frequency in English [15].

Table 3.2 Measured time for example commands

| Commands | Time [sec] (errors) |
|---|---|
| water | 21 |
| I am hungry | 84.38 (1) |
| | 22.92 (3) |
| | (3) |

3.2 System user satisfaction questionnaire:

Fig. 3.1 SUS questionnaire (obtained from www.usability.gov)

Table 3.3 Marks for the questions from the SUS questionnaire

| No. | Statement | Mark |
|---|---|---|
| 1 | I think that I would like to use this system frequently | |
| 2 | I found the system unnecessarily complex | |
| 3 | I thought that the system was easy to use | |
| 4 | I think I would need the support of a technical person to be able to use this system | 1.00 |
| 5 | I found the various functions in this system were well integrated | |
| 6 | I thought there was too much inconsistency in this system | |
| 7 | I would imagine that most people would learn to use this system very quickly | |
| 8 | I found the system very cumbersome to use | |
| 9 | I felt very confident using the system | |
| 10 | I needed to learn a lot of things before I could get going with this system | 1.86 |
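The marks above are reported per question. If a single 0 to 100 score per participant were wanted, the standard SUS scoring rule could be applied as in the sketch below. This calculation is not part of the reported results; the function name and the example answers are illustrative.

```python
def sus_score(responses):
    """responses: ten answers on the 1-5 scale, in questionnaire order."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten responses")
    total = 0
    for item, answer in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5            # rescale the 0-40 sum to 0-100

print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))   # most favourable answers
print(sus_score([3] * 10))                         # all-neutral answers
```

Low marks on the even-numbered questions, such as questions 4 and 10, therefore raise the overall score.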

Note: The system was also tested outdoors, where it was highly inaccurate. The whistles were very often not detected. To detect the signal, the user had to blow more strongly and directly into the microphone.

Overall, users were asked to type 75 characters (including spaces), and errors occurred 10 times during the tests. This means that 65 of the 75 characters, i.e. 87%, were recognized correctly.

It can be observed that for older users (>70 years) the highlighting time is too short. This implies that, to obtain satisfactory efficiency, the highlighting time has to be adjusted individually for some users.

4. Conclusions and summary

A touchless human-computer interface controlled by whistling was designed. The system works in real time and requires only a whistle and a microphone. A high detection accuracy was achieved: 87% of all characters were typed correctly. Since only a few errors occurred during writing, longer sentences can also be written by the users. However, there are still ways to improve the system, such as algorithms requiring less computational power or dictionary methods to improve typing efficiency.

The SUS questionnaire has shown that the system is well rated by the users. In their opinion, the system is easy to use and not unnecessarily complex. There is no need for help from other people, and users can adopt the system quickly without special training.

Typing by the Morse method appears to be too complicated for the users. The user has to be provided with the Morse alphabet table and needs time to learn how to use the system efficiently. Moreover, Morse decoding requires a lot of blowing, so users tire quickly when using this method.

Rather than using Morse decoding for writing, users prefer to use it for opening applications such as an Internet browser, a mail reader or an office suite.

The most common mistake is a blow that lasts too long and causes the next character to be entered as well. It also happens that the user misses the desired character and then has to wait through the entire cycle in which all characters are highlighted. Waiting for the space character is also time-consuming, so a special signal should be assigned to the space character.

The major advantage of the system is that no specialized equipment is needed. It is a very affordable solution, since only a whistle and a microphone are required.

To avoid the audible noise of the whistle, one can use a whistle with a higher frequency, for example a special dog whistle that is inaudible to humans.

In future work, dictionary methods such as those implemented in smartphones can be added. After a few characters have been entered, words can be suggested to the user based on his or her style of writing. Once this is achieved, the keyboard can be extended with more functions, e.g. a row of digits, special characters and function buttons such as escape, backspace and enter.

Also, the Goertzel algorithm can be implemented to reduce the required processing power. If the user uses a whistle with a frequency of 4 kHz, the sampling rate can be decreased to 10,000 samples per second, which still satisfies the sampling theorem. Moreover, further signal processing is needed to distinguish other sounds occurring in a frequency band similar to that of the whistle.
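The sampling-rate argument can be checked with a few lines of arithmetic. The sketch assumes that the highest frequency of interest is the upper edge of the 3800 to 4200 Hz pass band; the function and variable names are illustrative.

```python
def min_sampling_rate(f_max_hz):
    """Sampling theorem: the rate must exceed twice the highest frequency."""
    return 2 * f_max_hz

f_high = 4200                         # upper edge of the whistle band, Hz
current_rate = 44100                  # samples per second used in this work
proposed_rate = 10_000                # samples per second proposed in the text

nyquist_ok = proposed_rate > min_sampling_rate(f_high)
samples_saved = (current_rate - proposed_rate) * 4   # per 4-second recording

print(min_sampling_rate(f_high), nyquist_ok, samples_saved)
```

Under this assumption the proposed rate leaves a margin above the 8400 samples per second required for the band, while each 4-second recording shrinks by more than 130,000 samples.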

5. References:

[1] A. Królak, P. Strumiłło, Eye-blink detection system for human–computer interaction, Universal Access in the Information Society, November 2012, Volume 11, Issue 4, pp. 409-419

[2] D.J. McFarland, T.M. Vaughan, Brain-Computer Interfaces: Lab Experiments to Real-World Applications, in Progress in Brain Research, 2016

[3] Compusult Services, www.compusult.com (June 26, 2019)

[4] Niu, S. et al. "Tongue-able interfaces: Prototyping and evaluating camera based tongue gesture input system," Smart Health (2018)

[5] P. Strumiłło, A. Materka, A. Królak, Human-computer interaction systems for people with disabilities (in Polish), sep.p.lodz.pl/biuletyn/sep_1_2011.pdf

[6] M. Sikorski, Human-Computer interaction (in Polish), Wydawnictwo Polsko-Japońskiej Wyższej Szkoły Technik Komputerowych

[7] Khan F, Leem SK, Cho SH, Human–computer interaction using radio sensor for people with severe disability, Sensors & Actuators: A. Physical (2018), https://doi.org/10.1016/j.sna.2018.08.051

[8] B. Cowley, M. Filetti, K. Lukander, J. Torniainen, A. Henelius, L. Ahonen, O. Barral, I. Kosunen, T. Valtonen, M. Huotilainen, N. Ravaja, G. Jacucci. The Psychophysiology Primer: a guide to methods and a broad review with a focus on human-computer interaction. Foundations and Trends in Human-Computer Interaction, vol. 9, no. 3-4, pp. 150–307, 2016

[9] N. Moraveji, B. Olson, T. Nguyen, J. Hee. Peripheral paced respiration: influencing user physiology during information work, Oct 2011

[10] Wikipedia, https://en.wikipedia.org/wiki/Morse_code (July 2019)

[11] Stackexchange – digital signal processing, https://dsp.stackexchange.com/questions/13728/what-are-chunks-when-recording-a-voice-signal (June 2019)

[12] PWN, https://sjp.pwn.pl/poradnia/haslo/frekwencja-liter-w-polskich-tekstach;7072.html (July 2019)

[13] G. Dobiński, Algorithms for determining the parameters characterizing the impact of the probe with the surface of the material examined with the atomic force microscope (in Polish), Lodz 2015

[14] Usability.gov, www.usability.gov (June, 2019)

[15] The Oxford Math Center, http://www.oxfordmathcenter.com/drupal7/node/353, June 2019

Fig 2.2 - https://pl.wikipedia.org/wiki/Kod_Morse%E2%80%99a