How to Scrape Amazon Best Seller Listings Using Python?

If you're planning to sell a newly developed product on Amazon, you need to understand how products succeed on the platform to get the maximum revenue. Studying the best sellers gives you an idea of the potential challenges, how to mitigate them, and what changes your product and marketing strategy may need.


In this tutorial, we'll walk through extracting Amazon best seller list data with Python to support your market research.

What is data scraping from websites?

Web scraping is an automated process for extracting the desired data from websites. The data comes out in large quantities and in a usable format, for purposes such as market research.


What are the reasons for scraping data from a website?

Web data scraping is often used for marketing. Businesses extract data such as product and service prices, articles, and top-performing product details from their competitors' websites to plan a better marketing strategy. However, data extraction isn't limited to marketing. It is also valuable for machine learning, SEO, and many other fields.


How do we scrape Amazon best seller listings?

Amazon is an American company that operates in e-commerce and cloud computing worldwide. A huge number of businesses and brands sell products to customers on its marketplace, from books and sports gear to pet food.


On Amazon's Best Sellers page, best sellers are grouped into around 40 categories, listed in alphabetical order. In this guide, we scrape the best seller lists of these categories using Python web scraping. We need two Python libraries to crawl the pages and then parse and mine the data we want: Requests and Beautiful Soup.

We will follow these steps to build the Amazon scraper in Python:

1. Install the required Python libraries.
2. Download the HTML source of the Best Sellers page and parse it with the requests and BeautifulSoup libraries to get the URL of each product category.
3. Repeat the previous step for each category URL to obtain its product listings.
4. Scrape the data from each web page.
5. Compile the scraped information from every page into a Python dictionary.
6. Save the compiled data in a usable format with the Python library pandas.

Once the data extraction is done, we'll save all the data in CSV format.
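Here is a minimal sketch of this last step. It assumes each scraped product ends up as a dictionary of fields. The helper name save_products, the column names, and the rows are placeholders, not real scraped data. pandas turns the list into a table and writes it to CSV:

```python
import pandas as pd

def save_products(products, path):
    """Write a list of product dicts to a CSV file and return the DataFrame."""
    # Each dict becomes one row; the dict keys become the column names.
    df = pd.DataFrame(products)
    # index=False keeps pandas' row numbers out of the file.
    df.to_csv(path, index=False)
    return df

# Hypothetical placeholder rows, for illustration only.
rows = [
    {"category": "Books", "description": "Example book", "min_price": 9.99, "max_price": 9.99},
    {"category": "Toys & Games", "description": "Example toy", "min_price": 12.0, "max_price": 15.5},
]
df = save_products(rows, "bestsellers.csv")
```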


You can check the full reference code in the link below.

How do we run the code?

To run the code, click the Run button at the top of the page and select the Run on Binder option.

Note: Each category lists its top 100 products across 2 pages, and there are 40 categories in total. However, a few pages could not be accessed because Amazon served a captcha instead of the product listing.

Install the necessary Python libraries

Install the requests and beautifulsoup4 libraries with pip (`pip install requests beautifulsoup4`). Both are required to download the web page and extract data from it.

Save HTML page source code

Download and save the HTML source code of the Best Sellers page with requests. Then parse it with BeautifulSoup to extract the URLs of the product categories.


To download the page, we use the get function from the requests library. We also pass a User-Agent header, which tells the server which application, operating system, and version is making the request. Making the request look like it comes from a regular browser reduces the chance that Amazon blocks it. requests.get returns a response object containing the data from the target page. Its status_code attribute tells us whether the request succeeded: an HTTP status code between 200 and 299 means success.

The downloaded page content is available as response.text, and len(response.text) gives its length. In this example, we print only the first 500 characters.
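Here is a minimal sketch of this download step. The helper names download_page and is_success, the 30-second timeout, and the starting URL in the usage comment are assumptions for illustration. The User-Agent string is the same one this article's fetch function uses.

```python
import requests

# Browser-like User-Agent, copied from the fetch function used later in this article.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:66.0) Gecko/20100101 Firefox/66.0",
}

def is_success(status_code):
    """HTTP status codes from 200 to 299 indicate a successful request."""
    return 200 <= status_code <= 299

def download_page(url, headers=HEADERS, timeout=30):
    """Download a page and return its HTML text, raising if the request failed."""
    response = requests.get(url, headers=headers, timeout=timeout)
    if not is_success(response.status_code):
        raise RuntimeError(f"Request to {url} failed with status {response.status_code}")
    return response.text

# Usage (makes a real network request; the URL is an assumed starting page):
# html = download_page("https://www.amazon.com/Best-Sellers/zgbs")
# print(len(html))   # size of the downloaded page
# print(html[:500])  # first 500 characters
```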

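Now, let's save the downloaded page to a file with an .html extension.

```python
# Save the downloaded HTML so it can be inspected offline
with open("bestseller.html", "w", encoding="utf-8") as f:
    f.write(response.text)
```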


After saving, open the file through the File > Open menu in Jupyter and select bestseller.html from the list of files.

To check whether the page was saved correctly, look at the file size. A file of around 250 kB means the download succeeded. A file of about 6.6 kB means it failed, typically because Amazon's security checks returned a captcha page instead of the listing.
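The size check can be automated. This is a rough heuristic, not a reliable detector. The helper name looks_blocked, the 50 kB threshold, and the assumption that a blocked page mentions "captcha" are all illustrative. The threshold was chosen to sit between the roughly 6.6 kB failure size and the 250 kB success size mentioned above.

```python
import os

def looks_blocked(path, min_size_kb=50):
    """Heuristically decide whether a saved page is a captcha page, not the listing.

    The 50 kB default threshold is an assumption, not an Amazon-documented value.
    """
    size_kb = os.path.getsize(path) / 1024
    with open(path, encoding="utf-8") as f:
        text = f.read()
    # Assumed: Amazon's bot-check page mentions a captcha somewhere in its HTML.
    return size_kb < min_size_kb or "captcha" in text.lower()

# Usage: looks_blocked("bestseller.html") returns True if the download probably failed.
```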


This is what we can observe when we open the file.


Although this looks like the original web page, it is only a copy, so none of its links work. To view and edit the source code of the saved file, click File > Open in Jupyter, select the .html file from the list, and click Edit.


So far, we have only looked at the first 500 characters of the page. Next, we parse the web page with BeautifulSoup and check the type of the resulting object.
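Here is a minimal sketch of the parsing step. It uses a tiny inline HTML string as a stand-in for the saved page. To parse the real file, read bestseller.html and pass its text instead.

```python
from bs4 import BeautifulSoup

# Stand-in for the saved page, e.g. open("bestseller.html", encoding="utf-8").read()
html = "<html><head><title>Amazon Best Sellers</title></head><body><h1>Best Sellers</h1></body></html>"

# "html.parser" is Python's built-in parser, so no extra install is needed.
doc = BeautifulSoup(html, "html.parser")
print(type(doc))       # <class 'bs4.BeautifulSoup'>
print(doc.title.text)  # Amazon Best Sellers
```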


Next, let's find the list of product categories with their titles and URLs, and store them in a list.

```python
# Find the description (title) and URL of each item category in "Any Department"
topics_link = []
for tag in hearder_link_tags[1:]:  # skip the first tag (assumed to be the "Any Department" header)
    topics_link.append({
        "title": tag.text.strip(),
        "url": tag.find("a")["href"],
    })
```
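The loop above assumes that hearder_link_tags already holds the category entries from the parsed page. The code that selects them is not shown here. As a self-contained sketch, this is how such a selection could look against a small hypothetical HTML fragment. The zg_browseRoot id and the list structure are assumptions for illustration, and Amazon's real markup changes over time.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment shaped like a category sidebar; not Amazon's actual markup.
html = """
<ul id="zg_browseRoot">
  <li><span>Any Department</span></li>
  <li><a href="https://www.amazon.com/Best-Sellers-Appliances/zgbs/appliances">Appliances</a></li>
  <li><a href="https://www.amazon.com/best-sellers-books-Amazon/zgbs/books">Books</a></li>
</ul>
"""

doc = BeautifulSoup(html, "html.parser")
# Every <li> in the sidebar; the first one is the "Any Department" label.
hearder_link_tags = doc.find("ul", id="zg_browseRoot").find_all("li")

topics_link = []
for tag in hearder_link_tags[1:]:
    topics_link.append({"title": tag.text.strip(), "url": tag.find("a")["href"]})
```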

```python
# Store the list of dicts as a dictionary of lists: {"title": [...], "url": [...]}
table_topics = {k: [d.get(k) for d in topics_link] for k in set().union(*topics_link)}
table_topics
```
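The one-line comprehension turns a list of dictionaries (one per category) into a dictionary of lists (one per field). set().union(*topics_link) collects every key, but a set has no guaranteed order, which is why 'url' can print before 'title'. Here is a slightly more explicit equivalent with a small sample. The helper name to_columns is hypothetical, and the sample URLs are shortened for readability.

```python
def to_columns(records):
    """Turn [{'title': ..., 'url': ...}, ...] into {'title': [...], 'url': [...]}."""
    # dict.fromkeys keeps first-seen key order, unlike set().union(...)
    keys = dict.fromkeys(k for record in records for k in record)
    # record.get(k) fills in None when a record lacks a key, as in the original
    return {k: [record.get(k) for record in records] for k in keys}

sample = [
    {"title": "Appliances", "url": "/zgbs/appliances"},
    {"title": "Books", "url": "/zgbs/books"},
]
print(to_columns(sample))
# {'title': ['Appliances', 'Books'], 'url': ['/zgbs/appliances', '/zgbs/books']}
```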

```
{'url': ['https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Amazon-Launchpad/zgbs/boost/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Appliances/zgbs/appliances/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Appstore-Android/zgbs/mobile-apps/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Arts-Crafts-Sewing/zgbs/arts-crafts/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Audible-Audiobooks/zgbs/audible/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Automotive/zgbs/automotive/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Baby/zgbs/baby-products/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Beauty/zgbs/beauty/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/best-sellers-books-Amazon/zgbs/books/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/best-sellers-music-albums/zgbs/music/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/best-sellers-camera-photo/zgbs/photo/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers/zgbs/wireless/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers/zgbs/fashion/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Collectible-Coins/zgbs/coins/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Computers-Accessories/zgbs/pc/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers/zgbs/digital-educational-resources/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-MP3-Downloads/zgbs/dmusic/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Electronics/zgbs/electronics/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Entertainment-Collectibles/zgbs/entertainment-collectibles/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Gift-Cards/zgbs/gift-cards/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Grocery-Gourmet-Food/zgbs/grocery/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Handmade/zgbs/handmade/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Health-Personal-Care/zgbs/hpc/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Home-Kitchen/zgbs/home-garden/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Industrial-Scientific/zgbs/industrial/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Kindle-Store/zgbs/digital-text/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Kitchen-Dining/zgbs/kitchen/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Magazines/zgbs/magazines/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/best-sellers-movies-TV-DVD-Blu-ray/zgbs/movies-tv/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Musical-Instruments/zgbs/musical-instruments/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Office-Products/zgbs/office-products/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Garden-Outdoor/zgbs/lawn-garden/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Pet-Supplies/zgbs/pet-supplies/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/best-sellers-software/zgbs/software/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Sports-Outdoors/zgbs/sporting-goods/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Sports-Collectibles/zgbs/sports-collectibles/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Home-Improvement/zgbs/hi/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/Best-Sellers-Toys-Games/zgbs/toys-and-games/ref=zg_bs_nav_0/138-0735230-1420505',
  'https://www.amazon.com/best-sellers-video-games/zgbs/videogames/ref=zg_bs_nav_0/138-0735230-1420505'],
 'title': ['Amazon Devices & Accessories',
  'Amazon Launchpad',
  'Appliances',
  'Apps & Games',
  'Arts, Crafts & Sewing',
  'Audible Books & Originals',
  'Automotive',
  'Baby',
  'Beauty & Personal Care',
  'Books',
  'CDs & Vinyl',
  'Camera & Photo Products',
  'Cell Phones & Accessories',
  'Clothing, Shoes & Jewelry',
  'Collectible Currencies',
  'Computers & Accessories',
  'Digital Educational Resources',
  'Digital Music',
  'Electronics',
  'Entertainment Collectibles',
  'Gift Cards',
  'Grocery & Gourmet Food',
  'Handmade Products',
  'Health & Household',
  'Home & Kitchen',
  'Industrial & Scientific',
  'Kindle Store',
  'Kitchen & Dining',
  'Magazine Subscriptions',
  'Movies & TV',
  'Musical Instruments',
  'Office Products',
  'Patio, Lawn & Garden',
  'Pet Supplies',
  'Software',
  'Sports & Outdoors',
  'Sports Collectibles',
  'Tools & Home Improvement',
  'Toys & Games',
  'Video Games']}
```

This gives us the titles and URLs of all 40 best seller categories.

Repeat the above step for every category using its URL

To reduce the chance of a captcha denying access to some pages, we import the time library and pause for a few seconds between requests.

```python
import time

import numpy as np
```
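The functions in this section pause for a fixed 10 or 20 seconds between requests. A common variation adds random jitter so requests do not arrive at perfectly regular intervals; it is sketched here as an assumption, not as the article's method. The helper name polite_sleep and its default range are hypothetical. The standard random module is enough for this, though numpy.random.uniform would also work.

```python
import random
import time

def polite_sleep(min_seconds=5.0, max_seconds=15.0):
    """Sleep for a random duration between min_seconds and max_seconds; return the delay."""
    # uniform() draws a float from the given range, so consecutive waits differ
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay

# Usage: call polite_sleep() in place of time.sleep(10) before each request.
```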

Next, we define a helper function, fetch, that downloads and parses a single page. A second function, parse_page, then uses it to parse the top-ranking best-selling products page by page for every category.

```python
import requests
from bs4 import BeautifulSoup

def fetch(url):
    """Download a page with requests.get and parse it with BeautifulSoup.

    Argument:
    - url (str): URL of the web page to download and parse

    Return:
    - doc (list of bs4 Tag): the product-card <div> tags holding the information we need;
      an empty list usually means Amazon returned a captcha page instead
    """
    HEADERS = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:66.0) Gecko/20100101 Firefox/66.0",
        "Accept-Encoding": "gzip, deflate",
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "DNT": "1",
        "Connection": "close",
        "Upgrade-Insecure-Requests": "1",
    }
    response = requests.get(url, headers=HEADERS)
    if response.status_code != 200:
        print("Status code:", response.status_code)
        raise Exception("Failed to load web page " + url)
    page_content = BeautifulSoup(response.text, "html.parser")
    # Each best seller product card is a div with exactly these classes
    doc = page_content.find_all("div", attrs={"class": "a-section a-spacing-none aok-relative"})
    return doc
```
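One detail of fetch is worth knowing. Passing the full class string "a-section a-spacing-none aok-relative" to find_all only matches tags whose class attribute is exactly that string, in that order. If the classes appear in a different order, the match silently returns nothing, which looks just like a captcha failure. BeautifulSoup's select method takes a CSS selector and matches tags that carry all three classes in any order. The markup below is hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the same three classes, but in a different order.
html = """
<div class="a-section a-spacing-none aok-relative">card 1</div>
<div class="aok-relative a-section a-spacing-none">card 2</div>
"""
soup = BeautifulSoup(html, "html.parser")

# Matches only when the class attribute is exactly this string.
exact = soup.find_all("div", attrs={"class": "a-section a-spacing-none aok-relative"})
# Matches any div carrying all three classes, in any order.
css = soup.select("div.a-section.a-spacing-none.aok-relative")

print(len(exact), len(css))  # 1 2
```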

```python
def parse_page(table_topics, pageNo):
    """Download and parse pageNo pages for every category in table_topics.

    Argument:
    - table_topics (dict): dictionary containing the category titles and URLs
    - pageNo (int): number of pages to parse per category

    Return:
    - article_tags (list): contents of successfully parsed pages (BeautifulSoup results)
    - t_description (list): category title for each successfully parsed page
    - t_url (list): category URL for each successfully parsed page
    - fail_tags (list): URLs of pages that failed the first attempt
    - failed_topic (list): category titles of pages that failed the first attempt
    """
    article_tags, t_description, t_url, fail_tags, failed_topic = [], [], [], [], []
    for i in range(len(table_topics["url"])):
        topic_url = table_topics["url"][i]
        topics_description = table_topics["title"][i]
        for j in range(1, pageNo + 1):
            # Build the page URL: keep everything before "ref", then add the page number
            ref = topic_url.find("ref")
            url = topic_url[:ref] + "ref=zg_bs_pg_" + str(j) + "?_encoding=UTF8&pg=" + str(j)
            time.sleep(10)  # pause between requests to reduce captcha blocks
            try:
                # fetch uses requests and BeautifulSoup to get the page content
                doc = fetch(url)
            except Exception as e:
                print(e)
                doc = []  # treat a failed request like an empty page
            if len(doc) == 0:
                print("failed to parse page {}".format(url))
                fail_tags.append(url)
                failed_topic.append(topics_description)
            else:
                print("Successfully parsed:", url)
                article_tags.append(doc)
                t_description.append(topics_description)
                t_url.append(topic_url)
    return article_tags, t_description, t_url, fail_tags, failed_topic
```

If parsing fails on the first attempt, we have a provision for that too. The function reparse_failed_page makes a second attempt at every page that failed, waiting longer between requests.
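Retrying in an open-ended loop can hang if Amazon keeps serving a captcha, so a safer pattern caps the number of attempts. Here is a minimal sketch. The names fetch_with_retries, fetch_fn, max_attempts, and wait_seconds are hypothetical. fetch_fn would be a function like fetch that returns an empty list when no product cards were found.

```python
import time

def fetch_with_retries(url, fetch_fn, max_attempts=3, wait_seconds=20):
    """Call fetch_fn(url) until it returns a non-empty result or attempts run out.

    Returns the result, or an empty list if every attempt came back empty or raised.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            doc = fetch_fn(url)
            if doc:  # an empty list means no product cards were found
                return doc
        except Exception as exc:  # e.g. a non-200 status raised by fetch_fn
            print(f"attempt {attempt} for {url} raised: {exc}")
        if attempt < max_attempts:
            time.sleep(wait_seconds)  # back off before trying again
    return []
```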


```python
def reparse_failed_page(fail_page_url, failed_topic):
    """Make a second attempt at pages that failed the first pass (usually captcha blocks).

    Argument:
    - fail_page_url (list): URLs of pages that failed the first attempt
    - failed_topic (list): category titles of those pages

    Return:
    - article_tag2 (list): contents of successfully parsed pages (BeautifulSoup results)
    - topic_d (list): category titles of the pages parsed on this attempt
    - topic_url (list): URLs of the pages parsed on this attempt
    - fail_p (list): URLs of pages that failed again
    - fail_t (list): category titles of pages that failed again
    """
    print("Number of pages that failed the first pass:", len(fail_page_url))
    article_tag2, topic_url, topic_d, fail_p, fail_t = [], [], [], [], []
    for i in range(len(fail_page_url)):
        time.sleep(20)  # wait longer before retrying
        try:
            doc = fetch(fail_page_url[i])
        except Exception as e:
            print(e)
            doc = []
        if len(doc) == 0:
            print("page {} failed again".format(fail_page_url[i]))
            fail_p.append(fail_page_url[i])
            fail_t.append(failed_topic[i])
        else:
            article_tag2.append(doc)
            topic_url.append(fail_page_url[i])
            topic_d.append(failed_topic[i])
    return article_tag2, topic_d, topic_url, fail_p, fail_t
```

To get as many pages as possible, we define the function parse, which combines both levels of parsing. It runs parse_page and, if any pages failed, retries them with reparse_failed_page.

```python
def parse(table_topics, pageNo):
    """Parse as many pages as possible by combining a first and a second attempt.

    Argument:
    - table_topics (dict): dictionary containing the category titles and URLs
    - pageNo (int): number of pages to parse per category

    Return:
    - all_arcticle_tag (list): contents of all successfully parsed pages (BeautifulSoup results)
    - all_topics_description (list): category title for each parsed page
    - all_topics_url (list): URL for each parsed page
    """
    article_tags, t_description, t_url, fail_tags, failed_topic = parse_page(table_topics, pageNo)
    if len(fail_tags) != 0:
        article_tags2, t_description2, t_url2, fail_tags2, failed_topic2 = reparse_failed_page(fail_tags, failed_topic)
        all_arcticle_tag = [*article_tags, *article_tags2]
        all_topics_description = [*t_description, *t_description2]
        all_topics_url = [*t_url, *t_url2]
    else:
        print("successfully parsed all pages")
        all_arcticle_tag = article_tags
        all_topics_description = t_description
        all_topics_url = t_url
    return all_arcticle_tag, all_topics_description, all_topics_url

all_arcticle_tag, all_topics_description, all_topics_url = parse(table_topics, 2)
```

```
Successfully parsed: https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers/zgbs/amazon-devices/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Amazon-Launchpad/zgbs/boost/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Amazon-Launchpad/zgbs/boost/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Appliances/zgbs/appliances/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Appliances/zgbs/appliances/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Appstore-Android/zgbs/mobile-apps/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Appstore-Android/zgbs/mobile-apps/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Arts-Crafts-Sewing/zgbs/arts-crafts/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Arts-Crafts-Sewing/zgbs/arts-crafts/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Audible-Audiobooks/zgbs/audible/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Audible-Audiobooks/zgbs/audible/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Automotive/zgbs/automotive/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Automotive/zgbs/automotive/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Baby/zgbs/baby-products/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Baby/zgbs/baby-products/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Beauty/zgbs/beauty/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Beauty/zgbs/beauty/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/best-sellers-books-Amazon/zgbs/books/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/best-sellers-books-Amazon/zgbs/books/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/best-sellers-music-albums/zgbs/music/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/best-sellers-music-albums/zgbs/music/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/best-sellers-camera-photo/zgbs/photo/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/best-sellers-camera-photo/zgbs/photo/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers/zgbs/wireless/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
failed to parse page https://www.amazon.com/Best-Sellers/zgbs/wireless/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers/zgbs/fashion/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers/zgbs/fashion/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Collectible-Coins/zgbs/coins/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Handmade/zgbs/handmade/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Handmade/zgbs/handmade/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Health-Personal-Care/zgbs/hpc/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Health-Personal-Care/zgbs/hpc/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Home-Kitchen/zgbs/home-garden/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Home-Kitchen/zgbs/home-garden/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Industrial-Scientific/zgbs/industrial/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Industrial-Scientific/zgbs/industrial/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Kindle-Store/zgbs/digital-text/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Kindle-Store/zgbs/digital-text/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Kitchen-Dining/zgbs/kitchen/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Kitchen-Dining/zgbs/kitchen/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Magazines/zgbs/magazines/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Magazines/zgbs/magazines/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/best-sellers-movies-TV-DVD-Blu-ray/zgbs/movies-tv/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/best-sellers-movies-TV-DVD-Blu-ray/zgbs/movies-tv/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Musical-Instruments/zgbs/musical-instruments/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Musical-Instruments/zgbs/musical-instruments/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Office-Products/zgbs/office-products/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Office-Products/zgbs/office-products/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Garden-Outdoor/zgbs/lawn-garden/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Garden-Outdoor/zgbs/lawn-garden/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Pet-Supplies/zgbs/pet-supplies/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Pet-Supplies/zgbs/pet-supplies/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/best-sellers-software/zgbs/software/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/best-sellers-software/zgbs/software/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Sports-Outdoors/zgbs/sporting-goods/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Sports-Outdoors/zgbs/sporting-goods/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Sports-Collectibles/zgbs/sports-collectibles/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Sports-Collectibles/zgbs/sports-collectibles/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Home-Improvement/zgbs/hi/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Home-Improvement/zgbs/hi/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/Best-Sellers-Toys-Games/zgbs/toys-and-games/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/Best-Sellers-Toys-Games/zgbs/toys-and-games/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Successfully parsed: https://www.amazon.com/best-sellers-video-games/zgbs/videogames/ref=zg_bs_pg_1?_encoding=UTF8&pg=1
Successfully parsed: https://www.amazon.com/best-sellers-video-games/zgbs/videogames/ref=zg_bs_pg_2?_encoding=UTF8&pg=2
Number of pages that failed the first pass: 4
page https://www.amazon.com/Best-Sellers/zgbs/wireless/ref=zg_bs_pg_2?_encoding=UTF8&pg=2 failed again
page https://www.amazon.com/Best-Sellers-Collectible-Coins/zgbs/coins/ref=zg_bs_pg_2?_encoding=UTF8&pg=2 failed again
page https://www.amazon.com/Best-Sellers-MP3-Downloads/zgbs/dmusic/ref=zg_bs_pg_1?_encoding=UTF8&pg=1 failed again
page https://www.amazon.com/Best-Sellers-MP3-Downloads/zgbs/dmusic/ref=zg_bs_pg_2?_encoding=UTF8&pg=2 failed again
```

Here, we successfully mined 76 of the 80 pages, which is good enough.

Extract information from each page

In this part, we define functions to scrape data fields such as the product description, the minimum and maximum price, product reviews, the image URL, and the product rating. This data is extracted from the parsed pages using HTML elements.

What is an HTML element?

An HTML element is made up of a tag name, attributes, and child nodes (including text nodes), among a few other parts. We use elements to locate and scrape data, and they can also be used to manipulate HTML.

What are HTML Tags, Child Nodes, and Attributes?

The easiest way to know an HTML tag is to understand how a device knows what content to display on the screen, where to show it, and how to show it. Further, differentiating between titles, headings, paragraphs, and images. All this is done using HTML tags on the computer. It is a web-based command to push browsers to take action on something.


Common HTML tags include html, body, head, and div. Each tag name is a keyword that tells the browser what the enclosed content means and how to handle it.

HTML attributes such as href, src, alt, class, and id modify an element. They are written inside the opening tag; for example, href sets a link's target, and class assigns names that CSS rules and scrapers can select on.

Child nodes are elements nested in another element.
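To make these terms concrete, here is a small Beautiful Soup sketch that reads a tag name, attributes, child nodes, and a text node. The HTML snippet is made up for illustration and is not Amazon's real markup.

```python
from bs4 import BeautifulSoup

# A tiny, made-up HTML snippet used only for illustration
html = """
<div class="item" id="first">
  <a href="/dp/B000000000"><img src="https://example.com/img.jpg" alt="Example product"></a>
  <span class="price">$39.90</span>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
div = soup.find("div")

print(div.name)          # tag name: div
print(div["id"])         # attribute lookup: first
print(div.get("class"))  # class is multi-valued: ['item']

# Direct child nodes that are tags (whitespace text nodes have name None)
child_tags = [child.name for child in div.children if child.name is not None]
print(child_tags)        # ['a', 'span']

print(div.find("img")["alt"])  # attribute of a nested element: Example product
print(div.find("span", class_="price").get_text(strip=True))  # text node: $39.90
```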

A single page combines many different HTML tags and attributes. The picture below shows the tags and attributes that hold a product's price information.

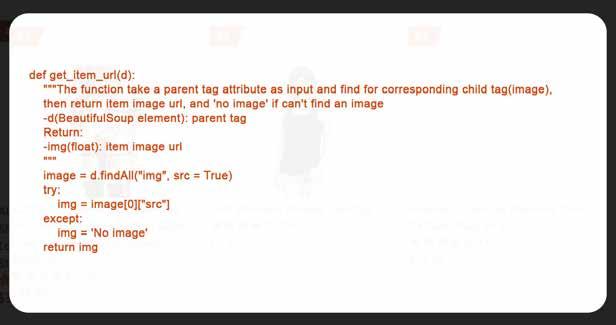

To get the product description, we defined get_topic_url_item_description, as shown in the code below.
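The article's own get_topic_url_item_description is shown only as an image, so this is a hedged sketch of what such a function can look like. It keeps the call signature used later in get_info and returns the item description together with the topic name and topic URL. The p13n-sc-truncate class is an assumed selector, so inspect the live page before relying on it.

```python
from bs4 import BeautifulSoup


def get_topic_url_item_description(item_tag, topic_name, topic_url):
    """Return (item description, topic name, topic URL) for one product block.

    Assumption: the product title sits in a div whose class list contains
    "p13n-sc-truncate" (hypothetical selector; check the live page).
    """
    title = item_tag.find("div", class_="p13n-sc-truncate")
    description = title.get_text(strip=True) if title is not None else ""
    return description, topic_name, topic_url


sample = BeautifulSoup(
    '<div class="a-section"><div class="p13n-sc-truncate"> Echo Dot (3rd Gen) </div></div>',
    "html.parser",
)
result = get_topic_url_item_description(
    sample, "Amazon Devices & Accessories", "https://example.com/topic"
)
print(result)  # ('Echo Dot (3rd Gen)', 'Amazon Devices & Accessories', 'https://example.com/topic')
```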


After that, we defined get_item_price to scrape the minimum and maximum price of each product.
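A possible sketch of get_item_price, under stated assumptions: prices sit in span tags with the hypothetical class p13n-sc-price, and a price range is written like "$4.99 - $99.99". When only one price exists, the maximum is returned as 0.0, which mirrors the 0.00 values in the Maximum_price column of the results.

```python
import re

from bs4 import BeautifulSoup


def get_item_price(item_tag):
    """Return (minimum_price, maximum_price) as floats for one product block."""
    # Hypothetical selector: every span whose class list contains "p13n-sc-price"
    price_spans = item_tag.find_all("span", class_="p13n-sc-price")
    text = " ".join(span.get_text() for span in price_spans)
    # Pull numbers such as 39.90 or 1,299.00 out of text like "$4.99 - $99.99"
    prices = [float(p.replace(",", "")) for p in re.findall(r"\d[\d,]*(?:\.\d+)?", text)]
    if not prices:
        return 0.0, 0.0  # no price shown on the card
    if len(prices) == 1:
        return prices[0], 0.0  # single price: no maximum, stored as 0.0
    return min(prices), max(prices)


single = BeautifulSoup('<div><span class="p13n-sc-price">$39.90</span></div>', "html.parser")
ranged = BeautifulSoup('<div><span class="p13n-sc-price">$4.99 - $99.99</span></div>', "html.parser")
print(get_item_price(single))  # (39.9, 0.0)
print(get_item_price(ranged))  # (4.99, 99.99)
```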


Next, we defined the get_item_review and get_item_rate functions to get each product's review count and star rating.
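Sketches of both functions follow, assuming (hypothetically) that the rating text reads like "4.7 out of 5 stars" inside a span with class a-icon-alt, and that the review count is the text of a link with class a-size-small. These selectors are assumptions, not taken from the article; missing values fall back to 0.

```python
import re

from bs4 import BeautifulSoup


def get_item_rate(item_tag):
    """Return the star rating as a float, or 0.0 if it is missing."""
    # Hypothetical selector for text such as "4.7 out of 5 stars"
    star = item_tag.find("span", class_="a-icon-alt")
    if star is None:
        return 0.0
    match = re.search(r"\d+(?:\.\d+)?", star.get_text())  # first number = rating
    return float(match.group()) if match else 0.0


def get_item_review(item_tag):
    """Return the number of customer reviews as an int, or 0 if it is missing."""
    # Hypothetical selector for a link whose text is the count, e.g. "615,786"
    link = item_tag.find("a", class_="a-size-small")
    if link is None:
        return 0
    digits = re.sub(r"\D", "", link.get_text())  # drop commas and other non-digits
    return int(digits) if digits else 0


sample = BeautifulSoup(
    '<div><i class="a-icon-star"><span class="a-icon-alt">4.7 out of 5 stars</span></i>'
    '<a class="a-size-small a-link-normal" href="#">615,786</a></div>',
    "html.parser",
)
print(get_item_rate(sample))    # 4.7
print(get_item_review(sample))  # 615786
```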


Lastly, we defined get_item_url to extract the image URL of the product.
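A minimal get_item_url sketch, assuming the first img tag inside a product block is the product picture and that its address is in the src attribute (pages that lazy-load images may keep the real address in a different attribute):

```python
from bs4 import BeautifulSoup


def get_item_url(item_tag):
    """Return the product image URL, or an empty string if there is no image."""
    img = item_tag.find("img")  # assumption: first image = product picture
    return img.get("src", "") if img is not None else ""


sample = BeautifulSoup(
    '<div><a href="#"><img alt="Echo Dot" src="https://example.com/echo-dot.jpg"></a></div>',
    "html.parser",
)
print(get_item_url(sample))  # https://example.com/echo-dot.jpg
```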

With that, we have extracted all the relevant data for the best-seller products and can store it in a usable format.

Compile the scraped data from each page into a Python dictionary

We defined the function get_info to compile all the product data into a Python dictionary.

```python
def get_info(article_tags, t_description, t_url):
    """Collect the item data from every parsed page.

    The function takes a list of page contents, where each entry is a
    Beautiful Soup result used to find the product tags, plus the list of
    topic descriptions and the list of topic URLs. It returns a dictionary
    of lists holding, for each item: its topic, the topic URL, the item
    description, the minimum price (and maximum price if one exists), the
    item rating, the customer review count, and the item image URL.

    Arguments:
    - article_tags (list): page contents, each entry a Beautiful Soup object
    - t_description (list): topic descriptions
    - t_url (list): topic URLs

    Return:
    - dict: all item information taken from each parsed topic page
    """
    topic_description, topics_url, item, item_url = [], [], [], []
    minimum_price, maximum_price, rating, customer_review = [], [], [], []

    # Walk the pages together with their topic description and topic URL
    for doc, description, page_url in zip(article_tags, t_description, t_url):
        # doc holds the product blocks of one page, e.g. the result of
        # page.findAll('div', attrs={'class': 'a-section a-spacing-none aok-relative'})
        for d in doc:
            names, topic_name, topic_url = get_topic_url_item_description(d, description, page_url)
            min_price, max_price = get_item_price(d)
            rate = get_item_rate(d)
            review = get_item_review(d)
            url = get_item_url(d)

            # Put each item's data inside the corresponding list
            item.append(names)
            topic_description.append(topic_name)
            topics_url.append(topic_url)
            minimum_price.append(min_price)
            maximum_price.append(max_price)
            rating.append(rate)
            customer_review.append(review)
            item_url.append(url)

    return {
        "Topic": topic_description,
        "Topic_url": topics_url,
        "Item_description": item,
        "Rating out of 5": rating,
        "Minimum_price": minimum_price,
        "Maximum_price": maximum_price,
        "Review": customer_review,
        "Item Url": item_url,
    }
```

After a couple of parsing attempts, we collected data for most of the best-seller products on Amazon. Now we will store it in a data frame using pandas.

Store the information in a CSV file using Pandas

Let's begin by storing the data in a data frame:

```python
import pandas as pd

# data is the dictionary returned by get_info
dataframe = pd.DataFrame(data)
```

| | Topic | Topic_url | Item_description | Rating out of 5 | Minimum_price | Maximum_price | Review | Item Url |
|---|---|---|---|---|---|---|---|---|
| 0 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Fire TV Stick 4K streaming device with Alexa V... | 4.7 | 39.90 | 0.00 | 615786 | https://images-na.ssl-images-amazon.com/images... |
| 1 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Fire TV Stick (3rd Gen) with Alexa Voice Remot... | 4.7 | 39.90 | 0.00 | 1884 | https://images-na.ssl-images-amazon.com/images... |
| 2 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Echo Dot (3rd Gen) - Smart speaker with Alexa ... | 4.7 | 39.90 | 0.00 | 1124438 | https://images-na.ssl-images-amazon.com/images... |
| 4 | Amazon Devices & Accessories | https://www.amazon.com/Best-Sellers/zgbs/amazo... | Amazon Smart Plug, works with Alexa - A Certif... | 4.7 | 24.90 | 0.00 | 425111 | https://images-na.ssl-images-amazon.com/images... |
| ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 3795 | Video Games | https://www.amazon.com/best-sellers-video-game... | PowerA Joy Con Comfort Grips for Nintendo Swit... | 4.7 | 9.88 | 0.00 | 21960 | https://images-na.ssl-images-amazon.com/images... |
| 3796 | Video Games | https://www.amazon.com/best-sellers-video-game... | Super Mario 3D All-Stars (Nintendo Switch) | 4.8 | 58.00 | 0.00 | 15109 | https://images-na.ssl-images-amazon.com/images... |
| 3797 | Video Games | https://www.amazon.com/best-sellers-video-game... | Quest Link Cable 16ft, VOKOO Oculus Quest Link... | 4.3 | 29.90 | 0.00 | 3576 | https://images-na.ssl-images-amazon.com/images... |
| 3798 | Video Games | https://www.amazon.com/best-sellers-video-game... | RUNMUS K8 Gaming Headset for PS4, Xbox One, PC... | 4.5 | 21.80 | 0.00 | 47346 | https://images-na.ssl-images-amazon.com/images... |
| 3799 | Video Games | https://www.amazon.com/best-sellers-video-game... | NBA 2K21: 15,000 VC - PS4 [Digital Code] | 4.5 | 4.99 | 99.99 | 454 | https://images-na.ssl-images-amazon.com/images... |

This process gave us 3,800 rows and 8 columns of data. Now let's save it to a CSV file using pandas:

```python
# index=False keeps the row numbers out of the CSV file
dataframe.to_csv('AmazonBestSeller.csv', index=False)
```

Here is how you can access the file: File > Open.

Now, let's open the CSV file and print the first 5 lines of the data.
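The code for this step is not shown in the text, so here is one hedged way to do it with pandas: read the file with pd.read_csv and call head(), which returns the first 5 rows by default. To keep the example self-contained, it first writes a tiny stand-in file, built from rows in the sample output above, to a temporary folder; preview_csv is a hypothetical helper name.

```python
import os
import tempfile

import pandas as pd


def preview_csv(path: str, n: int = 5) -> pd.DataFrame:
    """Read the saved CSV back into a DataFrame and return its first n rows."""
    return pd.read_csv(path).head(n)


# Demo only: a tiny stand-in for AmazonBestSeller.csv using rows from the
# sample output. With the real data, call preview_csv("AmazonBestSeller.csv").
demo = pd.DataFrame({
    "Topic": ["Amazon Devices & Accessories", "Video Games"],
    "Item_description": [
        "Echo Dot (3rd Gen) - Smart speaker with Alexa ...",
        "Super Mario 3D All-Stars (Nintendo Switch)",
    ],
    "Rating out of 5": [4.7, 4.8],
    "Minimum_price": [39.90, 58.00],
    "Maximum_price": [0.00, 0.00],
    "Review": [1124438, 15109],
})
path = os.path.join(tempfile.mkdtemp(), "AmazonBestSeller.csv")
demo.to_csv(path, index=False)

preview = preview_csv(path)
print(preview)
```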


Finally, let's have a look at the product with the highest number of reviews by customers.
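One way to find it, assuming the Review column holds the review counts as in the sample output above: convert the column to numbers (in case any values were read as text) and use idxmax to pick the matching row. most_reviewed is a hypothetical helper, and the demo rows are copied from the sample output, so the result only holds for those rows, not for the full dataset.

```python
import pandas as pd


def most_reviewed(df: pd.DataFrame) -> pd.Series:
    """Return the row with the largest number of customer reviews."""
    # Coerce to numbers in case the column was read as text; bad values become NaN
    reviews = pd.to_numeric(df["Review"], errors="coerce")
    # idxmax skips NaN by default and returns the index label of the largest count
    return df.loc[reviews.idxmax()]


# Demo rows from the sample output; with the real file use
# most_reviewed(pd.read_csv("AmazonBestSeller.csv"))
sample = pd.DataFrame({
    "Item_description": [
        "Fire TV Stick 4K streaming device with Alexa V...",
        "Echo Dot (3rd Gen) - Smart speaker with Alexa ...",
        "Amazon Smart Plug, works with Alexa - A Certif...",
        "RUNMUS K8 Gaming Headset for PS4, Xbox One, PC...",
    ],
    "Review": [615786, 1124438, 425111, 47346],
})
top = most_reviewed(sample)
print(top["Item_description"], top["Review"])
```

For a top-five list instead, dataframe.nlargest(5, 'Review') works once the column is numeric.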


Summary

In this post, we went through the following steps to extract Amazon best-seller listings using Python:

Library installation

Parsing the best-seller HTML pages

Extracting information from each page

Combining the data in a data frame

Converting and saving it in CSV format

Here is what the CSV file looks like at the end of the project.


That’s it for this project. If the process is still unclear, or your business is short on time, iWeb Data Scraping can help with web scraping services; you are just one email away from us.


https://www.facebook.com/iwebdatascraping#

+1 424 2264664

www.iwebdatascraping

info@iwebdatascraping.com

Social Media

https://www.instagram.com/iwebdatascrapingservice/

https://twitter.com/iwebdatascrape

https://www.linkedin.com/company/iwebdatascraping/

https://www.youtube.com/@iwebdatascraping

https://in.pinterest.com/iwebdatascraping/