International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 09 | Sep 2025 www.irjet.net p-ISSN: 2395-0072


Shamanth D1, Dr. Rashmi C R2, Dr. Shantala C P3

1PG Student, Dept. of Computer Science & Engineering, Channabasaveshwara Institute of Technology, Gubbi, Karnataka, India

2Assistant Professor, Dept. of Computer Science & Engineering, Channabasaveshwara Institute of Technology, Gubbi, Karnataka, India

3Professor & Head, Dept. of Computer Science & Engineering, Channabasaveshwara Institute of Technology, Gubbi, Karnataka, India

Abstract - Text-to-image generation is a rapidly advancing field of Artificial Intelligence that has emerged in recent years from the convergence of Natural Language Processing (NLP) and Computer Vision (CV). This paper discusses the design and implementation of a deep learning model that translates natural language descriptions into realistic images using Stable Diffusion, together with supporting architectures including GANs and Attentional GANs (AttnGAN).

Early GAN models and conventional image synthesis approaches (such as template-based retrieval) suffered from low resolution, semantic mismatches, and poor adaptability. To overcome these constraints, this work uses a latent diffusion model (Stable Diffusion v1.5), trained on subsets of the large-scale LAION-5B dataset and loaded via the Hugging Face diffusers library. The system is implemented in a Jupyter Notebook, giving the user an interactive environment to enter text prompts, apply positive and negative conditioning, and change parameters such as guidance scale, number of denoising steps, and resolution in real time.

The algorithm combines text preprocessing and CLIP-based text embedding with iterative denoising and refinement in latent space to generate semantically correct, photorealistic images. The outputs are evaluated with quantitative metrics (Inception Score, Fréchet Inception Distance, SSIM, Precision/Recall) and with subjective human judgement, to assess the balance between realism, diversity, and semantic fidelity.

The findings indicate that GAN-based models produce sharper, more detailed images, whereas diffusion-based models are more semantically consistent, generalize better, and are more photorealistic. The system produced high-quality images for application areas such as art and design, education, healthcare simulation, gaming, and digital media production.

Beyond showing how diffusion-based architectures can synthesize images from text in practice, this paper highlights open issues such as computational complexity, linguistic ambiguity, dataset bias, and risks to user privacy. Finally, it demonstrates how deep learning can connect human linguistic expression to visual creativity, and offers an extensible, usable platform for cross-modal content generation.

Key Words: Text-to-Image Generation, Stable Diffusion, Deep Learning, Generative Adversarial Networks, Natural Language Processing, CLIP Embeddings, Image Synthesis.

In the modern digital landscape, visual media plays a vital role in conveying information, telling stories, and stimulating creative processes across disciplines. Rapid progress in artificial intelligence, especially in deep learning, has transformed how computers interpret and generate complex imagery. A key breakthrough in this area is Text-to-Image Generation, in which algorithms synthesize original, highly realistic images based solely on written descriptions. This interdisciplinary technique bridges linguistic expression and visual representation, opening up new possibilities for innovation in sectors such as advertising, digital art, interactive entertainment, virtual learning, medical simulation, and user interface design.

Text-to-image generation involves training neural networks to grasp the nuances of human language and convert abstract or descriptive sentences into visually rich, contextually relevant images. Unlike conventional image processing, where the focus is often on filtering, classifying, or retrieving existing visual assets, this method requires the system to understand complex semantics, discern contextual clues, and recreate features in a way that mirrors the intended meaning. One of the most significant technical challenges lies in mapping the flexible, ambiguous structures of natural language to the precise, multidimensional spaces of digital imagery: linguistic constructs must be translated into shapes, textures, colors, and spatial relationships, often with minimal human intervention.

In recent years, the evolution of generative deep learning architectures has advanced this field considerably. Models such as Generative Adversarial Networks (GANs), which pit two neural networks (a generator and a discriminator) against each other to improve image realism, and Variational Autoencoders (VAEs), which focus on learning latent features, laid the foundation for machine-driven creativity. The advent of Diffusion Models, most notably frameworks such as Stable Diffusion, has pushed the boundaries further by refining random noise step by step into coherent visual outputs.
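For reference, the two generative principles named above can be written compactly. The first line is the standard GAN minimax objective of Goodfellow et al. (2014); the second is the usual closed-form forward (noising) process of denoising diffusion models with a variance schedule β_t. The notation is the common one from the literature and is not taken from this paper's implementation.

```latex
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p(z)}\big[\log\big(1 - D(G(z))\big)\big]

q(x_t \mid x_0) = \mathcal{N}\!\big(x_t;\ \sqrt{\bar\alpha_t}\, x_0,\ (1 - \bar\alpha_t)\, I\big),
\qquad \bar\alpha_t = \prod_{s=1}^{t} (1 - \beta_s)
```

A diffusion model learns to reverse this noising process, typically by training a network to predict the noise that was added at step t.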

These models, which combine powerful pre-trained encoders with learned denoising mechanisms, can generate intricate, photorealistic images that faithfully reflect complex text instructions, handling numerous styles, subjects, and levels of abstraction with high efficiency and quality.

The utility of automated text-to-image synthesis extends to many professional and academic domains. In advertising, visual design, and media production, creative professionals use AI-powered image generation tools to accelerate idea development, visualizing storyboards, product concepts, or artistic interpretations from a single sentence. This efficiency transforms iterative design workflows and expands what is possible in creative collaboration.

In classrooms and training settings, educators use AI-generated visuals to bring abstract, complex, or technical concepts to life, improving student engagement and comprehension. The healthcare sector increasingly uses synthetic imagery to simulate physiological phenomena and depict rare medical conditions, enabling flexible, risk-free training environments for practitioners. The entertainment and gaming industries use generative algorithms to create dynamic worlds, characters, and digital assets directly from narrative prompts, streamlining both creative and development cycles. Despite this transformative potential, text-to-image generation presents a series of technical and ethical obstacles that must be addressed before it can be adopted reliably.

Achieving true semantic fidelity, where the generated image matches the intended meaning of the text prompt, remains difficult because of linguistic ambiguity, cultural context, and varying interpretations of descriptive language. Moreover, ensuring that outputs are undistorted, visually pleasing, and versatile across diverse subjects and styles demands robust deep learning architectures and large datasets. High computational requirements, particularly for training and deploying high-resolution models, may restrict access for organizations with limited resources. Responsible use is also paramount: addressing biases in the training data and safeguarding against malicious misuse, such as the creation of inappropriate or misleading visual content, are critical ethical concerns that require proactive mitigation strategies.

This paper responds to these challenges by using Stable Diffusion, which is recognized for producing consistent, high-quality images from nuanced textual input. Integrated through the Hugging Face Diffusers library, the solution offers an adaptable, interactive interface that lets users create images from their own prompts and optimize results by adjusting parameters such as image resolution, number of generation steps, and guidance scale. The platform also supports generating batches of images and selecting flexible aspect ratios, catering to a wide range of research and creative needs. Implementation in a Jupyter Notebook environment makes the system accessible for experimentation and iterative tuning, regardless of the user's technical background.
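A minimal sketch of how such an interface might wrap the diffusers `StableDiffusionPipeline` is shown below. The `GenerationSettings` container, the `generate` helper, the default values, and the Hub model ID are all assumptions for illustration, not the authors' code; only the pipeline call parameters (`prompt`, `negative_prompt`, `width`, `height`, `num_inference_steps`, `guidance_scale`, `num_images_per_prompt`, `generator`) belong to the library. Heavy imports are deferred so the settings logic can be checked without a GPU.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed Hugging Face Hub ID for the Stable Diffusion v1.5 weights (illustrative choice).
MODEL_ID = "stable-diffusion-v1-5/stable-diffusion-v1-5"


@dataclass
class GenerationSettings:
    """Hypothetical container for the user-adjustable notebook parameters."""
    prompt: str
    negative_prompt: Optional[str] = None
    width: int = 512
    height: int = 512
    num_inference_steps: int = 30
    guidance_scale: float = 7.5
    num_images: int = 1  # batch size per prompt
    seed: int = 0

    def to_pipeline_kwargs(self) -> dict:
        # The SD v1.x autoencoder downsamples by 8, so both sides must be multiples of 8.
        if self.width % 8 or self.height % 8:
            raise ValueError("width and height must be multiples of 8")
        if self.num_inference_steps < 1:
            raise ValueError("num_inference_steps must be >= 1")
        return {
            "prompt": self.prompt,
            "negative_prompt": self.negative_prompt,
            "width": self.width,
            "height": self.height,
            "num_inference_steps": self.num_inference_steps,
            "guidance_scale": self.guidance_scale,
            "num_images_per_prompt": self.num_images,
        }


def generate(settings: GenerationSettings):
    """Run the pipeline; torch/diffusers are imported here so this module loads without them."""
    import torch
    from diffusers import StableDiffusionPipeline

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(MODEL_ID, torch_dtype=dtype).to(device)
    # A seeded generator makes results repeatable for the same settings and hardware.
    generator = torch.Generator(device=device).manual_seed(settings.seed)
    return pipe(generator=generator, **settings.to_pipeline_kwargs()).images
```

For example, `generate(GenerationSettings(prompt="A beautiful sunset over mountains, digital art", negative_prompt="blurry, distorted", width=768, height=512, num_images=4))` would request a batch of four landscape-format images. Keeping every adjustable value in one object also makes it simple to log those values next to each output.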

The fundamental aim of this work is to develop and validate an end-to-end deep learning framework that converts natural language into visually compelling images with precision and diversity. By demonstrating real-world results with state-of-the-art diffusion modeling, this paper underscores deep learning's expanding role in bridging verbal and visual communication. The approach highlights the technological advances driving text-to-image synthesis and shows their value for cross-modal understanding and creative exploration in both academic research and practical applications.

Transforming descriptive natural language into visually realistic and coherent images is a significant challenge in artificial intelligence. Unlike tasks such as text analysis or image recognition, this process requires models to connect two distinct types of data: linguistic information and visual content. The inherent ambiguity, abstraction, and subjectivity of textual descriptions further complicate the generation of precise images that faithfully represent the intended meaning.

Advances in deep learning architectures and language-vision models have driven the development of text-to-image generation. Early work focused primarily on Generative Adversarial Networks (GANs), which learn to produce synthetic images from random noise. GANs were introduced by Goodfellow et al. (2014); Reed et al. (2016) then applied Conditional GANs (cGANs) to text, conditioning image synthesis on text embeddings in one of the first practical text-to-image synthesis systems.

To address the poor resolution and lack of detail of earlier GAN models, StackGAN (Zhang et al., 2017) proposed a two-stage approach that produced sharper, higher-resolution images. AttnGAN (Xu et al., 2018) improved on this by using attention to align individual words in the text with specific regions of the image, yielding more accurate semantics and more detailed visual representations. Subsequently, BigGAN (Brock et al., 2019) showed that scaling up model size and training data substantially improves image fidelity and diversity, although it was not trained to condition on text.
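The word-to-region attention mentioned for AttnGAN can be sketched in a few lines of NumPy. This is a simplified illustration that assumes the word features have already been projected to the same dimensionality D as the image sub-region features (AttnGAN performs that projection with a learned layer); it leaves out the multi-stage generator and the DAMSM loss, and the function names are hypothetical.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def word_context(region_feats: np.ndarray, word_feats: np.ndarray):
    """Attend from each image sub-region to the words of the description.

    region_feats: (N, D) features h_j of N image sub-regions
    word_feats:   (T, D) projected features e'_i of T words
    Returns the (N, D) word-context vectors and the (N, T) attention weights.
    """
    scores = region_feats @ word_feats.T   # s_{j,i} = h_j . e'_i
    beta = softmax(scores, axis=1)         # normalize over words for every region
    context = beta @ word_feats            # c_j = sum_i beta_{j,i} e'_i
    return context, beta


# Example: 4 image regions, 3 words, 5-dimensional features (random stand-ins).
rng = np.random.default_rng(0)
regions = rng.normal(size=(4, 5))
words = rng.normal(size=(3, 5))
context, beta = word_context(regions, words)
```

Each row of `beta` shows which words a given image region attends to, which is how the model can render, for example, the color named in the text in the matching part of the image.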

Transformer-based architectures then changed the paradigm. DALL·E (Ramesh et al., 2021), OpenAI's text-to-image model, combined a discrete variational autoencoder (dVAE) with a transformer. OpenAI followed it with DALL·E 2 (Ramesh et al., 2022), which used CLIP embeddings to match semantics more closely and supported text-based image editing. GLIDE (Nichol et al., 2021) combined diffusion models with text guidance to generate images and perform inpainting from natural language prompts.

Another breakthrough was the introduction of Latent Diffusion Models (LDMs) by Rombach et al. (2022), in which the generative process operates in a compressed latent space rather than in pixel space, so that models can be trained at substantially lower cost with high image quality. Stable Diffusion, the best-known LDM, became widely popular because it is open-source, efficient, and capable of creating high-resolution, photorealistic images. Google's Imagen (Saharia et al., 2022), in turn, highlighted the importance of large pretrained language models (T5) for semantic understanding and produced some of the most realistic images reported at the time.
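To make the efficiency argument concrete, the arithmetic below assumes the Stable Diffusion v1 configuration, in which the autoencoder downsamples each spatial dimension by a factor of 8 and produces 4 latent channels:

```latex
\underbrace{512 \times 512 \times 3}_{\text{pixel space}} = 786\,432
\qquad \text{vs.} \qquad
\underbrace{64 \times 64 \times 4}_{\text{latent space}} = 16\,384,
\qquad \frac{786\,432}{16\,384} = 48
```

Each denoising step therefore operates on 48 times fewer values than a pixel-space model at the same output resolution; the actual compute saving also depends on the denoising network's architecture.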

These developments reveal a clear progression: GAN-based models established text-conditioned synthesis, and diffusion-based models now dominate the field with better realism, flexibility, and efficiency. This paper builds on this trend, using Stable Diffusion with Hugging Face's open-source diffusers library to provide a convenient, interactive interface for generating high-quality text-to-image results.

The methodology of the present project is a pipeline that combines natural language processing, deep generative modeling, and a user-interactive interface. The workflow is designed to transform descriptive text prompts into high-quality, realistic images using Stable Diffusion and related frameworks.

• User-entered text is first broken down (tokenized) into small linguistic units.

• The tokens are encoded by a CLIP (Contrastive Language-Image Pretraining) text encoder, which produces semantic embeddings that represent the meaning of the prompt.

• Both positive prompts (content to include) and negative prompts (content to exclude, e.g., blurry, distorted) are processed to obtain finely controlled outputs.

• The CLIP text embeddings act as the conditioning variable for image generation in the latent space, supplied to the denoising network through cross-attention.

• These embeddings keep the generated images consistent with the description given in the prompt.
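The prompt-handling steps above can be illustrated with a shape-level sketch of how positive and negative prompts become one conditioning batch. It follows the convention of the diffusers Stable Diffusion pipeline, where the negative-prompt embeddings (or the embedding of an empty prompt when no negative prompt is given) are concatenated ahead of the prompt embeddings. Random arrays stand in for real CLIP outputs, the 77 × 768 shape is the one assumed for the SD v1.5 text encoder, and `build_conditioning` is a hypothetical name.

```python
import numpy as np

# Assumed SD v1.5 text-encoder output: 77 token positions x 768 features per prompt.
MAX_TOKENS, EMBED_DIM = 77, 768


def build_conditioning(prompt_embeds: np.ndarray, negative_embeds: np.ndarray) -> np.ndarray:
    """Stack negative and positive prompt embeddings into a single batch.

    The first half drives the unconditional (negative) noise prediction and the second
    half the text-conditioned one; both are needed for classifier-free guidance.
    Without a user negative prompt, the caller passes the embedding of "" instead.
    """
    if negative_embeds.shape != prompt_embeds.shape:
        raise ValueError("negative and positive embeddings must have the same shape")
    if prompt_embeds.shape[1:] != (MAX_TOKENS, EMBED_DIM):
        raise ValueError(f"expected (batch, {MAX_TOKENS}, {EMBED_DIM}) embeddings")
    return np.concatenate([negative_embeds, prompt_embeds], axis=0)


# Stand-ins for the encodings of "a futuristic robot in a garden" and "blurry, distorted".
rng = np.random.default_rng(0)
positive = rng.standard_normal((1, MAX_TOKENS, EMBED_DIM))
negative = rng.standard_normal((1, MAX_TOKENS, EMBED_DIM))
cond_batch = build_conditioning(positive, negative)
```

Batching both halves lets a single network call produce the two noise predictions that the guidance scale later combines.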

3. Latent Diffusion Process

• Rather than operating in pixel space, Stable Diffusion generates images in a latent space compressed by a Variational AutoEncoder (VAE).

• Generation begins with a random noise latent that is repeatedly denoised until it converges to a coherent representation matching the input text; the VAE decoder then maps this latent back to an image.

• The guidance scale parameter balances creativity (more diverse outputs) against fidelity (strict adherence to the prompt).
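The role of the guidance scale can be made concrete with classifier-free guidance, the rule Stable Diffusion pipelines use to combine the two noise predictions at every denoising step: ε̂ = ε_uncond + w (ε_cond − ε_uncond), where w is the guidance scale. The NumPy sketch below shows this rule together with the initial latent shape under the SD v1.x assumptions (factor-8 downsampling, 4 latent channels). Random arrays stand in for the U-Net outputs, and the helper names are hypothetical.

```python
import numpy as np

VAE_SCALE_FACTOR = 8  # assumed SD v1.x autoencoder: height and width shrink by 8
LATENT_CHANNELS = 4   # assumed SD v1.x autoencoder: 4 latent channels


def latent_shape(height: int, width: int, batch: int = 1) -> tuple:
    """Shape of the initial random latent for images of the given size."""
    if height % VAE_SCALE_FACTOR or width % VAE_SCALE_FACTOR:
        raise ValueError("height and width must be multiples of 8")
    return (batch, LATENT_CHANNELS, height // VAE_SCALE_FACTOR, width // VAE_SCALE_FACTOR)


def guided_noise(noise_uncond: np.ndarray, noise_cond: np.ndarray, guidance_scale: float) -> np.ndarray:
    """Classifier-free guidance: move the noise estimate away from the unconditional
    (or negative-prompt) prediction and toward the text-conditioned prediction."""
    return noise_uncond + guidance_scale * (noise_cond - noise_uncond)


rng = np.random.default_rng(0)
latents = rng.standard_normal(latent_shape(512, 512))  # generation starts from pure noise
# Random stand-ins for the two U-Net noise predictions at a single denoising step.
eps_uncond = rng.standard_normal(latents.shape)
eps_cond = rng.standard_normal(latents.shape)
eps = guided_noise(eps_uncond, eps_cond, guidance_scale=7.5)
```

With w = 1 the result equals the text-conditioned prediction, while larger values enforce the prompt more strongly at the cost of diversity; the diffusers `StableDiffusionPipeline` uses 7.5 as its default.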

4. Model Architecture

• The pretrained Stable Diffusion v1.5 model was trained on subsets of the LAION-5B dataset, which exposes it to a wide variety of text-image pairs.

• For comparison, GAN-based models (e.g., AttnGAN) were also explored to analyze their strengths in synthesizing fine detail.

• The diffusion model was chosen as the core architecture because of its better realism and semantic consistency.

5. Training and Transfer Learning

• Instead of training its own models, this work follows a transfer learning approach, using pretrained Stable Diffusion weights available through the Hugging Face diffusers library.

• Prompts were iteratively refined to achieve the highest quality output without consuming large amounts of computational resources.

6. Implementation Environment

• The entire system was built and tested in a Jupyter Notebook environment.

• Users interact with the model by typing prompts and adjusting parameters including:

o Image resolution

o Number of denoising steps

o Guidance scale

o Batch size / aspect ratio

• Generated images are automatically stored and recorded with their metadata to ensure reproducibility.
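Recording each output with its generation settings can be sketched with a JSON sidecar file written next to the image. The function names, the file layout, and the metadata fields are hypothetical choices for illustration; the sketch writes raw bytes to stay dependency-free, whereas with the PIL images returned by the pipeline one would call `image.save(path)` instead.

```python
import json
import time
from pathlib import Path


def save_with_metadata(image_bytes: bytes, settings: dict, out_dir: str, stem: str) -> Path:
    """Write an image plus a JSON sidecar holding the settings used to create it
    (e.g. prompt, negative prompt, seed, steps, guidance scale, resolution)."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    image_path = out / f"{stem}.png"
    image_path.write_bytes(image_bytes)
    # Copy the settings and add bookkeeping fields without mutating the caller's dict.
    record = dict(settings, image_file=image_path.name, created_unix=int(time.time()))
    (out / f"{stem}.json").write_text(json.dumps(record, indent=2, sort_keys=True))
    return image_path


def load_metadata(image_path: Path) -> dict:
    """Read back the sidecar for a saved image, e.g. to regenerate it with the same seed."""
    return json.loads(image_path.with_suffix(".json").read_text())
```

Storing the seed together with the prompt and sampler settings is what allows a given image to be regenerated later on the same software and hardware setup.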

7. Evaluation

• The system was evaluated both quantitatively and through qualitative human assessment:

o Inception Score (IS): measures how recognizable (confidently classified) and how diverse the generated images are.

o Fréchet Inception Distance (FID): compares the distribution of Inception features extracted from generated images with that of real images; lower is better.

o SSIM (Structural Similarity Index): measures visual consistency and structural fidelity between images.

o Human evaluation: subjective judgement of semantic fit, realism, and aesthetic value.
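The two Inception-based metrics above can be sketched directly from their definitions. The functions below assume that feature vectors (for FID) and class-probability vectors (for IS) have already been extracted; in practice these come from a pretrained Inception-v3 network, which this sketch does not compute, and the common practice of averaging IS over several splits is omitted. FID follows Heusel et al. [21]: ‖μ_r − μ_g‖² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^{1/2}). For SSIM, `skimage.metrics.structural_similarity` from scikit-image provides a ready-made implementation.

```python
import numpy as np


def frechet_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """FID between two sets of feature vectors (rows are samples)."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr(sqrt(cov_a @ cov_b)) equals the sum of square roots of the eigenvalues of
    # cov_a @ cov_b, which are real and non-negative up to rounding error.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    tr_covmean = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_covmean)


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """IS = exp(mean_x KL(p(y|x) || p(y))) from an (N, K) matrix of class probabilities."""
    p_y = probs.mean(axis=0, keepdims=True)  # marginal class distribution p(y)
    kl = np.sum(probs * (np.log(probs + eps) - np.log(p_y + eps)), axis=1)
    return float(np.exp(kl.mean()))


rng = np.random.default_rng(0)
real_feats = rng.normal(size=(500, 8))  # stand-in for Inception features of real images
fid_same = frechet_distance(real_feats, real_feats)
fid_shifted = frechet_distance(real_feats, real_feats + 2.0)
```

Identical feature sets give an FID of 0, and shifting every one of the 8 feature dimensions by 2 gives 8 × 2² = 32, because the covariances are unchanged and only the mean term contributes. For IS, confident predictions spread evenly over K classes give a score of K, while identical predictions for every image give 1.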

3. RESULTS

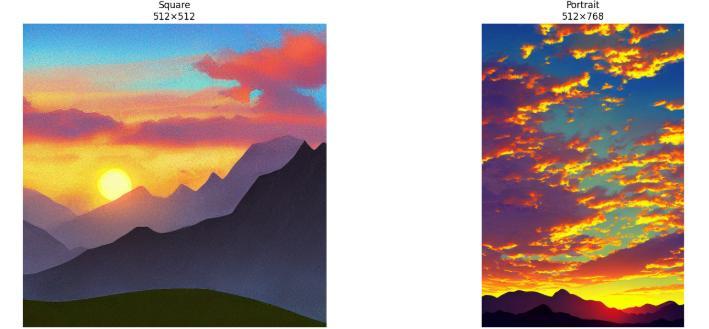

Prompt Used: “A beautiful sunset over mountains, digital art”


Fig -2: Model Comparison Results

Prompt Used: “A serene mountain lake at sunrise”

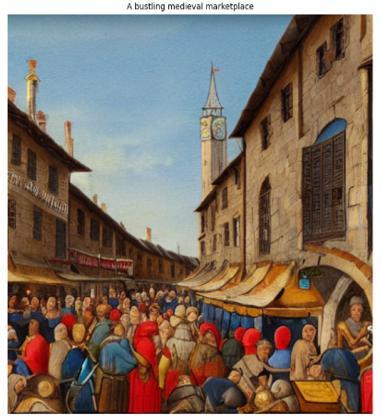

Prompt Used: “A bustling medieval marketplace”

Prompt Used: “A futuristic robot in a garden”

Prompt Used: “Abstract geometric patterns in blue and gold”


Creating realistic images from text descriptions is a significant research focus within Artificial Intelligence, with broad applications spanning art, design, digital content creation, and interactive systems. This paper employed deep learning techniques to develop a comprehensive framework for generating images from natural language input. Generative Adversarial Networks (GANs) and diffusion-based models emerged as especially effective architectures for interpreting textual semantics and producing coherent, visually meaningful representations. Earlier traditional approaches struggled to translate complex narrative descriptions into detailed images because of limited generalization and lower output quality. The integration of attention mechanisms, encoder-decoder structures, and pretrained vision-language embedding models has substantially improved the alignment between linguistic inputs and the corresponding visual features, enhancing the semantic accuracy of generated images.

Diffusion-based models performed better in both realism and variety, as evidenced by higher Inception Scores and lower Fréchet Inception Distance (FID) values. Conversely, GAN-based systems still offered advantages in faster training and more direct control over image generation parameters. Overall, among the architectures tested, diffusion models delivered the most balanced and advanced performance, generating images that closely resemble real-world examples both quantitatively and qualitatively.

[1] Goodfellow et al. (2014) – Generative Adversarial Networks (GANs)

[2] Reed et al. (2016) – Conditional GANs for Text-to-Image Synthesis

[3] Zhang et al. (2017) – StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks

[4] Xu et al. (2018) – AttnGAN with Fine-Grained Attention Mechanism

[5] Brock, Donahue, and Simonyan (2019) – BigGAN for Large-Scale High-Fidelity Image Synthesis: While not explicitly designed for text-to-image tasks, BigGAN demonstrated large-scale GAN training that yielded highly detailed and photorealistic images by significantly scaling model size and training data complexity.

[6] Ramesh et al. (2021) – DALL·E: Zero-Shot Text-to-Image Generation with Transformers

[7] Ramesh et al. (2022) – DALL·E 2 with CLIP Latents for Enhanced Text-Conditional Generation: Building on the original model, DALL·E 2 integrated CLIP embeddings to map text and images into a shared semantic space, resulting in improved understanding of prompts and significantly enhanced image realism.

[8] Rombach et al. (2022) – Stable Diffusion: High-Resolution Image Synthesis with Latent Diffusion Models

[9] M. Hoashi, K. Joutou and K. Yanai, Image Recognition of 85 Food Categories by Feature Fusion, ICM, 2010 (PFID dataset).

[10] Saharia et al. (2022) – Imagen: Photorealistic Text-to-Image Generation Leveraging Large Language Models

[11] N. Ruiz et al., DreamBooth: Fine Tuning Text-to-Image Diffusion Models for Subject-Driven Generation, arXiv preprint arXiv:2208.12242, 2022.

[12] E. Hu et al., LoRA: Low-Rank Adaptation of Large Language Models, arXiv preprint arXiv:2106.09685, 2021.

[13] L. Zhang, A. Rao and M. Agrawala, Adding Conditional Control to Text-to-Image Diffusion Models, arXiv preprint arXiv:2302.05543, 2023.

[14] J. Song, C. Meng and S. Ermon, Denoising Diffusion Implicit Models, arXiv preprint arXiv:2010.02502, 2020.

[15] C. Lu et al., DPM-Solver: A Fast ODE Solver for Diffusion Probabilistic Model Sampling in Around 10 Steps, Advances in Neural Information Processing Systems (NeurIPS), 2022.

[16] J. Hessel et al., CLIPScore: A Reference-free Evaluation Metric for Image Captioning, Proc. Conf. Empirical Methods in Natural Language Processing (EMNLP), 2021.

[17] Google DeepMind, SynthID: Identifying AI-generated Images with Watermarking, Technical Report, Aug. 2023.

[18] Z. Jiang et al., Watermark-based Detection and Attribution of AI-Generated Images, OpenReview (ICLR submission), 2024.


[19] P. Y. Chen et al., Latent Diffusion Models in Image Watermarking: A Review, Electronics, vol. 13, no. 2, p. 123, 2024.

[20] C. Schuhmann et al., LAION-5B: An Open Large-Scale Dataset for Training Next Generation Image-Text Models, Advances in Neural Information Processing Systems (NeurIPS) Datasets and Benchmarks Track, 2022.

[21] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler and S. Hochreiter, GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium, Advances in Neural Information Processing Systems (NeurIPS), 2017.

[22] T. Xu et al., AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks, Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2018.

[23] L. Liu, Y. Ren, Z. Lin and Z. Zhao, Pseudo Numerical Methods for Diffusion Models on Manifolds, arXiv preprint arXiv:2202.09778, 2022.

[24] P. Lorenz, R. Durall and J. Keuper, Detecting Images Generated by Deep Diffusion Models Using Their Local Intrinsic Dimensionality, Proc. IEEE Int. Conf. Comput. Vis. Workshops (ICCVW), 2023.

[25] P. Fernandez et al., The Stable Signature: Rooting Watermarks in Latent Diffusion Models, arXiv preprint arXiv:2303.15435, 2023.

[26] D. C. Epstein, I. Jain, O. Wang and R. Zhang, Online Detection of AI-Generated Images, Proc. IEEE Int. Conf. Comput. Vis. Workshops (ICCVW), 2023.

[27] A. Nichol et al., GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models, Proc. Int. Conf. Machine Learning (ICML), 2022.

[28] J. Ho et al., Imagen Video: High Definition Video Generation with Diffusion Models, arXiv preprint arXiv:2210.02303, 2022.

[29] A. Blattmann et al., ControlNet-XL: Conditioning Large Diffusion Models with Control Signals, arXiv preprint arXiv:2305.14003, 2023.

[30] H. Mou, Z. Wang and Y. Chen, A Survey on Text-to-Video Diffusion Models: Current Progress and Future Trends, arXiv preprint arXiv:2311.00357, 2023.

[31] T. Salimans and J. Ho, Progressive Distillation for Fast Sampling of Diffusion Models, arXiv preprint arXiv:2202.00512, 2022.

[32] G. Kim, T. Kwon and J. C. Ye, DiffusionCLIP: Text-Guided Diffusion Models for Robust Image Manipulation, arXiv preprint arXiv:2110.02711, 2022.

[33] T. Karras, M. Aittala, T. Aila and S. Laine, Elucidating the Design Space of Diffusion-Based Generative Models, Advances in Neural Information Processing Systems (NeurIPS), 2022.

[34] J. Betker et al., Improving Image Generation with Better Captions (DALL·E 3), OpenAI Technical Report, 2023.

[35] T. Brooks, A. Holynski and A. A. Efros, InstructPix2Pix: Learning to Follow Image Editing Instructions, arXiv preprint arXiv:2211.09800, 2023.

[36] Y. Balaji et al., Multimodal Coherence in Generative Models: Audio, Text and Vision, arXiv preprint arXiv:2212.08131, 2022.

[37] T. Kynkäänniemi et al., Improved Precision and Recall Metric for Assessing Generative Models, Advances in Neural Information Processing Systems (NeurIPS), 2019.

[38] C. Saharia et al., Palette: Image-to-Image Diffusion Models, arXiv preprint arXiv:2111.05826, 2022.

[39] OpenAI, Towards Safe and Responsible Diffusion Models: Risk and Governance Report, Technical Report, 2024.

[40] Y. Wu, Z. Li and X. Chen, VisualGPT: Reasoning and Generation Using Unified Multimodal Models, arXiv preprint arXiv:2301.01236, 2023.