International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

J. Chandrika1 , Dr. M. Saravanamuthu

J. Chandrika1 , Dr. M. Saravanamuthu

2

1Student, Department of Computer Applications, Madanapalle institute of technology and science, India

2Asst Professor, Department of Computer Applications, Madanapalle institute of technology and science, India ***

Abstract -Allnationsalreadyspendalotoftimerecycling. The most crucial task to enable cost-effective recycling is trashsorting,whichisoneofthetasksdesiredforrecycling. Inthisessay,wemakeanefforttoidentifyeachindividual pieceoftrashintheimagesandcategoriseitaccordingtoits suitability for recycling. We get knowledge of various techniquesandprovideathoroughassessment.Aidvector machines (SVM) with HOG features, basic convolutional neural networks(CNN),andCNN withresidual blocksare themodelsweemployed.Wedrawtheconclusionfromthe comparisonresultsthateasyCNNnetworks,withorwithout residual blocks, perform well. The target database's issue with garbage categorization can now be successfully resolvedthankstodeeplearningtechniques.

Keywords: convolutional neural networks, trash classification

Currently, the world generates 2.01 billion lots of municipalsolidwasteannually,whichishugedamagetothe ecologicalenvironment.Wastemanufacturingwillextendby wayof70%ifcutting-edgeconditionspersist[1].Recycling is becoming an essential section of a sustainable society. However,thewholeprocessofrecyclingneedsabighidden cost,whichiscausedthroughselection,classification,and processingoftherecycledmaterials.Eventhoughshoppers are inclined to do their own garbage sorting nowadays in manycountries,theymaybeburdenedabouthowtodecide the correct category of the rubbish when disposing of a massivevarietyofmaterials.Findingancomputerizedway to do the recycling is now of magnificent value to an industrial and information-based society, which has not solely environmental results but also recommended financialeffects.

TheindustryofartificialGeniushaswelcomeditsthird wavewithenoughdatabase.Deepgettingtoknowbeganto exhibit its excessive effectivity and low complexity in the area of pc vision. Many new thoughts were proposed to attainaccuracyinpictureclassificationandobjectdetection. Amongquiteanumberdeepmodels,convolutionalneural networks(CNNs) [2, 3] specially have led to a series of breakthroughsforphotoclassification.CNNscaptureaspects ofphotographswith“strongandprimarilyrightassumptions about the nature of images” [2]. Owing to the fewer connectionsofCNNsincontrasttoabsolutelylinkedneural networks, CNNs are simpler to be educated with fewer

parameters.Therefore,inthispaper,wewouldliketocheck outspecificmodelsbasedonconvolutionalneuralnetworks to do garbage classification. Overall, this learn about is to discoverasingleobjectinanimageandtoclassifyitintoone of the recycling categories, such as mental, paper, and plastic.

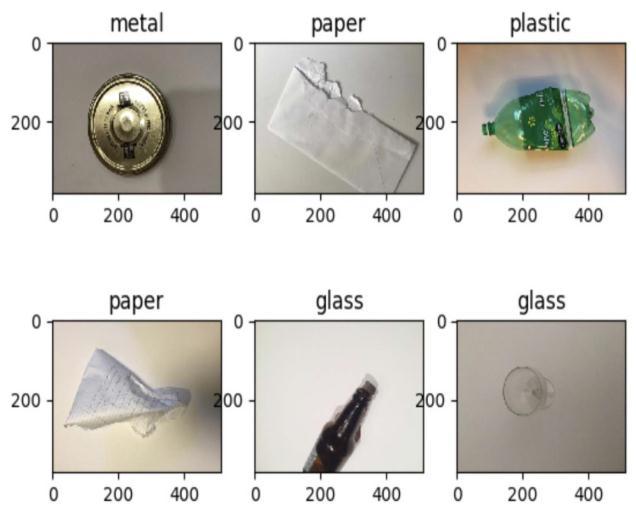

Fig.1.Sampleimagesofthegarbageclassificationdataset. Therestofthispaperisequippedasfollows.Sec.IIdescribes thegarbagephotographdataset.Detailsofstudiedmodels are described in Sec. III. Sec. IV presents comprehensive evaluation research and discussion, followed by using conclusionofthisworkinSec.V.

For garbage classification, we utilize the photos of the datasetcommittedtotherubbishclassificationventureon Kaggle.Thisdatasetconsistsoftotally2527picsinwhicha singleobjectofgarbageisexistingonacleanbackground. Lighting and pose configurations for objects in distinctive photographsisdifferent.Allthesepicshavethedimensionof 384 × 512 pixels and belong to one of the six recycling categories:cardboard,glass,metal,paper,plastic,andtrash.

Toeducatedeepneuralnetworks,wewantalargequantity ofcoachingimages.Withflippingandrotation,weaugment thedatasetto10108images,whichwasoncerandomlycut upintoinstructsetsof9,095snapshotsandcheckunitsof 1,013 images. Some sample pictures in this dataset are showninFig.1

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

Since all the objects were positioned on a clean background, we first of all attempt to capture gradient elements of photographs and then construct a classifier based totally on aid vector computing device (SVM) to do classification.

Thegradientfeatureswerentarehistogramoforiented gradients (HOG) [4]. The distribution of gradients of exceptionalinstructionscanonewayortheotherdescribe appearanceandshapeofobjectswithinanimage.TheHOG descriptor is invariant to geometric and photometric transformations.Thephotoisdividedintosmallrectangular areasandtheHOGfacetsarecompiledineveryregion.The orientedgradientsofeachmobilearecountedin9histogram channels.AftertheblocknormalizationusingL2-Normwith constrainedmostvalues,thecharacteristicvectorsofmobile histogramsareconcatenatedtoafeaturevectoroftheimage.

TheextractedfeaturevectorsarefedtoanSVM,whichis a canonical classificationtechnique earlier than the era of deeplearning.AnSVMclassifierisconstructedbymeansof discovering a set of hyperplanes between one-of-a-kind classesinahigh-dimensionalspace.Thegainingknowledge of algorithm tries to locate the hyperplane that has the greatest whole distance to the nearest coaching records point of any class, which ability the lowest error of the classifierattheidenticaltime.

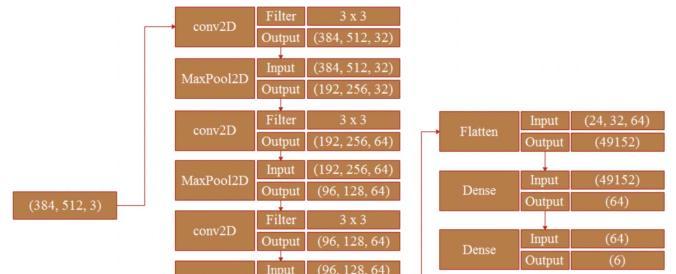

To check out performance of a basic CNN, we construct a simpleCNNstructuretogetfamiliarinspection,whichmay additionallyassisttocomprehendtheoverallperformance difference between models. This architecture uses 2D convolutional (conv. in short) layers to seize facets of images.Sincefiltersofsize3×3permitextraapplicationsof nonlinear activation features and decrease the number of parametersthanlargefilters[5],thebuiltsimpleCNNmodel uses 3 × three filters for all the conv. layers. Between 2D conv. layers we add the max pooling layers to limit dimensionsoftheenterandthenumberofparameterstobe learned. This should keep important aspects after conv. layers whilst stopping overfitting. After the conv. blocks there is a flatten layer, which flattens the function matrix intoacolumnvector.Thisapprovesthemannequintouse two utterly linked layers at the give up to do the classification.

Inthisarchitecture,weusetwoactivationfunctions.Inall the conv. layers and after the flatten layer we use the RectifiedLinearUnitfeature(ReLU)describedasy=max(0, x)tointroducenonlinearityintothemodel,whichoughtto avoid the trouble of gradient vanishing at some stage in backpropagationandhasalowercalculationcomplexity.In thefinal denselayer, weusethesoftmaxcharacteristic as activation,whichfitsthecrossentropylossfeaturewell.Fig. twoillustratesshapeoftheeasyCNN.

Inempiricalexperiments[6],researchersfoundthatvery deepconvolutionalneuralnetworksarechallengingtotrain. The accuracy can also become overly saturated and all at oncedegrade.Therefore,theresidualcommunitywasonce proposedtocurbthisproblem.

IntheResNetproposedin[6],theresidualblocktriesto analyzetheresidualsectionoftheproperoutput.Itmakes use of the shortcut connection of identity mapping to add formerlypartsofthenetworkintotheoutput.Suchshortcuts won’taddmore

Fig.2.StructureofsimpleCNN.

parameters or greater complexity. But the residual section is much less difficult to be trained than authentic functions in empirical experiments. In a variant of the ResNet, called ResNet50, researchers use the bottleneck structureintheresidualblock.Ineachresidualblock,there aretwoconv.layerswithafilterofsize1×1beforeandafter theordinarythree× 3conv. layer.These 1×1conv.layers limitandthenamplifydimensions,which“leavethethree× 3layerabottleneckwithsmallerinput/outputdimensions” [6] and keep the same dimensions of the identification sectionandtheresidualpart.

In the mannequin of ResNet50, we first off use a conv. layerandapoolinglayertogettheroughfacetsofimages. After the ordinary conv. block, the model makes use of definitelysixteenresidualblockswithangrowingdimension of features. The ultimate residual block is linked with an common pooling layer to downsample the characteristic

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

matrix, a flatten layer to convert the characteristic matrix intoavector,adropoutlayerandaabsolutelylinkedlayerto classify the aspects of an image into one category. The dropoutlayer,viewedtobeawayofregularization,cannow notsolelyaddnoisetothe hiddengadgetsofamodel,but canalsoaveragetheoverfittingblundersandreducethecoadaptionsbetweenneurons.

TheresidualblocksalsouseReLUasactivationfunction to make the most of its advantages. The same as the easy CNNarchitecture,ResNet50additionallyusessoftmaxasthe activation feature in the closing layer. Fig. 3 illustrates structureoftheResNet50model.

To make a evaluation between fashions with and except residual blocks, we additionally build a undeniable communityofResNet50barring the identity shortcuts. This undeniable community nonetheless carries the bottleneckblock,whichactsonthechangingofdimensions and discount of parameters. Without the identification mapping,thismannequinisconstructedprimarilybasedon theoriginal

IrjetTemplatepatternparagraph.Defineabbreviationsand acronymsthefirsttimetheyareusedinthetext,evenafter theyhavebeendescribedintheabstract.Abbreviationssuch asIEEE,SI,MKS,CGS,sc,dc,andrmsdonownothavetobe defined.Donotuseabbreviationsinthetitleorheadsexcept theyareunavoidable.functionratheroftheresidualfunction. Themeasurementofthefilter,dimensionsoffunctionmatrix andchoiceofactivationfeaturesofsimplenetworkarethe identicalwithResNet50

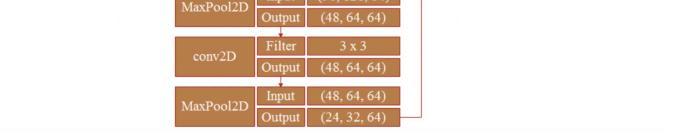

Wearealsothinkingtheperformanceifwemixtypicalhome madefacetswithCNNelementsTherefore,webuildanew community to collectively reflect onconsideration on two types of features. This network has two parts at the first stage: the convolutional section and HOG part. The

convolutional section includes four conv. layers with max poolinglayers(similartoshapeofthesimpleCNNmodel). TheHOGpartfirstlyresizesthephotointo200×200pixels.

It then extracts HOG elements of the photo with L2Normalization.ConcatenationofflattenedCNNelements and HOG aspects is fed to three We are also thinking the performanceifwemixtypicalhomemadefacetswithCNN elements Therefore, we build a new community to collectivelyreflectonconsiderationontwotypesoffeatures. This network has two parts at the first stage: the convolutional section and HOG part. The convolutional sectionincludesfourconv.layerswithmaxpoolinglayers (similartoshapeofthesimpleCNNmodel).TheHOGpart firstly resizes the photo into 200 × 200 pixels. It then extractsHOGelementsofthephotowithL2Normalization. ConcatenationofflattenedCNNelementsandHOGaspectsis fedtothree

Forallthe4CNNmodelsnotedabove,weusethemove entropyasthelossfunction.Thecross-entropylossfeature measures the subtle variations between classification results.Basedonthelossfunction,wecandiscoverthefinest parametersettingsbywayofthegradientdescentalgorithm.

For the aforementioned CNNs, we use each the Adam optimizerandtheAdadeltaoptimizertoseethedifferences. The Adam optimizer is considered as a aggregate of RMSprop and momentum. It computes man or woman adaptive getting to know prices for extraordinary parametersfromestimatesoffirstandsecondmomentsof thegradients.Thishastheimpactofmakingthealgorithm moreefficaciouslyattainconvergencegivenalotofdata.The Adadelta optimizer is a everyday scenario of RMSprop. It restricts the window of amassed past gradients to some fixedsizeasanalternativeofsummingupallpastsquared gradients(likeAdagrad),whichavoidsearlyquitofstudying inducedwiththeaidofgradientvanishing.

Fig.4. StructureofResNet50model.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072

To construct the SVM classifier, the radial basis kernel is usedforcharacteristicprojection,andthelibSVMlibrary[7] isused forimplementation.

The experiment with the ResNet50 model employs the pretrained weights of the model that was trained on ImageNet dataset. For the simple CNN and HOG+CNN models, the weights have been randomly initialized. For ResNet50, undeniable community of ResNet50, and the HOG+CNNfashionstheratioofdropoutlayerisallsetat0.5.

To get a greater correct description of the models, the datasetisbreakuprandomlyforthreetimes.Allthemodels areskilledwiththeshuffleddatasetof9,095teachimages and1,013test/validationpicturesfor40epochs.Theeffects showedunderaretheaverageofalltheexperiments.Dueto ourhardwarelimitation,theeasyCNNstructureiseducated witha batchsizeof32,and ResNet50, plainnetwork,and HOG+CNNfashionsarewith16

SupportVectorMachine:TheSVM-basedapproachachieves testaccuracyround47.25%theuseofthesametrainingand takealookatsetswithdifferentmodels.TheHOGfeatures mayadditionallynownot

Fig.5.Structureofhybridmodel.

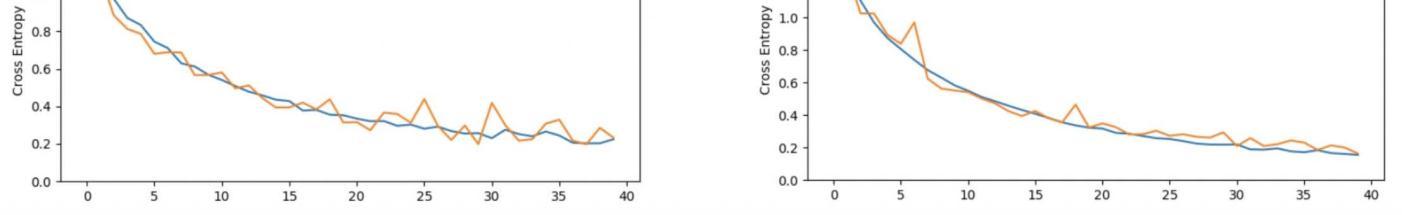

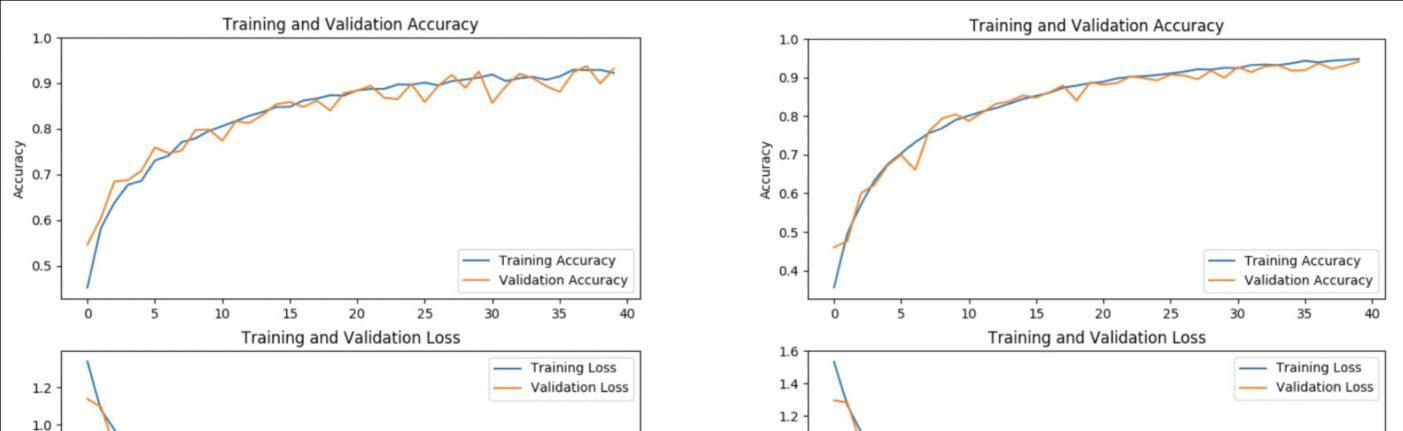

Fig:6

Leftcolumn:trainingbytheAdamoptimizer

Rightcolumn:trainingbytheadadeltaoptimizer

Fig.6indicatestheevolutionsoftraining/testaccuraciesand training/testlossesasthenumberofepochsincreases.The easy mannequin achieves a education accuracy over 94% and check accuracy over 93% the use of a 90/10

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

training/testing information split with the Adadelta optimizer.UsingtheoptimizersofAdamorAdadeltahasno apparenteffectsontheperformance,whichonlyreasonsa differenceround2.5%intheaccuracy.Butboththeaccuracy andlosscurvesfluctuategreaterinthelatterpartoftraining with Adam than with Adadelta. In addition, the training accuracyandlossconvergedfasteratthecommencingwith Adadelta.

TheconfusionmatrixinFig.7indicatesthattheeasyCNN architecture is successful with nearly all classes barring plastic.Thereisalargelikelihoodthatthemannequinmay additionallymistakeplasticrubbishwithglassandpaper,or mistakemetallicgarbagewithglass.

2. ResNet50: Table II indicates classification overall performance of the ResNet50 model. The ResNet50 mannequinachievesadescribethefeaturesveryprecisely. Only average classification overall performance can be obtained,giventhatsolelysixcategoriesaretobeclassified. Therefore, this technique can be taken as a baseline for similarlycomparison.

3.SimpleCNNArchitecture:TableIsuggestsclassification overall performanceofthe easyCNN architecture.Results obtainedprimarilybasedontwooptimizersarecompared.

Ascanbeseen,theusageoftheAdadeltaoptimizeryields barelybettercoachingandtestaccuracies

From the effects of this study we can see, the problem of garbage picture classification can be solved with deep learning strategies at a pretty high accuracy. The combinationofprecisefeatureswithCNNsorevendifferent transferringfashionsmaybeanefficientmethodtodothe classification.However,itisunrealistictogetaimageofan object on the clean background each time when people classify the garbage. Due to the giant variety of garbage classesinactuallife,themodelneverthelesswantsalarger andgreaterpreciselycategorizedstatisticssupplytakenin greaterintricatesituations.

[1] S. Kaza, L. Yao, P. Bhada-Tata, and F. Van Woerden, Whata Waste2.0: AGlobal Snapshot ofSolid Waste Managementto2050.TheWorldBank,2018.

[2] A.Krizhevsky,I.Sutskever,andG.E.Hinton,“ImageNet classification with deep convolutional neural networks,”in Proceedings ofthe25thInternational ConferenceonNeuralInformationProcessingSystems -Volume1,LakeTahoe,Nevada,pp.1097–1105,Dec. 2012, [Online]. Available: https://dl.acm.org/doi/10.5555/2999134.2999257 [Accessed:Sep.23,2019].

[3] Y. LeCun, K. Kavukcuoglu, and C. Farabet, “Convolutionalnetworksandapplicationsinvision,”in Proceedingsof2010IEEEInternationalSymposiumon CircuitsandSystems,Paris,France,pp.253–256,May 2010. doi:10.1109/ISCAS.2010.5537907.

[4] N. Dalal and B. Triggs, “Histograms of Oriented Gradients for Human Detection,” in 2005 IEEE ComputerSocietyConferenceonComputerVisionand Pattern Recognition (CVPR’05), San Diego, CA, USA, vol.1,pp. 886–893,2005.doi:10.1109/CVPR.2005. 177.

[5] K. Simonyan and A. Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition,”inarXiv:1409.1556[cs],SanDiego,CA, USA, pp. 1–14, May 2015, [Online]. Available: http://arxiv.org/abs/1409.1556 [Accessed: Sep. 27, 2019].

[6] K. He, X. Zhang, S. Ren, and J. Sun, “Deep Residual Learning for Image Recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition(CVPR),LasVegas,NV,USA,pp.770–778, Jun.2016.doi:10.1109/CVPR.2016.90.

[7] C.-C. Chang and C.-J. Lin, “LIBSVM: A library for support vector machines,” ACM Trans. Intell. Syst. Technol., vol. 2, no. 3, pp. 1– 27, Apr. 2011. doi: 10.1145/1961189.1961199.

[8] M. Yang and G. Thung, “Classification of Trash for Recyclability Status,” Stanford University, CS229, 2016. [Online]. Available: http://cs229.stanford.edu/proj2016/report/ThungYa ng-Classificat ionOfTrashForRecyclabilityStatusreport.pdf[Accessed:Sep.23,2019].

[9] C. Bircanoglu, M. Atay, F. Beser, O. Genc, and M. A. Kizrak, “RecycleNet: Intelligent Waste Sorting Using Deep Neural Networks,” in 2018 Innovations in Intelligent Systems and Applications (INISTA), Thessaloniki, pp. 1–7, Jul. 2018. doi: 10.1109/INISTA.2018.8466276.

[10] H. Khan, “Transfer learning using mobilenet,” 2019. https: //www.kaggle.com/hamzakhan/transferlearning-usingmobilenet[AccessedSep.23,2019].

[11] P. Gupta, “Using CNN [ Test Accuracy- 84%],” 2019. https: //kaggle.com/pranavmicro7/using-cnn-testaccuracy-84[accessedSep.23,2019].

[12] N.S.KeskarandR.Socher,“ImprovingGeneralization Performance by Switching from Adam to SGD,” ArXiv171207628 Cs Math, Dec. 2017, [Online]. Available: http: //arxiv.org/abs/1712.07628 [Accessed:Jun.15,2020].

Volume: 09 Issue: 08 | Aug 2022 www.irjet.net p-ISSN: 2395-0072 © 2022, IRJET | Impact Factor value: 7.529 | ISO 9001:2008 Certified Journal | Page1596