Exploring The Potential of Generative Adversarial Network: A Comparative Study Of GAN

Harsh Shah 1 , Kaustubh Kabra2 , Onasvee Banarse3 , Akash Mete41,2,3,4 Student, Department of Computer Engineering, All India Shri Shivaji Memorial Society’s Institute of Information Technology, Pune. India. ***

Abstract - GenerativeAdversarialNetworks(GANs),aclass of deep learning models that creates new data samples that resemble the original data, are in-depth examined in this research study. The article covers many GAN subtypes, including vanilla GANs, MedGANs, StyleGANs, and CycleGANs, andanalysestheirdesignsandtrainingapproaches.Thestudy examinesthemanyGANapplications,includingtext-to-image synthesis,dataaugmentation,andpictureandvideocreation. There is also discussion of the difficulties each type of GAN method faces, including mode collapse, instability, and vanishing gradients.

In-depth analysis is also given to the technical features of GANs, including the generator and discriminator networks, training loss functions, and regularization techniques. The research study examines current advancements in GANs, including self-attention, adversarial autoencoders, and attention mechanisms. Additionally, the paperaddressestheethicalissuesrelatedtoGANs,suchasthe possible exploitation of data created by GANs and bias in training data.

The future potential and developments of GANs are discussed in the study, including its use to unsupervised representation learning and the creation of novel GAN architectures. The study emphasizes the need for more study toovercomeGANs'problems andbroadentheirapplicationto other fields. GANs are a fast-developing subject of study with enormous potential in many areas.

Key Words: Generative Adversarial Networks, GAN architectures,GANapplications,ComputerVision,Anomaly Detection

1. INTRODUCTION

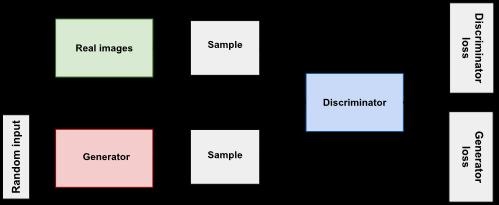

Generative Adversarial Networks (GANs) have gainedsignificantattentioninrecentyearsfortheirabilityto generate realistic data in various fields such as computer vision, natural language processing, and healthcare. GANs arecomposedoftwoneuralnetworks:ageneratornetwork that creates fake data and a discriminator network that distinguishesbetweenthegeneratedfakedataandrealdata. The two networks compete against each other, with the generatornetworkattemptingtoproducedatathatcanfool thediscriminatornetwork,andthediscriminatornetwork

improving its ability to distinguish between fake and real data. The discriminator network learns to discriminate between the fake data and the actual data, while the generator network learnsto produceartificial data that is comparabletothetrainingdata.Bothnetworksdevelopover timebecauseofthiscompetitivedynamic,producinghighqualitydatathatisnearlyindistinguishablefromrealdata.

Inthisreviewpaper,wepresentacomprehensive analysis of GANs and their various types, including VanillaGAN,StyleGAN,CycleGAN,andMedGAN.Webeginby providinganoverviewoftheGANarchitectureandworking principle, followed by a literature survey of various GAN models used in different fields. For each GAN type, we discusstheirarchitecture,workingprinciple,applications, relatedwork,challenges,andfuturedirections.Additionally, we provide a comparative analysis of these GAN models basedontheirperformance,advantages,andlimitations.

Furthermore, we discuss the current state of researchinthefieldofGANsandhighlightsomeoftherecent developments in GANs, including attention mechanisms, progressive growing, and disentangled representations. Finally,weconcludethereviewpaperwithfutureresearch directions in GANs, highlighting potential areas for improvementandthechallengesthatneedtobeaddressed for the effective implementation of GANs in real-world applications.

GANsworkontheprincipleofadversarialtraining, in which two neural networks, the generator and the discriminator,competeinatwo-playerminimaxgame.The generator network takes a random input and generates synthetic data, while the discriminator network tries to distinguish between the synthetic data and real data. The twonetworksaretrainedsimultaneously,withthegenerator attempting to generate synthetic data that can fool the discriminator into believing that it is real, while the discriminator attempts to accurately classify the real and syntheticdata.Throughthisadversarialtrainingprocess,the generator network learns to generate synthetic data that closely resembles the real data. This approach has shown remarkable success in generating high-quality synthetic imagesandhassincebeenextendedtoothertypesofdata, suchasaudio,video,andtext.

ThepotentialforGANstorevolutionizeavarietyof fields is immense, and the current pace of research in the area indicates that we have only scratched the surface of whatthesemodelscanachieve.Someofthemajorchallenges in GAN research, such as mode collapse and instability during training, are being addressed by novel training methods and architectures, but there is still much to be explored in terms of optimization, scalability, and applicability to real-world problems. One exciting area of research is the exploration of GANs in combination with other deep learning techniques such as reinforcement learning and attention mechanisms. As these models continue to evolve, they have the potential to unlock new levels of creativity and enable breakthroughs in fields rangingfromhealthcaretoart.

2. TYPES OF GAN’S

2.1 VanillaGAN

TheinitialarchitecturesuggestedbyIanGoodfellowand his colleagues in 2014, which popularized the idea of Generative Adversarial Networks (GANs), is known as VanillaGAN or standard GAN. A min-max game is used to trainthe twoneural networks thatmake upVanillaGAN,a generatorandadiscriminator.

beenusedtobuildnovelversionsofpre-existingdatasetsfor training machine learning models as well as realistic photographsofobjects,settings,andfaces.Ithasalsobeen used to identify photos that do not fit inside the predicted distributionfordetectinganomaliesinphotographs.

Traininginstability,whichcanresultinthegeneratorand discriminatorbecomingtrappedinasuboptimalstate,isone of VanillaGAN key problems. Mode collapse, when the generator only produces a small number of pictures, and hyperparameter tuning, where determining the ideal combinationofhyperparameterscanbetime-consumingand challenging,arefurtherdifficulties.

Several studies have explored the effectiveness of VanillaGANingeneratingrealisticimages.Inproposed[1]the original architecture of VanillaGAN, which consists of a generator and a discriminator that are trained in an adversarial manner. The generator takes a random noise vector as input and generates fake images, while the discriminatortries todistinguish between the fake images andtherealones.Astudy[2]improvedtheperformanceof VanillaGANbyincreasingthedepthofthegeneratornetwork. Theyshowedthattheirmodelwasabletogenerateimages thatwerecomparableinqualitytotherealones.In[3]have proposedanapproachtoimprovethestabilityofVanillaGAN trainingbyreducingthevanishinggradientproblem.They achieved this by using spectral normalization in the discriminatornetwork.Theproposedmethodimprovedthe quality of the generated images and the stability of the trainingprocess.Thesestudiesdemonstratethepotentialof VanillaGANingeneratinghigh-qualityimageswithavariety ofapplications.

FuturepossibilitiesforVanillaGANincludecreatingnovel picture generation structures and methods, enhancing trainingstability,anddealingwithissueslikemodecollapse.

Adiscriminatornetworkandageneratorarebothusedby VanillaGAN.Thegeneratorcreatessyntheticimagesusinga random noise vector as input, which are then fed into the discriminatoralongsideactualimagesfromadataset.Itisthe discriminator'sresponsibilitytotellactualphotosfromfake ones.Thegeneratorhasbeentaughthowtoproducevisuals thatwill makethediscriminatorbelievetheyarereal.The generator and discriminator networks are updated alternately during the training phase. The discriminator is updated to appropriately distinguish between real and artificialimages,andthegeneratorisupdatedtoreducethe lossbetweentheoutputofthesyntheticimagesandthatof thediscriminator.

Anomaly detection, data enrichment, and image productionarejustafewoftheusesforVanillaGAN.Ithas

A versatile method for creating realistic images, VanillaGANhasseveralusesincomputervisionandmachine learning.Althoughithassomeissueswithmodecollapseand training stability, VanillaGAN is still a well-liked and successful GAN model. It has the potential to turn into an evenmoreadaptableandpracticaltoolinthefieldofartificial intelligencewithcontinuousresearchanddevelopment.

2.2 StyleGAN

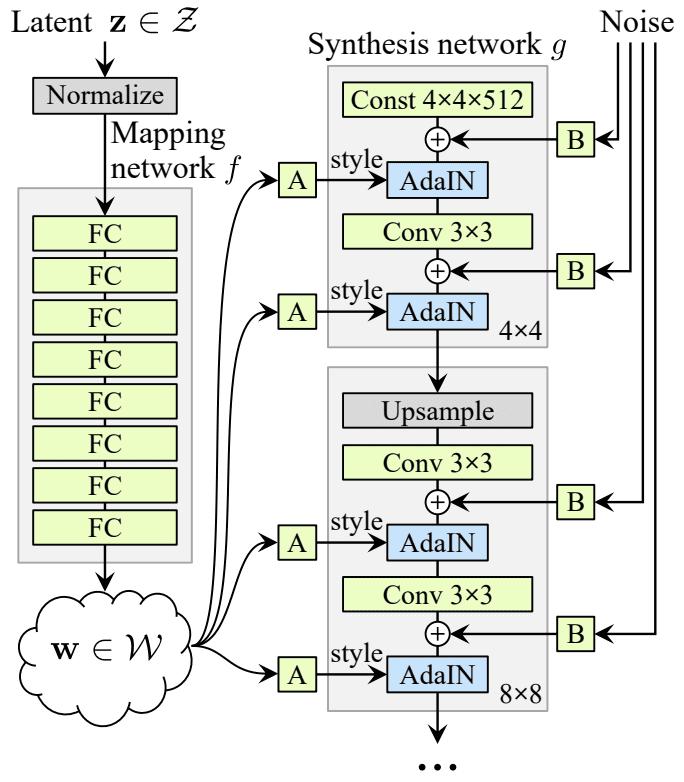

Researchers from Nvidia released StyleGAN, a cuttingedgegenerativemodel,in2019[4].Itisanexpansionofthe GANframeworkthatenablesthecreationofdifferent,highquality images with more control over their properties. StyleGAN, in contrast to conventional GANs, introduces a mappingnetworkthatchangestheinputnoisevectorintoa middle latent space. The final image is created by feeding thislatentspaceintoasynthesisnetwork.Researcherscan regulatethechangesintheimagesthataregeneratedand

obtain high-quality results with more information and variabilitybymanipulatingthemappingnetwork.

StyleGAN and VanillaGAN share the same fundamental GANdesign,howevertherearesomesignificantvariances.It employs a progressive growth methodology, whereby the generated images' resolutioncontinuallyrises throughout training. Additionally, a mapping network is introduced, whichlearnstomaparandomnoisevectortoalatentspace thatregulatesmanyfeaturesoftheresultingimage.

variation,leadingtoevenmorerealisticimages.Overall,the StyleGAN family of models has demonstrated impressive performance in generating high-quality images and providinguserswithcontroloverimagefeatures,makingita promising avenue for future research in generative modelling.

Numerous applications have made use of StyleGAN, includingthecreationofphotorealisticphotos,3Dobjects, and realistic human faces. It has received a great deal of praise for its capacity to generate high-quality and varied images,andthecomputervisionfieldnowfrequentlyusesit asthebasisforgenerativemodels.

Theamountofcomputingpowerrequiredfortrainingand producing high-quality images is one of StyleGAN major problems.OnpowerfulGPUs,trainingStyleGANmighttake daysorweeks,andproducinghugeimagescanbelaborious and memory intensive. The difficulty of altering certain componentsofthecreatedimagewithoutchangingothersis anotherdifficulty.

Thedevelopmentofnewmethodsformanagingpicture attributesandforutilizingGANsininteractivedesigntools are among the prospects for StyleGAN. The training procedurecanalsobemademoreeffectiveandscalable.

Fig-2: Architecture of StyleGAN

InStyleGAN,thegeneratornetworkemploysastyle-based methodology in which the latent space is divided into various components that regulate various aspects of the image.Thefeaturesofthecreatedimage,suchasthefacial expression,hairstyle,andclothing,canthenbecontrolledin a fine-grained manner. The training process involves minimizingamulti-scaleadversariallossthatencouragesthe generator to produce images that are both realistic and diverse.

StyleGAN is a generative model that has received significant attention in recent years due to its ability to generatehigh-qualityimageswithimpressiveresolutionand realism.OneofthesignificantadvantagesofStyleGANisits abilitytodisentangletheimagefeaturesandcontrolthem independently.Inthepaper[4]proposedStyleGAN,which usedanewmappingnetworkandstyle-basedgeneratorto achievestate-of-the-artresultsonseveralimagesynthesis benchmarks. In the paper [5] introduces a progressive training technique to improve the training stability and reduce memory consumption, enabling the generation of images with higher resolution. This paper [6] proposed StyleGAN2-ADA, which introduced adaptive discriminator augmentation and improved the generator’s stochastic

With its capacity to produce high-quality images while giving users fine-grained control over image attributes, StyleGAN marks a significant advancement in the field of generative image modelling. Even though there are still issues to be solved, such as computational efficiency and feature control, StyleGAN has already made a substantial contributiontocomputervisionandentertainmentandhasa lotofpotentialforfurtheruse.

2.3 CycleGAN

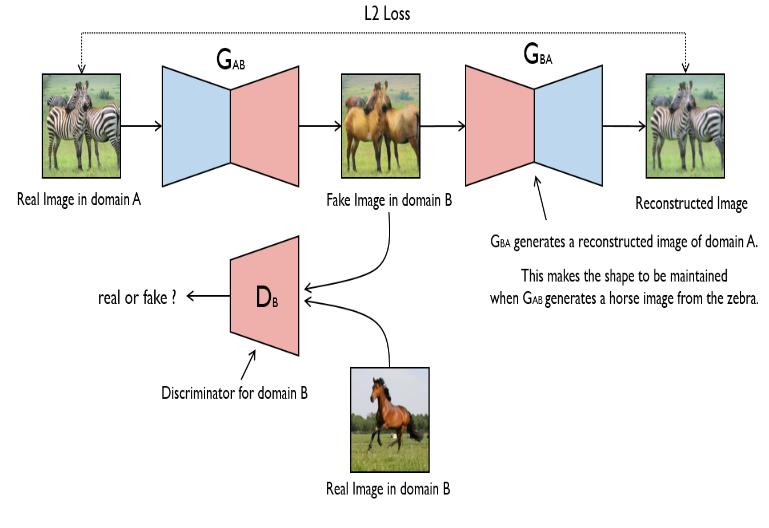

CycleGAN is a kind of generative model developed for image-to-image translation problems across two domains withoutthenecessityforpairedtrainingdata,asdescribed in a 2017 publication by Jun-Yan Zhu and his colleagues. CycleGAN, in contrast to conventional GANs, uses a cycle consistencylosstolearnamappingbetweentwounpaired picturedomains,whereastypicalGANsneedpairedtraining data for supervised learning. Two generators and two discriminators make up the model; the generators map images from one domain to the other, while the discriminatorstellactualimagesfromproducedones.

CycleGAN employs an adversarial network with two generatorsandtwodiscriminatorsthatiscycle-consistent. Whilethediscriminatorslearntotellthedifferencebetween created and genuine images, the generators learn to map imagesfromonedomaintoanother.Cycleconsistencyloss, whichassuresthatthetranslatedimagecanbemappedback totheoriginalimagedomainwithoutlosinginformation,is thecorenoveltyofCycleGAN.ThislosshelpsCycleGANlearn

to do image-to-image translation tasks without active supervision and gets over the absence of paired training data.Inordertomotivatethegeneratorstocreateaccurate and cycle-consistent translations, the training procedure entails minimizing both adversarial loss and cycle consistencyloss.

CycleGANisanimportantvariantoftheGANarchitecture thatisusedforimage-to-imagetranslationtasks.Oneofthe earliest applications of CycleGAN was in style transfer, where an image is transformed into the style of another image. In the context, [7] proposed the original CycleGAN architecturethatiscapableoftranslatingimagesfromone domain to another, without the need for paired data. The authorsdemonstrateditseffectivenessinapplicationssuch astranslatingphotosintothestyleoffamouspaintings.Ina follow-upwork[8],proposedavariantofCycleGANcalled DualGAN, which further improved image translation performance by incorporating a dual-learning scheme. Another application of CycleGAN is in the field of medical imageanalysis.Theauthorspropose[9]anovelvariantof CycleGANcalledPairedCycleGANforapplyingandremoving makeup in images. The proposed method leverages the asymmetricnatureofmakeupapplicationtoachievebetter resultsthantraditionalCycleGAN,whichassumessymmetry between input and output domains. The authors train the network on a paired dataset of images with and without makeup and show that the proposed PairedCycleGAN outperformsstate-of-the-artmakeuptransfermethods.

CycleGANhasbeenusedforavarietyoftasks,including convertingpicturesofhorsesintozebras,turningpicturesof thedaytimeintopicturesofthenight,andalteringapicture from summer to winter. Its capability to carry out highquality image-to-image translation without the need for pairedtrainingdatahasreceivedalotofattention.

Thenecessityforalotoftrainingdatainordertoproduce high-quality image translations is one of CycleGAN's key problems.Thiscanbeparticularlychallenginginfieldswith little access to paired training data. Controlling visual aspectsisanotherhurdlethatarisesduringtranslation.

Inordertoenhanceimagequalityandofferbettercontrol over image features, additional loss functions and architectures are being developed for CycleGAN in the future. CycleGAN could also be used in areas other than image-to-imagetranslation,likenaturallanguageprocessing.

CycleGAN represents an important development in the field of generative image modeling, with its ability to perform image-to-image translation tasks without paired trainingdata.Althoughtherearestillissuestoberesolved, CycleGAN has already made a big difference in computer visionandgraphicsandhasalotofpotentialinthefuture.

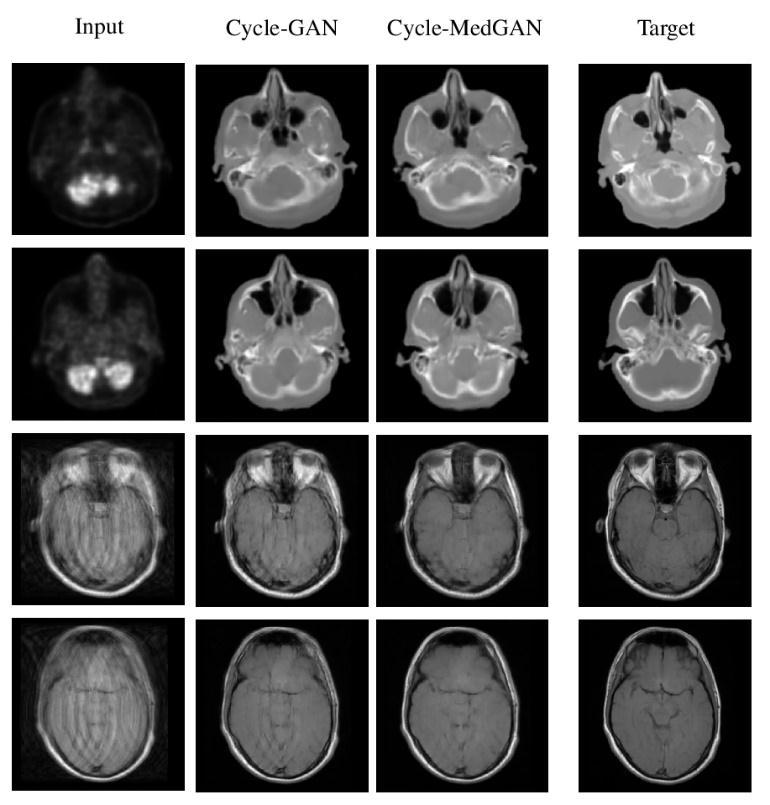

2.4 MedGAN

Agenerativeadversarialnetwork(GAN)calledMedGAN wascreatedexpresslyforthepurposeofproducingartificial medical data. It was suggested in 2018 by Choi et al. as a strategytoprovidevariedandaccuratemedicaldataforuse in research that protects patient privacy. A generator networkandadiscriminatornetworkarebothincludedin the modified GAN architecture that is used by MedGAN. Whilethediscriminatornetworkistrainedtodifferentiate between actual and fake medical data, the generator network uses random noise as input to make synthetic medical data. The discriminator is trained to accurately identifytheinputasrealorsynthetic,whilethegeneratoris educated to provide data that deceives it. The training procedure is carried out repeatedly until the generator generatessyntheticdatathatisidenticaltoactualmedical data.

Incorporating domain-specific knowledge and assuring privacy preservation are only two more aspects that MedGAN offers to handle the difficulties associated with producingmedicaldata.Thegeneratornetwork'sgoalisto generate data that faithfully replicates the distribution of actualmedicaldatabylearningtheunderlyingstructureof the underlying medical data. Additionally, MedGAN uses methods to make sure that the data generated does not containprivatepatientdata.

MedGAN is a type of generative adversarial network designedspecificallyformedicalimagegeneration.Several studies have explored the use of MedGAN for various applications,includingmagneticresonanceimaging(MRI) synthesis,computedtomography(CT)imagegeneration,and retinal image synthesis. In this paper [10], provide an overviewofdeeplearningtechniquesformedicaldiagnosis. They discuss the applications of deep learning in medical diagnosis,includingimageclassification,segmentation,and detection,aswellasthechallengesassociatedwithmedical datasuchassmalldatasetsandclassimbalance.Thepaper also covers recent developments in deep learning for medical diagnosis and provides insights into future directions for research in thisarea.Similarly,thestudy In thispaper,[11]proposedMedGAN,aGAN-basedframework for medical image translation, which aims to address the challenge of insufficient and unbalanced training data in medical imaging. They showed that MedGAN could successfully translate medical images between different modalities,suchasCTandMR,whilepreservingimportant diagnostic features. MedGAN has the potential to aid in diagnosis, treatment planning, and medical research. The paper[12],proposesanovelframeworkcalledMulti-Scale MedGAN,whichincorporatesmultiplelevelsofinformation intheimagegenerationprocess,resultinginmorerealistic and accurate images. Overall, MedGAN has shown great promiseinthefieldofmedicalimagesynthesisandhasthe

potential to revolutionize the way medical images are generatedandusedfordiagnosisandtreatment.

MedGAN can be used to produce a range of accurate medical data for use in research that protects patient privacy. It is possible to create fictitious electronic health records (EHRs), medical photographs, and other kinds of medical data using this method. Machine learning models andotherapplicationsthatrequiresensitiveorlimitedreal medical data can be trained using fictitious medical data producedbyMedGAN.Makingsurethatthegenerateddata appropriately matches the distribution of actual medical dataisoneofthechallengesofusingMedGAN.Forthedata to be valuable for applications further down the line, rigorousexaminationandvalidationofthegenerateddatais necessary.

Future directions for MedGAN and other GANs for the creationofmedicaldataincludeinvestigatingfreshmethods forcombiningdomain-specificexpertiseandenhancingthe models'privacypreservationfeatures.Standardbenchmarks and evaluation measures are also required in order to compare the effectiveness of various GAN models for the creationofmedicaldata.

Apromisingmethodforproducingdiverse,realistic,and privacy-preservingsyntheticmedicaldataisMedGAN.Itcan helpresearcherswhoareconcernedaboutprivacynavigate the difficulties of dealing with limited or delicate medical data.MedGAN and otherGANsforthecreation ofmedical data have the potential to revolutionize the fields of healthcareandmedicalresearchwithadditionalstudyand development.

3. APPLICATION 3.1 VanillaGAN

The act of synthesizing a target facial expression on a source face image while maintaining other source image elementslikeidentity,position,andlightingisknownasfacial expressiontransfer.Bydiscoveringtheunderlyingpatterns in a collection of facial expressions, VanillaGAN may be utilizedtocreatebrand-newexpressions.

A dataset of source and destination facial expressions must initially be gathered in order to produce facial expression transfer using VanillaGAN. The target facial expressionsaretheintendedexpressionstobetransmitted, whereas the source facial expressions are often neutral or another expression. The mapping from the source to the targetfacialexpressionsisthenlearnedusingthedatasetto trainaVanillaGANmodel.

The discriminator network learns to discriminate betweenactualtargetexpressionphotosandcreatedtarget expression images while the generator network learns to generate images of the target expression from the source

imageduringtraining.Oncethemodelhasbeentrained,any inputfaceimagemaybeutilizedtocreatenewimageswith theappropriatefacialexpression.

Thereareseveralusesforfacialexpressiontransmission intheentertainment,gaming,andvirtualrealityindustries.In videogames,movies,andTVprogrammers,itmaybeutilized todevelopcharacterswhoarerealisticandvulnerable.Itmay alsobeusedinmedicalsettingstoidentifyandtreatmental healthissuesincludingdepressionandanxiety.

3.2 StyleGAN

DataGathering:Thefirststepentailsgatheringasizable collectionof3Dmodelsofpeople'sfaces.Severaltechniques, including 3D scanning, photogrammetry, and manual modelling,couldbeusedtoaccomplishthis.

Data Pre-processing: In order to make the 3D models compatiblewithStyleGAN,thedatawouldnextneedtobe pre-processed.Thiscanentailexporting themodelsas2D picturefilesinatexturemaporUVmapformat.

Training StyleGAN: After pre-processing the data, StyleGANmaybetrainedonthedatasettoproducefresh2D portraits of people's faces. While learning to discriminate betweenactualandproducedimages,thediscriminatoralso learnstomaprandomnoisetorealistichumanfaces.

StyleMixing:OneofStyleGAN'sdistinctivefeaturesisits capacity for style mixing, which enables granular control over the appearance of the images that are produced. Avatars with certain qualities, like a particular hairdo or attitude,mightbecreatedwiththis.

Projectingto3D:Toconstruct3Davatars,theresulting2D imagescanthenbeprojectedbackinto3Dspace.Different techniques,includingtexturemappingormeshdeformation, couldbeusedtoachievethis.

Finalstep:optimisationandrefinement.Thecreated3D avatars might be made more realistic and tailored to the needsoftheintended application,suchasvideogames or virtualrealityexperiences,byoptimisingandrefiningthem.

Overall,StyleGANisapromisingtechnologyfordifferent applicationsintheentertainmentandgamingsectorssinceit hasthepotentialtobeastrongtoolforcreatingrealistic3D avatarswithfine-grainedcontrolovertheirlook.

3.3 CycleGAN

Theappearanceofanobjectinanimageischangedfrom onedomaintoanotherwhenanobjectistransfiguredusing CycleGAN.Forinstance,wecoulduseCycleGANtochangea horse'simageintoazebra'simageorawinterylandscape's imageintoasummeryone.ACycleGANcanbetrainedona

setofpairedimages,oneimagefromthesourcedomainand theotherfromthetargetdomain,todothis.

3.4 MedGAN

A generative adversarial network called MedGAN was created exclusively for the purpose of producing artificial medicalimages.Inordertotrainmachinelearningmodels forusageinthemedicalfield,itismadetoproducerealistic images. Here's how it might be used to create artificial medicalimages:

Data Collection: To use CycleGAN for object transformation,asetofpairedimagesmustbegatheredfirst. Forinstance,wecouldgatheracollectionofphotosshowing horsesandzebras,witheachphotoofahorsebeinglinked with a photo of a zebra having the exact identical lighting andpose.

TrainingCycleGAN:TheCycleGANisthentrainedusingan adversarial loss function on the matched images. Two generatingnetworksandtwodiscriminatornetworksmake up CycleGAN. While the other generator network uses an imagefromthetargetdomainasinputandcreatesanimage inthesource domain, the firstgenerator network uses an image from the source domain as input. The two discriminator networks give feedback to the generator networks and assess the realistic quality of the created images.

Testing: New images from the source domain can be transformedintothetargetdomainusingCycleGANonceit hasbeentrained.Thegeneratornetworkthatturnshorses intozebras,forinstance,maybeusedtoprocessanimageof ahorse.Whileretainingkeyelementsoftheoriginalhorse image, like the stance, lighting, and general structure, the finalimageshouldhaveazebra-likeappearance.

Numerous potential uses for object transformation utilisingCycleGANincludeonlinehomedecoration,virtual garmentfittings,andproductpromotion.Withouttheusage of real prototypes, a clothes company may, for instance, utiliseCycleGANtocreatephotosofapersonsportingashirt in several colours. A furniture store may similarly use CycleGAN to show clients how a space would look with variousfurnitureplacementsoraccents.

Data Gathering: The first stage would be to gather a sizable dataset of medical pictures, such as those from Xrays, ultrasound, MRI, or CT scans. With the use of this dataset,MedGANwillbetrainedtocreateartificialmedical images.

DataPre-processing:Inorderforthemedicalimagestobe compatible with MedGAN, they would need to be preprocessed. This could need a number of procedures, includingcropping,resizing,andimagenormalisation.

Afterpre-processingthedata,MedGANcanbetrainedon the set of data to produce fresh, artificial medical images. Whilelearningtodiscriminatebetweenactualandproduced images,thediscriminatoralsolearnstomaprandomnoiseto realisticmedicalimages.

AnomalyDetection:Machinelearningmodelsforanomaly detection can be trained using synthetic medical pictures createdusingMedGANthatcontainparticularanomaliesor abnormalities. This might be helpful for identifying uncommonorchallenging-to-diagnoseillnesses.

ImageSegmentation:Machinelearningmodelsforimage segmentationcanbetrainedusingsyntheticmedicalimages of particular anatomical structures that are produced by MedGAN. This might be helpful for automatically locating and classifying particular areas of interest in medical imaging,liketumoursororgans.

Evaluation and Validation: Synthetic medical images created by MedGAN can be assessed and verified to make sure they accurately and realistically resemble genuine medical images. This could use a number of techniques, includingasevaluatingtheeffectivenessofmachinelearning models that were trained on the fabricated images or contrastingthecreatedimageswithactualmedicalimages.

Overall,MedGANhasthepotentialtobeaneffectivetool for creating artificial medical images that can be used to trainmachinelearningmodelsforanomalydetection,image segmentation,andmedicaldiagnosis.MedGANcouldassist inenhancingtheprecisionandefficacyofmachinelearning models for a variety of medical applications by producing realisticandvariedmedicalimages.

4. CONCLUSIONS

The study paper's conclusion emphasises how GenerativeAdversarialNetworks(GANs)haveatremendous potential for creating fresh data samples that match the originaldata.WetalkedaboutseveralkindsofGANs,their architectures,trainingprocedures,applications,difficulties theyencounter,andethicalissuesrelatedtodataproduced byGANs.ThefuturepotentialofGANs,includingtheirusein unsupervisedrepresentationlearningandthedevelopment of additional GAN architectures, was also covered in the article.

New opportunities have emerged for a number of industries, including entertainment, the arts, and medical, thankstoadvancesinGAN research.However,inorder to increase their application to different sectors, further researchanddevelopmentareneededtoaddressissuessuch modecollapse,instability,andbiasintrainingdata.

In the upcoming years, new ground-breaking applications of GANs are predicted by the most recent research in the field, which includes work on adversarial autoencodersandattentionmechanisms.Itisfascinatingto thinkaboutthehugeadvancesinGANresearch,whichwill increasethenumberofapplicationsinavarietyofindustries andcompletelyalterhowwecreatefreshdatasamples.

REFERENCES

[1] Goodfellow,Ian&Pouget-Abadie,Jean&Mirza,Mehdi& Xu, Bing & Warde-Farley, David & Ozair, Sherjil & Courville, Aaron & Bengio, Y.. (2014). “Generative AdversarialNetworks.AdvancesinNeuralInformation ProcessingSystems”.3.10.1145/3422622.

[2] Radford A, Metz L, Chintala S. 2015 Nov 19. “Unsupervised Representation Learning With Deep ConvolutionalGenerativeAdversarialNetworks”.arXiv preprintarXiv:1511.06434.

[3] Salimans T, Goodfellow I, Zaremba W, Cheung V, RadfordA,ChenX.“ImprovedTechniquesForTraining GAN’S ”. Advances in neural information processing systems.2016.

[4] Karras, Tero & Laine, Samuli & Aila, Timo. (2019). “A Style-Based Generator Architecture For Generative Adversarial Networks”. 4396-4405. 10.1109/CVPR.2019.00453.

[5] Karras,Tero&Laine,Samuli&Aittala,Miika&Hellsten, Janne & Lehtinen, Jaakko & Aila, Timo. (2020). “Analyzing And Improving The Image Quality Of Stylegan”. 8107-8116. 10.1109/CVPR42600.2020.00813.

[6] SitzmannV,MartelJ,BergmanA,LindellD,WetzsteinG. “Implicit Neural Representations With Periodic ActivationFunctions”.AdvancesinNeuralInformation ProcessingSystems.2020;33:7462-73.

[7] J. -Y. Zhu, T. Park, P. Isola and A. A. Efros, "Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks," 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017,pp.2242-2251,doi:10.1109/ICCV.2017.244.

[8] Hoffman J, Tzeng E, Park T, Zhu JY, Isola P, Saenko K, EfrosA,DarrellT.Cycada:“Cycle-ConsistentAdversarial Domain Adaptation”. In International conference on machinelearning2018Jul3(pp.1989-1998).Pmlr.

[9] Huiwen Chang, Jingwan Lu, Fisher Yu, and Adam Finkelstein. "PairedCycleGAN: Asymmetric Style Transfer for Applying and Removing Makeup." CVPR 2018,June2018.

[10] Hafiz,AbdulMueed&Bhat,Ghulam.(2019).“ASurvey of Deep Learning Techniques for Medical Diagnosis” . 10.1007/978-981-13-7166-0_16.

[11] Armanious K, Jiang C, Fischer M, Küstner T, Hepp T, NikolaouK,GatidisS,YangB.“MedGAN:Medicalimage translationusingGANs”.ComputerizedMedicalImaging AndGraphics.2020Jan1;79:101684.

Gu, Y., Zeng, Z., Chen, H. et al. “MedSRGAN: Medical ImagesSuper-ResolutionUsingGenerativeAdversarial Networks”. Multimed Tools Appl 79, 21815–21840 (2020).https://doi.org/10.1007/s11042-020-08980-w