International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

Samay Pandey, Dr.Nita Patil, Nishal Poojary, Aarya Walve

1HOD, Dept. of Computer Engineering, K.C. College of Engineering and Management Studies and Research, Maharashtra, India

2Student, Dept. of Computer Engineering, K.C. College of Engineering and Management Studies and Research, Maharashtra, India *** -

Abstract -

The Emotion Predictor Application is a Pythonbased system designed to predict human emotions from visual inputs such as images, videos, and live webcam

streams. The project integrates a custom web scraper to automatically gather emotion-specific images from public sources, building a dedicated dataset for model training. Utilizing Convolutional Neural Networks (CNNs), the model classifies emotions like happiness, sadness, anger, fear, surprise, and neutrality accurately. The system’s architecture emphasizes real-time performance and userfriendly design, making it accessible for real-world applications such as mental health support, educational tools, and user experience enhancement. The project highlights the potential of AI to bridge the gap between humanemotionalexpression and machine understanding

Key Words: Emotion Detection, Deep Learning, CNN, Image Processing, Real-time Prediction, Machine Learning

In today’s digital world, emotions play a vital role in communication and decision-making, yet most systems cannot understand human feelings. The Emotion Detector Application addresses this gap by using deep learning to predict emotions from facial expressions in images,videos,andlivewebcamfeeds[1][4].

The system uses a Convolutional Neural Network (CNN) trainedonacustomdatasetbuiltthroughautomatedweb scraping. This approach ensures better handling of realworldvariationslikelighting,angles,andfacialdiversity.

Designed for speed and simplicity, the application enables real-time emotion prediction while maintaining strongdataprivacy.Itspotentialusecasesincludemental health support, online learning, customer service, and user experience research. The project highlights how AI can help machines understand emotions, making digital interactionsmorehuman-like.

As human beings, emotions are a fundamental part of everyday life. They influence actions, relationships, and

decisions, yet most technological systems can still not

interprettheseemotionalcues.Althoughhumansnaturally detectemotionsthroughfacialexpressions,bodylanguage, and tone of voice, machines have historically lacked this capability.

ThemotivationbehindtheEmotionDetectorApplicationis to enhance digital systems by introducing emotional awareness, enabling them to interpret and respond to human emotions intelligently. This can improve user experiences across various sectors such as mental health, online learning, customer service, and entertainment, whereemotionalunderstandingisessentialformeaningful interaction.

Advancements in machine learning, particularly deep learning,alongwithincreasingcomputationalpower,have made it possible to perform real-time emotion detection with improved accuracy[1][2]. With this project, the team aims to harness these technologies to develop an applicationthatmakes human-computerinteractionmore empathetic,intuitive,anddynamic.

In the age of digital communication, understanding emotional context has become both a challenge and a necessity. Despite significant progress in artificial intelligence and computer vision, most systems are still limitedtodata-drivendecision-makingwithoutaccounting for the user’s emotional state. This lack of emotional intelligence in machines leads to impersonal interactions thatfailtoadapttousers'moodsandintentions.

The core problem addressed by the Emotion Detector Application is the gap between human emotional expression and machine interpretation. Existing systems often struggle to classify emotions accurately, especially under varying conditions like changes in lighting, facial angles,partialocclusion,orculturaldiversity.

To resolve this issue, the project proposes an AI-based emotion detection system that can process facial expressions from images, videos, and live camera feeds,

delivering reliable predictions in real-time. The application uses deep learning techniques to classify emotions while prioritizing data privacy and ethical handlingofuserinputs.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

Aim:

The primary aim of this project is to design and develop

anintelligentEmotionDetectionApplicationthatutilizes machine learning and computer vision techniques to accurately predict and classify human emotions from visual data such as static images, recorded videos, and real-timecamerafeeds.

Objectives:

● ToBuildaCustomEmotionDataset:

Develop a dedicated image dataset using a web scraping tool for collecting emotion-specific images from the internettoenhancedatadiversityandmodelrobustness.

● ToTrainaDeepLearningModel:

Implement and train a Convolutional Neural Network (CNN) for multi-class emotion classification, capable of recognizingemotionslikehappiness,sadness,anger,fear, surprise,andneutral.

● ToAchieveReal-TimePerformance:

Optimizethemodelandapplicationpipelineforreal-time emotion detection, with minimal delay between input processingandemotionoutput,especiallyforlivecamera streams.

● ToDesignaUser-FriendlyInterface:

Create an intuitive graphical user interface (GUI) allowinguserstoselectinputsources(images,videos,or livewebcam)andvisualizepredictedemotionswithhigh clarity.

● ToEnsureEthicalAIPractices:

Ensure the system respects user privacy by handling all inputs securely, avoiding unnecessary data storage, and adheringtoethicalAIdesignstandards.

1. A Comprehensive Survey on Deep Facial Expression Recognition

Authors:MohamedElharrouss,etal.

Publishedin:AlexandriaEngineeringJournal,2023

Summary: This survey provides an extensive overview of deep learning techniques applied to facial expression recognition (FER). It discusses various convolutional neural network (CNN) architectures, challenges like data

scarcity and variability in facial expressions, and the importance of large-scale datasets. The paper emphasizes theneedforrobustmodelscapableofhandlingreal-world scenarios, which is directly relevant to applications like yoursthatprocessdiversevisualinputs.

2.DeepLearning-BasedEmotionRecognitionfromRealTimeVideos

Authors:WenbinZhou,etal.Publishedin:2023

Summary: This study introduces a framework utilizing CNNs for emotion detection in real-time video feeds, particularly in educational settings. By adopting Russell's model of core affect, emotions are categorized into four quadrants based on valence and arousal. The approach demonstrates the feasibility of real-time emotion recognition using webcam inputs, aligning well with your app'slivecamerafeedanalysis.

3. Emotion Recognition in Videos Through Deep Neural NetworkModels

Authors: Stanford University CS231n Students Published in:2024

Summary: This paper explores various deep learning architecturesforenhancingemotiondetectionaccuracyin videos. Utilizing the RAVDESS dataset, the study experiments with preprocessing techniques like resizing anddataaugmentationtoimprovemodelperformance.The findings underscore the complexities of video-based emotion recognition and the potential of deep neural networksinaddressingthesechallenges.

4. Facial Emotion Recognition in Real Time Using Deep Learning

Authors:PublishedinIRJET,2024

Summary:Thisresearchpresentsareal-timefacialemotion recognitionsystemleveragingCNNsandRNNs.Thesystem processes live video feeds to identify and classify human emotions, demonstrating high accuracy and responsiveness. The integration of deep learning techniquesforreal-timeanalysisisparticularlypertinentto applications like yours that require immediate emotion detectionfromliveinputs.

3. METHODOLOGY

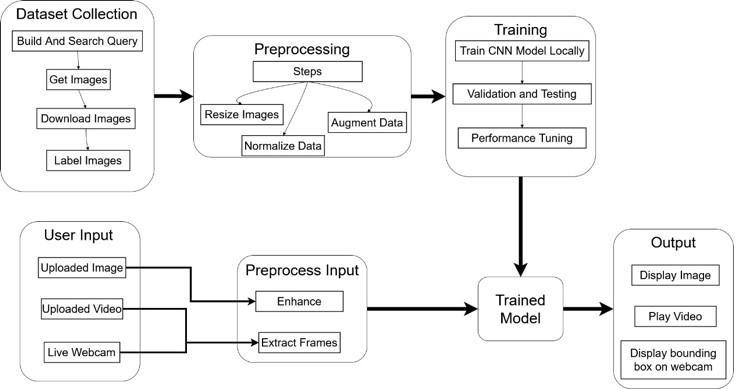

The development of the Emotion Detector Application follows a structured and systematic approach, which is illustratedinthefigurebelow:

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

figure1: Methodology

Themethodologyisdividedintothefollowingstages:

1. DatasetCollection

The initial step involvesthe creation ofa custom dataset usingaself-builtwebscraper.Thescraperautomatesthe process of building search queries, retrieving images fromtheweb,downloading them,andmanuallyorsemiautomatically labelling them based on the emotion represented.Thisensuresthedatasetistailoredforrealworlddiversityinfacialexpressionsandimagequality.

2. Preprocessing:

The collected images undergo essential preprocessing steps, including resizing, normalisation, and data augmentation. Resizing ensures uniform input dimensions for the model, while normalization standardizes pixel values for efficient learning. Augmentation techniques like rotation, flipping, and brightness adjustment are applied to increase data varietyandpreventoverfitting.

3. ModelTraining:

A Convolutional Neural Network (CNN) is designed and trained on the preprocessed dataset. The training stage involves splitting the data into training, validation, and testsets.Themodel’sperformanceisevaluatedandtuned using techniques like hyperparameter adjustment and optimization,ensuringhighaccuracyandgeneralizability onunseendata.

4. UserInput&Preprocessing:

Oncethemodelistrained,theapplicationallowsusersto inputdatathroughthreemodes:

UploadedImagesUploadedVideosLiveWebcamFeed

Input data isfurther preprocessed based onits type.For videosandwebcamfeeds,frameextractionisapplied;for static images, enhancement techniques are used to ensurequalitybeforeprediction.

5. EmotionDetection&Output:

ThepreprocessedinputispassedtothetrainedCNNmodel, whichpredictsthecorrespondingemotionlabel.Theresult isthenpresentedinauser-friendlymanner:

● Forimages,theemotionisdisplayedalongsidethe image.

● For videos, detected emotions are displayed frame-wiseduringplayback.

● For live webcam, real-time emotion detection is visualised using bounding boxes on faces with emotion labels.

This stepwise methodology ensures that the system is efficient,accurate,andsuitableforreal-worldapplications where both speed and precision are critical. The model architecture and training strategy in this project are consistent with recent approaches in facial emotion detection,emphasizingfeatureextractionandclassification accuracy[2][3].

The final emotion detection application was successfully developedandtested.Belowarethekeyinterfacescreens:

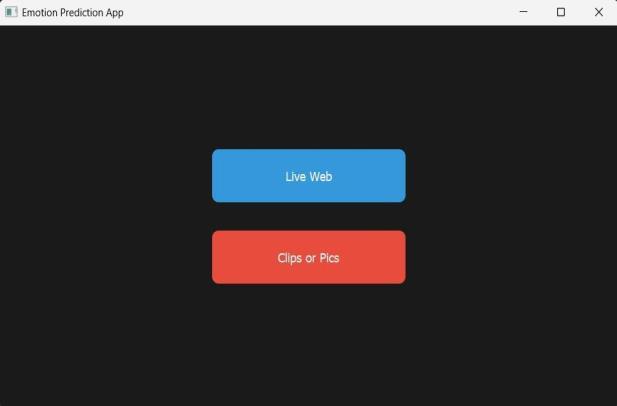

1. MainScreen

figure2:MainMenu

Theappopenstoasimpleinterfacefeaturingtwobuttons:

● LiveWeb launchesreal-timeemotiondetection viawebcam.

● ClipsorPics allowsuserstouploadanimageor avideoforofflineemotionprediction.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

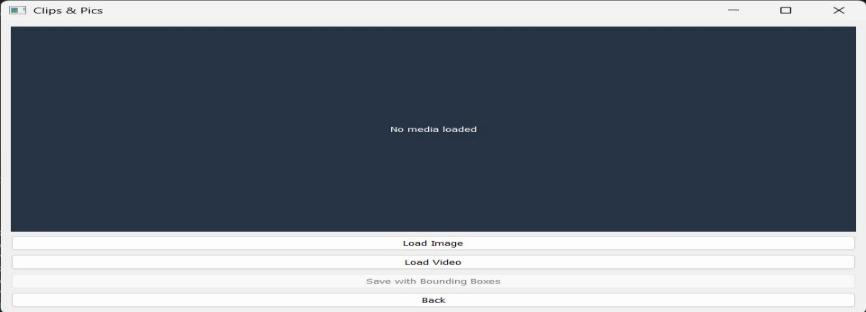

1. NoMediaLoadedScreen

figure3:VideoandImageprocessing

When the Clips or Pics option is selected, the interface prompts the user to load either an image or a video for processing.

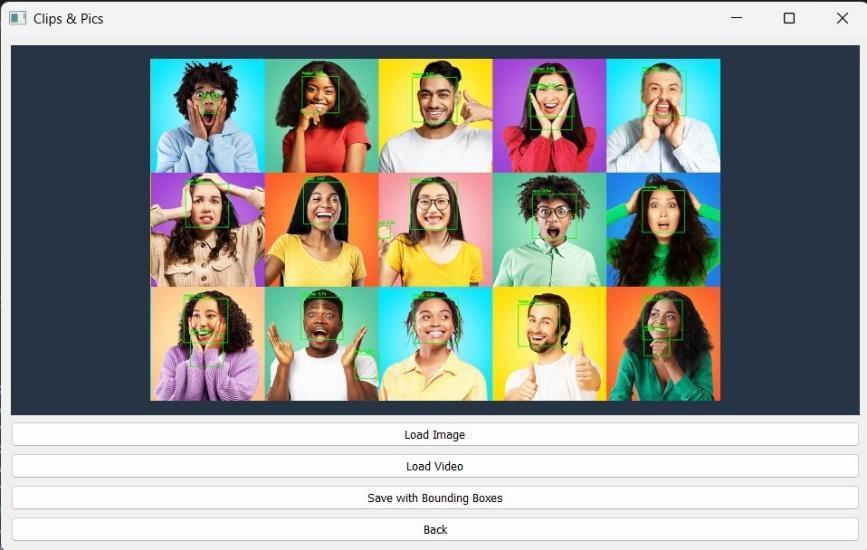

3. ImageUploadandProcessing

figure4:ImageUploaded

Onceanimageisuploaded,thesystembeginsprocessing itforemotiondetection.

4. ImagePredictionDisplay

figure5:ImageProcessed

After processing, the application displays the image with bounding boxes and corresponding emotion labels over detectedfaces.

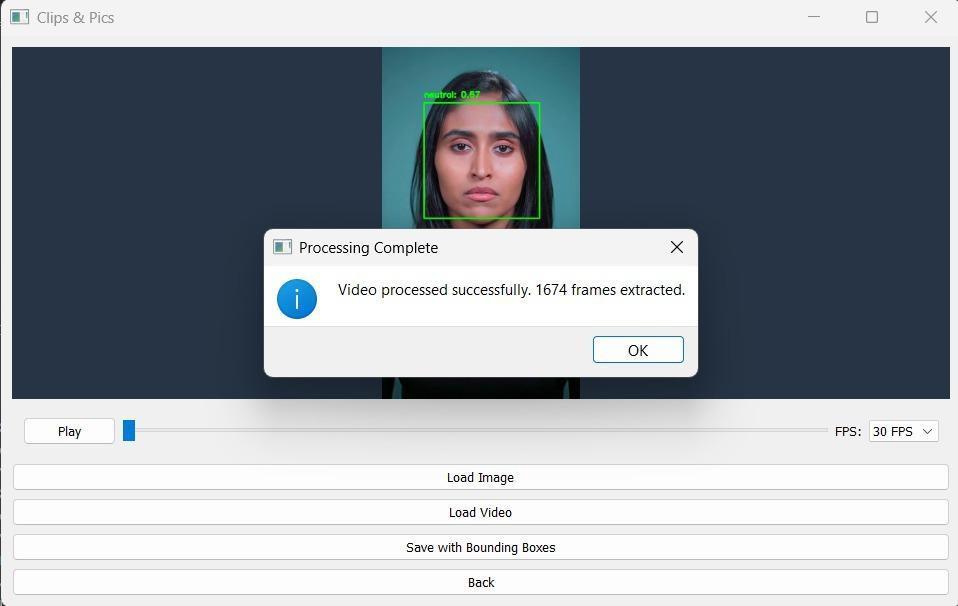

5. VideoProcessingCompletion

figure6:VideoProcessed

Once all frames are extracted, the application shows the totalnumberofframesprocessedbeforeplayback

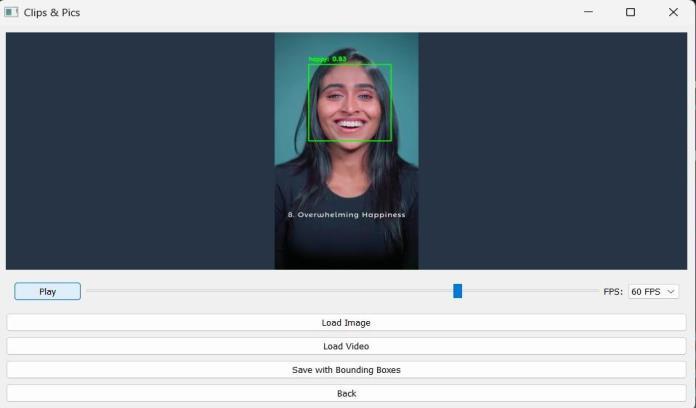

6. VideoPlaybackwithPredictions

figure7:VideoPlayback

The predicted emotions are displayed frame-wise while thevideoisplayedinsidetheapplicationinterface.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

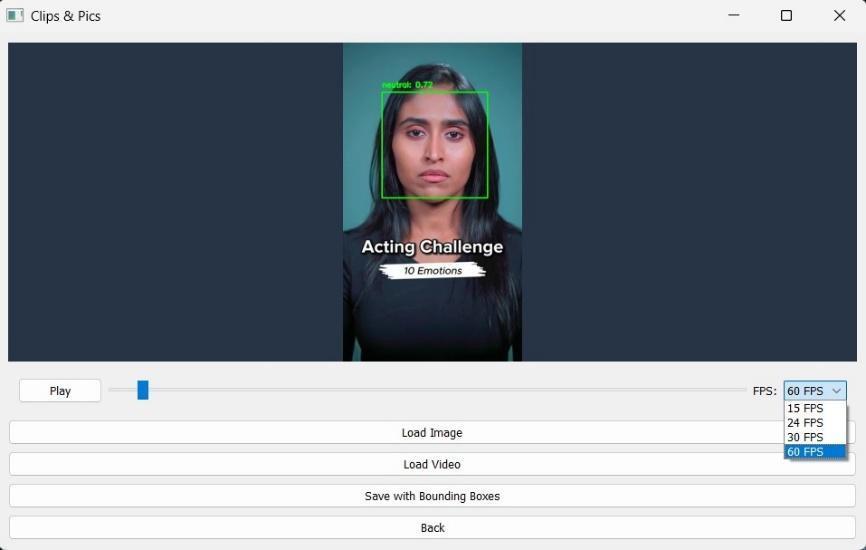

7. FrameRateAdjustment

figure9:FrameRateAdjustment

Users are provided an option to modify the Frames Per Second (FPS) for smoother or slower playback,62 dependingontheanalysisrequirement.

4. CONCLUSIONS

The proposed Emotion Detector Application successfully classifies facial emotions from images, videos, and live webcam feeds using a trained CNN model. The system offers accurate predictions and a user-friendly interface, makingitsuitableforfutureuseinemotion-awaresystems. The approach followed in this application aligns with the research community’s findings on emotion classification usingdeeplearning[1][4]

ACKNOWLEDGEMENT

We extend our sincere gratitude to Dr. Nita Patil for her invaluable guidance, continuous support, and insightful feedback throughout this project. Her expertise and encouragement were crucial to the successful completion ofthisresearch.

[1] Mellouk, A., & Handouzi, M. (2020). Facial emotion recognition using deep learning: Review and insights. ProcediaComputerScience,176,689–694.

[2]ElBoudouri,Y.,&Bohi,A.(2025).EmoNeXt:AnAdapted ConvNeXt for Facial Emotion Recognition. arXiv preprint arXiv:2501.08199.

[3] Roy,A.K.,Kathania,H.K.,Sharma,A.,Dey,A.,&Ansari,M. S. A. (2024). ResEmoteNet: Bridging Accuracy and Loss Reduction in Facial Emotion Recognition. arXiv preprint arXiv:2409.10545.

[4] Schoneveld,L.,&Othmani,A.(2022).TowardsaGeneral Deep Feature Extractor for Facial Expression Recognition. arXivpreprintarXiv:2201.07781.