International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 11 Issue: 12 | Dec 2024 www.irjet.net p-ISSN: 2395-0072


Sujay Maladi1, Hrishikesh Akundi2, Pallavi Vanamada3, Rahul Bellamkonda4

1, 2, 3, 4 Department of Computer Science and Engineering, GITAM (Deemed to be University), Visakhapatnam, Andhra Pradesh, India

Abstract – Plant diseases present a major problem for the agriculture sector, significantly reducing crop yields. Timely and accurate detection of plant diseases is crucial for controlling outbreaks and reducing their effects on agricultural production. Although there have been recent developments in the use of Artificial Intelligence and Machine Learning for plant disease detection, these solutions depend on large datasets of annotated images, which may not be readily available in practice. This study examines the application of a Few-Shot Learning (FSL) approach to plant disease identification in scenarios with limited data. Few-Shot Learning enables a model to recognize new classes from a very small number of examples, making it highly data-efficient. It imitates the human ability to generalize from minimal examples, in contrast to traditional machine learning methods, which usually require large amounts of labeled data to achieve good accuracy. In this paper, the PlantVillage dataset is used, and EfficientNet, a Convolutional Neural Network pre-trained on the ImageNet dataset, is combined with Siamese Networks and a Triplet Loss function for FSL. The proposed approach is tested and compared with regular fine-tuning transfer learning. The outcomes reveal that Few-Shot Learning with EfficientNet and Siamese Networks outperforms conventional transfer learning for plant disease detection, especially in scenarios where quick adaptability is needed.

Key Words: Machine Learning, Deep Learning, Few-shot Learning, Transfer Learning, Fine-tuning, Siamese Networks, Triplet Loss, EfficientNet

The agriculture sector is the backbone of food supply for humans and animals, and it is central to achieving the Sustainable Development Goals, especially SDG 2: Zero Hunger. The agriculture sector mainly deals with plants, and plant diseases are currently a major problem. Plant diseases that are not detected and managed early may spread throughout the entire crop. This not only reduces produce quality but also causes huge economic losses for farmers and may harm the ecosystem. Manual plant disease detection is susceptible to human error and demands extensive expertise in the field.

So, there is a clear need for automated plant disease detection systems [1]. Images of plant leaves can be used to detect the presence of a disease and identify its type [2]. Artificial Intelligence and Machine Learning have introduced many solutions for detecting and classifying plant diseases, but most of these approaches are impractical because they require collecting and annotating large datasets of plant images. Hence, this study uses an approach called Few-Shot Learning.

Few-Shot Learning is a machine learning approach in which a model learns to make predictions by training with only a very small number of examples. This approach is mainly used when data is limited and the model needs to adapt efficiently to new tasks. Few-Shot Learning is generally used in applications such as face recognition, medical imaging, natural language processing, visual search, robotics and game AI.

Few-Shot Learning can be studied using the N-way-K-shot structure, where N indicates the number of classes and K indicates the number of examples provided for each of the N classes. For example, a 4-way-2-shot task contains 4 classes of images, with 2 examples provided for each class. The higher the N value, the more difficult the task is; the higher the K value, the easier the task gets, because more supporting information is available to derive an inference.

In Few-Shot Learning, the K value is typically less than or equal to 10. Specific names are used when K = 0 and K = 1: if K = 0, the setting is called zero-shot learning, and if K = 1, it is called one-shot learning.
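The N-way-K-shot structure can be made concrete with a small episode sampler. This is a minimal sketch, not code from the study: the function name `sample_episode`, the dictionary-of-lists data layout and the separate query set are illustrative assumptions.

```python
import random

def sample_episode(images_by_class, n_way, k_shot, n_query, seed=None):
    """Build one N-way-K-shot episode (hypothetical helper).

    images_by_class: dict mapping class label -> list of image IDs or paths.
    Returns (support, query), each a list of (image, label) pairs.
    """
    rng = random.Random(seed)
    # Choose N distinct classes for this episode.
    classes = rng.sample(sorted(images_by_class), n_way)
    support, query = [], []
    for label in classes:
        # Draw K support images plus n_query query images, with no overlap.
        picked = rng.sample(images_by_class[label], k_shot + n_query)
        support += [(img, label) for img in picked[:k_shot]]
        query += [(img, label) for img in picked[k_shot:]]
    return support, query

# Toy data: 6 classes with 10 placeholder image IDs each.
data = {f"class_{c}": [f"img_{c}_{i}" for i in range(10)] for c in range(6)}
support, query = sample_episode(data, n_way=4, k_shot=2, n_query=3, seed=0)
print(len(support), len(query))  # 8 12
```

A 4-way-2-shot episode therefore gives the model 8 labeled support images; raising K adds more support examples per class, which is why a larger K makes the task easier.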

Deep learning approaches based on convolutional neural networks (CNNs) have proven highly effective for plant disease classification, as highlighted in [3, 4, 5, 6]. However, with the rapid emergence of new plant diseases, there is a need for plant disease detection technologies that can quickly adjust to changes and operate effectively with limited data [7, 8]. In this context, few-shot learning appears to be a good solution.

In [9], the authors proposed a few-shot learning approach using Siamese networks. Here, features are extracted by a two-way convolutional neural network (CNN) with shared weights, a spatial structure optimizer (SSO) enhances the metric learning, and a k-nearest neighbor (kNN) classifier is used for classification. In [10], a low-shot learning method was proposed in which a support vector machine (SVM) was employed to segment disease spots while preserving edge information, and an improved conditional deep convolutional generative adversarial network (C-DCGAN) generated augmented images for training a VGG16 model. The authors achieved an average accuracy of 90%. In [11], the authors proposed a methodology for plant disease detection based on a semi-supervised few-shot learning approach. They improved the accuracy of the model by adaptively choosing the pseudo-labeled samples used to fine-tune the model. In [12], the author proposed a few-shot learning strategy that takes a model pre-trained on the ImageNet dataset and refines it with a similar dataset for plant-based feature extraction. Three CNN baseline architectures were used: GoogLeNet, DenseNet and MnasNet. M. H. Saad and A. E. Salman [13] proposed a one-shot learning approach utilizing a Siamese network, and also provided a unique method for image segmentation. M. Rezaei et al. [14] introduced a few-shot learning method integrated with a feature attention module, using ResNet50 and Vision Transformers (ViT) as feature learners. All of these studies yielded very good results, but none of them used EfficientNet.

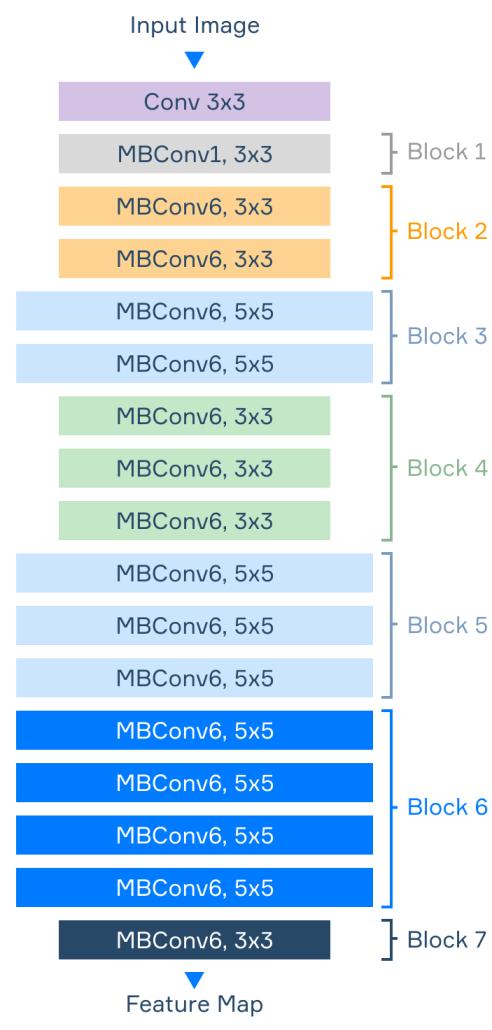

EfficientNet is a CNN-based architecture mainly designed for computer vision and image recognition problems. EfficientNet-B0 is the baseline architecture from which EfficientNet-B1 to EfficientNet-B7 are derived using compound scaling. Fig-1 shows the EfficientNet-B0 architecture. According to [15, 16], EfficientNet showed better performance than other similar architectures such as ResNet, AlexNet, VGG16 and InceptionV3. Hence, this study employs EfficientNet.

This study used the PlantVillage dataset, which was launched as part of the PlantVillage project, an initiative led by researchers from Pennsylvania State University. This dataset was selected because it is widely recognized as a benchmark for plant disease classification problems. It contains 54303 images of plant leaves in 38 classes, as shown in Table-1, covering 14 different crop species and 26 different diseases [2]. Some examples are shown in Fig-2.

Data augmentation techniques, including rotation, flipping, cropping and Gaussian blurring, were applied to increase the diversity of the dataset. All images were resized to 256×256. The dataset was divided into a source domain $D_s$ of 34 classes and a target domain $D_t$ of 4 classes. The images were split into 80% for training and 20% for testing.
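The class-level domain split and the 80/20 image split can be sketched as follows. This is a minimal illustration under stated assumptions: the paper does not say which 4 classes form $D_t$ or how the split was randomized, so random class selection and a per-class (stratified) split are assumed here, and the helper names are hypothetical. Note that 34 source classes plus 4 target classes account for all 38 PlantVillage classes.

```python
import random

def split_domains(class_names, n_target, seed=0):
    """Partition class labels into a source domain Ds and a target domain Dt.

    Assumption: target classes are chosen at random; the paper does not
    state which PlantVillage classes form Dt.
    """
    rng = random.Random(seed)
    target = set(rng.sample(sorted(class_names), n_target))
    source = [c for c in sorted(class_names) if c not in target]
    return source, sorted(target)

def train_test_split_per_class(images, train_frac=0.8, seed=0):
    """Shuffle one class's images and split them 80/20 (stratified by class)."""
    rng = random.Random(seed)
    shuffled = list(images)
    rng.shuffle(shuffled)
    cut = int(round(train_frac * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

# 38 placeholder class names (not the real PlantVillage labels).
classes = [f"class_{i:02d}" for i in range(38)]
ds_classes, dt_classes = split_domains(classes, n_target=4)
print(len(ds_classes), len(dt_classes))  # 34 4

train, test = train_test_split_per_class([f"img_{i}" for i in range(50)])
print(len(train), len(test))  # 40 10
```

Splitting each class separately keeps the 80/20 ratio within every class, which preserves the class balance that the evaluation later relies on.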

This study utilized both local and cloud-based environments for implementation. The local setup included a computer with the following specifications: Intel(R) Core(TM) i7-9750H CPU, NVIDIA(R) GeForce(R) GTX 1660 Ti GPU (6GB GDDR6 memory), 32GB of DDR4 RAM and a 64-bit Windows 10 Pro operating system. The study was conducted using Python in Jupyter Notebook. Google Colab, a cloud-based platform, provided access to advanced computational resources such as GPUs.

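Table-1: PlantVillage Dataset Overview

| Crop |
|---|
| Cherry |
| Corn |
| Corn |
| Corn |
| Corn |
| Grape |
| Grape |
| Grape |
| Grape |
| Orange |
| Peach |
| Peach |
| Pepper |
| Pepper |
| Potato |
| Squash |
| Strawberry |
| Tomato |
| Tomato |
| Tomato |
| Tomato |
| Tomato |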

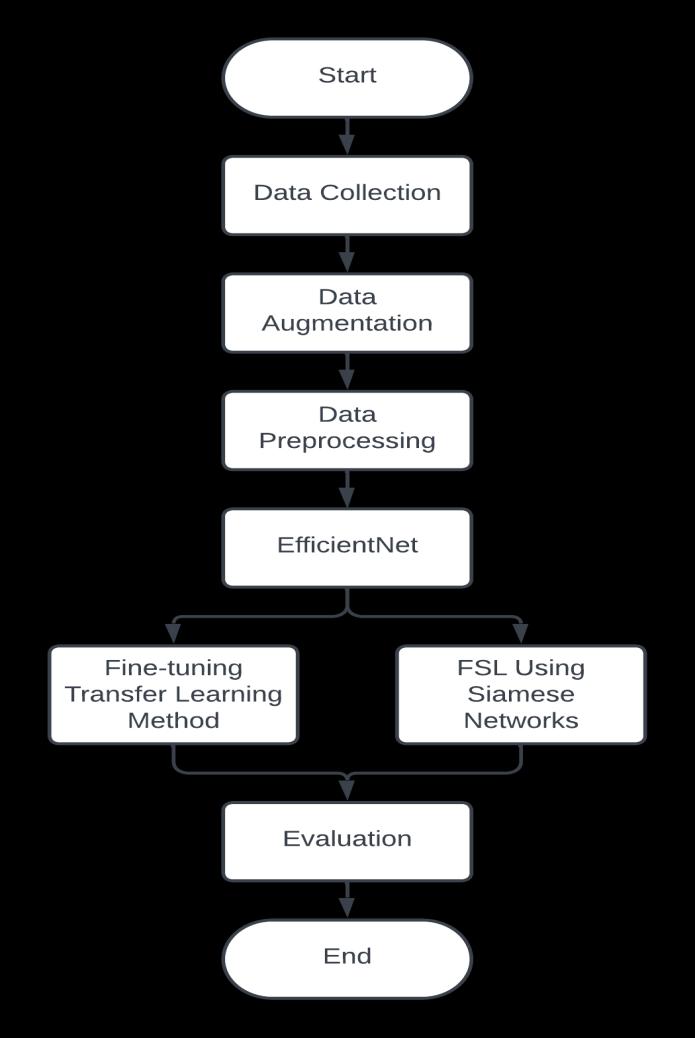

The system architecture of this study is based on the proposals given in [17, 18]. Concepts such as pre-training, fine-tuning, transfer learning and few-shot learning were used in this study. At the end of the study, the performance of a regular fine-tuning transfer learning model is compared with that of a few-shot learning model using Siamese networks, based on performance metrics. Fig-3 shows the complete system methodology.

Fig-3: System Methodology


Transfer learning is an important part of studies like this one [19]. Transfer learning is a technique in which a model trained on one task (source) is reused or fine-tuned for another related task (target). The knowledge gained from a large, general dataset can be used to improve performance on a smaller or more specific dataset. Knowledge can be transferred by freezing some layers of the network and fine-tuning the other layers.

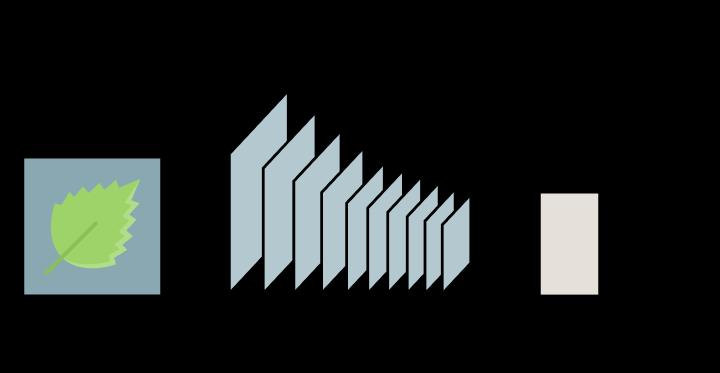

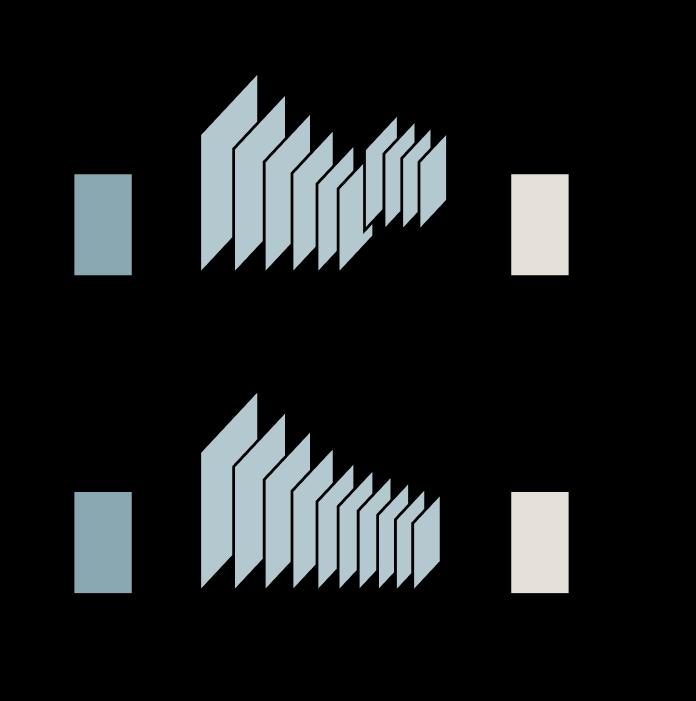

Fig-4 illustrates the procedure in which a convolutional neural network based architecture is adapted for feature extraction of plant leaves. Here, the image $x_i$ is embedded into a feature vector $f_i$. Then, an SVM classifier is employed to determine the label of the input image. Fig-5 shows the Few-Shot Learning architecture. The upper section is a plant leaf classifier trained on the source dataset, and the lower section is obtained by freezing the CNN.

4.1 Pre-training

Pre-training is the process of training a model on a huge dataset before fine-tuning it on smaller datasets. These huge datasets are generic, and the model learns general features from them. This step is valuable because training a model from scratch on a huge dataset for every new task is computationally expensive. After pre-training, the model can be further trained or fine-tuned on a smaller, task-specific dataset.

ImageNet is a large-scale image dataset broadly used in computer vision and image recognition tasks for training and benchmarking machine learning models. ImageNet has over 14 million labeled images organized into 21 thousand categories. It has wide diversity, as the images are taken from various real-world scenarios covering a range of objects and environments. ImageNet serves as the main foundation for many popular pre-trained models such as ResNet, VGG, AlexNet, Inception, MobileNet, DenseNet and EfficientNet.

These models can be used for feature extraction and can act as a foundation for transfer learning tasks. This study mainly focused on EfficientNet because of its potential for superior performance compared to other similar models.

EfficientNet was introduced in 2019 and was designed to achieve high accuracy with fewer parameters and less computation than other convolutional neural networks. EfficientNet uses a systematic compound scaling method to balance the depth, width and input resolution of the network. EfficientNet has a range of variants from B0 to B7. Smaller variants like B0 are suitable for resource-constrained environments, while larger variants like B7 are optimized for maximum accuracy.
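The compound scaling rule from the original EfficientNet paper [25] ties depth, width and resolution to a single coefficient φ: depth scales as α^φ, width as β^φ and resolution as γ^φ, with α·β²·γ² ≈ 2 so that FLOPS roughly double per unit increase of φ. The sketch below uses the grid-searched values α = 1.2, β = 1.1, γ = 1.15 and the 224-pixel B0 input reported there. The released B1 to B7 models use rounded, hand-adjusted coefficients, so the printed numbers are illustrative rather than the exact published configurations.

```python
# Base coefficients found by grid search for EfficientNet-B0 (Tan and Le, 2019).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution

def compound_scale(phi, base_resolution=224):
    """Scale factors for compound coefficient phi under the idealized rule."""
    depth = ALPHA ** phi                                # multiplier on number of layers
    width = BETA ** phi                                 # multiplier on number of channels
    resolution = round(base_resolution * GAMMA ** phi)  # input image side in pixels
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi     # FLOPS ~ depth * width^2 * resolution^2
    return depth, width, resolution, flops

for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, {r}x{r} px, FLOPS x{f:.2f}")
```

Because width and resolution enter FLOPS quadratically, the constraint α·β²·γ² ≈ 2 (here about 1.92) is what keeps the cost growth predictable as φ increases.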

In this study, EfficientNet-B5 pre-trained on ImageNet is utilized as the baseline architecture because of its balance of performance and efficiency. ImageNet is a general dataset that does not focus exclusively on plants, so the convolutional neural network is first adapted for feature extraction of plant leaves. It then learns to map images of plant leaves $x_i$ to embeddings $f(x_i)$, which represent their essential characteristics.

For task-specific purposes, the network is then fine-tuned. Fine-tuning is the process of adjusting a pre-trained convolutional neural network such as EfficientNet to a specific task, in this case the classification of plant leaves. Around 80% of the layers of the EfficientNet network are frozen, so their weights are not affected during training and general features such as edges and shapes are preserved. The remaining layers are trained with the source dataset $D_s$. Then, the fully connected layers are replaced with a support vector machine (SVM) classifier, which is trained to make predictions. Dimensionality reduction is then applied to simplify the representation while preserving essential features; an embedding dimension of 128 is selected for this task.
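The partial freezing step can be illustrated without any deep learning framework. In Keras, each layer has a boolean `trainable` attribute, and freezing the first 80% of a pre-trained base model amounts to setting `layer.trainable = False` for `base_model.layers[:cutoff]`. The sketch below mimics that with a hypothetical `Layer` stand-in class, and it assumes that "80% of the layers" means 80% of the entries in the model's layer list, which the paper does not specify.

```python
import math
from dataclasses import dataclass

@dataclass
class Layer:
    """Hypothetical stand-in for a Keras layer: only a name and a trainable flag."""
    name: str
    trainable: bool = True

def freeze_bottom_fraction(layers, frac=0.8):
    """Freeze the first `frac` of layers (closest to the input); keep the rest trainable.

    Early layers hold generic features such as edges and shapes, so they keep
    their ImageNet weights; later layers are updated on the source domain Ds.
    """
    cutoff = math.floor(frac * len(layers))
    for i, layer in enumerate(layers):
        layer.trainable = i >= cutoff
    return cutoff

layers = [Layer(f"block_{i}") for i in range(10)]
cutoff = freeze_bottom_fraction(layers, frac=0.8)
print(cutoff, [layer.trainable for layer in layers])
```

Freezing from the input side rather than the output side reflects the usual assumption that lower layers learn transferable features while upper layers are more task-specific.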


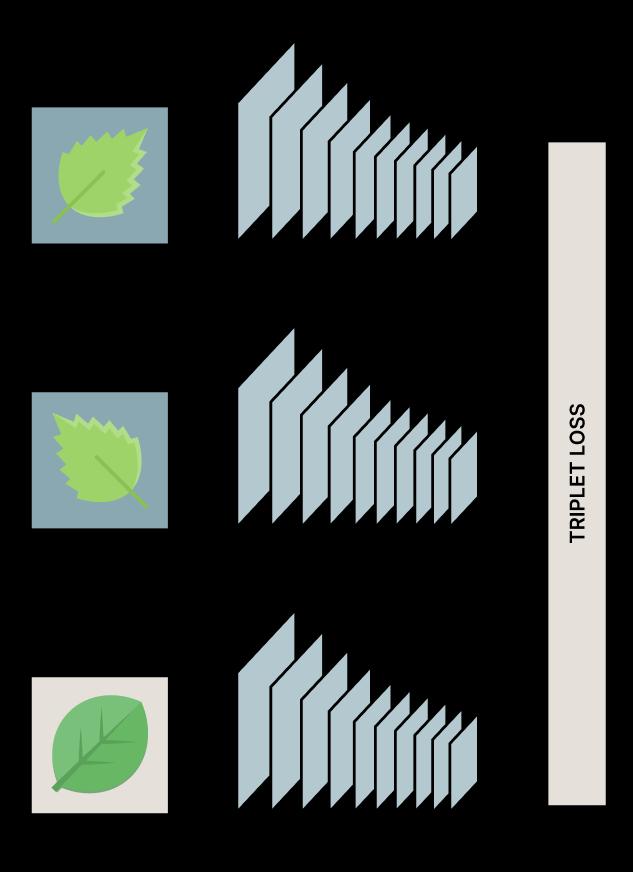

Siamese networks can be used for learning image embeddings. In [17], the authors described two architectures:

1. Siamese network with two subnets and contrastive loss.

2. Siamese network with three subnets and triplet loss.

The authors stated that the Siamese network with three subnets and triplet loss produced the best results, compared with both the baseline fine-tuned model and the Siamese network with contrastive loss. So, this study focused on the Siamese network with three subnets and triplet loss.

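A three-subnet Siamese network with triplet loss is an architecture often used for similarity-learning tasks such as face recognition and object identification. It can also be employed for plant disease classification. This Siamese network has three inputs: an anchor ($X_a$), a positive ($X_p$) and a negative ($X_n$). The anchor is a reference image, the positive is an image of the same class as the anchor, and the negative is an image of a different class. The triplet loss function is given by:

$$\mathcal{L}(X_a, X_p, X_n) = \max\left(\lVert f(X_a) - f(X_p) \rVert_2^2 - \lVert f(X_a) - f(X_n) \rVert_2^2 + m,\ 0\right) \qquad (1)$$

where $f(\cdot)$ is the embedding produced by the network and $m$ is the margin.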

All the subnets in the Siamese network architecture share the same weights. This is illustrated in Fig-6.
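A minimal sketch of the triplet loss for a single triplet, assuming squared Euclidean distances between embeddings and the margin m = 1 reported in the experimental setup. The toy `embed` function is a hypothetical linear map standing in for the EfficientNet-based subnet; calling the same function with the same weights `W` on the anchor, positive and negative is what weight sharing means in practice. In a Keras implementation, the same model instance would typically be called on all three inputs.

```python
def embed(x, W):
    """Toy shared subnet: the same weight matrix W maps every input to an embedding."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def squared_distance(u, v):
    """Squared Euclidean distance between two embeddings."""
    return sum((a - b) ** 2 for a, b in zip(u, v))

def triplet_loss(f_a, f_p, f_n, margin=1.0):
    """max(d(a, p) - d(a, n) + margin, 0) for one anchor/positive/negative triplet.

    The loss is zero once the negative is at least `margin` farther from the
    anchor (in squared distance) than the positive is.
    """
    return max(squared_distance(f_a, f_p) - squared_distance(f_a, f_n) + margin, 0.0)

W = [[1.0, 0.0], [0.0, 1.0]]  # identity weights, purely illustrative
f_a, f_p = embed([0.0, 0.0], W), embed([0.1, 0.0], W)
print(triplet_loss(f_a, f_p, embed([2.0, 0.0], W)))  # 0.0 (easy negative, no penalty)
print(triplet_loss(f_a, f_p, embed([0.5, 0.0], W)))  # roughly 0.76 (hard negative)
```

Only "hard" triplets, where the negative is not yet far enough from the anchor, contribute a gradient, which is why triplet selection matters in practice.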

SVMs are algorithms used for classification and regression problems. They work well for tasks that require a clear margin of separation between classes. The aim of an SVM classifier is to reduce misclassification and improve generalization on unseen data. SVMs can handle multiclass problems using one-vs-all or one-vs-one strategies. This study used a one-vs-all strategy, in which N separate classifiers are trained for an N-class problem, each distinguishing one class from all other classes. Each embedding $f_i$ is then assigned to the class whose SVM classifier gives the highest confidence score.
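To illustrate the one-vs-all strategy, the sketch below trains one linear SVM per class with the Pegasos sub-gradient method and assigns each embedding to the class with the highest decision score. This is a stand-in built on assumptions, not the study's classifier: the paper does not state the SVM kernel, regularization or solver, and in practice a library implementation (for example scikit-learn) would normally be used. The 2-D points and the labels "A", "B" and "C" are toy data.

```python
import random

def train_binary_svm(xs, ys, lam=0.1, epochs=200, seed=0):
    """Linear SVM fitted with the Pegasos sub-gradient method.

    xs: feature vectors (e.g. embeddings f_i); ys: labels in {+1, -1}.
    A constant 1.0 feature is appended so the last weight acts as a bias.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], y) for x, y in zip(xs, ys)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)
            margin = y * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # regularization (shrink) step
            if margin < 1.0:  # hinge loss is active: move towards y * x
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w

def train_one_vs_all(xs, labels):
    """One binary SVM per class: that class is +1, every other class is -1."""
    return {c: train_binary_svm(xs, [1 if lab == c else -1 for lab in labels])
            for c in sorted(set(labels))}

def predict(models, x):
    """Assign x to the class whose SVM gives the highest decision score."""
    x1 = list(x) + [1.0]
    scores = {c: sum(wi * xi for wi, xi in zip(w, x1)) for c, w in models.items()}
    return max(scores, key=scores.get)

# Toy 2-D "embeddings" for three hypothetical classes.
xs = [(3.0, 0.0), (3.2, 0.3), (2.8, -0.2),
      (0.0, 3.0), (0.3, 3.2), (-0.2, 2.8),
      (-3.0, -3.0), (-3.2, -2.7), (-2.8, -3.3)]
labels = ["A"] * 3 + ["B"] * 3 + ["C"] * 3
models = train_one_vs_all(xs, labels)
print(predict(models, (3.0, 0.1)), predict(models, (0.1, 3.0)), predict(models, (-3.0, -3.1)))
```

With N classes this trains N binary models; a one-vs-one scheme would instead train N(N-1)/2 pairwise models and vote.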

Next, knowledge is transferred from the source domain $D_s$ to the target domain $D_t$. The goal is to adapt a model trained on one domain ($D_s$) to perform well on another domain ($D_t$). After the convolutional neural network is trained on the source domain $D_s$, the SVM classifier is retrained using embeddings from the target domain $D_t$. The embeddings for both methods were derived from the CNN trained on $D_s$. During this phase, only the shallow classifier is retrained, using the training data from $D_t$. This study used different amounts of training data per class, ranging from 1 image to 50 images. In terms of the N-way-K-shot structure, K varies from 1 to 50, so this number can be called the number of shots.
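The evaluation protocol (retrain only a shallow classifier on K frozen embeddings per target class, test on a fixed set, repeat over several random draws) can be sketched as below. To keep the example short and dependency-free, a nearest-centroid classifier is swapped in for the SVM used in the study; the helper names, the number of repeats and the toy 2-D embeddings for four target classes are all hypothetical.

```python
import random
from statistics import mean

def centroid(vectors):
    """Component-wise mean of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def group_by_label(pairs):
    """Map label -> list of embeddings from (embedding, label) pairs."""
    groups = {}
    for emb, label in pairs:
        groups.setdefault(label, []).append(emb)
    return groups

def nearest_centroid_accuracy(train, test):
    """Fit one centroid per class on `train`; return accuracy on `test`.

    Stand-in for retraining the SVM on frozen target-domain embeddings.
    """
    centroids = {c: centroid(v) for c, v in group_by_label(train).items()}
    def predict(emb):
        return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(emb, centroids[c])))
    return mean(1.0 if predict(e) == y else 0.0 for e, y in test)

def k_shot_curve(train_pool, test, shots, repeats=5):
    """For each K, draw K training embeddings per class, retrain, and score on
    the same fixed test set; average the accuracy over `repeats` draws."""
    by_class = group_by_label(train_pool)
    curve = {}
    for k in shots:
        accs = []
        for seed in range(repeats):
            rng = random.Random(seed)
            subset = [(e, c) for c, embs in by_class.items() for e in rng.sample(embs, k)]
            accs.append(nearest_centroid_accuracy(subset, test))
        curve[k] = mean(accs)
    return curve

# Toy 2-D embeddings for four hypothetical target classes (like a 4-class Dt).
rng = random.Random(42)
centers = {"t1": (0.0, 0.0), "t2": (4.0, 0.0), "t3": (0.0, 4.0), "t4": (4.0, 4.0)}

def toy_split(n_per_class):
    return [((cx + rng.gauss(0, 1), cy + rng.gauss(0, 1)), c)
            for c, (cx, cy) in centers.items() for _ in range(n_per_class)]

pool, test = toy_split(50), toy_split(20)
print(k_shot_curve(pool, test, shots=[1, 5, 10, 50]))
```

Keeping the test set fixed across all values of K, as the study does, means differences in the curve come only from the amount of target-domain training data.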

For the baseline fine-tuning method, EfficientNet is first trained on the source domain $D_s$, and a few layers of the network are then fine-tuned using subsets of the target domain $D_t$. The test set was kept constant for both methods, and the experiment was repeated multiple times to ensure reliability.

For the experiment, 50 epochs were used with a batch size of 34. The Adam optimizer was used, and the margin of the triplet loss function was set to $m = 1$. The experiment was conducted using Keras with the TensorFlow backend.

Performance metrics for this experiment can be calculated from the confusion matrix. These metrics help evaluate the quality of the model's predictions. The commonly used metrics are:

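$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (2)$$

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (3)$$

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (4)$$

$$\text{F1-Score} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (5)$$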

where TP refers to True Positives, TN to True Negatives, FP to False Positives and FN to False Negatives.
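Equations (2) to (5) translate directly into code. The counts below are made-up illustrative values, and returning 0.0 when a denominator is zero is a convention assumed here (the paper does not discuss that case).

```python
def binary_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1-score from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)                                          # Eq. (2)
    precision = tp / (tp + fp) if tp + fp else 0.0                                       # Eq. (3)
    recall = tp / (tp + fn) if tp + fn else 0.0                                          # Eq. (4)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0   # Eq. (5)
    return accuracy, precision, recall, f1

# Illustrative counts only (not results from the study).
print([round(m, 3) for m in binary_metrics(tp=40, tn=45, fp=5, fn=10)])  # [0.85, 0.889, 0.8, 0.842]
```

F1 is the harmonic mean of precision and recall, so it equals 2TP / (2TP + FP + FN); for these counts that is 80/95.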


Since this is a multiclass problem, the multiclass accuracy can be calculated using equation (6). In this study, the dataset's classes were balanced, making multiway accuracy an effective measure of performance [17, 20].

$$\text{Accuracy} = \frac{\sum_i N_{ii}}{\sum_{i,j} N_{ij}} \qquad (6)$$

where $N_{ij}$ refers to the number of images of class $i$ classified by the algorithm as class $j$. In this experiment, $i, j \in \{1, 2, 3, \ldots, 34\}$ for the main branch on the $D_s$ dataset, and $i, j \in \{1, 2, 3, 4\}$ for Few-Shot Learning on the $D_t$ dataset.
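A small sketch of Equation (6): build the confusion matrix N from true and predicted labels, then divide its trace by the total count. The helper `one_vs_rest_counts` (a hypothetical addition) shows how per-class TP, FP, FN and TN for Equations (2) to (5) can be read off the same matrix. Class indices are 0-based here, whereas the text numbers classes from 1, and the labels are toy values for a 4-class target domain.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """N[i][j] = number of images of class i classified as class j (0-based)."""
    N = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        N[t][p] += 1
    return N

def multiclass_accuracy(N):
    """Eq. (6): sum of the diagonal divided by the sum of all entries."""
    correct = sum(N[i][i] for i in range(len(N)))
    total = sum(sum(row) for row in N)
    return correct / total

def one_vs_rest_counts(N, k):
    """(TP, FP, FN, TN) for class k, treating every other class as negative."""
    total = sum(sum(row) for row in N)
    tp = N[k][k]
    fp = sum(N[i][k] for i in range(len(N))) - tp  # predicted k, true class differs
    fn = sum(N[k]) - tp                            # true class k, predicted otherwise
    return tp, fp, fn, total - tp - fp - fn

# Toy 4-class example: two test images per class.
N = confusion_matrix([0, 0, 1, 1, 2, 2, 3, 3], [0, 1, 1, 1, 2, 3, 3, 3], n_classes=4)
print(multiclass_accuracy(N))    # 0.75
print(one_vs_rest_counts(N, 1))  # (2, 1, 0, 5)
```

With balanced classes, as in this study, the trace-over-total accuracy is not dominated by any single class, which is why it is a reasonable summary metric here.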

Both methods, i.e., regular fine-tuning transfer learning and the proposed methodology (Few-Shot Learning with EfficientNet and Siamese Networks), were evaluated. The experiment was conducted with different numbers of examples per class, ranging from 1 image to 50 images. Results show that the proposed methodology achieved an accuracy of 90.2% using as few as 10 shots, whereas the regular fine-tuning transfer learning model consistently scored significantly lower accuracy than the proposed model for every number of examples per class. Both methods struggled to differentiate between leaves and diseases that closely resemble each other. These methods are likely to achieve even better results on datasets where plant diseases are more visually distinct. The number of shots versus the maximum accuracy is shown in Fig-7.

As shown in Fig-7, the proposed methodology gives higher accuracy as the number of shots increases, because the challenge of learning from a small number of examples diminishes. However, using a larger number of shots defeats the primary purpose of employing Few-Shot Learning, and the setting starts to resemble traditional supervised learning, where larger datasets are available.

In recent years, Few-Shot Learning has gained significant attention in various domains. Many recent studies have applied Few-Shot Learning techniques to diverse tasks, demonstrating its effectiveness [21, 22, 23, 24].

EfficientNet is one of the more recent convolutional neural network architectures and achieved state-of-the-art accuracy on ImageNet when it was introduced [25]. While other similar convolutional neural network architectures could also be used in this experiment, EfficientNet gives better accuracy with fewer parameters than other architectures [25]. This highlights its potential for use in similar studies and applications.

This study shows that Few-Shot Learning with EfficientNet and Siamese Networks using triplet loss gave better results than the regular fine-tuning transfer learning model. The study also highlights the potential of Few-Shot Learning for developing effective plant disease identification solutions with minimal annotated data. The findings also indicate that Few-Shot Learning is well suited to tasks that require quick adaptation and where data is limited.

[1] S. Sladojevic, M. Arsenovic, A. Anderla, D. Culibrk, and D. Stefanovic, “Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification,” Computational Intelligence and Neuroscience, vol. 2016, pp. 1–11, 2016, doi: 10.1155/2016/3289801.

[2] S. P. Mohanty, D. P. Hughes, and M. Salathé, “Using Deep Learning for Image-Based Plant Disease Detection,” Frontiers in Plant Science, vol. 7, Sep. 2016, doi: 10.3389/fpls.2016.01419.

[3] G. G. and A. P. J., “Identification of plant leaf diseases using a nine-layer deep convolutional neural network,” Computers & Electrical Engineering, vol. 76, pp. 323–338, Jun. 2019, doi: 10.1016/j.compeleceng.2019.04.011.

[4] M. H. Saleem, J. Potgieter, and K. M. Arif, “Plant Disease Detection and Classification by Deep Learning,” Plants, vol. 8, no. 11, p. 468, Oct. 2019, doi: 10.3390/plants8110468.

[5] J. Liu and X. Wang, “Plant diseases and pests detection based on deep learning: a review,” Plant Methods, vol. 17, no. 1, p. 22, Dec. 2021, doi: 10.1186/s13007-021-00722-9.

[6] J. Lu, L. Tan, and H. Jiang, “Review on Convolutional Neural Network (CNN) Applied to Plant Leaf Disease Classification,” Agriculture, vol. 11, no. 8, p. 707, Jul. 2021, doi: 10.3390/agriculture11080707.


[7] J. B. Ristaino et al., “The persistent threat of emerging plant disease pandemics to global food security,” Proceedings of the National Academy of Sciences, vol. 118, no. 23, Jun. 2021, doi: 10.1073/pnas.2022239118.

[8] R. W. Mwangi, M. Mustafa, K. Charles, I. W. Wagara, and N. Kappel, “Selected emerging and reemerging plant pathogens affecting the food basket: A threat to food security,” Journal of Agriculture and Food Research, vol. 14, p. 100827, Dec. 2023, doi: 10.1016/j.jafr.2023.100827.

[9] B. Wang and D. Wang, “Plant Leaves Classification: A Few-Shot Learning Method Based on Siamese Network,” IEEE Access, vol. 7, pp. 151754–151763, 2019, doi: 10.1109/ACCESS.2019.2947510.

[10] G. Hu, H. Wu, Y. Zhang, and M. Wan, “A low shot learning method for tea leaf’s disease identification,” Computers and Electronics in Agriculture, vol. 163, p. 104852, Aug. 2019, doi: 10.1016/j.compag.2019.104852.

[11] Y. Li and X. Chao, “Semi-supervised few-shot learning approach for plant diseases recognition,” Plant Methods, vol. 17, no. 1, p. 68, Dec. 2021, doi: 10.1186/s13007-021-00770-1.

[12] P. Uskaner Hepsağ, “Efficient plant disease identification using few-shot learning: a transfer learning approach,” Multimedia Tools and Applications, vol. 83, no. 20, pp. 58293–58308, Dec. 2023, doi: 10.1007/s11042-023-17824-2.

[13] M. H. Saad and A. E. Salman, “A plant disease classification using one-shot learning technique with field images,” Multimedia Tools and Applications, vol. 83, no. 20, pp. 58935–58960, Dec. 2023, doi: 10.1007/s11042-023-17830-4.

[14] M. Rezaei, D. Diepeveen, H. Laga, M. G. K. Jones, and F. Sohel, “Plant disease recognition in a low data scenario using few-shot learning,” Computers and Electronics in Agriculture, vol. 219, p. 108812, Apr. 2024, doi: 10.1016/j.compag.2024.108812.

[15] Ü. Atila, M. Uçar, K. Akyol, and E. Uçar, “Plant leaf disease classification using EfficientNet deep learning model,” Ecological Informatics, vol. 61, p. 101182, Mar. 2021, doi: 10.1016/j.ecoinf.2020.101182.

[16] A. K. Bairwa, S. Joshi, and S. Chaudhary, “Machine Learning Based Plant Disease Detection Using EfficientNet B7,” 2024, pp. 1–12, doi: 10.1007/978-3-031-47372-2_1.

[17] D. Argüeso et al., “Few-Shot Learning approach for plant disease classification using images taken in the field,” Computers and Electronics in Agriculture, vol. 175, p. 105542, Aug. 2020, doi: 10.1016/j.compag.2020.105542.

[18] A. Medela et al., “Few Shot Learning in Histopathological Images: Reducing the Need of Labeled Data on Biological Datasets,” in 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), IEEE, Apr. 2019, pp. 1860–1864, doi: 10.1109/ISBI.2019.8759182.

[19] J. Chen, J. Chen, D. Zhang, Y. Sun, and Y. A. Nanehkaran, “Using deep transfer learning for image-based plant disease identification,” Computers and Electronics in Agriculture, vol. 173, p. 105393, Jun. 2020, doi: 10.1016/j.compag.2020.105393.

[20] A. B. Rad et al., “ECG-Based Classification of Resuscitation Cardiac Rhythms for Retrospective Data Analysis,” IEEE Transactions on Biomedical Engineering, vol. 64, no. 10, pp. 2411–2418, Oct. 2017, doi: 10.1109/TBME.2017.2688380.

[21] J. Wang, K. Liu, Y. Zhang, B. Leng, and J. Lu, “Recent advances of few-shot learning methods and applications,” Science China Technological Sciences, vol. 66, no. 4, pp. 920–944, Apr. 2023, doi: 10.1007/s11431-022-2133-1.

[22] Y. Song, T. Wang, P. Cai, S. K. Mondal, and J. P. Sahoo, “A Comprehensive Survey of Few-shot Learning: Evolution, Applications, Challenges, and Opportunities,” ACM Computing Surveys, vol. 55, no. 13s, pp. 1–40, Dec. 2023, doi: 10.1145/3582688.

[23] W. Li et al., “LibFewShot: A Comprehensive Library for Few-Shot Learning,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 12, pp. 14938–14955, Dec. 2023, doi: 10.1109/TPAMI.2023.3312125.

[24] W. Zeng and Z. Xiao, “Few-shot learning based on deep learning: A survey,” Mathematical Biosciences and Engineering, vol. 21, no. 1, pp. 679–711, 2023, doi: 10.3934/mbe.2024029.

[25] M. Tan and Q. V. Le, “EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks,” May 2019.