International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

Utkarsh Khare, Shivam Mishra, Utkarsh Pratap Singh, Shubhangi Tiwari, Rishi Rajput, Vineet Agarwal

Utkarsh Khare, Computer Science and Engineering, Babu Banarasi Das Institute of Technology and Management, Lucknow, India

Shivam Mishra, Computer Science and Engineering, Babu Banarasi Das Institute of Technology and Management, Lucknow, India

Utkarsh Pratap Singh, Computer Science and Engineering, Babu Banarasi Das Institute of Technology and Management, Lucknow, India

Shubhangi Tiwari, Computer Science and Engineering, Babu Banarasi Das Institute of Technology and Management, Lucknow, India

Rishi Rajput, Computer Science and Engineering, Babu Banarasi Das Institute of Technology and Management, Lucknow, India

Mr. Vineet Agarwal, Department of Computer Science and Engineering, Babu Banarasi Das Institute of Technology and Management, Lucknow, Uttar Pradesh, India

Abstract - This paper presents the comprehensive development of an automated image caption generator utilizing state-of-the-art deep learning methodologies. The system effectively integrates Convolutional Neural Networks (CNNs) for high-level image feature extraction with Long Short-Term Memory (LSTM) networks for sequential natural language generation. This hybrid architecture enables the generation of coherent, contextually accurate captions that describe the content of input images. Leveraging the Flickr8k dataset, the model is trained and validated to demonstrate the seamless integration of computer vision and natural language processing (NLP), two traditionally distinct areas of artificial intelligence.

The core objective of the project is to enhance human-computer interaction by enabling machines to interpret and verbalize visual information in a manner that closely resembles human understanding. This not only aids in improved information retrieval and content indexing but also paves the way for diverse real-world applications in domains such as e-commerce (automated product descriptions), biomedical diagnostics (interpreting medical imagery), assistive technologies (for the visually impaired), autonomous vehicles (scene understanding), and social media content moderation.

A significant portion of the study is dedicated to addressing the implementation challenges, including issues related to data preprocessing, model overfitting, language diversity, and semantic alignment between visual features and textual representations. The paper also examines standard evaluation metrics used in image captioning tasks, such as BLEU, METEOR, ROUGE, and CIDEr, highlighting their strengths and limitations in measuring linguistic and contextual quality.

In addition, the research underscores the importance of employing a robust and modular development pipeline, which involves meticulous dataset preprocessing, vocabulary construction, embedding layer tuning, and architecture optimization. The effectiveness of various training strategies, such as transfer learning, dropout regularization, and beam search decoding, is also discussed to fine-tune the balance between accuracy and computational efficiency.

Moreover, the paper investigates the transformative impact of image captioning technologies across various sectors. In biomedicine, for instance, captioning can support the interpretation of X-rays and MRIs; in social platforms, it facilitates automatic content tagging; and in educational platforms, it provides visual description support for learning materials, thereby promoting digital inclusivity.

This project not only maps the breakthroughs and current limitations in neural network-based image captioning but also contributes to the broader AI research landscape by identifying future directions. These include the exploration of attention mechanisms, transformer architectures, multilingual captioning, and context-aware captioning using external knowledge sources. Ultimately, the project aspires to bridge the semantic gap between vision and language, offering a step forward in making machine-generated descriptions more intuitive, accurate, and reflective of human cognitive processes.

Key Words: Reform Image captioning, deep learning, CNN-LSTM, Flickr8k dataset, natural language processing, accessibility, e-commerce, autonomous systems.

The task of generating descriptive captions for images has long been a significant challenge within the field of artificial intelligence, demanding concurrent advancements in both computer vision and natural language processing (NLP). Traditional approaches to image captioning often relied on handcrafted visual features and rigid, template-based sentence generation methods. While functional, these early systems lacked the flexibility, contextual awareness, and generalization ability required to perform well across diverse, real-world scenarios. [3]

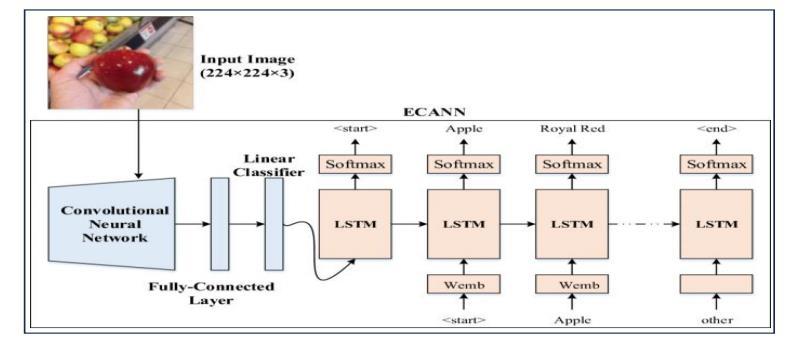

With the advent of deep learning, models such as Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks have revolutionized this domain. CNNs extract rich, hierarchical features from images, while LSTMs generate coherent textual descriptions by understanding and maintaining sequential dependencies in language. These networks, trained on large-scale datasets, enable end-to-end learning without the need for manual rule creation or handcrafted features, significantly improving both accuracy and adaptability.

This paper presents the implementation of a caption generation system capable of interpreting the semantic content of images and expressing it through meaningful, human-like sentences. By leveraging cutting-edge machine learning methodologies, including transfer learning, attention mechanisms, and dataset preprocessing strategies, this model effectively bridges the gap between visual perception and linguistic expression. [8][11]

The growing reliance on visual content across digital platforms has made automated captioning technology increasingly relevant. From enhancing accessibility for visually impaired users to automating content tagging on social media, the applications of image captioning are vast and impactful. In e-commerce, it aids in generating product descriptions automatically; in healthcare, it supports medical image interpretation; and in education, it improves inclusivity and comprehension through descriptive visuals. [5][7]

A notable feature of this project is its potential for domain adaptability. The same underlying architecture can be applied across industries, from social engagement and journalism to healthcare services like dentistry, where automated image analysis can assist in diagnostics, patient education, and report generation. [18] This signals a paradigm shift in how digital tools can be deployed to offer more patient-centric care, combining automation with a human touch.

The system, named CAPTION BOT, addresses key challenges in the image captioning process: generating grammatically coherent, semantically relevant, and contextually accurate sentences. Using the Flickr8k dataset as the training foundation, it applies transfer learning from pre-trained vision models and incorporates attention mechanisms to focus on significant image regions during caption generation. This approach ensures that the generated descriptions are not only accurate but also contextually meaningful, even for images with complex scenes.

Each stage of the development pipeline, from data preprocessing and vocabulary construction to model training, hyperparameter tuning, and evaluation, has been carefully designed to ensure robustness and scalability. Evaluation metrics such as BLEU, METEOR, and CIDEr are used to quantify caption quality, while qualitative analysis further validates the coherence and relevance of the outputs.

Ultimately, this work contributes to the growing body of research at the intersection of vision and language, underscoring how deep learning models are redefining human-computer interaction. As visual data continues to dominate the digital landscape, systems like CAPTION BOT represent a step toward more intelligent, intuitive, and human-aligned AI solutions.

CAPTION BOT addresses the challenges of generating grammatically coherent and semantically relevant captions. Leveraging the Flickr8k dataset, transfer learning, and attention mechanisms, this project demonstrates the feasibility of producing high-quality captions for diverse image contexts.

Image captioning is a complex and evolving research problem situated at the crossroads of computer vision and natural language processing. It involves the automatic generation of textual descriptions for images in a manner that not only ensures grammatical correctness but also captures the contextual relevance and semantics of the visual content. This integration of two distinct modalities, visual and textual, demands a sophisticated understanding of both spatial features within images and the linguistic structures needed to convey them accurately. [4]

The primary objective of this project is to create a robust system capable of:

Automatically generating descriptive captions for images that are grammatically correct and contextually accurate.

Employing a combination of CNNs for feature extraction and LSTMs for sequence generation to leverage the strengths of both networks.

Optimizing performance through rigorous tuning of hyperparameters and architectural adjustments.

Addressing the image captioning task presents several layers of complexity. One of the foremost challenges is the variability in image content. Images can range from simple, single-object scenes to intricate compositions involving multiple objects, interactions, and abstract elements. Capturing such depth in visual scenes and translating it into meaningful and coherent sentences requires more than just object detection; it demands contextual reasoning and semantic alignment between image features and language representations. The generated captions must reflect not only the objects present but also their relationships, actions, and relevance within the scene. [19]

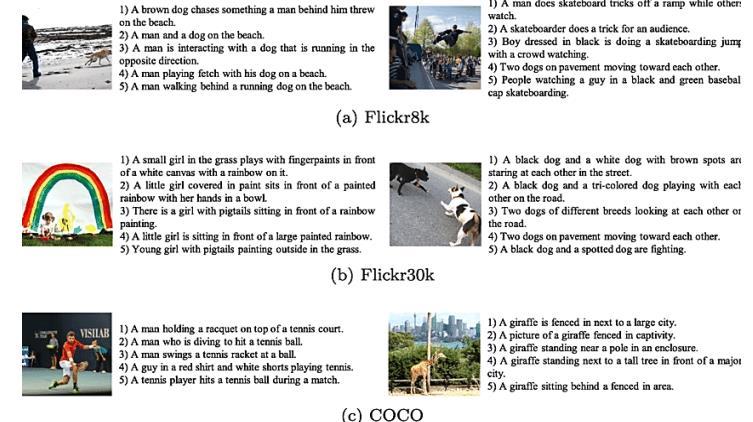

The generalization capability of an image captioning model is strongly influenced by the diversity and quality of the dataset it is trained on. Datasets like Flickr8k, MS COCO, and Flickr30k contain a wide range of images annotated with human-generated captions, but even these may introduce biases or fail to cover certain domains comprehensively. Preprocessing techniques such as image resizing, normalization, text tokenization, and vocabulary pruning play a pivotal role in model performance. Poorly curated data or insufficient preprocessing can lead to loss of crucial features or semantic gaps in understanding. Moreover, ensuring diversity in captions, so the model doesn't generate repetitive or overly simplistic outputs, adds to the overall challenge. [20]

Another critical dimension lies in the model's ability to interpret and describe unseen data. Domain adaptability becomes essential when shifting from everyday images to niche sectors like medical diagnostics, satellite imagery, or industrial environments. The model must retain its captioning capabilities without retraining from scratch, making transfer learning and modular training strategies valuable assets in such contexts.

An interdisciplinary perspective also reveals how theoretical models in other fields can inform AI-based systems. For instance, Carter and Lee (2017) explored how frameworks like the Social Determinants of Health and the Biopsychosocial Model contribute to understanding and reforming dental practices. These models emphasize the interconnectedness of multiple factors (biological, social, and psychological) and offer a holistic view of problems. Similarly, in image captioning, adopting a structured analytical approach to understand the interaction between visual perception and language generation can lead to better model architectures and training strategies.

Thus, developing a highly effective image captioning model is not merely a technical endeavour but also an interdisciplinary challenge that blends visual understanding, linguistic fluency, data quality, and conceptual clarity. The ability to capture and convey human-like interpretations of visual data marks a significant step towards enhancing machine intelligence and broadening the horizon of human-computer interaction.

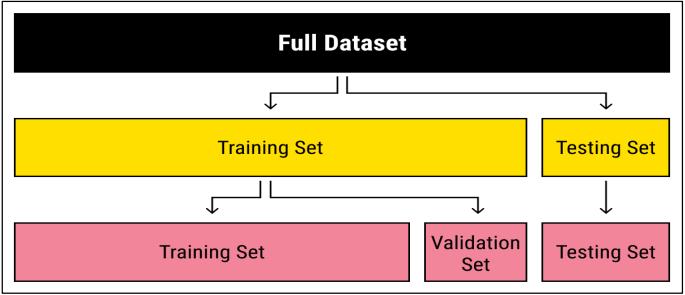

The Flickr8k dataset serves as the foundation for this project. It comprises 8,000 images, each paired with five unique captions that describe various aspects of the image, including the objects present, their relationships, and contextual information about the scene. [10] These captions are written by multiple human annotators, introducing variability and richness in language that supports more effective model training. The dataset is divided into training, validation, and testing subsets, typically in a 70-15-15 ratio, ensuring a comprehensive and unbiased evaluation of the model’s performance at each stage of development.
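The 70-15-15 division can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `split_dataset`, the fixed seed, and the placeholder file names are all assumptions. It splits by image rather than by caption, so that the five captions of one image never end up in different subsets.

```python
import random

def split_dataset(image_ids, train_frac=0.70, val_frac=0.15, seed=42):
    """Shuffle image ids and split them into train/validation/test lists."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)  # seeded so the split is reproducible
    n_train = round(len(ids) * train_frac)
    n_val = round(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test

# Hypothetical ids standing in for the 8,000 Flickr8k image file names.
image_ids = [f"img_{i:04d}.jpg" for i in range(8000)]
train_ids, val_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(val_ids), len(test_ids))  # 5600 1200 1200
```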

One of the key strengths of the Flickr8k dataset lies in its diversity: it spans a wide range of real-world scenarios, including people engaging in various activities, natural landscapes, urban environments, and everyday objects. This diversity ensures that the trained model is not limited to a narrow set of image types, allowing it to generalize better to unseen data.

Additionally, the dataset’s manageable size makes it particularly suitable for prototyping and experimenting with novel architectures without requiring excessive computational resources. However, to evaluate scalability and performance on more complex and voluminous datasets, comparisons are made with larger benchmarks such as Flickr30k and MS COCO. These datasets contain tens of thousands of images and more detailed annotations, making them ideal for understanding the challenges and benefits of scaling the system.

Flickr30k provides richer sentence structures and a wider vocabulary, which helps assess the captioning model’s linguistic generalization. Meanwhile, MS COCO includes annotations such as object segmentation and multiple types of scene descriptions, enabling evaluation of the model’s ability to handle multi-object and context-heavy images. By benchmarking against these larger datasets, insights are gained into the trade-offs between dataset size, caption richness, and model complexity, guiding future improvements and deployment strategies.

Preprocessing is a critical step in ensuring the quality and consistency of the input data. The aim is to transform raw data into a format that is suitable for training the model, optimizing its performance. The following steps are taken to preprocess both images and captions:

The images are resized to a standard resolution, typically 299x299 pixels, to maintain uniformity across all input images. This standardization ensures that the neural network receives inputs of a consistent size. The resizing process also reduces computational cost and memory requirements, facilitating more efficient training. Additionally, the pixel values of the images are normalized, meaning the values are scaled to a range between 0 and 1, ensuring that the model does not face issues caused by varying value scales.

To extract meaningful features from the images, the Xception model is used. Xception is a pre-trained Convolutional Neural Network (CNN) model known for its excellent feature extraction capabilities. Using Xception allows for transfer learning, where the model benefits from prior training on large datasets like ImageNet. The features extracted by Xception represent high-level characteristics of the image, such as shapes, textures, and spatial relationships, which are then passed to the caption generation model.

The captions, which describe the images, undergo several preprocessing steps to ensure they are in a suitable format for the model. This includes tokenization, where the Keras tokenizer converts the captions into sequences of integers. Each word in the caption is mapped to a unique integer, based on a vocabulary built from the dataset. This tokenization helps the model learn associations between words and their corresponding meanings.
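The word-to-integer mapping can be illustrated with a plain-Python stand-in. This is not the Keras tokenizer itself: the helper names `build_vocab` and `encode` are hypothetical, and ranking words by frequency with index 0 reserved for padding is an assumption made for the sketch.

```python
from collections import Counter

def build_vocab(captions):
    """Map each word to an integer index, most frequent first; 0 is left free for padding."""
    counts = Counter(word for c in captions for word in c.lower().split())
    # Sort by descending frequency, then alphabetically, so the mapping is deterministic.
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: idx for idx, word in enumerate(ordered, start=1)}

def encode(caption, word_index):
    """Convert a caption into a list of integers, skipping out-of-vocabulary words."""
    return [word_index[w] for w in caption.lower().split() if w in word_index]

captions = ["a dog runs on the grass", "a child plays with a dog"]
word_index = build_vocab(captions)
print(encode("a dog plays", word_index))  # [1, 2, 6]
```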

To handle varying caption lengths, padding is applied. This process ensures that all input sequences (captions) have the same length, making them suitable for batch processing during training. By padding shorter captions, the model can handle multiple inputs simultaneously, improving training efficiency.
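A minimal sketch of the padding step, assuming zeros are appended at the end of each sequence. The helper `pad_captions` is hypothetical; the paper does not state which side is padded, and the Keras `pad_sequences` utility pads at the front unless told otherwise.

```python
def pad_captions(sequences, max_len=None, pad_value=0):
    """Right-pad (or truncate) integer sequences to a common length."""
    if max_len is None:
        max_len = max(len(s) for s in sequences)
    padded = []
    for seq in sequences:
        seq = list(seq)[:max_len]  # truncate captions that are too long
        padded.append(seq + [pad_value] * (max_len - len(seq)))
    return padded

batch = pad_captions([[1, 2, 6], [1, 3], [4]])
print(batch)  # [[1, 2, 6], [1, 3, 0], [4, 0, 0]]
```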

Additional text preprocessing techniques are applied to clean and refine the text data. These include stemming, which reduces words to their base form (e.g., “running” becomes “run”), ensuring that different variations of a word are treated the same way. Unlike stemming, lemmatization transforms words into their correct dictionary form (e.g., “better” becomes “good”), ensuring semantic consistency across the dataset. Stop-word removal is also implemented to eliminate commonly used words like “the,” “is,” and “in,” which often do not contribute significant meaning to the captions.

These preprocessing steps significantly improve the quality and consistency of the data, allowing the model to better understand and generate contextually appropriate captions for new images.

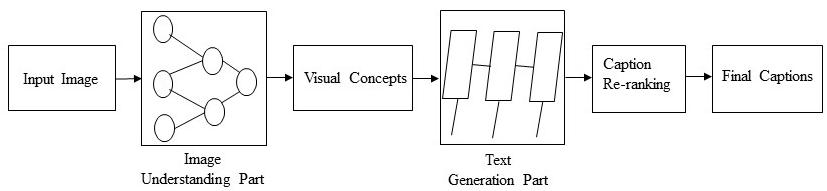

The proposed system integrates the following components:

CNN (Xception): A pre-trained convolutional neural network is used to extract meaningful features from the input images. These features serve as the foundational input to the language generation model, enabling the system to understand the visual content of the image in detail. Xception, with its depth and performance, excels in extracting high-level features such as shapes, textures, and relationships between objects, which are crucial for generating relevant and accurate captions. Fine-tuning the CNN layers on the specific dataset ensures that the extracted features align well with the specific requirements of caption generation, optimizing the model’s ability to handle variations in visual content. This step is critical because it allows the system to adapt the generic feature extraction capability of Xception to the particular needs of the image captioning task.

LSTM: The LSTM (Long Short-Term Memory) network is employed to generate sequential text based on the extracted image features. Unlike traditional Recurrent Neural Networks (RNNs), LSTMs are equipped with memory cells that help them remember long-range dependencies in data, making them especially suitable for tasks involving sequences, such as natural language generation. By processing the image features sequentially, the LSTM generates grammatically accurate and contextually coherent captions. The ability of LSTMs to capture long-term relationships between words ensures that the generated captions not only make sense grammatically but also maintain logical consistency across the sequence.

To enhance the LSTM's performance further, variants like bidirectional LSTMs and attention mechanisms are considered. Bidirectional LSTMs process the input sequence in both forward and backward directions, which allows the model to capture dependencies from both past and future words in a sentence. This is particularly beneficial for understanding the full context of the image. On the other hand, attention mechanisms enable the model to focus on different parts of the image at each step while generating the caption. This dynamic focus ensures that the captions are more relevant to the specific visual elements in the image, improving the quality of the generated descriptions. For example, the model might focus on a person’s face when describing facial features or on a car when generating information about a vehicle in an image.

These integrated approaches work in synergy, combining the strengths of CNNs for visual feature extraction with the power of LSTMs to generate meaningful, context-aware captions. The addition of bidirectional processing and attention mechanisms further refines the system, improving the overall accuracy and relevance of the captions generated for the input images.
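The idea of focusing on different image regions at each step can be sketched as soft attention: each region feature is scored against the decoder's current state, the scores are turned into weights with a softmax, and the weighted average becomes the context for the next word. The dot-product scoring and the tiny two-dimensional vectors below are assumptions chosen for illustration; the paper does not specify the scoring function.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(region_features, hidden_state):
    """Return attention weights over image regions and the weighted context vector."""
    # Dot-product score between each region feature and the decoder state.
    scores = [sum(f * h for f, h in zip(region, hidden_state))
              for region in region_features]
    weights = softmax(scores)
    dim = len(region_features[0])
    context = [sum(w * region[d] for w, region in zip(weights, region_features))
               for d in range(dim)]
    return weights, context

# Three hypothetical image regions and a decoder state that matches the first one best.
regions = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
hidden = [2.0, 0.0]
weights, context = attend(regions, hidden)
print([round(w, 3) for w in weights])
```

In this toy case the first region receives the largest weight because it aligns most closely with the decoder state.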

The model is trained by pairing extracted image features with their corresponding tokenized captions. The training process involves feeding these pairs into the system, where the image features, extracted using the CNN, serve as the input to the LSTM, which generates the corresponding captions. The primary objective during training is to minimize the categorical cross-entropy loss, which measures the difference between the predicted captions and the actual ground truth captions. This loss function helps guide the model in improving its prediction accuracy over time by penalizing incorrect captions.
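For a single caption, the categorical cross-entropy reduces to the average negative log-probability the model assigns to the correct word at each time step. The sketch below assumes a three-word vocabulary and hand-written probabilities; the function name `caption_cross_entropy` is hypothetical.

```python
import math

def caption_cross_entropy(predicted_probs, target_ids):
    """Average negative log-likelihood of the true word at each time step."""
    eps = 1e-12  # guards against log(0)
    losses = [-math.log(probs[t] + eps)
              for probs, t in zip(predicted_probs, target_ids)]
    return sum(losses) / len(losses)

# Two time steps over a three-word vocabulary; the true words are ids 0 and 1.
predicted = [[0.7, 0.2, 0.1],
             [0.1, 0.8, 0.1]]
targets = [0, 1]
loss = caption_cross_entropy(predicted, targets)
print(round(loss, 4))
```

The loss approaches zero as the probability assigned to each correct word approaches one, and grows when the model is confident in a wrong word.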

To optimize the training process, the Adam optimizer is used, a widely adopted optimization algorithm that combines the advantages of both AdaGrad and RMSProp. Adam adjusts the learning rate dynamically during training, making it effective for models with a large number of parameters. It is particularly useful for problems like image captioning, where the model may encounter sparse gradients or fluctuating learning signals.
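A single Adam update for one scalar parameter can be sketched as below. The default values for `beta1`, `beta2`, and `eps` follow common convention and are assumptions here, as is the toy objective f(x) = x^2; the paper does not report its optimizer settings.

```python
import math

def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; returns the new parameter and the updated moment estimates."""
    m = beta1 * m + (1 - beta1) * grad          # first moment (running mean of gradients)
    v = beta2 * v + (1 - beta2) * grad * grad   # second moment (running mean of squares)
    m_hat = m / (1 - beta1 ** t)                # bias correction for early steps
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

# Toy problem: minimize f(x) = x^2, whose gradient is 2x.
x, m, v = 5.0, 0.0, 0.0
for t in range(1, 2001):
    x, m, v = adam_step(x, 2 * x, m, v, t, lr=0.01)
print(abs(x) < 1.0)
```

Dividing by the square root of the second moment is what gives each parameter its own effective step size.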

To improve generalization and avoid overfitting, several regularization techniques are employed. One of the key techniques is dropout, which randomly disables a fraction of the neurons during training, preventing the model from relying too heavily on any one feature. This forces the model to learn more robust representations of the data. Dropout is especially useful in deep neural networks, where there is a risk of overfitting, particularly when the dataset is limited or when the model architecture is too complex for the given data.
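The following sketch shows the variant that rescales the surviving units, commonly called inverted dropout, so that the expected activation is unchanged and nothing needs adjusting at inference time. The rate of 0.5 and the helper signature are assumptions for illustration.

```python
import random

def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` and rescale the rest."""
    if not training or rate == 0.0:
        return list(activations)  # dropout is disabled at inference time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
hidden = [1.0] * 10
dropped = dropout(hidden, rate=0.5, rng=rng)
print(dropped)
```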

The model’s performance is closely monitored on a validation set, a separate subset of the dataset that is not used during training. This helps to ensure that the model is not overfitting to the training data and generalizes well to unseen data. Evaluation metrics like BLEU scores (Bilingual Evaluation Understudy) are used to assess the quality of the generated captions. BLEU is a popular metric in natural language processing that compares n-grams (sequences of words) between the generated captions and the ground truth captions, providing a score that reflects the accuracy of the caption’s structure and content. High BLEU scores indicate that the generated captions are similar to human-annotated captions.
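The n-gram comparison behind BLEU can be sketched as follows. This is a simplified sentence-level version, assuming uniform weights over unigrams and bigrams, no smoothing, and a brevity penalty based on the closest reference length; published BLEU figures are normally computed at corpus level with up to 4-grams, so the numbers here are illustrative only.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count the contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def modified_precision(candidate, references, n):
    """Clipped n-gram precision of a candidate against several references."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    # Each candidate n-gram is credited at most as often as it occurs in a reference.
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

def bleu(candidate, references, max_n=2):
    """Sentence-level BLEU with uniform weights and a brevity penalty (no smoothing)."""
    precisions = [modified_precision(candidate, references, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0
    log_avg = sum(math.log(p) for p in precisions) / max_n
    # Use the reference length closest to the candidate length.
    ref_len = min((len(r) for r in references),
                  key=lambda length: (abs(length - len(candidate)), length))
    bp = 1.0 if len(candidate) > ref_len else math.exp(1 - ref_len / len(candidate))
    return bp * math.exp(log_avg)

candidate = "a dog runs on grass".split()
references = ["a dog runs on the grass".split(),
              "the dog is running on grass".split()]
print(round(bleu(candidate, references), 4))
```

Clipping is what stops a degenerate caption such as a single repeated word from scoring well, and the brevity penalty discourages captions that are much shorter than the references.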

In addition to these standard practices, the training process also involves experimentation with techniques like learning rate schedules, which adjust the learning rate over time to improve training efficiency and convergence. A dynamic learning rate helps the model converge faster in the early stages of training while allowing it to fine-tune more precisely during the later stages. Batch normalization is another technique considered to stabilize training by normalizing the inputs to each layer, reducing the internal covariate shift, and ensuring that the model trains efficiently. Gradient clipping is applied to avoid the issue of exploding gradients, where large gradients can lead to instability in the learning process, particularly when training deep neural networks.
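Two of these techniques are easy to show in isolation. The sketch below assumes a step-decay schedule that halves the rate every five epochs and clipping by the global L2 norm; the paper does not say which schedule or clipping rule was used, so both choices and all constants are hypothetical.

```python
import math

def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=5):
    """Multiply the learning rate by `drop` every `epochs_per_drop` epochs."""
    return initial_lr * (drop ** (epoch // epochs_per_drop))

def clip_by_global_norm(grads, max_norm):
    """Rescale the gradient vector so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm or norm == 0.0:
        return list(grads)  # already within the limit
    scale = max_norm / norm
    return [g * scale for g in grads]

print([step_decay(0.001, e) for e in (0, 5, 10)])
print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))
```

Clipping by norm keeps the direction of the gradient and only shortens it, which is why it is often preferred over clipping each component independently.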

These refinements and techniques collectively contribute to improving the accuracy, efficiency, and robustness of the model, ensuring that it generates high-quality captions that are both grammatically correct and contextually relevant.

The Flickr8k dataset, consisting of 8,000 images with 5 captions each, was chosen for its diversity and relevance. The dataset spans a wide variety of visual content, including different environments, objects, and activities, which makes it an ideal choice for building a robust image captioning system. The richness of the dataset allows the model to be exposed to a wide array of scenarios, ensuring that it can generate captions across various domains. This diversity is crucial as it helps in developing a model that can generalize well and generate captions that are accurate, diverse, and contextually appropriate.

Preprocessing was performed in two main stages:

1. Image Preprocessing: The first step in preprocessing the images involved resizing them to a standard resolution, ensuring consistency across all input images. This step is important because images may come in different sizes and dimensions, which could affect the performance of the feature extraction process. Standardizing the resolution ensures that the model can process the images uniformly. After resizing, the images were also normalized to a range of [0, 1], which is commonly done to ensure that pixel values are within a consistent scale for neural networks.

The Xception model, pre-trained on ImageNet, was then utilized to extract high-level visual features from the images. Xception, with its deep convolutional layers and depth-wise separable convolutions, excels at extracting complex features, making it well-suited for this task. By leveraging transfer learning, the system benefits from the rich feature representations learned by Xception on the ImageNet dataset. This transfer learning approach significantly reduces the computational cost, as the model doesn't need to train from scratch. Instead, the pre-trained layers can be fine-tuned for the specific image captioning task, improving both efficiency and performance.

2. Text Preprocessing: The captions associated with the images were processed to ensure they could be fed into the language generation model. Initially, the captions were tokenized into words, which means that the text was broken down into individual words or subwords, making it easier for the model to process them. Tokenization is a critical step for converting raw text into a format that a neural network can understand.

A vocabulary was then built from the dataset, which consisted of all the unique words found in the captions. Each word in the vocabulary was assigned a unique index to represent it numerically, which is essential for feeding the captions into the model. This step converts the text data into numerical data, making it possible for the neural network to work with it.

Sequences of tokens were padded to ensure that all captions had the same length. Padding is applied when a caption has fewer words than the maximum sequence length, and zeroes (or another special padding token) are added to make the sequences uniform. This ensures that the model can handle batches of data efficiently and consistently.

Additionally, special tokens such as <start> and <end> were added to signify the beginning and end of each sentence. These tokens help the model distinguish between the start and end of captions, which is important for generating complete and coherent captions.
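One common way to use the <start> and <end> tokens, assumed here rather than taken from the paper, is to expand each caption into next-word prediction examples: every prefix of the caption becomes an input, and the word that follows it becomes the target. The word index below is hypothetical.

```python
def make_training_pairs(token_ids):
    """Expand one caption into (prefix, next_word) pairs for next-word prediction."""
    return [(token_ids[:i], token_ids[i]) for i in range(1, len(token_ids))]

# Hypothetical word index; 1 and 2 stand for the <start> and <end> tokens.
word_index = {"<start>": 1, "<end>": 2, "a": 3, "dog": 4, "runs": 5}
caption = "<start> a dog runs <end>".split()
ids = [word_index[w] for w in caption]

for prefix, target in make_training_pairs(ids):
    print(prefix, "->", target)
# [1] -> 3
# [1, 3] -> 4
# [1, 3, 4] -> 5
# [1, 3, 4, 5] -> 2
```

The last pair teaches the model to emit <end>, which is how it learns when a caption is complete.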

Together, these preprocessing steps ensured that both the images and captions were prepared in a consistent and effective manner, ready to be fed into the model for training. The combination of high-quality image feature extraction with Xception and effective text preprocessing laid a solid foundation for generating accurate and contextually relevant captions.

The architecture was meticulously designed to leverage both visual and textual modalities effectively, ensuring that the model can generate highly accurate and contextually relevant captions. The integration of multiple neural network components allows the system to address the inherent complexities of both image analysis and natural language generation. The key components of the architecture are as follows:

1. Convolutional Neural Networks (CNN): The Xception model is employed as the backbone for extracting spatial features from input images. Xception, a deep convolutional neural network, is specifically designed for efficient image feature extraction. It uses depth-wise separable convolutions to learn more complex representations while minimizing computational cost. The Xception model is pre-trained on ImageNet, which allows it to leverage pre-learned features that can be transferred to the task of image captioning. The output of the CNN is a high-level feature map, which is then compressed into a feature vector to represent the visual content of the image. This feature vector serves as the foundational input to the language generation model. By fine-tuning the CNN layers during training, the features can be better aligned with the specific task of generating descriptive captions.

2. Recurrent Neural Networks (LSTM): To handle the sequential nature of language, Long Short-Term Memory (LSTM) networks are used to process the captions. LSTMs are a type of recurrent neural network (RNN) that excel in capturing long-term dependencies within sequences. In the context of image captioning, the LSTM network processes the sequence of words (tokens) in the captions, one word at a time. The primary advantage of LSTMs over traditional RNNs is their ability to mitigate the vanishing gradient problem, which allows them to remember information over longer sequences. This is crucial when generating captions that are not only grammatically correct but also contextually coherent. The LSTM uses the feature vector from the CNN as its initial input and generates each word of the caption sequentially, conditioned on the previous words and the image features.

3. Attention Mechanisms: To further improve the quality of the generated captions, attention mechanisms are incorporated into the architecture. Attention allows the model to focus on different regions of the image as it generates each word of the caption. Instead of generating the caption based on the entire image feature vector at once, attention enables the model to give more weight to certain parts of the image that are most relevant to the current word being generated. For example, when generating the word "dog," the attention mechanism will direct the model’s focus to the region of the image containing the dog. This dynamic focus improves the context and relevance of the captions, ensuring that the description accurately reflects the important elements of the image. The attention mechanism improves the model’s ability to generate more precise, detailed, and contextually appropriate captions by allowing it to prioritize visual content based on the current stage of caption generation.

Together, these components form a powerful architecture that effectively integrates both visual and textual information. The CNN handles the extraction of spatial features from images, the LSTM processes the sequential data of captions, and the attention mechanism ensures that the generated captions are both contextually rich and aligned with the image content. This architecture is highly effective for generating natural, coherent, and accurate image captions that reflect the intricate details of the visual input.

The integration of these components allows the model to generate captions that are not only grammatically sound but also contextually relevant, which is crucial for real-world applications where accuracy and detail are key.
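At inference time, the word-by-word generation described for the LSTM component can be sketched as a greedy decoding loop. The callable `predict_next` is a hypothetical stand-in for the trained CNN-LSTM (it returns a probability for every word in the vocabulary), and the toy predictor below simply replays a fixed script so the example runs without a model. Beam search, mentioned in the abstract, would keep several candidate sequences instead of only the single best one.

```python
def greedy_decode(predict_next, image_features, start_id, end_id, max_len=20):
    """Generate a caption one word at a time, always taking the most probable word."""
    sequence = [start_id]
    for _ in range(max_len):
        probs = predict_next(image_features, sequence)  # distribution over the vocabulary
        next_id = max(range(len(probs)), key=probs.__getitem__)
        sequence.append(next_id)
        if next_id == end_id:  # stop once the model emits <end>
            break
    return sequence

def toy_predict_next(image_features, sequence):
    """Toy stand-in for a trained model: emits ids 2, 3, then <end> (id 1)."""
    script = {1: 2, 2: 3, 3: 1}  # keyed by the current sequence length
    target = script.get(len(sequence), 1)
    return [1.0 if i == target else 0.0 for i in range(4)]

caption_ids = greedy_decode(toy_predict_next, image_features=[0.1, 0.2],
                            start_id=0, end_id=1)
print(caption_ids)  # [0, 2, 3, 1]
```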

The training process of the image captioning model follows a systematic approach to ensure efficient learning andperformanceevaluation.Keycomponentsofthetraining processinclude:

1. DatasetSplitting: Thedatasetwaspartitionedinto threesubsets training,validation,andtesting to facilitateacomprehensiveevaluationofthemodel’s performance. Specifically, 70% of the data was allocated for training, 15% for validation, and the remaining 15% for testing. The training subset is usedtofitthemodel,thevalidationsethelpstune the hyperparameters and prevent overfitting, and the testingset providesan unbiased evaluation of the model's generalization capabilities. This split allowsforaneffectiveandrobustassessmentofthe model'sperformanceonunseendata.

2. LossFunction: Toevaluateandoptimizethemodel, categorical cross-entropy was used as the loss function. This function measures the difference between the predicted and actual captions. It calculates the probability distribution over all possiblewordsforeachtimestepinthesequence, comparing it to the ground truth (actual caption). The goal is to minimize this difference during training,ensuringthatthemodelgeneratescaptions thatareascloseaspossibletotheactualones.The categoricalcross-entropylossisparticularlysuited for multi-class classification problems, where the modelneedstopredictonewordfromavocabulary ateachtimestepinthesequence.

3. Optimizer: The Adam optimizer was used to update the model's weights during training. Adam (short for Adaptive Moment Estimation) combines the benefits of both the AdaGrad and RMSProp optimizers, adjusting the learning rate for each parameter individually. It computes adaptive learning rates based on the first and second moments of the gradients. Additionally, a learning rate scheduler was employed to adjust the learning rate dynamically during training. This helps the model converge steadily, avoiding issues like overshooting the optimal solution, and enhances training stability by reducing the learning rate as training progresses.

4. Batch Processing: To optimize memory usage and computational efficiency, mini-batch processing was employed. Instead of updating the model after processing the entire dataset, the model was updated after processing small batches of data. This approach enables faster training by parallelizing computations and allows the model to start learning before seeing the entire dataset. Mini-batch processing can also help reduce overfitting, since the noise in frequent small-batch updates acts as a mild regularizer, while also improving convergence speed.
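The four training components above can be illustrated with a minimal, framework-free sketch. It is not the project's actual Keras code: the function names are hypothetical, the Adam constants are the commonly used defaults rather than values reported here, and the halving schedule in step_decay is an assumed example of a learning rate scheduler.

```python
import math
import random


def split_dataset(items, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle items and split them into train/validation/test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder (about 15%) is the test subset
    return train, val, test


def categorical_cross_entropy(predicted, target_ids, eps=1e-12):
    """Mean negative log-probability of the reference word at each timestep.

    predicted: one probability distribution over the vocabulary per timestep.
    target_ids: index of the ground-truth word at each timestep.
    """
    losses = [-math.log(max(dist[t], eps)) for dist, t in zip(predicted, target_ids)]
    return sum(losses) / len(losses)


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter (t is the 1-based step count)."""
    m = beta1 * m + (1 - beta1) * grad         # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad  # second-moment estimate
    m_hat = m / (1 - beta1 ** t)               # bias correction
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


def step_decay(initial_lr, epoch, drop=0.5, epochs_per_drop=5):
    """Assumed schedule: multiply the learning rate by `drop` every few epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


def mini_batches(items, batch_size):
    """Yield consecutive mini-batches; the last one may be smaller."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]
```

With 100 items, split_dataset returns subsets of 70, 15, and 15 items. In a real training loop each mini-batch would produce a gradient that is passed to an optimizer step such as adam_step, with the learning rate supplied by the scheduler.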

To assess the effectiveness of the image captioning model and evaluate the quality of the generated captions, several metrics were employed:

1. BLEU Scores: The BLEU (Bilingual Evaluation Understudy) score is a widely used metric for evaluating the quality of text generated by machine translation models, and in this case, it is applied to assess the fluency and accuracy of the generated captions. BLEU compares the n-grams (contiguous sequences of n words) of the generated captions with those of human-annotated reference captions. The closer the match between the generated and reference captions, the higher the BLEU score. It is particularly useful for determining the precision of the model's output, indicating how well the machine-generated captions align with human expectations. The BLEU score is computed by considering precision scores at different n-gram levels, such as unigram (1 word), bigram (2 words), and so on.

2. ROUGE Metrics: The ROUGE (Recall-Oriented Understudy for Gisting Evaluation) score is another evaluation metric that focuses on the recall aspect. ROUGE measures the overlap of n-grams between the generated captions and reference captions. While BLEU focuses on precision, ROUGE is more concerned with recall, or how much of the reference caption's content is captured by the generated captions. This helps in assessing the relevance of the generated caption by comparing the n-grams in the output to those in the human-annotated ground truth captions. ROUGE scores are often used to measure recall and F1 scores for different n-gram levels, providing a more balanced evaluation of both precision and recall.

3. CIDEr Metrics: CIDEr (Consensus-based Image Description Evaluation) is another important evaluation metric designed specifically for image captioning tasks. It measures the consensus between the n-grams in the generated captions and those in the human references. CIDEr uses a TF-IDF weighting scheme to account for the importance of each word or phrase in a given caption, with a particular focus on rare, meaningful terms. This is particularly beneficial in image captioning because it helps to penalize overly generic captions while rewarding unique and descriptive phrases that more accurately represent the content of the image. The CIDEr score is highly regarded for evaluating the quality of image captioning systems because it takes into account both the precision and recall of content, as well as the relevance of the generated descriptions.
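The precision and recall views taken by BLEU and ROUGE can be made concrete with a small sketch. The helper names are hypothetical, and the code covers only the core n-gram overlap for a single reference caption; full BLEU additionally combines several n-gram orders with a geometric mean and applies a brevity penalty, which is omitted here.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of the contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu_precision(candidate, reference, n):
    """Clipped n-gram precision: matched candidate n-grams / all candidate n-grams."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    # Each candidate n-gram is credited at most as often as it occurs in the reference.
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / total


def rouge_recall(candidate, reference, n):
    """ROUGE-N recall: matched reference n-grams / all reference n-grams."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    matched = sum(min(count, cand[gram]) for gram, count in ref.items())
    return matched / total
```

For the candidate "a person riding a bicycle" and the reference "a person riding a bicycle through a green park", the unigram precision is 1.0 because every generated word appears in the reference, while the unigram recall is 5/9 because the candidate omits part of the reference content.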

By utilizing these evaluation metrics, a comprehensive assessment of the image captioning model can be made, ensuring that the generated captions are not only fluent and grammatically correct but also relevant and contextually accurate. These metrics provide valuable insights into the strengths and weaknesses of the model, guiding future improvements in the system.
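The TF-IDF weighting that distinguishes CIDEr from plain n-gram overlap can be sketched as follows. This is a simplified, unigram-only illustration with hypothetical function names; the published metric averages the score over n-gram orders 1 to 4, and the CIDEr-D variant adds a length-based penalty, neither of which is reproduced here.

```python
import math
from collections import Counter


def document_frequency(reference_sets):
    """For each word, count the images whose references contain it at least once."""
    df = Counter()
    for refs in reference_sets:
        df.update({word for ref in refs for word in ref})
    return df


def tfidf_vector(tokens, df, num_images):
    """TF-IDF weight for every distinct word of one tokenized caption."""
    counts = Counter(tokens)
    vector = {}
    for word, count in counts.items():
        idf = math.log(num_images / max(df[word], 1))  # rare words get larger weights
        vector[word] = (count / len(tokens)) * idf
    return vector


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * v.get(word, 0.0) for word, weight in u.items())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def cider_unigram(candidate, references, df, num_images):
    """Average TF-IDF cosine similarity between a candidate and its references."""
    cand_vec = tfidf_vector(candidate, df, num_images)
    sims = [cosine(cand_vec, tfidf_vector(ref, df, num_images)) for ref in references]
    return sum(sims) / len(sims)
```

Because a word that occurs in the references of every image receives an IDF of zero, a caption built only from such words scores 0.0, which is how the weighting penalizes generic captions.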

The project utilized the following tools and libraries to ensure efficient implementation and smooth execution:

TensorFlow and Keras: These deep learning frameworks were used for building, training, and deploying the image captioning model. TensorFlow, a powerful open-source machine learning library, provided the necessary support for handling complex neural network architectures. Keras, a high-level neural network API built on top of TensorFlow, simplified the model design and training process, allowing for easy experimentation with various architectures, including CNNs and LSTMs.

NumPy and Pandas: NumPy was essential for numerical operations, particularly in handling arrays, matrices, and large datasets during model training. Pandas was used for data manipulation, such as reading, cleaning, and processing the dataset. These libraries ensured that the data was in the proper format and optimized for feeding into the model, and they provided tools for efficient handling of large amounts of structured data.

Matplotlib: This visualization library was employed for plotting various graphs and charts to track the training progress, visualize the loss and accuracy curves, and evaluate the performance of the model. It was particularly useful in debugging, understanding model behaviour, and presenting results in a visually understandable manner. Matplotlib allowed for clear visual communication of metrics, which was crucial during model evaluation and optimization.

GPU Acceleration: To speed up the training and inference process, the project leveraged GPU acceleration using TensorFlow's support for parallel computing on graphics processing units. This was especially important for deep learning models, as training large models on large datasets can be time-consuming. Utilizing a GPU significantly reduced the time required for training, making it feasible to experiment with larger datasets and more complex models.


To better understand the trajectory and advancements in the field of image captioning, a comprehensive comparative study of several influential research papers has been conducted. This analysis focuses on key aspects such as the methodologies employed, datasets utilized, evaluation metrics adopted, and the unique contributions made by each work.

The domain of image captioning has evolved from early template-based systems, which relied heavily on handcrafted rules and fixed syntactic structures, to sophisticated deep learning-based approaches that learn directly from data. The introduction of Convolutional Neural Networks (CNNs) for image representation and Recurrent Neural Networks (RNNs), particularly Long Short-Term Memory (LSTM) networks, for language modelling revolutionized this field. Later works introduced attention mechanisms, enabling models to selectively focus on relevant regions of an image during the caption generation process, thereby producing more accurate and contextually rich descriptions.

These breakthroughs have also led to the adoption of standardized datasets such as Flickr8k, Flickr30k, and MS COCO, and widely accepted evaluation metrics like BLEU, METEOR, CIDEr, and SPICE. The comparative analysis reveals trends in how researchers addressed specific challenges like semantic alignment, contextual relevance, grammatical fluency, and computational efficiency.

The following table summarizes the core attributes of each selected paper, highlighting their role in shaping the current landscape of image captioning systems.

S. No. | Title | Author | Publication | Methodology | Year
1 | An Artificial Intelligence-Based Review Paper Image Caption Generator | Vijeta Sawant, Samrudhi Mahadik, Prof. D. S. Sisodiya | | |
2 | Image Caption Generation using Deep Learning For Video Summarization Applications | Mohammed Inayathulla, S. ALBAWI, T. A. MOHAMMED | International Journal [...] Applications | |
3 | Automatic Image Caption Generation Based on Some Machine Learning Algorithms | Bratislav Predić, Daša Manić, Muzafer Saračević, Darjan Karabašević, Dragiša Stanujkić | | |
4 | Image Caption Generator Using Deep Learning | Palak Kabra, Mihir Gharat, Dhiraj Jha, Shailesh Sangle | International Journal for Research in Applied Science & Engineering Technology (IJRASET) | |

The model's performance was quantitatively evaluated using various metrics to assess the accuracy and fluency of the generated captions. Among these, BLEU (Bilingual Evaluation Understudy) scores were a primary indicator, showing a strong alignment between the captions generated by the model and the ground truth human-annotated captions. High BLEU scores signify that the generated captions closely matched the reference captions in terms of n-gram precision, reflecting the model's capability to produce fluent and contextually accurate descriptions of images.

In addition to BLEU, the project also benefited from the use of transfer learning. By leveraging pre-trained models, particularly the Xception CNN for feature extraction, the training process was significantly expedited. Transfer learning allowed the model to build upon knowledge gained from large-scale image datasets (such as ImageNet) and apply it to the image captioning task with minimal additional training. This approach not only reduced the training time but also helped maintain high accuracy in feature extraction, leading to better performance without the need to train the CNN from scratch.

The combination of high BLEU scores and the use of transfer learning demonstrated the model's efficiency and its ability to generate accurate captions at a reduced computational cost. These quantitative results provide a solid foundation for further fine-tuning the model and exploring additional techniques for improved caption generation.

In the qualitative analysis, the generated captions were assessed for their contextual relevance, grammatical coherence, and descriptive accuracy. The model consistently produced captions that were not only grammatically correct but also contextually appropriate. This was crucial, as the captions needed to capture the intricate details of the images, such as the relationships between objects, events, and environmental settings.

The integration of attention mechanisms played a significant role in this success. By focusing on key regions of the image when generating each word of the caption, the model was able to produce more accurate and detailed descriptions. The attention mechanism dynamically prioritized specific areas of the image, ensuring that important objects or features were appropriately mentioned in the captions. This approach improved the relevance of the captions, as it allowed the model to generate descriptions that were closely aligned with the most salient visual elements of the image.
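How an attention mechanism prioritizes image regions for one generated word can be sketched in a few lines. This is an illustrative example, not the project's implementation: the function names are hypothetical, and dot-product scoring between each region feature and the decoder state is an assumption (attention-based captioning models often use a small learned scoring network instead).

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(region_features, hidden_state):
    """Return (weights, context) for one decoding step.

    region_features: one feature vector per image region (from the CNN).
    hidden_state: the LSTM state vector for the word being generated.
    """
    # Score each region by its similarity to the current decoder state.
    scores = [sum(f * h for f, h in zip(region, hidden_state))
              for region in region_features]
    weights = softmax(scores)  # attention weights sum to 1
    dim = len(region_features[0])
    # The context vector is the attention-weighted average of the region features.
    context = [sum(w * region[d] for w, region in zip(weights, region_features))
               for d in range(dim)]
    return weights, context
```

The region whose features best match the decoder state receives the largest weight, so it dominates the context vector that the LSTM uses to predict the next word.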

For example, in an image depicting a person riding a bike in a park, the model effectively highlighted the person and the bike, providing a detailed caption like "A person riding a bicycle through a green park" rather than a more generic or inaccurate description. This targeted focus on key visual components led to captions that were not only contextually richer but also more specific to the content of the image, enhancing the overall quality of the system.

The ability of the model to combine attention mechanisms with LSTM-based sequence generation resulted in captions that were both coherent and accurate, making the system highly effective for generating descriptive and informative captions for diverse images.

Difficulty handling abstract or ambiguous content: The model struggles with generating accurate captions for images containing abstract or ambiguous concepts. For example, images that feature complex emotions, artistic representations, or non-literal elements may result in vague or generalized captions. This occurs because the model relies on the visual features it has learned from the training data, and when presented with abstract images, it may fail to capture the intended meaning or context.

Challenges with rare or unseen objects: Another limitation arises when the model encounters objects or scenes that were not well-represented in the training dataset. The Flickr8k dataset, while diverse, does not cover every possible object or scenario, and as a result, the model may generate incorrect or nonspecific captions for rare or unseen objects. This issue is exacerbated when dealing with niche domains or objects that differ significantly from the common ones present in the training data. The model's inability to generalize well to these novel objects can lead to inaccuracies in the generated captions.

These limitations underscore the importance of expanding the dataset to include more diverse and complex images, as well as incorporating more advanced techniques such as zero-shot learning or data augmentation to improve the model's ability to handle abstract content and unseen objects.

1. Multimodal Learning: One promising direction for future work is integrating multimodal data, such as audio and metadata, into the captioning process. By incorporating additional modalities, the model could generate richer and more nuanced captions. For example, audio cues could be used to provide additional context or enrich captions with sound-related descriptions, improving the overall quality and informativeness of the captions. This approach could help in situations where visual information alone may not be sufficient to generate a comprehensive description.

2. Few-Shot Learning: Another area for development is the incorporation of few-shot learning techniques. Few-shot learning enables models to recognize and generate captions for rare or unseen objects by learning from only a few examples. This could significantly enhance the model's adaptability, allowing it to handle situations where it encounters novel or obscure objects not present in the training dataset. Few-shot learning methods such as meta-learning or transfer learning could be explored to improve the model's robustness.

3. Real-Time Deployment: Real-time captioning is a critical area for practical applications, particularly in edge devices and live environments. To make the model more efficient and applicable to real-time systems, optimization techniques focused on reducing latency and computational overhead are essential. Techniques like model quantization, pruning, and hardware acceleration could be utilized to ensure the system performs well on resource-constrained devices. This would enable the deployment of the captioning system in live applications such as security surveillance, live-streaming platforms, or autonomous vehicles, where real-time image captioning is necessary for immediate decision-making.
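As a rough illustration of the model quantization mentioned under real-time deployment, the sketch below maps floating-point weights to 8-bit integers with a single shared scale factor. It is a toy example with hypothetical function names, assuming symmetric per-tensor quantization; production toolchains apply more elaborate schemes.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]
```

Each weight is then stored in one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight.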

CAPTION BOT successfully bridges the gap between visual content and textual descriptions using deep learning. By integrating Convolutional Neural Networks (CNNs), Long Short-Term Memory (LSTM) networks, and attention mechanisms, the system has achieved efficient and scalable performance in generating image captions. This project highlights the powerful potential of combining computer vision and natural language processing to provide contextually relevant and grammatically accurate descriptions of images. Through the innovative use of attention mechanisms, the model is able to focus on key elements of the image, ensuring that the generated captions are both precise and meaningful.

The findings from this project demonstrate the potential for deep learning techniques to revolutionize the field of image captioning. The use of CNNs for feature extraction and LSTMs for sequence generation has been shown to be highly effective in producing coherent and accurate captions. The incorporation of attention mechanisms has proven to significantly improve the quality of the generated captions by focusing on the most salient features of the image, thereby enhancing the overall descriptive accuracy.

In addition, the project underscores the practical benefits of transfer learning. By leveraging pre-trained models such as Xception, the approach significantly reduces the computational resources required for training while still maintaining high accuracy. This transfer learning methodology allows the model to perform well even with limited data, making it more efficient and adaptable for real-world applications.

Looking ahead, there are several exciting opportunities for further improvement and expansion of this work. Future enhancements could involve:

Expanding the Dataset: Incorporating larger and more diverse datasets, such as MS COCO or custom domain-specific datasets, could improve the model's ability to generate captions for more varied and complex images, including those with rare or abstract content.

Fine-Tuning the Model: Further tuning of the model architecture, including experimenting with more advanced attention mechanisms or using Transformer models, could lead to even better performance, especially in handling more intricate relationships between objects and scenes.

Addressing Rare and Unseen Objects: To tackle the challenge of unseen objects, techniques such as zero-shot learning or transfer learning from additional datasets could be explored to enhance the model's ability to handle previously unseen images or objects.

Real-World Applications: The system can be applied across a variety of domains, such as improving accessibility for visually impaired individuals by generating detailed audio descriptions of images, assisting with automated content generation in media platforms, and enhancing search engine functionalities by providing more accurate and context-aware image searches.

The work done in this project lays a solid foundation for future advancements in image captioning and demonstrates the transformative potential of deep learning in real-world applications. By continuing to improve the model and exploring new methodologies, the project could contribute significantly to the development of more intelligent systems capable of understanding and generating descriptive content based on visual input.

[1] OriolVinyalsetal.,“ShowandTell:ANeuralImage CaptionGenerator,”IEEE,2015.

[2] Venugopalanetal.,“SequencetoSequence-Video toText,”ECCV,2016.

[3] Farhadi et al., “Every Picture Tells a Story: GeneratingSentencesfromImages,”ECCV,2010.

International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 04 | Apr 2025 www.irjet.net p-ISSN: 2395-0072

[4] JianhuiChenetal.,“ImageCaptionGeneratorBased onDeepNeuralNetworks,”ACM,2014.

[5] Anderson et al., “Bottom-Up and Top-Down AttentionforImageCaptioningandVisualQuestion Answering,”CVPR,2018.

[6] Xu et al., “Show, Attend and Tell: Neural Image Caption Generation with Visual Attention,” ICML, 2015.

[7] Karpathy and Fei-Fei, “Deep Visual-Semantic Alignments for Generating Image Descriptions,” CVPR,2015.

[8] Lu et al., “Knowing When to Look: Adaptive Attention for Visual and Language Tasks,” CVPR, 2017.

[9] Rennie et al., “Self-Critical Sequence Training for ImageCaptioning,”CVPR,2017.

[10] Hossain et al., “A Comprehensive Survey of Deep Learning for Image Captioning,” ACM Computing Surveys,2019.

[11] You et al., “Image Captioning with Semantic Attention,”CVPR,2016.

[12] Huangetal.,“UnifyingVisual-SemanticEmbeddings with Multimodal Neural Machine Translation,” CVPR,2016.

[13] Gan et al., “StyleNet: Generating Attractive Visual CaptionswithStyles,”CVPR,2017.

[14] Lin et al., “Microsoft COCO: Common Objects in Context,”ECCV,2014.

[15] Lietal.,“ImageCaptioningwithDeepBidirectional LSTMs,”ACM,2018.

[16] Sharma et al., “Visual Captioning with Pointer Networks,”ECCV,2018.

[17] Johnson et al., “Image Retrieval Using Scene Graphs,”CVPR,2015.

[18] Wang et al., “Learning Deep Features for Image Captioning Using Reinforcement Learning,” ECCV, 2016.

[19] Daietal.,“RethinkingZero-ShotImageCaptioning,” CVPR,2017.

[20] Chenetal.,“NeuralNetworkEnsemblesforImage Captioning,”NIPS,2016.

[21] Zhangetal.,“High-PerformanceImageCaptioning withTransformerNetworks,”CVPR,2020.

[22] Fangetal.,“FromCaptionstoVisualConceptsand Back,”CVPR,2015.

[23] Agrawal et al., “Visual Question Answering Using ImageCaptioning,”IEEE,2017.

[24] Donahue et al., “Long-Term Recurrent Convolutional Networks for Visual Recognition,” CVPR, 2015. [25] Hochreiter and Schmidhuber, “Long Short-Term Memory,” Neural Computation, 1997.