International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 07 | Jul 2025 www.irjet.net p-ISSN: 2395-0072

Adwaid C. S.1, Bineeta S. Joseph2, Sneha Prasad B.3, Sneha S. Suresh4, Ms. Athira Sarath5

1,2,3,4 B.Tech student, Department of Computer Science and Engineering, Rajadhani Institute of Engineering and Technology, Kerala, India

5Professor, Department of Computer Science and Engineering, Rajadhani Institute of Engineering and Technology, Kerala, India ***

Abstract - This project develops an AI-driven shell interface that uses Natural Language Processing (NLP) to interpret and execute user commands written in plain language. By translating natural language into shell commands, it simplifies system operations and automates repetitive tasks, making shell interaction more user-friendly and productive. The project uses a command-line interface (CLI) as the front end, eliminating the need for a graphical user interface (GUI), while Python serves as the back-end programming language. The system offers three main features. Natural Language Command Execution enables users to interact with the shell using plain language, making it accessible to all skill levels. Task Automation simplifies repetitive operations by understanding and executing multi-step commands. Customizable Commands allow users to create and modify custom commands for personalized workflows.

Key Words: AI-powered shell, natural language processing (NLP), voice assistant, command automation, system control, Python, human-computer interaction, speech recognition, intelligent shell interface, terminal automation.

In the rapidly evolving landscape of computing, the command-line interface (CLI) remains a fundamental tool for developers, system administrators, and power users. Despite its efficiency and precision, the CLI often presents a steep learning curve due to the necessity of memorizing complex commands and their specific syntax. Our project, "AI-Powered Shell Interaction Using Natural Language and System Automation," aims to bridge this gap by integrating artificial intelligence (AI) and natural language processing (NLP) into shell environments. This integration enables users to interact with the system using everyday language, thereby enhancing usability and accessibility. The project combines three technologies: Artificial Intelligence (AI), Natural Language Processing (NLP), and system automation. By leveraging AI and NLP, the project allows users to interact with a computer system using natural language commands, making it easier and more efficient to execute complex tasks without needing to understand traditional shell scripting. The core idea is to build a system that interprets natural language input, converts it into executable shell commands, and automates a variety of system management tasks such as file handling, process management, and system monitoring. Traditional CLI usage demands an understanding of various commands, flags, and scripting languages. This requirement can be daunting for newcomers and even for seasoned professionals who operate across multiple operating systems, each with its unique command structure.

Traditional command-line interfaces (CLIs), such as Bash and PowerShell, remain core tools for system administrators and developers. These interfaces provide powerful control over the operating system through precise commands. However, they require users to memorize complex syntax, flags, and scripting rules. This learning curve often creates a barrier for beginners or users from non-technical backgrounds. Additionally, different operating systems follow varying command structures, making it difficult for users to switch or operate across platforms. The lack of flexibility in natural language interaction means users must know exact commands, and any error in spelling or format often leads to failure or unintended operations.

In conventional systems, routine tasks like file management, checking system status, or accessing internet tools are performed manually using specific shell commands. For example, users must know commands like ls for listing files, ifconfig for network information, or cal for calendar views. This rigid requirement forces users to learn command-line scripting even for simple operations. While powerful, this method is not intuitive, especially for users unfamiliar with terminal environments. The absence of natural language support and real-time automation makes such systems less efficient for modern use cases where flexibility and accessibility are critical.

Several AI-enhanced terminals and experimental tools have begun exploring the integration of natural language with system interfaces. Tools like Shell Genie suggest shell commands based on user input in plain English, while platforms like Warp Terminal offer command prediction and AI-powered suggestions. However, these tools often stop short of directly executing the commands and may lack deeper automation features. Most existing tools do not include voice output for accessibility or intelligent error handling, and many require internet access, limiting offline usage. While promising, these platforms often serve more as teaching aids or assistants than as fully autonomous, user-friendly shell environments.

A. Working

The AI-Powered Shell Interaction System is designed to bridge the gap between traditional command-line interfaces (CLIs) and modern, user-friendly communication methods using Artificial Intelligence (AI) and Natural Language Processing (NLP). Traditional shell environments require users to memorize and accurately type complex commands. This poses a significant challenge, especially for beginners or non-technical users. The proposed system overcomes this by enabling natural language-based interaction with the shell, thus making it accessible, intuitive, and efficient. The system follows a structured workflow involving several key phases: Login Phase, NLP Processing Phase, Command Execution Phase, and Verification Phase. These stages ensure that the user is authenticated, their input is understood correctly, tasks are executed reliably, and feedback is delivered effectively.

The Login Phase is the initial and most essential part of the proposed system, as it ensures the security and authentication of users before granting them access to the AI-powered shell. When the system is launched, it prompts the user to enter a valid username and password. These credentials are then matched against a pre-defined set of authorized users stored in the system. If the credentials are verified successfully, the user is granted access to interact with the shell. Otherwise, access is denied, and the system exits or displays an error message. This phase prevents unauthorized access, protects sensitive shell commands from misuse, and ensures that only permitted users can execute system-level operations. It provides a controlled and secure environment for shell usage, which is especially important when the system is deployed in multi-user or enterprise settings.
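The credential check described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the paper says only that credentials are matched against a pre-defined set of users, so the salted PBKDF2 hashing, the in-memory table, and the names `make_user_table` and `authenticate` are all assumptions introduced here.

```python
import hashlib
import hmac
import os


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted hash so plain-text passwords are never stored (assumed design)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def make_user_table(users: dict) -> dict:
    """Build a hypothetical table mapping username -> (salt, password hash)."""
    table = {}
    for name, password in users.items():
        salt = os.urandom(16)
        table[name] = (salt, hash_password(password, salt))
    return table


def authenticate(username: str, password: str, table: dict) -> bool:
    """Return True only when the username exists and the password hash matches."""
    record = table.get(username)
    if record is None:
        return False
    salt, expected = record
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(hash_password(password, salt), expected)
```

A caller would prompt for the username and password (for example with `input` and `getpass.getpass`) and start the shell loop only when `authenticate` returns `True`.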

After a successful login, the user enters the NLP (Natural Language Processing) Phase, which is the core intelligent layer of the system. In this phase, the user can type commands using simple, natural English sentences instead of technical shell syntax. For example, a user might input "show me the list of files" instead of using the shell command ls. The system processes this input using NLP techniques: it first tokenizes the sentence and applies a bag-of-words model. It then passes the processed input into a pre-trained deep learning model built using PyTorch, which identifies the user’s intent. Based on this intent, the system determines which shell command should be executed. This phase plays a critical role in translating human language into machine-executable instructions, removing the need for users to memorize or learn specific commands. It adds a layer of intelligence and adaptability to the shell, making it much more intuitive and accessible.
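The tokenization and bag-of-words steps can be illustrated with a short sketch. The vocabulary, the regular expression, and the function names are hypothetical; in the described system the resulting vector would be passed to the trained PyTorch classifier, which is not reproduced here.

```python
import re


def tokenize(sentence: str) -> list:
    """Lowercase the sentence and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", sentence.lower())


def bag_of_words(tokens: list, vocabulary: list) -> list:
    """Return one 0/1 entry per vocabulary word, marking which words occur."""
    present = set(tokens)
    return [1.0 if word in present else 0.0 for word in vocabulary]


# Hypothetical vocabulary; a real one would be built from the training sentences.
VOCABULARY = ["calendar", "files", "list", "network", "show", "weather"]

vector = bag_of_words(tokenize("Show me the list of files"), VOCABULARY)
print(vector)  # [0.0, 1.0, 1.0, 0.0, 1.0, 0.0]
```

Words outside the vocabulary ("me", "the", "of") are ignored, so differently phrased requests that share the key words produce similar vectors.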

The Command Execution Phase begins after the user’s intent has been successfully identified. In this phase, the system maps the predicted intent to a corresponding predefined shell command and executes it using Python’s system interface. The system supports a wide variety of commands, such as ls to list files, pwd to show the present working directory, neofetch to display system information, ifconfig to check network configurations, curl wttr.in to fetch real-time weather updates, and cal to display the calendar. The execution is done automatically, and the results are displayed to the user through the command-line interface. This phase eliminates the need for the user to know the actual shell command. It increases efficiency and convenience by automating tasks and simplifying the interaction process. Even complex or multi-step commands can be executed with a single natural language input, making the shell environment much more powerful and user-centric.
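One way to express the intent-to-command mapping is a lookup table combined with Python's `subprocess` module. The sketch below is illustrative only: the intent labels and the `run_intent` helper are hypothetical, and the paper does not state which Python interface the project actually uses. The commands themselves are the ones listed above.

```python
import subprocess

# Intent labels are hypothetical; the commands are those named in the text.
INTENT_COMMANDS = {
    "list_files": ["ls"],
    "working_directory": ["pwd"],
    "system_info": ["neofetch"],
    "network_info": ["ifconfig"],
    "weather": ["curl", "wttr.in"],
    "calendar": ["cal"],
}


def run_intent(intent: str, table: dict = INTENT_COMMANDS, timeout: float = 15.0):
    """Look up the command for an intent and run it, capturing its output."""
    argv = table.get(intent)
    if argv is None:
        raise KeyError(f"no command mapped to intent {intent!r}")
    # Passing an argument list (no shell=True) means user text is never
    # interpreted by a shell; only predefined commands can run.
    return subprocess.run(argv, capture_output=True, text=True, timeout=timeout)
```

Calling `run_intent("calendar")` would run cal and return a `CompletedProcess` whose `stdout` holds the text to display. Because only table entries can execute, free-form user input never reaches the operating system directly.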

After the shell command is executed, the system enters the Verification Phase, where it validates the execution result and communicates the outcome to the user. If the command has been executed successfully, the appropriate output is displayed. If the input is not recognized or if an error occurs, the system returns a user-friendly error message explaining the issue. Additionally, to enhance accessibility and interactivity, the system includes a text-to-speech (TTS) feature that provides voice output for certain responses. This means that users can receive spoken confirmations for their commands, making the system more engaging, especially for users with visual impairments or those who prefer auditory feedback. However, the current implementation supports only text-based input and voice-based output; it does not yet support voice-to-text input, though this can be considered for future development. This phase ensures that the user is always informed about the success or failure of their request, thus improving system transparency and trustworthiness.
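The verification step can be sketched as a function that inspects the command's exit status and produces both the displayed message and a short spoken confirmation. The message wording and the `verify` name are assumptions, and the `speak` parameter is a placeholder for whichever text-to-speech call the system uses; the paper does not name the TTS library.

```python
import subprocess


def verify(result: subprocess.CompletedProcess, speak=None) -> str:
    """Turn a finished command into a user-facing message and optional speech."""
    if result.returncode == 0:
        message = result.stdout.strip() or "Command completed with no output."
        spoken = "Command completed successfully."
    else:
        detail = result.stderr.strip() or "no error details were reported"
        message = f"The command failed (exit code {result.returncode}): {detail}"
        spoken = "The command failed."
    if speak is not None:
        # `speak` is any callable that accepts a string, e.g. a TTS wrapper.
        speak(spoken)
    return message
```

Keeping speech behind a callable lets the same function run without audio hardware, which is convenient for testing, while a real deployment passes in its TTS function.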

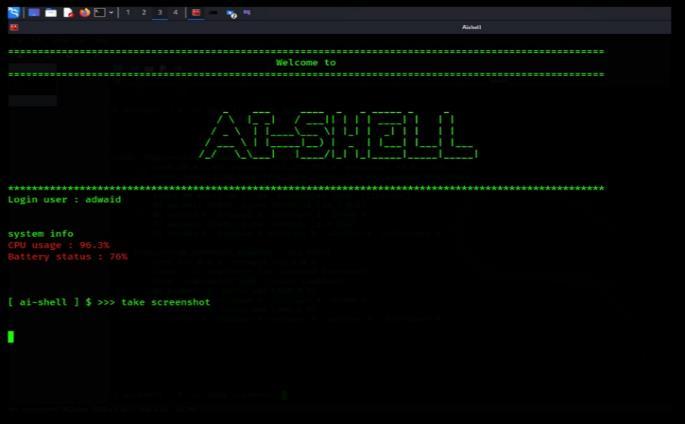

A. User interface of AI shell

Fig. 3. User interface of AI shell

This is the initial interface of the AI-powered shell, where users begin their interaction. The layout is minimal and user-friendly, setting the tone for the natural language-based operations.

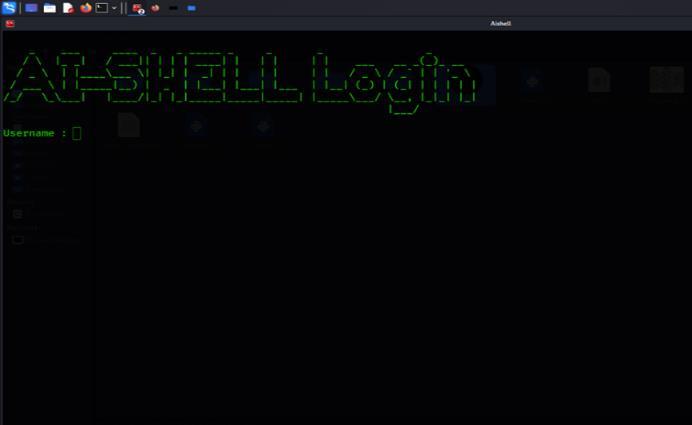

B. Login Phase

Fig. 4. Login Phase

User entering the username as part of the secure login process. The shell begins by verifying identity to ensure authorized access.

C. Password input

Fig. 5. Password input

Password input screen where the system securely captures credentials before granting access to the shell environment.

D. General Information Interface Command Execution – ifconfig

Fig. 6. General Information Interface Command Execution – ifconfig

After successful login, the shell presents general system information to keep users updated on the system status before interaction begins.

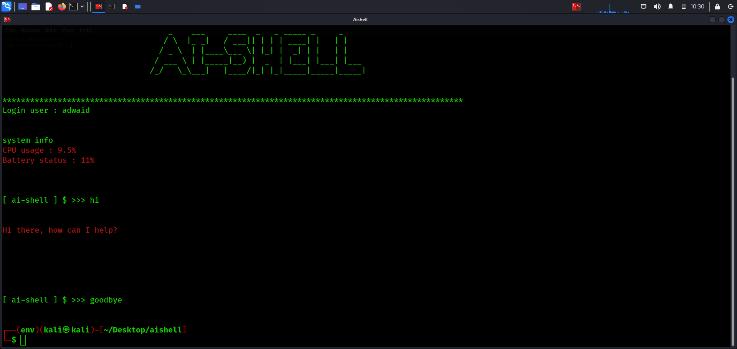

E. Command Execution – hi

Fig. 7. Command Execution – hi

The user requests the system's network configuration using natural language. The shell accurately interprets and executes the hi.

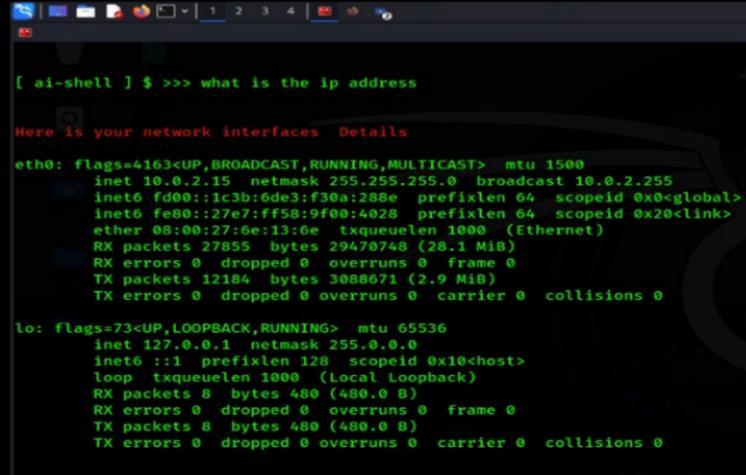

F. Command Execution – ifconfig

Fig. 8. Command Execution – ifconfig

The user requests the system's network configuration using natural language. The shell accurately interprets and executes the ifconfig command.

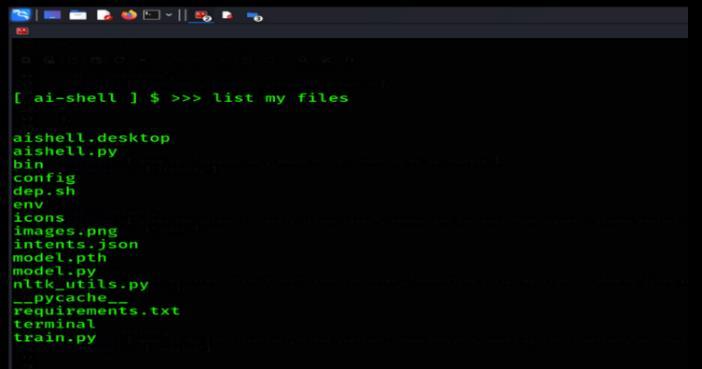

G. Command Execution – ls

Fig. 9. Command Execution – ls

Demonstrates listing directory files using a plain-language command. The shell simplifies this technical operation for easier interaction.

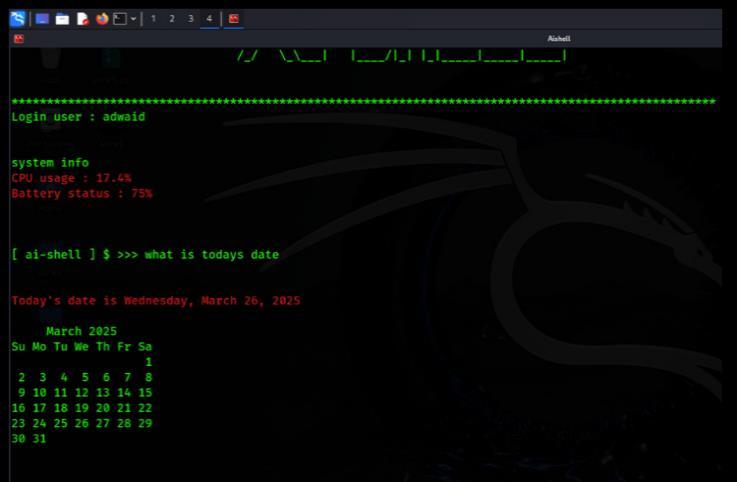

H. Date and Calendar Display

Fig. 10. Date and Calendar Display

The user requests the calendar, and the shell responds with the current month’s calendar view and highlighted date, all triggered through a natural language command.

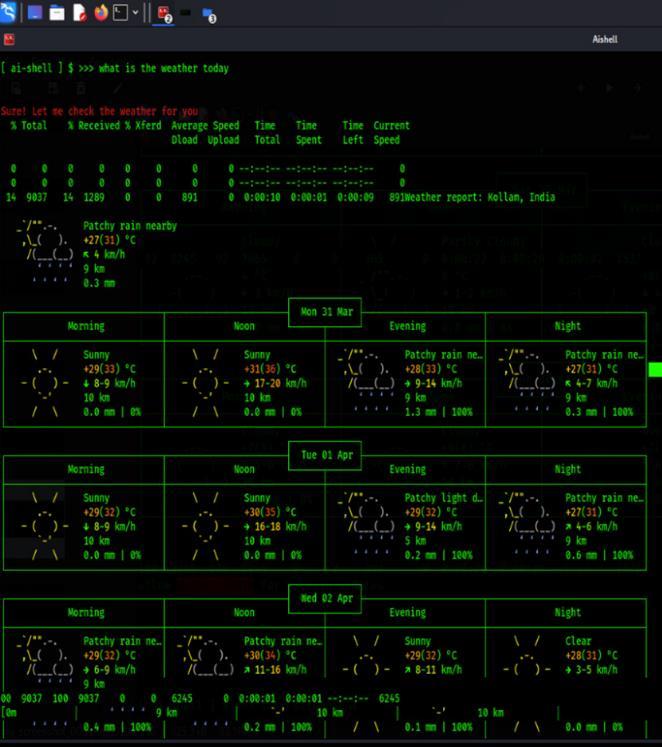

I. Weather Information

Fig. 11. Weather Information

The shell displays current weather conditions fetched via natural language input. This feature enhances real-time system interaction.

J. Linux version

Fig. 12. Linux version

AI-SHELL interface showing the execution of natural language commands in the terminal.

The AI-powered shell interaction system begins with a secure login process to ensure that only authorized users can access and execute commands. When the system is launched, users are prompted to enter their credentials, which typically include a User ID and Password. This authentication step is crucial for maintaining security, preventing unauthorized access, and ensuring that sensitive system operations are performed only by verified individuals. Once the credentials are entered, the system validates them against pre-stored user data in the Python program. If the entered details match an existing record, the user is granted access to the shell interface. In case of incorrect credentials, the system provides an appropriate error message.

After successful authentication, the shell interface initializes and displays important system information, such as CPU usage, battery status, and network details. This ensures that users have an overview of the system’s current state before executing any commands. The AI shell is now ready to accept natural language inputs, allowing users to interact with the terminal without needing to remember complex shell commands.
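A start-up summary of this kind might be gathered as in the sketch below, which uses only Python's standard library. It is an approximation under stated assumptions: the standard library does not expose battery status or detailed network data, so the fields shown (operating system, host name, CPU count, load average) are stand-ins for the items the paper lists, and `system_summary` is a hypothetical name.

```python
import os
import platform
import socket


def system_summary() -> dict:
    """Collect a few basic facts about the machine to show after login."""
    summary = {
        "os": platform.system(),
        "release": platform.release(),
        "hostname": socket.gethostname(),
        "cpu_count": os.cpu_count(),
    }
    # Load average is available on Unix-like systems only.
    if hasattr(os, "getloadavg"):
        try:
            summary["load_average_1min"] = os.getloadavg()[0]
        except OSError:
            pass
    return summary


for key, value in system_summary().items():
    print(f"{key}: {value}")
```

Reporting battery level or per-interface network details would require a third-party package or platform-specific commands, which are outside this sketch.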

The login process not only secures the system but also personalizes the user experience by maintaining session details and tracking user-specific preferences. This ensures that the AI shell provides an efficient, secure, and user-friendly environment for interacting with the operating system.

Note: The AI-powered shell can speak responses to user commands but does not support voice-to-voice commands. Users must type commands, and the AI provides vocal responses. While voice feedback improves accessibility, the system remains limited to text-based input for interaction.

ACKNOWLEDGEMENT

We sincerely express our heartfelt gratitude to everyone who contributed to the successful development of AI Powered Shell Interaction. Special thanks to our mentors and advisors for their valuable guidance, technical expertise, and continued support, which played a crucial role in shaping this project.

We also acknowledge the collaborative efforts and dedication of our project team members. Each individual played a vital role in various stages of the project, including requirement analysis, design, model training, coding, testing, and documentation. Our ability to work in synergy helped us overcome technical challenges and implement features such as intent recognition, command execution
