International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 12 Issue: 10 | Oct 2025 www.irjet.net p-ISSN: 2395-0072

Abhijeet R Pandipermbil¹, Prof. Deepali Dhainje²

¹Student, Dept. of Computer Science, Fergusson College, Maharashtra, India

²Professor, Dept. of Computer Science, Fergusson College, Maharashtra, India

Abstract - In the growing field of Natural Language Processing (NLP), efficient text summarization is needed to condense vast amounts of information. This study presents a comparative case study of extractive summarization methods using SpaCy, NLTK, TextRank, and K-Means clustering on domain-specific datasets drawn from BBC News and Kaggle article collections. Applying the same preprocessing and evaluation technique (BLEU scores) to every method, the study examines the strengths, weaknesses, and content-domain suitability of each algorithm. The results show that SpaCy and TextRank perform better on politics and technology topics, whereas K-Means leads clearly on entertainment content. The findings support practical NLP applications by guiding the choice of algorithm according to content characteristics.

Key Words: Text Summarization, SpaCy, NLTK, TextRank, K-Means, NLP, BLEU Score, Extractive Summarization, Case Study

Text summarization remains a critical area of NLP, enabling efficient content consumption across domains such as journalism, academia, and business intelligence. In an era when vast amounts of text are generated daily, effective summarization tools reduce reading time while preserving key information. Extractive summarization techniques select and rank significant sentences, which makes them particularly suitable for applications where factual integrity and context retention are critical [1][2]. This paper investigates four summarization techniques (SpaCy, NLTK, TextRank, and K-Means) across categorized datasets. Rather than proposing a novel algorithm, it focuses on applying and analyzing these techniques in realistic case-study settings using widely used news corpora.

Summarization research generally divides into extractive and abstractive paradigms. Extractive methods, for instance TextRank [3] and K-Means [4], select sentences deemed representative based on relevance scores or clustering mechanisms, respectively.

Abstractive summarization, on the other hand, generates new sentences and therefore requires deeper semantic understanding [5].

SpaCy and NLTK are foundational Python libraries for NLP. SpaCy supports neural pipeline integrations and dependency parsing, while NLTK is versatile for tokenization, POS tagging, and sentence scoring [6][7]. Zhang et al. [8] demonstrated the use of SpaCy in conjunction with deep learning architectures for summarization, and Gupta and Kumar [9] used NLTK for extractive summarization with decent results.

The TextRank algorithm ranks sentences by their importance, measured as their centrality in a graph of sentences [3]. It has been shown to suit news summarization, especially content with substantial political or technical discourse [10]. K-Means clustering has also become popular for grouping sentences by semantic similarity, combined with TF-IDF or GloVe embeddings [4][11].
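For reference, the weighted TextRank score from [3] is shown below. Here d is a damping factor (set to 0.85 in [3]), In(V_i) is the set of sentences linking to V_i, Out(V_j) is the set of sentences V_j links to, and w_{ji} is the weight of the edge between V_j and V_i. In an undirected sentence graph, In and Out coincide; using cosine similarity as w_{ji} is this study's choice rather than a requirement of the formula.

```latex
WS(V_i) = (1 - d) + d \sum_{V_j \in In(V_i)} \frac{w_{ji}}{\sum_{V_k \in Out(V_j)} w_{jk}} \, WS(V_j)
```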

Two datasets were considered, both of which are publicly downloadable:

1. BBC News Dataset: 4900+ articles in the categories politics, tech, sport, business, and entertainment.

2. Kaggle English Articles Dataset: 3101 articles across similar categories.

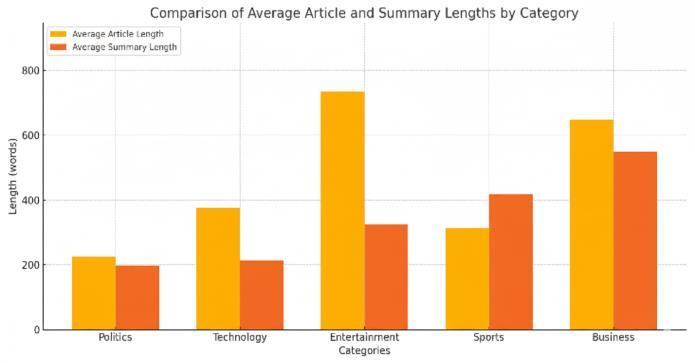

For each dataset, 20 articles were selected from every domain to ensure a balanced domain distribution. To show the structural variance in these datasets, Figure 1 plots the average article and summary lengths per category. This offers insight into content density and aids the interpretation of model performance in Section 4.

Fig -1: Histogram showing the average length of articles and their corresponding summaries across categories in the dataset. Business and Technology articles tend to be longer, a factor taken into account when interpreting model performance.

Pre-processing was applied to all datasets in the following steps:

1) Converting uppercase characters to lowercase and removing punctuation

2) Tokenization via SpaCy and NLTK

3) Stop-word removal

4) Lemmatization (NLTK)

5) Named entity recognition (SpaCy)

6) Vectorization based on TF-IDF and GloVe embeddings (for clustering and similarity measurements)
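As an illustration only, the cleaning steps listed above (lowercasing, punctuation removal, tokenization, and stop-word removal) can be approximated in plain Python. The regex-based tokenizer and the short `STOP_WORDS` set are hypothetical stand-ins for the SpaCy and NLTK components used in the study; lemmatization, named entity recognition, and vectorization are omitted from this sketch.

```python
import re

# Hypothetical, deliberately tiny stop-word list; the SpaCy and NLTK lists
# used in the study are far larger.
STOP_WORDS = {"a", "an", "the", "and", "or", "of", "to", "in", "on", "is",
              "are", "was", "were", "for", "with", "by", "at", "it", "this", "that"}


def preprocess(text: str) -> list[str]:
    """Lowercase, strip punctuation, tokenize, and drop stop words."""
    # Lowercase and replace every non-word, non-space character with a space.
    cleaned = re.sub(r"[^\w\s]", " ", text.lower())
    # Whitespace tokenization stands in for the SpaCy/NLTK tokenizers.
    tokens = cleaned.split()
    # Stop-word removal.
    return [tok for tok in tokens if tok not in STOP_WORDS]
```

Comparing `len(text.split())` with `len(preprocess(text))` for each article yields the kind of before/after token counts that Figure 2 summarizes.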

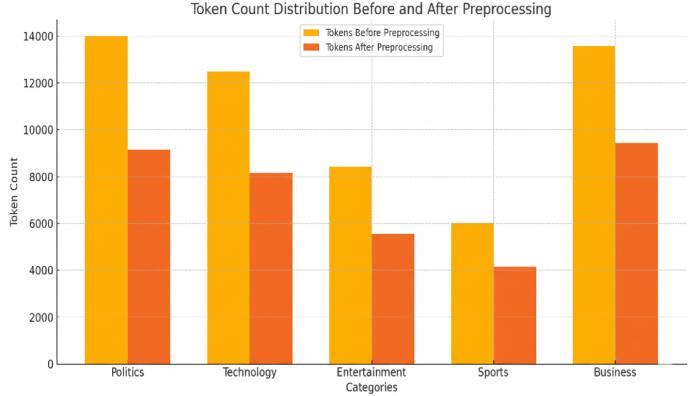

Figure 2 compares token counts before and after pre-processing, illustrating how much the cleaning steps reduce the volume of data.

Fig -2: Token counts before and after text pre-processing, compared across all categories. Token counts drop significantly after cleaning and stop-word removal.

SpaCy and NLTK: Implemented as extractive summarizers built on each library's tokenization and text-processing components.

Sentence scoring was based on two schemes: TF-IDF and word frequency.
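A minimal, dependency-free sketch of the word-frequency scheme follows. The regex sentence splitter, the small stop-word list, and normalizing each count by the highest word frequency are assumptions of this sketch (the last is a common heuristic), not details reported for the study's SpaCy and NLTK implementations.

```python
import re
from collections import Counter

# Hypothetical stop-word subset used only for illustration.
STOP_WORDS = {"a", "an", "the", "and", "of", "to", "in", "is", "was", "for"}


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence: str) -> list[str]:
    return [t for t in re.findall(r"[a-z0-9]+", sentence.lower()) if t not in STOP_WORDS]


def frequency_summary(text: str, num_sentences: int = 2) -> list[str]:
    sentences = split_sentences(text)
    freqs = Counter(tok for s in sentences for tok in tokenize(s))
    if not freqs:
        return sentences[:num_sentences]
    max_freq = max(freqs.values())

    def score(sentence: str) -> float:
        # Sum of normalized word frequencies for the sentence's content words.
        return sum(freqs[t] / max_freq for t in tokenize(sentence))

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    # Return the top sentences in their original article order.
    return [sentences[i] for i in sorted(ranked[:num_sentences])]
```

A TF-IDF variant would replace `freqs[t] / max_freq` with each word's TF-IDF weight while keeping the same ranking and selection logic.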

TextRank: A similarity graph of sentences was constructed using cosine similarity, and the sentences were ranked with the PageRank algorithm [3][10].
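The graph ranking can be sketched as below. It assumes bag-of-words count vectors for the cosine similarity, a damping factor of 0.85, and power iteration until scores change by less than a small tolerance; these are illustrative settings, not the study's reported configuration. The update inside the loop is the weighted PageRank score of TextRank [3].

```python
import math
import re
from collections import Counter


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse bag-of-words vectors."""
    dot = sum(count * b[tok] for tok, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def textrank_scores(sentences, d=0.85, max_iter=100, tol=1e-6):
    n = len(sentences)
    if n == 0:
        return []
    vectors = [Counter(re.findall(r"[a-z0-9]+", s.lower())) for s in sentences]
    # Symmetric edge weights with no self-loops.
    weights = [[cosine(vectors[i], vectors[j]) if i != j else 0.0
                for j in range(n)] for i in range(n)]
    out_totals = [sum(row) for row in weights]
    scores = [1.0] * n
    for _ in range(max_iter):
        # WS(i) = (1 - d) + d * sum_j w_ji / sum_k w_jk * WS(j)
        new_scores = [
            (1 - d) + d * sum(weights[j][i] / out_totals[j] * scores[j]
                              for j in range(n) if out_totals[j] > 0)
            for i in range(n)
        ]
        delta = max(abs(a - b) for a, b in zip(new_scores, scores))
        scores = new_scores
        if delta < tol:
            break
    return scores


def textrank_summary(sentences, k=2):
    scores = textrank_scores(sentences)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]  # keep original order
```

A sentence that shares no words with the rest of the article receives only the base score 1 - d, which is why isolated sentences rarely enter a TextRank summary.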

K-Means: Sentences were clustered using their TF-IDF vectors, and from each cluster the sentence closest to the cluster's centroid was chosen. The number of clusters varied with the length of the article [11][12].
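The sketch below follows the described procedure (TF-IDF sentence vectors, K-Means, and selection of the sentence nearest each centroid) in plain Python. The IDF variant log(n/df) + 1, the evenly spaced initial centroids, and the hypothetical `choose_k` rule (about the square root of the sentence count) are assumptions standing in for details the study does not specify.

```python
import math
import re
from collections import Counter


def tfidf_vectors(sentences):
    """Dense TF-IDF vectors; IDF = log(n / df) + 1 is an assumed variant."""
    docs = [re.findall(r"[a-z0-9]+", s.lower()) for s in sentences]
    vocab = sorted({tok for doc in docs for tok in doc})
    df = Counter(tok for doc in docs for tok in set(doc))
    idf = {tok: math.log(len(docs) / df[tok]) + 1.0 for tok in vocab}
    vectors = []
    for doc in docs:
        tf = Counter(doc)
        vectors.append([tf[tok] / len(doc) * idf[tok] if doc else 0.0 for tok in vocab])
    return vectors


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(vectors, k, max_iter=50):
    """Lloyd's algorithm with deterministic, evenly spaced initial centroids."""
    step = len(vectors) / k
    centroids = [list(vectors[int(c * step)]) for c in range(k)]
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda c: sq_dist(v, centroids[c])) for v in vectors]
        if new_labels == labels:
            break  # assignments are stable, so the clustering has converged
        labels = new_labels
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster empties
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


def choose_k(n_sentences):
    """Hypothetical length-dependent rule: roughly sqrt(sentence count) clusters."""
    return max(1, round(math.sqrt(n_sentences)))


def kmeans_summary(sentences, k=None):
    if not sentences:
        return []
    k = min(k or choose_k(len(sentences)), len(sentences))
    vectors = tfidf_vectors(sentences)
    labels, centroids = kmeans(vectors, k)
    chosen = []
    for c in range(k):
        members = [i for i, lab in enumerate(labels) if lab == c]
        if members:
            # Representative sentence: the member closest to the centroid.
            chosen.append(min(members, key=lambda i: sq_dist(vectors[i], centroids[c])))
    return [sentences[i] for i in sorted(chosen)]
```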

The evaluation measured how similar the generated summaries were to the reference summaries using the BLEU (Bilingual Evaluation Understudy) score [13], which combines clipped n-gram precisions over n-grams of increasing length (typically up to 4-grams) and applies a brevity penalty to outputs shorter than the reference.
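For concreteness, a sentence-level BLEU computation is sketched below for a single tokenized reference, using uniform weights over 1- to 4-grams and no smoothing. These are assumed defaults: the study does not state its BLEU configuration, and library implementations such as NLTK's `sentence_bleu` add options like smoothing functions and multiple references.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Sentence BLEU against one reference, uniform weights, no smoothing."""
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngrams(candidate, n)
        ref = ngrams(reference, n)
        total = sum(cand.values())
        # Clipped counts: a candidate n-gram counts at most as often as in the reference.
        matched = sum(min(count, ref[g]) for g, count in cand.items())
        if total == 0 or matched == 0:
            return 0.0  # without smoothing, a zero precision makes BLEU zero
        log_precision_sum += math.log(matched / total) / max_n
    c, r = len(candidate), len(reference)
    # Brevity penalty: 1 if the candidate is longer than the reference, else exp(1 - r/c).
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision_sum)
```

For example, a candidate consisting of the first 6 tokens of an 8-token reference has perfect n-gram precisions, so its score equals the brevity penalty exp(1 - 8/6), roughly 0.72.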

COMPARISON OF RESULTS USING BLEU

This paper presents a rigorous comparison between NLTK and SpaCy models applied to a diverse dataset of over 500 randomly selected articles, using BLEU scores for evaluation. A distinctive feature of this work is the inclusion of five distinct article categories, which gives a fuller picture of how well the models perform across content types. By providing a concise yet thorough comparative analysis, the study fills a gap in the literature and contributes to the understanding of natural language processing techniques.

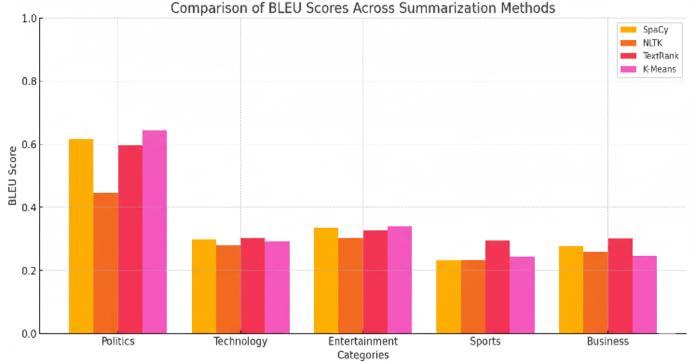

Figure 3 shows the relative performance of the summarization models across domains, providing a clear visual reference for how each model's performance aligns with content type.

Fig -3: BLEU score comparison of SpaCy, NLTK, TextRank, and K-Means across five content categories. TextRank scores highest in Politics and Business, while K-Means scores highest in Entertainment.

Observations:

1. TextRank performs best on political and technical content.

2. K-Means achieves higher BLEU scores in Entertainment, as its cluster centroids capture semantic themes more effectively.

3. SpaCy gives fairly consistent scores across all categories.

4. NLTK scores decently but lags behind in complex domains because of its simple scoring scheme.

5. DISCUSSION

The research underlines the importance of aligning summarization techniques with content structure. Graph-based algorithms such as TextRank work best in structured, fact-dense domains, drawing strength from the links between sentences. K-Means, working with GloVe embeddings, groups content by contextual similarity and therefore works best for entertainment and lifestyle domains. Rule-based summarization methods such as SpaCy and NLTK are procedurally transparent and produce summaries quickly, but they fail to capture semantic relations. The results support hybrid approaches that combine clustering with graph centrality or sentence embeddings for finer-grained summarization [14][15]. Practical applications in journalism, education, and business reporting could benefit from an algorithm-selection pipeline driven by the document's category and the intended use of the summary.
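One possible form of such an algorithm-selection pipeline is a routing table keyed by document category. The mapping below is hypothetical: it encodes the observations reported earlier (TextRank for politics, business, and technology; K-Means for entertainment) and falls back to SpaCy because it gave the most consistent scores across categories. The category labels follow the BBC dataset's naming.

```python
# Hypothetical routing table derived from the reported observations.
METHOD_BY_CATEGORY = {
    "politics": "textrank",
    "business": "textrank",
    "tech": "textrank",
    "entertainment": "kmeans",
}
# Fallback for unlisted categories (e.g. sport): SpaCy scored most consistently.
DEFAULT_METHOD = "spacy"


def select_method(category: str) -> str:
    """Return the name of the summarizer to use for a document category."""
    return METHOD_BY_CATEGORY.get(category.strip().lower(), DEFAULT_METHOD)
```

In a full pipeline, the returned name would index a dictionary of summarizer functions; keeping the mapping as data makes it easy to update as new benchmark results arrive.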

This comparative study shows that no single technique performs best for all content types. TextRank is the best method for dense, fact-packed domains such as politics and business. K-Means fares well with narrative domains such as entertainment. SpaCy and NLTK offer a simple solution for short summaries but need to be combined with a semantic component to reach their full potential.

Future directions may include:

1. Hybrid models combining clustering and neural summarization.

2. Summary length control via reinforcement learning.

3. Incorporation of an abstractive summarization layer for the hybrid output.

4. Real-time summarization pipelines for multilingual or multimedia content.

[1] Papineni et al., "BLEU: a method for automatic evaluation of machine translation," ACL, 2002.

[2] Mridha et al., "A Survey of Automatic Text Summarization," IEEE Access, 2021.

[3] Mihalcea and Tarau, "TextRank: Bringing Order into Text," EMNLP, 2004.

[4] Agrawal and Gupta, "Text Summarization using Kmeans," IJSRP, 2014.

[5] Devlin et al., "BERT: Pre-training of Deep Bidirectional Transformers," arXiv, 2018.

[6] Mishra and Gupta, "Extractive Text Summarization Using NLTK and spaCy," IJARCCE, 2022.

[7] Jadhav and Chaudhari, "Extractive Summarization Using NLTK," 2015.

[8] Zhang et al., "A Hybrid Text Summarization Model Based on Deep Learning and spaCy," arXiv, 2018.

[9] Kumar and Sharma, "NLTK and SpaCy for Summarization," IJET, 2018.

[10] Ramadhan et al., "Implementation of TextRank in Product Reviews," ICICoS, 2020.

[11] Haider et al., "Text Summarization with GloVe and K-Means," IEEE TENSYMP, 2020.

[12] Deshpande and Lobo, "Text Summarization using Clustering," IJETT, 2013.

[13] Yadav et al., "Feature Based Automatic Text Summarization," IEEE Access, 2022.

[14] Rahnama and Ghadiri, "Text Summarization using SpaCy and Transformers," arXiv, 2021.

[15] Singh and Jain, "Extractive Summarization Using NLTK and Deep Learning," IJCIDM, 2021.