Computer Vision

Performance of Computer Vision systems

2012: AlexNet [1], ImageNet [2]

• Object-centric dataset

• Millions of images of 1,000 object categories

2014: Places [3]

• Scene-centric dataset

• Millions of images of 365 Place categories

Explainability

[1] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Advances in neural information processing systems, 25.

[2] Deng, J., Dong, W., Socher, R., Li, L. J., Li, K., & Fei-Fei, L. (2009, June). ImageNet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition (pp. 248-255). IEEE.

[3] Zhou, B., Lapedriza, A., Xiao, J., Torralba, A., & Oliva, A. (2014). Learning deep features for scene recognition using Places database. Advances in neural information processing systems, 27.

We need more than “great performing black boxes”

Deep Learning is used in a growing number of applications, some of which involve important decision making.

Esteva, A., Kuprel, B., Novoa, R. A., Ko, J., Swetter, S. M., Blau, H. M., & Thrun, S. (2017). Dermatologist-level classification of skin cancer with deep neural networks. Nature, 542(7639), 115.

Benign or malignant?

• CNN on 129,450 clinical images

• Annotations from 21 certified dermatologists

• CNN obtained the same accuracy as the experts

After its publication, the authors noticed a bias in their algorithm

“Is the Media’s Reluctance to Admit AI’s Weaknesses Putting us at Risk?” (2019)

Explainability in Computer Vision

Understanding the relation between input and output (Local Explanations)

● What is the model “looking at”?

● What parts of the input (image) are contributing the most for the model to produce a specific output?

Internal Representation of the model (Global Explanations)

● How is the model encoding the input?

● What is the representation learned by the model?

Understanding why images are correctly classified

in deep scene cnns. ICLR, 2015.

Understanding why images are correctly classified

Understanding why images are correctly classified

Base of Image regions (superpixels)

Understanding why images are correctly classified

Base of Image regions (superpixels)

Understanding why images are correctly classified

Base of Image regions (superpixels)

Understanding why images are correctly classified

Base of Image regions (superpixels)

Understanding why images are correctly classified

Base of Image regions (superpixels)

Understanding why images are correctly classified

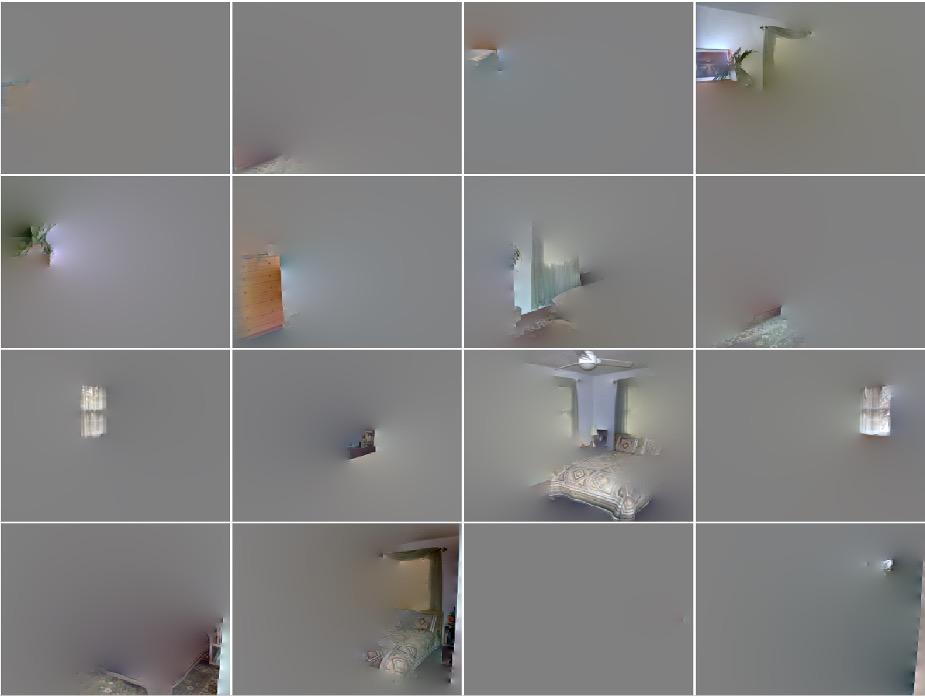

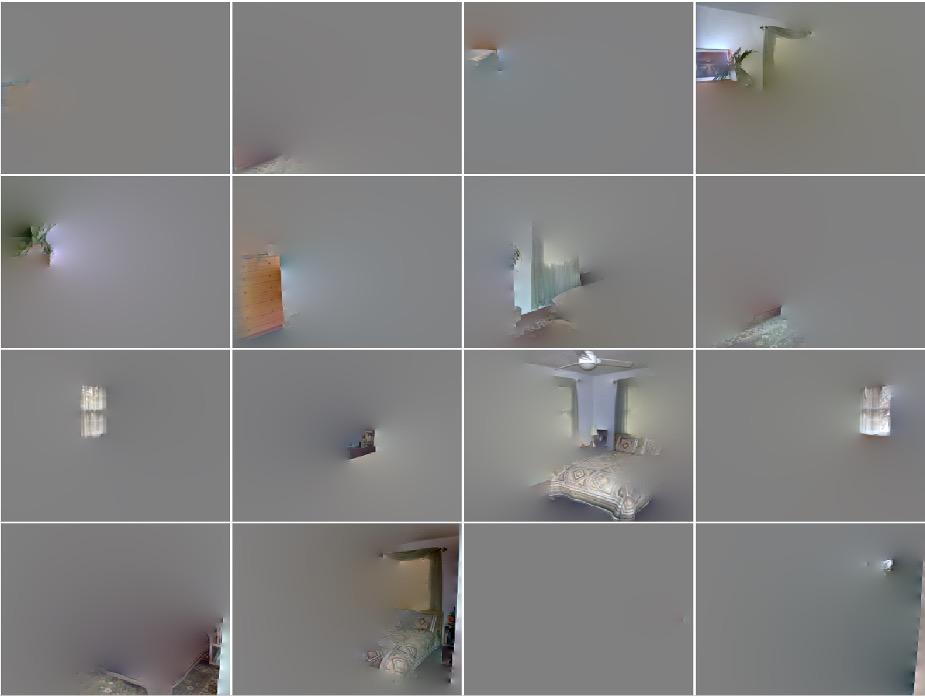

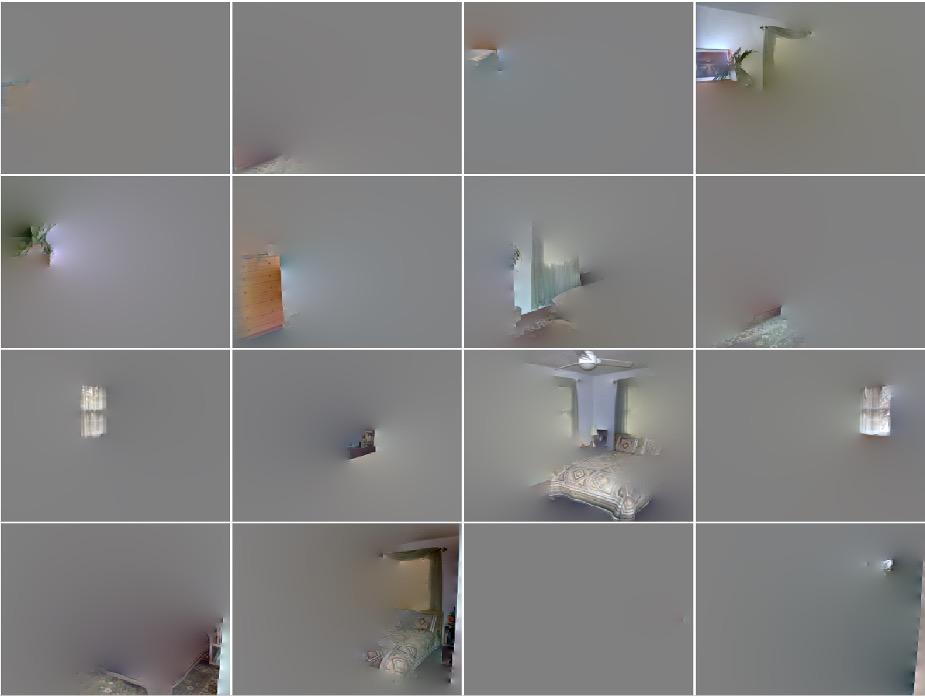

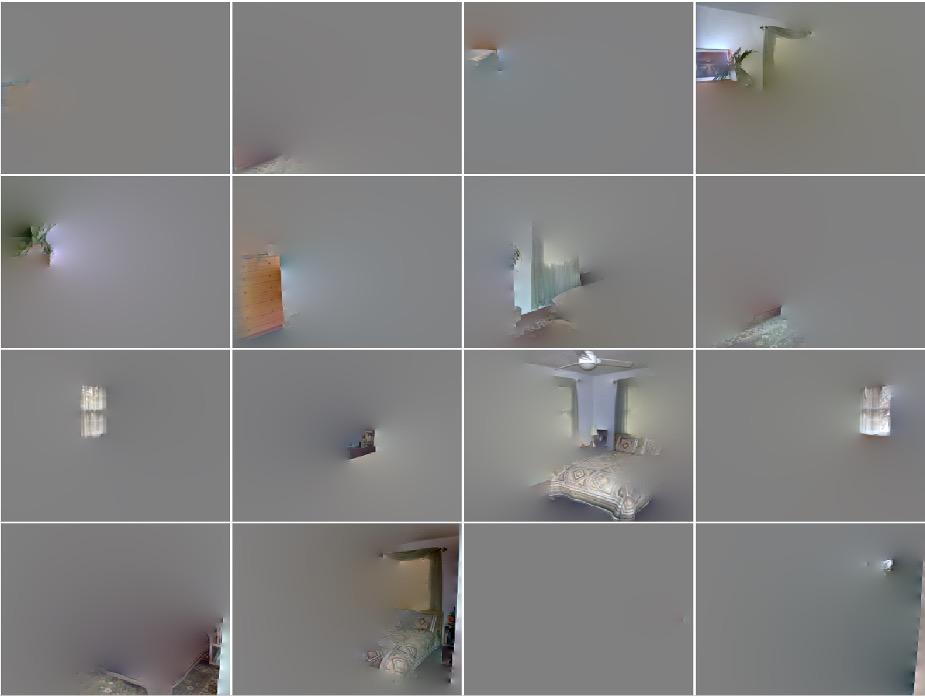

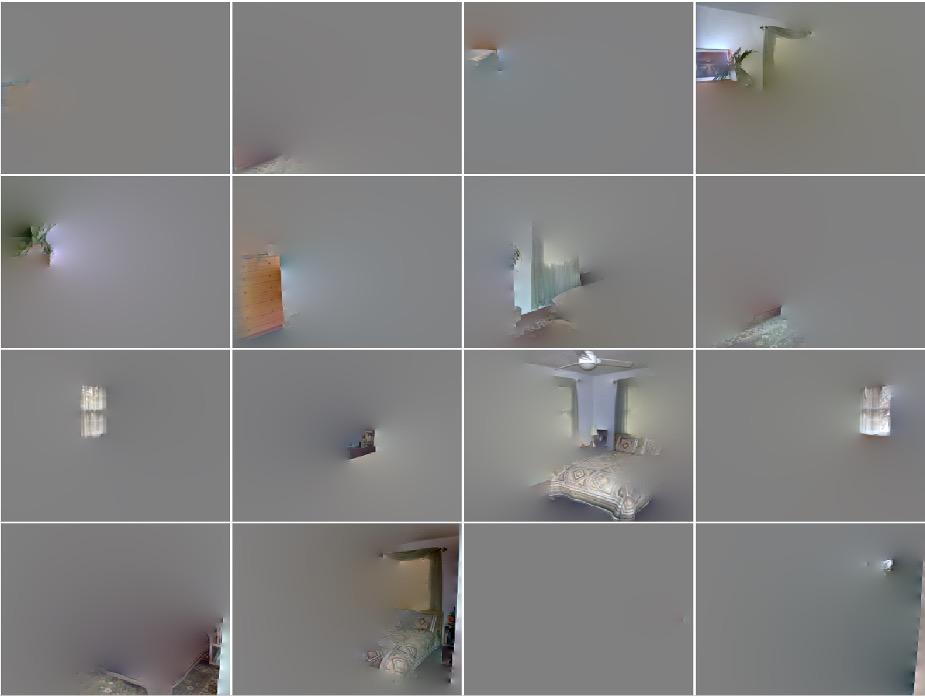

Bedroom Bedroom (minimal image)

Bedroom

Understanding why images are correctly classified

Dining Room Dining Room

Understanding why images are correctly classified

Dining Room Dining Room (minimal image)

Dining Room

Techniques based on analyzing how the output changes when making small perturbations in the input

Visualizing the minimal image

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A., & Torralba, A. Object detectors emerge in deep scene CNNs. ICLR, 2015.
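
A minimal sketch of how a minimal image could be computed, paraphrasing the cited paper: split the image into regions, then repeatedly remove the region whose removal reduces the correct-class score the least, and stop just before the image would be misclassified. The function `probs_fn` (a wrapper returning class probabilities) and the precomputed `segments` array are hypothetical stand-ins for a real model and a real superpixel segmenter.

```python
import numpy as np

def minimal_image(image, segments, probs_fn, target, fill=0.0):
    """Greedy region removal (illustrative sketch, not the authors' code).

    image: (H, W, C) float array; segments: (H, W) int array of region ids.
    probs_fn(img) -> 1-D array of class probabilities (hypothetical wrapper).
    Returns the simplified image and the set of region ids that were kept.
    """
    current = image.copy()
    kept = set(np.unique(segments).tolist())
    while len(kept) > 1:
        best = None
        # Try removing each remaining region; keep the least harmful removal.
        for region in sorted(kept):
            candidate = current.copy()
            candidate[segments == region] = fill
            score = probs_fn(candidate)[target]
            if best is None or score > best[0]:
                best = (score, region, candidate)
        _, region, candidate = best
        # Stop before the image stops being classified as the target class.
        if int(np.argmax(probs_fn(candidate))) != target:
            break
        current = candidate
        kept.remove(region)
    return current, kept
```

The regions that survive are the ones the model needs to keep its prediction, which is what the minimal-image visualizations display.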

Occluding small regions of the input and observing how the output changes

Occluding the region that is most informative for the model produces the largest drop in the true class score.

Zeiler, Matthew D., and Rob Fergus. "Visualizing and understanding convolutional networks." European conference on computer vision. Springer, Cham, 2014
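
The occlusion idea above can be sketched as follows, under simplifying assumptions: a square patch slides over the image, and for each position we record how much the true class score drops. `probs_fn` is a hypothetical wrapper around the model, and the patch size, stride and fill value are illustrative choices rather than values from the cited paper.

```python
import numpy as np

def occlusion_map(image, probs_fn, target, patch=8, stride=8, fill=0.5):
    """Record the drop in the target score for each occluded patch position.

    probs_fn(img) -> 1-D array of class probabilities (hypothetical wrapper).
    Returns a 2-D array; larger values mark more informative regions.
    """
    h, w = image.shape[:2]
    base = probs_fn(image)[target]
    rows = range(0, h - patch + 1, stride)
    cols = range(0, w - patch + 1, stride)
    drops = np.zeros((len(rows), len(cols)))
    for i, y in enumerate(rows):
        for j, x in enumerate(cols):
            occluded = image.copy()
            occluded[y:y + patch, x:x + patch] = fill  # hide one patch
            drops[i, j] = base - probs_fn(occluded)[target]
    return drops
```

Upsampling `drops` to the image size gives a heatmap that can be overlaid on the input.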

Class Activation Map (CAM)

Heatmap visualizations

Highlighting the areas of the image that contributed the most to the decision of the model.

B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, and A. Torralba. "Learning Deep Features for Discriminative Localization". Computer Vision and Pattern Recognition (CVPR), 2016.
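
A minimal sketch of how a CAM heatmap can be computed, assuming the architecture described in the cited paper: the last convolutional layer is followed by global average pooling and a single linear layer. The map for a class is then the sum of the feature maps weighted by that class's linear-layer weights. The array names and shapes are assumptions made for illustration.

```python
import numpy as np

def class_activation_map(features, fc_weights, class_idx):
    """features: (K, H, W) last-conv feature maps for one image.
    fc_weights: (num_classes, K) weights of the linear layer that follows
    global average pooling. Returns an (H, W) heatmap scaled to [0, 1]."""
    cam = np.tensordot(fc_weights[class_idx], features, axes=1)  # (H, W)
    cam = cam - cam.min()
    if cam.max() > 0:
        cam = cam / cam.max()  # rescale for display as a heatmap
    return cam
```

In practice the heatmap is upsampled to the input resolution before being overlaid on the image; that step is omitted here.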

CAM results on Action Classification

CAM results on more abstract concepts

Mirror lake

View out of the window

CAM on Medical Image

Input Chest X-Ray Image

Rajpurkar, P., Irvin, J., Zhu, K., Yang, B., Mehta, H., Duan, T., ... & Ng, A. Y. (2017). CheXNet: Radiologist-level pneumonia detection on chest x-rays with deep learning. arXiv preprint arXiv:1711.05225.

Explainability for Debugging

Esteva, A., Kuprel, B., Novoa, R. A., Ko, J., Swetter, S. M., Blau, H. M., & Thrun, S. (2017). Dermatologist-level classification of skin cancer with deep neural networks. Nature, 542(7639), 115.

Explainability in Computer Vision

Understanding the relation between input and output (Local Explanations)

Make simple perturbations in the image and observe how the classification score changes.

Zhou, B., Khosla, A., Lapedriza, A., Oliva, A., & Torralba, A. “Object detectors emerge in deep scene cnns”. ICLR, 2015.

Class Activation Maps

B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, and A. Torralba. "Learning Deep Features for Discriminative Localization". Computer Vision and Pattern Recognition (CVPR), 2016.

Grad-CAM

Ramprasaath R. Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, Dhruv Batra. "Grad-CAM: Why did you say that? Visual Explanations from Deep Networks via Gradient-based Localization". ICCV 2017.
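
For reference, a minimal sketch of the Grad-CAM computation as described in the cited paper: each channel of a convolutional layer is weighted by the spatial average of the gradient of the class score with respect to that channel, and the weighted sum is passed through a ReLU. The gradients are assumed to be computed elsewhere by a deep learning framework; the array names and shapes are illustrative.

```python
import numpy as np

def grad_cam(activations, gradients):
    """activations, gradients: (K, H, W) arrays for one conv layer, where
    gradients holds d(class score)/d(activations), assumed precomputed."""
    weights = gradients.mean(axis=(1, 2))             # one weight per channel
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum -> (H, W)
    return np.maximum(cam, 0.0)                       # ReLU keeps positive evidence
```

Unlike CAM, this does not require a global average pooling layer followed by a single linear layer.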

Internal Representation of the Model (Global Explanations)

Understanding the internal representation of the model

Understanding what the units in the CNN are doing

3D Matrix

Feature maps

Visualization of image patches that strongly activate units

● Take a large dataset of images

● For each image, run a forward pass through the model and keep the maximum score of the specific unit and its corresponding location.

● Visualize the images in the dataset that produce the highest response in this unit.
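
The three steps above can be sketched as follows, assuming the activations of one layer have already been collected for the whole dataset. The `(N, K, H, W)` layout and the function name are assumptions made for illustration.

```python
import numpy as np

def top_activating_images(feature_maps, unit, k=5):
    """feature_maps: (N, K, H, W) activations of one layer for N images
    (assumed precomputed with one forward pass per image).
    Returns, for the k images with the highest response of `unit`,
    a list of (image_index, (row, col), max_activation)."""
    maps = feature_maps[:, unit]            # (N, H, W)
    n, h, w = maps.shape
    flat = maps.reshape(n, -1)
    max_vals = flat.max(axis=1)             # maximum score per image
    max_locs = flat.argmax(axis=1)          # flattened location of that maximum
    order = np.argsort(-max_vals)[:k]       # strongest images first
    return [(int(i), (int(max_locs[i] // w), int(max_locs[i] % w)), float(max_vals[i]))
            for i in order]
```

Mapping each `(row, col)` location back to an image patch requires the receptive field of the unit, which depends on the architecture and is left out of this sketch.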

Unit 1

Visualizations for units in the last convolutional layer of an AlexNet trained for Place categorization

Crowdsourcing Units

● Word Description

lighthouse

● Mark the images that do not correspond to the concept you just wrote

● Which category does your short description mostly belong to?

❏ Scene

❏ Region or Surface

❏ Object

❏ Object part

❏ Texture or Material

❏ Simple elements or colors

Several object detectors emerge in the last convolutional layer for Place

Simple shapes and colors

Textures, Regions, Object parts

Objects and Scene Parts

Network Dissection

Probabilistic formulation to pair units with concepts in the Broden visual dictionary

Broden Visual Dictionary

● 1,197 concepts; 63,305 images

Broden: Broadly and Densely Labeled Dataset

Bau, D., Zhou, B., Khosla, A., Oliva, A., & Torralba, A. Network dissection: Quantifying interpretability of deep visual representations. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 6541-6549), 2017.
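
A simplified sketch of the scoring used in the cited Network Dissection paper: the activation maps of a unit are thresholded at a high quantile, and the resulting masks are compared with the segmentation masks of each concept using intersection over union (IoU) over the whole dataset. The function names, the dictionary layout and the assumption that activations are already upsampled to mask resolution are illustrative.

```python
import numpy as np

def unit_concept_iou(activations, concept_masks, quantile=0.995):
    """activations: (N, H, W) maps of one unit over N images, assumed already
    upsampled to the mask resolution. concept_masks: (N, H, W) booleans
    marking where the concept is present. Returns the dataset-wide IoU."""
    threshold = np.quantile(activations, quantile)  # keep only the top activations
    unit_masks = activations > threshold
    intersection = np.logical_and(unit_masks, concept_masks).sum()
    union = np.logical_or(unit_masks, concept_masks).sum()
    return intersection / union if union > 0 else 0.0

def best_concept(activations, masks_by_concept, quantile=0.995):
    """Pair the unit with the concept of highest IoU.
    masks_by_concept: dict mapping concept name -> (N, H, W) boolean masks."""
    scores = {name: unit_concept_iou(activations, masks, quantile)
              for name, masks in masks_by_concept.items()}
    return max(scores, key=scores.get), scores
```

A unit is typically reported as a detector for a concept only when its best IoU exceeds a small threshold, so that weak matches are not over-interpreted.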

Interpreting Face Inference Models using Hierarchical Network Dissection

Teotia, D., Lapedriza, A., & Ostadabbas, S. (2022). Interpreting Face Inference Models Using Hierarchical Network Dissection. International Journal of Computer Vision, 130(5), 1277-1292.

Divyang Teotia, Agata Lapedriza, Sarah Ostadabbas

Hierarchical Network Dissection: main idea

Apparent age: [20-30]

Motivation

• Significant improvements in Deep Learning based Face classification models.

• Concerning biases and poor performance in underrepresented classes.

Goals

• Understanding the representations learned by Face-Centric CNNs.

• Exploring the use of explainability to reveal biases encoded in the model.

Face dictionary

12 Global concepts

38 Local concepts.

We work with academic datasets with categorical labels corresponding to social constructs, apparent presence, or stereotypical representations of these concepts. However, we acknowledge that these concepts are more fluid in essence, as further discussed in Sect. 4 of our paper.

Face dictionary: Examples

Global concepts

Apparent Age

[CelebA, Liu 2015]

Local concepts

Facial attributes [CelebA, Liu 2015]; Action Units (AU) [EmotioNet, Benitez-Quiroz et al. 2016]

Algorithm for unit-concept pairing

Analyze the activation of a unit during a forward pass over all the images in the dictionary.

• Stage Ⅰ: Pairing Units with Global Concepts (e.g., Apparent age: [20-30])

• Stage Ⅱ: Pairing Units with Facial Regions (Eye Region, Nose Region, Cheek Region, Mouth Region, Chin Region)

• Stage Ⅲ: Pairing Units with Local Concepts
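
To make Stage Ⅰ more concrete, here is a deliberately simple stand-in, not the probabilistic formulation used in the paper: for one unit, take the dictionary images that activate it most strongly and check which global concept label is most over-represented among them relative to its frequency in the dictionary. The function name, the `k` parameter and the ratio score are all assumptions made for illustration.

```python
import numpy as np

def pair_unit_with_global_concept(max_activations, labels, k=100):
    """Illustrative heuristic only (not the paper's formulation).

    max_activations: (N,) maximum activation of one unit on each dictionary image.
    labels: (N,) global-concept label of each image (e.g. an apparent-age bin).
    Returns (best_label, ratio): the label most over-represented among the k
    images that activate the unit most strongly, and how over-represented it is.
    """
    labels = np.asarray(labels)
    top = np.argsort(-np.asarray(max_activations))[:k]
    best_label, best_ratio = None, 0.0
    for label in np.unique(labels):
        base_rate = np.mean(labels == label)       # frequency in the dictionary
        top_rate = np.mean(labels[top] == label)   # frequency among top-k images
        ratio = top_rate / base_rate
        if ratio > best_ratio:
            best_label, best_ratio = label, ratio
    return best_label, float(best_ratio)
```

A ratio close to 1 means the unit shows no preference for the label, while a large ratio suggests the unit responds selectively to images with that label.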

Unit-concept pairing qualitative visualizations

Dissecting Face-centric models

• We perform our dissection on five face-centric inference tasks: age estimation, gender classification, beauty estimation, facial recognition, and smile classification.

● For all the models we dissect the last convolutional layer.

Dissecting General Face Inference Models

Apparent Age Estimation

Apparent Gender Estimation

Smile classification (smile vs. non-smile)

Can Hierarchical Network Dissection reveal bias in the representation learned by the model?

Controlled experiments: bias introduced on the local concept eyeglasses

• Target task: apparent gender recognition

• Datasets with different degrees of bias: from Balanced (P = 0.5) to Fully biased (P = 1)

• Model: ResNet-50

Apparent gender recognition wearing eyeglasses
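
A hedged sketch of how training sets with a controlled degree of bias could be sampled. The slides do not define P, so this assumes that P is the fraction of eyeglasses images belonging to one designated class, which matches P = 0.5 being balanced and P = 1 being fully biased. The function name, the binary label encoding and the sampling scheme are hypothetical.

```python
import numpy as np

def sample_eyeglasses_subset(has_glasses, label, p, n, biased_label=1, seed=0):
    """Pick n eyeglasses images so that a fraction p has `biased_label`.

    has_glasses: (N,) booleans; label: (N,) class labels (assumed binary).
    Returns the indices of the selected images. Assumes p is the share of
    eyeglasses images drawn from the designated class (an assumption).
    """
    rng = np.random.default_rng(seed)
    has_glasses = np.asarray(has_glasses, dtype=bool)
    label = np.asarray(label)
    n_biased = int(round(p * n))
    pool_biased = np.flatnonzero(has_glasses & (label == biased_label))
    pool_other = np.flatnonzero(has_glasses & (label != biased_label))
    chosen_biased = rng.choice(pool_biased, size=n_biased, replace=False)
    chosen_other = rng.choice(pool_other, size=n - n_biased, replace=False)
    return np.concatenate([chosen_biased, chosen_other])
```

Training the same architecture on subsets sampled with increasing values of `p` gives a series of models whose dissection results can be compared.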

Limitations

● There are many facial concepts that are not included in the dictionary and this limits the interpretability capacity of Hierarchical Network Dissection.

● For the local concepts in our face dictionary, the segmentation masks were computed automatically. This generates some degree of noise that can affect the unit-concept pairing, particularly for the local concepts with small area.

Take home messages

Understanding the relation between input and output

What elements in the input are responsible for the model to produce a specific output? E.g., Class Activation Maps

Internal Representation of the model

How is the model working internally? E.g., analyzing the units of the model

The interest in Explainability

● Debugging

● Reusing the model for downstream tasks

● Auditing the model: Bias Discovery and Fairness in AI