& Engineering Technology (IJRASET

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538 Volume 10 Issue IX Sep 2022 Available at www.ijraset.com

& Engineering Technology (IJRASET

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538 Volume 10 Issue IX Sep 2022 Available at www.ijraset.com

Abstract: Big Data is an idea used to portray informational collections that are excessively enormous or complex for standard social data sets to catch, handle, and interact in an opportune way. Huge information has at any rate, one of the going with credits: a huge volume, a quick speed, or a wide assortment. Computerized logic, the web, social media, and the Internet of Things are both speeding up the complexity of knowledge by new directions and wellsprings of data. Sensors, PCs, video, log documents, value based programming, web based media, for instance, all create huge measures of information progressively. Keywords: Analysing Massive Data, Security, Visualizing data, Hadoop, MongoDB, Semi Structured data

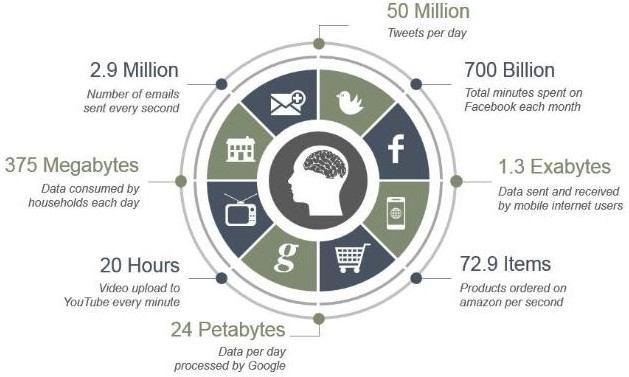

Enormous information analytics is the utilization of forefront reasonable methodologies against immense, distinctive educational records that unites structure, semi composed and unstructured information, from various sources, and in sizes ranging from terabytes to zettabyte. Assessment of huge data grants specialists, examiners, and business customers to make better and faster decisions using data that was at that point unusable. Affiliations can utilize progressed assessment methodologies, for example, text evaluation, AI, farsighted appraisal, information mining, encounters, and common language intending to get novel considerations from effectivelyinconspicuous information sources to independently or close byexisting endeavor information. Data is made from various sources like online media, the monetary region, etc. Enormous data assessment examines immense and different kinds of data to uncover concealed models, connections, and various encounters. Attributes associated with Big data analytics are 5 V’s Volume, Variety, Value, Velocity, Veracity. [1] Volume suggests the tremendous proportion of data that is being made every day however speed is the speed of advancement and how rapidly the data is gathered for being inspected. [2] The collection gives information about such data, forinstance, coordinated look like tables having lines and fragments, unstructured like sound reports, video records, picture archives, semi coordinated like .xml reports, etc. Worthinsinuates deciding accommodating or huge data from the gigantic data. Speed implies the rate at which data is created. Veracity insinuates the openness weakness of the data and duty. The amazing goal of a massive data analysis is to process data with high volume, speed, grouping, and veracity using a variety of traditional and computationally informative techniques.

Massive data has been amassed a couple of spaces like clinical benefits, strategy the board, retail, regular science, and other interdisciplinary legitimate assessment. Online applications experience colossal data a significant part of the time, like social figuring, web text and records, and web search requests. Social figuring consolidates casual association examination, online organizations, recommender systems, reputation structures, and conjecture markets. Considering the advantages of immense data, it gives newopen entryways in thedata planning tasks for the approaching investigators. [3]

Journal for Research in Applied Science & Engineering Technology (IJRASET)

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538 Volume 10 Issue IX Sep 2022 Available at www.ijraset.com

To cope with the problems, we need to understand various computational intricacies, data protection, and computational techniques to break down large amounts of data. For example, certain factual approaches that work well with small amounts of data do not scale to large amounts of data. Similarly, severalstatistical procedures that work well for small amounts of data struggle to dissect massive amounts of data. Numerous expertshave looked at the many challenges that the health care industryfaces. Data stockpiling and investigation; information revelation and computational intricacies; adaptability and interpretation of information; and data managementarethefourbroad categories under which the challenges of big data examination are classified.

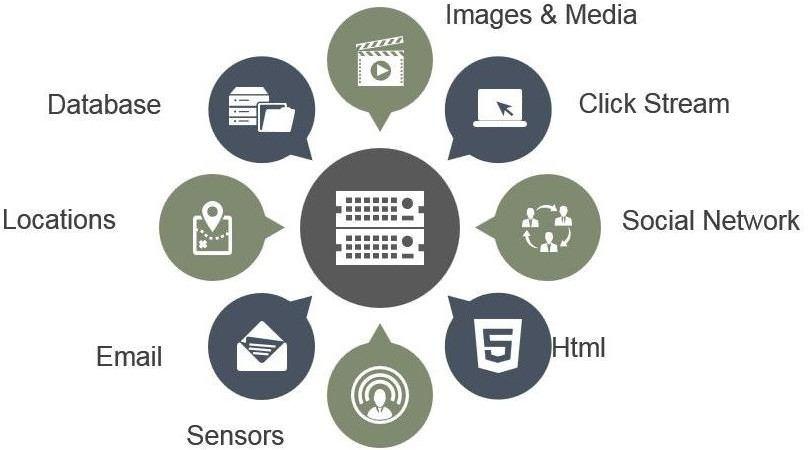

1) Facebook, Twitter, Tumbler, and other social media sites, Personal documents

2) Photos on Instagram, WhatsApp, Picasa, and other social media platforms and videos on YouTube

3) Mobile data content: instant messaging Internetsearches, connection via social media

4) Sensor information incorporates fixed sensors, home control, climate/contamination sensors, traffic sensors/webcam, science sensors, and security/reconnaissance recordings/pictures.

5) Recordings/photographs of safety/surveillance

6) Machine information like street cameras, satellites, games, clinical gadgets

7) Transactional information like Invoices, instalment orders, stockpiling records, conveyance receipts

2 Sources of Big Data Analytics [4]

1) Creating companies that are smarter and moreeffective. For example, the New York Police Department isimplementing a new pattern recognition technology called patterner to better solve crimes using big data by allowing investigators to dig through "hundreds ofthousands" of case files. The result seems to be one that lets prosecutors minimize some of the work they must do by partly automating a procedure that has previously been done manually.

2) Analyze consumer actions and improve company processes. For instance, Amazon increased its rates to draw morebuyers and raise sales by an average of 25% each year.Prices are determined based on the website operation,pricing by rivals, commodity supply, itemtastes, orderhistory, estimated profit margin, and other considerations.

3) Service cost control. For instance, Parkland Hospital in Dallas, Texas, usesadvanced analytics to identify high risk patients and assist them in overcoming illnesses. As a result, this facility has seen a decrease in 30 day readmissions and has saved over $500,000 per year. [5]

4) Cutting edge items. For example, Netflix discovered consumer direct andpurchasing habits by collecting input from their 151 million allies and executing data appraisal models. After that, they will use that knowledge to suggest movies and TV shows based on their allies' preferences. Google self driving vehicles: The data vehicles get through these sources furnishes them with the information expected to make safe driving decisions. Before self driving vehicles change the auto business, which will expand the security of streets around the world. Smart yoga tangles: The tangle will want to give criticism on your stances, score your training, and even guide you through an at home practice. [6]

Factor

Engineering Technology (IJRASET

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538 Volume 10 Issue IX Sep 2022 Available at www.ijraset.com

1) Descriptive Analytics: This analytics is a starter phase of information handling that makes a rundown of chronicled information to yield helpful data and perhaps set up the information for additional examination. For instance, Google Analytics devices is the best model for worker through the instrument which help comprehend what really occurred before and approveif a limited timecrusade was fruitful ornot founded onessential boundaries like online visits. [7]

2) Predictive Analytics: This analytics includes removing information from existing informational indexes determined to recognize patterns and examples. These patterns and examples are then used to foresee future results and patterns. While it is anything but a flat out science, prescient examination furnishes organizations with the capacity to dependably gauge future patterns and practices. For instance, Southwest Airlines investigations sensor information on their planes to recognize designs that demonstrate a likely breakdown, along these lines permitting the carriers to the fundamental fixes before its timetable. [8]

3) Perspective Analytics: This analytics is the following stage up in information decrease. It uses an assortment of measurable, demonstrating, information mining, and AI strategies to contemplate later and verifiable information, accordingly, permitting experts to make forecasts about what is to come. For Example, Google self driving vehicle is an ideal illustration of viewpoint Analytics. It examines the climate and chooses the bearing to take dependent on information.

4) Diagnostic Analytics: This analytics is a type of cutting edge investigation that looks at information orsubstance to address the inquiry, "For what reason did it happen?” It is described by procedures, for example, drill down, information revelation, information mining, and relationships. For example, social media is showcasing effort, you can utilize indicative Analytics to survey the quantity of posts, specifies, blossoms, fans, online visits, audits,pins, and so forth, and investigate the disappointment and achievement pace of the mission at an essential level. [9]

1) Identifying the Issue: The team researches the business context, as well as any related experience, such as whether the enterprise or business unit has attempted similar ventures in the past and what lessonsthey might draw from them. In terms of personnel, technologies, time, and data, the team evaluates the tools required to fund the project. Framing the marketdilemma as an analytics issue that can be solved in subsequent stages, as well as formulating initial hypotheses (IHs) to validate and begin understanding the data, are important activities in this process.

2) Designing Data Requirement: The existence of alogical sandbox, in which the team will deal with data and conduct tests for the duration of the project. To bring details into the sandbox, the team must use ELT(concentrate, load, and adjust) or split, change, and burden. The ELT and ETL are often abbreviated as ETLT. In the ETLT cycle, information can be updated so that the community can deal on it and break it down. The community must also fully acclimate to the knowledge at this point and find ways to condition it.

Journal for Research in Applied Science & Engineering Technology (IJRASET)

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538 Volume 10 Issue IX Sep 2022 Available at www.ijraset.com

3) Pre processing Data: The group settles on thesystems, techniques, and work process it can use during themodel structurestage. The group researchesthe information to see more about the connections between factors, at that point picks the primary factors and the best models.

4) Performing Analysis over Data: Team makes datasets for use in examination, educating, and improvement. Furthermore, considering the work acted in the advancement arranging measure, the group creates and carries out models in this stage. The group likewise talks about if its present instruments would be sufficient for running the reproductions, or whether it would require a steadier system for demonstrating and work process execution.

5) Visualizing Data: The group, in a joint effort with significant partners, decides whether the consequences of the undertaking are a triumph or a disappointment dependent on the measures created in recognizing the issue. The gathering should perceive key revelations, assess the business regard, and encourage a record, tosummarize, and give disclosures to accomplices.

1) Hadoop is a product structure utilized for grouped document frameworks and taking care of enormous information. It measures datasets of enormous information through the MapReduce programming model.

2) Xplenty is a stage to incorporate, measure, and get ready information for examination on the cloud. It will bring all your information sources together. Its instinctive realistic interface will assist you with carrying out ETL, or a replication arrangement.

3) Lumify is a free and open source instrument for enormous information combination/coordination, investigation

4) DataWrapper is an open source stage for information representation that guides its clients to produce basic, exact, and embeddable outlines rapidly.

1) Healthcare: The degree of information produced inside medical care frameworks is not inconsequential. Customarily, the medical care industry slacked in utilizing Big Data, on account of restricted capacity tonormalize and merge information. In any case, presently Big information investigation have improved medical services by providing personalizedmedication and assessments. Experts are mining the data to see what meds are more effective for conditions, perceive plans related to sedate outcomes,and gains other critical information that can help patients and reduce costs. With the expanded utilization of mHealth, onlineHealth, and wearable advances, the measure of information accessible is quick. This incorporates information from electronic health records, symbolism, patient produced information, sensor information, and different types of information. It is possible to predict diseases that would increase in specific areas by combining medical services data with geological informational sets. It is easier to schedule diagnostics and loading serums and immunizations because you know what to expect.

2) Science: Data extracted from IoT devices allows for the organization of devices based on their accessibility. Various organizations and governments have used such mappings to expand their capabilities.IoT is similarly logically understood as a method for gathering content data, and this unmistakable data is used in therapeutic and delivery environments.

a) Drugs Testing: Big Data developments, according to a McKinsey poll, could save drug makers $40 billion to $70 billion in innovative work costs. To test medications and therapies, the FDA and NIH use Big Data analytics to access a vast volume of data.

b) Climate Predictions: The National Oceanic andAtmospheric Administration assembles informationthe entire day from ground, ocean, and space based sensors. Day by day they utilize massive data to examine and remove the esteem from more than 20 terabytes of information.

3) Security: Since Government significantly acts in every one of the spaces, accordingly it assumes asignificant part in improving Big Data applications inevery area. Allow us to view a portion of the significant security zones:

a) Network safety and Intelligence: The public authority dispatched an organization insurance creative work plan that relies upon the ability to analyze tremendous enlightening files to improve the security of U.S. PC associations. For instance, satellite and online media data. It contains a grouping of data from masterminded unclassified and profoundly private associations.

b) Criminality Prediction: Advanced, ongoing investigation can be used by police agencies to provide useful intelligence that can be used to better interpret criminal activity, detect wrongdoing/episode trends, and recognize risks in certain areas.

Impact Factor

Factor 7.894

International Journal for Research in Applied Science & Engineering Technology (IJRASET)

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538 Volume 10 Issue IX Sep 2022 Available at www.ijraset.com

4) Business: Big data is discovering use in practically all ventures today. Here is a rundown of the top sections utilizing large information to give you a thought of itsapplication and degree.

a) Manufacturing: To build profitability by utilizing large information to upgrade store network the executives. Assembling organizations utilize these logical apparatuses to guarantee that are apportioning the assets of creation in an ideal way that yields the greatest advantage.

b) Transportation: Better road routing, traffic monitoringand regulation, and logistics are all advantages. Governments primarily do this to avoid traffic jams ina particular location.

Major benefits of using Big Data Analytics: [10]

A boost in productivity

Improved experience and commitment

Better business decision making

It was possible to save money.

1) Improve client incorporations: Complete coordinated,semi coordinated, and unstructured data from touchpoints your customer has with the association to get a 360 degree viewpoint on your customer's direct and motivations for improved custom fitted publicizing. Data sources can consolidate web based media, sensors, mobile phones, evaluation, and call log data. [11]

2) Detect and moderate extortion: Screen trades persistently, proactively seeing those weird models and works on showing bogus activity. Using the power of tremendous data close by judicious/prescriptive examination and relationship of undeniable and worthbased data helps associations with expecting and lighten distortion. [12]

3) Drive inventory network efficiencies: Gather and inspect big data to choose how things are showing up at their goal, recognizing inadequacies and where costs and time can be saved. Sensors, logs, and contingent data can help track fundamental information from the circulation community to the goal.

Many people believe that data evaluation will come later, andsome people are concerned that working with massive data stores will lead to security breaches and increased checks. Theability to arrange and isolate monumental plans of intelligence, which is being developed mechanically, could spark reformist changes in human society. Driving technologists and researchers all over the world foresee positive outcomes from Big Data; however, others are concerned about the expected gains.

Information has been created dangerously fast lately. For a layman, dissecting this information is troublesome. Keeping that in mind, we analyze the various investigation questions, issues, and techniques used todecipher huge information in thisarticle. As per the consequences of this investigation, each enormous information stage has its own one of a kind accentuation. Some are intended for cluster preparing, while others dominate at constant investigation. Each enormous information stage likewise has explicit usefulness. Variousprocedures utilized for the examination incorporate measurableinvestigation, AI, information mining, clever investigation, distributed processing, and information handling. The future enthusiasts will focus harder on these methods to take care of issues of enormous information viably and effectively.

All Rights are Reserved | SJ Impact Factor 7.538 | ISRA Journal Impact Factor 7.894 |

Journal for Research in Applied Science & Engineering Technology (IJRASET)

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538

Volume 10 Issue IX Sep 2022 Available at www.ijraset.com

I take immense pleasure in expressing my deep gratitude toour beloved professor G. Suresh Reddy, Professor & Head, Department of Information Technology, VNRVJIET, and I take tremendous delight to communicate my deep gratitude toour beloved Guide Dr. G. Madhu, Professor of Information Technology, VNRVJIET, for his significant ideas and uncommon bits of knowledge, for the consistentwellspring ofsupport and motivation all through my task work.

[1] https://www.researchgate.net/publication/328783489

[2] https://www.weforum.org/.../how much data is generated each day cf4bddf29f

[3] https://www indeed com/career advice/career development/big data analytics

[4] https://www.geeksforgeeks.org/different sources of data for data analysis

[5] https://www.parklandhealth.org/parkland financial assistance

[6] https://www.investopedia.com/articles/investing/052014/how googles selfdriving car will change everything.asp

[7] https://smartbear.com/blog/use google analytics find devices customers use

[8] https://www.bizjournals.com/losangeles/news/2019/02/21/southwest airlines uniquely painted planes.html

[9] https://blog.hootsuite.com/what is social media analytics/ [10] https://blockgeni.com/benefits of big data analytics

[11] J. Padhye, V. Firoiu, and D. Towsley, “Insights of customer service unstructured data” Univ. of Massachusetts, Amherst, MA, CMPSCI Tech. Rep. 99 02, 2009.

[12] https://reolink.com/blog/protect yourself from extortion/

Factor 7.894