ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538

Volume 10 Issue XI Nov 2022 Available at www.ijraset.com

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538

Volume 10 Issue XI Nov 2022 Available at www.ijraset.com

Kaushik Kumar Jha1, Balram Kumar Jha2, Suyash Ajit Byale3, Vivek Sitaram Dadewa4, Prof. Pushpanjali. S. Sajjanshetti5

Abstract: Hand signs are a viable type of human-to-human correspondence that has various potential applications. Being a characteristic method for collaboration, they are generally utilized for correspondence purposes by discourse impeded individuals around the world. As a matter of fact, around one percent of the Indian populace has a place with this class. This is the key motivation behind why it would meaningfully affect these people to integrate a structure that would figure out Indian Gesture based communication. In this paper, we present a method that utilizes the Pack of Visual Words model (BOVW) to perceive Indian communication via gestures letter sets (A Z) and digits (0 9) in a live video transfer and result the anticipated marks as text as well as discourse. Division is done in view of skin tone as well as foundation deduction. In this paper we are going to use convolutional neural network for sign language detection.

Index Terms: Convolutional Neural Network, Deep Learning, Feature Extraction, Image Processing, Image Classification, Sign Language Detection.

Correspondence has consistently assumed a fundamental part in human existence. The type to interface with others and put ourselves out there is an essential human need. Nonetheless, in view of our childhood, schooling, society, thus on, our viewpoint and the manner in which we speak with others can contrast generally from everyone around us. What's more, guaranteeing that we are perceived in the manner we plan, assumes a vital part. In spite of this reality, typical people don't have a lot of trouble collaborating with one another and can communicate their thoughts effectively through discourse, motions, non verbal communication, perusing, composing, discourse being generally utilized among them. In any case, individuals impacted by discourse impedance depend just on gesture based communication, which makes it more challenging for them to speak with the rest of the larger part. This suggests a necessity for communication via gestures recognizers which can perceive and convert gesture based communication into communicated in or composed language as well as the other way around. Such identifiers, nonetheless, are restricted, exorbitant, and awkward to utilize. Presently, analysts from various nations are chipping away at these sign language recognizers, which is the principal reason behind the development of automatic sign language recognition systems.

1) Image processing & pattern recognition.

2) Training TensorFlow for sign language.

3) Design a suitable algorithm for hand gesture recognition.

4) Detecting sign language in real time.

Speech impaired people use hand signs and gestures to communicate. Normal people face difficulty in understanding their language. Hence there is a need of a system which recognizes the different signs, gestures and conveys the information to the normal people. It bridges the gap between physically challenged people and normal people.

Shagun Katoch et al. [1] stated that In this paper, creator present a strategy that utilizes the Pack of Visual Words model (BOVW) to perceive Indian gesture based communication letters in order (A Z) and digits (0 9) in a live video transfer and result the anticipated names as text as well as discourse. Division is done in view of skin tone as well as foundation deduction. SURF (Speeded Up Hearty Highlights) highlights have been separated from the pictures and histograms are produced to plan the signs with comparing names. The Help Vector Machine (SVM) and Convolutional neural network (CNN) are utilized for grouping. An intuitive Graphical UI (GUI) is likewise created for simple access.

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538 Volume 10 Issue XI Nov 2022 Available at www.ijraset.com

Yogeshwar I. Rokade et al. stated that this paper, a strategy is proposed for the programmed acknowledgment of the finger spelling in the Indian communication via gestures. Here, the sign as motions is given as a contribution to the framework. Further different advances are performed on the information sign picture. First and foremost division stage is performed in light of the skin tone to recognize the state the sign. The recognized area is then changed into paired picture. Afterward, the Euclidean distance change is applied on the acquired twofold picture. Line and segment projection is applied on the distance changed picture. For highlight extraction focal minutes alongside HU's minutes are utilized. For grouping, brain organization and SVM are utilized.

Ananya Roy et al. [3] stated that to perceive the American Communication via gestures and converts it to message. Input given to the framework is a picture of the hand portraying the essential letter set. The histogram of the information picture is then registered and checked for likeness with the histograms of pre saved pictures by utilizing the Bhattacharyya Distance Metric. OpenCV is utilized as a device for picture handling in the proposed framework. The picture whose histogram is the most comparative with the histogram of the information picture is then checked for its related letter set and the letters in order is printed out.

Sawant Pramada, Deshpande Saylee, Nale Pranita, Nerkar Samiksha [4] proposed that foster a keen framework which can go about as an interpreter between the sign language and the communicated in language progressively and can make the correspondence between individuals with hearing weakness and ordinary individuals both compelling and productive. The framework is we are executing for Two fold sign language however it can recognize any communication through signing with earlier picture handling.

Jinalee Jayeshkumar Raval et al. [5] stated that filling the hole between in an unexpected way abled individuals like challenged and the others. Picture handling joined with AI helped in shaping an ongoing framework. Picture handling is utilized for pre handling the pictures and extricating different hand from the foundation. These pictures acquired in the wake of removing foundation were utilized for shaping information that contained 24 letters in order of the English language. The Convolutional Brain Network proposed here is tried on both a uniquely designed dataset and furthermore with constant hand motions performed by individuals of different complexions. The exactness got by the proposed calculation is 83%.

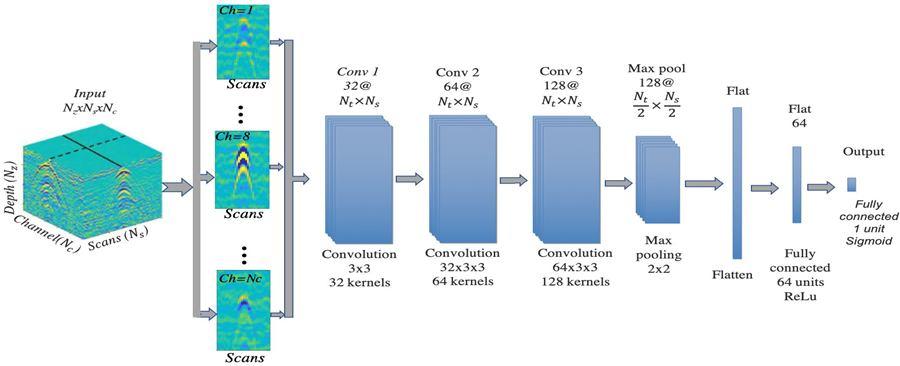

The proposed system is detecting sign language from image. In that we are going to use deep learning architecture for classification is convolutional neural network.

Fig1. Proposed Architecture

1) CNN: Convolutional Neural Networks (which are furthermore called CNN/ConvNets) are a sort of Fake Brain Organizations that are known to be areas of strength for hugely the field of separating confirmation similarly as picture request. Four primary tasks in the Convolutional Neural Networks are convolutional layer, max pooling layer, relu and completely associated layer.

Fig2. CNN Architecture

ISSN: 2321 9653; IC Value: 45.98; SJ Impact Factor: 7.538 Volume 10 Issue XI Nov 2022 Available at www.ijraset.com

In this project we will detect the signs from image using deep learning model which will calculate the features and classify the sign to respective category. Also we will trying to improve the performance of model.

[1] Shagun Katoch a, Varsha Singh b, Uma Shanker Tiwary “Indian Sign Language recognition system using SURF with SVM and CNN” Array 14 (2022) 100141

[2] Yogeshwar I. Rokade, Prashant M. Jadav " Indian Sign Language Recognition System" International Journal of Engineering and Technology (IJET).

[3] Ananya Roy, Dr. Sandhya Arora" Recognition of Sign Language using Image Processing” International Journal of Business Intelligence and Data Mining · January 2018).

[4] Sawant Pramada, Deshpande Saylee, Nale Pranita, Nerkar Samiksha “Intelligent Sign Language Recognition Using Image Processing” IOSR Journal of Engineering (IOSRJEN).

[5] Jinalee Jayeshkumar Raval, Ruchi Gajjar “Real time Sign Language Recognition using Computer Vision” 2021 3rd International Conference on Signal Processing and Communication (ICPSC)