Welcome to the 2023 collection of prize-winning Extended Research Projects.

This independent study programme gives students in their Lower Sixth year the opportunity to study a question of their choosing with guidance from a supervisor in their field. There are few restrictions on where their interest and passion can take them, and the process culminates in the submission of either a 3,000 to 4,000 word written piece or a production project, such as a film or computer program, with supporting materials. All will have engaged in their own research and presented their views, positions, intentions and/or judgements in an academic manner. The project seeks to encourage them to be intellectually ambitious alongside developing the creativity, independent study skills and broader scholastic attributes which will allow them to thrive at the finest institutions of higher learning.

After a rigorous process of external marking and viva voce interviews, the prize-winning projects presented here represent the very best of more than 250 unique submissions made by students across the Haberdashers’ Elstree Schools. They showcase the remarkable application, courage and ambition of all of the students in completing such exceptional pieces of independent work alongside their A Level subjects and many co-curricular commitments.

We are immensely proud of the achievements of our students; the depth and range of the projects they have completed is inspiring and we are excited to share them with you.

Creative Faculty

STEM Faculty

Humanities and Social Sciences Faculty

1ST PLACE ABIGAIL SLEEP (CLASSICS)

2ND PLACE JANA LAI (PSYCHOLOGY)

3RD PLACE RAYAAN AHMED (ECONOMICS)

HIGHLY COMMENDED SOPHIE GRAHAM (ENGLISH)

HIGHLY COMMENDED ZACK FECHER (POLITICS)

HIGHLY COMMENDED ARYAN JANJALE (PHILOSOPHY)

Creative Faculty

Fraser Hauser

CREATIVE WRITING

It is hard to say what The Artist’s Parable is truly ‘about,’ and indeed I fear it would defeat the point of the work to do so. Rather, I believe the work was an attempt – failed, mind you – to put to rest some questions about the nature of art that had been lingering in my mind for a long time. These are questions such as: can art be truly genuine? does this matter? and what should art be? It is for this reason that the work is multi-disciplinary, for I wanted to target these questions from different angles; personally, I believe the musical component of this work to be inseparable from the written element. Either way, it is a work I’m very glad to have produced and one that I feel supplemented greatly my study of English, History and Music at A level. Plus, I believe The Artist’s Parable set my application to study English literature at university firmly on course, and I’m grateful to have had the opportunity to submit this work as my ERP.

The Artist’s Parable

By Fraser Hauser

‘All art is at once surface and symbol.

Those who go beneath the surface do so at their own peril.’

- Oscar Wilde, Preface to ‘The Picture of Dorian Gray.’

PROSE, he thought, is like a glass of wine; sipped at intervals by men in suits from half carafes at au Babylone in Paris; guzzled from cartons by the accepting middle-aged at children’s parties; or necked, red, thick and cheap from bottles by teenagers on car seats at night. It can be formal and scientific, or acrid and intoxicating; he pictured bottles stacked neatly on the sommelier’s rack, and then smashed or vomit-spewed at the Plaza de Toros de Las Ventas, where he had felt so very alienated. Why then, he thought, must books be picked to pieces? Why must we enjoy them too much or not at all? They, like wine, have lost their regal quality, and are ‘available at half price!’ – he read from a bookshop window. But no, he thought, they haven’t lost it entirely; he remembered how a teacher had told him to shy away from pretty covers and how that had stopped him reading. He remembered how a friend had mocked him for choosing the bottle with a pretty label and how he hadn’t allowed himself to enjoy it. This was, he concluded, no world to birth his work into. Oh! how he would hate to have his wine left broken at a bullring or to have it logged and documented like an artifact at a museum only to be spat out into a plastic cup. He cringed to picture his novel wrung out on the academic’s table until devoid of all meaning, its spine weary with pencil annotations. Placed unopened on a bedroom shelf to bolster its owner’s ego or perhaps their chances in bed. ‘Useless!’ he said aloud, and vowed never to be a writer.

The artist left the library at dusk, and London was cold and dry-blue with streetlights as students pushed past him to get to the entrance. He held a thin slip of paper - a receipt from Trattoria du Romano near Venice. Cradled it, damp and creased with April rain, but that was not important. All he needed was the address. Scribbled frantically on the back, it read: ‘Chalk bridge, Towpath Rd, river lee navigation, The Wyrd, 11ish?’

That was where he needed to be.

MUSIC, he continued, is much like tobacco. He readjusted his clarinet case until it sat flush with his back, and recalled how he had always played, and how difficult it would be to stop. For him, music had become not so much a hobby or career; it was an inveterate propensity that – though he would never admit it – somewhat defined his character. He loved music. He loved it very much. “But I’m no musician!” he said (out loud, though no one heard). He, unlike everyone else, wore no headphones; he had grown to despise how visual, how aesthetic music seemed to be. Unlike Prose, he thought, one’s eyes are free to wander unhindered with music, and even if one shuts them their mind drifts to personal associations – perversions even – that they might have with it. And then we dramatise! We make our lives like movies, lives that are not ours, not anyone’s, the lives of those one aspires to be. Smoking is cool, he thought, but the chic brings wrinkles, the buzz, consequences. Unlike Prose, so much of music must be built upon falsifications; music is, like smoking, too good for this world. Those he knew who wrote music the best – ‘music heard so deeply that it is not heard at all, but you are the music while the music lasts 1;’ music that only poetry can describe – were those who, stuffing pipes at piano stools or disappearing behind a stage door for an origami-grade rolled cigarette, could falsify with integrity, and make movies of us all. Indeed, they seemed so sure of their music that one would struggle to question their authenticity; it hung around their person, exuded their character with an unfaultable accuracy. You could smell and see - sense - music on them, wherever they were and were not; on their clothes, their face, and through their half glasses left idle on a pub table. Whether music was doing them good was to them neither here nor there; they could not do with the half-glass alone, and would not be convinced otherwise. How easy for them it must be! he thought. Their work, that was so true to themselves and yet fit so seamlessly into others’ lives was to him a mystery of creation; one must simply be good at lying, or at convincing themselves they are not, he concluded. He plugged what he could of the address into his phone then proceeded as required, pausing only briefly to light a cigarette in the dull warmth of Smithfield, long after hours. Sounds of people moving hung in the air: the shutting of pub doors; the wavering hum of trains beneath; an argument over money or perhaps the request for a lighter. It might have seemed quite harmonious to a quiet mind.

1 TS Eliot, The Dry Salvages

The artist’s, however, was full of fragments. He heard distinctly a small moment from a night in Dublin months ago, and there too was a looping scrap from a score 2 he had glanced at before leaving the library that he would surely return to. But beyond these were hints at things more profound, perhaps the ineffable power he had (and still) felt, holding, hearing that half-silence - both in and out of time - before the applause of the night before; a fleeting power which faded and died unnoticed at Exmouth Market.

POETRY then, he asserted, must be like drugs; or perhaps a strong spirit sent for when the wine was done, and we simply couldn’t wait. It is like a psychedelic: intoxicating to the point such that it cannot be explained; powerful such that it can make or break, at least define the mind; and alien, such as to change someone forever. It is an attempt to explain the unexplainable; to remember a forgotten melody from a mere fragment 3. To pick up the pieces. That, is the poet’s job. To assemble a long-smashed vase, from fragments swept away, or to reconstruct a skeleton from bones buried long ago. No, he thought, rather to construct from those old components a new vase, a new skeleton, and then to make it breathe; to find new meaning in their intricacies. Intricacies, that scared him. That seemed out of reach. Truthfully, he had not the mind to see them, nor to express them effectively; to seem free within a sonnet or grounded in free verse; to interpret perceptively the most innocent of things. The psychedelic had chewed him up and spat him out, and now he could not - would not - trust his eyes. What appeared, then disappeared before him often had no bearing on others; his mind, was scarred. Perverted. Then again, it always had been.

2 ‘Score,’ here refers to a sheet music score.

3 This is not his idea – it was written in a letter from Philip Larkin to JB Sutton that the artist had read and forgotten.

The artist arrived at King’s Cross in the final, lambent light of day, glad he had walked there. Minutes before, his mind had briefly fallen silent, and he had seen a picture in a woman, sitting on a curb across the Pentonville Road in the dry-blue lamplight, her face reflected in the freshly fallen tarn water of a puddle before her. For a moment, it had been clear in his head, though he would have to wait to see if it had been made clear in execution.

Deep below the city, the artist considered his chances. Perhaps he could in any case simply hang it on his wall at home or put it on the internet. That would be enough, but only if it was good. Briefly, it seemed as if the whole world rested upon this single photograph, and not the score in his bag nor the address on the receipt or any coherent amalgamation of his past beyond the woman at the curb.

Upon emerging back into the world however, the photograph was quite forgotten, lost in an invasive, periphrastic line of thought provoked by some poem the man opposite him on the tube had been reading. He stepped outside the station, unsure of where he was going.

ART, or at least what he saw in galleries, was more difficult to place. He thought it much like lust or longing; emotions often brought on quickly, without a clear cause; emotions that, until experienced, are a mystery one feels they understand. As a child, he had been bored by the Beatles in his father’s car; how could they sing song after song about something seemingly so simple and dull? so superficial - gross even. It was not until he thought himself assailed by love that he saw the songs for what they were or seemed to be, and pushed the songs on others, blithe in their ignorance! With love in his system, the songs made sense. Perhaps then, Art is like a cocktail, deceptive in strength, that to its drinker is a risk they are used to and yet unaware of. Can I hold it together this evening after a couple Manhattans? he remarked to himself rhetorically and laughed. Often, he would skip the odd meal to make drunkenness cheaper; as, when he was younger, he would when supposedly love-sick. But Hemingway had been right; art is more beautiful when hungry, 4 and drunkenness more intense when empty. Indeed, it was not until he walked, nauseated, 5 empty-bellied and adult through the Giardini della Biennale - at an hour far earlier than one would expect – that he, upon sitting down with his family at a restaurant in Burano later that day, felt he could (and should) explain the art he had seen with great clarity and scholarship, whether or not they wished to hear it. So much for them; he ate and drank heartily, and paid when it was over.

4 Ernest Hemingway – A Moveable Feast.

5 ‘Emotional,’ perhaps.

By now, the sun had long set, and save for the odd drunk or curious walker the artist was alone. It was a Tuesday, and the city had gone to sleep. “Perhaps I could be an artist,” he thought. He found the nearest bench and sat down; closed his eyes briefly, then forced them open. Beyond him lay a narrow, effulgent path of white toward the crescent moon in the sky or perhaps the aircraft light of an idle crane that parted the treacle-black water of the Walthamstow wetlands. A plane passed slowly, as if hovering overhead, and a man approached the artist slowly.

‘Surely, it must be him! And why else would he be out here?’ the man muttered beneath his breath. He had hidden his face from the artist behind a book of poetry on the tube - had left the station through a different exit - but was now convinced that he and the artist were heading to the same place, which (to say the least) puzzled him slightly. But he had to say something.

On the bench, the artist tensed up slightly as the man approached, his face concealed by darkness; it had been the aircraft light, which had turned off without warning moments before. So much for that. In between the man’s footsteps, the artist heard clearly the dissonant hum, the din of the London beyond that he had long drowned out. Patiently, he sat, waiting for the man to pass; but that did not happen.

‘Sorry, is it Adam?’ the man asked assertively.

FILM, he confessed, was something he knew little about. It was to him rather like beer; cheap, and often comforting provided one enjoys it at home, perhaps in packs of four, six or twelve; enough to draw one back, and to balance the books at the end of the month. Easy, and inconsequential; background noise with a partner, for when music was too obtrusive. A comfort. ‘There is no need to venture out;’ to invite extra cost atop the fixed monthly fare for something hoppier that requires more attention. £7 2/3rds pints, hazy in twelve-percent opaqueness, eyes squinting in an indie cinema. Deceptive prices. Drinking noise. The preference of the bearded IPA nuts, Amber Leaf in hand. And it was either this, or Haribo-vomit seats to view the domestic blockbuster of tomorrow; Budweiser-float with a paper straw. Crying children. Johnny Depp’s redemption story. He simply could not get excited. Film, could never be a destination; he would simply wait, and pay for it to come to him.

‘Um, yes?’ the artist replied. He pretended not to recognise his inquirer, but in fact recalled his face even in the dark. The man’s figure, now rotund and plump, had changed (grown) since they had last met, and a leather portmanteau hung from his left shoulder. The artist placed him in his early fifties.

‘I’m not sure if you’ll remember me, but I believe we have worked together; Einstein on the Beach? 6 2016? You conducted, I’m sure!’

‘Oh… Yes, I did. Sorry, what’s the name?’

‘It’s David, don’t you remember? I played the judge and doubled as a dancer, believe it or not!’

6 An opera written in 1975 by Philip Glass and Robert Wilson that has no single coherent narrative.

‘I um-’

‘Are you heading to the Wyrd?’

David, the opera singer, spoke with a certain pomp which the artist found deeply irritating. That - unlike his figure - hadn’t changed.

‘Um, err, yes although I’m not really sure where it is… Katherine? gave me this address but it got smudged in the rain haha… and lea valley navigation (he showed David the receipt) doesn’t quite cut it…’ he laughed again.

‘Yes, no that isn’t ideal, but I’ll show you where it is! It isn’t far… but what brings you here, may I ask?’

‘I err, just liked Katherine’s work and happened to bump into her on a train; she then invited me here.’

‘Huh; I’d have thought you were too highbrow for the Wyrd myself. It’s not much of a destination, more of a hang-out, and I definitely wouldn’t call it a party. But I should not be too negative; we do have fun! But come, we’re late already.’

The opera singer set off down the narrow path at quite a speed and slipped, heavy footed, into the near-pitch black.

2.

It did not surprise the artist that the Wyrd was in fact a boat, although other aspects of it most certainly did. Docked to the canal’s edge by a loose cleat hitch, its long, thin hull stretched out diagonally across the water like a forgotten Ever Given, 7 so low the artist suspected she had grounded out.

‘That’s well parked,’ the artist remarked. David the opera singer chuckled briefly.

As they drew closer, the Wyrd’s colour changed distinctly from an orange-yellow to a darkish blue that had been obscured by lights; lights from the industrial park beyond the metal fence of the far bank, that mixed with the dull, stagnant green of the water below. The artist noticed too a strange obelisk-like structure on the boat’s roof that was revealed to be a small, minaret-style chimney puffing out a thin line of smoke.

‘I’ll just send them a message to let them know we’re here; one moment.’

The artist nodded and turned toward the metal fence; ‘TRESPASSING STRICTLY FORBIDDEN,’ a sign read. He took a few steps back. Looked down to the stern of the boat. Beyond the central windows – which, clouded by condensation, offered no view inside – a thin border of white light seeped through what he presumed to be a blackout curtain, and illuminated briefly a small wooden coracle that sat angularly in the water and was tied to a loop on the boat’s roof.

‘Strange,’ he said aloud.

7 One of the largest container ships in the world, which famously blocked the Suez Canal for a six-day period in March 2021.

‘OK they know now, should be -,’

The Wyrd’s front hatch flew open and smashed against its hinges with an idle thud.

‘Oop, shit! Sorry David, is Adam here?’ Katherine popped out from the hatch much like a jack-in-the-box and scanned the canal path.

‘Yep I’m -,’ the artist began.

‘Oh thERE he is! You two had best come in; I think the weather’s about to turn.’

Adam stood, frozen for a moment.

‘After you,’ said David, gesturing toward the boat.

Katherine ducked back into the small, red-tinted room she had emerged from and began fiddling with a series of locks on the inner door while the artist climbed in behind her. She wore a pristine set of navy coveralls and a joint rested against her ear.

‘I can’t believe I’m doing this,’ the artist thought, standing huddled in a darkroom, the opera singer’s belly protruding uncomfortably into his back. The air was thick and he felt his suit cling to sweat.

‘Sorry, this is quite the operation; it’s a soundproof door you see. Just one more. Oh, and I will warn you Adam, it won’t be what you expected in there.’ Katherine placed her set of keys in an old, empty can of film and then placed that on a small shelf. ‘See for yourself.’ Slowly, she pushed the door open with her right shoulder, and slid off to one side.

She was right.

It sounded like a rainforest. Like the start of Nunu 8. Various butterflies and moths scrapped over the glow-worm hanging lightbulbs then gave up and came for one’s sweat. They had escaped from the terrariums, of which there were at least six; each between four and five feet tall, convex in shape and placed atop shallow plinths. At the far wall, a man sat reading on a large wing chair placed upon a dais, and two further terrariums stood in alcoves on either side of him, as if marking a throne. He did not look up and kept his face concealed.

Have I found Kurtz?! the artist thought. Willard had to look a bit harder.

He made to speak, but his throat and eyes began to burn for the stench on board was inescapable. It oozed from the very walls; from the innumerable nosegays and bouquets of freesia, daphnes, amaryllises, plumerias – proteas even – that lined the outer wainscot of the cabin, and much of the border between walls and ceiling; from the two Mabkharas burning Bakhoor by the entrance; from a cannabis pipe atop a spinet piano. If one were to cough, they would never stop - but Adam couldn’t help it. He stumbled toward the port wall, dropped his bag and felt a glass press against his lips.

‘Fucking hell! So violent! Drink this, it’ll help; see, that door is a sort of airlock too.’ Katherine’s voice was clear and comforting, and he felt the water soothe his throat. She gestured to the man that the guest had arrived.

8 A track by Mira Calix, released 2003, composed largely of insect-noise samples.

‘Fuck me!’ the artist spluttered between sips. ‘That chimney should let a bit more out!’

He poured some of the water onto a handkerchief and cleared his eyes of onion-tears. Katherine and David, both smirking slightly, had sat down on a small sofa opposite where he was standing, and were pouring glasses of wine. He smirked back, though mainly at their contrary appearances; whilst she was slight, elegant and quite beautiful, the sepia-tinged, frowsty lamplight aboard the Wyrd did David no favours in appearance; he seemed a chubby infant, with sweaty stubble and a cigar.

‘Ah, I see my wife has helped you,’ Kurtz’s voice bellowed from across the room. ‘She’s awfully good at it these days. Most respond like you did, sometimes worse! But I like it that way.’ The man rose whilst speaking, crossed the tessellated slate floor and thrust his hand out toward Adam, who took it. His face was furrowed, his hair grey, and his eyes were deeply set and jaundiced. However, he still retained an element of youth. ‘It’s Aitzaz,’ he said, ‘and I know who you are; it is a great honour.’

‘Aitzaz,’ the artist replied. ‘Good to meet you.’ He needed a cigarette but left asking if he could smoke one for too long; the man continued: ‘I am most pleased that you came here, though due to my wife’s work not mine; I hear you are a fan of it.’

‘Yes, I think Katherine’s novels are brilliant.’ She sat lighting the joint she had prepared and did not hear him. ‘Very creative. Eh, um; sorry can I smoke a cigarette in here?’

‘I’d rather you didn’t,’ Katherine butted in, almost in unison with David, whose Brick House Churchill gave off thick, gooey smoke and whose shirt was stained red with Chateau Giscours. ‘No, not in here!’

‘Ridiculous!’ Aitzaz asserted, directly toward his wife; he was the shorter of the pair. ‘Be my guest.’ He produced a pack of Dunhill silvers from his pink, collared shirt pocket and held them right up to the artist’s mouth; all the text on the carton was in Arabic, and Adam took one with his hand and lit it tentatively. ‘Do not flatter her too much!’ Aitzaz continued. Laughed, but Katherine did not. ‘The novels are successful, but such high praise is unhealthy.’

‘Oh, I think you should give people praise every once in a while. Especially when they deserve it. Otherwise it can all get too much, and you get too hard on yourself.’ Adam glanced back at Katherine. She had covered her mouth and nose with her coveralls and was hiding behind them, poking her head out occasionally for brief pulls on the joint or sips of her wine. She said nothing and put on an N95 mask.

‘You know, Adam, I have met you once before; you may not remember.’ He strolled back toward his throne, but paused and stood on the centrally positioned, damasked rug that stank of mould. ‘Please, sit down.’ He gestured toward a second sofa and lit a cigarette for himself.

‘Thanks.’ Adam did not recall having ever met this man but was relieved to finally be offered a seat.

‘It was two, three years ago I believe. I had been commissioned as the artist for the Lebanese pavilion at the Venice Biennale and was in my prime regarding both the reception of my work as well as my prolificacy. I had created a multi-disciplinary work of great scale that was re-constructed in the Giardini by a highly paid team of foreign labourers with assistance from some of my trusted artist friends who had European or American passports. And this was mostly funded by my family for the money provided to me by arts funds was not enough. But that is not important. I was a very different man then, and I remember feeling as if no one could possibly top the work I had created when I arrived on opening day, but then I walked around! And I recall you distinctly directing a group of musicians in the British pavilion and that I found it rather bewildering but excellent. Improvised, I believe. We talked briefly afterwards, but you seemed slightly subdued and claimed the performance had been no good. Either way, you were not too keen on praise then my friend. But enough! What is it you are doing these days? For I am most interested. And will you take anything? A drink perhaps, or something stronger?’

Adam sat bolt upright, and was only slightly short of mortified, for he did not remember this meeting in the slightest, and it had not been his artwork. He had merely coordinated a small musical element - blagged it, drunk - and had left before lunchtime.

‘It’s Mahler nine, isn’t it? LSO?’ David shouted over the noise, cutting off his conversation with Katherine.

‘No that -,’

‘Ah, yes, for I was there last night. Magical, just magical! And you can hold silence well, my friend!’ Aitzaz spoke over him.

‘Tha – thanks, I appreciate it, but Mahler nine is done as of yesterday.’ The fumes were starting to get to him. ‘I – I, shouldn’t say this really, but I have two new commissions. Royal Opera House. Opera called Lucia, about James Joyce’s daughter. Oh yeah, and Turangalîla. But I’m, But I’m not sure if I’m up to it really. I want to try something else.’

‘Lucia? Why I think I’m in that one chap! And don’t worry I’m sure it will be splendid.’ David did not seem to notice Adam’s sombreness.

‘Don’t want to do it! But why? You have such a skill. A way with things. And you are young my friend! Under 30 and conducting at this calibre?’

David and Aitzaz spoke simultaneously, amidst the lingering bug sound, and watched as Adam’s head drooped toward his knees in sadness and the rain began to fall outside.

‘Please can we talk about something else?’ Adam muttered, much like a child.

‘Don’t worry Adam, I expected Aitzaz to suck up to you this way. It must be uncomfortable. He even told me to keep quiet, I might cramp his style, he said.’ Katherine put a hand on Adam’s shoulder, then removed it, and the room fell silent. Aitzaz retreated toward the door opposite the entrance which opened, as expected, onto a small room in which he was growing drugs. He returned shortly afterwards holding another pipe which he gave readily to Adam.

‘Please do not embarrass me, Katherine. And yes, we may talk about something else. I was going to ask what you thought of the boat, for it is only a temporary project of mine that I will exhibit as a series of photographs. You however are lucky enough to see the whole thing.’

‘Fucking hell Aitzaz, just ask him out already.’

‘SHUT UP KATHERINE!’ He snapped and lunged toward her, but she didn’t flinch even slightly, for he looked quite pathetic. ‘GIVE ME THIS ONE FUCKING THING BETWEEN ALL OF YOUR ATTENTION! I HAVE MY SUCCESSES TOO!’

Adam and David quite simultaneously reached for their phones and scuttled towards the door, making up then amending their excuses as they moved. As they wrestled with its various locks and escutcheons, their hosts were still at each other, careering round the terrariums as if they were not there at all and the room was perfectly normal. In his occasional glances back, they seemed as if they were dancing to a simple motif Adam thought he heard from behind the spinet piano but resolved that he must have simply made up.

‘Hurry up David! You’ve been here before! You must know how the door works.’

‘I DO, IT’S JUST QUITE COMPLICATED.’

In one swift movement, David finished unlocking the door, though not in a conventional sense, as he and the artist fell atop one another into the adjacent room having torn the large metal door clean off its hinges. ‘Oh my good lord, I’m ever so sorry.’

Adam could take no more and began laughing uncontrollably as he pulled himself back up, leaving David face down in the darkroom. He could still hear the piano, but the two had ceased their performance, Katherine soon joining him in unrestrained laughter and Aitzaz’s face scrunching inward, from shock to apoplexy. Stubbing out his cigarette, Aitzaz let out an enigmatic grunt and strolled back to his chair, at which point Adam noticed a knocking sound from the outer hatch.

‘Answer that, Katherine. And it better not be the police,’ Aitzaz grumbled between breaths.

3.

Katherine was eager to move the boat that night, despite Aitzaz’s bid to remain for the purpose of a photograph he had not yet taken; however, upon receiving an ultimatum from a man at the hatch to stop blocking the canal or be reported, he promptly folded, and Katherine donned a black poncho and resolved to move the boat out onto the wetlands for the night, and search for a spot in the morning. Adam and David had no say in the matter and were by then too drunk or drugged and confused to object.

‘Don’t worry about the door,’ said Aitzaz, having calmed down slightly. ‘I’ll make something of it.’

‘I do have to ask man, what’s with this place?’ Adam replied, giggling slightly through sips of David’s wine. ‘Like, you don’t live here do you?’

‘No, no I do not. But I do at the moment. It is a new, risky project of mine but I do not regret it. You see Adam, despite what Katherine might think, I am not a failure; I have worked on film sets, produced concept albums, successful exhibitions and have published a collection of poetry and a handful of novels. But, I am not happy with them, and I found having to keep these things separate tiring and unfulfilling. And it is not because they did not do well - again, I was at the Biennale a few years ago - but rather because I didn’t feel that they were genuine. If that means anything. But I feel that in this boat I have created a space that is truly mine regardless of how strange it is and I had to do something new, even if it reduced me to madness.’ He paused, and leaned over to one of the small airplane windows, noting that they were now moving. ‘I feel as if to confine one’s self to a single medium is so awfully restrictive and gives way to a condition of split self; and that is why I use this boat as a shebeen of sorts because I get so many opinions on it from people of so many disciplines. They think, “Aitzaz Haidar has lost his mind!” but usually come round to understand it once I explain. It is an essay on pathways, routes out, entrapment and freedom within limits. I shall exhibit it as photographs purely because of its illegality; otherwise I should want everyone on here. It is my safe space, an outward manifestation of myself, and that is what I want my art to be beyond anything else.’

‘He gives the speech to everyone Adam, maybe you can explain it to me! I just come here because it is lawless and I’m rather fond of Ka – never mind.’ David stage-whispered crudely toward him, in clear earshot of his host.

‘All that said, I still respect a good craft, and that is why I encouraged you to take those commissions. But I feel you have a similar condition to myself in all this, and I am sorry if I came across pressuring.’

Adam sat back in his seat such that his head met its backrest and picked up the pipe once more. What did he make of this man, Aitzaz? It was a gloomy mix of fascination, pity, confusion, and deep respect; that he should, after all his success, have the courage to make something as different, as bizarre, as this, that which Adam didn’t grasp as art in and of itself; that was quite something. And yes, he did feel much the same way himself. He did not want to be a musician, a filmmaker, a poet, or a novelist; it was a matter of definition, of confinement, for if you want to be those things, ‘you will invariably become them.’ 9 He thought of all the praise he had received, for being something he did not want to be; how his fiancée had been ‘so glad to be marrying a conductor;’ how his parents only put up with him when he told them of the next great project, the next pay-check. He thought of hanging around, outside stage doors, with people so set and happy with being musicians; drugs wearing off, and having nothing to write about; and wishing, longing to be like others. Katherine, definitely; perhaps not David. But now, there would be no more of that; if there was space for this man, there would be space for him, and he would make what he wanted, when he wanted, and only take up that commission if he felt like it; he had enough money already, and his fiancée worked in finance.

Adam could sense the wetlands were close, and had given up on going home that night. Conversation ground to a halt on board, and the Wyrd inched slowly along the canal, almost crawling along its bed. The rain that fell outside had grown heavier such that from the windows it looked as if a thick sheet of glass were falling out of the sky, glinting with light then falling to the ground on perpetual loop. Inside, the sound of insects, idle conversation and the background piano merged into a strange kind of silence that one got used to, much like a city soundscape; now, he could imagine staying, and fell into a strange, comforting reverie in his new home, as if it had always been there and nothing could disturb him.

9 Quote by Oscar Wilde.

Aitzaz was the only one standing up, and was taking innumerable photos then fiddling with the broken door which still brought laughter to Adam’s mouth.

‘I’m slightly worried that the wetlands might be too choppy for my third guest,’ he said.

Adam thought nothing of this comment for a few minutes, presuming Aitzaz had made a mistake, but then winced at the thought of the space being unknown to him in any way.

‘Thir – third guest?’ he mumbled, his head spinning.

‘Ah, yes I probably should have told you, though I presumed you heard the piano playing, no? It’s not a pianola!’

As if on demand, a man of small beard, trimmed hair and average height stumbled up off the piano, gripping it for balance. Adam recognised the face; it was one he had seen on television, at Glastonbury, at the Hammersmith Apollo; it was a musician he adored, manifested upon this boat. But no, for he was real; his eyes bloodshot and his pupils the size of beachballs.

‘Careful, careful,’ Aitzaz mumbled, backing off. ‘He’s been on some stronger stuff.’

David, Adam and Aitzaz froze as the man’s face darted about the room, and small, frightened yelps fell out of his mouth. Without the piano, the room seemed achingly loud, and beneath their feet, the boat began to rock with unnerving speed; they had arrived at the wetlands.

‘No-one move,’ Aitzaz asserted, as if he hadn’t created the situation and was now the hero within it. They sat, or stood completely still for what felt like hours, until a crackle of lightning and a huge gust of wind to the side of the boat set the man wild, clawing at the windows for a means of escape.

‘Fuck! Keep him away from the side hatch and Jesus! Guard the terrariums!’ Aitzaz screamed, running round the room aimlessly once more as Adam and David shielded themselves in their respective seats, fully aware their host was out of his depth. The man, quite unknown to Aitzaz, had found the side hatch, opened it without challenge, and proceeded to launch himself out into the water, pushing the boat further down in the process. Before anyone heard the splash, water began flowing freely into the boat on the starboard side, tilting it downward and pushing the port side terrariums off their plinths until they smashed, ear-splitting, onto the slate below.

It was chaos. All manner of insects that Adam had never seen – never knew existed – clambered freely about the cabin or took flight within it. There were titan beetles, bizarre moths, mantises, various hand-sized cockroaches, and soon after the lights were broken by water, the scene was lit purely by a host of luminescent fireflies. How Aitzaz had got hold of these bugs was a mystery; how he had kept them alive was a miracle. Not that that mattered. It was every man for himself, and Adam forced himself to sobriety upon seeing David collapse, paralysed by some strange, foreign hornet Aitzaz had collected.

Unsure whether to scream or laugh, Adam swiftly manoeuvred himself out on to the boat’s exterior, his hands on the roof and his feet underwater, lodged on the gunwale. He was heading for the coracle, and looking for Katherine, though she had long since jumped ship for a reason he did not understand. The water was cold as he swam for the small wooden vessel, and looked as though it stretched for miles out into a roaring sea rather than for a mere thirty or so metres. He could not believe he was in London, and had no idea what had happened to Aitzaz. But there was no time for heroics; he clambered into the tiny boat, cut the connecting rope, and began rowing furiously with a small pink plastic oar he found in the footwell towards the bench he had sat on earlier.

‘This is fucking ridiculous!’ he cursed himself as he moved slowly across the water. Glancing down, he saw what he thought was a piece of bamboo lodged onto his tie, but was in fact a huge stick insect that spanned the entire length of his torso, which he batted away into the water out of fear and anger. On the verge of tears, he reached the bank, relieved to be safe. There are some dangerous, dangerous weirdos out there! he thought. I thought you knew to always steer clear of them. You fucker! You’re about to be married for fuck’s sake! Stop hanging around on illegal bug smuggling boats with idiots! Fuck you! This will make the press! And David will talk about it if he makes it out alive. And God! Don’t say that he will die. And Aitzaz too! You’re a conductor! Go home!

He glanced at his watch; it was far too late to take a train. Out on the water, he saw the Wyrd go down slowly until it disappeared under, and the silhouette vanished; merged into the treacle black.

He would start work on the commission tomorrow, but it was at this point that he realised – with an awful, awful sinking feeling – that he had left his scores on board. The end.

Benjy Ezra

ART

Benjamin Ezra chose ‘Narrative Storytelling through Sculpture’ as his ERP title, intertwining an interest in Classical Mythology and a desire to explore sculpture. His project dissects the conventional portrayal of classical stories through the atemporal medium of sculpture. Using the stories of Prometheus and Pandora as a nucleus, he crafted twin sculptures of his own, illustrating themes of ascension and retribution in a tangible form. Benjamin is presently studying English Literature, Art, and Mathematics, with aspirations to enrol in an Art Foundation program next year.

Storytelling through Sculpture

Accompanying walkthrough text

Narrative in Ancient Greek Art

Introduction

Art is prehistoric, developed by some of the first humans as a means of communicating – telling stories as drawn or engraved on cave walls. Pictures, symbols, and art as a whole were used as narrative devices before text or speech existed. How has this been developed? And in ancient Greece, how was art used to retell the stories we all know today of myth and monster? This civilization was known for great sculptures and architecture – the Parthenon’s pediments crowded with sculptured retellings of myth. How is a written story told through sculpture, and how or what can I create to develop and respond to this?

Beginning Research into this Concept

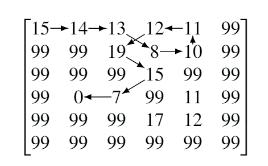

Most Greek stories were written in the form of poetry, The Iliad and The Odyssey being key examples, and many we learn through ancient artifacts and pottery. The book ‘Image and Myth’ examines a key difference between these two different narratives. Luca Giuliani argues that prose exists in succession, and therefore can be crafted by the author to be read and experienced in a specific, measured way and speed. The reader cannot choose any way of understanding the story other than that provided, and hence the story remains sequential for every reader.i However, art exists all within the same instant – it is temporally unified. Every part of the story told exists in the same frozen moment of depicted time. The artist is far less capable of structuring the process of reception as a temporal sequence, meaning every person experiences the story in their own order and can take more varied impressions – how does an artist ensure the intended storyline is being told? How can a single image or sculpture depict multiple series of events in the correct order?

In the article ‘Greek Art’, John Griffiths Pedley examines the methods artists within this period used to create such narratives. When comparing the representation of mythological figures within Greek art to other periods of time or cultures, such as Hindu or Egyptian gods, there is a noticeable difference in content. Often the art becomes symbolic and inhuman – animal heads, obvious superhuman imagery such as wings, multiple arms, or distortion of body shape and size. Yet in Greek representations of stories and gods, rather than attempting to create a new form, superior or different in nature to the ordinary man, attempts are made to depict superiority via perfection of the already used human form and shape.ii This involves, Pedley argues, the use of idealism and aesthetically pleasing curves, angles, or proportions, depicting speed, strength, or power within a given mythological creature. It is not a different form to the human, but simply a heightened one.iii Knowledge of anatomy is taken advantage of to create what should be, as opposed to what is – creating a godly sculpture or image in the sense that it becomes an amalgamation of perfect forms, muscles, curves, and sizes. Pedley’s research into this field can prove extremely useful when needing references and methods to respond to this theme, in order to appropriately engage with this style of sculpture and image. Yet while it provides support in narration of character and form, it does not explore much in terms of narration of events.

Figure 1. Funerary Proto-Attic Amphora with a depiction of the blinding of Polyphemos by Odysseus and his companions, Archaeological Museum of Eleusis, Greece

Figure 650 BC, probably

In the book ‘Sculpture and Vase Painting in the Archaic and Classical Periods’, Susan Woodford analyses the different stories depicted through Greek pottery, mostly from the perspective that the viewer is already familiar with the narratives. For example, a vase in the Eleusis Museum (above, Figure 1) portrays the story of Odysseus and the cyclops Polyphemus on its neck. To make the story clear, the painter chose to illustrate the easily recognisable scene of blinding, having three men pointing their spears into the single eye of a cyclops (indicated by his great size in comparison) – which a viewer would hence assess is Polyphemus. Their leader, leaping and the only form in white, is therefore featured in an obvious way, which in turn also portrays the story through the lens of this ‘hero’ – his dynamic pose and unique colour depicting him as a saviour. The cyclops raises one ineffectual hand in an attempt to push the stakes away, and with the other it holds a wine cup. This is crucial, because while the painter depicts this storyline all in one, it is not intended to be received as the three men attacking Polyphemus while he is drinking; the wine cup is present to clarify that the cyclops had been made drunk prior to the institution of the attack plan.iv The artist was not interested in depicting a single moment, but the story in its entirety. In contrast, we are provided with another example in which a painter depicts the story of Troy on a vase (above, Figure 2), but rather than depicting the story through one image, they use the cyclical shape of the vase to progress the story left to right. These are two strikingly different narrative approaches – a story in one image, and a broken-down story in multiple images.

This leaves me with a series of decisions to make, as well as more research to carry out. In responding to this, how do I wish to depict a story? Shall it be done in succession, or with no temporal sequence? The methods by which I will replicate Greek styles and traditional art can be learned, and more inspiration can be found. My next steps will be to find a specific story to explore and to develop these themes of sculptural narrative.

Initial Exploration of form and narrative

Selecting a Story

After reading more and researching different classical stories, initially the story of Icarus intrigued me the most, and seemed to have lots of possibilities for exploration. In this particular myth, Daedalus, a mythical inventor, created a set of wax wings for himself and his son, Icarus, to escape captivity from King Minos. Yet Icarus ignored his father’s warnings, flying too close to the sun, meaning the wax melted, and he fell to his tragic death in the ocean. I think it was honestly the opportunity of depicting wings, along with the concepts present in the story – perseverance, tragedy, and ambition – that enticed me.

However, after looking at different ways the story has been depicted before, I realised that the story would not allow me to truly develop and explore narrative sculpture. Firstly, the order of events relies too much on outside influence: the sun, the ocean, the tower in which they were held captive, all of which hold important influences on the stages of the story (such as the melting of the wings, or the cause of death). As I am intending to create a figure-based sculpture, there would not be much opportunity to include landscape and scenery. Furthermore, the myth is too simple – too little happens; there are only really 3 different stages of the story. I would much rather use a story with many more details and context to allow me to really stretch my development and experiment with what I can create as a response to a narrative text.

Following this decision, I managed to decide on a story that really interested me and abided by the rules I had set to allow my experimentation to be as wide as possible – the story of Prometheus, which I soon realised tied heavily into that of Pandora, being a connection I wanted to visually explore. I read widely to understand the key aspects of the story, using the extracts below as key inspiration.

Prometheus, however, who was accustomed to scheming, planned by his own efforts to bring back the fire that had been taken from men. So, when the others were away, he approached the fire of Jove, and with a small bit of this shut in a fennel-stalk he came joyfully, seeming to fly, not to run, tossing the stalk so that the air shut in with its vapours should not put out the flame in so narrow a space. Up to this time, then, men who bring good news usually come with speed. In the rivalry of the games they also make it a practice for the runners to run, shaking torches after the manner of Prometheus.

In return for this deed, Jupiter, to confer a like favour on men, gave a woman to them, fashioned by Vulcanus [Hephaistos (Hephaestus)], and endowed with all kinds of gifts by the will of the gods. For this reason she was called Pandora. But Prometheus he bound with an iron chain to a mountain in Scythia named Caucasus for thirty thousand years, as Aeschylus, writer of tragedies, says. Then, too, he sent an eagle to him to eat out his liver which was constantly renewed at night.v

Pseudo-Hyginus, Astronomica 2. 15 (trans. Grant) (Roman mythographer C2nd A.D.)

He hid fire; but that the noble son of Iapetos stole again for men from Zeus the counsellor in a hollow fennel-stalk, so that Zeus who delights in thunder did not see it. But afterwards Zeus who gathers the clouds said to him in anger: ‘Son of Iapetos (Iapetus), surpassing all in cunning, you are glad that you have outwitted me and stolen fire – a great plague to you yourself and to men that shall be. But I will give men as the price for fire an evil thing in which they may all be glad of heart while they embrace their own destruction.’

So said the father of men and gods, and laughed aloud. And he bade famous Hephaistos make haste and mix earth with water and to put in it the voice and strength of human kind, and fashion a sweet, lovely maiden-shape, like to the immortal goddesses in face [Pandora] . . . But when he had finished the sheer, hopeless snare [Pandora the first woman created by the gods], the Father sent [Hermes] . . . to take it to Epimetheus as a gift. And Epimetheus did not think on what Prometheus had said to him, bidding him never take a gift of Olympian Zeus, but to send it back for fear it might prove to be something harmful to men. But he took the gift, and afterwards, when the evil thing was already his, he understood.vi

Hesiod, Works and Days 42 ff (trans. Evelyn-White) (Greek epic C8th or C7th B.C.)

After creating men Prometheus is said to have stolen fire and revealed it to men. The gods were angered by this and sent two evils on the earth, women and disease; such is the account given by Sappho and Hesiod.vii

Sappho, Fragment 207 (from Servius on Virgil's Aeneid) (trans. Campbell, Vol. Greek Lyric II) (Greek lyric C6th B.C.)

There is so much occurring in these myths, and all of the external influences – a jar, chains, fire, an eagle – are much more easily depicted three-dimensionally than landscapes. More importantly, there are many deep narratives and strong concepts of punishment, kindness, curiosity, and power, which have so much opportunity to be displayed through sculpture. In an article of hers, Helen Huckel highlights these different narratives. To Hesiod, Prometheus was a trickster, and to Aeschylus, he was a martyr who sacrificed himself for mankind’s benefit. In some versions of the story, he is the creator of man from clay, and in others it was he who gave mankind a conscious existence.viii Yet in all aspects, it was he who willingly defied the chief god, and allowed man to develop, grow, and exist in safety – fire being a substance still extremely crucial today. What caused this complex titan to have pity on humanity? Was this really his crime? And did this empathetic, pitiful crime justify the creation of Pandora, who is blamed for the opening of her jar when she was made to do just that? The contrast between Prometheus’ total control over his actions and Pandora’s calculated position on the gods’ chessboard is unique, and through these questions these characters are tied together – being exactly what I want to depict through my sculpture.

Stage 1 – Sketching and Photoshoot

Beginning the development of a physical sculpture, I wanted to explore different ways I could depict Prometheus, and at the same time develop my sculptural skills, as I have never touched the subject before. Hence, I first researched different ways this story has been sculpted in the past, and then created a series of sketches on which to base a photoshoot.

Some of the images I found most interesting are shown above, but the main themes of the sculptures, as seen in Figures 3 and 4, are a bold, courageously positioned Prometheus, amongst stone, and chained with an eagle on him. Despite Figure 5 being an old painting, the way the artist has chosen to depict him is still worth looking at; here Prometheus is more cautious, careful, in the moment of stealing fire. Often it was illustrations as opposed to sculptures that focused more on the suffering aspect of his story. The rest of the reference images I used can be found in the folder ‘1. Small Prometheus’ in my folder collection.

This sparked interest and ideas for me which in turn became the following sketches. The main ideas I wanted to portray were the heroic, brave side of Prometheus; the more secretive, trickster side; and by contrast the human connection he has. These manifested in the forms of pose, inclusion of things like fires, rods, or campfires (representing his theft), and the inclusion of a smaller, human form.

Figure 3. MGA Sculpture Studio, LLC.

Figure 4. Prometheus, captivated (1872-1879) by Eduard Müller (1828-1895); Museumsinsel Berlin-Mitte, Berlin

Following the planning, I set up a photoshoot to allow me to see these ideas in reality and aid me in creating the form using reference images. I recognised the lack of development some of the ideas had – such as Prometheus wrapped around a small mountain – as well as the level of difficulty of some, considering this piece is to help me get to grips with sculpture.

Figure 6. Model (Oliver) posing as Prometheus, climbing a mountain with a flame on top

Figure 7. Prometheus after stealing fire

Figure 8. The upper view of (Figure 8)

Figure 6. Initial Sketches for photoshoot to plan development of small Prometheus

Overall, I preferred the pose shown in Figure 7, as it seemed a good starting point to build skill from, and began to portray different aspects of Prometheus that I wanted to depict in the same instant – the flame, showing his theft, and kindness to humanity, but his expression and stance showing his carefulness and worry about his fate; the dilemma of his decision pictured well.

Stage 2 – Creating the Figure

After deciding which pose to use, I used these images to develop my skills and learn how to create a figure three-dimensionally, as seen in the images of the sculpture below. I found various YouTube tutorials helpful in finding where to start, and they propelled me into the piece.ix, x, xi

Stage 3 – Review

Looking back at the creation of this, it was an extremely useful process that let me springboard onto the final piece I would eventually create. When approaching this small form, I made the limbs separately and then connected them – something that negatively affected the cohesion of the body’s form. My next approach would therefore be to create the form all in one, perfecting the limbs on the same body of clay, which also makes the structure much stronger. Further, ensuring a body is standing, as I had planned for this piece, was much harder than I thought, and something I did not consider enough at the beginning. The pose was neither dynamic nor structurally sound enough to hold itself up, so it had to rely on the late addition of a stone-like form on the ground, which in turn took away from the power that the form was supposed to have, standing alone.

The nudity of the sculpture reflects the fact that he is a titan, often depicted nude, but I think the addition of some level of clothing would add more to the piece and be interesting to create. Further, the inclusion of the torch represents the theft aspect of the story, but his pain, his captivity, and his punishment are absent here. Overall, this process was very helpful for understanding the art of sculpture, but the creation does not represent the entire story well, only really a specific moment – how can I develop more of a sense of time through this fixed piece?

Figure 9. Final Small Prometheus Outcome

Manifestation of narrative in final form

Planning Final Forms

Moving on to planning a proper piece as a final response, I knew I needed to include both Pandora and Prometheus but needed to determine how to link them together. Initially, I created the sketches below (in Figure 11), contrasting Prometheus’ bold brandishing of his stolen flames – showing his resourcefulness, but also his kindness to humans – with Pandora’s weakness and curiosity, reflected by his tall stature, and her lying down on the opposite side.

In addition, to tie together the temporal aspect of the story, I knew all parts of the story needed to exist in the same time, and I portrayed these through symbolic objects. For Prometheus, that meant including the bird, his arm chained, and perhaps some small humans at his feet. For Pandora, that meant the gifts of the gods, such as clothing or jewellery, and the pile of clay from which she was moulded.

However, I reformed these ideas (Figure 12), and I reshaped the general structure of the arrangement. This was initially caused more by practicality – having Prometheus stand would be logistically hard considering he is directly in front of Pandora, meaning he cannot lean on much, and further, the size of the kiln I have access to inhibits such a height. However, once laying him down, I found that rather than showing the contrasts between the characters in this story, the similarities between this titan and a human, in their moments of punishment, were much more interesting to experiment with and develop, prompting those final sketches.

This set in motion my final series of photoshoots for both Prometheus and Pandora (all images available in the folder collection).

Figure 11. Initial Plans for final outcome

Figure 12. Later, refined sketches of final outcome arrangement

During the photoshoots, I experimented with other poses of Pandora, such as sitting or crouching as opposed to leaning, but I ultimately felt that the position shown in Figures 13 and 14 reflected well the grace and innocent aspect of her, in contrast to the dramatic opening of the jar, representing different narratives well. Further, the Prometheus pose I used ended up being quite different, with the position mirroring Pandora’s more, better reflecting the pain he is in, but also providing a more dynamic contrast with the high raise of the torch.

Creating the Final Sculptures

Furthering my research, I had the opportunity to visit an exclusive exhibition of Rodin – a sculptor active in the 19th century who created large, incredible figures and forms in the classical style. The images I took were extremely helpful to both inspire and show me more accurately how to achieve a real, tangible feeling and shape of a form, and I referred to them heavily during my sculpting of the final outcome. These images can be found in my folder collection.

The creation of Pandora was lengthy and difficult. It was still really only the second sculpture I’ve made, and the first of that size. Using my smaller practice piece, I approached her form much more cohesively, but arriving at the clothes was a challenge. Rather than relying on my images, which no longer aligned so much with the stage my sculpture was at, I used a cloth, arranging it around my sculpture to inspire how I approached creating and arranging Pandora’s clothes (as seen in Figures 17 and 18, as well as in the folder collection).

Figure 13. Pandora Photoshoot Reference Image

Figure 14. Pandora Photoshoot Reference Image

Figure 15. Prometheus Photoshoot Reference Image

After finishing Pandora, I made Prometheus, and wanted him still to remain mostly nude, due to his being a titan, but added a small section of clothing, further creating some contrast between the two.

I also decided not to include the pre-planned eagle in the piece for three reasons: logistically, it would have to be quite small and would be brittle and highly likely to break; it could potentially distract from the main forms of the two central characters; and I could still allude to, and therefore symbolise, this aspect of the story without the eagle itself.

Final Photos

Along with the full photoshoot in the collection, below are some of the best images of the final, completed arrangement, with both sculptures complete.

Figure 17. Arranging rags to aid clothing creation

Figure 18. Arranging cellophane to create more creases

Conclusion

In this piece, Pandora and Prometheus are connected by their punishments. They are both depicted in their worst moments, but their stories and their strengths are highlighted and represented through different lenses of the sculpture, all in this fixed narrative. Pandora is shown at her worst: giving in to temptation and releasing, unbeknownst to her, the worst evils into the world. Yet here she is seen lying down, comfortable. Her nature of innocence and grace is clear, but the controlling and punishing actions of Zeus overarch this, through the opening of the jar. Her robes represent the beauty and craft gifted by Aphrodite and Athena, and her floral wreath is a marriage wreath, commonly worn by brides in Greek times, symbolising her being given away to Epimetheus, with no choice. Moreover, the depiction of the seven deadly sins as snakes is a key image used often in classical stories, taken from Medusa, who was raped by the Gods, and punished as a twisted, nonsensical result. Similarly, Pandora was created by Zeus simply to serve as a punishment for mankind. The contents of the jar in this way represent the control Zeus has over her – using her through this jar, and punishing her through it when she is his very creation, accentuating the cruel hypocrisy of the Gods, and the lack of agency she has in this fate. This complicated moment of punishment she lies in is arranged cyclically with that of Prometheus, whom I wanted to be on her other side, creating a totally outward, three-dimensional narrative – she begins where he ends, and vice versa. Her leaning down, opening the jar, still contrasts with his high raise of the flame torch he stole, yet he, a titan, is similarly being punished, this time for his own actions, despite the fact they come from a positive, empathetic, fatherly place. He lies there, not in grace, but in pain, in a lower position, weak, and on the ground, but bringing focus to his brave, strong action of defying the ruling Gods, all for the sake of humankind. His punishment of chains is included, and a slit at his liver to show its infinite cycle of destruction and healing.

Here, these two very different people – a human and a titan; a young, used woman and an incredibly powerful, age-old man; a jar-opener and a fire-giver – are united by their pain and punishment. The focus is brought to the justice of their actions, and despite their suffering one is brought to acknowledge the worth of the actions which led them there – advancing mankind. The cruelty of the gods, who are not present, is also brought to attention, and overall, for me, this piece sparks the concept of rebellion against an unjust system.

Reflecting on my initial concept, can a story be well told through sculpture? Without a doubt, yes. The inability to develop a visual temporal narrative might hinder the ability to create an entirely accurate narrative, but at the same time it allows the artist to completely transform the process of understanding a story. It does not matter so much that the original narrative is kept, because the existence of a complex story, shown in one beautiful moment – whichever the sculptor chooses – allows a story to be told through different lenses, and invites the viewer to think, to visually explore and rediscover a story in whichever frame it is chosen to be displayed. The artist has the freedom to create whichever links, show whichever concepts, and challenge whichever ideas they find interesting. This is in no way an improvement on nor a degradation of the written form, but a completely different way of experiencing a story, and it has allowed me to explore and create a piece that I find intriguing and thought-provoking, and of which I am proud.

i Image and Myth: A History of Pictorial Narra9on in Greek Art, Luca Guiliani, Translated by Joseph O’Donnell, (2013), pp. 24-172

ii Idealism in Greek Art, Percy Gardner, The Art World, Vol.1, No.6, (1917), pp. 419-421

iii Greek Art, John Griffiths Pedley, Art Ins9tute of Chicago Museum Studies, Vol.20, No.1, Ancient Art at The Art Ins9tute of Chicago (1994), pp. 32-53

iv An Introduc9on to Greek Art: Sculpture and Vase Pain9ng in the Archaic and Classical Periods, Susan Woodford, 2nd Edi9on, (2015), pp.62-96

v Pseudo-Hyginus, Astronomica 2. 15 (trans. Grant) (Roman mythographer C2nd A.D.)

vi Hesiod, Works and Days 42 ff (trans. Evelyn-White) (Greek epic C8th or C7th B.C.)

vii Sappho, Fragment 207 (from Servius on Virgil's Aeneid) (trans. Campbell, Vol. Greek Lyric II) (Greek lyric C6th B.C.)

viii The Tragic Guilt of Prometheus, Helen Huckel, American Imago, Vol.12, No.4, (1955), pp. 325-336

ix How to Sculpt in 4 steps: https://youtu.be/jCIGcAz0Snk

x Sculpting Timelapse – HEAD MODELLING (Tutorial): https://youtu.be/64bpcvDM4Ug

xi Speed Sculpting – What You NEED to know: https://youtu.be/H4WtpO8vfTU

Joshua Jonas

MUSIC

Joshua Jonas chose to produce an album of music as it was a chance for him to exercise his creative talents, whilst pursuing other academic interests. The EP consists of 3 songs across metal, Lo-Fi, and pop genres, and Josh handled the entire production process, including writing, mixing, and mastering. Joshua Jonas is studying Biology, Chemistry and Maths and wants to take Biochemistry at university.

Standoff Sequence

Initially, I am drawing an influence from MF DOOM’s album “Mm..Food” in which he has multiple interludes like this that seamlessly transition into the next track, which is evident in this piece transitioning into the metal track. I am sampling an episode of Spiderman 1967 called “To Catch a Spider”. With this track, my main influences were Iron Maiden’s album “Powerslave” and Rage Against the Machine’s self-titled album, several elements of which are incorporated into this track. With my overall EP, I am aiming for the over-arching theme to be the focus on guitar parts and this track almost solely focuses on the guitar. Initially, I have a slow rhythmic build up which is similar to some of the tracks off the Rage Against the Machine album, containing distorted power chord stabs and high-pitched licks. The chorus continues with a simplistic hook containing many dissonant intervals at a faster pace, accompanied by the drums which fully enter at this point and drive the chorus forward. During the latter half of the chorus, a lead guitar countermelody playing in thirds enters, similar to some of the tracks on Powerslave, providing melodic variation. During the verse, there is an extremely simplistic guitar line playing, reminiscent of RATM’s verses on their album. The guitar solo follows and contains many elements from Iron Maiden’s solos on Powerslave such as tapping, doubling the guitar in thirds and frequent modulations. Following this, the chorus occurs again, helping to solidify this as the main idea within the track. Immediately at the end, there is a short transition in which the rhythm of the track shifts from straight rhythms to triplet rhythms in the outro. Driving guitars and loud drums help to set the pace of this section, varying the pace of the song as a whole to provide contrast for the listener.

Ship In A Bottle

The aim of this song is to be a catchy sequence that transitions into the next song. With this track, I am again drawing an influence from MF DOOM as he tends to include many short interludes within his songs. I am sampling an episode of Bagpuss called “Ship In A Bottle”. I have chopped up some audio sections in the song so that certain phrases end on the beat which adds to the syncopation of the song. I have added a bouncy drum loop, some jazzy guitar chords, and a syncopated bassline that all add to the groove of the song. Halfway through the song, a plucky guitar line plays that provides contrast for the listener.

Contemplating Things

With this track, my main influence was Denzel Curry’s album “Melt My Eyez See Your Future” and specifically the track “Mental”. The track contains a very mellow instrumental which I implemented in my track. While Curry’s track had a clear spoken word influence, my track aimed in the Lo-Fi hip-hop direction. The chorus of my song is very simplistic, consisting of an electric piano, some drums, a bass, and my vocals. As well as this, the harmony consists of only 2 chords throughout, ensuring that the listener’s full focus is on the vocals. To link this song with the EP as a whole, there is a guitar solo after the 2nd chorus which is also simplistic in nature, as well as a call and response section in the final prechorus between the guitar and the vocals, which adds variation towards the end of the song.

STEM Faculty

Aran Asokan

CHEMISTRY

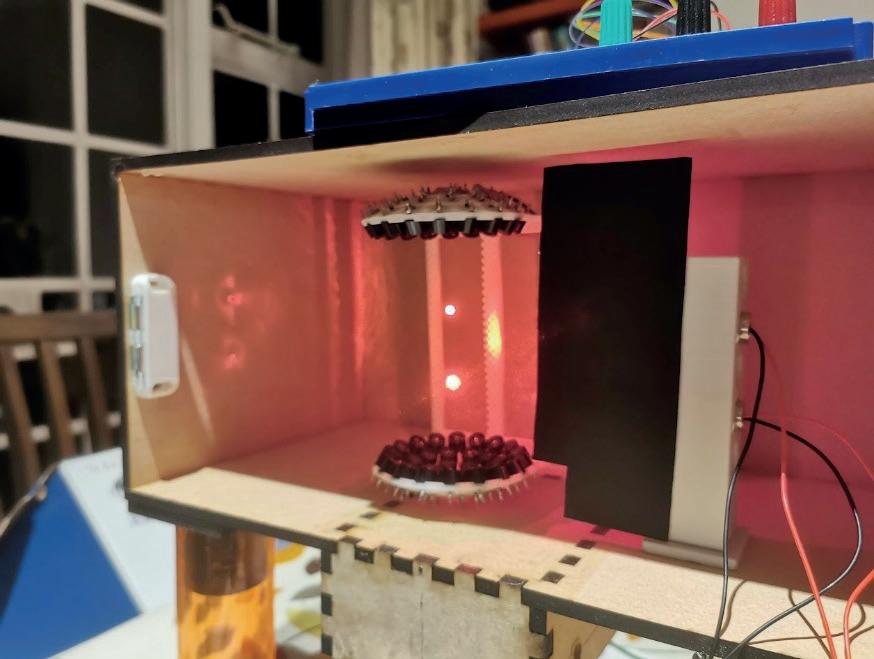

Aran Asokan chose “Carbon Mineralisation” as the focus of his ERP and its relevance in the context of overcoming coral bleaching. The project delved into the prospects of mineralisation as a carbon sequestering method and went into detail about how climate change is affecting coral reefs and how mineralisation can tackle the issue. Aran Asokan is studying Physics, Chemistry, Mathematics and Further Mathematics at A-level and is pursuing an engineering degree at university.

ERP: Is Carbon Mineralisation the Key to Tackling the Climate Crisis?

Introduction

Environmental conscientiousness has rapidly become one of the greatest concerns in society. The importance of adaptation of our means of energy generation towards sustainable methods has been emphasised greatly, but the reversal of damages incurred by humanity is relatively understated.

Current environmental revitalisation agendas revolve around carbon sequestration methods characterised in one of three ways: biological, technological, and geological. This paper aims to highlight the importance of geological approaches, and to integrate them with a biological system too, in the form of coral reefs.

Despite the global relevance of CO2 removal, engineered geological methods remain a niche solution, with carbon mineralisation still in its infancy. The process encapsulates the formation of carbonaceous rocks, largely in volcanic geological formations, using carbon dioxide extracted from the atmosphere: an acceleration of a naturally occurring, though much slower, version of the process which often takes centuries [1]. Although the process seems propitious conceptually, its scalability and viability have sparked debate over whether it is worth developing.

Instead of geological methods, technological advancements in Direct Air Capture (DAC) have received much more attention. Currently, over 90% of CO2 is captured from emissions immediately upon leaving factories [2]; however, DAC in its current state is not viable due to the number of capture sites needed to cover the entire atmosphere.

One carbon capture company, Climeworks, uses a potassium hydroxide filter and a series of reactions to produce pure carbon dioxide [3]. Air is pulled into a contactor system; the mixture passes through a filter containing potassium hydroxide solution, causing CO2 molecules to bind to the solution. Calcium hydroxide pellets pass through the solution, forming calcium carbonate. A centrifuge separates the potassium hydroxide and calcium carbonate; the KOH is fed back into the filter to be reused. The calcium carbonate is heated to high temperatures, forming calcium oxide and gaseous carbon dioxide, at which point the pure carbon dioxide gas can be sent for storage. A particular focus will be placed on carbonation/mineralisation for this.
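The steps described above can be summarised as a set of balanced equations. This is only a sketch: the stoichiometry and state symbols below are the standard textbook forms for these reactions and are an assumption here, not something taken from source [3].

```latex
\begin{align*}
\text{Capture:} \quad & 2\,\mathrm{KOH_{(aq)}} + \mathrm{CO_{2\,(g)}} \rightarrow \mathrm{K_2CO_{3\,(aq)}} + \mathrm{H_2O_{(l)}} \\
\text{Pellet reaction:} \quad & \mathrm{K_2CO_{3\,(aq)}} + \mathrm{Ca(OH)_{2\,(s)}} \rightarrow 2\,\mathrm{KOH_{(aq)}} + \mathrm{CaCO_{3\,(s)}} \\
\text{Heating:} \quad & \mathrm{CaCO_{3\,(s)}} \xrightarrow{\ \text{heat}\ } \mathrm{CaO_{(s)}} + \mathrm{CO_{2\,(g)}}
\end{align*}
```

Written this way, the second equation shows why the KOH can be fed back into the filter: it is regenerated in the same step that forms the calcium carbonate.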

Figure 1. An illustration of a typical DAC process [3]

As of September 2022, the International Energy Agency reported 18 operational direct air capture plants across the US, UK, and Canada [4], evidently a long way away from the number needed to reverse emissions stemming from the industrial revolution. Nevertheless, carbon capture and storage (CCS) methods will prove to be a necessity in rectifying decades of greenhouse gas emissions: it is estimated that CCS can reduce 85-90% of CO2 emissions from large emitters and from operations with high energy consumption rates [5].

One limitation with DAC is that the gaseous carbon dioxide removed from the atmosphere is inevitably recycled to be used in various places, such as in carbonated drinks, fire extinguishers, and as a refrigerant. However, this way of managing CO2 means that any volume of it that is sequestered eventually re-enters the atmosphere and must be re-captured. This creates an unsustainable loop in which costly DAC units are constantly running. Instead, the aim of DAC should be to lower the total volume of CO2 in circulation by finding a more long-term method of storage. In tandem with DAC technology, carbon mineralisation can provide a much more robust overall process for carbon capture, storage, and utilisation.

Chapter 1: Conceptualisation of Mineralisation

Carbon mineralisation is a process facilitated by exposing certain rock species to aqueous/gaseous CO2 to encourage accelerated mineral formation in the pores of these rocks (displayed in the picture above) [1]. In the context of aqueous carbonation in concrete, the reaction starts with CO2 dissolving in a water film covering the mineralisation site to form carbonic acid [7]. This acid dissociates into 2 H+ ions and a CO3 2- ion. Similarly, the rock undergoing the process, in this case Ca(OH)2, provides a positive ion - here, Ca2+ - to react with the carbonate ion, forming a carbonate mineral precipitate. Though the general reactions taking place are well understood, specific kinetics and mechanisms are currently unknown as carbonation is an under-explored chemistry process.

Figure 2. An image of mineralised carbon dioxide in rock pores [6]

Basaltic and ultramafic rocks are classifications with high pyroxene, olivine, plagioclase, Mg, Fe, and Ca contents and low Si contents; displaying the greatest affinities with carbon dioxide, these types are the most favourable for mineralisation. Silicates, too, are often used for mineralisation research purposes, both due to their abundance in the Earth’s crust and their fine balance between good reactivity with carbonic acids and the ability to form stable carbonates upon reaction.

The H+ reacts with a water molecule to form a hydronium ion, which then reacts with a hydroxide ion to form two molecules of water [7].
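The carbonation pathway described in this chapter can be set out step by step. The equations below are a sketch using the standard forms for each step; writing the dissociation of carbonic acid in two stages, via the hydrogen carbonate ion, is an assumption made here for clarity.

```latex
\begin{align*}
\mathrm{CO_{2\,(g)}} + \mathrm{H_2O_{(l)}} &\rightleftharpoons \mathrm{H_2CO_{3\,(aq)}} \\
\mathrm{H_2CO_3} &\rightleftharpoons \mathrm{H^+} + \mathrm{HCO_3^-} \\
\mathrm{HCO_3^-} &\rightleftharpoons \mathrm{H^+} + \mathrm{CO_3^{2-}} \\
\mathrm{Ca(OH)_2} &\rightarrow \mathrm{Ca^{2+}} + 2\,\mathrm{OH^-} \\
\mathrm{Ca^{2+}} + \mathrm{CO_3^{2-}} &\rightarrow \mathrm{CaCO_{3\,(s)}} \\
\mathrm{H^+} + \mathrm{H_2O} &\rightarrow \mathrm{H_3O^+} \\
\mathrm{H_3O^+} + \mathrm{OH^-} &\rightarrow 2\,\mathrm{H_2O}
\end{align*}
```

Adding the steps together, with the last two taken twice to balance the two H+ ions, gives the overall reaction:

```latex
\[
\mathrm{Ca(OH)_2} + \mathrm{CO_2} \rightarrow \mathrm{CaCO_3} + \mathrm{H_2O}
\]
```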

Ex-situ refers to the extraction of such rock types, to be used in high temperature and pressure reactors to forcibly control the reaction; the conditions needed range from 45-185°C and 1-150atm (depending on the sample being carbonised) [8]. Although the maintenance of such extreme conditions creates a large associated cost, ex-situ allows for iterative modification of the process to optimise reaction rates via additives and catalysts, and minerals produced can be sold for cement manufacturing, which helps combat the steep costs.

Additionally, ex-situ allows for the resolution of biohazard concerns surrounding mining tails; asbestos fibres interlaced within mined rocks are often inhaled by workers on-site, resulting in various health effects such as asbestosis. CO2 mineralisation acts to plug up pores in the rock, preventing the asbestos fibres from escaping and entering respiratory systems. The specific use of mining tails drives process costs down significantly: so much so that it becomes cheaper than in-situ - $8 per metric ton of CO2 mineralised compared to $30 for in-situ in basaltic formations, as estimated by USGS [9]. The main limitation of ex-situ stems from the large volumes of rock that must be extracted to mineralise enough CO2 to create a considerable impact on atmospheric CO2 levels. It is estimated that there is currently somewhere between 7.82 and 43.1 Gt of CO2 in the atmosphere, making it apparent that mineralisation techniques are currently far away from the scale required, as the annual volume of CO2 mineralised is currently in the tens of thousands of tons.
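To make the cost comparison concrete, the short Python sketch below multiplies a quantity of CO2 by the two per-ton figures quoted above from USGS [9]. The function name, the dictionary keys and the example quantity are hypothetical, chosen only for illustration, and the calculation assumes cost scales linearly with tonnage.

```python
# Per-ton cost estimates quoted in the text (USGS [9]), in US dollars
# per metric ton of CO2 mineralised.
COST_PER_TON_USD = {
    "ex-situ": 8.0,   # using mining tails
    "in-situ": 30.0,  # in basaltic formations
}


def mineralisation_cost(tons_co2: float, route: str) -> float:
    """Estimated cost in USD of mineralising `tons_co2` via `route`.

    Assumes a simple linear relationship between tonnage and cost,
    which is an illustrative simplification.
    """
    if tons_co2 < 0:
        raise ValueError("tons_co2 must be non-negative")
    return tons_co2 * COST_PER_TON_USD[route]


if __name__ == "__main__":
    example_tons = 10_000  # hypothetical quantity for illustration
    for route in COST_PER_TON_USD:
        cost = mineralisation_cost(example_tons, route)
        print(f"{route}: ${cost:,.0f}")
```

For 10,000 tons, this gives $80,000 for ex-situ against $300,000 for in-situ, which shows how large the gap becomes even at the current small scale.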

Figure 3. Showing a reaction pathway, in which gaseous CO2 dissolves in aqueous solution to form carbonic acid. The carbonic acid dissociates into a hydrogen carbonate ion and a H+. Simultaneously, calcium hydroxide in the reacting mineral dissociates into a hydroxide ion and a Ca2+. The Ca2+ reacts with the carbonate ion from the hydrogen carbonate, forming calcium carbonate.

In-situ mineralisation, in contrast, involves pumping CO2 into underground rock formations. With a comparatively small maintenance cost due to shorter machine use periods, in-situ has a much greater efficacy over the large scales of mineralisation needed to help the environment. Although one would expect in-situ to be a significantly slower process as ex-situ processes are engineered to maximise the surface area of rock carbonised, the total area of exposed rock in in-situ is so much greater, due to the total volume of basaltic/ultramafic rock underground, that the process is quite rapid (though still slower). Research conducted by CarbFix, an Icelandic carbon mineralisation company, reduced the mineralisation period to 2 years, as described by Ó. Snæbjörnsdóttir [10]. The unnatural efficiency levels make this rather captivating, as it creates the prospect of reversing the adverse effects of CO2 emissions over the last century in a very short period.

In-situ could be viewed as better primarily because it does not require the acquisition of a storage site for the carbonate minerals, since they can simply be left underground; however, ex-situ is arguably more cost-effective since the sequestered carbonates can be sold as a commodity, which is not possible when the carbonates are kept underground (it is clear to see why there are contending views in the field). In-situ is easily scalable as there are ample areas of rock that can be sufficiently carbonised (below), making it quite promising; a 2013 assessment of CO2 storage potential in the US, carried out by USGS [9], calculated that around 3000Gt of CO2 could be stored in sedimentary basins alone.

Combined with the additional area of ultramafic rocks, the CO2 storage capabilities far exceed the CO2 volume in the atmosphere – especially exciting as this makes a future with a more stable climate far more attainable, without even considering storage capacity globally.
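As a rough check on the scale of this claim, the quoted US sedimentary basin capacity can be compared with the range given earlier for CO2 in the atmosphere. The ratios below simply take both sets of figures from the text at face value.

```latex
\[
\frac{3000\ \mathrm{Gt}}{43.1\ \mathrm{Gt}} \approx 70
\qquad\text{and}\qquad
\frac{3000\ \mathrm{Gt}}{7.82\ \mathrm{Gt}} \approx 384
\]
```

On these numbers, US sedimentary basins alone would offer somewhere between roughly 70 and 380 times the storage needed, before ultramafic rocks or sites elsewhere in the world are counted.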

Chapter 2: Opportunity for Application of Mineralisation