Welcome to the October 2024 issue of

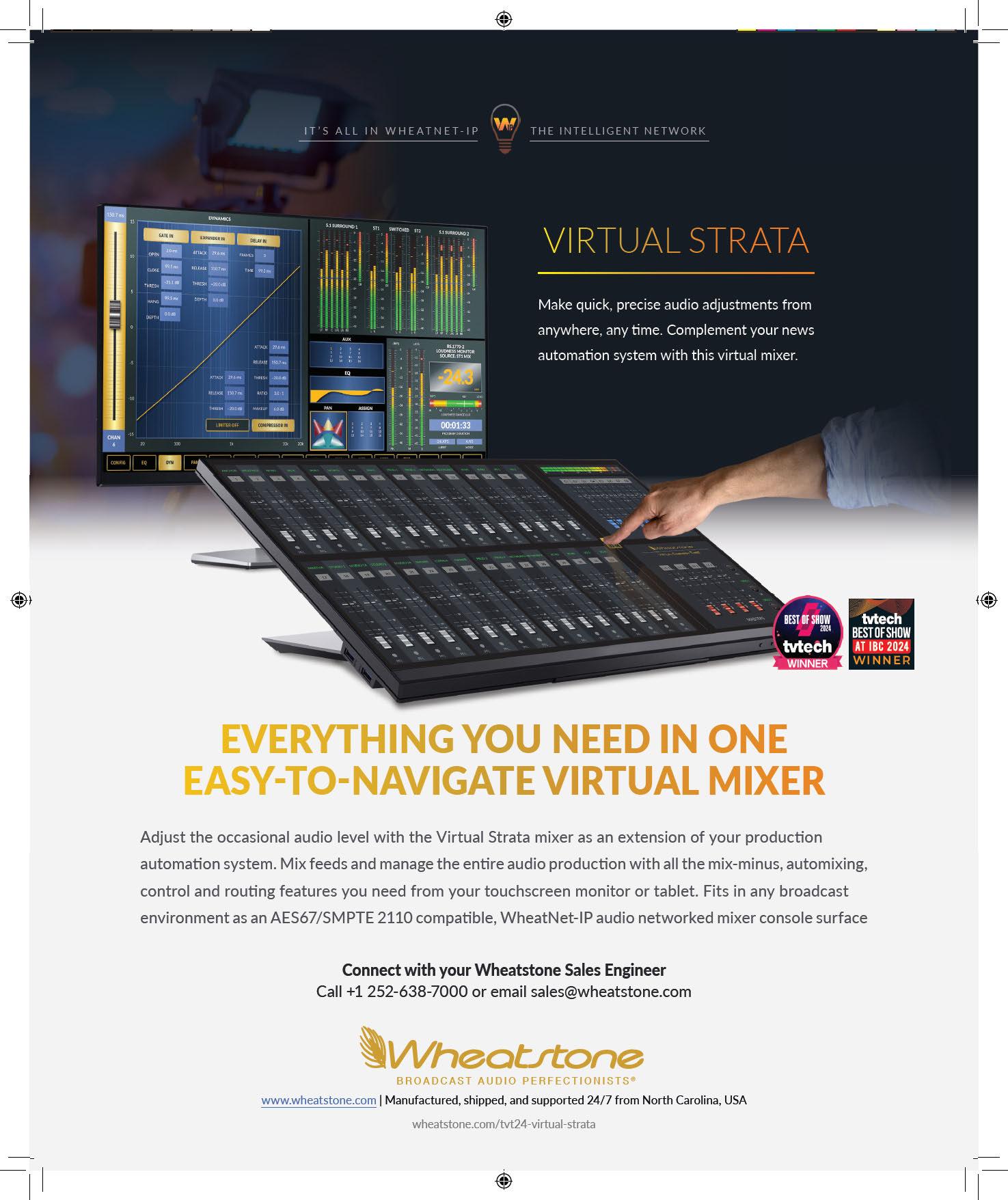

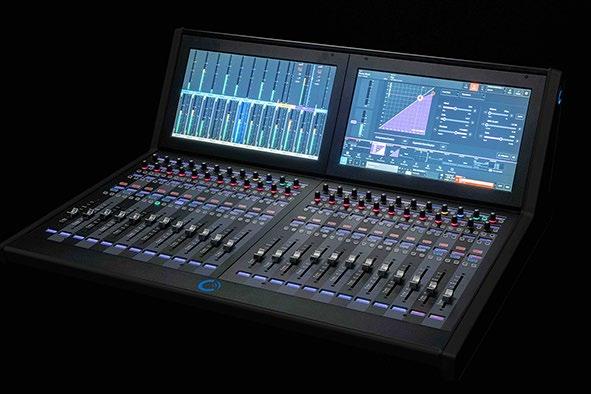

Make quick, precise audio adjustments from anywhere, any time. Complement your news automation system with this virtual mixer.

Adjust the occasional audio level with the Virtual Strata mixer as an extension of your production automation system. Mix feeds and manage the entire audio production with all the mix-minus, automixing, control and routing features you need from your touchscreen monitor or tablet. Fits in any broadcast environment as an AES67/SMPTE 2110 compatible, WheatNet-IP audio networked mixer console surface.

Fred Dawson

“AI is not going to replace you, but someone using AI is going to replace you.” I heard that phrase numerous times at last month’s IBC Show in Amsterdam. As at the NAB Show, AI was the main topic of conversation, both in the sessions and on the show floor. As to how the M&E sector currently feels about the technology, it depends on who you ask. But according to a recent exclusive survey conducted by Caretta Research on behalf of TV Tech and our sister brand TVBEurope, our industry is taking a (predictably) slow and methodical approach to AI, tempered with cautious optimism.

As expected, our industry is already reaping the benefits of AI in the application of facial recognition, speech to text and certain forms of generative AI to reduce and eventually eliminate repetitive tasks, according to our survey (p. 14). But that’s the “low-hanging fruit” of our industry, something we’ve gotten used to as the process has been perfected over the past decade.


Of course, with AI it’s really all about the data and whether there is enough to make the decision-making process reliable, and at this stage, for some vendors, the risks are too high. Take Imagine Communications, for example. As a provider of Ad Tech to the broadcast industry, you would think that AI would be a main focus for their products, and it is, but mostly used internally for product development. As Steve Reynolds, CEO of Imagine Communications, told me at IBC when I asked him about using data to drive algorithms in an AI environment, “If you have incomplete data sets, those data-driven algorithms don’t work very well, and sometimes, by the way, they work demonstrably less well. Having a partial data set is actually worse than just the old algorithmic systems.”

Elsewhere in this issue, John Footen discusses a trend that could pose an existential threat to the future of generative AI and the potential “collapse” where AI basically feeds on itself. “Essentially, the more effective AI becomes in serving our needs, the less beneficial it ultimately may become,” John writes in his Media Matrix column on p. 18. “This phenomenon is referred to as model collapse.”

So while AI is still the current industry buzz, it appears that much of it is not ready for primetime just yet. And when it is, will we be ready?

Tom Butts Content Director tom.butts@futurenet.com

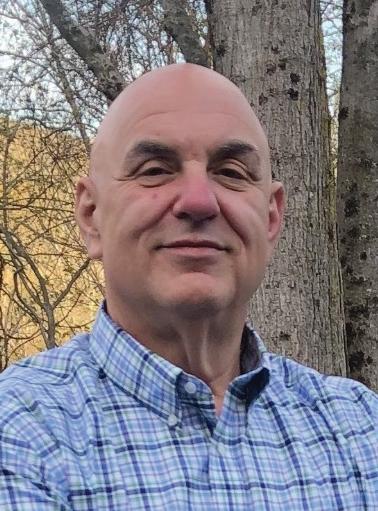

Meet Joe Palombo, Our New Publisher!

With this issue, we introduce Joe Palombo as the new Publisher for TV Tech and TVBEurope! A veteran of the media tech B2B business for more than 35 years, Joe brings extensive experience from various roles, including sales and content creation, to his new position.

“The broadcast market has endured many changes over the years and TV Tech and TVBEurope have clearly stood the test of time, remaining relevant and influential,” Joe said. “This is a great opportunity and I look forward to helping expand the brands’ influence and impact in the M&E industry.” Welcome aboard, Joe!

Vol. 42 No. 10 | October 2024

FOLLOW US www.tvtech.com x.com/tvtechnology

CONTENT

Content Director

Tom Butts, tom.butts@futurenet.com

Content Manager

Terry Scutt, terry.scutt@futurenet.com

Senior Content Producer

George Winslow, george.winslow@futurenet.com

Contributors: Gary Arlen, James Careless, Fred Dawson, Kevin Hilton, Craig Johnston, Phil Rhodes and Mark R. Smith

Production Managers: Heather Tatrow, Nicole Schilling

Art Directors: Cliff Newman, Steven Mumby

ADVERTISING SALES

Managing Vice President of Sales, B2B Tech Adam Goldstein, adam.goldstein@futurenet.com

Publisher, TV Tech/TVBEurope, B2B Tech Joe Palombo, joseph.palombo@futurenet.com

SUBSCRIBER CUSTOMER SERVICE

To subscribe, change your address, or check on your current account status, go to www.tvtechnology.com and click on About Us, email futureplc@computerfulfillment.com, call 888-266-5828, or write P.O. Box 8692, Lowell, MA 01853.

LICENSING/REPRINTS/PERMISSIONS

TV Technology is available for licensing. Contact the Licensing team to discuss partnership opportunities. Head of Print Licensing Rachel Shaw licensing@futurenet.com

MANAGEMENT

SVP, MD, B2B Amanda Darman-Allen

VP, Global Head of Content, B2B Carmel King

MD, Content, Broadcast Tech Paul McLane

VP, Head of US Sales, B2B Tom Sikes

VP, Global Head of Strategy & Ops, B2B Allison Markert

VP, Product & Marketing, B2B Andrew Buchholz

Head of Production US & UK Mark Constance

Head of Design, B2B Nicole Cobban

FUTURE US, INC. 130 West 42nd Street, 7th Floor, New York, NY 10036

All contents © 2024 Future US, Inc. or published under licence. All rights reserved. No part of this magazine may be used, stored, transmitted or reproduced in any way without the prior written permission of the publisher. Future Publishing Limited (company number 2008885) is registered in England and Wales. Registered office: Quay House, The Ambury, Bath BA1 1UA. All information contained in this publication is for information only and is, as far as we are aware, correct at the time of going to press. Future cannot accept any responsibility for errors or inaccuracies in such information. You are advised to contact manufacturers and retailers directly with regard to the price of products/services referred to in this publication. Apps and websites mentioned in this publication are not under our control. We are not responsible for their contents or any other changes or updates to them. This magazine is fully independent and not affiliated in any way with the companies mentioned herein.

If you submit material to us, you warrant that you own the material and/or have the necessary rights/permissions to supply the material and you automatically grant Future and its licensees a licence to publish your submission in whole or in part in any/all issues and/or editions of publications, in any format published worldwide and on associated websites, social media channels and associated products. Any material you submit is sent at your own risk and, although every care is taken, neither Future nor its employees, agents, subcontractors or licensees shall be liable for loss or damage. We assume all unsolicited material is for publication unless otherwise stated, and reserve the right to edit, amend, adapt all submissions.

Please Recycle. We are committed to only using magazine paper which is derived from responsibly managed, certified forestry and chlorine-free manufacture. The paper in this magazine was sourced and produced from sustainable managed forests, conforming to strict environmental and socioeconomic standards.

The NAB last month contended that the FCC’s proposed rules for disclosing AI content in advertising are misguided and risk “doing more harm than good.” In a blog post, Rick Kaplan, NAB chief legal officer and executive vice president, commented on an FCC proposal to implement new requirements to disclose AI-created content in TV and radio ads that drew immediate opposition from both Republican-appointed commissioners, Nathan Simington and Brendan Carr.

In August, the NAB and the Motion Picture Association asked the FCC to extend the time for comments for the proposal, which the FCC partially granted by extending the deadlines to Sept. 19 for comments and Oct. 11 for replies. Those dates make it unlikely that the FCC could enforce the rules prior to the November election.

In the blog, Kaplan admitted that “artificial intelligence (AI) is reshaping the entire political landscape, influencing not only how campaigns are conducted but also how voters access and process information about them. Its rise brings serious risks, including the spread of deepfakes—AI-generated images, audio or video that distort reality. These deceptive tactics threaten to undermine public trust in elections and NAB supports government efforts to curtail them.”

But Kaplan noted that those problems are best addressed by Congress, not the FCC. “Unfortunately, due to the FCC’s limited regulatory authority, this rule risks doing more harm than good,” he said. “While the intent of the rule is to improve transparency, it instead risks confusing audiences while driving political ads away from trusted local stations and onto social media and other digital platforms, where misinformation runs rampant.”

Kaplan also contended that “deepfakes and AI-generated misleading ads are not prevalent on broadcast TV or radio. These deceptive practices thrive on digital platforms, however, where content can be shared quickly with little recourse. The FCC’s proposal places unnecessary burdens on broadcasters while the government ignores the platforms posing the most acute threat. This approach leaves much to be desired.”

Kaplan added that the FCC’s proposed disclaimer in broadcast ads is too generic to do much good. “This generic disclaimer doesn’t provide meaningful insight for audiences,” he said. “AI is often used for routine tasks like improving sound or video quality, which has nothing to do with deception. By requiring this blanket disclaimer for all uses of AI, the public would likely be misled into thinking every ad is suspicious, making it harder to identify genuinely misleading content.”

❚ George Winslow

TV Tech’s Best of Show Awards for the 2024 IBC Show were judged by a panel of industry experts on the criteria of innovation, feature set, cost efficiency and performance in serving the industry. All nominees will be featured in a Best of Show Program ebook, available soon. In addition to TV Tech, other Future brands that participated in the Best of Show Awards included TVBEurope, Radio World, Installation and ITPro. Nominees paid an entry fee to enter.

Here are this year’s winners:

Adder Technology: ADDERLink INFINITY 1100 Series

Adobe: After Effects

AJA Video Systems: OG-ColorBox

AWS: Cloud-based broadcast operations, monitoring, and control

Aputure: STORM 1200x

Bolin Technology: R9-418N PTZ Camera

Bridge Technologies: VB440 Canvas Display

Canon: EOS C80 6K Camera

Cobalt Digital: BIDI4-GATEWAY

Comprimato: Twenty-One Encoder

CuttingRoom: CuttingRoom

Dina: Dina

ENCO: enCaption Sierra

Evergent, Mux: QoE and Advanced Churn Management

Evertz: Dreamcatcher BRAVO Studio

MultiDyne: MDoG Series of IP Gateways

MwareTV: TVMS App Builder

NEP Group: Mediabank

GlobalM: SDVN Orchestration

Grass Valley: Sport Producer X

IMAX: StreamSmart On-Air

IN2CORE: QTAKE Monitor

Matrox Video: Matrox Avio 2 IP KVM

Maxon: ZBrush for iPad

MediaKind: Fleets and Flows

Adeline AI

Proton Camera Innovations: QuickLink

SLXD Portable Digital Wireless Systems

Sound Devices: A20-

SpherexAI

Telos Alliance: Linear Acoustic AEROseries DTV Audio Processors

Vizrt: TriCaster Mini S

Wheatstone: Strata Mixing Console with Remote Virtual mixing

Witbe: Mobile Automation

Wohler Technologies: MAVRIC

Xperi/TiVo: TiVo Broadband

Last month Saankhya Labs and Sinclair announced a collaboration on the design and launch of a variety of mobile phones equipped with ATSC 3.0 receivers to advance a trial of Direct-to-Mobile broadcast in India.

Like the announcement earlier in the year that Brazil had selected the ATSC 3.0 physical layer for its TV 3.0 standard, the Saankhya Labs-Sinclair news might leave U.S. broadcasters saying something like, “Nice, but how does that affect me?”

Unlike the Brazil announcement, the Indian D2M news may bear fruit in the form of bigger audiences and higher revenues for Next-Gen TV broadcasters here in the not-too-distant future.

The Saankhya Labs-Sinclair initiative is targeting the first half of 2025 for trials of the smartphones and other consumer devices, such as USB dongles, set-top boxes and gateways and low-cost featurephones—all of which will be powered by the Indian high-tech company’s Pruthvi-3 ATSC 3.0 chipset.

How long after that will it be until Indian tourists visiting the United States, who numbered about 1.26 million in 2022 according to Statista, begin using their new 3.0-equipped smartphones and featurephones here?

How long before those unwitting ATSC 3.0 ambassadors begin watching Next-Gen TV during their visits, leaving family and friends living here wondering why they can’t do the same thing?

These interactions will only grow if India gives 3.0 the nod for D2M broadcasting, greenlighting CE and high-tech companies to pursue its market of 1.2 billion mobile phone users with Next-Gen TV-enabled phones.

While admittedly my insight into Indian domestic telecommunications policy is minuscule, the Saankhya-Sinclair collaboration is a good sign they are confident 3.0 will power India’s D2M broadcasting. When speaking directly with Saankhya Labs representatives in April at the NAB Show, I was assured 3.0 was on track in regulatory circles and would ultimately be selected for D2M.

As the mass market for 3.0-enabled phones ramps up in India, what’s to stop those phones from showing up here, whether or not U.S. wireless companies approve? All consumers will need are SIM cards from their wireless companies, and they will be able to make calls, take pictures, run apps and do all of the other things people like to do with their mobile phones, plus watch OTA television.

I spoke on the phone with Mark Aitken, president of Sinclair-subsidiary ONE Media, and senior vice president of advanced technology at the station group, while he was taking a mini-European vacation after IBC 2024.

He characterized the announcement as “the biggest thing in my professional life.” For anyone listening to what he has been saying for several years, that’s not surprising. He, along with Sinclair, has been determined to get 3.0 on cell phones in the United States, one way or another. Aitken hasn’t been shy about explaining the strategy involving India, which to some might have sounded like a pipe dream.

But it’s no flight of fancy. The strategy is well-thought-out, diligently pursued and poised to pay off in a big way for TV viewers across America and U.S. broadcasters.

During last month’s carriage dispute between Disney and DirecTV, FCC Commissioner Nathan Simington criticized the way the commission currently regulates traditional linear TV and streaming services, arguing that the current system “entrenches marketplace power at the expense of the consumer.” He contends the FCC exerts an “uneven hand” in the media landscape with hefty “legacy rules for some, and close to zero rules for others.”

Prior to the eventual settlement of the dispute, Simington said, “As the Disney/DirecTV distribution negotiation drags on, it is a moment to recognize a few truths.

“One: about a third of linear network content distributed in the United States is now delivered by over-the-top, streaming platforms. Two: there is zero harmonization, whether in our rules or in industry practice, between network video content distribution over traditional linear MVPDs versus over-the-top, streaming MVPDs. Three: as is so often the case in major disputes between networks and distributors, independently owned and operated affiliates and station groups are left behind, powerless to distribute content to consumers in the way they prefer to consume it.

“The linear media marketplace is governed by a two-tiered system of rules: legacy rules for some, and close to zero rules for others,” he concluded. “We must balance the scales. A future Commission should take seriously the question of its own uneven hand in the media marketplace. We must either unleash the video marketplace from outdated rules or balance it with smart and targeted reforms, but what cannot persist is a system that entrenches marketplace power at the expense of the consumer.”

Simington didn’t directly discuss how the FCC should address the DirecTV/Disney carriage dispute, but DirecTV did issue a statement praising his remarks and calling for reform of regulations governing TV and video markets.

The FCC, which has been exploring ways to address blackouts over carriage disputes, announced recently that it is seeking public comment on whether the commission should require cable and satellite pay-TV providers to refund subscribers who face programming blackouts on their cable or satellite television subscription.

❚ George Winslow

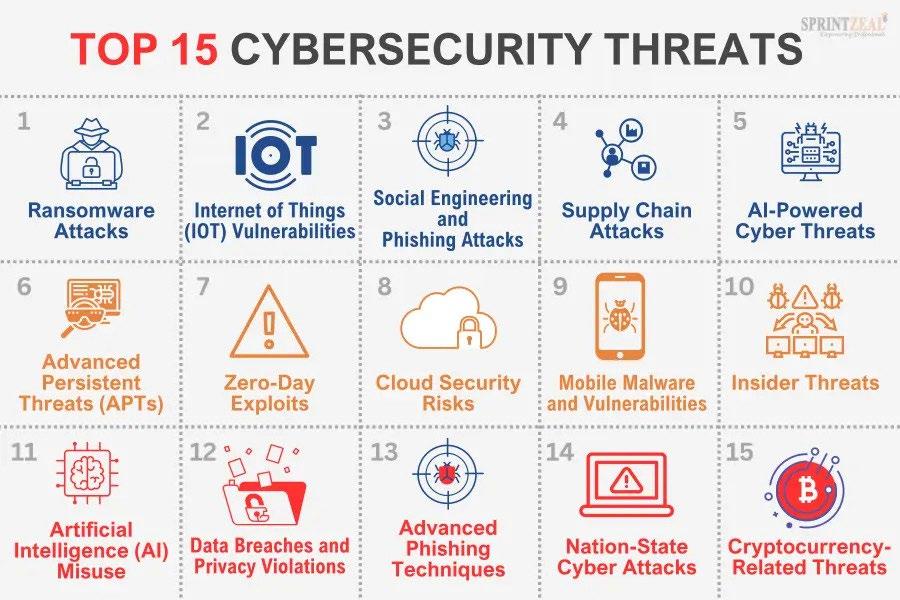

By James Careless

The global digital video market was valued at an estimated $193 billion in 2023 and is expected to explode to more than $503 billion by 2032, according to IMARC Group. That growth rate of nearly 11% a year points to a market that needs to make content protection a top priority.
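For readers who want to check the arithmetic behind a forecast like this, here is a minimal sketch of a compound annual growth rate calculation using the two market values quoted above. The nine-year compounding window (2023 through 2032) is an assumption made for illustration; a forecaster working from a different base year would arrive at a slightly different figure, and the function name `cagr` is hypothetical.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.11 means 11%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market values quoted in the article, in billions of U.S. dollars.
START_2023 = 193.0
END_2032 = 503.0

# Assumption: growth compounds once a year over the nine years 2023-2032.
rate = cagr(START_2023, END_2032, years=9)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption the implied rate lands a little above 11% a year, in the same range as the figure cited.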

“The growth trajectory means it will be a dereliction of responsibility for companies in the digital content space that derogate or underfund efforts to protect their investments and IP,” said Emeka Okoli, senior vice president of business solutions with Zixi, a secure content streaming platform provider for companies such as Amazon, Bloomberg, Apple, Fubo and Roku. “All linearized content, including big screen VOD assets are fair game to the bad guys who also see a goldmine.”

In the past, broadcasters and content producers could live with a certain level of piracy and look the other way, according to Asaf Ashkenazi, CEO of Verimatrix, a developer of streaming security solutions such as Verimatrix Streamkeeper, whose Counterspy module uses AI to anticipate attacks. “However, today’s incredibly competitive market forces everyone to be more efficient and make sure they don’t share their value with cybercriminals and pirates.”

Given the multibillion-dollar value of digital video content, it’s not surprising that it’s under attack from many directions. “Key threats include piracy, content leakage, theft and illegal distribution, all of which compromise content monetization,” said Ian Hamilton, CTO of Signiant, known for its SaaS solutions that support secure content exchange within and between media organizations. “Piracy and illegal distribution result in lost revenue from unauthorized access, while content leakage and theft devalue exclusive releases.”

“From an Akamai lens, since we’re dealing mainly with streaming services delivering video content to end-users, primary threats to content include theft, piracy, rebroadcasting, automated scraping, and account hijacking,” noted Tedd Smith, solutions engineering manager at Akamai Technologies. “These types of actions can be incredibly damaging, causing everything from decreases in viewership, loss of content rights, brand damage, increase in enforcement costs, and revenue loss.”

There is a mind-boggling variety of threat actors doing their best to steal content and then profit from that theft, according to Hamilton. “Threat actors range from organized crime groups and hackers to insiders, individual pirates, and even state actors,” he said. “Organized crime groups run piracy operations for profit, distributing stolen content through illegal channels. Hackers may target content for financial gain or to disrupt media companies. Insiders, such as employees or contractors, might leak content for personal or financial reasons. Individual pirates steal content for notoriety or minor profits, while state actors may pursue political or economic objectives.”

To make matters worse, today’s video content pirates are not the stereotypical lone hackers sitting in a darkened theater with a camcorder, or recording video streams on their home computer and then sharing those copies with friends. In many cases, “they are organized just like a profitable digital streaming business, often operating across borders with sophisticated operations centered around one goal: making money at the expense of hard-working content providers and video distributors,” said Ashkenazi.

The advent of AI isn’t helping. “AI enables threat actors to automate attacks, enhance social engineering, and create deep fakes,” said Hamilton. “It facilitates rapid identification of vulnerabilities and streamlines the theft and distribution process.”

The bottom line: “Anyone who can steal content probably will,” observed Ben Jones, director of solutions marketing at MediaKind. “Their motivations include profit from selling pirated content or bypassing paywalls to watch stolen content for their own personal gain.”

Advances in technology are aiding thieves in their attempts to steal digital video content, but technology is also helping content producers and distributors to better protect themselves against such theft. This technology is being harnessed by companies like Akamai, MediaKind, Signiant, Verimatrix and Zixi to deter and disable such attacks when they occur.

MediaKind’s clients are increasing their use of digital watermarking, which hides unique, traceable identifier data within the content so that a leak can be traced back to its source. “At any point in the chain where there’s been a detection of leaked content, we can work out where that occurred using watermarking,” Jones said. “With this data, content owners can point their lawyers at the pirates, and force them to take the content down.”
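The idea of tracing a leak through a hidden identifier can be illustrated with a deliberately simple toy. The sketch below is not MediaKind’s method or any commercial watermarking scheme; it assumes raw 8-bit samples and hides a hypothetical 16-bit recipient ID in the least significant bit of the first 16 samples. Production watermarks are designed to survive re-encoding, cropping and camcording, which this toy would not.

```python
def embed_id(samples: bytes, recipient_id: int, id_bits: int = 16) -> bytes:
    """Hide recipient_id in the lowest bit of the first id_bits samples."""
    if len(samples) < id_bits:
        raise ValueError("not enough samples to carry the identifier")
    if not 0 <= recipient_id < (1 << id_bits):
        raise ValueError("recipient_id does not fit in id_bits")
    marked = bytearray(samples)
    for i in range(id_bits):
        bit = (recipient_id >> i) & 1
        # Clear the lowest bit, then set it to the identifier bit.
        marked[i] = (marked[i] & 0xFE) | bit
    return bytes(marked)


def extract_id(samples: bytes, id_bits: int = 16) -> int:
    """Read the identifier back out of the lowest bits."""
    value = 0
    for i in range(id_bits):
        value |= (samples[i] & 1) << i
    return value


# Hypothetical example: the same content is marked differently per recipient.
original = bytes(range(64))
copy_for_a = embed_id(original, recipient_id=1234)
copy_for_b = embed_id(original, recipient_id=4321)

leaked = copy_for_b  # pretend this copy turned up on a pirate site
print(extract_id(leaked))  # → 4321
```

Each marked copy differs from the original by at most one level per sample, yet the recovered ID points to the recipient whose copy leaked.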

Increasing the complexity of cipher blocks and secure algorithms is also making it harder for thieves to steal content digitally. “The older encryption system used a 42 bit key; now we are moving into 228 and 256 bit keys, which are much more military style and secure,” said Jones. “We’re also using measures such as DRM [digital rights management] and real-time content monitoring online.”

Zixi’s SDVP and telemetry plane, ZEN Master, includes high AES encryption. “We also provide DRM functionalities that utilize DTLS [Datagram Transport Layer Security], which safeguards against stream ripping, eavesdropping, man-in-the-middle attacks and fraudulent endpoints by verifying the receiving device’s certificate of authenticity,” Okoli said.

“Furthermore, Zixi’s platform includes DPDK (Data Plane Development Kit) high-performance networking, enabling content to operate at the network layer, bypassing the operating system and effectively blocking potential OS-based ransom malware. With Zixi’s new multi-hop product, we can offer customers upstream metadata observability which will help companies proactively trace their content incoming digital journey and footprint.”

Signiant’s content protection protocol employs integrated multilayered strategies to protect its clients. “This includes using AI for real-time monitoring and threat detection, applying strict access controls based on least privilege, and employing encryption,” said Hamilton. As well, “Minimizing copies of content by interfacing with customer-owned storage reduces exposure and potential vulnerabilities.”

Akamai and Verimatrix also use many of the content protection strategies described above.

Constant vigilance is another important tool: “Visibility is a key component of content protection as well, especially when dealing with direct-to-consumer delivery,” Smith said. “This is critical during live events when immediate mitigation is a top priority. Having real-time visibility into traffic patterns and user behavior allows streaming services to quickly catch things like stream-sharing and rebroadcasting so access can be revoked.”
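As a rough illustration of what catching stream-sharing from traffic patterns can mean in practice, the sketch below flags accounts whose active sessions come from more distinct IP addresses than a chosen limit. It is a simplified assumption for illustration, not Akamai’s detection logic; the function name, the session format and the limit of two addresses are all hypothetical, and real services weigh many more signals.

```python
from collections import defaultdict


def flag_shared_accounts(sessions, max_concurrent=2):
    """Return account IDs whose active sessions span too many distinct IPs."""
    ips_by_account = defaultdict(set)
    for account_id, ip_address in sessions:
        ips_by_account[account_id].add(ip_address)
    return sorted(
        account
        for account, ips in ips_by_account.items()
        if len(ips) > max_concurrent
    )


# Hypothetical snapshot of currently active sessions: (account, client IP).
active_sessions = [
    ("alice", "203.0.113.5"),
    ("alice", "203.0.113.5"),
    ("bob", "198.51.100.7"),
    ("bob", "198.51.100.8"),
    ("bob", "192.0.2.44"),
]

print(flag_shared_accounts(active_sessions))  # → ['bob']
```

A flagged account would then be a candidate for review or revocation, which is where the real-time aspect Smith describes matters during live events.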

Overall, “our commitment is to make piracy unprofitable, illegal distribution unsustainable, and content security an enabler of business growth rather than merely a compliance checkbox,” said Ashkenazi.

Verimatrix’s position is shared by the content protection companies interviewed for this story, all of which want to ensure that crime does not pay for pirates, and that legitimate video producers/distributors continue to profit from the multibillion-dollar value of their content.

Survey by Caretta Research conducted for TV Tech and TVBEurope details how the industry is reacting to AI and deploying AI-based technologies

By Jenny Priestley and Tom Butts

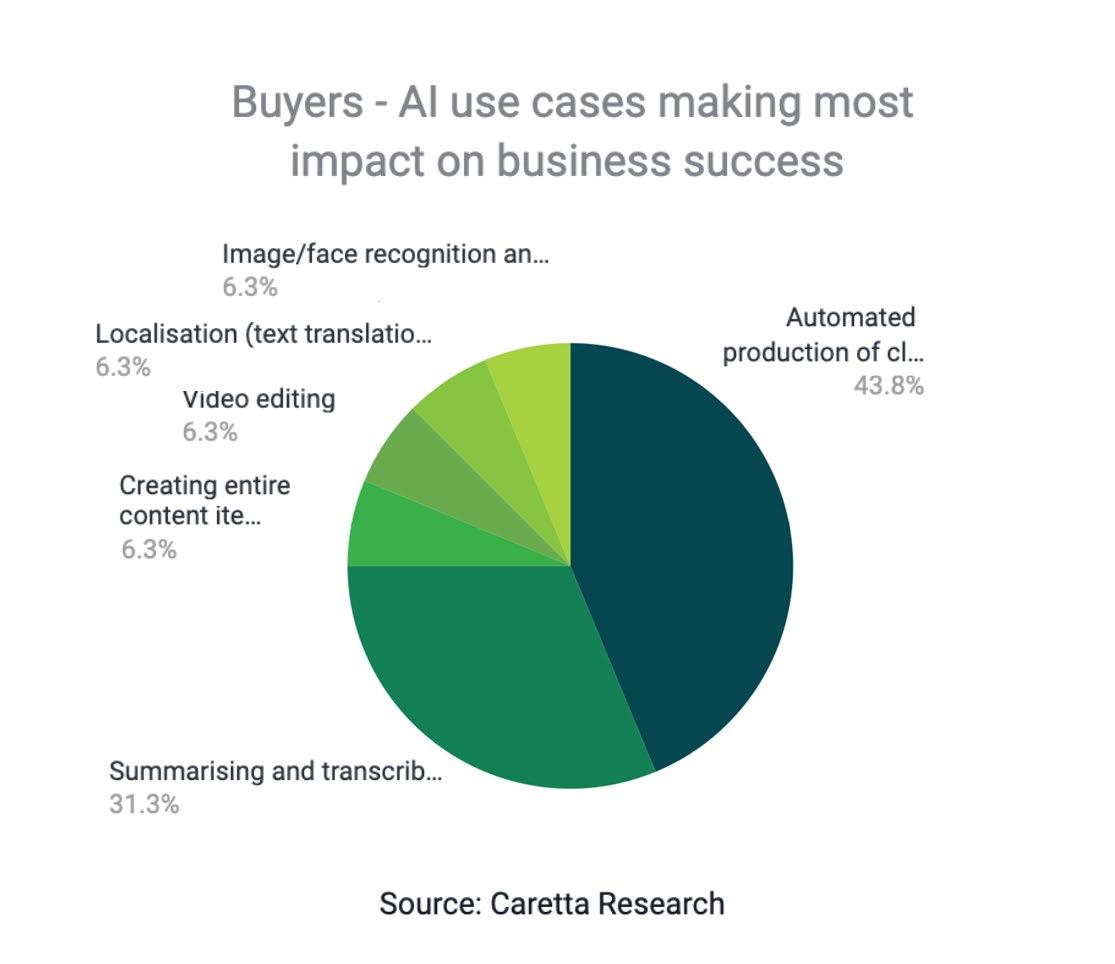

A study of how the Media & Entertainment industry feels about artificial intelligence (AI) has revealed most media and broadcast companies view its adoption as a positive, while also expressing a “wait and see” approach to its implementation.

The research, carried out by Caretta Research on behalf of TV Tech and sister brand TVBEurope, shows many broadcasters currently use AI in “back office” processes, with 85% of respondents stating they believe AI will have a “significant impact” on the media and broadcast industry, particularly when it comes to transforming the economics of their business.

TOO EARLY TO TELL?

However, despite the cautious optimism expressed by respondents, there is a feeling that it is still “too early to tell” exactly how AI will impact M&E, particularly among those who are buying technology and an attitude that the most effective use of AI is where it

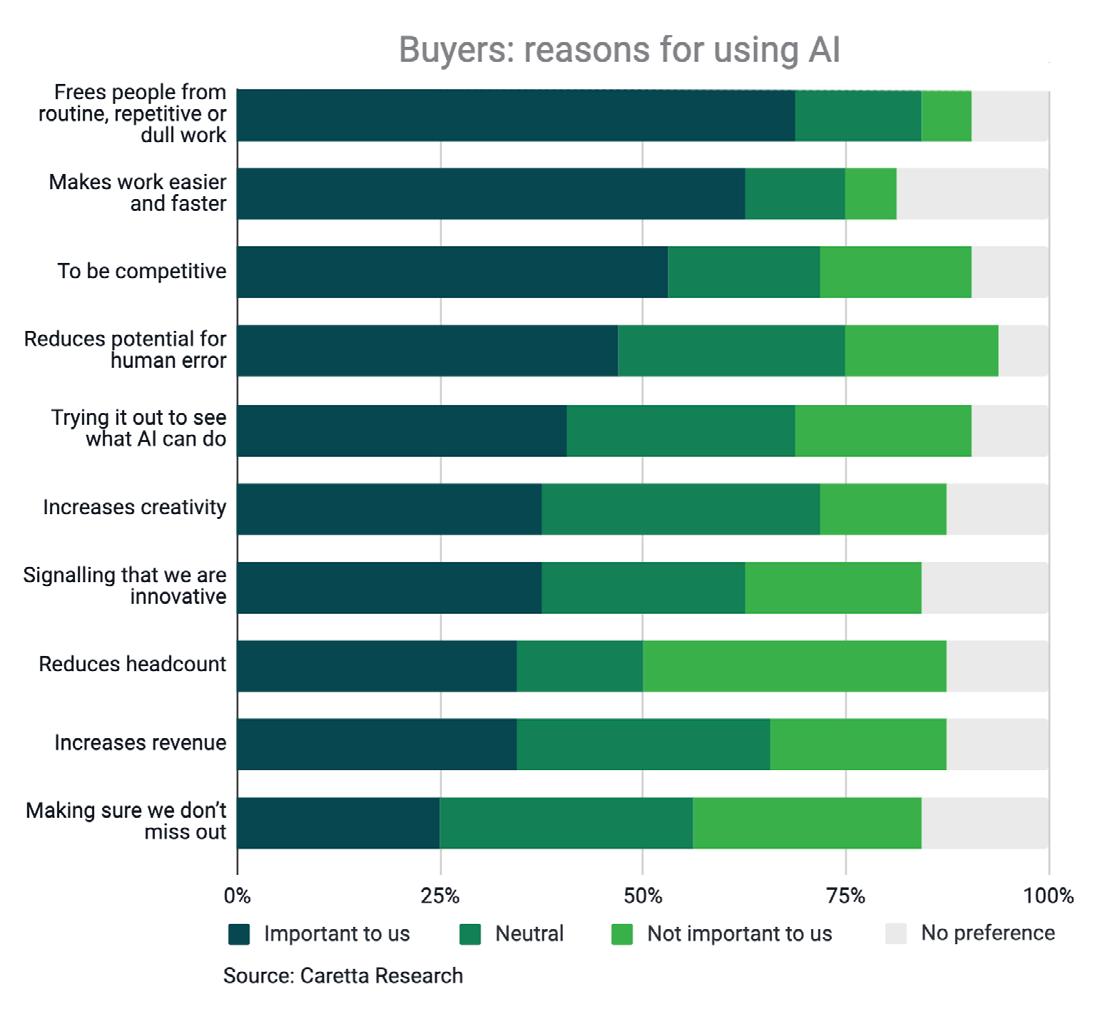

has already found success in recent years: speech-to-text and automation of softwarebased processing (Fig. 1).

There’s been much talk about the possibility of AI replacing humans within

the media industry, but the research found that most respondents view it as something that helps humans with their work. Asked which business use cases AI will have the biggest impact on, 44% of technology

buyers said automated production of clips and highlights, followed by summarizing and transcribing (speech-to-text) at 31% (Fig. 2).

In fact, 61% of both buyers and vendors said they see AI replacing routine operational tasks, while 18% of buyers see it replacing or assisting complex creative tasks.

Some 69% of respondents who buy technology said they view AI as something that will free people up from routine, repetitive or mundane work, while 63% said AI will make work easier and faster (Fig. 3).

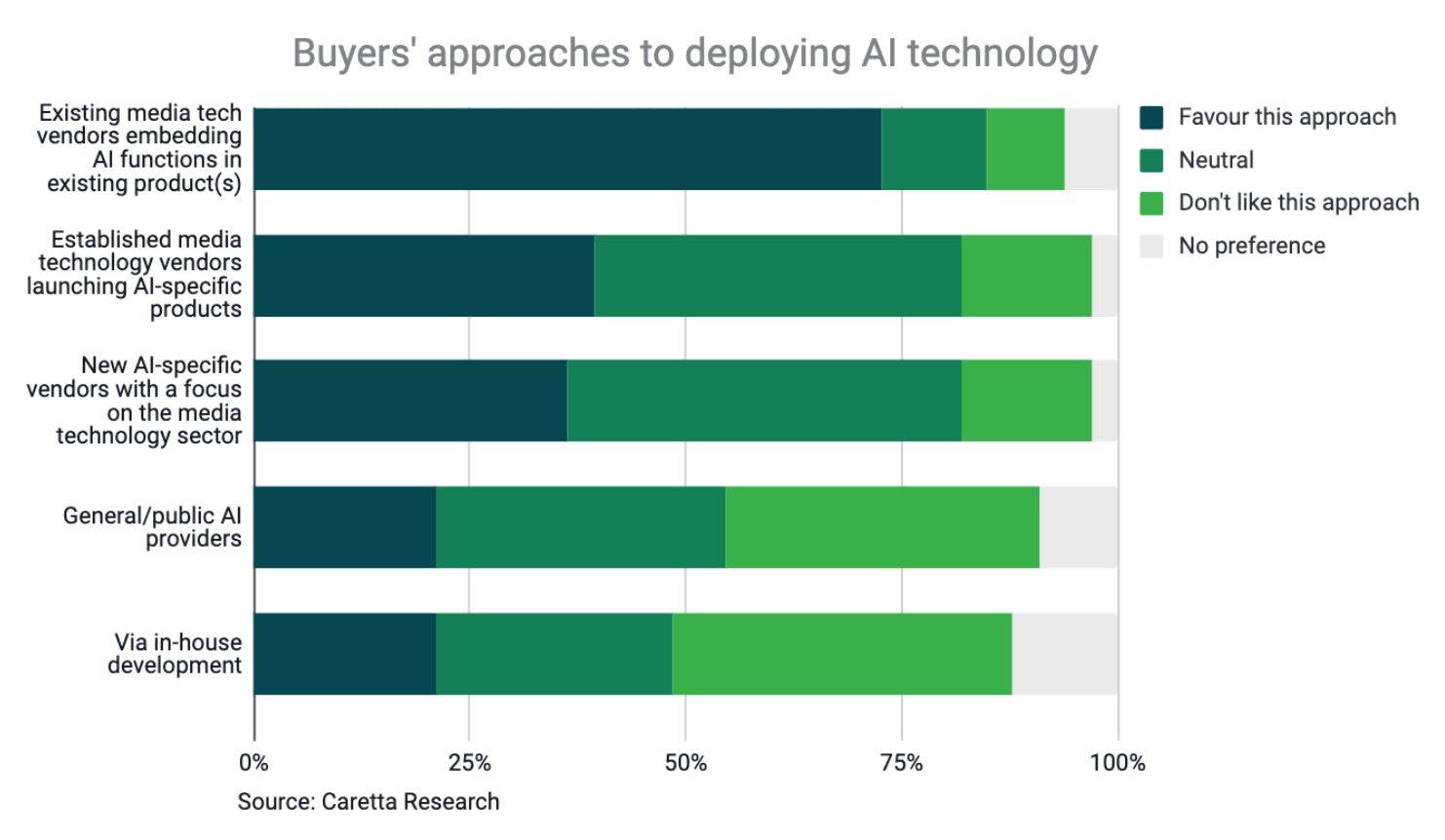

As to how broadcasters are approaching the deployment of AI, 73% said they are looking to existing media tech vendors to embed AI functions in their existing products, while a further 39% said they want established media tech vendors to launch AI-specific products (Fig. 4).

As to what’s holding them back from adopting AI, 43% of respondents who buy technology cited the unavailability of people who understand how to harness the technology, closely followed by 41% who said they were concerned about biased results.

WHO’S

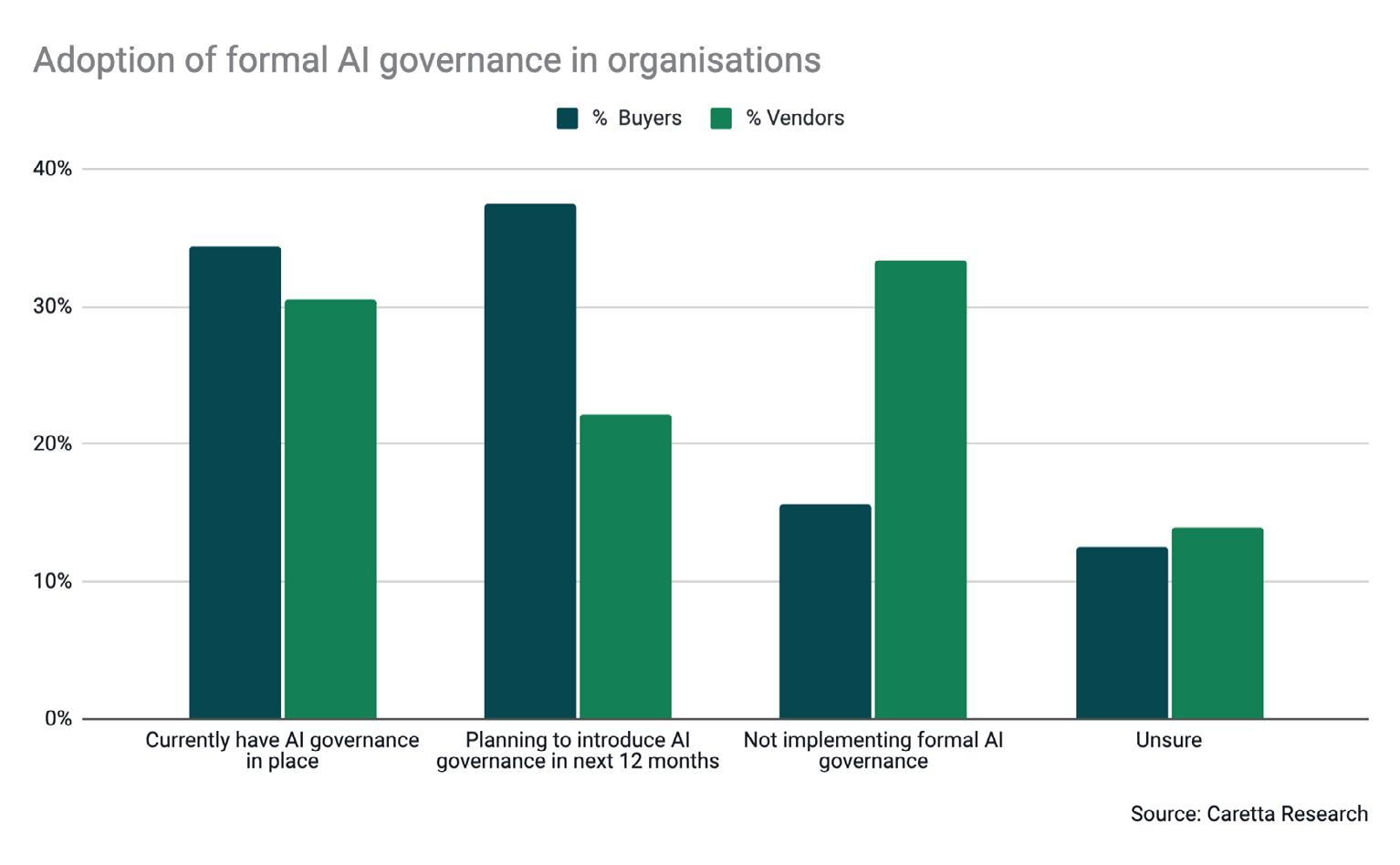

In terms of who will oversee and manage the impact of AI on media production—aka the so-called “formal governance” of AI—this is where buyers and vendors diverged. While nearly a third of both buyers and vendors said they currently have formal AI governance in place, 33% of vendors said that they are not implementing AI governance and only 16% of buyers said the same.

This could reflect a traditional business approach to a technology still in its infancy and the reluctance to put too many guardrails on an emerging market (Fig. 5).

“This is the very first industry-wide research that cuts through the AI hype and focuses on how broadcasters and media companies are actually using AI tools in their day-to-day operations,” said Robert Ambrose, co-founder of Caretta Research. “It gives everyone at the early stage of adopting AI a chance to check if they’re doing the right thing, and it lets technology vendors see clearly the sort of AI capabilities their customers want to use.

“What stands out to me is how confident media and broadcast companies are when it comes to adopting AI technology,” he added. “A very small proportion of technology users are fearful of any negative impacts of AI on the creative process, such as a loss of creativity or fears of inappropriate content being created. In fact, the biggest fear is nothing new for AI, it’s simply the fear of change as AI brings in new ways of working and, potentially, new competitors.”

More details on the survey will be made available on tvtech.com and tvbeurope.com in the near future.

While some in the M&E industry tiptoe around sensitive outcomes, others tout cautious approach

By Fred Dawson

If anyone doubted the normalization of AI in the M&E space is a fait accompli, the IBC Show in Amsterdam put that notion to rest last month with the help of some new developments that promise to lower the threat of unwanted consequences.

One of the more significant cases in point involves the emergence of neural processing units (NPUs) as AI-optimized chipsets, which by supplementing CPUs and GPUs in network appliances and CPE could alleviate core AI processing workloads while addressing privacy rules that can restrict use case development. Notably, NPUs now implanted in some high-end set-top boxes offer a new perspective on how cable operators, telcos, DBS providers and even NextGen TV broadcasters with their ATSC 3.0 signal gateways might be battling with smart TV OEMs and cloud-based super-scalers for whole-home dominance in the future.

For example, Vantiva, a company quietly operating under the radar since combining the home networking units of Technicolor and CommScope, introduced an NPU-driven STB labeled ONYX, a far-field voice-controlled (FFV) device that officials said opens the door to next-gen AI-supported use cases. These start with things like identifying and locating specific events in video, sharpening resolution, decreasing film grain, and greater content personalization but will evolve to more functionalities in tandem with the development of home-oriented large language models. With immense AI data processing power, such devices have the potential to interact verbally at a personal level with everyone in the household by utilizing facial, voice and device recognition and compilations of past user experience while avoiding the privacy violations that come with shipping information to the cloud. “We’re working with our [MVPD] customers to explore applications that can be developed to help them drive user engagement and monetization,” said Vantiva CTO Charles Cheevers.

At the production end of the M&E service pipeline, the industry’s massive shift to cloud-orchestrated workflows across cloud-centric and hybrid implementations involving both premises- and cloud-based appliances has unleashed an explosion of AI-assisted solutions that are designed to automate a big share of the workload. But reliability is a big issue.

Indeed, the saturation of AI branding across 14 IBC exhibit halls at a moment when many aspects of the technology have yet to meet industry reliability standards prompted one vendor CEO to joke it might be smart to convert his own company’s high-profile AI messaging to mean “Actual Intelligence.” To get beyond those dependability risks, some vendors are going out of their way to provide verifiable assurance that their AI implementations pass muster.

For example, Telestream, which showcased a range of AI-powered solutions aimed at streamlining ingestion, content enhancement and delivery in customers’ media processing, production and post-production workflows, shares the level-of-confidence percentages scored by all company solutions using AI, according to Colleen Smith, Telestream’s senior vice president of product marketing and channel enablement. While reaping the benefits to the fullest extent possible, “we’re taking a cautious approach to AI,” she said.

The 2024 IBC Show, which took place at the RAI Amsterdam, Sept. 13–16, attracted 45,085 visitors, a 4.69% increase from 2023. The number of exhibitors was also up to 1,350, 100 more than last year.

There are many other issues beyond reliability, privacy and processing power consumption, not to mention the widely debated potential for job losses, that the industry has to deal with as AI saturates the ecosystem. For example, there’s a “great-equalizer” aspect to AI that threatens to undermine the competitive advantages various vendors have enjoyed, raising the question of “how all these organizations will survive,” noted Juan Martin, CTO and co-founder of streaming platform provider Quickplay.

“We’re a digital transportation company helping customers to build platforms and orchestrate solutions across a multiple partner ecosystem,” Martin said. As a gateway to the streaming marketplace, the company’s success depends on its ability to “sift through the options for our customers.”

At the show, Quickplay announced it has positioned its cloud-native content management, processing orchestration, generative-AI tools, dynamic advertising and other capabilities for access in the Amazon Web Services (AWS) Marketplace to address the industry’s need for “the smartest, fastest, most effective ways to engage and monetize viewers.” By offering its open-architecture approach to orchestrating what it deems best-of-breed solutions from a bevy of partners, the company is helping customers to operate in the OTT market with suppliers that go beyond reliance on AI algorithms in building well-conceived solutions, Martin said.

On a much broader collaborative scale, another initiative aimed at sifting through solutions has launched as an IBC “accelerator project” targeting the dark side of AI, where deepfakes have become a threat to the broadcast news business.

The IBC session “Design your Weapons in the Fight Against Disinformation” discussed the topic of deepfakes and the impact of AI on news.

“I don’t think there could be any more existential threat to media than we have in the form of misinformation, disinformation, manipulated images, fake images,” said Mark Smith, IBC Council chairman and head of the IBC Accelerator Program. “There’s a whole tsunami of content that’s coming at these trusted brands in our world of news broadcasters and news agencies.”

During a session moderated by Smith entitled “Design your Weapons in the Fight Against Disinformation,” Tim Forrest, content editor at U.K.-based Independent Television News, noted, “The fakes are getting better. The technology to make them is improving, too, and it’s getting easier and easier to use.” He said “a qualified guess” is that there are about 34 million deepfake images, video and text messages spreading across the globe daily.

The new effort to shore up the ability of legitimate newscasters to counter the scourge was the brainchild of consultant Allan McLennan, president and CEO of the PADEM Group, and Anthony Guarino, until recently executive vice president of global production and studio technology at Paramount. As described by McLennan, the goal is to foster awareness of the deepfake threat and cooperation in doing something about it across the intensely competitive global news industry.

Six months after getting underway, the effort has drawn some of the world’s biggest newscasters, including the Associated Press, CBS/Paramount, BBC, ITN, Globo, and many more, McLennan said. “We’re creating a path to sharing information and addressing disinformation together,” he noted. Along with promoting greater awareness of the threat, the participants are sharing results from their experiments with technologies that can be used to flag disinformation so that it doesn’t get into the news pipelines, which is hard to do when everybody is competing to be first in breaking news.

The contemplation of artificial intelligence has a long history, arguably predating the invention of digital computers. Since the advent of modern computing, the hype cycle for AI has repeated itself numerous times. In 1970, Marvin Minsky, a pioneering figure in AI, was quoted in Life magazine saying, “In from three to eight years we will have a machine with the general intelligence of an average human being.” This forecast did not materialize then and remains unfulfilled. Once again, the current AI hype cycle is approaching the “trough of disillusionment,” but it is expected that we will soon reach a new “plateau of productivity” as the latest advancements are assimilated.

I have been somewhat taken aback by the rapidity with which the present cycle has moved past its peak, leading to more grounded expectations throughout the industry. Over the past several years, there has been extensive discussion about the hype and associated fears of AI.

In this column, I will delve deeper into the challenges inherent in AI technology itself. Many of these challenges exist independently of their application in media and can arise in any context. Although my focus will be on technology, it is crucial to acknowledge substantial non-technical concerns such as economic implications, rights and royalties, cultural transformations, and the legal and regulatory landscape surrounding the technology.

Within the scope of technological challenges, there are those where a resolution path is foreseeable and others where no clear solution currently exists. An example of a challenge with a visible path to resolution is electricity usage. We have multiple methods to generate electricity and can eventually develop the necessary infrastructure. Here, however, I will concentrate on a few challenges for which there is currently no evident resolution.

When talking about any form of technology, it’s crucial to first define the term. In the context of AI, this definition is quite expansive, covering a range of underlying technologies. Commonly, AI now refers predominantly to Generative AI, particularly Large Language Models (LLMs) and associated technologies such as Generative Adversarial Networks (GANs) and others. This article will concentrate specifically on issues related to LLM technology.

By now, we are all aware of some inherent issues in the predictive nature of LLM technology. One of the most prominent concerns is the tendency for these models to “hallucinate,” producing results that contain objectively false, bizarre, or highly improbable information. While a certain degree of this can be beneficial, especially in creative tasks, it poses significant challenges in many situations. Completely avoiding this issue is difficult. Architectures such as RAG (Retrieval Augmented Generation) aim to mitigate this by automatically supplementing the prompt with additional constraining data from traditional data systems like databases. Though this approach is promising, its inconsistent performance makes it difficult to rely on these tools for automated operations. For use cases that demand greater predictability and reliability, it’s best to use historical automation technologies or trained human operators.
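To make the RAG idea concrete, here is a minimal sketch of the "supplement the prompt" step only. The records, the function names and the word-overlap ranking are all assumptions for illustration; production systems typically use vector search over a real data store, and the resulting prompt would then be sent to an LLM, which is not shown.

```python
import re

# Hypothetical records standing in for a traditional data system such as a database.
RECORDS = [
    "The evening newscast airs at 6 p.m. on channel 5.",
    "Studio B is booked for the morning show until 10 a.m.",
    "The archive stores all field footage for 90 days.",
]


def tokenize(text):
    """Lowercase a string and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, records, top_k=1):
    """Rank records by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        records,
        key=lambda record: len(question_words & tokenize(record)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, records, top_k=1):
    """Prepend the retrieved records to the question as constraining context."""
    context = "\n".join(retrieve(question, records, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("How long does the archive keep footage?", RECORDS))
```

The model still predicts text, so the added context narrows the likely answers without guaranteeing a correct one, which is consistent with the inconsistent performance noted above.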

AI has demonstrated its proficiency in handling generic tasks, particularly those with abundant training data. Unfortunately, in creative fields, this often results in subpar content. Most data available to train AI content systems tends to be of low quality and lacks exceptional creativity. Furthermore, due to the AI’s inherent tendency to generate outputs that align with the “average” result, models typically produce quite unremarkable content. To date, AI has not consistently delivered high-quality, creative outputs, and achieving such results seems implausible without human intervention.

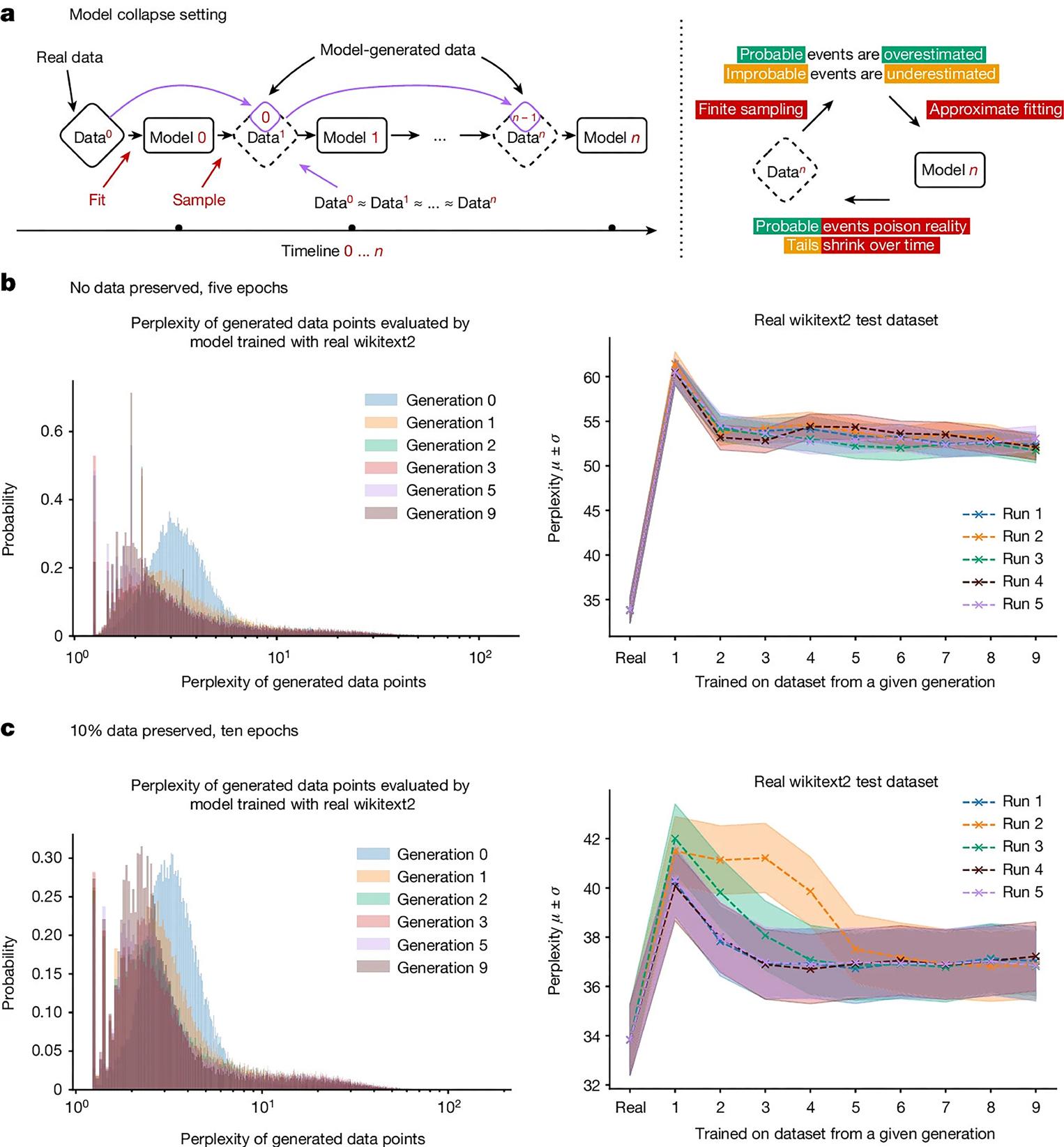

Arguably, the greatest danger to the future of AI is AI itself. Numerous academic studies conducted over the past year have highlighted an apparent irony in our current approach. Essentially, the more effective AI becomes in serving our needs, the less beneficial it ultimately may become. This phenomenon is referred to as model collapse.

As illustrated in Fig. 1, LLMs (Large Language Models) are particularly compelling because they excel at predicting the next likely word, pixel, or other data points in their outputs. These predictions are highly accurate because they tend to average out the possible results. I previously mentioned this issue as a quality concern where the models generally generate “average” content.
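Next-item prediction can be illustrated with a toy that is vastly simpler than an LLM: a bigram counter that always returns the most frequent follower of a word. The corpus and function names below are hypothetical, and real models use learned neural representations rather than raw counts, but the pull toward the most common continuation is the same in spirit.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of a word, or None if the word is unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical training text.
    model = train_bigrams("the camera is on the camera is live the light is on")
    print(predict_next(model, "the"))
```

In this corpus "light" follows "the" once and "camera" follows it twice, so the rarer continuation never appears in the output: a small-scale version of the "average" content problem.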

A more intrinsic issue emerges from this statistical behavior. If we deploy AI in the real world and the volume of data produced by AI systems increases, the model becomes increasingly skewed by this averaging effect. Paradoxically, the more we use AI effectively, the more challenging it becomes to train it for future utility, leading us towards even more homogenized content.

Even with first-generation models today, you can observe a kind of uniformity in system outputs. As an experiment, try entering 12 different prompts about an elephant or another favorite animal into your preferred image generation platform. Then, use an image search engine to find real-world animal pictures. You’ll notice a distinct similarity in the AI-generated images compared to the natural diversity found in actual photos.

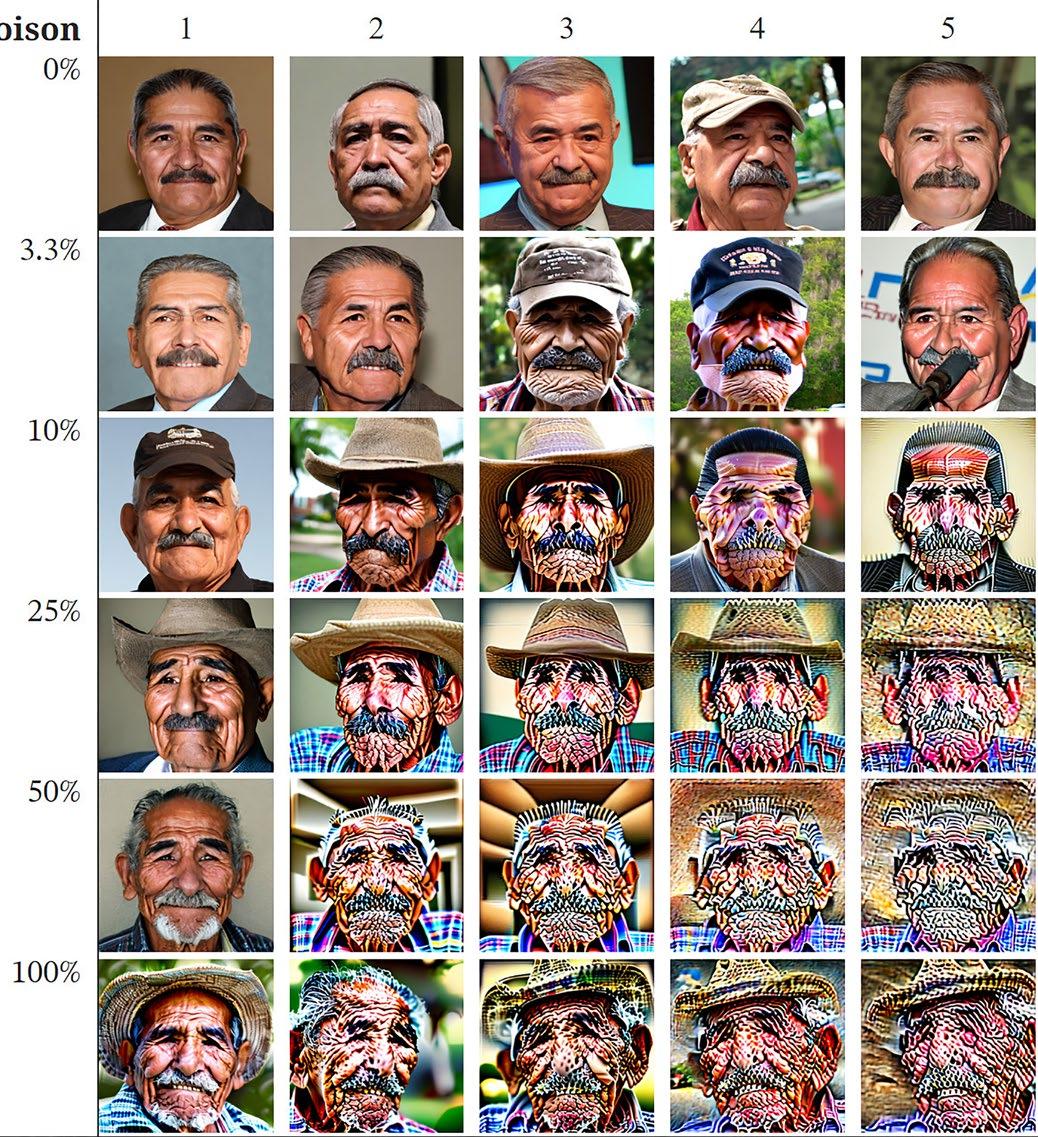

After merely five generations of training on datasets containing AI images, models start producing remarkably similar images. The greater the proportion of AI-generated data within the dataset, the more pronounced this problem becomes.
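The narrowing effect can be imitated with a toy simulation. This is not how the cited studies were run; it is a sketch under two loud assumptions: the "model" is just a bell curve fitted to its training data, and it favors likely outputs, so only the most typical 80 percent of what it generates ends up in the next training set. All names and parameter values are illustrative.

```python
import random
import statistics


def next_generation(samples, rng, keep_fraction=0.8):
    """Fit a bell curve to the data, generate new data, keep the most typical part."""
    mean = statistics.fmean(samples)
    spread = statistics.pstdev(samples)
    oversample = round(len(samples) / keep_fraction)
    generated = [rng.gauss(mean, spread) for _ in range(oversample)]
    # Assumption: likely outputs are favored, so the tails are under-represented.
    generated.sort(key=lambda value: abs(value - mean))
    return generated[: len(samples)]


def simulate(generations=5, size=2000, seed=0):
    """Return the spread of the data before training and after each generation."""
    rng = random.Random(seed)
    data = [rng.gauss(0.0, 1.0) for _ in range(size)]
    spreads = [statistics.pstdev(data)]
    for _ in range(generations):
        data = next_generation(data, rng)
        spreads.append(statistics.pstdev(data))
    return spreads


if __name__ == "__main__":
    for generation, spread in enumerate(simulate()):
        print(f"generation {generation}: spread {spread:.3f}")
```

Each pass trains only on the previous pass's output, and the spread of the data shrinks every time, which is the homogenization described above in miniature.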

Introducing AI-generated data into the training set may lead to various other issues, especially if a broader variability is permitted to prevent uniformity. Rare events, like visual artifacts, could become more prevalent and rapidly degrade the dataset in unusual ways, as illustrated in Fig. 2.

I compare the model collapse issue to the problems our industry has faced with analog generational loss. Just as we experienced increased dropouts and image fuzziness with successive generations of tapes, model collapse behaves similarly in its impact.

There are various techniques under discussion to prevent model collapse, but they all present their own challenges. One commonly suggested method is to train exclusively on data that hasn’t been generated or influenced by AI. However, if AI effectively serves its purpose, such data will become increasingly scarce. This situation presents a significant irony not lost on this particular human!

One of the principal challenges with large language models (LLMs) lies in the inherent bias present in the datasets used for their training. These datasets are frequently sourced from the internet or private data collections.

Considering that over 99.999 percent of worldwide information and experiences are neither online nor digitized, these sources introduce a significant bias in training. The sheer volume of “data” consumed by an individual human daily far exceeds the amount available on the internet. And most of this is neither particularly compelling nor worth preserving and will never be in a form a computer can absorb.

Moreover, the simplest and most apparent realities are seldom directly published on websites, blogs, or other online platforms. Consequently, data is inherently biased towards what we find interesting enough to publish. A pertinent example is the prevalence of smiling faces in image searches for people. Individuals typically post and store images where they are smiling, which skews the dataset. This bias results in generated images frequently depicting smiling individuals, which does not reflect real-life diversity.

I am inherently optimistic. Despite the challenges I’ve mentioned, I am enthusiastic about the innovative solutions being tested to address them. It is crucial, however, for media technologists to gain a deeper understanding of the fundamental workings of these technologies to effectively implement them within their organizations.

In my next article, I will explore the essential skillsets we need to develop over the next few years to ensure successful adoption.

John Footen is a managing director who leads Deloitte Consulting LLP’s media technology and operations practice. He can be reached via TV Tech.

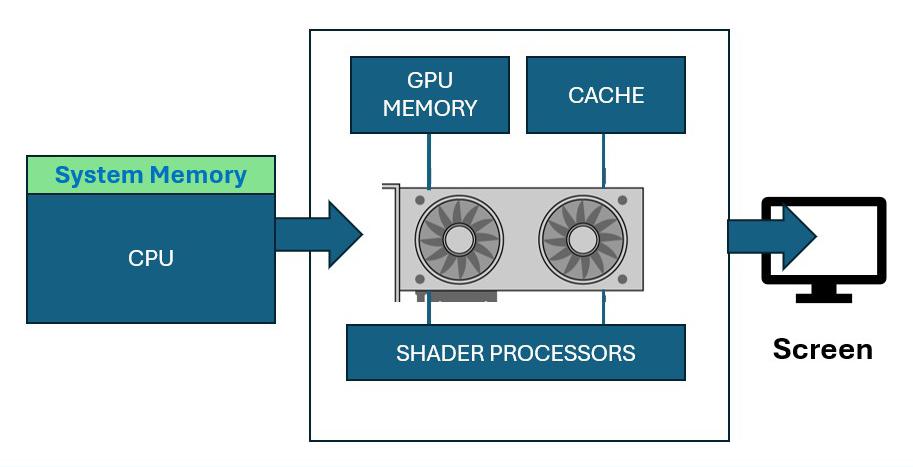

In this installment we will investigate how a cloud processing system is structured to address and react to artificial intelligence technologies, and how a GPU (graphics processing unit) and a CPU (central processing unit) compare architecturally and application-wise. It is important to know that GPU (server) devices are generally employed more frequently than CPU servers in AI cloud environments, particularly for tasks related to machine learning and deep learning.

KARL PAULSEN

There is comparatively little parallel processing done in CPU operation vs. GPU operation for AI processes. A CPU-based cloud is principally used for arithmetic and computational functions for database or ordered processes, such as those in human resources, pharmaceutical or financial applications. The general-practice compute or storage-based cloud is composed of a great many servers built primarily on CPU-type architectures, with a modest number of cores per CPU (somewhere around 4 to 64) and a lot of general-purpose I/O (input/output) interfaces connected into the cloud network. These CPU devices are designed mainly for single-thread operations.

The primary reason for this is that CPUs are not well-adapted for multi-repetitive operations that require continual incremental changes in the core systems such as for deep learning, machine learning (ML), large language models (LLM) or for applications aimed at AI.

While CPUs are versatile and essential for many tasks, GPUs are far more efficient for deep learning and are used in almost all multithreaded AI workflows, as will be exemplified throughout this article.

GPUs (the more familiar term for “graphics processing units”) are designed with thousands of cores whose primary purpose is to enable many calculations simultaneously. Functionally, this makes the GPU-based compute platform ideal for the highly parallel nature of deep learning tasks, where large matrices of data need to be processed quickly. GPUs, when not specifically employed in AI practices, are often found in graphics functions, usually in graphics cards. The GPU is integral to modern gaming, enabling higher-quality visuals and smoother gameplay. The same goes for certain gaming laptops and tablet devices, where the applications and performance vary widely.

A GPU is composed of many smaller and more specialized cores vs. the CPU. By working together, the GPU cores deliver massive performance when a processing task can be divided up across many cores at the same time (i.e., in parallel). This functionality is typical of graphical operations such as shading or polygon processing and replication or real-time rendering (Fig. 1).
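What "divided up across many cores" means can be sketched with a matrix multiplication, where every output row can be computed without reference to any other row. The sketch below hands rows to a small pool of worker threads. It illustrates the decomposition only: the function names are illustrative, and ordinary Python threads will not deliver GPU-style speedups for this kind of arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor


def multiply_row(row, matrix_b):
    """Compute one output row; it depends on no other output row."""
    columns = list(zip(*matrix_b))
    return [sum(a * b for a, b in zip(row, column)) for column in columns]


def matmul_sequential(matrix_a, matrix_b):
    """Process the rows one after another, as a single thread would."""
    return [multiply_row(row, matrix_b) for row in matrix_a]


def matmul_parallel(matrix_a, matrix_b, workers=4):
    """Hand each independent row to a pool of workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: multiply_row(row, matrix_b), matrix_a))


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(matmul_parallel(a, b))
```

Both functions produce the same result; only the scheduling of the independent pieces differs, which is the property thousands of GPU cores exploit.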

One routine part of AI is its ability to make predictions, aka “inference,” which in AI means “when a trained model is used to make predictions.” The GPU is often preferred when the application requires low latency and high throughput. Real-time image recognition and natural language processing are the more common applications where GPUs are used in the cloud. The diagram in Fig. 2 depicts where inference fits in such an AI workflow.

In certain cases, CPUs may still be used for inference, as when power efficiency is more critical or when the models are not as complex. How systems in the cloud apply the solution is sometimes automatic and sometimes driven by the coding solution as defined by the user.

A method in AI that teaches computers to process data in a way that is inspired by the human brain is called “deep learning.” Such models can recognize complex patterns in pictures, text, sounds and other data to produce accurate insights and predictions. Training deep-learning models will require processing vast amounts of data and adjusting millions (or even billions) of parameters, often in parallel. This capability for parallel processing is a key element in the architecture of GPUs, which in turn allows them to handle such AI tasks much faster and more efficiently than CPUs.
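The difference between training (adjusting parameters) and inference (using them) can be shown with a deliberately tiny model. The sketch below fits a straight line with two parameters by gradient descent; a deep-learning model does the same kind of adjustment across millions or billions of parameters. The data, learning rate and function names are illustrative assumptions.

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Training: repeatedly nudge the two parameters to shrink the prediction error."""
    weight, bias = 0.0, 0.0
    count = len(xs)
    for _ in range(epochs):
        errors = [weight * x + bias - y for x, y in zip(xs, ys)]
        grad_weight = 2 / count * sum(e * x for e, x in zip(errors, xs))
        grad_bias = 2 / count * sum(errors)
        weight -= learning_rate * grad_weight
        bias -= learning_rate * grad_bias
    return weight, bias


def infer(model, x):
    """Inference: apply the already-trained parameters to a new input."""
    weight, bias = model
    return weight * x + bias


if __name__ == "__main__":
    # Hypothetical training data that follows y = 2x + 1.
    model = train([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
    print(round(infer(model, 10), 3))
```

Training loops over the whole dataset thousands of times, while inference is a single cheap calculation, which is one reason less complex models can still be served from CPUs.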

Although CPUs can be employed in training models, the process is significantly slower, making them less practical for training large-scale or deep-learning models. Companies like NVIDIA have developed GPUs specifically for AI workloads, such as their Tesla and A100 series, which are optimized for both training and inference. NVIDIA makes the A100 Tensor Core GPU, which provides up to 20X higher performance over the prior generation and can be partitioned into seven GPU instances to dynamically adjust to shifting demands. As mentioned earlier, general-purpose CPUs are not as specialized for AI, and their performance in these tasks often lags that of GPUs.

The AI software ecosystem, including frameworks like TensorFlow and PyTorch and NVIDIA’s CUDA platform, is heavily optimized for GPU acceleration, making it easier to achieve performance gains; as such, GPUs will be heavily deployed in cloud-centric implementations. In smaller-scale applications these frameworks can run on CPUs, but they don’t usually offer the same level of performance as when running on GPUs.

Despite their higher cost per unit compared to CPUs, GPUs can be more cost-effective for AI workloads since they can process tasks more quickly, which can lead to lower overall costs, especially in large-scale AI operations. For some specific AI tasks or smaller-scale projects, CPUs may still be cost-effective, but in large-scale AI applications they generally offer lower performance for the same cost.
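The cost argument is simple arithmetic: total cost is the hourly rate multiplied by the hours a job takes. The sketch below uses made-up rates and run times purely for illustration; they are not vendor prices or benchmark results.

```python
def job_cost(hourly_rate, hours):
    """Total cost of a job: price per hour multiplied by hours of run time."""
    return hourly_rate * hours


def cheaper_option(cpu_rate, cpu_hours, gpu_rate, gpu_hours):
    """Return which server type finishes the job at lower total cost."""
    cpu_total = job_cost(cpu_rate, cpu_hours)
    gpu_total = job_cost(gpu_rate, gpu_hours)
    return "GPU" if gpu_total < cpu_total else "CPU"


if __name__ == "__main__":
    # Hypothetical large training job: the GPU costs 4x per hour but runs 8x faster.
    print(cheaper_option(cpu_rate=1.0, cpu_hours=40, gpu_rate=4.0, gpu_hours=5))
    # Hypothetical small job: the speedup is too small to offset the higher rate.
    print(cheaper_option(cpu_rate=1.0, cpu_hours=0.5, gpu_rate=4.0, gpu_hours=0.25))
```

The GPU wins whenever its speedup exceeds its price premium, which is why the balance tends to tip toward GPUs as workloads grow.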

As AI and ML applications grow, demand for GPU servers has also increased, particularly in AI-focused cloud services provided by companies like Google Cloud, AWS and Azure. Other vendors, such as Oracle, tailor their cloud solutions toward data processing for business operations.

Lightweight models, whose tasks are less computationally intensive, routinely run on simpler CPU-based solutions. Besides such lightweight implementations, often deployed “on-prem” or in a local datacenter, general-purpose computing may employ CPUs, which are essential for tasks that require versatility or are not easily parallelized.

A general-purpose computer is one that, given the application and required time, should be able to perform the most common computing tasks. Desktops, notebooks, smartphones and tablets are all examples of general-purpose computers. This is generally NOT how a cloud is engineered, structured or utilized.

In scenarios where budget is a concern and the workload doesn’t require the power of GPUs, CPUs can be a practical choice. However, in similar ways to Moore’s Law, the price-to-performance ratio for GPUs keeps improving.

Per epochai.org, “…of [some] 470 models of graphics processing units (GPUs) released between 2006 and 2021, the amount of floating-point operations/second per $ (hereafter FLOP/s per $) has doubled every ~2.5 years.” So stand by: the cost of GPUs vs. CPUs may shift which kinds of processors are deployed into which kinds of compute devices sooner rather than later.
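The quoted doubling rate compounds quickly. As a back-of-the-envelope sketch, assuming the doubling period stays constant (a simplification), the improvement after a given number of years is 2 raised to the number of doubling periods elapsed. The function name is illustrative.

```python
import math


def growth_factor(years, doubling_period=2.5):
    """Multiplier on FLOP/s per $ after the given years, assuming steady doubling."""
    return 2 ** (years / doubling_period)


if __name__ == "__main__":
    # 2006 to 2021 spans 15 years, i.e. six doubling periods of 2.5 years.
    print(growth_factor(15))
    assert math.isclose(growth_factor(15), 64.0)
```

Six doublings over the 15 years covered by the quoted dataset would amount to roughly a 64-fold gain in performance per dollar.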

While CPU servers are still widely used in AI clouds for certain “compute-centric” single-thread tasks, GPU servers are more commonly employed for the most demanding AI workloads, especially those involving deep learning, due to their superior parallel processing capabilities and efficiency. Given what we hear and read on almost every form of media today, AI will absolutely be impacting what we do in the future and where we do it as well.

Karl Paulsen recently retired as a CTO and is a long-time contributor to TV Tech. He can be reached at karl@ivideoserver.tv

There have always been some tradeoffs alongside advances in lighting

Wood carvers will sometimes use chainsaws to “rough in,” but finishing requires more subtle sculpting tools. In lighting, as with other crafts, no single tool is perfect for everything. Coarse or refined, our tools shape the process and impact the results. Tools change, and process follows. When I began using lighting CAD, I wondered how it would affect my design process. After all, working with a keyboard and mouse is very different from drafting with plastic lighting templates and pens. It turned out that the resulting designs still held true to my intent, but the process was certainly different. Any tradeoff was a bargain I was happy to make.

There have always been some tradeoffs alongside advances. On balance, there was no doubt it was a net gain. As with my old plastic lighting templates, now languishing in storage, I’m never going back.

While no one wants to backslide from the advances of LED lighting, let’s admit that not all the changes are for the better. These new LED variants work, but they’re not quite the same. Changes in lighting styles ebb and flow with shifting tastes, but I suspect the current trend towards flatter light is steered as much by our new equipment as by our creative intent. Quite frankly, I miss shadows. In a medium that’s basically two-dimensional (since the camera has one “eye,” and our screens are flat), a lack of shadows results in fewer three-dimensional visual cues. If our goal is to keep images interesting, shadows are still key. That’s why “hard light” is important.

By tradition, there are two main categories of lights: “Hard light,” which creates highlights and shadows, and “soft light,” which moderates shadows with diffused “ambient” fill. Although that’s a somewhat simplistic explanation, it conveys the idea.

The main “hard light” in video studios has been the Fresnel, while “soft light” includes any fixture (or modifying accessory) that imparts a relatively large light-emitting surface. The interplay of these two qualities of light creates a sense of depth through highlights and shadows. Artists refer to this quality of shading as “chiaroscuro.”

A basic soft light is relatively easy to make. Clump together enough LEDs into a panel, and you’ve got a crude soft light. But a proper Fresnel requires a lot more optical design.

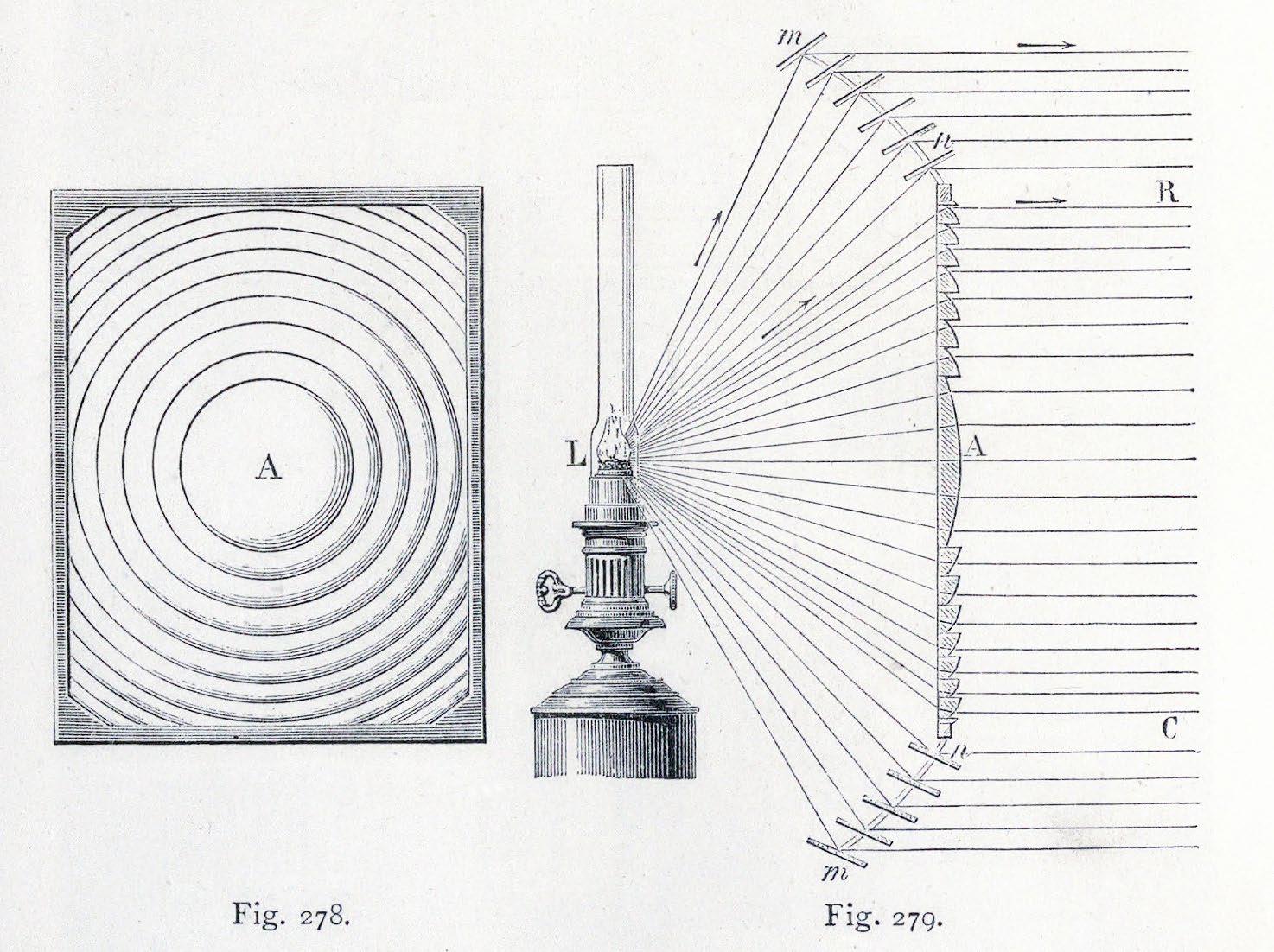

The best of today’s LED Fresnels are quite good, but their beam quality at flood is different than with legacy incandescent Fresnels. That difference lies in the collimation of the beam. Centuries-old designs for lighthouse lanterns worked out this problem based on a single point of radiating light. With the advent of LED, the light propagation behind the lens is different.

Many LED Fresnels are more like soft-edge wash lights than their namesake; their barndoors are more useful as glare shields than for shaping the beam. The name may be the same, but the tool has changed. For all its advantages, the light from current LED Fresnels isn’t collimated the same way. Here’s why.

The compact “luminous capsule” of incandescent and HMI radiates light 360°. Although wasteful from an energy efficiency standpoint, it’s optically useful behind a Fresnel lens. An incandescent lamp at full flood (when the lamp is closer to the lens) allows the light to cover the entire Fresnel lens.

By reaching all the concentric rings that comprise a Fresnel lens, the light is collimated into a larger, organized beam that’s malleable. At the same time, by filling much of the lens, the larger surface area imparts a “wrapping” quality that provides a subtle roll-off in the shadow it casts, which is more flattering to the face. This is why larger Fresnels have been so popular as talent key lights.

THAT’S [NOT] A WRAP

That “wrap” isn’t the same with today’s LED Fresnels.

LED emitters are both larger and more directional when compared to the radiant 360° “luminous capsule” of incandescent and arc lamps. While more efficient, there’s a tradeoff: When an LED Fresnel is at full flood, the light only engages the very center rings and bull’s eye of the lens. And, because the relative softness of a light source is directly proportional to the size of the light-emitting surface, an LED Fresnel can’t “wrap” the face quite as well (since the working surface area of the lens is smaller at flood) as the incandescent version. It’s not the same, but “good enough.”

While clever R&D continues to improve these fixtures with the use of secondary optics (as with the ARRI Orbiter Fresnel accessory), and by redesigning the lenses (as with the innovative DMG Lion), there are some workarounds to minimize the issue.

One technique to minimize the “wrapping” deficit is to partially spot the fixture focus. This increases the illuminated lens area, although you trade off some beam shaping control because the light collimation declines the further it’s spotted.

An alternate solution to address the reduced beam shaping is to switch to a completely different type of fixture.

“Profile” spotlights (roughly the same as legacy ellipsoidals) can be a better choice, particularly when working at distances greater than 12 feet.

Shutter cuts from a “profile” fixture hold up over distance, whereas tight Fresnel barndoor cuts lose beam edge cohesion over longer distances. And since every light becomes a point source when far enough away, “wrap” eventually becomes irrelevant at these greater distances.
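Both points, that softness tracks the size of the light-emitting surface and that every light becomes a point source at distance, come down to how large the source appears from the subject's position. A rough way to put numbers on it is the angular size of the source, 2 × atan(width / (2 × distance)). The lens sizes and throw distances below are hypothetical, chosen only to show the trend.

```python
import math


def angular_size_deg(source_width, distance):
    """Apparent size of a light source, in degrees, as seen from the subject.

    Both arguments must use the same unit (inches in the example below).
    """
    return math.degrees(2 * math.atan(source_width / (2 * distance)))


if __name__ == "__main__":
    # Hypothetical values: a 12-inch lens fully lit vs. only a 4-inch center lit.
    for label, width in (("full lens", 12), ("center only", 4)):
        for feet in (6, 24):
            angle = angular_size_deg(width, feet * 12)
            print(f"{label:11s} at {feet:2d} ft: {angle:.1f} degrees")
```

The smaller lit area appears roughly a third as large at the same distance, and at the longer throw both sources shrink toward a point, which is why “wrap” matters less and less as distance grows.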

The advantages of LED light fixtures over legacy incandescent versions are tremendous, yet we’ve also lost something along the way. While current LED Fresnels fill the gap, I haven’t stopped hoping for a revised design that restores some of what was lost in the process without giving up the gains. “Good enough” isn’t a final destination. It’s just the latest point on the rising line of progress.

Sony’s ECM-L1 is a new professional-quality lavalier microphone that features plug-in power and professional audio technology that ensures every word and nuance is captured with precision. The 3.5mm mini plug providing plug-in power support is designed for an extensive range of uses, including direct connection to Sony’s Alpha series cameras equipped with aux audio inputs or wireless microphone transmitters. Featuring a compact pin design, it can be discreet without sacrificing sound performance, making it perfect for vlogging, interviews, documentaries, and livestreams.

The ECM-L1 is designed to meet top-tier reliability and quality demands. Its capsule is built with a fixed electrode plate made of a rigid ceramic. Its superior vibration properties deliver excellent sound quality with improved rise time and high resolution. Externally, the microphone capsule is covered with a machined brass exterior to suppress unnecessary external resonance, enabling clear, crisp sound pickup. www.electronics.sony.com

GV’s new LDX 110 and compact LDX C110 version are entry-level native UHD cameras suitable for a wide range of applications, including sports, studio and entertainment. The LDX 110 series cameras offer a native UHD 2/3-inch CMOS-Imager equipped with Global Shutter technology. Both models support native HDR and WCG (Wide Color Gamut) operation (PQ, HLG, S-Log3), with onboard custom LUT processing within the camera head for precise HDR-to-SDR conversion.

The LDX C110 features XCU connectivity, a feature only previously found on higher-end models. The LDX 110 series also includes NFC licensing functionality and the Grass Valley Scanner App, providing enhanced options management and diagnostics even while the camera is not powered. The LDX 110 series supports video formats from 1080i and 1080p up to native UHD. www.grassvalley.com

The Brightcove AI Suite launches with five new AI-powered solutions: AI Content Multiplier, AI Universal Translator, AI Metadata Optimizer, AI Engagement Maximizer, and AI Cost-to-Quality Optimizer. Each is available to existing customers as a pilot program for a limited time at no additional cost. General availability is planned for later this year. The offerings help customers create and optimize content, grow engagement and monetization, and reduce the costs of creating, managing, and delivering content without sacrificing quality.

As part of this initiative, Brightcove is using models from industry leaders like Anthropic, AWS and Google to add the power of their generative AI models to Brightcove’s solutions. Additionally, Brightcove is integrating AI solutions from other partners, including CaptionHub and Frammer. The Brightcove AI Suite will initially focus on solutions in four key areas: content creation, content management and optimization, content engagement and monetization and quality and efficiency. www.brightcove.com/en

ADDERView Matrix C-Range is a new addition to Adder’s KVM matrix portfolio, bringing enterprise-grade KVM capabilities to more streamlined applications with a ‘plug and play’ architecture that means users can deploy and configure an IP KVM matrix easily. Ideal for broadcast studios, space-constrained control rooms and industrial remote access control environments, ADDERView Matrix delivers a streamlined IP KVM matrix solution and an unrivaled user experience for organizations that prioritize ease of use and minimal maintenance.

Comprising a single-head ADDERView Matrix C1100 user station, and a choice of DisplayPort or HDMI transmitter connectivity via the ADDERView Matrix C110 computer access modules, users can add a network switch, pre-configured for KVM and AV-over-IP functionality, to build a simplified IP KVM matrix that suits their requirements. Each user station is a master controller of up to 16 sources at any one time, and a single computer access module can connect up to 256 different user stations, ensuring deployment for any user scenario is simple, scalable and flexible.

www.adder.com/en

Designed to operate as a fully self-contained unit, Ikegami’s new IPX-100 includes an integral signal generator that allows system configuration before cameras or other sources are connected. ST 2110 connectivity can be set up independently by IT specialists prior to an event. On the day of the event, the cameras can then be connected easily to the IPX-100 by a conventional SMPTE-hybrid fiber cable with up to 2.174-mile (3.5 km) cable extension. The camera head is powered by the IPX-100 so there is no operational difference compared with using a conventional fiber-cabled camera.

The IPX-100 converts the broadcast signals to ST 2110-compliant signals and serves as a gateway to IP. The IPX-100 is also available with an optional 3G/HD-SDI input/output to serve as an external SDI-to-IP or IP-to-SDI converter.

www.ikegami.com

Sennheiser’s new Spectera digital wireless audio transmission system delivers bidirectional wideband functionality by leveraging WMAS (Wireless Multichannel Audio System). Using WMAS technology enables Spectera to reduce wireless system complexity, while at the same time increasing capability, enabling time-saving workflows and offering full remote control and monitoring, including permanent spectrum sensing. Spectera features bidirectional bodypacks that manage both digital IEM/IFB and mic/line signals at the same time. The solution is resistant to RF fading and allows for flexible use of a wideband RF channel, for example for digital IEMs with a latency down to 0.7 milliseconds. Bidirectional digital wideband transmission addresses many common challenges, such as overly complex frequency coordination and complicated rack cabling for high channel counts as well as the large footprint typical of a multichannel wireless system. www.sennheiser.com/en-us

Calrec’s new compact Argo M audio console targets broadcasters and other media professionals requiring a highly capable console in a small footprint with Argo M. Available with 24 or 36 faders, Argo M offers the exact same feature set and operational familiarity as the company’s larger Argo Q and Argo S consoles. A plug-and-play SMPTE ST 2110-native console, Argo M features integrated DSP processing and no networking or PTP sync required for independent operation. It also has built-in analog and digital audio I/O and GPIO, 3 x modular I/O slots for further expansion and a MADI I/O port via an SFP.

The console supports 5.1.2, 5.1.4, 7.1.2 and 7.1.4 immersive paths for input channels, busses, metering and monitoring as standard, and offers the same Calrec Assist UI for remote control in a standard web browser. The Calrec Connect AoIP system manager makes managing IP streams easy and adds essential broadcast functionality.

www.calrec.com

Panasonic Connect’s new AK-UCX100 captures clear, high-quality content in live event environments that can pose challenges with shifting lighting conditions and dynamic backgrounds. The camera’s new imager offers high sensitivity and dynamic range, easily adapting to both a darkened auditorium and venues with dazzling lighting effects. Its colorimetry has also been optimized to account for any new challenges with LED lighting and large display walls while the camera’s HD low-pass filter helps manage the effect of moiré in LED walls. This allows venues to easily create the same experience for fans whether they are in person or tuning in from home.

With improved resolution and 4K capabilities, the AK-UCX100 achieves a resolution of 2000 TV lines both horizontally and vertically, capturing detailed, ultra-high-definition images of the subject from any angle. Plus, the wide dynamic range and color gamut of HLG/BT.2020 lets content creators capture colorful, immersive stage performances for the most advanced display technologies.

www.connect.panasonic.com/en

The new AV Delay Compensator is LYNX Technik’s latest addition to its greenMachine Testor solution, streamlining the measurement of audio timing errors and correcting them instantly. Testor is well known in the industry as an audio and video test signal generator for UHD/HD/SD 12G and 3G SDI signals, hosted on the greenMachine platform. The new AV Delay Compensation feature enables facilities to measure and test embedded audio timing errors and fix them in an instant.

Measured values can be sent over a network to a second greenMachine that precisely corrects all AV delay issues in the video path. Each stage of the broadcast signal chain can introduce delay, whether due to latency issues, transmission or signal processing methods. Through advanced signal processing, Testor’s AV Delay Compensation feature enhances efficiency and accuracy in maintaining AV timing and lip sync.

www.lynx-technik.com
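As a rough illustration of the measure-then-correct idea described for the AV Delay Compensation feature, the sketch below turns a measured audio/video offset into a compensating delay. It is not LYNX Technik’s implementation: the `plan_compensation` function, the `Compensation` type, the sign convention and the 48 kHz sample rate are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Compensation:
    """Hypothetical result type: how much to delay each path."""
    delay_audio_samples: int
    delay_video_ms: float


def plan_compensation(audio_lag_ms: float, sample_rate_hz: int = 48_000) -> Compensation:
    """Turn a measured offset into a delay on the path that is ahead.

    Assumed sign convention:
      audio_lag_ms > 0  -> audio arrives after video, so video is delayed.
      audio_lag_ms < 0  -> audio arrives before video, so audio is delayed.
    """
    if audio_lag_ms >= 0:
        return Compensation(delay_audio_samples=0, delay_video_ms=audio_lag_ms)
    # Convert the lead time from milliseconds to whole audio samples.
    samples = round(-audio_lag_ms * sample_rate_hz / 1000)
    return Compensation(delay_audio_samples=samples, delay_video_ms=0.0)


if __name__ == "__main__":
    print(plan_compensation(-40.0))  # audio early: delay the audio path
    print(plan_compensation(33.0))   # audio late: delay the video path
```

Only the path that is ahead gets delayed, because a delay can be added to a signal but not removed from one.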

By Mike Buetsch, Director of Broadcast Engineering & Production, Chess.com

PALO ALTO, Calif.—Chess is a unique game, and the reasons why people become interested in it are varied. Much like other games, it requires skill, attention, and strategic thinking. But the length of a match is never the same, and the longer it is, the longer the tension builds. For a viewer, catching every second counts. Especially if they’re tuning in virtually.

At Chess.com, we have a loyal, international, growing community of chess enthusiasts, and we currently produce for 150 million users and counting. Chess.com consistently delivers more than 300 productions annually, encompassing live tournaments, interviews, instructional content, and community events.

Because we want to make chess available to everyone, and the demand keeps increasing, investing in higher-quality production was the natural next step. Moving on from the basic OBS setups we had been using to keep up with demand, we decided to create a completely remote broadcasting solution that lives entirely in the cloud. At first, the idea was to build a physical studio, but that was quickly scratched. Since we are a fully remote company, it made the most sense to create a fully remote broadcasting solution. This meant we wouldn’t be tied to physical constraints, and every member of the production team would be able to access whatever they needed for the productions.

Working closely with teams at Vizrt, NDI, and ecosystem partner Advanced Systems Group (ASG), the answer came in the form of a “virtual production truck,” with a setup that uses Vizrt’s TriCaster Vectar and NDI technology at its core, hosted on Amazon Web Services (AWS), with Viz Flowics providing HTML5 graphics.

We looked at just about every single vision mixer available in the cloud, and ultimately landed on TriCaster Vectar as the tool that best supported our needs. We needed to be able to ingest a hefty number of inputs while also being able to support a wide range of production models. TriCaster Vectar enables us to operate in small-crew environments, where the technical director wears a lot of hats, such as audio and video playout, and also to flex up to a larger, fully remote crew where we break those roles away from the switcher.

With TriCaster Vectar’s native ability to incorporate high-quality HTML5 graphics, we can add audience comments from different social media platforms, including YouTube Live and Twitch, during live games. It’s crucial for us to let fans share their experiences and reactions, and nurture that sense of community.

Chess is an incredibly graphics-heavy broadcast, so TriCaster Vectar being able to bring in HTML5 graphics without consuming the available NDI inputs was also a big win for us. We regularly use between 8 and 10 animation buffers as our production graphics channels; they have basically become 10 additional inputs strictly for graphics, which allows us to fully max out the 44 inputs for cameras and video playback on larger productions.

It took just about a month and a half to put together the virtual production truck. I know it sounds insane, but we connected everything through cloud-based software, while leveraging the flexibility of NDI.

This virtual production workflow allows Chess.com’s employees in Europe, South Africa, India, and the United States to have access to all the live production tools they’d expect to see in a traditional studio or broadcasting truck. Telos Infinity VIP provides intercom to 18 physical intercom panels installed in employees’ home offices, as well as offering up to 41 virtual panels so they can seamlessly collaborate despite being fully remote.

With the scalability of this cloud-based system, Chess.com can continue to expand our production capabilities without the need for frequent hardware upgrades, so we can grow as our community grows.

Mike Buetsch is a seasoned professional with over a decade of experience in gaming and esports live production who joined Chess.com two years ago. He can be reached at mike.buetsch@chess.com.

More information is available at www.vizrt.com and https://ndi.video.

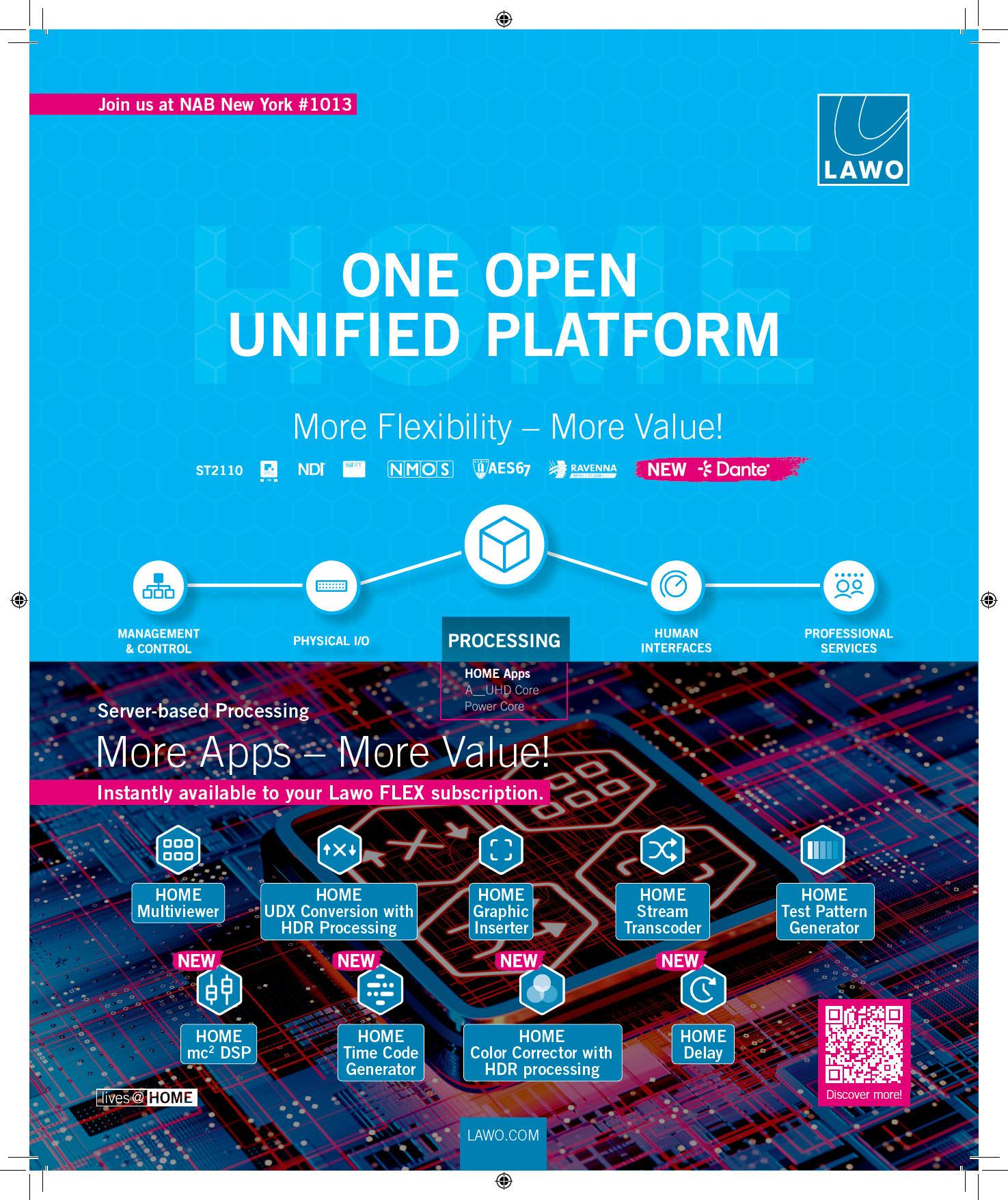

With the industry changing ever faster, existing dedicated hardware may not be able to handle new delivery channels and formats. Lawo’s HOME Apps are designed to address this with a flexible, instantly scalable processing solution that runs anywhere. The apps decouple processing from the hardware it runs on, providing the broadcast and media functionality users need, when and where they need it, with seamless scalability and a revolutionary commercial model. They also offer microservices for macro agility.

The current HOME Apps portfolio, integrated into the HOME management platform, comprises the following: HOME Multiviewer; HOME UDX conversion with HDR processing; HOME Stream Transcoder; HOME Graphic Inserter; HOME mc² DSP; HOME Test Pattern/Test Tone Generator (TPG); HOME Color Corrector with HDR Processing; HOME Timecode Generator; HOME Delay. www.lawo.com

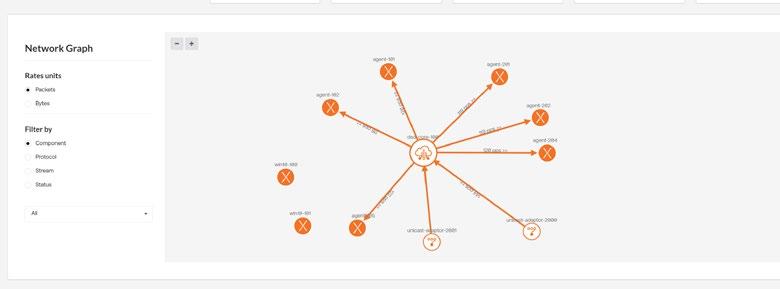

TVU Networks’ Software as a Service platform provides visibility and operational control across cloud, on-premise and hybrid environments, giving media organizations a complete understanding of every stage of their production workflows. A fully integrated virtual Network Operations Center (NOC), offered at no additional charge to all TVU SaaS subscribers, gives broadcasters real-time visibility into their entire media pipeline, from contribution to final playout. It extends beyond traditional cloud offerings by providing deep operational control across diverse infrastructure setups in the cloud, on-prem or in a hybrid environment.

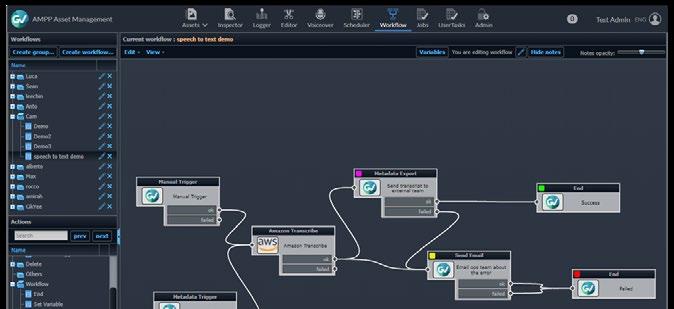

Dalet powers end-to-end content production and media supply chain workflows with Dalet Flex media asset management (MAM) and workflow orchestration, enabling sports organizations and entertainment companies to produce, manage, package, distribute and monetize content effortlessly. Key integrations with digital storefronts like Veritone Digital Media Hub further enhance library and archive monetization opportunities.

Dalet Flex has been updated to include a range of operational efficiency capabilities, including enhanced growing-file management and editing capabilities that accelerate workflows. Improved UX functionality provides faster, easier metadata management and new cost-monitoring capabilities track cloud storage and processing costs. The solution also allows users to organize and curate assets in a central library, search for and update metadata, perform rough cut edits, exchange sequences with editing solutions, and trigger workflows.

www.dalet.com

TVU’s platform also offers UDX for up/down/cross-conversion, browser-based video scopes and manual or AI-powered color correction for any IP source and supports NDI, SRT, SMPTE ST 2110, SDI, MPTS and other standards. www.tvunetworks.com

PlayBox Neo’s software-centric Cloud2TV is based on the concept of virtual channel playout, and incorporates an intuitive web-based control interface with administrator-adjustable rights assignment, TV channel management, systems and user action logging and notifications. Cloud2TV applications are the main components with each functioning individually but in close coordination with the others to provide a full set of broadcast services supporting the workflow of an entire TV channel.

Basic Cloud2TV applications are Content Ingest, Graphic Editor, Media Browser with Meta Data Handling & Clip trimmer, Quality Control & Verification tools, Transcoder, Playlist Manager and Audit Log. New features include updated auto Import functionality; prevention of publishing Playlists that contain media files that have not been QC checked; audio normalization after QC check and other capabilities. www.playboxneo.com/cloud-solutions

AWS Elemental MediaConnect, a high-quality transport service for live video, delivers the reliability and security of satellite and fiber-optic transport combined with the flexibility, agility, and economics of IP-based networks. Its features include secure, reliable live video transport; uninterrupted, high-quality video delivery achieved by adding a QoS layer over standard IP transport; industry-standard encryption for secure live video control and distribution; and savings of 30% or more compared with a typical satellite primary distribution use case that has 70 destinations.

More specifically, AWS Elemental MediaConnect Gateway transmits compressed live video between on-premises multicast environments and AWS, providing real-time critical video quality metrics, with broadcast-standard alerts to identify issues and maintain confidence that video is delivered.

www.aws.amazon.com/mediaconnect

swXtch.io has added support for new features in its cloudSwXtch intelligent media network to help broadcasters move live production workflows to the cloud. cloudSwXtch now supports JPEG-XS compression flows, allowing the movement of visually lossless video at very low latency into and through the cloud. Support for JPEG-XS compression also addresses the challenge of managing the heavy bandwidth and high costs of uncompressed video in the cloud while retaining premium broadcast-quality pictures.
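To put the bandwidth point in rough numbers, the sketch below estimates the active-picture bit rate of an uncompressed stream and what remains after compression. Every figure in it is an illustrative assumption and not a swXtch.io specification: the 1080p60 and UHD rasters, 10-bit 4:2:2 sampling, and the 4:1 and 10:1 compression ratios are chosen only to show the arithmetic.

```python
def uncompressed_bitrate_bps(width: int, height: int, fps: float,
                             bit_depth: int = 10,
                             samples_per_pixel: float = 2.0) -> float:
    """Active-picture bit rate in bits per second.

    4:2:2 sampling averages two samples per pixel (one luma plus half of
    each chroma component), hence the default of 2.0.
    Blanking and transport overhead are ignored.
    """
    return width * height * samples_per_pixel * bit_depth * fps


def compressed_bitrate_bps(uncompressed_bps: float, ratio: float) -> float:
    """Bit rate after compression at an assumed ratio (e.g. 10 for 10:1)."""
    return uncompressed_bps / ratio


if __name__ == "__main__":
    for name, (w, h) in {"1080p60": (1920, 1080), "UHD p60": (3840, 2160)}.items():
        raw = uncompressed_bitrate_bps(w, h, 60)
        print(f"{name}: uncompressed {raw / 1e9:.2f} Gb/s")
        for ratio in (4, 10):  # assumed ratios, for illustration only
            comp = compressed_bitrate_bps(raw, ratio)
            print(f"  at {ratio}:1 -> {comp / 1e6:.0f} Mb/s")
```

Under these assumptions a single 1080p60 flow drops from about 2.5 Gb/s to a few hundred Mb/s, which is the kind of reduction that makes moving many live flows through the cloud more affordable.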

swXtch.io has also implemented Network Media Open Specifications (NMOS) workflows in cloudSwXtch, streamlining the management and orchestration of media workflows in the cloud and ensuring they align with on-prem systems. Benefits include a unified language for endpoints and routing control systems and less time spent on provisioning, utilizing and upgrading network infrastructure. www.swXtch.io

Haivision StreamHub is a powerful mobile and IP stream receiver, decoder, and distribution platform that takes in live feeds and files from Haivision Pro and Air transmitters, Rack encoders, and the MoJoPro app. It can be deployed on-prem or in the cloud for integration with all types of live production workflows. StreamHub has been designed to meet the demand of field-based camera operators and live broadcast producers needing to share live video contributions and return feeds over mobile and IP networks.

Supporting both H.264 and HEVC with resolutions up to 4K UHD, StreamHub is designed to receive, decode, and distribute live video for remote production workflows and includes a two-way IFB or audio intercom. This allows for real-time communication and the management of video returns to provide remote operators with studio feeds, confidence monitoring, and teleprompters.

www.haivision.com

Evertz DreamCatcher BRAVO Studio virtual production control suite is a collaborative, all-in-one production platform that provides ingest, playback, live video/audio switching, replays, graphics and more, making it ideal for sports, local news, studio, and non-broadcast productions.

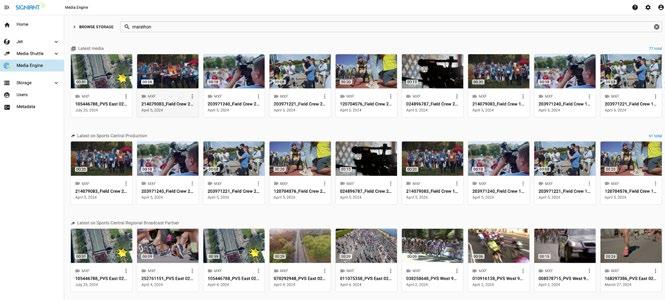

Signiant Media Engine offers a unique solution to the challenge of managing media assets in cloud object storage spread across multiple storage types and locations by providing a unified view across all Signiant-connected storage locations—whether on-premises or in the cloud. This single pane of glass view enhances visibility and access to all media assets, allowing users to quickly find and use content without the need to ingest files or make decisions between storage types or locations. By streamlining access and eliminating barriers between different storage environments, Signiant Media Engine ensures that teams can efficiently and effectively interact with the content they need, no matter where the assets are stored.

www.signiant.com

Blackmagic Cloud Store provides users with high-performance network storage. Because it understands Blackmagic Cloud media sync and supports DaVinci Resolve proxy files, camera media will sync the moment shooting begins, allowing the post-production team to start working immediately.