S Y N I M M E R S I V E

M.ARCH THESIS DESIGN PROJECT

FOR BARTLETT SCHOOL OF ARCHITECTURE (2022)

Exhibited: Ars Electronica ’22 (Austria), London Festival of Architecture ’22

Software Input: Unity (Central Processing Platform), HTC Vive (Motion Capture System), Arduino (Wearable Devices)

Taking its name from the prefix syn-, meaning “acting together” or “united”, the project presents an immersive dreamscape that explores both the experience of togetherness and the analysis of its data.

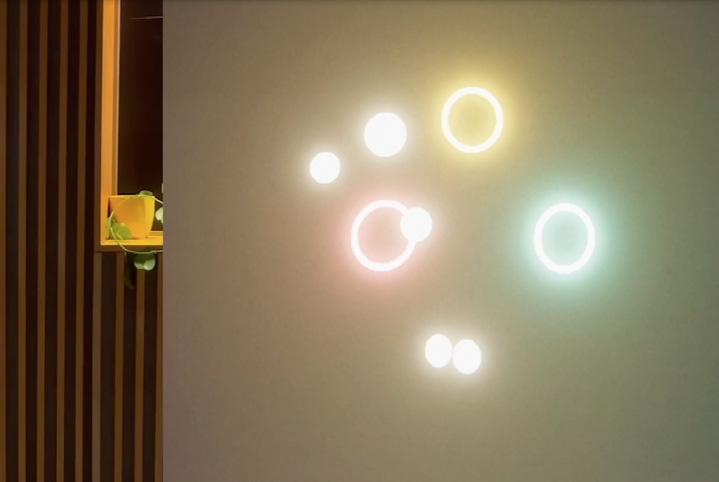

Guided by a system of interactive lights and the ambiguous presence of a performer, participants are prompted to engage in synchronised movement intended to trigger the release of oxytocin, a hormone associated with heightened self-other awareness.

Utilising this method of empathy enhancement, the performance aims to blur judgements and pre-conceived social boundaries, while guiding participants through three stages of cognitive awareness.
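The text does not specify how synchronised movement is measured. As one hedged illustration, the sketch below scores two participants’ synchrony as the Pearson correlation of their frame-to-frame movement speeds, assuming each participant’s position is sampled once per frame (for example from an HTC Vive tracker). The function names and the 0.8 threshold are hypothetical, and the score says nothing about oxytocin itself.

```python
import math


def speeds(positions):
    """Frame-to-frame displacement from a list of (x, y, z) tracker positions."""
    return [math.dist(a, b) for a, b in zip(positions, positions[1:])]


def pearson(xs, ys):
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def synchrony(track_a, track_b, threshold=0.8):
    """Return (score, in_sync) for two participants' tracked paths.

    The 0.8 threshold is a hypothetical cut-off for "moving together".
    """
    score = pearson(speeds(track_a), speeds(track_b))
    return score, score >= threshold
```

Correlating speeds rather than raw positions means two participants can be “in sync” while standing in different parts of the room, which suits movement that mirrors rather than overlaps.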

TEAM MEMBERS: COURTNEY KLEIN, EVELYNN ZHANG, KIKI LIN



Image: Scene of the participant interacting with the live data visualisation of his heart rate variability.

S T A R R Y N I G H T

Software Input: Unity (Central Environment with MediaPipe BlazePose), Cinema4D (Animation)

Inspired by the same canvas of night sky that people share despite being physically apart, ‘Starry Night’ is envisioned as a collective virtual space where users can connect with family, friends, or strangers.

Using real-time body pose tracking through the computer’s webcam, distant users are unified under a virtual dark dome once they put on their VR headsets. Each user can see only their own and the other users’ heads, wrists and ankles, represented as glowing stars. Users are prompted to interact with one another by collectively participating in choreographed movements that position the stars on specified targets set out within the Unity environment. Once all stars are locked in place, a constellation is projected into the sky, lighting up the dome.
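The locking rule described above can be sketched in a few lines. This is a minimal Python illustration, not the project’s Unity code: it assumes each user’s pose arrives as MediaPipe’s 33 normalised (x, y) landmarks, lets the nose landmark stand in for the head, and treats `Star`, `update_stars` and the 0.05 lock radius as hypothetical names and values.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

# Landmark indices in MediaPipe's 33-point pose topology (nose stands in for the head).
TRACKED_LANDMARKS = {"head": 0, "left_wrist": 15, "right_wrist": 16,
                     "left_ankle": 27, "right_ankle": 28}

LOCK_RADIUS = 0.05  # hypothetical tolerance, in normalised screen units


@dataclass
class Star:
    user: str
    joint: str
    target: tuple[float, float]
    locked: bool = False


def update_stars(stars, poses, radius=LOCK_RADIUS):
    """Lock each star whose tracked joint is within `radius` of its target.

    `poses` maps a user id to that user's list of 33 (x, y) landmarks.
    Returns True once every star is locked, i.e. the constellation can appear.
    """
    for star in stars:
        if star.locked:
            continue  # locked stars stay in place
        landmarks = poses.get(star.user)
        if landmarks is None:
            continue  # user not currently tracked
        x, y = landmarks[TRACKED_LANDMARKS[star.joint]]
        if math.dist((x, y), star.target) <= radius:
            star.locked = True
    return all(star.locked for star in stars)
```

Keeping stars locked once they reach their targets lets users hold a position only briefly, so the group can complete the constellation one joint at a time rather than all at once.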

Project Duration: 3 Days

Image: Environment built in Unity and Display (right) of the

TEAM MEMBERS: ADAM BRAUN, EVELYNN ZHANG, KIKI LIN, MARY JANE MOUSSA

E M B O D I E D S O U N D

Software Input: Ableton (Audio Processing), TouchDesigner (Visual & Audio Interaction), Microsoft Kinect (Motion Tracking)

‘Embodied Sound’ is an interactive audio-visual performative piece influenced by the current embodied experience the pandemic has imposed on us.

The performance relates the physicality of social distancing measures ‘choreographed’ upon bodies to the urban soundscape created by the constant reminder announcements that people have grown accustomed to as part of their day-to-day lives.

Improvised movements created by the dancer directly trigger and influence the sound played in the space. As the dancer’s body interprets and orchestrates the audio in real time, a dialogue emerges between the body in motion, the soundscape it creates, and the visual representation of the moving body and sound particles in space, resulting in an engaging, interactive mode of performance.
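One way to picture the movement-to-sound link is a small mapping layer between the motion tracker and the audio software. The sketch below is an assumption, not the piece’s actual TouchDesigner network: it maps the height of one tracked hand to a volume between 0 and 1 and fires a sample trigger when the hand moves faster than a threshold, with a cooldown so one gesture does not retrigger on every frame. The class name and all numeric defaults are hypothetical.

```python
import math


class MovementToSound:
    """Hypothetical mapping from one tracked hand to sound parameters.

    Positions are (x, y, z) in metres, y pointing up, as a depth camera might report them.
    """

    def __init__(self, trigger_speed=1.5, cooldown=0.5, floor=0.0, ceiling=2.0):
        self.trigger_speed = trigger_speed  # m/s needed to fire a sample
        self.cooldown = cooldown            # minimum seconds between triggers
        self.floor, self.ceiling = floor, ceiling
        self.prev = None
        self.last_trigger = -math.inf

    def update(self, pos, t, dt):
        """Return (volume in 0..1, triggered) for the hand position at time t."""
        height = (pos[1] - self.floor) / (self.ceiling - self.floor)
        volume = min(1.0, max(0.0, height))  # higher hand -> louder, clamped
        triggered = False
        if self.prev is not None:
            speed = math.dist(pos, self.prev) / dt
            if speed >= self.trigger_speed and t - self.last_trigger >= self.cooldown:
                triggered = True
                self.last_trigger = t
        self.prev = pos
        return volume, triggered
```

Separating a continuous control (volume) from discrete events (triggers) mirrors the two roles the text gives movement: influencing the sound and triggering it.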

Project Duration: 3 Days

TEAM MEMBERS: ALEX WARD, DAPHNE CHU, EVELYNN ZHANG, TAFARA MANIKA

Image: Troubleshooting Ableton in the processing of sound, which can be manipulated by the dancer’s movement.

Image: TouchDesigner as the central platform for the input of motion capture data, music manipulation and output of a responsive visual projection.

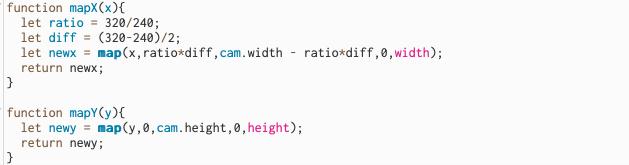

INTERACTIVE PROJECTION

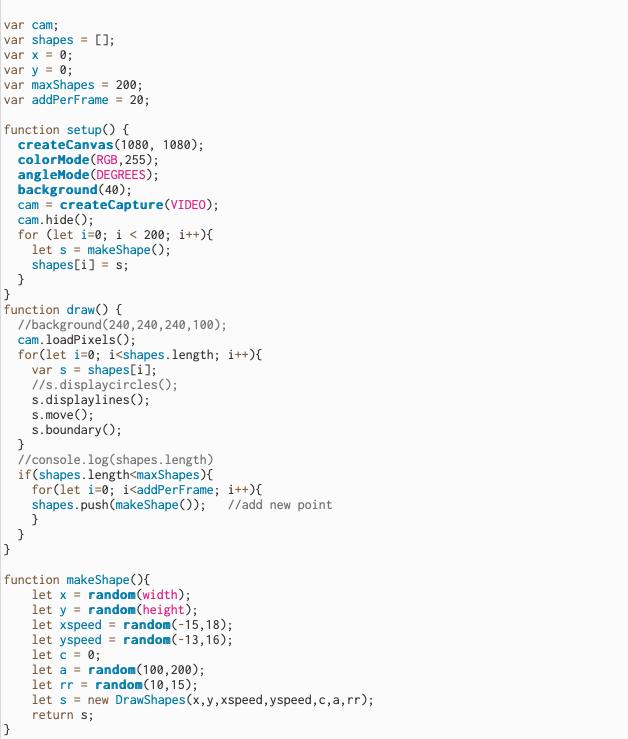

LEFT: P5 JAVASCRIPT


S E L F P O R T R A I T

This p5 sketch paints a live portrait of anyone who sits in front of the computer with a webcam enabled.

The expressionistic strokes generated by the code update the canvas with the palette of colours captured by the camera, responding in real time to the changing motion of the subject. This creates an intimate interaction between the painter (in this case, the computer) and the painted (which can be a person, an object or a space in general).
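The sketch itself is written in p5.js; the Python outline below only illustrates the stroke-planning idea under stated assumptions. It samples random pixels from the current webcam frame, keeps those whose colour changed by more than a threshold since the previous frame, and returns a stroke in the sampled colour whose length grows with the change. Gating strokes by frame difference, the stroke-length mapping and the name `plan_strokes` are hypothetical choices; the original sketch’s exact rules are not described.

```python
import random


def plan_strokes(prev, curr, n=200, threshold=30, rng=random):
    """Return brush strokes (x, y, rgb, length) sampled from the current frame.

    prev, curr: frames as lists of rows of (r, g, b) tuples, same size.
    A stroke is only kept where the pixel changed by more than `threshold`
    (summed absolute RGB difference), so the portrait repaints where the
    subject moves; stroke length grows with the amount of change.
    """
    h, w = len(curr), len(curr[0])
    strokes = []
    for _ in range(n):
        x, y = rng.randrange(w), rng.randrange(h)
        colour = curr[y][x]  # palette comes straight from the camera
        motion = sum(abs(a - b) for a, b in zip(colour, prev[y][x]))
        if motion > threshold:
            length = 2 + motion // 20  # hypothetical motion-to-length mapping
            strokes.append((x, y, colour, length))
    return strokes
```

In p5.js each returned stroke would be drawn with `stroke()` and `line()` onto a canvas that is never cleared, so still areas keep their earlier strokes while moving areas are repainted.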

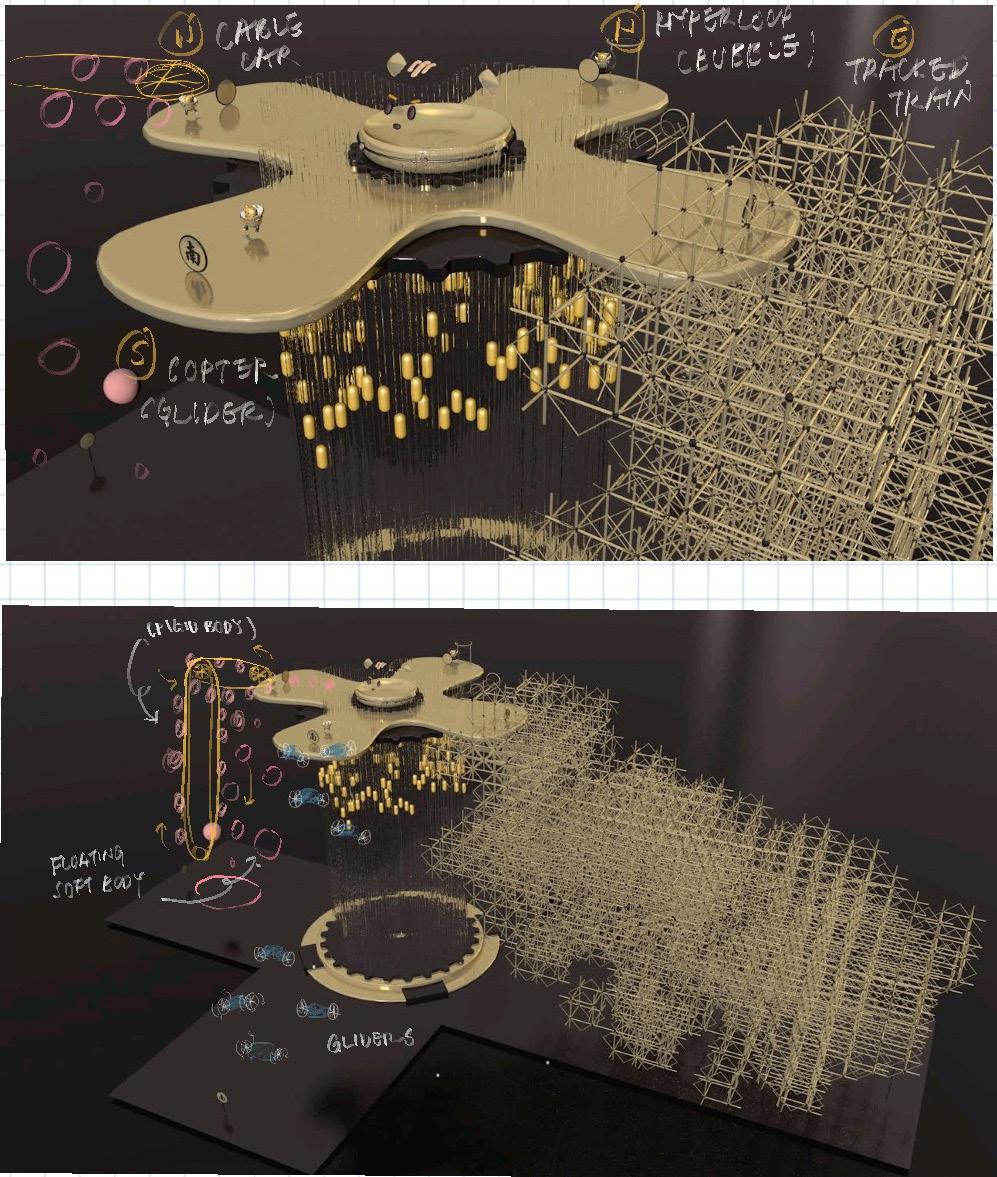

Located in the center of a constantly the station master who oversees the

The station is a place for the convergence serving the little inhabitants of the city. elevated central platform rotates dynamically a fine piece of clockwork - responding as they alight from one transportation into another.

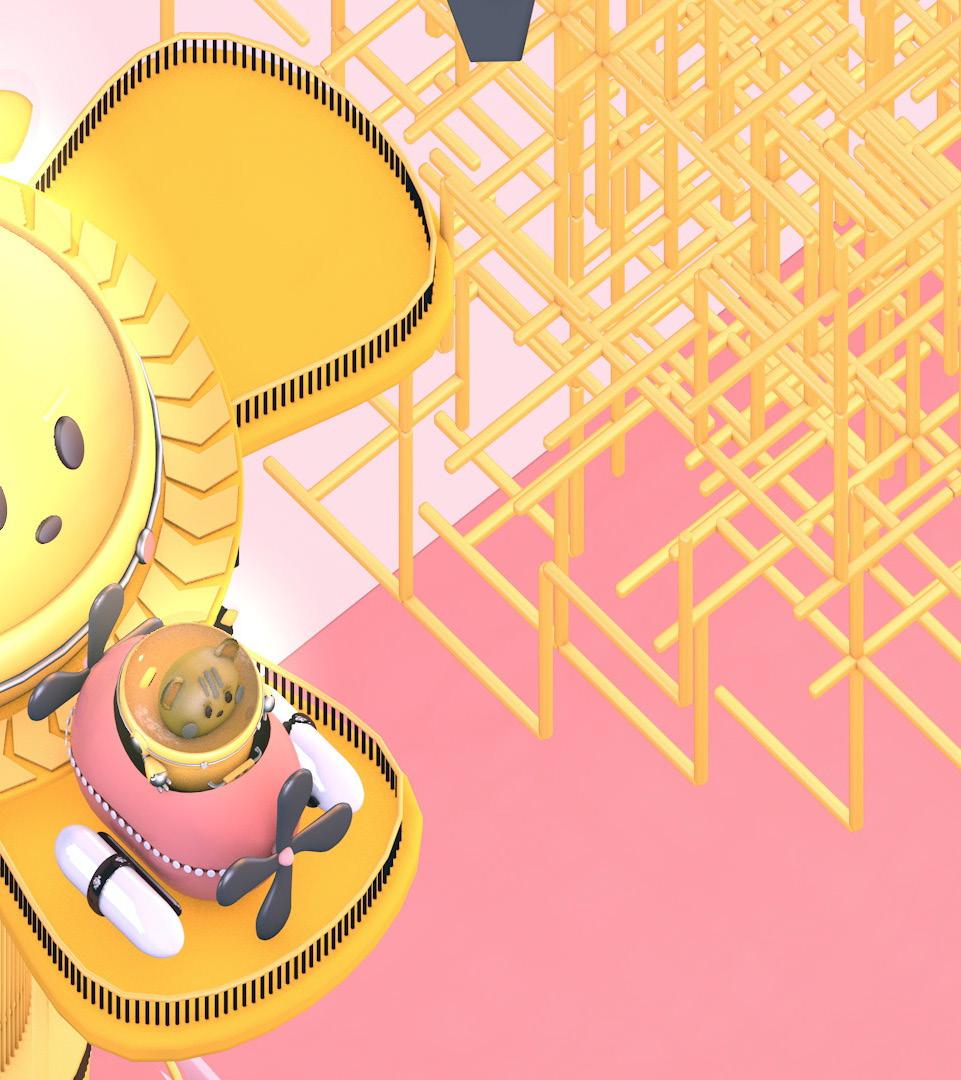

T O R A C E N T R A L E X C H A N G ( S K E T C H W O R K)RIGHT: CINEMA4D

#5

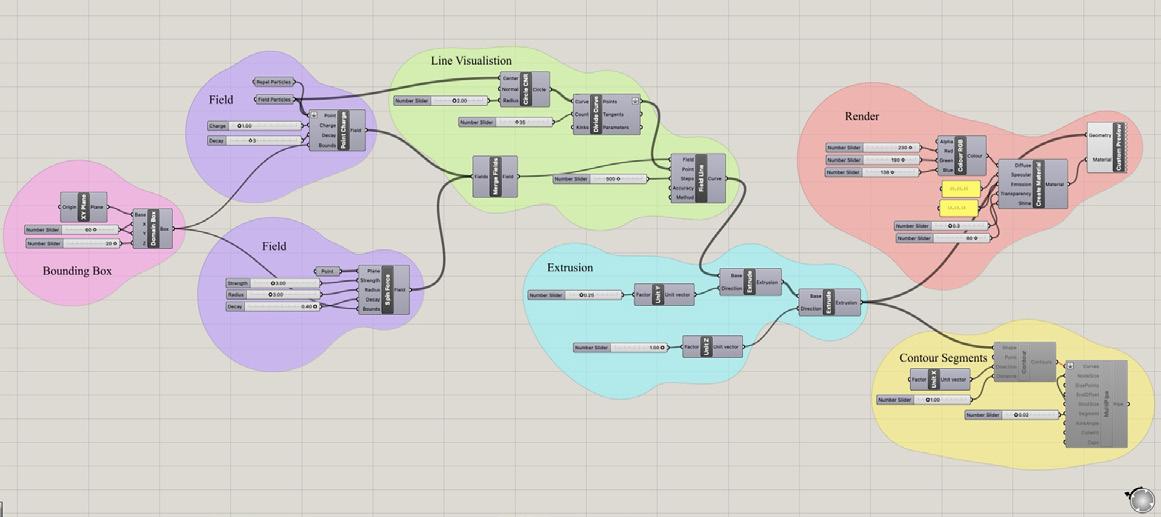

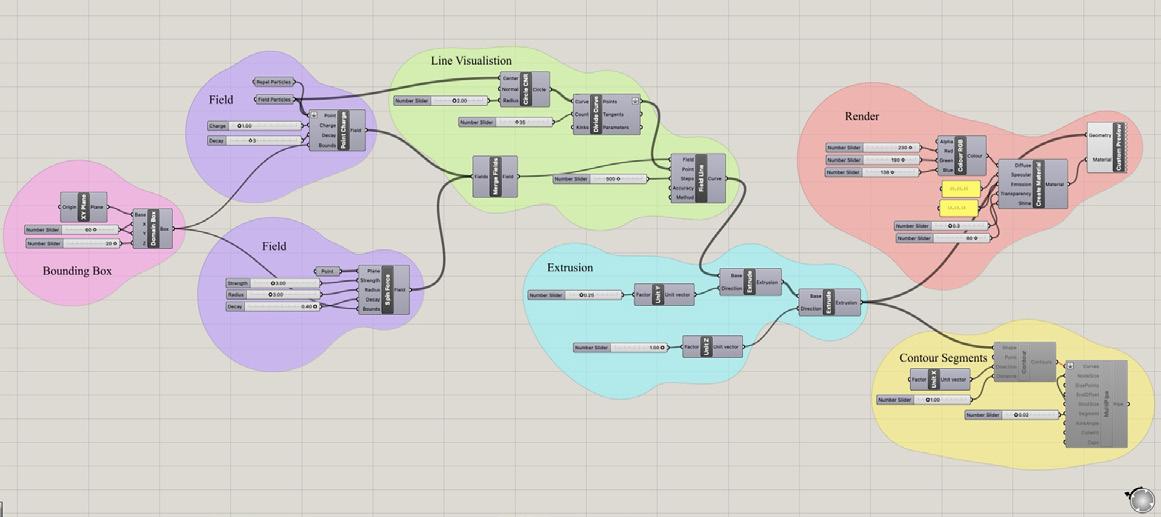

ABOVE: RHINO, GRASSHOPPER

P A R T I C L E F I E L D

This short exercise on grasshopper experiments with the creation of a dynamic morphing gemetrical space through the usage of several changing parameters.

The final visuals are generated primarily from both ‘Spin Force’ and ‘Point Charge’ functions.

Econstantly rotating platform, Tora Robotto is Tora Central Exchange Station.

convergence of different transport systems city. Located on top of a sky tower, the dynamically - almost representational of responding intelligently to the little passengers transportation mode, transit through and departs
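The ‘Spin Force’ and ‘Point Charge’ functions named above can be approximated outside Grasshopper as a sum of 2D vector fields. In this hedged sketch a point charge pushes radially with inverse-square falloff, and a spin force is the same vector rotated by 90 degrees, so particles swirl around its centre; particles are then moved with simple Euler steps. Grasshopper’s own field components expose further parameters (such as radius and decay), so these formulas and function names are assumptions, not a reproduction of them.

```python
import math


def point_charge(p, centre, charge):
    """Radial vector away from `centre` with magnitude charge / r**2."""
    dx, dy = p[0] - centre[0], p[1] - centre[1]
    r2 = dx * dx + dy * dy
    if r2 == 0:
        return (0.0, 0.0)  # undefined at the charge itself
    r = math.sqrt(r2)
    return (charge * dx / (r * r2), charge * dy / (r * r2))


def spin_force(p, centre, strength):
    """Counter-clockwise tangential vector around `centre`, same falloff."""
    fx, fy = point_charge(p, centre, strength)
    return (-fy, fx)  # rotate the radial vector by 90 degrees


def step(particles, charges, spins, dt=0.01):
    """Advect particles one Euler step through the summed field.

    charges, spins: lists of (centre, strength) pairs.
    """
    moved = []
    for p in particles:
        vx = vy = 0.0
        for centre, q in charges:
            fx, fy = point_charge(p, centre, q)
            vx, vy = vx + fx, vy + fy
        for centre, s in spins:
            fx, fy = spin_force(p, centre, s)
            vx, vy = vx + fx, vy + fy
        moved.append((p[0] + vx * dt, p[1] + vy * dt))
    return moved
```

Animating the charge and spin strengths over time, then repeatedly calling `step`, is one way to obtain the morphing quality described, since every particle path responds to each parameter change.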