NASA find cosmic question mark in space

Human longevity in the 21st century

Researchers make robots controlled by mushrooms

The history of water on Mars

As a seasoned editor and journalist, Richard Forsyth has been reporting on numerous aspects of European scientific research for over 10 years. He has written for many titles including ERCIM’s publication, CSP Today, Sustainable Development magazine and eStrategies magazine, and remains a regular contributor to the UK business press. He also works in public relations, helping businesses communicate their services effectively to industry and consumers.

Something interesting happened in my family recently: my wife swam the English Channel to France as part of a relay team. It wasn’t long before she was swimming through sea-tossed plastic and discarded rubbish, but the most astonishing part was that the rubbish was very old, certainly not freshly blown from nearby shores. A chocolate bar wrapper that spiralled past her goggles was from the early 1960s. It had been rolling around the sea for decades as part of a ‘shoal’ of vintage human leftovers, hidden from all but the fish and, now, a swimmer in an unlikely place. Plastic bags tumbling about in the waves outnumbered the jellyfish.

Thankfully, we are now taking this seriously in the EU, but the damage has been real, and the pollution is a tide that’s not going away. We are having a brutal, lasting impact on nature, due in part to the way we have been using non-degradable and even toxic materials in, and for, our products.

One chilling prediction is that by 2050 the sea will contain more plastic than fish by weight. And it’s not just the seas that are our dumping ground: our rubbish is a modern weed everywhere you look. Further, we are now learning about the far-reaching impact of hidden toxic chemicals such as the per- and polyfluoroalkyl substances known as PFAS, which turn up widely in everyday goods like paint, waterproof clothing and kitchenware.

We need to change the way we think about products and materials.

The year 2050 has become something of a deadline for Europe. It’s a date that the EU has fixated on for achieving the kind of transformation that is hard to envisage when we are talking about a geography made up of 27 countries.

Ursula von der Leyen, President of the European Commission, stated in 2019 that she envisions Europe becoming the first climate-neutral continent in the world by 2050. This ambition was turned into practical steps through the European Green Deal. These steps include creating a fully circular economy. “It futureproofs our union,” she said. As part of this, there is a drive to banish the so-called ‘take-make-dispose’ tradition of manufacturing. The aim is instead to nurture a culture in industry that develops sustainable, toxic-free manufacturing, using environmentally friendly, degradable and recyclable materials and processes.

This is very ambitious and, be in no doubt, a big practical and logistical challenge for many businesses. Nevertheless, it is the kind of thinking and leadership needed for a cleaner future, and for a Europe, and a world, that we would want to live in and can sustain.

Richard Forsyth Editor

EU Research takes a closer look at the latest news and technical breakthroughs from across the European research landscape.

Researchers in the NORTH project are developing hybrid nanoparticles designed to combine temperature-based diagnostics with other functions such as drug delivery, as Professor Anna Kaczmarek explains.

The SCANnTREAT project is combining a new imaging modality with X-ray activated photodynamic therapy, which could lead to more efficient treatment of cancer, as Dr Frédéric Lerouge explains.

What drives the progression of neurodegenerative diseases like ALS, Alzheimer’s, and MS?

Unravelling the mechanisms of neuroinflammation is at the heart of Professor Bob Harris’s research at the Karolinska Institutet.

We spoke with Prof. Dr. Mathias Hornef about the ERC-funded EarlyLife project, which investigates how early-life infections impact the gut epithelium, microbiome, and immune system development in neonates.

Researchers in the FORGETDIABETES project are working to develop a fully implantable bionic invisible pancreas, which will relieve the burden associated with managing type-1 diabetes, as Professor Claudio Cobelli explains.

23 Longevity in the 21st Century

In the 21st century, scientists are exploring the mechanisms of aging, from telomere degradation to epigenetic clocks and senolytics, to extend both lifespan and healthspan and revolutionize how we age. By Nevena Nikolova

We spoke to McKenna Davis and Benedict Bueb about the work of the transdisciplinary INTERLACE project, which has developed an array of tools, guidance materials, and further resources to build capacities and empower cities and other stakeholders to implement urban nature-based solutions.

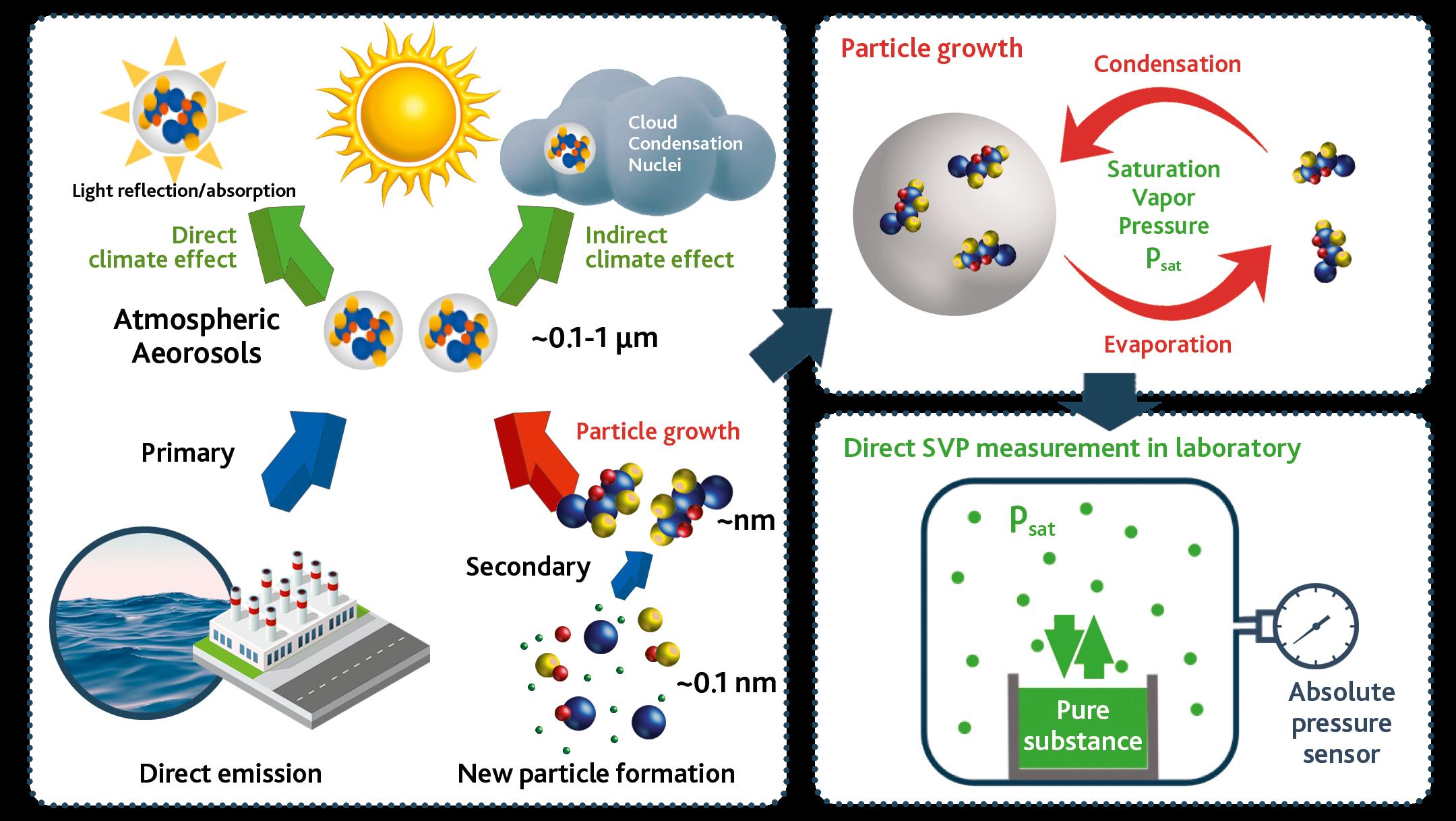

28 A novel instrument for the accurate and direct measurement of saturation vapor pressures of low-volatile substances

Henrik Pedersen and Aurelien Dantan tell us about their work in developing new instruments for measuring saturation vapour pressure and its wider relevance to understanding the influence of aerosols on the climate system.

We spoke to Professor Thomas Kätterer about his research into the impact of different management practices on soil carbon sequestration, and its wider relevance in helping meet emissions reduction targets.

Iron is an important electron acceptor in soil environments for organisms that respire organic carbon to gain energy. Professor Ruben Kretzschmar is investigating the influence of these processes on nutrient and contaminant behaviour.

The Hippiatrica brings together prescriptions for treating different ailments in horses; now Dr Elisabet Göransson is working to bring them to a wider audience.

36 At the Edge of Language: An Investigation into the Limits of Human Grammar

Researchers at the University of Aarhus are looking at how people process different types of sentence structures and probing the limits of grammar, as Professor Anne Mette Nyvad explains.

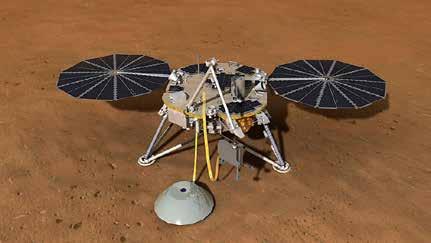

38 MarsFirstWater

The team behind the MarsFirstWater project are investigating the characteristics of water on early Mars, research which holds important implications for future space missions to the planet, as Professor Alberto Fairén explains.

42 OLD WOOD NEW LIGHT

We spoke to Professor Dan Hammarlund about his work as the coordinator of a research project dedicated to making dendrochronology data available to researchers.

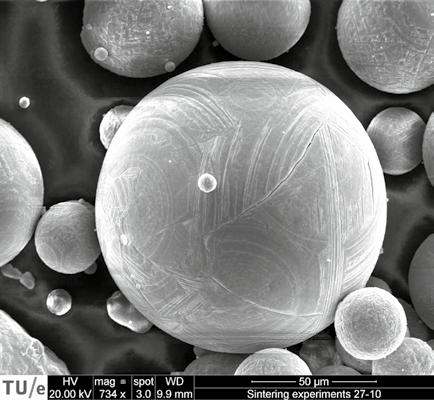

44 MetalFuel

Professor Philip de Goey is exploring the potential of iron powders as an alternative carrier of energy in the MetalFuel project, part of the goal of moving towards a more sustainable society.

46 IPN-RAP

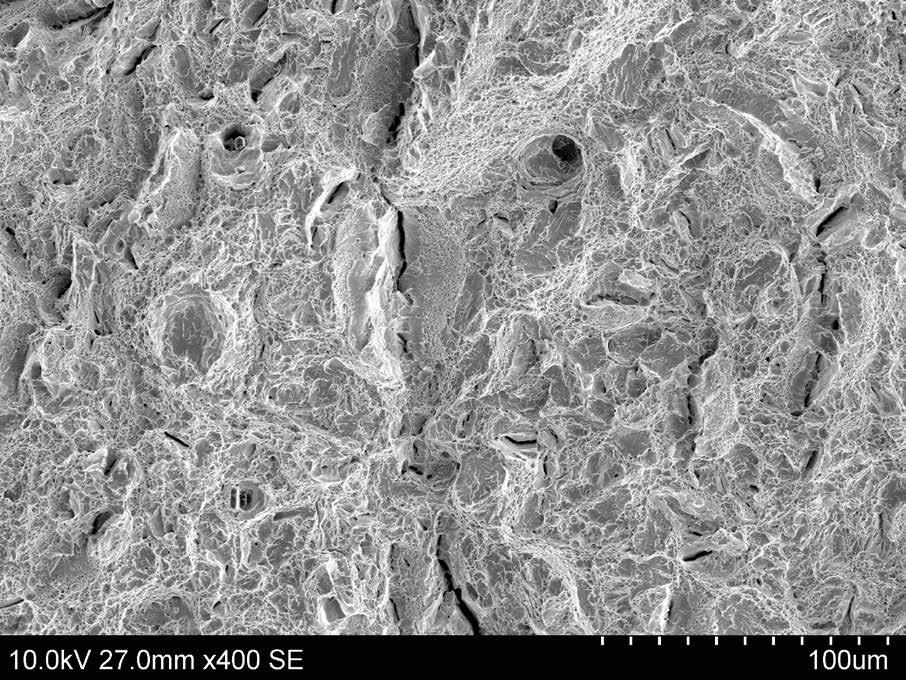

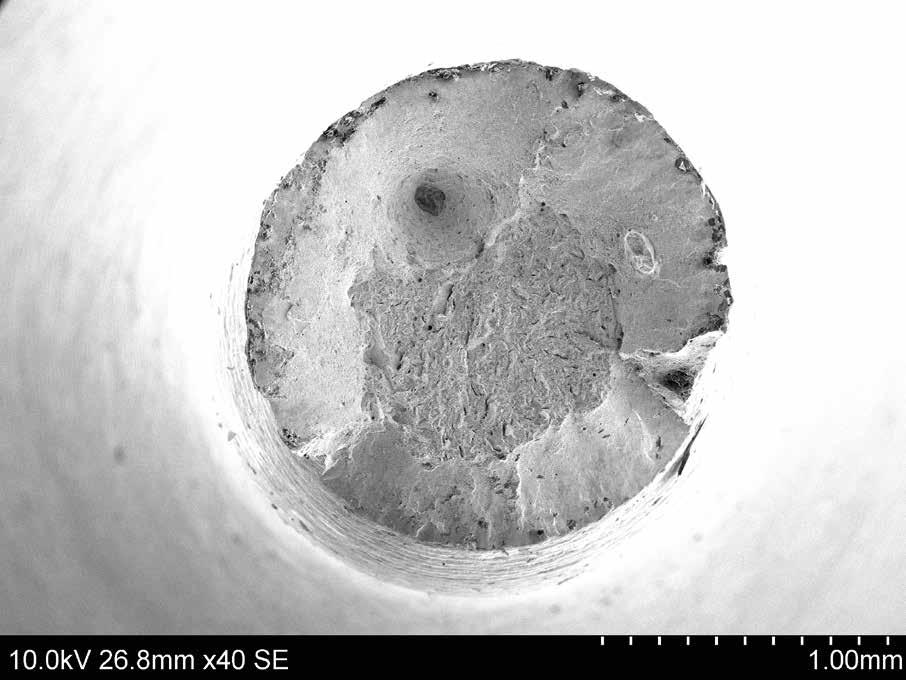

The IPN-RAP project team is creating new knowledge on how to assess the properties of certain steels, ready for their use in artillery products, as Andreas Gaarder and Knut Erik Snilsberg explain.

48 Wide-angle neutron polarisation analysis to study energy and quantum materials

We spoke to Professor Elizabeth Blackburn about how she and her team are using neutron scattering techniques to investigate energy and quantum materials.

50 AUTHLIB: Neoauthoritarianisms in Europe and the liberal democratic response

The AUTHLIB project team is investigating the factors behind the growing appeal of illiberal forces, which can then inform the development of tools to defend liberal democracy, as Professor Zsolt Enyedi explains.

52 The establishment, growth and legacy of a settler colony

We spoke to Professor Erik Green about his research into how the settler economy in the Cape Colony evolved, its impact on indigenous people, and its long-term legacy.

54 MERCATOR

Professor Youssef Cassis and his colleagues in the Mercator project are looking at the way past financial crises are remembered and how memories of them influence the bankers of today.

EDITORIAL

Managing Editor Richard Forsyth info@euresearcher.com

Deputy Editor Patrick Truss patrick@euresearcher.com

Science Writer Nevena Nikolova nikolovan31@gmail.com

Science Writer Ruth Sullivan editor@euresearcher.com

Production Manager Jenny O’Neill jenny@euresearcher.com

Production Assistant Tim Smith info@euresearcher.com

Art Director Daniel Hall design@euresearcher.com

Design Manager David Patten design@euresearcher.com

Illustrator Martin Carr mary@twocatsintheyard.co.uk

PUBLISHING

Managing Director Edward Taberner ed@euresearcher.com

Scientific Director Dr Peter Taberner info@euresearcher.com

Office Manager Janis Beazley info@euresearcher.com

Finance Manager Adrian Hawthorne finance@euresearcher.com

Senior Account Manager Louise King louise@euresearcher.com

EU Research

Blazon Publishing and Media Ltd 131 Lydney Road, Bristol, BS10 5JR, United Kingdom

T: +44 (0)207 193 9820

F: +44 (0)117 9244 022

E: info@euresearcher.com www.euresearcher.com

© Blazon Publishing June 2010 ISSN 2752-4736

The EU Research team take a look at current events in the scientific news

The current Bulgarian EU Commissioner cannot stay on in the new European Commission for personal reasons. Iliana Ivanova, who has been responsible for Innovation, Research, Culture, Education and Youth in the first von der Leyen cabinet, announced on her Facebook profile: “I have been immensely honoured and privileged to serve as a commissioner in the last year of the term of the current European Commission!”

Ivanova thanked the Bulgarian authorities and citizens for their trust, their broad support and the opportunity to represent Bulgaria at the highest European level. “During this dynamic period, I have made every effort to discharge the duties assigned to me within a portfolio of key importance for the future, with a considerable budget. I am proud of everything that my colleagues and I have been able to achieve in such a short time,” she said. “I am convinced that Bulgaria will remain well-represented in the coming years in making the most important decisions for the European policies.”

Iliana Ivanova was appointed by the Council of the European Union as the new European Commissioner from Bulgaria on September 19, 2023. She replaced Mariya Gabriel for the remainder of the Commission’s term. Gabriel resigned from the post to become deputy prime minister and foreign minister of Bulgaria, with the prospect of taking over as prime minister under a rotation arrangement. Ivanova had been a member of the European Parliament from 2009 to 2012. From 2013 until being proposed by the Bulgarian government for European Commissioner in 2023, she represented Bulgaria at the European Court of Auditors in Luxembourg.

Biohybrid robot with fungal electronics could help usher in an era of sustainable robotics.

Building a robot takes time, technical skill, the right materials -- and sometimes, a little fungus. In creating a pair of new robots, Cornell University researchers cultivated an unlikely component, one found on the forest floor: fungal mycelia. By harnessing mycelia’s innate electrical signals, the researchers discovered a new way of controlling “biohybrid” robots that can potentially react to their environment better than their purely synthetic counterparts.

The team’s paper was published in Science Robotics. The lead author is Anand Mishra, a research associate in the Organic Robotics Lab. The lab is led by Rob Shepherd, professor of mechanical and aerospace engineering at Cornell University and the paper’s senior author. “This paper is the first of many that will use the fungal kingdom to provide environmental sensing and command signals to robots to improve their levels of autonomy,” Shepherd said. “By growing mycelium into the electronics of a robot, we were able to allow the biohybrid machine to sense and respond to the environment. In this case we used light as the input, but in the future it will be chemical. The potential for future robots could be to sense soil chemistry in row crops and decide when to add more fertilizer, for example, perhaps mitigating downstream effects of agriculture like harmful algal blooms.”

Mycelia are the underground vegetative part of mushrooms. They have the ability to sense chemical and biological signals and respond to multiple inputs. “Living systems respond to touch, they respond to light, they respond to heat, they respond to even some unknowns, like signals,” Mishra said. “If you wanted to build future robots, how can they work in an unexpected environment? We can leverage these living systems, and any unknown input comes in, the robot will respond to that.”

Two biohybrid robots were built: a soft robot shaped like a spider and a wheeled bot. The robots completed three experiments. In the first, the robots walked and rolled, respectively, as a response to the natural continuous spikes in the mycelia’s signal. Then the researchers stimulated the robots with ultraviolet light, which caused them to change their gaits, demonstrating mycelia’s ability to react to their environment. In the third scenario, the researchers were able to override the mycelia’s native signal entirely. Experts believe that this advance lays the groundwork for building sturdy, sustainable robots. In the future, these hardy, light-activated cyborgs could be deployed to harsh environments on Earth or even on missions outside our planet.
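A toy example makes it easier to picture how a living signal can drive a machine. The sketch below is not the Cornell team's controller. It assumes a sampled, normalised electrode trace and detects spikes as upward threshold crossings. It then maps the spike rate to a gait frequency through a hypothetical linear gain. The `override_hz` argument mirrors the third experiment, in which the native signal was replaced entirely. The "UV" trace is made up to show a rate increase.

```python
from typing import List, Optional


def detect_spikes(trace: List[float], threshold: float) -> List[int]:
    """Indices where the signal crosses `threshold` upwards (one index per spike)."""
    return [
        i
        for i in range(1, len(trace))
        if trace[i - 1] < threshold <= trace[i]
    ]


def spike_rate_hz(trace: List[float], threshold: float, sample_rate_hz: float) -> float:
    """Average number of detected spikes per second over the whole trace."""
    duration_s = len(trace) / sample_rate_hz
    return len(detect_spikes(trace, threshold)) / duration_s


def gait_frequency_hz(
    rate_hz: float,
    gain: float = 0.5,
    max_hz: float = 2.0,
    override_hz: Optional[float] = None,
) -> float:
    """Map spike rate to actuation frequency (hypothetical linear mapping).

    If `override_hz` is given, the fungal signal is ignored entirely.
    """
    if override_hz is not None:
        return override_hz
    # Scale the biological rhythm and clip it to what the actuators can handle.
    return min(gain * rate_hz, max_hz)


# Ten samples at 10 Hz (one second) of made-up, normalised electrode readings.
baseline = [0, 0, 1, 0, 0, 0, 0, 1, 0, 0]   # two spikes
under_uv = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]   # five spikes (illustrative stimulation)

for label, trace in [("baseline", baseline), ("UV", under_uv)]:
    rate = spike_rate_hz(trace, threshold=0.5, sample_rate_hz=10)
    print(label, rate, gait_frequency_hz(rate))
```

Running it prints `baseline 2.0 1.0` and `UV 5.0 2.0`. More spikes under stimulation produce a faster gait until the hypothetical actuator limit is reached.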

The research and innovation sector has broadly embraced the long-awaited report on EU competitiveness from former Italian prime minister Mario Draghi.

Research and innovation have never been more present in mainstream policy debates. This week, former Italian prime minister Mario Draghi placed them at the heart of his recommendations for boosting EU competitiveness. That followed an earlier report by another former Italian prime minister, Enrico Letta, on the future of the single market, in which he called for a ‘fifth freedom’ for research and innovation.

The Draghi report is expected to play a major role in shaping EU policy over the next five years. The research community is naturally delighted by its call for more public research and innovation funding, including €200 billion for Framework Programme 10 (FP10), the successor to Horizon Europe, which will run from 2028 to 2034. Draghi also calls for better coordination of public research and innovation expenditure across EU member states, via a ‘Research and Innovation Union’ and a ‘European Research and Innovation Action Plan.’

This more Europe-centric approach would prioritise collective EU-wide ambitions over national value for money. Mattias Björnmalm, secretary general of the university association CESAER, hopes this message will be picked up by the expert group report on FP10, due next month. “This outdated mindset of seeing the EU funding programme as primarily a means to redistribute member states’ funds limits Europe’s potential to become a global leader in science and technology,” he said.

Another regularly floated ambition that made it into the Draghi report is the creation of a new funding instrument modelled on the US advanced research projects agencies. It is suggested this would be done by reforming the European Innovation Council’s (EIC) Pathfinder instrument, which supports deep tech projects. Björnmalm notes strong similarities with the European Competitiveness Research Council proposed by CESAER.

The bulk of the full 328-page report is dedicated to detailed policy recommendations for specific sectors including clean technologies, semiconductors, defence, and pharmaceuticals, and addresses issues industry has been complaining about for years, such as burdensome regulations and access to capital. It also includes recommendations to support the development of AI models in several strategic industries.

Cecilia Bonefeld-Dahl, director general of the trade association DigitalEurope, said the report provides “a long list of positive ideas”. These include simplifying AI regulation, better commercialisation of research, and integrating technology into industries where Europe is already strong. Nathalie Moll, director general of the European Federation of Pharmaceutical Industries and Associations, said the publication “shows ambition at the highest level” to address the issues facing the pharma industry, with Europe struggling to attract R&D investment.

Astronomers have found clues in the form of a cosmic question mark, the result of a rare alignment across light-years of space.

NASA’s James Webb Space Telescope has captured a stunning image of a cosmic ‘question mark’ formed by distant galaxies, offering astronomers a unique glimpse into the universe’s star-forming past. This rare alignment, caused by a phenomenon known as gravitational lensing, provides valuable insights into galaxy formation and evolution 7 billion years ago. The image, taken by Webb’s Near-Infrared Imager and Slitless Spectrograph (NIRISS), reveals a pair of interacting galaxies magnified and distorted by the massive galaxy cluster MACS-J0417.5-1154. This cluster acts as a cosmic magnifying glass, allowing astronomers to see enhanced details of much more distant galaxies behind it.

Gravitational lensing occurs when a massive object, such as a galaxy cluster, warps the fabric of space-time around it. This warping can magnify and distort the light from distant objects behind the cluster, creating optical illusions in space. In this case, the lensing effect has produced a rare configuration called a hyperbolic umbilic gravitational lens. This unusual alignment results in five images of the galaxy pair, four of which trace the top of the question mark shape. The dot of the question mark is formed by an unrelated galaxy that happens to be in the right place from our perspective.
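The textbook thin-lens relations below describe the physics behind the ‘cosmic magnifying glass’. This is standard background, not a reproduction of the Webb team's lens model. Here $\boldsymbol{\beta}$ is the true angular position of the background source, $\boldsymbol{\theta}$ the observed position of an image, and $\boldsymbol{\alpha}(\boldsymbol{\theta})$ the deflection produced by the foreground mass:

$$
\boldsymbol{\beta} = \boldsymbol{\theta} - \boldsymbol{\alpha}(\boldsymbol{\theta}),
\qquad
A_{ij} = \frac{\partial \beta_i}{\partial \theta_j},
\qquad
\mu = \frac{1}{\det A}
$$

Each solution $\boldsymbol{\theta}$ of the first equation is a separate image, which is how one galaxy pair can appear several times. Where $\det A = 0$, the magnification $\mu$ formally diverges. The corresponding curves in the source plane are called caustics, and a hyperbolic umbilic is one of the rarer, higher-order caustic configurations.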

While the galaxy cluster has been observed before by NASA’s Hubble Space Telescope, the dusty red galaxy that forms part of the question-mark shape only became visible with Webb. This is due to Webb’s ability to detect infrared light, which can pass through cosmic dust that traps the shorter wavelengths of light detected by Hubble. This capability allows Webb to peer further back in time and observe galaxies as they appeared billions of years ago, during the universe’s peak period of star formation. The galaxies in the question mark are seen as they were 7 billion years ago, providing a window into what our own Milky Way might have looked like during its “teenage years.”

The research team used both Hubble’s ultraviolet and Webb’s infrared data to study star formation within these distant galaxies.

Vicente Estrada-Carpenter of Saint Mary’s University explains the significance of their findings: “Both galaxies in the Question Mark Pair show active star formation in several compact regions, likely a result of gas from the two galaxies colliding. However, neither galaxy’s shape appears too disrupted, so we are probably seeing the beginning of their interaction with each other.” This observation provides valuable information about how galaxies evolve through interactions and mergers, a process that has shaped the universe we see today.

Marcin Sawicki, one of the lead researchers, emphasizes the broader implications of this discovery: “These galaxies, seen billions of years ago when star formation was at its peak, are similar to the mass that the Milky Way galaxy would have been at that time. Webb is allowing us to study what the teenage years of our own galaxy would have been like.” As Webb continues to reveal new wonders of the cosmos, each discovery brings us closer to understanding our place in the vast tapestry of the universe.

Researchers identify mechanism underlying allergic itching, and show it can be blocked.

Why do some people feel itchy after a mosquito bite or exposure to an allergen like dust or pollen, while others do not? A new study has pinpointed the reason for these differences, finding the pathway by which immune and nerve cells interact and lead to itching. The researchers, led by allergy and immunology specialists at Massachusetts General Hospital, a founding member of the Mass General Brigham healthcare system, then blocked this pathway in preclinical studies, suggesting a new treatment approach for allergies. The findings are published in Nature.

“Our research provides one explanation for why, in a world full of allergens, one person may be more likely to develop an allergic response than another,” said senior and corresponding author Caroline Sokol, MD, PhD, an attending physician in the Allergy and Clinical Immunology Unit at MGH, and assistant professor of medicine at Harvard Medical School. “By establishing a pathway that controls allergen responsiveness, we have identified a new cellular and molecular circuit that can be targeted to treat and prevent allergic responses including itching. Our preclinical data suggests this may be a translatable approach for humans.”

When it comes to bacteria and viruses, the immune system takes the lead in detecting pathogens and initiating long-lived immune responses against them. For allergens, however, the immune system takes a backseat to the sensory nervous system. In people who have not been exposed to an allergen before, sensory nerves react to it directly, causing itchiness and triggering local immune cells to start an allergic reaction. In people with chronic allergies, the immune system can act on these sensory nerves, leading to persistent itchiness.

Previous research from Sokol and colleagues showed that the skin’s sensory nervous system -- specifically the neurons that lead to itch -- directly detect allergens with protease activity, an enzyme-driven process shared by many allergens. When thinking about why some people are more likely to develop allergies and chronic itch symptoms than others, the researchers hypothesized that innate immune cells might be able to establish a “threshold” in sensory neurons for allergen reactivity, and that the activity of these cells might define which people are more likely to develop allergies.

The researchers performed a range of cellular analyses and genetic sequencing to identify the mechanisms involved. They found that a poorly understood immune cell type in the skin, which they called GD3 cells, produces a molecule called IL-3 in response to environmental triggers, including the microbes that normally live on the skin. IL-3 acts directly on a subset of itch-inducing sensory neurons. It primes them to respond to even low levels of protease allergens from common sources like house dust mites, environmental molds and mosquitoes, without itself causing itchiness. The researchers found that this priming involves a signaling pathway that boosts the production of certain molecules, leading to the start of an allergic reaction.

In further experiments in mouse models, they found that removing IL-3 or GD3 cells, or blocking IL-3’s downstream signaling pathways, made the mice resistant to the itch-inducing and immune-activating effects of allergens. Since the immune cell types in the mouse model are similar to those in humans, the authors conclude that these findings may explain the pathway’s role in human allergies. “Our data suggest that this pathway is also present in humans, which raises the possibility that by targeting the IL-3-mediated signaling pathway, we can generate novel therapeutics for preventing an allergy,” said Sokol. “Even more importantly, if we can determine the specific factors that activate GD3 cells and create this IL-3-mediated circuit, we might be able to intervene in those factors and not only understand allergic sensitization but prevent it.”

New research has found limits to how quickly we can scale up technology to store gigatons of carbon dioxide under the Earth’s surface.

Underground CO2 storage, a key component of carbon capture and storage (CCS) technology, is often viewed as a vital solution to combat climate change. In light of the urgency to address global warming, many potential methods of carbon capture have been meticulously investigated. While the concept of storing CO2 underground is promising, recent research from Imperial College London highlights significant limitations and challenges associated with scaling up this technology.

Current international scenarios for limiting global warming to less than 1.5 degrees Celsius by 2100 rely heavily on technologies that can remove CO2 from the Earth’s atmosphere at unprecedented rates. These strategies aim to remove between 1 and 30 gigatons of CO2 annually by 2050. However, estimates of how quickly these technologies can be deployed have been largely speculative. The Imperial study indicates that existing projections are unlikely to be feasible at the current pace of development.

“There are many factors at play in these projections, including the speed at which reservoirs can be filled as well as other geological, geographical, economic, technological, and political issues,” said Yuting Zhang, lead author from Imperial’s Department of Earth Science and Engineering. “However, more accurate models like the ones we have developed will help us understand how uncertainty in storage capacity, variations in institutional capacity across regions, and limitations to development might affect climate plans and targets set by policymakers.” The experts found that storing up to 16 gigatons of CO2 underground annually by 2050 is possible, but would require a substantial increase in storage capacity and scaling that current investment and development levels do not support.

“Although storing between six to 16 gigatons of CO2 per year to tackle climate change is technically possible, these high projections are much more uncertain than lower ones,” noted co-author Dr. Samuel Krevor, also from Imperial’s Department of Earth Science and Engineering. “This is because there are no existing plans from governments or international agreements to support such a large-scale effort. However, five gigatons of carbon going into the ground is still a major contribution to climate change mitigation.”

The team’s analysis suggests a more realistic global benchmark for underground CO2 storage might be in the range of 5-6 gigatons per year by 2050. This projection aligns with growth patterns observed in existing industries, including mining and renewable energy. By applying these historical growth patterns to CO2 storage, the researchers have developed a model that offers a more practical and reliable method for predicting how quickly carbon storage technologies can be scaled up. “Our study is the first to apply growth patterns from established industries to CO2 storage,” explained Dr. Krevor. “By using historical data and trends from other sectors, our new model provides a realistic and practical approach for setting attainable targets for carbon storage, helping policymakers to make informed decisions.”
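An S-shaped (logistic) curve is a common way to describe how established industries scale up: slow early growth, rapid expansion, then saturation. The sketch below applies that idea to annual CO2 injection. It is an illustrative assumption, not the Imperial team's model, and the ceiling, growth rate and midpoint years are hypothetical. It shows how, for the same ceiling, the timing of take-off dominates what is reachable by 2050.

```python
import math


def logistic_rate(year: int, ceiling_gt: float, growth_rate: float, midpoint_year: int) -> float:
    """Annual injection rate (Gt CO2/yr) on a logistic S-curve.

    ceiling_gt    -- long-run maximum rate the industry saturates at
    growth_rate   -- steepness of the S-curve (per year)
    midpoint_year -- year in which the rate reaches half the ceiling
    """
    return ceiling_gt / (1.0 + math.exp(-growth_rate * (year - midpoint_year)))


def cumulative_storage(start_year: int, end_year: int, ceiling_gt: float,
                       growth_rate: float, midpoint_year: int) -> float:
    """Total CO2 stored (Gt) from start_year to end_year inclusive, summed year by year."""
    return sum(
        logistic_rate(y, ceiling_gt, growth_rate, midpoint_year)
        for y in range(start_year, end_year + 1)
    )


# Hypothetical scenarios: the same 16 Gt/yr ceiling, but progressively later take-off.
for midpoint in (2040, 2050, 2060):
    rate_2050 = logistic_rate(2050, ceiling_gt=16, growth_rate=0.2, midpoint_year=midpoint)
    print(midpoint, round(rate_2050, 1))
```

With these illustrative numbers, the 2050 rate is about 14.1, 8.0 and 1.9 Gt per year for take-off midpoints of 2040, 2050 and 2060 respectively. This echoes the study's point that the pace of deployment, not just total capacity, limits what is achievable.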

The research, funded by the Engineering & Physical Sciences Research Council (EPSRC) and the Royal Academy of Engineering, highlights the importance of setting realistic goals in the global effort to combat climate change. While underground carbon storage presents a promising strategy, understanding its potential and limitations is crucial for making the best use of this technology.

New species of Antarctic dragonfish highlights its threatened ecosystem

The species highlights both the unknown biodiversity and fragile state of the Antarctic ecosystem.

A research team at the Virginia Institute of Marine Science at William & Mary has uncovered a new species of Antarctic fish, which could reshape how scientists view biodiversity in the Southern Ocean. The newly discovered species Akarotaxis gouldae, also known as the banded dragonfish, was identified during an investigation of museum-archived larvae samples. While examining the samples, the research team noticed key differences in some fish, including two distinct dark vertical bands of pigment on their bodies, a shorter snout and jaw, and a greater body depth. These observations led them to suspect the presence of a new species. To confirm their hypothesis, the researchers used mitochondrial DNA analysis and constructed a phylogenetic tree to illustrate the relationship between A. gouldae and other Antarctic dragonfish species.

The banded dragonfish is limited to a small area along the Western Antarctic Peninsula. This region is also targeted by the Antarctic krill fishery, raising concerns about the potential impact of human activities on this rare species. Because the fish produces very few offspring, it is particularly vulnerable to environmental changes.

“The discovery of this species was made possible by a combination of genetic analysis and examination of museum specimens from across the world, demonstrating the importance of both approaches to the determination of new species and of the value of specimen collections. The work also highlights how little we know of the biodiversity of Antarctica in general and the west Antarctic peninsula in particular,” says NSF Program Director Will Ambrose.

A new study shows that 57 million tons of plastic pollution are pumped out every year, and that most of it comes from the Global South.

A new study from the University of Leeds sheds light on the enormous scale of uncollected rubbish and open burning of plastic waste in the first-ever global plastics pollution inventory. Researchers used A.I. to model waste management in more than 50,000 municipalities around the world. This allowed the team to predict how much waste was generated globally and what happens to it. Their study, published in the journal Nature, calculated that a staggering 52 million tonnes of plastic products entered the environment in 2020. Laid out in a line, that would stretch around the world over 1,500 times.

It also revealed that more than two thirds of the planet’s plastic pollution comes from uncollected rubbish with almost 1.2 billion people -- 15% of the global population -- living without access to waste collection services. The findings further show that in 2020 roughly 30 million tonnes of plastics -- amounting to 57% of all plastic pollution -- was burned without any environmental controls in place, in homes, on streets and in dumpsites. Burning plastic comes with ‘substantial’ threats to human health, including neurodevelopmental, reproductive and birth defects. The researchers also identified new plastic pollution hotspots, revealing India as the biggest contributor -- rather than China as has been suggested in previous models -- followed by Nigeria and Indonesia.

Dr Costas Velis, academic on Resource Efficiency Systems from the School of Civil Engineering at Leeds, led the research. He said: “We need to start focusing much, much more on tackling open burning and uncollected waste before more lives are needlessly impacted by plastic pollution. It cannot be ‘out of sight, out of mind’.” Each year, more than 400 million tonnes of plastic is produced. Many plastic products are single-use, hard to recycle, and can stay in the environment for decades or centuries, often being fragmented into smaller items. Some plastics contain potentially harmful chemical additives which could pose a threat to human health, particularly if they are burned in the open.

According to the paper’s estimated global data for 2020, the worst-polluting countries were India, with 9.3 million tonnes (around a fifth of the total); Nigeria, with 3.5 million tonnes; and Indonesia, with 3.4 million tonnes. China, previously reported to be the worst, now ranks fourth with 2.8 million tonnes, as a result of improvements in collecting and processing waste in recent years. The UK ranked 135th, with around 4,000 tonnes per year, and littering was its biggest source. Low- and middle-income countries have much lower plastic waste generation, but a large proportion of it is either uncollected or disposed of in dumpsites. India emerges as the largest contributor because it has a large population, roughly 1.4 billion, and much of its waste isn’t collected.
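The percentages quoted in this report can be checked directly from the reported tonnages. The sketch below uses only figures from the text, plus one assumption flagged in the code: a 2020 world population of about 7.8 billion, which the article does not state.

```python
# Figures as reported for 2020, in million tonnes (Mt).
TOTAL_MT = 52.0
BURNED_MT = 30.0
TOP_COUNTRIES_MT = {"India": 9.3, "Nigeria": 3.5, "Indonesia": 3.4, "China": 2.8}

# Assumption (not stated in the article): world population in 2020 of about 7.8 billion.
WORLD_POP_BN = 7.8
NO_COLLECTION_BN = 1.2


def share_pct(part: float, whole: float) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100.0 * part / whole


print(f"Burned openly: {share_pct(BURNED_MT, TOTAL_MT):.1f}%")
for country, mt in TOP_COUNTRIES_MT.items():
    print(f"{country}: {share_pct(mt, TOTAL_MT):.1f}% of the total")
top_four = sum(TOP_COUNTRIES_MT.values())
print(f"Top four combined: {top_four:.1f} Mt ({share_pct(top_four, TOTAL_MT):.1f}%)")
print(f"People without waste collection: {share_pct(NO_COLLECTION_BN, WORLD_POP_BN):.1f}%")
```

Run as-is, it gives about 57.7% for open burning and 17.9% for India. Allowing for rounding of the inputs, these are consistent with the reported "57%" and "around a fifth". It also gives about 15.4% for people without waste collection, in line with the quoted 15%.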

The contrast between plastic waste emissions from the Global North and the Global South is stark. Despite high plastic consumption, macroplastic pollution -- pollution from plastic objects larger than 5 millimetres -- is a comparatively small issue in the Global North because waste management systems there are comprehensive. In these countries, littering is the main cause of macroplastic pollution.

Researchers say this first ever global inventory of plastic pollution provides a baseline -- comparable to those for climate change emissions -- that can be used by policymakers to tackle this looming environmental disaster. They want their work to help policymakers come up with waste management, resource recovery and wider circular economy plans, and want to see a new, ambitious, legally binding global ‘Plastics Treaty’ aimed at tackling the sources of plastic pollution.

Dr Velis said: “This is an urgent global human health issue -- an ongoing crisis: people whose waste is not collected have no option but to dump or burn it: setting the plastics on fire may seem to make them ‘disappear’, but in fact the open burning of plastic waste can lead to substantial human health damage including neurodevelopmental, reproductive and birth defects; and much wider environmental pollution dispersion.”

Theranostic techniques enable clinicians to diagnose and treat a condition at the same time, while they can also provide rapid feedback on the effectiveness of treatment. Researchers in the NORTH project are developing hybrid nanoparticles designed to combine temperature-based diagnostics with other functions such as drug delivery, as Professor Anna Kaczmarek explains.

The idea of diagnosing medical conditions on the basis of temperature variations is fairly well established, and a variety of techniques are currently used in medicine to monitor temperatures within the body, such as thermocouples. It is known for example that cancer cells have a slightly elevated temperature in comparison to healthy cells, yet the currently available monitoring techniques are highly invasive. “Putting a thermocouple into the human body involves very invasive techniques,” explains Anna Kaczmarek, Professor in the Department of Chemistry at Ghent University in Belgium. “The research community has been looking for improved, non-invasive ways of measuring temperature at the nanoscale, and luminescence thermometry has emerged as a potential route to achieving this.”

This is a topic Professor Kaczmarek is exploring as Principal Investigator of the ERC-backed NORTH project, in which she and her team are working to develop new multi-functional hybrid nanoparticles. This research centres on periodic mesoporous organosilicas (PMOs), a group of highly ordered porous materials which Professor Kaczmarek says can be used in various different ways. “There are a lot of possibilities with PMOs to create materials which are biodegradable, biocompatible, and also highly porous,” she outlines. Researchers in the project are now looking to combine these PMOs with a group of chemical elements called lanthanides, some of which are well-suited to luminescence thermometry. “They’re not affected by the environment in which they’re being used, which is beneficial for luminescence thermometry,” explains Professor Kaczmarek.

The team behind the NORTH project is investigating these lanthanides with respect to their potential in luminescence thermometry, as well as in other functions. There are 15 lanthanides, many of which have luminescence properties, and researchers have identified several which are particularly interesting for biological applications. “We’ve narrowed it down and are working with a few of these lanthanides,” says Professor Kaczmarek. Researchers typically use combinations of these elements, as this approach tends to be more sensitive than using a single lanthanide. “We work for example with combinations of holmium and ytterbium. We’re also exploring thulium-based systems, such as thulium combined with ytterbium, or also with neodymium or erbium,” outlines Professor Kaczmarek.

Scanning Transmission Electron Microscopy image with high-angle annular dark-field detector (HAADF-STEM) of hybrid PMO-inorganic thermometers developed in project NORTH. The inorganic inner cores generate the thermometry properties, whereas the cavities and porous nature of the PMO walls allow them to be loaded with an anti-cancer drug.

A lot of thermometers are currently made using lanthanides, yet these are purely inorganic particles that can’t really be loaded with drugs or used to produce an effective photodynamic therapy (PDT) agent. This is where the PMOs come into play. “The PMO not only makes the hybrid material more biocompatible, but it also adds porosity. You can then load a PDT agent or an anti-cancer drug such as doxorubicin for example,” says Professor Kaczmarek. The project team is now looking to develop effective nanoparticles using these materials; Professor Kaczmarek says size and shape are important considerations. “Larger particles can be toxic, while we also don’t want the nanoparticles to be too small, as that causes some retention problems. Around 100 nanometres would be ideal,” she continues. “We’re looking to develop spherical particles, as we also know that rod shapes can be toxic.”

The idea is that the particle would be activated with light once it reaches a specific location in the body, such as the site of a tumour for example. This would then allow researchers to monitor temperatures, and potentially release an anti-cancer drug. “We aim to use two wavelengths of light simultaneously, one of which could be used to activate the material to show a temperature read-out,” explains Professor Kaczmarek. A lot of progress has been made in this area over the course of the project, with Professor Kaczmarek and her colleagues demonstrating that their hybrid particles can combine thermometry and drug delivery. “We see that there’s some signal interference, which is related to the presence of spectral overlaps. But we also see that we can still use the nanoparticles as a thermometer and as a drug release agent simultaneously without any issues,” she continues.

Researchers are also investigating the possibility of combining thermometry with PDT, work which is still in its early stages. This is one of the main topics currently on the project’s agenda, alongside research into achieving on-demand drug delivery. “We don’t want a drug to be slowly released while the theranostic material is on the way to a specific site like a tumour, we want the drug to be released when it gets there. We’re still trying to optimise that and to implement PDT,” outlines Professor Kaczmarek. The ultimate aim is to use these nanoparticles to diagnose and treat human patients, yet Professor Kaczmarek says there is still much more work to do before they can be applied clinically. “There are still concerns around the biocompatibility and performance of these materials,” she acknowledges. “There is still a long road ahead before any thermometer materials reach clinics.”

These are issues Professor Kaczmarek plans to address in a follow up ERC- funded proof-ofconcept project called LUMITOOLS, building on the progress that has been made in NORTH. Through this project Professor Kaczmarek aims

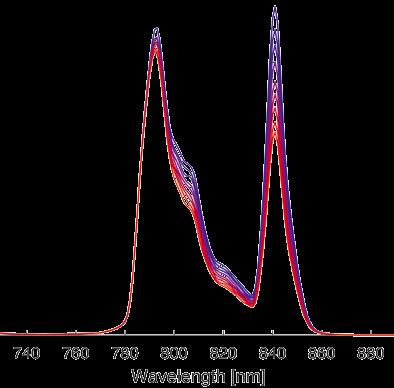

Luminescence thermometry map of newly developed near infrared thermometers. The blue line represents 20°C, the red line 60°C. The ratio of the two peaks is used to build a calibration curve.

thermometry can also be used as a feedback tool,” continues Professor Kaczmarek. “For example, if you want to combine thermometry and photothermal therapy to treat a tumour then you need feedback.”

The cancerous tissue would need to be heated to a high temperature, but it would also be extremely important to avoid over-heating nearby tissue and causing new problems. These nanoparticles could provide rapid feedback to a clinician in these kinds of circumstances,

“The research community has been looking for improved, noninvasive ways of measuring temperature at the nanoscale, and luminescence thermometry has emerged as a potential route to achieving this.”

to overcome the main concerns around these materials, and move them closer to practical application. “How can we convince the medical community to start using these materials? This in large part comes down to degradeability,” she says. If these concerns can be addressed, then these kinds of multi-functional materials could prove extremely useful for the medical community. “There are many potential applications, and not just in diagnostics -

helping guide treatment and tailor it to the needs of individual patients. “The idea is to provide feedback during therapy,” says Professor Kaczmarek. The project team is now also working towards this objective, with researchers testing combinations and improving the nanoparticles, with the long-term goal of bringing them to practical application. “We’ve developed some very interesting materials and thermometers in the project,” continues Professor Kaczmarek.
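The thermometry map caption describes ratiometric luminescence thermometry: the ratio of two emission peaks is converted into a temperature through a calibration curve. The Python sketch below illustrates that idea with synthetic peak intensities and an assumed linear calibration between 20 °C and 60 °C; the numbers, the linear model and the function names are hypothetical and do not describe the NORTH thermometers. It also computes the relative thermal sensitivity S_r, a figure of merit commonly reported for luminescent thermometers.

```python
import numpy as np

# Hypothetical calibration spectra: integrated intensities of two emission
# peaks at known temperatures (synthetic numbers, chosen only to illustrate).
TEMPS_C = np.array([20.0, 30.0, 40.0, 50.0, 60.0])
PEAK_1 = 2000.0 - 20.0 * (TEMPS_C - 20.0)  # peak that weakens on heating
PEAK_2 = np.full_like(TEMPS_C, 1000.0)     # reference peak, held constant here


def intensity_ratio(i1, i2):
    """Luminescence intensity ratio (LIR) of the two peaks."""
    return np.asarray(i1, dtype=float) / np.asarray(i2, dtype=float)


# Calibration curve: fit temperature as a linear function of the ratio.
# Linearity is an assumption that holds for this synthetic data only;
# real thermometers are often fitted with nonlinear models.
RATIOS = intensity_ratio(PEAK_1, PEAK_2)
SLOPE, INTERCEPT = np.polyfit(RATIOS, TEMPS_C, deg=1)


def temperature_from_peaks(i1, i2):
    """Convert a measured pair of peak intensities into a temperature (°C)."""
    return SLOPE * intensity_ratio(i1, i2) + INTERCEPT


def relative_sensitivity(i1, i2):
    """Relative thermal sensitivity S_r = |dLIR/dT| / LIR, in % per kelvin."""
    lir = intensity_ratio(i1, i2)
    dlir_dt = 1.0 / SLOPE  # dLIR/dT implied by the linear calibration
    return 100.0 * abs(dlir_dt) / lir


if __name__ == "__main__":
    # A reading taken inside the calibrated 20-60 °C window
    t = temperature_from_peaks(1500.0, 1000.0)
    s = relative_sensitivity(1500.0, 1000.0)
    print(f"Estimated temperature: {t:.1f} °C, S_r = {s:.2f} %/K")
```

With these synthetic values, peak intensities of 1500 and 1000 counts map to 45 °C. Using a ratio rather than a single intensity is what makes the read-out largely independent of how many particles are present or how strongly they are excited.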

NanOthermometeRs for THeranostics

Project Objectives

Developing multifunctional nanoplatforms, which combine both temperature sensing (as a diagnostic tool) and the therapy of disease (drug delivery/photodynamic therapy/photothermal therapy), as proposed in the ERC StG project NORTH, can change the way that certain diseases are treated.

Project Funding

This project is funded by the European Research Council ERC Starting Grant NanOthermometeRs for THeranostics (NORTH) under grant agreement No 945945.

Follow up project: European Research Council ERC Proof of Concept project LUMInescence ThermOmeters fOr cLinicS (LUMITOOLS) under grant agreement No 101137651.

Contact Details

Project Coordinator,

Prof. dr. Anna M. Kaczmarek

Ghent University

Department of Chemistry

Krijgslaan 281-S3, 9000 Ghent

Belgium

T: +32 9 264 48 71

E: anna.kaczmarek@ugent.be

W: https://nanosensing.ugent.be

Prof. Dr Anna M. Kaczmarek studied Chemistry at Adam Mickiewicz University in Poland and acquired a PhD at Ghent University, Belgium in the field of lanthanide materials. After several post-doctoral positions in Belgium and visits abroad she created the NanoSensing Group (Ghent University) which focuses on developing (hybrid) luminescent nanothermometers for biomedical applications. Privately she is mom to 6-month-old daughter Marie.

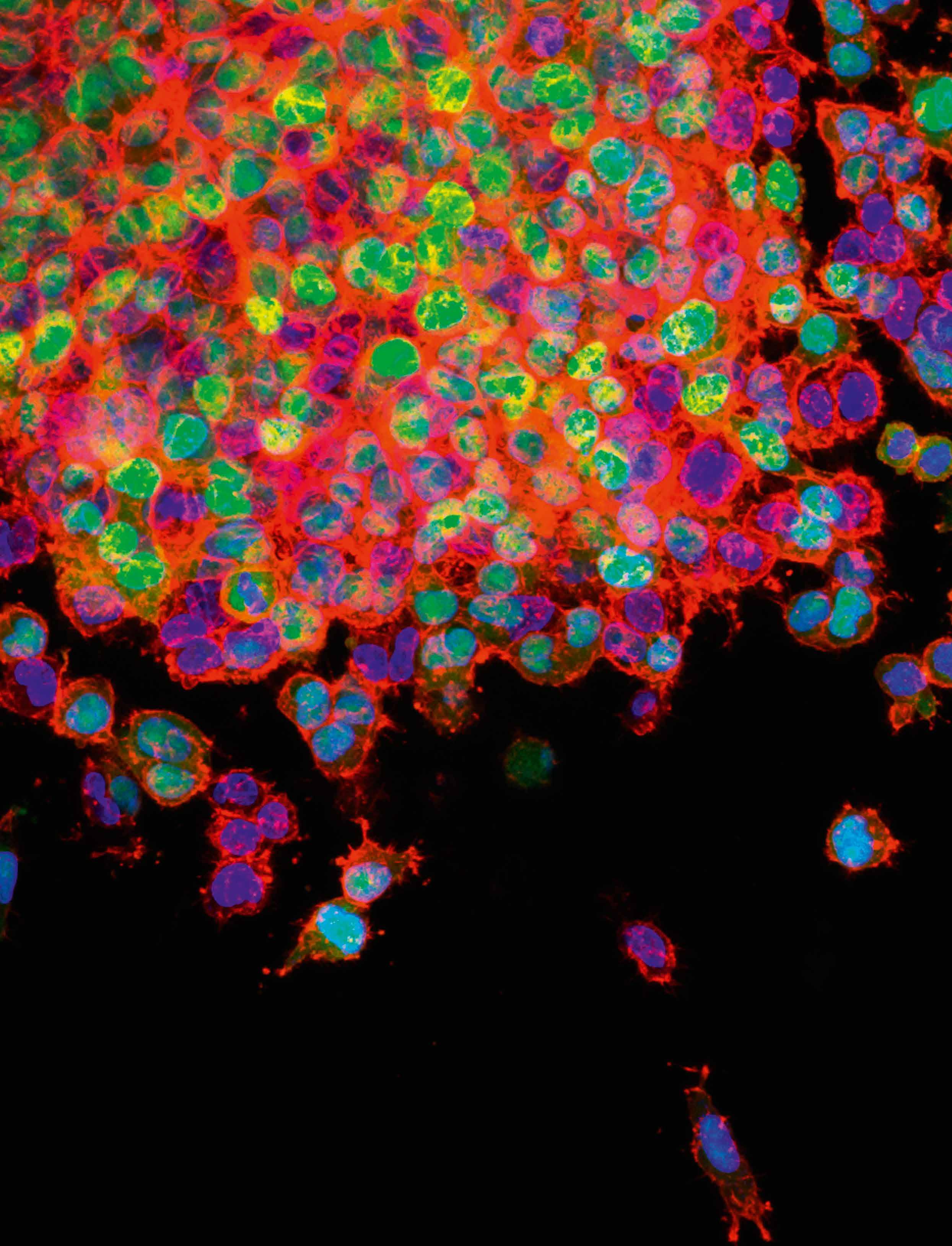

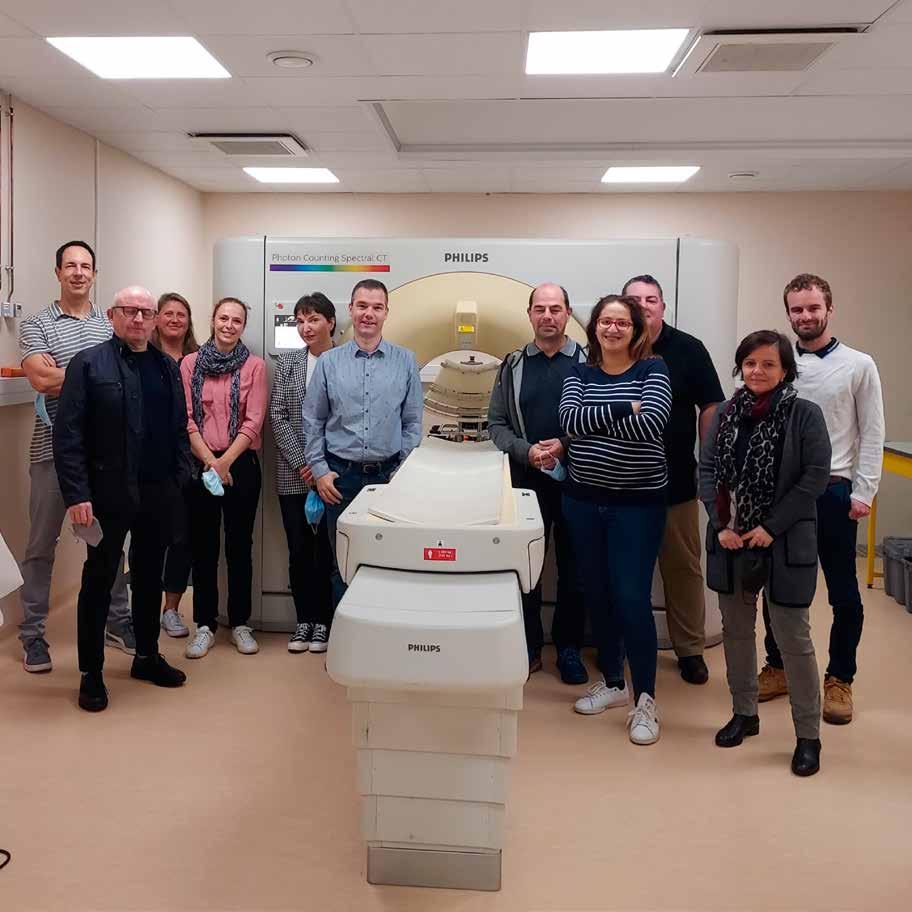

Cancer is one of the biggest causes of death in Europe, and researchers continue to work on improving diagnosis and treatment. We spoke to Dr Frédéric Lerouge about the work of the SCANnTREAT project in combining a new imaging modality with X-ray activated photodynamic therapy, which could lead to more efficient treatment of cancer.

An imaging modality called SPCCT (spectral photon counting scanner CT) could provide detailed information about a tumour (when using specific contrast media), including its volume and location, information which can then be used to guide cancer treatment. As Principal Investigator of the SCANnTREAT project, Dr Frédéric Lerouge is looking to combine this imaging modality with X-ray activated photodynamic therapy, which will provide a powerful method of treating cancer. “With photodynamic therapy patients are injected with a molecule called a photo-sensitiser, which then goes all over the body. This molecule is sensitive to light at a certain wavelength, for example red light,” he explains. “The idea is that once a patient has ingested this molecule, doctors can conduct an endoscopy for example, and effectively shine a light inside the patient. This red light activates the molecule, which is then lethal to the cancer.”

This approach is already used to treat certain types of cancer, notably oesophageal cancer, yet it has some significant limitations. In particular, photodynamic therapy is not currently effective on tumours located deep within the body, as light can’t reach the photo-sensitiser molecule to activate it, an issue that Dr Lerouge and his colleagues in the project are addressing. “We are developing a new strategy to bring light close to a photo-sensitiser molecule and activate it,” he says. This work involves the development of nanoprobes, tiny devices with a diameter of between 9 and 10 nanometres, which generate reactive oxygen species under low-energy X-ray irradiation. “When we shine x-rays on the nanoprobes they in turn emit light, which can then be re-absorbed by the photo-sensitiser,” continues Dr Lerouge. “This is related to a mechanism called scintillation.”

The ability to activate the photo-sensitiser molecule in a controlled way, and to turn it on and off at will, opens up the possibility of targeting cancer treatment more precisely than is currently possible. One approach commonly used to treat cancer is radiotherapy, where high-energy X-rays are shone directly on a tumour to destroy it, yet Dr Lerouge says this also leads to side-effects. “Radiotherapy can destroy a tumour, but it may also destroy surrounding healthy tissue, which can lead to various side effects,” he outlines. The new approach to treatment that researchers are developing in the project is designed to have fewer complications. “Indeed, with the nanoprobes designed during the project it will be possible to image a tumour with the scanner and treat it afterwards; we will therefore be able to reduce side-effects and improve treatment efficiency,” says Dr Lerouge.

A more precise and targeted method of treating cancer could also reduce the likelihood of a recurrence of the disease, which is a major concern. Even if a cancer is treated successfully with current methods, some cancerous cells may be left in the body, which leaves people vulnerable. “These cancerous cells may effectively lie dormant for a time, but they can lead to the development of a tumour in future. If all of the cancer cells can be eradicated, this could prevent the recurrence of the disease,” explains Dr Lerouge.

Photodynamic therapy is already used in cancer treatment; now Dr Lerouge is exploring the possibility of widening its use, particularly to treat pancreatic cancer, which is typically diagnosed at quite a late stage. “The aim would be to provide an effective and efficient treatment method,” he says.

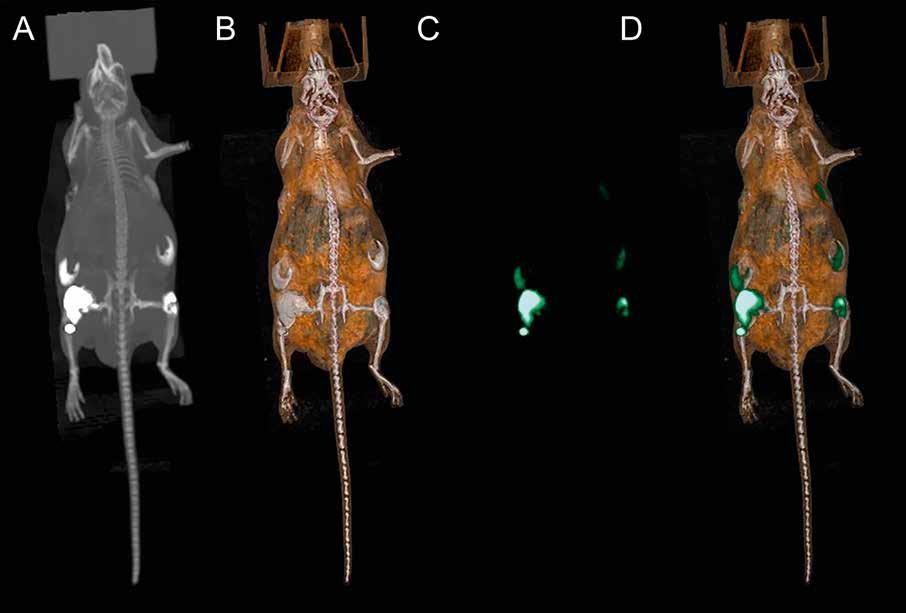

Researchers in the project are currently conducting tests on mice, which are both imaged with a conventional scanner, and also imaged with the SPCCT modality following injection with the nanoprobes. “When you use this specific imaging technique you can choose to see only the areas where the nanoprobes are located, which gives us a very accurate diagnosis,” explains Dr Lerouge.

“When you merge the two images it gives you a more precise picture of where the nanoprobes are, that can then be used to guide treatment. We’re interested not just in improving diagnosis, but also in monitoring the pathology during treatment.”
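Spectral photon-counting CT sorts detected X-ray photons into energy bins. One common way to isolate a contrast agent, sketched here under simplifying assumptions, is basis-material decomposition: heavy elements show a sharp jump in attenuation at their K-edge, so measurements in a bin just below and a bin just above that edge let tissue and probe be separated by solving a small linear system. In the Python sketch below the attenuation values are invented for illustration, water stands in for tissue, each bin is treated as monochromatic, and nothing here describes the actual SCANnTREAT or Philips reconstruction pipeline.

```python
import numpy as np

# Hypothetical effective attenuation coefficients (per cm) in two energy bins,
# one just below and one just above the K-edge of the element in the nanoprobes.
# Illustrative values only; real ones depend on the probe element, the
# scanner's bin thresholds and the X-ray spectrum.
MU = np.array([
    # water, contrast agent
    [0.20, 5.0],   # bin below the K-edge
    [0.18, 20.0],  # bin above the K-edge: the agent's attenuation jumps
])


def decompose(log_attenuation):
    """Solve A = MU @ [t_water, t_agent] for the two basis-material path lengths.

    log_attenuation: -ln(I / I0) measured in each energy bin along one ray.
    Returns the path lengths (cm) of water and contrast agent along that ray.
    """
    return np.linalg.solve(MU, np.asarray(log_attenuation, dtype=float))


if __name__ == "__main__":
    # Forward-simulate a ray through 10 cm of water and 0.01 cm of agent...
    true_lengths = np.array([10.0, 0.01])
    measured = MU @ true_lengths
    # ...then recover both path lengths from the two bin measurements.
    t_water, t_agent = decompose(measured)
    print(f"water: {t_water:.3f} cm, agent: {t_agent:.4f} cm")
```

Repeating the decomposition for every ray and reconstructing only the agent component yields an image in which, ideally, only the nanoprobes appear, which is the kind of selective view described above; merging it with a conventional anatomical image then places the probes in context.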

This will allow clinicians to assess the effectiveness of treatment, and if necessary adapt it to reflect the degree of progress that has been made. If everything is going well, and the patient is responding positively to treatment, then it might be possible to reduce the dose of x-rays to be delivered for example, or the opposite might be the case. “If we see that things aren’t going our way we can choose to increase the x-rays a little bit. The main consideration is always the wellbeing of the patient,” stresses Dr Lerouge. The information gained in the process can then be very useful in guiding the treatment of other patients in future. “We want to provide this information in databases to help improve treatment as much as possible, from the very early stages when the pathology is initially diagnosed,” continues Dr Lerouge.

Photodynamic therapy triggered by spectral scanner CT: an efficient tool for cancer treatment

Project Objectives

The project aims to combine two cutting-edge technologies for the treatment of cancer: spectral photon counting scanner CT (SPCCT), a ground-breaking imaging modality, and a new treatment known as X-ray activated photodynamic therapy (X-PDT). The perfect match between these two technologies is ensured by specifically designed probes acting both as contrast media and therapeutic agents.

Project Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 899549.

Project Consortium

• The University Claude Bernard Lyon 1 (UCBL) - Chemistry Laboratory

• The University Claude Bernard Lyon 1 (UCBL) - CREATIS

• Inserm UA7 unit Synchrotron Radiation for Biomedicine (STROBE)

• Inserm UA8 Unit “Radiations: Defense, Health and Environment”

• Maastricht University (UM) - D-Lab

• Philips Medical Systems Technologies, Ltd., Israel (PMSTL)

• Lyon Ingénierie Projets https://www.scanntreat.eu/governance.html

Contact Details

Project Coordinator, Dr Frederic Lerouge

Chemistry Laboratory

UMR 5182 ENS/CNRS/University Claude Bernard Lyon 1

9 Rue du Vercors

69007 Lyon

T: +33 6 14 14 50 86

E: Frederic.lerouge@univ-lyon1.fr

W: https://www.scanntreat.eu/

Dr Frederic Lerouge is assistant professor at the Claude Bernard Lyon 1 University, a position he has held since 2007. His main research interests focus on the design of inorganic nanomaterials and their surface modifications for applications in health and environment.

A major next step for Dr Lerouge and his colleagues will be to test the technology on a broader range of small animal models, gaining deeper insights into its overall effectiveness and identifying any ways in which it could be improved. This work is still at a relatively early stage, however, and Dr Lerouge plans to establish a successor project beyond the conclusion of SCANnTREAT later this summer, in which he intends to pursue further research. “We need to do some more work and gather more data. We also have ideas about how to develop the technology, which will evolve depending on the results of our research, in terms of the quality of diagnosis and efficiency of treatment,” he continues. “We are looking to improve the technology, and to ensure that we will be able to actually use this system.”

The long-term vision is to use this technology to treat cancer in human patients, and with healthcare budgets under strain, cost-effectiveness is an important consideration. The SCANnTREAT system itself is expensive, the result of dedicated work in the laboratory, yet Dr Lerouge believes it can lead to financial benefits over the long term by reducing the need for further action following treatment, which is currently a major cost in cancer care. “Some patients are treated for cancer, but they remain in a fragile state and they are not restored to full health. They may then need further treatment for other conditions,” he explains. “The idea is that once this treatment has been administered it is over, and the patient can then get back to normal life. This is one of the issues that people who work on cancer treatment look at very closely.”

The project’s research represents an important contribution in this respect, and in future Dr Lerouge plans to build on the progress made in SCANnTREAT, and move the new treatment closer to practical application.

“We are looking to establish a new consortium, to push this technology forward and to test it on different cancer models,” he says. Researchers hope to probe the limits of this technology, and also to look at how it can be improved further. “We intend to look at what we can achieve in terms of the efficiency of treatment, and also at what kinds of cancer we could treat with this approach.”

The ultimate goal is to develop a new method of treating cancer that can be administered to human patients. “We hope to treat human patients with this technology within the next five years or so,” says Dr Lerouge.

What drives the progression of neurodegenerative diseases like ALS, Alzheimer’s, and MS?

Unraveling the mechanisms of neuroinflammation is at the heart of Prof. Bob Harris’s research at the Karolinska Institutet. His team is pioneering new therapeutic approaches by targeting microglial activity and harnessing advanced stem cell techniques. Their goal is to transform treatment strategies for these debilitating conditions.

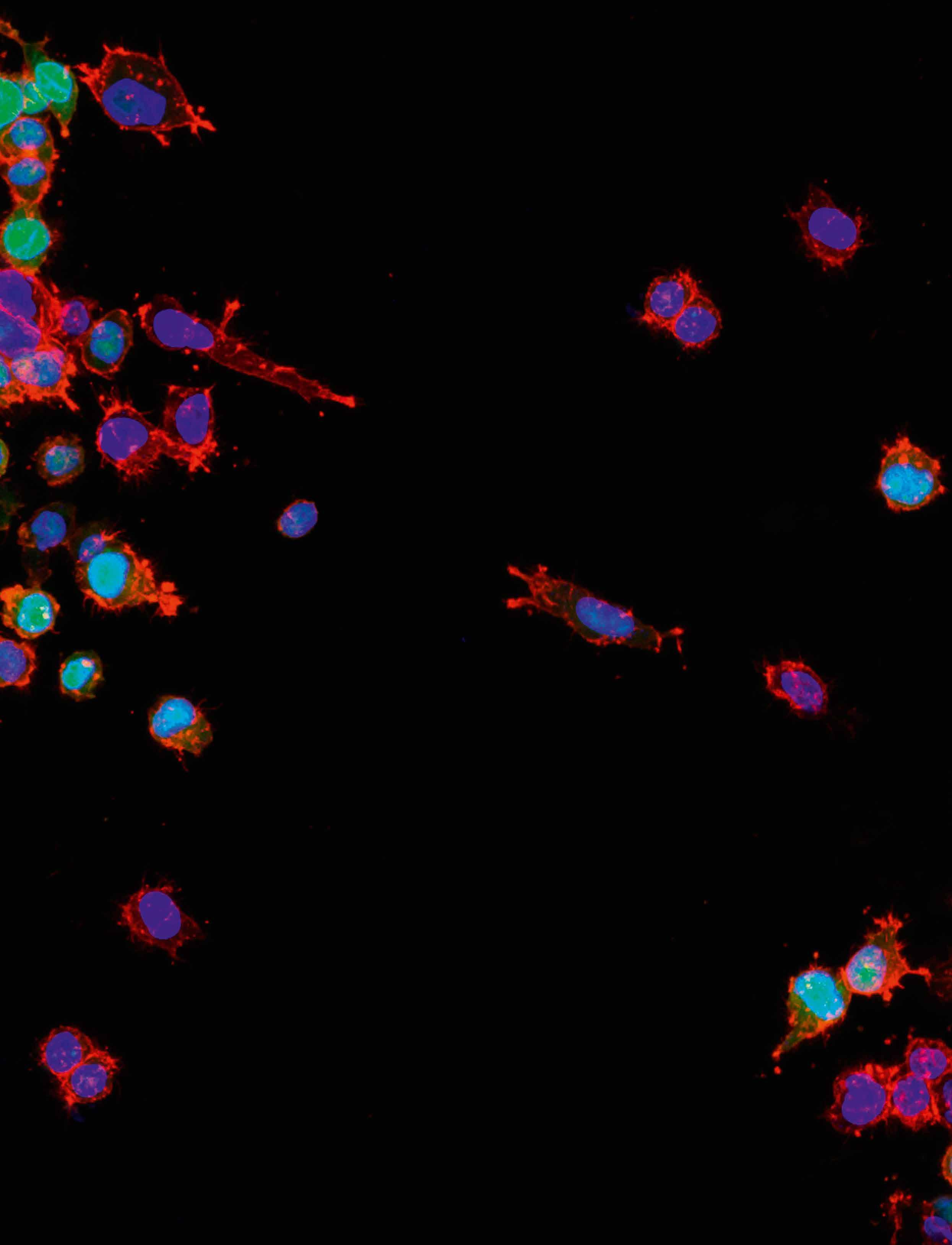

Neurodegenerative diseases like Amyotrophic Lateral Sclerosis (ALS), Alzheimer’s disease (AD), and chronic Multiple Sclerosis (MS) are marked by a common culprit: neuroinflammation. This inflammation is driven by the chronic activation of microglia, the brain’s resident immune cells, and begins as a protective mechanism. However, when prolonged, it is a cause of destructive cytotoxicity and neurodegeneration. We spoke with Prof. Bob Harris, the leader of the research group Applied Immunology and Immunotherapy at the Centre for Molecular Medicine, Karolinska Institutet. He and his research group are focused on understanding the underlying mechanisms of chronic neurodegenerative diseases and translating this knowledge into practical treatment solutions. Given that no effective treatments currently exist for neurodegenerative diseases, their objective is to develop novel therapeutic platforms to address this significant unmet medical need.

Normally, microglia play a role in maintaining brain health. They release factors that promote neuronal survival and restoration of neuronal function after injury. But perpetual activation prevents them from executing their physiological and beneficial functions. Chronically activated microglia release harmful cytokines, oxygen radicals, and other molecules that impair neuronal function and threaten cell survival. Additionally, the essential phagocytic function of microglia (their ability to clear out cellular waste and misfolded proteins) is compromised in neurodegenerative diseases. This failure results in the buildup of toxic aggregates in the central nervous system (CNS), which in turn triggers further microglial activation, creating a vicious cycle of inflammation and damage. For example, in ALS, overactive microglia gather around dying motor neurons, and their numbers directly correlate with the extent of neuron damage.

Similarly, in MS, activated microglia are found at sites of demyelination, interacting with T and B cells through factors released behind a closed blood-brain barrier (BBB). Scientific evidence shows that activated microglia and monocytes can have both beneficial and detrimental effects at different disease stages. This means that approaches that modulate microglial activity can potentially be used in the treatment of a wide range of neurodegenerative diseases. “Strategies that modulate microglial activity and clearance function are thus promising for treatment of a range of neurodegenerative diseases,” explains Prof. Harris.

Immunotherapy, which involves modulating immune cells to reduce or stop inflammatory disease processes, has revolutionized the treatment of certain cancers and autoimmune diseases.

However, there is currently no effective immunotherapy for neurodegenerative diseases. According to Prof. Harris, before a tissue can be healed, the inflammatory process driving the disease must be halted.

“It is impossible to rebuild a house that is still burning, so first the fire must be put out – only then will rebuilding be efficient. The same principle applies to immunotherapy – but in a perfect world the therapeutic intervention would not only reduce the neuroinflammation but also activate the regenerative processes,” he explains.

Unruly cells can be trained to behave

“If we think about how macrophages and microglia can be activated, it is like a Yin-Yang, with one activation state, represented by white, being helpful, while the other, represented by black, is harmful. In disease situations there is a dominance of the harmful cells over the helpful cells,” explains Bob Harris. His research team has developed a microglia/macrophage cell therapy protocol in which large numbers of beneficial cells are activated by exposure to a specific combination of cytokines. This activation successfully induced an immunosuppressive M2 phenotype. By transferring M2 microglia and macrophages into mouse models of Type 1 diabetes and Multiple Sclerosis, the team prevented or significantly reduced disease severity. In their MS model, known as experimental autoimmune encephalomyelitis (EAE), the team transferred M2 microglia intranasally into mice. This resulted in a significant reduction in inflammatory responses and less demyelination in the CNS. Similarly, the team conducted a cell transfer of M2 macrophages into a mouse model of Type 1 diabetes. Remarkably, a single transfer protected over 80% of treated mice from developing diabetes for at least three months. This was achieved even when the transfer was conducted just before the clinical onset of the disease. The team found that harmful cells recovered from the blood of patients with multiple sclerosis can be retrained using their activation protocol. Once retrained, these cells were able to modulate the function of other harmful cells in cell assays. “Our activation protocol (IL-10/IL-4/TGFβ) has been successfully used by other researchers in other disease settings and to reduce rejection of transplants, clearly demonstrating its widespread applicability,” says Bob Harris.

Though DNA origami nanobiologics are a relatively new technology, they have become a prime focus in biomedical research due to their capability to deliver pharmaceuticals with precision, coupled with the natural biocompatibility of DNA. Additionally, the ability to engineer these structures into complex, combinational biomolecular formations enhances their appeal. The DNA origami technique uses hundreds of short ‘staple’ DNA strands to fold a long single-stranded DNA scaffold into specific three-dimensional nanoscale shapes, akin to the art of folding paper into delicate origami shapes. The development of scaffold DNA routing algorithms now allows precise control of nanoscale structures through an automated design process. Modern DNA origami designs, which use open wireframe structures, offer the most flexibility. This technology can incorporate existing FDA-approved drugs, repurposing them for conditions like ALS. Prof. Harris and his collaborators developed a DNA origami construct in which DNA was made into cylindrical rods, which were loaded with a repurposed cancer drug (topotecan). The surface of the construct was modified to express a carbohydrate molecule that would specifically bind to receptors on microglia. “It’s like packing a present in a parcel box, wrapping it in paper, and adding an address and postage. We are able to specifically deliver immunomodulatory drugs to the harmful microglia, forcing them to be less harmful,” says Bob Harris. The MS symptoms were significantly improved through a single treatment. In their research, they demonstrated that topoisomerase 1 (TOP1) inhibitors, like topotecan, reduce inflammatory responses in microglia and reduce neuroinflammation in vivo, providing a promising therapeutic strategy for neuroinflammatory diseases. Their lab continues to develop a range of DNA origami constructs, each loaded with different therapeutic cargoes aimed at modulating specific functions of microglia and macrophages.
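The DNA origami folding principle rests on base pairing: each short staple strand is chosen to be complementary to particular stretches of the long scaffold, so it binds there and holds the shape together. The Python toy below shows only that sequence logic, using a hypothetical 20-nucleotide scaffold fragment and hypothetical helper names; real designs route the scaffold through a 3D shape and use staples that cross between helices, which dedicated design software handles.

```python
# Toy illustration of the origami principle: staple strands are designed as
# reverse complements of scaffold segments, so each staple base-pairs with,
# and pins down, its stretch of scaffold. Real staples span several segments
# to create crossovers between helices; this sketch ignores geometry.

COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq: str) -> str:
    """Return the Watson-Crick reverse complement of a DNA sequence (5'->3')."""
    return seq.upper().translate(COMPLEMENT)[::-1]


def design_staples(scaffold: str, segment_len: int) -> list[str]:
    """Split the scaffold into fixed-length segments and return one staple each."""
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    return [
        reverse_complement(scaffold[i:i + segment_len])
        for i in range(0, len(scaffold), segment_len)
    ]


def binds(staple: str, target: str) -> bool:
    """True if the staple is perfectly complementary to the target segment."""
    return reverse_complement(staple) == target.upper()


if __name__ == "__main__":
    scaffold = "ATGCGTACGTTAGCCATGGA"  # hypothetical 20-nt scaffold fragment
    for staple in design_staples(scaffold, segment_len=8):
        print(staple)
```

A natural scaffold such as the one typically used in origami is thousands of nucleotides long, which is why a single structure needs hundreds of staples.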

Amniotic epithelial cells (AECs) are a type of stem cell derived from human placenta that exhibit strong immunomodulatory properties, contributing to the safe development of the baby. They have the ability to differentiate into a variety of cell types, which makes them very versatile. The clinical use of human amniotic membranes has been recognized for over a century, with the first application in treating burned and ulcerated skin reported in 1910. Instead of using the entire amniotic membrane, longer-lasting and enhanced effects have been achieved by using isolated AECs. At Karolinska Institutet, a protocol has been developed to recover AECs from the innermost layer of the amniotic sac in discarded placental tissue, and these cells display potent immunomodulatory and immunosuppressive properties. After AEC transplantation, there is no host rejection in either mice or humans. These cells have shown disease-modifying properties in various conditions, including ischemia, bronchopulmonary dysplasia, and liver diseases, primarily by reducing inflammatory damage. AECs are currently undergoing testing in several clinical trials. In a mouse model of Alzheimer’s disease, intrathecal administration of AECs significantly reduced amyloid plaque burden in the brain, possibly through enhanced microglial phagocytosis.

The Harris lab is currently testing protocols for the adoptive transfer of immunomodulatory AECs in different experimental models of neurodegeneration to stimulate anti-inflammatory and restorative responses in the degenerating CNS. Their unpublished results indicate that a single injection of cells could significantly reduce the severity of an experimental model of multiple sclerosis. “AECs are a special type of stem cell with particular properties that make them excellent candidates for cell therapy in a wide range of neurodegenerative diseases,” says Bob Harris.

As neurodegenerative diseases involve a multitude of cell types, including both immune cells and CNS cells, multiple immunotherapeutic approaches will likely be necessary to target these different cell types. There is also significant variability in disease progression among patients with the same neurodegenerative condition. “Having developed these three separate therapeutic principles, we are now testing them in different combinations in our experimental neurodegenerative disease models. There will likely be an optimal combination that allows different targeting of microglia at different phases of the disease process,” explains Bob Harris.

The Harris lab has a clear vision to Make A Difference, not only by increasing scientific knowledge within the field of neurodegenerative diseases but also by improving the life quality of patients with these incurable diseases. “Meeting neurodegenerative disease patients, their carers, and families is a humbling experience, but it gives us such energy to conduct our research,” he concludes.

Project Objectives

There are currently no effective treatments for any neurodegenerative disease, and this remains a major unmet medical need. The origin and progression of many neurodegenerative diseases are still not clearly understood, and basic research is required to provide a platform for the development of novel and effective therapies. The objective of our research programme is to address this unmet need by developing multiple therapeutic platforms.

Project Funding

This project is funded by the Swedish Medical Research Council, Alltid Litt Sterkere, Neurofonden, Ulla-Carin Lindquist Stiftelse for ALS Research, Karolinska Institutet Doctoral funding.

Project Collaborators

• Prof Björn Högberg, Karolinska Institutet

• Assoc Prof Roberto Gramignoli, Karolinska Institutet

Contact Details

Professor Bob Harris

CMM L8:04, Karolinska University Hospital Visionsgatan 18, S-171 76 Stockholm, Sweden

E: robert.harris@ki.se

W: https://ki.se/en/research/groups/immunotherapy-robert-harris-research-group

W: https://www.cmm.ki.se/research-groupsteams/robert-harris-group-2/

Bob Harris is Professor of Immunotherapy in Neurological Diseases and leads the research group Applied Immunology and Immunotherapy at the Centre for Molecular Medicine, a designated translational medicine center at Karolinska Institutet. The group conducts a strongly interconnected research program aimed at translating knowledge gained from basic science projects into applications in a clinical setting, with a focus on understanding why chronic neurodegenerative diseases of the nervous system occur, and then devising ways to prevent or treat them.

We spoke with Prof. Dr. Mathias Hornef about the ERC-funded EarlyLife project, which investigates how early-life infections impact the gut epithelium, microbiome, and immune system development in neonates. Using advanced techniques and mouse models, the project aims to uncover the influence of these infections on long-term health and disease susceptibility.

The immediate postnatal period is critical for the development and maturation of the immune system. A timed succession of non-redundant phases allows the establishment of mucosal host-microbial homeostasis after birth. The enteric microbiota, mucosal immune system, and epithelial barrier are key factors that together facilitate a balanced relationship between the host and microbes in the intestine. The enteric microbiota consists of a diverse community of mainly bacterial microorganisms that colonize the gut. The mucosal immune system represents a defense mechanism that is able to recognize and respond to pathogenic threats while maintaining tolerance to commensal bacteria. The epithelial barrier, formed by a variety of highly specialized cells lining the gut surface, facilitates nutrient digestion and absorption but restricts commensal microorganisms to the gut lumen and prevents enteropathogens from entering sterile host tissues. Early-life infections significantly affect all three components. This may reduce the host’s fitness and influence the outcome of the infection, but it may also have long-term consequences.

The ERC-funded EarlyLife project investigates the dynamic changes in the gut epithelium of neonates during early-life infections. The study seeks to create a comprehensive map of postnatal epithelial cell type differentiation and analyze the impact of early-life bacterial, viral, and parasitic infections on cell differentiation and function. The main long-term goal is to understand how early-life infections influence the development of the epithelium, the immune system, and the establishment of the microbiome, and thereby the risk for inflammatory, immune-mediated, and metabolic diseases later in life.

The gut epithelium forms the luminal surface of the intestine and comes into direct contact with the enteric microbiome and potentially pathogenic microorganisms. It is composed of a variety of different cell types with highly specialized functions and plays a critical role in maintaining a balance between the host and its microbiota. Traditionally, the gut epithelium was considered to be largely a passive physical barrier between the sterile host tissue and the microbially colonized gut lumen. However, research over recent decades has shown that epithelial cells actively participate in establishing host-microbial homeostasis. Additionally, these cells trigger an early immune response and communicate intensively with the underlying immune system when the host is exposed to enteropathogenic microorganisms. This interaction is particularly significant in neonates, who transition from a sterile in utero environment to a microbiota-rich external world immediately after birth and mature their tissue and immune system during the postnatal period. Upon birth, neonates undergo rapid colonization by environmental and maternal bacteria. This sudden exposure requires a delicate balance between immune tolerance of beneficial commensal bacteria and activation against potential pathogenic threats. The gut epithelium plays a vital role in this process.

Colonization happens very quickly, reaching adult levels in the small intestine within hours to days. The baby’s body must support and tolerate this rapid bacterial growth. At the same time, neonates are most susceptible to infections during early life, so they also have to react to infections and try to defeat pathogens. All this happens while they are severely limited in energy supply, because the maternal supply via the umbilical cord is suddenly interrupted and they need to establish uptake of breast milk and enteral feeding. The severity of this energetic bottleneck is illustrated by the fact that neonates often lose weight during the early transition period. This temporary energy deficit may, in turn, explain why neonates react differently to infection.

“Neonates are very different from adults. They are not just small adults; they have unique physiological and immunological needs. Our group works on understanding these differences, particularly in adaptive immune development, infectious diseases, and microbiota establishment, using neonatal infection models in mice. This type of research is difficult in humans because we can’t get tissue samples from healthy newborns,” explains Prof. Hornef. His research team aims to understand how neonates differ from adults in terms of energy constraints, tissue maturation, and the balance between immune tolerance and antimicrobial host responses.

“We are addressing the link between infection and the gut epithelium. The gut epithelium consists of several cell types, such as goblet cells, Paneth cells, stem cells, tuft cells, M cells, enteroendocrine cells, and enteroabsorptive cells, that fulfill different functions. It has a complex structure with crypts and villi and undergoes continuous proliferation. In neonates, many aspects are different. For example, newborn mice lack certain cell types, like Paneth cells, which produce antimicrobial peptides crucial for maintaining the barrier against microbial exposure. Understanding these differences and how infections impact them is critical,” says Prof. Hornef.

The EarlyLife project employs advanced techniques such as single-cell RNA sequencing, spatial transcriptomics, and epigenetic profiling to study the gut epithelium. Using mouse models, Prof. Hornef and his team have discovered that the emergence of M cells, which transfer particulate luminal antigen to the Peyer’s patch, the site of immune activation, determines the maturation of the early adaptive immune system. Interestingly, certain microbial stimuli present during infection can accelerate the emergence of M cells and thus lead to earlier immune maturation and immune responses. This may be advantageous during infection but may still come with unwanted consequences with respect to the control of inappropriate responses. The team also found that the fate of intestinal epithelial cells invaded by the enteropathogen Salmonella differs markedly between the neonatal and the adult host. Whereas the adult host releases invaded epithelial cells into the gut lumen in a process called exfoliation to prevent pathogen translocation, infected epithelial cells in the neonate are engulfed by neighboring cells and subjected to lysosomal degradation.

Prof. Hornef and his research team are also exploring innate immune recognition in the gut epithelium. Innate immune receptors in the gut help recognize and respond to microbial threats. When these receptors bind to microbes, they trigger the release of immune mediators that recruit immune cells and produce antimicrobial molecules to eliminate invaders. However, uncontrolled activation can lead to inflammation and tissue damage. In the lab, the researchers are studying the molecular basis and regulation of these receptors in intestinal epithelial cells.

The main question for the EarlyLife project is how early-life infections affect long-term health. Preliminary findings suggest that neonatal infections can have lasting effects on the gut epithelium, microbiome composition, and immune function. This concept is supported by observations in humans: children in developing countries with frequent infections often exhibit stunted growth and altered immune responses. “Infections in early life not only impact immediate health but can also prime the immune system in ways that affect long-term disease susceptibility. Understanding these processes is crucial for developing targeted interventions,” concludes Prof. Hornef. By translating EarlyLife’s findings from mouse models to human health, and identifying key molecular and cellular changes in the neonatal gut epithelium during infections, researchers hope to develop new strategies to prevent and treat infections in newborns and reduce childhood mortality worldwide. This includes potential therapies designed to modulate the gut microbiome and enhance immune responses in vulnerable neonates. Additionally, the project explores the concept of neonatal “priming,” where early-life exposures shape immune and epithelial cell functions. These insights could lead to novel approaches for preventing chronic diseases that originate from dysregulated maturation of the early immune system.

Gut epithelial dynamics and function at the nexus of early life infection and long-term health

Project Objectives

Infections of the gastrointestinal tract cause significant childhood mortality and morbidity worldwide. EarlyLife aims to map postnatal intestinal epithelial cell and tissue differentiation. It studies age-dependent differences that explain the enhanced susceptibility of the neonatal host to infection and investigates the impact of early-life infection on long-term gut health. Using advanced analytical methods and innovative models, it explores how early-life infections influence not only enteric function in the neonatal host but also immune development and long-term disease susceptibility, focusing on the gut microbiota, mucosal immune system, and epithelial barrier.

Project Funding

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (Grant agreement No. 101019157).

Project Collaborators

ERC Work Program

• Martin von Bergen, Helmholtz Centre for Environmental Research, Germany

• Thomas Clavel, RWTH Aachen University, Germany

• Ivan Costa, RWTH Aachen University, Germany

Research Group

• Ivan Costa

• Thomas Clavel

• Oliver Pabst

• Michael Hensel

• Martin von Bergen

• Geraldine Zimmer-Bensch

• Jochen Hühn

• Lars Küpfer

• Frank Tacke

• Tim Hand

• Jens Walter

• Marc Burian

Contact Details

Dr. rer. nat. Christin Meier

Administrative Coordinator

Universitätsklinikum Aachen (UKA)

Pauwelsstraße 30, D 52074 Aachen

T: +49 241 80-37694

E: cmeier@ukaachen.de

W: https://www.ukaachen.de/

Mathias Hornef studied medicine in Tübingen, Lübeck, New York, and Lausanne. He has held positions at the Max von Pettenkofer Institute in Munich, the Karolinska Institute in Stockholm, the University of Freiburg and Hannover Medical School. As director of the Institute of Medical Microbiology at University Hospital RWTH Aachen, he now focuses his research on the interactions between bacteria, viruses, and parasites, the intestinal epithelium and the mucosal immune system in the neonatal host.

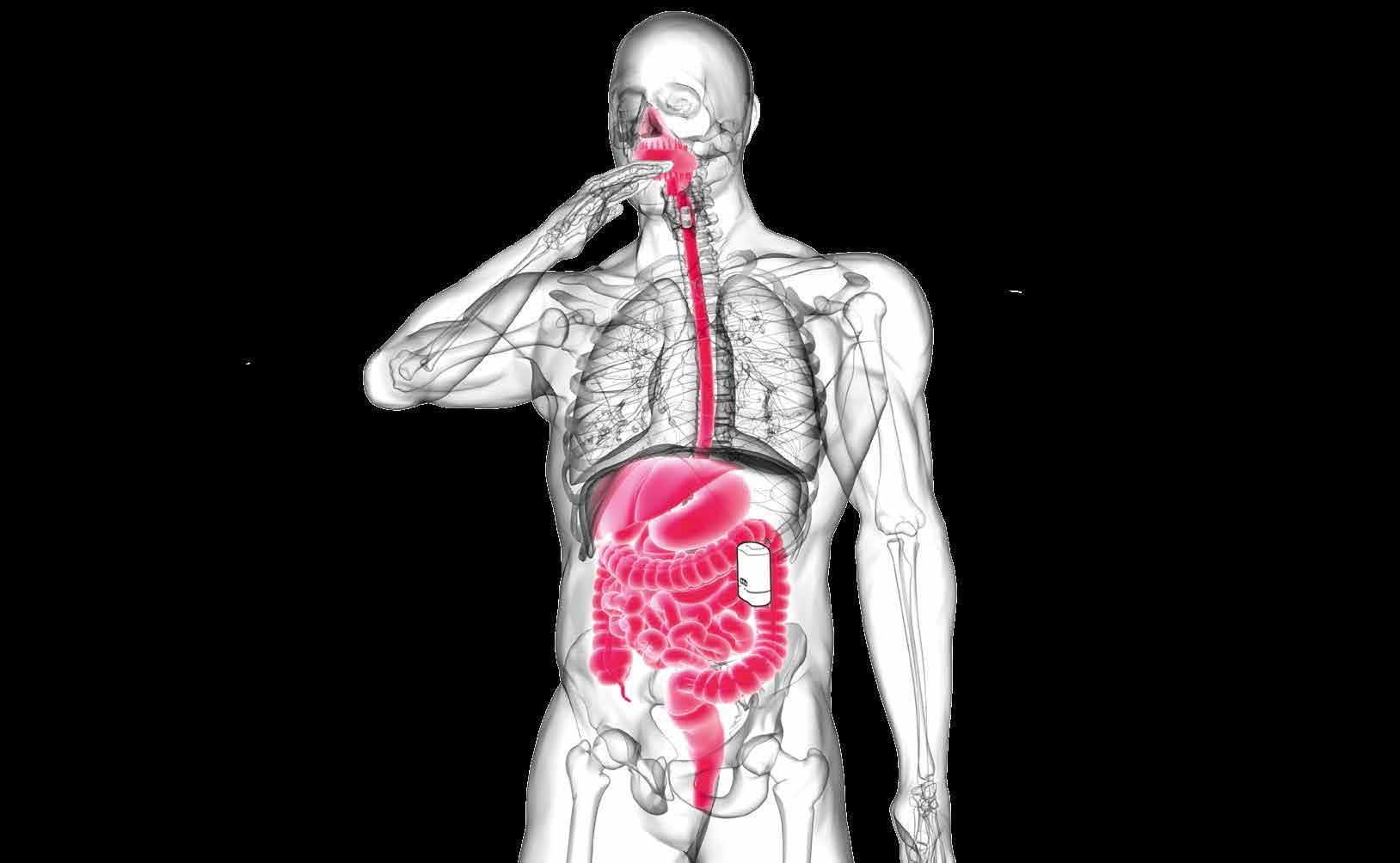

Type-1 diabetes is a chronic, incurable condition which still requires careful management and regular injections of insulin. Researchers in the FORGETDIABETES project are working to develop a fully implantable bionic invisible pancreas, which will relieve the burden associated with managing type-1 diabetes, as Professor Claudio Cobelli explains.

An autoimmune condition which leaves the pancreas unable to secrete insulin, type-1 diabetes affects millions of people across the world, and the number is projected to rise further over the coming decades. Managing the disease is quite an onerous task, as patients typically need to monitor their own carbohydrate consumption and inject themselves with insulin from an exogenous source throughout the day. “Managing the condition can impose a heavy burden on patients,” acknowledges Claudio Cobelli, Professor of Bioengineering at the University of Padova in Italy. The situation has improved in recent years, with technological progress helping to relieve this burden on patients and improve their quality of life. “One important development has been the ability to continuously monitor glucose concentration. This has been a big step forward,” outlines Professor Cobelli. “A second revolution has been in the ability to inject insulin subcutaneously using various technologies, such as insulin pens.”

Concept overview of the FORGETDIABETES project.

The device is implanted in the jejunum and includes the magnetic system that attracts the ingestible capsule and the system that transfers insulin (i) from the capsule to the reservoir and (ii) from the reservoir to the body. Electronic components and sensors allow the device to run automatically and communicate with the patient.

“I’ve been working to develop an intraperitoneal control algorithm, which involves looking at how the glucose signal can be used to predict the amount of insulin to be infused. The control algorithm is tailored to the patient; it’s an adaptive control algorithm.”

A further step forward has been the development of so-called hybrid closed loop systems, in which a sensor monitors an individual’s glucose levels, then an algorithm calculates the amount of insulin that should be injected subcutaneously by a pump. While hybrid closed loop systems are a major advance in the field, Professor Cobelli says they still have some limitations. “Subcutaneous insulin infusion is very practical, but it isn’t entirely optimal, as insulin takes time to get into the blood. So people still have to be careful with meal planning and exercise,” he explains. As Principal Investigator of the EU-backed FORGETDIABETES project, Professor Cobelli is part of a team working to develop a bionic invisible