Design Studies

Title

Machinable inspiration: using deep convolutional autoencoders and evolutionary algorithms for content-based image retrieval to support early stages of the design process

Authors

(1,2) Kapsalis, E.; (1) Jaeger, N.; (3) Kapsalis, R.

(1): University of Nottingham, UK

(2): University of Derby, UK

(3): code4thought.eu

Abstract

Design inspiration and formation are crucial aspects in shaping innovative and functional solutions, as they lay the foundation for creative exploration and the development of ideas that address complex challenges in various domains. Precedent design projects, which can be found in large databases, serve as invaluable resources for designers seeking inspiration and guidance, offering a wealth of knowledge and examples that can inform and enhance their own creative process. In this paper, we propose a Content-Based Image Retrieval (CBIR) system for technical drawings, specifically designed to support the early stages of the design process. The primary aim of our system is to enable efficient retrieval of drawings from large databases based on visual similarity to basic forms generated by the designer. Our approach leverages a deep Convolutional Autoencoder (CAE) to recognise 2D drawings, which can be trained on extensive datasets. Additionally, we employ a suite of Interactive Evolutionary Algorithms (IEAs), i.e., Biomorpher, to facilitate the automatic generation of design alternatives and enhance the retrieval process. The retrieval itself is performed using the k-Nearest Neighbours (KNN) algorithm. We present a comprehensive case study to demonstrate the real-world applicability of the proposed CBIR system, showcasing its potential to streamline the design process and improve design efficiency. Our results indicate that the data-driven techniques employed by our system enable rapid identification and retrieval of relevant technical drawings, thereby supporting designers in exploring various design alternatives and fostering creativity. By harnessing the power of deep learning and evolutionary algorithms, our CBIR system offers a novel and effective approach to managing and navigating the complex landscape of technical drawings in the digital age.

Keywords: deep neural networks, autoencoders, evolutionary algorithms, content-based image retrieval, form-finding, design inspiration

1. Introduction

The significance of digital design is becoming increasingly evident, as numerous digital applications, techniques, and tools have been developed to support different stages of the design process. For example, digital tools can be powerful aids for designers seeking expertise and creative inspiration. In the ideation phase of design, most designers seek assistance in precedent projects, related cases and scenarios, and connected experiences to resolve design problems (Goldschmidt, 2001; Leclercq & Heylighen, 2002). Digital applications, such as social media (e.g., Pinterest) and online databases (e.g., the RIBA collection), can help designers access precedent projects or explore innovative design solutions. In recent years, the increasingly available design databases and rapidly advancing data science and artificial intelligence (AI) technologies have enabled new data-driven methods and tools that support design-by-analogy (Goel, 2015; Kittur, 2019; Koch, 2019; Zhang, 2020).

1.1. Machine learning for content-based image retrieval

Machine learning algorithms build data models to teach computers how to make predictions or take decisions so as to improve performance on some sets of tasks, such as speech or image recognition (Mitchell, 1997; Chanal, 2021). Over the years, machine learning scientists have explored different methods to train algorithms to execute various tasks. Artificial neural networks are perhaps one of the most discussed methods recently. To carry the artificial/human intelligence analogy one step further, an artificial neural network is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain (Yang, 2014). Deep neural networks, or deep learning networks, have several hidden layers with millions of artificial neurons linked together (Cai, 2022). A deep learning network derives data analysis features by itself and learns more independently (Schmidhuber, 2015).

Pattern recognition is an area in visual imagery analysis that deep neural networks have greatly contributed to during recent years (Kim, 2010; Abiodun, 2019). Pattern recognition is a data analysis method that automatically recognises patterns and regularities in data (Davies, 2018). A pattern refers to a set of items, objects, shapes, images, events, cases, situations, features or abstractions where facets of a set are alike in an unequivocal sense (Abiodun, 2019). In that context, deep neural networks are used to recognise unfamiliar objects and shapes – even if they are partially obscured – and classify those into categories (Howard, 2007). Pattern recognition via deep learning has applications in diverse fields, with content-based image retrieval being a notable example (Gkelios, 2021; Ong, 2017; Wang, 2018). Content-based image retrieval is a method for searching and retrieving images from large unlabelled datasets (Simran, 2021). This process is based on image features (i.e., visual patterns) rather than metadata such as keywords, tags, or descriptions associated with the image (Dubey, 2022). Unsupervised deep learning algorithms use advanced analysis techniques, such as histograms of oriented gradients, to define and recognise shapes, and have proven to be robust with content-based retrieval (Simran, 2021).

1.2. Evolutionary computation

Apart from machine learning – a process where algorithmic systems eventually become able to learn – the exponential development of AI has also nurtured algorithmic ability to evolve. Evolutionary computation is a sub-field of AI and involves the use of algorithms that can evolve with the availability of more data and experience (Baeck, 1997; Fogel, 2000). Computational models using evolutionary algorithms can program themselves by taking inspiration from Darwinian evolution theory and simulating biological breeding (Slowik, 2020). Evolutionary computation has been used to solve problems that have too many variables for traditional algorithms, such as optimisation problems (Chanal, 2021). In an optimisation context, computation begins with a possible set of solutions to a problem, then evaluates and modifies the possible set to arrive at the most suitable output (Deb, 2001). Over recent years, several approaches have been tested and applied to evolutionary computation, such as genetic algorithms, genetic programming, differential evolution, evolution strategy, and evolutionary programming (Sen, 2015; Slowik, 2020).

Genetic algorithms were first developed by Holland (1992) and have since been applied to various optimisation problems with a high degree of success (Norouzi, 2014; Yongbin, 2021; Sang, 2021). In genetic algorithms, the evolution usually starts from a population of randomly generated individuals, and is an iterative process, with the population in each iteration called a generation (McCall, 2015). In each generation, the fitness of every individual in the population is evaluated; the fitness is usually the value of the objective criterion in the optimisation problem being solved (ibid.). The new generation of candidate solutions is then used in the next iteration of the algorithm (Mitchell, 1998). In this way, the process keeps “evolving” better solutions over generations, until it reaches a stopping condition (ibid.).
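The generational loop described above can be sketched in a few lines. This is a minimal illustration rather than any cited implementation: the bit-string encoding, truncation selection, one-point crossover, elitism, and all parameter values are assumptions chosen for brevity.

```python
import random


def evolve(fitness, n_genes=8, pop_size=30, max_generations=50,
           mutation_rate=0.1, target=None, seed=0):
    """Maximise `fitness` over bit-string individuals (illustrative sketch)."""
    rng = random.Random(seed)
    # Generation 0: a population of randomly generated individuals.
    population = [[rng.randint(0, 1) for _ in range(n_genes)]
                  for _ in range(pop_size)]
    for _ in range(max_generations):
        # Evaluate the fitness of every individual in the current generation.
        ranked = sorted(population, key=fitness, reverse=True)
        best = ranked[0]
        # Stopping condition: an individual has reached the target fitness.
        if target is not None and fitness(best) >= target:
            break
        # Assumed selection scheme: the fitter half become parents.
        parents = ranked[:pop_size // 2]
        children = []
        while len(children) < pop_size - 1:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n_genes)        # one-point crossover
            child = a[:cut] + b[cut:]
            # Flip each gene with probability `mutation_rate`.
            child = [1 - g if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        # Elitism: the best individual survives unchanged.
        population = [best] + children
    return max(population, key=fitness)


# Toy objective: maximise the number of ones in the bit string.
best_individual = evolve(sum, n_genes=8, target=8)
```

Because the best individual is always carried over, the best fitness in this sketch cannot decrease from one generation to the next.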

While most genetic algorithms evolve through iterative criterion-based evaluations, an “interactive” subset of this family – i.e., interactive evolutionary algorithms – uses human evaluation to arrive at an optimal solution (Eiben, 2015). Interactive evolutionary algorithms have been efficiently employed in cases where the form of the fitness function is not known (García-Valdez, 2013; Dooyum, 2018) or the result of optimisation should fit a particular user preference (Kadziński, 2020; Gong, 2014). Generally, conventional genetic algorithms identify the suitability criteria and then automatically search for the optimum solution, while interactive approaches use input from users as subjective evaluation criteria. In a design context, interactive evolutionary algorithms are ideal for form-finding tasks wherein geometries need to be optimised against limited design constraints or the only objective is the generation of multiple design scenarios without any optimisation criteria present.

1.3. Theoretical background

Many researchers have recently proposed algorithm-driven pattern recognition models to retrieve precedent projects from databases. For example, Ahmed et al. (2014) designed a rule-based algorithmic system that would enable the user to easily access knowledge from precedent projects. The user searches for semantically similar floorplans just by drawing parts of the new plan (ibid.). Essentially, an automatic floorplan recognition system analyses the floor plans and finally retrieves the corresponding semantic information. The retrieved structural and semantic information can be saved in a repository for later access during retrieval (ibid.).

Evangelou et al. (2021) introduced a computational method for the recognition of structural elements in architectural floor plans. The proposed method requires minimal user interaction, in the form of a single, user-defined query region, and is capable of effectively analysing floor plans in order to recognise different types of structural elements in various notation styles (ibid.). Their model is not dependent on learning from labelled samples, and there is no subsequent need for using annotations in order to retrieve precedent drawings from large datasets (ibid.). Recent work from Kalsekar et al. (2022) proposes a deep learning-based model, the Rotation Invariant Siamese Convolution Network (RISC-Net), which is able to retrieve similar floorplan images from a dataset, even in the presence of rotation. Their approach captures several hidden attributes required to identify a floor layout through the application of a neural network and successfully retrieves them from a large database by comparing the embeddings through another neural network (ibid.).

A growing body of research has emerged in recent years, showcasing the remarkable potential of genetic algorithms for supporting the design generation process. For instance, Mueller & Ochsendorf (2015) utilised a set of interactive evolutionary algorithms to explore design alternatives while including the designer’s preferences. Their approach could improve upon existing methods by giving the designer control over the diversity of designs considered, the rate of convergence, and the multi-objective trade-off between formulated quantitative goals, e.g., structural volumes, and unformulated qualitative goals, such as architectural value. Saldana-Ochoa et al. (2020) presented a computer-aided design framework for the generation of non-standard structural forms using interactive evolutionary algorithms. Their framework relied on the implementation of a series of operations (generation, clustering, evaluation, selection, and regeneration) to create multiple design options and to navigate in the design space according to objective and subjective criteria defined by the designer (ibid.). In this interactive manner, the machine can learn the nonlinear correlation between the design inputs and the design outputs preferred by the human designer and generate new options by itself (ibid.). In a recent work, Uusitalo et al. (2022) explored the use of interactive evolutionary algorithms (IEAs) in the context of product design. The authors implemented the interactive evolutionary algorithms in a way that enabled the algorithms to learn from the designer's preferences and produce new design suggestions based on these preferences (ibid.). The algorithmic ability to quickly generate new design alternatives allowed researchers to explore a wider range of possibilities, ultimately leading to more innovative and well-informed design decisions while fostering design inspiration and overcoming fixation (ibid.).

1.4. Purpose

So far, there has been very little research utilising deep learning techniques for retrieval of design artefacts other than floorplans. A notable example derives from the work of Zhang and Jin (2021) who demonstrated how analogical design can be supported by sketch-based retrieval of visually similar examples through a deep learning model. Elsewhere, Jiang et al. (2021) used a convolutional neural network-based method to perform image-based search using visual similarity in images from patent documents. However, the majority of undertaken studies performed searches based on textual information (e.g., image labels and annotations) rather than schematic representations (Jiang, 2021). Similarly, most visual architectural databases remain quite modest in terms of indexing and often fail to exploit the support advanced indexing structures offer to query refinement, relevance feedback and other critical aspects of retrieval (King, 2021). In conjunction with that, describing architectural work just using textual information is not sufficient, as verbal descriptions are subjective and often imprecise (Ahmed, 2014). Such limitations are significant in the case of digital images but even more restricting when applied to CAD representations (Koutroumanis, 2007).

In this paper, we present a content-based image retrieval (CBIR) system for technical drawings based on data-driven techniques. The proposed system can extract features representing technical drawings based on their schematic depiction without the need to provide any type of textual information, such as labels or annotations. Our intention is to create a tool for designers who wish to boost their creativity in the early design stages and draw inspiration by browsing populous databases of precedent projects. The main function of this tool is to assist with selecting referenced drawings from databases according to their visual similarity to basic forms, which designers have previously created as rudimentary solutions for a given design problem. The paper also includes a case study to validate the applicability of the proposed CBIR system in real-world projects.

2. Methods used

The CBIR system draws on the main benefits of deep learning techniques and interactive evolutionary algorithms, as explained in Sections 1.1 and 1.2. Specifically, it harnesses the robustness of the deep neural networks to manipulate unlabelled data. It also utilises a set of interactive evolutionary algorithms to generate a diverse array of design alternatives according to the designer’s preferences.

In particular, drawings from an external database are analysed in a custom-made deep convolutional autoencoder (CAE), which we developed to extract a vector comprised of latent features from each drawing. These vectors are subsequently stored in a reference library. We then utilise a suite of interactive evolutionary algorithms (IEA) to generate another set of drawings, which incorporate the designer’s preferences for a given design problem and include a wide range of basic forms, representing a variety of rough design scenarios for a prospective solution. At the next stage, the generated drawings are submitted as queries to the CAE, which then extracts a vector of latent features from each query drawing. A k-Nearest Neighbours (KNN) search is then employed to evaluate the similarity score between the vector of the query drawing and the vectors in the reference library. The vectors in the reference library that have the highest similarity score compared to the vector of the query drawing are identified. Finally, the reference drawings that correspond to the identified reference vectors are retrieved and displayed. We repeat the process for all remaining drawings in the query dataset. Figure 1 visualises the process described above.

2.1. Autoencoder architecture

Autoencoders were first introduced in the 1980s by Hinton and the PDP group (Rumelhart, 1986) and have been part of the classical landscape of neural networks for many years (Bourland, 1988; Hinton, 1993; Kamimura, 1995). More recently, autoencoders have taken centre stage in deep learning conversations because they can learn with great efficiency and execute reconstructions relatively fast (Baldi, 2011; Bank, 2020). Autoencoding has become a frequently employed deep learning technique with respect to content-based image retrieval (Daoud, 2019; Krizhevsky, 2012; Rupapara, 2020).

In the case of images, autoencoders reconstruct the input image x by using a condensed representation, called code h. The network may be viewed as consisting of two main parts: an encoding function h=f(x) and a decoding function r=g(h) (Goodfellow, 2016). Internally, it has a hidden set of layers – called latent-space representation – that is used as an intermediary between the input and output layers. The encoding and decoding functions are most usually implemented through artificial neural networks.

Neural networks store 2D images in four-dimensional (4D) tensors of shape (samples, height, width, channels). For instance, a batch of 128 grayscale images of size 256 × 256 could thus be stored in a tensor of shape (128, 256, 256, 1). The encoder f(x) receives an input image x and reduces its dimensionality by transforming it into the condensed representation h such that the dimensionality of h is smaller than that of x. Then, the decoder g(h) analyses the condensed representation (h) to reconstruct a copy of the image (r). Hence, the reconstructed copy of the image is also expressed as r=g(f(x)). The output of the autoencoder will not be exactly the same as the input, as some information is lost along the way. The structure and hyperparameters of the autoencoder are usually optimized to minimize this loss of information. The term “hyperparameter” is used to specify the parameters regulating the design of the model (e.g., learning rate and regularization), and they are different from the more fundamental parameters representing the weights of connections in the neural network (Aggarwal, 2018).

For this study, we propose a convolutional autoencoder (CAE) that enables the extraction of a condensed vector of 512 latent features for representing architectural or industrial drawings. A major advantage of CAEs is their capability to preserve the spatial locality of the image, which is essential for retaining geometries and topologies of drawings. The structure of the proposed CAE is shown in Figure 2.

In the architecture illustrated in Figure 2, the input drawing is encoded to generate the latent code h, which corresponds to a vector that includes 512 latent features. The encoding function is implemented through a stack of convolutional and max-pooling layers. Convolutional layers consist of a set of learnable filters. Every filter is small in size (width × height) and can learn local patterns in small 2D windows. The size of these windows was selected to be 3 × 3 (width × height), as it is a commonly used size in literature that also fits well in our task (Chollet, 2017). These filters are activated when they see some type of visual feature (e.g., an edge of some orientation). All convolutional layers use the rectified linear unit (ReLU) activation function (Figure 3a), apart from the last Conv2D layer, which uses the non-linear sigmoid function (Figure 3b).

The output feature map might differ in spatial dimensions from the input. To ensure that input and output have the same spatial dimensions, a technique called “padding” is used. In padding, rows and columns are added to the input feature map. These rows and columns are filled with zero values in our case (zero-padding). Grayscale images are encoded as integers in a range between 0 and 255. In neural networks, data should be normalized as “it isn’t safe to feed into a neural network data that takes relatively large values” (Chollet, 2017); for this reason, each value was divided by 255 and image data was normalized to a 0-1 range.

As previously mentioned, the output data of the autoencoder will not be exactly the same as the input data, but the range of values and the shape of the data must be the same. Thus, the non-linear sigmoid function (Figure 3b) is used in the last layer, as this function guarantees that the output of that layer will always be in [0,1]. Max-pooling layers are used for spatial down-sampling, by taking the maximum value over an input window for each channel of the input. The window size was set to 2 × 2.
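The pre-processing and layer operations described above (scaling to [0,1], zero-padding, 2 × 2 max-pooling, and the sigmoid output) can be illustrated on a small array. The following is a NumPy sketch for intuition only; the helper names and the 8 × 8 toy batch are assumptions, and in practice these operations are provided by the deep learning framework.

```python
import numpy as np


def normalise(batch_uint8):
    """Scale 8-bit grayscale pixels from 0-255 to the range [0, 1]."""
    return batch_uint8.astype("float32") / 255.0


def zero_pad(feature_map, pad=1):
    """Add `pad` rows and columns of zeros around a 2D feature map."""
    return np.pad(feature_map, pad_width=pad, mode="constant",
                  constant_values=0)


def max_pool_2x2(feature_map):
    """Down-sample a 2D map by taking the maximum of each 2x2 window."""
    h, w = feature_map.shape
    trimmed = feature_map[:h - h % 2, :w - w % 2]   # drop any odd edge
    return trimmed.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))


def sigmoid(x):
    """Squash any real value into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


# Toy batch with shape (samples, height, width, channels).
rng = np.random.default_rng(0)
batch = rng.integers(0, 256, size=(4, 8, 8, 1), dtype=np.uint8)

x = normalise(batch)
single = x[0, :, :, 0]
padded = zero_pad(single)      # 8x8 -> 10x10, so a 3x3 filter yields 8x8
pooled = max_pool_2x2(single)  # 8x8 -> 4x4
```

With one row and column of zeros on each side, a 3 × 3 window can be centred on every original pixel, which is why the convolution output keeps the input's spatial size.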

A flattening layer is then applied to convert the two-dimensional (2D) feature maps that are generated by the stacked layers to a vector of 512 latent features. A fully connected layer is next applied to generate the condensed, latent representation of the drawing (h) that includes 512 latent features. During the decoding function, the feature vector (h) is decoded to reconstruct the drawing (r), by applying the reverse of the operations that were employed to map (x) to (h). These reverse operations include a fully connected layer that increases the size of the feature vector, a reshape layer that converts the feature vector (h) into 2D feature maps, and a stack of convolutional and up-sampling layers. Up-sampling layers are used to increase the dimensionality of the reconstructed drawing (r).
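To make the layer sequence concrete, the following is a minimal Keras sketch of a convolutional autoencoder with the ingredients named above (3 × 3 convolutions with zero-padding, 2 × 2 max-pooling, a flatten and fully connected layer producing 512 latent features, and a mirrored decoder ending in a sigmoid convolution). It is not the architecture of Figure 2: the number of convolutional blocks, the filter counts, the linear latent layer, the Adam optimiser, and the function names are all assumptions made for illustration.

```python
def encoded_map_size(input_size, n_pools):
    """Side length of a square feature map after `n_pools` 2x2 poolings."""
    return input_size // (2 ** n_pools)


def build_cae(input_size=512, filters=(16, 8, 8, 8), latent_dim=512):
    """Build an (autoencoder, encoder) pair; all sizes are assumed values.

    `input_size` should be divisible by 2 ** len(filters) so that the
    reconstruction has the same shape as the input.
    """
    # Imported here so the shape helper above works without TensorFlow.
    from tensorflow import keras
    from tensorflow.keras import layers

    inputs = keras.Input(shape=(input_size, input_size, 1))
    x = inputs
    # Encoder: stacked 3x3 convolutions (zero-padded) and 2x2 max-pooling.
    for f in filters:
        x = layers.Conv2D(f, (3, 3), activation="relu", padding="same")(x)
        x = layers.MaxPooling2D((2, 2))(x)
    side = encoded_map_size(input_size, len(filters))
    x = layers.Flatten()(x)
    latent = layers.Dense(latent_dim, name="latent")(x)   # code h

    # Decoder: reverse the encoder operations to rebuild the drawing r.
    x = layers.Dense(side * side * filters[-1], activation="relu")(latent)
    x = layers.Reshape((side, side, filters[-1]))(x)
    for f in reversed(filters):
        x = layers.Conv2D(f, (3, 3), activation="relu", padding="same")(x)
        x = layers.UpSampling2D((2, 2))(x)
    # Last Conv2D uses a sigmoid so every output pixel lies in [0, 1].
    outputs = layers.Conv2D(1, (3, 3), activation="sigmoid",
                            padding="same")(x)

    autoencoder = keras.Model(inputs, outputs)
    encoder = keras.Model(inputs, latent)
    autoencoder.compile(optimizer="adam", loss="binary_crossentropy")
    return autoencoder, encoder
```

After training the autoencoder on drawings scaled to [0,1], calling `encoder.predict(images)` would return one 512-dimensional latent vector per drawing, which is the representation used for retrieval.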

The proposed convolutional autoencoder (CAE) was implemented in Keras, a deep learning Application Programming Interface¹ (API) running on top of TensorFlow², which is a machine learning platform.

2.1.1. Training of the CAE model

To train the CAE for retrieving images from large databases, we recommend that a splitting technique is used, as often mentioned in literature – see for instance (Joseph, 2022; Nguyen et al., 2021). Data splitting is a commonly used approach for model validation, where developers split a given dataset into two disjoint sets: training and testing (Joseph, 2022). A commonly used ratio is 80:20, which means 80% of the data is for training and 20% for testing. During our trials, we merged the reference drawings and query drawings, and used 80% of this joint set to train the CAE model.
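An 80:20 split of the merged set can be sketched as follows. This is a minimal illustration: the file names, the random shuffle, and the fixed seed are assumptions and are not taken from the study.

```python
import random


def split_dataset(items, train_fraction=0.8, seed=42):
    """Shuffle `items` and split them into disjoint train and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for reference and query drawings.
reference = [f"ref_{i}.png" for i in range(80)]
query = [f"query_{i}.png" for i in range(20)]

# Merge both sets, then keep 80% for training and 20% for testing.
train, test = split_dataset(reference + query)
```

Shuffling before the cut keeps both sets representative of the merged data, and fixing the seed makes the split reproducible.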

We utilise a loss function to estimate how well the CAE models the training data. The goal is to minimize the loss between each input drawing and its reconstructed output. The selection of this function depends on the learning task type. In this case, the autoencoder receives a drawing image as an input and produces a drawing image with the same size as output. Each image is scaled to the range [0,1] (by dividing each pixel by 255) so that the reconstruction of every pixel can be treated as a binary classification problem. In binary classification problems, a common method to check the loss is binary cross entropy (Equation 1).

¹ Application Programming Interface (API) is a software interface that allows two applications to interact with each other without any user intervention. https://www.guru99.com/what-is-api.html

² https://www.tensorflow.org/about/bib

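$$L = -\frac{1}{N}\sum_{i=1}^{N}\left[\,y_i \log \hat{y}_i + (1 - y_i)\log(1 - \hat{y}_i)\,\right]$$

Equation 1: Binary cross entropy, where:

N = number of outputs,

y = expected output,

ŷ = predicted output.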
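As a numerical illustration of the binary cross entropy, the sketch below evaluates the standard formula on pixel values in [0,1]. The clipping constant `eps` is an assumption added to avoid taking the logarithm of zero; it is not part of the definition.

```python
import numpy as np


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross entropy over all N outputs (pixels)."""
    y_true = np.asarray(y_true, dtype="float64").ravel()
    y_pred = np.asarray(y_pred, dtype="float64").ravel()
    # Clip predictions away from 0 and 1 so that log() stays finite.
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    losses = y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)
    return float(-np.mean(losses))


expected = np.array([[1.0, 0.0], [0.0, 1.0]])     # toy 2x2 "drawing"
good = np.array([[0.9, 0.1], [0.2, 0.8]])         # close reconstruction
poor = np.array([[0.4, 0.6], [0.7, 0.3]])         # distant reconstruction

loss_good = binary_cross_entropy(expected, good)
loss_poor = binary_cross_entropy(expected, poor)
```

A reconstruction that is closer to the expected pixels yields a lower loss, which is the quantity minimised during training.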

2.1.2. Similarity measure and image retrieval

The k-Nearest Neighbours (KNN) algorithm has been employed to evaluate the level of similarity between query and reference drawings. KNN is a non-parametric learning algorithm, which uses nearby points to generate predictions (Burkov, 2019). In this case, KNN is used to calculate the proximity of the latent feature vector (Xq) of a query drawing to those (Xr) of reference drawings, which have already been stored in the reference library. KNN then returns the k (e.g., k = 1, 2, 3, ...) nearest cases, which correspond to the reference vectors closest to the given query vector. In this case, the closeness between the query (Xq) and reference vectors (Xr) is given by the negative cosine similarity function, which is defined as:

$$d(X_q, X_r) = -\frac{X_q \cdot X_r}{\lVert X_q \rVert \, \lVert X_r \rVert}$$

Equation 2: Negative cosine similarity

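According to Equation 2, if the angle between two vectors is 0 degrees, then the two vectors point in the same direction, and the cosine similarity is equal to 1. If the vectors are orthogonal, the cosine similarity is 0. For vectors pointing in opposite directions, the cosine similarity is -1. The negative cosine similarity therefore takes its lowest value, -1, for the most similar vectors, so the nearest neighbours of a query are the reference vectors that minimise it.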
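The retrieval step can be sketched with NumPy as a brute-force nearest-neighbour search under negative cosine similarity. This is an illustrative stand-in rather than the implementation used in the study; the function names and toy vectors are assumptions, and zero-length vectors are not handled. For comparison, scikit-learn's `NearestNeighbors` with `metric="cosine"` uses the cosine distance (1 minus the cosine similarity), which produces the same ranking.

```python
import numpy as np


def cosine_similarity_matrix(queries, references):
    """Cosine similarity between every query row and every reference row."""
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    r = references / np.linalg.norm(references, axis=1, keepdims=True)
    return q @ r.T


def retrieve(queries, references, k=3):
    """Indices and scores of the k nearest reference vectors per query.

    Scores are negative cosine similarities, so lower means more similar.
    """
    neg_sim = -cosine_similarity_matrix(queries, references)
    order = np.argsort(neg_sim, axis=1)[:, :k]
    scores = np.take_along_axis(neg_sim, order, axis=1)
    return order, scores


# Toy 2D "latent vectors"; real vectors would have 512 features.
reference_vectors = np.array([[1.0, 0.0],    # same direction as the query
                              [0.0, 1.0],    # orthogonal to the query
                              [-1.0, 0.0],   # opposite to the query
                              [1.0, 1.0]])   # 45 degrees from the query
query_vectors = np.array([[2.0, 0.0]])

indices, scores = retrieve(query_vectors, reference_vectors, k=2)
```

Because each vector is divided by its norm, the ranking depends only on direction and not on vector length.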

The k-Nearest Neighbours (KNN) algorithm was implemented using the Scikit-learn (also known as Sklearn) library. Scikit-learn is a Python module integrating a wide range of state-of-the-art machine learning algorithms for medium-scale supervised and unsupervised problems (Pedregosa et al., 2012).

2.1.3. Performance testing

Performance testing refers to metrics that assess how DNN tools (e.g., a DNN CAE) and models (e.g., a CBIR system) operate through a training task. The most common approach to improving the performance of CAEs is hyperparameter tuning (or hyperparameter optimization), which refers to finding the optimal combination of hyperparameters that maximizes the performance of the model (Bergstra & Bengio, 2012; Snoek et al., 2012). Performance enhancement of the CAE is beyond the scope of this paper. Thus, no hyperparameter tuning was applied to the model. The performance of the constructed CBIR system was evaluated based on the precision criterion (Daoud et al., 2019; Alsmadi, 2017; Wan et al., 2014); namely, the ratio of the number of retrieved (i.e., reference) images that are relevant to the given query image to the total number of retrieved (i.e., reference) images. Equation 3 provides the mathematical function for the precision metric.

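$$\text{Precision} = \frac{\text{number of retrieved images relevant to the query}}{\text{total number of retrieved images}}$$

Equation 3: Precision metric for CBIR system performance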
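The precision criterion is straightforward to compute once the retrieved images have been judged for relevance. The following minimal sketch assumes that relevance is available as a set of identifiers; the identifiers shown are hypothetical.

```python
def precision(retrieved, relevant):
    """Fraction of retrieved items that are relevant to the query."""
    retrieved = list(retrieved)
    if not retrieved:
        return 0.0          # convention assumed here for an empty result
    relevant = set(relevant)
    hits = sum(1 for item in retrieved if item in relevant)
    return hits / len(retrieved)


# Hypothetical identifiers: four retrieved drawings, two judged relevant.
retrieved_ids = ["ref_012", "ref_845", "ref_233", "ref_090"]
relevant_ids = {"ref_012", "ref_233", "ref_777"}

score = precision(retrieved_ids, relevant_ids)
```

With k retrieved drawings per query, this is the precision at k; averaging it over all query drawings gives a single figure for the system.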

2.2. Interactive Evolutionary Algorithms for generating design alternatives

To construct a query dataset, we use the Biomorpher software, which runs inside Grasshopper3D³, to generate a population of basic design forms. Biomorpher employs interactive evolutionary algorithms (IEA) to allow users to create and evolve numerous designs, based on performance parameters (e.g., structural, functional, or geometric criteria), and interactively explore previous or new solutions until they select the most suitable one (Harding, 2018). To this end, the user initially constructs a crude 3D model (phenotype) based on a series of mathematical rules (genomes), which refer to topological parameters. Genomes can refer to dimensional characteristics (e.g., height, width) of the phenotype or relationships between its constituent geometries. Each genome is defined by min and max bounds, and is graphically expressed with a slider component, through which the user can manipulate its value. Adjusting the value of any of these rules gives a different design outcome, which can also be visualised in the Rhinoceros viewport.

The next step is to connect the phenotype and the genomes into the Biomorpher component.

At this step, Biomorpher essentially generates a user-defined number of design solutions (genotypes) by randomly tweaking the given genomes and calculating potential solutions. The user can also set the mutation rate, or how likely the genes of a design are to change. After each generation, Biomorpher uses the K-means algorithm to group similar genotypes into twelve clusters and returns a tree structure of type {X;Y} where X is the cluster id and Y is the specific design number (Figure 4a); the number of designs may vary from cluster to cluster. It also visualises the most representative (closest to the cluster centroid in parameter space) design of each cluster in a 3D orientable view (Figure 4b).
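The clustering step can be illustrated with a plain K-means implementation that groups genome vectors and then picks, for each cluster, the design closest to the centroid. This is a NumPy sketch for intuition and does not reproduce Biomorpher's internals: the random initialisation, iteration limit, function names, and the 60 random genomes with six parameters are assumptions, and unlike the behaviour described above, this sketch does not guarantee that every cluster is non-empty.

```python
import numpy as np


def assign(points, centroids):
    """Index of the nearest centroid (Euclidean distance) for each point."""
    diffs = points[:, None, :] - centroids[None, :, :]
    return np.linalg.norm(diffs, axis=2).argmin(axis=1)


def kmeans(points, k, n_iter=100, seed=0):
    """Plain Lloyd's algorithm; returns (labels, centroids)."""
    rng = np.random.default_rng(seed)
    start = rng.choice(len(points), size=k, replace=False)
    centroids = points[start].astype(float)
    for _ in range(n_iter):
        labels = assign(points, centroids)
        updated = centroids.copy()
        for c in range(k):
            members = points[labels == c]
            if len(members):                 # keep old centroid if empty
                updated[c] = members.mean(axis=0)
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return assign(points, centroids), centroids


def representatives(points, labels, centroids):
    """For each non-empty cluster, the design closest to its centroid."""
    reps = {}
    for c in range(len(centroids)):
        members = np.flatnonzero(labels == c)
        if members.size == 0:
            continue
        dist = np.linalg.norm(points[members] - centroids[c], axis=1)
        reps[c] = int(members[dist.argmin()])
    return reps


# Hypothetical population: 60 designs, 6 parameters normalised to [0, 1].
genomes = np.random.default_rng(1).random((60, 6))
labels, centroids = kmeans(genomes, k=12)
reps = representatives(genomes, labels, centroids)
```

Here `reps` maps each cluster id to the index of its representative design, mirroring the {X;Y} structure of cluster id and design number.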

After the user has investigated the twelve designs, one (or more) needs to be selected to proceed with the next generation. The selected designs can therefore be thought of as the parent phenotypes, whose genes are used in the evolutionary process. The next generation is created based on mutations of each of the parents' genomes. The parent selection can be visual-based or guided by some performance measurements. Depending on both human selection and performance-based criteria, at each generation a fitness score is assigned to each phenotype from 0.0 to 1.0. Performance values are initially supplied via the input to the Biomorpher component but are automatically calculated by triggering the Grasshopper canvas to recalculate for each generation. This selection process continues until the user has reached a satisfactory design outcome. It is important to mention that the Biomorpher workspace contains a historical record of choices made by the designer. Previous populations can be accessed and reinstated, thus forming a new evolutionary branch. Initially beginning from a single, crude design form, the user is eventually able to generate as many design alternatives as desired to build the query dataset and then feed it into the trained CAE to retrieve similar drawings from precedent projects.
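The interactive loop described above (select parents, mutate their genomes within bounds, repeat, and keep a history of populations) can be sketched as follows. This does not reproduce Biomorpher's actual operators: the uniform resampling mutation, the `choose_parents` callback standing in for the designer's selection, and the example bounds are all assumptions.

```python
import random


def mutate(genome, bounds, mutation_rate, rng):
    """Resample each gene within its (min, max) bounds with a given chance."""
    return [rng.uniform(lo, hi) if rng.random() < mutation_rate else value
            for value, (lo, hi) in zip(genome, bounds)]


def next_generation(parents, bounds, pop_size, mutation_rate=0.2, seed=None):
    """Create a new population by mutating randomly chosen parents."""
    rng = random.Random(seed)
    return [mutate(rng.choice(parents), bounds, mutation_rate, rng)
            for _ in range(pop_size)]


def run_interactive(initial, bounds, choose_parents, generations=3,
                    pop_size=12, seed=0):
    """Evolve designs; `choose_parents` stands in for the human selection."""
    history = [[list(initial)]]          # record of every population
    parents = [list(initial)]
    for g in range(generations):
        population = next_generation(parents, bounds, pop_size,
                                     seed=seed + g)
        history.append(population)
        parents = choose_parents(population)
    return history


# Hypothetical genome: ramp width, ramp length, handrail height (metres).
bounds = [(1.0, 2.0), (3.0, 12.0), (0.9, 1.1)]
initial_design = [1.5, 6.0, 1.0]

# Stand-in for the designer: always keep the first two designs shown.
history = run_interactive(initial_design, bounds,
                          choose_parents=lambda population: population[:2])
```

Keeping `history` makes it possible to return to an earlier population and branch from it, analogous to the historical record of choices described above.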

3. Results from case study

In this paper, we also present the application of the developed CBIR system to an existing design project. The project forms part of the PhD research of one of the authors and refers to the design of a new type of access ramp for mobility-disabled individuals. In the early stage of design, we use the Biomorpher plug-in to generate multiple design scenarios from a crude design form we previously created. Next, we use the CBIR system to identify similar projects within various sources. We report the main findings in the following lines.

3.1. Building the data sources

To construct the reference database, we have compiled precedent projects from the following public collections: Archello Architectural Drawing Guide, Architizer Library, RIBApix Collection, and USPTO Patent Database. We have applied an initial screening so as to only include items that refer to architectural or industrial drawings in greyscale. Included drawings presented objects in plan, elevation, cross-section, and axonometric views. In total, over 200,000 drawings were included.

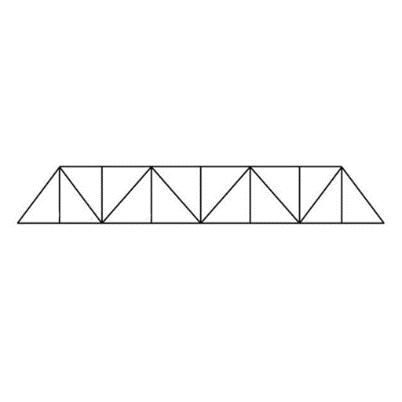

For the query dataset, we have initially created a basic ramp-type form in Rhinoceros 3D. Then, we parametrised it in Grasshopper 3D and developed a visual script to express and manipulate its topological parameters. Specifically, the analysed parameters were (a) ramp width, (b) ramp length, (c) count of ramp sections, (d) arrangement of ramp sections (vertical/horizontal), (e) height of handrails, and (f) shape of handrails. The visual script and initial design form (phenotype) are illustrated in Figures 5 and 6.

The next step included the evolutionary exploration of various design alternatives in Biomorpher. Using Biomorpher, we generated multiple forms and were able to interactively select among them by further evolving the most suitable genotypes.

Beginning from the phenotype illustrated in Figure 6 above, we arrived at hundreds of query drawings, as Biomorpher could automatically adjust the given genomes, combine them, and return numerous genotype designs. Figure 7 below presents the initial settings and K-means clusters of the first generation (Generation 0). The clusters tab visualises the entire population and how it has been grouped into 12 clusters. Because showing the actual geometry of every design would be overwhelming, each design is displayed as a dot, so the total number of dots corresponds to the population size. Each cluster contains at least one dot, but the number varies from cluster to cluster (depending on how closely related the designs are). The ordering of the clusters, i.e., the relation between them, has no meaning. Within each cluster, however, it is possible to determine the dot (design) that is closest to the centroid, and this is referred to as the representative design. In other words, this is the design that best represents all the designs within its cluster. The representative design is the dot at the centre, and the distances to the other dots represent the normalised Euclidean distances between them. Representative designs of all twelve clusters are shown in Figure 8.
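The notion of a representative design can be illustrated with a minimal sketch. It assumes that each design is described by a genome vector already normalised to [0, 1] and that cluster labels have already been assigned (for example, by K-means). The function name, genomes, and labels are hypothetical and are not taken from Biomorpher.

```python
import math

def representative_designs(genomes, labels):
    """Return {cluster label: index of the member closest to the cluster centroid}."""
    clusters = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)

    representatives = {}
    for label, members in clusters.items():
        dims = len(genomes[members[0]])
        # Centroid = per-dimension mean of the genomes in this cluster.
        centroid = [sum(genomes[i][d] for i in members) / len(members) for d in range(dims)]
        # Representative = member with the smallest Euclidean distance to the centroid.
        representatives[label] = min(members, key=lambda i: math.dist(genomes[i], centroid))
    return representatives

# Hypothetical two-parameter genomes (normalised) and hypothetical cluster labels.
genomes = [(0.10, 0.20), (0.30, 0.20), (0.20, 0.26),
           (0.80, 0.90), (0.90, 0.70), (0.85, 0.85)]
labels = [0, 0, 0, 1, 1, 1]
reps = representative_designs(genomes, labels)
print(reps)
```

In this toy example, the third design of each cluster lies nearest to its centroid and is therefore returned as the representative.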

Since the research focus of this effort has been on exploring design alternatives rather than optimising them, the evolutionary process was driven by visual/aesthetic criteria instead of performance measurements. For this reason, performance criteria (such as minimum-maximum genome values) were omitted from the analysis, and the evolutionary process continued until we selected a satisfactory design. In many cases, this was achieved by further evolving one or more of the previously generated designs. Continuing from the example presented in Figure 8, we selected three designs from the initial generation, as illustrated in Figure 9, and further evolved those. Figure 10 presents the design outcomes of the new generation (Generation 1).
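One step of such an interactive evolutionary loop might look like the following generic sketch. It is not Biomorpher's actual implementation: the gene names, the normalisation of each parameter to [0, 1], the uniform crossover, the Gaussian mutation settings, and the indices of the "selected" parents are all assumptions made for illustration.

```python
import random

# Hypothetical gene names mirroring ramp parameters (a)-(f); values are normalised to [0, 1].
GENES = ["width", "length", "section_count", "arrangement", "handrail_height", "handrail_shape"]

def crossover(parent_a, parent_b, rng):
    """Uniform crossover: each gene is copied from one of the two parents."""
    return [a if rng.random() < 0.5 else b for a, b in zip(parent_a, parent_b)]

def mutate(genome, rng, rate=0.2, sigma=0.1):
    """Perturb each gene with probability `rate`, clamping the result to [0, 1]."""
    return [min(1.0, max(0.0, g + rng.gauss(0.0, sigma))) if rng.random() < rate else g
            for g in genome]

def next_generation(selected_parents, population_size, rng):
    """Breed a new population from the designs picked by the designer."""
    children = []
    while len(children) < population_size:
        a = rng.choice(selected_parents)
        b = rng.choice(selected_parents)
        children.append(mutate(crossover(a, b, rng), rng))
    return children

rng = random.Random(0)
generation_0 = [[rng.random() for _ in GENES] for _ in range(12)]
chosen = [generation_0[i] for i in (1, 4, 7)]  # stand-in for the designer's visual picks
generation_1 = next_generation(chosen, population_size=12, rng=rng)
print(len(generation_1), len(generation_1[0]))
```

The selection step is deliberately left to the designer: no fitness function appears in the sketch, which matches the visual/aesthetic criteria described above.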

Next, we exported different views of the selected designs, namely top, left, right, and isometric views, as black-and-white drawings. Each drawing was exported at a resolution of 512 × 512 pixels (width × height). Figure 11 presents an example of different views for a selected design.

Overall, we ran 20 generations, which returned a total of 1356 drawings. In every generation, we omitted genotype solutions that did not represent ramp-type objects. This filtering process reduced the number of drawings to 1125. Those drawings comprised the query dataset, against which drawings from the reference databases were sought.

3.2. Image retrieval

As a next step, we imported all the drawings from the reference databases (i.e., over 200,000 images) into the CAE. The computational setup for this task included a high-performance desktop computer with the following specifications:

- CPU: Intel Core i9-12900 3.8 GHz, 16-core processor
- GPU: NVIDIA GeForce RTX 3080 graphics card, with 12 GB VRAM
- RAM: 64 GB DDR5 memory
- Storage: 1 TB SSD storage
- Operating System: Windows 11

In this way, we managed to extract vectors of latent features for every image included in the reference databases, thus building the reference library. Using a system with the aforementioned characteristics, the training process was completed in 7 hours and 12 minutes.
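To give a sense of how a convolutional encoder compresses a 512 × 512 drawing into a much smaller latent representation, the sketch below tracks feature-map sizes through a stack of strided convolutions. The number of layers, channel counts, kernel size, stride, and padding are hypothetical and do not describe the architecture used in this study; only the standard output-size formula for a convolution is relied upon.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def encoder_shapes(input_size, channels, kernel=3, stride=2, padding=1):
    """Return the (channels, height, width) shape after each encoder layer."""
    shapes = []
    size = input_size
    for c in channels:
        size = conv_output_size(size, kernel, stride, padding)
        shapes.append((c, size, size))
    return shapes

# Hypothetical five-layer encoder applied to a single-channel 512 x 512 drawing.
shapes = encoder_shapes(512, channels=[16, 32, 64, 64, 32])
latent_c, latent_h, latent_w = shapes[-1]
latent_dim = latent_c * latent_h * latent_w
input_dim = 512 * 512

for shape in shapes:
    print(shape)
print(input_dim, latent_dim)
```

Under these assumed settings, each stride-2 layer halves the spatial resolution, so the flattened latent vector is considerably smaller than the flattened input image.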

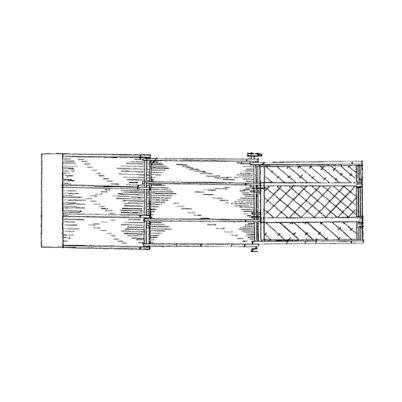

Next, we imported all 1125 generated images from the query dataset into the constructed CBIR system. The system browsed over 200,000 drawings from the reference database. For each image of the query dataset, the system returned the 2, 3, 5, or 7 most similar drawings (i.e., the k=2, k=3, k=5, or k=7 closest cases, using the KNN algorithm) from the reference database. After manually filtering all 7875 retrieved drawings, we omitted 7839 cases as irrelevant for the purposes of this research, according to specific exclusion criteria: (a) duplicate drawings (n=6013), (b) non-ramp type objects (n=1162), and (c) drawings for which no meaningful analogies could be drawn between the retrieved representation and a prospective solution (n=664). In the end, 36 drawings were selected for further design exploration, a sample of which is presented in Figure
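The retrieval step described in this subsection can be sketched as a brute-force nearest-neighbour search over latent vectors. This is a minimal illustration only: the Euclidean distance metric, the three-dimensional latent vectors, and the drawing identifiers are assumptions, and a production system would typically rely on an optimised KNN implementation.

```python
import heapq
import math

def knn_retrieve(query_vec, reference_library, k):
    """Return the k (distance, drawing_id) pairs closest to query_vec."""
    scored = ((math.dist(query_vec, vec), drawing_id)
              for drawing_id, vec in reference_library.items())
    return heapq.nsmallest(k, scored)

# Hypothetical reference library: drawing id -> latent feature vector.
reference_library = {
    "ref_001": (0.10, 0.90, 0.30),
    "ref_002": (0.80, 0.20, 0.50),
    "ref_003": (0.15, 0.85, 0.35),
    "ref_004": (0.50, 0.50, 0.50),
}
query = (0.12, 0.88, 0.32)  # hypothetical latent vector of one query drawing

results = {k: [d_id for _, d_id in knn_retrieve(query, reference_library, k)]
           for k in (2, 3)}
print(results)
```

Because the reference vectors are computed once and stored, each additional query only costs one pass over the library, whichever value of k is requested.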

3.3. Performance of the CBIR system

The performance of the constructed CBIR system was assessed using the precision metric, as given by Equation 3. Table 1 below presents the results.

It is apparent from Table 1 that examining the k=7 nearest cases increased the likelihood of retrieving reference images that were more relevant to the query image provided.
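For readers who wish to reproduce this kind of evaluation, the sketch below computes precision at several values of k. It assumes the standard definition of precision (relevant retrieved items divided by all retrieved items); the ranked list and the relevance judgements are hypothetical and are not the values reported in Table 1.

```python
def precision_at_k(retrieved_ids, relevant_ids, k):
    """Fraction of the top-k retrieved drawings that are judged relevant."""
    top_k = retrieved_ids[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for drawing_id in top_k if drawing_id in relevant_ids)
    return hits / len(top_k)

# Hypothetical ranked retrieval result for one query, nearest first.
retrieved = ["ref_001", "ref_003", "ref_004", "ref_002", "ref_009", "ref_007", "ref_005"]
# Hypothetical set of drawings a designer judged relevant for that query.
relevant = {"ref_003", "ref_007"}

scores = {k: precision_at_k(retrieved, relevant, k) for k in (2, 3, 5, 7)}
print(scores)
```

In this toy example, a larger k surfaces a second relevant drawing that smaller values of k miss, even though the precision ratio itself need not increase with k.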

4. Discussion

In this paper, we presented a CBIR system for technical drawings based on data-driven techniques. Our intention was to support the early stages of the design process by creating a system that could retrieve drawings from large databases based on the criterion of visual similarity to basic forms created by the designer. For this reason, we developed a deep CAE, which can be trained to recognise 2D drawings from large databases. We also utilised a suite of IEAs (i.e., Biomorpher), which is capable of evolving a single design form to produce a variety of design alternatives and reinforce the retrieval process in an automated manner. The retrieval can be conducted according to the nearest neighbour algorithm (KNN). The paper also includes a case study to validate the applicability of the proposed CBIR system in real-world projects.

The approach adopted in this effort contributes to the rapidly expanding field of data-driven techniques and tools for design-thinking purposes, and particularly design-by-analogy. The methodological significance of this work can be corroborated by a recent state-of-the-art review on data-driven design-by-analogy (Jiang, 2021), which found that most current research has focused merely on mining textual information as analogy candidates. In contrast, the approach proposed here refers to a visual-based search, in which schematic representations are used as an explicit mode to retrieve similar drawings. For architects and designers, this method can be extremely advantageous, as they often prefer visual depictions to textual descriptions for idea generation (Linsey et al., 2011; Moreno et al., 2014; Atilola, 2016; Jiang, 2021). Also, this is possibly among the first research efforts to employ deep-learning models to recognise drawings from precedent projects in architectural databases (e.g., RIBA library) based on the criterion of visual similarity.

Prior to this investigation, little evidence existed to support a combined application of evolutionary computation and deep learning models in the early stages of architectural design. The vast majority of similar research has utilised machine learning tools to generate floorplans based on precedent projects (Castro Pena et al., 2021), thus overlooking other elements of the built environment (e.g., bridges and frames) or particular projections (e.g., cross-sections and axonometries). Open-source architectural databases are hard to manipulate, and the application of visual-based or projection-specific search criteria is impossible. This is probably because the included projects are only indexed according to their location and spatial typology (e.g., furniture, public housing, educational building). Using interactive evolutionary computation in a parametric modelling environment allowed us to generate numerous design alternatives in a timely manner and visualise those in different projections. In this way, we were able to create a rich and diverse dataset of query drawings in terms of schematic representation as well as projections, which increased the likelihood of retrieving visually similar drawings from the reference database.

A major advantage of the CAE is the constrained dimensionality of the latent code (h), which is smaller than the dimensionality of the input drawing image. In fact, the reduced dimensionality of (h) allows the CAE to learn salient features that enable effective representation of the drawing. Another crucial advantage of the proposed CAE is the capability to extract a latent feature vector from the images without the need to segment them. Nevertheless, the constructed CBIR system had a notable limitation: after the completion of the image retrieval process, we needed to manually filter out non-relevant drawings. This activity was tedious and highly time-consuming. The reason for selecting a manual filtering method, rather than an automated one, was that all but one of the exclusion criteria (i.e., non-ramp type objects, non-adaptive elements, no meaningful analogies) referred to qualitative or design-oriented values, which were difficult to parametrise using mathematical forms. As such, we were not able to apply a machine learning model to assist with the moderation of these criteria and, finally, the selection of relevant drawings. Further work is required to investigate data-driven methods (for instance, through the use of machine learning models) to facilitate a less taxing, non-manual selection based on qualitative criteria. A possibly fruitful avenue for future research is the work currently undertaken in the context of Reinforcement Learning from Human Feedback (Bai et al., 2022; Christiano et al., 2017). This set of machine-learning techniques defines metrics that are designed to better capture human preferences, often originating from natural language prompts, as recently shown in ChatGPT. An extension of this would be the quantification of qualitative criteria, emerging from human preferences or design requirements, which could eventually lead to filtering of cases based on these criteria.

This work set out to seek inspiration from precedents, namely architectural objects and industrial patents, in a bid to discover ramp-type artefacts and use those as reference projects at the design proposal stage. For this reason, we searched three architectural collections and a global patent database, browsing over 200,000 drawings in total. The search strategy did not include any type of text (e.g., keywords or quotes) and was based on schematic representations instead. Specifically, a convolutional autoencoder was first trained to recognise 2D drawings and then tested to return ramp-type ones from the searched databases. The autoencoder received as input ramp-type drawings, which had previously been auto-generated using a set of design and evolutionary algorithms mostly compiled in the Biomorpher tool. The retrieval was conducted according to the nearest neighbour algorithm (KNN).

In conclusion, this paper presents a novel CBIR system for technical drawings, designed to facilitate the early stages of the design process by efficiently retrieving relevant drawings from large databases. By leveraging a deep CAE and incorporating IEAs such as Biomorpher, our system enables designers to explore a wide range of design alternatives while fostering creativity and inspiration. The case study presented supports the applicability of our proposed system in real-world projects, demonstrating its potential to streamline design workflows and enhance overall efficiency. As future work, we plan to extend our system to accommodate additional design domains and explore the integration of more advanced algorithms to further improve performance.

References

A Burkov. (2019). The hundred-page machine learning book. Andriy Burkov.

https://books.google.gr/books?id=ZF3KwQEACAAJ

A Ponsi, & A Shugaar. (2015). Analogy and design. University of Virginia Press.

https://books.google.gr/books?id=7_rLrQEACAAJ

A. Terry Purcell, & Gero, J. S. (1996a). Design and other types of fixation. Design Studies, 17(4), 363–383.

https://doi.org/10.1016/S0142-694X(96)00023-3

A. Terry Purcell, & Gero, J. S. (1996b). Design and other types of fixation. Design Studies, 17(4), 363–383.

https://doi.org/10.1016/S0142-694X(96)00023-3

Aggarwal, C. C. (2018). Neural networks and deep learning: A textbook. In Neural Networks and Deep Learning (p. 497). Springer International Publishing.

https://doi.org/10.1007/978-3-319-94463-0_1

Ahmed, S., Weber, M., Liwicki, M., Christoph Langenhan, Dengel, A., & Petzold, F. (2014). Automatic analysis and sketch-based retrieval of architectural floor plans. Pattern Recognition Letters, 35(1), 91–100. https://doi.org/10.1016/J.PATREC.2013.04.005

Alsmadi, M. K. (2017). An efficient similarity measure for content based image retrieval using memetic algorithm. Egyptian Journal of Basic and Applied Sciences, 4(2), 112–122.

https://doi.org/10.1016/J.EJBAS.2017.02.004

Ana de Almeida, Taborda, B., Santos, F., Kwiecinski, K., & Eloy, S. (2016). A genetic algorithm application for automatic layout design of modular residential homes. 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), 002774–002778.

https://doi.org/10.1109/SMC.2016.7844659

Aniket Kittur, Yu, L., Hope, T., Chan, J., Hila Lifshitz-Assaf, Karni Gilon, Ng, F., Kraut, R. E., & Dafna Shahaf. (2019). Scaling up analogical innovation with crowds and AI. Proceedings of the National Academy of Sciences, 116(6), 1870–1877.

https://doi.org/10.1073/pnas.1807185116

Arshiya Simran, Kumar, S., & Bachu, S. (2021). Content based image retrieval using deep learning convolutional neural network. IOP Conference Series: Materials Science and Engineering, 1084(1), 12026. https://doi.org/10.1088/1757-899x/1084/1/012026

Atharva Kalsekar, Rasika Khade, Jariwala, K., & Chattopadhyay, C. (2022). RISC-Net: rotation invariant siamese convolution network for floor plan image retrieval. Multimedia Tools and Applications, 1–25. https://doi.org/10.1007/S11042-022-13124-3

Avraam Tsantekidis, Nikolaos Passalis, & Anastasios Tefas. (2022). Deep reinforcement learning. Deep Learning for Robot Perception and Cognition, 117–129.

https://doi.org/10.1016/B978-0-32-385787-1.00011-7

Azizi, V., Usman, M., Sohn, S. S., Schwartz, M., Moon, S., Petros Faloutsos, & Kapadia, M. (2022). The role of latent representations for design space exploration of floorplans. SIMULATION, 00375497221115734. https://doi.org/10.1177/00375497221115734

Azizi, V., Usman, M., Zhou, H., Petros Faloutsos, & Kapadia, M. (2022). Graph-based generative representation learning of semantically and behaviorally augmented floorplans. The Visual Computer, 38(8), 2785–2800. https://doi.org/10.1007/s00371-021-02155-w

Bai, Y., Jones, A., Kamal Ndousse, Askell, A., Chen, A., DasSarma, N., Drain, D., Fort, S., Ganguli, D., Henighan, T., Joseph, N., Saurav Kadavath, Kernion, J., Conerly, T., Sheer El-Showk, Elhage, N., Zac Hatfield-Dodds, Hernandez, D., Hume, T., & Johnston, S. (2022). Training a helpful and harmless assistant with reinforcement learning from human feedback. In arXiv.

Baldi, P. (2011). Autoencoders, unsupervised learning and deep architectures. Proceedings of the 2011 International Conference on Unsupervised and Transfer Learning Workshop - Volume 27, 37–50.

Bank, D., Noam Koenigstein, & Raja Giryes. (2020). Autoencoders. In ArXiv.

Bergstra, J., & Yoshua Bengio. (2012). Random search for hyper-parameter optimization. Journal of Machine Learning Research, 13(10), 281–305.

http://jmlr.org/papers/v13/bergstra12a.html

Boden, M. A. (2016). AI: Its nature and future. Oxford University Press.

https://books.google.gr/books?id=yDQTDAAAQBAJ

Botagoz Kulzhanova, Ongdassynkyzy, D., Kuralay Ongdassynova, Aidar Duisenbay, Talgat Chaimerden, Yana Paromova, & Petrova, Y. (2021). Biomimetics - a hint of future technologies in nature. Journal of Biomimetics, Biomaterials and Biomedical Engineering, 53, 59–66. https://doi.org/10.4028/www.scientific.net/JBBBE.53.59

Byrne, J. (2012). Approaches to evolutionary architectural design exploration using grammatical evolution. University College Dublin.

Cai, H., Lin, J., & Han, S. (2022). Efficient methods for deep learning. Advanced Methods and Deep Learning in Computer Vision, 159–190. https://doi.org/10.1016/B978-0-12-822109-9.00013-8

Carlos Alberto Brebbia, Sucharov, L. J., P. Pascolo, on, & Nature. (2002). Design and nature: comparing design in nature with science and engineering.

https://ci.nii.ac.jp/ncid/BA58820105.bib

Castro, L., Adrián Carballal, Rodríguez-Fernández, N., Santos, I., & Romero, J. (2021). Artificial intelligence applied to conceptual design. A review of its use in architecture. Automation in Construction, 124, 103550.

https://doi.org/10.1016/J.AUTCON.2021.103550

Chanal, P. M., Kakkasageri, M. S., & Kumar, S. (2021). Security and privacy in the internet of things: computational intelligent techniques-based approaches. Recent Trends in Computational Intelligence Enabled Research: Theoretical Foundations and Applications, 111–127.

https://doi.org/10.1016/B978-0-12-822844-9.00009-8

Chen, T.-J., Mohanty, R. R., & Krishnamurthy, V. R. (2021). Queries and cues: Textual stimuli for reflective thinking in digital mind-mapping. Journal of Mechanical Design, 144(2). https://doi.org/10.1115/1.4052297

Cheng, P., Mugge, R., & Jan P.L. Schoormans. (2014). A new strategy to reduce design fixation: Presenting partial photographs to designers. Design Studies, 35(4), 374–391.

https://doi.org/10.1016/J.DESTUD.2014.02.004

Christiano, P. F., Leike, J., Brown, T., Miljan Martic, Legg, S., & Amodei, D. (2017). Deep reinforcement learning from human preferences. In I. Guyon, U Von Luxburg, S Bengio, H. Wallach, R. Fergus, S Vishwanathan, & R. Garnett (Eds.), Advances in Neural Information Processing Systems (Vol. 30). Curran Associates, Inc.

https://proceedings.neurips.cc/paper/2017/file/d5e2c0adad503c91f91df240d0cd4e49-Paper.pdf

Daoud, M. I., Saleh, A., I Hababeh, & R Alazrai. (2019). Content-based image retrieval for breast ultrasound images using convolutional autoencoders: A feasibility study. 2019 3rd International Conference on Bio-Engineering for Smart Technologies (BioSMART), 1–4. https://doi.org/10.1109/BIOSMART.2019.8734190

Davies, E. R. (2018). Basic classification concepts. Computer Vision, 365–398.

https://doi.org/10.1016/B978-0-12-809284-2.00013-7

Deb, K. (2001). Multi-objective optimization using evolutionary algorithms. Wiley.

https://books.google.gr/books?id=OSTn4GSy2uQC

Dutta, K., & Siddhant Sarthak. (2011). Architectural space planning using evolutionary computing approaches: a review. Artificial Intelligence Review, 36(4), 311.

https://doi.org/10.1007/s10462-011-9217-y

Esma Bige Tunçer. (2009). The architectural information map: Semantic modeling in conceptual architectural design. Delft University.

Evangelou, I., Michalis Savelonas, & Papaioannou, G. (2021). PU learning-based recognition of structural elements in architectural floor plans. Multimedia Tools and Applications, 80(9), 13235–13252. https://doi.org/10.1007/s11042-020-10295-9

García-Valdez, M., Trujillo, L., Fernández, F., Julián, J., & Olague, G. (2013). EvoSpaceInteractive: A framework to develop distributed collaborative-interactive evolutionary algorithms for artistic design. In P. Machado, J. McDermott, & A. Carballal (Eds.), Evolutionary and Biologically Inspired Music, Sound, Art and Design (pp. 121–132). Springer Berlin Heidelberg.

Gero, S. (1996). An exploration-based evolutionary model of generative design process. Microcomputers in Civil Engineering, 11, 209–216.

https://cir.nii.ac.jp/crid/1571135651698505984.bib?lang=en

Ghosh, A., Chakraborty, D., & Law, A. (2018). Artificial intelligence in Internet of things. CAAI Transactions on Intelligence Technology, 3(4), 208–218.

https://doi.org/10.1049/trit.2018.1008

Gimenez, L., Robert, S., Frédéric Suard, & Zreik, K. (2016). Automatic reconstruction of 3D building models from scanned 2D floor plans. Automation in Construction, 63, 48–56.

https://doi.org/10.1016/j.autcon.2015.12.008

Goel, A. K., Zhang, G., Wiltgen, B., Zhang, Y., Swaroop Vattam, & Yen, J. (2015). On the benefits of digital libraries of case studies of analogical design: Documentation, access, analysis, and learning. Artificial Intelligence for Engineering Design, Analysis and Manufacturing, 29(2), 215–227.

https://doi.org/10.1017/S0890060415000086

Goldschmidt, G., & Smolkov, M. (2006). Variances in the impact of visual stimuli on design problem solving performance. Design Studies, 27(5), 549–569.

https://doi.org/10.1016/J.DESTUD.2006.01.002

Gong, D., Ji, X., Sun, J., & Sun, X. (2014). Interactive evolutionary algorithms with decision-maker's preferences for solving interval multi-objective optimization problems. Neurocomputing, 137, 241–251. https://doi.org/10.1016/J.NEUCOM.2013.04.052

Gonsalves, T., & Upadhyay, J. (2021). Integrated deep learning for self-driving robotic cars. Artificial Intelligence for Future Generation Robotics, 93–118.

https://doi.org/10.1016/B978-0-323-85498-6.00010-1

Goodfellow, I., Y Bengio, & Courville, A. (2016). Deep learning (p. 499). MIT Press.

http://www.deeplearningbook.org

Harding, J. E., & Shepherd, P. (2017). Meta-parametric design. Design Studies, 52, 73–95.

https://doi.org/10.1016/J.DESTUD.2016.09.005

Harding, J., & Cecilie Brandt-Olsen. (2018). Biomorpher: Interactive evolution for parametric design. International Journal of Architectural Computing, 16(2), 144–163.

https://doi.org/10.1177/1478077118778579

Hatchuel, A., Pascal Le Masson, Thomas, M., & Weil, B. (2021). What is generative in generative design tools? Uncovering topological generativity with a C-K model of evolutionary algorithms. Proceedings of the Design Society, 1, 3419–3430.

https://doi.org/10.1017/pds.2021.603

Hemanth, D. J., & Estrela, V. V. (2017). Deep learning for image processing applications. IOS Press. https://books.google.co.uk/books?id=vsFVDwAAQBAJ

Holland, J. H. (1992). Genetic algorithms. Scientific American, 267(1), 66–73.

http://www.jstor.org/stable/24939139

Howard, W. R. (2007). Pattern recognition and machine learning. Kybernetes, 36(2), 275.

https://doi.org/10.1108/03684920710743466

Huang, G., Liu, Z., Der, V., & Weinberger, K. Q. (2017). Densely connected convolutional networks. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2261–2269. https://doi.org/10.1109/CVPR.2017.243

Huang, J., Mikhael Johanes, Frederick Chando Kim, Doumpioti, C., & Georg Christoph Holz. (2021). On GANs, NLP and architecture: Combining human and machine intelligences for the generation and evaluation of meaningful designs. Technology|Architecture + Design, 5(2), 207–224.

https://doi.org/10.1080/24751448.2021.1967060

Isola, P., Zhu, J.-Y., Zhou, T., & Efros, A. A. (2018). Image-to-image translation with conditional adversarial networks. In arXiv.

Jaime de Miguel, Maria Eugenia Villafañe, Luka Piškorec, & Sancho-Caparrini, F. (2022). Deep Form Finding - Using Variational Autoencoders for deep form finding of structural typologies. Proceedings of the 37th International Conference on Education and Research in Computer Aided Architectural Design in Europe (ECAADe) & 23rd Conference of the Iberoamerican Society Digital Graphics [Volume 1], 1, 71–80.

https://doi.org/10.52842/CONF.ECAADE.2019.1.071

Jaime, Maria Eugenia Villafañe, Luka Piškorec, & Fernando Sancho Caparrini. (2020). Generation of geometric interpolations of building types with deep variational autoencoders. Design Science, 6, e34. https://doi.org/10.1017/dsj.2020.31

Jiang, S., Hu, J., Wood, K. L., & Luo, J. (2021). Data-driven design-by-analogy: State-of-the-art and future directions. Journal of Mechanical Design, 144(2).

https://doi.org/10.1115/1.4051681

Jiang, S., Luo, J., Ruiz-Pava, G., Hu, J., & Magee, C. L. (2021). Deriving design feature vectors for patent images using convolutional neural networks. Journal of Mechanical Design, Transactions of the ASME, 143(6).

https://doi.org/10.1115/1.4049214

Jiao, L., & Zhao, J. (2019). A survey on the new generation of deep learning in image processing. IEEE Access, 7, 172231–172263.

https://doi.org/10.1109/ACCESS.2019.2956508

Jürgen Schmidhuber. (2015). Deep learning in neural networks: An overview. Neural Networks, 61, 85–117.

https://doi.org/10.1016/J.NEUNET.2014.09.003

Karla Saldana Ochoa, Patrick Ole Ohlbrock, Pierluigi D’Acunto, & Moosavi, V. (2020). Beyond typologies, beyond optimization: Exploring novel structural forms at the interface of human and machine intelligence. International Journal of Architectural Computing, 19(3), 466–490.

https://doi.org/10.1177/1478077120943062

Keiron O'Shea, & Nash, R. (2015). An introduction to convolutional neural networks. ArXiv: Neural and Evolutionary Computing.

https://doi.org/10.48550/arxiv.1511.08458

Khan, A., Sohail, A., Umme Zahoora, & Aqsa Saeed Qureshi. (2020). A survey of the recent architectures of deep convolutional neural networks. Artificial Intelligence Review, 53(8), 5455–5516.

https://doi.org/10.1007/S10462-020-09825-6

Kim, T. (2010). Pattern recognition using artificial neural network: A review. In Wael, K. Tai-hoon, X. Yang, & Adi (Eds.), Information Security and Assurance (pp. 138–148). Springer Berlin Heidelberg.

King, E., Katie Pierce Meyer, & King-Ip (David) Lin. (2021). Semi-automatic residential floor plan detection. ACM Journal on Computing and Cultural Heritage.

https://doi.org/10.1145/3503046

Koch, S., Matveev, A., Jiang, Z., Williams, F., Alexey Artemov, Evgeny Burnaev, Alexa, M., Zorin, D., & Panozzo, D. (2018, December). ABC: A big CAD model dataset for geometric deep learning. http://arxiv.org/abs/1812.06216

Koutamanis, A., Halin, G., & Kvan, T. (2007). Indexing and retrieval of visual design representations. Conférence ECAADe 2007, 564–572.

Krizhevsky, A., Ilya Sutskever, & Hinton, G. E. (2012a). ImageNet classification with deep convolutional neural networks. Proceedings of the 25th International Conference on Neural Information Processing Systems - Volume 1, 1097–1105.

Krizhevsky, A., Ilya Sutskever, & Hinton, G. E. (2012b). ImageNet classification with deep convolutional neural networks. In F. Pereira, C. J. Burges, L Bottou, & K. Q. Weinberger (Eds.), Advances in Neural Information Processing Systems (Vol. 25). Curran Associates, Inc.

https://proceedings.neurips.cc/paper/2012/file/c399862d3b9d6b76c8436e924a68c45b-Paper.pdf

Kucer, M., Oyen, D., Castorena, J., & Wu, J. (2022). DeepPatent: Large scale patent drawing recognition and retrieval. 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 557–566.

https://doi.org/10.1109/WACV51458.2022.00063

Kwon, E., Huang, F., & Kosa Goucher-Lambert. (2022). Enabling multi-modal search for inspirational design stimuli using deep learning. Artificial Intelligence for Engineering Design, Analysis and Manufacturing, 36, e22.

https://doi.org/10.1017/S0890060422000130

Lawson, B. (2012). What designers know. Routledge.

Leslie Pack Kaelbling, Littman, M. L., & Moore, A. W. (1996). Reinforcement learning: A survey. J. Artif. Int. Res., 4(1), 237–285.

Lim, Y. W., Majid, H. A., Samah, A. A., Ahmad, M. H., Ossen, D. R., Harun, M. F., & F. Shahsavari. (2018). BIM and genetic algorithm optimisation for sustainable building envelope design. International Journal of Sustainable Development and Planning, 13(1), 151–159.

https://doi.org/10.2495/SDP-V13-N1-151-159

Linsey, J. S., Clauss, E. F., T. Kurtoglu, Murphy, J. T., Wood, K. L., & Markman, A. B. (2011). An experimental study of group idea generation techniques: Understanding the roles of idea representation and viewing methods. Journal of Mechanical Design, 133(3).

https://doi.org/10.1115/1.4003498

Linsey, J. S., Markman, A. B., & Wood, K. L. (2012). Design by analogy: A study of the WordTree method for problem re-representation. Journal of Mechanical Design, 134(4).

https://doi.org/10.1115/1.4006145

McCall, J. (2005). Genetic algorithms for modelling and optimisation. Journal of Computational and Applied Mathematics, 184(1), 205–222.

https://doi.org/10.1016/J.CAM.2004.07.034

Miao, Y., Koenig, R., & Knecht, K. (2020). The development of optimization methods in generative urban design: a review. Proceedings of the 11th Annual Symposium on Simulation for Architecture and Urban Design, 1–8.

Miłosz Kadziński, Tomczyk, M. K., & Słowiński, R. (2020). Preference-based cone contraction algorithms for interactive evolutionary multiple objective optimization. Swarm and Evolutionary Computation, 52, 100602.

https://doi.org/10.1016/J.SWEVO.2019.100602

Mirza, M., & Osindero, S. (2014, November). Conditional generative adversarial nets. ArXiv. http://arxiv.org/abs/1411.1784

Mitchell, M. (1998). An introduction to genetic algorithms. MIT Press.

https://books.google.gr/books?id=0eznlz0TF-IC

Mitchell, T. M. (1997). Machine learning. McGraw-Hill Education.

https://books.google.gr/books?id=xOGAngEACAAJ

Moreno, D. P., Blessing, L. T., Yang, M. C., Hernández, A. A., & Wood, K. L. (2016). Overcoming design fixation: Design by analogy studies and nonintuitive findings. Artificial Intelligence for Engineering Design, Analysis and Manufacturing, 30(2), 185–199.

https://doi.org/10.1017/S0890060416000068

Moreno, D. P., Hernández, A. A., Yang, M. C., Otto, K. N., Katja Hölttä-Otto, Linsey, J. S., Wood, K. L., & Linden, A. (2014). Fundamental studies in Design-by-Analogy: A focus on domain-knowledge experts and applications to transactional design problems. Design Studies, 35(3), 232–272.

https://doi.org/10.1016/J.DESTUD.2013.11.002

Mueller, C. T., & Ochsendorf, J. A. (2015). Combining structural performance and designer preferences in evolutionary design space exploration. Automation in Construction, 52, 70–82.

https://doi.org/10.1016/J.AUTCON.2015.02.011

Nauata, N., Chang, K.-H., Cheng, C.-Y., Mori, G., & Furukawa, Y. (2020, March). Housegan: Relational generative adversarial networks for graph-constrained house layout generation. http://arxiv.org/abs/2003.06988

Newton, D. (2019). Generative deep learning in architectural design. Technology|Architecture + Design, 3(2), 176–189.

https://doi.org/10.1080/24751448.2019.1640536

Nizam Onur Sönmez. (2018). A review of the use of examples for automating architectural design tasks. Computer-Aided Design, 96, 13–30.

https://doi.org/10.1016/J.CAD.2017.10.005

Norouzi, A., & A Halim Zaim. (2014). Genetic algorithm application in optimization of wireless sensor networks. The Scientific World Journal, 2014, 286575.

https://doi.org/10.1155/2014/286575

Oludare Isaac Abiodun, Aman Jantan, Abiodun Esther Omolara, Kemi Victoria Dada, Abubakar Malah Umar, Okafor Uchenwa Linus, Arshad, H., Abdullahi Aminu Kazaure, Gana, U., & Muhammad Ubale Kiru. (2019). Comprehensive review of artificial neural network applications to pattern recognition. IEEE Access, 7, 158820–158846. https://doi.org/10.1109/ACCESS.2019.2945545

Olufunmilola Atilola, Tomko, M., & Linsey, J. S. (2016). The effects of representation on idea generation and design fixation: A study comparing sketches and function trees. Design Studies, 42, 110–136. https://doi.org/10.1016/J.DESTUD.2015.10.005

Ong, E.-J., Husain, S., & Bober, M. (2017). Siamese network of deep fisher-vector descriptors for image retrieval. ArXiv, abs/1702.00338

Paige Wenbin Tien, Wei, S., Darkwa, J., Wood, C., & John Kaiser Calautit. (2022). Machine learning and deep learning methods for enhancing building energy efficiency and indoor environmental Quality–A review. In Energy and AI (p. 100198). Elsevier.

Pedregosa, F., Gaël Varoquaux, Alexandre Gramfort, Michel, V., Thirion, B., Grisel, O., Mathieu Blondel, Müller, A., Nothman, J., Gilles Louppe, Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., & Édouard Duchesnay. (2012). Scikit-learn: Machine learning in python. In Journal of Machine Learning Research.

Pizarro, P. N., Hitschfeld, N., Sipiran, I., & Saavedra, J. M. (2022). Automatic floor plan analysis and recognition. Automation in Construction, 140, 104348.

https://doi.org/10.1016/J.AUTCON.2022.104348

Quang Hung Nguyen, Ly, H.-B., Lanh Si Ho, & Nadhir Al-Ansari. (2021). Influence of data splitting on performance of machine learning models in prediction of shear strength of soil.

Raheem, A. A., Hameed, P., Ruban Whenish, Elsen, R. S., Aswin G, Amit Kumar Jaiswal, Konda Gokuldoss Prashanth, & Geetha Manivasagam. (2021). A review on development of bio-inspired implants using 3D printing. Biomimetics, 6(4).

https://doi.org/10.3390/biomimetics6040065

Reed, S. E., Zhang, Y., Zhang, Y., & Lee, H. (2015). Deep visual analogy-making. In C. Cortes, N. Lawrence, D. Lee, M. Sugiyama, & R. Garnett (Eds.), Advances in Neural Information Processing Systems (Vol. 28). Curran Associates, Inc.

https://proceedings.neurips.cc/paper/2015/file/e07413354875be01a996dc560274708e-Paper.pdf

Samuel, A. L. (1959). Some studies in machine learning using the game of checkers. IBM Journal of Research and Development, 3(3), 210–229.

https://doi.org/10.1147/rd.33.0210

Sang, B. (2021). Application of genetic algorithm and BP neural network in supply chain finance under information sharing. Journal of Computational and Applied Mathematics, 384, 113170. https://doi.org/10.1016/J.CAM.2020.113170

Sen, S. (2015). A survey of intrusion detection systems using evolutionary computation. Bio-Inspired Computation in Telecommunications, 73–94. https://doi.org/10.1016/B978-0-12-801538-4.00004-5

Severi Uusitalo, Kantosalo, A., Antti Salovaara, Takala, T., & Guckelsberger, C. (2022). Co-creative product design with interactive evolutionary algorithms: A practice-based reflection. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 13221 LNCS, 292–307.

https://doi.org/10.1007/978-3-031-03789-4_19

Shanaka Kristombu Baduge, Sadeep Thilakarathna, Jude Shalitha Perera, Mehrdad Arashpour, Sharafi, P., Teodosio, B., Ankit Shringi, & Mendis, P. (2022). Artificial intelligence and smart vision for building and construction 4.0: Machine and deep learning methods and applications. Automation in Construction, 141, 104440.

https://doi.org/10.1016/j.autcon.2022.104440

Sharma, D., Gupta, N., Chattopadhyay, C., & Mehta, S. (2017). DANIEL: A deep architecture for automatic analysis and retrieval of building floor plans. 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), 01, 420–425.

https://doi.org/10.1109/ICDAR.2017.76

Shiv Ram Dubey. (2021). A decade survey of content based image retrieval using deep learning.

https://doi.org/10.1109/TCSVT.2021.3080920

Shiv Ram Dubey. (2022). A decade survey of content based image retrieval using deep learning. IEEE Transactions on Circuits and Systems for Video Technology, 32(5), 2687–2704.

https://doi.org/10.1109/TCSVT.2021.3080920

Simonsen, C. P., Thiesson, F. M., Philipsen, M. P., & Moeslund, T. B. (2021). Generalizing floor plans using graph neural networks. Proceedings - International Conference on Image Processing, ICIP, 2021-September, 654–658.

https://doi.org/10.1109/ICIP42928.2021.9506514

Simonyan, K., & Zisserman, A. (2014, September). Very deep convolutional networks for large-scale image recognition. 3rd International Conference on Learning Representations, ICLR 2015 - Conference Track Proceedings.

https://doi.org/10.48550/arxiv.1409.1556

Singh, V., & Gu, N. (2012). Towards an integrated generative design framework. Design Studies, 33(2), 185–207. https://doi.org/10.1016/J.DESTUD.2011.06.001

Slowik, A., & Kwasnicka, H. (2020). Evolutionary algorithms and their applications to engineering problems. Neural Computing and Applications, 32(16), 12363–12379.

https://doi.org/10.1007/s00521-020-04832-8

Snoek, J., Larochelle, H., & Adams, R. P. (2012). Practical bayesian optimization of machine learning algorithms. Advances in Neural Information Processing Systems, 25.

Socratis Gkelios, Aphrodite Sophokleous, Spyridon Plakias, Boutalis, Y. S., & Chatzichristofis, S. A. (2021). Deep convolutional features for image retrieval. Expert Syst. Appl., 177, 114940.

Stanislas Chaillou. (2019). AI architecture towards a new approach. https://www.academia.edu/39599650/AI_Architecture_Towards_a_New_Approach

Taborda, B., Ana de Almeida, Santos, F., Eloy, S., & Kwiecinski, K. (2018). Shaper-ga: Automatic shape generation for modular house design. Proceedings of the Genetic and Evolutionary Computation Conference, 937–942.

https://doi.org/10.1145/3205455.3205609

Uyeh Daniel Dooyum, Rammohan Mallipeddi, Trinadh Pamulapati, Park, T., Kim, J., Woo, S., & Ha, Y. (2018). Interactive livestock feed ration optimization using evolutionary algorithms. Computers and Electronics in Agriculture, 155, 1–11.

https://doi.org/10.1016/J.COMPAG.2018.08.031

V. Roshan Joseph. (2022). Optimal ratio for data splitting. Statistical Analysis and Data Mining: The ASA Data Science Journal, 15(4), 531–538.

https://doi.org/10.1002/sam.11583

Vaibhav Rupapara, Narra, M., Naresh Kumar Gonda, Kaushika Thipparthy, & Gandhi, S. (2020). Auto-encoders for content-based image retrieval with its implementation using handwritten dataset. 2020 5th International Conference on Communication and Electronics Systems (ICCES), 289–294.

https://doi.org/10.1109/ICCES48766.2020.9138007

van der Blom, K., Boonstra, S., Hofmeyer, H., Bäck, T., & Emmerich, M. T. M. (2017). Configuring advanced evolutionary algorithms for multicriteria building spatial design optimisation. 2017 IEEE Congress on Evolutionary Computation (CEC), 1803–1810.

https://doi.org/10.1109/CEC.2017.7969520

Vasileios Machairas, Aris Tsangrassoulis, & Kleo Axarli. (2014). Algorithms for optimization of building design: A review. Renewable and Sustainable Energy Reviews, 31, 101–112.

Wan, J., Wang, D., Steven C.H. Hoi, Wu, P., Zhu, J., Zhang, Y., & Li, J. (2014). Deep learning for content-based image retrieval: A comprehensive study. MM 2014 - Proceedings of the 2014 ACM Conference on Multimedia, 157–166.

https://doi.org/10.1145/2647868.2654948

Wang, G., Hu, Q., Cheng, J., & Hou, Z.-G. (2018). Semi-supervised generative adversarial hashing for image retrieval. ECCV.

Wang, L., Zhang, Z., Zhang, X., Zhou, X., Wang, P., & Zheng, Y. (2021). A Deep-forest based approach for detecting fraudulent online transaction. Advances in Computers, 120, 1–38. https://doi.org/10.1016/BS.ADCOM.2020.10.001

Wu, Y., Shang, J., Chen, P., Sisi Zlatanova, Hu, X., & Zhou, Z. (2021). Indoor mapping and modeling by parsing floor plan images. International Journal of Geographical Information Science, 35(6), 1205–1231.

https://doi.org/10.1080/13658816.2020.1781130

Xiaolei Lv, Zhao, S., Yu, X., & Zhao, B. (2021). Residential floor plan recognition and reconstruction. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 16712–16721.

https://doi.org/10.1109/CVPR46437.2021.01644

Yang, J., Jang, H., Kim, J., & Kim, J. (2018). Semantic segmentation in architectural floor plans for detecting walls and doors. 2018 11th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), 1–9.

https://doi.org/10.1109/CISP-BMEI.2018.8633243

Yang, Z. R., & Yang, Z. (2014). Artificial neural networks. Comprehensive Biomedical Physics, 6, 1–17. https://doi.org/10.1016/B978-0-444-53632-7.01101-1

Yin, X., P Wonka, & Razdan, A. (2009). Generating 3D building models from architectural drawings: A survey. IEEE Computer Graphics and Applications, 29(1), 20–30.

https://doi.org/10.1109/MCG.2009.9

Yongbin, Y., Chenyu, Y., Deng Quanxin, Nyima Tashi, Liang Shouyi, & Chen, Z. (2021). Memristive network-based genetic algorithm and its application to image edge detection. Journal of Systems Engineering and Electronics, 32(5), 1062–1070.

https://doi.org/10.23919/JSEE.2021.000091

Yoshimura, Y., Cai, B., Wang, Z., & Ratti, C. (2018, December). Deep learning architect: Classification for architectural design through the eye of artificial intelligence

http://arxiv.org/abs/1812.01714

Zhang, Z., & Jin, Y. (2020). An unsupervised deep learning model to discover visual similarity between sketches for visual analogy support. Proceedings of the ASME Design Engineering Technical Conference, 8. https://doi.org/10.1115/DETC2020-22394

Zhang, Z., & Jin, Y. (2021, August). Toward computer aided visual analogy support (CAVAS): Augment designers through deep learning. Volume 6: 33rd International Conference on Design Theory and Methodology (DTM).

https://doi.org/10.1115/DETC2021-70961