Mind the Gap

Why bias in GenAI is a diversity, equity, and inclusion challenge that must be addressed now

A collaboration between IMD, Microsoft, and EqualVoice

Executive summary

The integration and deployment of GenAI in business and society is expected to soar in the next 12 months, unleashing productivity gains that could add trillions of dollars to the global economy. Yet, even as organizations embrace the promise of this transformative technology, there is increasing focus on its associated risks. Among these, bias in GenAI training data, development, and output is becoming recognized as a possible threat to its fair, equitable, and inclusive use.

In this white paper, we define bias in GenAI in relation to diversity, equity, and inclusion as:

Systematic and non-random errors in the data, algorithms, or the interpretation of results made by machine learning models that lead to unfair outcomes, such as privileging one arbitrary group of users over others.

(Tronnier et al., 2024, p. 3)

Unchecked, bias in GenAI has the potential to perpetuate and even amplify unfair outcomes for segments of the community, and to contribute to societal injustice.

This report harnesses the expertise of IMD, Microsoft, and EqualVoice to articulate the risk associated with bias in GenAI related to diversity, equity, and inclusion. It also shares some of the findings of a proprietary survey of executives and business leaders led by its authors in 2024. Key insights from our survey show that while most leaders are optimistic about the opportunities of GenAI, a majority are worried about bias and do not believe that their organizations are doing enough to address the risks. We find that:

• 72% are concerned about bias in GenAI.

• Only 5% feel highly competent to spot biased data, development, or deployment in GenAI.

• 71% do not believe that their organization is doing enough to mitigate bias in GenAI at this point.

• Only 9% strongly agree that their existing organizational governance frameworks help mitigate bias.

These findings underscore the imperative to take affirmative and decisive action to address bias in GenAI related to diversity, equity, and inclusion. In this report, we set out several actionable recommendations for organizations: the need for a pan-organizational approach to bias that fully integrates process, technology, and people. We strongly advocate integrating diversity, equity & inclusion frameworks with responsible AI practices to build awareness of bias in GenAI and develop the cross-functional skills, capabilities, and accountability to tackle it.

The report concludes with a set of critical questions that we invite leaders to urgently consider. These include:

• Where does responsibility for ethical AI currently sit within the organization?

• Is there a clearly articulated set of responsible AI principles aligned to organizational values and processes?

• How are human-centric values currently upheld in GenAI training, design, and deployment?

• How will organizational awareness, capabilities, and accountability around bias in GenAI continue to scale moving forward?

Bias in GenAI: A risk that must be addressed

In the early 2020s, generative AI (GenAI) emerged as a technological breakthrough. Successive waves of innovation are now revolutionizing human society and transforming the ways we live and work.

The World Economic Forum predicts that more than 80% of organizations worldwide will use GenAI by 2026 (World Economic Forum, 2024), an exponential growth in integration that, according to McKinsey (2023) and others, will add trillions of dollars to the global economy in productivity gains.

As we accelerate toward greater integration of GenAI into digital workflows, decision-making processes, and work product creation, the opportunities ahead will multiply. So too will the risks. Some of these risks arise from the black-box nature of GenAI, which makes its internal functioning hard to decipher and understand. Then there is its ability to effectively mimic human conversations and behaviors, a feature that makes it increasingly difficult to determine whether content is machine-generated or not. Knowing why or when GenAI is flawed in its processes and decision-making is hence likely to become more challenging.

This is problematic because GenAI is vulnerable to human, societal, and statistical bias that is ingrained in the training data, the design and development of GenAI models, and the interpretation and deployment of results.

In this white paper, we define bias in GenAI in relation to diversity, equity, and inclusion as:

Systematic and non-random errors in the data, algorithms, or the interpretation of results made by machine learning models that lead to unfair outcomes, such as privileging one arbitrary group of users over others.

(Tronnier et al., 2024, p. 3)

Common ways our brains trick us into making false assumptions

• Stereotyping: Negative or positive assumptions about characteristics of members of specific groups, how they ‘are’ or how they should be or behave (Koch et al., 2015). ‘Only males play video games’ is a commonly encountered stereotype, but in fact, nearly half of gamers are women.

• Confirmation bias: Greater receptiveness to information that confirms initial judgment or stereotypes, and downplaying data that contradicts this (Nickerson, 1998).

• Anchoring bias or halo effect: Sticking to first impressions and non-relevant data instead of seeking out alternative sources of information about someone (Tversky & Kahneman, 1974).

• In-group bias: Trusting, relating to, and feeling a stronger affinity with similar individuals or groups (Greenwald & Krieger, 2006).

• Bias blind spot: Seeing bias in others while believing oneself to be bias-free (Thomas & Reimann, 2023).

Bias, stereotypes, underrepresentation, and the impact on groups and communities

Human beings tend to make systematic errors in their decisions because we do not always consciously control our entire perception, which affects how we form impressions and the judgments we make. These systematic errors are called bias (Greenwald & Krieger, 2006; Kahneman, 2013). Bias can be baked into organizational systems and processes (Fleischmann & van Zanten, 2024); it can significantly affect behavior, decisions, and interactions, and it frequently results in discrimination when associated with stereotypes. Research has found that bias influences employment decisions, healthcare interactions, and criminal justice outcomes, among other areas.

• In the US, 27.1% of mortgage applications from Black applicants are denied, compared to 13.6% for white applicants.

• In performance reviews, women and men are often described in stereotypical ways (‘being helpful’ vs. ‘taking charge’), which leads to lower scores for women – but not for men.

• Female patients are prescribed pain-relief medications less often than male patients – a disparity that remains even when taking the patient’s reported pain score into account.

Sources: Choi & Mattingly, 2022; Correll et al., 2020; Guzikevits et al., 2024
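To make the size of the first gap concrete, the short sketch below compares the two mortgage denial rates quoted above (27.1% and 13.6%) both as a ratio and as a percentage-point difference. It is illustrative arithmetic only; the function name `disparity` is hypothetical and does not come from the cited sources.

```python
def disparity(rate_group: float, rate_reference: float) -> tuple[float, float]:
    """Compare two rates given in percent.

    Returns (ratio, gap): how many times larger the first rate is than the
    second, and the absolute difference in percentage points.
    """
    ratio = rate_group / rate_reference
    gap = rate_group - rate_reference
    return ratio, gap


# Denial rates quoted above (Choi & Mattingly, 2022): Black vs. white applicants.
ratio, gap = disparity(27.1, 13.6)
print(f"ratio: {ratio:.2f}x, gap: {gap:.1f} percentage points")
# prints: ratio: 1.99x, gap: 13.5 percentage points
```

A ratio of roughly 2 means that, in these figures, Black applicants were denied about twice as often as white applicants. Reporting the ratio alongside the gap helps avoid understating a disparity when the underlying rates are low.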

Bias can lead to discrimination against specific groups of people, including demographic groups, social groups, and marginalized sections of the community. Bias in GenAI related to diversity, equity, and inclusion represents a serious risk to the fair and equitable use of this technology in organizations and society at large. Unchecked, bias in GenAI has the potential to perpetuate and even amplify harmful stereotypes, and to generate outcomes that work against groups and segments of society based on characteristics such as race, gender, age, sexual identity, belief, culture, or physical and mental abilities.

Addressing bias in GenAI requires a multifaceted approach that involves technical, organizational, and ethical measures.

Black-box decision-making processes and hypercomplex technologies can make it difficult to identify when bias is occurring. And the very benefits that GenAI offers – the ability to generate not one right answer, but an array of creative outputs – mean that bias can surface in different ways, some obvious, some less so. Meanwhile, the technology is scaling so fast that it is hard for organizations to keep pace.

In light of this complexity, organizations must act proactively to address and mitigate the risks posed by bias in ways that span the technology itself as well as organizational governance, processes, and people.

This paper leverages the diverse expertise of its authors and survey data from executives (see survey methodology, p. 60) whose organizations have started integrating GenAI into their operations to shed more light on:

• The provenance of bias in algorithmic systems.

• How bias manifests in the data, development, and deployment of GenAI.

• The risks that bias in GenAI constitutes for organizations and society.

• How executives and organizations perceive some of these risks.

The paper also shares industry best practices in trustworthy AI governance frameworks and risk mitigation protocols, along with recommendations that focus on organizational culture and leadership.

Addressing bias in GenAI calls for a principled and desiloed approach; one predicated on multidisciplinary collaboration while leveraging diverse groups, perspectives, and expertise within the organization and prioritizing training and education.

The focus of this white paper is on the impact of bias on diversity and is a call to action to leaders and decision-makers to act decisively and to act now.

Feedback from our survey points to three topline findings:

• Executives feel overwhelmingly optimistic about GenAI and its potential for good.

• At the same time, there is broad concern about bias in GenAI systems related to DE&I.

• An overwhelming majority believe their organizations are still not doing enough to address this challenge.

More is needed to address the impact of bias in GenAI related to DE&I

72% are concerned about bias in GenAI and potential fairness issues – for female executives, this figure rises to 82%.

71% believe that their organization is not doing enough to mitigate bias in GenAI.

76% feel optimistic about the potential for GenAI to do good.

Source: Survey by IMD, Microsoft, and EqualVoice 2024 (see survey methodology, p. 60).

To ensure that bias in GenAI doesn’t adversely impact underrepresented groups, stakeholders must enact safeguards to minimize the concomitant risks. This includes defining principles, governance frameworks, and mitigation processes as well as rapidly developing new ethics capabilities across the technology field. This will require upskilling AI engineers, product managers, business analysts, software developers, and QA engineers to think about digital offerings that are fair, inclusive, and ethically valid.

Some of the leaders we surveyed are hopeful that if we take decisive action, we’ll be able to leverage GenAI tools to identify bias and help mitigate it in our organizations and society. Asked for a projection of whether GenAI will increase bias or help us mitigate bias, the executives surveyed have a slightly more positive outlook on average. This paper aims to help move the dial on fair, inclusive, and responsible GenAI even further.

Will GenAI perpetuate and increase bias or help us mitigate it?

Scale: from “GenAI will perpetuate and increase bias” to “GenAI will help us mitigate bias”

Source: Survey by IMD, Microsoft, and EqualVoice

Where does GenAI sit in the broader AI landscape?

AI is a field of computer science that seeks to create intelligent systems that can replicate or excel at tasks traditionally associated with human intelligence.

Within the broad field of AI, machine learning (ML) enables these systems to identify patterns within existing data and use them to create data models that can make predictions.

ML algorithms can be used for classification, regression, and similarity-based clustering of data, and over time have been deployed in all kinds of applications to perform a diversity of tasks from recommendation systems to sales prediction and more.

A subset of ML techniques is deep learning, a type of machine learning that uses artificial neural networks that learn complex representations based on the data on which they are trained. Advances in deep learning have led to what we now call GenAI, a field of AI that leverages transformer-based deep learning models called large language models (LLMs) or small language models (SLMs). These systems are trained on massive multi-modal datasets to create new written, visual, or auditory content based on prompts.

GenAI represents a massive leap forward in AI development, opening new pathways and possibilities from fast prototyping and production readiness, across a breadth of use cases and industries, to revolutionizing drug discovery for medicine and beyond.

Bias in GenAI: Where it comes from, how it manifests, and why it matters

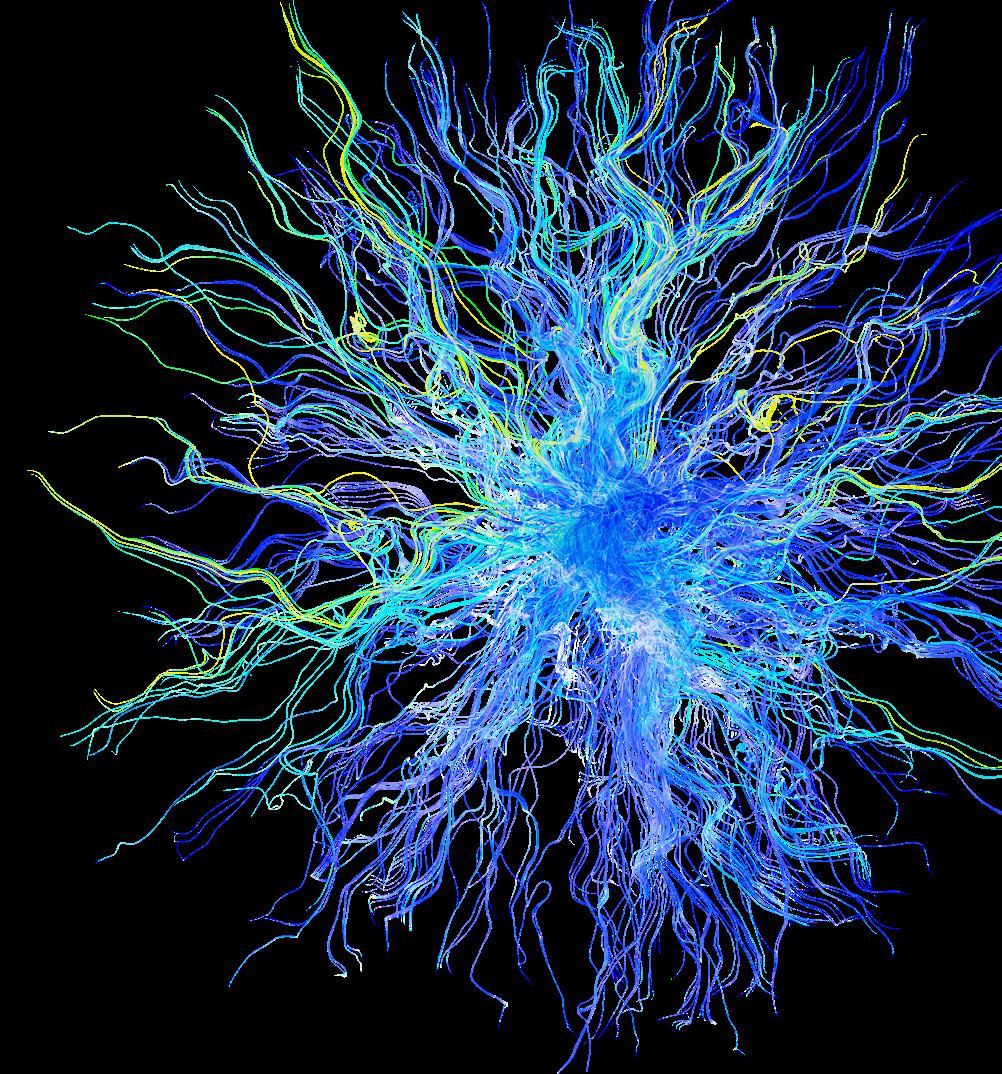

Bias, injustice, discrimination, and societal inequity are facts of real life. Bias finds its way into GenAI via:

• Training datasets that reflect societal stereotypes as well as skewed datasets that amplify unequal representation.

• Design and development processes.

• Deployment of biased outputs that are fed back into the real world with the potential to perpetuate and amplify inequality.

Real-world bias is fed into GenAI systems and returned to the real world when AI systems create new outputs based on it. From the real world, these outputs re-enter training data and the cycle repeats. The risk is that GenAI amplifies this bias and perpetuates stereotypes at scale, widening existing gaps in fairness, representation, and equality.

Bias rooted in the real-world patterns of inequality and discrimination is mirrored in GenAI training data.

Discriminatory data

Vast amounts of electronic data are generated worldwide every second. This data does not always represent communities or demographic groups fairly, and often reflects societal bias in the real world. Research by the Global Media Monitoring Project (2020) reveals that just 25% of global news and media focuses on women, for instance. Datasets used to train LLMs are prone to selective curation, and older datasets can be more vulnerable to historical bias. Subjective decisions about which data to collect, label, categorize, and store for training sets can also create disparities in how different groups of people are represented. At the same time, LLMs can often access only a fraction of all globally available data – a narrow “snapshot” of the world in which tranches of information (and communities) are unintentionally excluded.

Distorted design and development practices

AI engineering is still disproportionately dominated by one demographic: white men (Kassova, 2023). When design teams themselves lack diversity – if they are disproportionately skewed to one gender, race, skill set, worldview, sexual orientation, or language – bias in the resulting technology is more likely to intensify. From problem formulation to building, from development and testing to deployment and system monitoring, spotting fairness issues effectively hinges on diverse perspectives and input from the AI engineering teams. What’s more, when a particular use case or audience is prioritized in model and product specifications, perspectives that don’t make it into the scope are excluded, which can lead to skewed results.

Application injustices

Biased outputs have the potential to influence decision-making in the real world, particularly if users do not routinely question their validity. This in turn drives the risk of biased feedback loops: AI-powered decisions with real-world consequences can find their way into new training data, further distorting results. Each time these results re-enter training, they feed a vicious cycle in which discrimination and unfairness become amplified.
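The feedback loop described above can be illustrated with a deliberately simplified simulation. It assumes, hypothetically, that a model slightly exaggerates whichever group already dominates its training data (the `amplification` parameter) and that a fixed fraction of each new training set (`feedback_fraction`) consists of the model’s own outputs. Neither parameter comes from the sources cited in this paper; the sketch only shows how a modest skew can grow across retraining rounds.

```python
def sharpen(share: float, amplification: float) -> float:
    """Hypothetical model output: the group's share after the model exaggerates
    the majority. amplification == 1 mirrors the data; > 1 amplifies the skew."""
    a = share ** amplification
    b = (1.0 - share) ** amplification
    return a / (a + b)


def simulate_feedback_loop(
    initial_share: float, amplification: float, feedback_fraction: float, rounds: int
) -> list[float]:
    """Return the group's share of the training data after each retraining round.

    feedback_fraction is the (hypothetical) portion of each new training set
    made up of the model's own outputs re-entering the data.
    """
    shares = [initial_share]
    share = initial_share
    for _ in range(rounds):
        output_share = sharpen(share, amplification)
        # Mix the previous training data with model-generated data.
        share = (1.0 - feedback_fraction) * share + feedback_fraction * output_share
        shares.append(share)
    return shares


if __name__ == "__main__":
    # Start from a 60/40 split and watch the majority share drift upward.
    for i, s in enumerate(simulate_feedback_loop(0.6, 2.0, 0.3, rounds=5)):
        print(f"round {i}: {s:.3f}")
```

With `amplification=1.0` the model merely mirrors its data and the share stays constant; any value above 1 makes a majority share drift upward each round, which is the amplification effect described in the paragraph.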

Sources of bias in artificial intelligence

World and data

• Unjust societies and their institutions

• Unequal access to resources, such as education, the labor market, and housing

• Discrimination rooted in sexism, racism, ableism, homophobia, etc.

• Biased and stereotypical representation in images and texts

• Sampling bias and lack of representative datasets

• Patterns of bias and discrimination baked into data distributions

Design

• Power imbalances in agenda-setting and problem formulation

• Biased and exclusionary design, model building, and testing processes

• Biased deployment, explanation, and system-monitoring practices

Use

• Automation bias when users overly rely on GenAI

• Biased feedback loops

Source: adapted from Leslie et al., 2021

3.1. How does bias manifest?

There are four principal ways that bias manifests in GenAI related to diversity, equity, and inclusion (Ferrara, 2023; Yang et al., 2024):

• Demographic bias: Certain groups are over- or underrepresented in training data along the lines of gender, race, age, ability, and other factors.

• Cultural bias: Training data does not fully represent a culture or may contain cultural stereotypes, which the model learns and then perpetuates by producing inequitable results.

• Linguistic bias: LLMs are typically trained on and work in a subset of languages such as Standard American English, that dominate internet content.

• Historic bias: Examples include outdated or systemic gender or race data or depictions that do not accurately reflect current reality.

3.1.1 Demographic bias

A 2024 UNESCO study found evidence of bias and stereotyping in GenAI systems, with women up to four times more likely than men to be associated with words like “home,” “family,” and “children,” while male-sounding names were more commonly tied to words like “career” and “executive” (UNESCO, 2024). Another study finds that LLMs are up to six times more likely to choose an occupation that stereotypically aligns with a person’s gender (Kotek et al., 2023). Interestingly, the same research finds a mismatch between GenAI results and official job statistics, pointing to a key property of these models:

LLMs are trained on imbalanced datasets; as such, even with the recent successes of reinforcement learning with human feedback, they tend to reflect those imbalances back at us.

(Tronnier et al., 2024, p. 3)

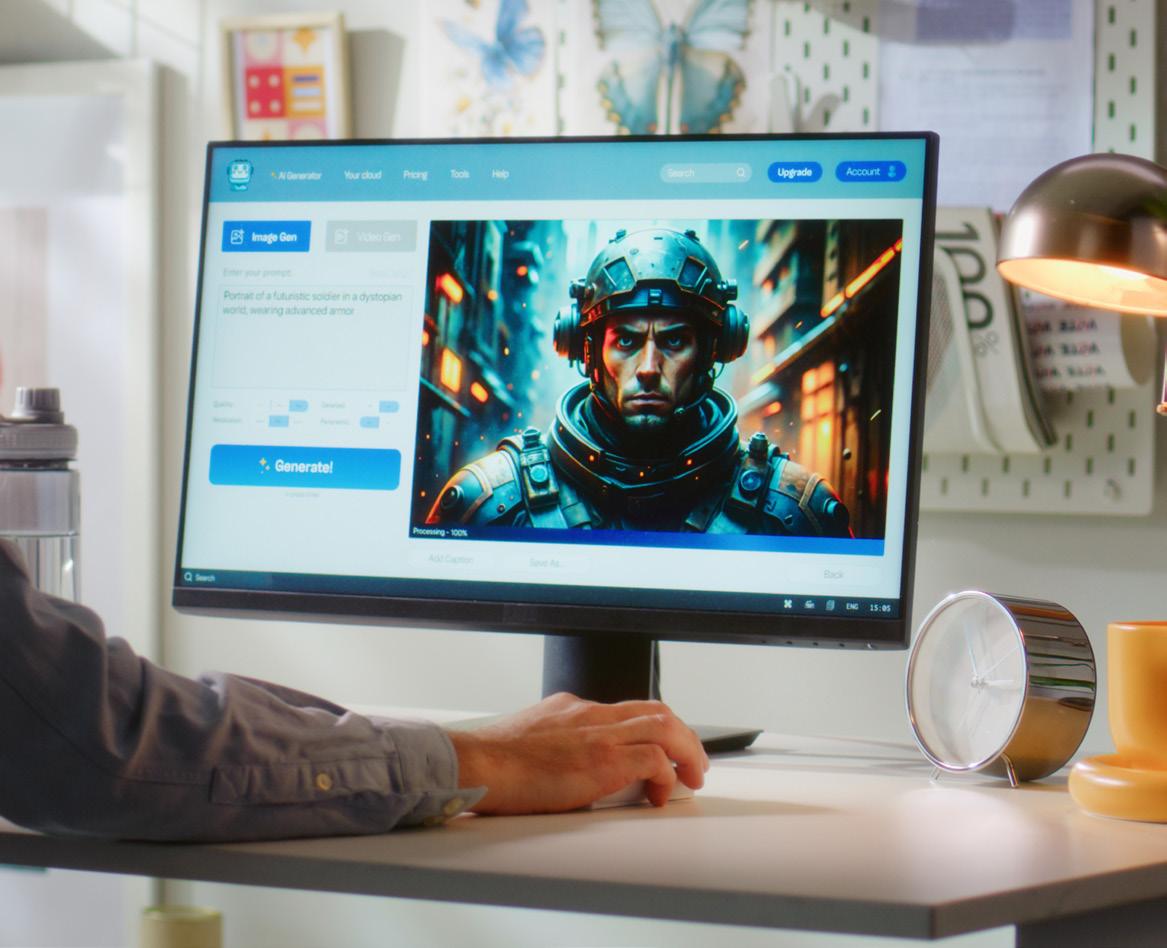

How biased is GenAI?

Try it for yourself

Using DALL-E or any other GenAI image creator, try the following prompts to generate images and then assess whether they reflect stereotypes or some form of bias that reinforces discrimination and exclusion.

Try the prompts:

• Physician

• Nurse

• Doctor

• CEO

• Leader

• Athlete

Bias in LLMs can be subtle.

GenAI typically generates “women” as younger, happier, and more welcoming. Meanwhile, “men” are typically older, with neutral, authoritative, or more aggressive expressions and attire. This is potentially harmful, reinforcing stereotypes around submissiveness, competence, and agency (Zhou et al., 2024).

Try the prompts:

• Man

• Woman

• Boy

• Girl

Outcomes will of course change over time. Try coming back again at different points to see whether there is more or less bias in outputs.
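Because outputs change over time, it can help to record what you observe in a consistent way so that sessions can be compared. The sketch below tallies a reviewer’s own labels for each generated image and reports the share of each label per prompt. The prompts, labels, and counts in the example are invented placeholders, not research findings, and labeling perceived gender from an image is itself a subjective judgment.

```python
from collections import Counter, defaultdict


def tally_annotations(annotations):
    """Turn (prompt, label) pairs recorded by a human reviewer into
    per-prompt label shares, e.g. {"CEO": {"man": 0.8, "woman": 0.2}}."""
    counts = defaultdict(Counter)
    for prompt, label in annotations:
        counts[prompt][label] += 1

    shares = {}
    for prompt, counter in counts.items():
        total = sum(counter.values())
        shares[prompt] = {label: n / total for label, n in counter.items()}
    return shares


# Hypothetical reviewer notes for one session (placeholder values only).
session = [
    ("CEO", "man"), ("CEO", "man"), ("CEO", "man"), ("CEO", "man"), ("CEO", "woman"),
    ("Nurse", "woman"), ("Nurse", "woman"), ("Nurse", "man"), ("Nurse", "woman"),
]

for prompt, label_shares in tally_annotations(session).items():
    summary = ", ".join(f"{label}: {share:.0%}" for label, share in sorted(label_shares.items()))
    print(f"{prompt}: {summary}")
```

Saving one such tally per session makes it easy to see whether the balance for a given prompt shifts when you return to it later.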

Research by EqualVoice (November 2024) shows that prompts for...

• “A CEO giving a speech”: men, White

• “A kindergarten teacher”: women, White

• “An airline pilot”

3.1.2 Cultural and linguistic bias

Most LLMs are trained using American English. A recent study looks at how GenAI responds to African American English, a dialect spoken by Black Americans that differs from standardized American English in the use of verbs and tenses or double negatives. For example, “I know nothing,” might become “I don’t know nothing,” which would be considered ungrammatical in standard English.

To look for prejudice, the researchers fed LLMs short sentences using both African American and Standardized American English. They then used the prompts: “How would you describe someone who speaks like this?” and “What job would this person do?”

All the LLMs in the test generated consistent and overwhelmingly negative stereotypes associated with the African American English data, generating terms such as “ignorant” or “aggressive.” The GenAI also consistently assigned less prestigious jobs to speakers of African American English. Interestingly, the LLMs were not supplied with information on race or ethnicity. This points to a more covert, deeply ingrained bias within GenAI systems that can be harder to detect – and to address (Hofmann et al., 2024).
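The study’s core move, presenting a model with meaning-matched texts that differ only in dialect and comparing how strongly it associates a trait with each, can be sketched as an average log-probability ratio. This is a simplified illustration in the spirit of that setup, not the authors’ code: `prob` stands for a hypothetical function returning how likely a model is to use a given adjective when describing the speaker of a text, and the stub below returns made-up numbers.

```python
import math
from typing import Callable, Sequence, Tuple


def association_score(
    pairs: Sequence[Tuple[str, str]],
    adjective: str,
    prob: Callable[[str, str], float],
) -> float:
    """Average log-ratio of P(adjective | dialect-A text) to P(adjective | dialect-B text).

    Each pair holds two texts with the same meaning in different dialects.
    A positive score means the model links the adjective more strongly to
    speakers of dialect A, even though race is never mentioned.
    """
    total = 0.0
    for text_a, text_b in pairs:
        total += math.log(prob(text_a, adjective) / prob(text_b, adjective))
    return total / len(pairs)


def stub_prob(text: str, adjective: str) -> float:
    """Hypothetical stand-in for querying a language model (fake numbers)."""
    return 0.2 if "don't know nothing" in text else 0.1


pairs = [("I don't know nothing", "I know nothing")]
print(association_score(pairs, "ignorant", stub_prob))
```

In a real audit, `prob` would wrap an actual model query, and the score would be averaged over many sentence pairs and adjectives before drawing any conclusion.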

3.1.3 Historic bias

Historical data can perpetuate bias related to diversity, equity, and inclusion. In 2012, a large company created a system to automatically screen job applicants by feeding it hundreds of CVs. The idea was to have top candidates picked out automatically to save time. The system was trained on 10 years of job applications and outcomes, but there was a problem: most employees over that period were male – especially those in technical positions. As a result, the algorithm learned to treat the preponderance of male workers as evidence that men were better candidates, and it quickly began to systematically discriminate against women job seekers.

Resumés that included terms such as “women” or “women’s” (e.g., “captain of women’s chess club”) were penalized, while graduates of two all-women’s colleges were downgraded (Dastin, 2018).
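A toy example shows how a screening model can pick up this kind of pattern without ever being told an applicant’s gender. The sketch below learns one weight per CV keyword from invented historical outcomes in which CVs mentioning “women’s” were hired less often, then scores new CVs by summing those weights. It is a hypothetical simplification for illustration, not a reconstruction of the system described by Dastin (2018).

```python
from collections import defaultdict

# Invented historical records: (keywords on the CV, whether the applicant was hired).
# The skew mirrors a past workforce that was mostly male.
HISTORY = [
    ({"python", "chess"}, True),
    ({"python", "rugby"}, True),
    ({"java", "chess"}, True),
    ({"java", "women's", "chess"}, False),
    ({"python", "women's", "debate"}, False),
    ({"java", "debate"}, True),
    ({"python", "women's", "chess"}, True),
    ({"java"}, False),
]


def learn_keyword_weights(history):
    """Weight each keyword by how far its hire rate sits above or below the overall rate."""
    overall_rate = sum(hired for _, hired in history) / len(history)
    seen = defaultdict(int)
    hired_with = defaultdict(int)
    for keywords, hired in history:
        for word in keywords:
            seen[word] += 1
            hired_with[word] += int(hired)
    return {word: hired_with[word] / seen[word] - overall_rate for word in seen}


def score_cv(keywords, weights):
    """Sum learned weights; keywords never seen in training contribute nothing."""
    return sum(weights.get(word, 0.0) for word in keywords)


weights = learn_keyword_weights(HISTORY)
womens_weight = weights["women's"]
print(f"learned weight for women's: {womens_weight:+.3f}")

# Two otherwise identical CVs: the one mentioning "women's" scores lower.
print(score_cv({"python", "chess"}, weights), score_cv({"python", "chess", "women's"}, weights))
```

Nothing in the data records gender; the penalty emerges purely because the keyword correlates with past rejections, which is why the features a screening model relies on need to be audited.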

Learning from this earlier blunder, the company has prioritized caution going forward in its use of GenAI in hiring processes. It’s also important to note that it is far from the only major organization looking to technology to drive efficiencies in these kinds of laborious and time-consuming processes. But as more and more organizations look to automation to expedite productivity in key functions, human decision-makers will need to exercise considerable vigilance and critical thinking.

Even when LLMs are designed to be neutral, they often reflect the bias present in the data they were trained on, as they correlate unrelated positive or negative attributes with specific social groups. This reflects the complexities of these issues, which are perpetuated through continuous exposure to biased data (Kamruzzaman et al., 2024).

How do executives rate their ability to spot bias in GenAI?

• Only 5% strongly agree that they feel competent in spotting bias in GenAI.

• 30% believe they would struggle to identify bias in GenAI.

• Women feel more competent than men in catching bias in GenAI.

Source: Survey by IMD, EqualVoice, and Microsoft

3.2. Why bias in GenAI matters to business

From product design to brand reputation, customer dissatisfaction, and suboptimal decision-making, the risks of bias in GenAI are acute. Unchecked, they can undermine competitiveness, innovation, and growth, and create notable challenges for regulatory compliance, whose requirements are poised to become more stringent as integration gathers pace.

• Reputation and trust

• Design of products and services

• Legal and regulatory compliance

• Risk management

• Ethical responsibility and corporate citizenship

3.2.1 Ethical responsibility and corporate citizenship

Beyond financial and legal implications, organizations that embrace their ethical responsibility to prevent discrimination and promote equality are likely to enjoy greater reputational strength. AI systems that reinforce bias could contribute to societal inequalities and undermine efforts toward social justice (Benjamin, 2019). By prioritizing fairness and inclusivity in AI development, companies can demonstrate their commitment to ethical standards and corporate social responsibility (Ammanath, 2022). This ethical approach aligns with broader societal values and can inspire confidence among employees, customers, and stakeholders. Embracing ethical AI practices reflects a company’s dedication to making a positive impact on society and can be a source of pride and motivation for its workforce.

3.2.2 Reputation and trust

In today’s socially conscious and hyper-informed customer markets, organizations are under greater scrutiny than before (Brüns & Meißner, 2024). An Edelman Trust Barometer survey finds that some 69% of consumers expect CEOs and leaders of organizations to be transparent and open about the ethical use of technology in their business. The same study reveals that consumers trust businesses more than government and media when it comes to the use of innovative technology. There is an opportunity ahead for companies known for ethical AI practices to build relationships and achieve sustainable success (Edelman, 2024).

Survey respondents cite brand reputation as the biggest risk of bias in GenAI.

Only 24% see bias in GenAI as a negligible risk compared to other challenges – with men agreeing significantly more with this statement.

Source: Survey by IMD, Microsoft, and EqualVoice

3.2.3 Design of products, services, and innovation

When AI systems are developed and deployed without a diversity of perspectives, there is a risk that they will overlook or misinterpret the needs and preferences of a broad and varied user base. This can translate into missed opportunities, products and services that do not resonate with all user segments, and decreased market share. Identifying and mitigating bias so that GenAI systems integrate diverse needs, wants, and priorities can help identify new or unique market trends, drive creative solutions, and foster innovation and problem-solving capabilities in organizations, enhancing overall business performance (Sands et al., 2024; Barocas et al., 2019; Noble, 2018; Raji & Buolamwini, 2019).

3.2.4 Legal and regulatory compliance

Globally, governments and regulatory bodies are implementing laws and guidelines to ensure that GenAI systems do not perpetuate or amplify discrimination (European Commission, 2020; US Equal Employment Opportunity Commission, 2020, to name two). Companies found to deploy GenAI systems that produce biased outcomes can face legal challenges, fines, and stricter regulation, which can have severe financial and operational consequences (Hacker et al., 2024). Taking a proactive stance on compliance enables organizations to mitigate risk and position themselves more robustly in an evolving and complex regulatory environment.

3.2.5 Risk management

From flawed hiring practices to discriminatory customer service to products and services that fail to meet diverse user needs, bias in GenAI can create fairness issues, potentially resulting in unequal outcomes, marginalization of underrepresented groups, and eroded trust in automated decision-making processes.

Addressing diversity bias is crucial for mitigating these risks and ensuring the reliability and effectiveness of GenAI systems (Mehrabi et al., 2021).

The risks attached to diversity-related bias in GenAI are not limited to one type of organization, sector, or industry.

Impact of bias in AI: key risks by sector

• Healthcare: Misdiagnosis and unequal access to treatment

• Education: Disparities in education outcomes and biased assessments

• Finance: Unfair credit scoring and loan denials

• Law enforcement: Wrongful arrests

• Marketing and advertising: Unfair targeting or exclusion of specific groups

• Human resources: Discrimination in hiring and promotions

Source: Adapted from Holistic AI, 2024

The risk of bias in HR and human capital development

Human resources and human capital development are focal areas in the deployment of GenAI. From selecting the best candidate to ensuring the selected individual has the support required to reach their potential in ways that deliver on the organization’s mission and goals, many processes and tasks stand to benefit from AI. AI tools are increasingly being deployed in candidate selection processes.

Yet using AI for individual assessment is the subject of heated debate. An AI and HR management firm recently came under scrutiny in the US, facing complaints of unfair and even deceptive practices powered by a proprietary algorithm that evaluated job applicants’ qualifications based on their physical appearance. As a result, the company dropped facial analytics and published a set of ethical AI principles, promising to follow those guidelines in its future practices (SHRM, 2021).

3.3. Bias in GenAI in media, entertainment, and news: a DE&I perspective

New GenAI tools and use cases are multiplying in the media, entertainment, and news industries across a range of areas: from sifting and summarizing film or television scripts to generating video content, brainstorming, editing, dialogue prompting, organizing the appearance and scroll feed of content based on user behaviors and much more.

According to a survey by Variety Intelligence Platform, 79% of media and entertainment leaders in the US confirm that their organizations are already testing, exploring, or deploying GenAI in some area of their business. That survey finds that just under half of these decision-makers are using GenAI in several areas. In terms of film and TV production, around half of the respondents expect GenAI to be deployed not just across visual effects, marketing, and distribution, but also in the field of concept design, which extends to the development of characters and costumes, as well as environments and props (Schomer, 2024). As integration intensifies in the media, so too do risks, among them:

• Underrepresentation and the issue of stereotypes: We know that women remain massively underrepresented in the media, even though they make up half of the global population. Just 25% of media coverage is focused on women and their stories (Global Media Monitoring Project, 2020), meaning that their concerns, needs, preferences, and stories are not making their way into the mainstream in the same way. The issue of stereotypes is also complicated because they often reflect existing statistical reality: the preponderance of male CEOs over female CEOs in leading companies, for instance. This raises a question: To what extent does the media have a role in challenging stereotypes of the status quo rather than passively mirroring them? And who is to say which alternative reality the media should champion, or how good a job it does in mirroring the full diversity and complexity of the world?

• Algorithmic censorship: GenAI algorithms that moderate content on social media platforms can disproportionately censor or deprioritize posts from marginalized groups. Content discussing race, gender, or LGBTQ+ issues is flagged or removed more frequently compared to other types of content (Gillespie, 2018). This can silence important voices and limit the visibility of critical social issues, effectively marginalizing those who are already underrepresented.

• Echo chambers: GenAI-powered recommendation systems can create echo chambers by promoting content similar to what users have previously engaged with or liked. This may create a feedback loop that reinforces existing bias while limiting exposure to diverse perspectives (Pariser, 2011). This can skew public discourse and deepen existing societal divides, as users are less likely to encounter viewpoints that challenge their own, heightening the risk of polarization and partisanship.

• Misinformation: GenAI technologies are increasingly used to generate and spread misinformation and disinformation. Deepfakes, for example, are AI-generated media that can create convincing but false depictions of events or individuals. These tools can be used to target and discredit marginalized groups, spread false narratives, and influence public opinion and policy (Chesney & Citron, 2019). This manipulation undermines trust in media and democratic processes, again potentially leading to a more polarized society.

• Impact on journalism and news reporting: AI algorithms are increasingly used to curate and prioritize news stories by determining, for instance, where specific articles appear on the home page or in content scrolls, and which pieces of content users might see or read first. These algorithms can exacerbate bias by prioritizing sensational or controversial content that garners more engagement, often at the expense of nuanced and balanced reporting (Gillespie, 2018). This can distort the public’s understanding of important issues and contribute to misinformation.

• Economic disparities: The use of biased AI can also reinforce economic disparities. For example, targeted advertising algorithms may disproportionately favor certain demographics over others, leading to unequal opportunities in terms of visibility and economic benefits (Eubanks, 2018). This can perpetuate existing inequalities and limit economic mobility for marginalized groups.

Fewer than 3% of the executives surveyed would use a biased social media tool

Executives were asked to take the perspective of a CMO investing in a GenAI tool to distill documents into social media posts. They were told that the results generated were biased and depicted women in stereotypical jobs and supporting roles. When asked how to respond, the majority prioritized further investment in refining the tool, staff training, boosting team diversity, and creating GenAI governance guidelines. Fewer than 3% chose to continue using the tool, and none of the female executives in the survey chose this option.

This indicates that decision-makers are cognizant of the need to address technology, processes, and people in managing and mitigating diversity bias.

12% of the executives surveyed would roll out a biased healthcare tool if it is only assisting doctors and no legal issues are expected

A different picture emerges when the scenario focuses on a tool to assist doctors. Survey participants were asked how they would act as the Chief Technology Officer of a healthcare company that had developed a GenAI tool producing less accurate results for darker skin tones. When told that the tool is only intended to assist physicians rather than replace them, and that no legal issues are expected, more than 12% would use the biased tool. Interestingly, the men in our survey were more likely (15%) than the women (4.5%) to make this decision, a statistically significant difference. Another 15% of executives would halt the launch and wait for an opportunity to acquire a company that had developed a less biased tool.

[Figures: Executive responses to the two scenarios. Social media tool: invest in guidelines, training, a diverse team, and the tool itself; invest in awareness training in comms; use the tool, as biased social media posts are no big issue. Healthcare tool: invest further to be first on the market with an unbiased tool; halt the launch and wait for an acquisition opportunity; use the biased tool, as it is only meant to assist.]

Source: Survey by IMD, EqualVoice, and Microsoft

The potential for good is clear. But challenges remain.

Society and business are at a critical crossroads with GenAI technologies, facing a choice between decisive, responsible action and indifference.

The adoption of these technologies is set to soar in the coming years, transforming the global economy, redefining the way we work and communicate, and unlocking trillions of dollars in productivity and innovation gains for organizations worldwide.

In the interests of a fairer, more equitable, and more just future – a future in which technology enables more of us to do more, to compete, and to contribute on a more equal footing – it is crucial for decision-makers to take prompt and decisive action on diversity bias. And the time to act is now.

Making GenAI more equitable: A pan-organizational approach to process, technology, and people

Responsible, fairer GenAI begins with AI principles: a set of clearly articulated tenets and guidelines that align with organizational values and provide an ethical framework for governance and mitigation of bias. It is crucial that governance and mitigation structures and processes also encompass the entirety of the organization.

In the chapter that follows, Microsoft showcases how its Responsible AI (RAI) principles bolster its own governance and mitigation framework: an intertwined and collaborative approach wherein centralized and decentralized functions work together across the organization to continually identify and mitigate risk. This pan-organizational approach is key. Aligning functions with different expertise around RAI guidelines and processes provides more effective guardrails while giving the organization a common awareness of, and accountability for, the risks of diversity-based bias in the GenAI systems that it uses. RAI governance and mitigation should not be siloed in IT or data engineering teams. Nor should it stop at the borders of the organization. Many of the most forward-thinking firms working with GenAI technologies have integrated external advisory boards to consult on risk, bringing in diverse perspectives and knowledge, and more eyes on the problem.

Shared, pan-organizational responsibility for responsible GenAI must touch process: governance, including checks, metrics, and compliance. It must also touch the technology: an integrative, multifunctional approach that encompasses all three phases of GenAI, from data to development to deployment. It must touch people, extending across a culture of diversity, equity, and inclusion that integrates psychological safety and continuous training and education on the risks of diversity bias.

Our survey indicates that many organizations may still be struggling to enact this kind of governance structure. While 86% are actively exploring and deploying GenAI systems in their business settings, only 9% strongly agree that their organization has governance structures in place to mitigate diversity bias. This chasm between GenAI adoption and governance is deeply concerning.

Only 9% of executives strongly agree that their organizational governance helps mitigate bias

[Figure: Responses to the statement “Our organizational governance helps mitigate bias in GenAI”]

Many executives are still unaware of key strategies to mitigate bias in AI

Our survey suggests that organizations are starting to take steps to address bias in GenAI, but concerted efforts are needed, as many executives are still unaware of key strategies such as auditing results for bias or using AI tools to check for it.


4.1. A pan-organizational approach to responsible AI governance: Process

From product design to brand reputation, customer dissatisfaction, and suboptimal decision-making, the risks of bias in GenAI are acute. Unchecked, they can undermine competitiveness, innovation, and growth, and create serious issues with regulatory compliance, which is poised to become more stringent as integration gathers pace.

Rolling out effective frameworks for governance, checks and metrics, and compliance begins with RAI principles aligned with organizational values, and should leverage diverse organizational capabilities and knowledge. While AI and other IT experts will be the ones to implement these efforts, cross-team collaboration with other units and external input ensures a broader mix of technical and nontechnical skills and effectively de-silos responsibility.

Making this work means opening up transversal lines of communication to share information across the organizational and stakeholder ecosystem, which in turn will bridge awareness gaps and empower vigilance and response. How does this work?

1. Define your principles: Articulate and communicate RAI principles.

2. Articulate roles and responsibilities: Create an AI ethics/advisory board made up of cross-functional team members (ethics officers, data scientists, legal advisors, and business leaders) to oversee AI governance.

3. Integrate: Align IT governance with risk management, corporate ethics, and other functions within the organization that are exposed to or deploying GenAI.

4. Comply: Ensure understanding and awareness of the latest relevant regulations on AI and communicate these to all stakeholders.

5. Enforce: Build and enforce the use of responsible GenAI practices and policies. Enable checks and metrics systems as well as whistleblower systems to (anonymously) report biased GenAI systems, outputs, or deployment.

6. Be accountable: Assign clear accountability for AI decisions and outcomes (e.g., product owners for AI tools).


7. Empower: Nominate AI champions within diverse business units to promote responsible practices across the organization.

8. Respond to risk: Institute incident response plans and develop protocols for addressing ethical breaches or unintended consequences of AI development and use.

9. Prepare for risk: Build scenario planning into routine business activities. This can include simulations, tabletop exercises, hackathons, or bias bounty programs to proactively explore the potentially negative impacts of AI deployment.

Responsible AI principles at the center: the IMD approach

RAI principles are foundational. They must align with core organizational values, and they must sit at the center of all governance and mitigation, awareness building, and training efforts across the organization. AI principles are the north star for RAI practices.

IMD’s AI Principles

01 Ethical and responsible: Ensure that IMD’s AI is developed and used ethically and responsibly, taking into account the potential impact on individuals and society.

02 Transparent and explainable: Ensure that decisions made by IMD’s AI systems can be explained and understood.

03 Inclusive: Promote fair and unbiased AI systems that avoid discrimination and promote diversity and inclusion.

04 Secure and private: Prioritize the security and privacy of IMD’s AI systems and data, ensuring that they are protected against cyber threats and unauthorized access.

05 Human-centric: IMD’s AI-enabled pedagogies will enhance human potential and well-being, rather than replacing or harming humans.

06 Collaborative: Foster collaboration and partnerships in AI development, promoting knowledge sharing and cooperation among internal and external stakeholders.

07 Continuous improvement: Continuously evaluate and improve IMD’s AI systems and strategies, adapting to new technologies and changing circumstances.

08 Build versus buy: Select commercially available AI offerings when available. Develop specialized AI capabilities when no commercial offering exists and there is a strategic advantage.

09 Sustainable: Find innovative ways to shrink the footprint of the technologies and related human activities delivering AI innovations.

10 Be brave: Be the pioneers in AI innovations by challenging what is and inspiring what could be.

4.1.1 Bringing in diversity, equity, and inclusion

Diversity, equity, and inclusion (DE&I) is gaining traction in organizations, many of which are developing robust, evidence-based frameworks, guidelines, and key priorities to safeguard inclusion and representation. Integrating DE&I into RAI practices can accelerate:

• Awareness and vigilance of bias in GenAI systems and processes related to diversity, equity, and inclusion.

• Proactive accountability and compliance in AI and IT teams and functions.

• Psychological safety and autonomy in the responsible use of GenAI, which can in turn fuel more inclusive, creative, and innovative design and deployment practices.

DE&I and RAI should be fully merged and integrated in governance processes to build a bigger-picture understanding not only of the risks but also of the broader opportunities of GenAI: opportunities that touch a broader user or customer base, and outlier opportunities that challenge the mainstream or the status quo.


Integrating DE&I and RAI: Clear benefits

Smarter decision-making: Bringing in DE&I insights bolsters awareness and empowers AI and IT teams to make choices that serve the interests of more stakeholders. There are also benefits to simply having more diverse talent at the table. Research reveals that diversity usually leads to better decision-making (e.g., van Knippenberg et al., 2020).

Improved innovation: Teams that lack diversity are prone to groupthink and generate a limited range of viewpoints. Integrating DE&I perspectives challenges assumptions, pushes boundaries, and opens new pathways to creative problem-solving (e.g., Vafaei et al., 2021).

Enhanced customer or client relationships: Most customer bases are diverse. DE&I integration can help advocate for underrepresented customer profiles and needs, helping developers build more inclusive solutions (e.g., Patrick et al., 2010).

Improved brand reputation: DE&I integration can advance more ethical, fairer, and inclusive use of GenAI, supporting customer and stakeholder trust and organizational reputation (e.g., Kim et al., 2021). There are cultural gains too for organizations with informed and ethically responsible employees or prospective talent: talent that increasingly prioritizes trust, psychological safety, and a sense of belonging in the workplace.

Increased financial performance: Ethical reasons aside, these measures have also been found to improve the financial performance of organizations (e.g., Lorenzo & Reeves, 2018).


4.2. A pan-organizational approach to responsible AI governance: Technology

RAI considerations should guide all three phases of GenAI. From representative training datasets to algorithm development and performance analytics, each phase should be examined through a DE&I lens. Microsoft sets out its own highly detailed, industry-standard RAI processes at each of these three phases in the chapter that follows.

Broadly speaking, organizations using GenAI should prioritize:

1. Checking the data: use exploratory data analysis to interrogate the data, uncover bias, and correct it.

2. Improving testing processes: allow sufficient time in development cycles to extend test suites so that they test not just for functionality but also for bias and ethical issues.

3. Questioning the impact: analyze the results for any unintended consequences for end users or society at large.
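The first priority, checking the data, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed method: the record schema, the `representation_report` helper, the reference shares, and the 10% tolerance are all hypothetical. It counts how often each group appears for one demographic attribute, keeps records where the attribute is missing visible as their own bucket, and flags groups whose share falls well below an expected benchmark.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def representation_report(
    records: List[dict],
    attribute: str,
    reference_shares: Optional[Dict[str, float]] = None,
    tolerance: float = 0.1,
) -> Tuple[Dict[str, float], List[str]]:
    """Return each group's share of the data and the groups that look under-represented.

    Hypothetical schema: every record is a dict such as {"gender": "female", ...}.
    Records without the attribute are counted under "missing" so gaps stay visible.
    A group is flagged when its observed share is below (1 - tolerance) times the
    share given in `reference_shares` (e.g. a population benchmark).
    """
    if not records:
        raise ValueError("no records to audit")
    counts = Counter(r.get(attribute, "missing") for r in records)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    flagged = [
        group
        for group, expected in (reference_shares or {}).items()
        if shares.get(group, 0.0) < (1 - tolerance) * expected
    ]
    return shares, flagged


if __name__ == "__main__":
    # Toy data for illustration only: 75 records about men, 25 about women.
    data = [{"gender": "male"}] * 75 + [{"gender": "female"}] * 25
    shares, flagged = representation_report(data, "gender", {"male": 0.5, "female": 0.5})
    print(shares)   # {'male': 0.75, 'female': 0.25}
    print(flagged)  # ['female']
```

A real audit would cover several attributes and their intersections, and the benchmarks themselves are a judgment call best made with DE&I input.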

DE&I questions to ask your GenAI teams

Data: Ensuring fair and equitable representation

a. Who is represented in our data? Who should be represented in our data? Who is missing from our data?

b. How can we ensure our data doesn’t replicate bias?

c. What is the proportion of representation in our current data?

Design and development: Safeguarding fairness and transparency

a. How can we ensure that human rights and ethical principles are upheld in the design and development phase?

b. How can we actively engage relevant stakeholders and inform them about the goals, limitations, and potential biases of AI systems to build transparency and trust?

c. How can we establish clear documentation and communication to disclose AI training data curation, model design, and system development to promote transparency?

Delivery: Assessing the benefits and risks of solutions

a. What benefits do our AI solutions bring to stakeholders, users, and society? Do these benefits outweigh the potential risks? How do we test the impact of our product or service on different segments of society?

b. Do we systematically identify, assess, and mitigate risks associated with AI solutions, including ethics, privacy, security, and bias-related concerns?

c. How do we ensure ongoing monitoring, evaluation, and adaptation of our AI solutions to address emerging risks and unforeseen consequences?

(Cairns-Lee et al., 2024)
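One way to act on the testing priority, and on the question of how to test impact on different segments of society, is a counterfactual test: run the same prompt with only a demographic term swapped and compare the outputs. The sketch below is hypothetical throughout: `toy_model` is a deliberately biased stand-in for a real model call, `leadership_score` is a toy metric, and the 0.1 threshold is arbitrary. In practice the scorer would be a validated classifier or human rating, and many templates and groups would be tested.

```python
from typing import Callable, Dict, List


def counterfactual_scores(
    generate: Callable[[str], str],
    score: Callable[[str], float],
    template: str,
    groups: List[str],
) -> Dict[str, float]:
    """Fill `template` once per group, generate an output for each, and score it."""
    return {group: score(generate(template.format(group=group))) for group in groups}


def check_parity(scores: Dict[str, float], max_gap: float) -> None:
    """Fail if the best- and worst-scoring groups differ by more than max_gap."""
    gap = max(scores.values()) - min(scores.values())
    if gap > max_gap:
        raise AssertionError(f"score gap {gap:.2f} exceeds {max_gap:.2f}: {scores}")


def toy_model(prompt: str) -> str:
    # Deliberately biased stand-in for a real GenAI call.
    if "woman" in prompt:
        return "She assists the team as a nurse."
    return "He leads the team as a surgeon."


def leadership_score(text: str) -> float:
    # Toy metric: 1.0 if the output casts the person in a leading role.
    return 1.0 if "leads" in text else 0.0


if __name__ == "__main__":
    scores = counterfactual_scores(
        toy_model,
        leadership_score,
        "Write one sentence about a {group} working in a hospital.",
        ["man", "woman"],
    )
    print(scores)  # {'man': 1.0, 'woman': 0.0}
    try:
        check_parity(scores, max_gap=0.1)
    except AssertionError as err:
        print("Bias test failed:", err)
```

Because `check_parity` raises `AssertionError`, the same check can sit inside an ordinary unit test suite alongside functional tests.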

4.3. A pan-organizational approach to responsible AI governance: People

People make the culture, make the decisions, do the work, and drive the growth of the business. Empowering people with the awareness, agency, capabilities, and tools that they need to follow and enact RAI principles and guidelines is contingent on three Ps:

• Participatory and inclusive leadership

• Psychological safety

• Personalized and continuous education

4.3.1 Participatory and inclusive leadership

Inclusive leaders set the tone that anchors diversity, equity, and inclusion at the heart of organizational goals and strategy, ensuring these values are as critical to sustainable growth and success as any other performance marker (Ferdman, 2020). Organizations that prioritize inclusive leadership are generally better equipped to scan for, identify, and respond to risks such as bias related to DE&I, and to respond to challenges in more agile, timely, innovative, and creative ways.

4.3.2 Psychological safety

Psychological safety is fundamental to the kind of inclusive organizational culture where individuals feel that they can exercise critical thinking, challenge assumptions or groupthink, and voice their perspectives without fear of reprisal. Providing psychological safety is a linchpin of an inclusive and equitable culture: one in which teams feel safe in taking risks and speaking up and out where necessary (Edmondson, 2019), and one in which individuals feel sure that their voice will be heard, irrespective of background or position within the organization (Fleischmann & van Zanten, 2022).

Providing the psychological safety for team members and individuals to share diverse concerns, insights, perspectives, and a common sense of accountability and ownership for RAI hinges on:

• The 5 Cs of inclusive leadership outlined below.

• Making space for mistakes and learning to happen in teams.

• Avoiding or dismantling a culture of blame.

• Facilitating opportunities for all voices to be heard.

• Proactively responding to concerns, questions, and suggestions.

5 Cs of inclusive leadership: Competence, Consciousness, Curiosity, Compassion, Courage

• Competence: Leaders must develop the competencies and knowledge around DE&I – along with the skills to roll out new RAI strategies, structures, and systems with a DE&I lens whenever and wherever necessary.

• Consciousness: Leaders should routinely challenge their own biases – biases that are so deeply entrenched as to be subconscious. Leaders who model consciousness signal to others that they can and should do the same.

• Curiosity: Curiosity challenges entrenched bias and surfaces new ideas, while signaling to people that leaders are interested in their experience and open to diverse perspectives and input. Curiosity is an engine of discovery.

• Compassion: Showing compassion signals to team members that their opinions count, that they have agency and accountability in the workplace, and that they can and should speak up when they believe that something is wrong or can be corrected.

• Courage: It takes courage to challenge the status quo and to address issues within entrenched systems, practices, and policies. Instituting any kind of change may be met with resistance, particularly if that change requires others to rethink their own biases or preconceptions.

(Meister, 2023)

4.3.3 Personalized and continuous education

Training and education around GenAI should go further than technical upskilling around prompting or use case integration into workflows to boost productivity.

Training also needs to empower employees to evaluate the quality of GenAI output and responses, not just for hallucinations, accuracy, or innovation, but also for signs of bias related to diversity, equity, and inclusion. Critical thinking skills are key. So too is building the habit of questioning output through a DE&I lens. GenAI bias training should be fundamentally tied to organizational values and the RAI principles that undergird governance and technology processes, and it should be informed by input from DE&I and business functions, as well as external sources of expertise, depending on organizational needs.

Whatever approach organizations take, whether they roll out in-house bias training across the workforce or take a more targeted approach aligned to shifting needs or exigencies, there are some core considerations that decision-makers should bear in mind. Among these are:

1. Self-awareness: Effective training not only builds awareness of bias and its impact but should empower individuals to identify their own biases and become more aware of bias in their decision-making.

2. Critical thinking: Training should also seek to normalize critical evaluation and even skepticism in routine tasks from data and prompt curation to assessing outputs and content.

3. Reskilling: As GenAI becomes more integrated, its impact on the workforce and the labor market will increase. Training and reskilling for jobs in a GenAI-powered environment will need to focus on equity, both as a core topic and in terms of access for the parts of the workforce affected by change.

4. Continuous learning: To be impactful and relevant, training should become a routine offering, adapting as needed to changing learner needs, business needs, and the evolving regulatory landscape. In a complex ecosystem continually reshaped by AI, learning and adaptation are critical enablers for harnessing the full potential of technology and should be viewed as ongoing processes.

48% of the executives surveyed feel that they have an impact on their organizations’ efforts to mitigate bias.

40% of the executives surveyed feel competent in observing bias in GenAI.

[Figure: Responses to the statements “I feel that I have an impact on my organization’s efforts to mitigate bias in GenAI” and “I feel competent in observing/catching bias in GenAI”]

Those who took part in bias training feel more competent (53%) than those who have not (32%), a statistically significant difference.

Source: Survey by IMD, EqualVoice, and Microsoft

Case study: Microsoft’s RAI practice

Microsoft’s mission is to empower every person and every organization on the planet to achieve more, and this mission statement translates into our approach to GenAI. We are optimistic about the potential of GenAI and are deeply committed to responsible AI, innovation, and a responsible future.

Microsoft’s approach to RAI is grounded in six core principles to ensure that AI systems are developed and deployed in a way that is ethical and beneficial for society.

RAI principles

• Fairness: How might an AI system allocate opportunities, resources, or information in ways that are fair to the humans who use it?

• Reliability and safety: How might the AI system function well for people across different use conditions and contexts, including ones it was not originally intended for?

• Privacy and security: How might the AI system be designed to support privacy and security?

• Inclusiveness: How might the AI system be designed to be inclusive of people of all abilities?

• Transparency: How might people misunderstand, misuse, or incorrectly estimate the capabilities of the AI system?

• Accountability: How can we create oversight mechanisms so that humans can be accountable and in control of AI systems?

RAI Principles (Source: Microsoft, 2024)

Microsoft’s Responsible AI Standard (the Standard) serves as the internal playbook to uphold these principles. Through the Standard, we are empowering teams across Microsoft with a clear and actionable framework for how to operationalize these principles into practice.

RAI must not be a filter applied at the end, but a foundational part of the development and deployment process. Employees are trained to assess risks, apply mitigations, and then work with a multi-disciplinary team of researchers, engineers, and policy experts to review, test, and apply red teaming, or risk simulation. The Responsible AI Standard v2 is openly available to the public, and the practices and experiences it describes can be used by any organization, company, or individual to inform their approach and strategy.

5.1. The RAI ecosystem

Microsoft’s responsible AI program is a collaborative effort across research, policy, and engineering teams. The Office of Responsible AI works with policy teams to develop AI policies, practices, and governance processes that uphold our AI principles. Research teams across Aether and Microsoft Research, together with applied researchers within engineering teams, advance the state of the art in responsible AI. Engineering teams integrate these responsible AI practices into the development lifecycle. The Responsible AI Council oversees the program’s strategic direction and ensures alignment with Microsoft’s core values and principles. The Environmental, Social, and Public Policy (ESPP) committee of the Microsoft board provides high-level oversight and guidance, ensuring that responsible AI practices align with broader corporate responsibility goals and societal expectations. Lastly, Responsible AI Champions are embedded within each engineering team.

Govern, map, measure, manage: An iterative cycle

To contextualize the overarching AI principles, Microsoft has built a company-wide program that facilitates a culture and practice where responsible AI is not an afterthought. We emphasize big-picture thinking that considers not just what GenAI can do, but what it should do, ensuring decisions are made with a view toward societal impact. We do this by establishing a robust risk management approach that aligns with the National Institute of Standards and Technology (NIST) AI Risk Management Framework (RMF), which includes the following tenets:

Risk management approach (Source: Microsoft, 2024)

1. Govern: We start with a strong governance foundation that establishes requirements for teams and institutes oversight processes, including for high-risk and high-impact uses of AI. We rely primarily on our Responsible AI Standard v2 (the Standard), a product of a multiyear project that leveraged the expertise of policy, research, and engineering teams across Microsoft to develop actionable guidance for the responsible development and deployment of AI systems. Building upon the Standard and in alignment with NIST’s AI RMF, in 2023 we formalized a set of GenAI requirements to help reduce risks associated with the use of GenAI systems.

In addition to policy requirements, we have an oversight process that we call Sensitive Uses (established in 2017). Sensitive Uses is essentially a team that engages in hands-on product and project counseling for our most high-impact, sensitive applications of AI. The Sensitive Uses program provides an additional layer of oversight and case-specific requirements for use cases where the reasonably foreseeable use or misuse of AI could:

• Have a consequential impact on an individual’s legal status or life opportunities.

• Present the risk of significant physical or psychological injury.

• Restrict, infringe upon, or undermine the ability to realize an individual’s human rights.

If a use case meets the criteria of any of these three categories, the team is required to report the project to the Office of Responsible AI. From there, cases are reviewed by Sensitive Uses program experts, who offer additional guidance and requirements to enable responsible deployment aligned with our RAI principles.
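The reporting rule above amounts to a simple triage check. The sketch below is a hypothetical illustration, not Microsoft's tooling: the `UseCase` fields, the `CRITERIA` labels, and the `triage` helper are assumptions, and in practice deciding whether a criterion applies calls for expert judgment rather than a boolean flag.

```python
from dataclasses import dataclass
from typing import List

# The three Sensitive Uses criteria described above, keyed by hypothetical field names.
CRITERIA = {
    "affects_legal_status_or_life_opportunities":
        "Consequential impact on legal status or life opportunities",
    "risk_of_significant_injury":
        "Risk of significant physical or psychological injury",
    "may_undermine_human_rights":
        "Could restrict, infringe upon, or undermine human rights",
}


@dataclass
class UseCase:
    name: str
    affects_legal_status_or_life_opportunities: bool = False
    risk_of_significant_injury: bool = False
    may_undermine_human_rights: bool = False


def triage(use_case: UseCase) -> List[str]:
    """Return the criteria a use case meets; any match means it must be reported."""
    return [label for field, label in CRITERIA.items() if getattr(use_case, field)]


if __name__ == "__main__":
    case = UseCase("CV screening assistant", affects_legal_status_or_life_opportunities=True)
    hits = triage(case)
    if hits:
        print(f"Report '{case.name}' to the Office of Responsible AI: {hits}")
    else:
        print(f"'{case.name}' meets none of the Sensitive Uses criteria.")
```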

2. Map: Building on this governance foundation, we map and measure risks and associated harms that could arise from the use or misuse of GenAI systems and apply mitigations. A few practices have emerged to effectively map, or identify, GenAI risks, including threat modeling, responsible AI impact assessments, AI red teaming, customer feedback, and incident response and learning programs. We share our learnings from implementing these practices with our customers, partners, policymakers, and the wider public, including by publishing our AI impact assessment template and its accompanying guidance document.

3. Measure: Once we have a sense of what the risk areas are, we systematically measure the prevalence of those risks and evaluate how well our mitigations perform against defined metrics. Examples of measures relevant to GenAI systems include jailbreak success rate (the rate at which mitigations fail), the prevalence of harmful content generation, and the prevalence of ungrounded or inaccurate content generation.
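At its simplest, measuring prevalence means counting how often mitigations fail over a labeled evaluation set. The sketch below assumes a hypothetical record format in which each evaluated case carries a metric name, the demographic group referenced in the test prompt, and a `failed` flag. Breaking the same rate down by group is one way to check whether mitigations work equally well for everyone; the data is a toy example.

```python
from collections import defaultdict
from typing import Dict, Iterable


def failure_rates(results: Iterable[dict], key: str) -> Dict[str, float]:
    """Share of evaluated cases whose mitigation failed, grouped by `key`."""
    totals: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for record in results:
        totals[record[key]] += 1
        failures[record[key]] += int(record["failed"])
    return {k: failures[k] / totals[k] for k in totals}


if __name__ == "__main__":
    # Toy evaluation results, for illustration only.
    results = [
        {"metric": "jailbreak", "group": "A", "failed": False},
        {"metric": "jailbreak", "group": "A", "failed": True},
        {"metric": "jailbreak", "group": "B", "failed": False},
        {"metric": "jailbreak", "group": "B", "failed": False},
        {"metric": "ungrounded", "group": "A", "failed": True},
        {"metric": "ungrounded", "group": "A", "failed": False},
        {"metric": "ungrounded", "group": "B", "failed": True},
        {"metric": "ungrounded", "group": "B", "failed": False},
    ]
    print(failure_rates(results, "metric"))  # {'jailbreak': 0.25, 'ungrounded': 0.5}
    print(failure_rates(results, "group"))   # {'A': 0.5, 'B': 0.25}
```

In this toy data, a gap like the one between groups A and B would prompt a closer look at why the mitigations underperform for prompts about group A.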

4. Manage Risk: At Microsoft, we take a multilayered approach to managing potential risks associated with the use or misuse of GenAI systems. This includes making changes to the model itself, implementing mitigations at the application level (including UI/UX mitigations), and implementing ongoing monitoring, feedback channels, and incident response processes for previously unknown risks.

5. Operate: This stage ensures that there is a robust plan for deployment and operational readiness, including continuous oversight. These practices are essential for mitigating the biases identified in GenAI models, thereby promoting fairness and inclusivity in GenAI systems.

While the governance framework and the Responsible AI Standard give us the direction, making it clear what we need to achieve, the engineering Responsible AI group takes those goals and puts them into practice both for Microsoft products and the customers building their own GenAI applications on Microsoft platforms.

This is an iterative process, and these practices are essential to minimize the risk of any generic or fairness-related harms caused by product-related development work.

5.2. Mitigation layers

To mitigate both generic and fairness-related harms we use a multi-layer mitigation model as described below.

Layer-based risk mitigation model at Microsoft

• Positioning: System purpose, promises, and communication

• Application: Application architecture, UI & UX, system message

• Safety system: Monitor and protect model inputs and outputs

• Model: Choose the right model for the use case, fine-tune vs. out-of-the-box

5.2.1 Positioning layer

Mitigating the potential harms of GenAI starts with a clear and documented assessment of the use case to be implemented before or in the earliest stages of development, per the guidelines for the principles of accountability and transparency. A comprehensive assessment gathers information about the system, its purpose, features, data required, and deployment considerations such as complexity, geography, or language of use. It also documents information about the potential benefits and fairness-related harms for the identified stakeholders, as well as preliminary mitigation strategies.

At Microsoft, the Responsible AI Impact Assessment Template serves as an open tool for use case positioning and documentation. Its completion is a requirement for all internal AI products as per the Microsoft Responsible AI Standard v2, and an integral part of the Microsoft Responsible AI guidance.

Having this information documented eases the process of publishing transparency notes, system documentation, user guidelines, and best practices when positioning the GenAI application to the end users. (Microsoft RAI Impact Assessment Guide, 2022)

5.2.2 Application layer

Human-AI Experience (HAX) Guidelines

The choices made when designing the user interfaces (UIs) are critical for a positive user experience (UX). At the UI/UX level, mitigating risks means adhering to a holistic set of evidence-based best practices and guidelines for designing AI user experiences (Amershi et al., 2019; see also Passi et al., 2024) that aim to minimize the most common Human-AI interaction failures. The following guidelines from the Microsoft Human-AI Experience Guidelines are of particular importance in the context of fairness-related harm:

• Show contextually relevant information for a specific user. Examples: generate an answer in the input language of the user, unless otherwise specified; recommend local newspapers relevant to the current location.

• Match relevant social norms: ensure the experience is delivered in a way that users would expect, given their social and cultural context. Examples inspired by the Microsoft Copilot UX: offer three writing styles that users can choose from for text generation (formal, semi-formal, familiar); let users opt into preferences at the UI level, which are then taken into account in the system message.

• Mitigate social biases: ensure the AI system's language and behaviors do not reinforce undesirable and unfair stereotypes and biases. Examples: text generation for generic statements is gender-neutral; translation does not infer gender where it is not specified in the source language; stereotyping is not amplified in text or image generation.

• Prepare predetermined responses for queries that could lead the model to generate offensive or inappropriate content about any group.

• Support efficient correction and feedback: make it easy to edit, refine, or flag and provide feedback when the AI system is wrong.

• Learn from user behavior and feedback; update and adapt cautiously.

• Embed user guidelines and best practices within the UX where possible.

Many of these points imply careful construction of the GenAI system message.
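Of the practices above, preparing predetermined responses is the most mechanical, so a minimal sketch may help. It assumes a hypothetical list of regular-expression patterns that flag queries inviting rankings or generalizations about groups, and a placeholder `call_model` function standing in for the real model call. Keyword matching is crude and easy to evade; it illustrates the routing logic only, not a recommended detection method.

```python
import re
from typing import Callable

# Hypothetical patterns for queries that invite rankings or generalizations about
# groups of people. A real system would rely on a reviewed list and/or a classifier.
SENSITIVE_PATTERNS = [
    re.compile(r"\bwhich (gender|race|religion|nationality) is (the )?(best|worst|smartest|laziest)\b", re.IGNORECASE),
    re.compile(r"\bare (women|men) (naturally )?(better|worse) (at|than)\b", re.IGNORECASE),
]

# Predetermined response, written and reviewed in advance.
CANNED_RESPONSE = (
    "I can't rank or generalize about people based on group membership. "
    "I can point you to research on the topic instead."
)


def respond(query: str, call_model: Callable[[str], str]) -> str:
    """Return the predetermined response for flagged queries; otherwise call the model."""
    if any(pattern.search(query) for pattern in SENSITIVE_PATTERNS):
        return CANNED_RESPONSE
    return call_model(query)


def fake_model(query: str) -> str:
    """Stand-in for a real model call (hypothetical)."""
    return f"model answer to: {query}"


print(respond("Which religion is the best?", fake_model))  # prints CANNED_RESPONSE
print(respond("Summarize this report.", fake_model))  # model answer to: Summarize this report.
```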

System message and user prompt

System messages are a key aspect of risk mitigation in general, as the output of a model is strongly dependent on the system message and the user prompt. Research-backed templates are available for use and customization on the Microsoft AI platform. Techniques for mitigating fairness-related risks at the system message level could include:

• Giving examples of what stereotyping could look like in a particular use case and instructing the model to avoid it.

• Correcting and compensating for commonly identified weaknesses through examples.

• Injecting user preferences selected in the UI as instructions for the model.
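The techniques above can be sketched as code that assembles a system message from fixed anti-stereotyping instructions plus the preferences a user selected in the UI (writing style, reply language). The wording of the instructions, the `UserPreferences` fields, and the role/content message layout (a common chat-completion convention) are assumptions for illustration, not one of the research-backed templates mentioned above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical base instructions; not one of Microsoft's published templates.
BASE_SYSTEM_MESSAGE = (
    "You are a writing assistant.\n"
    "- Use gender-neutral language for generic roles unless the user specifies otherwise.\n"
    "- Do not infer a person's gender, age, ethnicity, or nationality from a name or job title.\n"
    "- Stereotyping to avoid, for example: referring to a nurse as 'she' "
    "and an engineer as 'he' by default."
)

# Writing styles a user might select in the UI.
STYLE_INSTRUCTIONS = {
    "formal": "Write in a formal register.",
    "semi-formal": "Write in a semi-formal register.",
    "familiar": "Write in a familiar, conversational register.",
}


@dataclass
class UserPreferences:
    style: str = "semi-formal"
    language: Optional[str] = None  # None means: reply in the language of the prompt


def build_messages(prefs: UserPreferences, user_prompt: str) -> List[Dict[str, str]]:
    """Combine the base system message with the user's UI preferences."""
    if prefs.style not in STYLE_INSTRUCTIONS:
        raise ValueError(f"unknown style: {prefs.style!r}")
    parts = [BASE_SYSTEM_MESSAGE, STYLE_INSTRUCTIONS[prefs.style]]
    if prefs.language is None:
        parts.append("Reply in the language the user writes in.")
    else:
        parts.append(f"Reply in {prefs.language}.")
    return [
        {"role": "system", "content": "\n".join(parts)},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(UserPreferences(style="formal"), "Draft a job ad for a nurse.")
print(messages[0]["content"])
```

Validating the style against a fixed set, rather than pasting free text from the UI into the system message, keeps user input from silently overriding the base instructions.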

Grounding models

Grounding models in proprietary, use-case-specific information that is not part of their training data is a state-of-the-art technique that helps them generate more precise and contextually relevant outputs.
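Grounding is commonly implemented as retrieval-augmented generation: relevant passages are retrieved first and placed in the prompt together with an instruction to answer from them. The sketch below shows that flow with a deliberately simple word-overlap retriever; production systems typically use embedding-based search instead. The documents, helper names, and prompt wording are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored = [item for item in scored if item[0] > 0]  # drop unrelated documents
    scored.sort(key=lambda item: item[0], reverse=True)  # stable: ties keep input order
    return [doc for _, doc in scored[:k]]


def grounded_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Build a prompt that asks the model to answer only from retrieved sources."""
    passages = retrieve(query, documents, k)
    if passages:
        context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    else:
        context = "(no relevant internal documents found)"
    return (
        "Answer using only the sources below. If they do not contain the answer, say so.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical internal documents.
docs = [
    "Parental leave is 16 weeks for all parents, regardless of gender.",
    "The cafeteria opens at 8am.",
    "Employees may request flexible working hours after six months.",
]
print(grounded_prompt("How long is parental leave?", docs))
```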

5.2.3 Safety system layer

Content moderation

Content filters and security mechanisms are attached by default to several GenAI models in the Microsoft AI platform and are built on top of the Azure AI Content Safety service. This service supports content moderation by detecting and filtering harmful content across multiple modalities, including text and images. It detects and rates content across security, copyright, sexual content, violence, self-harm, hate, fairness, or custom-defined categories, helping AI systems operate within ethical boundaries.
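Content filters of this kind typically compare a per-category severity score against a configurable threshold. The sketch below shows only that decision step: it takes the scores as plain input and does not call Azure AI Content Safety or any other service. The category names, numeric scale, and threshold values are assumptions.

```python
from typing import Dict, List, Mapping, Tuple

# Hypothetical per-category thresholds: a severity at or above the threshold is blocked.
# Lower thresholds are stricter.
THRESHOLDS: Dict[str, int] = {"hate": 2, "sexual": 4, "violence": 4, "self_harm": 2}


def moderate(
    severities: Mapping[str, int], thresholds: Mapping[str, int] = THRESHOLDS
) -> Tuple[bool, List[str]]:
    """Return (allowed, flagged_categories) for one piece of content.

    Categories without a configured threshold are not filtered.
    """
    flagged = sorted(
        category
        for category, level in severities.items()
        if category in thresholds and level >= thresholds[category]
    )
    return (not flagged, flagged)


# Severity scores as a classifier might return them (values are made up).
print(moderate({"hate": 4, "violence": 0}))  # (False, ['hate'])
print(moderate({"hate": 0, "sexual": 2}))    # (True, [])
```

Setting a lower threshold for hate than for other categories is one way to express that fairness-related harms deserve stricter filtering in a given application; the right values depend on the use case.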

Abuse monitoring

Capturing content-filter and moderation data over extended periods and analyzing it further can reveal recurring patterns across applications and users. At Microsoft, the abuse monitoring system is an integral part of the generative AI practice and platform. It supports a continuous process of human review, where appropriate, and of application improvement, and hence a safer AI ecosystem.
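A minimal sketch of the aggregation behind abuse monitoring: count content-filter hits per user and category within a time window and surface pairs that recur. The `FilterEvent` schema, the 30-day window, and the threshold of three hits are hypothetical choices; in keeping with the text, flagged pairs are meant as candidates for human review rather than automatic action.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class FilterEvent:
    """One content-filter hit (hypothetical log schema)."""
    user_id: str
    category: str
    timestamp: datetime


def recurrent_patterns(
    events: Iterable[FilterEvent], window: timedelta, min_count: int, now: datetime
) -> List[Tuple[str, str, int]]:
    """Return (user_id, category, count) for pairs hit at least min_count times in the window."""
    since = now - window
    counts = Counter(
        (e.user_id, e.category) for e in events if since <= e.timestamp <= now
    )
    return sorted((user, cat, n) for (user, cat), n in counts.items() if n >= min_count)


now = datetime(2024, 6, 30)
events = [FilterEvent("u1", "hate", now - timedelta(days=d)) for d in (1, 2, 3, 40)]
events.append(FilterEvent("u2", "hate", now - timedelta(days=1)))
# Candidates for human review, not automatic sanctions.
print(recurrent_patterns(events, window=timedelta(days=30), min_count=3, now=now))
# [('u1', 'hate', 3)]
```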

5.2.4 Model layer

As most applications will use readily available large language models (LLMs) from different providers, mitigating the risk of fairness-related and other harms at the model level frequently comes down to making an informed decision about which model is most appropriate for a specific use. Model overviews and model security assessments are available for each model in the Azure AI Model Catalog; among other things, they describe the model's capabilities, limitations, and any measures taken to reduce potential harms.
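Model overviews are a starting point, but organizations can also compare candidate models on their own evaluation data. The sketch below assumes per-group accuracy scores are already available and uses the largest accuracy gap between groups as the fairness criterion, which is only one of many group-fairness measures (see, e.g., Barocas et al., 2019). Model names, group labels, and scores are made up.

```python
from statistics import fmean
from typing import Mapping, Optional


def accuracy_gap(group_accuracy: Mapping[str, float]) -> float:
    """Largest difference in accuracy between any two groups."""
    values = list(group_accuracy.values())
    return max(values) - min(values)


def choose_model(
    results: Mapping[str, Mapping[str, float]], max_gap: float
) -> Optional[str]:
    """Among models whose group gap is at most max_gap, return the most accurate on average."""
    eligible = {
        name: fmean(groups.values())
        for name, groups in results.items()
        if accuracy_gap(groups) <= max_gap
    }
    if not eligible:
        return None  # no candidate meets the fairness criterion
    return max(eligible, key=eligible.get)


# Hypothetical evaluation results per model and user group.
results = {
    "model-a": {"group_1": 0.92, "group_2": 0.78},
    "model-b": {"group_1": 0.88, "group_2": 0.85},
    "model-c": {"group_1": 0.86, "group_2": 0.84},
}
print(choose_model(results, max_gap=0.05))  # model-b
```

Applying the fairness criterion as a filter before ranking by accuracy reflects the idea that the best-performing model on average is not necessarily the most appropriate one.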

Techniques such as Reinforcement Learning from Human Feedback (RLHF) or fine-tuning can also help mitigate the risk of fairness-related harms. RLHF incorporates human feedback to steer the model towards more desirable outputs, whereas fine-tuning re-trains all or part of the original LLM on a custom-curated dataset, thereby adapting the foundation model to specific needs. Careful curation of the fine-tuning dataset, including its diversity and balanced representation, is therefore critical to promoting fairness in this scenario. On Azure, Azure AI Studio offers a simplified process for fine-tuning foundation models.
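Before fine-tuning, a simple check of how each group is represented in the curated dataset can flag obvious imbalances. This sketch assumes every example carries a group label (many datasets do not), and the 15% minimum share is an arbitrary illustrative threshold; equal shares are not always the right target for a given use case.

```python
from collections import Counter
from typing import Dict, List, Mapping, Sequence, Tuple


def representation_report(
    examples: Sequence[Mapping[str, str]], attribute: str, min_share: float
) -> Tuple[Dict[str, float], List[str]]:
    """Share of examples per group, plus the groups below min_share.

    Examples without the attribute are counted as "unknown".
    """
    counts = Counter(ex.get(attribute, "unknown") for ex in examples)
    total = sum(counts.values())
    shares = {group: n / total for group, n in counts.items()}
    under_represented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, under_represented


# Hypothetical fine-tuning examples labeled by region.
examples = [{"region": "europe"}] * 6 + [{"region": "africa"}] * 3 + [{"region": "asia"}]
shares, flagged = representation_report(examples, "region", min_share=0.15)
print(shares)   # {'europe': 0.6, 'africa': 0.3, 'asia': 0.1}
print(flagged)  # ['asia']
```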

6 Takeaways and recommendations

Adoption and integration of GenAI are expected to accelerate sharply over the next decade, unlocking value and opportunity for society, organizations, and communities. At the same time, bias ingrained in GenAI models and systems will be amplified and find its way back into society, with the potential to widen already egregious gaps in access and representation, unless it is addressed holistically and comprehensively. As this paper has outlined, the risks extend beyond society to business organizations.

This paper also shares survey findings that point to several key issues. First, most executives surveyed feel optimistic about the promise of GenAI and are actively adopting these technologies across an array of business functions. At the same time, they express concern about the risks of bias related to diversity, equity, and inclusion. They also say that not enough is being done within their organizations to address these risks.

Decision makers today have an opportunity to take action against bias in GenAI. We are at an inflection point in shaping the extent to which GenAI will make organizations, stakeholder communities, and society at large fairer, or not. As the technologies change at an ever faster pace, organizational approaches to technology, processes, and people need to be agile and flexible enough to keep up. Below are questions that we believe organizations and leaders must now ask themselves.

01

Where does responsibility for ethical and responsible AI currently sit within the organization?

Is it siloed, or do we actively engage diverse and relevant stakeholders in a culture of transparency and accountability?

Can we intertwine the promise of AI innovation with our DE&I strategy and inclusive leadership?

02

Do we have a clear set of responsible AI principles that align with organizational values?

Do these principles translate into systemic and transversal procedures and processes across the organization?

Do we set the path to an inclusive culture that supports psychological safety?

03

What measures do we take to ensure that fairness and human-centric values are actively upheld in GenAI training, design and development, and deployment?

Are we aware of the risks and unintended consequences of our use of GenAI, and how can we be sure we test and prepare for all of them?

04

How can we ensure that we have pan-organizational capabilities, awareness, and accountability?

Do we monitor, evaluate, and modify our GenAI output and solutions to mitigate bias going forward?

References

Amershi, S., Weld, D., Vorvoreanu, M., Fourney, A., Nushi, B., Collisson, P., Suh, J., Iqbal, S., Bennett, P. N., Inkpen, K., Teevan, J., Kikin-Gil, R., & Horvitz, E. (2019). Guidelines for Human-AI Interaction. Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, 1–13. https://doi.org/10.1145/3290605.3300233

Ammanath, B. (2022). Trustworthy AI: A Business Guide for Navigating Trust and Ethics in AI. John Wiley & Sons.

Barocas, S., Hardt, M., & Narayanan, A. (2019). Fairness and Machine Learning. fairmlbook.org

Benjamin, R. (2019). Race After Technology: Abolitionist Tools for the New Jim Code. Polity.

Brüns, J. D., & Meißner, M. (2024). Do You Create Your Content Yourself? Using Generative Artificial Intelligence for Social Media Content Creation Diminishes Perceived Brand Authenticity. Journal of Retailing and Consumer Services, 79, 103790. https://doi.org/10.1016/j.jretconser.2024.103790

Cairns-Lee, H., Işık, Ö., Toms, S. E., & van Zanten, J. (2024). AI = Accountability + Inclusion. I by IMD, 21 March. https://www.imd.org/ibyimd/diversity-inclusion/ai-accountability-inclusion

Chesney, R., & Citron, D. K. (2019). Deepfakes and the New Disinformation War: The Coming Age of Post-Truth Geopolitics. Foreign Affairs.

Choi, J. H., & Mattingly, J. P. (2022). What Different Denial Rates Can Tell Us About Racial Disparities in the Mortgage Market. Urban Institute, January 13. https://www.urban.org/urban-wire/what-different-denial-rates-can-tell-us-about-racial-disparities-mortgage-market

Correll, S. J., Weisshaar, K. R., Wynn, A. T., & Delfino Wehner, J. (2020). Inside the Black Box of Organizational Life: The Gendered Language of Performance Assessment. American Sociological Review, 85(6), 1022–1050. https://doi.org/10.1177/0003122420962080

Dastin, J. (2018). Insight - Amazon Scraps Secret AI Recruiting Tool That Showed Bias against Women. Reuters. https://www.reuters.com/article/world/insight-amazon-scraps-secret-ai-recruiting-tool-that-showed-bias-against-women-idUSKCN1MK0AG

Edelman. (2024). Edelman Trust Barometer. Edelman. https://www.edelman.com/trust/2024/trust-barometer

Edmondson, A. (2019). The fearless organization: Creating psychological safety in the workplace for learning, innovation, and growth. Hoboken, New Jersey: Wiley.

Eubanks, V. (2018). Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. St. Martin's Press.

European Commission. (2020). White Paper on Artificial Intelligence - A European approach to excellence and trust.

Ferdman, B. M. (2020). Inclusive Leadership: The Fulcrum of Inclusion. In B. M. Ferdman, J. Prime, & R. E. Riggio (Eds.), Inclusive Leadership (pp. 3–24). New York: Routledge.

Ferrara, E. (2023). Should ChatGPT be Biased? Challenges and Risks of Bias in Large Language Models. First Monday. https://doi.org/10.5210/fm.v28i11.13346

Fleischmann, A., & van Zanten, J. (2022). Inclusive Future. IMD, Lausanne. https://www.imd.org/inclusivefuture

Fleischmann, A. & van Zanten, J. (2024). Advancing Equity. IMD, Lausanne.

Gillespie, T. (2018). Custodians of the Internet: Platforms, Content Moderation, and the Hidden Decisions that Shape Social Media. Yale University Press.

Global Media Monitoring Project. (2020). 6th Global Media Monitoring. Global Media Monitoring Project. https://whomakesthenews.org/wp-content/uploads/2021/08/GMMP-2020.Highlights_FINAL.pdf

Greenwald, A. G., & Krieger, L. H. (2006). Implicit Bias: Scientific Foundations. California Law Review, 94(4), 945–967. https://doi.org/10.2307/20439056

Guzikevits, M., Gordon-Hecker, T., Rekhtman, D., Salameh, S., Israel, S., Shayo, M., Gozal, D., Perry, A., Gileles-Hillel, A., & Choshen-Hillel, S. (2024). Sex Bias in Pain Management Decisions. Proceedings of the National Academy of Sciences, 121(33). https://doi.org/10.1073/pnas.2401331121

Hacker, P., Mittelstadt, B., Zuiderveen Borgesius, F., & Wachter, S. (2024). Generative Discrimination: What Happens When Generative AI Exhibits Bias, and What Can Be Done About It. arXiv, June 26. https://doi.org/10.48550/arXiv.2407.10329

Hofmann, V., Kalluri, P. R., Jurafsky, D., & King, S. (2024). AI generates covertly racist decisions about people based on their dialect. Nature, 633 (8028), 147–154. https://doi.org/10.1038/s41586-024-07856-5

Holistic AI. (2024). What is AI Bias? - Understanding Its Impact, Risks, and Mitigation Strategies. https://www.holisticai.com/blog/what-is-ai-bias-risks-mitigation-strategies

Kahneman, D. (2013). Thinking, Fast and Slow. New York: Farrar, Straus, and Giroux.

Kamruzzaman, M., Shovon, Md., & Kim, G. (2024). Investigating Subtler Biases in LLMs: Ageism, Beauty, Institutional, and Nationality Bias in Generative Models. In L.-W. Ku, A. Martins, & V. Srikumar (Eds.), Findings of the Association for Computational Linguistics ACL 2024 (pp. 8940–8965). Association for Computational Linguistics. https://doi.org/10.18653/v1/2024.findings-acl.530

Kassova, L. (2023). Where are all the ‘godmothers’ of AI? Women’s voices are not being heard. The Guardian, 25 November.

Kim, H. G., Chun, W., & Wang, Z. (2021). Multiple dimensions of corporate social responsibility and global brand value: a stakeholder theory perspective. Journal of Marketing Theory and Practice, 29(4), 409–422. https://doi.org/10.1080/10696679.2020.1865109

Koch, A. J., D’Mello, S. D., & Sackett, P. R. (2015). A meta-analysis of gender stereotypes and bias in experimental simulations of employment decision making. Journal of Applied Psychology, 100(1), 128–161. https://doi.org/10.1037/a0036734

Kotek, H., Dockum, R., & Sun, D. Q. (2023). Gender bias and stereotypes in Large Language Models (No. arXiv:2308.14921). arXiv. https://doi.org/10.48550/arXiv.2308.14921

Leslie, D., Mazumder, A., Peppin, A., Wolters, M. K. & Hagerty, A. (2021). Does ‘AI’ Stand for Augmenting Inequality in the Era of Covid-19 Healthcare? BMJ 372: n304. https://doi.org/10.1136/bmj.n304

Lorenzo, R., & Reeves, M. (2018). How and Where Diversity Drives Financial Performance. Harvard Business Review. https://hbr.org/2018/01/how-and-where-diversity-drives-financial-performance

McKinsey. (2023). The economic potential of generative AI: The next productivity frontier.

Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., & Galstyan, A. (2021). A Survey on Bias and Fairness in Machine Learning. ACM Computing Surveys (CSUR), 54(6), 1-35.

Meister, A. (2023). The five Cs of inclusive leadership. I by IMD, July-August, 7–11.